Recap: Memory Hierarchy; Unified vs. Separate Level 1 Caches

- Slides: 7

Recap: Memory Hierarchy

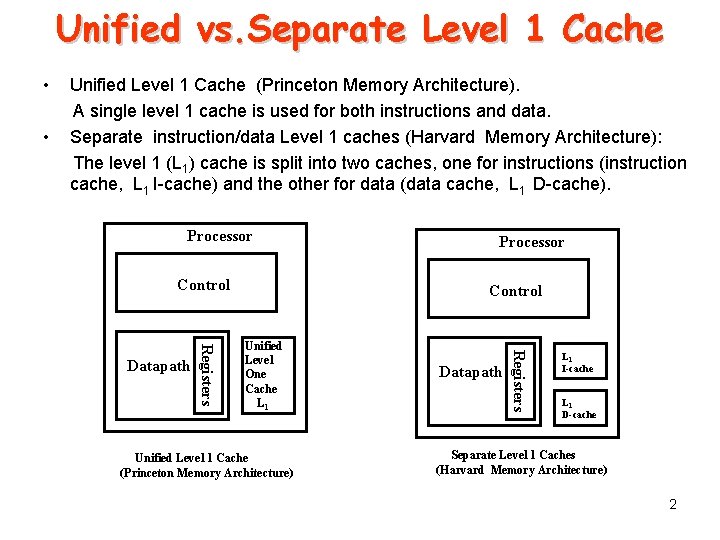

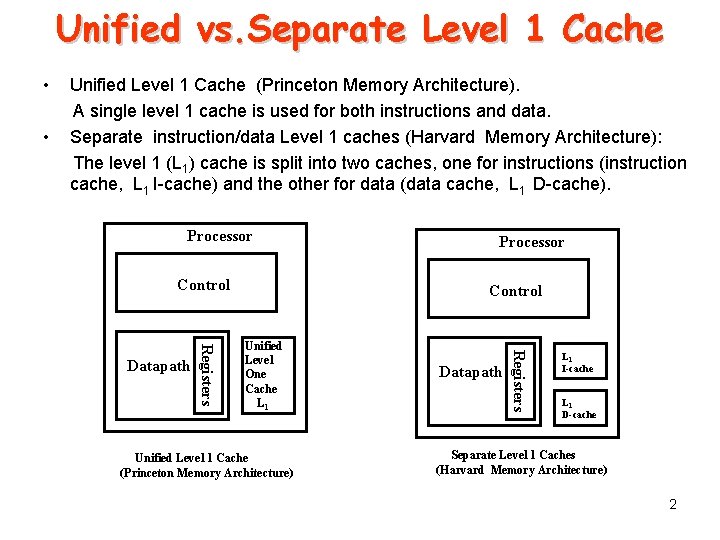

Unified vs. Separate Level 1 Cache

- Unified Level 1 cache (Princeton memory architecture): a single Level 1 (L1) cache is used for both instructions and data.
- Separate instruction/data Level 1 caches (Harvard memory architecture): the L1 cache is split into two caches, one for instructions (instruction cache, L1 I-cache) and the other for data (data cache, L1 D-cache).

Figure: a processor (control, datapath, registers) with a unified L1 cache (Princeton memory architecture), and a processor with separate L1 I-cache and L1 D-cache (Harvard memory architecture).

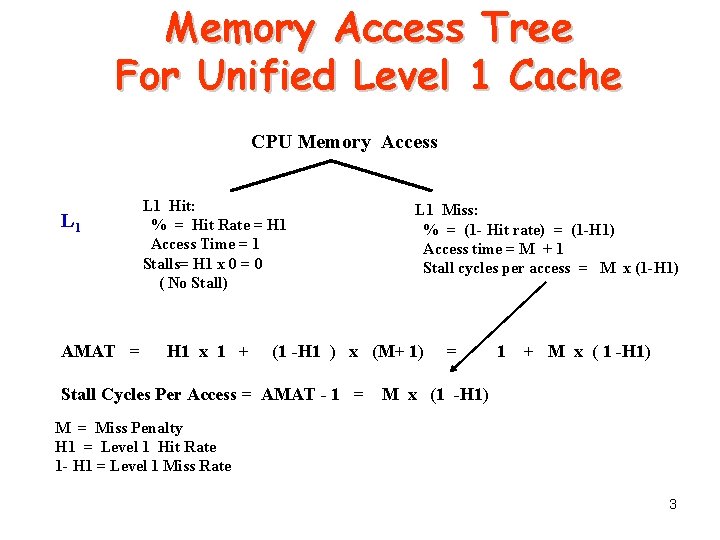

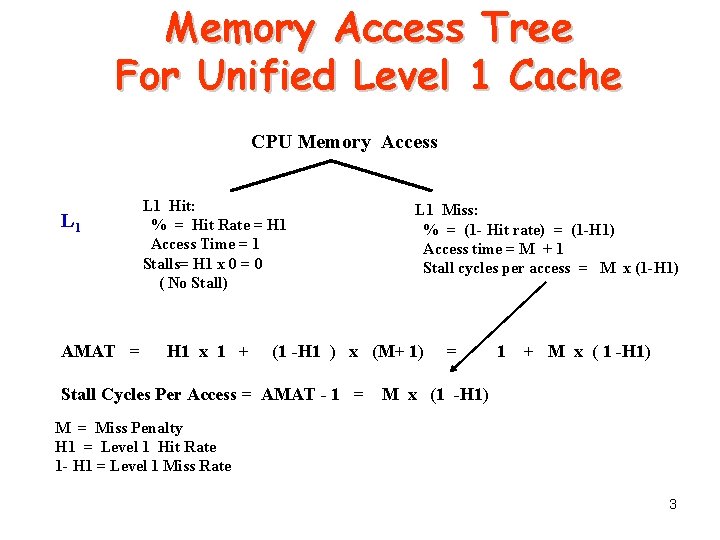

Memory Access Tree for Unified Level 1 Cache

Each CPU memory access goes to L1 and takes one of two branches:

- L1 hit: fraction of accesses = hit rate = H1; access time = 1 cycle; stalls = H1 × 0 = 0 (no stall).
- L1 miss: fraction of accesses = (1 − H1); access time = M + 1 cycles; stall cycles per access = M × (1 − H1).

$$\text{AMAT} = H_1 \times 1 + (1 - H_1) \times (M + 1) = 1 + M \times (1 - H_1)$$

$$\text{Stall cycles per access} = \text{AMAT} - 1 = M \times (1 - H_1)$$

where M = miss penalty, H1 = Level 1 hit rate, and 1 − H1 = Level 1 miss rate.
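The unified-cache access tree can be checked numerically with a short sketch. The function names and the sample values (H1 = 0.95, M = 50) are illustrative assumptions, not figures from the slides.

```python
def amat_unified(hit_rate: float, miss_penalty: float) -> float:
    """AMAT in cycles for a unified L1 cache: H1 * 1 + (1 - H1) * (M + 1)."""
    miss_rate = 1.0 - hit_rate
    return hit_rate * 1.0 + miss_rate * (miss_penalty + 1.0)


def stall_cycles_per_access(hit_rate: float, miss_penalty: float) -> float:
    """Stall cycles per access: M * (1 - H1), which equals AMAT - 1."""
    return miss_penalty * (1.0 - hit_rate)


if __name__ == "__main__":
    h1, m = 0.95, 50  # hypothetical hit rate and miss penalty
    print(f"AMAT   = {amat_unified(h1, m):.2f} cycles")
    print(f"Stalls = {stall_cycles_per_access(h1, m):.2f} cycles per access")
```

Both forms of the formula agree: summing the weighted hit and miss branches gives the same value as 1 plus the stall cycles per access.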

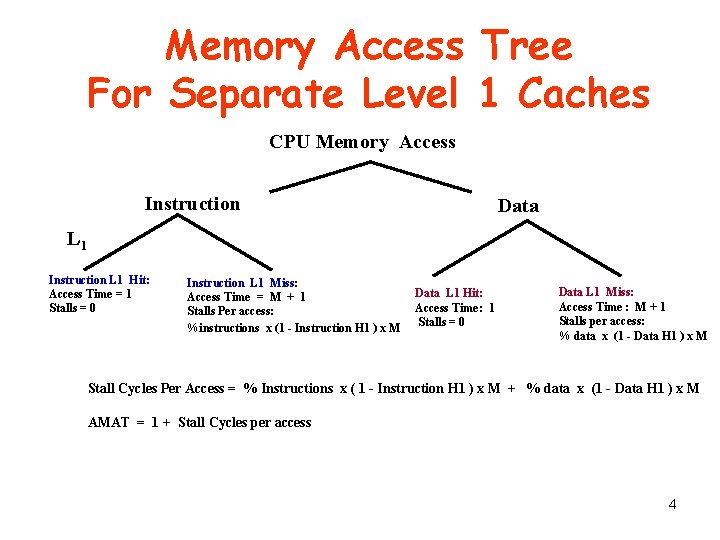

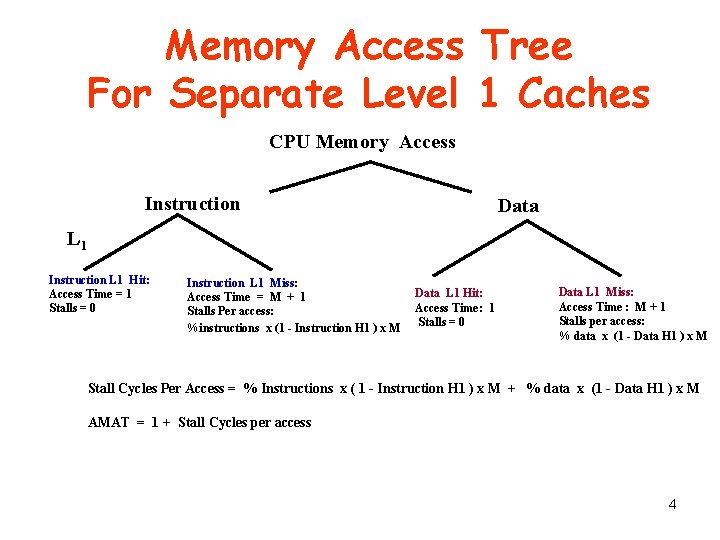

Memory Access Tree for Separate Level 1 Caches

Each CPU memory access is either an instruction access or a data access:

- Instruction L1 hit: access time = 1 cycle; stalls = 0.
- Instruction L1 miss: access time = M + 1 cycles; stalls per access = % instructions × (1 − Instruction H1) × M.
- Data L1 hit: access time = 1 cycle; stalls = 0.
- Data L1 miss: access time = M + 1 cycles; stalls per access = % data × (1 − Data H1) × M.

$$\text{Stall cycles per access} = \%\text{instructions} \times (1 - H_{1,\text{instruction}}) \times M + \%\text{data} \times (1 - H_{1,\text{data}}) \times M$$

$$\text{AMAT} = 1 + \text{Stall cycles per access}$$
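The split-cache formula weights each cache's miss rate by its share of the accesses. A minimal sketch follows; the function names and the sample hit rates are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def split_stall_cycles(frac_instr: float, h1_instr: float,
                       h1_data: float, miss_penalty: float) -> float:
    """Stall cycles per access for separate L1 I- and D-caches."""
    frac_data = 1.0 - frac_instr  # every access is an instruction or a data access
    instr_stalls = frac_instr * (1.0 - h1_instr) * miss_penalty
    data_stalls = frac_data * (1.0 - h1_data) * miss_penalty
    return instr_stalls + data_stalls


def amat_split(frac_instr: float, h1_instr: float,
               h1_data: float, miss_penalty: float) -> float:
    """AMAT = 1 + stall cycles per access."""
    return 1.0 + split_stall_cycles(frac_instr, h1_instr, h1_data, miss_penalty)


if __name__ == "__main__":
    # Hypothetical values: 75% instruction accesses, 98% I-cache hit rate,
    # 90% D-cache hit rate, 50-cycle miss penalty.
    print(f"AMAT = {amat_split(0.75, 0.98, 0.90, 50):.2f} cycles")
```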

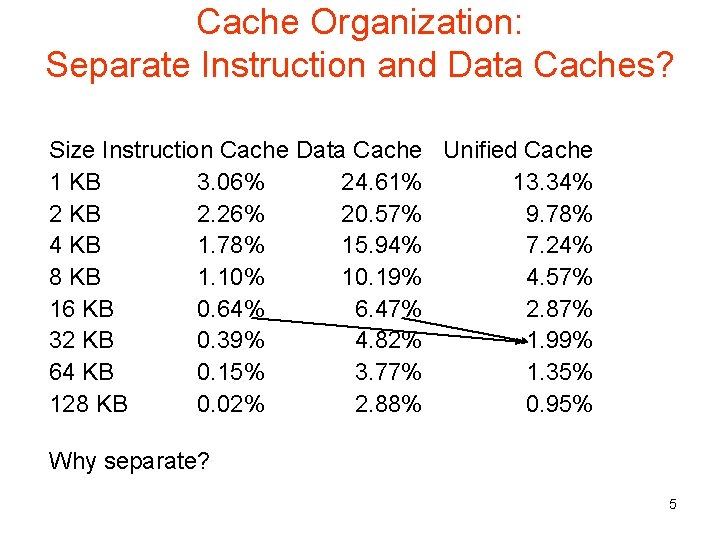

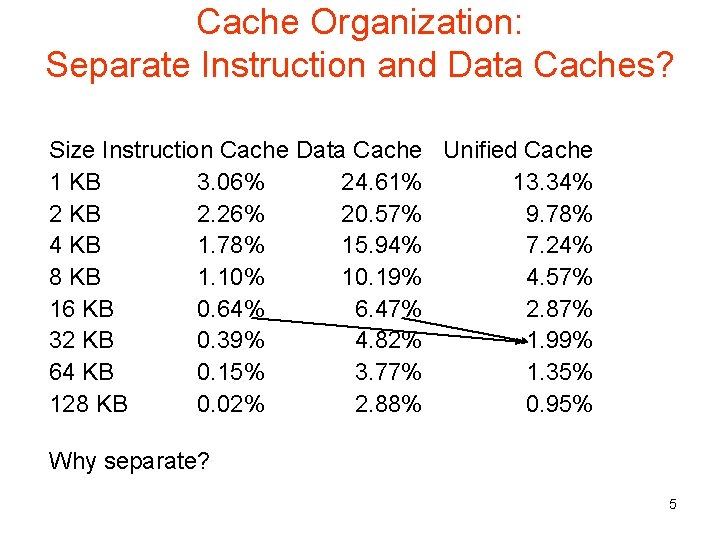

Cache Organization: Separate Instruction and Data Caches?

| Size | Instruction cache miss rate | Data cache miss rate | Unified cache miss rate |
|---|---|---|---|
| 1 KB | 3.06% | 24.61% | 13.34% |
| 2 KB | 2.26% | 20.57% | 9.78% |
| 4 KB | 1.78% | 15.94% | 7.24% |
| 8 KB | 1.10% | 10.19% | 4.57% |
| 16 KB | 0.64% | 6.47% | 2.87% |
| 32 KB | 0.39% | 4.82% | 1.99% |
| 64 KB | 0.15% | 3.77% | 1.35% |
| 128 KB | 0.02% | 2.88% | 0.95% |

Why separate?

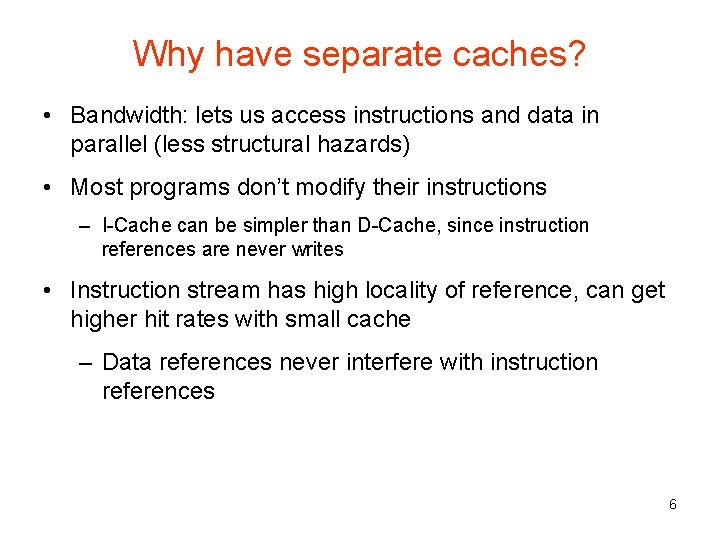

Why have separate caches?

- Bandwidth: separate caches let us access instructions and data in parallel (fewer structural hazards).
- Most programs don't modify their instructions.
  - The I-cache can be simpler than the D-cache, since instruction references are never writes.
- The instruction stream has high locality of reference, so a small cache can achieve a high hit rate.
  - Data references never interfere with instruction references.

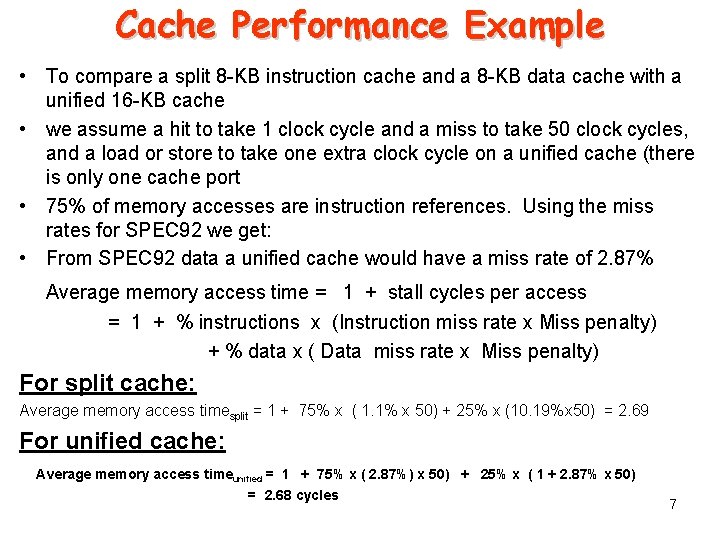

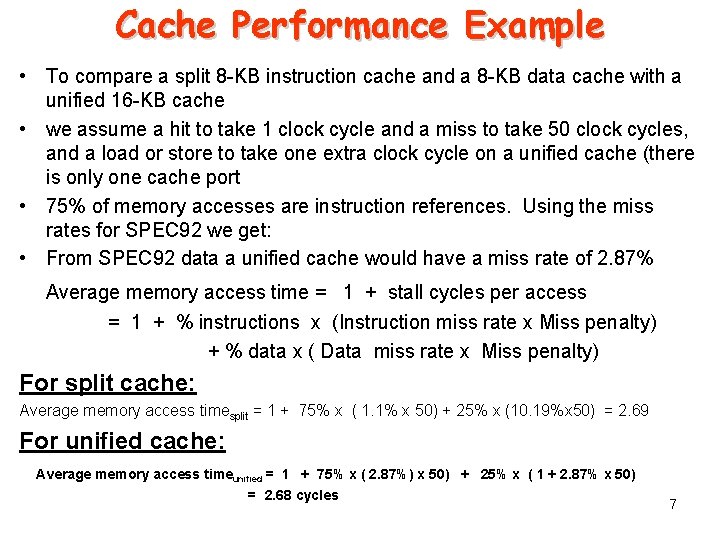

Cache Performance Example

- Compare a split design (an 8-KB instruction cache plus an 8-KB data cache) with a unified 16-KB cache.
- Assume a hit takes 1 clock cycle, a miss takes 50 clock cycles (used as the miss penalty), and a load or store takes one extra clock cycle on the unified cache (there is only one cache port).
- 75% of memory accesses are instruction references.
- From the SPEC92 miss rates: 1.10% for the 8-KB instruction cache, 10.19% for the 8-KB data cache, and 2.87% for the 16-KB unified cache.

$$\text{AMAT} = 1 + \text{stall cycles per access} = 1 + \%\text{instructions} \times (\text{instruction miss rate} \times \text{miss penalty}) + \%\text{data} \times (\text{data miss rate} \times \text{miss penalty})$$

For the split cache:

$$\text{AMAT}_{\text{split}} = 1 + 75\% \times (1.10\% \times 50) + 25\% \times (10.19\% \times 50) \approx 2.69 \text{ cycles}$$

For the unified cache:

$$\text{AMAT}_{\text{unified}} = 1 + 75\% \times (2.87\% \times 50) + 25\% \times (1 + 2.87\% \times 50) \approx 2.68 \text{ cycles}$$

The two designs are essentially tied: before rounding, the values are about 2.686 and 2.685 cycles.
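The arithmetic of this example can be reproduced with a short sketch. The miss rates, access mix, and 50-cycle penalty are taken from the example; the function and constant names are illustrative assumptions.

```python
MISS_PENALTY = 50            # cycles, from the example
FRAC_INSTR = 0.75            # 75% of accesses are instruction references
FRAC_DATA = 1.0 - FRAC_INSTR


def amat_split(instr_miss_rate: float, data_miss_rate: float) -> float:
    """AMAT for separate I- and D-caches (no port conflict)."""
    return (1.0
            + FRAC_INSTR * instr_miss_rate * MISS_PENALTY
            + FRAC_DATA * data_miss_rate * MISS_PENALTY)


def amat_unified(miss_rate: float, extra_data_cycle: float = 1.0) -> float:
    """AMAT for a single-ported unified cache: data accesses pay one extra cycle."""
    return (1.0
            + FRAC_INSTR * miss_rate * MISS_PENALTY
            + FRAC_DATA * (extra_data_cycle + miss_rate * MISS_PENALTY))


if __name__ == "__main__":
    split = amat_split(0.0110, 0.1019)   # 8-KB I-cache, 8-KB D-cache
    unified = amat_unified(0.0287)       # 16-KB unified cache
    print(f"split:   {split:.3f} cycles")
    print(f"unified: {unified:.3f} cycles")
```

Setting `extra_data_cycle` to 0 removes the single-port penalty and isolates the effect of the miss rates alone.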