
- Slides: 16

Recap 1

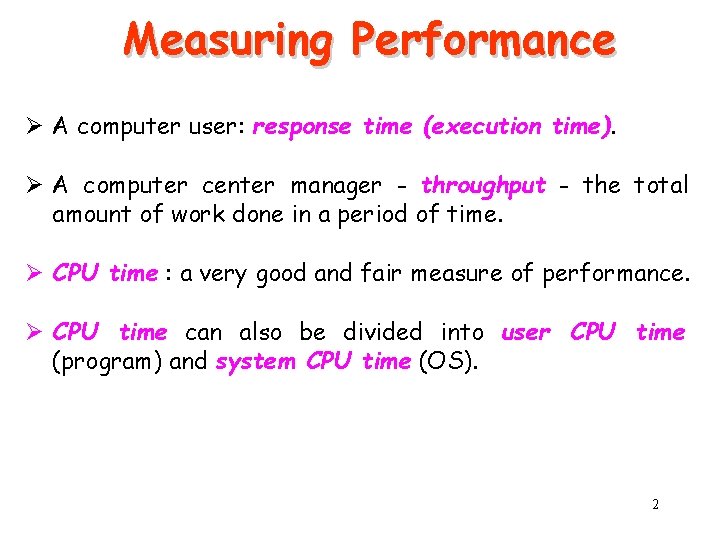

Measuring Performance

- A computer user cares about response time (execution time).
- A computer center manager cares about throughput: the total amount of work done in a given period of time.
- CPU time is a very good and fair measure of performance.
- CPU time can be further divided into user CPU time (spent in the program) and system CPU time (spent in the OS).
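The distinction between response time, user CPU time, and system CPU time can be observed directly. The following is a minimal sketch using Python's standard `os.times()` and `time.perf_counter()`; the `measure` helper and the `busy_loop` workload are hypothetical names introduced only for illustration.

```python
import os
import time


def measure(fn):
    """Run fn() and return (elapsed, user_cpu, system_cpu) in seconds."""
    cpu_before = os.times()
    wall_before = time.perf_counter()
    fn()
    wall_after = time.perf_counter()
    cpu_after = os.times()
    elapsed = wall_after - wall_before                 # response time
    user_cpu = cpu_after.user - cpu_before.user        # time in the program
    system_cpu = cpu_after.system - cpu_before.system  # time in the OS
    return elapsed, user_cpu, system_cpu


def busy_loop():
    # Hypothetical CPU-bound workload, used only for illustration.
    total = 0
    for i in range(200_000):
        total += i * i
    return total


elapsed, user_cpu, system_cpu = measure(busy_loop)
print(f"response time:   {elapsed:.4f} s")
print(f"user CPU time:   {user_cpu:.4f} s")
print(f"system CPU time: {system_cpu:.4f} s")
```

On a lightly loaded machine the response time is usually close to user plus system CPU time for a CPU-bound workload; on a busy machine, or for I/O-bound code, the response time can be much larger.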

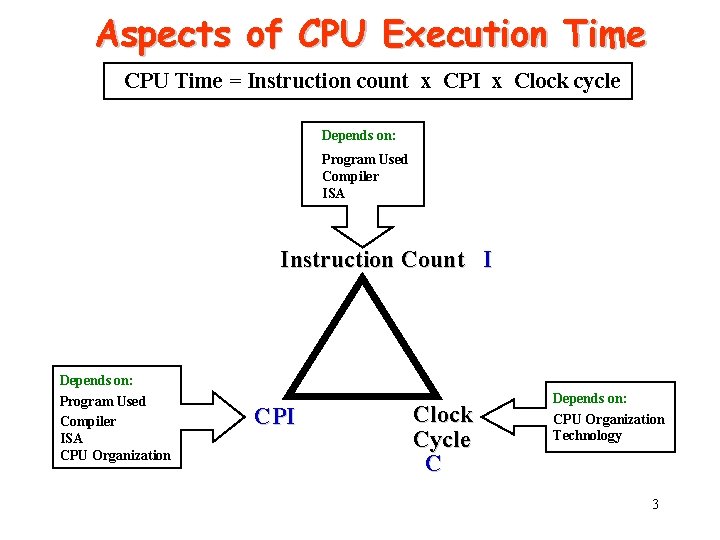

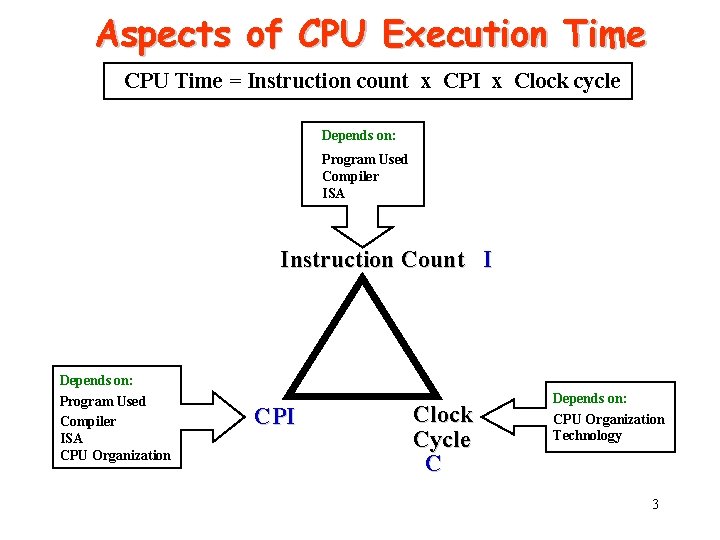

Aspects of CPU Execution Time

CPU Time = Instruction count x CPI x Clock cycle

- Instruction count (I) depends on: program used, compiler, ISA.
- CPI depends on: program used, compiler, ISA, CPU organization.
- Clock cycle (C) depends on: CPU organization, technology.
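As a quick illustration of the formula, here is a small sketch that multiplies the three factors. The function name `cpu_time` and the input values are assumptions chosen for the example, not figures from the slides.

```python
def cpu_time(instruction_count, cpi, clock_cycle_s):
    """CPU time in seconds = instruction count x CPI x clock cycle time."""
    return instruction_count * cpi * clock_cycle_s


# Hypothetical program: 1 billion instructions, CPI of 2.0,
# running on a 2 GHz clock (cycle time of 0.5 ns).
example_time = cpu_time(1_000_000_000, 2.0, 0.5e-9)
print(f"CPU time: {example_time:.3f} s")
```

Halving any one factor halves the CPU time, provided the other two stay unchanged.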

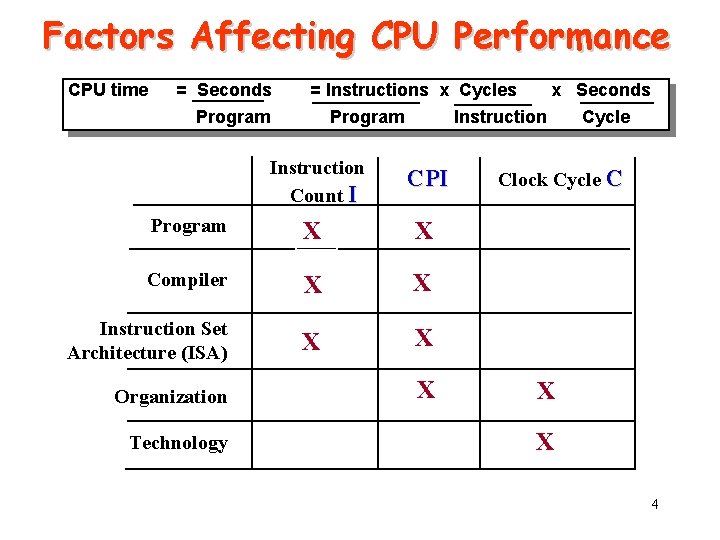

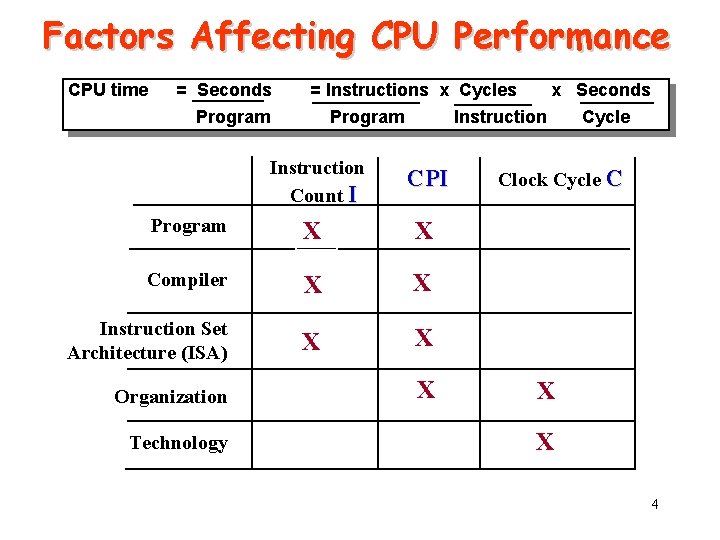

Factors Affecting CPU Performance

CPU time = Seconds / Program = (Instructions / Program) x (Cycles / Instruction) x (Seconds / Cycle)

| | Instruction Count I | CPI | Clock Cycle C |
|---|---|---|---|
| Program | X | X | |
| Compiler | X | X | |
| Instruction Set Architecture (ISA) | X | X | |
| Organization | | X | X |
| Technology | | | X |

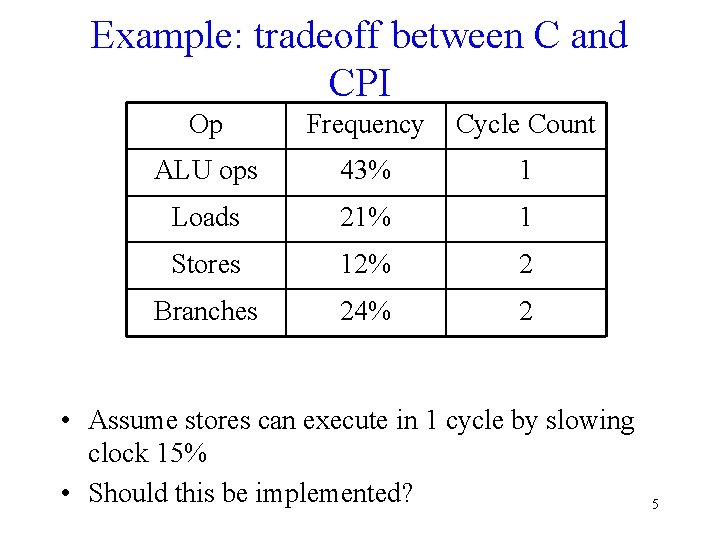

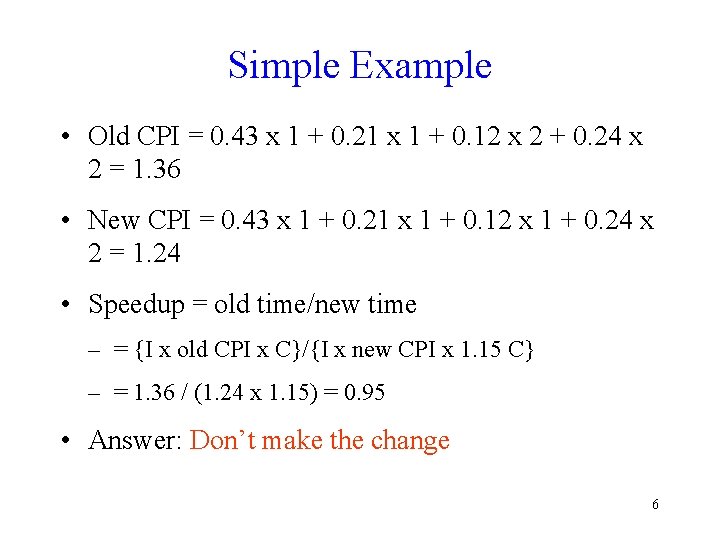

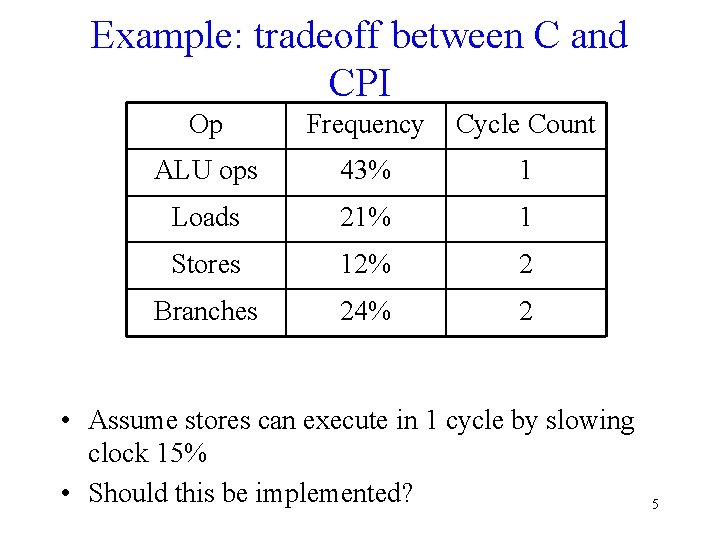

Example: tradeoff between C and CPI

| Op | Frequency | Cycle Count |
|---|---|---|
| ALU ops | 43% | 1 |
| Loads | 21% | 1 |
| Stores | 12% | 2 |
| Branches | 24% | 2 |

- Assume stores can execute in 1 cycle at the cost of slowing the clock by 15%.
- Should this be implemented?

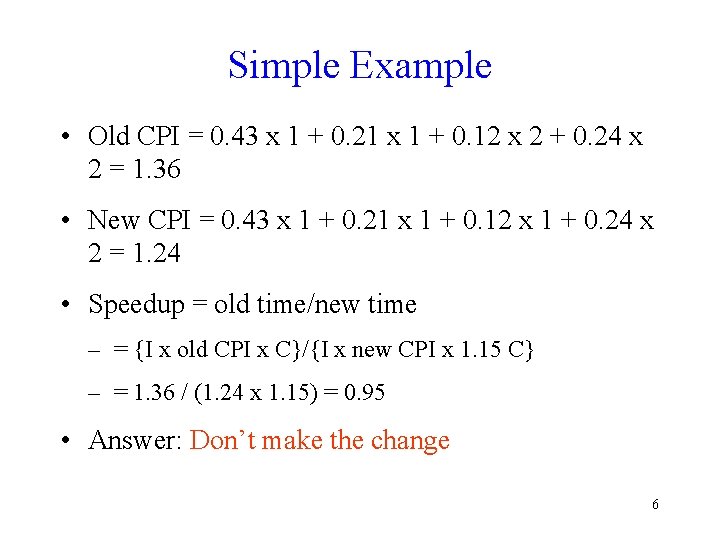

Simple Example

- Old CPI = 0.43 x 1 + 0.21 x 1 + 0.12 x 2 + 0.24 x 2 = 1.36
- New CPI = 0.43 x 1 + 0.21 x 1 + 0.12 x 1 + 0.24 x 2 = 1.24
- Speedup = old time / new time
  - = (I x old CPI x C) / (I x new CPI x 1.15 C)
  - = 1.36 / (1.24 x 1.15) = 0.95
- Answer: Don't make the change. A speedup below 1 means the modified design is slower.
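The arithmetic above can be checked with a short script. This is a sketch using the instruction mix from the example; the names `average_cpi`, `old_mix`, and `new_mix` are introduced here for illustration.

```python
def average_cpi(mix):
    """Weighted average CPI: sum of frequency x cycle count over all op types."""
    return sum(freq * cycles for freq, cycles in mix.values())


# Instruction mix from the example: op -> (frequency, cycle count).
old_mix = {
    "ALU ops": (0.43, 1),
    "Loads": (0.21, 1),
    "Stores": (0.12, 2),
    "Branches": (0.24, 2),
}

# Proposed change: stores take 1 cycle, but the clock cycle grows by 15%.
new_mix = dict(old_mix, Stores=(0.12, 1))
clock_slowdown = 1.15

old_cpi = average_cpi(old_mix)
new_cpi = average_cpi(new_mix)

# Instruction count I and the base cycle time C cancel out of the ratio.
speedup = old_cpi / (new_cpi * clock_slowdown)

print(f"old CPI = {old_cpi:.2f}")
print(f"new CPI = {new_cpi:.2f}")
print(f"speedup = {speedup:.2f}")
```

Because the instruction count is the same in both designs, only CPI and the clock cycle enter the comparison.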

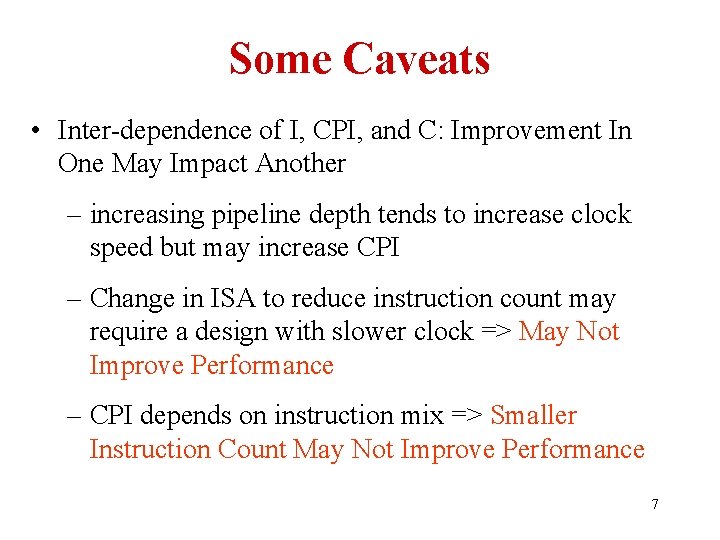

Some Caveats

- Inter-dependence of I, CPI, and C: an improvement in one may impact another.
  - Increasing pipeline depth tends to increase clock speed but may increase CPI.
  - A change in the ISA to reduce instruction count may require a design with a slower clock, so it may not improve performance.
  - CPI depends on the instruction mix, so a smaller instruction count may not improve performance.
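To make the last caveat concrete, here is a sketch with entirely hypothetical numbers: a variant that executes 20% fewer instructions, but whose remaining instructions are more complex, so its average CPI is higher.

```python
def cpu_time(instruction_count, cpi, clock_cycle_s):
    """CPU time in seconds = instruction count x CPI x clock cycle time."""
    return instruction_count * cpi * clock_cycle_s


# All numbers below are hypothetical and chosen only for illustration.
baseline = cpu_time(1.0e9, 1.5, 1.0e-9)  # 1.0e9 instructions at CPI 1.5
variant = cpu_time(0.8e9, 2.0, 1.0e-9)   # 20% fewer instructions at CPI 2.0

print(f"baseline: {baseline:.2f} s")
print(f"variant:  {variant:.2f} s")
print(f"speedup:  {baseline / variant:.2f}")
```

With these assumed values the variant is slower despite the smaller instruction count, which is why all three factors have to be evaluated together.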

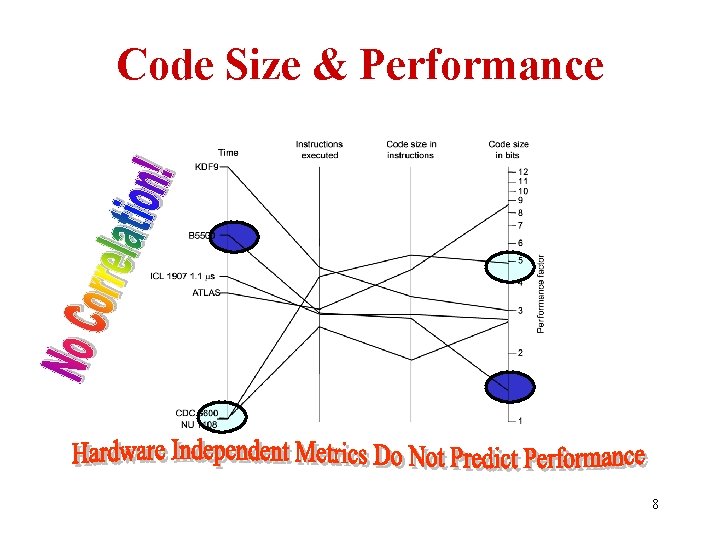

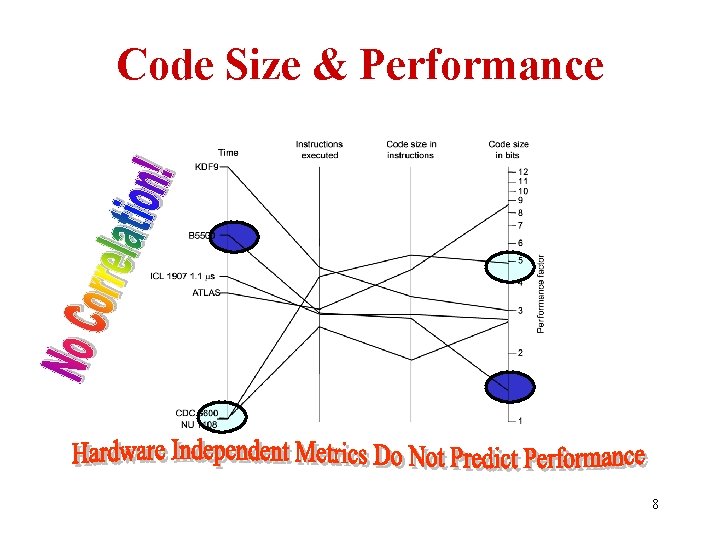

Code Size & Performance

Benchmarks and Benchmarking

- In the absence of a universal task, pick some programs that represent common tasks.
- Use representative programs to compare the performance of systems.
- CAUTIONS:
  - Comparisons are only as good as the benchmarks are at representing your real workload.
  - Many parameters affect measured performance.

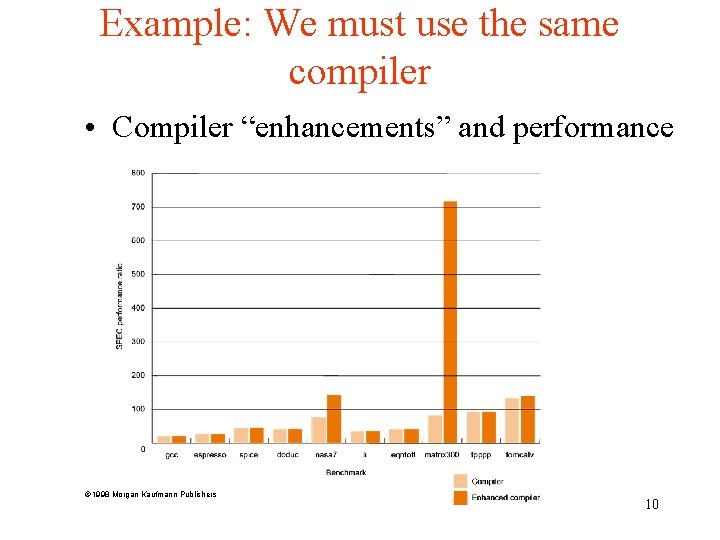

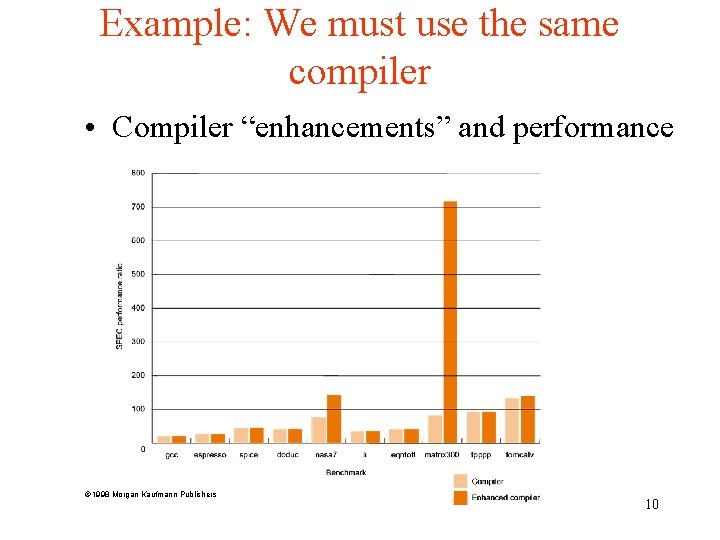

Example: We must use the same compiler

- Compiler “enhancements” and performance

1998 Morgan Kaufmann Publishers

Benchmark Suites

- A suite is a collection of representative benchmarks from different application domains.
- A weakness of any one benchmark is likely to be compensated by another.
- Standard Performance Evaluation Corporation (SPEC)
  - Most popular benchmark suite
  - Suite consists of kernels, small fragments, and large applications
  - SPEC 2006: CINT2006, CFP2006
  - http://www.spec.org/
- Benchmark suites for servers
  - SPECSFS: measures performance of file servers
  - SPECWeb: measures performance of Web servers

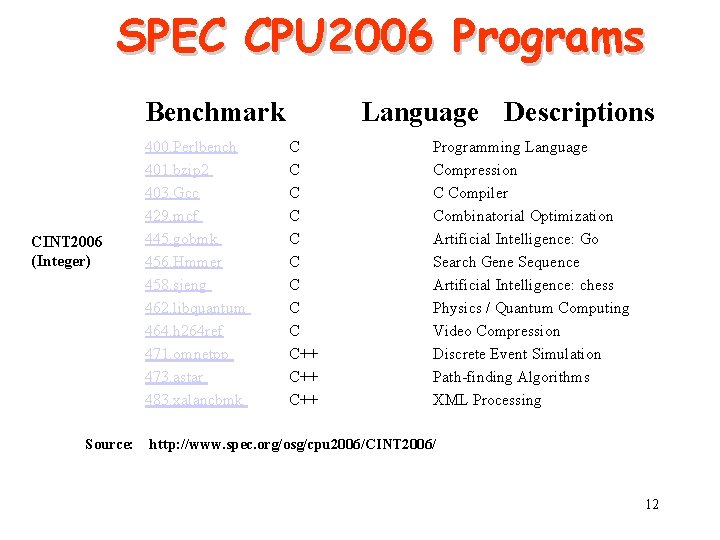

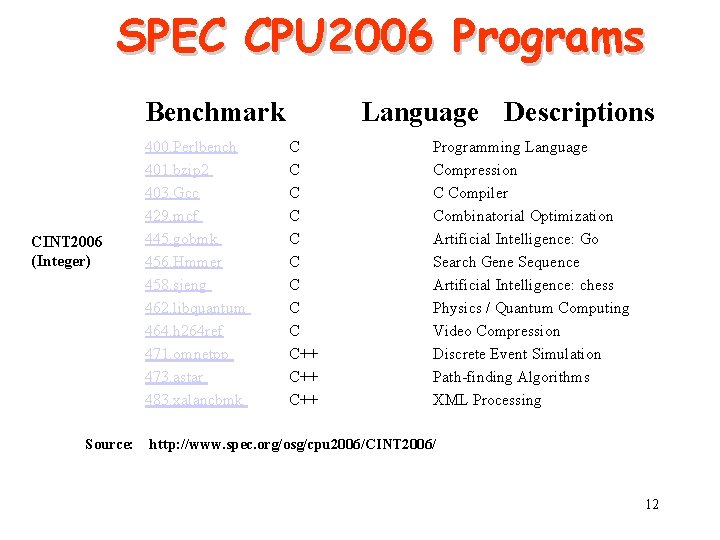

SPEC CPU 2006 Programs: CINT2006 (Integer)

| Benchmark | Description |
|---|---|
| 400.perlbench | Programming Language |
| 401.bzip2 | Compression |
| 403.gcc | C Compiler |
| 429.mcf | Combinatorial Optimization |
| 445.gobmk | Artificial Intelligence: Go |
| 456.hmmer | Search Gene Sequence |
| 458.sjeng | Artificial Intelligence: chess |
| 462.libquantum | Physics / Quantum Computing |
| 464.h264ref | Video Compression |
| 471.omnetpp | Discrete Event Simulation |
| 473.astar | Path-finding Algorithms |
| 483.xalancbmk | XML Processing |

Languages: C, C++

Source: http://www.spec.org/osg/cpu2006/CINT2006/

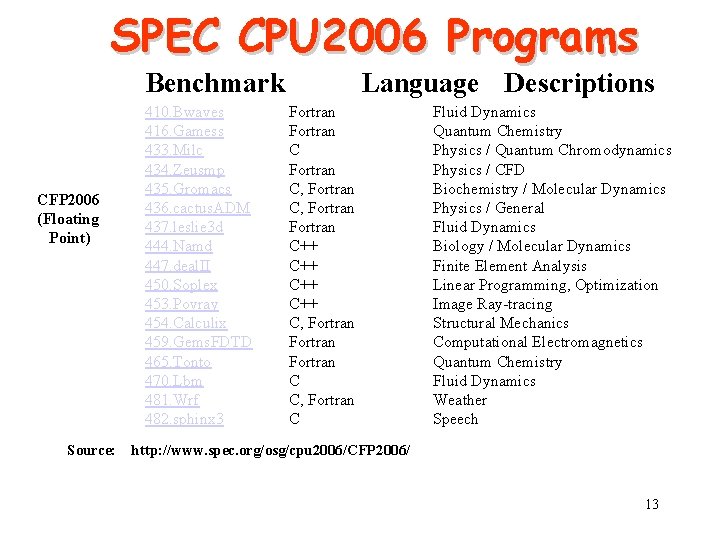

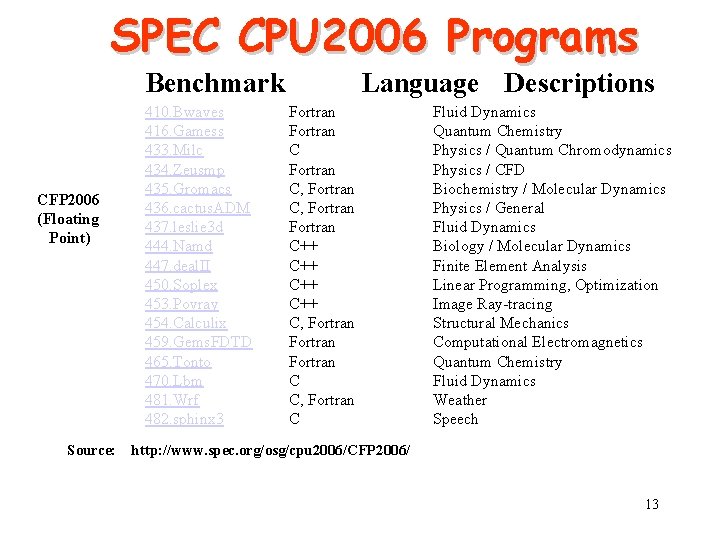

SPEC CPU 2006 Programs: CFP2006 (Floating Point)

| Benchmark | Description |
|---|---|
| 410.bwaves | Fluid Dynamics |
| 416.gamess | Quantum Chemistry |
| 433.milc | Physics / Quantum Chromodynamics |
| 434.zeusmp | Physics / CFD |
| 435.gromacs | Biochemistry / Molecular Dynamics |
| 436.cactusADM | Physics / General |
| 437.leslie3d | Fluid Dynamics |
| 444.namd | Biology / Molecular Dynamics |
| 447.dealII | Finite Element Analysis |
| 450.soplex | Linear Programming, Optimization |
| 453.povray | Image Ray-tracing |
| 454.calculix | Structural Mechanics |
| 459.GemsFDTD | Computational Electromagnetics |
| 465.tonto | Quantum Chemistry |
| 470.lbm | Fluid Dynamics |
| 481.wrf | Weather |
| 482.sphinx3 | Speech |

Languages: Fortran, C, C++

Source: http://www.spec.org/osg/cpu2006/CFP2006/

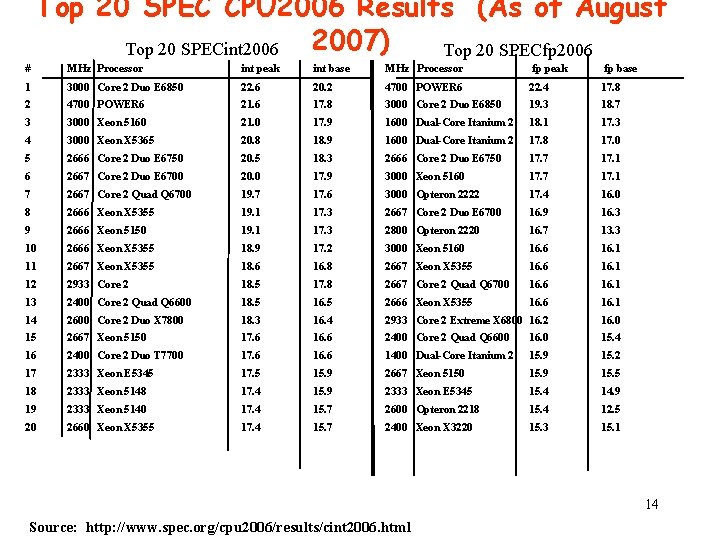

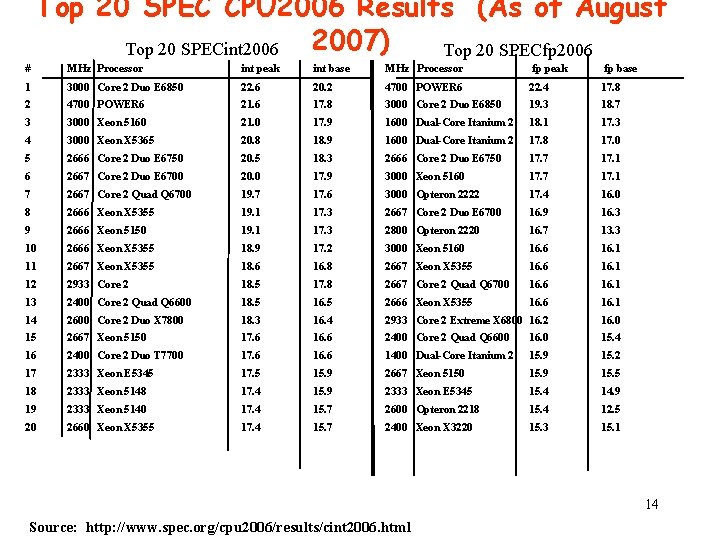

Top 20 SPEC CPU 2006 Results (As of August 2007)

| # | MHz | Processor (SPECint 2006) | int peak | int base | MHz | Processor (SPECfp 2006) | fp peak | fp base |
|---|---|---|---|---|---|---|---|---|
| 1 | 3000 | Core 2 Duo E6850 | 22.6 | 20.2 | 4700 | POWER6 | 22.4 | 17.8 |
| 2 | 4700 | POWER6 | 21.6 | 17.8 | 3000 | Core 2 Duo E6850 | 19.3 | 18.7 |
| 3 | 3000 | Xeon 5160 | 21.0 | 17.9 | 1600 | Dual-Core Itanium 2 | 18.1 | 17.3 |
| 4 | 3000 | Xeon X5365 | 20.8 | 18.9 | 1600 | Dual-Core Itanium 2 | 17.8 | 17.0 |
| 5 | 2666 | Core 2 Duo E6750 | 20.5 | 18.3 | 2666 | Core 2 Duo E6750 | 17.7 | 17.1 |
| 6 | 2667 | Core 2 Duo E6700 | 20.0 | 17.9 | 3000 | Xeon 5160 | 17.7 | 17.1 |
| 7 | 2667 | Core 2 Quad Q6700 | 19.7 | 17.6 | 3000 | Opteron 2222 | 17.4 | 16.0 |
| 8 | 2666 | Xeon X5355 | 19.1 | 17.3 | 2667 | Core 2 Duo E6700 | 16.9 | 16.3 |
| 9 | 2666 | Xeon 5150 | 19.1 | 17.3 | 2800 | Opteron 2220 | 16.7 | 13.3 |
| 10 | 2666 | Xeon X5355 | 18.9 | 17.2 | 3000 | Xeon 5160 | 16.6 | 16.1 |
| 11 | 2667 | Xeon X5355 | 18.6 | 16.8 | 2667 | Xeon X5355 | 16.6 | 16.1 |
| 12 | 2933 | Core 2 | 18.5 | 17.8 | 2667 | Core 2 Quad Q6700 | 16.6 | 16.1 |
| 13 | 2400 | Core 2 Quad Q6600 | 18.5 | 16.5 | 2666 | Xeon X5355 | 16.6 | 16.1 |
| 14 | 2600 | Core 2 Duo X7800 | 18.3 | 16.4 | 2933 | Core 2 Extreme X6800 | 16.2 | 16.0 |
| 15 | 2667 | Xeon 5150 | 17.6 | 16.6 | 2400 | Core 2 Quad Q6600 | 16.0 | 15.4 |
| 16 | 2400 | Core 2 Duo T7700 | 17.6 | 16.6 | 1400 | Dual-Core Itanium 2 | 15.9 | 15.2 |
| 17 | 2333 | Xeon E5345 | 17.5 | 15.9 | 2667 | Xeon 5150 | 15.9 | 15.5 |
| 18 | 2333 | Xeon 5148 | 17.4 | 15.9 | 2333 | Xeon E5345 | 15.4 | 14.9 |
| 19 | 2333 | Xeon 5140 | 17.4 | 15.7 | 2600 | Opteron 2218 | 15.4 | 12.5 |
| 20 | 2660 | Xeon X5355 | 17.4 | 15.7 | 2400 | Xeon X3220 | 15.3 | 15.1 |

Source: http://www.spec.org/cpu2006/results/cint2006.html
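The results listed above also show that clock rate alone does not determine performance, consistent with CPU time depending on I, CPI, and C together. The sketch below takes the two top entries and divides each peak score by the clock rate in GHz. Note that "score per GHz" is not a SPEC metric; it is an ad hoc ratio used here only for illustration.

```python
# (processor, clock in MHz, int peak, fp peak), taken from the results above.
top_results = [
    ("Core 2 Duo E6850", 3000, 22.6, 19.3),
    ("POWER6", 4700, 21.6, 22.4),
]


def score_per_ghz(score, mhz):
    """Ad hoc ratio (not a SPEC metric): benchmark score per GHz of clock rate."""
    return score / (mhz / 1000.0)


per_ghz = {
    name: (score_per_ghz(int_peak, mhz), score_per_ghz(fp_peak, mhz))
    for name, mhz, int_peak, fp_peak in top_results
}

for name, (int_ratio, fp_ratio) in per_ghz.items():
    print(f"{name}: int peak/GHz = {int_ratio:.2f}, fp peak/GHz = {fp_ratio:.2f}")
```

The 3000 MHz part achieves the higher integer score despite the lower clock rate, while the 4700 MHz part leads on floating point, so the ranking depends on the workload as well as the clock.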

Performance Evaluation Using Benchmarks

- “For better or worse, benchmarks shape a field”
- Good products are created when we have:
  - Good benchmarks
  - Good ways to summarize performance
- Given that sales depend in large part on performance relative to the competition, there is big investment in improving products as reported by the performance summary.
- If benchmarks are inadequate, vendors must choose between improving the product for real programs and improving the product to get more sales. Sales almost always wins!

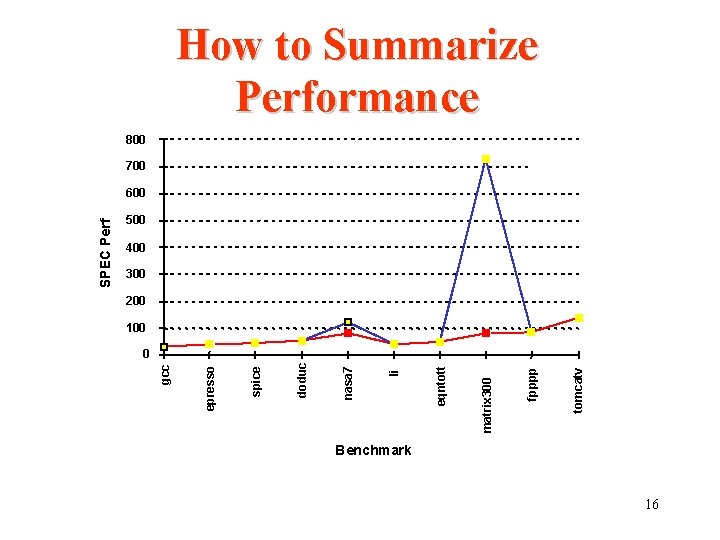

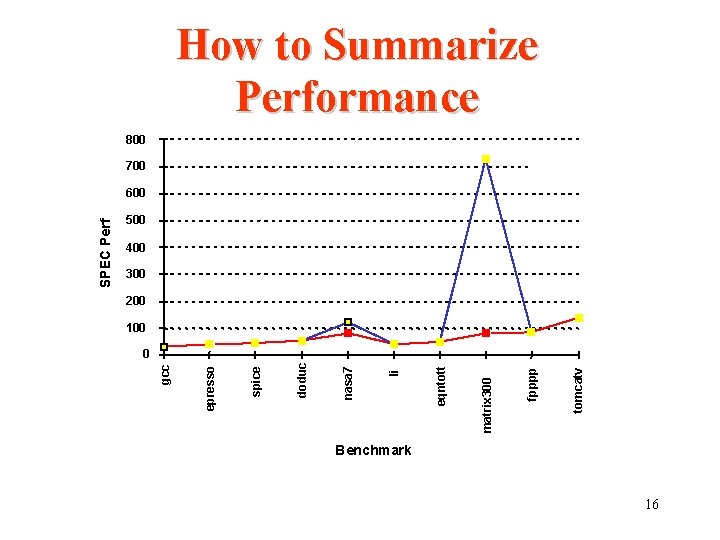

How to Summarize Performance

[Figure: bar chart of SPEC performance (y-axis: SPEC Perf, 0 to 800) per benchmark (x-axis: gcc, espresso, spice, doduc, nasa7, li, eqntott, matrix300, fpppp, tomcatv)]
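One common way to summarize a set of per-benchmark performance ratios is the geometric mean, which SPEC uses for its overall CPU scores. The sketch below compares it with the arithmetic mean; the ratio values are hypothetical and are not read from the chart. They are chosen only to show how a single outlier affects each summary.

```python
import math


def geometric_mean(values):
    """n-th root of the product, computed via logarithms for numerical stability."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical per-benchmark ratios with one large outlier.
ratios = [20.0, 25.0, 30.0, 700.0]

arithmetic = sum(ratios) / len(ratios)
geometric = geometric_mean(ratios)

print(f"arithmetic mean: {arithmetic:.1f}")
print(f"geometric mean:  {geometric:.1f}")
```

The arithmetic mean is dominated by the single outlier, while the geometric mean stays closer to the typical ratio, which is one reason it is often preferred for summarizing normalized benchmark results.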