Recall from Tuesday: Inner Products

- Slides: 5

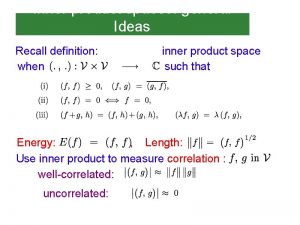

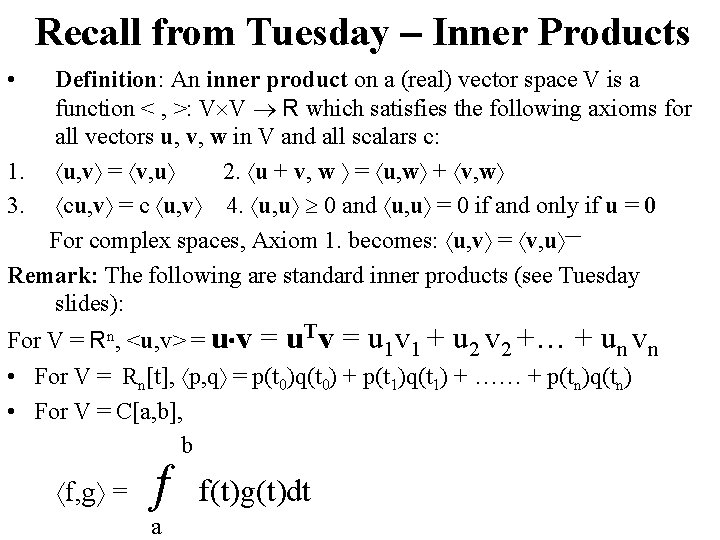

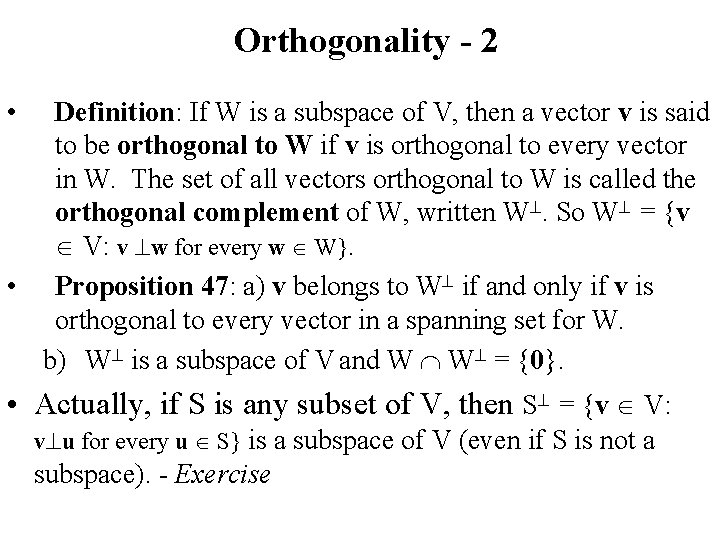

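Recall from Tuesday: Inner Products

- Definition: An inner product on a (real) vector space $V$ is a function $\langle \cdot , \cdot \rangle : V \times V \to \mathbb{R}$ which satisfies the following axioms for all vectors $u, v, w$ in $V$ and all scalars $c$:
  1. $\langle u, v \rangle = \langle v, u \rangle$
  2. $\langle u + v, w \rangle = \langle u, w \rangle + \langle v, w \rangle$
  3. $\langle cu, v \rangle = c \langle u, v \rangle$
  4. $\langle u, u \rangle \ge 0$, and $\langle u, u \rangle = 0$ if and only if $u = 0$

  For complex spaces, Axiom 1 becomes $\langle u, v \rangle = \overline{\langle v, u \rangle}$.
- Remark: The following are standard inner products (see Tuesday slides):
  - For $V = \mathbb{R}^n$: $\langle u, v \rangle = u \cdot v = u^T v = u_1 v_1 + u_2 v_2 + \dots + u_n v_n$
  - For $V = \mathbb{R}_n[t]$: $\langle p, q \rangle = p(t_0) q(t_0) + p(t_1) q(t_1) + \dots + p(t_n) q(t_n)$
  - For $V = C[a, b]$: $\langle f, g \rangle = \int_a^b f(t) g(t) \, dt$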
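As a rough illustration of the three standard inner products in the remark, here is a minimal Python sketch. The function names (`dot`, `eval_inner`, `integral_inner`) are hypothetical and not from the slides. The evaluation points are assumed to be distinct, and the integral is only approximated numerically with a midpoint rule, which is an assumption made for the sake of a runnable example.

```python
def dot(u, v):
    """Standard inner product on R^n: u_1 v_1 + ... + u_n v_n."""
    return sum(ui * vi for ui, vi in zip(u, v))


def eval_inner(p, q, points):
    """Evaluation inner product on polynomials:
    p(t_0) q(t_0) + ... + p(t_n) q(t_n) for the given points."""
    return sum(p(t) * q(t) for t in points)


def integral_inner(f, g, a, b, n=1000):
    """Midpoint-rule approximation of the integral of f(t) g(t) over [a, b].
    This is a numerical stand-in for the exact integral, not the integral itself."""
    h = (b - a) / n
    total = 0.0
    for k in range(n):
        t = a + (k + 0.5) * h  # midpoint of the k-th subinterval
        total += f(t) * g(t)
    return h * total


# Example: the dot product of (1, 2, 3) and (4, 5, 6) is 4 + 10 + 18 = 32.
print(dot([1, 2, 3], [4, 5, 6]))
```

Symmetry (Axiom 1) is visible directly in each formula, since swapping the two arguments only reorders the factors in each product.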

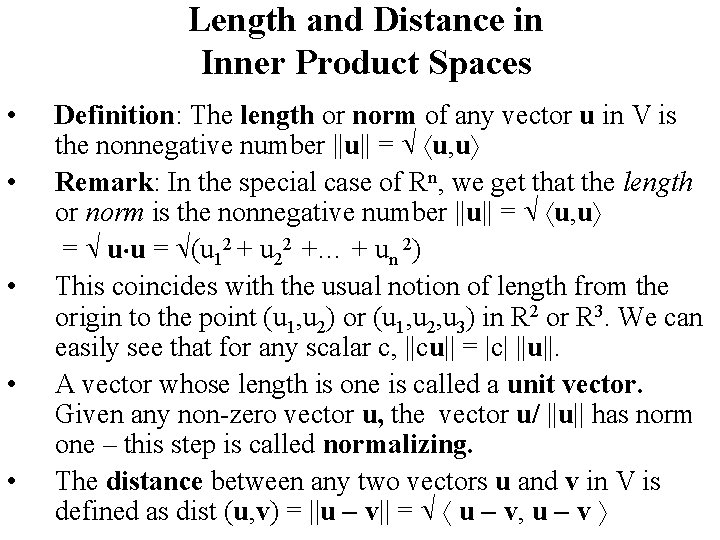

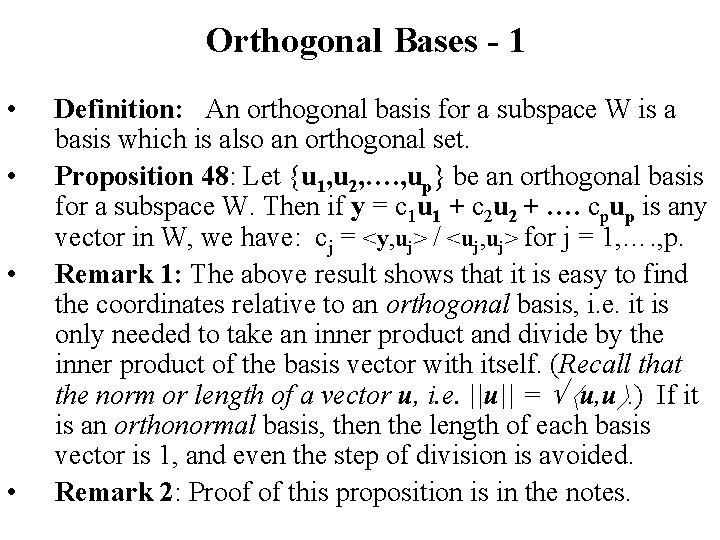

Length and Distance in Inner Product Spaces

- Definition: The length or norm of any vector $u$ in $V$ is the nonnegative number $\|u\| = \sqrt{\langle u, u \rangle}$.
- Remark: In the special case of $\mathbb{R}^n$, the length or norm is the nonnegative number $\|u\| = \sqrt{\langle u, u \rangle} = \sqrt{u \cdot u} = \sqrt{u_1^2 + u_2^2 + \dots + u_n^2}$. This coincides with the usual notion of length from the origin to the point $(u_1, u_2)$ or $(u_1, u_2, u_3)$ in $\mathbb{R}^2$ or $\mathbb{R}^3$.
- We can easily see that for any scalar $c$, $\|cu\| = |c| \, \|u\|$.
- A vector whose length is one is called a unit vector. Given any nonzero vector $u$, the vector $u / \|u\|$ has norm one; this step is called normalizing.
- The distance between any two vectors $u$ and $v$ in $V$ is defined as $\operatorname{dist}(u, v) = \|u - v\| = \sqrt{\langle u - v, u - v \rangle}$.
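The definitions on this slide translate almost line by line into code. The sketch below assumes vectors in $\mathbb{R}^n$ stored as Python lists and uses the standard dot product by default. The names `norm`, `normalize` and `dist`, and the `inner` parameter, are illustrative choices rather than anything fixed by the slides.

```python
import math


def dot(u, v):
    """Standard inner product on R^n."""
    return sum(ui * vi for ui, vi in zip(u, v))


def norm(u, inner=dot):
    """Length of u: the square root of <u, u>."""
    return math.sqrt(inner(u, u))


def normalize(u, inner=dot):
    """Return u / ||u||; u must be nonzero."""
    length = norm(u, inner)
    if length == 0:
        raise ValueError("cannot normalize the zero vector")
    return [ui / length for ui in u]


def dist(u, v, inner=dot):
    """Distance between u and v: ||u - v||."""
    diff = [ui - vi for ui, vi in zip(u, v)]
    return norm(diff, inner)


print(norm([3, 4]))             # 5.0
print(dist([1, 1], [4, 5]))     # 5.0, since the difference is (-3, -4)
print(norm(normalize([3, 4])))  # approximately 1
```

Passing a different function as `inner` gives the norm and distance induced by that inner product instead of the dot product.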

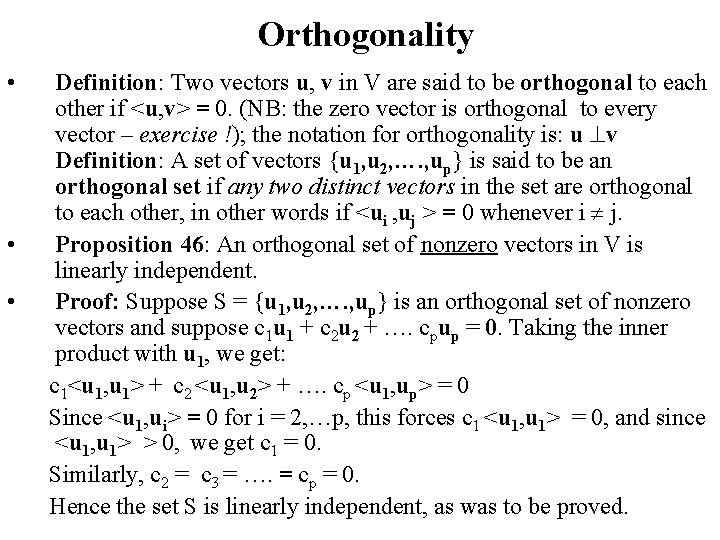

Orthogonality

- Definition: Two vectors $u, v$ in $V$ are said to be orthogonal to each other if $\langle u, v \rangle = 0$. (NB: the zero vector is orthogonal to every vector; exercise!) The notation for orthogonality is $u \perp v$.
- Definition: A set of vectors $\{u_1, u_2, \dots, u_p\}$ is said to be an orthogonal set if any two distinct vectors in the set are orthogonal to each other, in other words if $\langle u_i, u_j \rangle = 0$ whenever $i \ne j$.
- Proposition 46: An orthogonal set of nonzero vectors in $V$ is linearly independent.

  Proof: Suppose $S = \{u_1, u_2, \dots, u_p\}$ is an orthogonal set of nonzero vectors and suppose $c_1 u_1 + c_2 u_2 + \dots + c_p u_p = 0$. Taking the inner product with $u_1$, we get $c_1 \langle u_1, u_1 \rangle + c_2 \langle u_1, u_2 \rangle + \dots + c_p \langle u_1, u_p \rangle = 0$. Since $\langle u_1, u_i \rangle = 0$ for $i = 2, \dots, p$, this forces $c_1 \langle u_1, u_1 \rangle = 0$, and since $\langle u_1, u_1 \rangle > 0$ (because $u_1 \ne 0$), we get $c_1 = 0$. Similarly, $c_2 = c_3 = \dots = c_p = 0$. Hence the set $S$ is linearly independent, as was to be proved.
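The definition of an orthogonal set amounts to a pairwise check, which the following sketch makes explicit. It assumes vectors in $\mathbb{R}^n$ with the standard dot product. The function name `is_orthogonal_set` and the tolerance `tol` (used to absorb floating-point rounding) are assumptions for illustration.

```python
def dot(u, v):
    """Standard inner product on R^n."""
    return sum(ui * vi for ui, vi in zip(u, v))


def is_orthogonal_set(vectors, inner=dot, tol=1e-12):
    """Return True if <u_i, u_j> is (numerically) zero whenever i != j."""
    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            # Symmetry of the inner product means checking i < j is enough.
            if abs(inner(vectors[i], vectors[j])) > tol:
                return False
    return True


print(is_orthogonal_set([[1, 1, 0], [1, -1, 0], [0, 0, 2]]))  # True
print(is_orthogonal_set([[1, 0], [1, 1]]))                    # False
```

Note that the check accepts sets containing the zero vector, in line with the NB above; Proposition 46 only applies once the zero vector is excluded.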

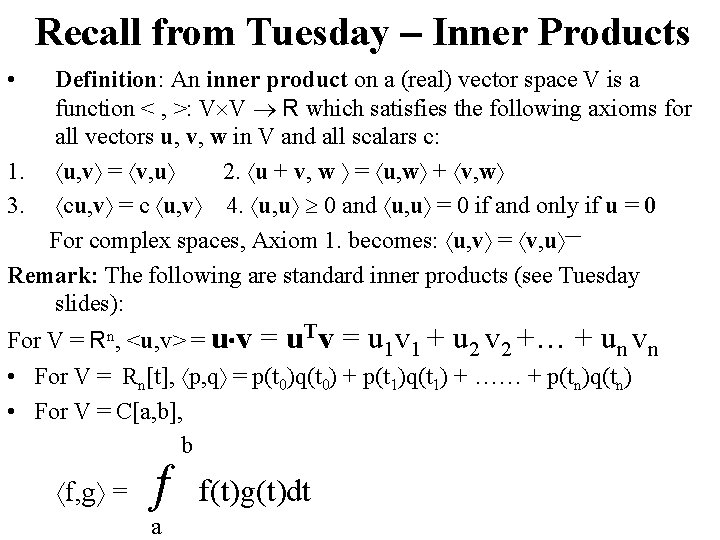

Orthogonality - 2

- Definition: If $W$ is a subspace of $V$, then a vector $v$ is said to be orthogonal to $W$ if $v$ is orthogonal to every vector in $W$. The set of all vectors orthogonal to $W$ is called the orthogonal complement of $W$, written $W^\perp$. So $W^\perp = \{v \in V : v \perp w \text{ for every } w \in W\}$.
- Proposition 47:
  - a) $v$ belongs to $W^\perp$ if and only if $v$ is orthogonal to every vector in a spanning set for $W$.
  - b) $W^\perp$ is a subspace of $V$ and $W \cap W^\perp = \{0\}$.
- Actually, if $S$ is any subset of $V$, then $S^\perp = \{v \in V : v \perp u \text{ for every } u \in S\}$ is a subspace of $V$ (even if $S$ is not a subspace). (Exercise.)
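Proposition 47(a) is what makes membership in the orthogonal complement checkable in practice: only finitely many inner products with a spanning set are needed. A minimal sketch, assuming $V = \mathbb{R}^n$ with the dot product and a hypothetical helper name `in_orthogonal_complement`:

```python
def dot(u, v):
    """Standard inner product on R^n."""
    return sum(ui * vi for ui, vi in zip(u, v))


def in_orthogonal_complement(v, spanning_set, inner=dot, tol=1e-12):
    """Test whether v is orthogonal to every vector in a spanning set for W.
    By Proposition 47(a), this is equivalent to v lying in the orthogonal
    complement of W."""
    return all(abs(inner(v, w)) <= tol for w in spanning_set)


# W = the xy-plane in R^3, spanned by e1 and e2.
W_span = [[1, 0, 0], [0, 1, 0]]
print(in_orthogonal_complement([0, 0, 5], W_span))  # True
print(in_orthogonal_complement([1, 0, 1], W_span))  # False
```

The zero vector passes the test for any spanning set, consistent with part (b): it lies in both $W$ and its orthogonal complement.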

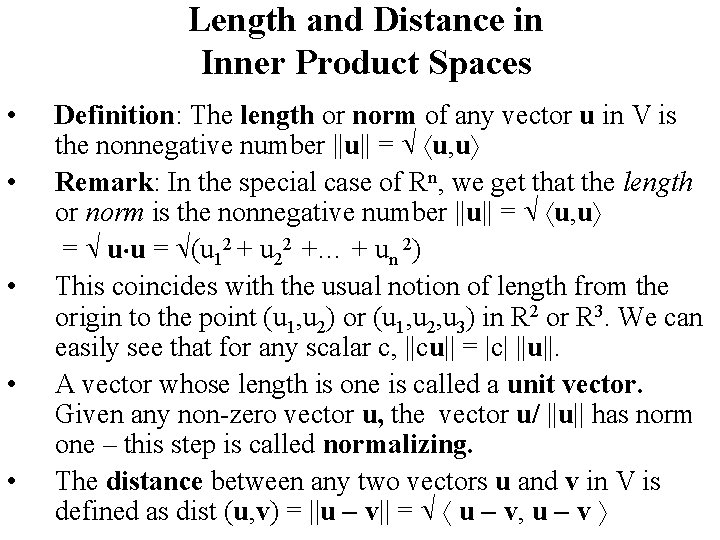

Orthogonal Bases - 1

- Definition: An orthogonal basis for a subspace $W$ is a basis which is also an orthogonal set.
- Proposition 48: Let $\{u_1, u_2, \dots, u_p\}$ be an orthogonal basis for a subspace $W$. Then if $y = c_1 u_1 + c_2 u_2 + \dots + c_p u_p$ is any vector in $W$, we have $c_j = \langle y, u_j \rangle / \langle u_j, u_j \rangle$ for $j = 1, \dots, p$.
- Remark 1: The above result shows that it is easy to find the coordinates relative to an orthogonal basis: one only needs to take an inner product and divide by the inner product of the basis vector with itself. (Recall that the norm or length of a vector $u$ is $\|u\| = \sqrt{\langle u, u \rangle}$.) If the basis is orthonormal, then the length of each basis vector is 1, so $\langle u_j, u_j \rangle = 1$ and even the division step is avoided.
- Remark 2: The proof of this proposition is in the notes.
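The coordinate formula in Proposition 48 can be tried out on a small example. The sketch below assumes the standard dot product on $\mathbb{R}^2$ and an orthogonal basis chosen purely for illustration. The names `coordinates` and `combine` are hypothetical.

```python
def dot(u, v):
    """Standard inner product on R^n."""
    return sum(ui * vi for ui, vi in zip(u, v))


def coordinates(y, basis, inner=dot):
    """Coordinates of y relative to an orthogonal basis:
    c_j = <y, u_j> / <u_j, u_j>. Assumes y lies in the span of the basis."""
    return [inner(y, u) / inner(u, u) for u in basis]


def combine(coeffs, basis):
    """Rebuild c_1 u_1 + ... + c_p u_p from coefficients and basis vectors."""
    n = len(basis[0])
    return [sum(c * u[k] for c, u in zip(coeffs, basis)) for k in range(n)]


basis = [[1, 1], [1, -1]]  # orthogonal: 1*1 + 1*(-1) = 0
y = [3, 1]
c = coordinates(y, basis)
print(c)                  # [2.0, 1.0]
print(combine(c, basis))  # [3.0, 1.0], which recovers y
```

No linear system is solved here: each coordinate comes from two inner products, which is exactly the convenience Remark 1 points out.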