Real-Time Shading Using Programmable Graphics Hardware: Shader Programming

Wan-Chun Ma, National Taiwan University

Today's Schedule
- Cg Programming
- Cg Runtime Setup in OpenGL
- Multi-texturing in OpenGL
- Bump Mapping

Cg Programming

Data Types
- float = 32-bit IEEE floating point
- half = 16-bit IEEE-like floating point
- fixed = 12-bit fixed point, clamped to [-2, 2) (OpenGL only)
- bool = boolean
- sampler = handle to a texture sampler

Declarations of Array / Vector / Matrix
- Declare vectors (up to length 4) and matrices (up to size 4x4) using built-in data types:
  - float4 mycolor;
  - float3x3 mymatrix;
- Declare more general arrays exactly as in C:
  - float lightpower[4];
  - But arrays are first-class types, not pointers
  - Implementations may subset array capabilities to match HW restrictions

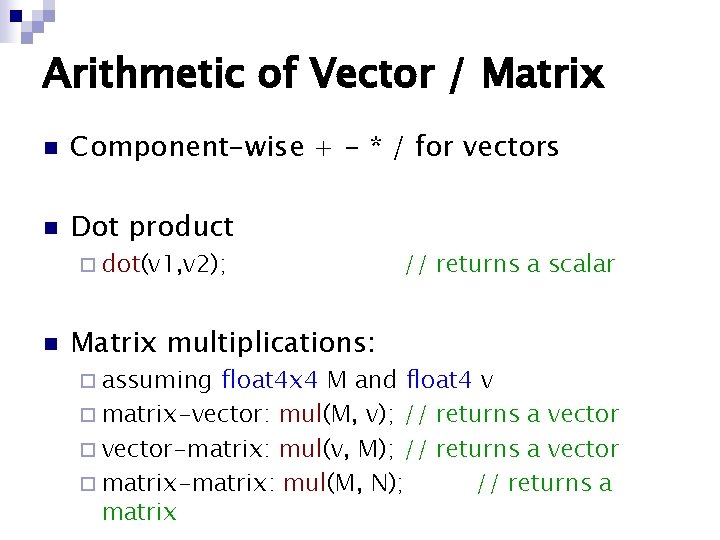

Arithmetic of Vector / Matrix
- Component-wise + - * / for vectors
- Dot product
  - dot(v1, v2); // returns a scalar
- Matrix multiplications (assuming float4x4 M and float4 v):
  - matrix-vector: mul(M, v); // returns a vector
  - vector-matrix: mul(v, M); // returns a vector
  - matrix-matrix: mul(M, N); // returns a matrix
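To make the difference between mul(M, v) and mul(v, M) concrete, here is a minimal CPU-side sketch in C. The `vec4` and `mat4` types and the function names are hypothetical stand-ins (not part of Cg), and row-major storage `m[row][col]` is an assumption of this sketch.

```c
/* Hypothetical CPU-side stand-ins for Cg's float4 and float4x4. */
typedef struct { float x, y, z, w; } vec4;
typedef struct { float m[4][4]; } mat4;   /* m[row][col], an assumption */

/* Mirrors dot(a, b): returns a scalar. */
static float dot4(vec4 a, vec4 b) {
    return a.x * b.x + a.y * b.y + a.z * b.z + a.w * b.w;
}

/* Mirrors mul(M, v): v is a column vector, so each output
   component is one row of M dotted with v. */
static vec4 mul_mat_vec(const mat4 *M, vec4 v) {
    vec4 r;
    r.x = M->m[0][0]*v.x + M->m[0][1]*v.y + M->m[0][2]*v.z + M->m[0][3]*v.w;
    r.y = M->m[1][0]*v.x + M->m[1][1]*v.y + M->m[1][2]*v.z + M->m[1][3]*v.w;
    r.z = M->m[2][0]*v.x + M->m[2][1]*v.y + M->m[2][2]*v.z + M->m[2][3]*v.w;
    r.w = M->m[3][0]*v.x + M->m[3][1]*v.y + M->m[3][2]*v.z + M->m[3][3]*v.w;
    return r;
}

/* Mirrors mul(v, M): v is a row vector, so each output
   component is v dotted with one column of M. */
static vec4 mul_vec_mat(vec4 v, const mat4 *M) {
    vec4 r;
    r.x = v.x*M->m[0][0] + v.y*M->m[1][0] + v.z*M->m[2][0] + v.w*M->m[3][0];
    r.y = v.x*M->m[0][1] + v.y*M->m[1][1] + v.z*M->m[2][1] + v.w*M->m[3][1];
    r.z = v.x*M->m[0][2] + v.y*M->m[1][2] + v.z*M->m[2][2] + v.w*M->m[3][2];
    r.w = v.x*M->m[0][3] + v.y*M->m[1][3] + v.z*M->m[2][3] + v.w*M->m[3][3];
    return r;
}
```

For a non-symmetric M the two products generally differ, which is why the argument order in mul() matters.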

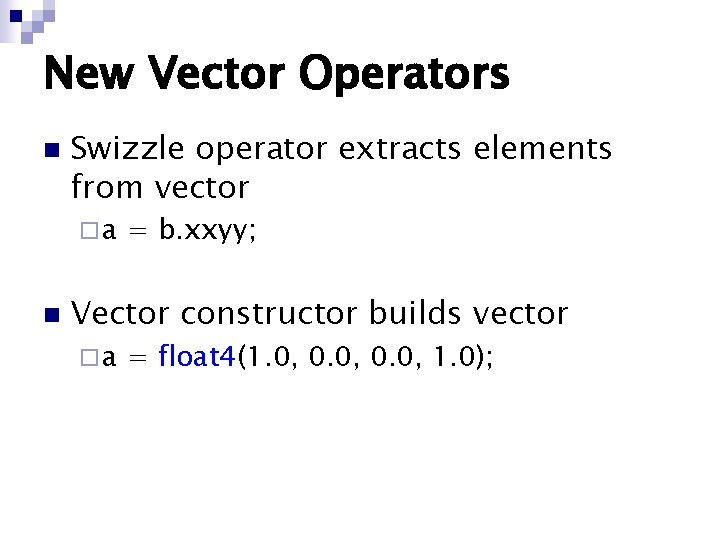

New Vector Operators
- Swizzle operator extracts elements from a vector
  - a = b.xxyy;
- Vector constructor builds a vector
  - a = float4(1.0, 0.0, 0.0, 1.0);
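As a plain-C illustration of what the swizzle b.xxyy selects, the following sketch spells out the component copy by hand. The `vec4` type and `swizzle_xxyy` are hypothetical helpers for this example only; in Cg the swizzle is a built-in operator.

```c
/* Hypothetical CPU-side stand-in for Cg's float4. */
typedef struct { float x, y, z, w; } vec4;

/* Equivalent of the Cg expression b.xxyy:
   result = (b.x, b.x, b.y, b.y). */
static vec4 swizzle_xxyy(vec4 b) {
    vec4 a = { b.x, b.x, b.y, b.y };
    return a;
}
```

For b = (1, 2, 3, 4) this yields (1, 1, 2, 2); the z and w components of b are simply not referenced.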

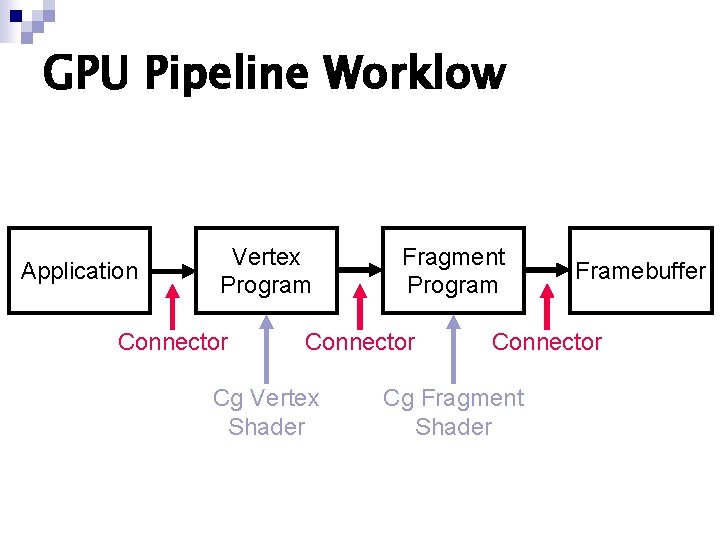

GPU Pipeline Workflow
Application -> (connector) -> Vertex Program (Cg vertex shader) -> (connector) -> Fragment Program (Cg fragment shader) -> (connector) -> Framebuffer

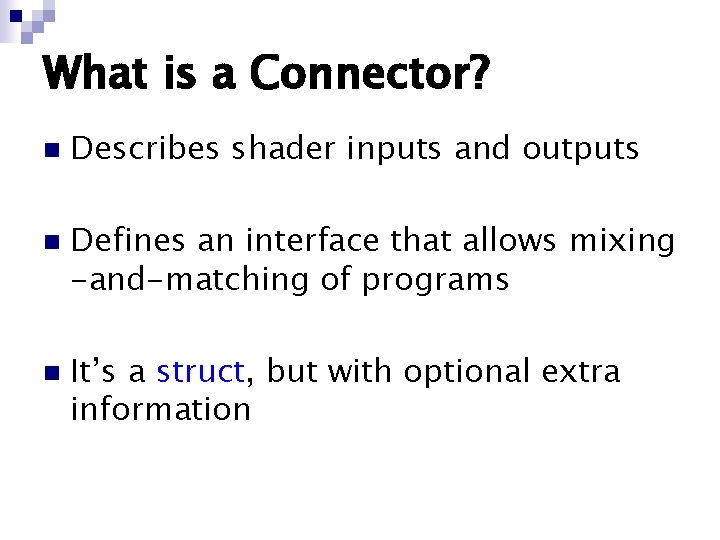

What is a Connector?
- Describes shader inputs and outputs
- Defines an interface that allows mixing and matching of programs
- It's a struct, but with optional extra information

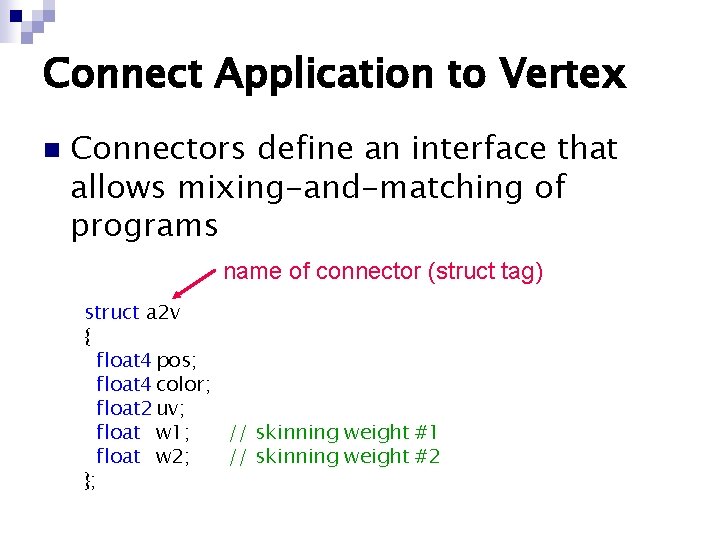

Connect Application to Vertex
- Connectors define an interface that allows mixing and matching of programs

    struct a2v {            // a2v: name of connector (struct tag)
        float4 pos;
        float4 color;
        float2 uv;
        float  w1;          // skinning weight #1
        float  w2;          // skinning weight #2
    };

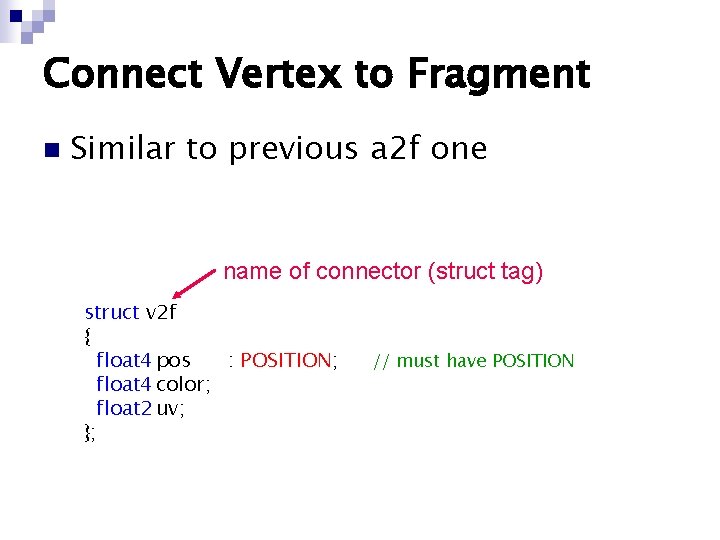

Connect Vertex to Fragment
- Similar to the previous a2v connector

    struct v2f {                  // v2f: name of connector (struct tag)
        float4 pos : POSITION;    // must have POSITION
        float4 color;
        float2 uv;
    };

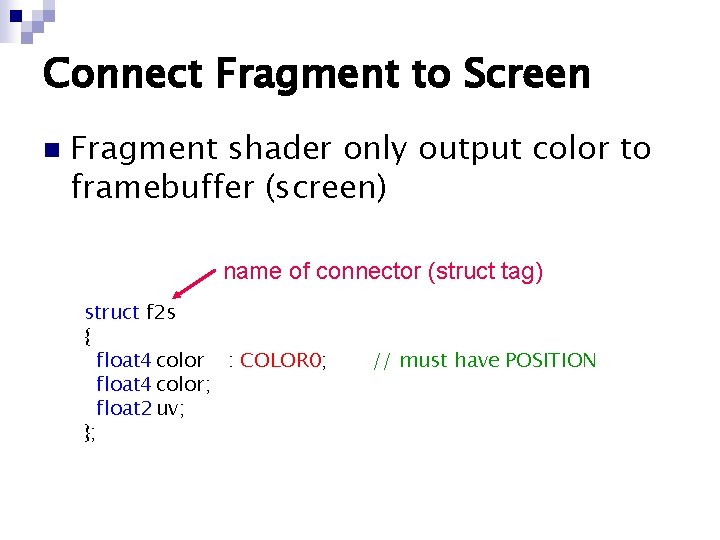

Connect Fragment to Screen
- The fragment shader only outputs a color to the framebuffer (screen)

    struct f2s {                  // f2s: name of connector (struct tag)
        float4 color : COLOR0;
    };

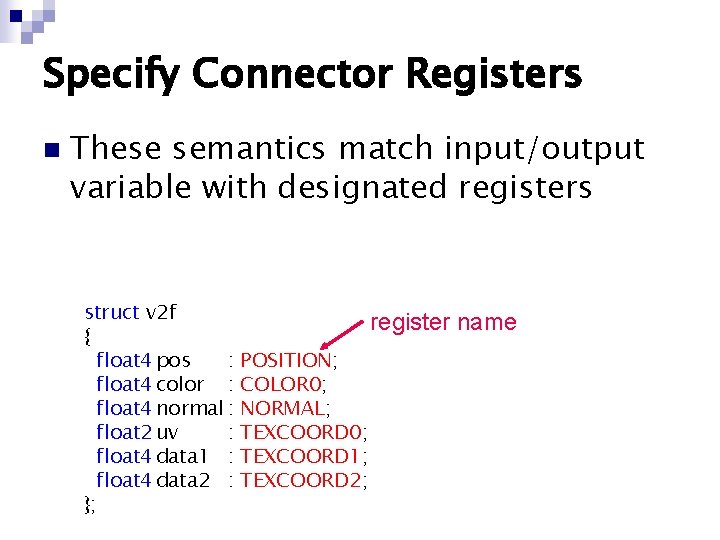

Specify Connector Registers
- These semantics match each input/output variable with a designated register

    struct v2f {
        float4 pos    : POSITION;     // register name follows the colon
        float4 color  : COLOR0;
        float4 normal : NORMAL;
        float2 uv     : TEXCOORD0;
        float4 data1  : TEXCOORD1;
        float4 data2  : TEXCOORD2;
    };

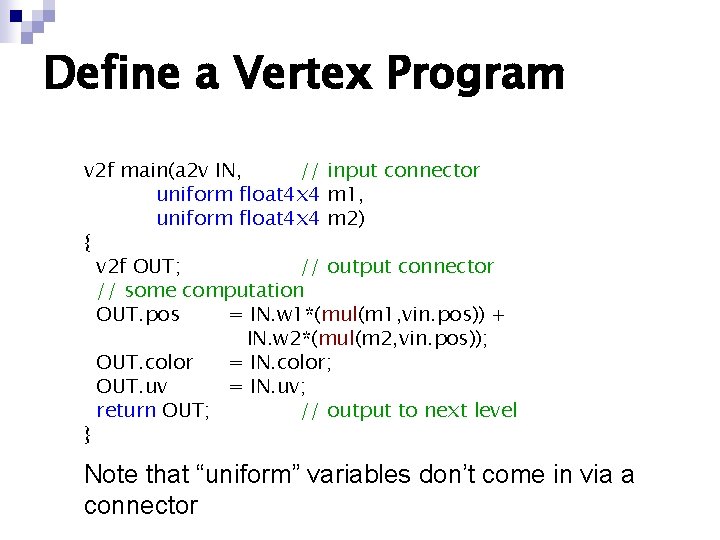

Define a Vertex Program

    v2f main(a2v IN,                      // input connector
             uniform float4x4 m1,
             uniform float4x4 m2)
    {
        v2f OUT;                          // output connector
        // some computation: blend the two skinned positions by their weights
        OUT.pos = IN.w1*(mul(m1, IN.pos)) + IN.w2*(mul(m2, IN.pos));
        OUT.color = IN.color;
        OUT.uv = IN.uv;
        return OUT;                       // output to next level
    }

- Note that "uniform" variables don't come in via a connector
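The position computation in this vertex program is a two-matrix weighted blend. A minimal CPU-side sketch of the same arithmetic in C follows; the `vec4`/`mat4` types, the row-major layout, and the function names are assumptions of this sketch, not part of the slides.

```c
/* Hypothetical CPU-side stand-ins for Cg's float4 and float4x4. */
typedef struct { float x, y, z, w; } vec4;
typedef struct { float m[4][4]; } mat4;   /* m[row][col], an assumption */

/* Column-vector product, mirroring Cg's mul(M, v). */
static vec4 mul_mat_vec(const mat4 *M, vec4 v) {
    vec4 r;
    r.x = M->m[0][0]*v.x + M->m[0][1]*v.y + M->m[0][2]*v.z + M->m[0][3]*v.w;
    r.y = M->m[1][0]*v.x + M->m[1][1]*v.y + M->m[1][2]*v.z + M->m[1][3]*v.w;
    r.z = M->m[2][0]*v.x + M->m[2][1]*v.y + M->m[2][2]*v.z + M->m[2][3]*v.w;
    r.w = M->m[3][0]*v.x + M->m[3][1]*v.y + M->m[3][2]*v.z + M->m[3][3]*v.w;
    return r;
}

/* Same arithmetic as OUT.pos = w1*mul(m1, pos) + w2*mul(m2, pos). */
static vec4 skin_position(const mat4 *m1, const mat4 *m2,
                          float w1, float w2, vec4 pos) {
    vec4 a = mul_mat_vec(m1, pos);
    vec4 b = mul_mat_vec(m2, pos);
    vec4 r = { w1*a.x + w2*b.x, w1*a.y + w2*b.y,
               w1*a.z + w2*b.z, w1*a.w + w2*b.w };
    return r;
}
```

With an identity matrix, a translation by (2, 0, 0), and equal weights of 0.5, the origin ends up halfway between the two transformed positions, at (1, 0, 0).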

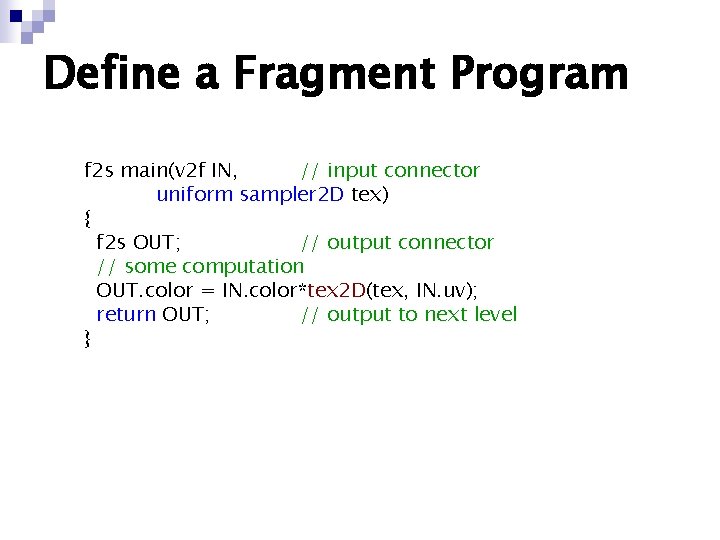

Define a Fragment Program

    f2s main(v2f IN,                      // input connector
             uniform sampler2D tex)
    {
        f2s OUT;                          // output connector
        // some computation: modulate the interpolated color by the texture
        OUT.color = IN.color*tex2D(tex, IN.uv);
        return OUT;                       // output to next level
    }

Cg Runtime Setup in OpenGL

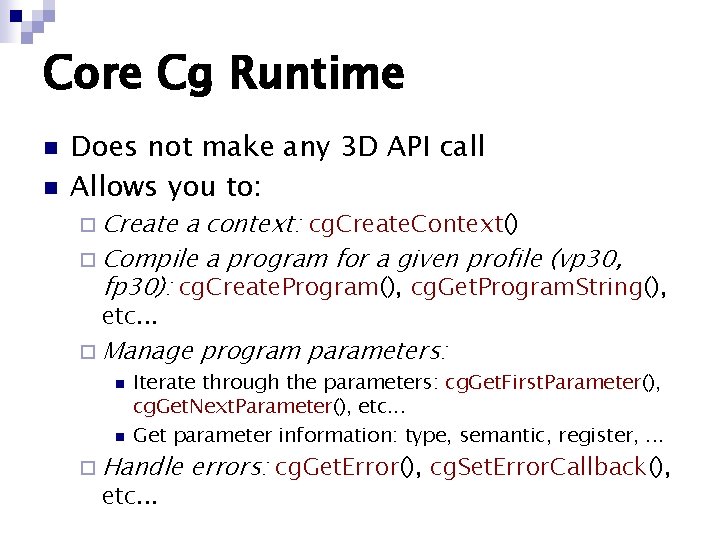

Core Cg Runtime
- Does not make any 3D API call
- Allows you to:
  - Create a context: cgCreateContext()
  - Compile a program for a given profile (vp30, fp30): cgCreateProgram(), cgGetProgramString(), etc.
  - Manage program parameters:
    - Iterate through the parameters: cgGetFirstParameter(), cgGetNextParameter(), etc.
    - Get parameter information: type, semantic, register, ...
  - Handle errors: cgGetError(), cgSetErrorCallback(), etc.

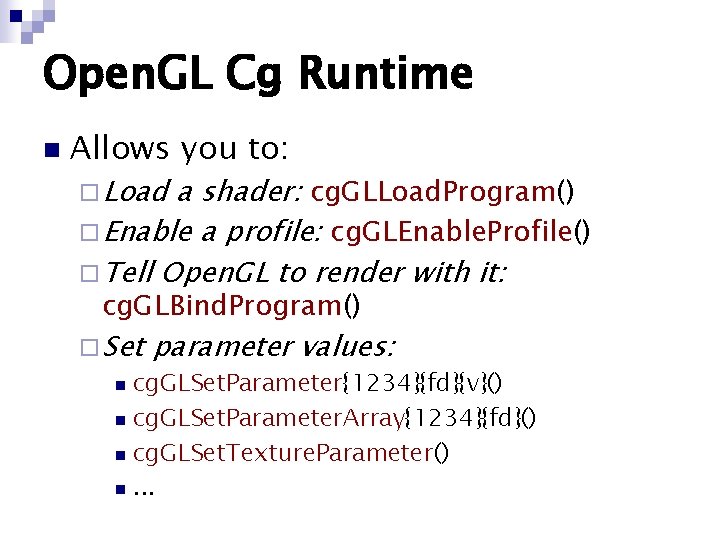

OpenGL Cg Runtime
- Allows you to:
  - Load a shader: cgGLLoadProgram()
  - Enable a profile: cgGLEnableProfile()
  - Tell OpenGL to render with it: cgGLBindProgram()
  - Set parameter values:
    - cgGLSetParameter{1234}{fd}{v}()
    - cgGLSetParameterArray{1234}{fd}()
    - cgGLSetTextureParameter()
    - ...

Coffee Break
- Next section: Multi-texturing in OpenGL

Multi-texturing in OpenGL

What is Multi-texturing
- As the name suggests, multi-texturing means displaying more than one texture at the same time
- Usually an object is mapped with several textures
  - Textures: diffuse, specular, glossy, and other shading coefficients
  - Maps: depth, normal, tangent

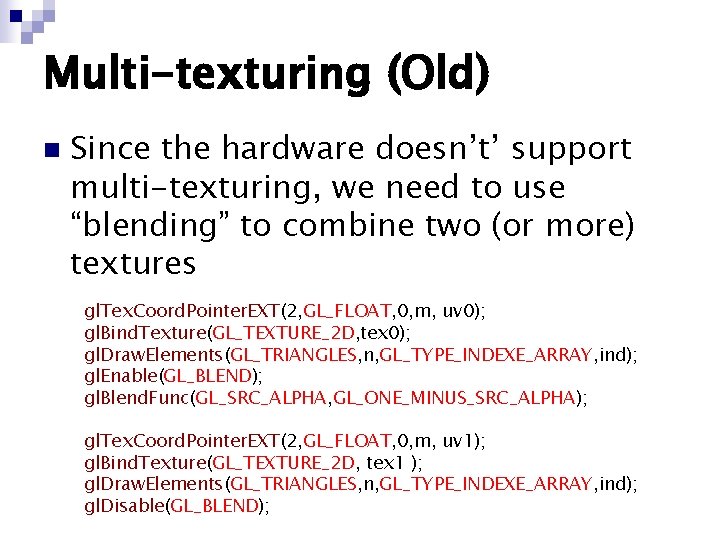

Multi-texturing (Old)
- On hardware without multi-texturing support, we need to use "blending" to combine two (or more) textures

    // first pass: draw with texture 0
    // (GL_TYPE_INDEXE_ARRAY stands for the index type, e.g. GL_UNSIGNED_INT)
    glTexCoordPointerEXT(2, GL_FLOAT, 0, m, uv0);
    glBindTexture(GL_TEXTURE_2D, tex0);
    glDrawElements(GL_TRIANGLES, n, GL_TYPE_INDEXE_ARRAY, ind);

    // second pass: draw again with texture 1, blended over the first pass
    glEnable(GL_BLEND);
    glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);
    glTexCoordPointerEXT(2, GL_FLOAT, 0, m, uv1);
    glBindTexture(GL_TEXTURE_2D, tex1);
    glDrawElements(GL_TRIANGLES, n, GL_TYPE_INDEXE_ARRAY, ind);
    glDisable(GL_BLEND);
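The blend function GL_SRC_ALPHA / GL_ONE_MINUS_SRC_ALPHA used in the second pass weights the incoming color by its alpha and the color already in the framebuffer by one minus that alpha. A minimal C sketch of that per-channel arithmetic is shown here; the `rgba` type and function name are hypothetical, and framebuffer clamping and quantization are ignored.

```c
/* Hypothetical RGBA color with components in [0, 1]. */
typedef struct { float r, g, b, a; } rgba;

/* Arithmetic of glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA):
   out = src * src.a + dst * (1 - src.a), applied to every channel. */
static rgba blend_src_alpha(rgba src, rgba dst) {
    float sf = src.a;            /* source factor      */
    float df = 1.0f - src.a;     /* destination factor */
    rgba out = { src.r*sf + dst.r*df,
                 src.g*sf + dst.g*df,
                 src.b*sf + dst.b*df,
                 src.a*sf + dst.a*df };
    return out;
}
```

Here `dst` plays the role of the first pass (texture 0) and `src` the second pass (texture 1), so the alpha of the second texture decides how much of the first remains visible.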

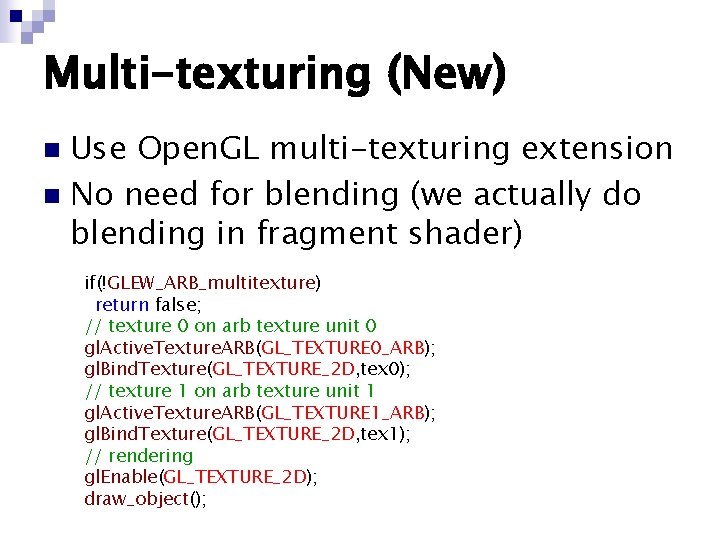

Multi-texturing (New)
- Use the OpenGL multi-texturing extension
- No need for blending (we actually do the blending in the fragment shader)

    if(!GLEW_ARB_multitexture) return false;
    // texture 0 on ARB texture unit 0
    glActiveTextureARB(GL_TEXTURE0_ARB);
    glBindTexture(GL_TEXTURE_2D, tex0);
    // texture 1 on ARB texture unit 1
    glActiveTextureARB(GL_TEXTURE1_ARB);
    glBindTexture(GL_TEXTURE_2D, tex1);
    // rendering
    glEnable(GL_TEXTURE_2D);
    draw_object();

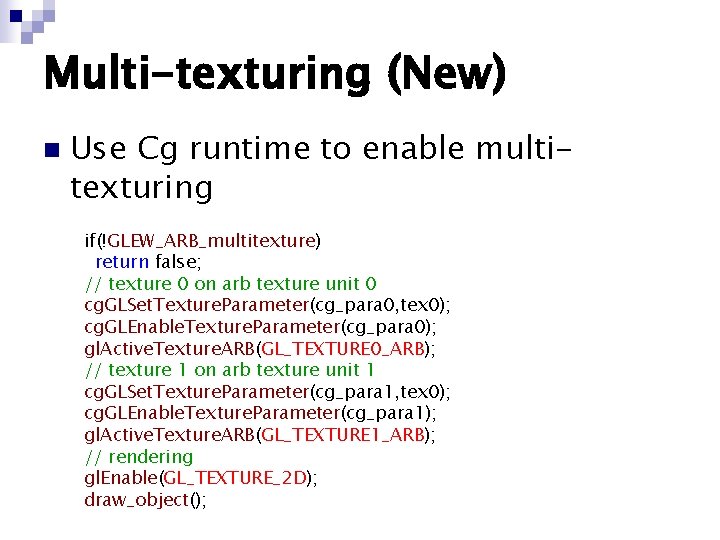

Multi-texturing (New)
- Use the Cg runtime to enable multi-texturing

    if(!GLEW_ARB_multitexture) return false;
    // texture 0 on ARB texture unit 0
    cgGLSetTextureParameter(cg_para0, tex0);
    cgGLEnableTextureParameter(cg_para0);
    glActiveTextureARB(GL_TEXTURE0_ARB);
    // texture 1 on ARB texture unit 1
    cgGLSetTextureParameter(cg_para1, tex1);
    cgGLEnableTextureParameter(cg_para1);
    glActiveTextureARB(GL_TEXTURE1_ARB);
    // rendering
    glEnable(GL_TEXTURE_2D);
    draw_object();

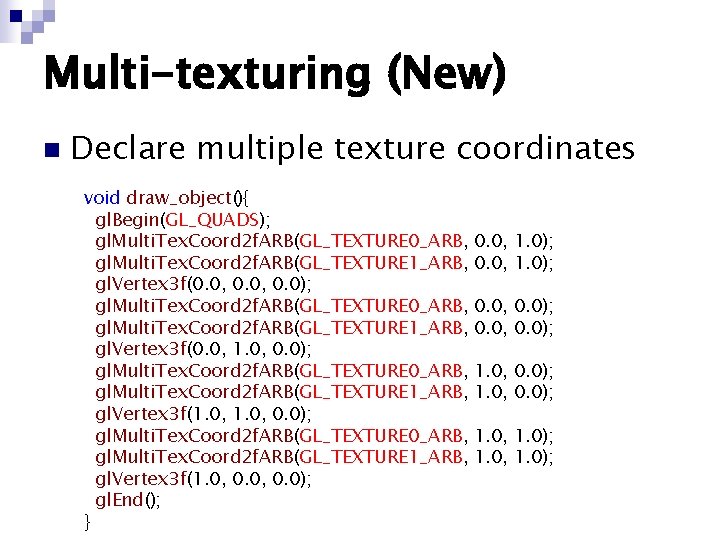

Multi-texturing (New)
- Declare multiple texture coordinates, one set per texture unit, for each vertex

    void draw_object(){
        glBegin(GL_QUADS);
        glMultiTexCoord2fARB(GL_TEXTURE0_ARB, 0.0, 0.0);
        glMultiTexCoord2fARB(GL_TEXTURE1_ARB, 0.0, 0.0);
        glVertex3f(0.0, 0.0, 0.0);
        glMultiTexCoord2fARB(GL_TEXTURE0_ARB, 0.0, 1.0);
        glMultiTexCoord2fARB(GL_TEXTURE1_ARB, 0.0, 1.0);
        glVertex3f(0.0, 1.0, 0.0);
        glMultiTexCoord2fARB(GL_TEXTURE0_ARB, 1.0, 1.0);
        glMultiTexCoord2fARB(GL_TEXTURE1_ARB, 1.0, 1.0);
        glVertex3f(1.0, 1.0, 0.0);
        glMultiTexCoord2fARB(GL_TEXTURE0_ARB, 1.0, 0.0);
        glMultiTexCoord2fARB(GL_TEXTURE1_ARB, 1.0, 0.0);
        glVertex3f(1.0, 0.0, 0.0);
        glEnd();
    }

Bump Mapping

What is Bump Mapping
- The idea is to perturb the surface normal of a given surface and use the perturbed normal to calculate the shading [Blinn 1978]
- Bump mapping simulates the bumps or wrinkles in a surface without the need for geometric modifications to the model

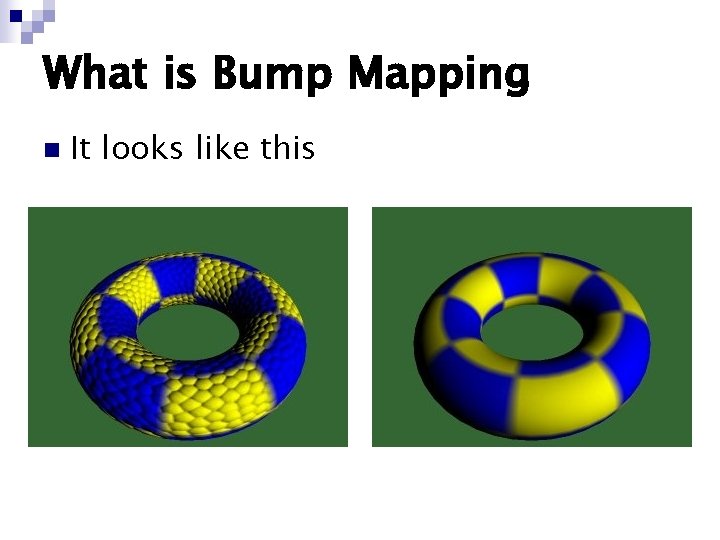

What is Bump Mapping
- It looks like this:

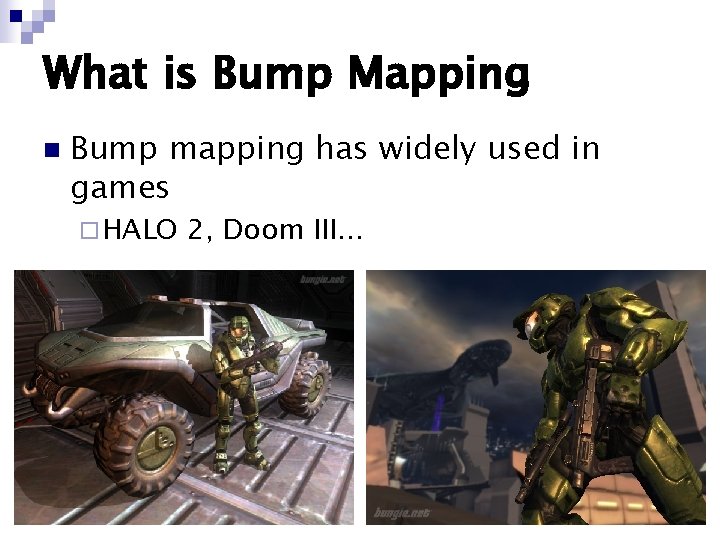

What is Bump Mapping
- Bump mapping has been widely used in games
  - HALO 2, Doom III, ...

Texture-Space Lighting
- One convenient space for per-pixel lighting operations is called texture-space
  - It represents a local coordinate system defined at each vertex of a set of geometry
  - You can think of the Z axis of texture-space as roughly parallel to the vertex normal
  - The X and Y axes are perpendicular to the Z axis and can be arbitrarily oriented around it

Why Texture-Space?
- Texture-space gives us a way to redefine the lighting coordinate system on a per-vertex basis

Why Texture-Space?
- If we used model-space or world-space bump maps, we would have to regenerate the entire bump map every time the object morphed or rotated in any way, because the bump map normals would no longer point in the correct direction in model or world space
- We would have to recompute the bump maps for each instance of each changing object every frame, which is not efficient

Why Texture-Space?
- Texture-space is a surface-local basis in which we define our normal maps
- Therefore, we must rotate the lighting and viewing directions into this space as well
- We must move the light vectors into texture-space before performing the per-pixel dot product

Generate Texture-Space
- There are mainly two ways to generate texture-space:
  - Have the artist draw a normal map and a tangent map for the corresponding model
  - When loading a model, create the per-vertex tangent-space
    - These will store the axes of the texture-space basis
    - Generate the texture-space vectors from the vertex positions and bump map texture coordinates

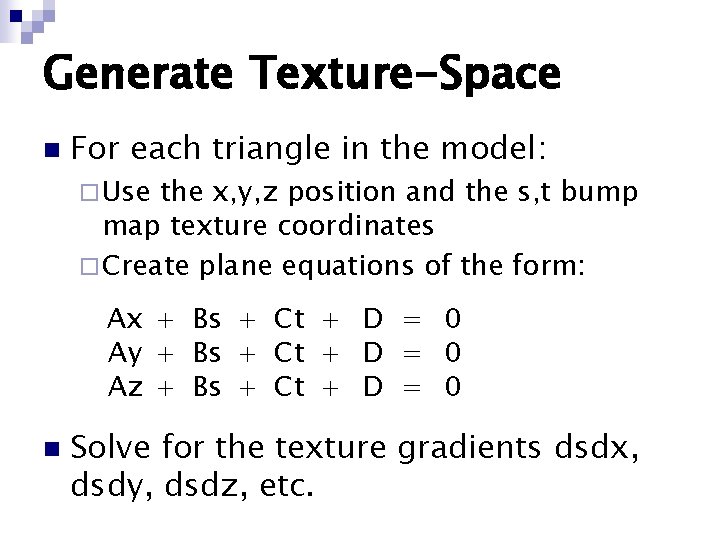

Generate Texture-Space
- For each triangle in the model:
  - Use the x, y, z position and the s, t bump map texture coordinates
  - Create plane equations of the form (each equation has its own coefficients A, B, C, D):
    - Ax + Bs + Ct + D = 0
    - Ay + Bs + Ct + D = 0
    - Az + Bs + Ct + D = 0
- Solve for the texture gradients dsdx, dsdy, dsdz, etc.
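One way to read the plane-equation step: for each position component c (x, y, or z), the three triangle corners give three points (c, s, t), and the plane through them has a normal (A, B, C). The ratios -B/A and -C/A then give how c changes along s and along t. The C sketch below follows that reading; the `vec3` type, the function names, and the interpretation of the results as the S and T axes are assumptions of this sketch, not taken verbatim from the slides.

```c
/* Hypothetical 3D vector type. */
typedef struct { float x, y, z; } vec3;

/* Fit the plane A*c + B*s + C*t + D = 0 through three (c, s, t)
   points and return -B/A and -C/A. Returns 0 if A is zero
   (degenerate texture mapping), 1 otherwise. */
static int plane_gradient(float c0, float c1, float c2,
                          const float s[3], const float t[3],
                          float *along_s, float *along_t) {
    /* Two edge vectors in (c, s, t) space. */
    float v1[3] = { c1 - c0, s[1] - s[0], t[1] - t[0] };
    float v2[3] = { c2 - c0, s[2] - s[0], t[2] - t[0] };
    /* Plane normal (A, B, C) = v1 x v2. */
    float A = v1[1]*v2[2] - v1[2]*v2[1];
    float B = v1[2]*v2[0] - v1[0]*v2[2];
    float C = v1[0]*v2[1] - v1[1]*v2[0];
    if (A == 0.0f) return 0;
    *along_s = -B / A;
    *along_t = -C / A;
    return 1;
}

/* Apply the plane fit to x, y and z to obtain the (unnormalized)
   S and T axes of one triangle. */
static int texture_axes(const vec3 p[3], const float s[3], const float t[3],
                        vec3 *S, vec3 *T) {
    return plane_gradient(p[0].x, p[1].x, p[2].x, s, t, &S->x, &T->x)
        && plane_gradient(p[0].y, p[1].y, p[2].y, s, t, &S->y, &T->y)
        && plane_gradient(p[0].z, p[1].z, p[2].z, s, t, &S->z, &T->z);
}
```

For a triangle whose texture coordinates coincide with its x and y positions, this yields S = (1, 0, 0) and T = (0, 1, 0). Per-vertex axes would typically be obtained by combining the results of the triangles sharing a vertex, which this sketch leaves out.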

Generate Texture-Space
- Now treat dsdx, dsdy, and dsdz as a 3D vector representing the S axis: (dsdx, dsdy, dsdz)
  - Usually we call it the tangent G
- Use a cross product N x S to generate T
  - Usually we call it the binormal B
  - Notice that the up axis of texture-space is close to parallel with the normal N of the vertex
  - Tangent and binormal are interchangeable
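The cross product step can be sketched in a few lines of C. The `vec3` type and `cross3` name are hypothetical; a real implementation would normally normalize the result afterwards, which is omitted here.

```c
/* Hypothetical 3D vector type. */
typedef struct { float x, y, z; } vec3;

/* Cross product a x b; with a = N (normal) and b = S (tangent),
   the result is the binormal direction. */
static vec3 cross3(vec3 a, vec3 b) {
    vec3 r = { a.y*b.z - a.z*b.y,
               a.z*b.x - a.x*b.z,
               a.x*b.y - a.y*b.x };
    return r;
}
```

With N = (0, 0, 1) and S = (1, 0, 0), N x S gives (0, 1, 0), so the three axes form a right-handed basis.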

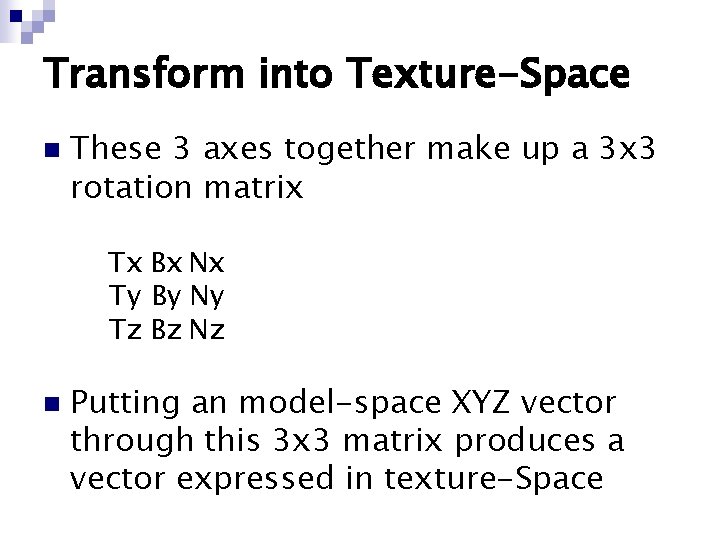

Transform into Texture-Space
- These 3 axes together make up a 3x3 rotation matrix:

    | Tx  Bx  Nx |
    | Ty  By  Ny |
    | Tz  Bz  Nz |

- Putting a model-space XYZ vector through this 3x3 matrix produces a vector expressed in texture-space
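Because the columns of this matrix are the T, B and N axes, multiplying a row vector by it amounts to three dot products. A minimal C sketch follows, assuming the axes are unit length and mutually perpendicular; the `vec3` type and function names are hypothetical.

```c
/* Hypothetical 3D vector type. */
typedef struct { float x, y, z; } vec3;

static float dot3(vec3 a, vec3 b) {
    return a.x*b.x + a.y*b.y + a.z*b.z;
}

/* Express model-space vector v in the texture-space basis (T, B, N):
   the result is (v.T, v.B, v.N). */
static vec3 to_texture_space(vec3 v, vec3 T, vec3 B, vec3 N) {
    vec3 r = { dot3(v, T), dot3(v, B), dot3(v, N) };
    return r;
}
```

A vector pointing along the normal N maps to (0, 0, 1), matching the convention that the Z axis of texture-space follows the surface normal.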

Per-Vertex Texture-Space
- Now we have what we need to move the lighting and viewing directions into a local space defined at each vertex (or fragment) via the texture-space basis matrix
- We can do this on the GPU with:
  - Vertex shader: calculate the rotated lighting and viewing directions first, then pass them to the fragment shader (as interpolated results)
  - Fragment shader: use the interpolated position for the calculation

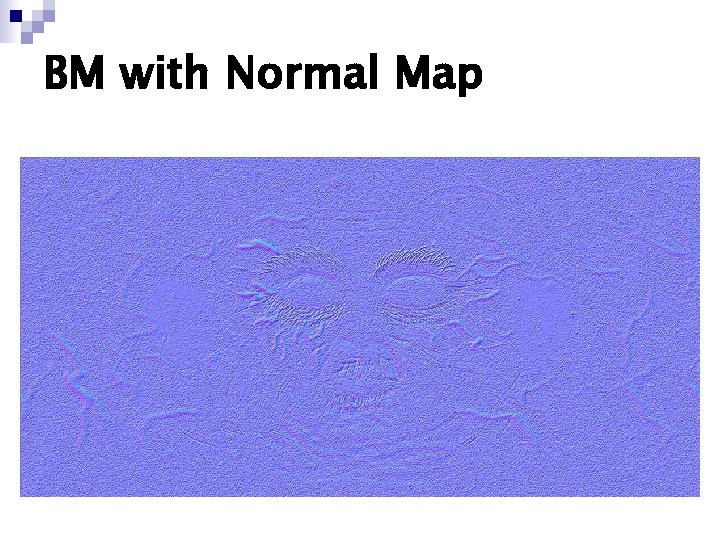

BM with Normal Map

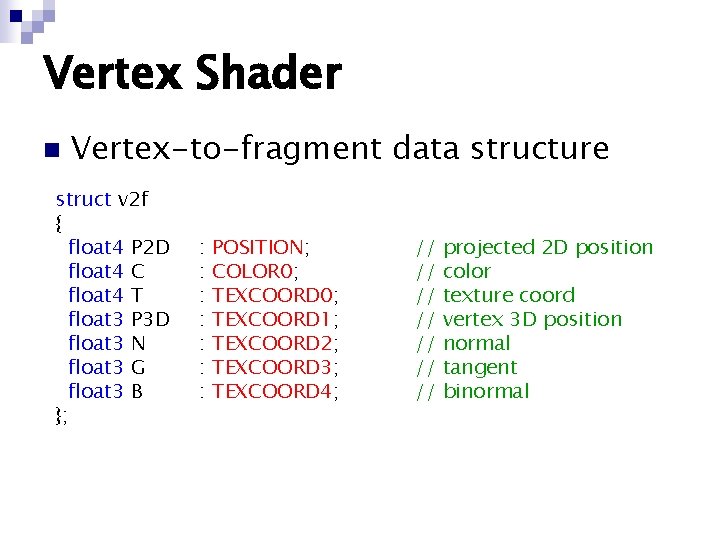

Vertex Shader
- Vertex-to-fragment data structure

    struct v2f {
        float4 P2D : POSITION;    // projected 2D position
        float4 C   : COLOR0;      // color
        float4 T   : TEXCOORD0;   // texture coord
        float3 P3D : TEXCOORD1;   // vertex 3D position
        float3 N   : TEXCOORD2;   // normal
        float3 G   : TEXCOORD3;   // tangent
        float3 B   : TEXCOORD4;   // binormal
    };

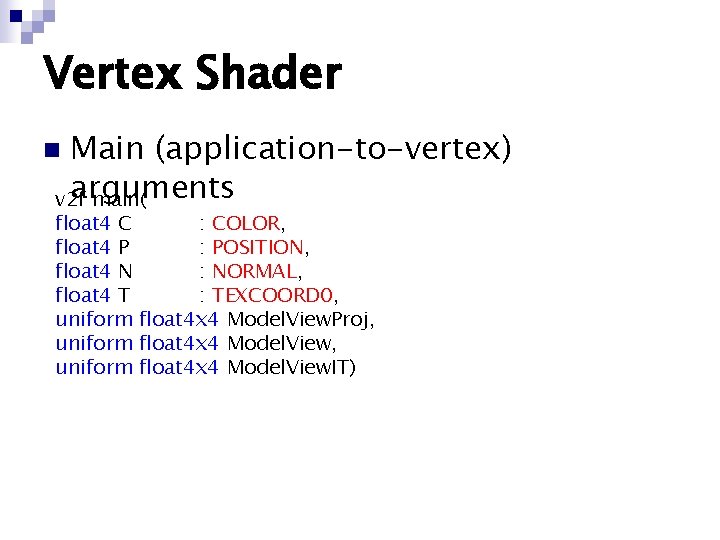

Vertex Shader
- Main (application-to-vertex) arguments

    v2f main(
        float4 C : COLOR,
        float4 P : POSITION,
        float4 N : NORMAL,
        float4 T : TEXCOORD0,
        uniform float4x4 ModelViewProj,
        uniform float4x4 ModelViewIT)

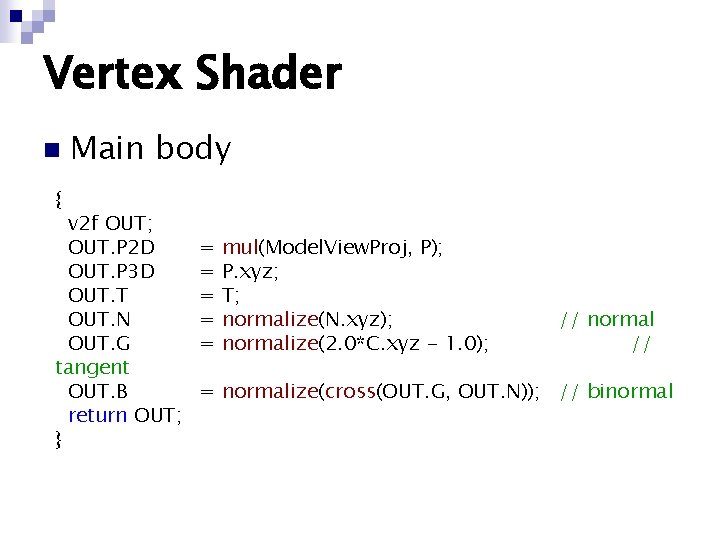

Vertex Shader
- Main body

    {
        v2f OUT;
        OUT.P2D = mul(ModelViewProj, P);
        OUT.P3D = P.xyz;
        OUT.T   = T;
        OUT.N   = normalize(N.xyz);                 // normal
        OUT.G   = normalize(2.0*C.xyz - 1.0);       // tangent, remapped from [0,1] to [-1,1]
        OUT.B   = normalize(cross(OUT.G, OUT.N));   // binormal
        return OUT;
    }

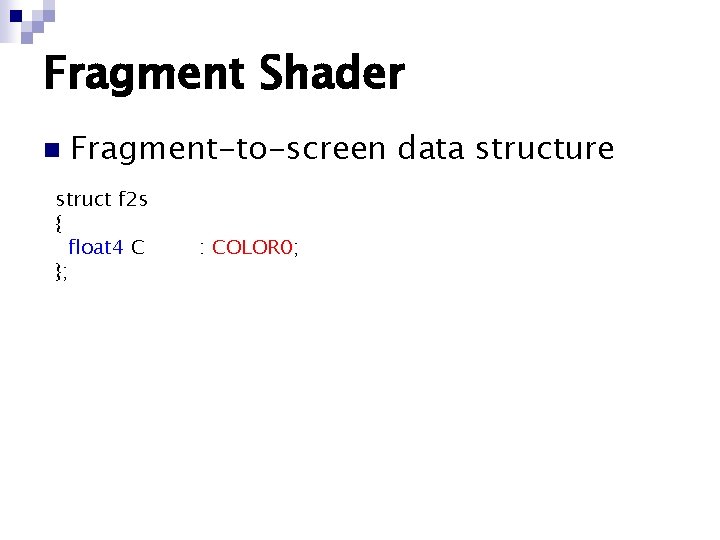

Fragment Shader
- Fragment-to-screen data structure

    struct f2s {
        float4 C : COLOR0;
    };

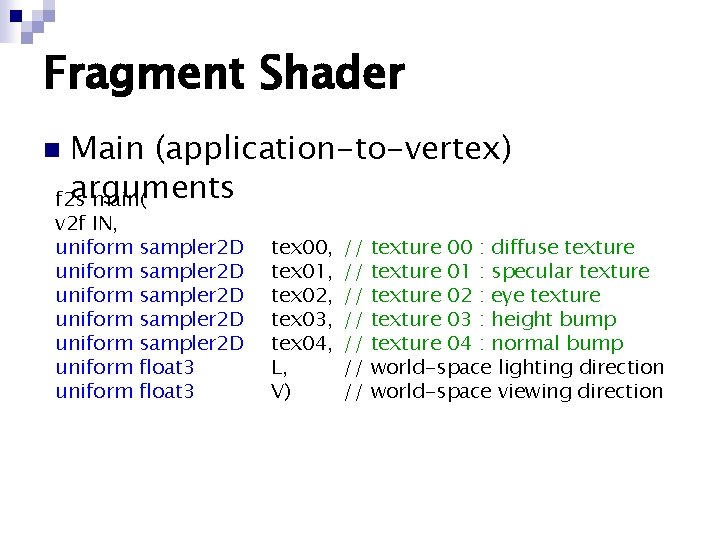

Fragment Shader
- Main (vertex-to-fragment) arguments

    f2s main(
        v2f IN,
        uniform sampler2D tex00,   // texture 00 : diffuse
        uniform sampler2D tex01,   // texture 01 : specular
        uniform sampler2D tex02,   // texture 02 : eye
        uniform sampler2D tex03,   // texture 03 : height bump
        uniform sampler2D tex04,   // texture 04 : normal bump
        uniform float3 L,          // world-space lighting direction
        uniform float3 V)          // world-space viewing direction

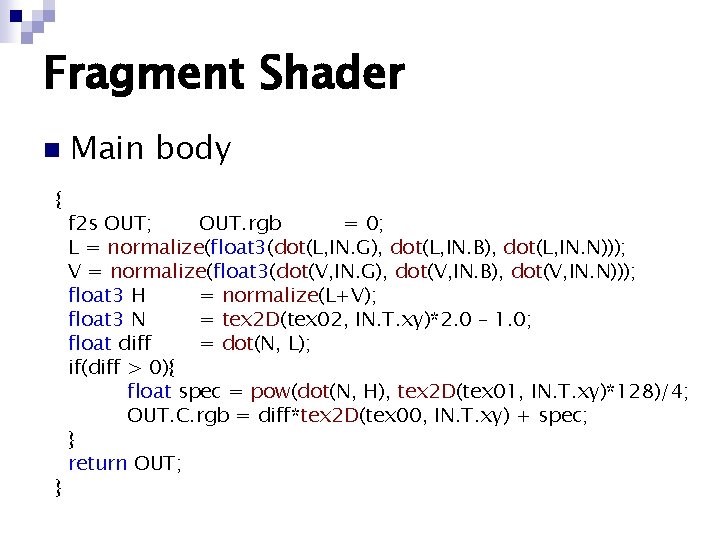

Fragment Shader
- Main body

    {
        f2s OUT;
        OUT.C.rgb = 0;
        // rotate the lighting and viewing directions into texture-space
        L = normalize(float3(dot(L, IN.G), dot(L, IN.B), dot(L, IN.N)));
        V = normalize(float3(dot(V, IN.G), dot(V, IN.B), dot(V, IN.N)));
        float3 H = normalize(L+V);                           // half vector
        // fetch the normal from the normal bump map (texture 04), remapped to [-1,1]
        float3 N = tex2D(tex04, IN.T.xy).xyz*2.0 - 1.0;
        float diff = dot(N, L);
        if(diff > 0){
            float spec = pow(dot(N, H), tex2D(tex01, IN.T.xy).x*128)/4;
            OUT.C.rgb = diff*tex2D(tex00, IN.T.xy).rgb + spec;
        }
        return OUT;
    }

BM with Height Map

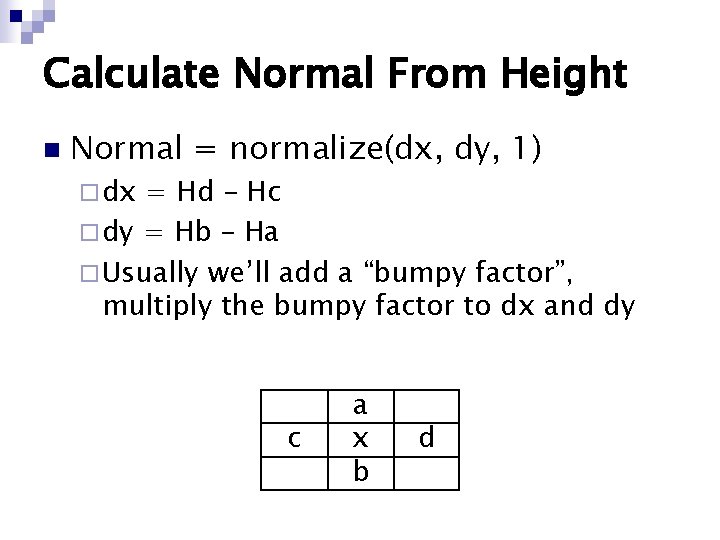

Calculate Normal From Height
- Normal = normalize(dx, dy, 1)
  - dx = Hd - Hc
  - dy = Hb - Ha
  - Usually we add a "bumpy factor" and multiply dx and dy by it
- (Figure: texel x with its horizontal neighbors c and d and its vertical neighbors a and b)

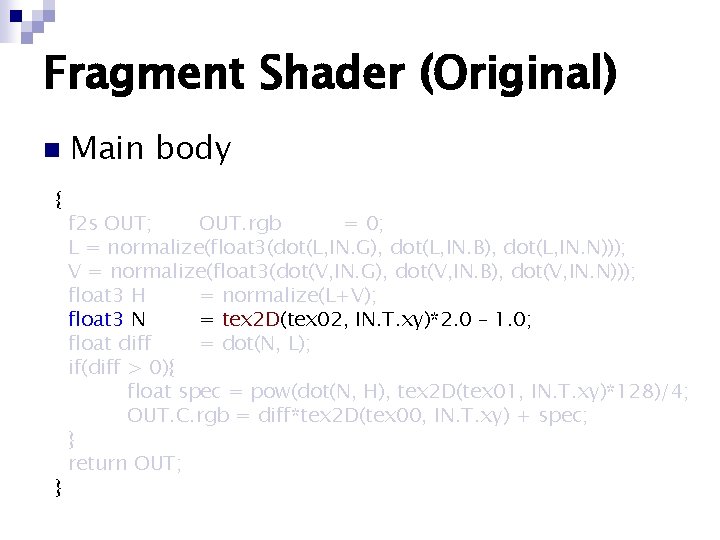

Fragment Shader (Original)
- Main body

    {
        f2s OUT;
        OUT.C.rgb = 0;
        // rotate the lighting and viewing directions into texture-space
        L = normalize(float3(dot(L, IN.G), dot(L, IN.B), dot(L, IN.N)));
        V = normalize(float3(dot(V, IN.G), dot(V, IN.B), dot(V, IN.N)));
        float3 H = normalize(L+V);                           // half vector
        // fetch the normal from the normal bump map (texture 04), remapped to [-1,1]
        float3 N = tex2D(tex04, IN.T.xy).xyz*2.0 - 1.0;
        float diff = dot(N, L);
        if(diff > 0){
            float spec = pow(dot(N, H), tex2D(tex01, IN.T.xy).x*128)/4;
            OUT.C.rgb = diff*tex2D(tex00, IN.T.xy).rgb + spec;
        }
        return OUT;
    }

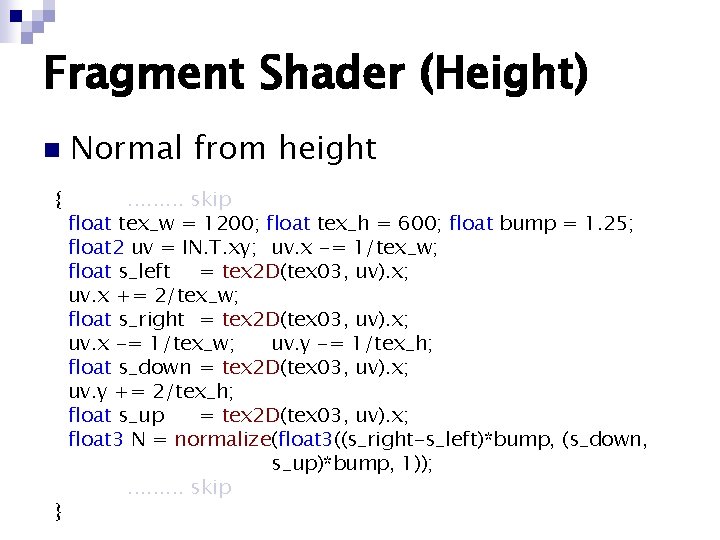

Fragment Shader (Height)
- Normal from height

    {
        // ... (skipped)
        float tex_w = 1200;      // height map width in texels
        float tex_h = 600;       // height map height in texels
        float bump = 1.25;       // bumpy factor
        float2 uv = IN.T.xy;
        // sample the left and right neighbors
        uv.x -= 1.0/tex_w;
        float s_left = tex2D(tex03, uv).x;
        uv.x += 2.0/tex_w;
        float s_right = tex2D(tex03, uv).x;
        uv.x -= 1.0/tex_w;
        // sample the lower and upper neighbors
        uv.y -= 1.0/tex_h;
        float s_down = tex2D(tex03, uv).x;
        uv.y += 2.0/tex_h;
        float s_up = tex2D(tex03, uv).x;
        float3 N = normalize(float3((s_right-s_left)*bump, (s_down-s_up)*bump, 1));
        // ... (skipped)
    }

Try This...
- Anisotropic reflectance model
- Noise function

End
