Rating scale validation for the assessment of spoken English at tertiary level

- Slides: 6

Rating scale validation for the assessment of spoken English at tertiary level
Language Testing in Austria: Towards a Research Agenda
Dr Armin Berger
Department of English, University of Vienna

Chapter outline

- Introduction
- The role of rating scales in performance assessment
  - Rating scales as operationalisations of test constructs
  - Controversy about rating scales
  - Rating scale validation
- Research questions and design
- Data analysis and results
- Discussion and conclusions

Rating scale validation for the assessment of spoken English at tertiary level, Klagenfurt, 19.10.2018

Research questions

- To what extent do the descriptors of the ELTT speaking scales (presentation and interaction) define a continuum of increasing speaking proficiency?
- Does the validation methodology have an effect on the hierarchy of descriptor units?
- Which rating scale descriptors are the most effective ones?
- What are raters’ perceptions of the revised speaking scales?
- Do the revised rating scales function adequately in terms of (a) the discrimination between candidates, (b) rater spread and consistency, and (c) rating scale properties?

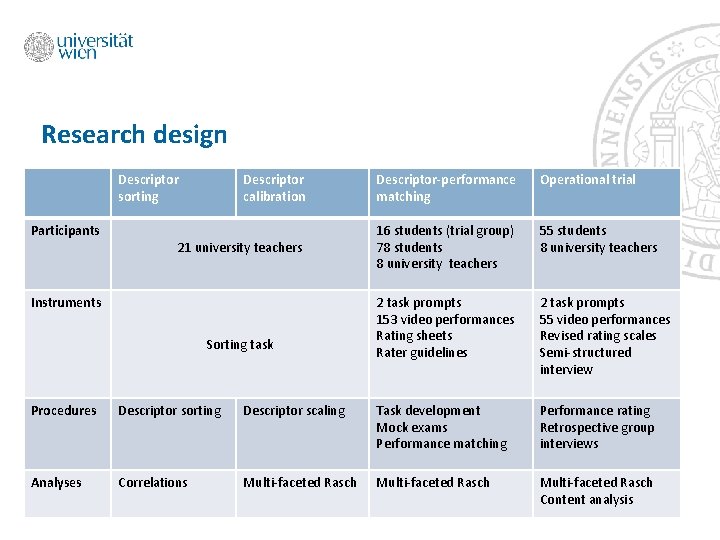

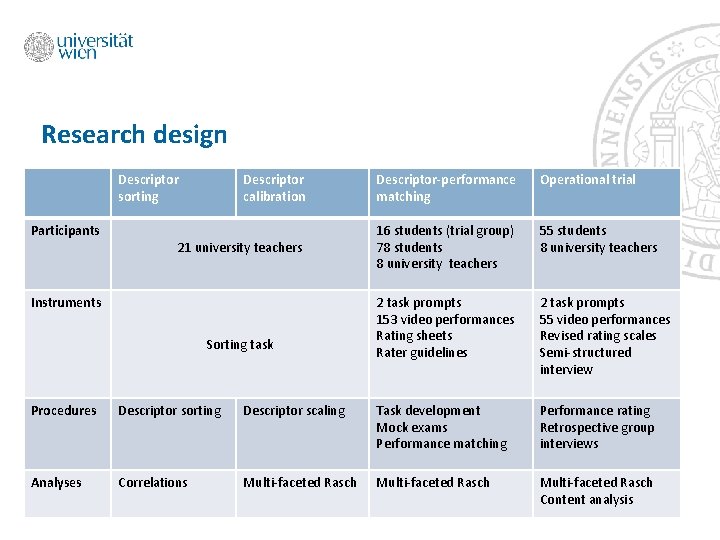

Research design

- Phases: Descriptor sorting; Descriptor calibration; Descriptor-performance matching; Operational trial
- Participants: 21 university teachers; 16 students (trial group); 78 students; 8 university teachers; 55 students; 8 university teachers
- Instruments: Sorting task; 2 task prompts; 153 video performances; Rating sheets; Rater guidelines; 2 task prompts; 55 video performances; Revised rating scales; Semi-structured interview
- Procedures: Descriptor sorting; Descriptor scaling; Task development; Mock exams; Performance matching; Performance rating; Retrospective group interviews
- Analyses: Correlations; Multi-faceted Rasch; Content analysis
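The research design names correlations among its analyses, and the second research question asks whether the validation method changes the descriptor hierarchy. The slides do not say which coefficient was used, so the following is only a minimal sketch, assuming a Spearman rank correlation between two descriptor orderings. The function names and all descriptor values are hypothetical and are not taken from the study.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            ranks[order[k]] = mean_rank
        pos = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (var_x * var_y) ** 0.5


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical values for six descriptors (NOT data from the study):
# the level assigned in a sorting task, and a difficulty estimate in logits
# from a scaling analysis of the same descriptors.
sorting_levels = [1, 2, 2, 3, 4, 5]
scaled_difficulty = [-2.1, -0.9, -1.2, 0.3, 1.1, 2.4]

rho = spearman(sorting_levels, scaled_difficulty)
print(f"Spearman rho between the two hierarchies: {rho:.2f}")
```

A coefficient close to 1 would suggest that the two methods order the descriptors similarly, while a lower value would point to a method effect on the hierarchy. Whether a rank-based or a product-moment coefficient is the better choice depends on the measurement level of the data.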

Conclusions

- The revised scales function adequately in operational settings.
- Evidence-based scale validation is fundamental.
- The project has shown the benefits of the symbiosis between intuitive, qualitative and quantitative scale development methods (cf. Galaczi et al. 2011).
- Validation methods are complementary rather than interchangeable.
- The utility of scale validation methods should be evaluated in terms of their combined effect.

Bibliography

- Berger, Armin. 2015. Validating analytic rating scales: A multi-method approach to scaling descriptors for academic speaking. Frankfurt am Main: Peter Lang.
- CoE/Council of Europe. 2017. Common European Framework of Reference for Languages: Learning, Teaching, Assessment. Companion Volume with New Descriptors. (Provisional Edition). https://rm.coe.int/cefr-companion-volume-with-new-descriptors-2018/1680787989 (02.10.2018)
- Galaczi, Evelina; ffrench, Angela; Hubbard, Chris; Green, Anthony. 2011. “Developing assessment scales for large-scale speaking tests: A multiple-method approach”. Assessment in Education: Principles, Policy & Practice 18(3), 217-237.