Rapid Diagnosis of Acute Heart Disease by Cloud-based High Performance Computing for Computer Vision

Oleksii Morozov, Physics in Medicine Research Group, University Hospital of Basel, Switzerland, April 8, 2010

The heart
- Life-sustaining pump: 2’500’000 L/year of vital blood
- Coronary artery disease (CAD) is the most frequent cause of heart malfunction and death
- World's largest killer (WHO): ~29% of global deaths, 17’100’000 lives/year

Cardiology yesterday
- Tools with relatively low information content

Cardiology today
- More tools, more information
- Subjective decisions relying mostly on the doctor's experience

Cardiology tomorrow
- More advanced technologies
- Multidimensional information
- High-quality, high-resolution data
- Multimodal information
- Quantitative, objective, integrative computer-based analysis
- Worldwide networked standards and databases

Problems:
- Need for high performance computing in a distributed environment, but only for a fraction of the time
- Global storage network for large datasets

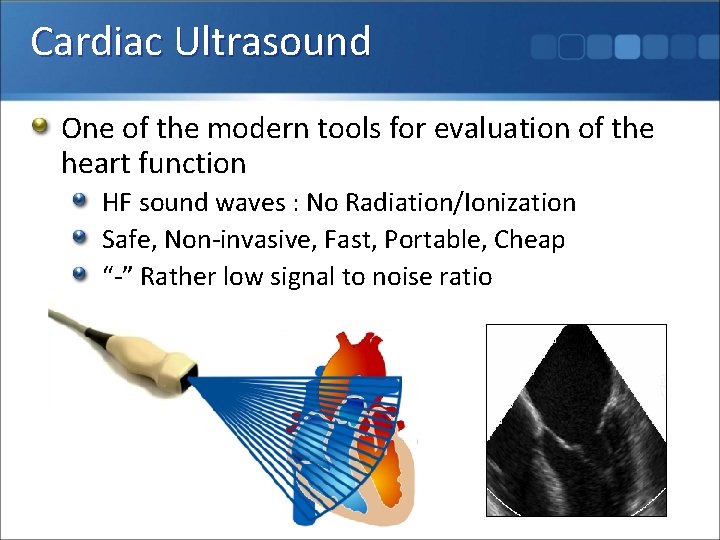

Cardiac ultrasound
- One of the modern tools for evaluating heart function
- High-frequency (HF) sound waves: no ionizing radiation
- Safe, non-invasive, fast, portable, cheap
- Drawback: rather low signal-to-noise ratio

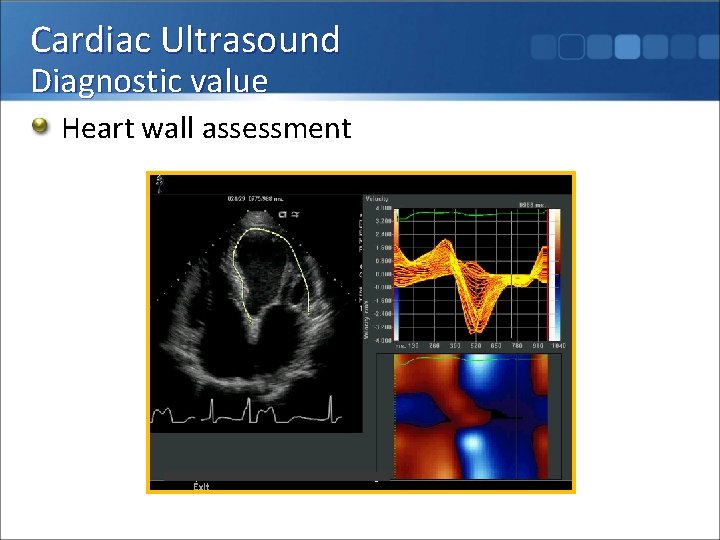

Cardiac ultrasound: diagnostic value
- Heart wall assessment

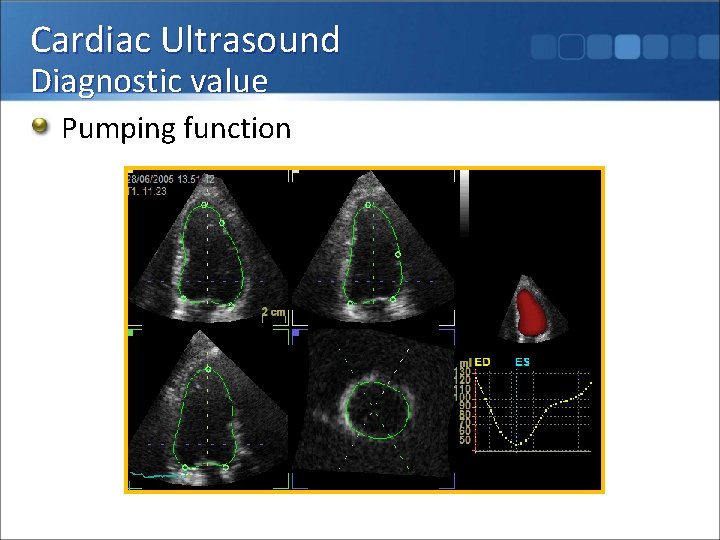

Cardiac ultrasound: diagnostic value
- Pumping function

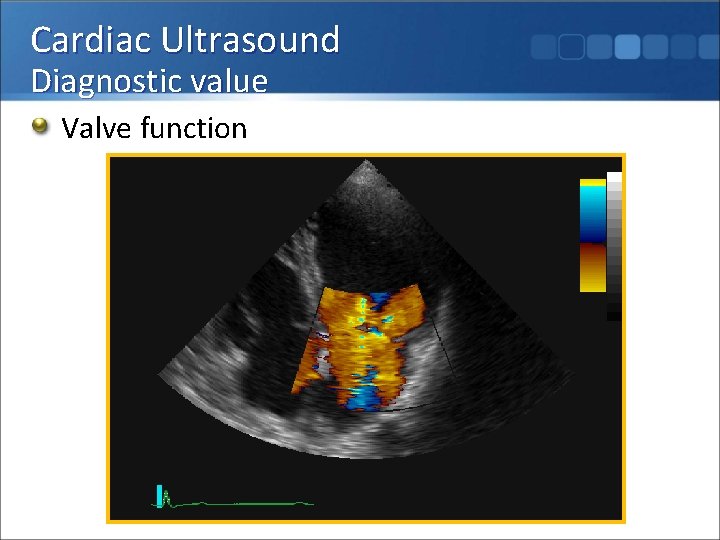

Cardiac ultrasound: diagnostic value
- Valve function

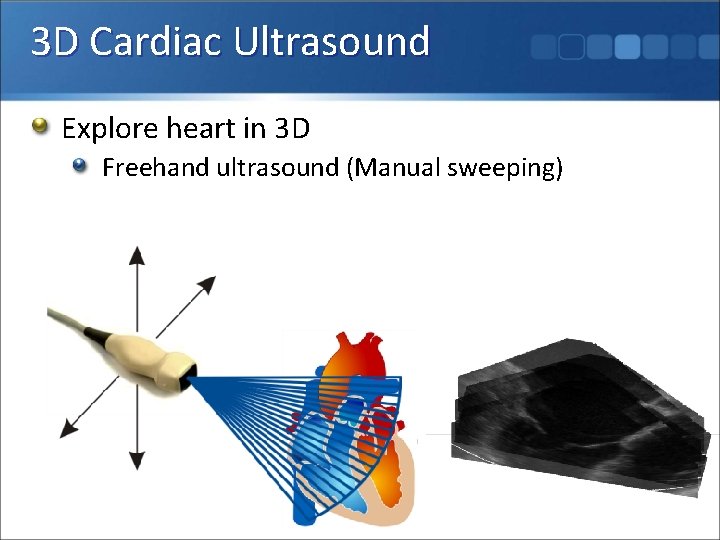

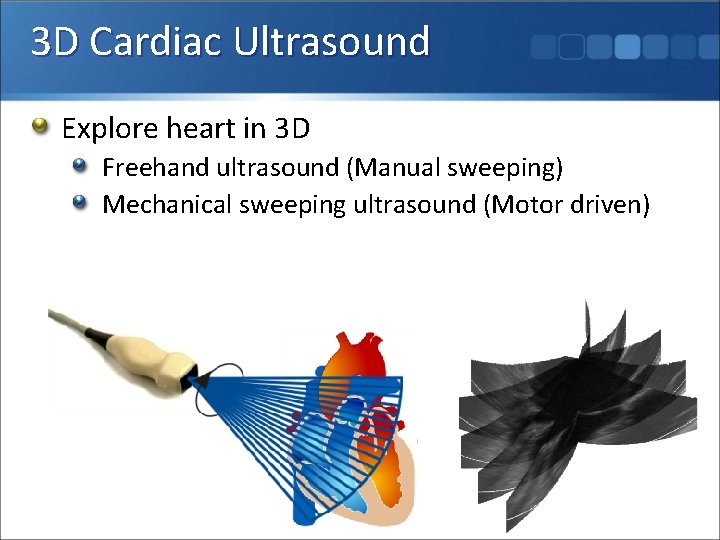

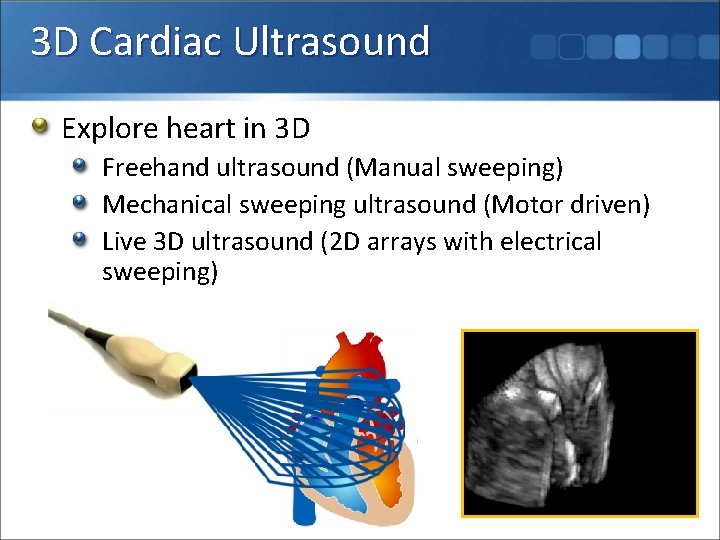

3D cardiac ultrasound: exploring the heart in 3D
- Freehand ultrasound (manual sweeping)
- Mechanical sweeping ultrasound (motor-driven)
- Live 3D ultrasound (2D arrays with electronic sweeping)

Cardiac ultrasound machine = a transducer + a supercomputer
- 50’000–500’000 USD
- Idle 90% of the time

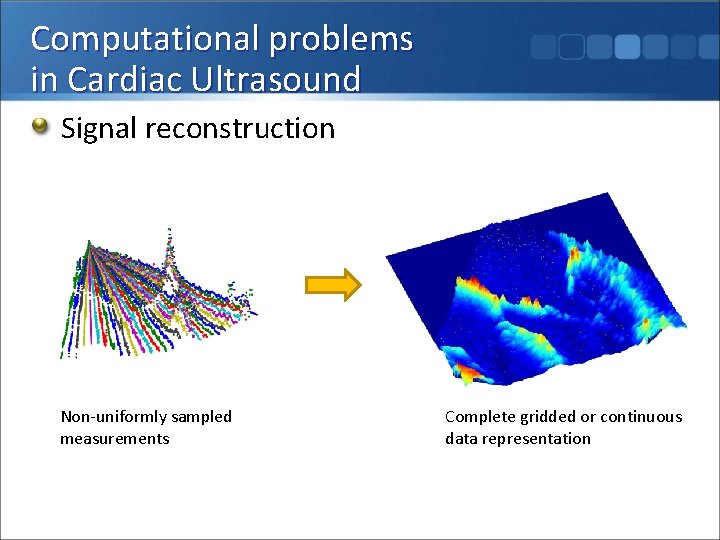

Computational problems in cardiac ultrasound: signal reconstruction
- From non-uniformly sampled measurements
- To a complete gridded or continuous data representation

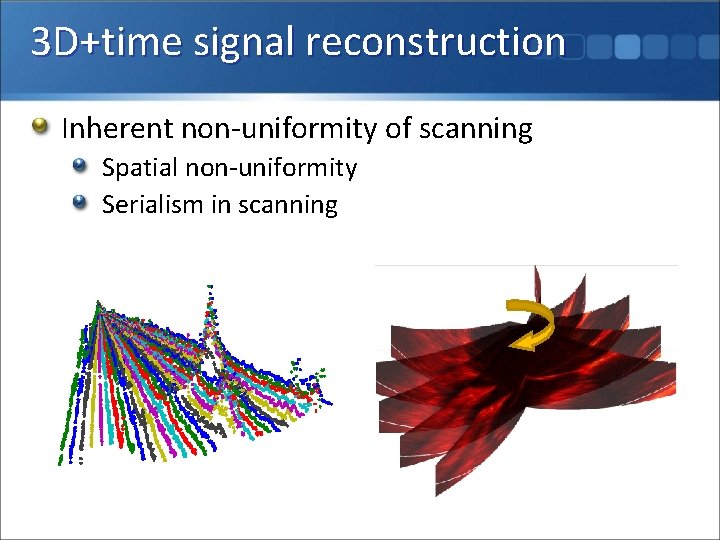

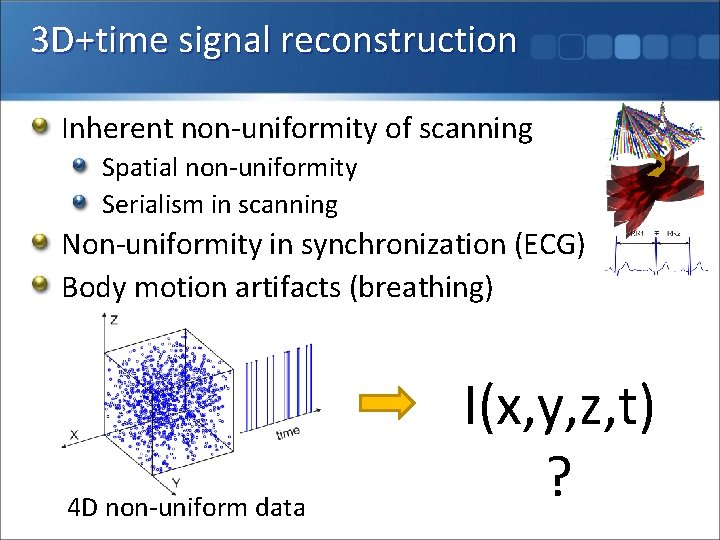

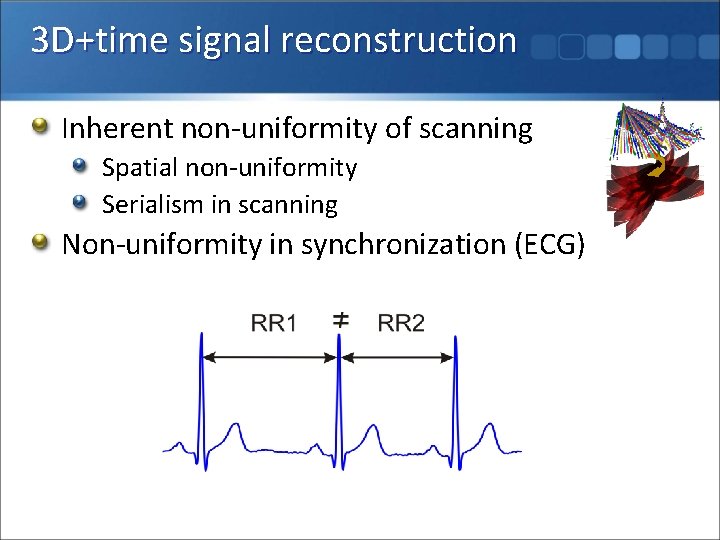

3D+time signal reconstruction

Inherent non-uniformity of scanning:
- Spatial non-uniformity
- Serial nature of scanning
- Non-uniformity in synchronization (ECG)
- Body motion artifacts (breathing)

Result: 4D non-uniform data. How to obtain I(x, y, z, t)?

3D+time signal reconstruction: a spline solution
- B-spline non-uniform interpolation by Arigovindan, Unser (EPFL, Switzerland, 2005)
- Robust global interpolation: handles oversampling and undersampling (gaps) in the data
- Sparse and well-conditioned alternative to the optimal RBF solution
- Multiresolution properties (a route to fast solving)
- Parallelizable solving process
- Successfully applied to 2D problems
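
To make the idea concrete, here is a minimal 1D sketch of regularized B-spline fitting to non-uniform samples in Python with NumPy. It is an illustration under stated assumptions, not the Arigovindan and Unser algorithm. The smoothness term here is a discrete second-difference penalty on the coefficients, standing in for their continuous regularization functional. The dense solve stands in for a sparse or multiresolution solver, and `bspline3`, `fit_spline` and `evaluate` are hypothetical helper names. Least squares averages the oversampled regions, and the penalty fills the gap between x = 6 and x = 12.

```python
import numpy as np

def bspline3(x):
    """Centered cubic B-spline beta^3(x), support [-2, 2]."""
    ax = np.abs(np.asarray(x, dtype=float))
    out = np.zeros_like(ax)
    inner = ax < 1
    outer = (ax >= 1) & (ax < 2)
    out[inner] = 2.0 / 3.0 - ax[inner] ** 2 + 0.5 * ax[inner] ** 3
    out[outer] = (2.0 - ax[outer]) ** 3 / 6.0
    return out

def fit_spline(xs, ys, n_coef, lam=1e-3):
    """Least-squares fit of f(x) = sum_k c[k] * beta3(x - k), k = 0..n_coef-1,
    with a second-difference penalty on c (assumed stand-in smoothness term)."""
    k = np.arange(n_coef)
    A = bspline3(xs[:, None] - k[None, :])      # at most 4 non-zeros per row
    D = np.diff(np.eye(n_coef), n=2, axis=0)    # (n_coef - 2) x n_coef second differences
    lhs = A.T @ A + lam * (D.T @ D)             # banded; kept dense here for brevity
    return np.linalg.solve(lhs, A.T @ ys)

def evaluate(c, x):
    """Evaluate the spline with coefficients c at positions x."""
    k = np.arange(len(c))
    return bspline3(np.asarray(x, dtype=float)[:, None] - k[None, :]) @ c

# Non-uniform samples of a line, with a gap between x = 6 and x = 12
rng = np.random.default_rng(0)
xs = np.sort(np.concatenate([rng.uniform(2, 6, 30), rng.uniform(12, 17, 30)]))
ys = 0.5 * xs + 1.0
c = fit_spline(xs, ys, n_coef=20)
print(evaluate(c, [9.0]))  # approximately [5.5], inside the gap
```

The test data are linear, and the second-difference penalty does not penalize linear trends. The fit therefore reproduces the line, including inside the gap.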

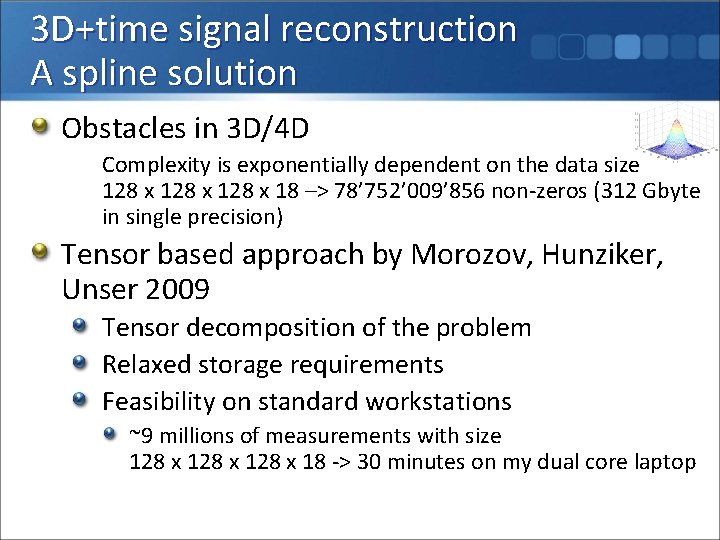

3D+time signal reconstruction: a spline solution

Obstacles in 3D/4D:
- Complexity grows exponentially with the dimensionality of the data
- 128³ × 18 grid → 78’752’009’856 non-zeros (312 GB in single precision)

Tensor-based approach by Morozov, Hunziker, Unser (2009):
- Tensor decomposition of the problem
- Relaxed storage requirements
- Feasible on standard workstations: ~9 million measurements on a 128³ × 18 grid → 30 minutes on my dual-core laptop
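
The non-zero count quoted above can be checked with a few lines of Python. The sketch assumes that the system matrix couples two cubic B-spline coefficients whenever their indices differ by at most 3 along every axis, because the supports of two cubic B-splines overlap when their shifts differ by less than 4. Under that assumption, the 4D count is the product of four 1D band counts. Each interior row then holds 7^d non-zeros, which is where the exponential dependence on the dimension d comes from. The helper names are hypothetical.

```python
def banded_nnz_1d(n, half_width=3):
    """Non-zeros of an n x n matrix with entries wherever |i - j| <= half_width."""
    return sum(min(n - 1, i + half_width) - max(0, i - half_width) + 1 for i in range(n))

def tensor_nnz(shape, half_width=3):
    """Non-zeros of a tensor-product band matrix: the product of the 1D counts."""
    total = 1
    for n in shape:
        total *= banded_nnz_1d(n, half_width)
    return total

nnz = tensor_nnz((128, 128, 128, 18))
print(nnz)  # 78752009856
```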

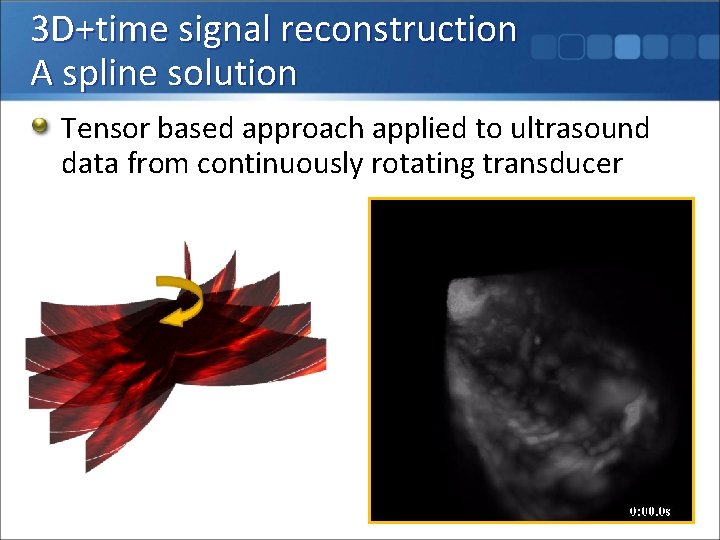

3D+time signal reconstruction: a spline solution
- Tensor-based approach applied to ultrasound data from a continuously rotating transducer
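
The slides do not detail the tensor decomposition used by Morozov, Hunziker and Unser. As a generic illustration only, the sketch below shows why tensor structure relaxes storage. An operator that factors as a Kronecker product of small per-axis matrices can be applied axis by axis while storing only the factors: here 4² + 5² + 3² = 50 numbers instead of 60² = 3600. `kron_matvec` is a hypothetical helper, and the random factors stand in for per-axis spline operators of a gridded problem.

```python
import numpy as np

def kron_matvec(factors, x):
    """Apply (F[0] kron F[1] kron ... kron F[d-1]) to a d-dimensional array x
    without forming the Kronecker product; axis k of x has length factors[k].shape[1]."""
    y = x
    for axis, F in enumerate(factors):
        # Multiply along one axis, then move the new axis back to its original position.
        y = np.moveaxis(np.tensordot(F, y, axes=([1], [axis])), 0, axis)
    return y

rng = np.random.default_rng(1)
factors = [rng.standard_normal((n, n)) for n in (4, 5, 3)]
x = rng.standard_normal((4, 5, 3))

full = np.kron(np.kron(factors[0], factors[1]), factors[2])  # explicit 60 x 60 matrix
y_full = full @ x.ravel()                                   # row-major vectorization
y_tensor = kron_matvec(factors, x).ravel()
print(np.allclose(y_full, y_tensor))  # True
```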

Computational problems in cardiac ultrasound: tissue/blood motion estimation
- Doppler ultrasound imaging (state of the art): semi-quantitative measurements
- Full motion reconstruction: generalization of B-spline reconstruction to vector-valued data (Arigovindan, Unser 2005)
- Additional constraints from fluid physics (incompressibility, Navier-Stokes equations)
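
Incompressibility means that the velocity field v has zero divergence, ∇·v = 0. A reconstruction could penalize the discrete divergence of the fitted field. The minimal Python sketch below only shows how that quantity might be evaluated on a regular 2D grid with central differences. `divergence_2d` is a hypothetical helper, and the two test fields are synthetic.

```python
import numpy as np

def divergence_2d(vx, vy, spacing=1.0):
    """Finite-difference divergence d(vx)/dx + d(vy)/dy (axis 0 = x, axis 1 = y)."""
    return np.gradient(vx, spacing, axis=0) + np.gradient(vy, spacing, axis=1)

coords = np.linspace(-1.0, 1.0, 41)
X, Y = np.meshgrid(coords, coords, indexing="ij")
h = coords[1] - coords[0]

swirl = divergence_2d(-Y, X, h)   # rigid rotation: incompressible, divergence 0
source = divergence_2d(X, Y, h)   # radial outflow: divergence 2 everywhere
print(np.abs(swirl).max(), source.mean())
```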

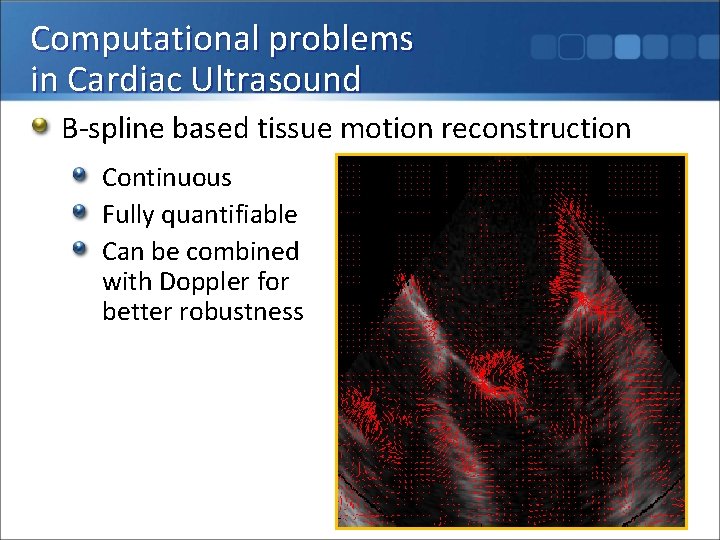

Computational problems in cardiac ultrasound: B-spline-based tissue motion reconstruction
- Continuous
- Fully quantifiable
- Can be combined with Doppler for better robustness

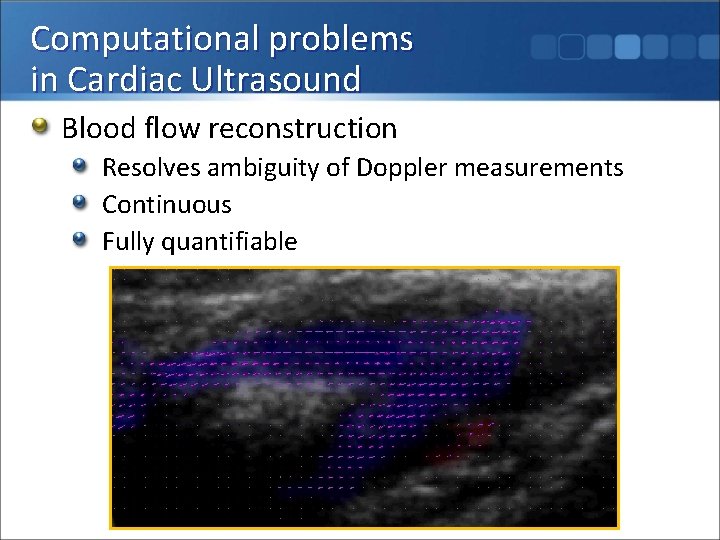

Computational problems in cardiac ultrasound: blood flow reconstruction
- Resolves the ambiguity of Doppler measurements
- Continuous
- Fully quantifiable
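
A Doppler measurement only gives the velocity component along the beam, v · u. A single beam direction therefore cannot distinguish velocities that differ perpendicular to it. The toy sketch below shows, for a single point, how projections along several beam directions determine the full 2D vector by least squares. The beam angles and the velocity are hypothetical. The approach on the slides fits a continuous B-spline vector field rather than solving point by point.

```python
import numpy as np

def recover_velocity(beam_dirs, doppler):
    """Least-squares 2D velocity from projections doppler[j] = v . beam_dirs[j]."""
    U = np.asarray(beam_dirs, dtype=float)
    U = U / np.linalg.norm(U, axis=1, keepdims=True)   # unit beam directions
    v, *_ = np.linalg.lstsq(U, np.asarray(doppler, dtype=float), rcond=None)
    return v

true_v = np.array([0.3, -0.8])                  # m/s, hypothetical
angles = np.deg2rad([60.0, 90.0, 120.0])        # hypothetical beam angles
dirs = np.stack([np.cos(angles), np.sin(angles)], axis=1)
measured = dirs @ true_v                        # noise-free Doppler projections
print(recover_velocity(dirs, measured))         # approximately [0.3, -0.8]
```

With only one row in `dirs`, the system has rank 1 and the velocity component perpendicular to that beam stays undetermined. This is the ambiguity the slide refers to.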

Pathway to distributed supercomputing
- Multicore (IBM POWER7): claimed 260 GFLOP/s per chip
- Cluster (Uni. Basel): 34’500 GFLOP/s on 400 cores
- GPGPU (ATI 4870 X2): 2’000 GFLOP/s per card
- GPGPU array
- FPGA accelerator cards: dozens of GFLOP/s per chip, up to 512 chips per system, low power
- Cloud: Microsoft Azure (in exploration within the ICES Microsoft project)

Cloud Ultrasound Processing Service: reasons
- Processing large multidimensional, multimodal medical data requires vast computational power
- Building and maintaining one's own HPC infrastructure is overly expensive
- HPC power is used relatively rarely (a few times per day)
- Availability at multiple points of care (medical practices and hospital emergency rooms)
- Unified storage of and access to multimodal medical data

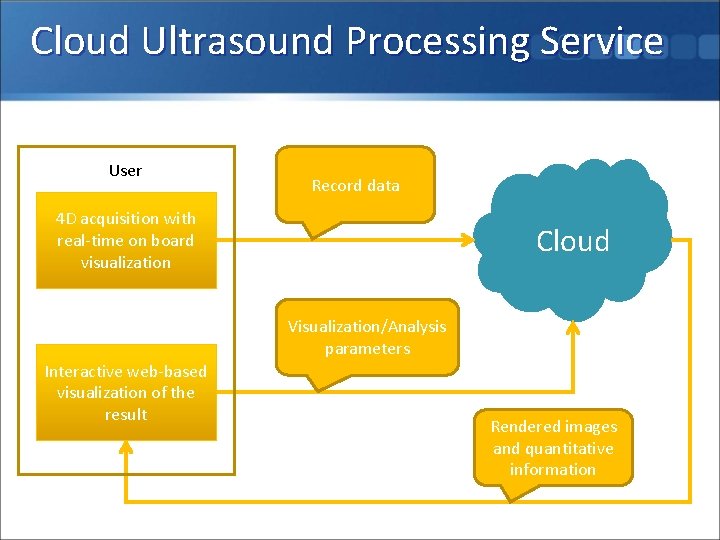

Cloud Ultrasound Processing Service: workflow
- User: records data (4D acquisition with real-time on-board visualization) and sends it, together with visualization/analysis parameters, to the cloud
- Cloud: returns rendered images and quantitative information
- Interactive web-based visualization of the result

Cloud Ultrasound Processing Service: recording data
- Raw data: 180 beams × 500 samples × 100 frames/s × 10 s → 85 MB
- Additional information (geometry) → a few KB
- Lossless compressed DICOM
- Low-latency response: a subset of the data is sent first for a coarser-resolution reconstruction
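
The 85 MB figure is consistent with 1 byte per sample counted in binary megabytes (2^20 bytes). That sample depth is an assumption, since the slide does not state it. The sketch below checks the arithmetic and shows one hypothetical way to choose the subset for the coarse preview: every fourth beam is sent first. The slide does not say how the subpart is chosen, and `coarse_first` is an illustrative name.

```python
import numpy as np

BEAMS, SAMPLES, FRAMES_PER_S, SECONDS = 180, 500, 100, 10
BYTES_PER_SAMPLE = 1  # assumption: 8-bit raw samples

n_bytes = BEAMS * SAMPLES * FRAMES_PER_S * SECONDS * BYTES_PER_SAMPLE
print(n_bytes, round(n_bytes / 2**20, 1))  # 90000000 85.8

def coarse_first(frames, stride=4):
    """Hypothetical split of raw data into a coarse preview (every stride-th beam)
    and the remaining beams; frames has shape (n_frames, n_beams, n_samples)."""
    n_beams = frames.shape[1]
    preview = np.arange(0, n_beams, stride)
    remainder = np.setdiff1d(np.arange(n_beams), preview)
    return frames[:, preview], frames[:, remainder]

# Small demo: 2 frames, 20 samples per beam
preview, remainder = coarse_first(np.zeros((2, BEAMS, 20), dtype=np.uint8))
print(preview.shape, remainder.shape)  # (2, 45, 20) (2, 135, 20)
```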

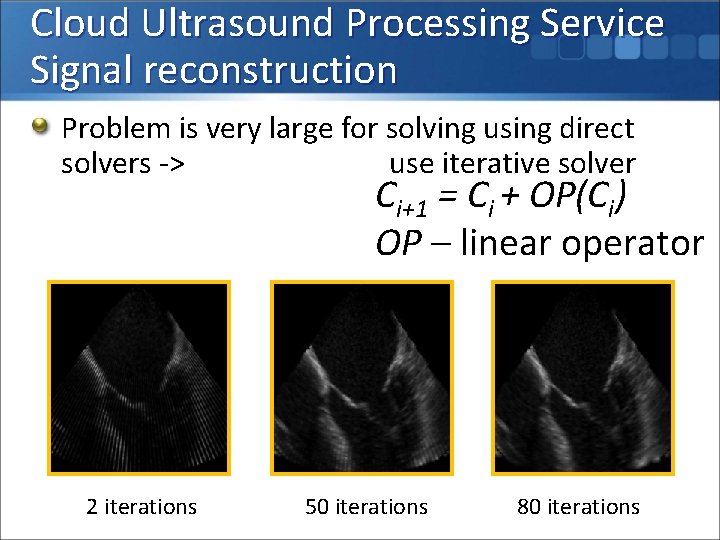

Cloud Ultrasound Processing Service: signal reconstruction
- The problem is too large for direct solvers → use an iterative solver: $C_{i+1} = C_i + \mathrm{OP}(C_i)$, where OP is a linear operator
- Image panels: result after 2, 50 and 80 iterations
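
A damped Jacobi iteration is one concrete instance of the update $C_{i+1} = C_i + \mathrm{OP}(C_i)$. The slides do not name the iterative scheme, so this choice is an assumption. In the sketch, $\mathrm{OP}(C) = \omega D^{-1}(b - AC)$, where $D$ is the diagonal of $A$. Because it contains the right-hand side $b$, this OP is affine in $C$. The small tridiagonal system stands in for the spline normal equations.

```python
import numpy as np

def iterate(A, b, c0, n_iter, omega=0.8):
    """Damped Jacobi: C_{i+1} = C_i + OP(C_i), OP(C) = omega * D^{-1} (b - A C)."""
    d_inv = 1.0 / np.diag(A)
    c = c0.copy()
    for _ in range(n_iter):
        c = c + omega * d_inv * (b - A @ c)
    return c

n = 50
A = 4.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)   # small SPD test matrix
b = np.random.default_rng(2).standard_normal(n)
exact = np.linalg.solve(A, b)

errors = {}
for iters in (2, 50, 80):
    errors[iters] = np.linalg.norm(iterate(A, b, np.zeros(n), iters) - exact)
print(errors)  # the error shrinks geometrically with the iteration count
```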

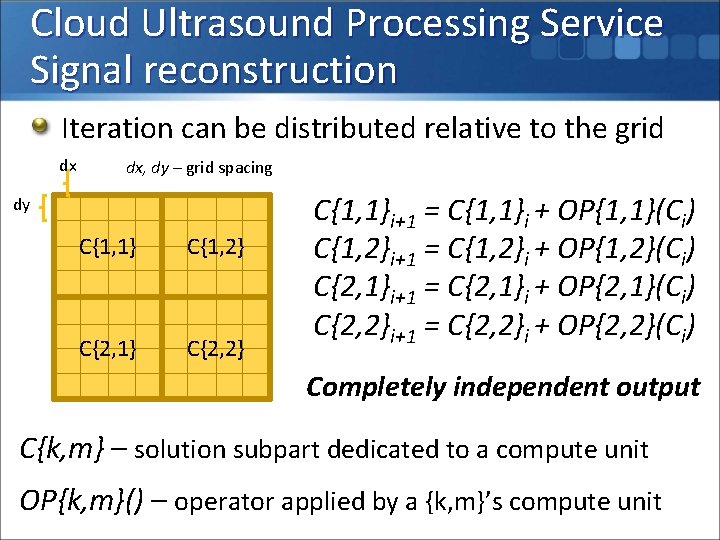

Cloud Ultrasound Processing Service: signal reconstruction
- The iteration can be distributed over the grid (dx, dy: grid spacing) by splitting the solution into blocks $C^{\{k,m\}}$, $k, m \in \{1, 2\}$: $C^{\{k,m\}}_{i+1} = C^{\{k,m\}}_{i} + \mathrm{OP}^{\{k,m\}}(C_i)$
- Each compute unit produces a completely independent part of the output
- $C^{\{k,m\}}$: part of the solution assigned to compute unit $\{k,m\}$
- $\mathrm{OP}^{\{k,m\}}(\cdot)$: operator applied by compute unit $\{k,m\}$

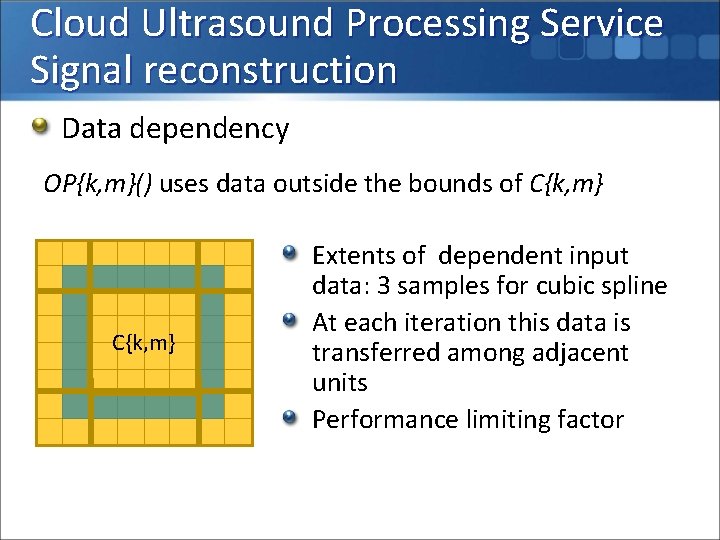

Cloud Ultrasound Processing Service: signal reconstruction, data dependency
- $\mathrm{OP}^{\{k,m\}}(\cdot)$ uses data outside the bounds of $C^{\{k,m\}}$
- Extent of the dependent input data: 3 samples for a cubic spline
- At each iteration, this data is transferred between adjacent units
- This transfer is the performance-limiting factor
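
The domain split and the 3-sample dependency can be sketched in 1D. Each hypothetical compute unit updates only its own block of coefficients but reads a halo of 3 neighbours on each side. The concatenated result equals one global damped-Jacobi step. The band matrix is synthetic. In a real deployment, the halo would arrive by message passing between units rather than by slicing a shared array.

```python
import numpy as np

HALO = 3  # dependency extent for a cubic spline (band half-width 3)

def banded_matrix(n, half_width=HALO, seed=3):
    """Synthetic symmetric, diagonally dominant band matrix (stand-in for the system)."""
    rng = np.random.default_rng(seed)
    A = np.zeros((n, n))
    for off in range(1, half_width + 1):
        vals = rng.uniform(-0.3, 0.3, n - off)
        A += np.diag(vals, k=off) + np.diag(vals, k=-off)
    A += np.diag(np.abs(A).sum(axis=1) + 1.0)
    return A

def global_step(A, b, c, omega=0.8):
    """One damped-Jacobi step on the whole domain."""
    return c + omega * (b - A @ c) / np.diag(A)

def unit_step(A, b, c, lo, hi, omega=0.8):
    """Update only c[lo:hi], reading c on [lo - HALO, hi + HALO) clipped to the domain."""
    glo, ghi = max(0, lo - HALO), min(len(c), hi + HALO)
    # Entries of rows lo..hi-1 outside [glo, ghi) are zero, so the halo suffices.
    residual = b[lo:hi] - A[lo:hi, glo:ghi] @ c[glo:ghi]
    return c[lo:hi] + omega * residual / np.diag(A)[lo:hi]

n, n_units = 40, 4
A, b = banded_matrix(n), np.ones(n)
c = np.random.default_rng(4).standard_normal(n)
bounds = np.linspace(0, n, n_units + 1).astype(int)
parts = [unit_step(A, b, c, lo, hi) for lo, hi in zip(bounds[:-1], bounds[1:])]
print(np.allclose(np.concatenate(parts), global_step(A, b, c)))  # True
```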

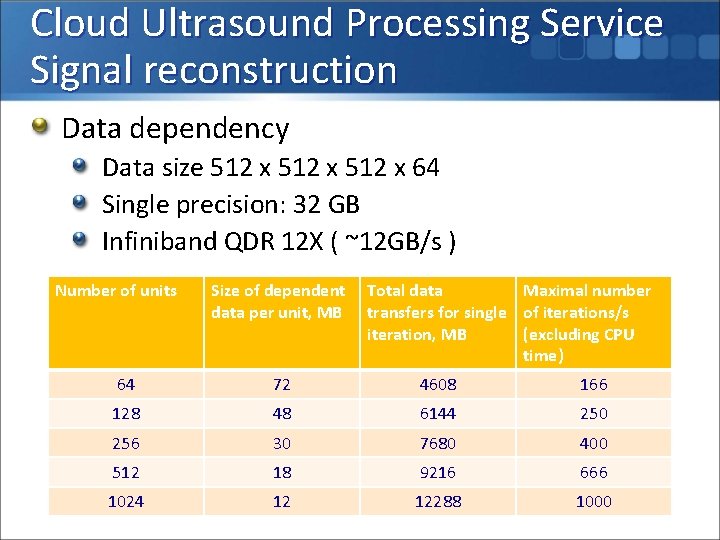

Cloud Ultrasound Processing Service: signal reconstruction, data dependency
- Data size 512³ × 64
- Single precision: 32 GB
- InfiniBand QDR 12X (~12 GB/s)

| Number of units | Size of dependent data per unit, MB | Total data transfers for a single iteration, MB | Max. number of iterations/s (excluding CPU time) |
|---|---|---|---|
| 64 | 72 | 4608 | 166 |
| 128 | 48 | 6144 | 250 |
| 256 | 30 | 7680 | 400 |
| 512 | 18 | 9216 | 666 |
| 1024 | 12 | 12288 | 1000 |
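
The table can be reproduced with the following Python sketch under several stated assumptions:
- The 512³ × 64 grid is split only in space.
- Each unit receives a halo 3 samples thick on each of its 6 faces for all 64 frames (edges and corners are ignored).
- Values are 4-byte floats, and sizes are in binary megabytes.
- Each unit's link moves ~12,000 MB/s.

The block layouts in `SPLITS` are hypothetical choices that match every row.

```python
GRID, FRAMES, HALO, BYTES = 512, 64, 3, 4
LINK_MB_PER_S = 12_000  # ~12 GB/s InfiniBand QDR 12X per unit

# Hypothetical layouts: number of blocks along x, y, z for each unit count.
SPLITS = {64: (4, 4, 4), 128: (8, 4, 4), 256: (8, 8, 4), 512: (8, 8, 8), 1024: (16, 8, 8)}

def halo_mib(split):
    """Halo data received by one unit per iteration, in MiB."""
    bx, by, bz = (GRID // s for s in split)          # block extent per unit
    face_area = 2 * (by * bz + bx * bz + bx * by)    # 6 faces of the block
    return face_area * HALO * FRAMES * BYTES / 2**20

for units, split in SPLITS.items():
    per_unit = halo_mib(split)
    print(units, per_unit, per_unit * units, int(LINK_MB_PER_S / per_unit))
```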

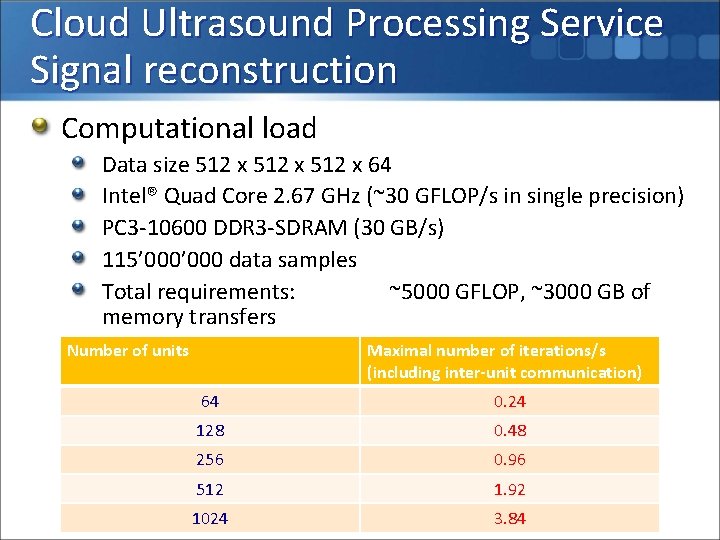

Cloud Ultrasound Processing Service: signal reconstruction, computational load
- Data size 512³ × 64
- Intel® quad-core 2.67 GHz (~30 GFLOP/s in single precision)
- PC3-10600 DDR3 SDRAM (30 GB/s)
- 115’000 data samples
- Total requirements per iteration: ~5000 GFLOP, ~3000 GB of memory transfers

| Number of units | Max. number of iterations/s (including inter-unit communication) |
|---|---|
| 64 | 0.24 |
| 128 | 0.48 |
| 256 | 0.96 |
| 512 | 1.92 |
| 1024 | 3.84 |
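
One simple model reproduces these iteration rates to within 0.02 iterations/s. It assumes that the per-iteration totals are shared evenly across units. It also assumes that arithmetic, memory traffic and halo exchange do not overlap, so their times add. This is an assumption about how the table might be derived, not a statement from the slide. The halo sizes come from the data-dependency table.

```python
TOTAL_GFLOP = 5000.0    # per iteration (slide value)
TOTAL_MEM_GB = 3000.0   # per iteration (slide value)
CPU_GFLOPS = 30.0       # quad-core, single precision
MEM_GBPS = 30.0         # DDR3 bandwidth
LINK_MBPS = 12_000.0    # InfiniBand, per unit
HALO_MB = {64: 72, 128: 48, 256: 30, 512: 18, 1024: 12}

def iterations_per_second(units):
    compute = TOTAL_GFLOP / units / CPU_GFLOPS   # s of arithmetic per unit
    memory = TOTAL_MEM_GB / units / MEM_GBPS     # s of memory traffic per unit
    comm = HALO_MB[units] / LINK_MBPS            # s of halo exchange per unit
    return 1.0 / (compute + memory + comm)       # assumption: no overlap

for units in HALO_MB:
    print(units, round(iterations_per_second(units), 2))
```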

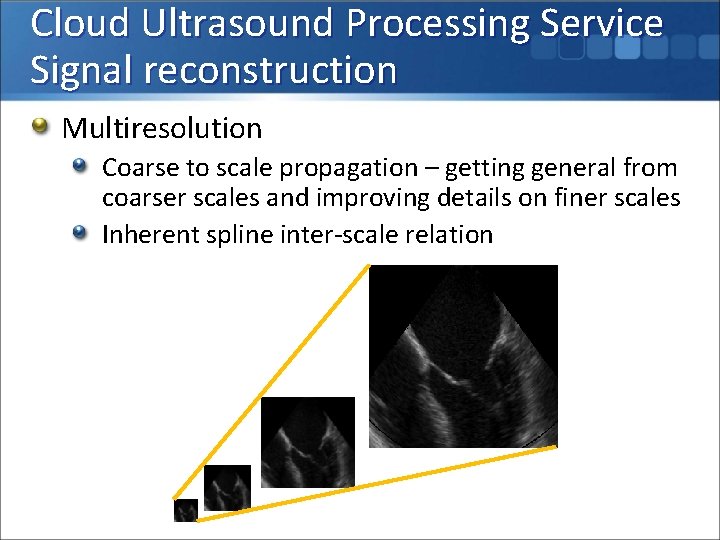

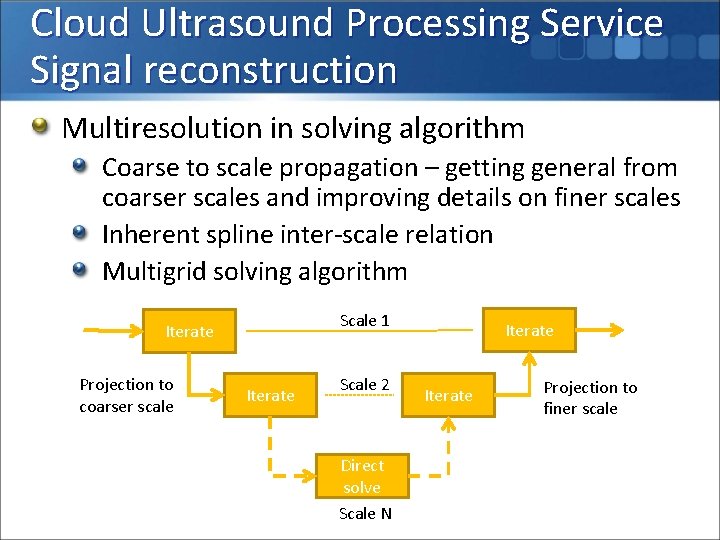

Cloud Ultrasound Processing Service: signal reconstruction, multiresolution in the solving algorithm
- Coarse-to-fine propagation: capture the general structure on coarser scales and refine details on finer scales
- Inherent spline inter-scale relation
- Multigrid solving algorithm: iterate at scale 1, project to the coarser scale 2 and iterate, and so on down to scale N, where the system is solved directly; then project back to the finer scales, iterating at each one

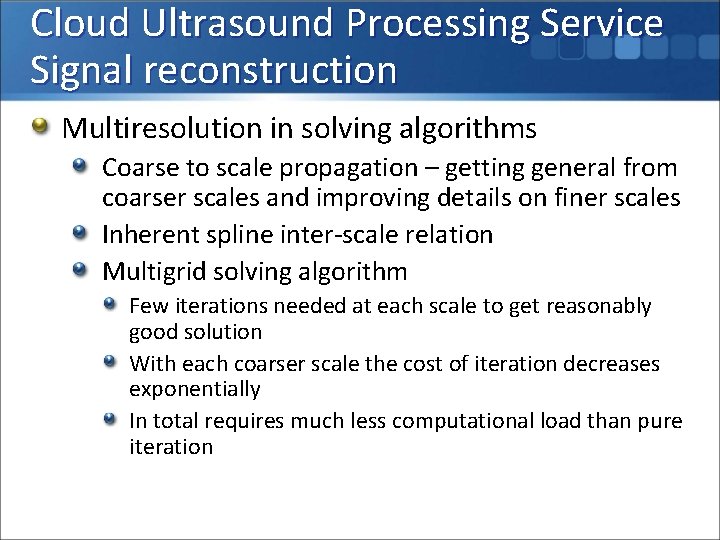

Cloud Ultrasound Processing Service: signal reconstruction, multigrid solving algorithm
- A few iterations at each scale are enough for a reasonably good solution
- With each coarser scale, the cost of an iteration decreases exponentially
- In total, this requires much less computation than pure single-scale iteration
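
The claim that coarser scales are cheap can be quantified with a geometric series. Assume that every coarser scale halves the grid along all 4 axes (3D + time). Each level then has 2⁻⁴ = 1/16 as many unknowns, so 16 iterations on each of 6 scales cost barely more than 17 fine-scale iterations. The coarsening factor is an assumption, the direct solve at the coarsest scale is not modeled, and `relative_multigrid_cost` is a hypothetical helper.

```python
def relative_multigrid_cost(n_scales, dims, iters_per_scale):
    """Cost of iters_per_scale sweeps on each of n_scales levels, in units of one
    fine-scale sweep, when each coarser level has 2**-dims as many unknowns."""
    return iters_per_scale * sum(2.0 ** (-dims * s) for s in range(n_scales))

print(relative_multigrid_cost(6, 4, 16))  # about 17.07 fine-scale sweeps
```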

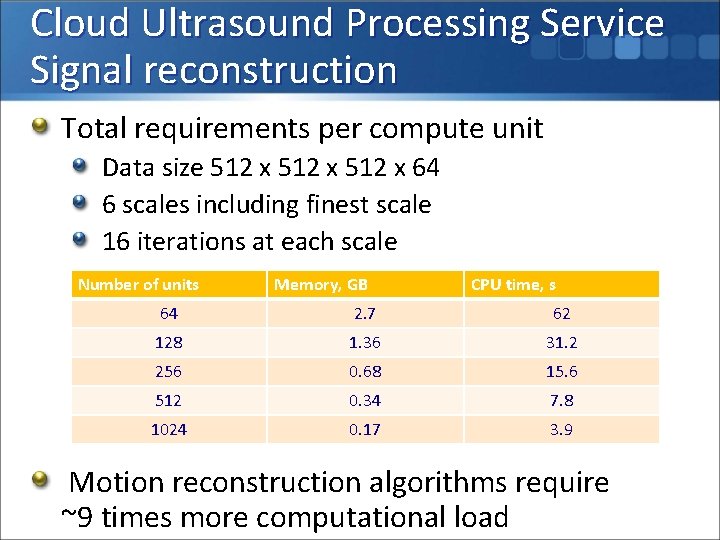

Cloud Ultrasound Processing Service: signal reconstruction, total requirements per compute unit
- Data size 512³ × 64
- 6 scales, including the finest scale
- 16 iterations at each scale

| Number of units | Memory, GB | CPU time, s |
|---|---|---|
| 64 | 2.7 | 62 |
| 128 | 1.36 | 31.2 |
| 256 | 0.68 | 15.6 |
| 512 | 0.34 | 7.8 |
| 1024 | 0.17 | 3.9 |

Motion reconstruction algorithms require ~9 times more computational load.

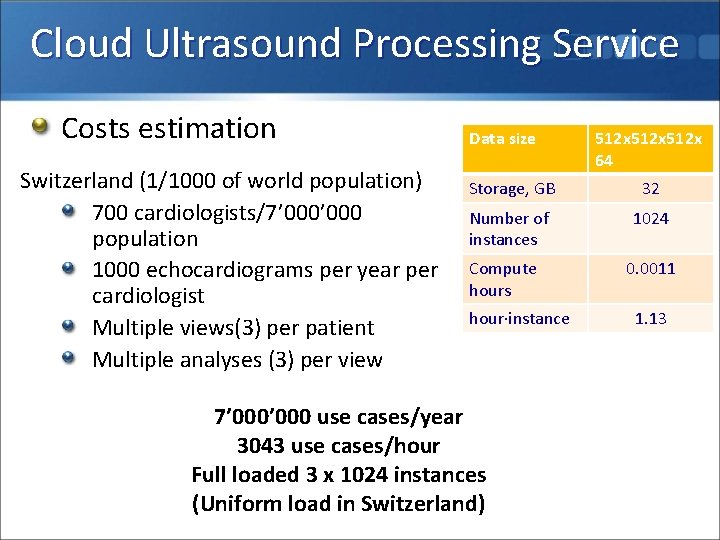

Cloud Ultrasound Processing Service: cost estimation

Assumptions for Switzerland (1/1000 of the world population):
- 700 cardiologists for ~7’000’000 inhabitants
- 1000 echocardiograms per year per cardiologist
- Multiple views (3) per patient
- Multiple analyses (3) per view

| Data size | Storage, GB | Number of instances | Compute time per use case, hour | Compute per use case, hour·instance |
|---|---|---|---|---|
| 512³ × 64 | 32 | 1024 | 0.0011 | 1.13 |

Load: ~7’000’000 use cases/year, i.e. ~3043 use cases/hour, which fully loads 3 × 1024 instances (assuming uniform load across Switzerland).
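
The per-use-case figures can be traced with simple arithmetic, as in the sketch below. It takes the slide's 0.0011 h wall-clock time on 1024 instances and its 3043 use cases/hour as inputs. Multiplying the listed assumptions gives the yearly number of analyses. The constant names are illustrative.

```python
CARDIOLOGISTS = 700
ECHOS_PER_CARDIOLOGIST = 1000   # per year
VIEWS_PER_PATIENT = 3
ANALYSES_PER_VIEW = 3
INSTANCES = 1024
HOURS_PER_USE_CASE = 0.0011     # wall-clock on 1024 instances (slide value)
USE_CASES_PER_HOUR = 3043       # slide value

analyses_per_year = (CARDIOLOGISTS * ECHOS_PER_CARDIOLOGIST
                     * VIEWS_PER_PATIENT * ANALYSES_PER_VIEW)
instance_hours = INSTANCES * HOURS_PER_USE_CASE         # per use case
busy_pools = USE_CASES_PER_HOUR * HOURS_PER_USE_CASE     # 1024-instance pools busy at once
print(analyses_per_year, round(instance_hours, 2), round(busy_pools, 2))
```

At 3043 use cases/hour, about 3.3 pools of 1024 instances are busy at any moment, which is in line with the "3 × 1024 instances" estimate.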

Collaborative research enabled by the cloud
- Globally available service for processing, storing and accessing medical data
- Standardized DICOM interface for unified data access
- Involvement of all interested parties around the world
- Worldwide large-scale trials become possible
- More statistics for rare cases
- Reference datasets for known cases

Conclusion
- An approach to rapid diagnosis of heart disease using cloud-based distributed computing
- Replace the ultrasound machine’s supercomputer with a cloud service for remote processing and storage
- Miniaturization of medical equipment and reduction of its cost
- Availability of advanced technologies for objective analysis
- Availability at multiple points of care
- Unified storage of and access to medical data
- Enables collaborative research

- Slides: 39