RANDOMIZED ALGORITHMS AND NLP USING LOCALITY SENSITIVE HASH FUNCTIONS FOR HIGH SPEED NOUN CLUSTERING



RANDOMIZED ALGORITHMS AND NLP USING LOCALITY SENSITIVE HASH FUNCTIONS FOR HIGH SPEED NOUN CLUSTERING • Chen LUO, Mohamed Abd. El. Rahman • Instructor: Dr. Anshumali Shrivastava • †Rice University

Outline • Problem Background • Theory • LSH Function Preserving Cosine Similarity (Dimension Reduction) • Fast Search Algorithm (From n² to n) • Extending to NLP and Experiment

Motivation • What is the meaning of the word “tezgüno”?

Motivation • Consider the following context: A bottle of tezgüno is on the table. Everyone likes tezgüno. Tezgüno makes you drunk. We make tezgüno out of corn. • Still not sure?

Motivation • Consider the following context: A bottle of tezgüno is on the table. Everyone likes tezgüno. Tezgüno makes you drunk. We make tezgüno out of corn. A bottle of beer is on the table. Everyone likes beer. Beer makes you drunk. We make beer out of corn. • “Beer” and “tezgüno” appear in similar contexts, so they have similar meanings.

Motivation • We want this process to be done automatically by a computer! • So, the main task here is to find similar nouns: noun clustering.

Problem Background • Task: clustering a very large set of nouns • n nodes (n nouns) • Each node has k features (details later). • Calculating the full similarity matrix has complexity O(n²k), • which cannot be tolerated when n is very large!

Problem Background • By 2000: over 500 billion readily accessible words on the web • Now: a far larger amount! • We want linear complexity: O(nk) • Hashing is a good way to get there!

Outline • Problem Background • Theory • LSH Function Preserving Cosine Similarity (Dimension Reduction) • Fast Search Algorithm (From n² to n) • Extending to NLP and Experiment

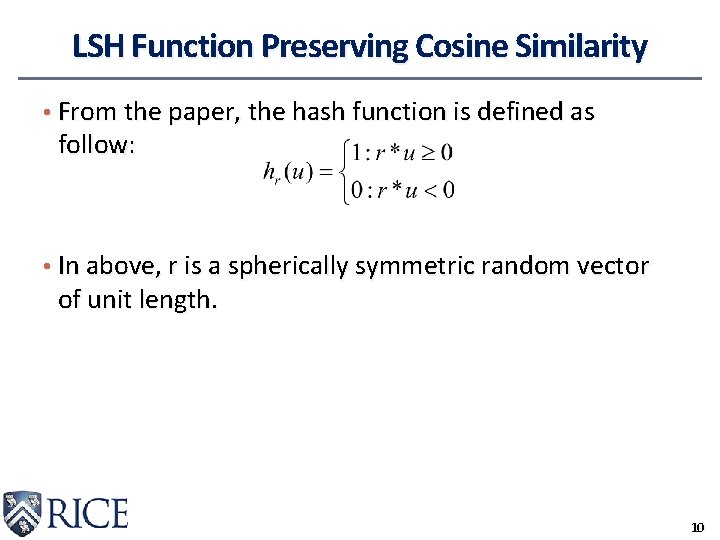

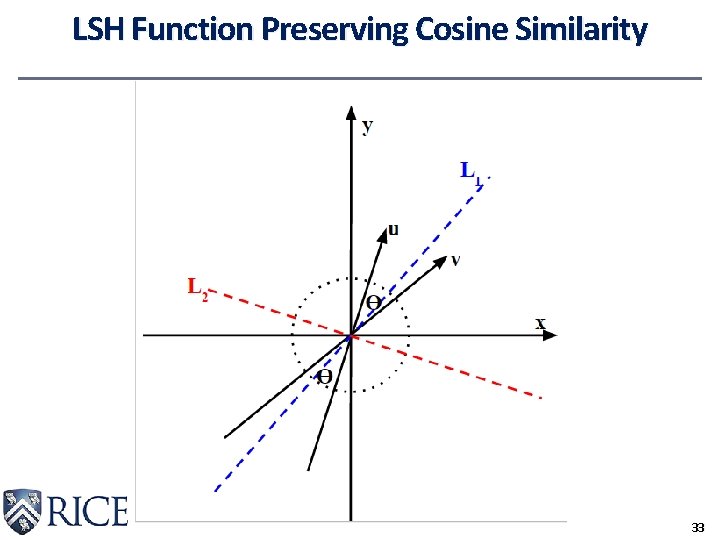

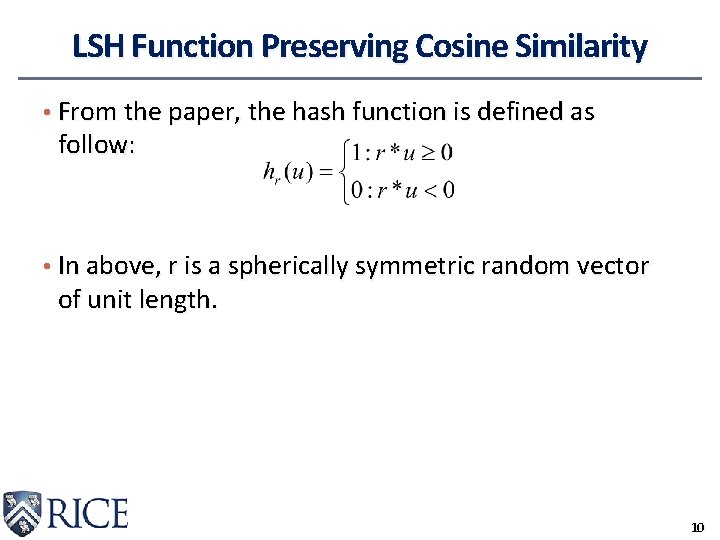

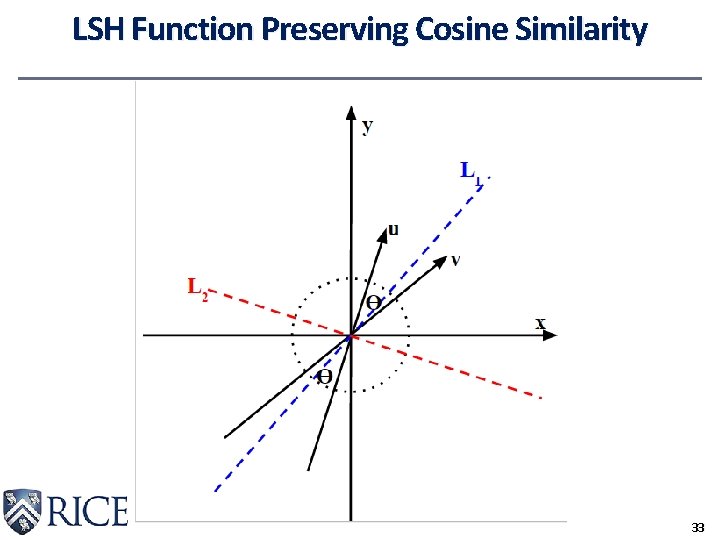

LSH Function Preserving Cosine Similarity • The similarity measure between nodes is cosine similarity: $\cos(\theta(u,v)) = \frac{u \cdot v}{\lVert u \rVert \, \lVert v \rVert}$ • We want to design a hash function that preserves this similarity.
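A minimal Python sketch of the cosine similarity formula, using plain lists; the function name `cosine_similarity` is our own choice, not something from the paper.

```python
import math


def cosine_similarity(u, v):
    """Return cos(theta(u, v)) = (u . v) / (|u| * |v|)."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


print(cosine_similarity([1, 0], [0, 1]))  # orthogonal vectors
print(cosine_similarity([1, 2], [2, 4]))  # same direction, close to 1
```

Computing this exactly for every pair of nouns is what costs O(n²k); the hash function below replaces it with an estimate.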

LSH Function Preserving Cosine Similarity • From the paper, the hash function is defined as follows: $h_r(u) = 1$ if $r \cdot u \ge 0$, and $h_r(u) = 0$ if $r \cdot u < 0$. • Here, r is a spherically symmetric random vector of unit length.
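A small Python sketch of $h_r$. To draw a spherically symmetric unit vector we assume the standard construction of normalizing i.i.d. Gaussian components; the helper names `random_direction` and `h` are hypothetical.

```python
import random


def random_direction(k, rng):
    """Sample r uniformly from the unit sphere in R^k.

    Normalizing a vector of i.i.d. N(0, 1) components gives a spherically
    symmetric direction (standard construction, assumed here).
    """
    while True:
        r = [rng.gauss(0.0, 1.0) for _ in range(k)]
        norm = sum(x * x for x in r) ** 0.5
        if norm > 0:
            return [x / norm for x in r]


def h(r, u):
    """h_r(u) = 1 if r . u >= 0, else 0."""
    return 1 if sum(a * b for a, b in zip(r, u)) >= 0 else 0


rng = random.Random(0)
r = random_direction(3, rng)
# Opposite vectors get opposite bits (almost surely, i.e. unless r . u == 0).
print(h(r, [1.0, 2.0, 3.0]), h(r, [-1.0, -2.0, -3.0]))
```

Only the sign of $r \cdot u$ matters, so scaling u by a positive constant never changes its bit: the hash depends on direction alone, just like cosine similarity.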

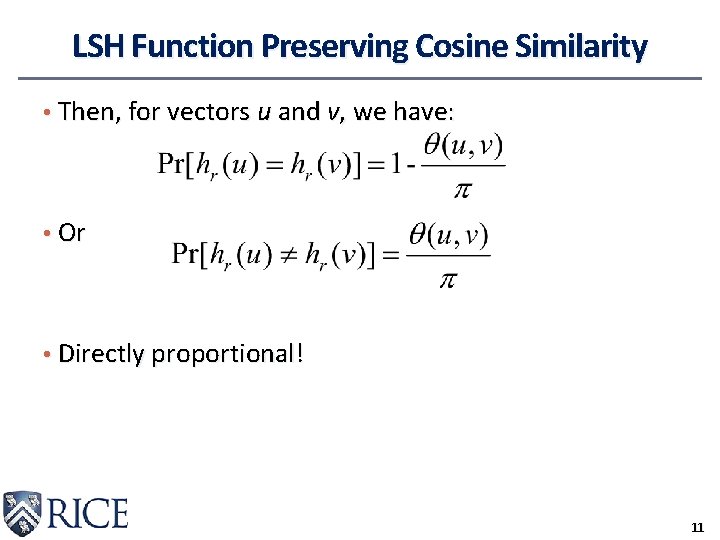

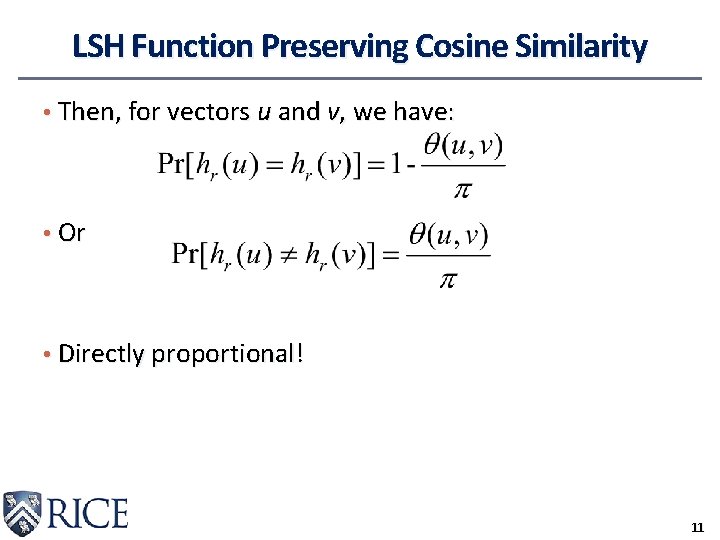

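LSH Function Preserving Cosine Similarity • Then, for vectors u and v, we have: $\Pr[h_r(u) = h_r(v)] = 1 - \frac{\theta(u,v)}{\pi}$ • Or: $\Pr[h_r(u) \neq h_r(v)] = \frac{\theta(u,v)}{\pi}$ • The probability that the two bits differ is directly proportional to the angle $\theta(u,v)$ between u and v!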
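A Monte Carlo sketch of the collision probability: for u = [1, 0] and v = [1, 1] the angle is π/4, so the formula predicts $1 - \frac{1}{4} = 0.75$. The function `collision_rate`, the test vectors, and the trial count are illustrative assumptions.

```python
import math
import random


def collision_rate(u, v, trials, seed=0):
    """Fraction of random hyperplanes r with h_r(u) == h_r(v)."""
    rng = random.Random(seed)
    same = 0
    for _ in range(trials):
        # Gaussian components give a spherically symmetric direction; the sign
        # test r . x >= 0 is unchanged by normalizing r, so we skip that step.
        r = [rng.gauss(0.0, 1.0) for _ in range(len(u))]
        bit_u = sum(a * b for a, b in zip(r, u)) >= 0
        bit_v = sum(a * b for a, b in zip(r, v)) >= 0
        same += (bit_u == bit_v)
    return same / trials


u, v = [1.0, 0.0], [1.0, 1.0]
theta = math.acos(sum(a * b for a, b in zip(u, v)) / (math.hypot(*u) * math.hypot(*v)))
print("predicted 1 - theta/pi:", 1 - theta / math.pi)  # about 0.75 (theta = pi/4)
print("simulated:", collision_rate(u, v, 20000))
```

With 20,000 trials the simulated rate typically lands within about 0.01 of the prediction.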

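LSH Function Preserving Cosine Similarity • From the equation above, we have: $\theta(u,v) = \left(1 - \Pr[h_r(u) = h_r(v)]\right)\pi$ • Then, we can estimate cosine similarity using: $\cos(\theta(u,v)) = \cos\left(\left(1 - \Pr[h_r(u) = h_r(v)]\right)\pi\right)$, where the probability is estimated as the fraction of random vectors r for which $h_r(u) = h_r(v)$.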
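The estimate can be sketched in Python: given d hash bits per vector, the collision probability is estimated by the fraction of positions where the two bit sequences agree, and that value is plugged into $\cos((1 - \Pr)\pi)$. The function name `estimate_cosine` is hypothetical.

```python
import math


def estimate_cosine(bits_u, bits_v):
    """Estimate cos(theta(u, v)) from two equal-length hash-bit sequences.

    Pr[h_r(u) = h_r(v)] is estimated by the fraction of agreeing positions,
    then plugged into cos((1 - Pr) * pi).
    """
    if len(bits_u) != len(bits_v) or not bits_u:
        raise ValueError("need two non-empty bit sequences of equal length")
    agree = sum(a == b for a, b in zip(bits_u, bits_v)) / len(bits_u)
    return math.cos((1 - agree) * math.pi)


print(estimate_cosine("001101", "001101"))  # identical bits: 1.0
print(estimate_cosine("001101", "001100"))  # one of six bits differs: about cos(pi/6)
```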

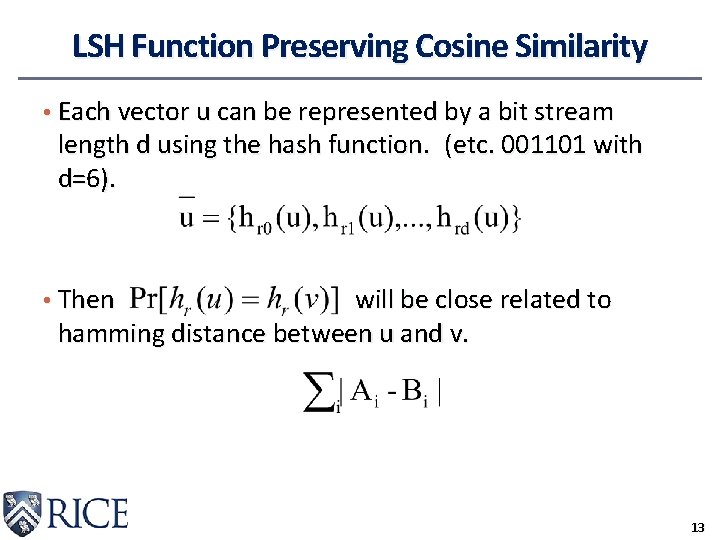

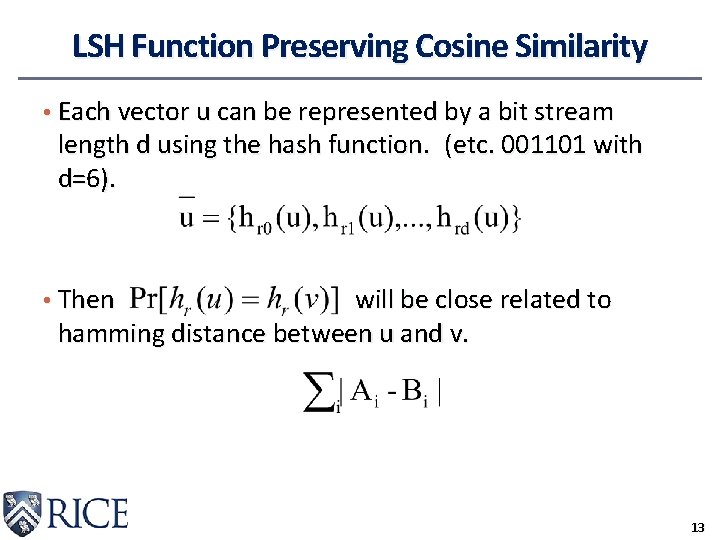

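LSH Function Preserving Cosine Similarity • Using d random vectors $r_1, \ldots, r_d$, each vector u can be represented by a bit stream $\bar{u} = (h_{r_1}(u), \ldots, h_{r_d}(u))$ of length d (e.g. 001101 with d = 6). • Then $\theta(u,v)$ is closely related to the Hamming distance between $\bar{u}$ and $\bar{v}$: $\theta(u,v) \approx \frac{\text{hamming}(\bar{u}, \bar{v})}{d}\,\pi$.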
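A sketch of turning a k-dimensional vector into a d-bit stream with d random vectors, plus the Hamming distance between two streams. Helper names and the toy vectors are hypothetical; the random vectors are left unnormalized because only the sign of $r \cdot u$ is used.

```python
import random


def make_hyperplanes(d, k, seed=0):
    """d random Gaussian vectors r_1..r_d in R^k (normalization is unnecessary for the sign test)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(k)] for _ in range(d)]


def signature(u, hyperplanes):
    """Bit stream of length d: bit i is h_{r_i}(u) = 1 if r_i . u >= 0 else 0."""
    return "".join(
        "1" if sum(r_j * u_j for r_j, u_j in zip(r, u)) >= 0 else "0" for r in hyperplanes
    )


def hamming(a, b):
    """Number of positions where two equal-length bit streams differ."""
    if len(a) != len(b):
        raise ValueError("bit streams must have the same length")
    return sum(x != y for x, y in zip(a, b))


planes = make_hyperplanes(d=6, k=4)
s_u = signature([0.2, 1.0, 0.0, 3.0], planes)
s_v = signature([0.3, 0.9, 0.1, 2.5], planes)  # a nearby vector
print(s_u, s_v, hamming(s_u, s_v))
```

In practice d is chosen much smaller than the number of features k, so each noun shrinks from a k-dimensional vector to d bits.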

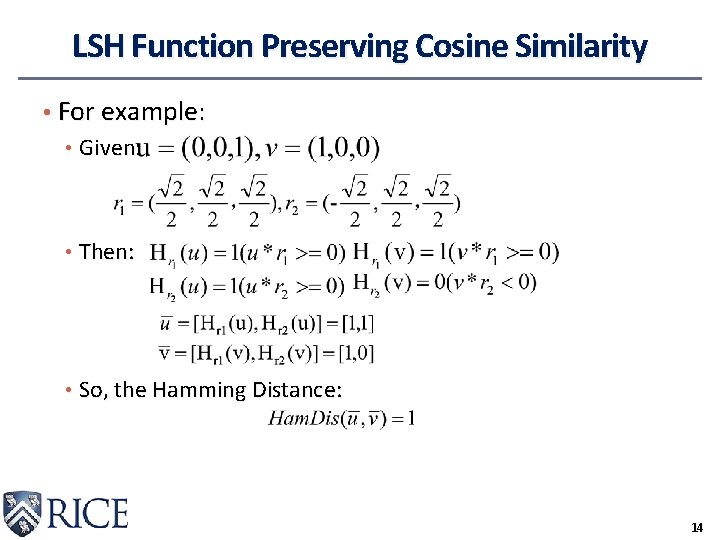

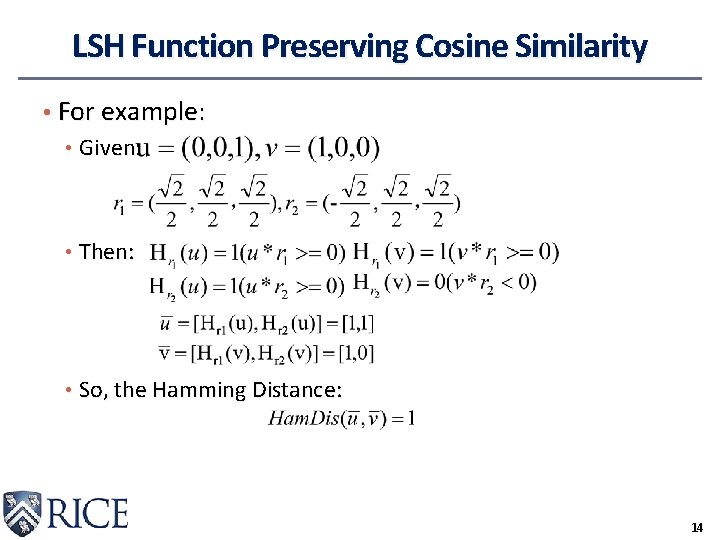

LSH Function Preserving Cosine Similarity • For example: • Given: • Then: • So, the Hamming distance:

LSH Function Preserving Cosine Similarity • Convert: finding the cosine distance between two vectors ⇒ finding the Hamming distance between two bit streams. • Dimension reduction! But the complexity is still O(n²).

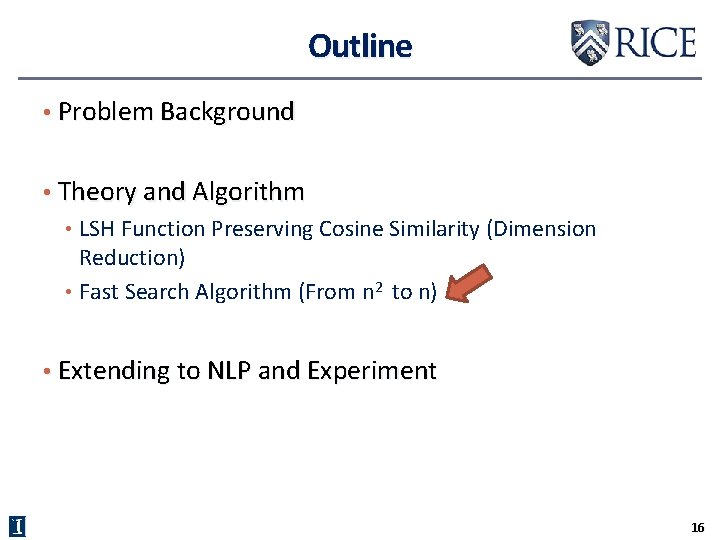

Outline • Problem Background • Theory and Algorithm • LSH Function Preserving Cosine Similarity (Dimension Reduction) • Fast Search Algorithm (From n² to n) • Extending to NLP and Experiment

Fast Search Algorithm • Task: • Given the signature of each vector, i.e. a bit stream (e.g. 1001) per vector, • find the nearest neighbors of each vector.
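For reference, the brute-force way to solve this task compares every pair of signatures. This Python sketch (hypothetical names and toy bit streams) makes the O(n²) cost explicit; the fast search algorithm is meant to avoid it.

```python
def hamming(a, b):
    """Number of positions where two equal-length bit streams differ."""
    if len(a) != len(b):
        raise ValueError("bit streams must have the same length")
    return sum(x != y for x, y in zip(a, b))


def exact_neighbors(streams, top):
    """Brute-force baseline: compare every pair of signatures.

    With n streams of length d this costs O(n^2 d).
    """
    result = {}
    for name, bits in streams.items():
        # Sort by (distance, name) so ties are broken deterministically.
        scored = sorted((hamming(bits, other_bits), other)
                        for other, other_bits in streams.items() if other != name)
        result[name] = [other for _, other in scored[:top]]
    return result


# Hypothetical toy signatures.
streams = {"beer": "1001", "tezguno": "1011", "table": "0110", "wine": "1000"}
print(exact_neighbors(streams, top=2))
```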

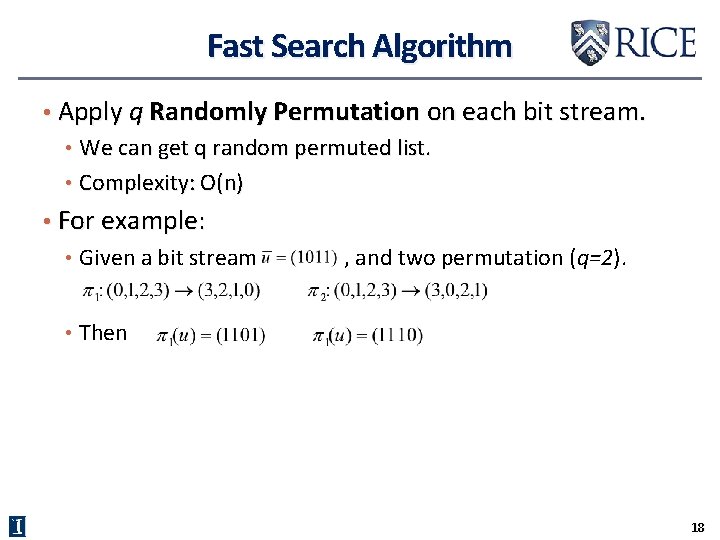

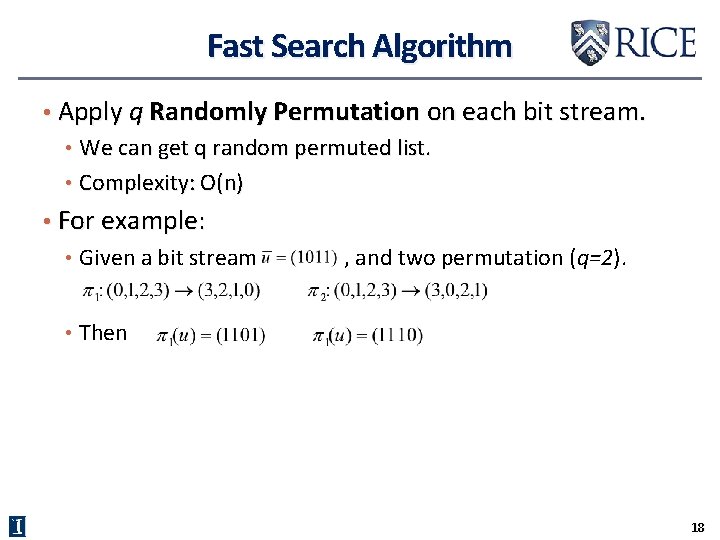

Fast Search Algorithm • Apply q random permutations to the bit positions of every bit stream (each permutation is applied in the same way to all streams). • This gives q randomly permuted lists. • Complexity: O(n) • For example: • Given a bit stream and two permutations (q = 2), • Then
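A Python sketch of the permutation step. The key property is that applying the same permutation of bit positions to two streams leaves their Hamming distance unchanged, so the permuted lists still encode the same similarities. Function names and toy data are hypothetical.

```python
import random


def random_permutations(d, q, seed=0):
    """q random permutations of the bit positions 0..d-1."""
    rng = random.Random(seed)
    perms = []
    for _ in range(q):
        perm = list(range(d))
        rng.shuffle(perm)
        perms.append(perm)
    return perms


def permute(bits, perm):
    """Position i of the output takes bit perm[i] of the input."""
    return "".join(bits[i] for i in perm)


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


streams = {"beer": "1001", "tezguno": "1011", "table": "0110"}
perms = random_permutations(d=4, q=2)
# One permuted list per permutation; every stream uses the same permutation.
permuted_lists = [{name: permute(bits, p) for name, bits in streams.items()} for p in perms]
for p, lst in zip(perms, permuted_lists):
    print(p, lst)
```

Each permutation touches every stream once, so producing the q lists takes O(qdn) time, which is O(n) when q and d are constants.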

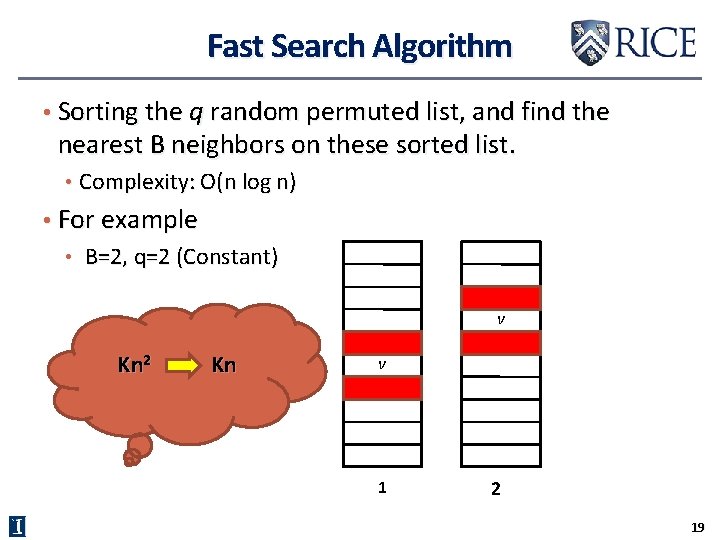

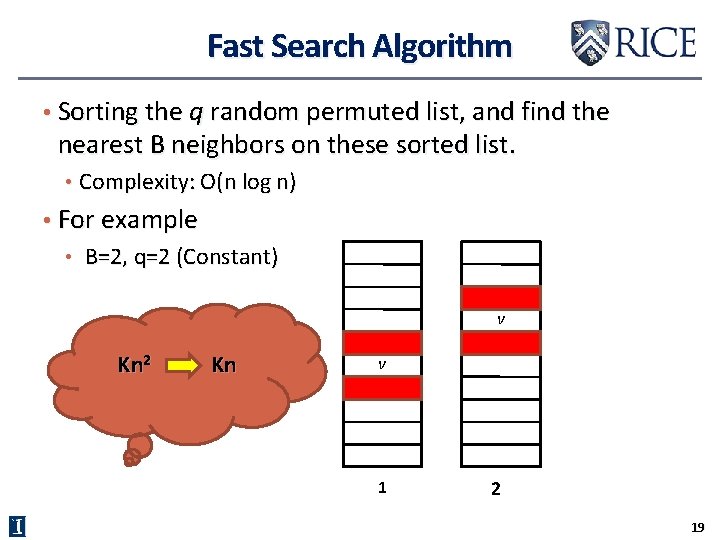

Fast Search Algorithm • Sort each of the q permuted lists, then compute the Hamming distance between each bit stream and its B nearest neighbors in each sorted list. • Complexity: O(n log n) • For example: • B = 2, q = 2 (constants)
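A Python sketch of the whole fast search. We read "the nearest B neighbors on the sorted list" as the B entries that follow each stream in sorted order, with each pair recorded in both directions. That reading, the function names, and the toy data are our assumptions, not the paper's code.

```python
import random


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


def fast_neighbors(streams, q, B, seed=0):
    """Approximate Hamming nearest neighbours via q random permutations.

    For each permutation: permute every bit stream the same way, sort the
    permuted streams, and pair each stream with the B streams that follow it
    in sorted order (pairs are recorded in both directions). Only these
    candidate pairs get an exact Hamming distance.
    """
    rng = random.Random(seed)
    names = list(streams)
    d = len(streams[names[0]])
    candidates = {name: set() for name in names}
    for _ in range(q):
        perm = list(range(d))
        rng.shuffle(perm)
        # Sorting n permuted streams costs O(n log n) comparisons.
        order = sorted(names, key=lambda n: "".join(streams[n][i] for i in perm))
        for pos, name in enumerate(order):
            for other in order[pos + 1 : pos + 1 + B]:
                candidates[name].add(other)
                candidates[other].add(name)
    # Rank each stream's candidates by exact Hamming distance (ties by name).
    return {
        name: sorted(candidates[name], key=lambda o: (hamming(streams[name], streams[o]), o))
        for name in names
    }


streams = {"a": "110010", "b": "110011", "c": "001101", "d": "001100"}
print(fast_neighbors(streams, q=3, B=1))
```

With B and q fixed, sorting dominates at O(n log n) per permutation, and only O(nqB) Hamming distances are computed instead of O(n²).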


Question • What is the Hamming distance between the two bit streams A = [0011000] and B = [1111001]? • Ans. Hamming(A, B) = 3 (they differ in positions 1, 2 and 7) • Suppose we have two 2-dimensional vectors u = [1, 0] and v = [0, 1], and r is a spherically symmetric random vector. Then what is the value of ? •
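A quick Python check of the first answer. As an illustration for the second part, it also evaluates θ(u, v) and the collision probability $1 - \theta(u,v)/\pi$ from the hash-function slides; we do not assume this is the quantity the question's blank refers to.

```python
import math


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


A, B = "0011000", "1111001"
print(hamming(A, B))  # differs in positions 1, 2 and 7

u, v = [1.0, 0.0], [0.0, 1.0]
theta = math.acos((u[0] * v[0] + u[1] * v[1]) / (math.hypot(*u) * math.hypot(*v)))
print(theta, 1 - theta / math.pi)  # angle pi/2 and collision probability 1/2
```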

Outline • Problem Background • Theory and Algorithm • LSH Function Preserving Cosine Similarity (Dimension Reduction) • Fast Search Algorithm (From n² to n) • Extending to NLP and Experiment

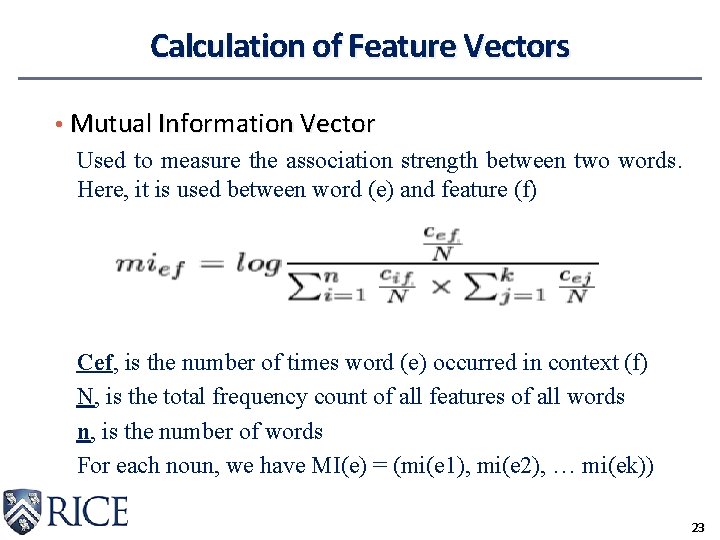

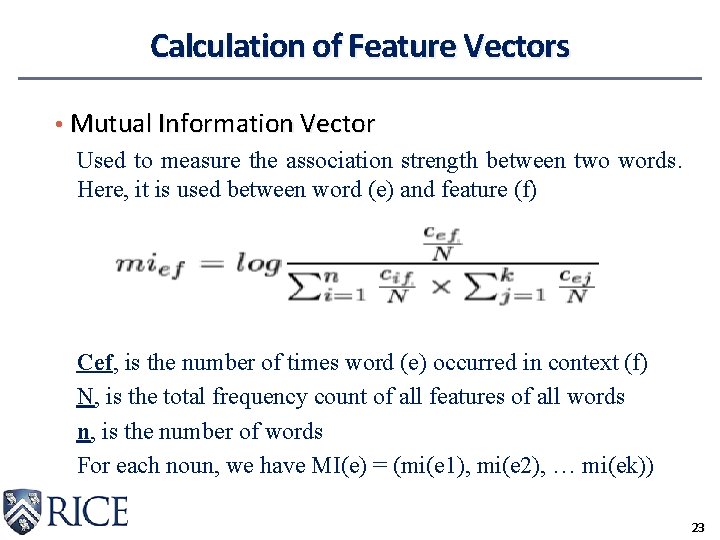

Testing Datasets

| | Web Corpus | Newspaper Corpus |
|---|---|---|
| Corpus size | 70 million web pages, reduced to 31 million (138 GB) after removing non-English documents and duplicate and near-duplicate documents | 6 GB |
| Noun identification | Noun-phrase identifier | Dependency parser Minipar (Lin, 1994) |
| Unique nouns | 655,495 | 65,547 |
| Feature identification for noun vectors | Lexical context around each noun phrase (←← noun phrase →→) | Grammatical context of the noun, as given by Minipar |
| Feature size | 1,306,482 | 940,154 |

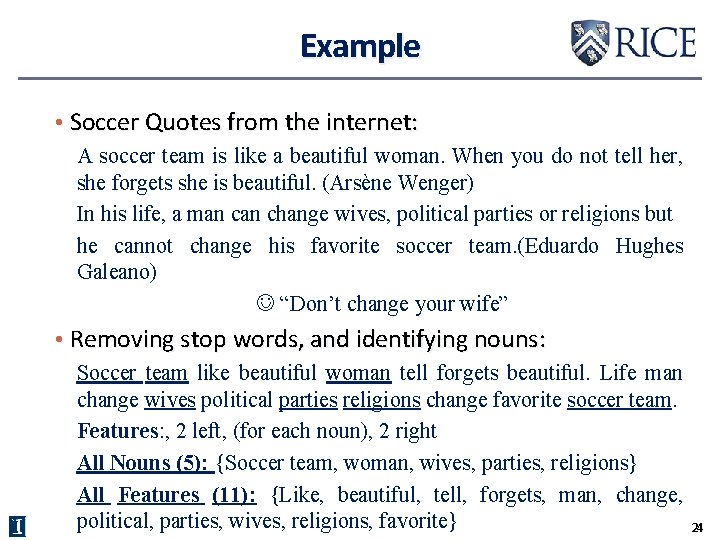

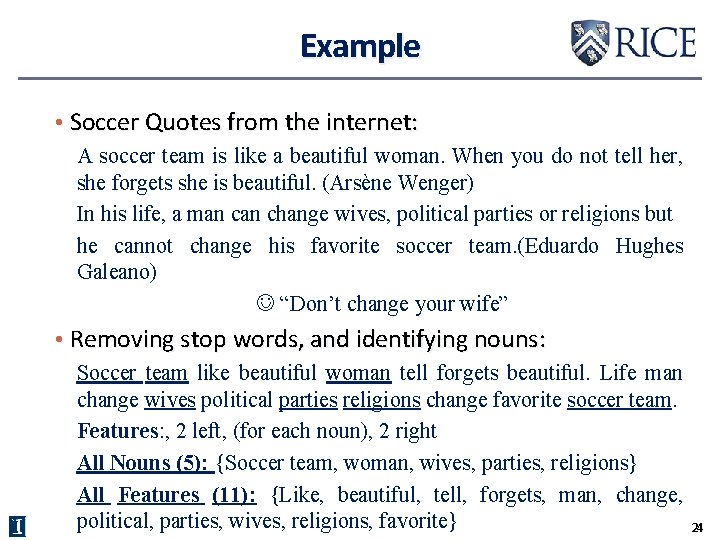

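Calculation of Feature Vectors • Mutual information vector: mutual information measures the association strength between two words. Here, it is used between a word $e$ and a feature $f$:

$$mi_{ef} = \log \frac{c_{ef}/N}{\left(\sum_{i=1}^{n} c_{if}/N\right) \times \left(\sum_{j=1}^{k} c_{ej}/N\right)}$$

where $c_{ef}$ is the number of times word $e$ occurred in context $f$, $N$ is the total frequency count of all features of all words, $n$ is the number of words, and $k$ is the number of features. • For each noun $e$, we have $MI(e) = (mi_{ef_1}, mi_{ef_2}, \ldots, mi_{ef_k})$.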
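A Python sketch of the mutual-information formula over sparse count dictionaries. We use log base 10 because it reproduces the ≈ 0.4 of the worked example on the later slides (the base only rescales the vectors), and we assume that zero counts are simply left out of the sparse vector. Function name and toy counts are hypothetical.

```python
import math
from collections import defaultdict


def mi_vectors(counts):
    """Mutual-information vectors from raw word-feature counts.

    counts[e][f] = c_ef, the number of times word e occurred in context f.
    mi_ef = log10((c_ef / N) / ((sum_i c_if / N) * (sum_j c_ej / N))).
    Features with c_ef = 0 are left out (treated as 0 in a sparse vector).
    """
    feature_totals = defaultdict(int)  # sum over words i of c_if
    word_totals = {}                   # sum over features j of c_ej
    for word, feats in counts.items():
        word_totals[word] = sum(feats.values())
        for f, c in feats.items():
            feature_totals[f] += c
    n_total = sum(word_totals.values())  # N: all feature counts of all words
    return {
        word: {
            f: math.log10((c / n_total)
                          / ((feature_totals[f] / n_total) * (word_totals[word] / n_total)))
            for f, c in feats.items() if c > 0
        }
        for word, feats in counts.items()
    }


# Hypothetical toy counts, for illustration only.
toy = {"beer": {"drink": 3, "bottle": 1}, "tezguno": {"drink": 2, "corn": 2}}
print(mi_vectors(toy))
```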

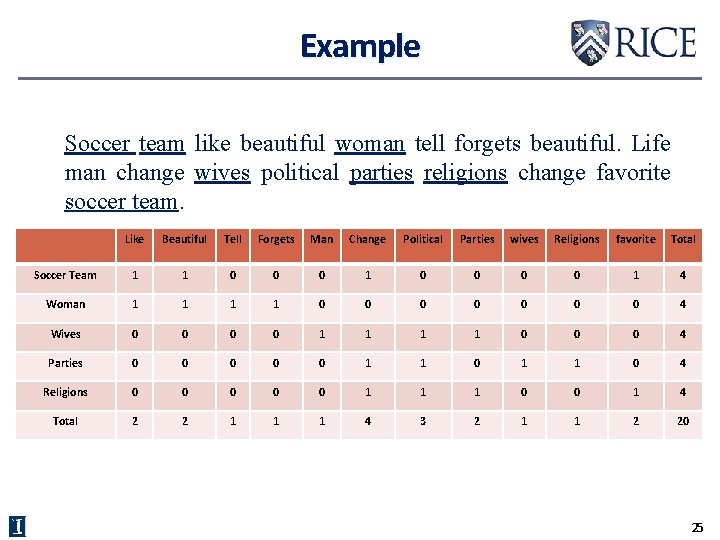

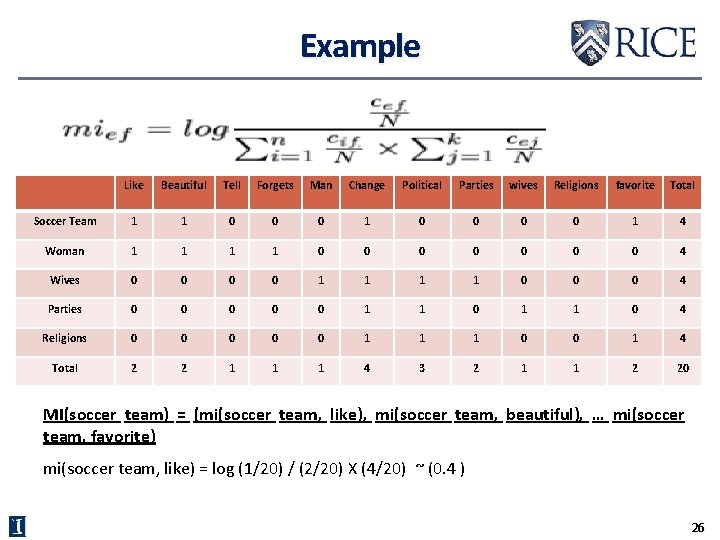

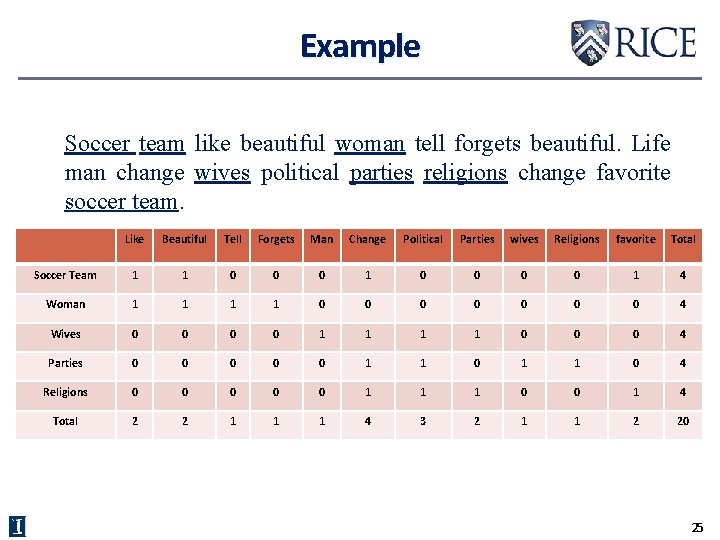

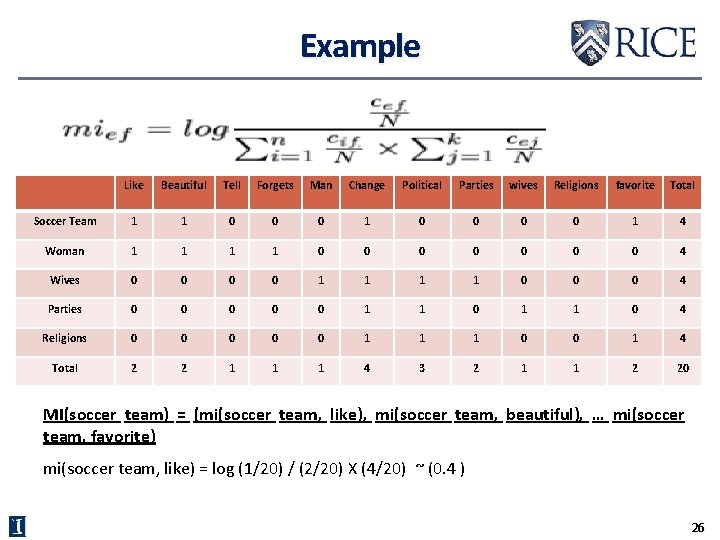

Example • Soccer quotes from the internet: “A soccer team is like a beautiful woman. When you do not tell her, she forgets she is beautiful.” (Arsène Wenger) “In his life, a man can change wives, political parties or religions, but he cannot change his favorite soccer team.” (Eduardo Hughes Galeano) “Don’t change your wife” • Removing stop words and identifying nouns: Soccer team like beautiful woman tell forgets beautiful. Life man change wives political parties religions change favorite soccer team. • Features: the 2 words to the left and the 2 words to the right of each noun • All nouns (5): {soccer team, woman, wives, parties, religions} • All features (11): {like, beautiful, tell, forgets, man, change, political, parties, wives, religions, favorite}

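Example • Soccer team like beautiful woman tell forgets beautiful. Life man change wives political parties religions change favorite soccer team.

| Noun \ Feature | Like | Beautiful | Tell | Forgets | Man | Change | Political | Parties | Wives | Religions | Favorite | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Soccer team | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 4 |
| Woman | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 |
| Wives | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 4 |
| Parties | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 1 | 0 | 4 |
| Religions | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 4 |
| Total | 2 | 2 | 1 | 1 | 1 | 4 | 3 | 2 | 1 | 1 | 2 | 20 |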
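The co-occurrence counts can be produced mechanically with a window of 2 words on each side of every noun. This Python sketch treats "soccer team" as a single token and uses the slide's list of 5 nouns; the function and variable names are hypothetical.

```python
from collections import Counter

# The two example sentences after stop-word removal; "soccer team" is one token.
SENTENCES = [
    ["soccer team", "like", "beautiful", "woman", "tell", "forgets", "beautiful"],
    ["life", "man", "change", "wives", "political", "parties", "religions",
     "change", "favorite", "soccer team"],
]
NOUNS = {"soccer team", "woman", "wives", "parties", "religions"}


def context_counts(sentences, nouns, window=2):
    """Count the words within `window` positions left and right of each noun."""
    counts = {}
    for tokens in sentences:
        for i, token in enumerate(tokens):
            if token not in nouns:
                continue
            ctx = counts.setdefault(token, Counter())
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    ctx[tokens[j]] += 1
    return counts


counts = context_counts(SENTENCES, NOUNS)
column_totals = sum(counts.values(), Counter())
for noun, ctx in counts.items():
    print(noun, dict(ctx), sum(ctx.values()))
print("column totals:", dict(column_totals), sum(column_totals.values()))
```

Each noun gets 4 context words, giving the row totals of 4 and the grand total N = 20.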

Example • MI(soccer team) = (mi(soccer team, like), mi(soccer team, beautiful), …, mi(soccer team, favorite)) • With $c_{ef} = 1$, a "like" column total of 2, a "soccer team" row total of 4, and $N = 20$: mi(soccer team, like) $= \log_{10} \frac{1/20}{(2/20) \times (4/20)} = \log_{10} 2.5 \approx 0.4$

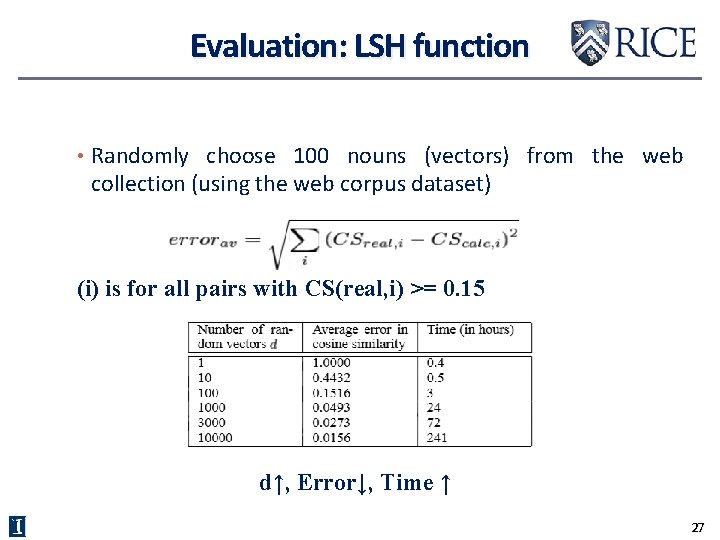

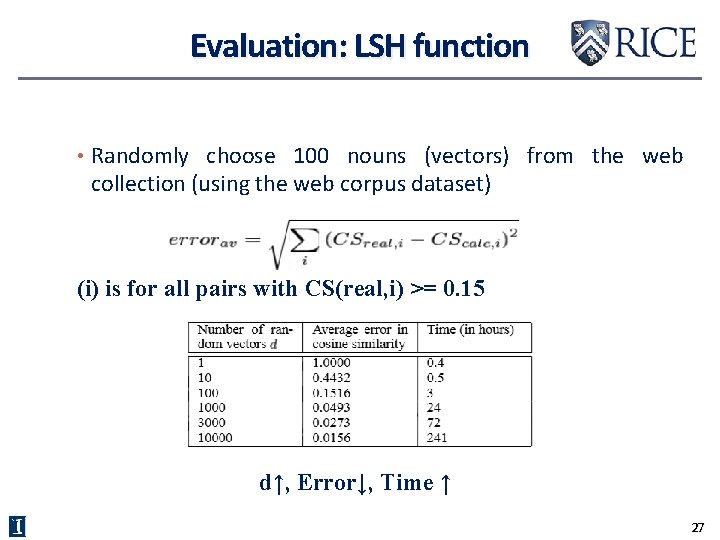

Evaluation: LSH function • Randomly choose 100 nouns (vectors) from the web corpus dataset. • (i) covers all pairs with real cosine similarity CS(real, i) ≥ 0.15. • Result: as d increases, the error decreases and the time increases.
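A Python sketch of the "d↑, Error↓" trend on synthetic data: it measures the mean absolute difference between the true cosine and the estimate $\cos(\pi \cdot \text{hamming}/d)$ for several d. The data (each v a noisy copy of u), the dimensions, and all names are hypothetical, and the numbers will not match the paper's experiment.

```python
import math
import random


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def signature(x, planes):
    """One bit per random vector r: 1 if r . x >= 0 else 0."""
    return [1 if sum(r_i * x_i for r_i, x_i in zip(r, x)) >= 0 else 0 for r in planes]


def mean_abs_error(pairs, d, k, seed=0):
    """Mean |true cosine - cos(pi * hamming / d)| over the given pairs."""
    rng = random.Random(seed)
    planes = [[rng.gauss(0.0, 1.0) for _ in range(k)] for _ in range(d)]
    total = 0.0
    for u, v in pairs:
        ham = sum(a != b for a, b in zip(signature(u, planes), signature(v, planes)))
        total += abs(cosine(u, v) - math.cos(math.pi * ham / d))
    return total / len(pairs)


# Synthetic data (hypothetical): each v is a noisy copy of u, so most pairs are similar.
k = 20
data_rng = random.Random(42)
pairs = []
for _ in range(50):
    u = [data_rng.gauss(0.0, 1.0) for _ in range(k)]
    v = [a + data_rng.gauss(0.0, 1.0) for a in u]
    pairs.append((u, v))

for d in (16, 64, 256, 1024):
    print(d, round(mean_abs_error(pairs, d, k), 3))
```

Larger d also means computing more dot products per vector, which is where the "Time ↑" comes from.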

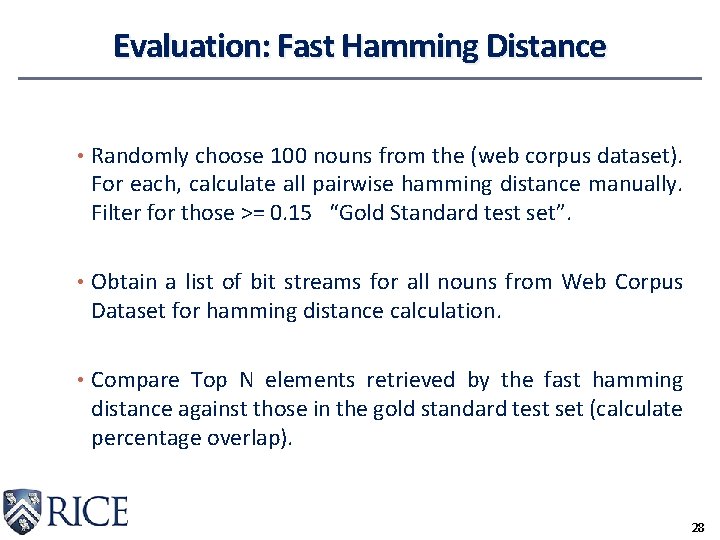

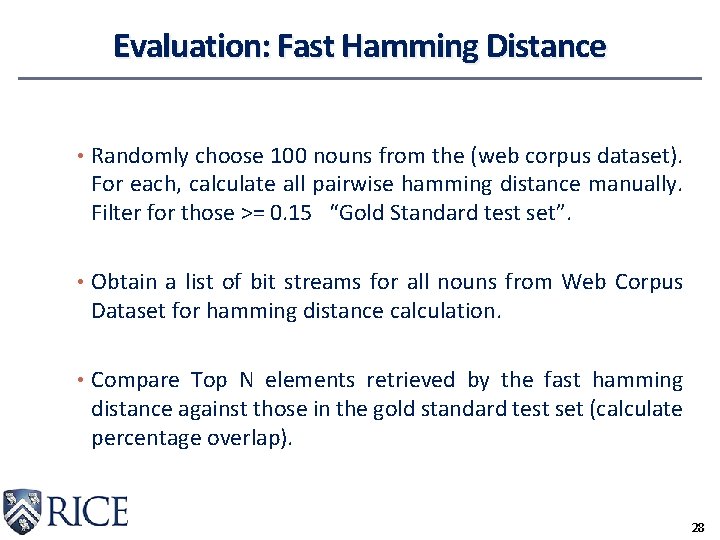

Evaluation: Fast Hamming Distance • Randomly choose 100 nouns from the web corpus dataset. For each, calculate the Hamming distance to all other nouns exhaustively, and filter for those with similarity ≥ 0.15: this is the “gold standard test set”. • Obtain a list of bit streams for all nouns in the web corpus dataset for the Hamming distance calculation. • Compare the top N elements retrieved by the fast Hamming distance search against those in the gold standard test set (percentage overlap).
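A Python sketch of the percentage-overlap measure. We assume overlap is counted relative to the gold standard's top-N list; the function name and the neighbor lists are hypothetical.

```python
def top_n_overlap(retrieved, gold, n):
    """Percentage of the gold standard's top-n items also found in the retrieved top-n."""
    gold_top = set(gold[:n])
    if not gold_top:
        raise ValueError("gold standard list is empty")
    return 100.0 * len(gold_top & set(retrieved[:n])) / len(gold_top)


# Hypothetical neighbour lists for one noun.
gold = ["wine", "whisky", "vodka", "cider"]
fast = ["wine", "vodka", "juice", "cider"]
print(top_n_overlap(fast, gold, n=3))
```

Averaging this score over the 100 sampled nouns gives one accuracy number per setting of B and q.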

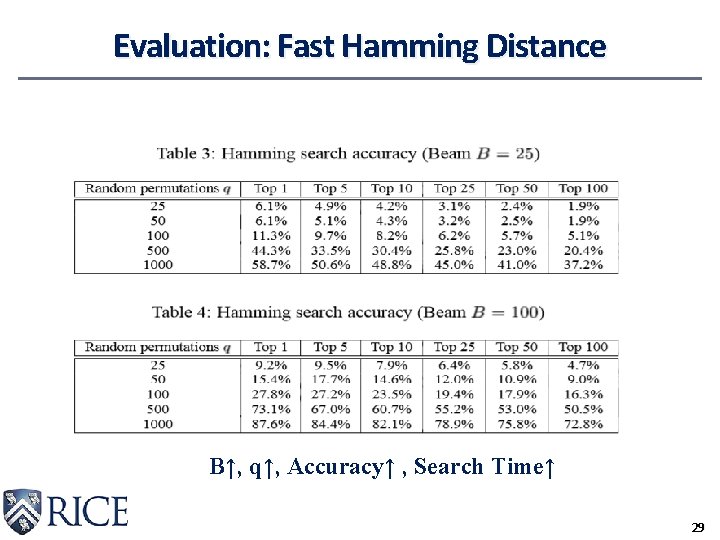

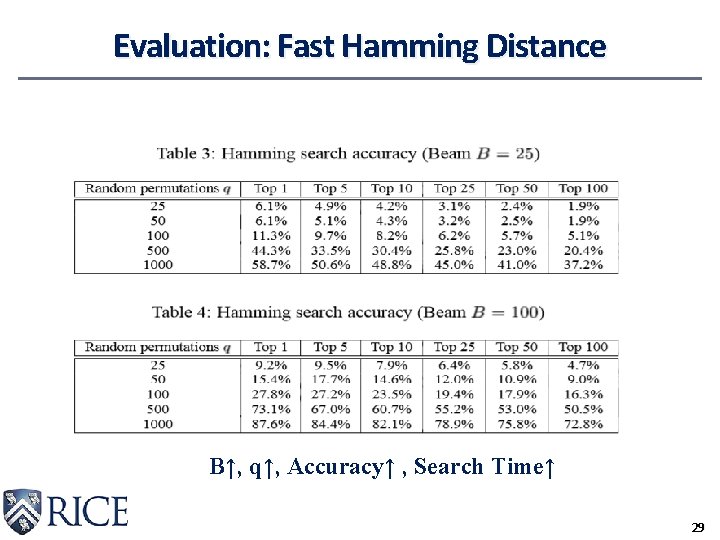

Evaluation: Fast Hamming Distance • As B and q increase, the accuracy increases, and so does the search time.

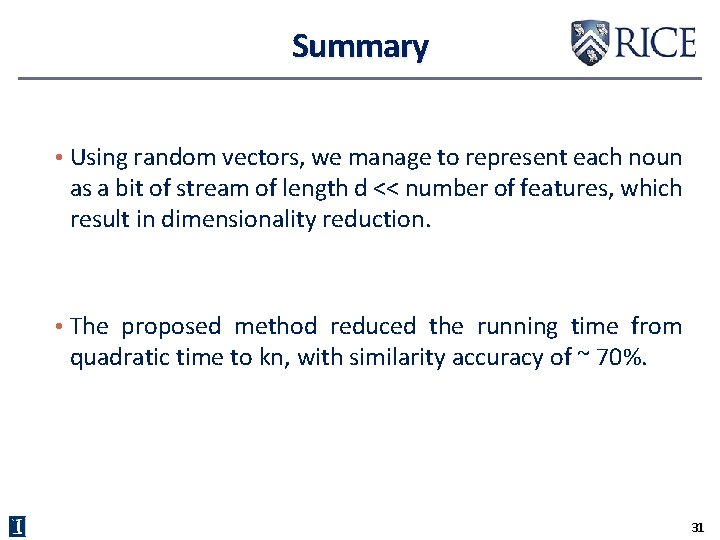

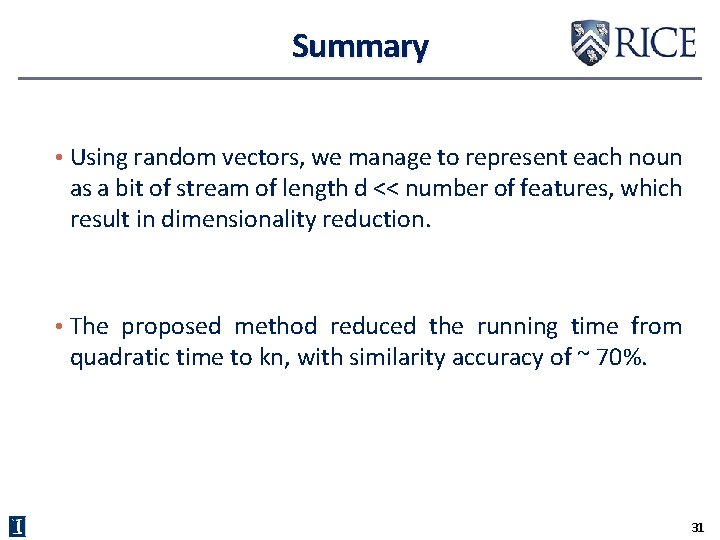

Evaluation: Final Similarity Lists • Using the Newspaper Corpus: • Randomly choose 100 nouns, compute their top N similar elements using the randomized algorithm, compare them with those produced by the Pantel and Lin (2002) system, and calculate the percentage overlap.

Summary • Using random vectors, we represent each noun as a bit stream of length d ≪ the number of features, which results in dimensionality reduction. • The proposed method reduces the running time from quadratic, O(n²k), to O(nk), with a similarity accuracy of ~70%.

