Random walks on undirected graphs and a little

- Slides: 34

Random walks on undirected graphs and a little bit about Markov Chains Guy

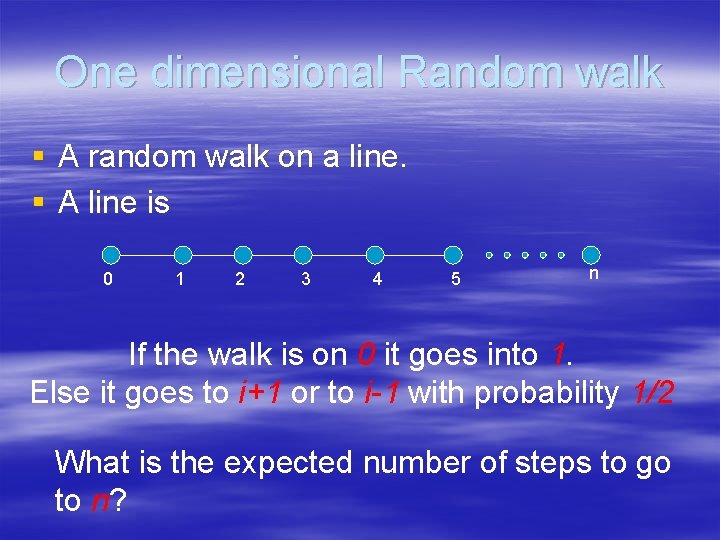

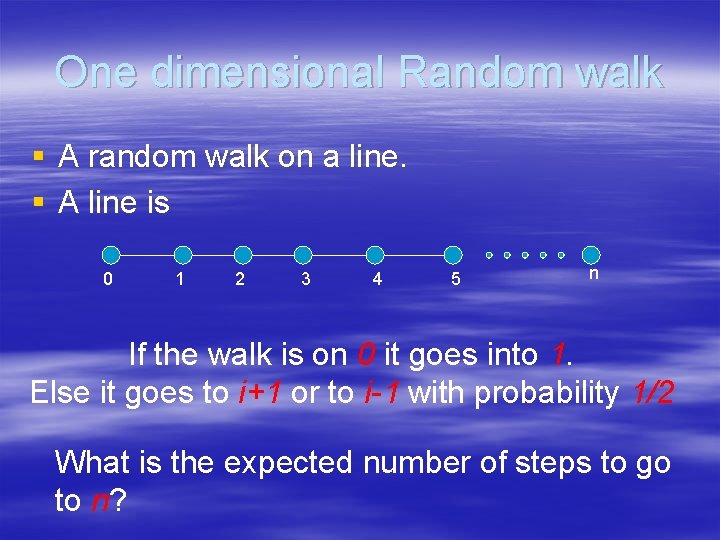

One-dimensional random walk

- A random walk on a line.
- The line has vertices $0, 1, 2, \dots, n$.
- If the walk is at 0 it moves to 1. Otherwise, from $i$ it moves to $i+1$ or to $i-1$, each with probability 1/2.
- What is the expected number of steps to reach $n$?

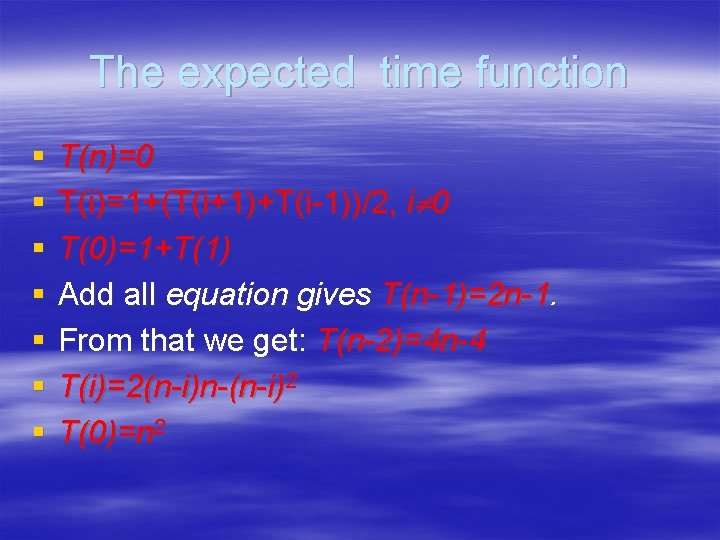

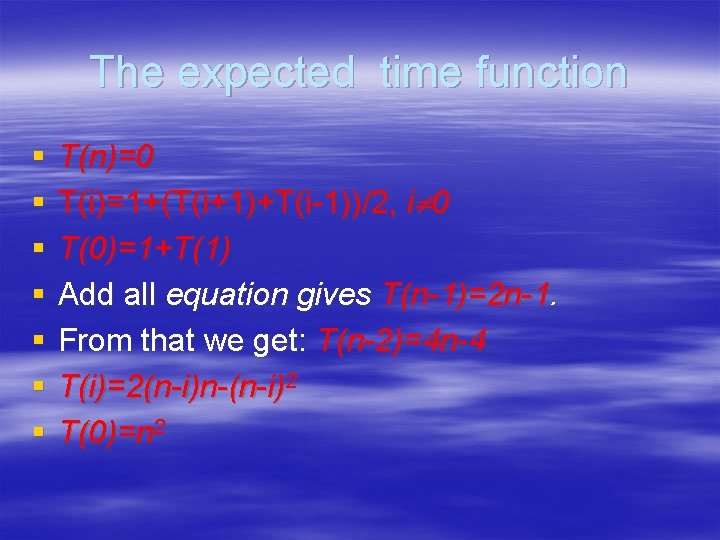

The expected time function

- $T(n) = 0$
- $T(i) = 1 + \frac{T(i+1) + T(i-1)}{2}$ for $0 < i < n$
- $T(0) = 1 + T(1)$
- Adding all the equations gives $T(n-1) = 2n - 1$.
- From that we get $T(n-2) = 4n - 4$, and in general $T(i) = 2(n-i)n - (n-i)^2$.
- In particular $T(0) = n^2$.
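The recurrence can be checked numerically. The sketch below is only an illustration (the function name `expected_steps` is ours, not from the slides): it rewrites the equations in terms of the differences $T(i) - T(i+1)$, solves them backwards from $T(n) = 0$, and compares the result with the closed form, which simplifies to $n^2 - i^2$.

```python
def expected_steps(n):
    """Return [T(0), ..., T(n)] for the walk on 0..n that always moves 0 -> 1."""
    # Let D(i) = T(i) - T(i+1). T(0) = 1 + T(1) gives D(0) = 1, and the
    # interior equation 2T(i) = 2 + T(i+1) + T(i-1) gives D(i) = D(i-1) + 2.
    diffs = [2 * i + 1 for i in range(n)]
    times = [0] * (n + 1)              # T(n) = 0
    for i in range(n - 1, -1, -1):
        times[i] = times[i + 1] + diffs[i]
    return times


n = 10
T = expected_steps(n)
# Check the original equations and the closed form T(i) = n^2 - i^2.
assert T[0] == 1 + T[1]
assert all(2 * T[i] == 2 + T[i + 1] + T[i - 1] for i in range(1, n))
assert all(T[i] == n * n - i * i for i in range(n + 1))
print(T[n - 1], T[n - 2], T[0])
```

Working with differences avoids solving a full linear system: each equation only links neighboring values.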

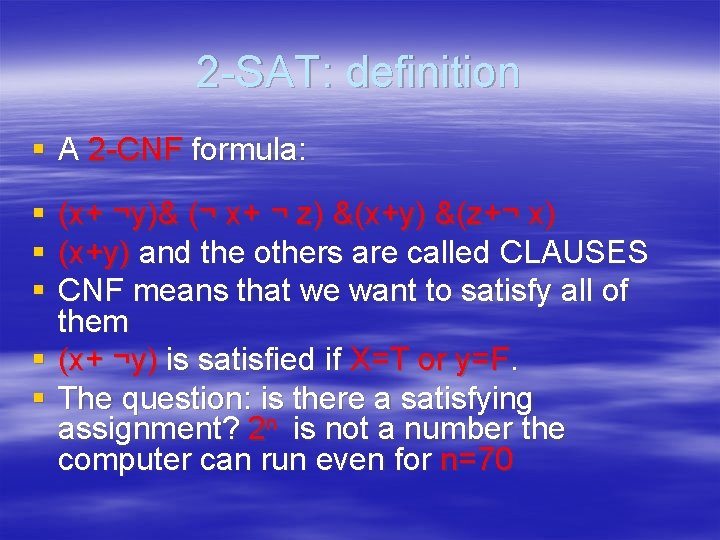

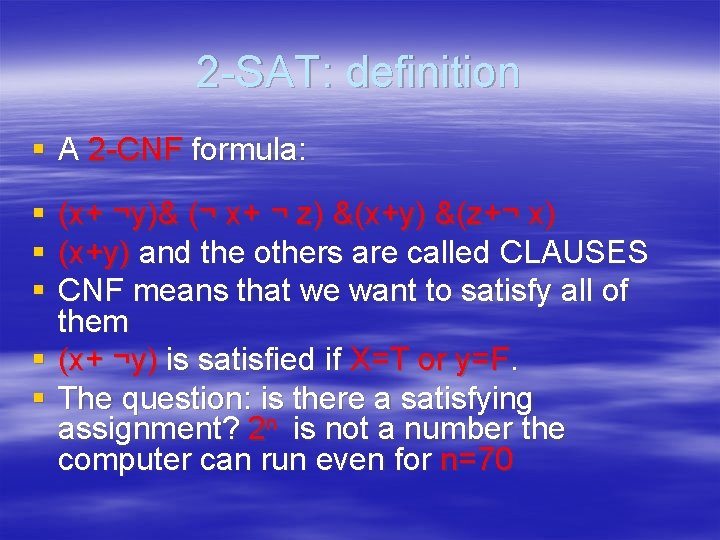

2-SAT: definition

- A 2-CNF formula: (x + ¬y) & (¬x + ¬z) & (x + y) & (z + ¬x)
- (x + y) and the others are called clauses.
- CNF means that we want to satisfy all of them.
- (x + ¬y) is satisfied if x = T or y = F.
- The question: is there a satisfying assignment? Trying all $2^n$ assignments is infeasible: a computer cannot run that many steps even for $n = 70$.

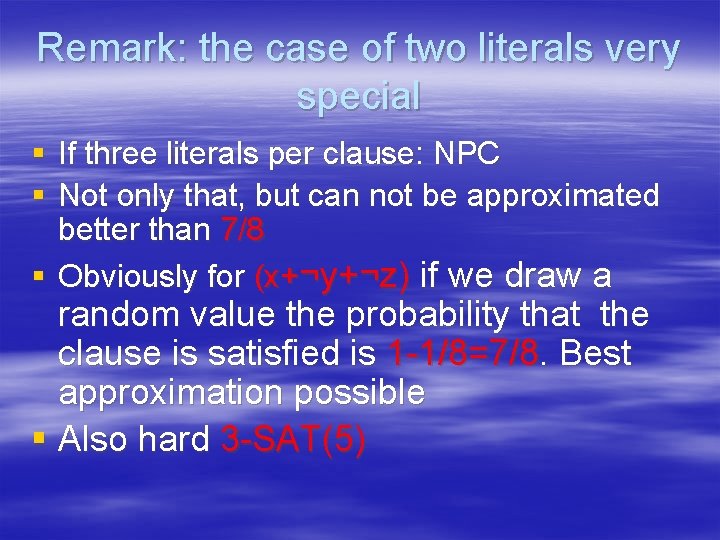

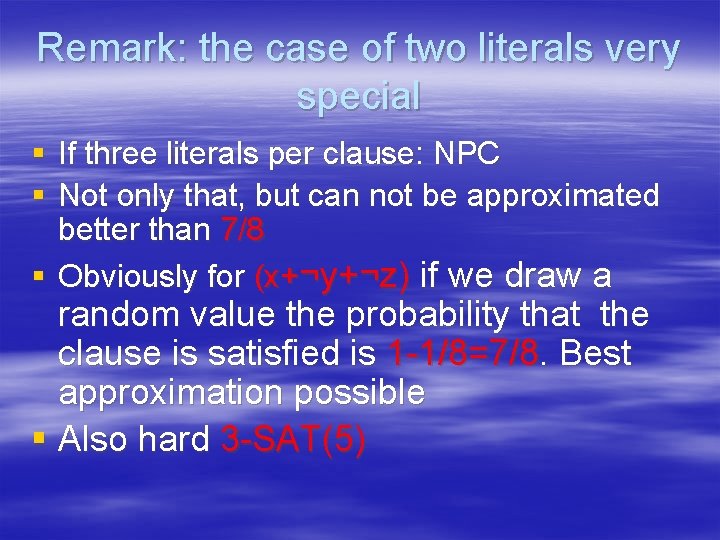

Remark: the case of two literals is very special

- With three literals per clause the problem is NP-complete.
- Not only that, but it cannot be approximated within a ratio better than 7/8.
- For a clause such as (x + ¬y + ¬z), a uniformly random assignment satisfies it with probability 1 − 1/8 = 7/8, so a random assignment already achieves the best possible approximation.
- Also hard: 3-SAT(5).

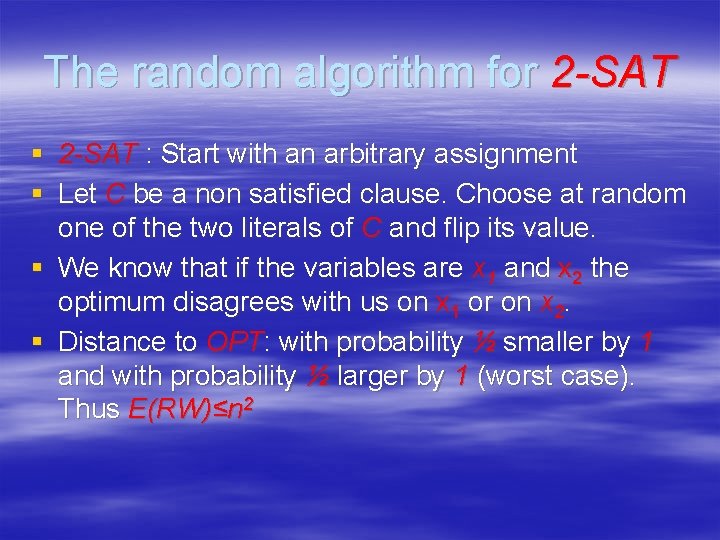

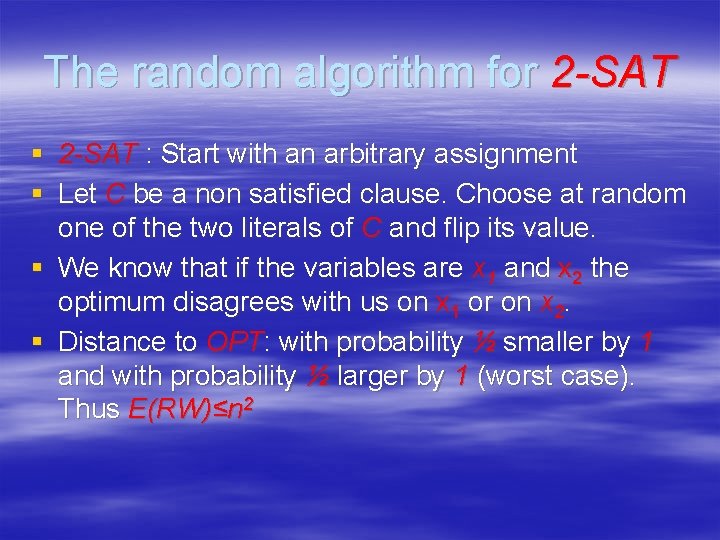

The randomized algorithm for 2-SAT

- Start with an arbitrary assignment.
- Let C be an unsatisfied clause. Choose one of the two literals of C at random and flip its value.
- If the variables of C are $x_1$ and $x_2$, then the optimum (a fixed satisfying assignment, OPT) disagrees with us on $x_1$ or on $x_2$.
- Distance to OPT: in the worst case it shrinks by 1 with probability ½ and grows by 1 with probability ½. This is the one-dimensional walk, thus $E(RW) \le n^2$.
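The steps above can be sketched as follows. This is a minimal illustration, not a reference implementation: the clause encoding (a pair of `(variable, wanted_value)` literals) and the names `satisfied` and `random_walk_2sat` are assumptions made for this example. Note that the four-clause formula from the definition slide is in fact unsatisfiable (x = T forces both z = F and z = T, while x = F forces both y = F and y = T), so the walk can never succeed on it. Dropping its last clause gives a satisfiable formula.

```python
import random


def satisfied(clause, assignment):
    # A literal is (variable_name, wanted_value); a clause is a pair of literals.
    return any(assignment[var] == want for var, want in clause)


def random_walk_2sat(clauses, max_steps, rng):
    """Return a satisfying assignment, or None if none was found in max_steps flips."""
    variables = sorted({var for clause in clauses for var, _ in clause})
    assignment = {var: False for var in variables}      # arbitrary start
    for _ in range(max_steps):
        unsatisfied = [c for c in clauses if not satisfied(c, assignment)]
        if not unsatisfied:
            return assignment
        var, _ = rng.choice(unsatisfied[0])             # one of the two literals
        assignment[var] = not assignment[var]           # flip its value
    if all(satisfied(c, assignment) for c in clauses):
        return assignment
    return None


# (x + ¬y) & (¬x + ¬z) & (x + y) & (z + ¬x): the formula from the definition slide.
slide_formula = [
    (("x", True), ("y", False)),
    (("x", False), ("z", False)),
    (("x", True), ("y", True)),
    (("z", True), ("x", False)),
]
rng = random.Random(0)
print(random_walk_2sat(slide_formula, 200, rng))        # unsatisfiable: prints None
print(random_walk_2sat(slide_formula[:3], 900, rng))    # first three clauses only
```

A `None` answer is one-sided evidence only: the formula may still be satisfiable if `max_steps` was too small.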

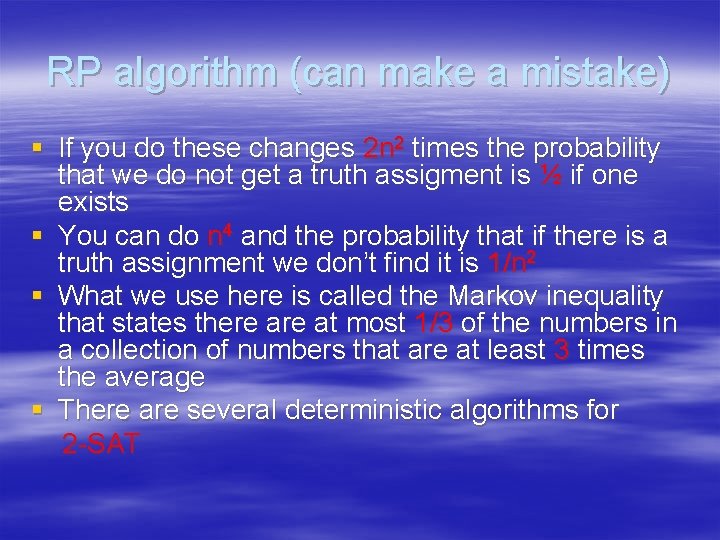

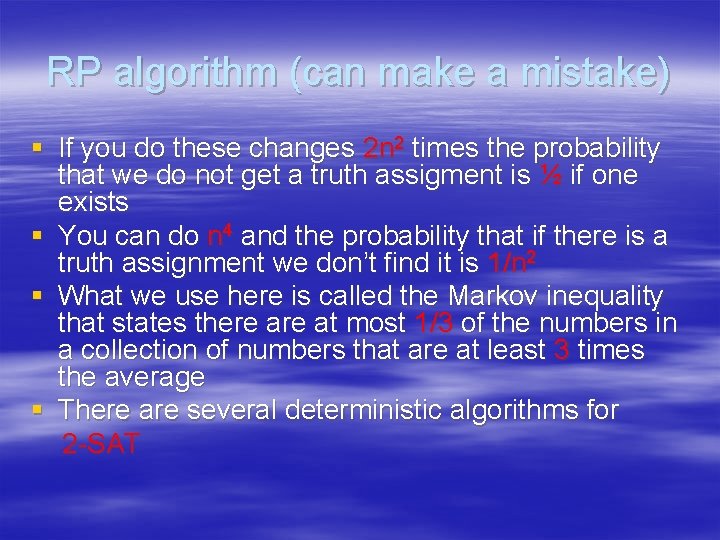

RP algorithm (can make a mistake)

- If a satisfying assignment exists and you make $2n^2$ flips, the probability of not finding one is at most ½.
- With $n^4$ flips, the probability of missing an existing satisfying assignment is at most $1/n^2$.
- What we use here is the Markov inequality: in a collection of nonnegative numbers, at most 1/3 of them are at least 3 times the average.
- There are also several deterministic algorithms for 2-SAT.
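Both failure probabilities follow from the same one-line calculation. Here $X$ (our notation, not the slides') is the number of flips until a satisfying assignment is found, and we assume the bound $\mathbb{E}[X] \le n^2$ from the random-walk argument:

```latex
\Pr[X \ge a] \;\le\; \frac{\mathbb{E}[X]}{a} \qquad (X \ge 0,\ a > 0)

\Pr[X \ge 2n^2] \;\le\; \frac{n^2}{2n^2} = \frac{1}{2},
\qquad
\Pr[X \ge n^4] \;\le\; \frac{n^2}{n^4} = \frac{1}{n^2}.
```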

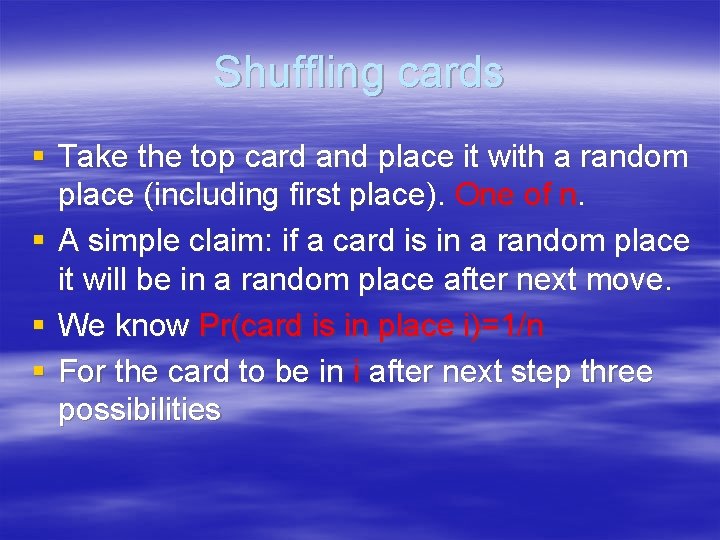

Shuffling cards

- Take the top card and place it in a random position (possibly the top again): one of $n$ positions.
- A simple claim: if a card is in a uniformly random position, it is still in a uniformly random position after the next move.
- We know $\Pr(\text{card is in place } i) = 1/n$.
- For the card to be in place $i$ after the next step there are three possibilities.

Probability of the card to be in place i

- First possibility: it is not the top card and it is already in place $i$; the top card is placed in one of the first $i-1$ places.
- Second possibility (the events are disjoint): it is the top card and it is placed in place $i$.
- Third possibility: it is in place $i+1$ and the top card is placed below it, in one of the $n-i$ places from $i+1$ to $n$.
- $\frac{1}{n}\cdot\frac{i-1}{n} + \frac{1}{n}\cdot\frac{1}{n} + \frac{1}{n}\cdot\frac{n-i}{n} = \frac{1}{n}$
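The three cases can be verified by brute force. The sketch below assumes one concrete model of the move (the top card is removed and reinserted so that it ends at a uniformly random position $k$ in $1..n$) and enumerates all $n^2$ combinations of start position and insertion position. The function name is illustrative.

```python
from fractions import Fraction


def position_distribution_after_move(n):
    """Tracked card starts at a uniform position; return its distribution after one move."""
    dist = [Fraction(0)] * (n + 1)       # index 0 unused; positions are 1..n
    weight = Fraction(1, n * n)          # Pr(start position) * Pr(insertion position)
    for start in range(1, n + 1):        # position of the tracked card
        for k in range(1, n + 1):        # final position of the old top card
            if start == 1:
                end = k                  # the tracked card is the one being moved
            elif k <= start - 1:
                end = start              # top card lands above it: no net change
            else:
                end = start - 1          # top card lands below it: it moves up by one
            dist[end] += weight
    return dist[1:]


print(position_distribution_after_move(5))
```

Exact fractions are used so that the comparison with $1/n$ is not affected by rounding.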

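Stopping time

- Once all the cards have been at the top, the deck is random.
- Follow the lowest card. It moves up by 1 when the top card is placed below it: the waiting time is geometric $G(1/n)$, so its expectation is $n$.
- To go from second to last to third to last the waiting time is $G(2/n)$, with expectation $n/2$.
- This gives $n + n/2 + n/3 + \dots = n(\ln n + \Theta(1))$.
- Fast.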
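The sum can be evaluated exactly. The sketch below assumes the stopping rule "the original bottom card reaches the top and is then reinserted", which adds one final move to the $n-1$ geometric stages, and compares the exact value with $n(\ln n + \gamma)$, where $\gamma \approx 0.5772$ is the Euler–Mascheroni constant. The function name is illustrative.

```python
import math
from fractions import Fraction


def expected_shuffle_time(n):
    # Stage k (k = 1..n-1): there are k slots below the original bottom card,
    # so it rises with probability k/n; the wait is geometric with mean n/k.
    rises = sum(Fraction(n, k) for k in range(1, n))
    return rises + 1                     # one more move reinserts that card


n = 52
exact = expected_shuffle_time(n)
approx = n * (math.log(n) + 0.5772)
print(float(exact), approx)
```

For a 52-card deck the two values differ by about half a move, consistent with the $\Theta(1)$ term.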

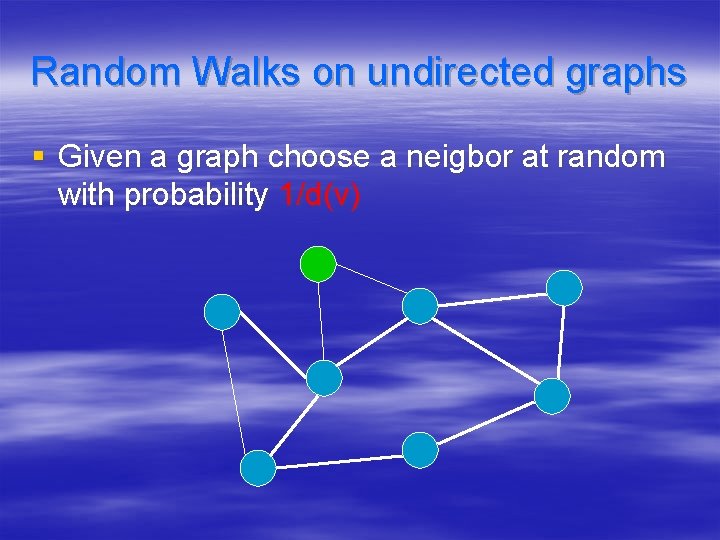

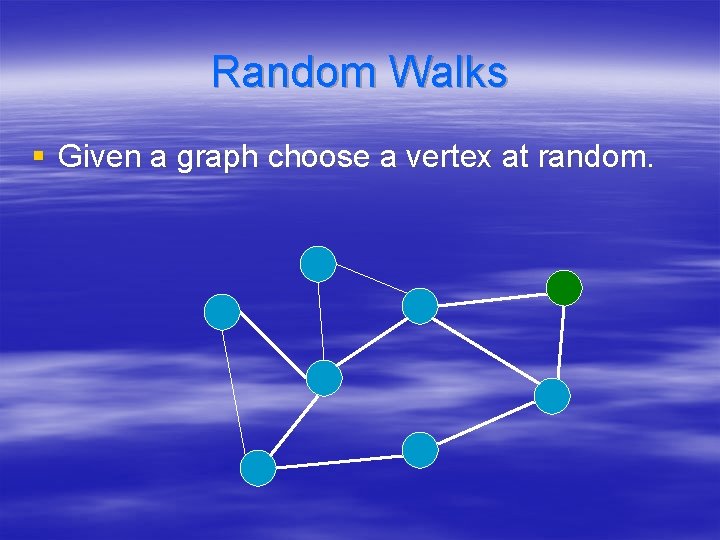

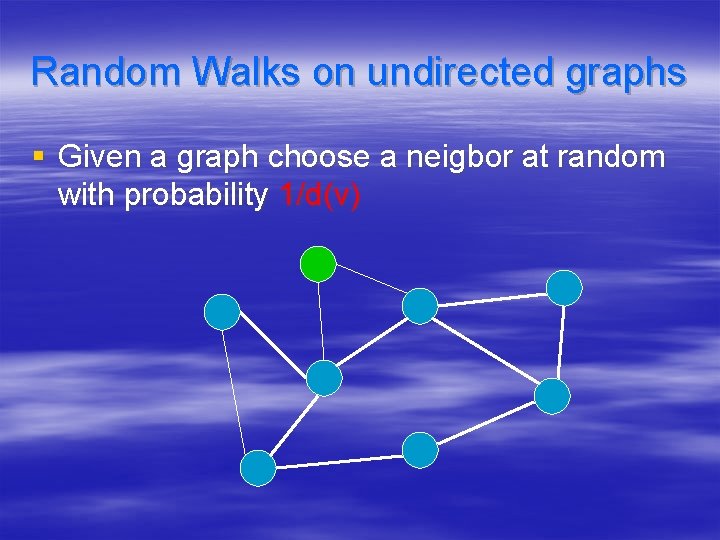

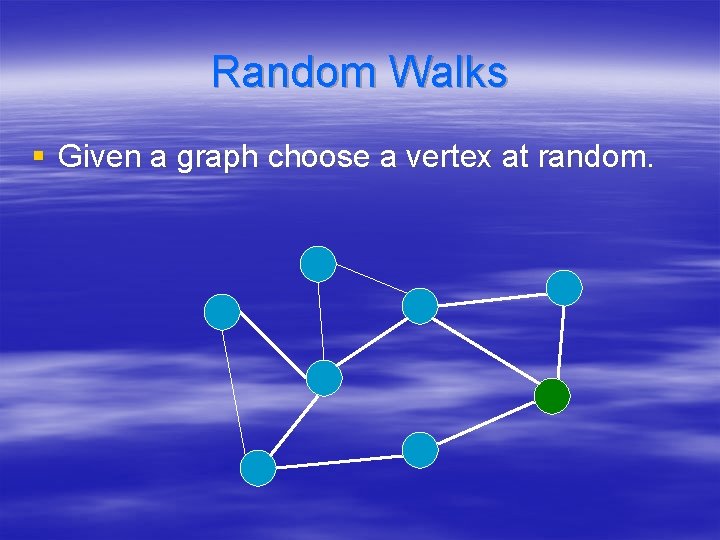

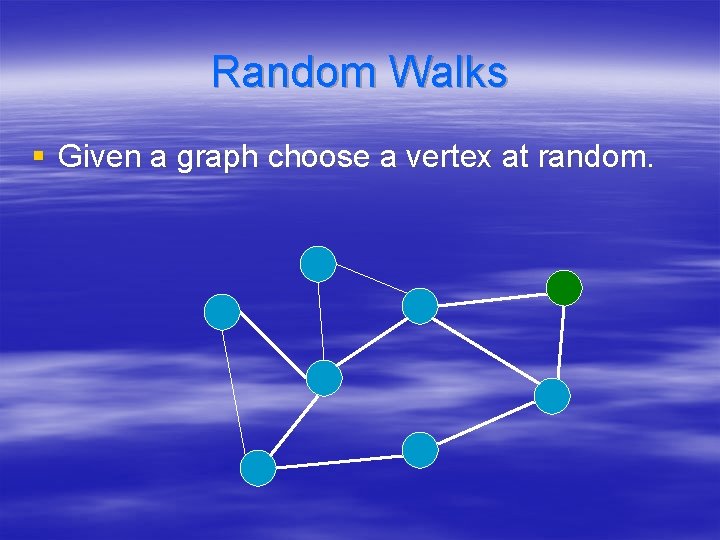

Random walks on undirected graphs

- Given a graph, at each step move from the current vertex $v$ to a neighbor chosen at random, each with probability $1/d(v)$.
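A minimal sketch of such a walk, assuming the graph is given as an adjacency-list dictionary. The example graph (a triangle with one pendant vertex) and the function name are hypothetical.

```python
import random


def random_walk(adj, start, steps, rng):
    """Return the list of visited vertices, beginning with `start`."""
    path = [start]
    for _ in range(steps):
        neighbors = adj[path[-1]]
        path.append(rng.choice(neighbors))   # each neighbor with probability 1/d(v)
    return path


# Hypothetical example: triangle 0-1-2 with a pendant vertex 3 attached to 2.
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
walk = random_walk(adj, start=0, steps=10, rng=random.Random(1))
print(walk)
```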

Random walks

- Given a graph, choose a vertex at random.

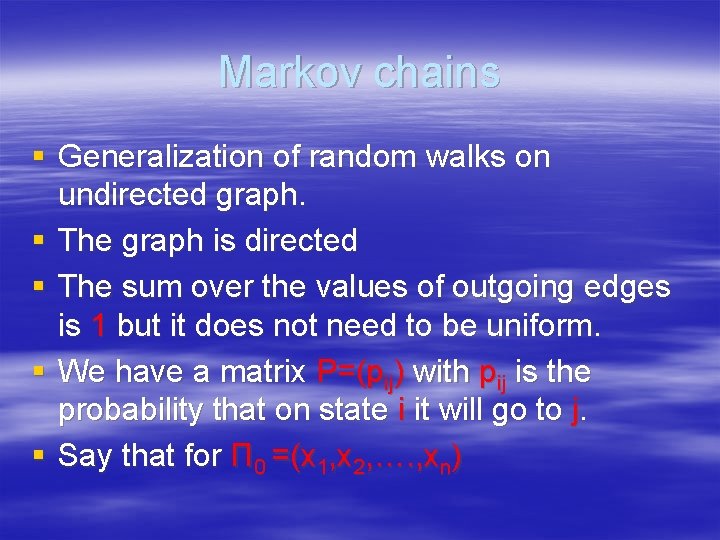

Markov chains

- A generalization of random walks on undirected graphs.
- The graph is directed.
- The values on the outgoing edges of each state sum to 1, but they need not be uniform.
- We have a matrix $P = (p_{ij})$, where $p_{ij}$ is the probability that from state $i$ the chain moves to state $j$.
- Say that the initial distribution is $\Pi_0 = (x_1, x_2, \dots, x_n)$.

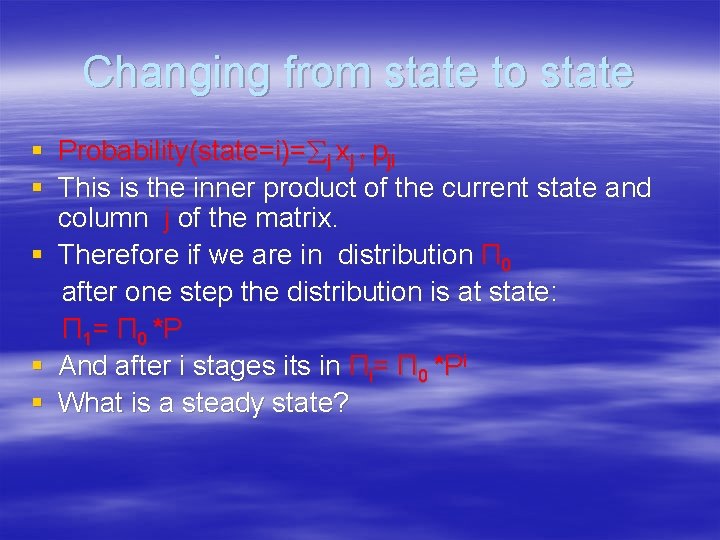

Changing from state to state

- $\Pr(\text{state} = i) = \sum_j x_j \, p_{ji}$
- This is the inner product of the current distribution and column $i$ of the matrix.
- Therefore, if the current distribution is $\Pi_0$, after one step the distribution is $\Pi_1 = \Pi_0 P$.
- After $i$ steps it is $\Pi_i = \Pi_0 P^i$.
- What is a steady state?
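The update rule is a row vector times a matrix. A small sketch with a hypothetical two-state chain (the matrix and the names `step` and `after` are made up for illustration):

```python
from fractions import Fraction


def step(dist, P):
    """One step of the chain: new_dist[i] = sum_j dist[j] * P[j][i]."""
    n = len(P)
    return [sum(dist[j] * P[j][i] for j in range(n)) for i in range(n)]


def after(dist, P, steps):
    """Distribution after `steps` steps, i.e. dist * P^steps."""
    for _ in range(steps):
        dist = step(dist, P)
    return dist


# Hypothetical chain: each row sums to 1, the rows are not uniform.
P = [
    [Fraction(1, 2), Fraction(1, 2)],
    [Fraction(1, 4), Fraction(3, 4)],
]
pi0 = [Fraction(1), Fraction(0)]
print(after(pi0, P, 1))    # (1/2, 1/2)
print(after(pi0, P, 2))    # (3/8, 5/8)
```

For this chain the vector (1/3, 2/3) is left unchanged by `step`.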

Steady state

- A steady state is a distribution $\Pi$ such that $\Pi P = \Pi$: for every state $i$ and every round, the probability of being in $i$ is always the same.
- Conditions for convergence:
  - The graph has to be strongly connected. Otherwise there may be several components with no edges out, and then there is no single steady state.
  - $h_{ii}$, the expected time to get back to $i$ from $i$, is finite.
  - The chain is aperiodic. This is slightly complex for general Markov chains; for random walks on undirected graphs it means the graph is not bipartite.

The bipartite graph example

- If the graph is bipartite with sides $V_1$ and $V_2$ and we start in $V_1$, then we cannot be at a vertex of $V_1$ after an odd number of transitions.
- Therefore the walk cannot converge to a steady state.
- So for random walks on graphs we need the graph to be connected and not bipartite. The other property will follow.
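The parity obstruction is easy to see numerically. The sketch below tracks the exact distribution of a walk on two hypothetical graphs, a 4-cycle (bipartite) and a triangle (not bipartite). The function name is illustrative.

```python
from fractions import Fraction


def walk_distribution(adj, start, steps):
    """Exact distribution of the walk's position after `steps` steps."""
    dist = {v: Fraction(0) for v in adj}
    dist[start] = Fraction(1)
    for _ in range(steps):
        new = {v: Fraction(0) for v in adj}
        for v, p in dist.items():
            for u in adj[v]:
                new[u] += p / len(adj[v])    # move to each neighbor w.p. 1/d(v)
        dist = new
    return dist


cycle4 = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}    # bipartite: {0, 2} vs {1, 3}
triangle = {0: [1, 2], 1: [0, 2], 2: [0, 1]}             # not bipartite

odd = walk_distribution(cycle4, 0, 101)
print(odd[0], odd[2])                                    # both 0 after an odd number of steps
print([float(p) for p in walk_distribution(triangle, 0, 50).values()])
```

On the 4-cycle the mass keeps alternating between the two sides, while on the triangle the distribution settles near (1/3, 1/3, 1/3).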

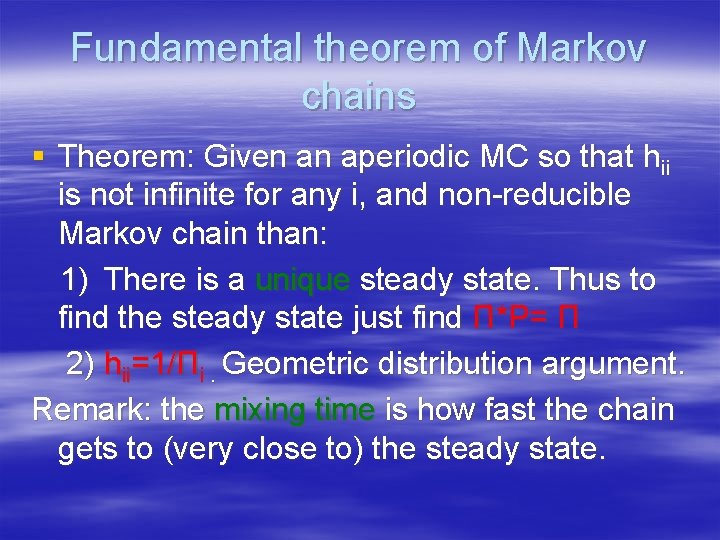

Fundamental theorem of Markov chains

- Theorem: given an aperiodic, irreducible Markov chain in which $h_{ii}$ is finite for every $i$:
  1. There is a unique steady state. Thus, to find the steady state, just solve $\Pi P = \Pi$.
  2. $h_{ii} = 1/\Pi_i$ (geometric distribution argument).
- Remark: the mixing time is how fast the chain gets (very close) to the steady state.

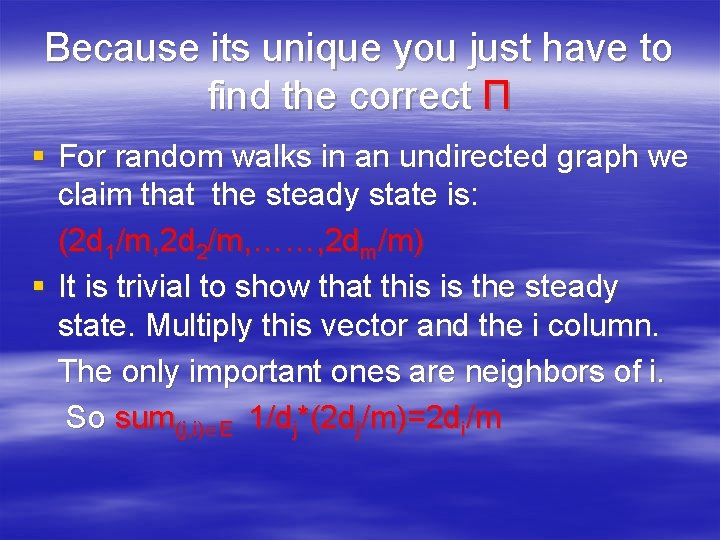

Because it is unique you just have to find the correct Π

- For random walks on an undirected graph with $n$ vertices and $m$ edges we claim that the steady state is $\left(\frac{d_1}{2m}, \frac{d_2}{2m}, \dots, \frac{d_n}{2m}\right)$. The degrees sum to $2m$, so the entries sum to 1.
- It is easy to check that this is the steady state: multiply this vector by column $i$ of $P$. Only the neighbors of $i$ contribute, so $\sum_{(j,i) \in E} \frac{1}{d_j} \cdot \frac{d_j}{2m} = \frac{d_i}{2m}$.
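A quick check of the claim on a small example. The sketch uses the degree-proportional vector normalized to sum to 1 (the degrees sum to $2m$, so entry $i$ is $d_i/(2m)$) and verifies that one step of the walk leaves it unchanged. The graph and the function names are hypothetical.

```python
from fractions import Fraction


def degree_distribution(adj):
    two_m = sum(len(nbrs) for nbrs in adj.values())      # sum of degrees = 2m
    return {v: Fraction(len(adj[v]), two_m) for v in adj}


def one_step(dist, adj):
    """Apply one step of the random walk to a distribution over vertices."""
    new = {v: Fraction(0) for v in adj}
    for v, p in dist.items():
        for u in adj[v]:
            new[u] += p / len(adj[v])
    return new


# Hypothetical example: triangle 0-1-2 with a pendant vertex 3 attached to 2 (m = 4).
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
pi = degree_distribution(adj)
print(pi)
print(one_step(pi, adj) == pi)
```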

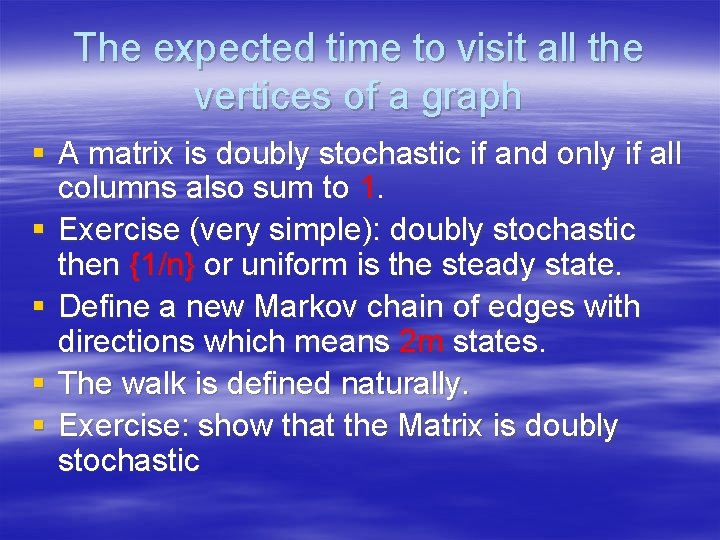

The expected time to visit all the vertices of a graph

- A stochastic matrix is doubly stochastic if and only if all its columns also sum to 1.
- Exercise (very simple): if the matrix is doubly stochastic, then the uniform distribution $(1/n, \dots, 1/n)$ is the steady state.
- Define a new Markov chain whose states are the edges with directions, which means $2m$ states.
- The walk is defined naturally.
- Exercise: show that this matrix is doubly stochastic.
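The edge chain can be built explicitly for a small graph, which gives a numerical sanity check (not a proof) of the second exercise. The sketch assumes the natural definition: from state $(u, v)$ the chain moves to $(v, w)$ with probability $1/d(v)$ for each neighbor $w$ of $v$. The names and the example graph are hypothetical.

```python
from fractions import Fraction


def edge_chain(adj):
    """Return (states, P) for the chain on directed edges of an undirected graph."""
    states = [(u, v) for u in adj for v in adj[u]]       # 2m directed edges
    index = {s: k for k, s in enumerate(states)}
    P = [[Fraction(0)] * len(states) for _ in states]
    for (u, v) in states:
        for w in adj[v]:                                 # next move leaves v
            P[index[(u, v)]][index[(v, w)]] = Fraction(1, len(adj[v]))
    return states, P


# Hypothetical example: triangle 0-1-2 with a pendant vertex 3 attached to 2 (m = 4).
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
states, P = edge_chain(adj)
row_sums = [sum(row) for row in P]
col_sums = [sum(P[r][c] for r in range(len(P))) for c in range(len(P))]
print(len(states), row_sums, col_sums)
```

Each column sums to 1 because state $(v, w)$ is entered from exactly $d(v)$ states, each with probability $1/d(v)$.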

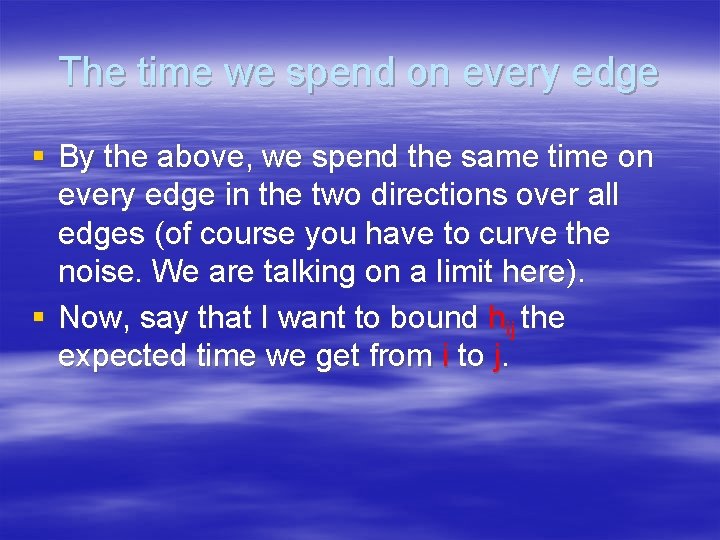

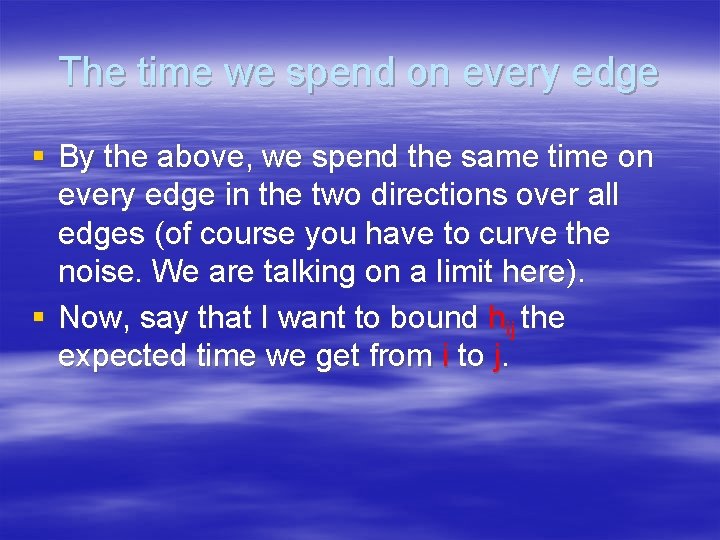

The time we spend on every edge

- By the above, in the limit we spend the same fraction of time on every edge in each of its two directions (over a finite run there is noise; we are talking about a limit here).
- Now, say that I want to bound $h_{ij}$, the expected time to get from $i$ to $j$.

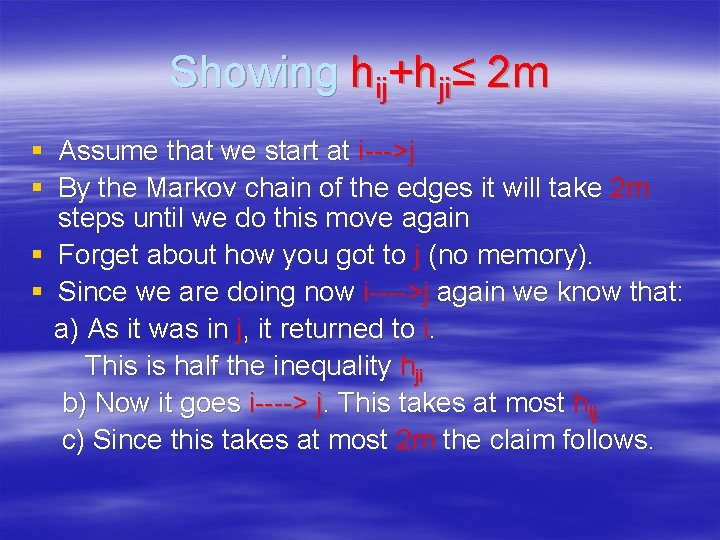

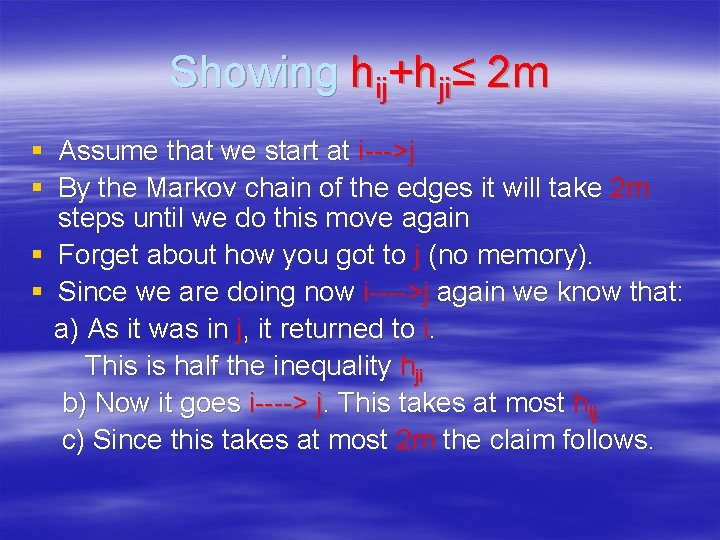

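Showing $h_{ij} + h_{ji} \le 2m$

- Assume that $i$ and $j$ are adjacent and that we have just made the move $i \to j$.
- By the Markov chain on the directed edges, the expected time until we make this move again is $2m$ steps.
- Forget about how you got to $j$ (no memory).
- When we make the move $i \to j$ again, we know that:
  a) Having been at $j$, the walk first returned to $i$. In expectation this takes $h_{ji}$, one half of the inequality.
  b) Then it traversed $i \to j$. Getting from $i$ to $j$ by any route takes $h_{ij}$ in expectation, which is at most the expected time to get there along this particular edge.
  c) Since the whole round trip takes $2m$ in expectation, the claim follows.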
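The inequality can be checked exactly on a small graph by solving the linear equations for hitting times ($h_{jj} = 0$ and $h_{vj} = 1 + \text{average of } h_{uj}$ over neighbors $u$ of $v$). This is a sketch with a hand-written exact solver. The graph and all function names are hypothetical.

```python
from fractions import Fraction


def solve(A, b):
    """Solve A x = b exactly by Gauss-Jordan elimination (A square, nonsingular)."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        M[col] = [x / M[col][col] for x in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]


def hitting_times(adj, target):
    """h[v] = expected number of steps to reach `target` from v."""
    others = [v for v in adj if v != target]
    idx = {v: k for k, v in enumerate(others)}
    A = [[Fraction(0)] * len(others) for _ in others]
    b = [Fraction(1)] * len(others)
    for v in others:
        A[idx[v]][idx[v]] += 1
        for u in adj[v]:
            if u != target:                      # h[target] = 0 drops out
                A[idx[v]][idx[u]] -= Fraction(1, len(adj[v]))
    h = dict(zip(others, solve(A, b)))
    h[target] = Fraction(0)
    return h


def commute(adj, i, j):
    return hitting_times(adj, j)[i] + hitting_times(adj, i)[j]


# Hypothetical example: triangle 0-1-2 with a pendant vertex 3 attached to 2 (m = 4).
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
m = 4
for u in adj:
    for v in adj[u]:
        print(u, v, commute(adj, u, v), "<=", 2 * m)
```

On this graph the bound is attained with equality only on the edge between 2 and 3, which is a bridge.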

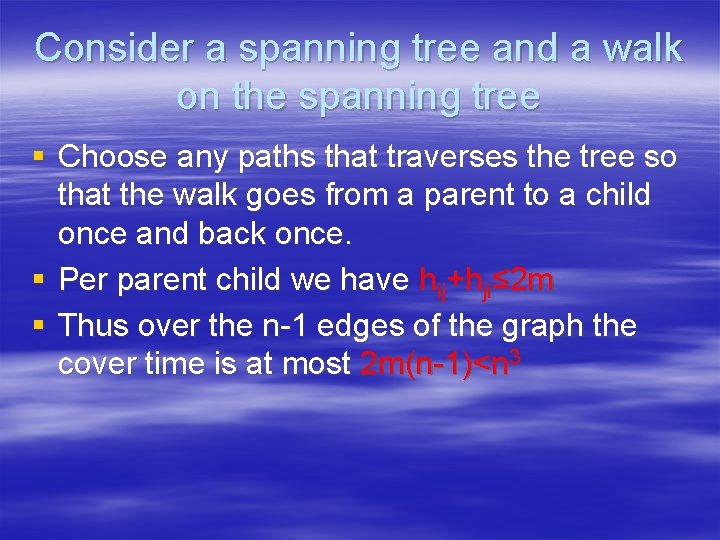

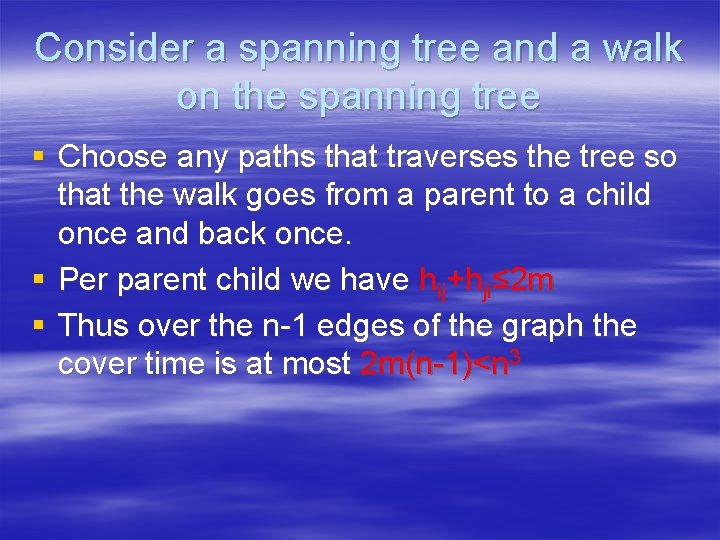

Consider a spanning tree and a walk on the spanning tree

- Choose any path that traverses the tree so that the walk goes from each parent to each child once and back once.
- Per parent-child pair we have $h_{ij} + h_{ji} \le 2m$.
- Thus, over the $n-1$ edges of the tree, the cover time is at most $2m(n-1) < n^3$.
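Written out, with $T$ denoting the edge set of the spanning tree (our notation), the bound and the final inequality read:

```latex
\text{cover time} \;\le\; \sum_{(i,j) \in T} \left( h_{ij} + h_{ji} \right) \;\le\; 2m(n-1),
\qquad
2m(n-1) \;\le\; n(n-1)^2 \;<\; n^3
\quad \text{since } m \le \binom{n}{2} = \frac{n(n-1)}{2}.
```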

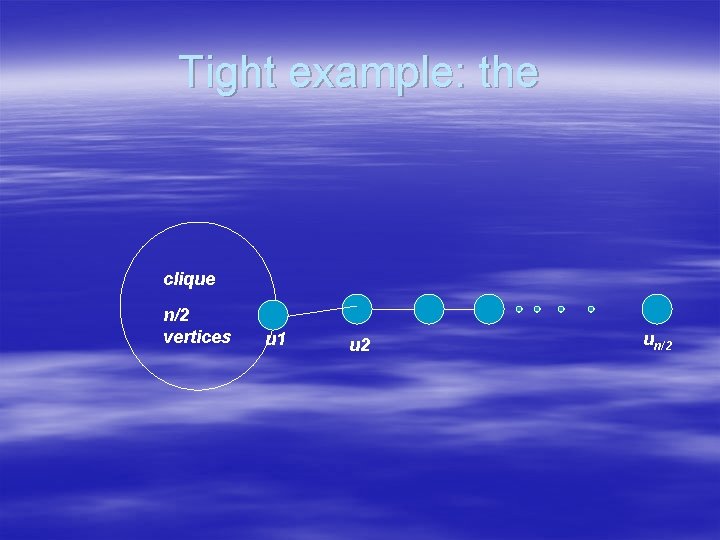

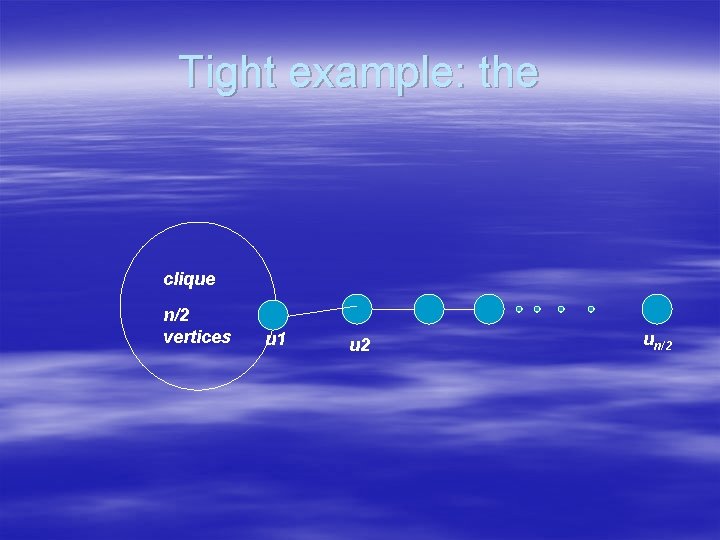

Tight example: a clique on $n/2$ vertices attached to a path $u_1, u_2, \dots, u_{n/2}$

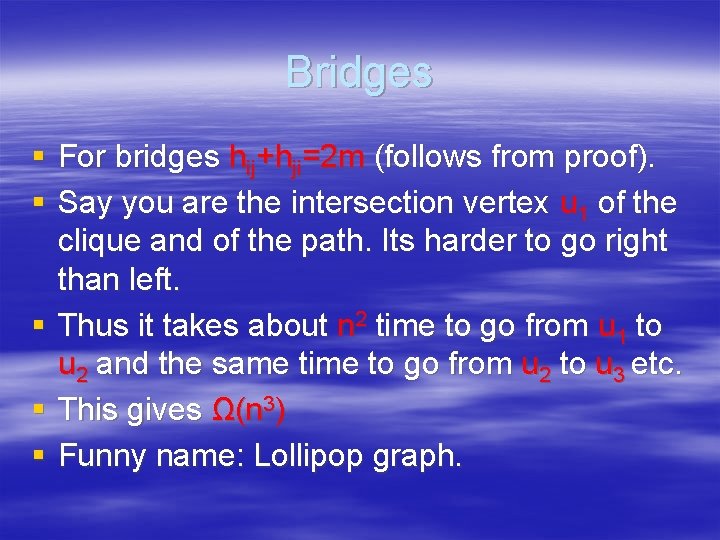

Bridges

- For bridges $h_{ij} + h_{ji} = 2m$ (follows from the proof).
- Say you are at $u_1$, the vertex shared by the clique and the path. It is harder to go right (along the path) than left (into the clique).
- Thus it takes about $n^2$ time to go from $u_1$ to $u_2$, and the same time to go from $u_2$ to $u_3$, etc.
- This gives $\Omega(n^3)$.
- Funny name: lollipop graph.

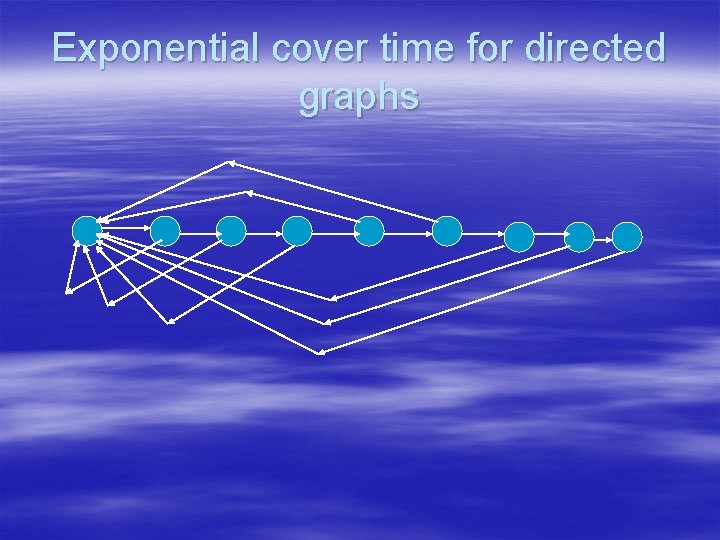

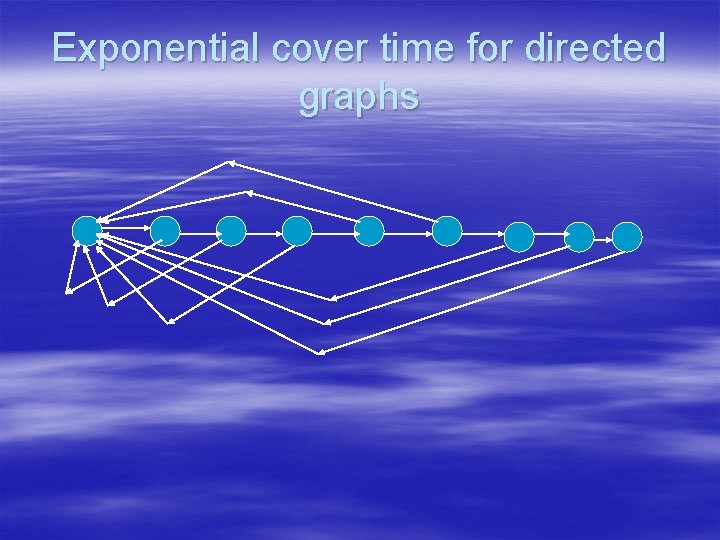

Exponential cover time for directed graphs

Universal sequences

- Say that the graph is $d$-regular.
- The edges at every vertex are labeled $1, 2, \dots, d$.
- A walk sequence for $d = 20$ would be 7, 14, 12, 19, 3, 4, 8, 6, …
- The question: will it cover all graphs?
- We show that if the sequence is long enough it covers all graphs.
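Following a label sequence on a labeled graph is deterministic, which the sketch below makes concrete. The representation (`ports[v][k]` is the neighbor reached from `v` through the edge labeled `k+1`) and the 4-cycle example are assumptions made for illustration.

```python
def covers(ports, start, sequence):
    """Follow `sequence` of edge labels from `start`; report whether every vertex is visited."""
    visited = {start}
    v = start
    for label in sequence:
        v = ports[v][label - 1]          # labels are 1..d
        visited.add(v)
    return len(visited) == len(ports)


# Hypothetical 2-regular example: a 4-cycle, label 1 = clockwise, label 2 = counterclockwise.
cycle_ports = {v: [(v + 1) % 4, (v - 1) % 4] for v in range(4)}
print(covers(cycle_ports, 0, [1, 1, 1]))       # goes all the way around
print(covers(cycle_ports, 0, [1, 2, 1, 2]))    # bounces between two vertices
```

A universal sequence must return `True` for every labeled graph and every start vertex, which is why its length has to grow with $n$ and $d$.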

The length of the sequence

- Let $L = 4dn^2(dn+2)(\log n + 1)$.
- What is the probability that a graph is covered?
- Let $N = 4dn^2$, and think of the sequence as $(dn+2)(\log n + 1)$ consecutive parts of length $N$.
- By the previous proof, a graph is covered in expectation within $2n^2 d$ steps. So with probability at most ½ it is not covered within $N$ steps.
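The ½ is Markov's inequality again, and chaining the parts gives the failure probability for one fixed graph under a uniformly random sequence. In this short derivation $C$ (our notation) is the cover time from the worst starting vertex, and we assume, which the slides do not state, that $\log$ is base 2. Each part fails with probability at most ½ wherever it starts, so the bounds multiply:

```latex
\Pr[C > N] \;\le\; \frac{\mathbb{E}[C]}{N} \;\le\; \frac{2n^2 d}{4dn^2} \;=\; \frac{1}{2},
\qquad
\Pr[\text{not covered after all parts}]
\;\le\; \left(\frac{1}{2}\right)^{(dn+2)(\log_2 n + 1)}
\;=\; (2n)^{-(dn+2)}.
```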

The number of d-regular graphs

- From $n^2$ possible edges choose $n \cdot d$.
- Thus we have to choose $n \cdot d$ elements, and the number of graphs is bounded by $(n^2)^{n \cdot d}$.
- The probability that a given graph is not covered is $\exp(-nd \lg n)$.
- The union bound implies that with large probability all graphs are covered.

One advice string for every n

- With this one piece of advice we can cover all graphs with high probability.
- Thus we can find out, for example, whether $s$ and $t$ are in the same connected component.
- Later it was proved that with $O(\log n)$ space you can check whether $s$ and $t$ are in the same connected component, even though you can remember only $O(1)$ items.