① RACM 2 and ARSC
② Nuts and bolts for RACM
Presentation to RACM Meeting, May 18, 2010
Please do not redistribute outside of the RACM group

① RACM 2 and ARSC

Preamble
• ARSC’s involvement with RACM has been satisfying and worthwhile
  – Scientific progress
  – Community model building
  – Engagement of postdoctoral fellows
  – Engagement with multi-agency partners (DoD, DoE, TeraGrid and others)
  – Arctic theme relevant to ARSC & UAF
• ARSC would be pleased to be involved in the upcoming RACM 2

RACM 2 Constraints
• While ARSC’s HPCMP (DoD) funding remains stable, the portion allocatable for science, versus HPCMP program support, is much more limited
• With the departure of postdocs He & Roberts, the “personnel” tie to RACM 2 is not as clear

RACM 2 “fit”
• ARSC, along with IARC and others at UAF, has enduring interests in many RACM/RACM 2 themes:
  – Climate modeling and a general high latitudes focus
  – Model scaling, coupling, and other practical issues
  – Enhancing aspects of land surface, ocean/ice and atmosphere models for arctic use

ARSC Benefits to RACM
• Large allocations of CPU hours on large systems
• Capable & responsive user support
• Support of 2 postdoctoral fellows (Roberts & He)
• Direct ties to expertise at UAF (IARC & elsewhere)
• Deep shared interests in RACM themes

Potential Ways Forward
• How can RACM 2 best continue to engage with and benefit from ARSC? One or more of:
  – PI/Co-I at UAF (ARSC or IARC or elsewhere)
  – RACM 2 personnel budget to ARSC: consultant, specialist, staff scientist
  – RACM 2 funding postdoc(s) at ARSC/IARC
  – RACM 2 payment for cycles, storage. Approximately 10-15 cents per CPU hour (2011-2012), $1600/TB storage (including 2nd copy)
  – What else???
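To get a feel for the payment option, the quoted rates can be turned into a rough budget range. The sketch below is illustrative only: the function name and the example inputs (1,000,000 CPU hours, 10 TB) are hypothetical and do not represent a RACM 2 request. Only the rates come from the slide, and the slide does not say whether the storage figure is a one-time or a recurring charge, so the sketch applies it once.

```python
# Rates quoted on the slide (2011-2012 estimates).
CPU_CENTS_PER_HOUR_LOW = 10
CPU_CENTS_PER_HOUR_HIGH = 15
STORAGE_DOLLARS_PER_TB = 1600  # includes the 2nd copy; applied once (assumption)


def estimate_cost(cpu_hours, storage_tb):
    """Return (low, high) cost in dollars for cycles plus storage."""
    storage = storage_tb * STORAGE_DOLLARS_PER_TB
    low = cpu_hours * CPU_CENTS_PER_HOUR_LOW / 100 + storage
    high = cpu_hours * CPU_CENTS_PER_HOUR_HIGH / 100 + storage
    return low, high


if __name__ == "__main__":
    # Hypothetical request, for illustration only.
    low, high = estimate_cost(cpu_hours=1_000_000, storage_tb=10)
    print(f"${low:,.0f} to ${high:,.0f}")
```

The width of the range comes entirely from the 10-15 cent spread on cycles; storage is a fixed term in both bounds.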

Current Requirements for ARSC HPC Allocations
• See www.arsc.edu/support/accounts/acquire.html#Academic
• ARSC project PI at UAF
• Tie to arctic research
• Note that the largest allocations (including “arscasm”) do get extra scrutiny and attention. Allocations over roughly 1M hours go to strategic areas and to areas of deep, enduring interest

Future Requirements for ARSC HPC Allocations
• ARSC will be adding UAF user community members to the allocations application review process
• The Configuration Change Board of ARSC will, similarly, have user representation
• UAF will, we think, provide budget to support ARSC. This might come with some expectations about access to systems that are at least partially UAF-supported
• Newby will, we hope, get NSF funding that will allow growth of the academic HPC resources. This will include direct responsibility for deciding what some of the ARSC resources will be available for

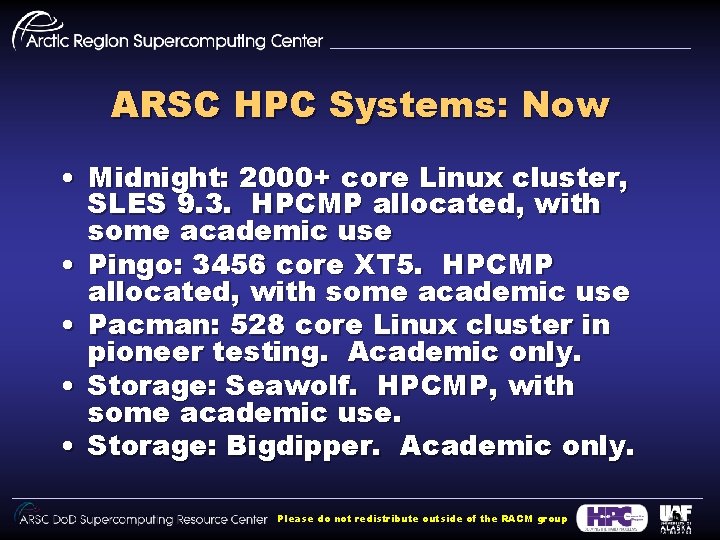

ARSC HPC Systems: Now
• Midnight: 2000+ core Linux cluster, SLES 9.3. HPCMP allocated, with some academic use
• Pingo: 3456 core XT5. HPCMP allocated, with some academic use
• Pacman: 528 core Linux cluster in pioneer testing. Academic only.
• Storage: Seawolf. HPCMP, with some academic use.
• Storage: Bigdipper. Academic only.

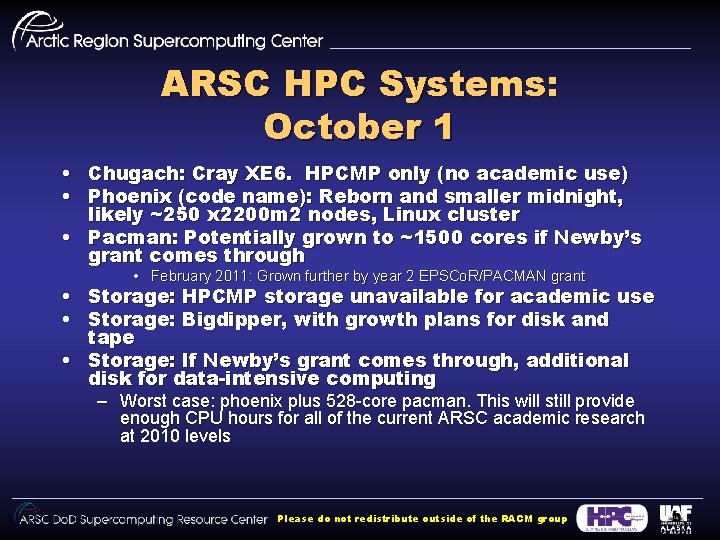

ARSC HPC Systems: October 1
• Chugach: Cray XE6. HPCMP only (no academic use)
• Phoenix (code name): Reborn and smaller midnight, likely ~250 X2200 M2 nodes, Linux cluster
• Pacman: Potentially grown to ~1500 cores if Newby’s grant comes through
• February 2011: Grown further by year 2 EPSCoR/PACMAN grant
• Storage: HPCMP storage unavailable for academic use
• Storage: Bigdipper, with growth plans for disk and tape
• Storage: If Newby’s grant comes through, additional disk for data-intensive computing
  – Worst case: phoenix plus 528-core pacman. This will still provide enough CPU hours for all of the current ARSC academic research at 2010 levels
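As a rough sanity check on the capacity claims, core counts can be converted into an upper bound on deliverable core-hours per year. This is a sketch under stated assumptions: the helper name and the `utilization` parameter are hypothetical, and Phoenix is left out because the slide gives a node count but not cores per node.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years and scheduled downtime


def annual_core_hours(cores, utilization=1.0):
    """Upper bound on core-hours a system can deliver in one year."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return cores * HOURS_PER_YEAR * utilization


if __name__ == "__main__":
    # Core counts are taken from the slides; 100% utilization is an idealization.
    for label, cores in [("pacman today", 528), ("pacman if grown", 1500)]:
        hours = annual_core_hours(cores)
        print(f"{label}: {hours:,.0f} core-hours/year at 100% use")
```

Real delivered hours will be lower once maintenance windows and scheduling gaps are counted; passing a `utilization` below 1.0 gives a more conservative figure.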

② Nuts and bolts for RACM

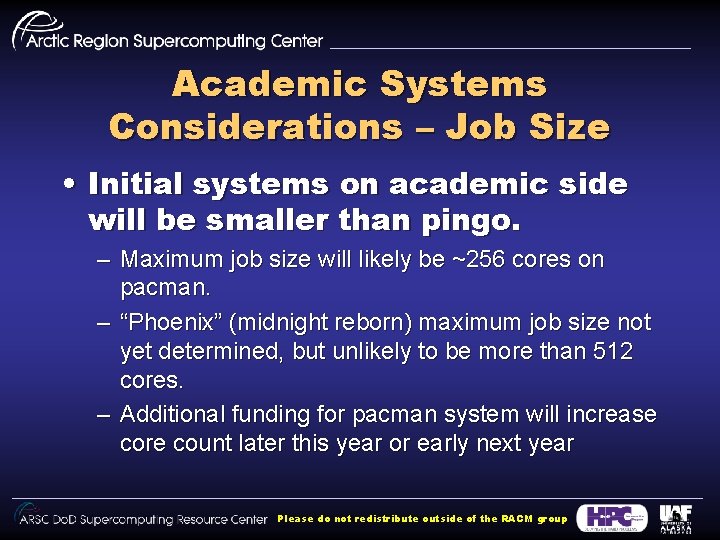

Academic Systems Considerations – Job Size
• Initial systems on academic side will be smaller than pingo.
  – Maximum job size will likely be ~256 cores on pacman.
  – “Phoenix” (midnight reborn) maximum job size not yet determined, but unlikely to be more than 512 cores.
  – Additional funding for pacman system will increase core count later this year or early next year

$ARCHIVE data
• By October there will be distinct HPCMP and Academic systems being operated by ARSC.
  – Team members of both Academic (e.g. ARSCASM) and HPCMP (e.g. NPSCA242) projects need to do some planning to make sure data is accessible to others on the team. The ARSC Help Desk has sent messages to people in this category to ask them to set UNIX groups on $ARCHIVE data.
  – Need to be aware of data locality for pre/post processing. HPCMP systems will be in Illinois and Academic systems in Fairbanks, AK.
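Setting UNIX groups on a directory tree amounts to changing the group owner and granting group read access. The following is a minimal sketch of that idea, not the Help Desk's actual procedure: the function name is hypothetical, and the right group and target directory depend on each project.

```python
import os
import stat


def share_with_group(root, gid):
    """Give group `gid` read access to everything under `root`."""
    for dirpath, dirnames, filenames in os.walk(root):
        # Directories also need the execute bit so members can traverse them.
        os.chown(dirpath, -1, gid)  # -1 leaves the owning user unchanged
        mode = stat.S_IMODE(os.stat(dirpath).st_mode)
        os.chmod(dirpath, mode | stat.S_IRGRP | stat.S_IXGRP)
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # leave symbolic links alone
            os.chown(path, -1, gid)
            mode = stat.S_IMODE(os.stat(path).st_mode)
            os.chmod(path, mode | stat.S_IRGRP)
```

A call might look like `share_with_group(os.path.join(os.environ["ARCHIVE"], "run01"), grp.getgrnam("racm").gr_gid)`, where both the `run01` directory and the `racm` group name are made up for illustration. The caller must own the files and belong to the target group for the group change to succeed.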

$ARCHIVE data continued
• Academic project $ARCHIVE data will be migrated to bigdipper over the summer.
  – Due to DoD requirements we will not have $ARCHIVE on bigdipper available via NFS on midnight or pingo.
  – We may allow passwordless access from midnight and pingo.
  – bigdipper $ARCHIVE is available via NFS on pacman now and will be on other academic systems later this year.

Academic System Considerations Continued
• $WORKDIR on “Phoenix” and pacman is smaller than on pingo.
  – Can’t support multi-TBs of use by project members on a continual basis.
• $ARCHIVE cache for bigdipper (new storage server) is much larger than on seawolf.
  – Files should stay online longer.
  – Should have better NFS connectivity to $ARCHIVE.
  – May be able to do some pre/post processing right in $ARCHIVE.

Other Comments
• Help Desk support will stay the same as it has been previously.
• Software stack for HPC systems will likely be similar to what we have had, with the PGI compiler suite as the default. We will probably drop support for PathScale.

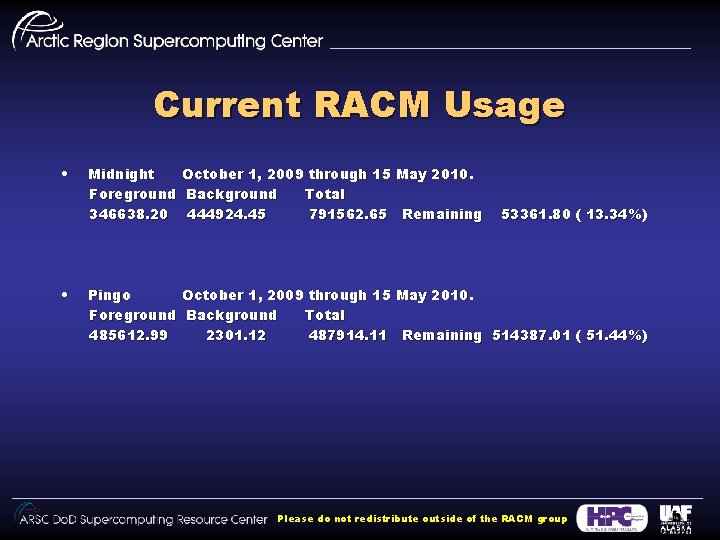

Current RACM Usage
Both systems: October 1, 2009 through 15 May 2010.

| System | Foreground | Background | Total | Remaining |
|---|---|---|---|---|
| Midnight | 346638.20 | 444924.45 | 791562.65 | 53361.80 (13.34%) |
| Pingo | 485612.99 | 2301.12 | 487914.11 | 514387.01 (51.44%) |
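The remaining figures on this slide are consistent with only foreground usage being charged against the allocation. The sketch below reproduces them under that reading. The allocation sizes (400,000 on midnight and 1,000,000 on pingo) are inferred from the arithmetic and are not stated on the slide, and the function name is hypothetical.

```python
def remaining(allocation, foreground):
    """Return (amount left, percent left), charging foreground use only."""
    left = allocation - foreground
    return left, 100.0 * left / allocation


if __name__ == "__main__":
    # Allocation sizes are inferred from the slide's figures, not stated on it.
    for system, allocation, foreground in [
        ("Midnight", 400_000, 346638.20),
        ("Pingo", 1_000_000, 485612.99),
    ]:
        left, pct = remaining(allocation, foreground)
        print(f"{system}: {left:.2f} remaining ({pct:.2f}%)")
```

Background usage (444924.45 on midnight, 2301.12 on pingo) appears in the totals but, on this reading, does not reduce what remains.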

- Slides: 18