Quick Overview of NPACI Rocks Philip M Papadopoulos

- Slides: 14

Quick Overview of NPACI Rocks Philip M. Papadopoulos Associate Director, Distributed Computing San Diego Supercomputer Center

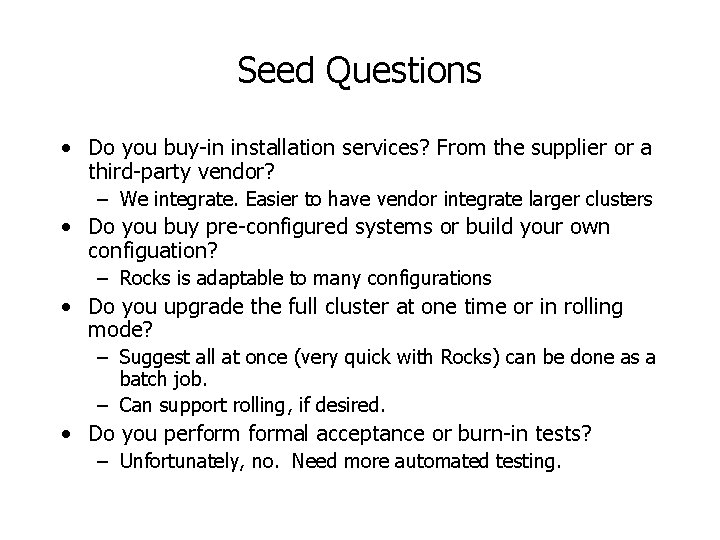

Seed Questions

- Do you buy in installation services? From the supplier or a third-party vendor?
  - We integrate. It is easier to have the vendor integrate larger clusters.
- Do you buy pre-configured systems or build your own configuration?
  - Rocks is adaptable to many configurations.
- Do you upgrade the full cluster at one time or in rolling mode?
  - Suggest all at once (very quick with Rocks); it can be done as a batch job.
  - Can support rolling, if desired.
- Do you perform acceptance or burn-in tests?
  - Unfortunately, no. Need more automated testing.

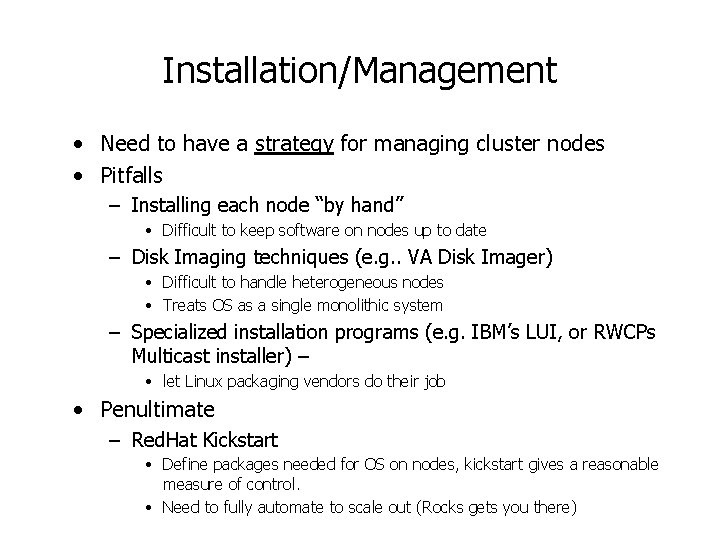

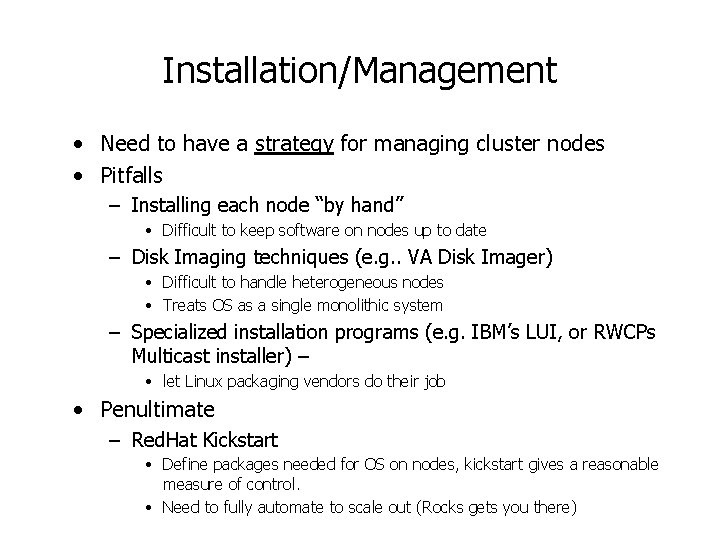

Installation/Management

- Need to have a strategy for managing cluster nodes
- Pitfalls
  - Installing each node “by hand”
    - Difficult to keep software on nodes up to date
  - Disk imaging techniques (e.g. VA Disk Imager)
    - Difficult to handle heterogeneous nodes
    - Treats OS as a single monolithic system
  - Specialized installation programs (e.g. IBM’s LUI, or RWCP’s Multicast installer)
    - Let Linux packaging vendors do their job
- Penultimate: Red Hat Kickstart
  - Define packages needed for OS on nodes; Kickstart gives a reasonable measure of control.
  - Need to fully automate to scale out (Rocks gets you there)

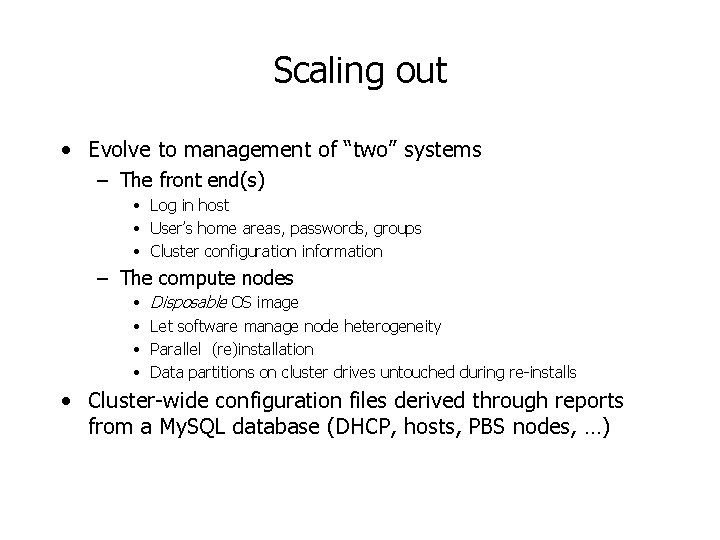

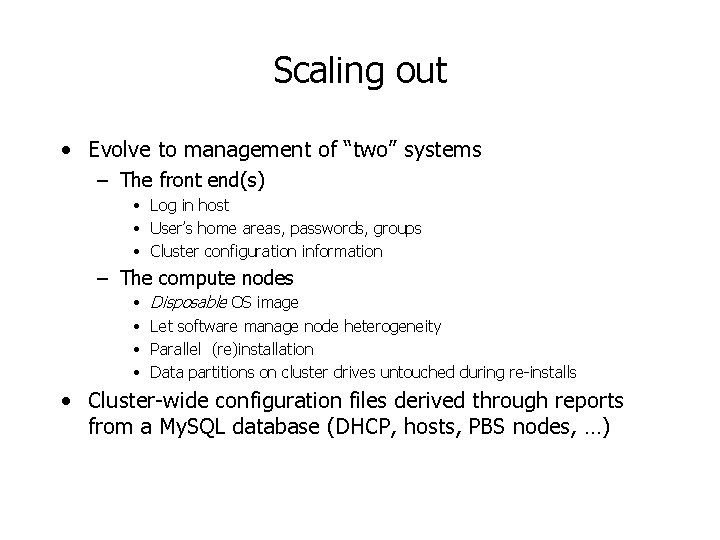

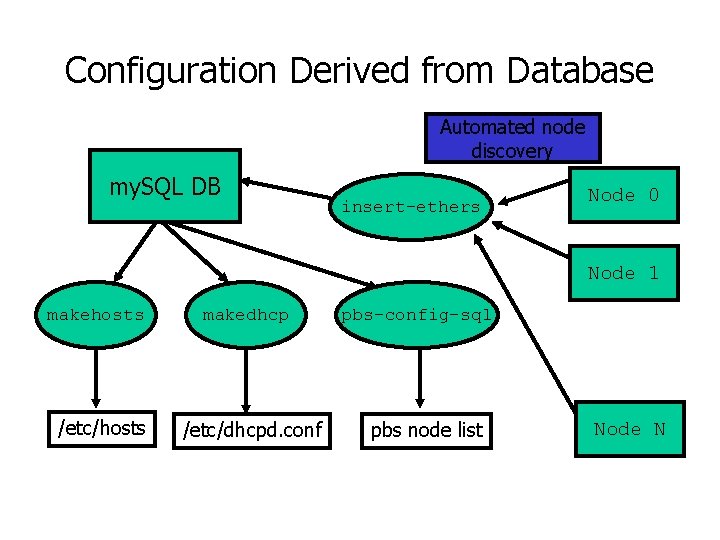

Scaling out

- Evolve to management of “two” systems
  - The front end(s)
    - Log-in host
    - Users’ home areas, passwords, groups
    - Cluster configuration information
  - The compute nodes
    - Disposable OS image
    - Let software manage node heterogeneity
    - Parallel (re)installation
    - Data partitions on cluster drives untouched during re-installs
- Cluster-wide configuration files derived through reports from a MySQL database (DHCP, hosts, PBS nodes, …)

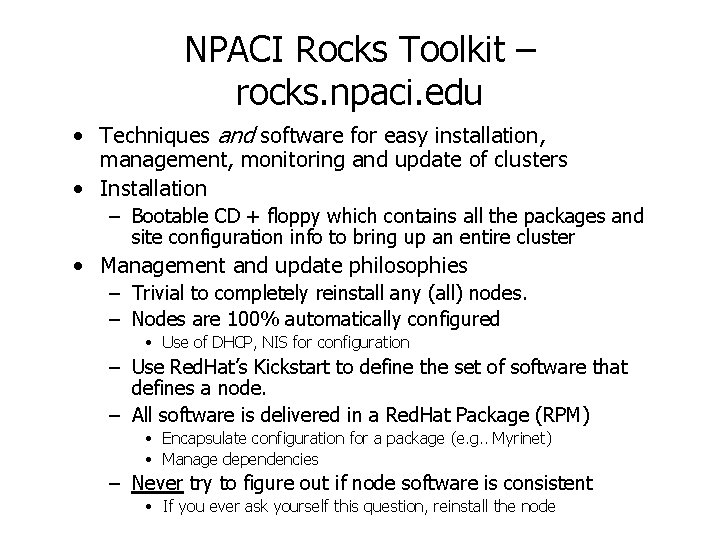

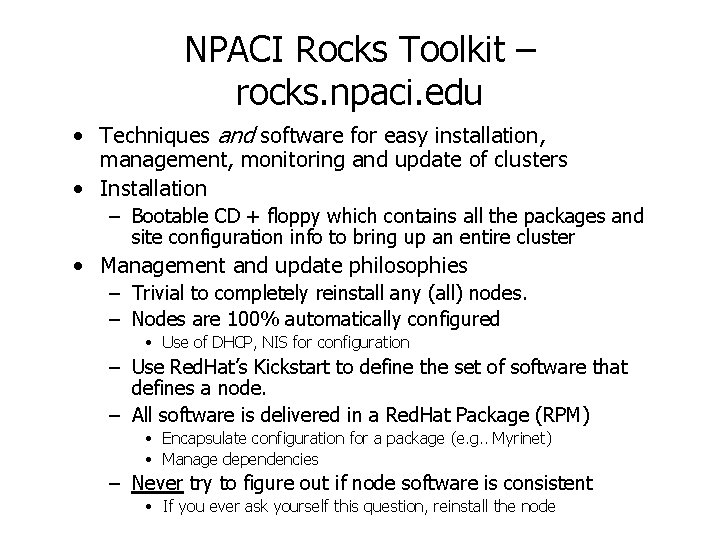

NPACI Rocks Toolkit: rocks.npaci.edu

- Techniques and software for easy installation, management, monitoring and update of clusters
- Installation
  - Bootable CD + floppy which contain all the packages and site configuration info to bring up an entire cluster
- Management and update philosophies
  - Trivial to completely reinstall any (all) nodes.
  - Nodes are 100% automatically configured
    - Use of DHCP, NIS for configuration
  - Use Red Hat’s Kickstart to define the set of software that defines a node.
  - All software is delivered in a Red Hat Package (RPM)
    - Encapsulate configuration for a package (e.g. Myrinet)
    - Manage dependencies
  - Never try to figure out if node software is consistent
    - If you ever ask yourself this question, reinstall the node

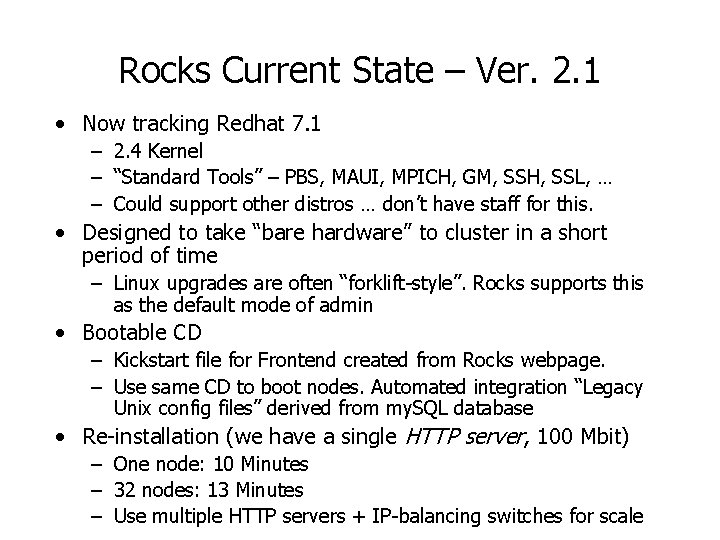

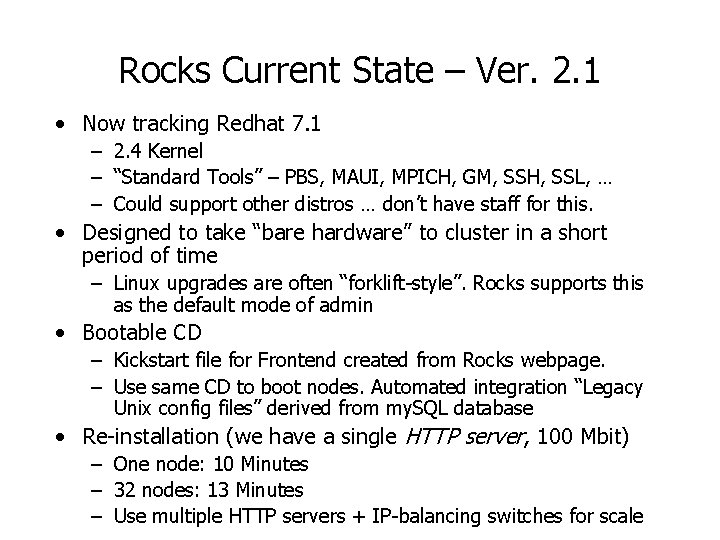

Rocks Current State: Ver. 2.1

- Now tracking Red Hat 7.1
  - 2.4 kernel
  - “Standard tools”: PBS, MAUI, MPICH, GM, SSH, SSL, …
  - Could support other distros … don’t have staff for this.
- Designed to take “bare hardware” to cluster in a short period of time
  - Linux upgrades are often “forklift-style”. Rocks supports this as the default mode of admin
- Bootable CD
  - Kickstart file for frontend created from Rocks webpage.
  - Use same CD to boot nodes. Automated integration.
  - “Legacy Unix config files” derived from MySQL database
- Re-installation (we have a single HTTP server, 100 Mbit)
  - One node: 10 minutes
  - 32 nodes: 13 minutes
  - Use multiple HTTP servers + IP-balancing switches for scale
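
The re-installation figures above invite a quick back-of-the-envelope check. The sketch below is only arithmetic on the quoted numbers. It assumes the 100 Mbit link is fully saturated for the whole 13 minutes, with decimal megabytes and no protocol overhead, so the per-node figure is an upper bound and not a measured payload size.

```python
# Numbers quoted on the slide.
link_mbit_per_s = 100      # single HTTP server, 100 Mbit
node_count = 32
parallel_minutes = 13      # time to reinstall 32 nodes together
single_node_minutes = 10   # time to reinstall one node alone

# Assumption: the link is saturated for the whole run (upper bound only).
link_mbyte_per_s = link_mbit_per_s / 8
max_total_mb = link_mbyte_per_s * parallel_minutes * 60
max_per_node_mb = max_total_mb / node_count

# Time if the 32 nodes were reinstalled strictly one after another.
serial_minutes = node_count * single_node_minutes

print(f"at most {max_total_mb:.0f} MB served in total")
print(f"at most {max_per_node_mb:.1f} MB per node")
print(f"serial reinstall would take {serial_minutes} minutes vs {parallel_minutes}")
```

Under these assumptions a single server can deliver at most about 300 MB to each of 32 nodes in the quoted time, which is consistent with the slide's advice to add HTTP servers and IP-balancing switches for larger clusters.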

More Rocksisms

- Leverage widely-used (standard) software wherever possible
  - Everything is in Red Hat Packages (RPM)
  - Red Hat’s “kickstart” installation tool
  - SSH, Telnet (only during installation), existing open source tools
- Write only the software that we need to write
- Focus on simplicity
  - Commodity components
    - For example: x86 compute servers, Ethernet, Myrinet
  - Minimal
    - For example: no additional diagnostic or proprietary networks
- Rocks is a collection point of software for people building clusters
  - It is evolving to include cluster software and packaging from more than just SDSC and UCB
  - <[your-software.i386.rpm] [your-software.src.rpm] here>

Rocks-dist

- Integrate Red Hat packages from
  - Red Hat (mirror): base distribution + updates
  - Contrib directory
  - Locally produced packages
  - Local contrib (e.g. commercially bought code)
  - Packages from rocks.npaci.edu
- Produces a single updated distribution that resides on the front-end
  - Is a Red Hat distribution with patches and updates applied
- Kickstart (Red Hat) file is a text description of what’s on a node. Rocks automatically produces frontend and node files.
- Different Kickstart files and different distributions can coexist on a front-end to add flexibility in configuring nodes.
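
One way to picture how several package sources collapse into a single updated distribution is the minimal sketch below. It is not the rocks-dist implementation. It assumes the newest version of each package wins regardless of source, and it models versions as plain integer tuples, whereas real RPM version comparison is more involved. The function name, source names, and version numbers are hypothetical.

```python
def merge_distribution(sources):
    """Return {package: (version, source_name)}, keeping the newest version.

    `sources` is a list of (source_name, {package: version_tuple}) pairs.
    Assumption: newest version wins; ties keep the earlier source.
    """
    merged = {}
    for source_name, packages in sources:
        for package, version in packages.items():
            if package not in merged or version > merged[package][0]:
                merged[package] = (version, source_name)
    return merged


# Hypothetical contents of three package sources.
sources = [
    ("mirror-base", {"kernel": (2, 4, 2), "openssh": (2, 5, 2)}),
    ("mirror-updates", {"kernel": (2, 4, 3)}),
    ("local", {"mpich": (1, 2, 1)}),
]

distribution = merge_distribution(sources)
for package in sorted(distribution):
    version, origin = distribution[package]
    print(package, ".".join(str(part) for part in version), "from", origin)
```

The result is one flat package set, so a node's Kickstart file can name packages without knowing which source supplied them.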

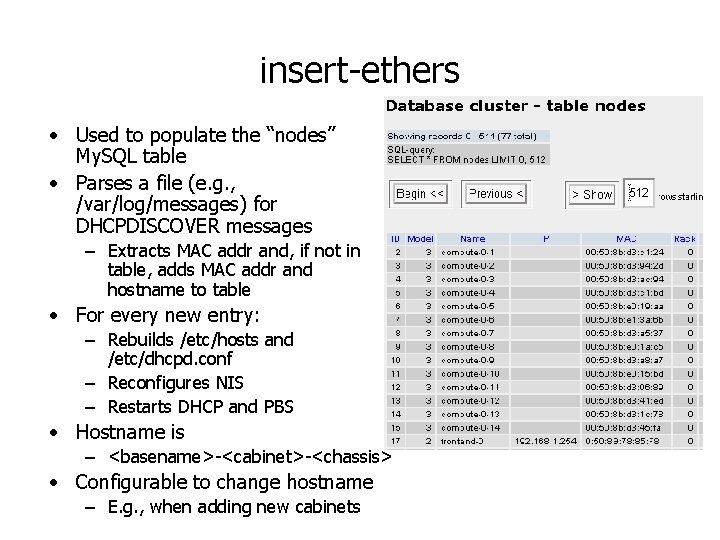

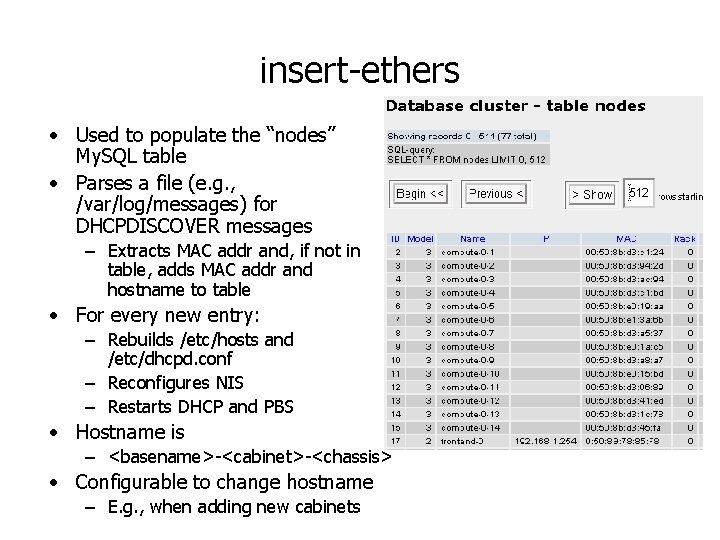

insert-ethers

- Used to populate the “nodes” MySQL table
- Parses a file (e.g., /var/log/messages) for DHCPDISCOVER messages
  - Extracts MAC addr and, if not in table, adds MAC addr and hostname to table
- For every new entry:
  - Rebuilds /etc/hosts and /etc/dhcpd.conf
  - Reconfigures NIS
  - Restarts DHCP and PBS
- Hostname is
  - <basename>-<cabinet>-<chassis>
- Configurable to change hostname
  - E.g., when adding new cabinets
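
The discovery step described above can be sketched in a few lines. This is an illustration only, not the insert-ethers source. It assumes syslog lines of the form `DHCPDISCOVER from <MAC> via <interface>`, uses a plain dictionary in place of the “nodes” MySQL table, and numbers the chassis field by counting nodes already in the cabinet. The function name and its defaults are hypothetical.

```python
import re

# Assumed dhcpd syslog format: "... DHCPDISCOVER from 00:50:8b:e0:3a:a7 via eth0"
DISCOVER_RE = re.compile(
    r"DHCPDISCOVER from ((?:[0-9a-fA-F]{2}:){5}[0-9a-fA-F]{2})"
)


def discover_nodes(log_lines, nodes, basename="compute", cabinet=0):
    """Add unseen MAC addresses to `nodes` (MAC -> hostname).

    Returns the list of hostnames added, named <basename>-<cabinet>-<chassis>.
    """
    added = []
    prefix = f"{basename}-{cabinet}-"
    for line in log_lines:
        match = DISCOVER_RE.search(line)
        if not match:
            continue  # not a DHCPDISCOVER line
        mac = match.group(1).lower()
        if mac in nodes:
            continue  # already in the table; nodes repeat DISCOVER requests
        # Assumption: chassis number is the count of nodes already in this cabinet.
        chassis = sum(1 for name in nodes.values() if name.startswith(prefix))
        nodes[mac] = f"{prefix}{chassis}"
        added.append(nodes[mac])
    return added


sample_log = [
    "Jun  1 10:00:01 frontend dhcpd: DHCPDISCOVER from 00:50:8B:E0:3A:A7 via eth0",
    "Jun  1 10:00:05 frontend dhcpd: DHCPDISCOVER from 00:50:8b:e0:3a:a7 via eth0",
    "Jun  1 10:02:17 frontend dhcpd: DHCPDISCOVER from 00:50:8b:e0:44:10 via eth0",
    "Jun  1 10:02:20 frontend sshd[412]: Accepted password for root",
]
node_table = {}
new_hosts = discover_nodes(sample_log, node_table)
print(new_hosts)  # ['compute-0-0', 'compute-0-1']
```

In the real tool, each new entry would then trigger the rebuild of /etc/hosts and /etc/dhcpd.conf and the service restarts listed above; the sketch stops at the table update.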

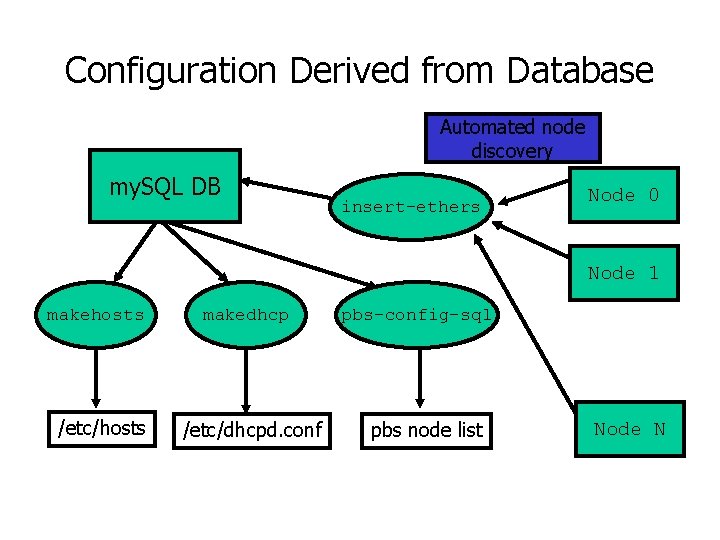

Configuration Derived from Database

[Diagram: automated node discovery. insert-ethers records Node 0, Node 1, …, Node N in the MySQL DB; makehosts generates /etc/hosts, makedhcp generates /etc/dhcpd.conf, and pbs-config-sql generates the PBS node list.]
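
The idea of deriving configuration files as reports from one database can be illustrated as follows. This is a sketch under stated assumptions, not the Rocks makehosts, makedhcp, or pbs-config-sql code. An in-memory SQLite table stands in for the MySQL database, and the table layout, function names, IP addresses, and MAC addresses are all hypothetical.

```python
import sqlite3


def make_hosts(rows):
    """Render /etc/hosts-style text from (name, mac, ip) rows."""
    lines = ["127.0.0.1\tlocalhost"]
    lines += [f"{ip}\t{name}" for name, _mac, ip in rows]
    return "\n".join(lines) + "\n"


def make_dhcp(rows):
    """Render one ISC dhcpd host stanza per node."""
    stanzas = []
    for name, mac, ip in rows:
        stanzas.append(
            f"host {name} {{\n"
            f"    hardware ethernet {mac};\n"
            f"    fixed-address {ip};\n"
            f"}}\n"
        )
    return "".join(stanzas)


def make_pbs_nodes(rows):
    """Render a PBS node list: one hostname per line."""
    return "".join(f"{name}\n" for name, _mac, _ip in rows)


# Stand-in for the cluster database (hypothetical schema and data).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE nodes (name TEXT, mac TEXT, ip TEXT)")
db.executemany(
    "INSERT INTO nodes VALUES (?, ?, ?)",
    [
        ("compute-0-0", "00:50:8b:e0:3a:a7", "10.0.0.1"),
        ("compute-0-1", "00:50:8b:e0:44:10", "10.0.0.2"),
    ],
)
rows = db.execute("SELECT name, mac, ip FROM nodes ORDER BY name").fetchall()

hosts_text = make_hosts(rows)
dhcp_text = make_dhcp(rows)
pbs_text = make_pbs_nodes(rows)
print(hosts_text)
print(dhcp_text)
print(pbs_text)
```

Because every file is regenerated from the same rows, adding a node to the table is enough to keep the hosts file, DHCP configuration, and PBS node list consistent with one another.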

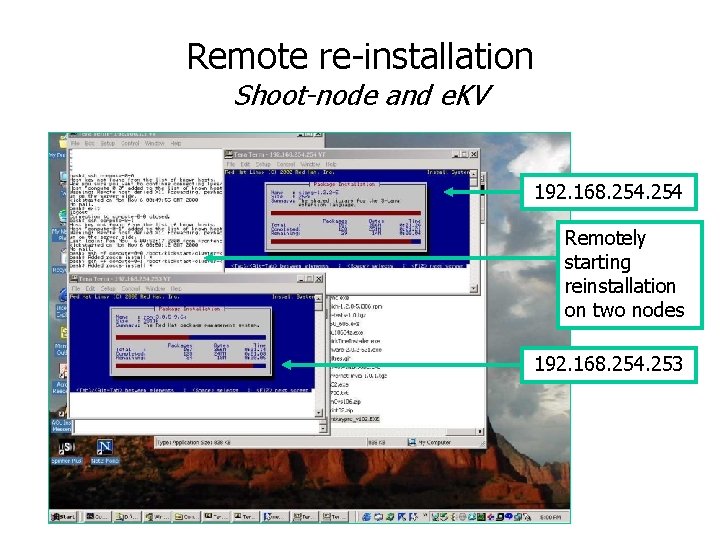

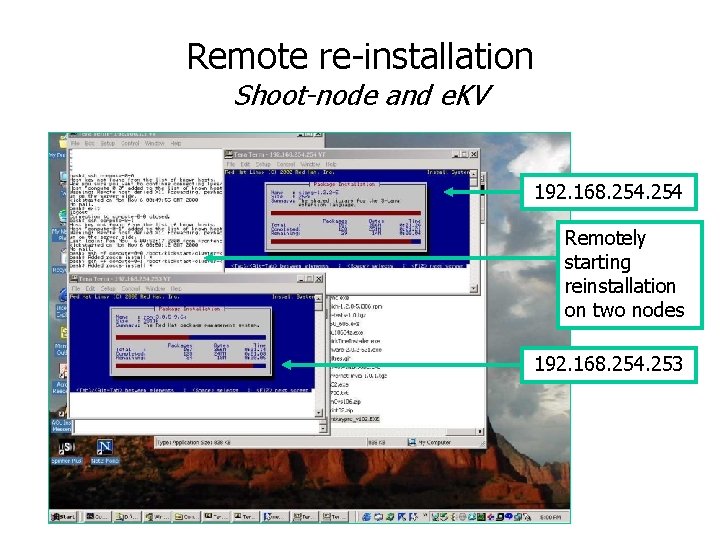

Remote re-installation: Shoot-node and eKV

- Rocks provides a simple method to remotely reinstall a node
  - CD/floppy used to install the first time
- By default, hard power cycling will cause a node to reinstall itself.
  - Addressable PDUs can do this on generic hardware
- With no serial (or KVM) console, we are able to watch a node as it installs (eKV), but …
  - Can’t see BIOS messages at boot up
- Syslog for all nodes sent to a log host (and to local disk)
  - Can look at what a node was complaining about before it went offline

Remote re-installation: Shoot-node and eKV

[Screenshot: remotely starting reinstallation on two nodes; visible address labels: 192.168.254 and 192.168.254.253]

Monitoring your cluster

- PBS has a GUI called xpbsmon. Gives a nice graphical view of up/down state of nodes
- SNMP status
  - Use the extensive SNMP MIB defined by the Linux community to find out many things about a node
    - Installed software
    - Uptime
    - Load
  - Slow
- Ganglia (UCB)
  - IP multicast-based monitoring system
  - 20+ different health measures
- I think we’re still weak here; learning about other activities in this area (e.g. ngop, CERN activities, City Toolkit)

CERN

- cern.ch/hep-proj-grid-fabric
- Installation tools: wwwinfo.cern.ch/pdp

Philip papadopoulos

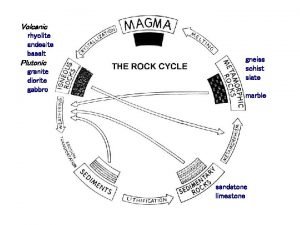

Philip papadopoulos Igneous rock to metamorphic rock

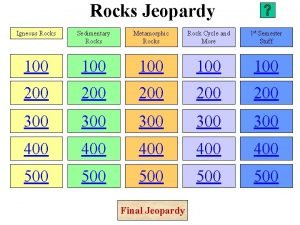

Igneous rock to metamorphic rock Igneous rocks metamorphic rocks and sedimentary rocks

Igneous rocks metamorphic rocks and sedimentary rocks Quick find algorithm

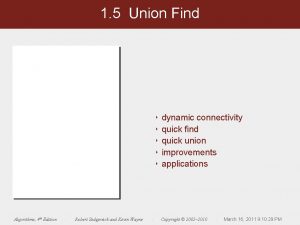

Quick find algorithm Collision forces quick check

Collision forces quick check Dennis papadopoulos

Dennis papadopoulos Andy papadopoulos

Andy papadopoulos Despina papadopoulos brighton

Despina papadopoulos brighton Spyridon papadopoulos

Spyridon papadopoulos Dennis papadopoulos

Dennis papadopoulos Spectroscopy equations

Spectroscopy equations Sandy papadopoulos

Sandy papadopoulos Dennis papadopoulos

Dennis papadopoulos Andesite vs basalt

Andesite vs basalt Extrusive rocks and intrusive rocks

Extrusive rocks and intrusive rocks