Quick and Painless Introduction to Machine Learning

- Slides: 53

Quick and Painless Introduction to Machine Learning

Guest Star: David Weigl!

- J. Stephen Downie, School of Information Sciences, University of Illinois at Urbana-Champaign (jdownie@illinois.edu)
- Xiao Hu, Faculty of Education, University of Hong Kong (xiaoxhu@hku.hk)

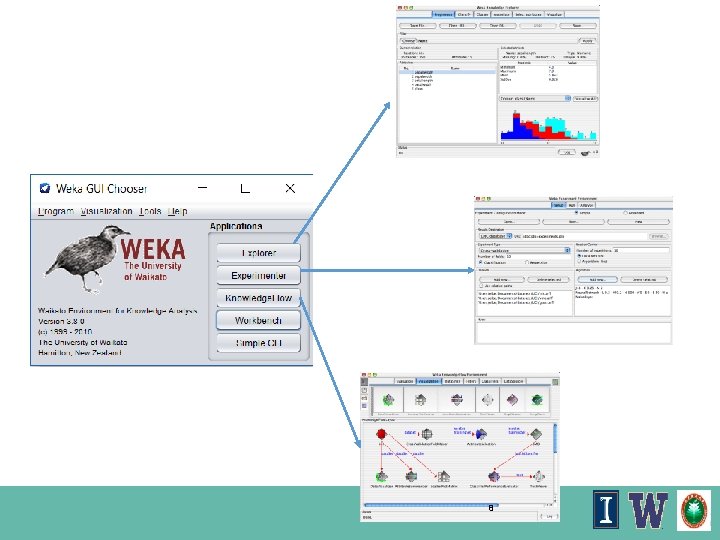

Agenda

- Million-mile view of machine learning
  - Machine learning and data science
  - Supervised vs. unsupervised learning
    - Decision tree; support vector machines (SVM); k-nearest neighbor (k-NN)
  - Experimentation
- Friendly ML tool:
  - Weka: machine learning (we will work with this one later)
- Wrap-up and discussion

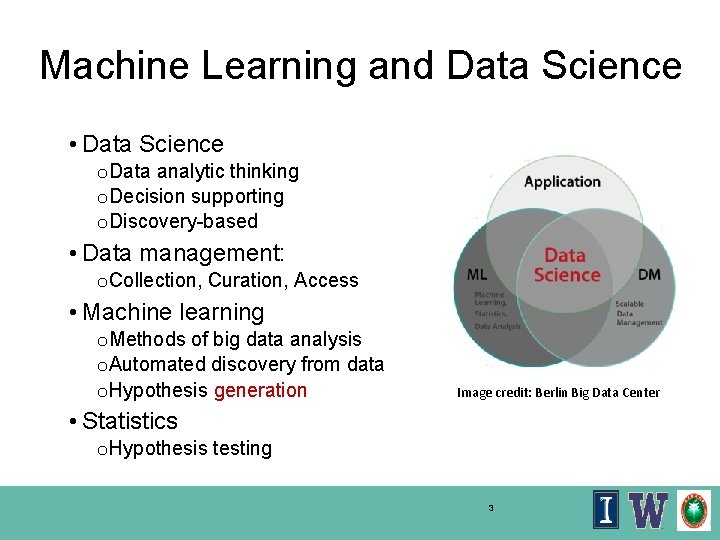

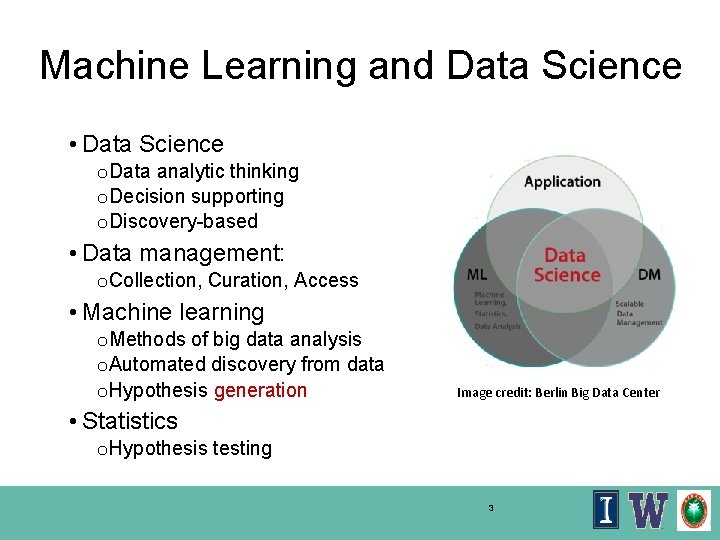

Machine Learning and Data Science

- Data science
  - Data analytic thinking
  - Decision supporting
  - Discovery-based
- Data management:
  - Collection, curation, access
- Machine learning
  - Methods of big data analysis
  - Automated discovery from data
  - Hypothesis generation
- Statistics
  - Hypothesis testing

Image credit: Berlin Big Data Center

Examples of ML

- Spam email detector
- Recommender systems
- Fraud detection
- Categorizing news stories as finance, weather, entertainment, sports, etc.
- Categorizing library materials by catalogs
- Categorizing music by mood
- Deciding whether to play golf according to weather conditions
- Market segmentation
- Grouping library patrons into different clusters

WEKA: The Bird

- The weka or woodhen (Gallirallus australis) is a bird endemic to New Zealand. (Source: Wikipedia)

Copyright: Martin Kramer (mkramer@wxs.nl)

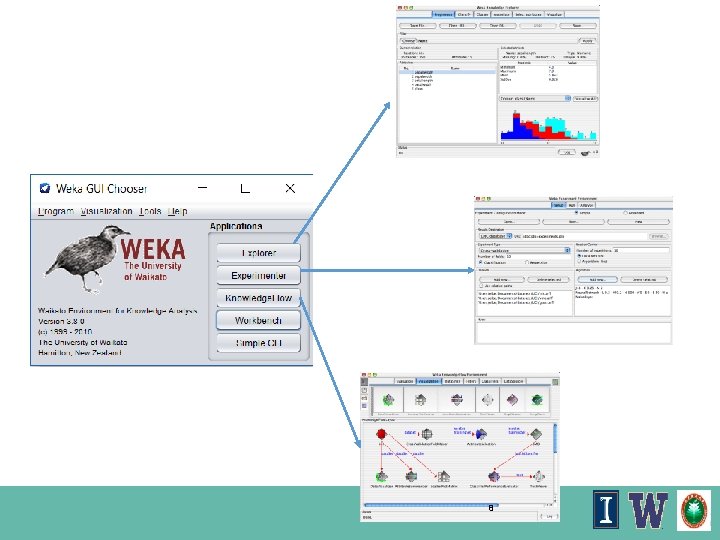

WEKA: The Tool

- Machine learning/data mining software written in Java (distributed under the GNU General Public License)
- Used for research, education, and applications
- Complements "Data Mining" by Witten & Frank
- Main features:
  - Comprehensive set of data pre-processing tools, learning algorithms, and evaluation methods
  - Graphical user interfaces
  - Environment for comparing learning algorithms

The Book

- Witten, I. H., E. Frank, and M. Hall (2011). Data Mining: Practical Machine Learning Tools and Techniques, Third Edition. Morgan Kaufmann. E-book available in the HKU Library.

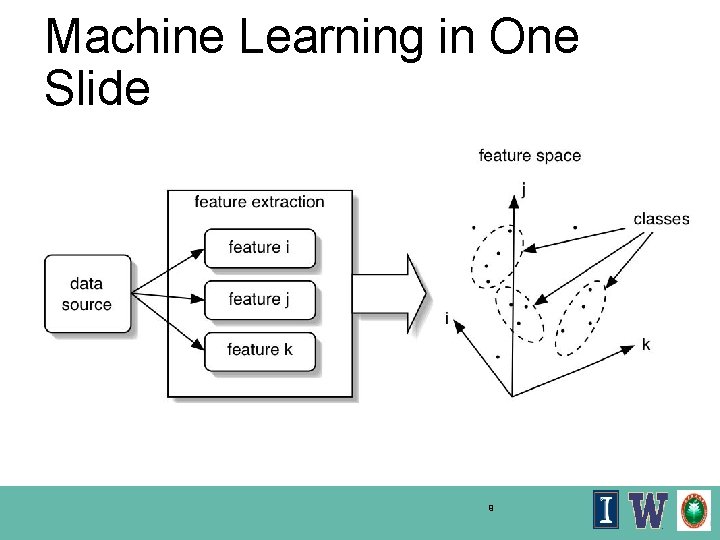


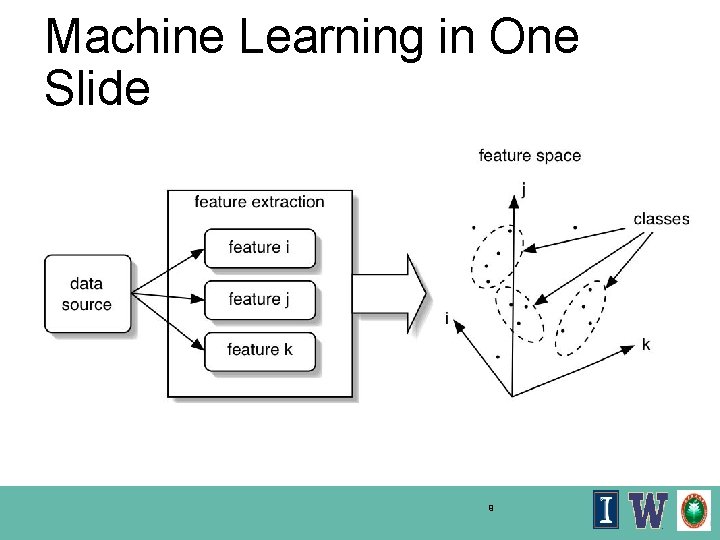

Machine Learning in One Slide

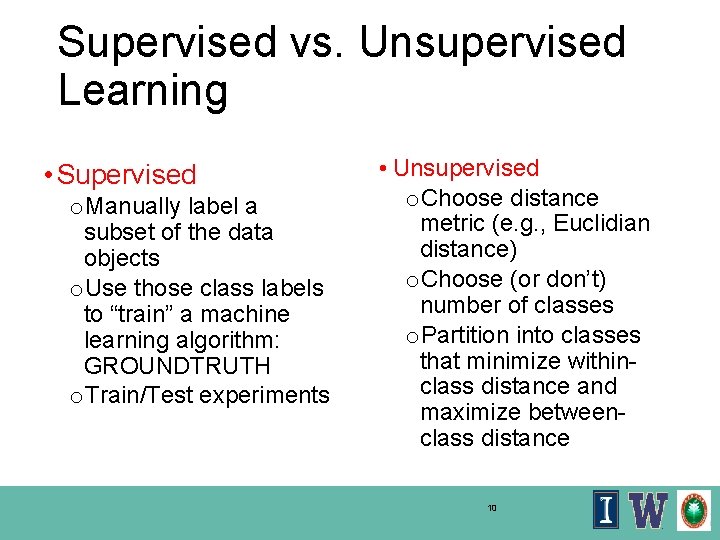

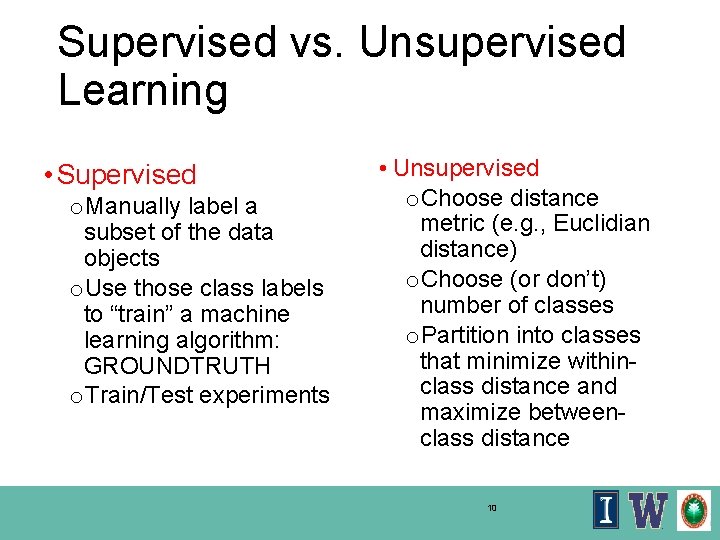

Supervised vs. Unsupervised Learning

- Supervised
  - Manually label a subset of the data objects
  - Use those class labels (the GROUNDTRUTH) to "train" a machine learning algorithm
  - Train/test experiments
- Unsupervised
  - Choose a distance metric (e.g., Euclidean distance)
  - Choose (or don't) the number of classes
  - Partition into classes that minimize within-class distance and maximize between-class distance

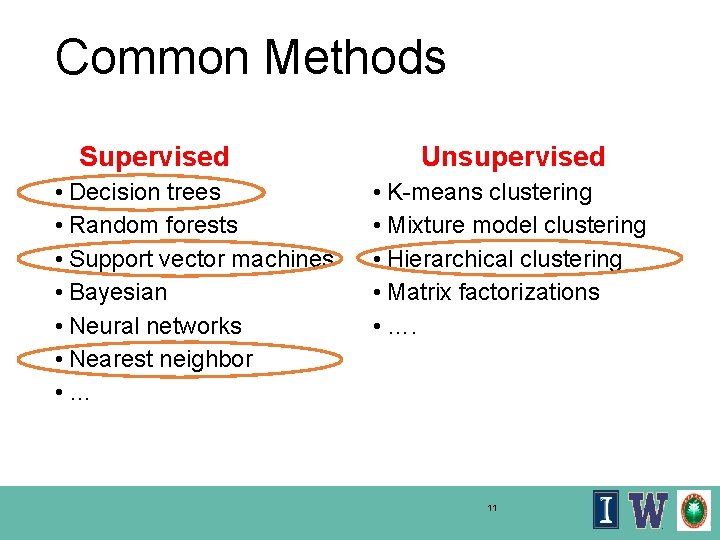

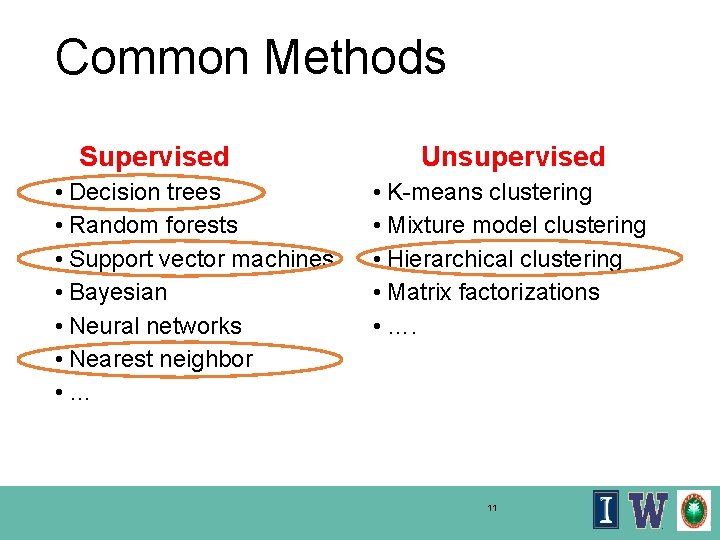

Common Methods

Supervised
- Decision trees
- Random forests
- Support vector machines
- Bayesian
- Neural networks
- Nearest neighbor
- …

Unsupervised
- K-means clustering
- Mixture model clustering
- Hierarchical clustering
- Matrix factorizations
- …

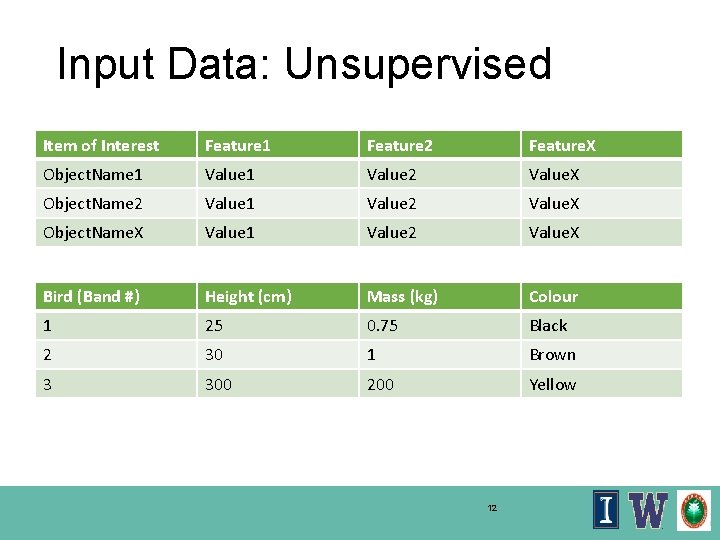

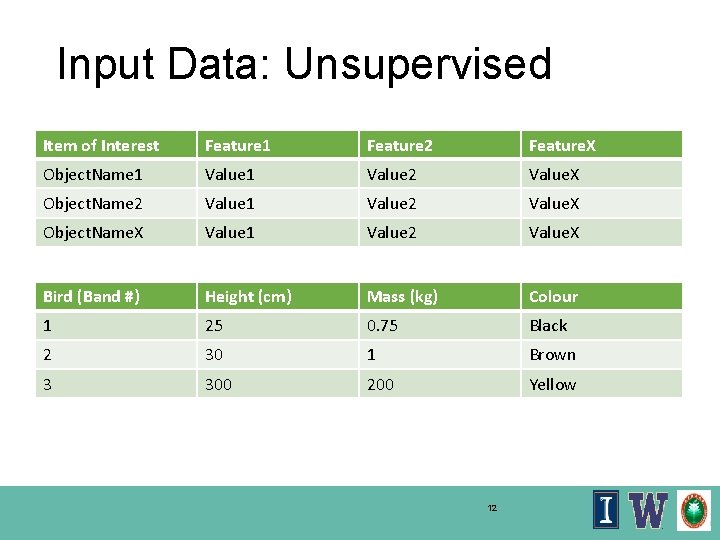

Input Data: Unsupervised

| Item of Interest | Feature 1 | Feature 2 | Feature X |
|---|---|---|---|
| ObjectName 1 | Value 1 | Value 2 | Value X |
| ObjectName 2 | Value 1 | Value 2 | Value X |
| ObjectName X | Value 1 | Value 2 | Value X |

| Bird (Band #) | Height (cm) | Mass (kg) | Colour |
|---|---|---|---|
| 1 | 25 | 0.75 | Black |
| 2 | 30 | 1 | Brown |
| 3 | 300 | 200 | Yellow |

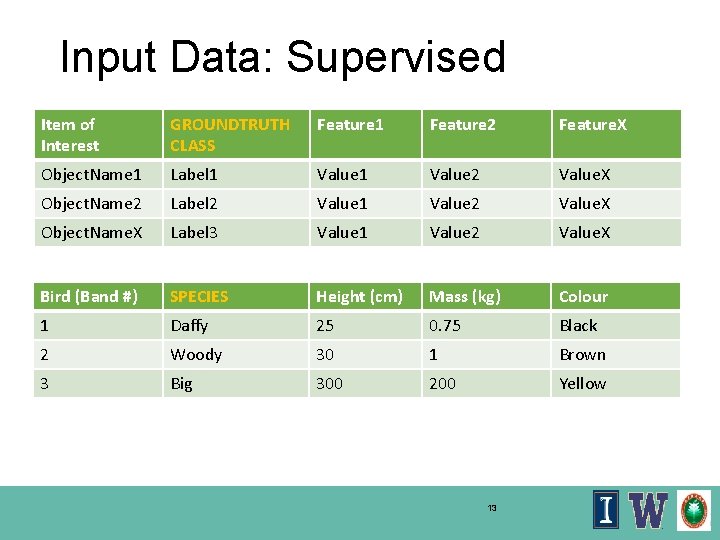

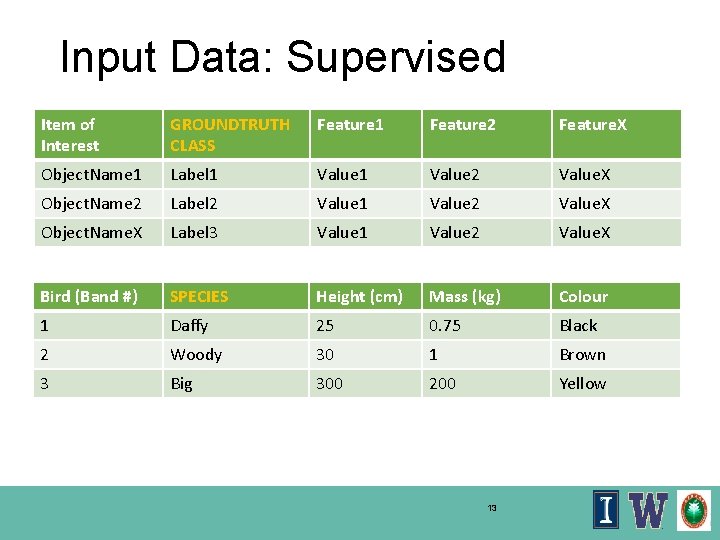

Input Data: Supervised

| Item of Interest | GROUNDTRUTH CLASS | Feature 1 | Feature 2 | Feature X |
|---|---|---|---|---|
| ObjectName 1 | Label 1 | Value 1 | Value 2 | Value X |
| ObjectName 2 | Label 2 | Value 1 | Value 2 | Value X |
| ObjectName X | Label 3 | Value 1 | Value 2 | Value X |

| Bird (Band #) | SPECIES | Height (cm) | Mass (kg) | Colour |
|---|---|---|---|---|
| 1 | Daffy | 25 | 0.75 | Black |
| 2 | Woody | 30 | 1 | Brown |
| 3 | Big | 300 | 200 | Yellow |

Classification Methods (Supervised Learning)

- Decision Tree
- Support Vector Machines (SVM)
- K-Nearest Neighbor (k-NN)

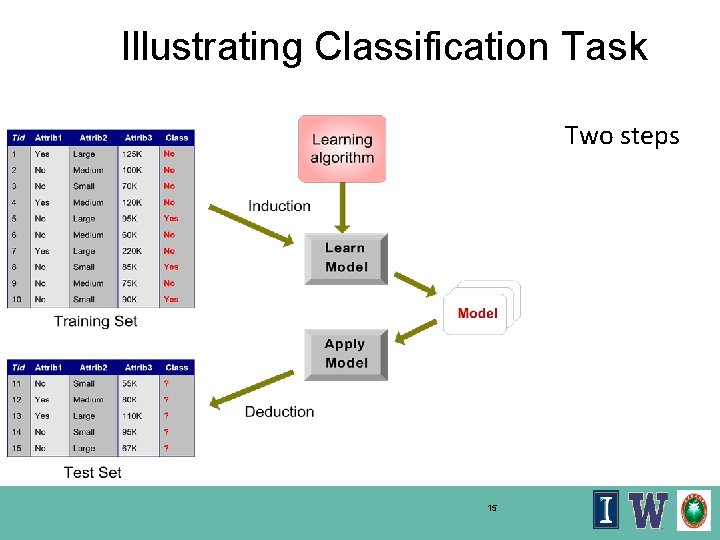

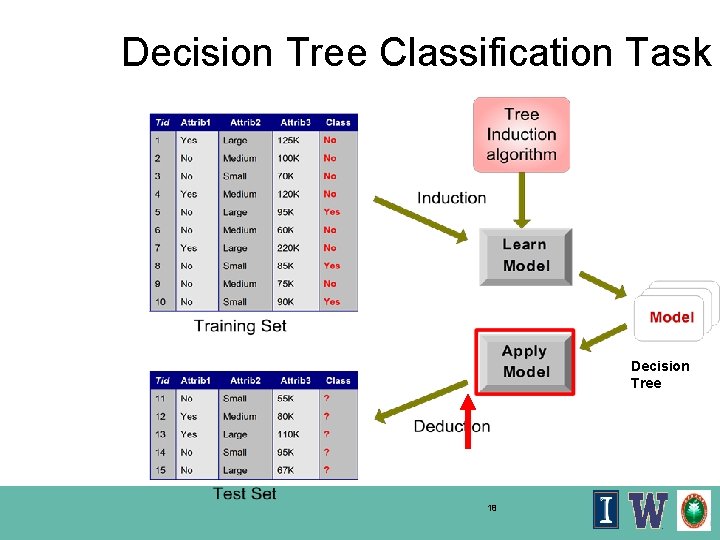

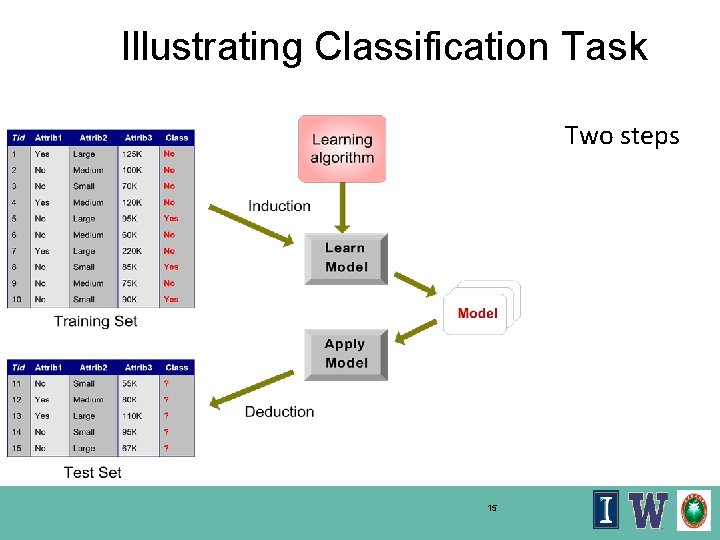

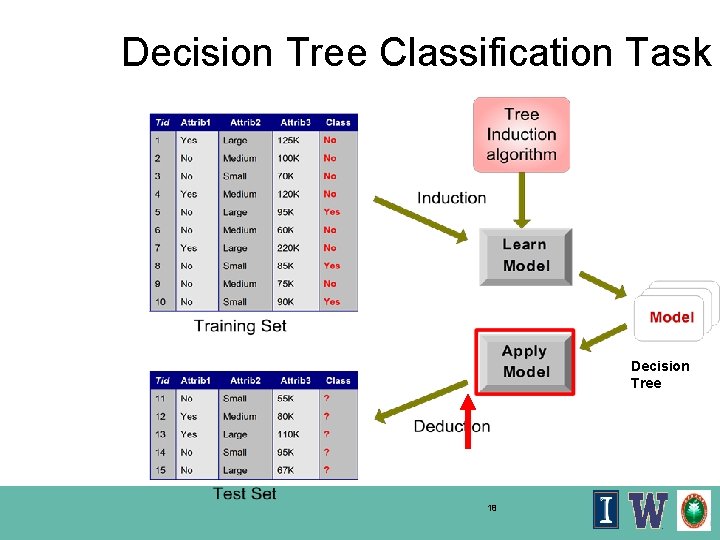

Illustrating Classification Task: Two Steps

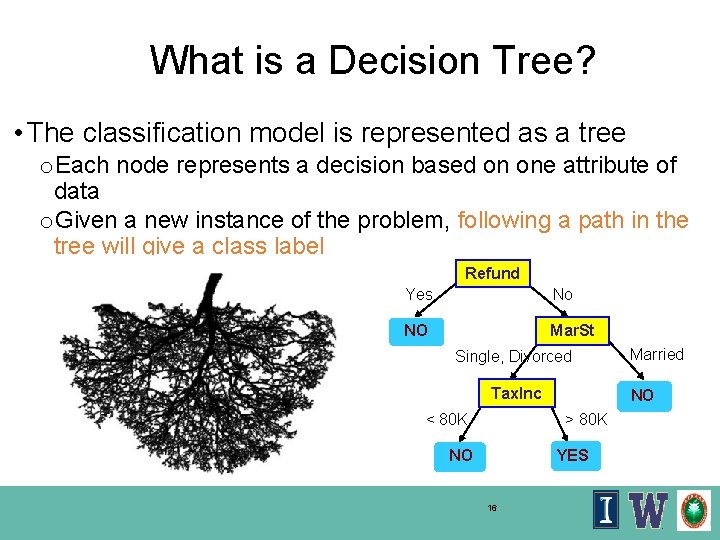

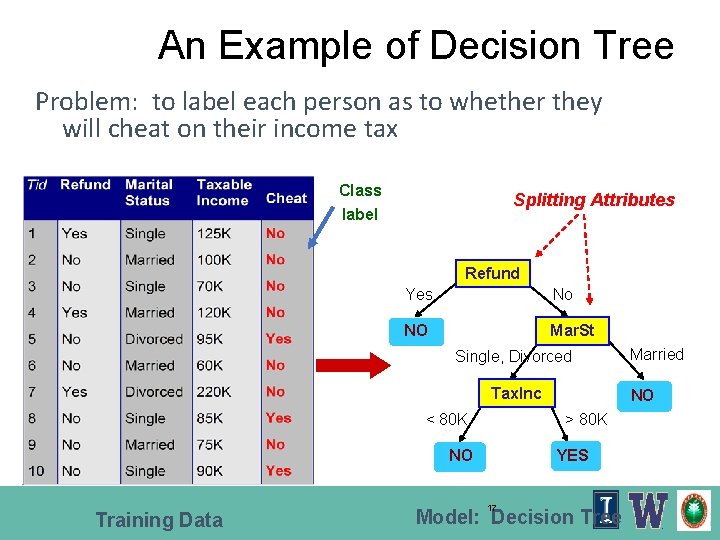

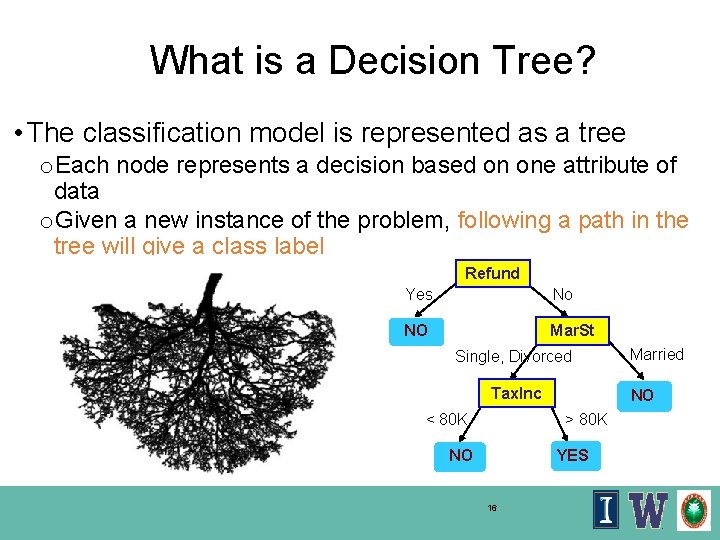

What is a Decision Tree?

- The classification model is represented as a tree
  - Each node represents a decision based on one attribute of the data
  - Given a new instance of the problem, following a path in the tree will give a class label

(Figure: example tree. Refund? Yes → NO; No → MarSt? Married → NO; Single, Divorced → TaxInc? < 80K → NO; > 80K → YES)

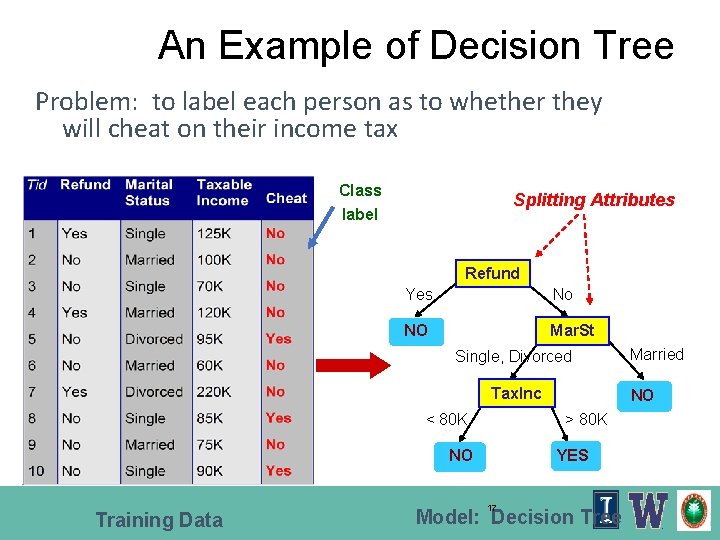

An Example of a Decision Tree

Problem: label each person according to whether they will cheat on their income tax.

(Figure: Training Data table with the class label and the splitting attributes marked, and the resulting Model: Decision Tree. Refund? Yes → NO; No → MarSt? Married → NO; Single, Divorced → TaxInc? < 80K → NO; > 80K → YES)
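Once learned, a decision tree is just a set of nested if/else tests. The sketch below hand-codes the example tree in Python; the argument names and the sample records are hypothetical, income is assumed to be in thousands, and because the slide only shows "< 80K" and "> 80K", treating exactly 80K as the "YES" side is an assumption.

```python
def predict_cheat(refund: str, marital_status: str, taxable_income: float) -> str:
    """Follow one path from the root of the example tree down to a leaf."""
    # Root node: split on Refund
    if refund == "Yes":
        return "NO"
    # Refund = No: split on marital status
    if marital_status == "Married":
        return "NO"
    # Remaining branch (Single, Divorced): split on taxable income, in thousands
    # (assumption: exactly 80 goes to the ">= 80K" side)
    if taxable_income < 80:
        return "NO"
    return "YES"


# Hypothetical records: (Refund, Marital Status, Taxable Income in K)
records = [
    ("Yes", "Single", 125),
    ("No", "Married", 100),
    ("No", "Single", 70),
    ("No", "Divorced", 95),
]
for record in records:
    print(record, "->", predict_cheat(*record))
```

Each call tests at most three attributes, which is one reason trees are cheap to apply and easy to explain.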

Decision Tree Classification Task

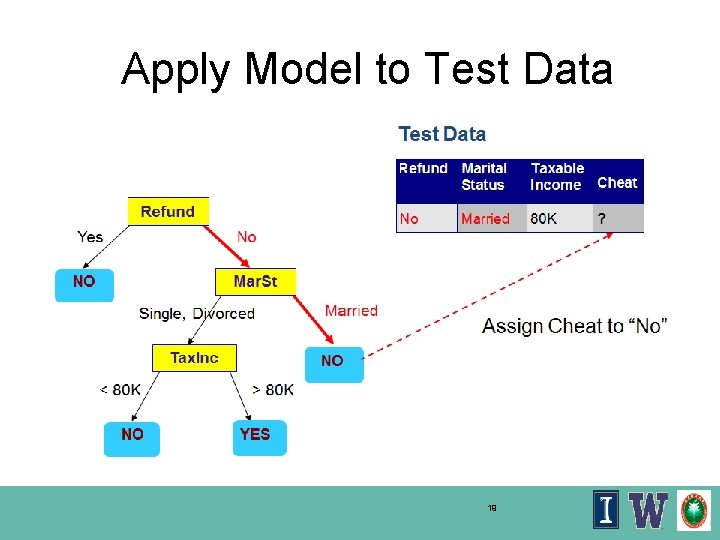

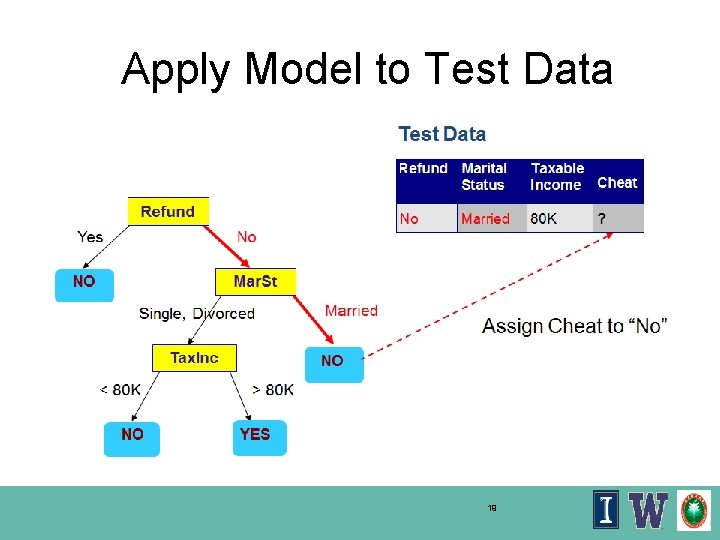

Apply Model to Test Data

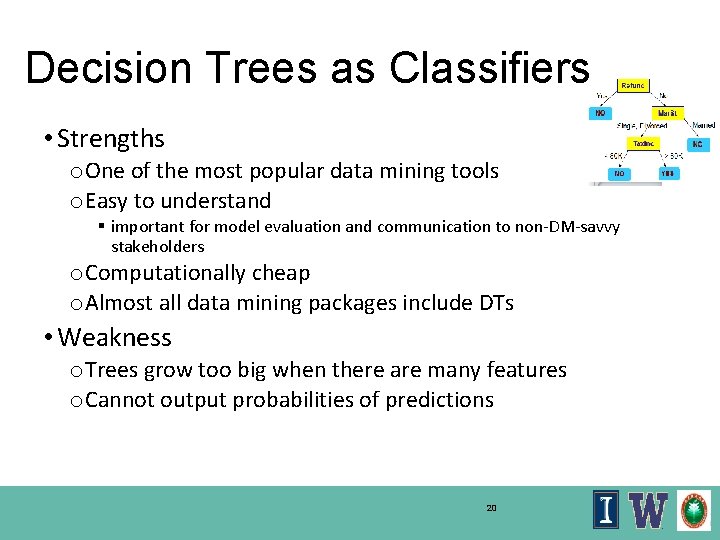

Decision Trees as Classifiers

- Strengths
  - One of the most popular data mining tools
  - Easy to understand
    - Important for model evaluation and for communication with stakeholders who are not data-mining savvy
  - Computationally cheap
  - Almost all data mining packages include decision trees
- Weaknesses
  - Trees grow too big when there are many features
  - Basic trees output class labels rather than well-calibrated probabilities of predictions

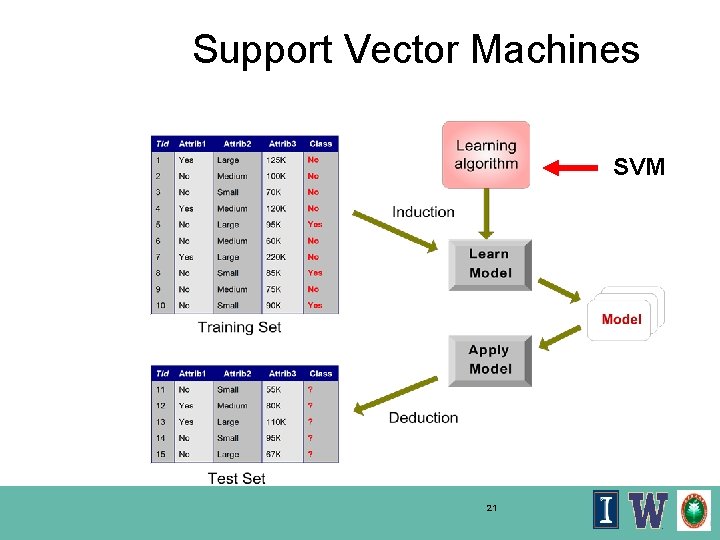

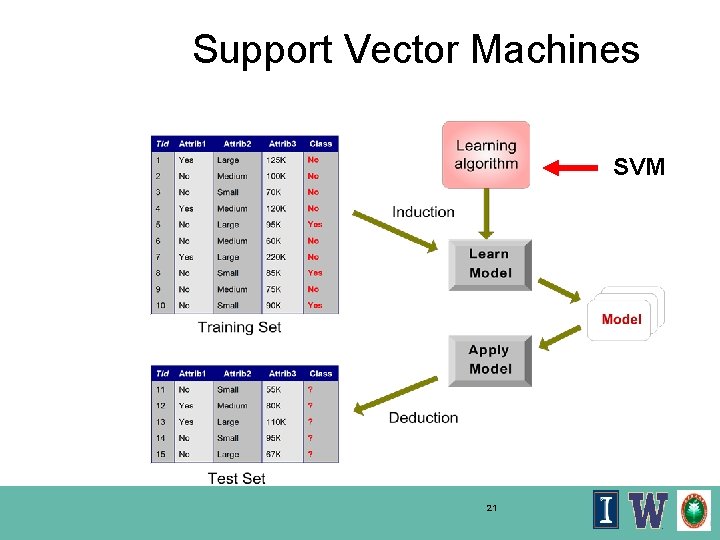

Support Vector Machines (SVM)

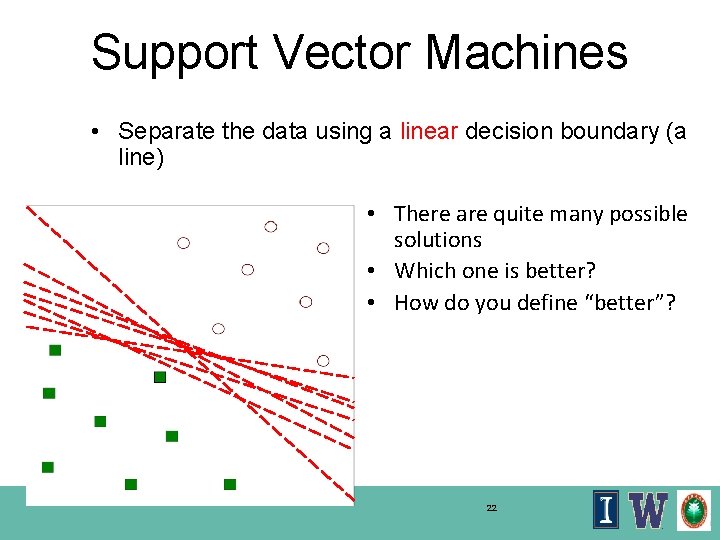

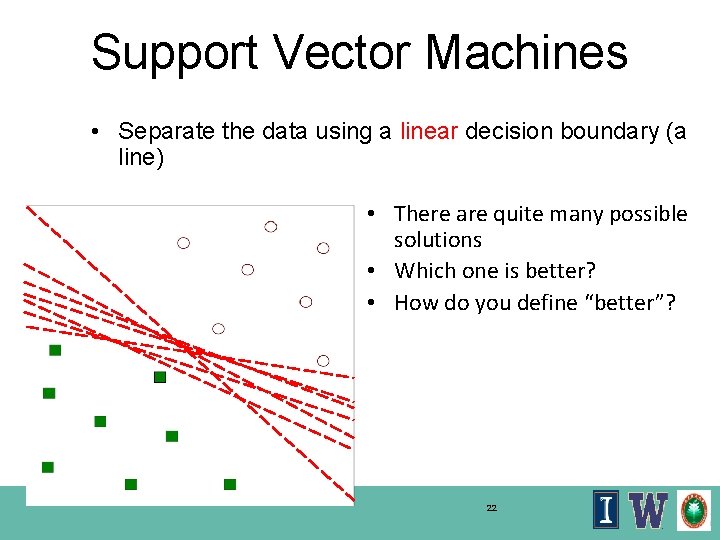

Support Vector Machines

- Separate the data using a linear decision boundary (a line)
- There are many possible solutions
- Which one is better?
- How do you define "better"?

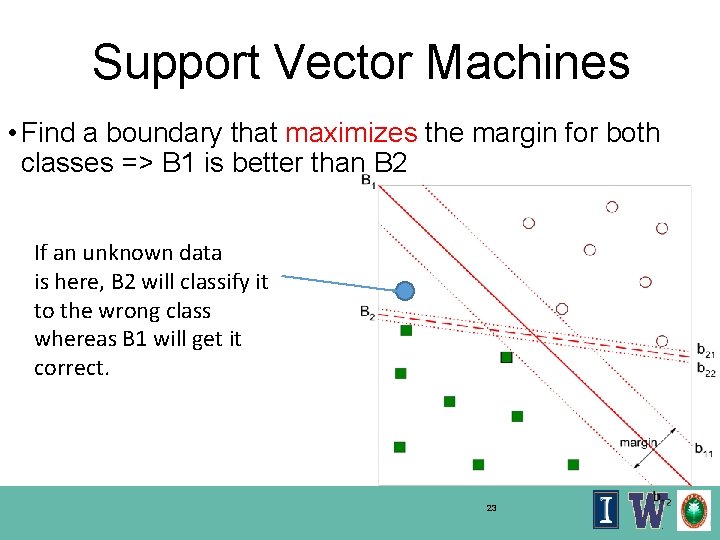

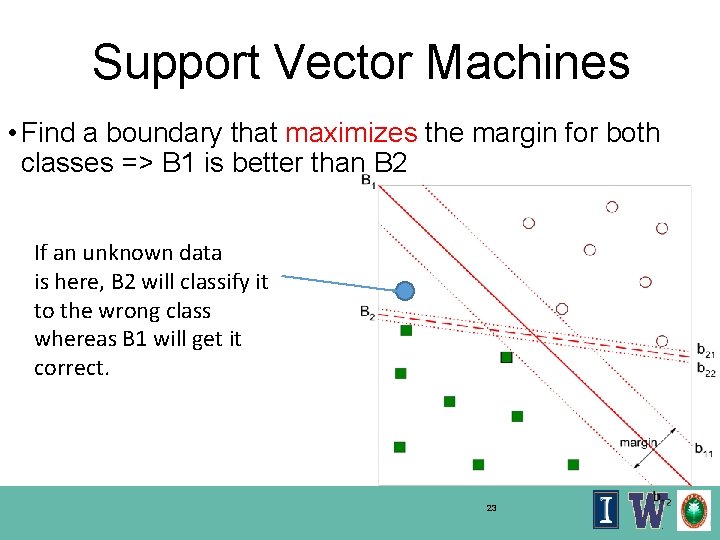

Support Vector Machines

- Find the boundary that maximizes the margin between the two classes: B1 is better than B2
- If an unknown data point falls here (see figure), B2 will assign it to the wrong class, whereas B1 will classify it correctly
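One standard way to make "maximize the margin" precise (the usual textbook formulation, not something the slides spell out): with class labels $y_i \in \{-1, +1\}$ and a linear boundary $\mathbf{w}\cdot\mathbf{x} + b = 0$ scaled so that the closest training points satisfy $|\mathbf{w}\cdot\mathbf{x} + b| = 1$, the margin width is $2/\lVert\mathbf{w}\rVert$. Maximizing the margin is therefore the same as minimizing $\lVert\mathbf{w}\rVert^2/2$:

```latex
\[
\text{margin} = \frac{2}{\lVert \mathbf{w} \rVert},
\qquad
\min_{\mathbf{w},\,b}\ \frac{1}{2}\lVert \mathbf{w} \rVert^{2}
\quad \text{subject to} \quad
y_i\,(\mathbf{w}\cdot\mathbf{x}_i + b) \ge 1 \quad \text{for all } i .
\]
```

The training examples for which the constraint holds with equality sit exactly on the margins; these are the support vectors.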

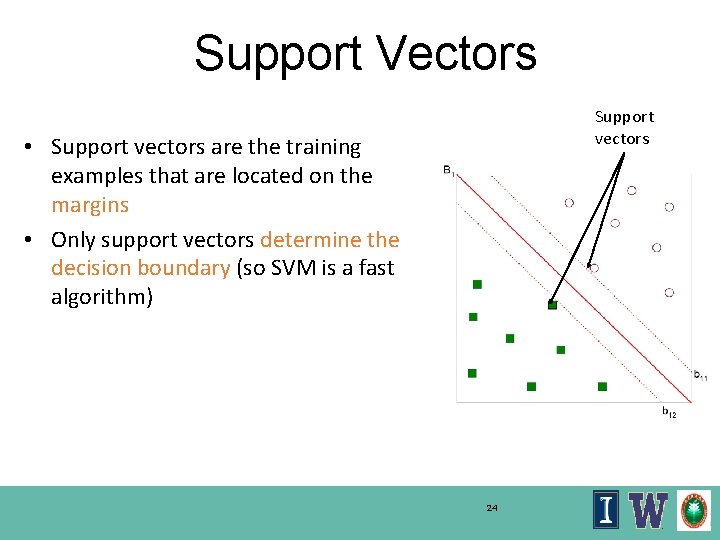

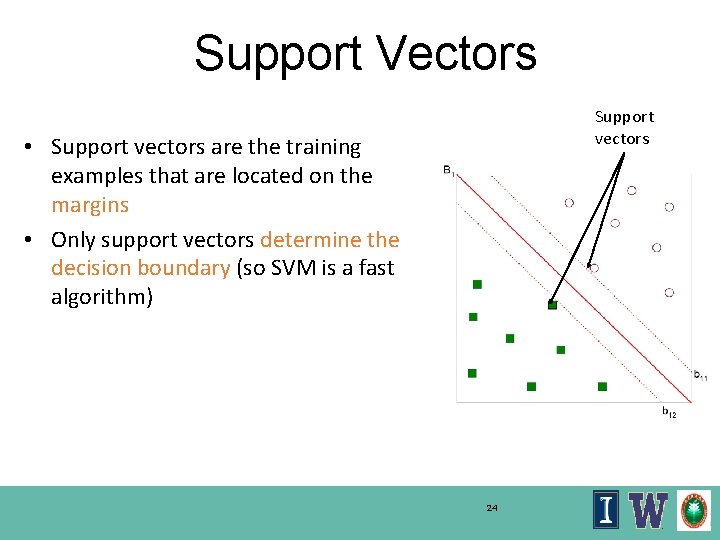

Support Vectors

- Support vectors are the training examples that lie on the margins
- Only the support vectors determine the decision boundary (so classifying a new example only requires the support vectors, not the whole training set)

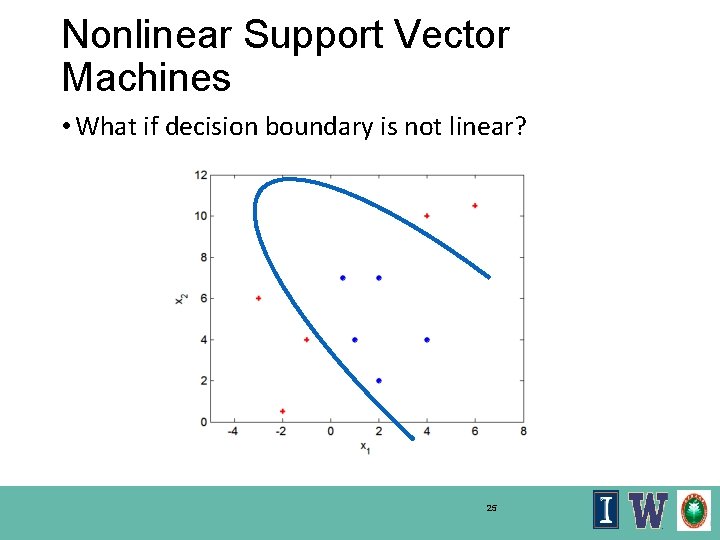

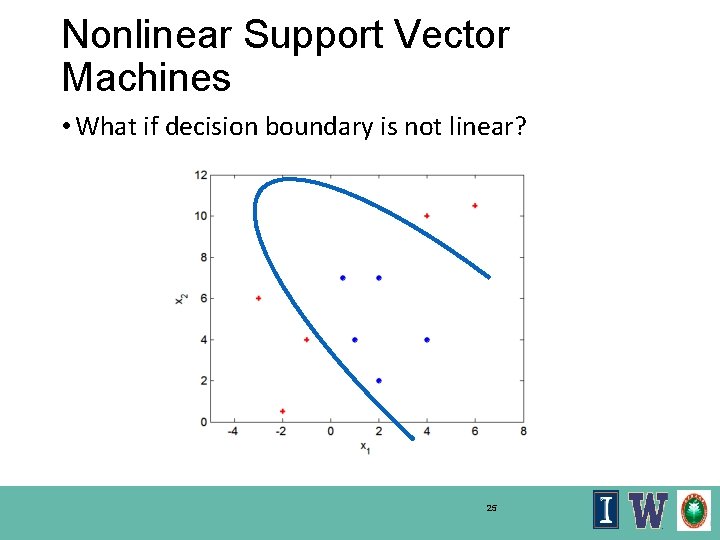

Nonlinear Support Vector Machines

- What if the decision boundary is not linear?

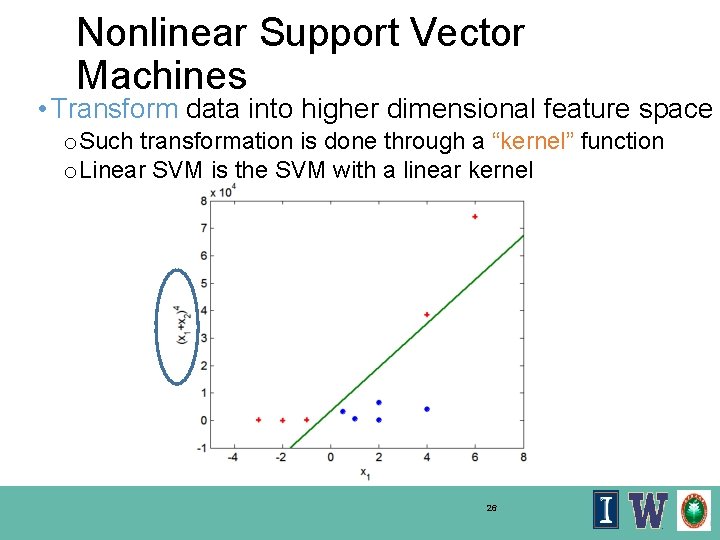

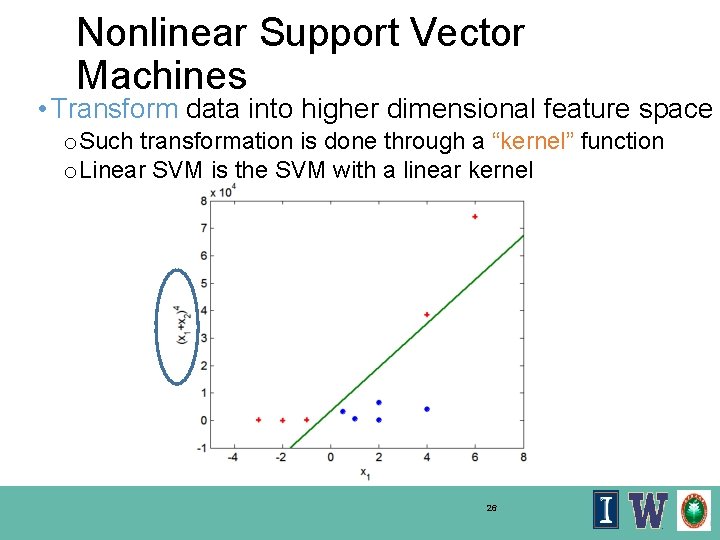

Nonlinear Support Vector Machines

- Transform the data into a higher-dimensional feature space
  - Such a transformation is done through a "kernel" function
  - A linear SVM is an SVM with a linear kernel
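Why does a kernel amount to working in a higher-dimensional space? The small check below uses the degree-2 polynomial kernel K(x, z) = (x·z)², which is one common choice; the slides do not name a specific kernel. For 2-D inputs, this kernel equals an ordinary dot product after mapping each point to 3-D with φ(x) = (x1², √2·x1·x2, x2²). The SVM therefore gets the benefit of the 3-D space without ever computing φ. The input points are arbitrary.

```python
import math


def phi(x):
    """Explicit map from a 2-D input point to a 3-D feature space."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def dot(u, v):
    """Ordinary dot product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def poly_kernel(x, z):
    """Degree-2 polynomial kernel, computed entirely in the original 2-D space."""
    return dot(x, z) ** 2


x, z = (1.0, 2.0), (3.0, -1.0)
print(poly_kernel(x, z))      # cheap: one 2-D dot product, then square
print(dot(phi(x), phi(z)))    # same value (up to rounding) via the 3-D mapping
```

For higher-degree or RBF kernels the implicit feature space gets much larger (infinite for RBF), which is exactly why computing K directly is preferred.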

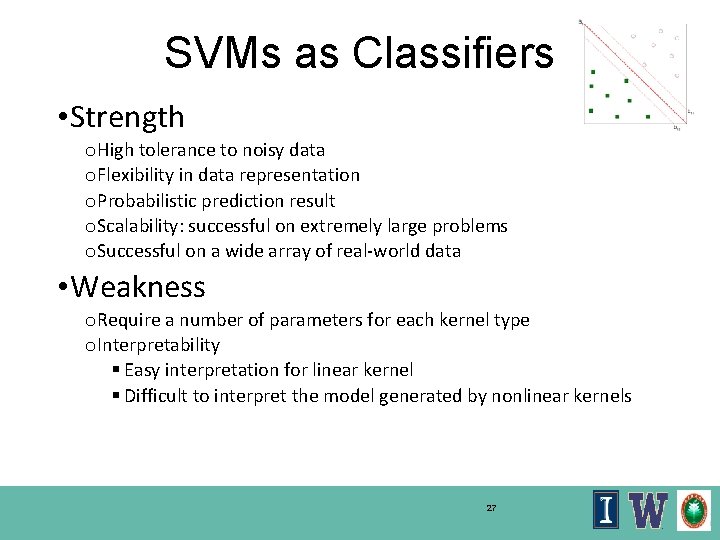

SVMs as Classifiers

- Strengths
  - High tolerance to noisy data
  - Flexibility in data representation
  - Probabilistic prediction results (obtained by calibrating the SVM output scores, e.g., with Platt scaling)
  - Scalability: successful on extremely large problems
  - Successful on a wide array of real-world data
- Weaknesses
  - Require a number of parameters for each kernel type
  - Interpretability
    - Easy interpretation for a linear kernel
    - Difficult to interpret the model generated by nonlinear kernels

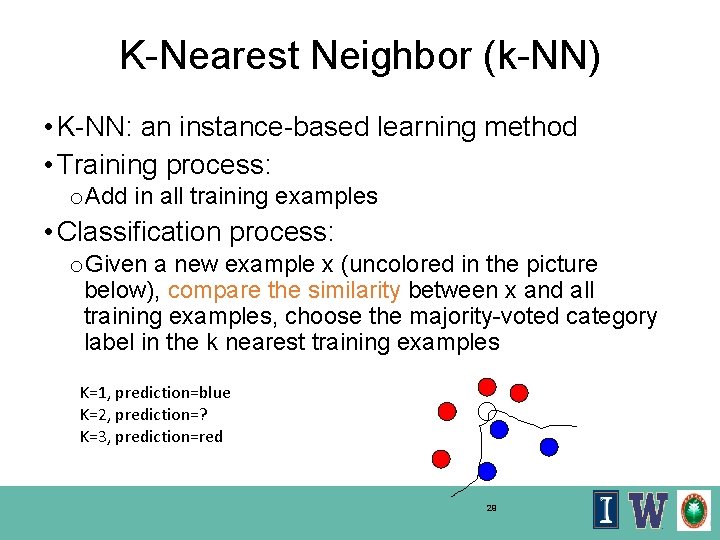

k-NN: Instance-based Learning

- Learning methods such as decision trees and SVMs construct a general, explicit target function when training examples are provided
- In contrast, instance-based learning methods simply store the training examples during the training process
- They are sometimes called "lazy" learners because they build no model (function) and delay processing the training data until new examples must be classified

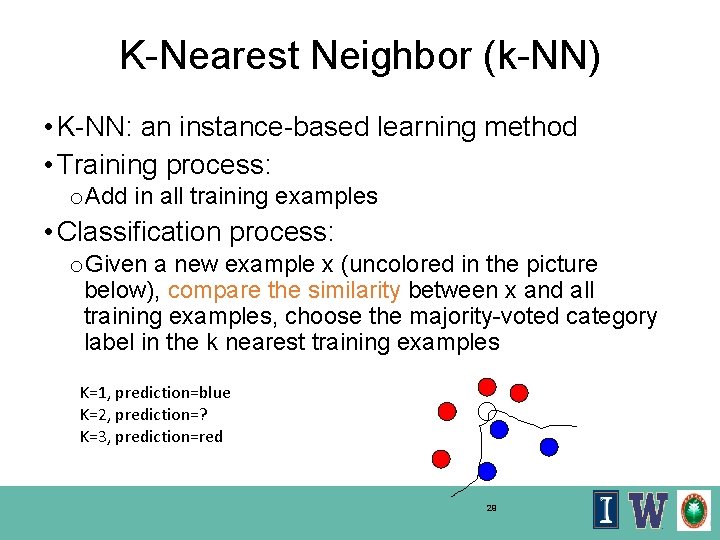

K-Nearest Neighbor (k-NN)

- k-NN: an instance-based learning method
- Training process:
  - Store all training examples
- Classification process:
  - Given a new example x (uncolored in the picture below), compute its similarity to every training example, then choose the majority-voted category label among the k nearest training examples

(Figure: K=1, prediction=blue; K=2, prediction=?; K=3, prediction=red)
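The training and classification steps above fit in a few lines. This is a minimal Python sketch, assuming Euclidean distance as the similarity measure and a simple tie-break rule of our own choosing. The 2-D points and their labels are hypothetical, picked so that k = 1 and k = 3 disagree in the same way as in the slide's picture.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def knn_predict(train, x, k):
    """Return the majority label among the k training examples nearest to x.

    `train` is a list of (feature_vector, label) pairs; "training" a k-NN
    model is nothing more than keeping this list around.
    """
    # Classification time: measure the distance from x to every stored example
    neighbors = sorted(train, key=lambda item: euclidean(item[0], x))[:k]
    votes = Counter(label for _, label in neighbors)
    # most_common lists equal counts in first-seen order, so a tied vote
    # goes to the label of the nearer neighbor (one simple tie-break rule)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D training data, loosely mimicking the slide's picture
train = [
    ((1.0, 1.0), "blue"),
    ((1.5, 2.0), "red"),
    ((2.0, 1.6), "red"),
    ((5.0, 5.0), "blue"),
]
x = (1.2, 1.2)
for k in (1, 2, 3):
    print(k, knn_predict(train, x, k))
```

With k = 2 the vote is one blue against one red, which is the "prediction=?" case on the slide. This is why odd values of k are often preferred for two-class problems.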

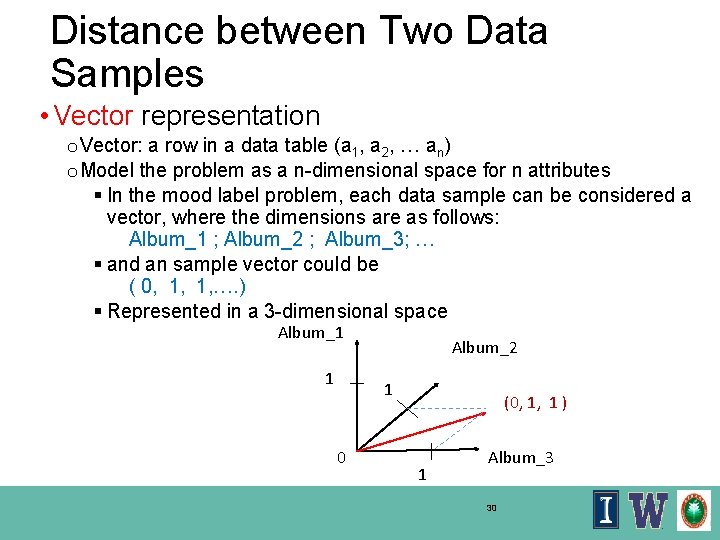

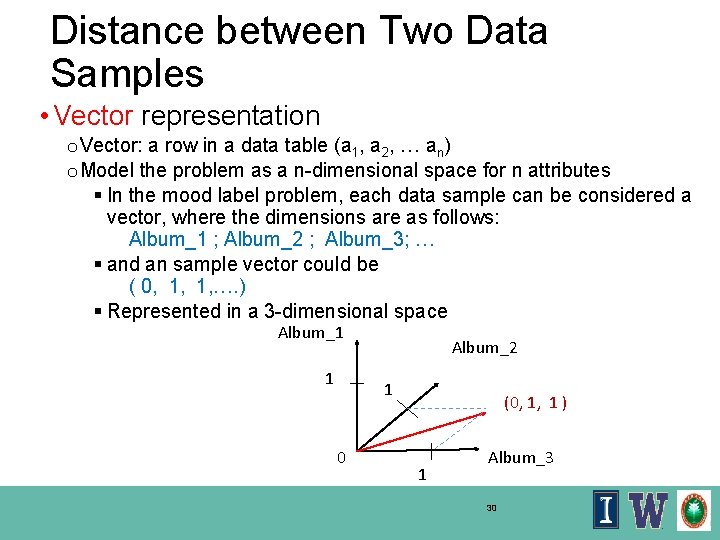

Distance between Two Data Samples

- Vector representation
  - Vector: a row in a data table (a1, a2, …, an)
  - Model the problem as an n-dimensional space for n attributes
    - In the mood label problem, each data sample can be considered a vector whose dimensions are Album_1; Album_2; Album_3; …
    - A sample vector could be (0, 1, …)
    - Represented in a 3-dimensional space

(Figure: axes Album_1, Album_2, and Album_3, with the sample point (0, 1, 1) plotted)

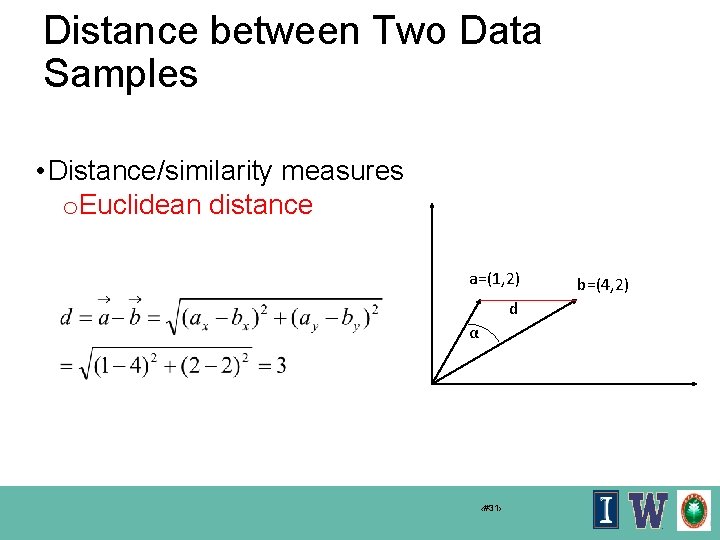

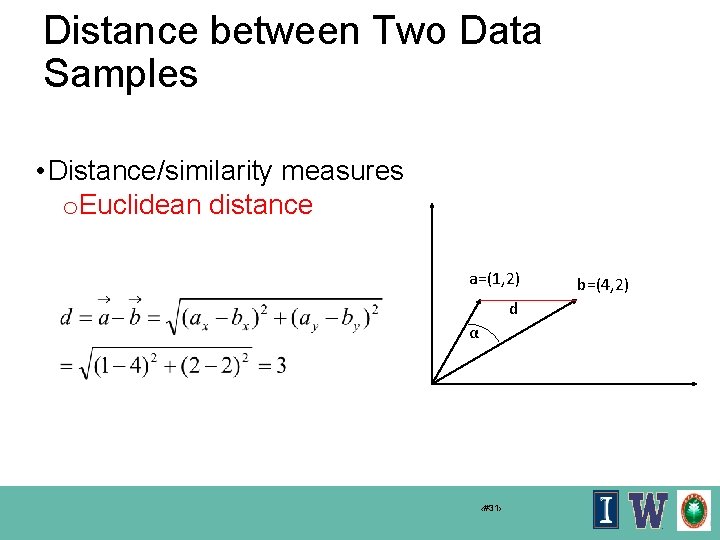

Distance between Two Data Samples

- Distance/similarity measures
  - Euclidean distance

(Figure: points a = (1, 2) and b = (4, 2), with the distance d between them and the angle α)
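Here is a worked version for the two points on the slide, a = (1, 2) and b = (4, 2). The first line is the Euclidean distance. The second line assumes that the α in the figure denotes the angle between the two vectors, as used by cosine similarity; that reading of the figure is an assumption.

```latex
\[
d(\mathbf{a},\mathbf{b}) = \sqrt{\sum_{i=1}^{n} (a_i - b_i)^2}
\quad\Rightarrow\quad
d\big((1,2),(4,2)\big) = \sqrt{(1-4)^2 + (2-2)^2} = \sqrt{9} = 3
\]
\[
\cos\alpha = \frac{\mathbf{a}\cdot\mathbf{b}}{\lVert\mathbf{a}\rVert\,\lVert\mathbf{b}\rVert}
= \frac{1\cdot 4 + 2\cdot 2}{\sqrt{5}\,\sqrt{20}} = \frac{8}{10} = 0.8
\]
```

Euclidean distance grows with the gap between points. Cosine similarity ignores vector length and only measures direction, which is why the two are used for different kinds of features.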

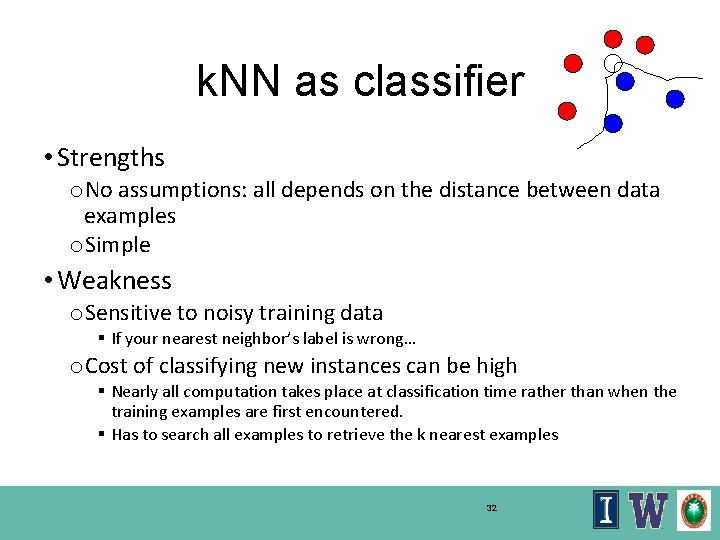

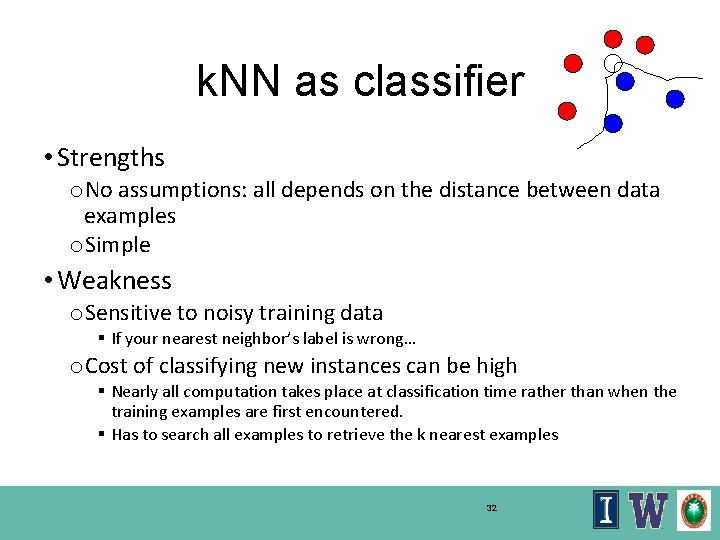

k-NN as a Classifier

- Strengths
  - No assumptions: everything depends on the distance between data examples
  - Simple
- Weaknesses
  - Sensitive to noisy training data
    - If your nearest neighbor's label is wrong…
  - Cost of classifying new instances can be high
    - Nearly all computation takes place at classification time rather than when the training examples are first encountered
    - Has to search all examples to retrieve the k nearest examples

Clustering, with Try-at-Home Bonus: The Iris Dataset

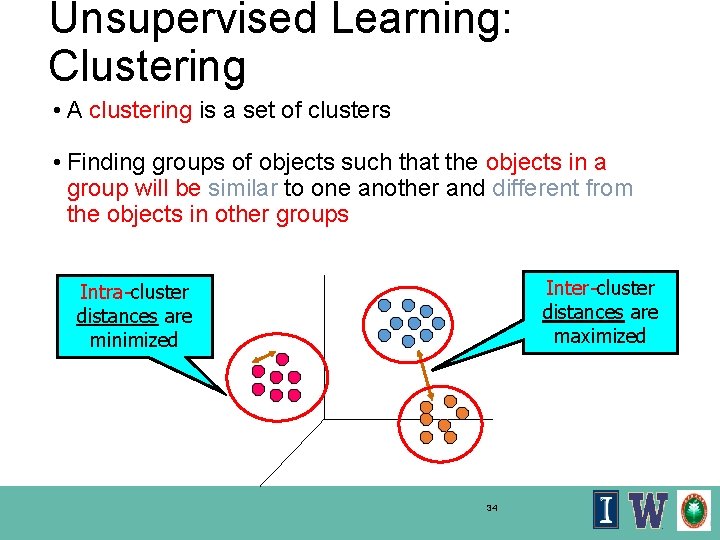

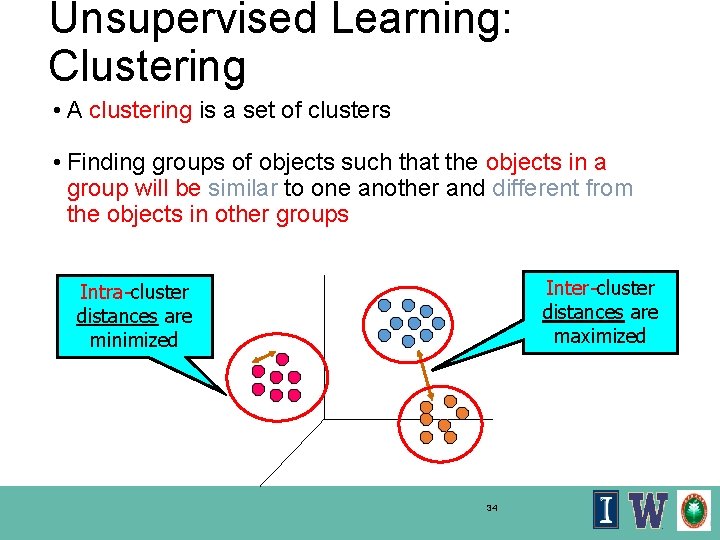

Unsupervised Learning: Clustering

- A clustering is a set of clusters
- Finding groups of objects such that the objects in a group are similar to one another and different from the objects in other groups

(Figure: intra-cluster distances are minimized; inter-cluster distances are maximized)

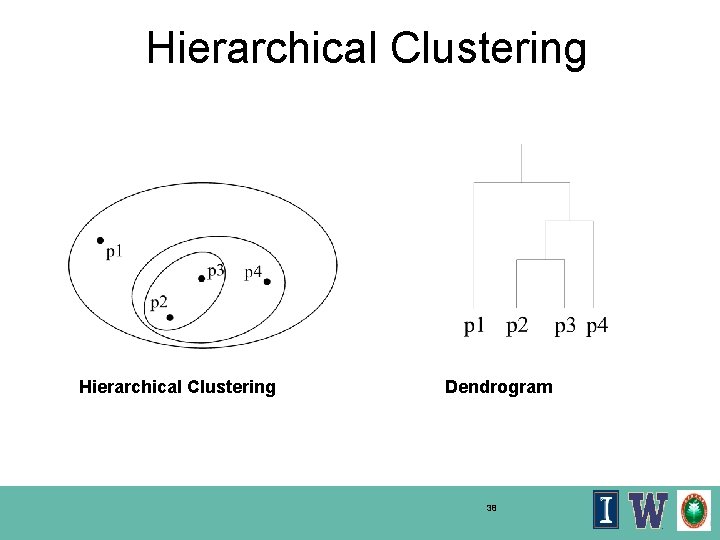

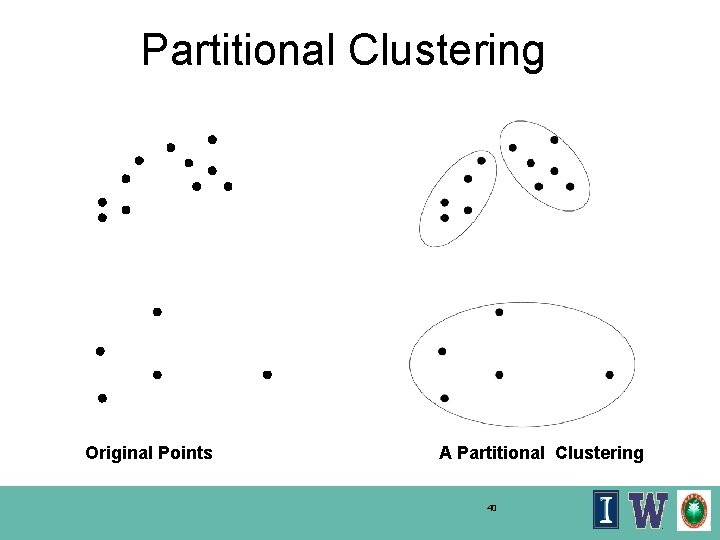

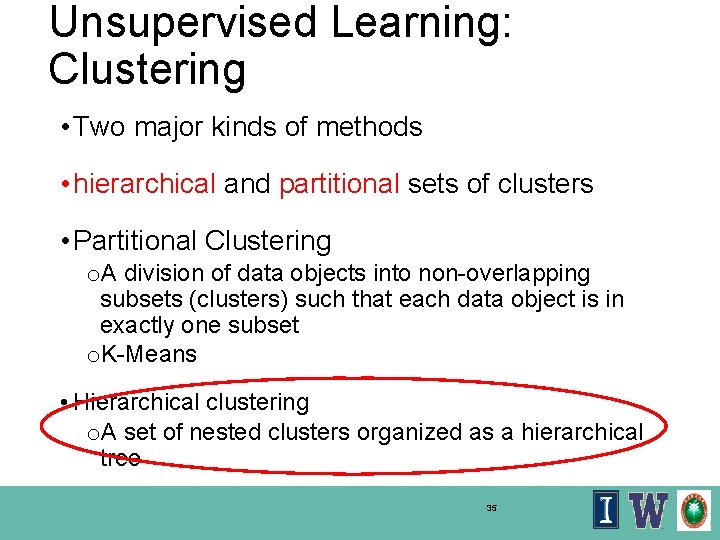

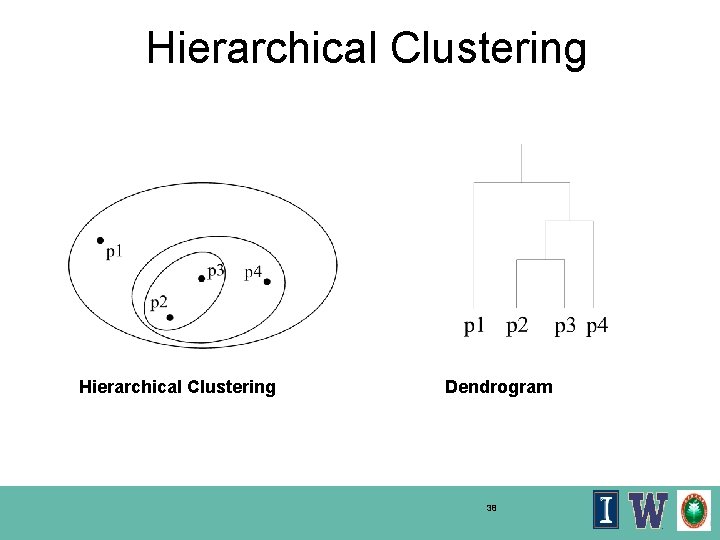

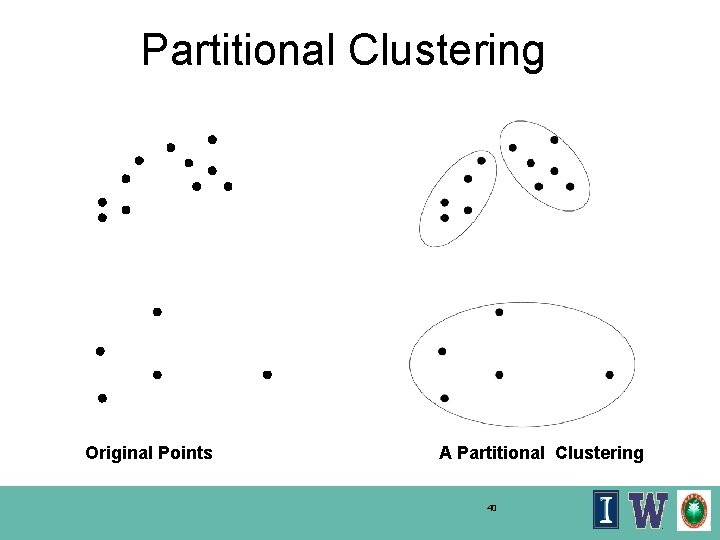

Unsupervised Learning: Clustering

- Two major kinds of methods: hierarchical and partitional sets of clusters
- Partitional clustering
  - A division of data objects into non-overlapping subsets (clusters) such that each data object is in exactly one subset
  - K-Means
- Hierarchical clustering
  - A set of nested clusters organized as a hierarchical tree
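K-Means is the classic partitional method. This is a minimal Python sketch of the standard version (Lloyd's algorithm), assuming Euclidean distance and starting centroids supplied by the caller. Real tools such as Weka's SimpleKMeans choose the initial centroids themselves, and their options are not reproduced here. The six points are hypothetical. `math.dist` needs Python 3.8 or later.

```python
import math


def kmeans(points, centroids, iterations=10):
    """Plain k-means: alternate assignment and update steps until stable."""
    centroids = list(centroids)
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its member points
        new_centroids = []
        for j, members in enumerate(clusters):
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # empty cluster: leave centroid in place
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return centroids, clusters


# Hypothetical 2-D data with two obvious groups
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, clusters = kmeans(points, [(1.0, 1.0), (8.0, 8.0)])
print(centroids)
print(clusters)
```

Each point ends up in exactly one cluster, which is the defining property of a partitional clustering. The result can depend on the starting centroids, so tools usually try several random starts.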

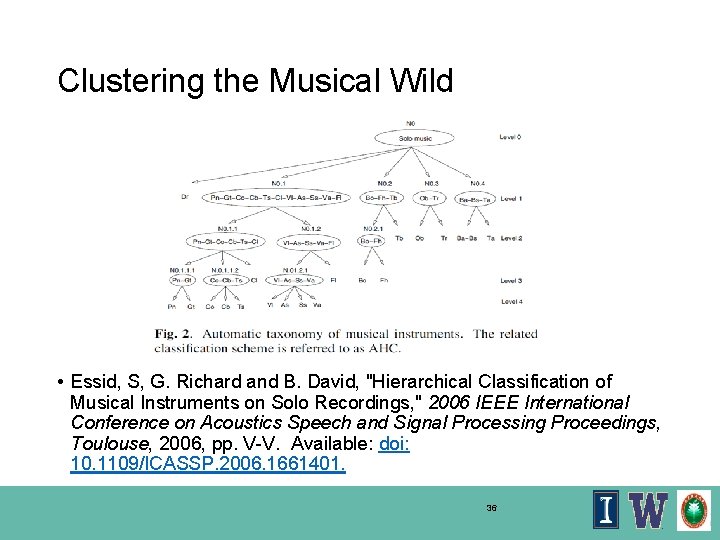

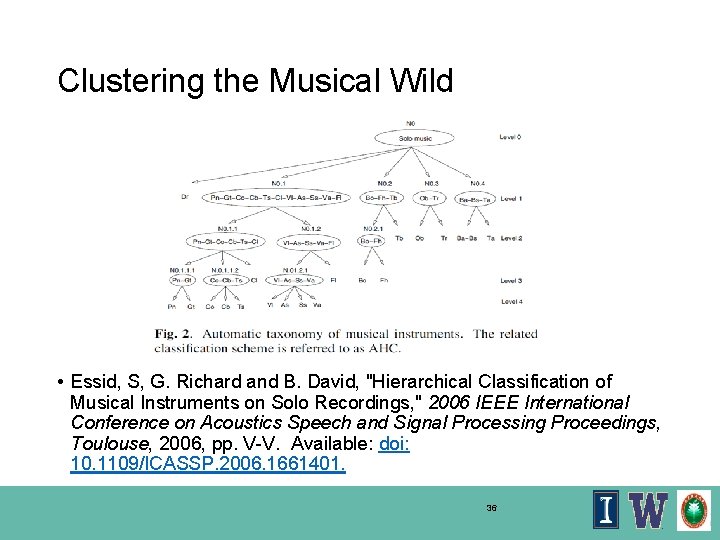

Clustering the Musical Wild

- Essid, S., G. Richard, and B. David (2006). "Hierarchical Classification of Musical Instruments on Solo Recordings." 2006 IEEE International Conference on Acoustics, Speech and Signal Processing Proceedings, Toulouse, pp. V-V. doi: 10.1109/ICASSP.2006.1661401.

Try-at-Home Clustering Example

- Famous Iris dataset
  - Used in every ML class
  - Small and easy to understand
- Data examples and helpful handouts:
  - https://goo.gl/mF79uT
  - downie_ML_oxford_2017_iris_handouts.pdf
  - weka_hands_on_key_classification.pdf

Hierarchical Clustering Dendrogram

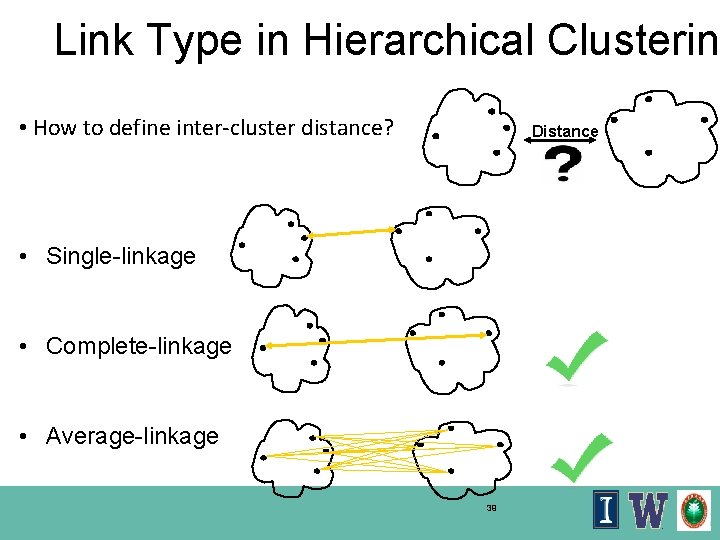

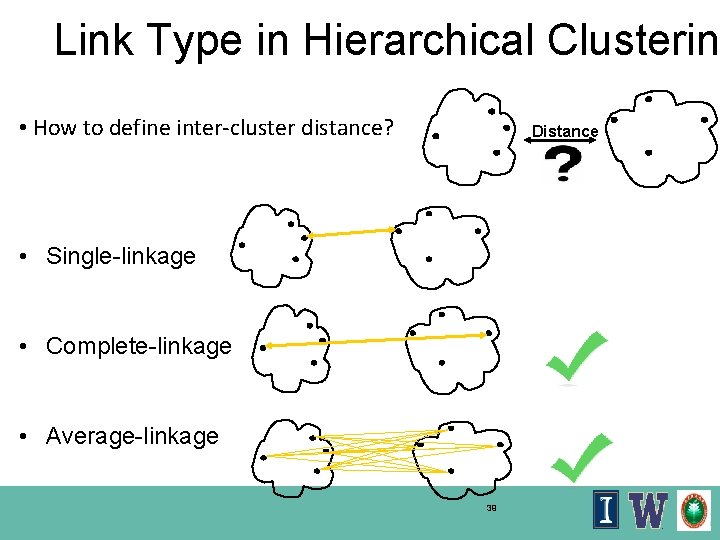

Link Types in Hierarchical Clustering

- How to define inter-cluster distance?
  - Single-linkage
  - Complete-linkage
  - Average-linkage
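The three link types differ only in how they collapse all point-to-point distances between two clusters into one number. Single-linkage uses the closest pair, complete-linkage uses the farthest pair, and average-linkage uses the mean over all pairs. Here is a minimal Python sketch, assuming Euclidean distance between points; the two small clusters are hypothetical.

```python
import math
from itertools import product


def single_linkage(c1, c2):
    """Distance between the two closest members of the clusters."""
    return min(math.dist(p, q) for p, q in product(c1, c2))


def complete_linkage(c1, c2):
    """Distance between the two farthest members of the clusters."""
    return max(math.dist(p, q) for p, q in product(c1, c2))


def average_linkage(c1, c2):
    """Mean distance over every cross-cluster pair of points."""
    pairs = list(product(c1, c2))
    return sum(math.dist(p, q) for p, q in pairs) / len(pairs)


# Hypothetical clusters on a line: A = {0, 1}, B = {4, 6}
A = [(0.0, 0.0), (1.0, 0.0)]
B = [(4.0, 0.0), (6.0, 0.0)]
print(single_linkage(A, B), complete_linkage(A, B), average_linkage(A, B))
```

An agglomerative method repeatedly merges the pair of clusters with the smallest linkage value, so the choice of link type changes the shape of the resulting dendrogram. Single-linkage tends to produce long "chained" clusters, and complete-linkage tends to produce compact ones.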

Partitional Clustering

(Figure panels: Original Points; A Partitional Clustering)

Clustering in WEKA

- WEKA contains multiple common clustering schemes:
  - k-Means, EM, hierarchical clustering
- Visualization of results
  - Clusters can be visualized and compared to "true" clusters (if given)
- First step: load in the data

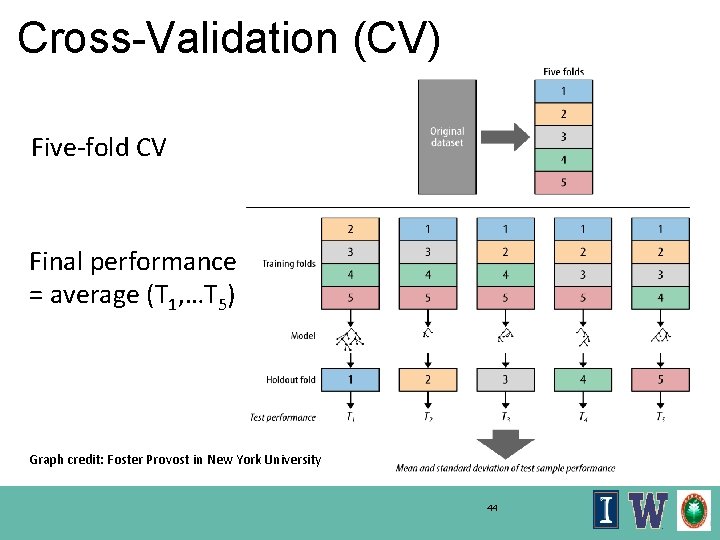

Experimentation

- N-fold cross-validation
  - It is not "fair" to train a model and then test it on the same data
  - Need separate TRAIN and TEST data
- Problem: groundtruth data can be hard to create, which limits the amount available for training and then testing
- Cross-validation splits one collection into n distinct subsets (folds)
- A model is built with one of the subsets held back for testing
- A different subset is held back in each of the n folds
- Results are averaged
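The procedure above can be sketched in plain Python. The sketch shuffles the item indices once, deals them into n folds, holds back each fold in turn as TEST, and averages the per-fold scores. `train_and_score` is a hypothetical callback standing in for "train a classifier on these items, return its accuracy on those". The split is not stratified, whereas Weka stratifies its folds by class by default.

```python
import random


def k_fold_indices(n_items, k, seed=0):
    """Shuffle item indices once, then deal them into k nearly equal folds."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(n_items, k, train_and_score, seed=0):
    """Hold back each fold once as TEST, train on the rest, average the k scores.

    train_and_score(train_idx, test_idx) is a hypothetical, user-supplied
    function that trains a model on train_idx and returns its score on test_idx.
    """
    folds = k_fold_indices(n_items, k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        # Every item outside the held-back fold is used for TRAIN
        train_idx = [j for m, fold in enumerate(folds) if m != i for j in fold]
        scores.append(train_and_score(train_idx, test_idx))
    return sum(scores) / len(scores), scores


# 15 items and 3 folds, as in the mood example later in the deck
folds = k_fold_indices(15, 3)
for i, fold in enumerate(folds, start=1):
    print(f"Fold #{i}: TEST items {sorted(fold)}")

# Placeholder scorer: a real one would fit a classifier and return its accuracy
mean_score, per_fold = cross_validate(15, 3, lambda train_idx, test_idx: len(test_idx) / 15)
print(mean_score, per_fold)
```

Every item is tested exactly once and never appears in the TRAIN set of its own fold. That is what makes the averaged score a fair estimate even when groundtruth is scarce.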

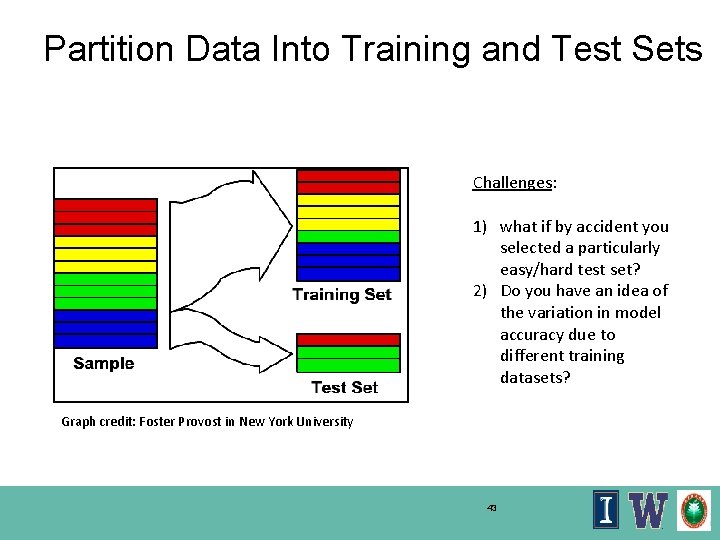

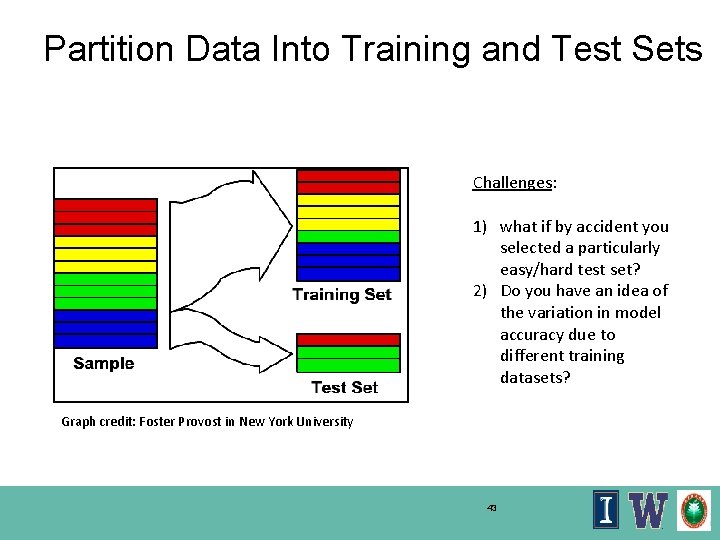

Partition Data Into Training and Test Sets

Challenges:
1) What if you accidentally selected a particularly easy or hard test set?
2) How much does model accuracy vary with different training datasets?

Graph credit: Foster Provost, New York University

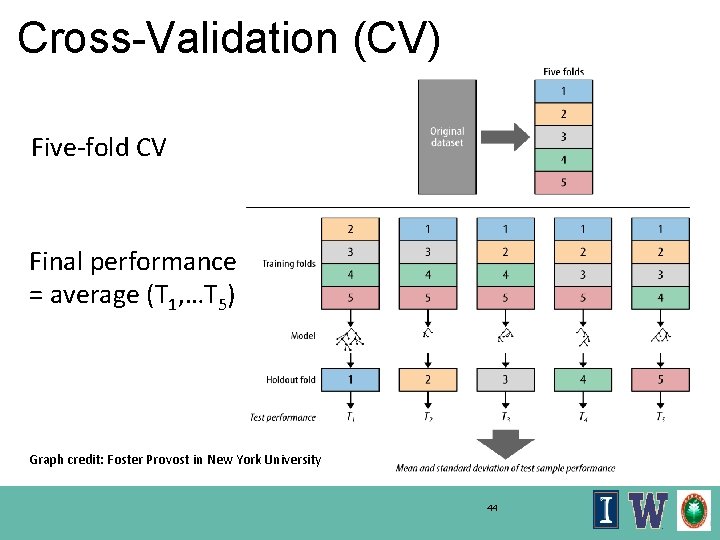

Cross-Validation (CV)

Five-fold CV: final performance = average(T1, …, T5)

Graph credit: Foster Provost, New York University

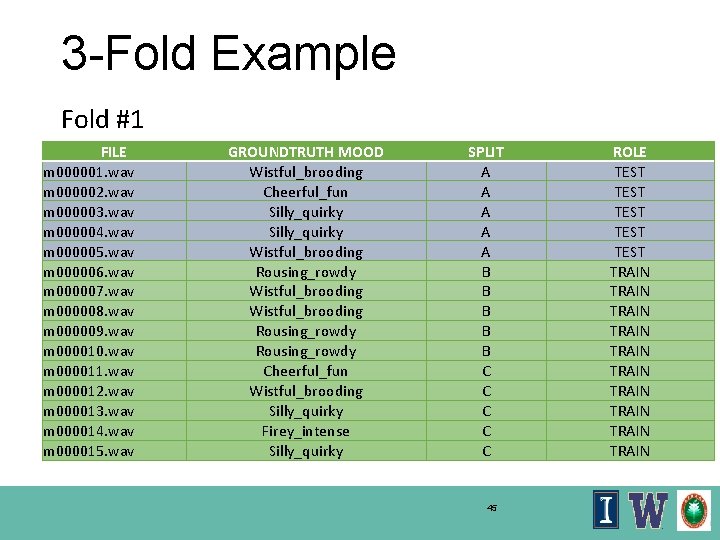

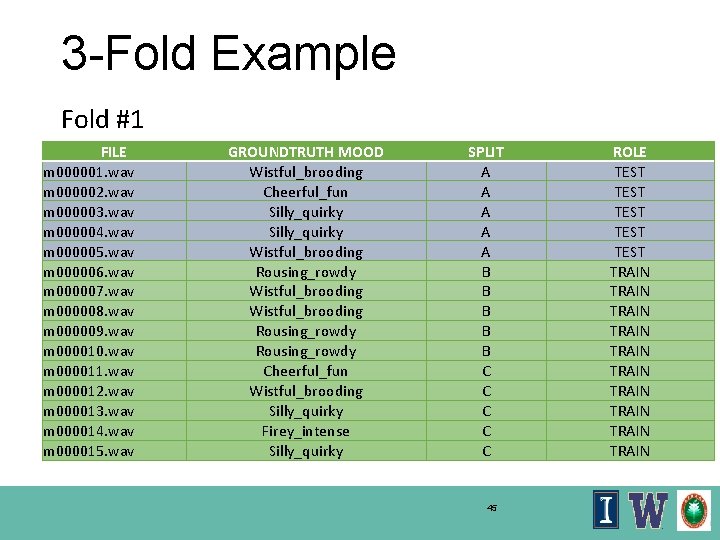

3-Fold Example: Fold #1

(Table: FILE m000001.wav through m000015.wav, each with a GROUNDTRUTH MOOD label such as Wistful_brooding, Cheerful_fun, Silly_quirky, Rousing_rowdy, or Firey_intense, a SPLIT (A, B, or C), and a ROLE for this fold.)

| SPLIT | ROLE |
|---|---|
| A | TEST |
| B | TRAIN |
| C | TRAIN |

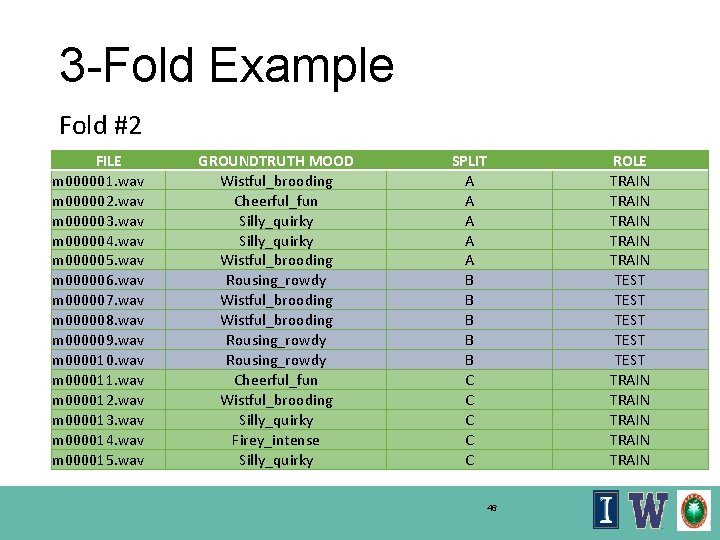

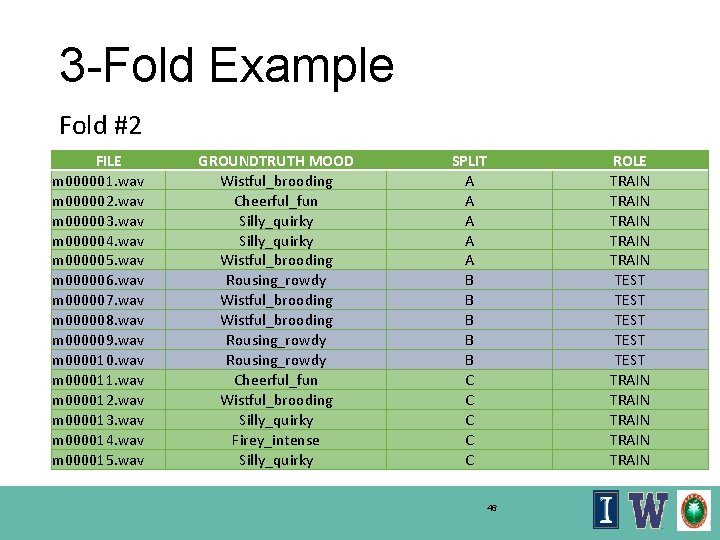

3-Fold Example: Fold #2

(Table: same files, GROUNDTRUTH MOOD labels, and SPLIT assignments as in Fold #1, with the ROLE for this fold.)

| SPLIT | ROLE |
|---|---|
| A | TRAIN |
| B | TEST |
| C | TRAIN |

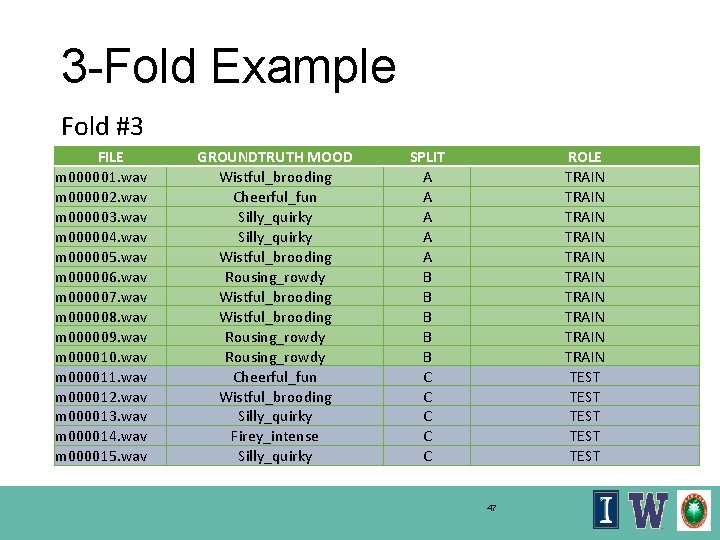

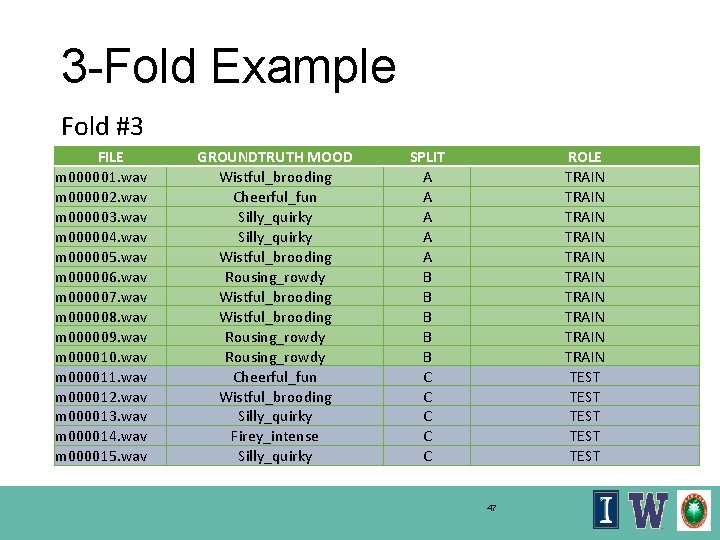

3-Fold Example: Fold #3

(Table: same files, GROUNDTRUTH MOOD labels, and SPLIT assignments as in Fold #1, with the ROLE for this fold.)

| SPLIT | ROLE |
|---|---|
| A | TRAIN |
| B | TRAIN |
| C | TEST |

About Our Tool

- Weka
  - Machine Learning Group at the University of Waikato, Hamilton, New Zealand
  - http://www.cs.waikato.ac.nz/ml/weka/
  - Has an EXCELLENT associated book
    - Witten, I., E. Frank, and M. Hall (2011). Data Mining: Practical Machine Learning Tools and Techniques, Third Edition. New York: Morgan Kaufmann.
    - http://www.amazon.com/exec/obidos/ASIN/0123748569/departmofcompute

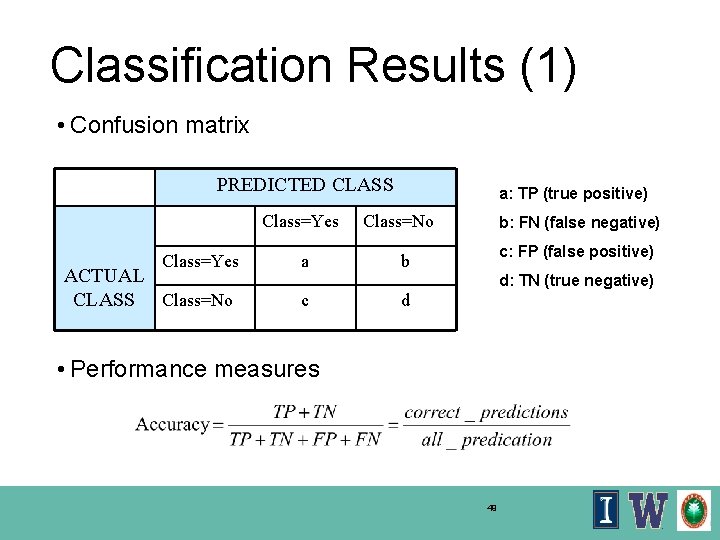

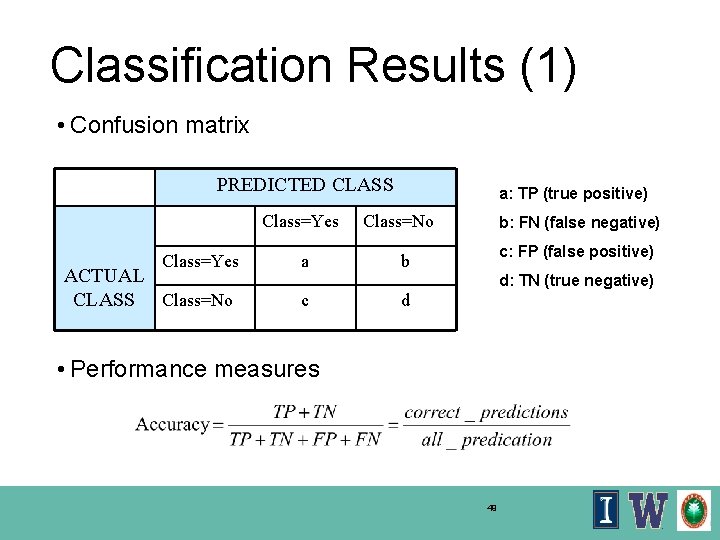

Classification Results (1)

- Confusion matrix

| | PREDICTED Class=Yes | PREDICTED Class=No |
|---|---|---|
| ACTUAL Class=Yes | a: TP (true positive) | b: FN (false negative) |
| ACTUAL Class=No | c: FP (false positive) | d: TN (true negative) |

- Performance measures
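The slide names "performance measures" without listing them. The sketch below computes the most common ones from the four cells a to d of the confusion matrix: accuracy, precision, recall, and the F-measure. These are the standard textbook definitions, assumed here rather than taken from the slide. The counts are made up, and returning 0.0 when a denominator is zero is just one convention.

```python
def performance(tp, fn, fp, tn):
    """Standard measures from a 2x2 confusion matrix (a=TP, b=FN, c=FP, d=TN)."""
    total = tp + fn + fp + tn
    accuracy = (tp + tn) / total                         # (a + d) / (a + b + c + d)
    precision = tp / (tp + fp) if tp + fp else 0.0       # a / (a + c)
    recall = tp / (tp + fn) if tp + fn else 0.0          # a / (a + b)
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)                # harmonic mean of P and R
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts for a Yes/No classifier tested on 100 items
print(performance(tp=40, fn=10, fp=20, tn=30))
```

Accuracy alone can look good on unbalanced data (e.g., when "No" is very common), which is why precision and recall for the class of interest are usually reported alongside it.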

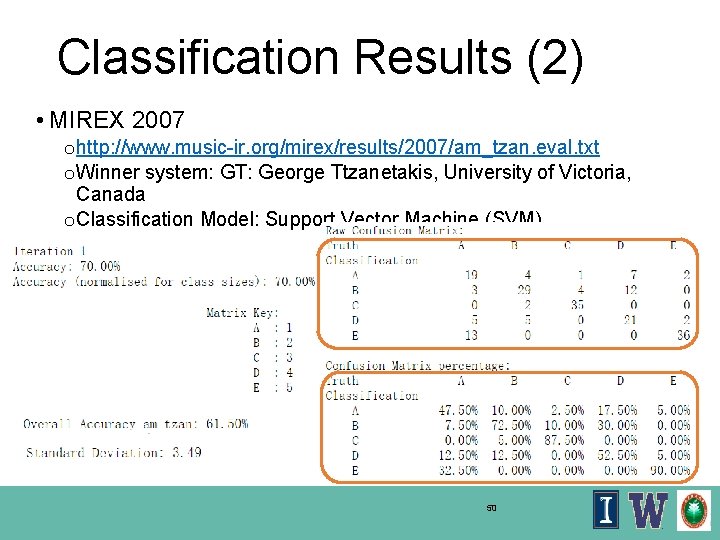

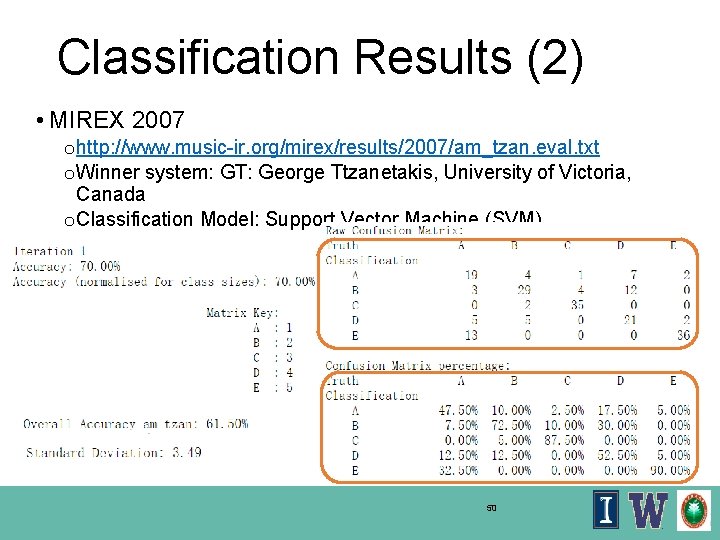

Classification Results (2)

- MIREX 2007
  - http://www.music-ir.org/mirex/results/2007/am_tzan.eval.txt
  - Winning system: GT (George Tzanetakis, University of Victoria, Canada)
  - Classification model: Support Vector Machine (SVM)

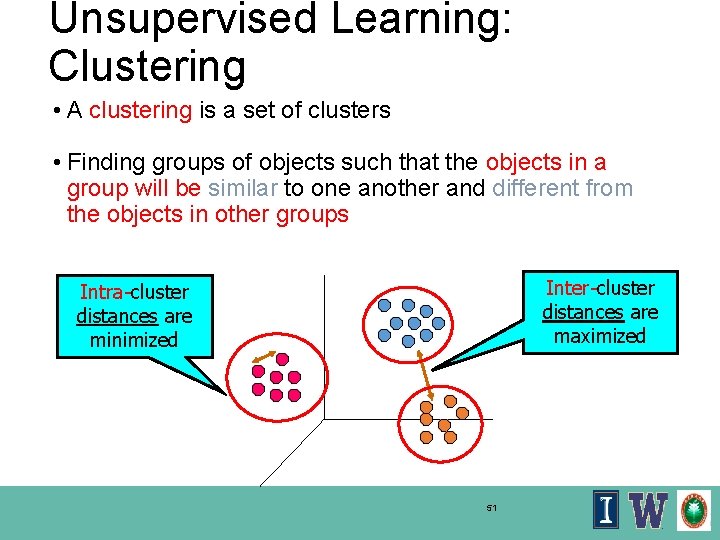

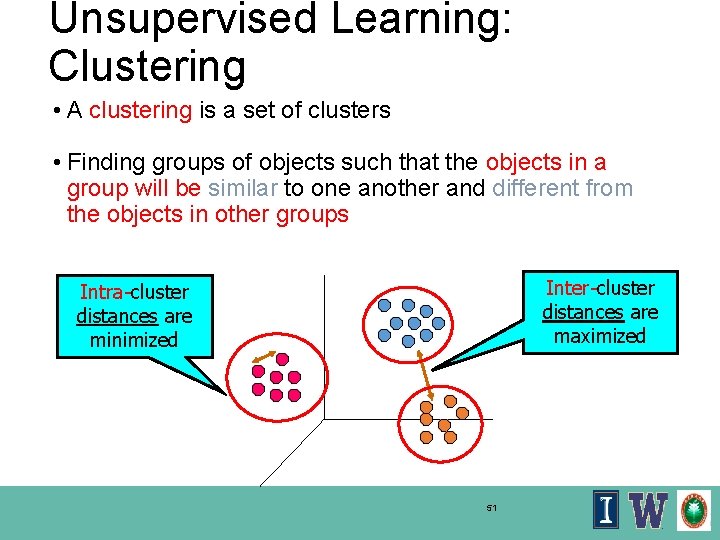


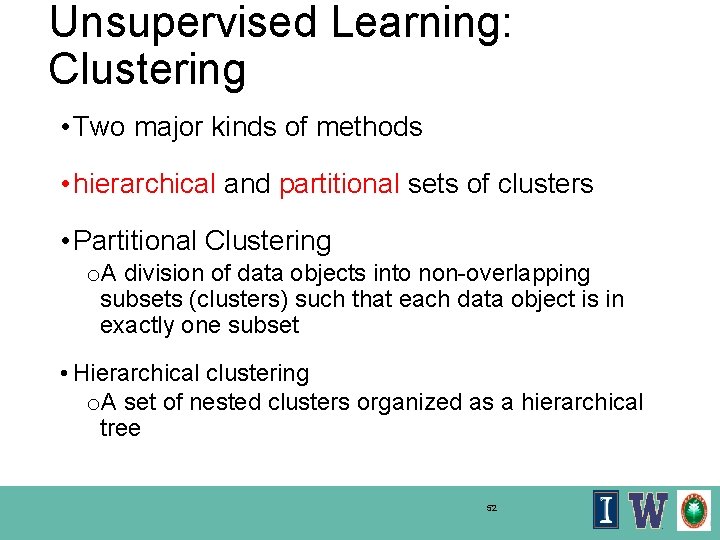


Most Important Takeaways

- Be fearless and have fun
- Think spreadsheet for your data
  - A row for each thing in the world you are interested in
  - One column to label the things in the world
  - X number of columns, one for each FEATURE
  - For SUPERVISED ML, one more column with the GROUNDTRUTH
- No magic feature set
- More data is better!
- Use n-fold cross-validation to separate TRAIN and TEST data
- Supervised vs. unsupervised
- Stay tuned for the lab with more interesting cases