Queue Scheduling Disciplines Ref White Paper from Juniper

- Slides: 31

Queue Scheduling Disciplines. Ref: White Paper from Juniper, “Supporting Differentiated Service Classes: Queue Scheduling Disciplines” by Chuck Semeria

What does a queue scheduling discipline do? An ideal queue scheduling discipline should provide:
- Fair distribution of bandwidth to each of the different service classes.
- Protection between different service classes on an output port.
- The ability for a service class to use the unused bandwidth of another service class.
- An algorithm that can be implemented in hardware.

Classic queue scheduling disciplines:
- First-In, First-Out Queuing (FIFO)
- Priority Queuing (PQ)
- Fair Queuing (FQ)
- Weighted Fair Queuing (WFQ)
- Weighted Round Robin Queuing (WRR)
- Deficit Weighted Round Robin Queuing (DWRR)

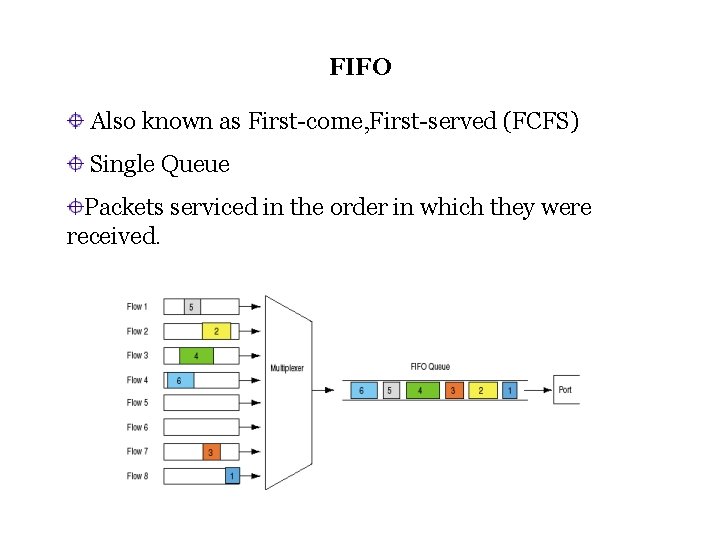

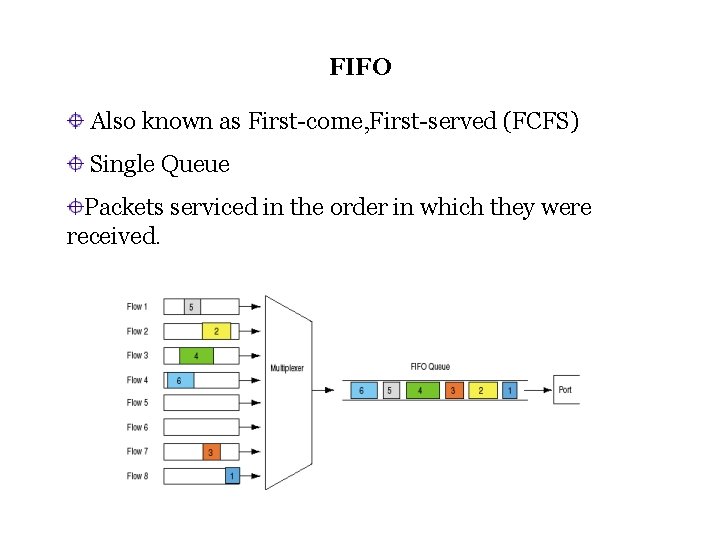

FIFO, also known as First-Come, First-Served (FCFS), uses a single queue: packets are serviced in the order in which they were received.

Benefits:
- Extremely low computational load.
- The behavior of the queue is very predictable: the maximum delay is determined by the maximum depth of the queue.
- As long as the queue depth remains short, FIFO provides simple contention resolution for network resources without adding significant queuing delay at each hop.
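The predictability claim can be made concrete with a little arithmetic: a packet arriving at a full FIFO queue must wait for every byte already queued to be serialized onto the link. The sketch below assumes the queue depth is measured in bytes and the link rate in bits per second; the function name is hypothetical.

```python
def fifo_max_delay_s(queue_depth_bytes: int, link_rate_bps: float) -> float:
    """Worst-case queuing delay for a packet that arrives at a full FIFO queue.

    Every byte queued ahead of the packet must be transmitted first,
    so the bound is the queue depth (in bits) divided by the link rate.
    """
    return queue_depth_bytes * 8 / link_rate_bps


# Example: 100 full-size 1500-byte packets queued on a 1 Gb/s link.
delay = fifo_max_delay_s(100 * 1500, 1e9)
print(f"max queuing delay: {delay * 1e3:.2f} ms")  # max queuing delay: 1.20 ms
```

A deeper queue absorbs larger bursts but raises this bound proportionally, which is why FIFO works best when the queue stays short.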

Limitations:
- A single FIFO queue does not allow packets to be organized, so packets of one service class cannot be distinguished from those of another.
- It affects all flows equally: mean queuing delay increases for every flow as congestion increases, which makes FIFO unsuitable for real-time applications.
- During congestion, UDP flows are favored over TCP flows: TCP flows back off and may experience increased delay, jitter, and a reduction in the bandwidth they consume, while UDP flows do not back off.
- A bursty flow can consume the entire buffer space and deny service to other flows.
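The last limitation can be reproduced with a toy model. This is a minimal sketch assuming a FIFO with a fixed buffer measured in packets and tail drop (arrivals are discarded when the buffer is full); the class name `TailDropFifo` and the flow labels are hypothetical.

```python
from collections import deque


class TailDropFifo:
    """A single FIFO queue with a fixed buffer that drops arrivals when full."""

    def __init__(self, capacity_pkts: int):
        self.capacity = capacity_pkts
        self.queue = deque()
        self.dropped = {}  # flow id -> number of dropped packets

    def enqueue(self, flow: str, pkt_id: int) -> bool:
        if len(self.queue) >= self.capacity:
            self.dropped[flow] = self.dropped.get(flow, 0) + 1
            return False
        self.queue.append((flow, pkt_id))
        return True

    def dequeue(self):
        # Packets leave strictly in arrival order, regardless of flow.
        return self.queue.popleft() if self.queue else None


fifo = TailDropFifo(capacity_pkts=8)
# A bursty flow "A" fills the whole buffer before flow "B" sends anything...
for i in range(8):
    fifo.enqueue("A", i)
# ...so every packet flow "B" offers is dropped until the burst drains.
for i in range(3):
    fifo.enqueue("B", i)
print(fifo.dropped)  # {'B': 3}
```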

Implementations: FIFO is generally used on an output port only when no other queue scheduling discipline is supported.

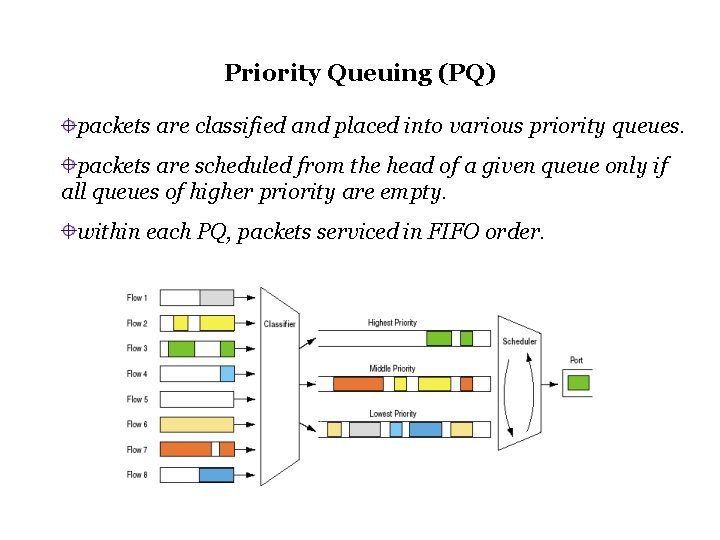

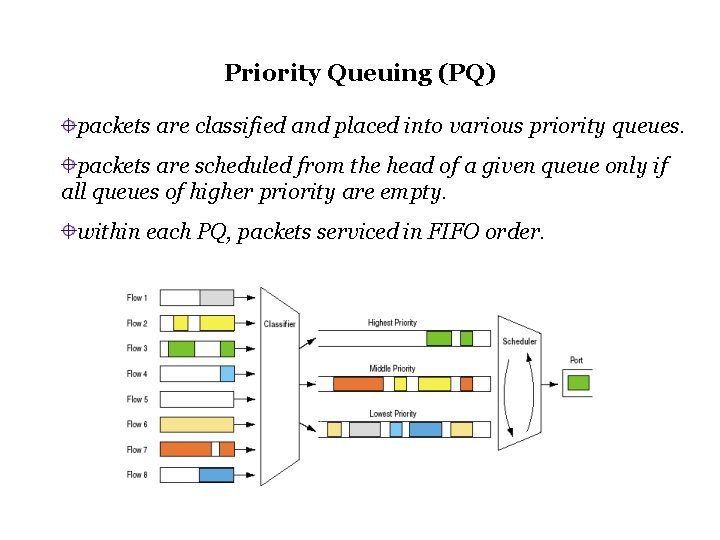

Priority Queuing (PQ): packets are classified and placed into different priority queues. Packets are scheduled from the head of a given queue only if all queues of higher priority are empty. Within each priority queue, packets are serviced in FIFO order.
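The strict-priority dequeue rule can be sketched in a few lines. This assumes queue 0 is the highest priority and that packets are arbitrary objects; the class name is hypothetical.

```python
from collections import deque


class StrictPriorityScheduler:
    """Strict priority queuing: queue 0 is the highest priority."""

    def __init__(self, num_queues: int):
        self.queues = [deque() for _ in range(num_queues)]

    def enqueue(self, priority: int, pkt) -> None:
        self.queues[priority].append(pkt)

    def dequeue(self):
        # Serve the head of the highest-priority non-empty queue; a lower
        # queue is examined only when every higher queue is empty.
        for q in self.queues:
            if q:
                return q.popleft()  # FIFO order within a priority level
        return None


pq = StrictPriorityScheduler(num_queues=2)
pq.enqueue(1, "low-1")
pq.enqueue(0, "high-1")
pq.enqueue(0, "high-2")
print([pq.dequeue() for _ in range(3)])  # ['high-1', 'high-2', 'low-1']
```

If high-priority packets keep arriving at least as fast as they are sent, the loop never reaches queue 1, which is exactly the starvation problem listed among the limitations.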

Benefits: low computational load; allows packets to be organized so that different service classes can be treated differently.

Limitations:
- Lower-priority traffic may experience excessive delay.
- Lower-priority traffic may be starved entirely.
- A misbehaving high-priority flow can affect other flows sharing the same queue.
- PQ does not solve the problem, seen in FIFO, of UDP flows being favored over TCP flows during congestion.

Implementations and Applications: PQ is generally configured in one of two modes:
- Strict Priority Queuing
- Rate-controlled Priority Queuing

Applications of PQ:
- It can enhance network stability during periods of congestion.
- It supports the delivery of a high-throughput, low-delay, low-jitter, and low-loss service class, provided traffic conditioning is performed at the network edges to prevent higher-priority queues from being oversubscribed.

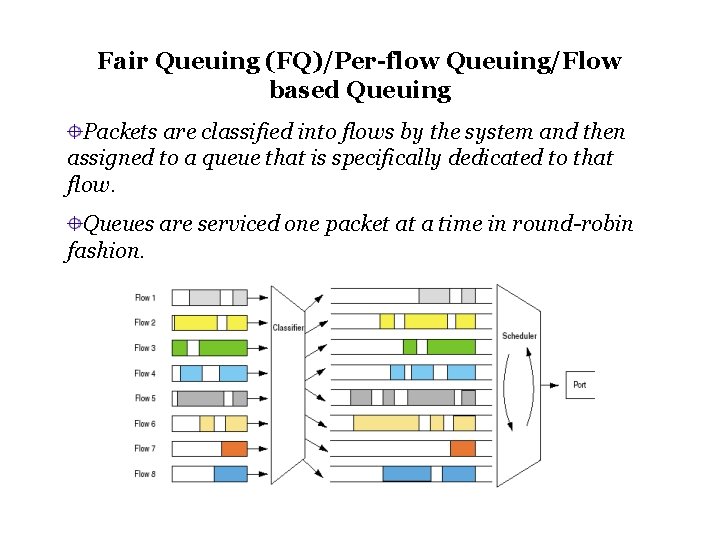

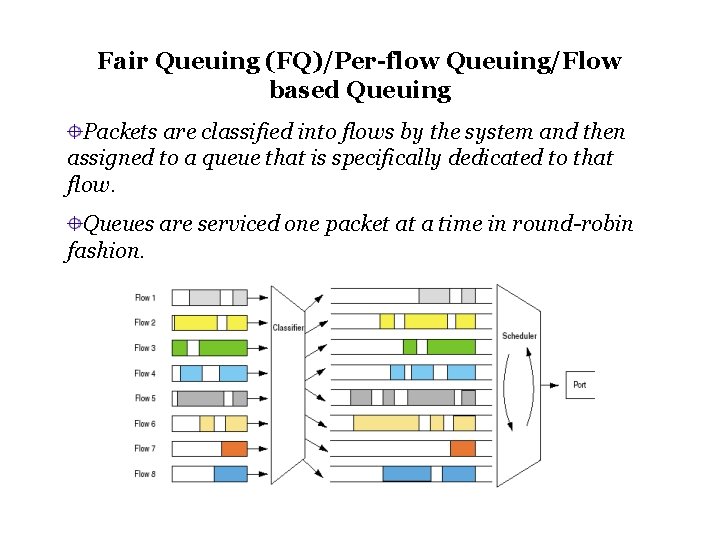

Fair Queuing (FQ), also called per-flow or flow-based queuing: packets are classified into flows by the system, and each flow is assigned to a queue dedicated to that flow. Queues are serviced one packet at a time in round-robin order.
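A minimal sketch of packet-by-packet round-robin service over per-flow queues, assuming each flow's queue is given as a list of packet sizes in bytes and that all packets are already queued. The function name and flow labels are hypothetical.

```python
from collections import deque


def fair_queue_round_robin(flows: dict[str, list[int]]) -> list[tuple[str, int]]:
    """Per-flow queuing: visit each flow queue in turn, sending one packet per visit.

    `flows` maps a flow id to the sizes (bytes) of its queued packets.
    Returns the transmission order as (flow id, packet size) pairs.
    """
    queues = {fid: deque(sizes) for fid, sizes in flows.items()}
    order = []
    while any(queues.values()):
        for fid, q in queues.items():
            if q:
                order.append((fid, q.popleft()))
    return order


sent = fair_queue_round_robin({"bulk": [1500] * 4, "voip": [100] * 4})
bytes_per_flow = {}
for fid, size in sent:
    bytes_per_flow[fid] = bytes_per_flow.get(fid, 0) + size
print(bytes_per_flow)  # {'bulk': 6000, 'voip': 400}
```

Both flows send the same number of packets, yet the flow with 1500-byte packets receives 6000 / 6400 ≈ 94% of the bytes, which illustrates the packet-size limitation of FQ.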

Benefits:
- A misbehaving flow does not affect other flows.

Limitations:
- Implementations are software-based rather than hardware-based.
- FQ does not support flows with different bandwidth requirements.
- Flows with large packets get a larger share of output port bandwidth than flows with small packets, because each queue sends one packet per round regardless of packet size.
- FQ is sensitive to the order of packet arrivals: a packet that arrives at an empty queue just after the scheduler has visited it may have to wait a whole cycle.
- There is no mechanism to support real-time services.
- It is not easy to classify network traffic into well-defined flows.
- FQ generally cannot be configured in core routers because of the large number of potential queues that would need to be supported.

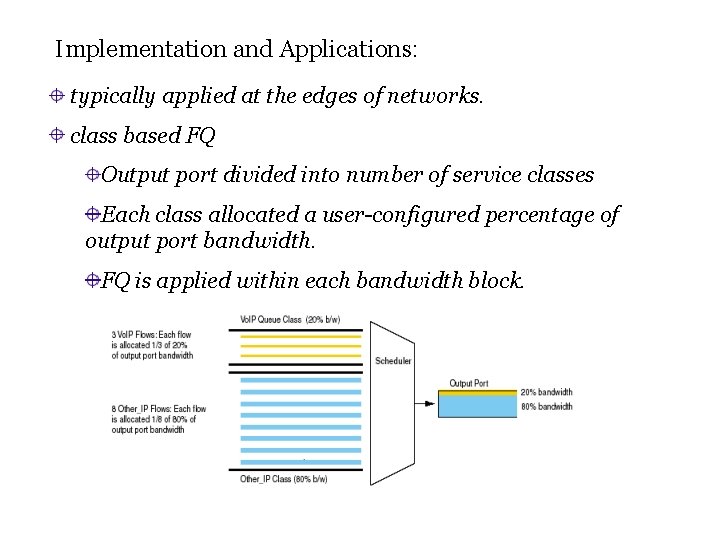

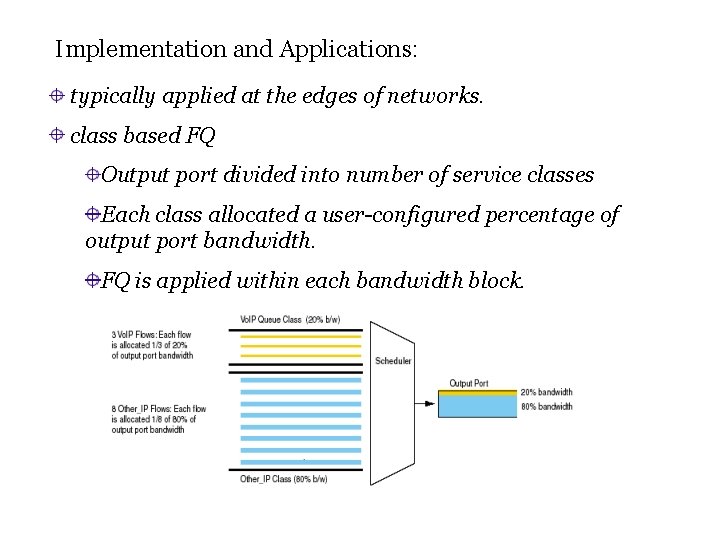

Implementations and Applications: FQ is typically applied at the edges of networks. In class-based FQ, the output port is divided into a number of service classes, each class is allocated a user-configured percentage of output port bandwidth, and FQ is applied within each bandwidth block.
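The bandwidth arithmetic of class-based FQ can be sketched as follows. This is a simplified model assuming every flow is continuously backlogged, packets are the same size, and unused bandwidth is not redistributed between classes; the function and class names are hypothetical.

```python
def class_based_fq_rates(
    link_bps: float, classes: dict[str, tuple[float, int]]
) -> dict[str, float]:
    """Per-flow rate under class-based FQ when every flow is backlogged.

    `classes` maps a class name to (percentage of port bandwidth, active flows).
    Each class gets its configured block; FQ then splits that block evenly
    among the active flows in the class.
    """
    total_pct = sum(pct for pct, _ in classes.values())
    if total_pct > 100:
        raise ValueError(f"class percentages sum to {total_pct}%, more than 100%")
    return {
        name: link_bps * pct / 100 / n_flows
        for name, (pct, n_flows) in classes.items()
        if n_flows > 0
    }


rates = class_based_fq_rates(100e6, {"gold": (60, 3), "best-effort": (40, 8)})
print(rates)  # {'gold': 20000000.0, 'best-effort': 5000000.0}
```

Each gold flow gets 20 Mb/s and each best-effort flow 5 Mb/s on a 100 Mb/s port: fairness holds within a class, while the class blocks set the ratio between classes.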

Weighted Fair Queuing (WFQ): the basis for a class of queue scheduling disciplines designed to address the limitations of FQ. It supports flows with different bandwidth requirements by giving each queue a weight, and it supports variable-length packets so that flows with larger packets are not allocated more bandwidth than flows with smaller packets.

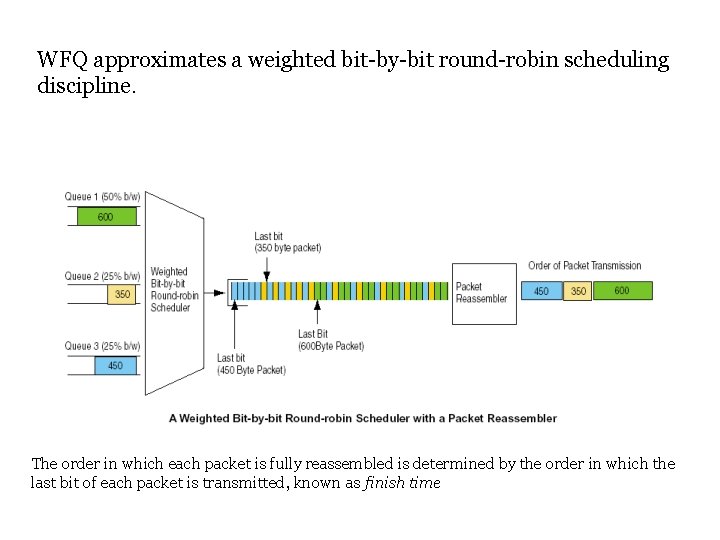

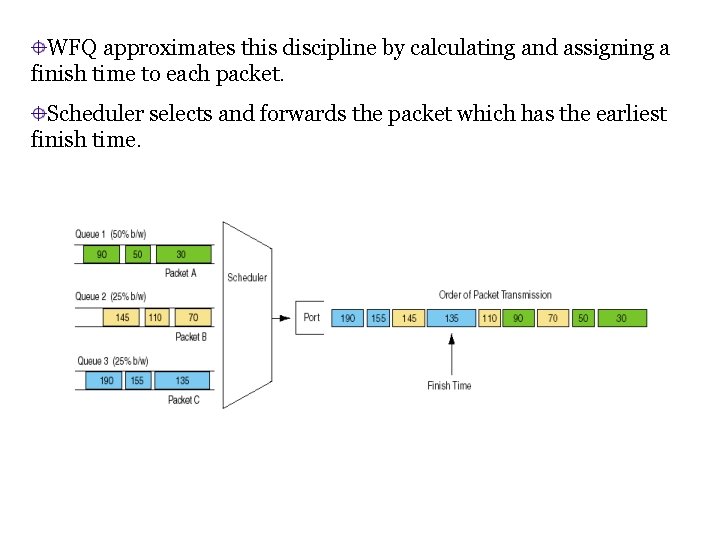

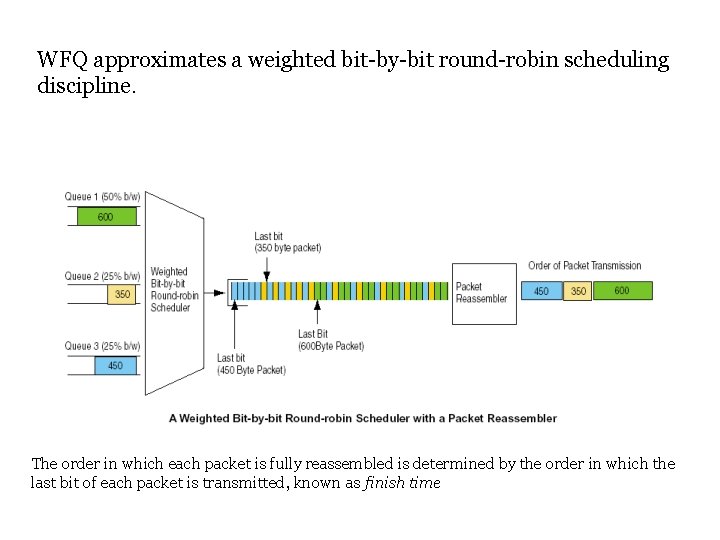

WFQ approximates a weighted bit-by-bit round-robin scheduling discipline. In bit-by-bit round robin, the order in which packets are fully reassembled is determined by the order in which the last bit of each packet is transmitted; this is known as the packet's finish time.

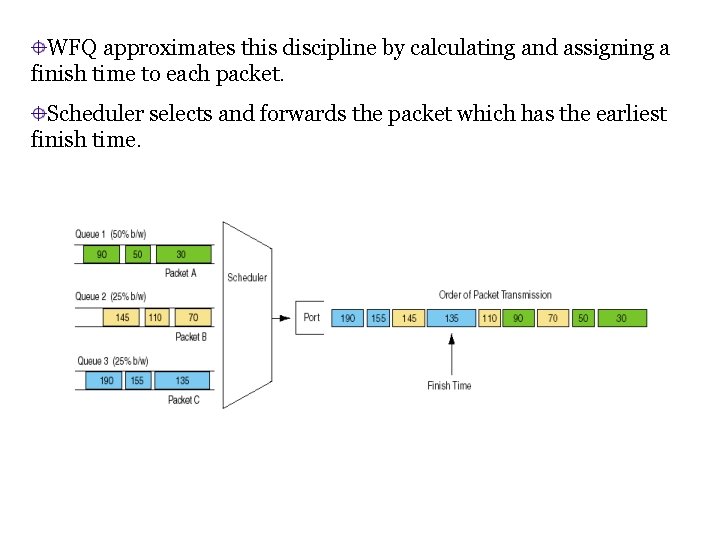

WFQ approximates this discipline by calculating and assigning a finish time to each packet. The scheduler then selects and forwards the packet with the earliest finish time.
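A minimal sketch of finish-time scheduling, under a simplifying assumption: every packet is already queued at time 0. In that case the virtual clock starts at 0 and each packet's finish time is the previous finish time of its flow plus its size divided by the flow's weight. A full WFQ implementation must also track virtual time as packets arrive over time; that part is omitted here, and the function name is hypothetical.

```python
import heapq


def wfq_order(flows: dict[str, tuple[float, list[int]]]) -> list[tuple[str, int, float]]:
    """Transmission order under WFQ, assuming every packet is queued at time 0.

    `flows` maps a flow id to (weight, packet sizes in bytes).
    Returns (flow id, packet size, finish time) in transmission order.
    """
    heap = []
    for fid, (weight, sizes) in flows.items():
        finish = 0.0
        for seq, size in enumerate(sizes):
            finish += size / weight  # F_k = F_{k-1} + L_k / w
            heapq.heappush(heap, (finish, fid, seq, size))
    order = []
    while heap:
        # Always forward the packet with the earliest finish time;
        # ties are broken by flow id, an arbitrary choice for this sketch.
        finish, fid, _, size = heapq.heappop(heap)
        order.append((fid, size, finish))
    return order


for fid, size, finish in wfq_order({"A": (1.0, [1500, 1500]), "B": (1.0, [500] * 4)}):
    print(fid, size, finish)
```

With equal weights, flow B's four 500-byte packets interleave with flow A's two 1500-byte packets, so both flows receive roughly equal byte throughput even though their packet sizes differ.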

Benefits: provides protection to each service class by ensuring a minimum level of output port bandwidth independent of the behavior of other service classes. With traffic conditioning at the edges of a network, WFQ guarantees a weighted fair share of bandwidth to each service class with bounded delay.
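The minimum bandwidth guarantee can be written as a formula. Assuming $w_i$ is the configured weight of service class $i$, $R$ is the output port rate, and the sum runs over all configured classes (symbols introduced here for illustration), the weighted bit-by-bit model that WFQ approximates guarantees each backlogged class at least

$$
r_i \;\ge\; \frac{w_i}{\sum_j w_j}\, R
$$

For example, two classes weighted 3 and 1 on a 1 Gb/s port are guaranteed approximately 750 Mb/s and 250 Mb/s respectively; a class can receive more when the other class is idle.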

Limitations:
- Vendor implementations are software-based rather than hardware-based.
- A misbehaving flow within a service class can impact other flows within the same service class.
- The algorithm is complex: it requires maintaining per-service-class state and iteratively scanning that state on every packet arrival and departure.
- This computational complexity limits scalability.
- On high-speed interfaces, minimizing delay to the granularity of a single packet transmission may not be worth the computational expense.
- Even when delays are bounded, the bounds can be very high.

Enhancements:
- Class-based WFQ
- Self-Clocked Fair Queuing (SCFQ)
- Worst-case Fair Weighted Fair Queuing (WF²Q)
- Worst-case Fair Weighted Fair Queuing+ (WF²Q+)

Implementations and Applications: WFQ is deployed at the edges of networks to provide a fair distribution of bandwidth among a number of different service classes. WFQ can be configured either to classify packets into a relatively large number of queues using a hash function, or to allow the system to schedule a limited number of queues that carry aggregated traffic flows. Class-based WFQ can alternatively be used to schedule a limited number of queues that carry aggregated traffic flows.
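Hash-based classification can be sketched as mapping a packet's flow identifier to a queue index. This assumes the flow is identified by its 5-tuple and uses CRC-32 from Python's `zlib` as the hash; the hash function actually used by a given vendor is not specified here, and the function name is hypothetical.

```python
import zlib


def flow_queue_index(
    src_ip: str, dst_ip: str, proto: int, src_port: int, dst_port: int, num_queues: int
) -> int:
    """Map a packet's 5-tuple to one of `num_queues` WFQ queues.

    Packets of the same flow always hash to the same queue; distinct flows
    may collide and then share a queue (and its share of bandwidth).
    """
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % num_queues


q = flow_queue_index("10.0.0.1", "10.0.0.2", 6, 40000, 443, num_queues=256)
print(q)
```

Hashing avoids keeping an explicit per-flow table, at the cost of occasional collisions in which unrelated flows share a queue.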

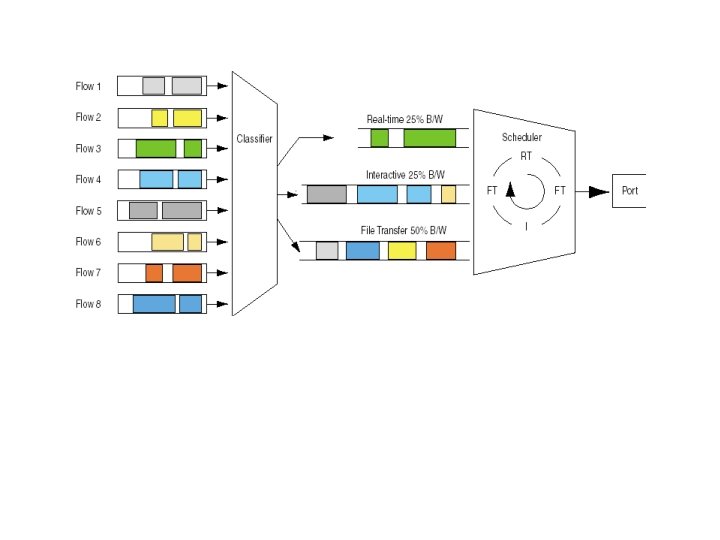

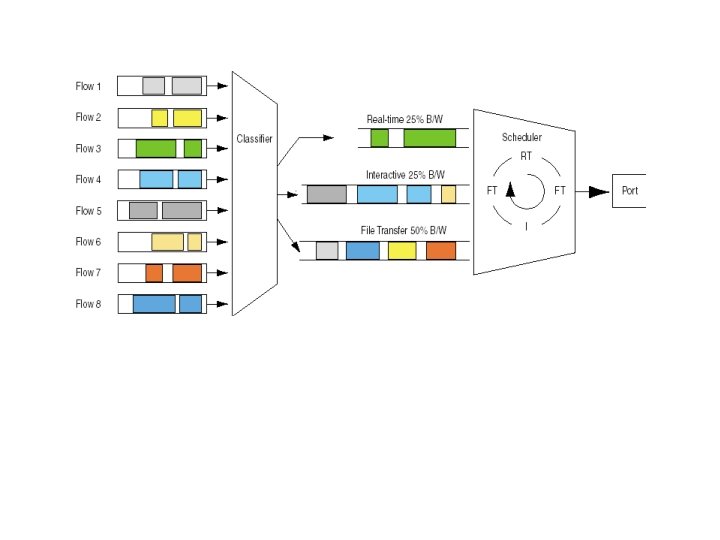

Weighted Round Robin (WRR) / Class-based Queuing (CBQ) addresses the limitations of the FQ model by supporting flows with significantly different bandwidth requirements. It also addresses the limitations of the strict PQ model by ensuring that lower-priority queues are not denied access to buffer space and output port bandwidth.

Algorithm: packets are classified into service classes and then assigned to a queue dedicated to that service class. The queues are serviced in round-robin order.

Different amounts of bandwidth are allocated to different service classes in one of two ways:
- Allowing higher-bandwidth queues to send more than one packet each time they are visited during a service round.
- Allowing each queue to send only one packet per visit, but visiting higher-bandwidth queues multiple times in each service round.

The delay, jitter, and packet loss experienced by packets in each queue can be tuned.
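Both allocation methods can be sketched side by side. This assumes integer weights (packets per round), queues of packet labels, and, for the second method, a visit schedule that spreads a queue's visits across the round; the function names and the schedule construction are hypothetical choices for illustration.

```python
from collections import deque


def wrr_packets_per_visit(queues: dict[str, deque], weights: dict[str, int], rounds: int) -> list:
    """Method 1: on each visit, queue q may send up to weights[q] packets."""
    sent = []
    for _ in range(rounds):
        for name, q in queues.items():
            for _ in range(weights[name]):
                if not q:
                    break
                sent.append(q.popleft())
    return sent


def wrr_visits_per_round(queues: dict[str, deque], weights: dict[str, int], rounds: int) -> list:
    """Method 2: one packet per visit, but queue q is visited weights[q] times per round."""
    # Interleave visits: pass k includes every queue whose weight exceeds k.
    schedule = [n for k in range(max(weights.values())) for n in queues if k < weights[n]]
    sent = []
    for _ in range(rounds):
        for name in schedule:
            if queues[name]:
                sent.append(queues[name].popleft())
    return sent


def make_queues() -> dict[str, deque]:
    return {"gold": deque(f"g{i}" for i in range(6)), "bronze": deque(f"b{i}" for i in range(6))}


w = {"gold": 3, "bronze": 1}
print(wrr_packets_per_visit(make_queues(), w, rounds=2))
# ['g0', 'g1', 'g2', 'b0', 'g3', 'g4', 'g5', 'b1']
print(wrr_visits_per_round(make_queues(), w, rounds=2))
# ['g0', 'b0', 'g1', 'g2', 'g3', 'b1', 'g4', 'g5']
```

Both methods give the gold queue three packets for every one from bronze; the second spreads those packets more evenly within a round, which tends to reduce jitter for the other queues.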

Benefits:
- Can be implemented in hardware.
- Provides coarse control over the percentage of output port bandwidth allocated to each service class.
- Ensures that all service classes have access to at least some configured amount of bandwidth, which avoids starvation.
- Provides an efficient mechanism for delivering differentiated service classes to a reasonable number of highly aggregated traffic flows.
- Classifying traffic by service class provides more equitable management and more stability for network applications than the use of priorities or preferences.

Limitations: WRR provides the correct percentage of bandwidth to each service class only if all of the packets in all of the queues are the same size, or if the mean packet size is known in advance.

Implementations and Applications: WRR can be deployed in both the core and the edges of the network.
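Because WRR counts packets rather than bytes, the byte share a class receives scales with its packet size. The sketch below assumes the mean packet size of each class is known and converts a desired byte share into packet weights by dividing by that mean size; the function names are hypothetical, and a real scheduler would round the resulting weights to integer packet counts.

```python
def byte_shares(weights: dict[str, float], mean_pkt_bytes: dict[str, float]) -> dict[str, float]:
    """Fraction of bytes each class receives per WRR round, given packet weights."""
    per_round = {c: weights[c] * mean_pkt_bytes[c] for c in weights}
    total = sum(per_round.values())
    return {c: b / total for c, b in per_round.items()}


def wrr_weights_from_mean_size(
    target_share: dict[str, float], mean_pkt_bytes: dict[str, float]
) -> dict[str, float]:
    """Packet weights that approximate a target byte share, normalized to a minimum of 1."""
    raw = {c: target_share[c] / mean_pkt_bytes[c] for c in target_share}
    smallest = min(raw.values())
    return {c: w / smallest for c, w in raw.items()}


sizes = {"bulk": 1500, "voip": 100}
print(byte_shares({"bulk": 1, "voip": 1}, sizes))  # {'bulk': 0.9375, 'voip': 0.0625}
weights = wrr_weights_from_mean_size({"bulk": 0.5, "voip": 0.5}, sizes)
print(weights)                      # bulk 1.0, voip about 15
print(byte_shares(weights, sizes))  # roughly 0.5 each
```

With equal weights, the class with 1500-byte packets takes 93.75% of the bytes; weighting the 100-byte class about 15 times higher restores an even split, but only if the mean sizes used are accurate.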

Deficit Weighted Round Robin (DWRR) addresses the limitations of WRR by supporting a weighted distribution of bandwidth when packets have variable lengths. Unlike WFQ, it can be implemented in hardware and can therefore be used on high-speed interfaces. Each queue is configured with the following parameters:
- A weight that defines the percentage of output port bandwidth allocated to the queue.
- A DeficitCounter that specifies the total number of bytes the queue is permitted to transmit each time it is visited by the scheduler.
- A quantum of service, proportional to the weight of the queue. The DeficitCounter of a queue is increased by the quantum each time the queue is visited by the scheduler.

Algorithm:
1. The scheduler visits each non-empty queue in turn and determines the number of bytes in the packet at the head of the queue.
2. The queue's DeficitCounter is incremented by its quantum.
3. If the size of the packet at the head of the queue is greater than the DeficitCounter, the scheduler moves on to the next queue.
4. If the size of the head packet is less than or equal to the DeficitCounter, the packet is transmitted on the output port and the DeficitCounter is reduced by the packet's size. The scheduler continues dequeuing packets in this way until the head packet is larger than the DeficitCounter or the queue is empty.
5. If the queue is empty, its DeficitCounter is reset to zero.
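The steps above can be sketched as follows, assuming each queue holds packet sizes in bytes and each quantum is given in bytes (proportional to the queue's weight). The `deficit` dictionary plays the role of DeficitCounter; the function name and the `rounds` parameter are hypothetical.

```python
from collections import deque


def dwrr(queues: dict[str, deque], quantum: dict[str, int], rounds: int) -> list[tuple[str, int]]:
    """Deficit Weighted Round Robin over queues of packet sizes (bytes).

    Returns the transmitted packets as (queue name, size) pairs.
    """
    deficit = {name: 0 for name in queues}  # the per-queue DeficitCounter
    sent = []
    for _ in range(rounds):
        for name, q in queues.items():
            if not q:
                continue  # only non-empty queues are serviced
            deficit[name] += quantum[name]
            # Keep sending while the head packet fits within the DeficitCounter.
            while q and q[0] <= deficit[name]:
                size = q.popleft()
                deficit[name] -= size
                sent.append((name, size))
            if not q:
                deficit[name] = 0  # an emptied queue may not bank unused credit
    return sent


sent = dwrr(
    {"A": deque([1500] * 3), "B": deque([300] * 10)},
    quantum={"A": 1500, "B": 1500},
    rounds=2,
)
totals = {}
for name, size in sent:
    totals[name] = totals.get(name, 0) + size
print(totals)  # {'A': 3000, 'B': 3000}
```

With equal quanta, queue A (1500-byte packets) and queue B (300-byte packets) each move 3000 bytes in two rounds, so the byte share follows the weights regardless of packet size. Leftover credit carries over between rounds, which is what lets a queue eventually send a packet larger than its quantum.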

Benefits:
- Provides protection among different flows.
- Provides precise control over the percentage of output port bandwidth allocated to each service class, even with variable-length packets.
- All service classes have access to at least some configured amount of output port bandwidth.
- A simple and inexpensive algorithm that does not require maintaining a large amount of per-service-class state.

Limitations:
- Because service classes are highly aggregated, a misbehaving flow within a service class may impact other flows in the same service class.
- DWRR provides no end-to-end delay guarantees.
- It may not be accurate.