Questionnaires as Instruments

- Slides: 22

Questionnaires as Instruments

- Questionnaires
  - Most frequently used survey instrument
  - Are scientific instruments, just like an MRI machine
  - Quality of the questionnaire determines the accuracy and precision of the measurements
  - Preferences and attitudes
    - Most often these are measured with self-report scales
    - Participants respond on rating scales
  - Usually Likert scales from 1 to 7 or 1 to 9
  - For example, an assessment of emotional well-being might include the following items:
    - My mood is generally positive.
      Strongly disagree 1 --- 2 --- 3 --- 4 --- 5 --- 6 --- 7 Strongly agree
    - I am often sad.
      Strongly disagree 1 --- 2 --- 3 --- 4 --- 5 --- 6 --- 7 Strongly agree

Questionnaires as Instruments

- Psychologists measure different types of variables:
  - Demographic (e.g., age, gender, race, socioeconomic status)
    - To check for response bias
    - To categorize data, e.g., differences between young and older adults
    - Use a well-known template, such as the one at the University of Arizona

FIGURE 5.5 Although ethnic background is an important demographic variable, accurately classifying people on this variable is not an easy task.

Reliability of Self-Report Measures

- Reliability refers to the consistency of measurement.
  - Assessed by test-retest reliability:
    - Administer the measure two times to the same sample. Individuals’ scores should be consistent over time.
    - A high correlation between the two sets of scores indicates good test-retest reliability (r > .80)
    - A correlation coefficient can range from -1 to +1; for reliability, the r value typically falls between 0 (no correlation) and 1 (perfect correlation)
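The test-retest check described above can be sketched in a few lines of Python. This is only an illustration: the scores in `time1` and `time2` are hypothetical, and the helper name `pearson_r` is an assumption made for this example, not part of the course material.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares of the deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical scores for the same six participants at two administrations.
time1 = [12, 18, 25, 9, 30, 21]
time2 = [14, 17, 27, 10, 28, 22]

r = pearson_r(time1, time2)
meets_guideline = r > 0.80  # the r > .80 guideline from the slide
print(f"test-retest r = {r:.2f}; above .80: {meets_guideline}")
```

The same function would apply to any pair of score lists, as long as each position refers to the same participant in both lists.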

Reliability of Self-Report Measures

- How do we improve reliability?
  - Many similar items on the same construct improve reliability
    - Multiple questions that focus on the same construct (operational definition)
    - For example, several questions on “quality of sleep”
  - Reliability improves when the sample is diverse relative to the construct (e.g., “quality of sleep”)
    - Some participants have poor sleep while others have great sleep
    - This avoids a restricted range of measurements
  - Reliability improves when the testing situation is free of distractions and instructions are clear.

Reliability of Self-Report Measures

- Reliable measures make us more confident that we are consistently measuring a construct within a sample, but are reliable measures truthful?
  - I could reliably measure your research methods knowledge by measuring your height. The taller you are, the better your score.
  - Is this a truthful or accurate measure of research methods knowledge?
- Note: We expect some measures to not produce consistent scores over time.
  - When people change on a particular variable over time, we expect the measure to have low test-retest reliability
  - For example, scores on a math exam before and after taking a math course

Validity of Self-Report Measures

- Validity refers to the truthfulness of a measure.
- A valid measure assesses what it is intended to measure.
  - Construct validity: Does an instrument measure the theoretical construct (concept) it was designed to measure?
    - Such as sleep quality

Validity of Self-Report Measures

- Construct validity:
- This seems like a straightforward question, but consider widely used measures of intelligence, which include items such as:
  - comprehension: “Why would people use a secret ballot?”
  - vocabulary: “What does dilatory mean?”
  - similarities: “How are a telephone and a radio alike?”
- Do these items (and others like them) assess intelligence in a valid manner?
  - The construct validity of intelligence measures is a matter of heated debate.

Validity of Self-Report Measures

- Establishing the construct validity of a measure depends on
  - convergent validity and
  - discriminant validity.
- Convergent validity refers to the extent to which two measures of the same construct are correlated (go together).
  - For example, two different tests of intelligence
- Discriminant validity refers to the extent to which two measures of different constructs are not correlated (do not go together).

Example of Construct Validity

- Suppose you have developed a new measure of self-esteem (i.e., a person’s sense of self-worth).
- Which constructs listed below would you expect
  - to show convergent validity?
  - to have discriminant validity?
  - Measures of:
    - Depression
    - Well-being
    - Social anxiety
    - Life satisfaction
    - Grade point average

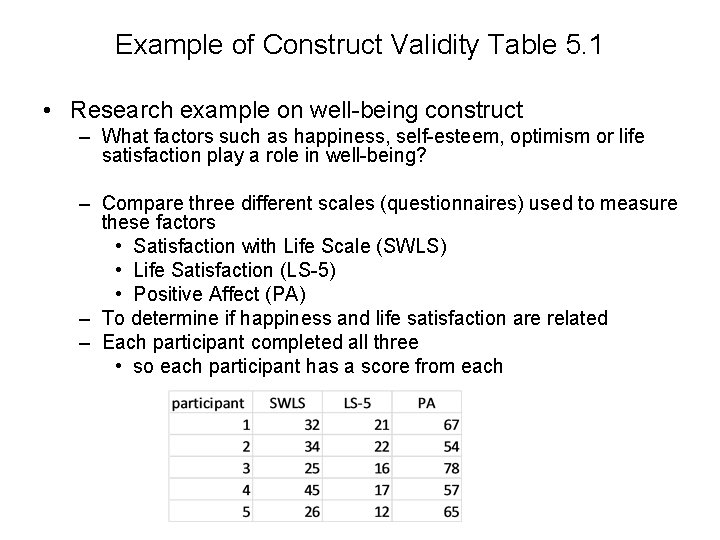

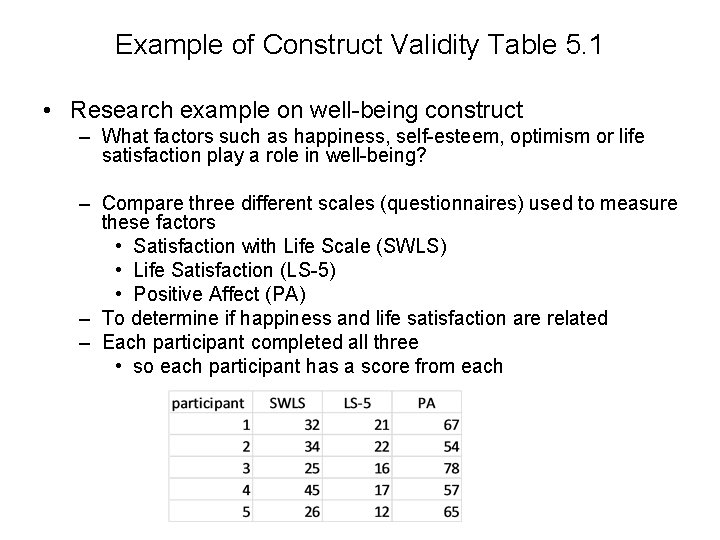

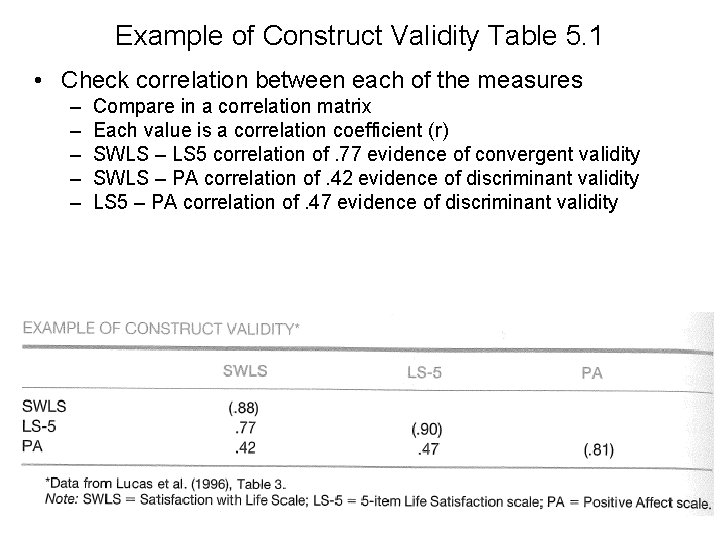

Example of Construct Validity (Table 5.1)

- Research example on the well-being construct
  - Which factors, such as happiness, self-esteem, optimism, or life satisfaction, play a role in well-being?
  - Compare three different scales (questionnaires) used to measure these factors
    - Satisfaction with Life Scale (SWLS)
    - Life Satisfaction (LS-5)
    - Positive Affect (PA)
  - The goal is to determine whether happiness and life satisfaction are related
  - Each participant completed all three scales
    - so each participant has a score from each

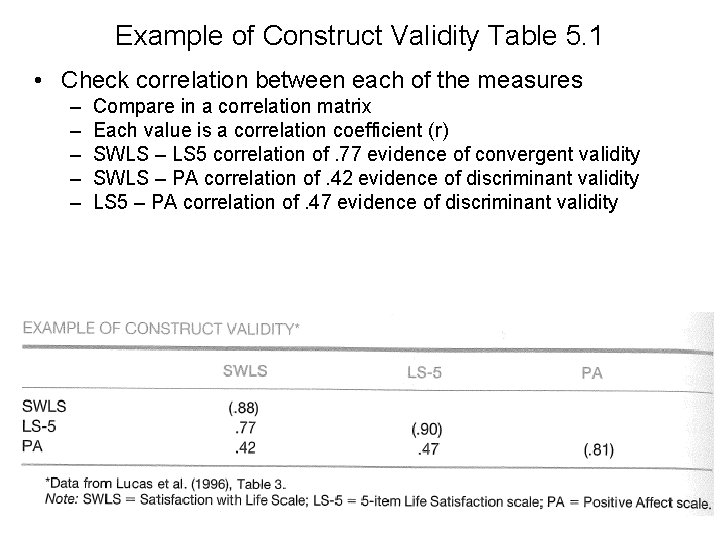

Example of Construct Validity (Table 5.1)

- Check the correlation between each pair of measures
  - Compare them in a correlation matrix
  - Each value is a correlation coefficient (r)
  - SWLS and LS-5: correlation of .77, evidence of convergent validity
  - SWLS and PA: correlation of .42, evidence of discriminant validity
  - LS-5 and PA: correlation of .47, evidence of discriminant validity
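A correlation matrix like the one in Table 5.1 can be built by correlating every pair of scales. The sketch below assumes made-up scores for eight participants, so its r values will not match the published table; the names `scores`, `matrix`, and `pearson_r` are illustrative only.

```python
import math
from itertools import combinations

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical data: one list per scale, one position per participant.
scores = {
    "SWLS": [20, 25, 31, 15, 28, 22, 18, 33],
    "LS-5": [18, 24, 29, 16, 27, 20, 19, 30],
    "PA": [30, 41, 35, 33, 29, 38, 25, 40],
}

# One correlation per unordered pair of scales (the off-diagonal cells of the matrix).
matrix = {
    (a, b): pearson_r(scores[a], scores[b])
    for a, b in combinations(scores, 2)
}

for (a, b), r in matrix.items():
    print(f"{a} with {b}: r = {r:.2f}")
```

In this made-up data set the two life-satisfaction scales correlate more strongly with each other than either does with positive affect, which is the pattern the slide interprets as convergent and discriminant validity.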

Constructing a Questionnaire

- The best choice for selecting a questionnaire is to use one that already has been established as reliable and valid.
- If a suitable measure cannot be found, researchers choose to create their own questionnaire.
- It may seem easy, but a lot goes into developing a reliable and valid questionnaire.

Constructing a Questionnaire

- Important steps for preparing a questionnaire:
  1. Decide what information should be sought (demographics, constructs).
  2. Decide how to administer the questionnaire.
  3. Write a first draft of the questionnaire (borrow from other surveys).
  4. Reexamine and revise the questionnaire.
  5. Pretest the questionnaire using a sample of respondents under conditions similar to the planned administration of the survey.
  6. Edit the questionnaire, and specify the procedures for its use.

Guidelines for Effective Wording of Questions

- Choose how participants will respond:
  - Free-response: Open-ended (fill in the blank) questions allow greater flexibility in responses but are difficult to code.
  - Closed-response:
    - are quicker to respond to and easier to score
    - may not accurately describe individuals’ responses
    - For example: multiple choice, true-false, Likert scale
- Use simple, direct, and familiar vocabulary
  - Keep questions short (20 or fewer words)
  - Respondents will interpret the meaning of words
  - Be sensitive to cultural and linguistic differences in word usage

Guidelines for Effective Wording of Questions

- Write clear and specific questions:
  - Avoid double-barreled questions (e.g., “Do you support capital punishment and abortion?”).
  - Place any conditional phrases at the beginning of the question (e.g., “If you were forced to leave your current city, where would you live?”).
  - Avoid leading questions (e.g., “Most people favor gun control; what do you think?”).
  - Avoid loaded (emotion-laden) questions (e.g., “People who discriminate are racist pigs: T or F”).
  - Avoid response bias with Likert scale questions
    - Word some of the questions in the opposite direction
    - Original: “I get plenty of sleep” (Strongly disagree is scored 1)
    - Reversed: “I do not get enough sleep” (Strongly disagree is scored 7)
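When some items are worded in the opposite direction, they are typically reverse-scored before items are combined. The sketch below shows one common way to do this on a 1 to 7 scale; the item names, the responses, and the `reverse_score` helper are hypothetical and serve only as an illustration.

```python
def reverse_score(response, scale_min=1, scale_max=7):
    """Flip a rating so that the lowest scale point becomes the highest and vice versa."""
    if not scale_min <= response <= scale_max:
        raise ValueError(f"response {response} is outside {scale_min}-{scale_max}")
    return scale_min + scale_max - response

# Hypothetical responses from one participant (1 = Strongly disagree, 7 = Strongly agree).
responses = {"plenty_of_sleep": 6, "not_enough_sleep": 2}
reversed_items = {"not_enough_sleep"}  # items worded in the opposite direction

# Reverse-score only the flagged items so that all items point the same way.
scored = {
    item: reverse_score(value) if item in reversed_items else value
    for item, value in responses.items()
}

# Average the aligned items into a single score for the construct.
sleep_score = sum(scored.values()) / len(scored)
print(scored, sleep_score)
```

After reverse-scoring, a high number on every item means the same thing (here, good sleep), so the items can be averaged or summed.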

Guidelines for Ordering of Questions

- For self-administered questionnaires, place the most interesting questions first to capture respondents’ attention.
- For personal and telephone interviews, place demographic questions first to establish rapport with the respondent.
- Use funnel questions: Start with the most general questions, and move to more specific questions for a given topic.
- Use filter questions: These questions direct respondents to the survey questions that apply directly to them.

Thinking Critically About Survey Research

- Correspondence Between Reported and Actual Behavior
  - People’s responses on surveys may not be truthful.
    - Reactivity: People sometimes don’t report truthful responses, because they know the information is being recorded.
    - Social desirability occurs when people respond to surveys as they think they “should,” rather than how they actually feel or believe.

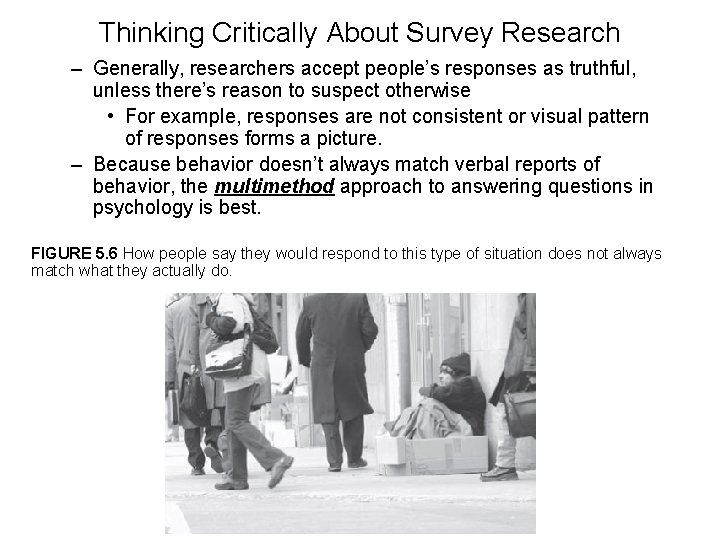

Thinking Critically About Survey Research

- Generally, researchers accept people’s responses as truthful, unless there’s reason to suspect otherwise
  - For example, when responses are not consistent or the visual pattern of responses forms a picture.
- Because behavior doesn’t always match verbal reports of behavior, the multimethod approach to answering questions in psychology is best.

FIGURE 5.6 How people say they would respond to this type of situation does not always match what they actually do.

Thinking Critically About Survey Research

- Correlation and Causality
  - “Correlation does not imply causation.”
  - Example: Correlation between being socially active (outgoing) and life satisfaction
  - Three possible causal relationships:
    - A causes B: being outgoing causes people to be more satisfied with their life
    - B causes A: being more satisfied with life causes people to be more outgoing
    - C causes A and B: a third variable, “number of friends,” can explain the relationship between being socially active (outgoing) and life satisfaction
  - A correlation that can be explained by a third variable is called a “spurious relationship.”
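The third-variable case can be illustrated with a small simulation. The sketch below is built entirely on assumptions: the data are randomly generated so that “number of friends” drives both other variables, and there is no direct link between being outgoing and life satisfaction. It also uses the standard first-order partial correlation formula, which is not covered in the slides, to show the correlation shrinking toward zero once the third variable is held constant.

```python
import math
import random

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def partial_r(r_ab, r_ac, r_bc):
    """Correlation between A and B with C held constant (first-order partial correlation)."""
    return (r_ab - r_ac * r_bc) / math.sqrt((1 - r_ac ** 2) * (1 - r_bc ** 2))

rng = random.Random(42)  # fixed seed so the simulation is repeatable
n = 2000

# C: standardized "number of friends"; A and B each depend on C plus independent noise.
friends = [rng.gauss(0, 1) for _ in range(n)]
outgoing = [c + rng.gauss(0, 1) for c in friends]       # A
satisfaction = [c + rng.gauss(0, 1) for c in friends]   # B

r_ab = pearson_r(outgoing, satisfaction)
r_ac = pearson_r(outgoing, friends)
r_bc = pearson_r(satisfaction, friends)
r_ab_given_c = partial_r(r_ab, r_ac, r_bc)

print(f"r(A, B) = {r_ab:.2f}")
print(f"r(A, B | C) = {r_ab_given_c:.2f}")
```

In real survey data the causal structure is unknown, so a drop in the partial correlation is consistent with a third-variable explanation but does not prove it.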

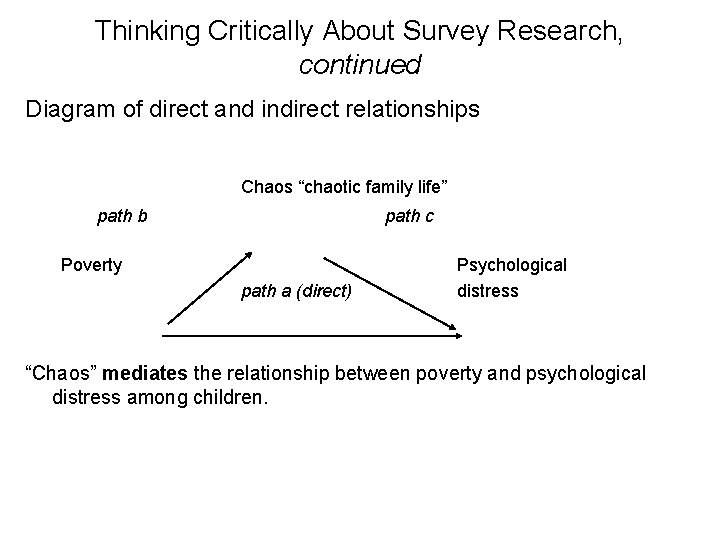

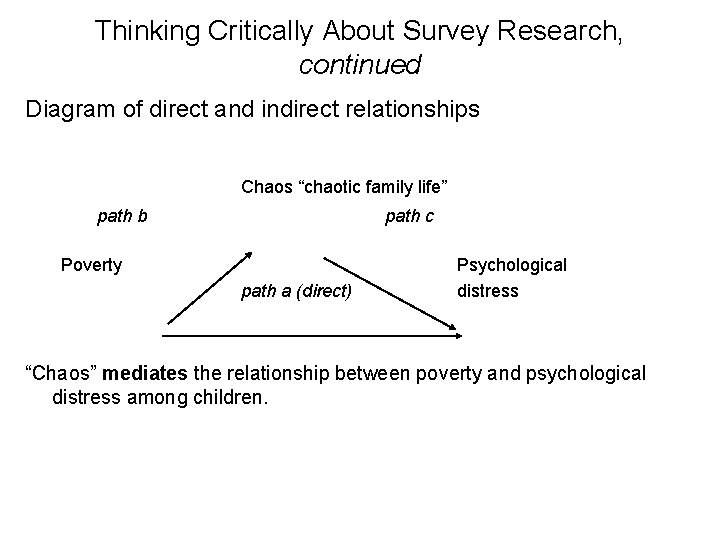

Thinking Critically About Survey Research, continued

Diagram of direct and indirect relationships:

- Path a (direct): Poverty to Psychological distress
- Paths b and c (indirect): Poverty to Psychological distress by way of Chaos (“chaotic family life”)

“Chaos” mediates the relationship between poverty and psychological distress among children.

Thinking Critically About Survey Research, continued

- Path analysis example
  - A moderator variable may affect the direction and strength of the relationship between poverty and psychological distress
  - Possible moderators:
    - Sex of the child
    - Population density (e.g., rural, urban)
    - Personality features of children (e.g., resilience)

Thinking Critically About Survey Research, continued

- Path analysis
  - Helps us to understand relationships among variables
  - But these relationships are still correlational
  - Cannot make definitive causal statements
  - Other untested variables may be important