Questionnaire Construction Eight Principles Dr John V Richardson


Questionnaire Construction: Eight Principles
Dr. John V. Richardson Jr.
Professor of Information Studies, UCLA Department of Information Studies

Presentation Outline
- Question Design
- Validity Concerns
- Methodological Issues
- Mini Case Studies

Question Design and Validity Concerns
- Eight issues must be addressed to ensure the validity of survey results:
  1. Intent of the question
  2. Clarity of the question
  3. Unidimensionality
  4. Scaling
  5. Number of questions to include
  6. Timing of administration
  7. Question order
  8. Sample sizes

1. Intent of the Question
- RUSA Behavioral Guidelines (1996):
  - Approachability
  - Interest in the query
  - Active listening skills
- UniFocus (300 factor analyses of the hospitality industry):
  - Friendliness
  - Helpfulness or accuracy
  - Promptness of service

2. Clarity of the Question
- Data from unclear questions may be invalid
- Use instructions to enhance question clarity

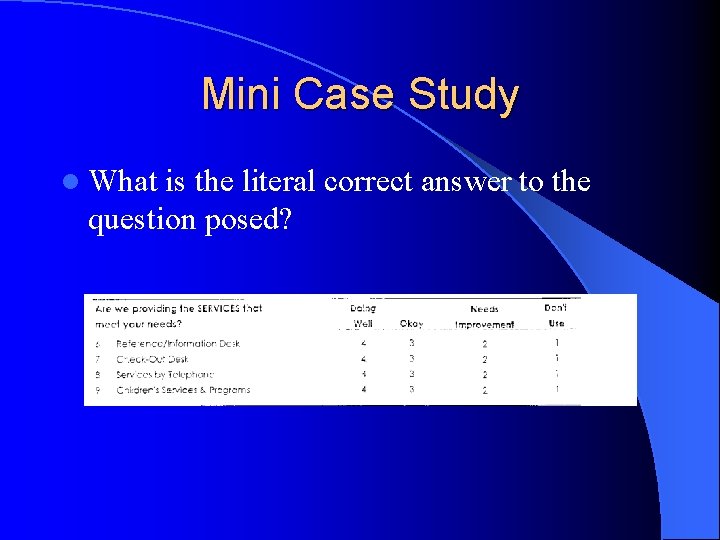

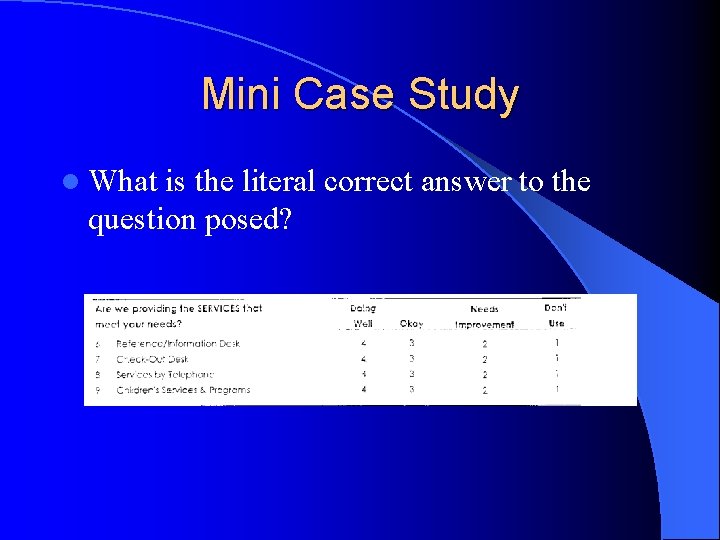

Mini Case Study
- What is the literally correct answer to the question posed?

3. Unidimensionality
- Unidimensionality is a statistical concept that describes the extent to which a set of questions all measure the same underlying attitude (a single dimension)
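Cronbach's alpha is one common, if imperfect, check on whether a set of items hangs together. It measures internal consistency rather than unidimensionality as such; a formal test would use factor analysis. Treat the sketch below as a rough screen under that assumption. The `cronbach_alpha` helper and the ratings are hypothetical illustrations and do not come from the original slides.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of ratings.

    Alpha measures internal consistency; a high value is consistent with,
    but does not by itself establish, a unidimensional set of items.
    """
    k = len(responses[0])  # number of items (questions)
    if k < 2:
        raise ValueError("need at least two items")
    # Variance of each item across respondents.
    item_vars = [pvariance([row[i] for row in responses]) for i in range(k)]
    # Variance of each respondent's total score across all items.
    total_var = pvariance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 1-5 ratings: five respondents x three satisfaction items.
ratings = [
    [5, 5, 4],
    [4, 4, 4],
    [3, 3, 2],
    [2, 2, 2],
    [1, 2, 1],
]
alpha = cronbach_alpha(ratings)
print(round(alpha, 2))
```

For these ratings alpha comes out at about 0.98. Items that respondents answer inconsistently would drive it toward zero or below.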

Constellation of Attitudes
- Satisfaction
- Delight
- Intent to return
- Feelings about experiences
- Value
- Loyalty

4. Scaling
- Three key characteristics:
  - Does the scale have the right number of points (called response options)?
  - Are the words used to describe the scale points appropriate?
  - Is there a midpoint or neutral point on the scale?

A. Response Options
- A common four-point scale: very good, good, fair, and poor
- The distance between "very good" and "good" is not the same as the distance between "fair" and "poor"
- Associating the numeric values 4, 3, 2, and 1 with these options treats those distances as equal and may lead to invalid results
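The sketch below shows why 4-3-2-1 coding can mislead. It scores the same hypothetical answers under three codings. All three keep the scale's order but change the spacing between options. The two alternative codings (`WIDE_TOP`, `WIDE_BOTTOM`) and the branch data are invented for illustration.

```python
from statistics import mean

# The four-point scale from the slide, coded 4-3-2-1 (equal spacing assumed).
EQUAL = {"very good": 4, "good": 3, "fair": 2, "poor": 1}
# Hypothetical codings that keep the same order but change the spacing.
WIDE_TOP = {"very good": 10, "good": 3, "fair": 2, "poor": 1}
WIDE_BOTTOM = {"very good": 10, "good": 9, "fair": 8, "poor": 1}

# Hypothetical responses from two service points.
branch_a = ["very good", "very good", "poor", "poor"]
branch_b = ["good", "good", "fair", "fair"]


def mean_score(answers, coding):
    """Average of the numeric codes assigned to each verbal answer."""
    return mean(coding[a] for a in answers)


for name, coding in [("EQUAL", EQUAL), ("WIDE_TOP", WIDE_TOP), ("WIDE_BOTTOM", WIDE_BOTTOM)]:
    print(name, mean_score(branch_a, coding), mean_score(branch_b, coding))
```

Under equal spacing the two branches tie. Stretching the gap at the top of the scale makes branch A look better. Stretching the gap between "fair" and "poor" makes branch B look better. The verbal answers never change; only the assumed distances between them do.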

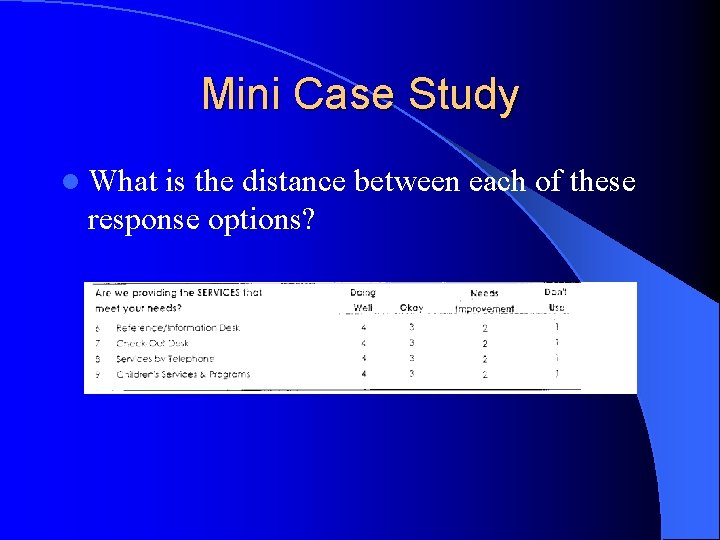

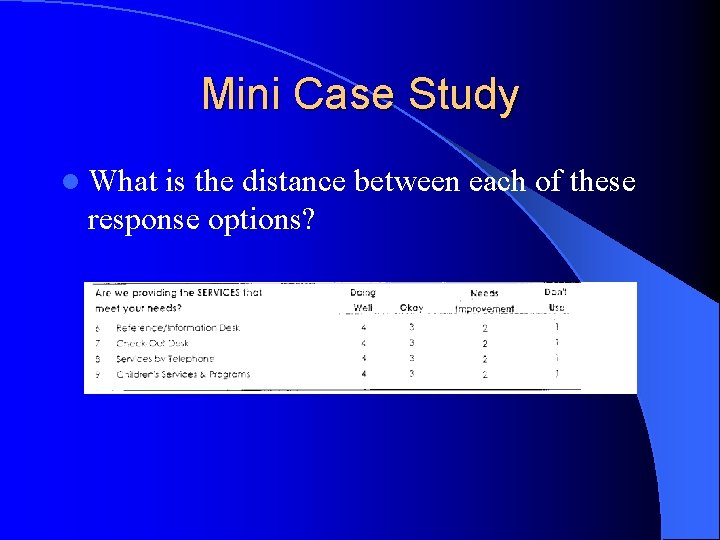

Mini Case Study
- What is the distance between each of these response options?

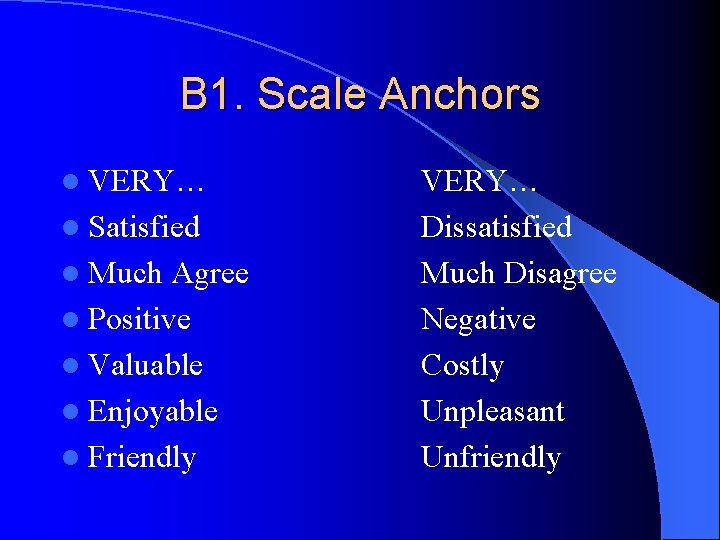

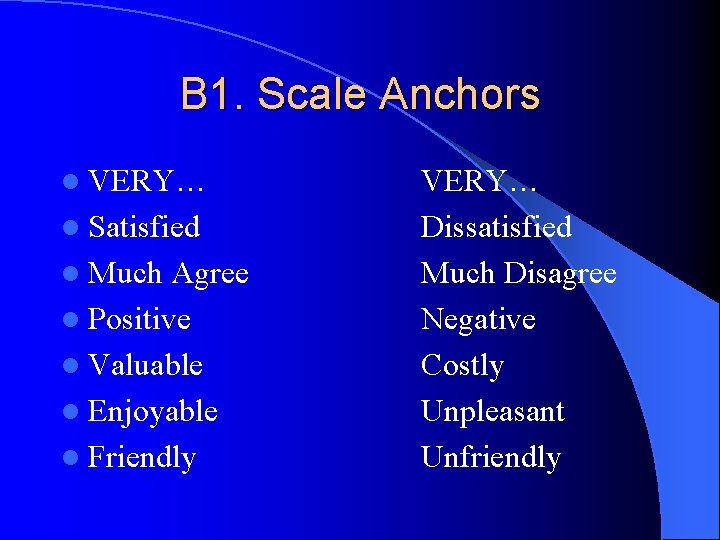

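B1. Scale Anchors

| Positive anchor | Negative anchor |
|---|---|
| Very satisfied | Very dissatisfied |
| Very much agree | Very much disagree |
| Very positive | Very negative |
| Very valuable | Very costly |
| Very enjoyable | Very unpleasant |
| Very friendly | Very unfriendly |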

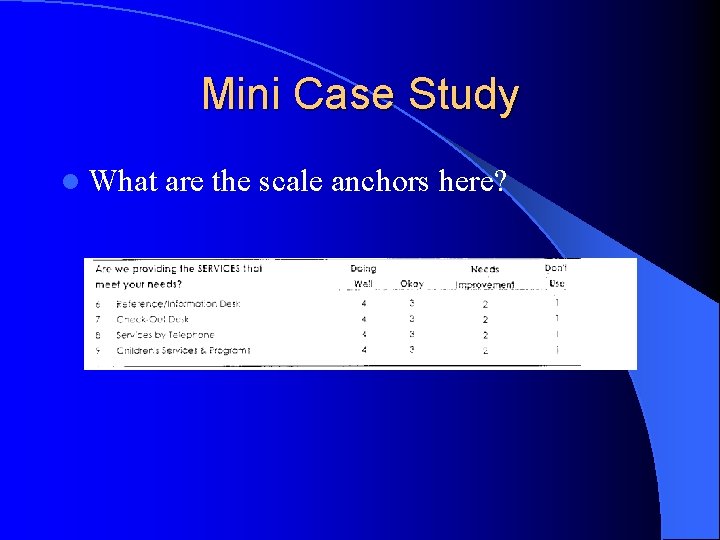

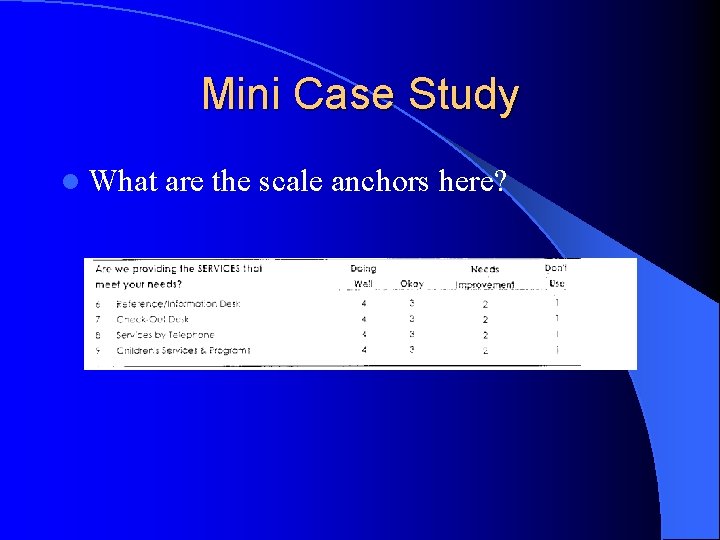

Mini Case Study
- What are the scale anchors here?

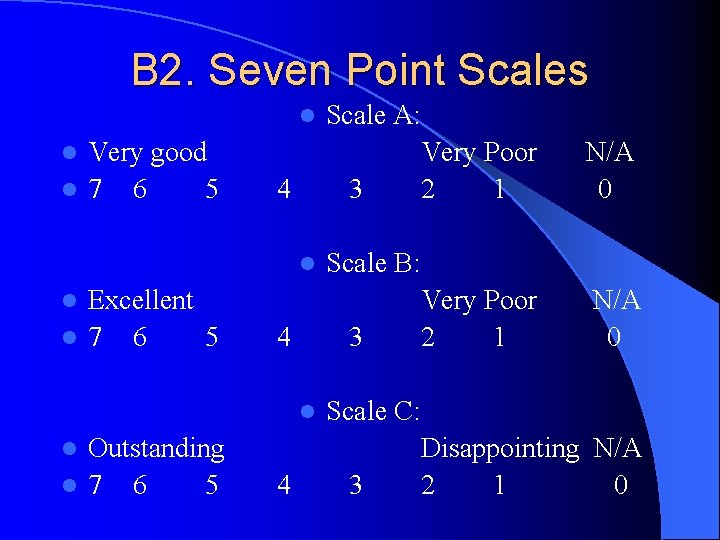

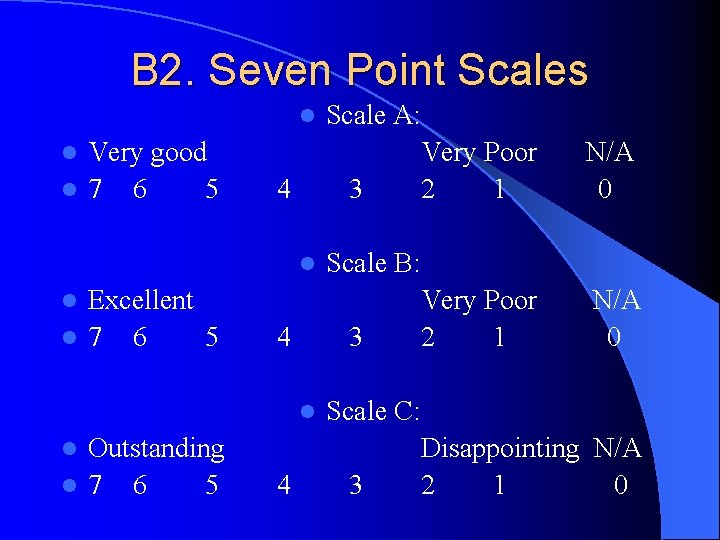

B2. Seven-Point Scales

| Scale | High anchor | Points | Low anchor | Not applicable |
|---|---|---|---|---|
| A | Very good | 7 6 5 4 3 2 1 | Very poor | N/A (0) |
| B | Excellent | 7 6 5 4 3 2 1 | Very poor | N/A (0) |
| C | Outstanding | 7 6 5 4 3 2 1 | Disappointing | N/A (0) |

C. Wording of Options
- The only difference among the scales on the preceding slide is their response anchors:
  - Is "very good" a rigorous enough expectation?
  - Would "excellent" be better?
  - What about "outstanding"?
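The seven-point scales above code N/A as 0. If those zeros are averaged in with the 1-7 ratings, they count as scores below the lowest point on the scale. The sketch below assumes N/A should simply be treated as missing. The function name and the data are hypothetical.

```python
from statistics import mean

NOT_APPLICABLE = 0  # the seven-point scales code N/A as 0


def scale_mean(codes):
    """Mean of 1-7 ratings, treating the N/A code as missing rather than as a score."""
    valid = [c for c in codes if c != NOT_APPLICABLE]
    if not valid:
        return None  # every respondent chose N/A
    return mean(valid)


responses = [7, 6, 0, 5, 0, 6]  # hypothetical answers to one question
naive = mean(responses)  # wrongly counts N/A as a rating below "1"
corrected = scale_mean(responses)
print(naive, corrected)
```

Here the naive mean is 4, while the mean of the actual ratings is 6.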

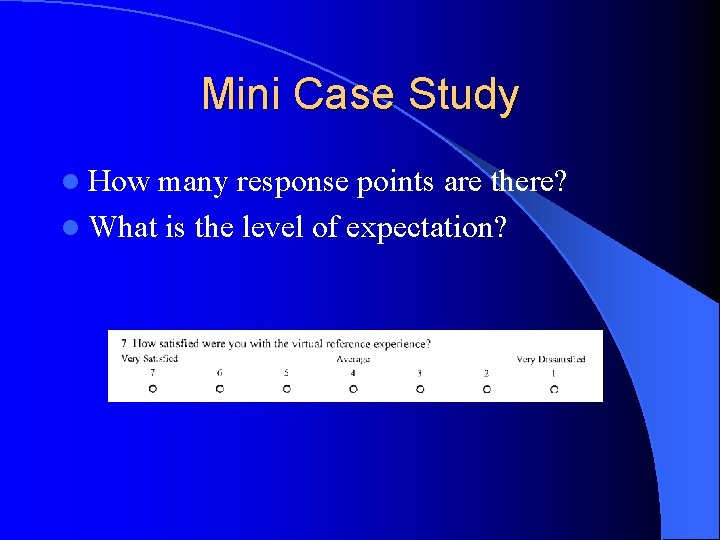

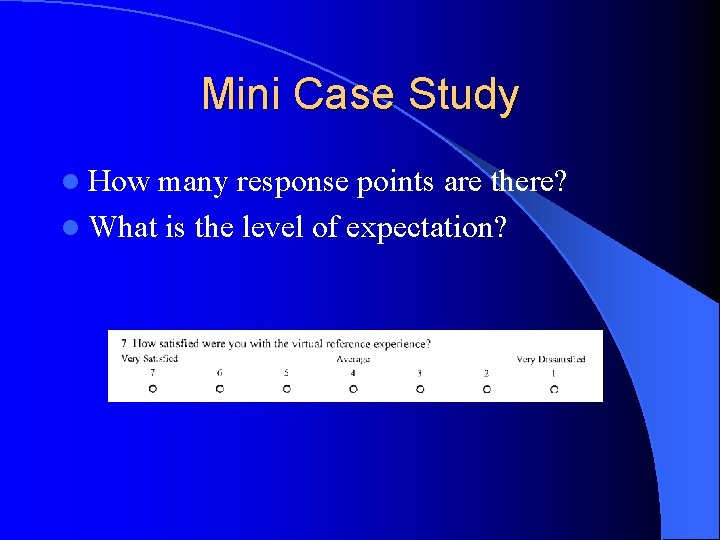

Mini Case Study
- How many response points are there?
- What is the level of expectation?

D. Midpoint or Neutral Point
- The rate of skipped questions increases when a neutral response is not included
- Use an odd number of response points
- A neutral response also provides a way to treat missing data
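The reason an odd number of points gives a neutral option is simple arithmetic. On a 1-to-k scale the middle value (k + 1) / 2 is a whole number, and therefore an actual response option, only when k is odd. Here is a small sketch with a hypothetical `midpoint` helper:

```python
def midpoint(points: int):
    """Return the neutral point of a 1..points scale, or None if there is none."""
    if points < 2:
        raise ValueError("a scale needs at least two points")
    if points % 2 == 0:
        return None  # even-numbered scales have no middle response option
    return (points + 1) // 2


for k in (4, 5, 7):
    print(k, midpoint(k))
```

The four-point scale has no midpoint. The five- and seven-point scales have midpoints at 3 and 4.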

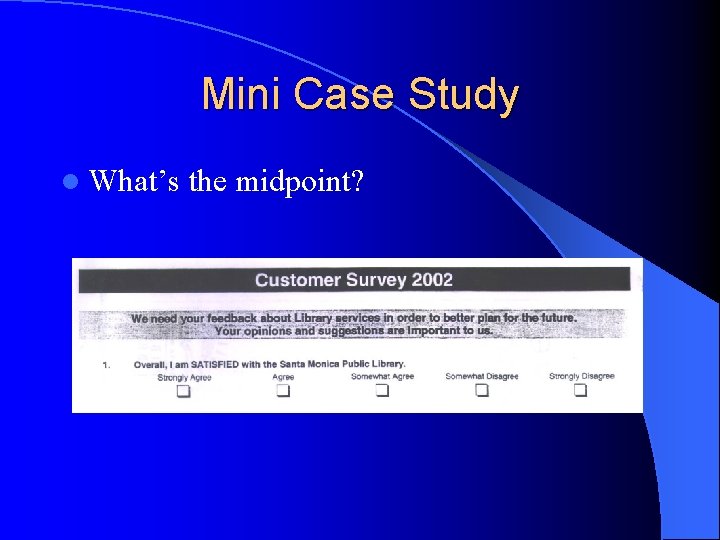

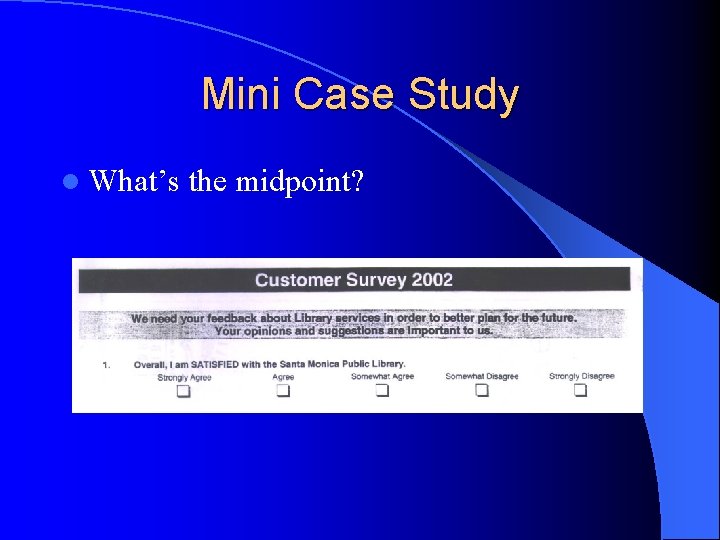

Mini Case Study
- What’s the midpoint?

5. Number of Questions
- Short enough that users will answer all the questions
- Long enough that sufficient information is gathered for decision-making purposes

A. Longer Surveys
- Take more time and effort on the part of the respondent
- A high perceived "cost of completion" results in partially or completely unanswered surveys

B. Likelihood of Complete Responses
- The more salient or important the topic is to the user, the more likely they are to complete a longer survey
- Multiple questions measuring a single attitude make for longer surveys, although they also aid in evaluating user attitudes

6. Timing and Ease
- Administer during or immediately following the service
  - Blurring together?
- Cards, mail, or IVR (interactive voice response) methods
- Delay seems to produce more positive results
- Electronic reference allows for ease of administration (more on PaSS™ later)

7. Question Order
- Specific questions first:
  - Technology, resources, or staffing
- More general questions second:
  - Value, overall satisfaction, intent to return
  - Halo effect
- Four-question survey (one overall and three specific questions):
  - Asking the general question last produces better data
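The sketch below assembles a short survey in the recommended order: the specific items come first and the overall item comes last, as in the four-question example. The item wording is hypothetical. The optional shuffling of the specific items is an assumption added for illustration, not a recommendation from the slides.

```python
import random

# Hypothetical item wording based on the slide's examples.
SPECIFIC = [
    "How satisfied were you with the technology available?",
    "How satisfied were you with the resources provided?",
    "How satisfied were you with the staff who helped you?",
]
GENERAL = ["Overall, how satisfied were you with the service today?"]


def build_questionnaire(specific, general, shuffle_specific=False, seed=None):
    """Order items so specific questions come first and general ones last."""
    items = list(specific)
    if shuffle_specific:
        # Optional (an assumption, not from the slides): vary the order of the
        # specific items across respondents; the general item stays last.
        random.Random(seed).shuffle(items)
    return items + list(general)


for number, question in enumerate(build_questionnaire(SPECIFIC, GENERAL), start=1):
    print(number, question)
```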

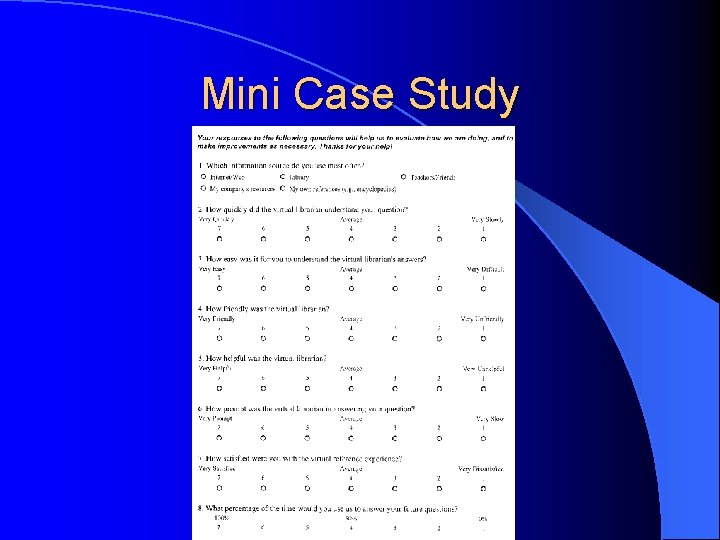

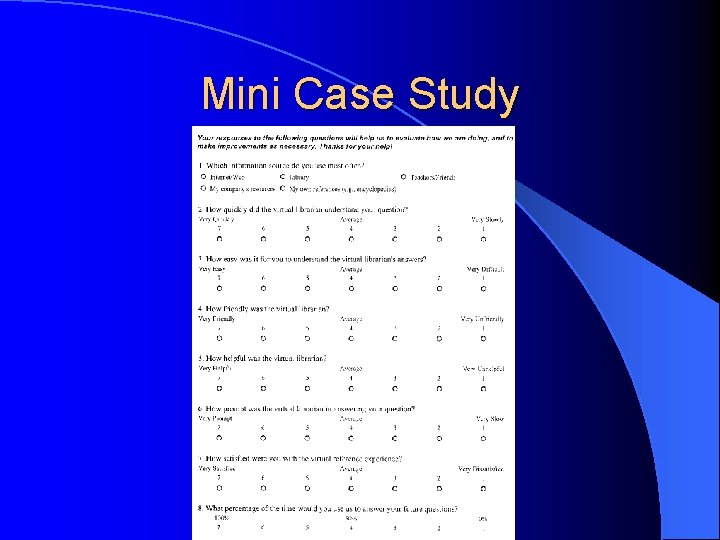

Mini Case Study

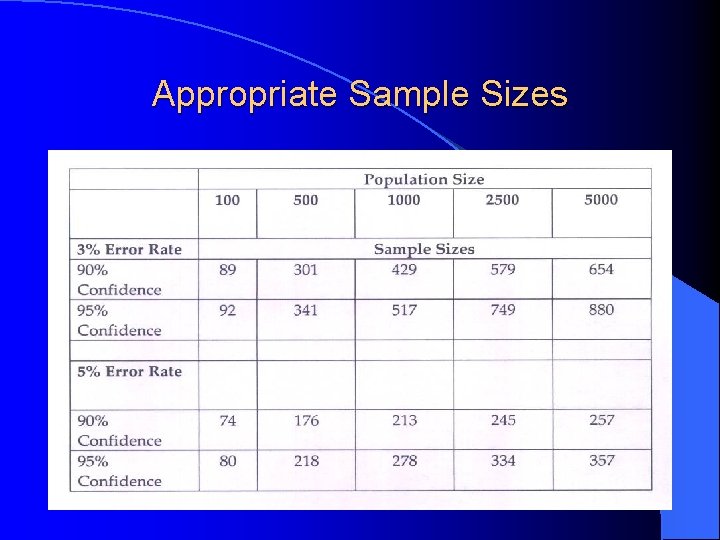

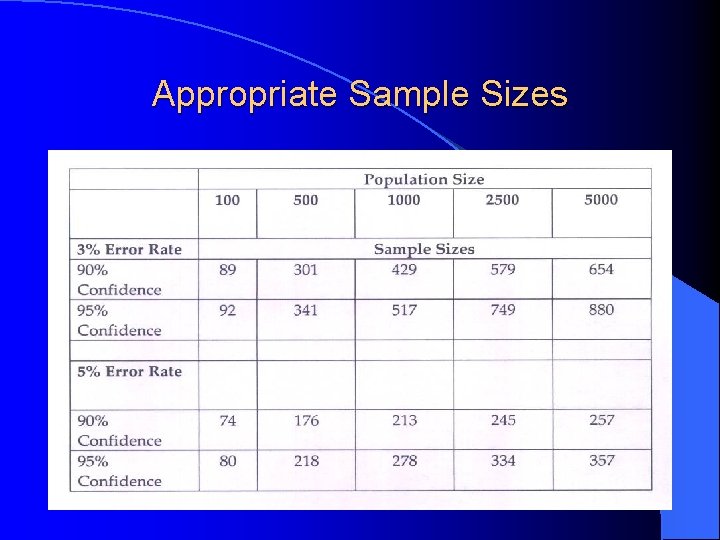

8. Sample Sizes
- Sample size depends upon the population size, the error rate, and the confidence level
- Consult a table of sample sizes

A. Error Rate
- Defined as the precision of measurement: how accurately the survey results reflect the population
- Expressed as plus or minus some figure, which must be small enough to show which direction service quality is going (i.e., up or down)
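The usual normal-approximation formula for a proportion gives the plus-or-minus figure: e = z·√(p(1 − p)/n). When the population size N is known, multiply it by the finite population correction √((N − n)/(N − 1)). This sketch rests on those textbook assumptions: a simple random sample, with p = 0.5 as the most conservative case. `margin_of_error` is a hypothetical helper.

```python
from math import sqrt
from statistics import NormalDist


def margin_of_error(n, population=None, confidence=0.95, p=0.5):
    """Plus-or-minus error for a proportion estimated from n respondents.

    p = 0.5 is the most conservative assumption; the finite population
    correction is applied only when the population size is given.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided critical value
    moe = z * sqrt(p * (1 - p) / n)
    if population is not None:
        moe *= sqrt((population - n) / (population - 1))
    return moe


# Hypothetical survey: 278 respondents from a population of 1,000.
print(round(margin_of_error(278, population=1000), 3))
```

For 278 respondents from 1,000 people this gives roughly ±0.05, or five percentage points. As a rough guide, a shift smaller than that may not reliably show whether service quality went up or down.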

B. Confidence
- Refers to the overall confidence in the results:
  - A .99 confidence level means one can be relatively certain that the results fall within the stated error range 99% of the time
  - A .95 confidence level is common
  - A .90 confidence level is less common; it requires fewer respondents but results in a less accurate survey
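Cochran's formula for a large population, n₀ = z²p(1 − p)/e², makes the trade-off between confidence and respondents concrete. The sketch assumes a ±0.05 error rate and p = 0.5 and ignores population size. `required_sample` is a hypothetical name.

```python
from math import ceil
from statistics import NormalDist


def required_sample(confidence, error=0.05, p=0.5):
    """Respondents needed for a +/- error at a given confidence (large population)."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided critical value
    return ceil(z ** 2 * p * (1 - p) / error ** 2)


for level in (0.90, 0.95, 0.99):
    print(level, required_sample(level))
```

This yields 271, 385, and 664 respondents for the .90, .95, and .99 levels. Each step up in confidence therefore costs a substantially larger sample.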

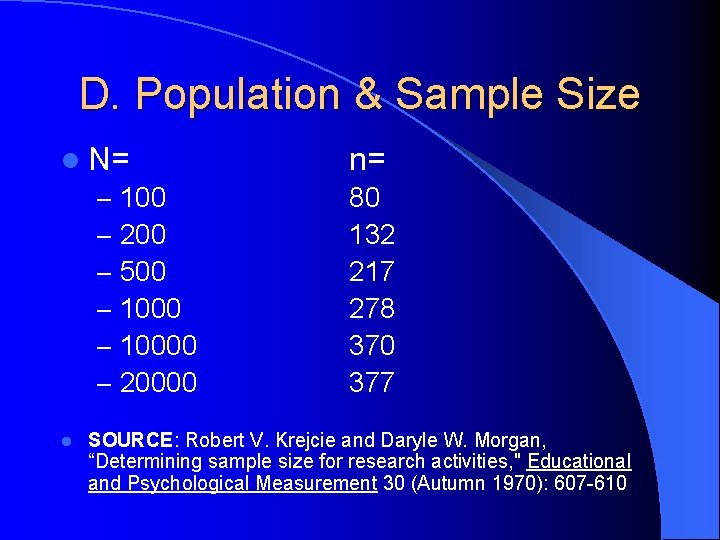

C. Population and Sample
- Population (N) refers to the people of interest
- Sample (n) refers to the people measured to represent the population
- Response rate is the proportion of the people asked to take the survey who actually respond
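This sketch treats the response rate as the share of people asked to take the survey who actually respond. It shows how that rate feeds back into how many people to invite. The helper names and figures are hypothetical.

```python
from math import ceil


def response_rate(responses, invited):
    """Share of the people asked to take the survey who actually responded."""
    return responses / invited


def invitations_needed(target_sample, expected_rate):
    """How many people to invite to end up with target_sample completed surveys."""
    return ceil(target_sample / expected_rate)


print(response_rate(150, 500))        # hypothetical: 150 of 500 invitees responded
print(invitations_needed(278, 0.30))  # hypothetical 30% expected response rate
```

With an assumed 30% response rate, reaching 278 completed surveys means inviting 927 people.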

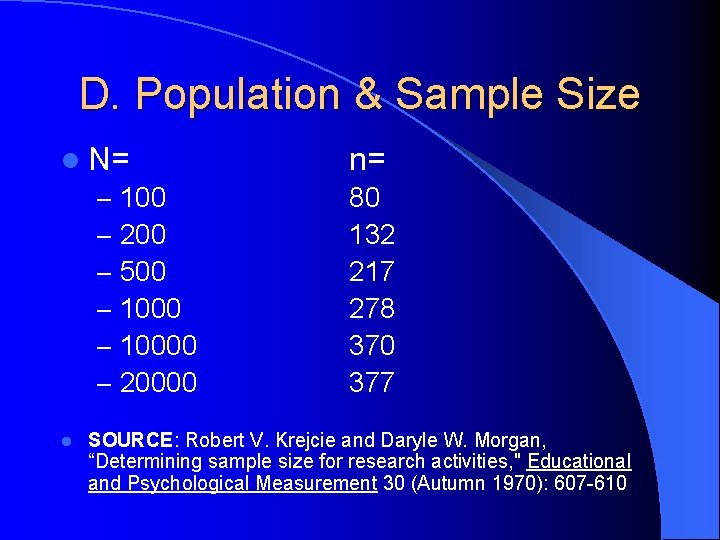

D. Population & Sample Size

| Population (N) | Sample (n) |
|---|---|
| 100 | 80 |
| 200 | 132 |
| 500 | 217 |
| 1,000 | 278 |
| 10,000 | 370 |
| 20,000 | 377 |

SOURCE: Robert V. Krejcie and Daryle W. Morgan, "Determining Sample Size for Research Activities," Educational and Psychological Measurement 30 (Autumn 1970): 607–610.
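The table values can be reproduced from the formula in the Krejcie and Morgan article: s = X²NP(1 − P) / (d²(N − 1) + X²P(1 − P)). The constants are X² = 3.841 (chi-square for one degree of freedom at the .95 confidence level), P = 0.50, and d = 0.05. Rounding to the nearest whole respondent is an assumption; it happens to match every row of the table. `krejcie_morgan` is a hypothetical helper name.

```python
CHI_SQUARE = 3.841  # chi-square, 1 degree of freedom, .95 confidence level
P = 0.5             # population proportion; 0.5 maximizes the sample size
D = 0.05            # degree of accuracy (error rate), +/- 5%


def krejcie_morgan(population: int) -> int:
    """Required sample size s for a finite population N (Krejcie & Morgan, 1970)."""
    numerator = CHI_SQUARE * population * P * (1 - P)
    denominator = D ** 2 * (population - 1) + CHI_SQUARE * P * (1 - P)
    return round(numerator / denominator)  # nearest whole respondent (assumed)


for n in (100, 200, 500, 1_000, 10_000, 20_000):
    print(n, krejcie_morgan(n))
```

The required sample grows slowly. Beyond a few thousand people it levels off, approaching about 384 as the population grows without bound.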

Appropriate Sample Sizes