Query Suggestion

- Slides: 17

Query Suggestion

Query Suggestion

- A variety of automatic or semi-automatic query suggestion techniques have been developed.
  - The goal is to improve effectiveness by matching related or similar terms.
  - Semi-automatic techniques require user interaction to select the best suggested terms.
- Query expansion is a related technique.
  - Alternative queries usually offer more terms.

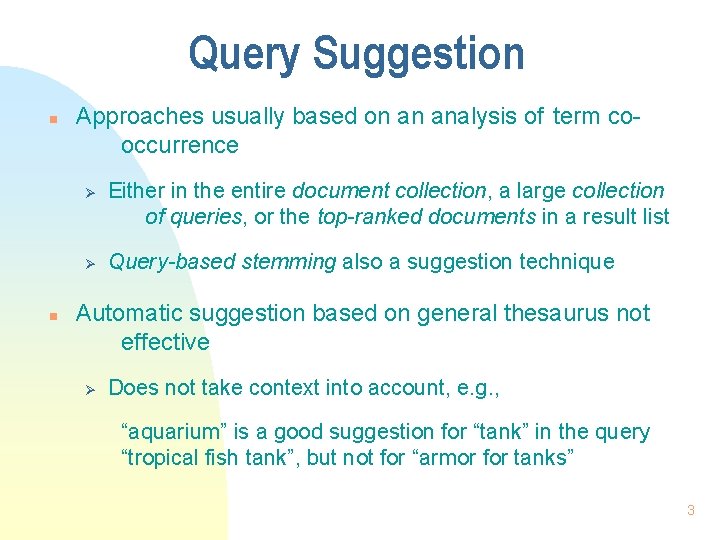

Query Suggestion

- Approaches are usually based on an analysis of term co-occurrence, either
  - in the entire document collection,
  - in a large collection of queries, or
  - in the top-ranked documents of a result list.
- Query-based stemming is also a suggestion technique.
- Automatic suggestion based on a general thesaurus is not effective.
  - It does not take context into account; e.g., "aquarium" is a good suggestion for "tank" in the query "tropical fish tank", but not in "armor for tanks".

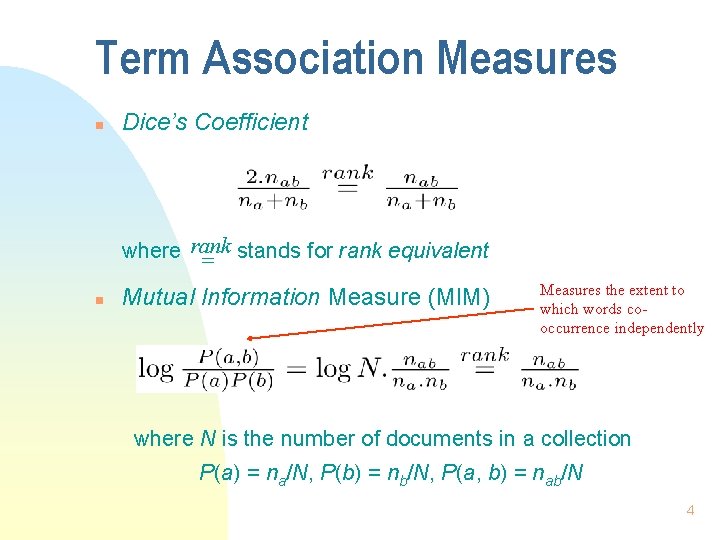

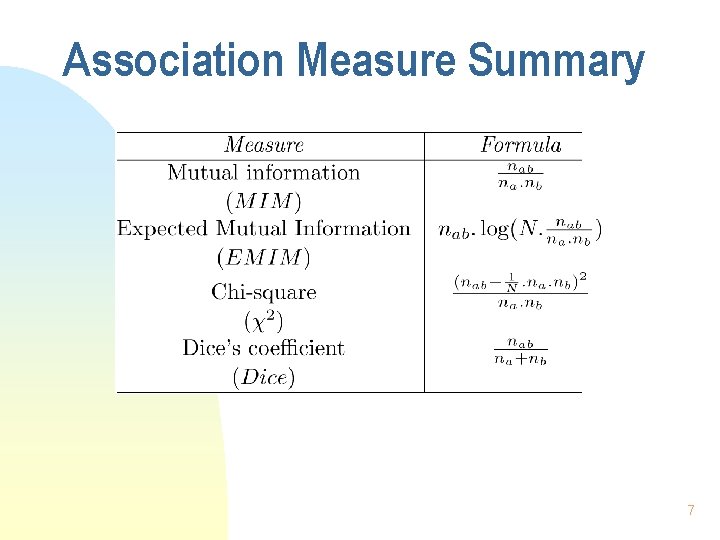

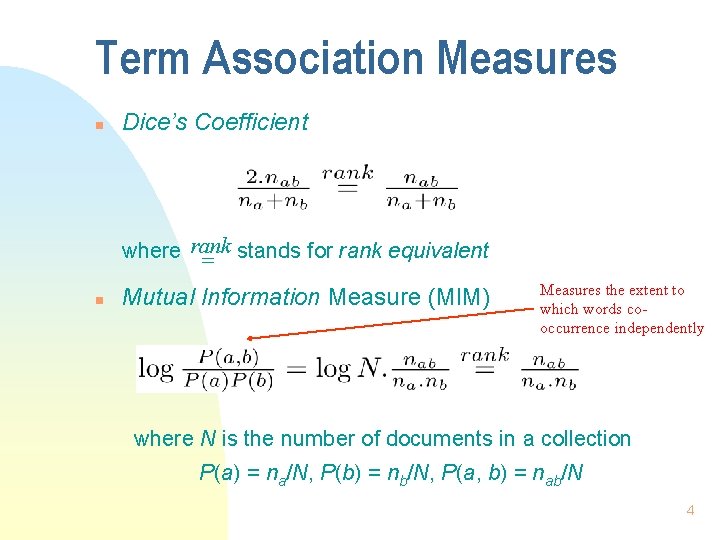

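Term Association Measures

- Dice's coefficient
  - In the formula, "=rank" means rank equivalent: both sides produce the same ranking of associated words.
- Mutual information measure (MIM)
  - Measures the extent to which two words are independent, i.e., how far their co-occurrence departs from what independence would predict
  - N is the number of documents in the collection; n_a, n_b and n_ab are the numbers of documents containing a, b, and both a and b
  - P(a) = n_a/N, P(b) = n_b/N, P(a, b) = n_ab/N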
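Both measures can be sketched in Python directly from the document counts n_a, n_b and n_ab. The sketch assumes the standard forms Dice = 2·n_ab/(n_a + n_b), rank equivalent to n_ab/(n_a + n_b), and MIM = log(P(a,b)/(P(a)·P(b))) = log(N·n_ab/(n_a·n_b)), rank equivalent to n_ab/(n_a·n_b). The function names are illustrative, not from any library.

```python
import math


def dice(n_a: int, n_b: int, n_ab: int) -> float:
    """Dice's coefficient: 2 * n_ab / (n_a + n_b)."""
    return 2 * n_ab / (n_a + n_b)


def dice_rank(n_a: int, n_b: int, n_ab: int) -> float:
    """Rank-equivalent Dice: dropping the constant factor 2 keeps the ordering."""
    return n_ab / (n_a + n_b)


def mim(n_a: int, n_b: int, n_ab: int, n: int) -> float:
    """Mutual information log(P(a,b) / (P(a) * P(b))); requires n_ab > 0."""
    p_a, p_b, p_ab = n_a / n, n_b / n, n_ab / n
    return math.log(p_ab / (p_a * p_b))


def mim_rank(n_a: int, n_b: int, n_ab: int) -> float:
    """Rank-equivalent MIM: log is monotonic and N is constant, so both are dropped."""
    return n_ab / (n_a * n_b)


if __name__ == "__main__":
    # Hypothetical counts: N = 1000 documents, n_a = 50, n_b = 30, n_ab = 20.
    print(dice(50, 30, 20))  # 0.5
    print(mim(50, 30, 20, 1000))
```

When ranking candidate words b for a fixed word a, the rank-equivalent forms are cheaper and give the same order.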

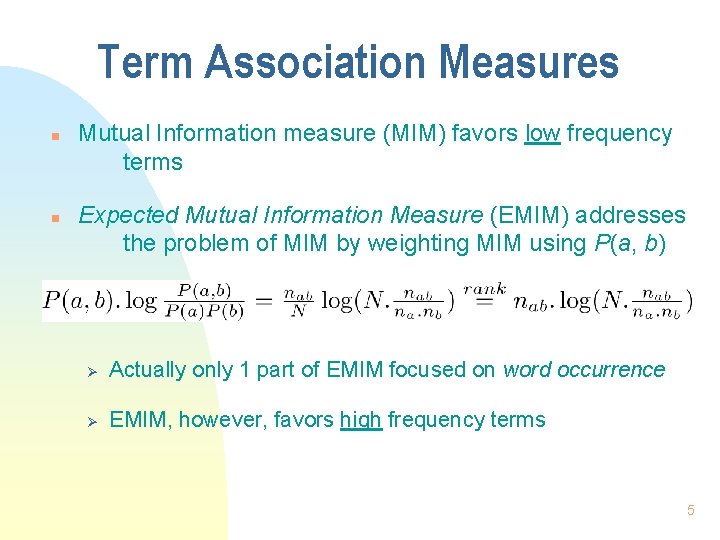

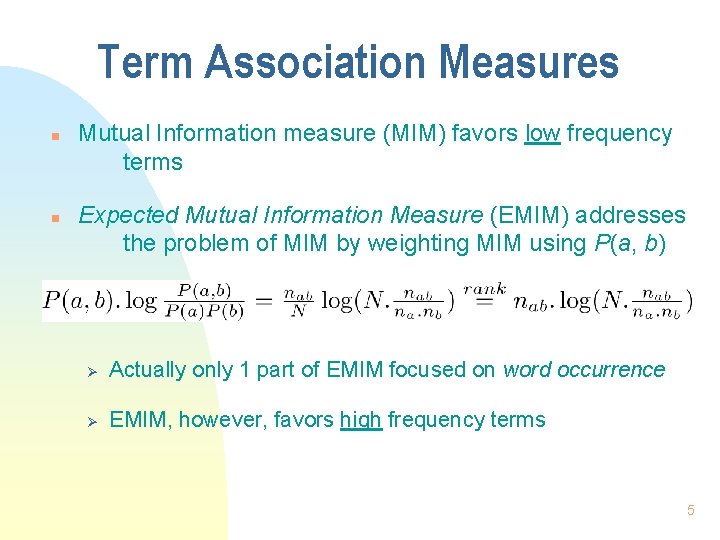

Term Association Measures

- The mutual information measure (MIM) favors low-frequency terms.
- The expected mutual information measure (EMIM) addresses this problem by weighting MIM with P(a, b).
  - Only one part of the full EMIM, the part focused on word co-occurrence, is actually used.
  - EMIM, however, favors high-frequency terms.
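The effect of the P(a, b) weight can be sketched by comparing MIM with the co-occurrence part of EMIM, assuming EMIM(a, b) = P(a,b)·log(P(a,b)/(P(a)·P(b))) = (n_ab/N)·log(N·n_ab/(n_a·n_b)). The two candidate words and their counts below are hypothetical, chosen only to show that MIM prefers the rare word while EMIM prefers the frequent one.

```python
import math


def mim(n_a: int, n_b: int, n_ab: int, n: int) -> float:
    """MIM: log(N * n_ab / (n_a * n_b)); requires n_ab > 0."""
    return math.log(n * n_ab / (n_a * n_b))


def emim(n_a: int, n_b: int, n_ab: int, n: int) -> float:
    """Co-occurrence part of EMIM: MIM weighted by P(a, b) = n_ab / N."""
    p_ab = n_ab / n
    return p_ab * math.log(n * n_ab / (n_a * n_b))


if __name__ == "__main__":
    # Hypothetical: N = 10000 documents, word a occurs in 100 of them.
    # Each candidate b maps to (n_b, n_ab).
    candidates = {"rare": (2, 2), "common": (200, 100)}
    for name, (n_b, n_ab) in candidates.items():
        print(name, mim(100, n_b, n_ab, 10000), emim(100, n_b, n_ab, 10000))
```

The rare word always co-occurs with a, so its MIM is higher; EMIM multiplies by how often the pair actually occurs, which pushes the frequent word to the top.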

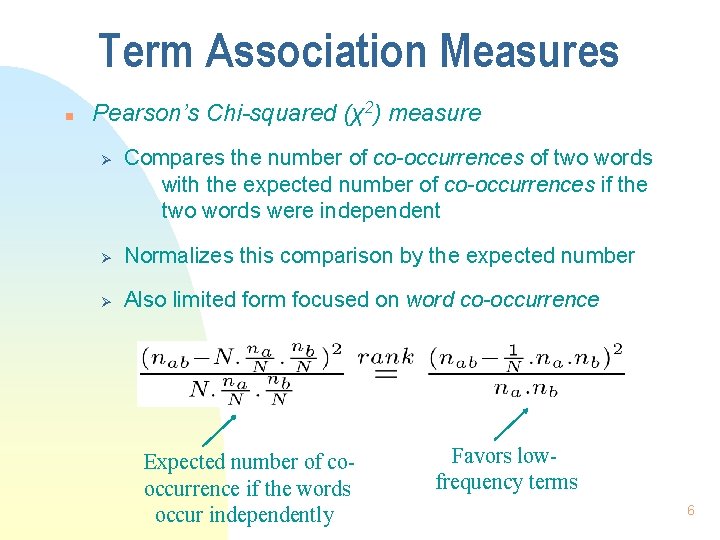

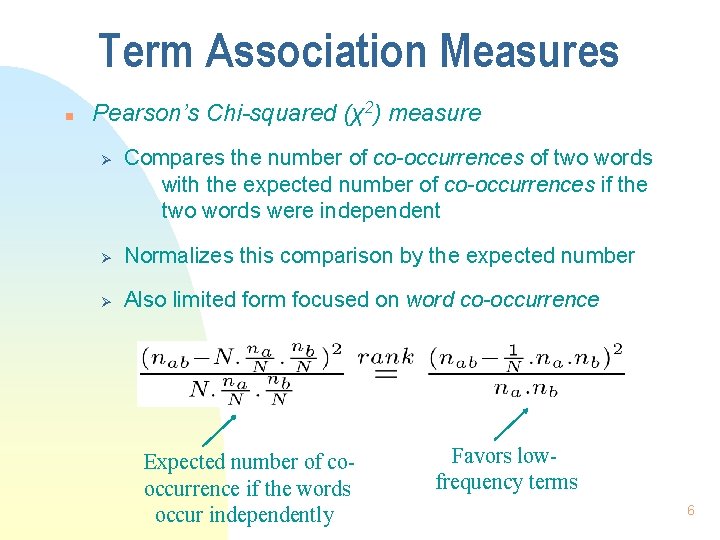

Term Association Measures

- Pearson's chi-squared (χ²) measure
  - Compares the number of co-occurrences of two words with the expected number of co-occurrences if the two words were independent
  - Normalizes this comparison by the expected number
  - Also uses a limited form focused on word co-occurrence
  - In the formula, N · P(a) · P(b) = n_a · n_b / N is the expected number of co-occurrences if the words occur independently
  - Favors low-frequency terms
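The limited χ² form can be sketched as follows, assuming χ²(a, b) = (n_ab − E)²/E with E = n_a·n_b/N; it looks only at the co-occurrence cell of the 2×2 contingency table rather than summing over all four cells. Function names are illustrative.

```python
def chi_squared(n_a: int, n_b: int, n_ab: int, n: int) -> float:
    """Limited chi-squared: (n_ab - E)^2 / E, with E = n_a * n_b / N."""
    expected = n_a * n_b / n  # expected co-occurrences if a and b were independent
    return (n_ab - expected) ** 2 / expected


def chi_squared_rank(n_a: int, n_b: int, n_ab: int, n: int) -> float:
    """Rank-equivalent form: divide by n_a * n_b instead of E (drops the constant N)."""
    return (n_ab - n_a * n_b / n) ** 2 / (n_a * n_b)


if __name__ == "__main__":
    # Hypothetical counts: N = 10000, n_a = 100, n_b = 200, so E = 2.
    print(chi_squared(100, 200, 100, 10000))  # 4802.0
    print(chi_squared(100, 200, 2, 10000))    # 0.0: exactly as often as independence predicts
```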

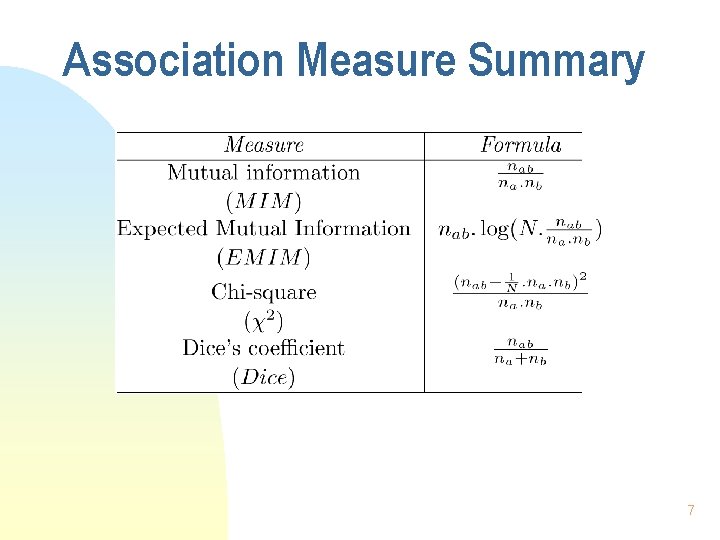

Association Measure Summary

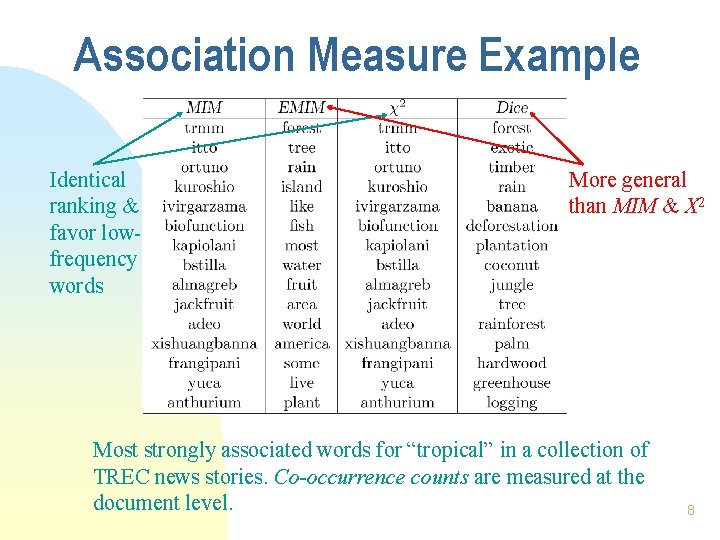

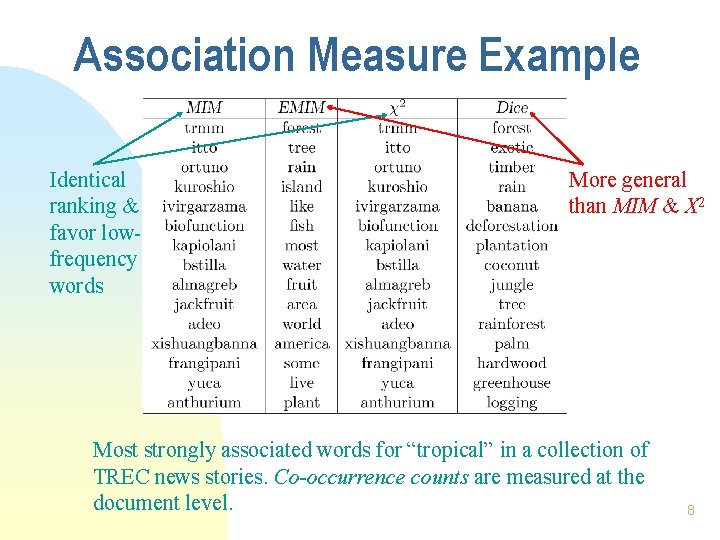

Association Measure Example

Most strongly associated words for "tropical" in a collection of TREC news stories. Co-occurrence counts are measured at the document level.

- Annotations on the table:
  - Identical rankings that favor low-frequency words
  - More general than MIM and χ²
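Document-level counting, as used for this table, can be sketched on a toy corpus: each word counts at most once per document, and candidates are ranked by rank-equivalent Dice. The corpus and function names are hypothetical; this is not the TREC setup behind the table.

```python
from collections import Counter
from itertools import combinations


def doc_counts(docs):
    """Document frequencies n_x and pairwise co-occurrence counts n_xy (document level)."""
    df, co = Counter(), Counter()
    for doc in docs:
        terms = set(doc)  # a word counts once per document, however often it appears
        df.update(terms)
        for a, b in combinations(sorted(terms), 2):
            co[(a, b)] += 1  # pairs are stored in sorted order
    return df, co


def associated(target, docs, top_k=5):
    """Words most strongly associated with `target`, scored by rank-equivalent Dice."""
    df, co = doc_counts(docs)
    scores = {}
    for other in df:
        if other == target:
            continue
        n_ab = co[tuple(sorted((target, other)))]
        if n_ab:
            scores[other] = n_ab / (df[target] + df[other])
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:top_k]


if __name__ == "__main__":
    docs = [s.split() for s in ["tropical fish aquarium", "tropical fish tank",
                                "tropical storm", "fish market"]]
    print(associated("tropical", docs))
```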

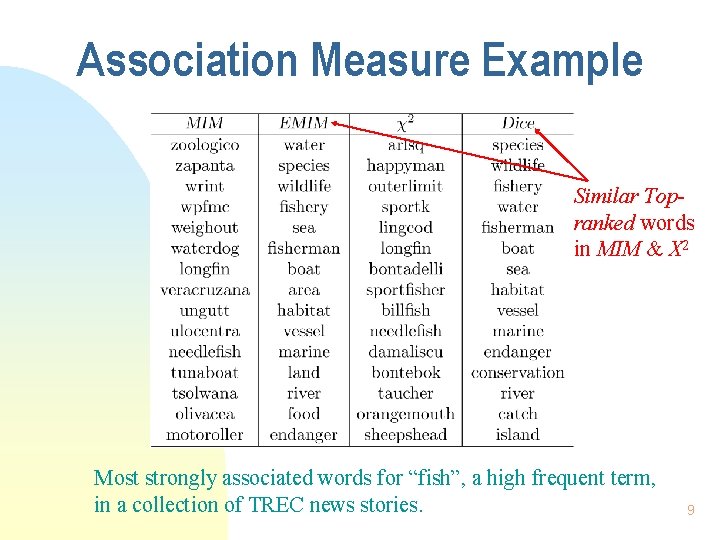

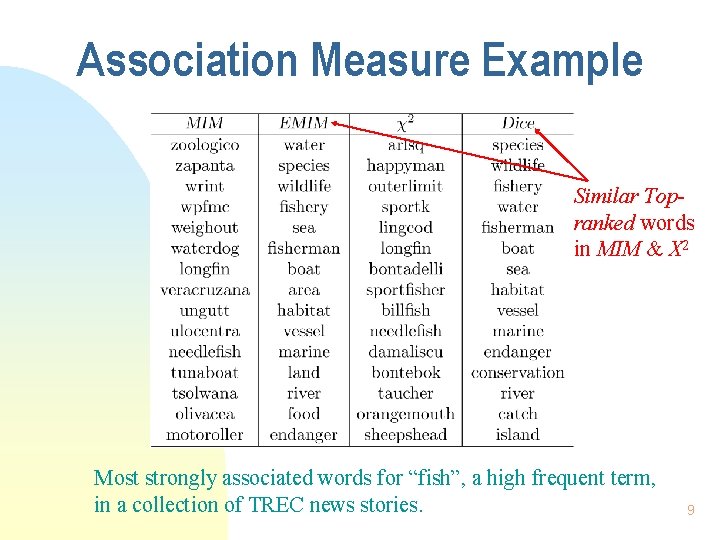

Association Measure Example

Most strongly associated words for "fish", a high-frequency term, in a collection of TREC news stories.

- Annotation on the table: MIM and χ² give similar top-ranked words

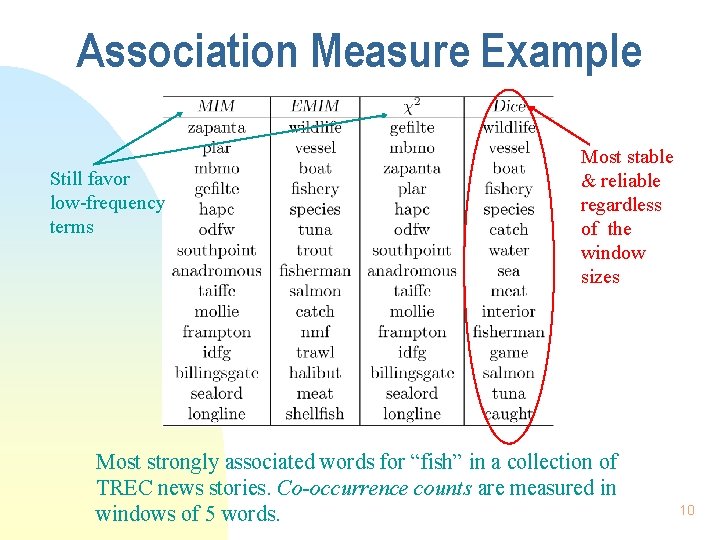

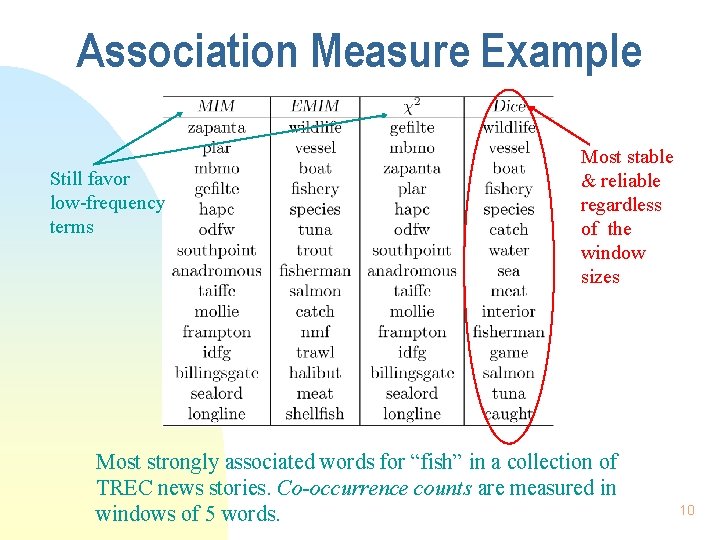

Association Measure Example

Most strongly associated words for "fish" in a collection of TREC news stories. Co-occurrence counts are measured in windows of 5 words.

- Annotations on the table:
  - Still favor low-frequency terms
  - Most stable and reliable regardless of the window size
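Window-level counting changes only what counts as a unit. This minimal sketch assumes one simple definition: each text is cut into consecutive, non-overlapping windows of `size` words, and every window is treated as a "document", so N becomes the number of windows. Sliding-window definitions are also common, and the slide does not say which one was used; the function names are hypothetical.

```python
from collections import Counter
from itertools import combinations


def windows(tokens, size=5):
    """Cut a token list into consecutive, non-overlapping windows of `size` words."""
    if size <= 0:
        raise ValueError("window size must be positive")
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]


def window_counts(texts, size=5):
    """Return (n_x, n_xy, N), treating every window as a unit instead of a document."""
    n_x, n_xy, n_windows = Counter(), Counter(), 0
    for tokens in texts:
        for win in windows(tokens, size):
            n_windows += 1
            terms = set(win)
            n_x.update(terms)
            for a, b in combinations(sorted(terms), 2):
                n_xy[(a, b)] += 1  # pairs are stored in sorted order
    return n_x, n_xy, n_windows


if __name__ == "__main__":
    text = "tropical fish live in warm water and tropical fish need care".split()
    n_x, n_xy, n = window_counts([text], size=5)
    print(n, n_x["tropical"], n_xy[("fish", "tropical")])
```

The resulting counts plug into the same Dice, MIM, EMIM and χ² formulas, with N set to the number of windows.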

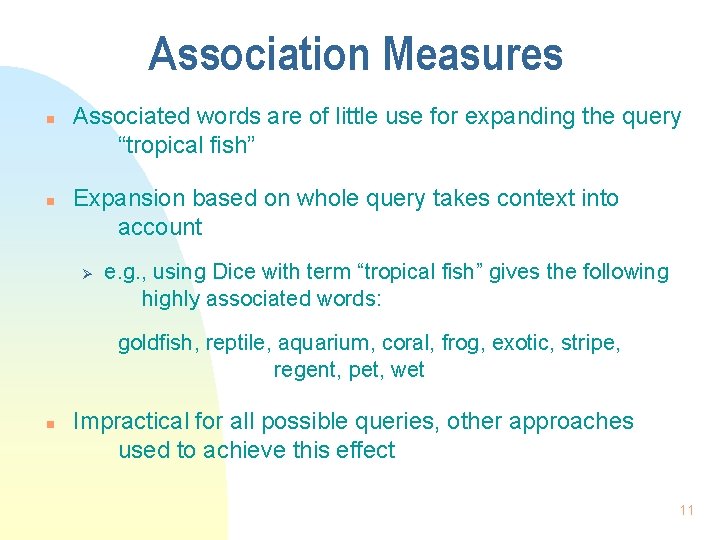

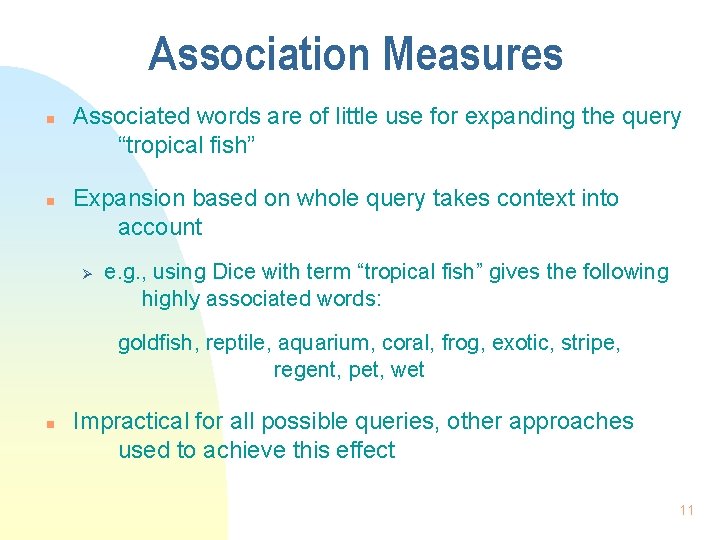

Association Measures

- Words associated with the individual query terms are of little use for expanding the query "tropical fish".
- Expansion based on the whole query takes context into account.
  - e.g., using Dice's coefficient with the term "tropical fish" gives the following highly associated words: goldfish, reptile, aquarium, coral, frog, exotic, stripe, regent, pet, wet
- This is impractical for all possible queries, so other approaches are used to achieve this effect.
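Treating the whole query as one unit can be sketched by counting the documents that contain the query as a phrase and scoring every other word with Dice's coefficient against that count. Phrase matching, the toy documents and the function names are assumptions for illustration; this is not how the example word list on the slide was produced.

```python
from collections import Counter


def contains_phrase(tokens, phrase):
    """True if `phrase` (a list of words) occurs as a contiguous run in `tokens`."""
    k = len(phrase)
    return any(tokens[i:i + k] == phrase for i in range(len(tokens) - k + 1))


def expand_whole_query(query, docs, top_k=5):
    """Dice association between the whole query (as a phrase) and every other word."""
    phrase = query.split()
    n_q = 0          # documents containing the query phrase
    df = Counter()   # documents containing each word
    co = Counter()   # documents containing both the phrase and the word
    for tokens in docs:
        terms = set(tokens)
        df.update(terms)
        if contains_phrase(tokens, phrase):
            n_q += 1
            co.update(terms)
    scores = {w: 2 * co[w] / (n_q + df[w]) for w in co if w not in phrase}
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:top_k]


if __name__ == "__main__":
    docs = [s.split() for s in ["tropical fish need heated aquarium",
                                "tropical fish aquarium coral",
                                "tropical storm warning", "fish market prices"]]
    print(expand_whole_query("tropical fish", docs))
```

Words like "storm" or "market", which associate with only one of the two query terms, never score because they never co-occur with the phrase.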

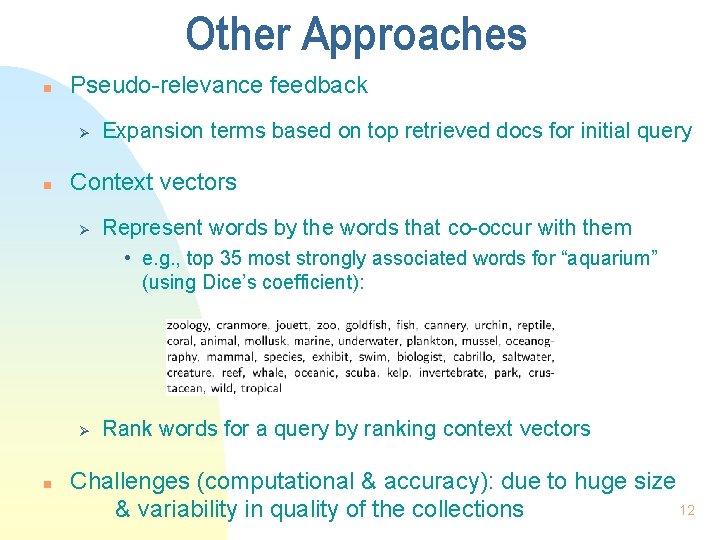

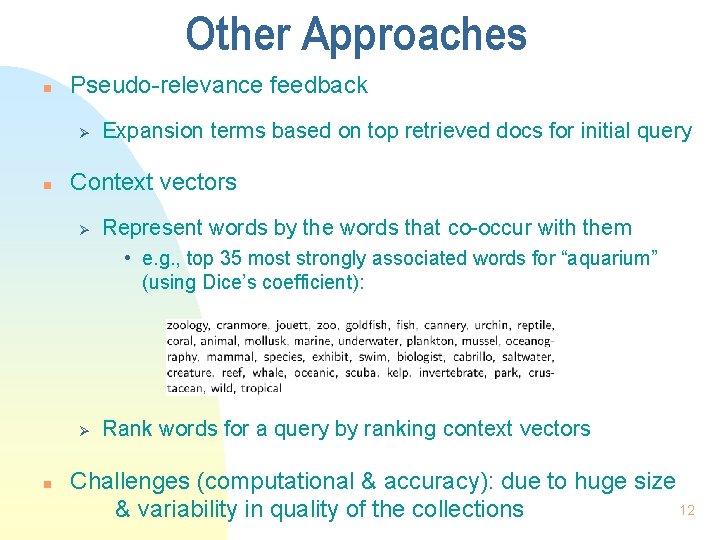

Other Approaches

- Pseudo-relevance feedback
  - Expansion terms are based on the top-retrieved documents for the initial query.
- Context vectors
  - Represent words by the words that co-occur with them.
    - e.g., top 35 most strongly associated words for "aquarium" (using Dice's coefficient)
  - Rank words for a query by ranking their context vectors.
- Challenges (computational cost and accuracy) arise from the huge size and variable quality of the collections.
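Context vectors can be sketched as sparse dictionaries mapping each word to its Dice scores with co-occurring words. Here the query vector is taken as the sum of its terms' context vectors, and candidates are ranked by cosine similarity; summing and cosine are assumptions for this sketch, not details given on the slide. The toy corpus is hypothetical.

```python
import math
from collections import Counter, defaultdict
from itertools import combinations


def context_vectors(docs):
    """Map each word to {co-occurring word: Dice score}, counted at document level."""
    df, co = Counter(), Counter()
    for doc in docs:
        terms = set(doc)
        df.update(terms)
        for a, b in combinations(sorted(terms), 2):
            co[(a, b)] += 1
    vectors = defaultdict(dict)
    for (a, b), n_ab in co.items():
        score = 2 * n_ab / (df[a] + df[b])
        vectors[a][b] = score
        vectors[b][a] = score
    return vectors


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(w * v.get(k, 0.0) for k, w in u.items())
    norm = math.sqrt(sum(w * w for w in u.values())) * math.sqrt(sum(w * w for w in v.values()))
    return dot / norm if norm else 0.0


def rank_for_query(query_terms, vectors):
    """Rank non-query words by how similar their context vector is to the query's."""
    q = Counter()
    for t in query_terms:
        q.update(vectors.get(t, {}))  # sum the query terms' context vectors
    candidates = [w for w in vectors if w not in query_terms]
    return sorted(candidates, key=lambda w: (-cosine(q, vectors[w]), w))


if __name__ == "__main__":
    docs = [s.split() for s in ["tropical fish aquarium", "tropical fish coral",
                                "aquarium fish coral", "stock market crash"]]
    print(rank_for_query(["tropical", "fish"], context_vectors(docs)))
```

Precomputing one vector per vocabulary word is what makes this feasible for arbitrary queries, but on a real collection the vectors become very large, which is the computational challenge the slide mentions.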

Other Approaches

- Query logs
  - Best source of information about queries and related terms
    - short pieces of text and click data
  - e.g., most frequent words in queries containing "tropical fish" from the MSN log: stores, pictures, live, sale, types, clipart, blue, freshwater, aquarium, supplies
  - Query suggestion based on finding similar queries
    - grouped based on click data
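Grouping queries by click data can be sketched as follows. The sketch assumes a log of (query, clicked URL) pairs and uses the Jaccard overlap of clicked-URL sets as the similarity between two queries; both the log format and the choice of Jaccard are assumptions, and the URLs are placeholders.

```python
from collections import defaultdict


def clicks_by_query(log):
    """Collect the set of clicked URLs for each query; `log` holds (query, url) pairs."""
    clicks = defaultdict(set)
    for query, url in log:
        clicks[query].add(url)
    return clicks


def jaccard(a, b):
    """Overlap of two URL sets: |a & b| / |a | b|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest(query, log, top_k=3):
    """Suggest other queries whose clicked URLs overlap with those of `query`."""
    clicks = clicks_by_query(log)
    target = clicks.get(query, set())
    scored = [(jaccard(target, urls), q) for q, urls in clicks.items() if q != query]
    scored = [(s, q) for s, q in scored if s > 0]
    scored.sort(key=lambda sq: (-sq[0], sq[1]))
    return [q for _, q in scored[:top_k]]


if __name__ == "__main__":
    log = [("tropical fish", "example.com/a"), ("tropical fish", "example.com/b"),
           ("tropical fish store", "example.com/a"), ("tropical fish store", "example.com/b"),
           ("aquarium supplies", "example.com/b"), ("aquarium supplies", "example.com/c"),
           ("car insurance", "example.com/d")]
    print(suggest("tropical fish", log))
```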

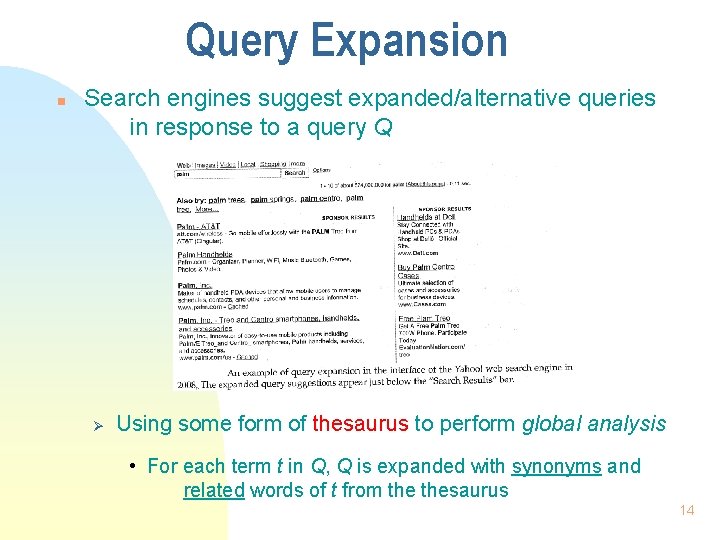

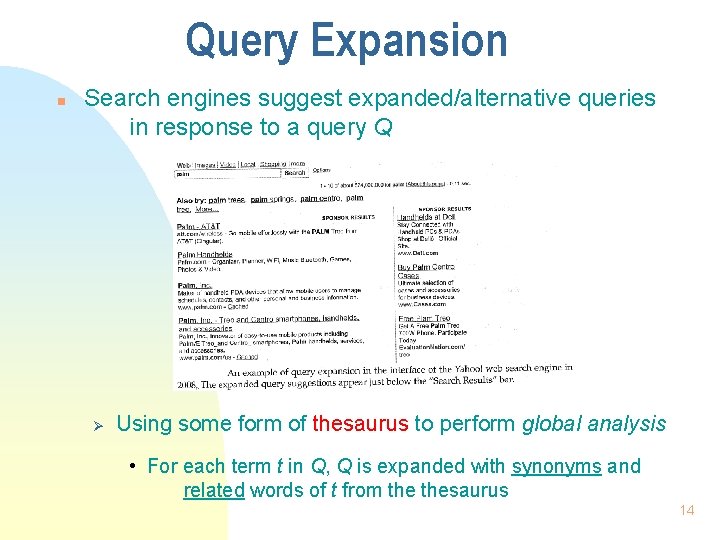

Query Expansion

- Search engines suggest expanded or alternative queries in response to a query Q.
  - They use some form of thesaurus to perform global analysis:
    - for each term t in Q, Q is expanded with synonyms and related words of t from the thesaurus.
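Per-term thesaurus expansion can be sketched with a plain dictionary standing in for the thesaurus. The dictionary and the function name are hypothetical; real thesauri such as LCSH are far richer. Because each term is expanded on its own, the sketch also reproduces the context problem noted earlier: "fish" pulls in "seafood" even in an aquarium query.

```python
def expand_query(query, thesaurus):
    """Append thesaurus entries for every query term, keeping the original terms first."""
    terms = query.split()
    expanded = list(terms)
    for t in terms:
        for related in thesaurus.get(t, []):
            if related not in expanded:  # avoid duplicate terms
                expanded.append(related)
    return " ".join(expanded)


if __name__ == "__main__":
    thesaurus = {"tank": ["aquarium", "container"], "fish": ["seafood"]}
    print(expand_query("tropical fish tank", thesaurus))
    # tropical fish tank seafood aquarium container
```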

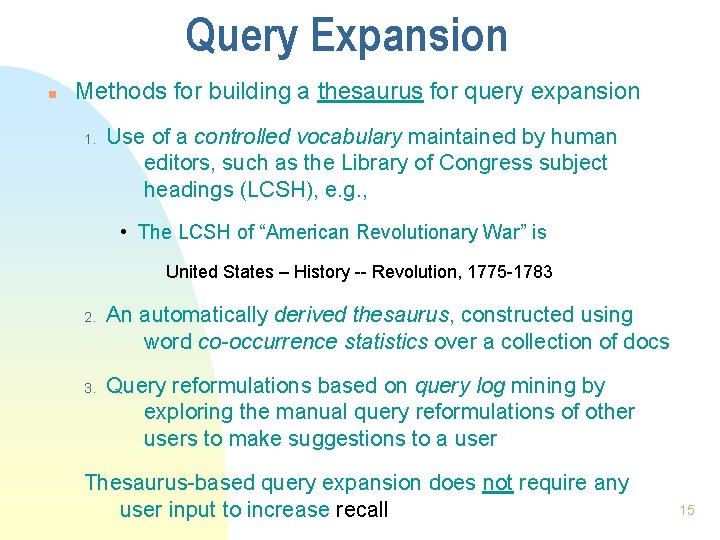

Query Expansion

- Methods for building a thesaurus for query expansion:
  1. Use a controlled vocabulary maintained by human editors, such as the Library of Congress Subject Headings (LCSH); e.g.,
     - the LCSH for "American Revolutionary War" is United States--History--Revolution, 1775-1783
  2. Use an automatically derived thesaurus, constructed from word co-occurrence statistics over a collection of documents.
  3. Use query reformulations based on query log mining, which exploits the manual query reformulations of other users to make suggestions to a user.
- Thesaurus-based query expansion does not require any user input to increase recall.

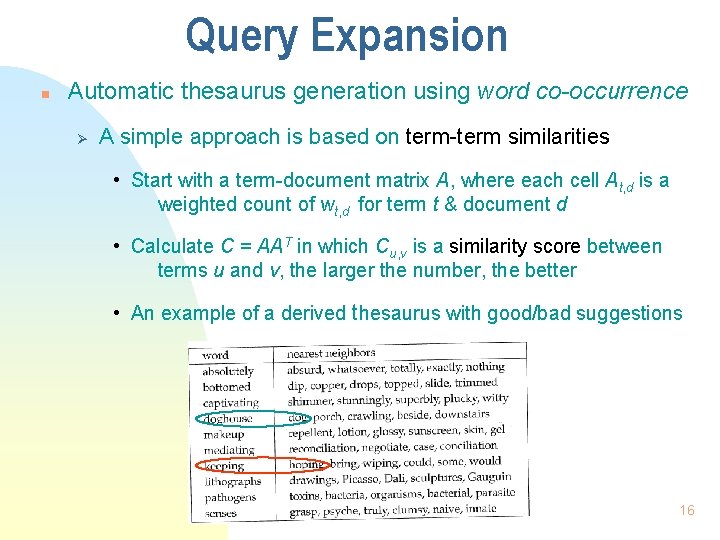

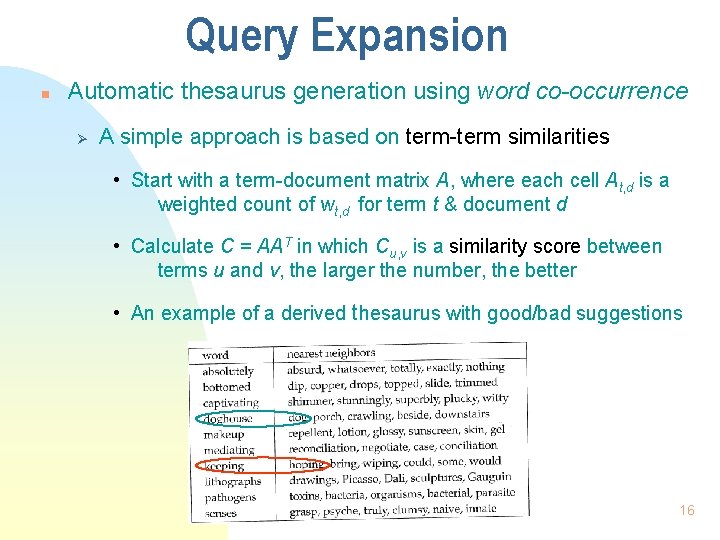

Query Expansion

- Automatic thesaurus generation using word co-occurrence
  - A simple approach is based on term-term similarities:
    - Start with a term-document matrix $A$, where each cell $A_{t,d}$ is a weighted count $w_{t,d}$ for term $t$ and document $d$.
    - Calculate $C = AA^T$, in which $C_{u,v}$ is a similarity score between terms $u$ and $v$; the larger the number, the better.
    - An example of a derived thesaurus with good and bad suggestions
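The $C = AA^T$ step can be sketched in pure Python with a tiny, hypothetical term-document matrix: rows are terms, columns are documents, and cells are weights $w_{t,d}$. A real system would use a sparse matrix library; the function names here are illustrative.

```python
def term_similarity(a):
    """C = A A^T for a term-document matrix given as a list of rows (one row per term)."""
    return [[sum(x * y for x, y in zip(row_u, row_v)) for row_v in a] for row_u in a]


def thesaurus_entry(term, terms, a, top_k=2):
    """The `top_k` terms with the largest C[u][v] for term u (zero scores are dropped)."""
    c = term_similarity(a)
    u = terms.index(term)
    others = [(c[u][v], terms[v]) for v in range(len(terms)) if v != u]
    others.sort(key=lambda sv: (-sv[0], sv[1]))
    return [t for s, t in others[:top_k] if s > 0]


if __name__ == "__main__":
    terms = ["fish", "aquarium", "tank", "stock"]
    A = [[2, 1, 0],   # fish
         [1, 1, 0],   # aquarium
         [1, 0, 0],   # tank
         [0, 0, 3]]   # stock
    print(thesaurus_entry("fish", terms, A))
```

Each entry $C_{u,v}$ is the dot product of two term rows, so terms that carry weight in the same documents get large scores; $C$ is symmetric, and its diagonal holds each term's own squared norm.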

Query Expansion

- The quality of term associations is typically a problem in an automatically generated thesaurus.
  - Term ambiguity easily introduces irrelevant but statistically correlated terms; e.g., "Apple" can be expanded to "Apple red fruit computer".
    - Such thesauri suffer from false positives (FP) and false negatives (FN).
  - Manually producing and updating a thesaurus is costly.
- Query expansion often increases recall but may also significantly decrease precision, especially when the query contains ambiguous terms; e.g., expanding "interest rate" to "interest rate fascinate evaluate" is unlikely to be useful.