Query processing optimizations Paolo Ferragina Dipartimento di Informatica

- Slides: 36

Query processing: optimizations

Paolo Ferragina, Dipartimento di Informatica, Università di Pisa

Reading 2.3

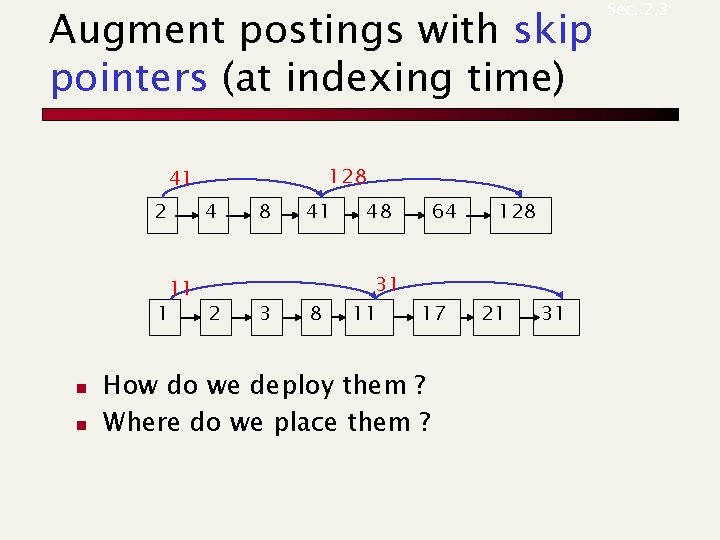

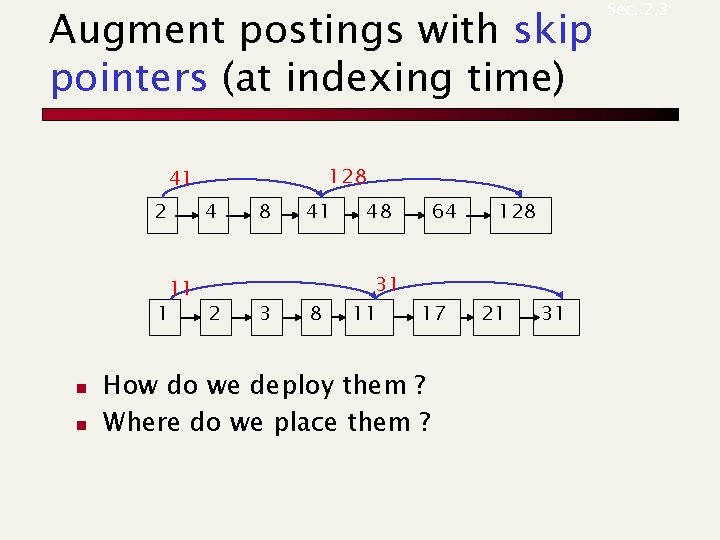

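Sec. 2.3 Augment postings with skip pointers (at indexing time)

[Figure: two postings lists with skip pointers. Upper list: 2, 4, 8, 41, 48, 64, 128, with skips to 41 and 128. Lower list: 1, 2, 3, 8, 11, 17, 21, 31, with skips to 11 and 31.]

- How do we deploy them?
- Where do we place them?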

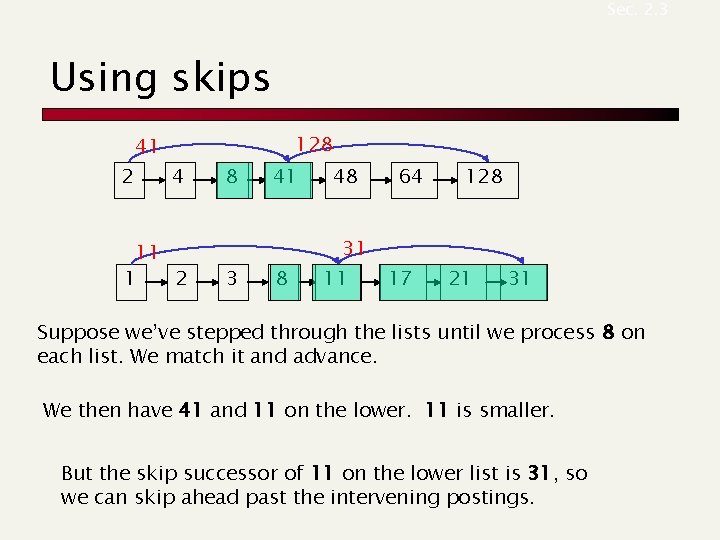

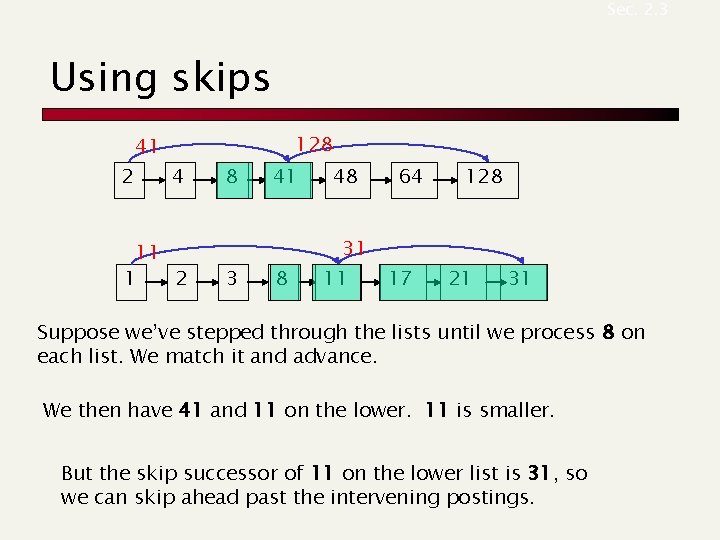

Sec. 2.3 Using skips

[Figure: postings lists 2, 4, 8, 41, 48, 64, 128 (skips to 41 and 128) and 1, 2, 3, 8, 11, 17, 21, 31 (skips to 11 and 31).]

Suppose we've stepped through the lists until we process 8 on each list. We match it and advance. We then have 41 on the upper list and 11 on the lower. 11 is smaller. But the skip successor of 11 on the lower list is 31, so we can skip ahead past the intervening postings.

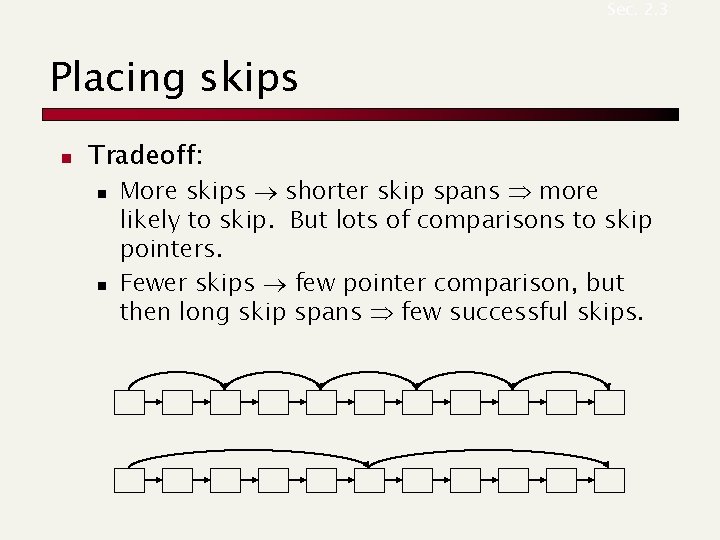

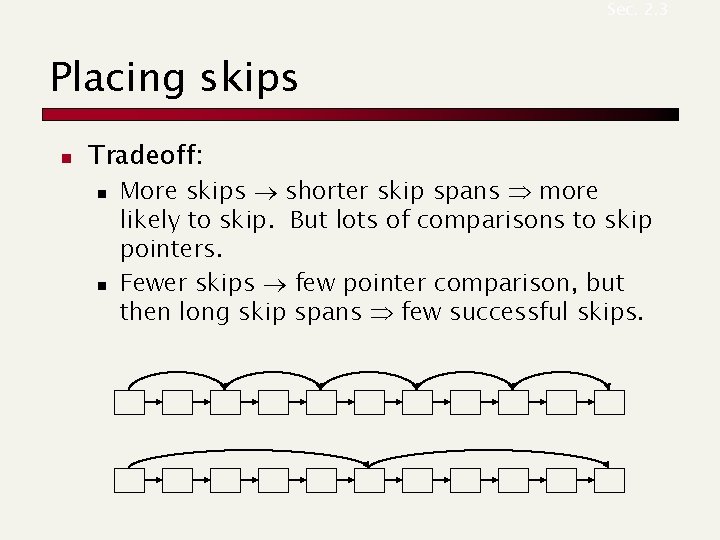

Sec. 2.3 Placing skips

- Tradeoff:
  - More skips → shorter skip spans ⇒ more likely to skip. But lots of comparisons to skip pointers.
  - Fewer skips → fewer pointer comparisons, but then long skip spans ⇒ few successful skips.

Sec. 2.3 Placing skips

- Simple heuristic: for postings of length L, use √L evenly-spaced skip pointers.
- This ignores the distribution of query terms.
- Easy if the index is relatively static; harder if L keeps changing because of updates.
- This definitely used to help; with modern hardware it may not, unless you're memory-based.
  - The I/O cost of loading a bigger postings list can outweigh the gains from quicker in-memory merging!
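The skip-based merge and the placement heuristic can be sketched together. This is a minimal illustration, not the lecture's implementation: it assumes roughly √L evenly spaced skip pointers (the usual form of the heuristic), and the names `build_skips` and `intersect_with_skips` are hypothetical.

```python
import math

def build_skips(postings):
    """Map a position to its skip target, using a span of about sqrt(L)."""
    n = len(postings)
    span = math.isqrt(n)
    if span < 2:          # list too short for skips to be useful
        return {}
    return {i: i + span for i in range(0, n - span, span)}

def intersect_with_skips(a, b):
    """Intersect two sorted docID lists, following skips when it is safe."""
    skips_a, skips_b = build_skips(a), build_skips(b)
    i = j = 0
    out = []
    while i < len(a) and j < len(b):
        if a[i] == b[j]:
            out.append(a[i])
            i += 1
            j += 1
        elif a[i] < b[j]:
            # Follow the skip only if its target does not overshoot b[j].
            if i in skips_a and a[skips_a[i]] <= b[j]:
                i = skips_a[i]
            else:
                i += 1
        else:
            if j in skips_b and b[skips_b[j]] <= a[i]:
                j = skips_b[j]
            else:
                j += 1
    return out

upper = [2, 4, 8, 41, 48, 64, 128]
lower = [1, 2, 3, 8, 11, 17, 21, 31]
print(intersect_with_skips(upper, lower))
```

A skip is taken only when its target is still no larger than the other list's current docID, so no potential match is jumped over. Each failed check is one of the extra comparisons the tradeoff slide refers to.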

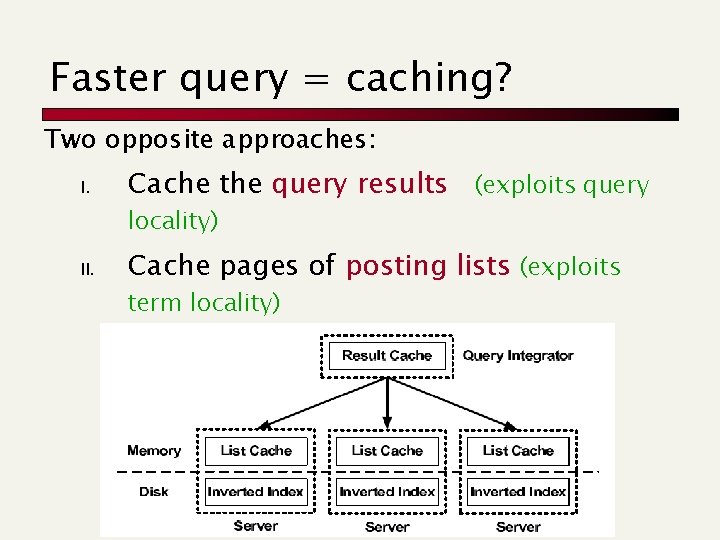

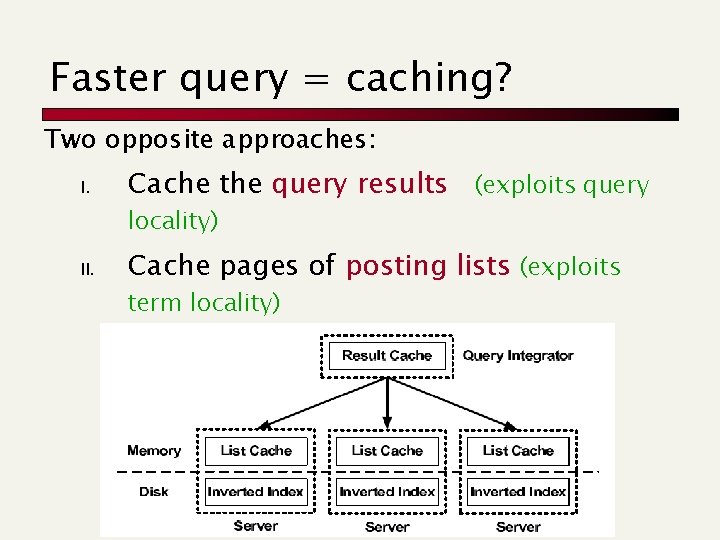

Faster query = caching?

Two opposite approaches:

I. Cache the query results (exploits query locality)
II. Cache pages of posting lists (exploits term locality)

Query processing: phrase queries and positional indexes

Reading 2.4

Sec. 2.4 Phrase queries

- Want to be able to answer queries such as “stanford university” as a phrase.
- Thus the sentence “I went at Stanford my university” is not a match.

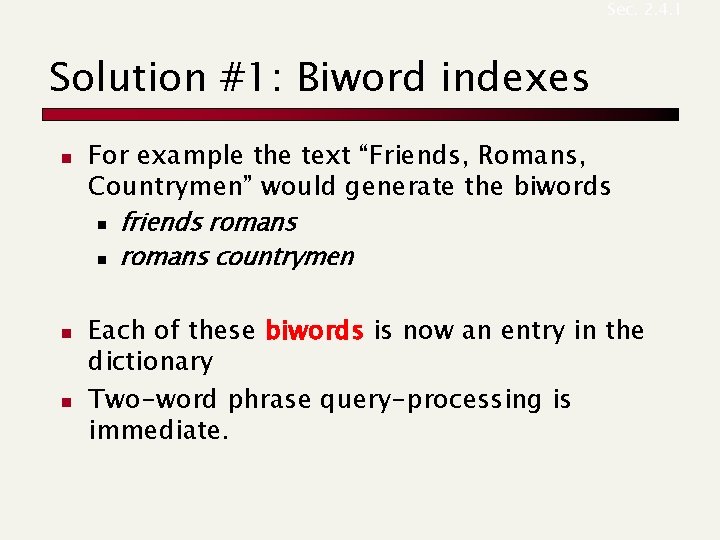

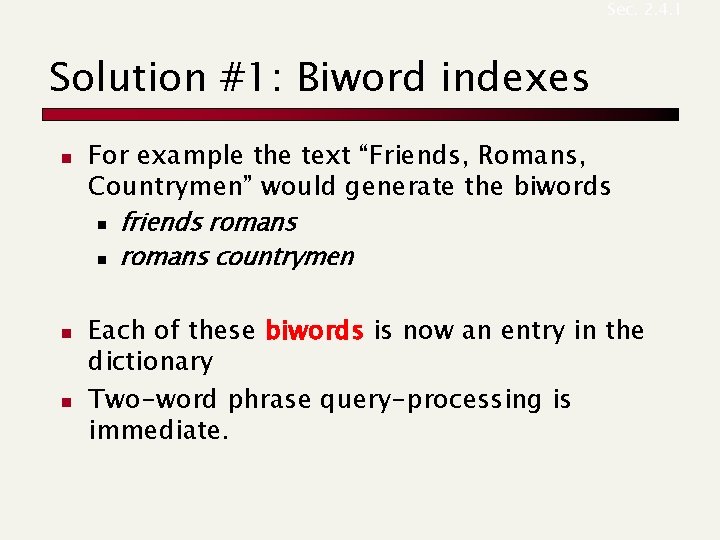

Sec. 2.4.1 Solution #1: Biword indexes

- Index every pair of consecutive terms in the text as a phrase (a biword).
- For example, the text “Friends, Romans, Countrymen” would generate the biwords
  - friends romans
  - romans countrymen
- Each of these biwords is now an entry in the dictionary.
- Two-word phrase query processing is immediate.

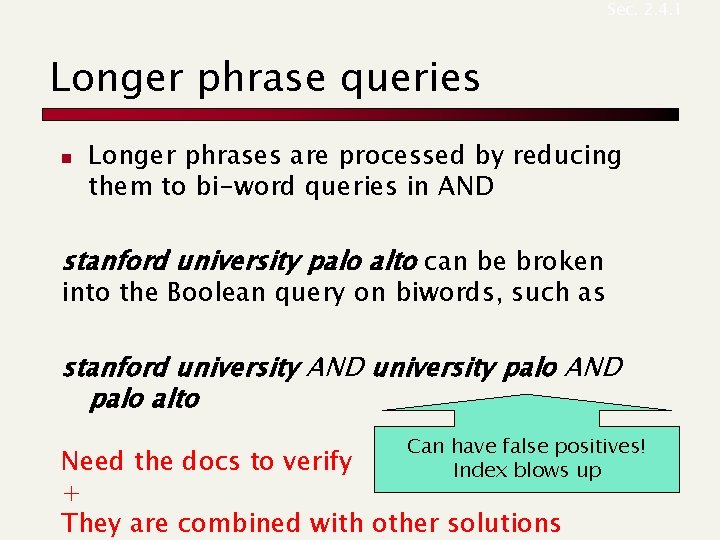

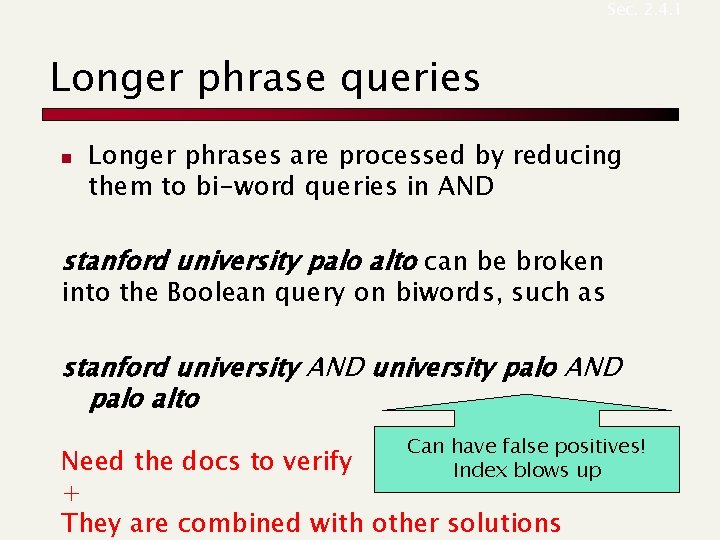

Sec. 2.4.1 Longer phrase queries

- Longer phrases are processed by reducing them to an AND of biword queries.
- stanford university palo alto can be broken into the Boolean query on biwords:
  - stanford university AND university palo AND palo alto
- Can have false positives! Need the docs to verify.
- Index blows up.
- They are combined with other solutions.
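A small sketch of the biword approach, including the false-positive problem. The tokenizer, the sample documents, and the function names are assumptions made for illustration only.

```python
import re
from collections import defaultdict

def tokenize(text):
    """Lowercase and split on non-alphanumerics (a simplifying assumption)."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_biword_index(docs):
    """Map each pair of consecutive tokens to the set of docs containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        tokens = tokenize(text)
        for first, second in zip(tokens, tokens[1:]):
            index[(first, second)].add(doc_id)
    return index

def phrase_candidates(index, phrase):
    """AND together the postings of every biword in the phrase."""
    tokens = tokenize(phrase)
    biwords = list(zip(tokens, tokens[1:]))
    if not biwords:
        return set()
    result = set(index.get(biwords[0], set()))
    for biword in biwords[1:]:
        result &= index.get(biword, set())
    return result

def verify(docs, phrase, candidates):
    """Drop false positives by checking the document text itself."""
    target = tokenize(phrase)
    n = len(target)
    confirmed = set()
    for doc_id in candidates:
        tokens = tokenize(docs[doc_id])
        if any(tokens[i:i + n] == target for i in range(len(tokens) - n + 1)):
            confirmed.add(doc_id)
    return confirmed

docs = {
    1: "Stanford University Palo Alto campus",
    2: "Palo Alto is near Stanford University; the university Palo visited",
}
index = build_biword_index(docs)
candidates = phrase_candidates(index, "stanford university palo alto")
print(candidates, verify(docs, "stanford university palo alto", candidates))
```

Document 2 contains all three biwords but never the four-word phrase, so it survives the AND and is only removed by the verification pass over the text.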

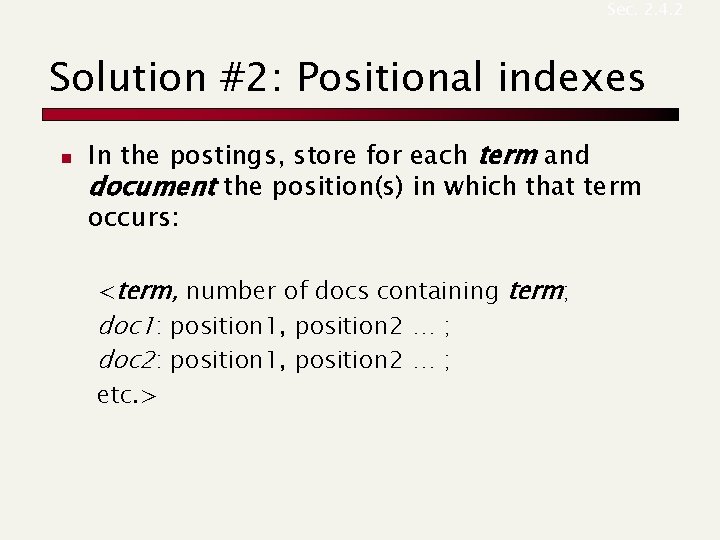

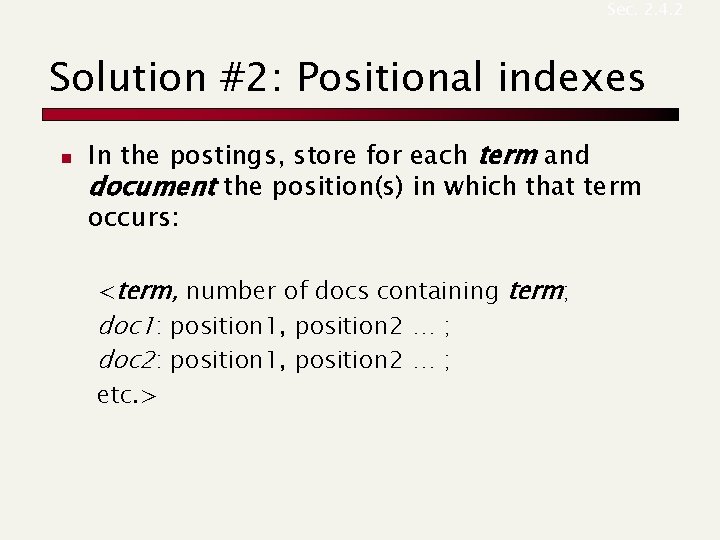

Sec. 2.4.2 Solution #2: Positional indexes

- In the postings, store for each term and document the position(s) in which that term occurs:

  <term, number of docs containing term;
  doc1: position1, position2 … ;
  doc2: position1, position2 … ;
  etc.>
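As a rough illustration of this layout, the sketch below builds a positional index as a nested dictionary. Whitespace tokenization, 1-based positions, and the name `build_positional_index` are assumptions, not details taken from the slides.

```python
from collections import defaultdict

def build_positional_index(docs):
    """Return term -> {doc_id: [positions]}, with positions counted from 1."""
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for position, term in enumerate(text.lower().split(), start=1):
            index[term].setdefault(doc_id, []).append(position)
    return index

docs = {1: "to be or not to be", 2: "be quick to act"}
index = build_positional_index(docs)
# The document frequency of a term is the number of docs in its postings.
print(len(index["to"]), index["to"])
```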

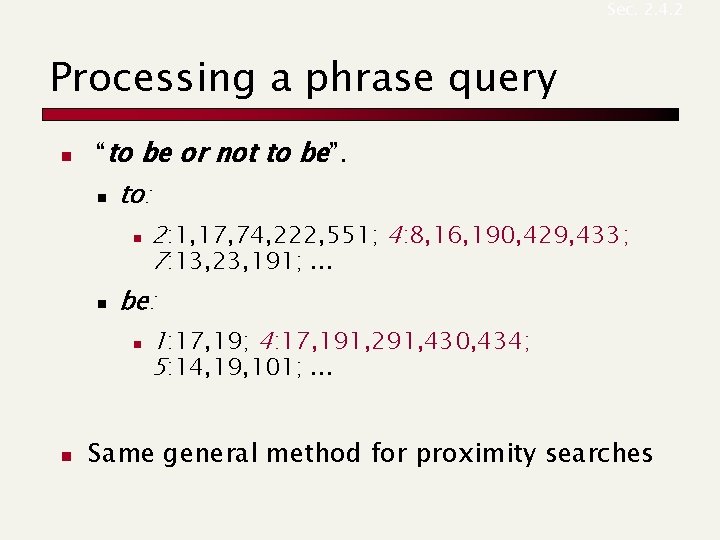

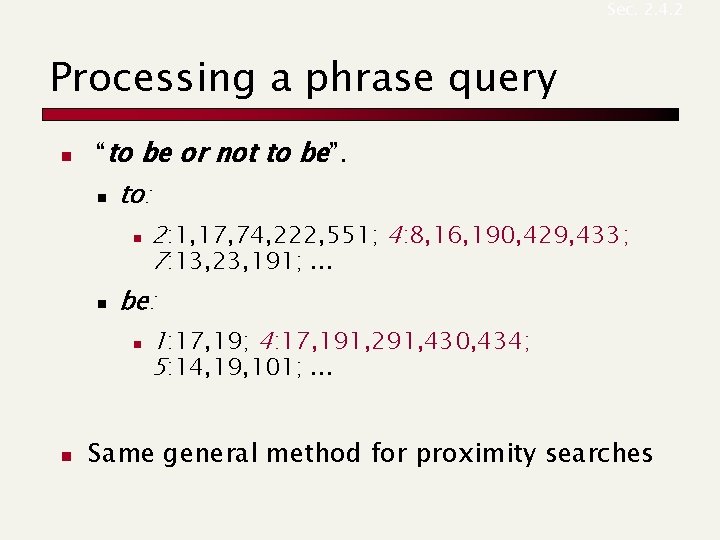

Sec. 2.4.2 Processing a phrase query

- Query: “to be or not to be”.
- to:
  - 2: 1, 17, 74, 222, 551; 4: 8, 16, 190, 429, 433; 7: 13, 23, 191; ...
- be:
  - 1: 17, 19; 4: 17, 191, 291, 430, 434; 5: 14, 19, 101; ...
- Same general method for proximity searches.
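The postings shown above are enough to try the position check for the two-word phrase "to be". The sketch uses set lookups instead of a linear merge of the position lists, purely for brevity; `adjacent_matches` and `within_k` are hypothetical names.

```python
def adjacent_matches(first, second, offset=1):
    """Docs and positions p where `first` is at p and `second` at p + offset."""
    hits = {}
    for doc_id in sorted(first.keys() & second.keys()):
        second_positions = set(second[doc_id])
        starts = [p for p in first[doc_id] if p + offset in second_positions]
        if starts:
            hits[doc_id] = starts
    return hits

def within_k(first, second, k):
    """Proximity variant: docs where the two terms occur at most k apart."""
    return sorted(
        doc_id
        for doc_id in first.keys() & second.keys()
        if any(abs(p - q) <= k for p in first[doc_id] for q in second[doc_id])
    )

# Postings copied from the example: doc_id -> positions.
to = {2: [1, 17, 74, 222, 551], 4: [8, 16, 190, 429, 433], 7: [13, 23, 191]}
be = {1: [17, 19], 4: [17, 191, 291, 430, 434], 5: [14, 19, 101]}

print(adjacent_matches(to, be))  # only doc 4 contains both terms
```

For the full six-word query the same check would be repeated for "or" and "not" at the appropriate offsets; their postings are not given in the example.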

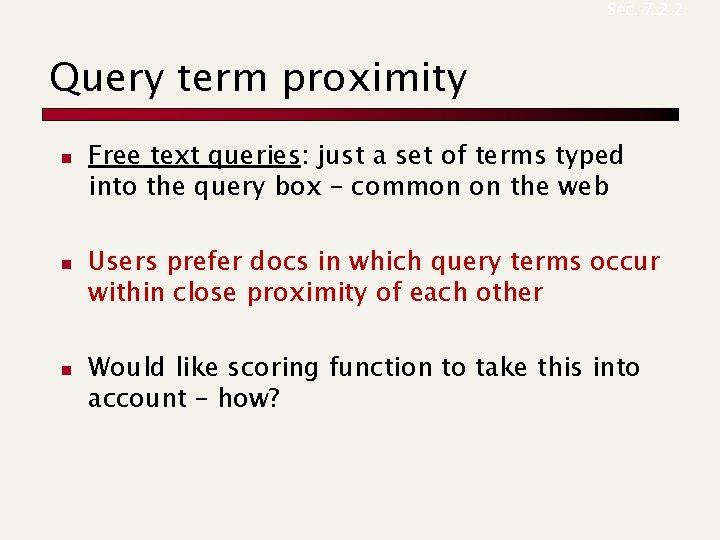

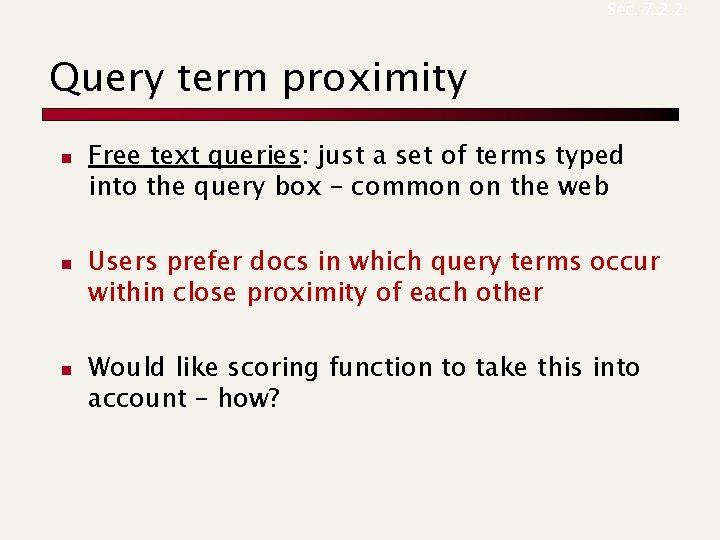

Sec. 7.2.2 Query term proximity

- Free text queries: just a set of terms typed into the query box, common on the web.
- Users prefer docs in which query terms occur within close proximity of each other.
- Would like the scoring function to take this into account. How?

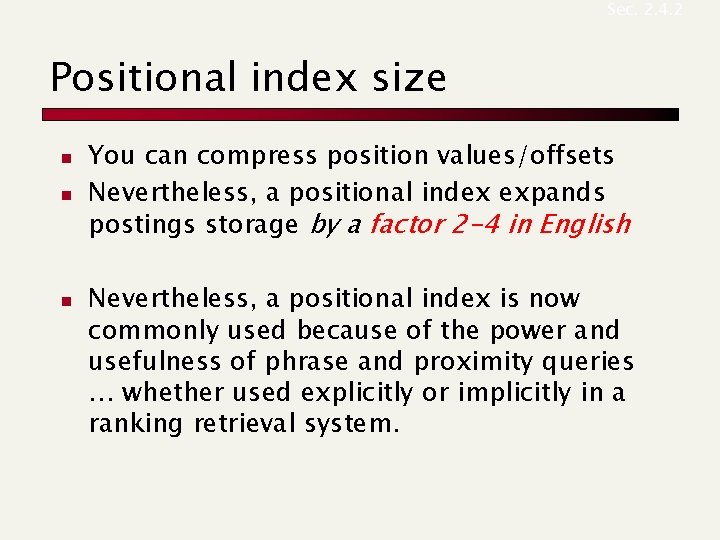

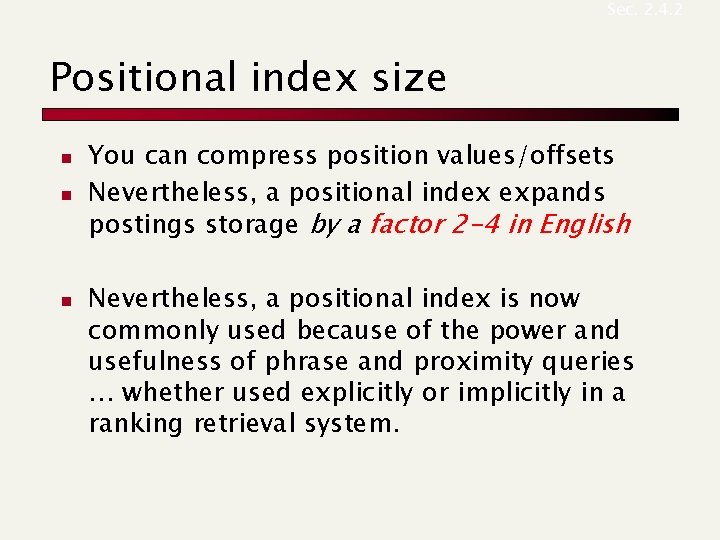

Sec. 2.4.2 Positional index size

- You can compress position values/offsets.
- Nevertheless, a positional index expands postings storage by a factor of 2-4 in English.
- Even so, a positional index is now commonly used because of the power and usefulness of phrase and proximity queries, whether used explicitly or implicitly in a ranking retrieval system.

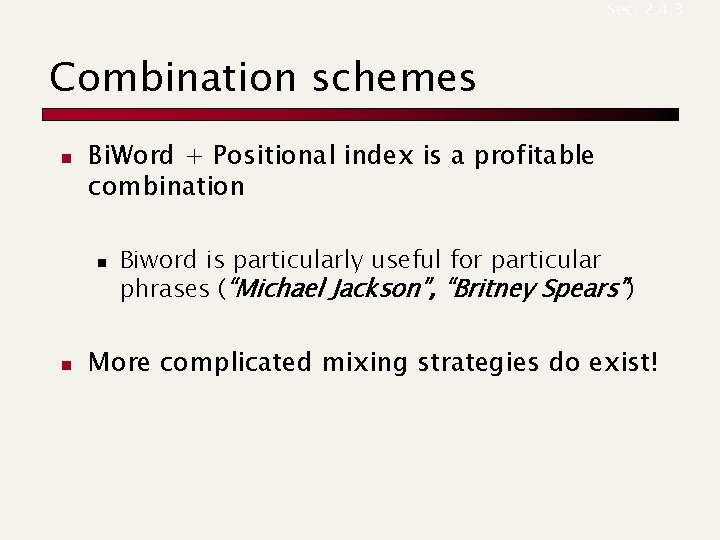

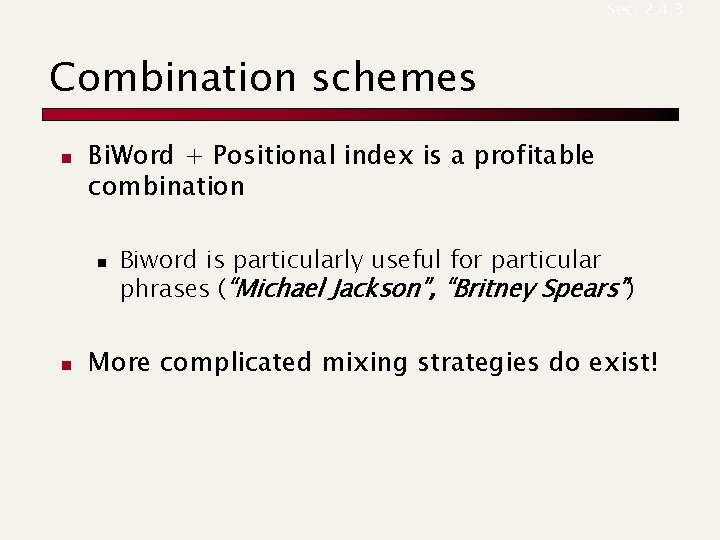

Sec. 2.4.3 Combination schemes

- Biword + positional index is a profitable combination.
  - A biword index is particularly useful for particular phrases (“Michael Jackson”, “Britney Spears”).
  - More complicated mixing strategies do exist!

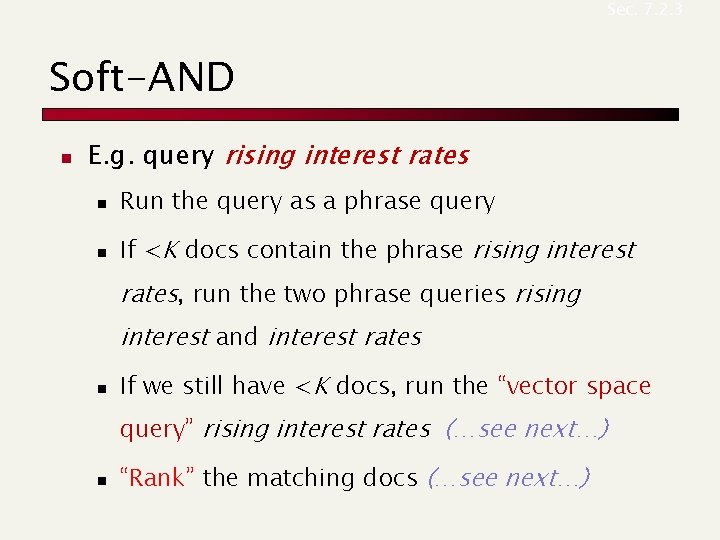

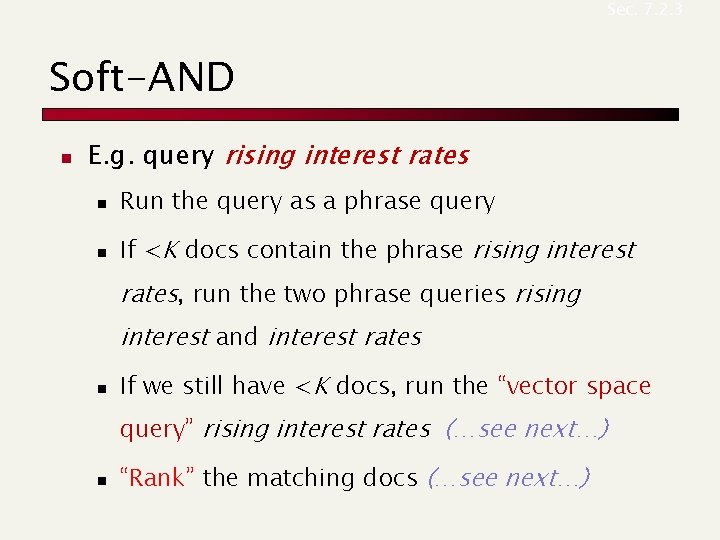

Sec. 7.2.3 Soft-AND

- E.g., query rising interest rates
  - Run the query as a phrase query.
  - If fewer than K docs contain the phrase rising interest rates, run the two phrase queries rising interest and interest rates.
  - If we still have fewer than K docs, run the “vector space query” rising interest rates (…see next…).
  - “Rank” the matching docs (…see next…).
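The back-off logic can be sketched as follows. The two retrieval functions are passed in as hypothetical callables (`phrase_search`, `vector_search`), results from the looser stages are simply appended, and the ranking step is left out; all of these are assumptions made for the sake of a short example.

```python
def soft_and(terms, k, phrase_search, vector_search):
    """Back off from strict to loose matching until at least k docs are found."""
    results = []

    def add(doc_ids):
        for doc_id in doc_ids:
            if doc_id not in results:
                results.append(doc_id)

    add(phrase_search(terms))                  # 1. the full phrase
    if len(results) < k:
        for i in range(len(terms) - 1):        # 2. every two-word sub-phrase
            add(phrase_search(terms[i:i + 2]))
    if len(results) < k:
        add(vector_search(terms))              # 3. free-text fallback
    return results

# Toy stand-ins for the real retrieval functions.
phrase_postings = {
    ("rising", "interest", "rates"): [1],
    ("rising", "interest"): [1, 2],
    ("interest", "rates"): [3],
}
phrase_search = lambda terms: phrase_postings.get(tuple(terms), [])
vector_search = lambda terms: [4, 1, 5]

print(soft_and(["rising", "interest", "rates"], 3, phrase_search, vector_search))
```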

Query processing: other sophisticated queries

Reading 3.2 and 3.3

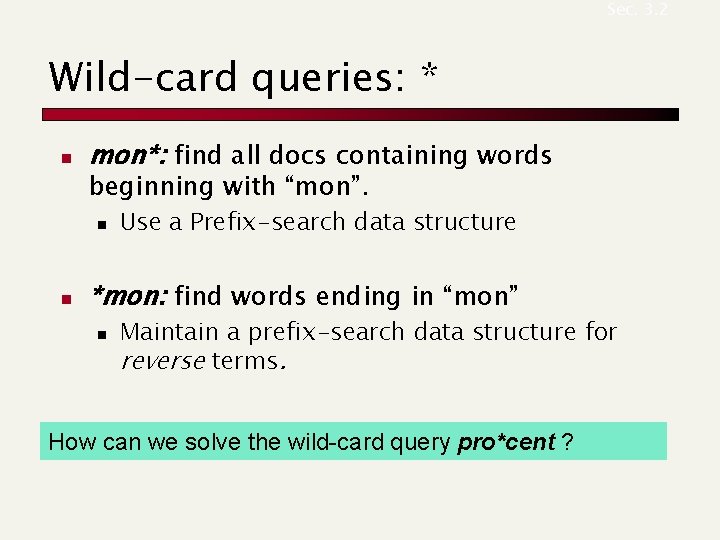

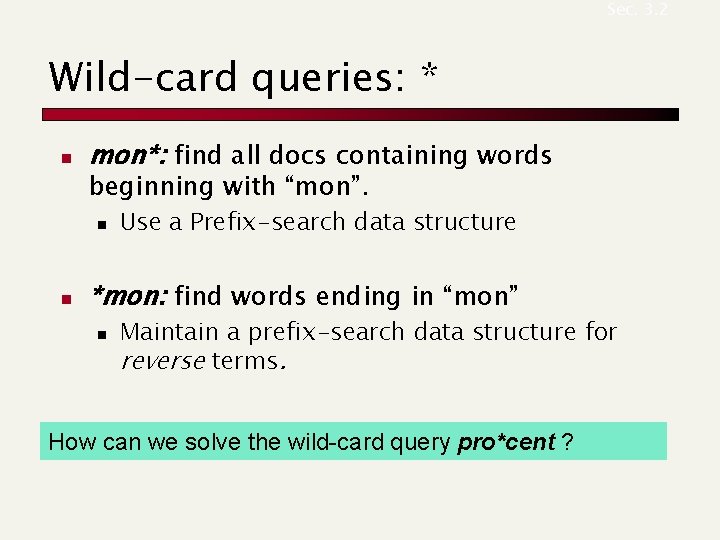

Sec. 3.2 Wild-card queries: *

- mon*: find all docs containing words beginning with “mon”.
  - Use a prefix-search data structure.
- *mon: find words ending in “mon”.
  - Maintain a prefix-search data structure for reversed terms.
- How can we solve the wild-card query pro*cent?

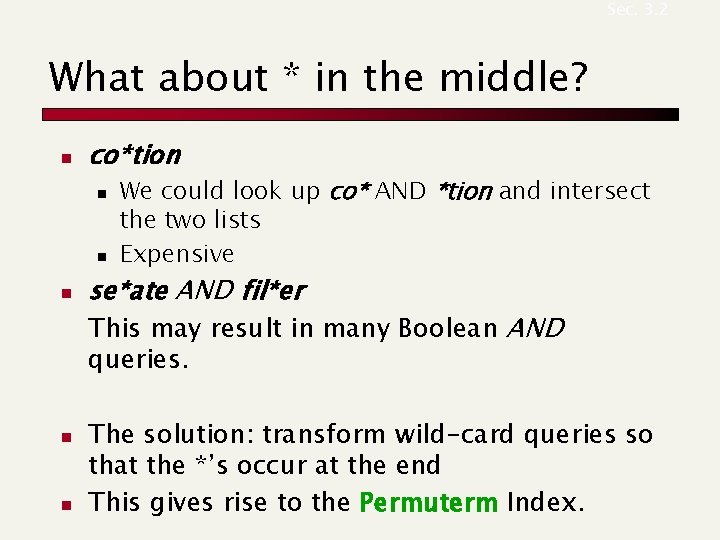

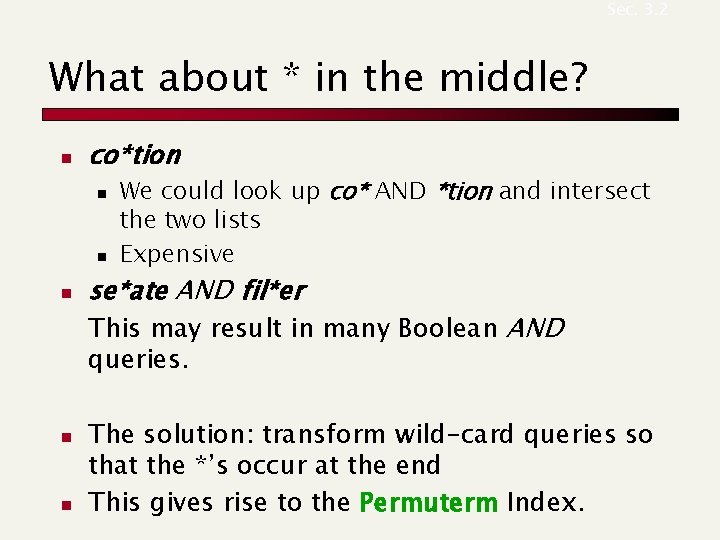

Sec. 3.2 What about * in the middle?

- co*tion
  - We could look up co* AND *tion and intersect the two lists.
  - Expensive.
- se*ate AND fil*er
  - This may result in many Boolean AND queries.
- The solution: transform wild-card queries so that the *'s occur at the end.
  - This gives rise to the Permuterm Index.
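A sketch of the prefix/suffix approach, using a sorted list with binary search as a stand-in for the prefix-search data structure (a trie or B-tree would serve equally well). The lexicon and the function names are made up for the example.

```python
from bisect import bisect_left

def prefix_search(sorted_terms, prefix):
    """All entries of a sorted list that start with `prefix`."""
    i = bisect_left(sorted_terms, prefix)
    found = []
    while i < len(sorted_terms) and sorted_terms[i].startswith(prefix):
        found.append(sorted_terms[i])
        i += 1
    return found

def starts_with(forward, prefix):
    return prefix_search(forward, prefix)

def ends_with(backward, suffix):
    # Search the reversed lexicon for the reversed suffix, then undo the reversal.
    return sorted(t[::-1] for t in prefix_search(backward, suffix[::-1]))

def middle_wildcard(forward, backward, prefix, suffix):
    """Answer prefix*suffix by intersecting the two lookups."""
    both = set(starts_with(forward, prefix)) & set(ends_with(backward, suffix))
    # The length check rejects terms where prefix and suffix would overlap.
    return sorted(t for t in both if len(t) >= len(prefix) + len(suffix))

lexicon = ["lemon", "salmon", "monday", "money", "month", "moon",
           "coalition", "cognition", "caution", "motion"]
forward = sorted(lexicon)
backward = sorted(t[::-1] for t in lexicon)

print(starts_with(forward, "mon"))
print(ends_with(backward, "mon"))
print(middle_wildcard(forward, backward, "co", "tion"))
```

Both lookups can return long lists that are mostly discarded by the intersection, which is the cost the slide calls expensive.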

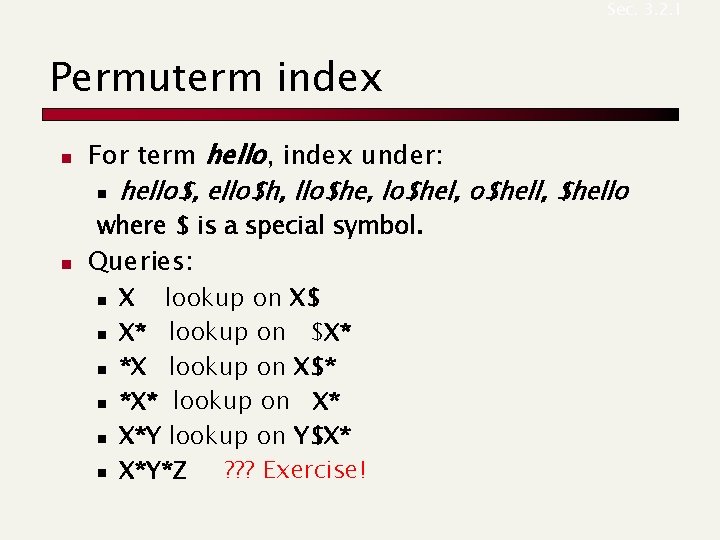

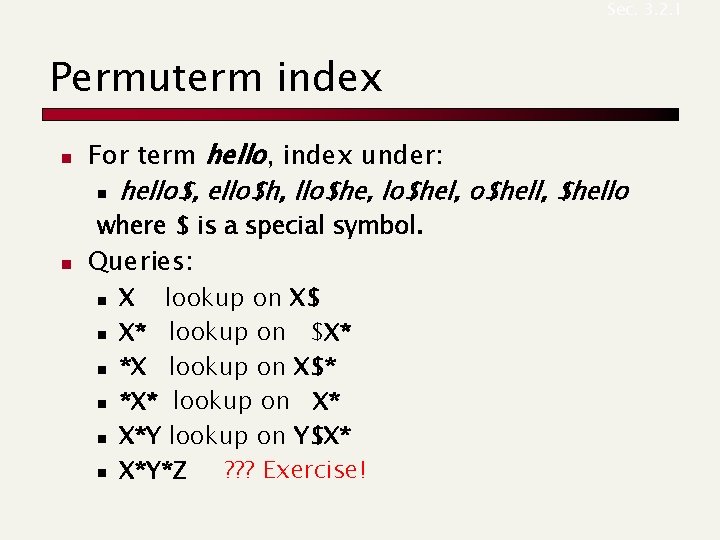

Sec. 3.2.1 Permuterm index

- For term hello, index under:
  - hello$, ello$h, llo$he, lo$hel, o$hell, $hello

  where $ is a special symbol.
- Queries:
  - X: lookup on X$
  - X*: lookup on $X*
  - *X: lookup on X$*
  - *X*: lookup on X*
  - X*Y: lookup on Y$X*
  - X*Y*Z: ??? Exercise!
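The rotations and the single-wildcard rewrites in the table above can be reproduced with a few lines. The function names are hypothetical, and queries with more than one `*` are deliberately rejected so the exercise stays open.

```python
def rotations(term):
    """All rotations of term + '$', i.e. the permuterm keys for one term."""
    s = term + "$"
    return [s[i:] + s[:i] for i in range(len(s))]

def permuterm_key(query):
    """Rewrite a query with at most one '*' so the wildcard comes last."""
    stars = query.count("*")
    if stars == 0:
        return query + "$"                 # X    -> X$
    if stars > 1:
        raise ValueError("only single-wildcard queries are handled here")
    head, tail = query.split("*")
    return tail + "$" + head + "*"         # X*Y  -> Y$X*

print(rotations("hello"))
print(permuterm_key("he*o"))
```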

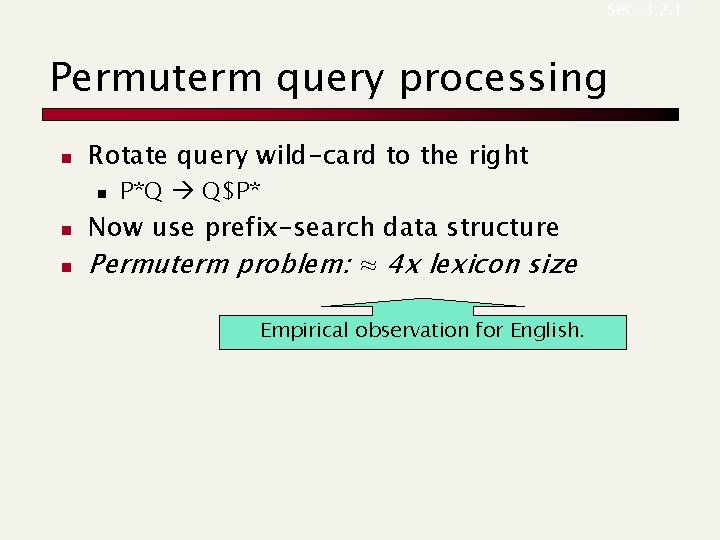

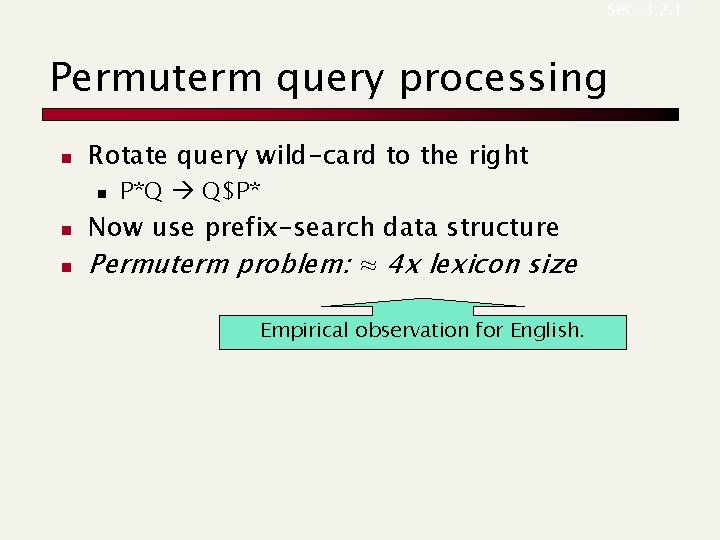

Sec. 3.2.1 Permuterm query processing

- Rotate the query so that the wild-card is on the right:
  - P*Q → Q$P*
- Now use the prefix-search data structure.
- Permuterm problem: ≈ 4x lexicon size (empirical observation for English).
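Putting rotation and prefix search together gives a complete, if naive, permuterm lookup. The sketch assumes a sorted list of (rotation, term) pairs searched with `bisect` and handles exactly one `*` per query; the names and the sample lexicon are illustrative.

```python
from bisect import bisect_left

def build_permuterm(lexicon):
    """Sorted list of (rotation, original term) pairs."""
    entries = []
    for term in lexicon:
        s = term + "$"
        entries.extend((s[i:] + s[:i], term) for i in range(len(s)))
    return sorted(entries)

def wildcard_lookup(entries, query):
    """Answer a query containing exactly one '*' (P*Q, P* or *Q)."""
    p, q = query.split("*")
    key = q + "$" + p                      # P*Q -> prefix search on Q$P
    i = bisect_left(entries, (key,))
    found = set()
    while i < len(entries) and entries[i][0].startswith(key):
        found.add(entries[i][1])
        i += 1
    return sorted(found)

lexicon = ["hello", "help", "hero", "cello"]
entries = build_permuterm(lexicon)
print(wildcard_lookup(entries, "he*o"))
print(len(entries), "rotations for", len(lexicon), "terms")
```

Every term of length n contributes n + 1 entries, which is where the size blow-up noted above comes from.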

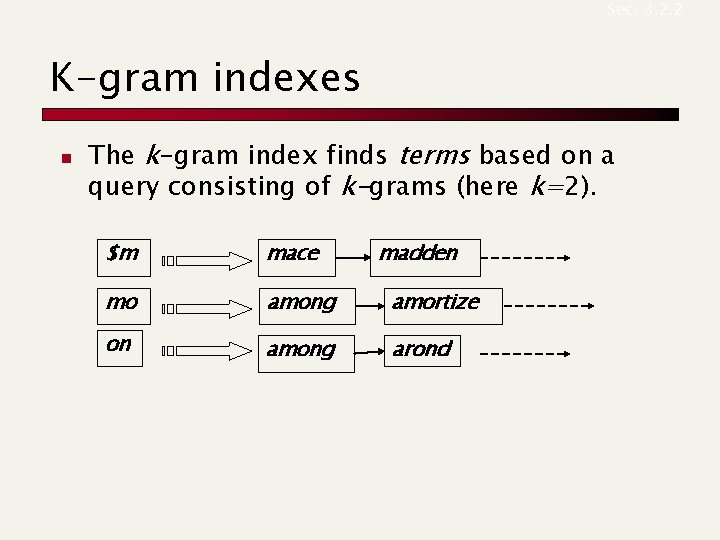

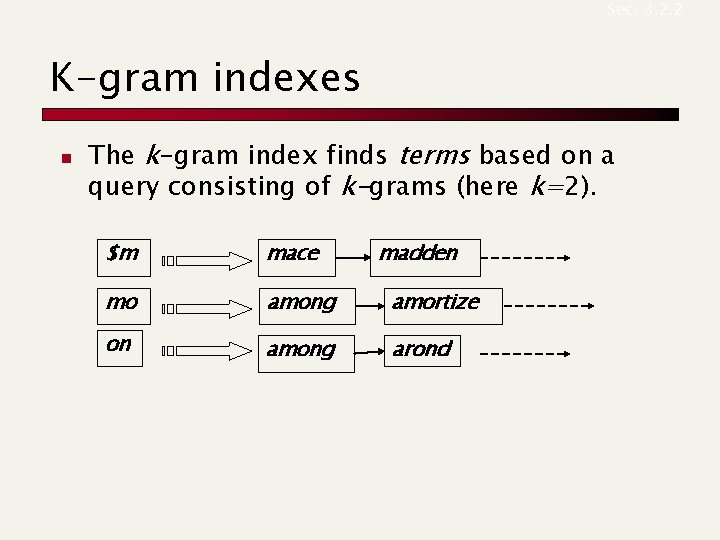

Sec. 3.2.2 K-gram indexes

- The k-gram index finds terms based on a query consisting of k-grams (here k=2).

[Figure: k-gram postings. $m → mace, madden; mo → among, amortize; on → among, arond.]

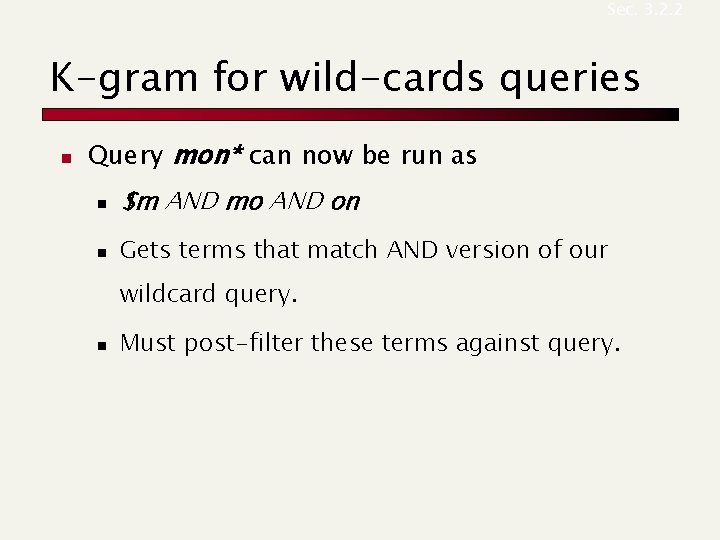

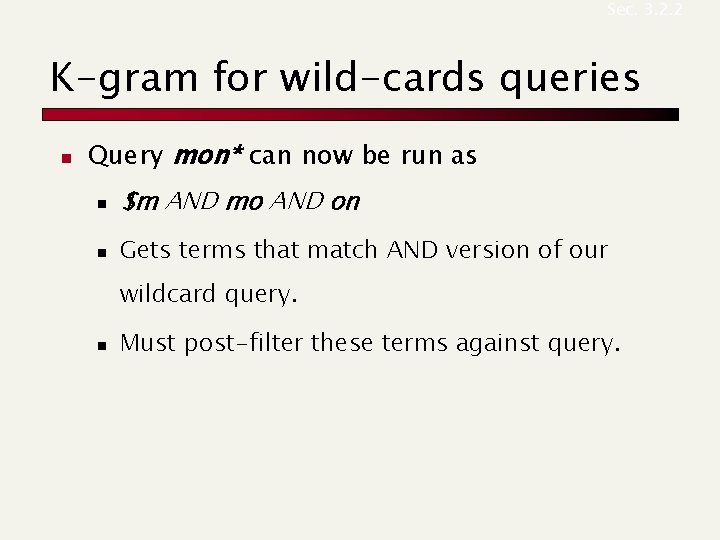

Sec. 3.2.2 K-gram for wild-card queries

- Query mon* can now be run as
  - $m AND mo AND on
- Gets terms that match the AND version of our wild-card query.
- Must post-filter these terms against the query.
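The sketch below shows why the post-filter is needed: with the made-up lexicon used here, moon contains $m, mo and on, yet it does not match mon*. The index layout and function names are assumptions; the final check uses `fnmatchcase` from the standard library, which is adequate for purely alphabetic terms.

```python
from collections import defaultdict
from fnmatch import fnmatchcase

def kgrams(s, k=2):
    return {s[i:i + k] for i in range(len(s) - k + 1)}

def build_kgram_index(lexicon, k=2):
    """Map each k-gram of $term$ to the set of terms containing it."""
    index = defaultdict(set)
    for term in lexicon:
        for gram in kgrams("$" + term + "$", k):
            index[gram].add(term)
    return index

def wildcard_candidates(index, query, k=2):
    """Terms containing every k-gram of the query (the AND version)."""
    grams = set()
    for piece in ("$" + query + "$").split("*"):
        grams |= kgrams(piece, k)          # never take a gram across a '*'
    if not grams:
        return set().union(*index.values())
    return set.intersection(*(index.get(g, set()) for g in grams))

def wildcard_search(index, query, k=2):
    """Post-filter the candidates against the actual wildcard pattern."""
    return sorted(t for t in wildcard_candidates(index, query, k)
                  if fnmatchcase(t, query))

index = build_kgram_index(["moon", "month", "monday", "among", "salmon"])
print(sorted(wildcard_candidates(index, "mon*")))
print(wildcard_search(index, "mon*"))
```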

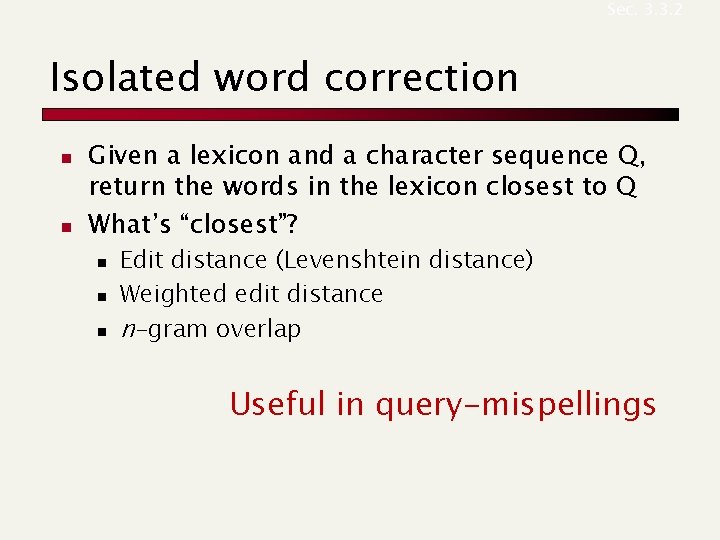

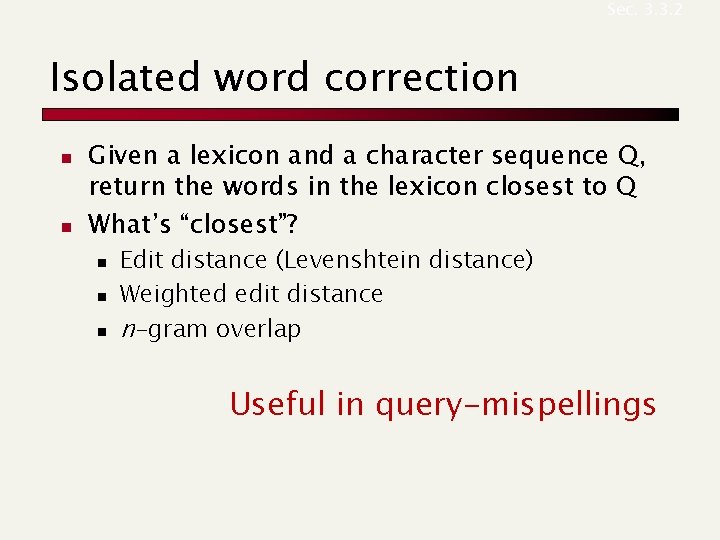

Sec. 3.3.2 Isolated word correction

- Given a lexicon and a character sequence Q, return the words in the lexicon closest to Q.
- What's “closest”?
  - Edit distance (Levenshtein distance)
  - Weighted edit distance
  - n-gram overlap
- Useful for query misspellings.

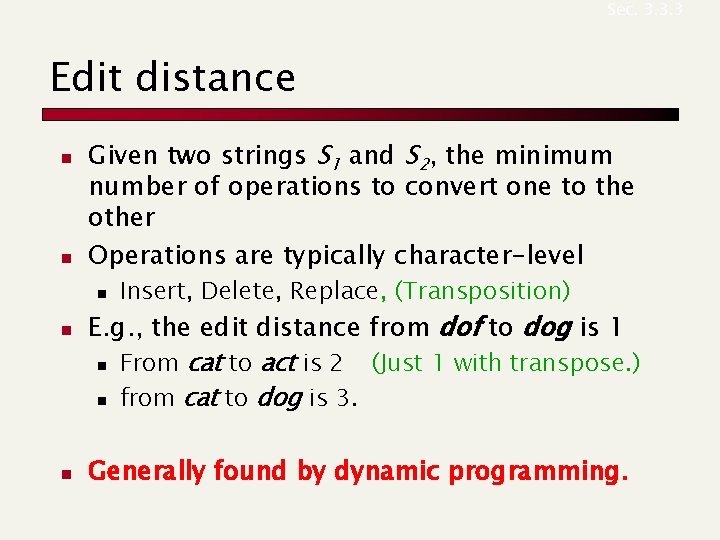

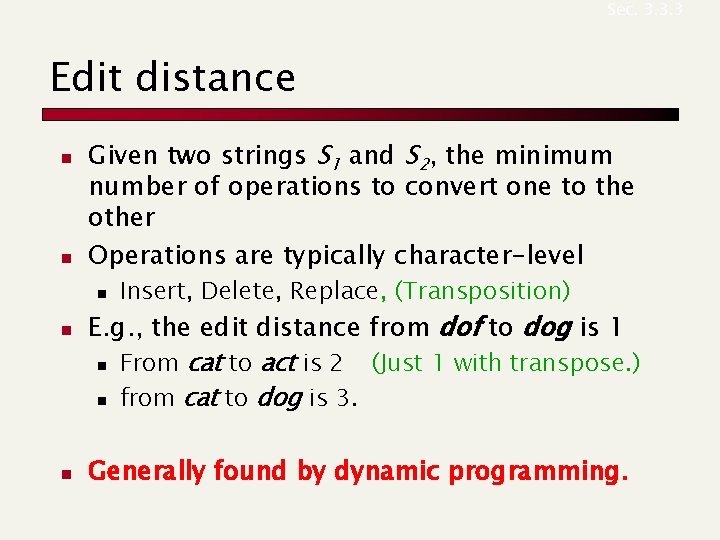

Sec. 3.3.3 Edit distance

- Given two strings S1 and S2, the minimum number of operations to convert one to the other.
- Operations are typically character-level:
  - Insert, Delete, Replace, (Transposition)
- E.g., the edit distance from dof to dog is 1.
  - From cat to act is 2 (just 1 with transpose).
  - From cat to dog is 3.
- Generally found by dynamic programming.

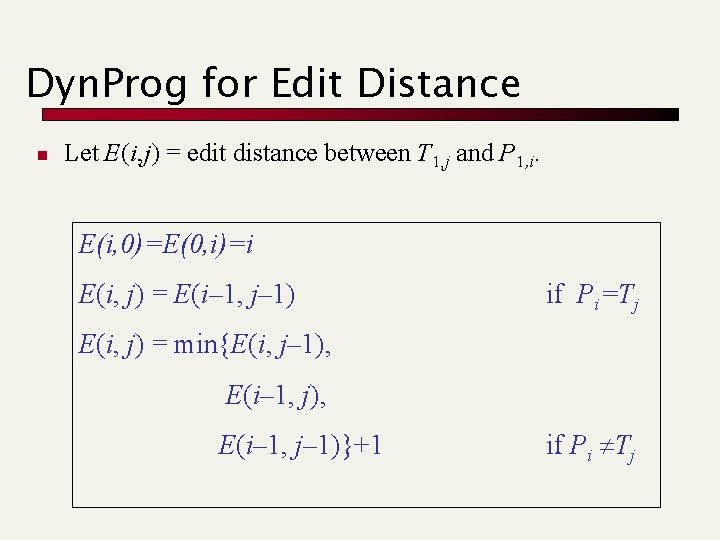

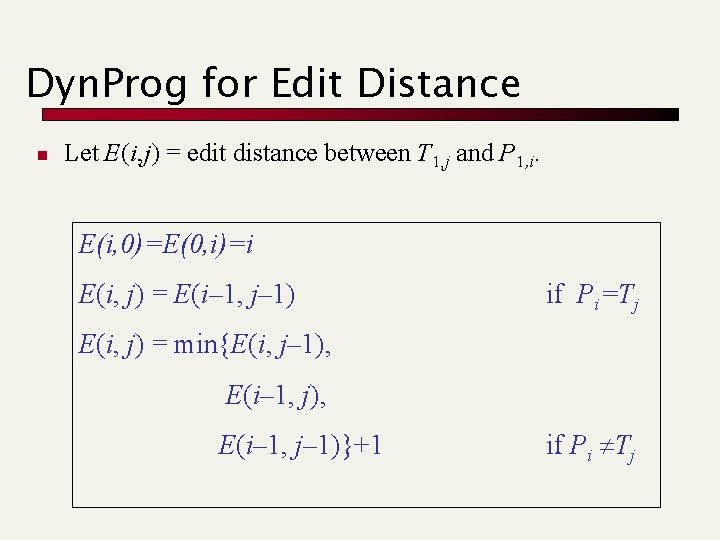

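Dyn. Prog. for Edit Distance

Let $E(i, j)$ = edit distance between $T_{1,j}$ and $P_{1,i}$.

$$
\begin{aligned}
E(i, 0) &= i, \qquad E(0, j) = j \\
E(i, j) &= E(i-1, j-1) && \text{if } P_i = T_j \\
E(i, j) &= \min\{E(i, j-1),\; E(i-1, j),\; E(i-1, j-1)\} + 1 && \text{if } P_i \neq T_j
\end{aligned}
$$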

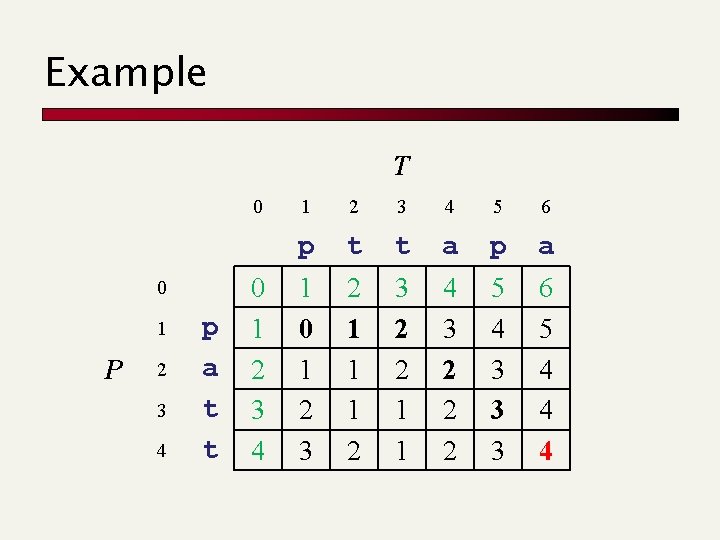

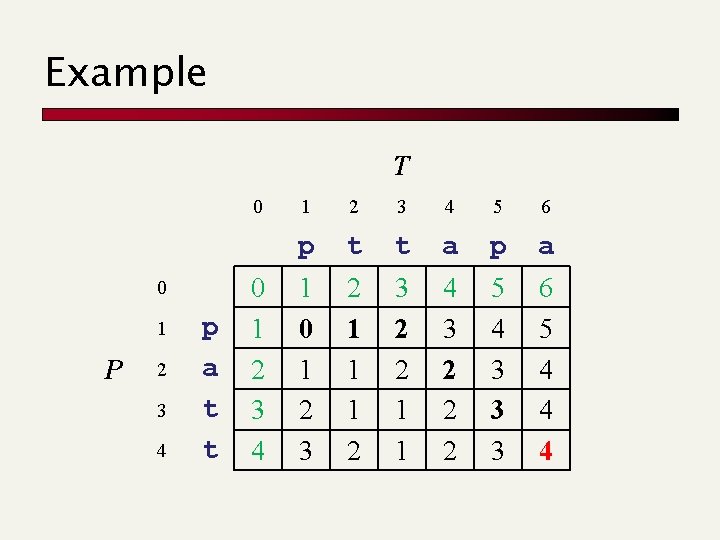

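Example: T = pttapa (columns, j = 0..6), P = patt (rows, i = 0..4)

| E(i, j) | j = 0 | 1 (p) | 2 (t) | 3 (t) | 4 (a) | 5 (p) | 6 (a) |
|---|---|---|---|---|---|---|---|
| i = 0 | 0 | 1 | 2 | 3 | 4 | 5 | 6 |
| 1 (p) | 1 | 0 | 1 | 2 | 3 | 4 | 5 |
| 2 (a) | 2 | 1 | 1 | 2 | 2 | 3 | 4 |
| 3 (t) | 3 | 2 | 1 | 1 | 2 | 3 | 4 |
| 4 (t) | 4 | 3 | 2 | 1 | 2 | 3 | 4 |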
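The dynamic program for edit distance can be written directly from its definition. This sketch covers insert, delete and replace only (no transposition), and the function name is an assumption.

```python
def edit_distance(p, t):
    """Levenshtein distance between p and t via the full (|p|+1) x (|t|+1) table."""
    rows, cols = len(p) + 1, len(t) + 1
    E = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        E[i][0] = i                      # delete all of p[:i]
    for j in range(cols):
        E[0][j] = j                      # insert all of t[:j]
    for i in range(1, rows):
        for j in range(1, cols):
            if p[i - 1] == t[j - 1]:
                E[i][j] = E[i - 1][j - 1]
            else:
                E[i][j] = 1 + min(E[i][j - 1],      # insert
                                  E[i - 1][j],      # delete
                                  E[i - 1][j - 1])  # replace
    return E[-1][-1]

print(edit_distance("patt", "pttapa"))  # bottom-right cell of the example table
```

The table takes O(|P| · |T|) time and space; keeping only the previous row would reduce the space to O(|T|).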

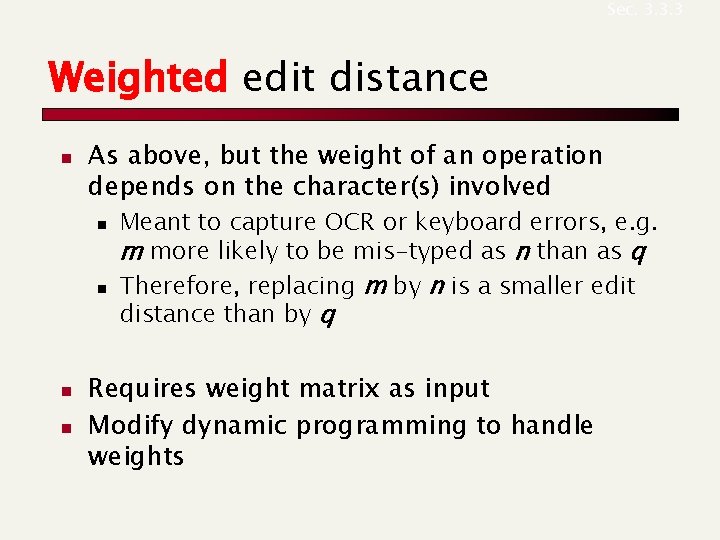

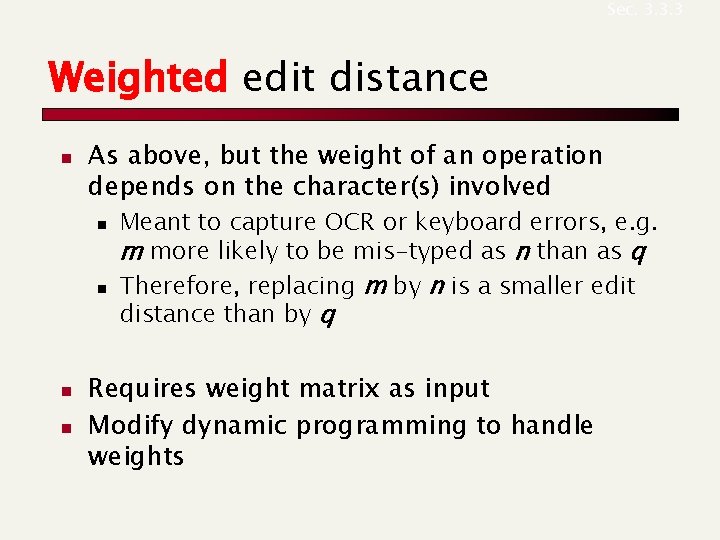

Sec. 3.3.3 Weighted edit distance

- As above, but the weight of an operation depends on the character(s) involved.
  - Meant to capture OCR or keyboard errors, e.g. m more likely to be mis-typed as n than as q.
  - Therefore, replacing m by n is a smaller edit distance than by q.
- Requires weight matrix as input.
- Modify dynamic programming to handle weights.
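One possible way to add weights to the dynamic program is sketched here. The weight matrix is represented as a dictionary keyed by character pairs, with a default cost of 1.0, and the 0.5 cost for m/n is an invented value for illustration, not a measured one.

```python
def weighted_edit_distance(s, t, sub_cost, indel_cost=1.0):
    """Edit distance where replacing a by b costs sub_cost[(a, b)] (default 1.0)."""
    rows, cols = len(s) + 1, len(t) + 1
    D = [[0.0] * cols for _ in range(rows)]
    for i in range(1, rows):
        D[i][0] = i * indel_cost
    for j in range(1, cols):
        D[0][j] = j * indel_cost
    for i in range(1, rows):
        for j in range(1, cols):
            a, b = s[i - 1], t[j - 1]
            replace = 0.0 if a == b else sub_cost.get((a, b), 1.0)
            D[i][j] = min(D[i - 1][j] + indel_cost,      # delete a
                          D[i][j - 1] + indel_cost,      # insert b
                          D[i - 1][j - 1] + replace)     # replace a by b
    return D[-1][-1]

# Hypothetical weights: m and n are easily confused, so swapping them is cheap.
weights = {("m", "n"): 0.5, ("n", "m"): 0.5}
print(weighted_edit_distance("mat", "nat", weights))
print(weighted_edit_distance("mat", "qat", weights))
```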

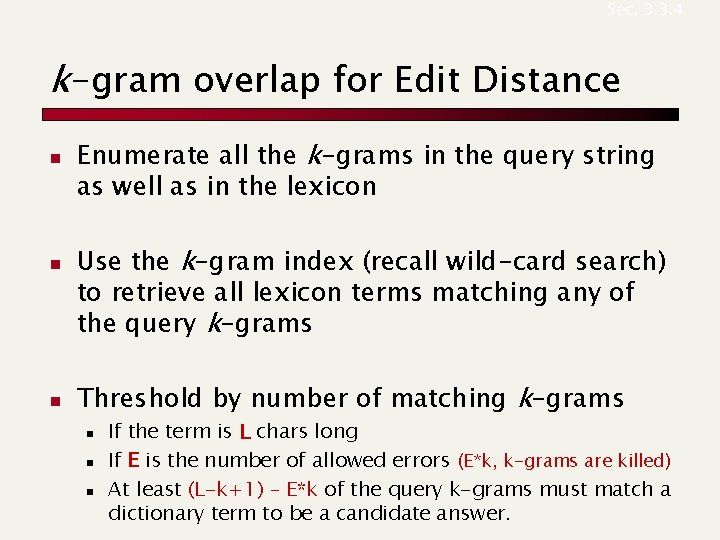

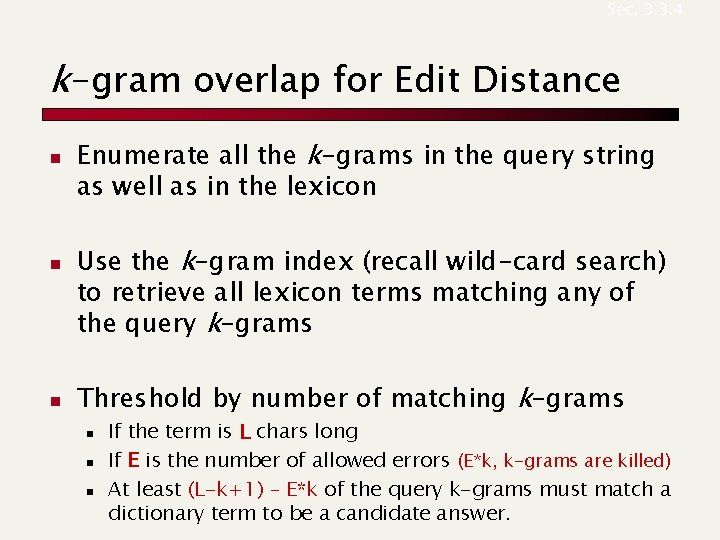

Sec. 3.3.4 k-gram overlap for Edit Distance

- Enumerate all the k-grams in the query string as well as in the lexicon.
- Use the k-gram index (recall wild-card search) to retrieve all lexicon terms matching any of the query k-grams.
- Threshold by number of matching k-grams:
  - If the term is L chars long (so it has L-k+1 k-grams)
  - If E is the number of allowed errors (each error kills at most k k-grams, so at most E*k are killed)
  - At least (L-k+1) - E*k of the query k-grams must match a dictionary term to be a candidate answer.
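The threshold filter can be sketched as below, with no `$` padding so that a string of length L has exactly L-k+1 k-grams. The lexicon, the misspelled query, and the function names are made up for the example; surviving candidates would still need a real edit-distance check.

```python
from collections import Counter, defaultdict

def kgram_list(s, k=2):
    return [s[i:i + k] for i in range(len(s) - k + 1)]

def build_kgram_index(lexicon, k=2):
    index = defaultdict(set)
    for term in lexicon:
        for gram in kgram_list(term, k):
            index[gram].add(term)
    return index

def correction_candidates(query, index, k=2, max_errors=1):
    """Terms sharing at least (L-k+1) - E*k of the query's k-grams."""
    threshold = (len(query) - k + 1) - max_errors * k
    # Note: if threshold <= 0 the filter is vacuous; only terms sharing
    # at least one k-gram with the query are ever counted here.
    matches = Counter()
    for gram in kgram_list(query, k):
        for term in index.get(gram, ()):
            matches[term] += 1
    return sorted(t for t, m in matches.items() if m >= threshold)

index = build_kgram_index(["information", "informal", "formation", "nation"])
print(correction_candidates("informaton", index, k=2, max_errors=1))
```

With L = 10, k = 2 and E = 1 the query has 9 bigrams and the threshold is 7, so only terms sharing at least seven of them are kept.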

Sec. 3.3.5 Context-sensitive spell correction

- Text: I flew from Heathrow to Narita.
- Consider the phrase query “flew form Heathrow”.
- We'd like to respond: Did you mean “flew from Heathrow”? because no docs matched the query phrase.

Zone indexes

Reading 6.1

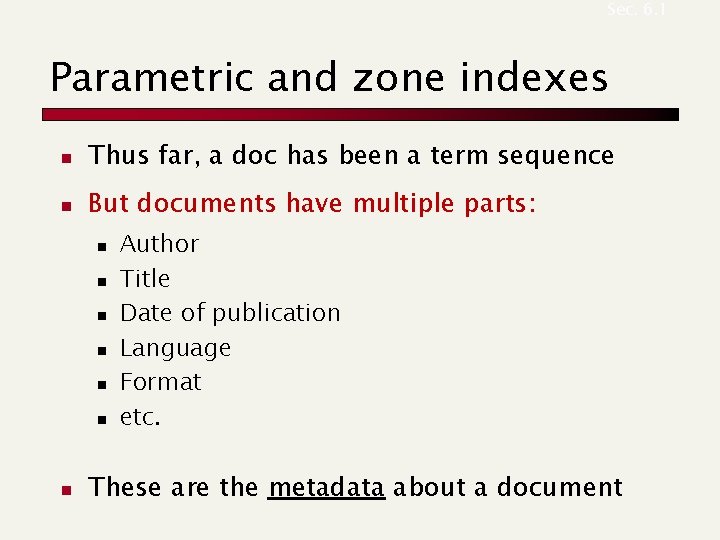

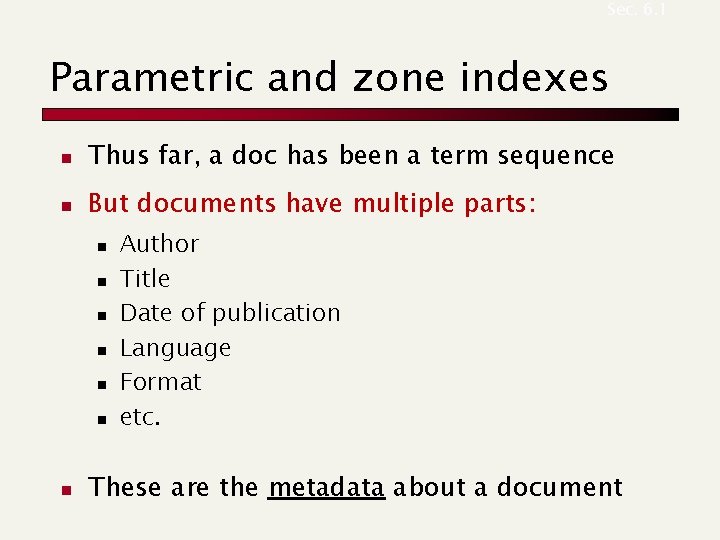

Sec. 6.1 Parametric and zone indexes

- Thus far, a doc has been a term sequence.
- But documents have multiple parts:
  - Author
  - Title
  - Date of publication
  - Language
  - Format
  - etc.
- These are the metadata about a document.

Sec. 6.1 Zone

- A zone is a region of the doc that can contain an arbitrary amount of text, e.g.,
  - Title
  - Abstract
  - References …
- Build inverted indexes on fields AND zones to permit querying.
- E.g., “find docs with merchant in the title zone and matching the query gentle rain”

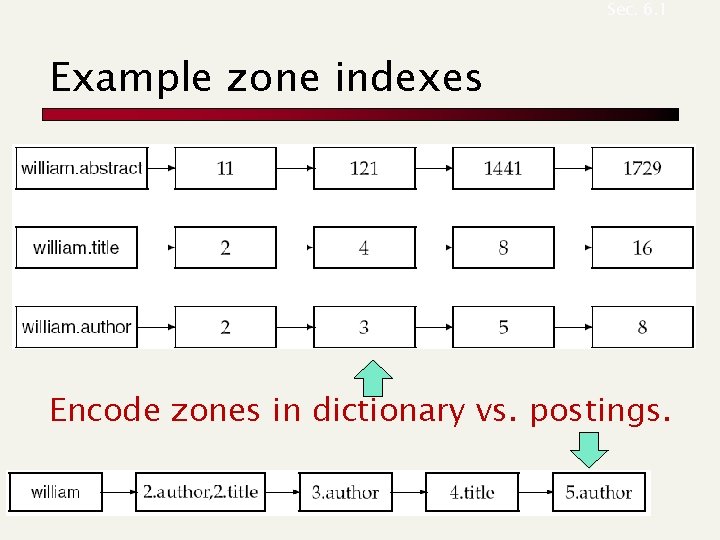

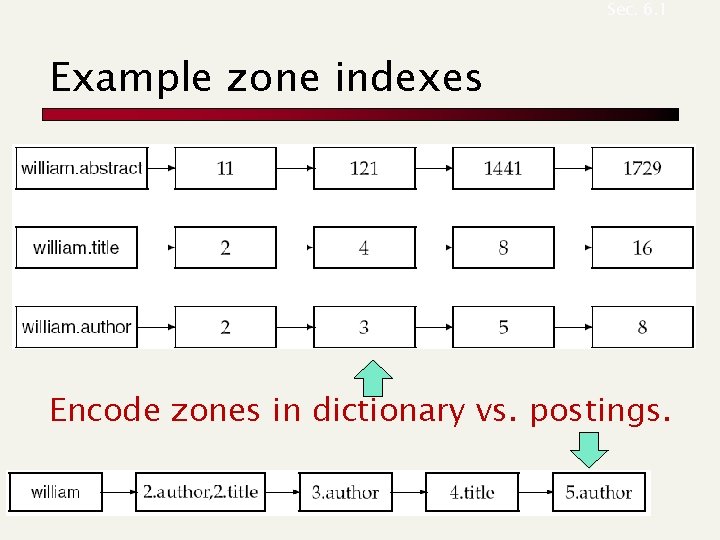

Sec. 6.1 Example zone indexes

Encode zones in dictionary vs. postings.
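The two encodings can be compared on a toy collection. In this sketch the `term.zone` key format, the sample documents, and the helper names are assumptions chosen for illustration.

```python
from collections import defaultdict

def build_zone_indexes(docs):
    """Build both encodings from docs given as {doc_id: {zone: text}}."""
    in_dictionary = defaultdict(set)                     # "term.zone" -> {doc_id}
    in_postings = defaultdict(lambda: defaultdict(set))  # term -> {doc_id: {zones}}
    for doc_id, zones in docs.items():
        for zone, text in zones.items():
            for term in text.lower().split():
                in_dictionary[f"{term}.{zone}"].add(doc_id)
                in_postings[term][doc_id].add(zone)
    return in_dictionary, in_postings

def docs_with(in_postings, term, zone=None):
    """Docs containing `term`, optionally restricted to one zone."""
    return {doc_id for doc_id, zones in in_postings.get(term, {}).items()
            if zone is None or zone in zones}

docs = {
    1: {"title": "the merchant of venice", "body": "the gentle rain from heaven"},
    2: {"title": "gentle rain", "body": "a merchant ship"},
}
in_dictionary, in_postings = build_zone_indexes(docs)

# "merchant in the title zone and matching the query gentle rain"
hits = (docs_with(in_postings, "merchant", "title")
        & docs_with(in_postings, "gentle")
        & docs_with(in_postings, "rain"))
print(hits)
```

Encoding zones in the dictionary multiplies the number of dictionary entries by the number of zones, while encoding them in the postings keeps one entry per term and makes each posting slightly larger.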

Sec. 7.2.1 Tiered indexes

- Break postings up into a hierarchy of lists:
  - Most important
  - …
  - Least important
- Inverted index thus broken up into tiers of decreasing importance.
- At query time use the top tier unless it fails to yield K docs.
  - If so, drop to lower tiers.
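A minimal sketch of the tier fallback for a single-term query. Each tier is modelled as a plain dictionary from term to docIDs, ordered from most to least important; the data and the name `tiered_search` are hypothetical.

```python
def tiered_search(tiers, term, k):
    """Collect docs tier by tier, stopping once at least k have been found."""
    results = []
    for tier in tiers:                      # most important tier first
        for doc_id in tier.get(term, []):
            if doc_id not in results:
                results.append(doc_id)
        if len(results) >= k:
            break                           # no need to touch lower tiers
    return results

tiers = [
    {"car": [3]},           # tier 1: most important postings
    {"car": [1, 7]},        # tier 2
    {"car": [2, 5, 9]},     # tier 3: least important
]
print(tiered_search(tiers, "car", 2))
```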

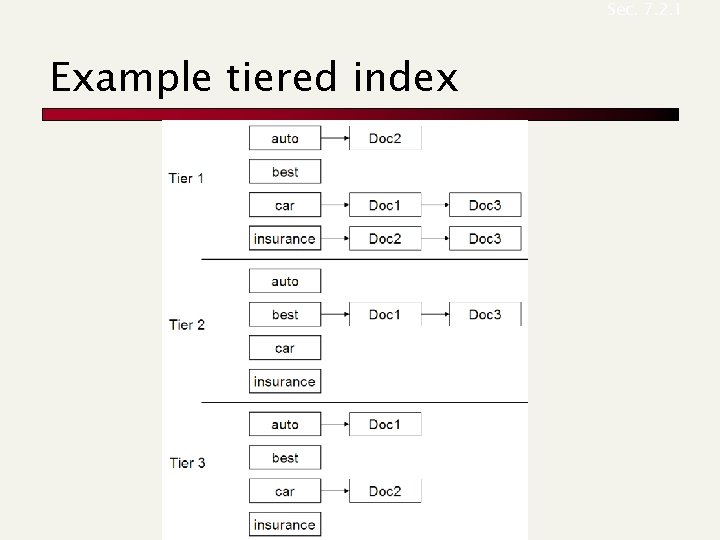

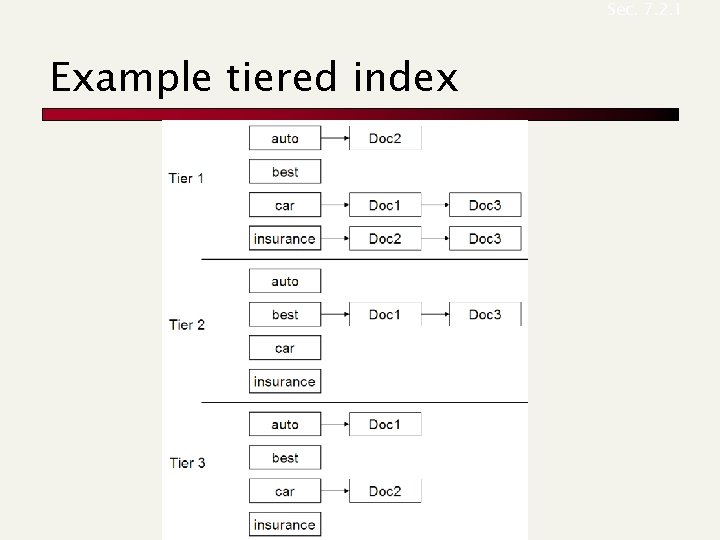

Sec. 7.2.1 Example tiered index