Query Execution Zachary G Ives University of Pennsylvania

- Slides: 32

Query Execution Zachary G. Ives University of Pennsylvania CIS 650 – Implementing Data Management Systems January 24, 2005 Content on hashing and sorting courtesy Ramakrishnan & Gehrke

Today’s Trivia Question 2

Query Execution What are the goals? § Logical vs. physical plans – what are the differences? § Some considerations in building execution engines: § Efficiency – minimize copying, comparisons § Scheduling – make standard code-paths fast § Data layout – how to optimize cache behavior, buffer management, distributed execution, etc. 3

Execution System Architectures § Central vs. distributed vs. parallel vs. mediator § Data partitioning – vertical vs. horizontal § Monet model – binary relations § Distributed – data placement § One operation at a time – INGRES § Pipelined § Iterator-driven § Dataflow-driven § Hybrid approaches 4

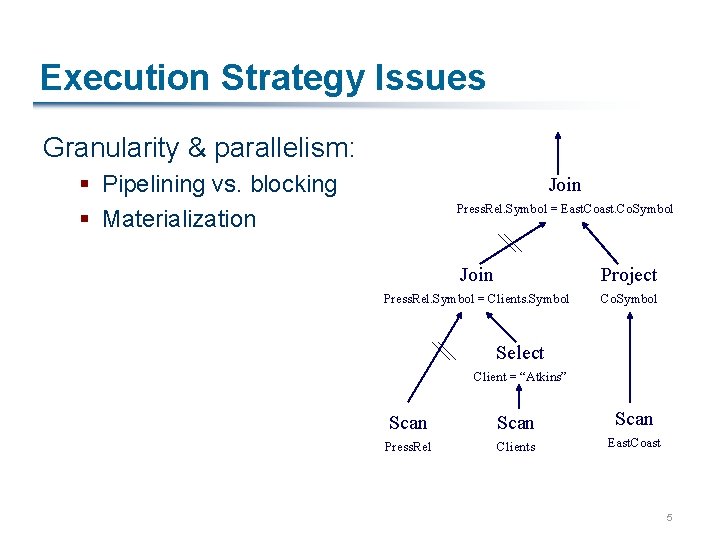

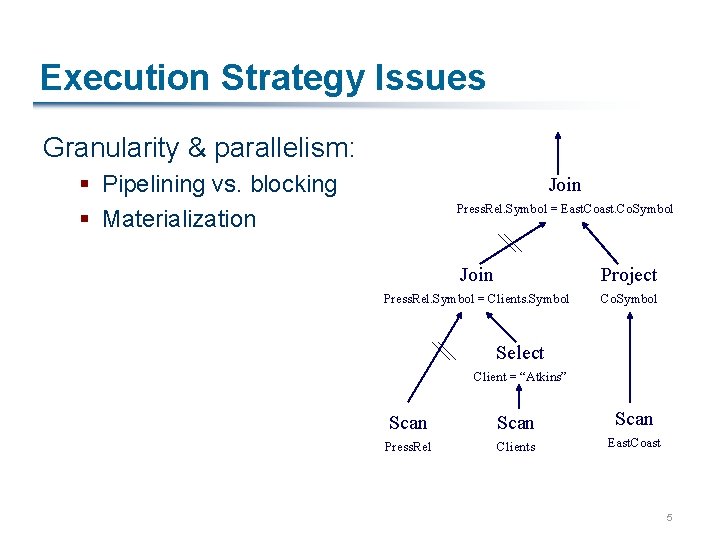

Execution Strategy Issues Granularity & parallelism: § Pipelining vs. blocking § Materialization Join Press. Rel. Symbol = East. Co. Symbol Join Project Press. Rel. Symbol = Clients. Symbol Co. Symbol Select Client = “Atkins” Scan Press. Rel Clients East. Coast 5

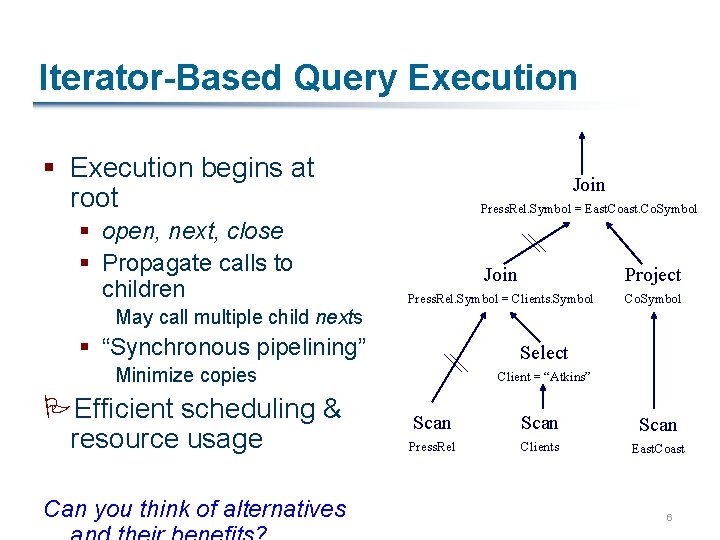

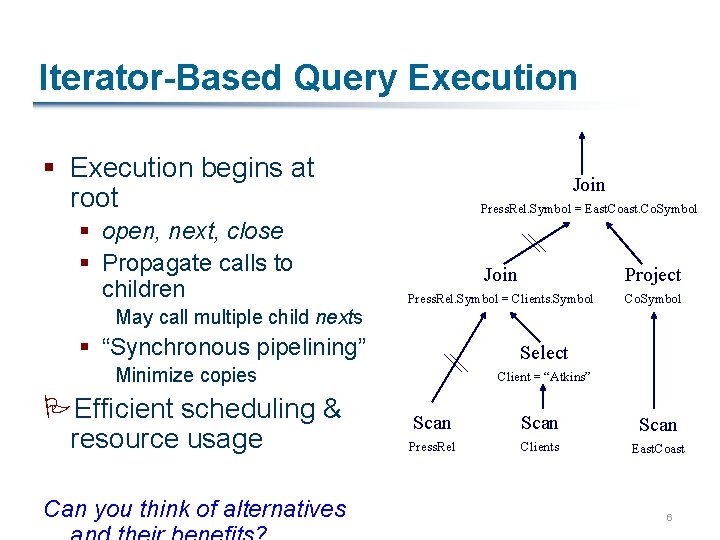

Iterator-Based Query Execution § Execution begins at root § open, next, close § Propagate calls to children May call multiple child nexts Join Press. Rel. Symbol = East. Co. Symbol Join Project Press. Rel. Symbol = Clients. Symbol Co. Symbol § “Synchronous pipelining” Select Minimize copies PEfficient scheduling & resource usage Can you think of alternatives Client = “Atkins” Scan Press. Rel Clients East. Coast 6

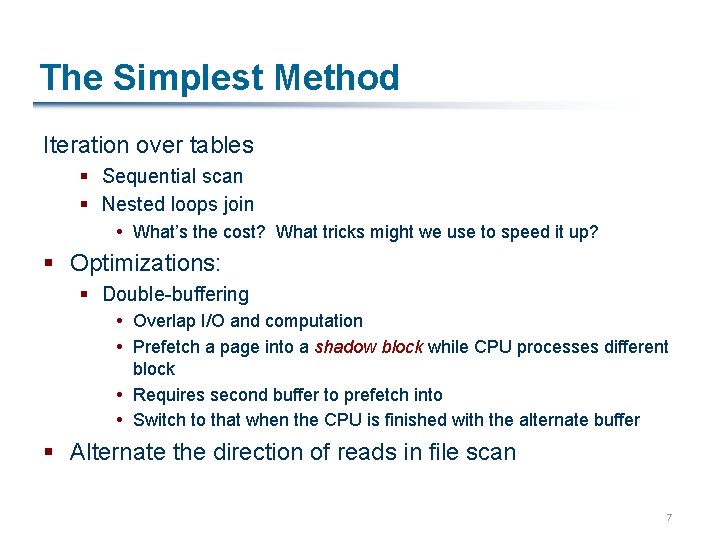

The Simplest Method Iteration over tables § Sequential scan § Nested loops join What’s the cost? What tricks might we use to speed it up? § Optimizations: § Double-buffering Overlap I/O and computation Prefetch a page into a shadow block while CPU processes different block Requires second buffer to prefetch into Switch to that when the CPU is finished with the alternate buffer § Alternate the direction of reads in file scan 7

Speeding Operations over Data Three general data organization techniques: § Indexing Associative lookup & synopses § Sorting § Hashing 8

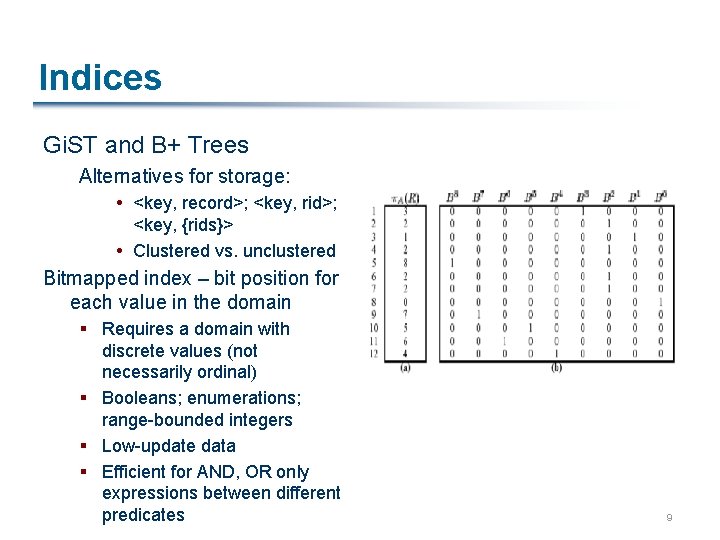

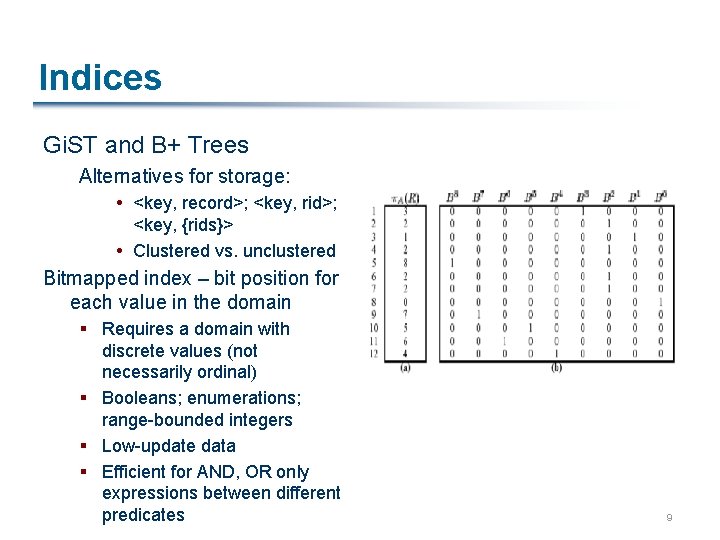

Indices Gi. ST and B+ Trees Alternatives for storage: <key, record>; <key, rid>; <key, {rids}> Clustered vs. unclustered Bitmapped index – bit position for each value in the domain § Requires a domain with discrete values (not necessarily ordinal) § Booleans; enumerations; range-bounded integers § Low-update data § Efficient for AND, OR only expressions between different predicates 9

Usefulness of Indices Where are these structures most useful? § Sargable predicates § Covering indices In many cases, only help with part of the story § Filter part of the answer set, but we still need further computation § e. g. , AND or OR of two predicates General rule of thumb: § Unclustered index only useful if selectivity is < 1020% 10

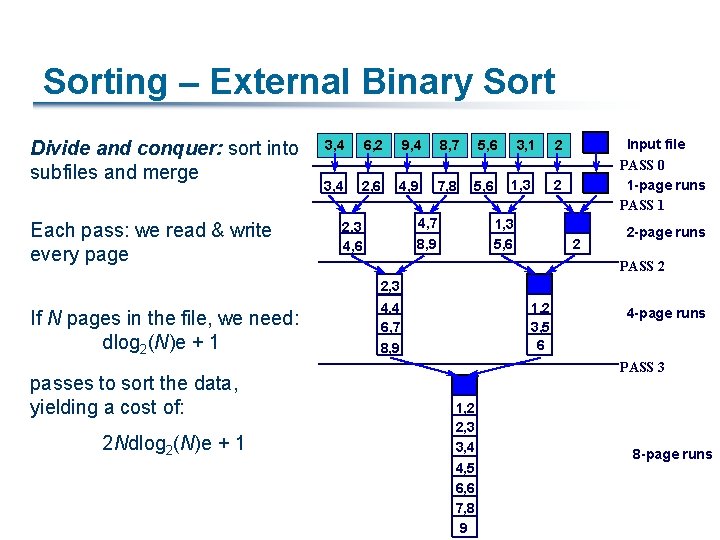

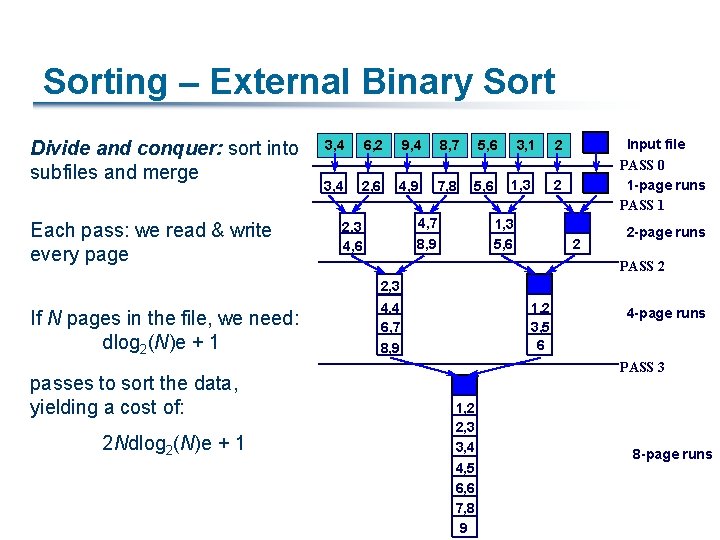

Sorting – External Binary Sort Divide and conquer: sort into subfiles and merge Each pass: we read & write every page 3, 4 6, 2 9, 4 8, 7 5, 6 3, 4 2, 6 4, 9 7, 8 5, 6 4, 7 8, 9 2, 3 4, 6 3, 1 1, 3 Input file PASS 0 1 -page runs PASS 1 2 2 1, 3 5, 6 2 2 -page runs PASS 2 2, 3 If N pages in the file, we need: dlog 2(N)e + 1 passes to sort the data, yielding a cost of: 2 Ndlog 2(N)e + 1 4, 4 6, 7 8, 9 1, 2 3, 5 6 4 -page runs PASS 3 1, 2 2, 3 3, 4 4, 5 6, 6 7, 8 9 8 -page runs

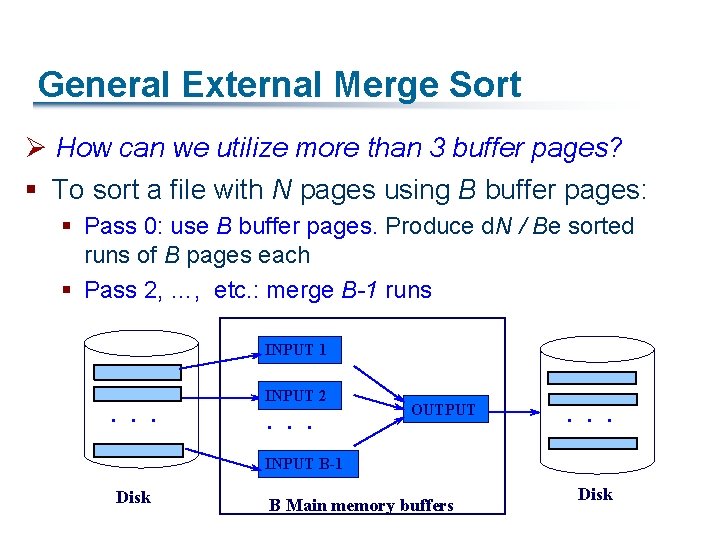

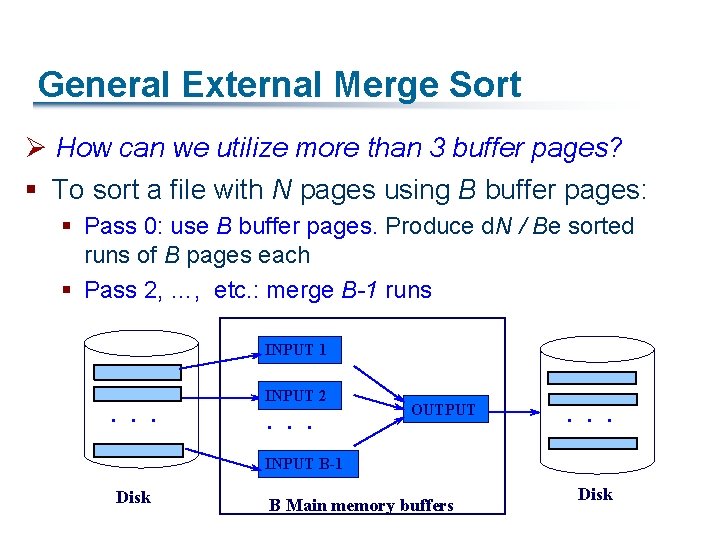

General External Merge Sort Ø How can we utilize more than 3 buffer pages? § To sort a file with N pages using B buffer pages: § Pass 0: use B buffer pages. Produce d. N / Be sorted runs of B pages each § Pass 2, …, etc. : merge B-1 runs INPUT 1 . . . INPUT 2 . . . OUTPUT . . . INPUT B-1 Disk B Main memory buffers Disk

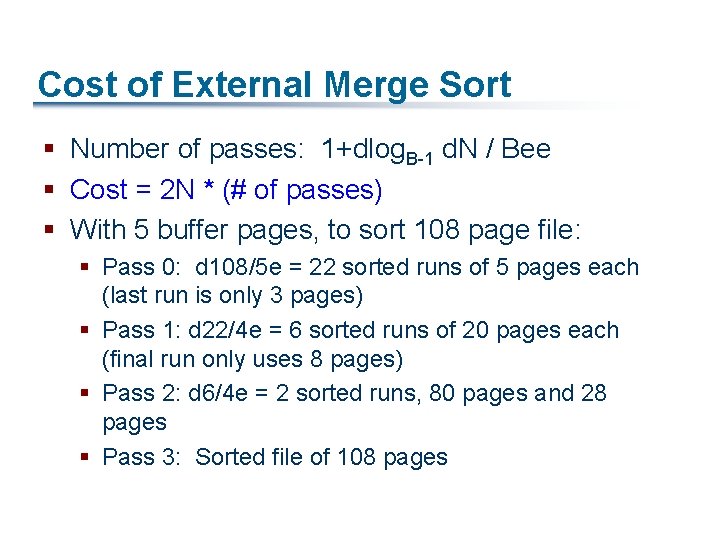

Cost of External Merge Sort § Number of passes: 1+dlog. B-1 d. N / Bee § Cost = 2 N * (# of passes) § With 5 buffer pages, to sort 108 page file: § Pass 0: d 108/5 e = 22 sorted runs of 5 pages each (last run is only 3 pages) § Pass 1: d 22/4 e = 6 sorted runs of 20 pages each (final run only uses 8 pages) § Pass 2: d 6/4 e = 2 sorted runs, 80 pages and 28 pages § Pass 3: Sorted file of 108 pages

Applicability of Sort Techniques § Join § Intersection § Aggregation § Duplicate removal as an instance of aggregation § XML nesting as an instance of aggregation 14

Merge Join § Requires data sorted by join attributes Merge and join sorted files, reading sequentially a block at a time § Maintain two file pointers While tuple at R < tuple at S, advance R (and vice versa) While tuples match, output all possible pairings § Maintain a “last in sequence” pointer § Preserves sorted order of “outer” relation § Cost: b(R) + b(S) plus sort costs, if necessary In practice, approximately linear, 3 (b(R) + b(S)) 15

Hashing Several types of hashing: § Static hashing § Extensible hashing § Consistent hashing (used in P 2 P; we’ll see later) 16

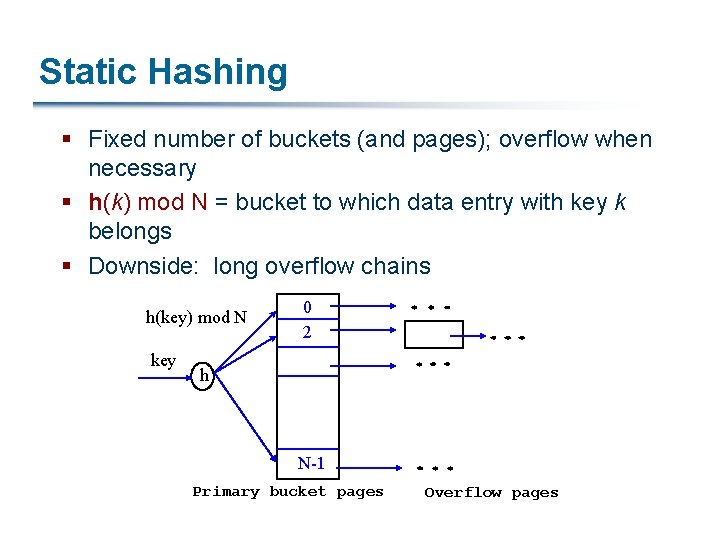

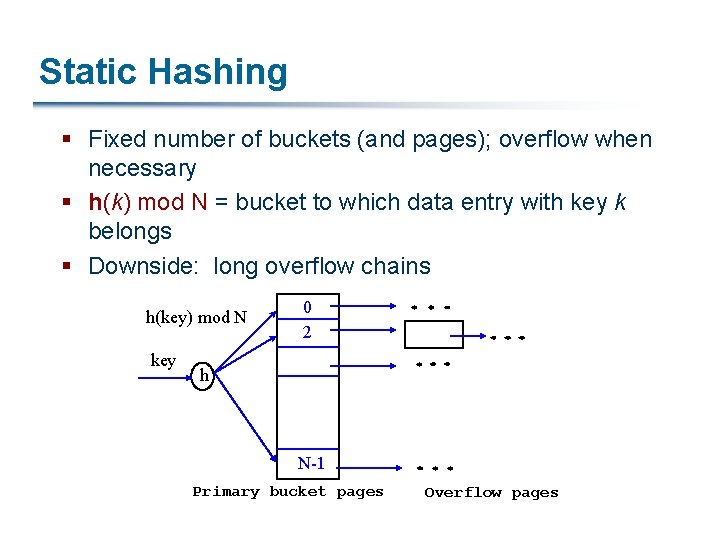

Static Hashing § Fixed number of buckets (and pages); overflow when necessary § h(k) mod N = bucket to which data entry with key k belongs § Downside: long overflow chains h(key) mod N key 0 2 h N-1 Primary bucket pages Overflow pages

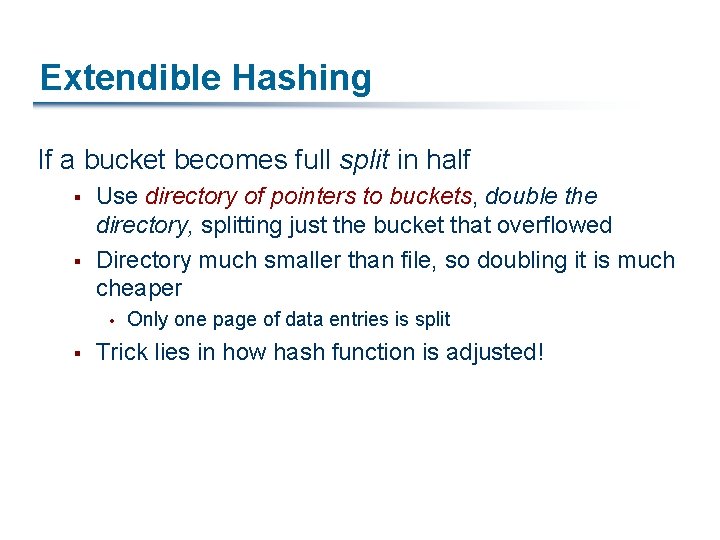

Extendible Hashing If a bucket becomes full split in half § § Use directory of pointers to buckets, double the directory, splitting just the bucket that overflowed Directory much smaller than file, so doubling it is much cheaper § Only one page of data entries is split Trick lies in how hash function is adjusted!

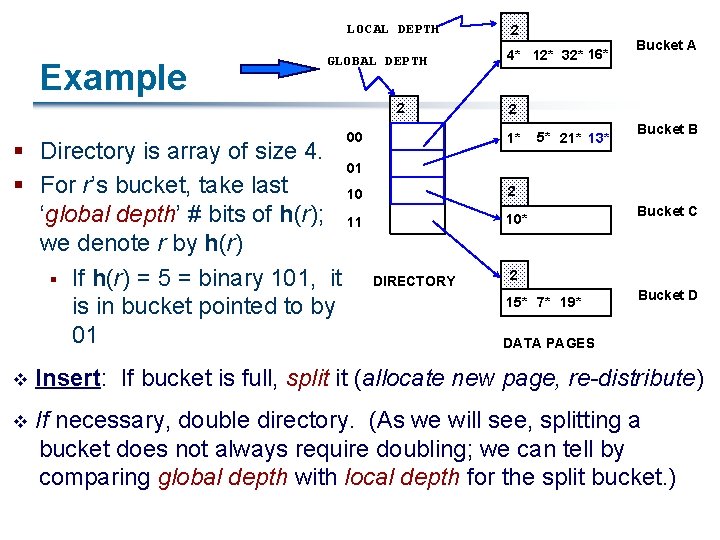

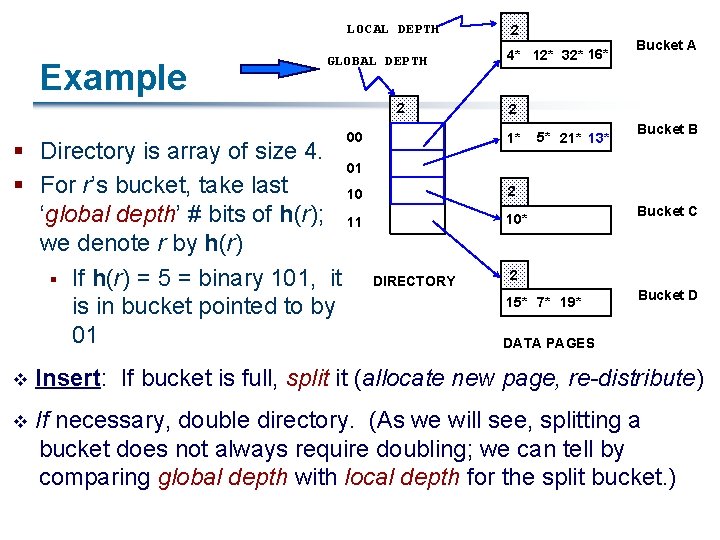

LOCAL DEPTH Example GLOBAL DEPTH 2 00 § Directory is array of size 4. 01 § For r’s bucket, take last 10 ‘global depth’ # bits of h(r); 11 we denote r by h(r) § If h(r) = 5 = binary 101, it is in bucket pointed to by 01 2 4* 12* 32* 16* Bucket A 2 1* 5* 21* 13* Bucket B 2 10* DIRECTORY Bucket C 2 15* 7* 19* Bucket D DATA PAGES v Insert: If bucket is full, split it (allocate new page, re-distribute) v If necessary, double directory. (As we will see, splitting a bucket does not always require doubling; we can tell by comparing global depth with local depth for the split bucket. )

Insert h(r)=20 (Causes Doubling) LOCAL DEPTH 2 32*16* GLOBAL DEPTH 2 00 Bucket A 2 1* 5* 21*13* Bucket B 10 2 11 10* Bucket C 3 000 2 1* 5* 21* 13* Bucket B 010 2 011 10* Bucket C 100 Bucket D 101 2 110 15* 7* 19* Bucket D 111 2 4* 12* 20* 32* 16* Bucket A 001 2 15* 7* 19* 3 GLOBAL DEPTH 01 DIRECTORY LOCAL DEPTH Bucket A 2 (`split image' of Bucket A) 3 DIRECTORY 4* 12* 20* Bucket A 2 (‘split image' of Bucket A)

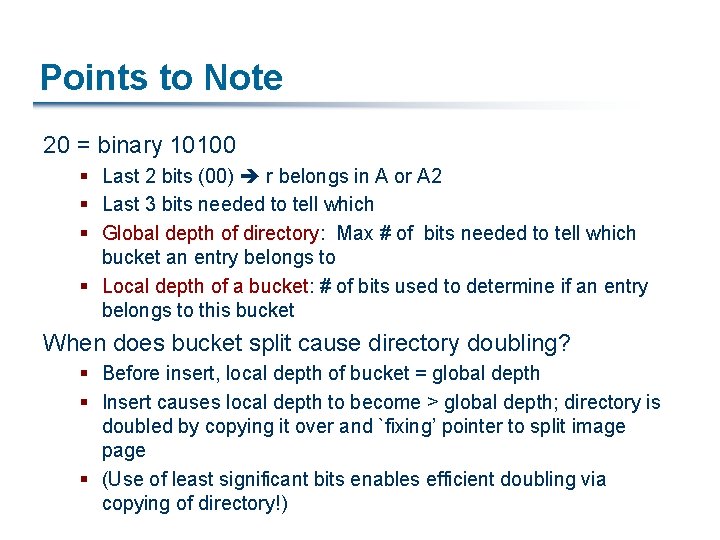

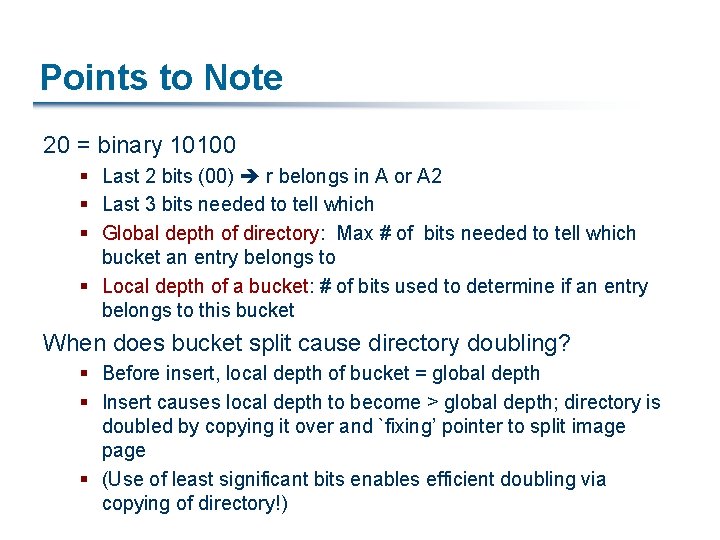

Points to Note 20 = binary 10100 § Last 2 bits (00) r belongs in A or A 2 § Last 3 bits needed to tell which § Global depth of directory: Max # of bits needed to tell which bucket an entry belongs to § Local depth of a bucket: # of bits used to determine if an entry belongs to this bucket When does bucket split cause directory doubling? § Before insert, local depth of bucket = global depth § Insert causes local depth to become > global depth; directory is doubled by copying it over and `fixing’ pointer to split image page § (Use of least significant bits enables efficient doubling via copying of directory!)

Comments on Extendible Hashing If directory fits in memory, equality search answered with one disk access; else two § Directory grows in spurts, and, if the distribution of hash values is skewed, directory can grow large § Multiple entries with same hash value cause problems! Delete: § If removal of data entry makes bucket empty, can be merged with ‘split image’ § If each directory element points to same bucket as its split image, can halve directory

Relevance of Hashing Techniques § Hash indices use extensible hashing § Uses of static hashing: § Aggregation § Intersection § Joins 23

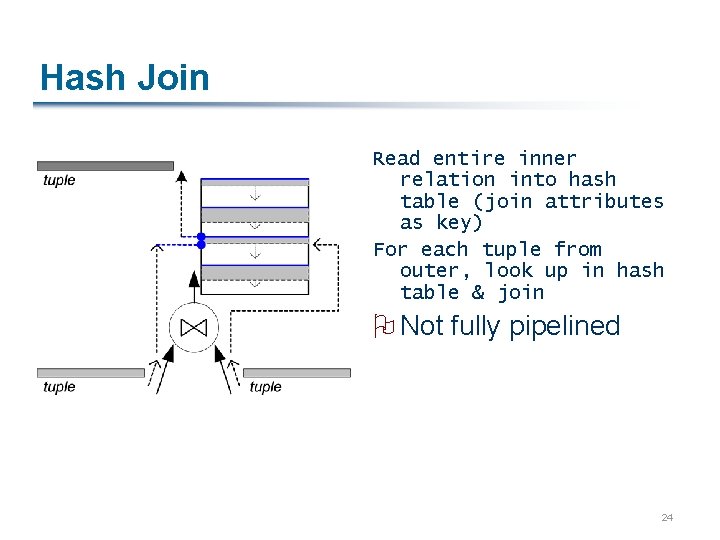

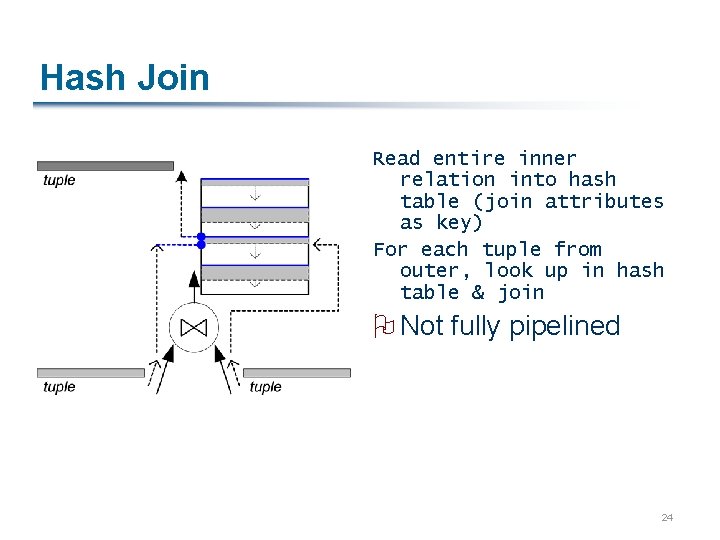

Hash Join Read entire inner relation into hash table (join attributes as key) For each tuple from outer, look up in hash table & join O Not fully pipelined 24

Running out of Memory § Prevention: First partition the data by value into memory -sized groups Partition both relations in the same way, write to files Recursively join the partitions § Resolution: Similar, but do when hash tables full Split hash table into files along bucket boundaries Partition remaining data in same way Recursively join partitions with diff. hash fn! § Hybrid hash join: flush “lazily” a few buckets at a time § Cost: <= 3 * (b(R) + b(S)) 25

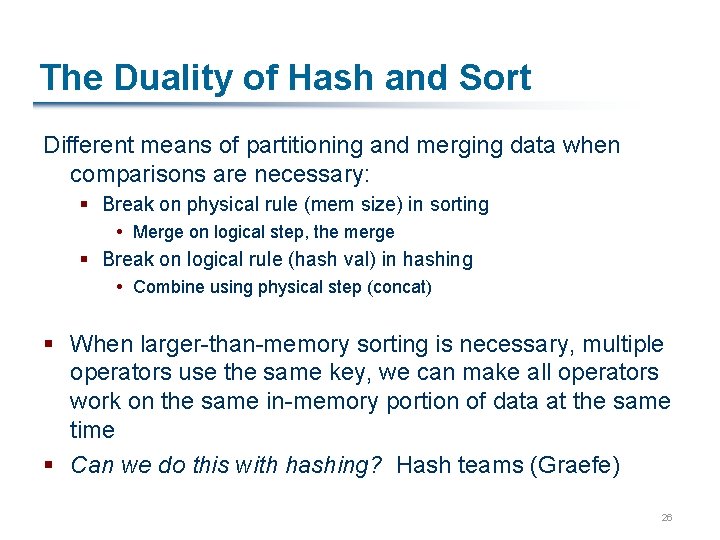

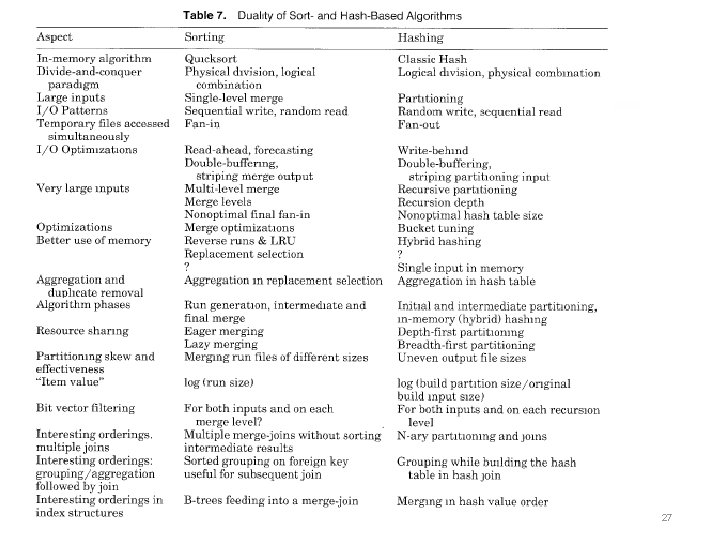

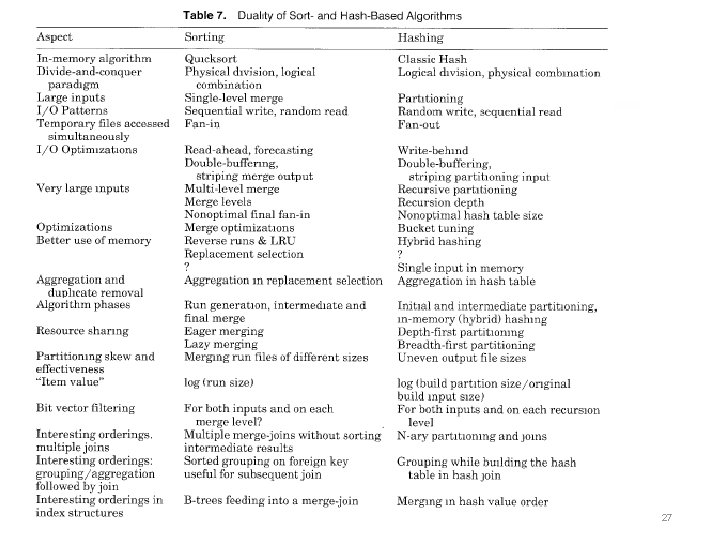

The Duality of Hash and Sort Different means of partitioning and merging data when comparisons are necessary: § Break on physical rule (mem size) in sorting Merge on logical step, the merge § Break on logical rule (hash val) in hashing Combine using physical step (concat) § When larger-than-memory sorting is necessary, multiple operators use the same key, we can make all operators work on the same in-memory portion of data at the same time § Can we do this with hashing? Hash teams (Graefe) 26

27

What If I Want to Distribute Query Processing? § Where do I put the data in the first place (or do I have a choice)? § How do we get data from point A -> point B? § What about delays? § What about “binding patterns”? § Looks kind of like an index join with a sargable predicate 28

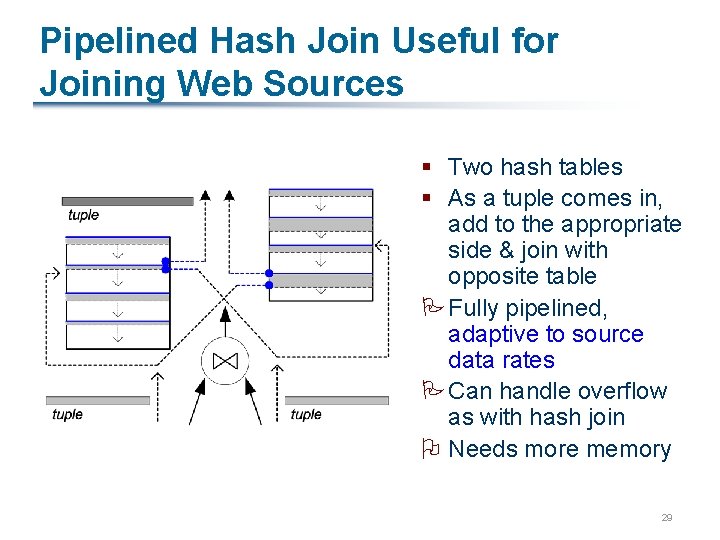

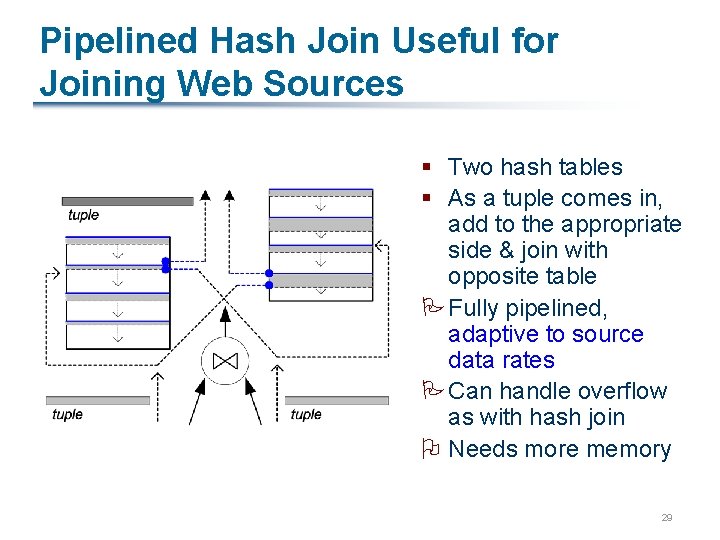

Pipelined Hash Join Useful for Joining Web Sources § Two hash tables § As a tuple comes in, add to the appropriate side & join with opposite table PFully pipelined, adaptive to source data rates PCan handle overflow as with hash join O Needs more memory 29

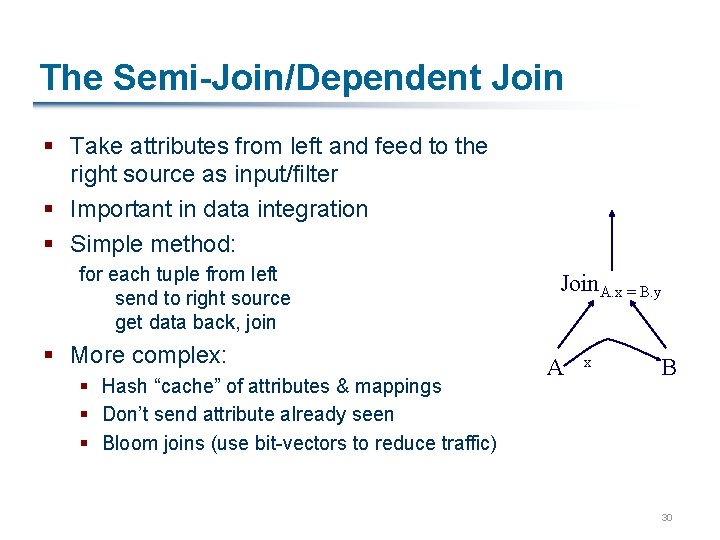

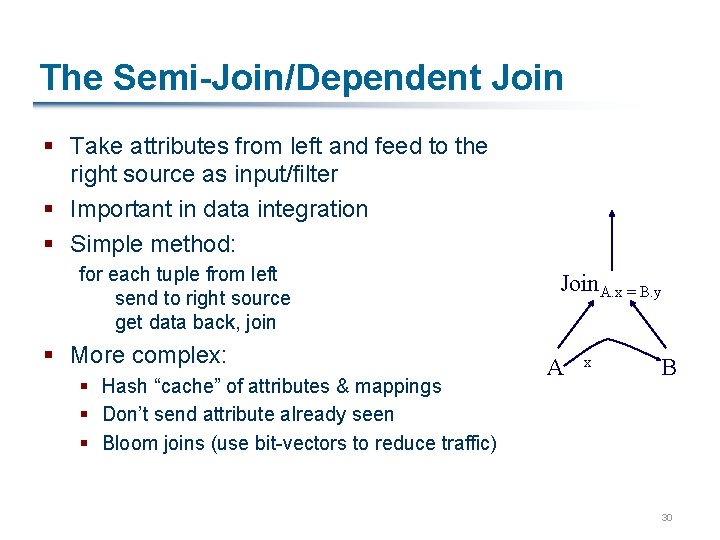

The Semi-Join/Dependent Join § Take attributes from left and feed to the right source as input/filter § Important in data integration § Simple method: for each tuple from left send to right source get data back, join § More complex: § Hash “cache” of attributes & mappings § Don’t send attribute already seen § Bloom joins (use bit-vectors to reduce traffic) Join. A. x = B. y A x B 30

Wrap-Up Query execution is all about engineering for efficiency § O(1) and O(lg n) algorithms wherever possible § Avoid looking at or copying data wherever possible § Note that larger-than-memory is of paramount importance Should that be so in today’s world? As we’ve seen it so far, it’s all about pipelining things through as fast as possible But may also need to consider other axes: § Adaptivity/flexibility – may sometimes need this § Information flow – to the optimizer, the runtime system 31

Upcoming Readings For Wednesday: § Read Chaudhuri survey as an overview § Read and review Selinger et al. paper For Monday: § Read EXODUS and Starburst papers § Write one review contrasting the two on the major issues 32