Quantifying Overhead in Today's Execution Models and Execution Models Bottom Up

- Slides: 43

Quantifying Overhead in Today’s Execution Models and Execution Models Bottom Up

500 N Interstate 35, Austin, Texas, 78701

• Gilbert Hendry, Robert Clay (Sandia National Laboratory)
• Thomas Sterling, Matt Anderson (Indiana University)
• John Shalf, Nick Wright (Lawrence Berkeley Natl. Laboratory)
• Bob Lucas, Jacque Chame, Gene Wagenbreth and Pedro Diniz (USC / Information Sciences Institute)

Motivation

• Today we have a bulk synchronous, distributed memory, communicating sequential processes (CSP) based execution model
  – We’ve evolved into it over two decades
  – It will require a lot of work to carry it forward to exascale
  – The characteristics of today’s execution model are misaligned with emerging hardware trends of the coming decade
• We need to examine alternative execution models for exascale
  – Alternatives exist, and they look promising (e.g., async EMs)
  – We can use modeling and simulation to evaluate the alternatives using DOE applications
  – This can guide our hardware/software trade-offs in the codesign process, and expands options for creating more effective machines

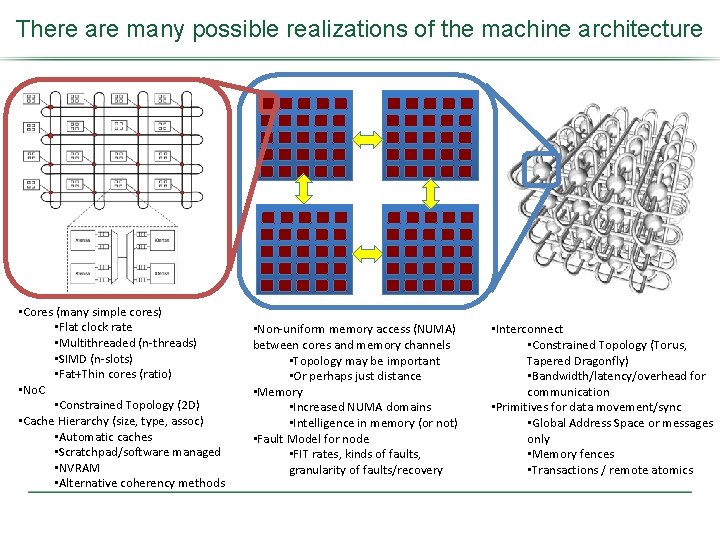

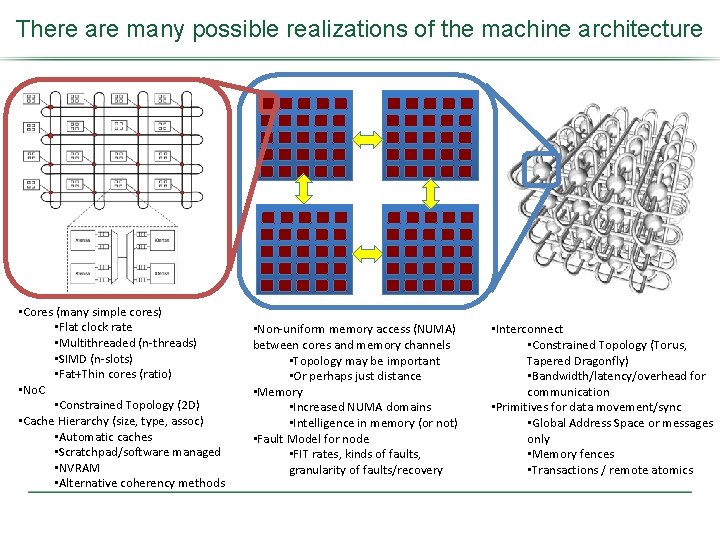

There are many possible realizations of the machine architecture

• Cores (many simple cores)
  – Flat clock rate
  – Multithreaded (n-threads)
  – SIMD (n-slots)
  – Fat+Thin cores (ratio)
• NoC
  – Constrained Topology (2D)
• Cache Hierarchy (size, type, assoc)
  – Automatic caches
  – Scratchpad/software managed
  – NVRAM
  – Alternative coherency methods
• Non-uniform memory access (NUMA) between cores and memory channels
  – Topology may be important
  – Or perhaps just distance
• Memory
  – Increased NUMA domains
  – Intelligence in memory (or not)
• Fault Model for node
  – FIT rates, kinds of faults, granularity of faults/recovery
• Interconnect
  – Constrained Topology (Torus, Tapered Dragonfly)
  – Bandwidth/latency/overhead for communication
• Primitives for data movement/sync
  – Global Address Space or messages only
  – Memory fences
  – Transactions / remote atomics

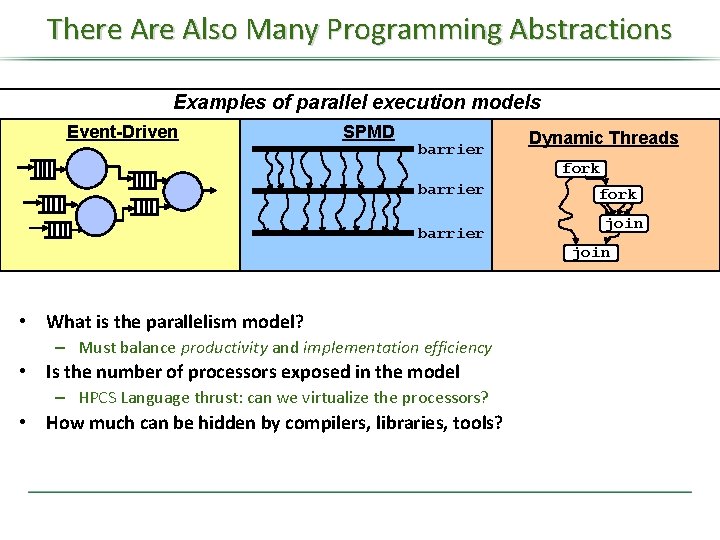

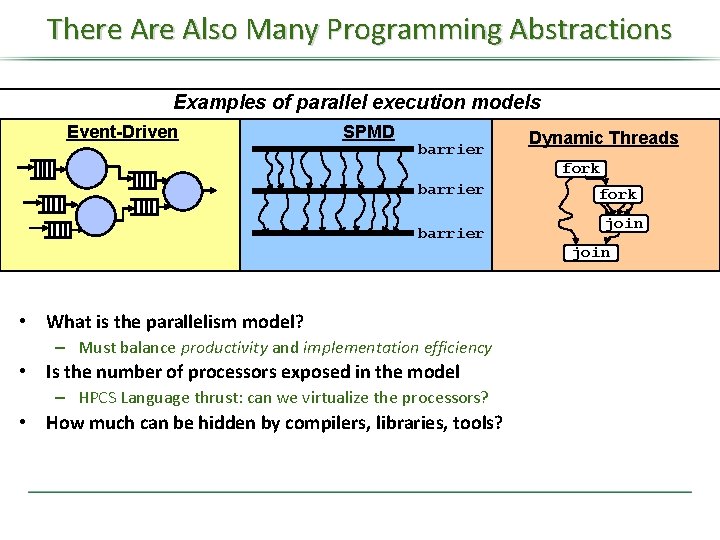

There Are Also Many Programming Abstractions

[Figure: examples of parallel execution models: Event-Driven, SPMD (barrier), Dynamic Threads (fork, join, barrier)]

• What is the parallelism model?
  – Must balance productivity and implementation efficiency
• Is the number of processors exposed in the model?
  – HPCS Language thrust: can we virtualize the processors?
• How much can be hidden by compilers, libraries, tools?

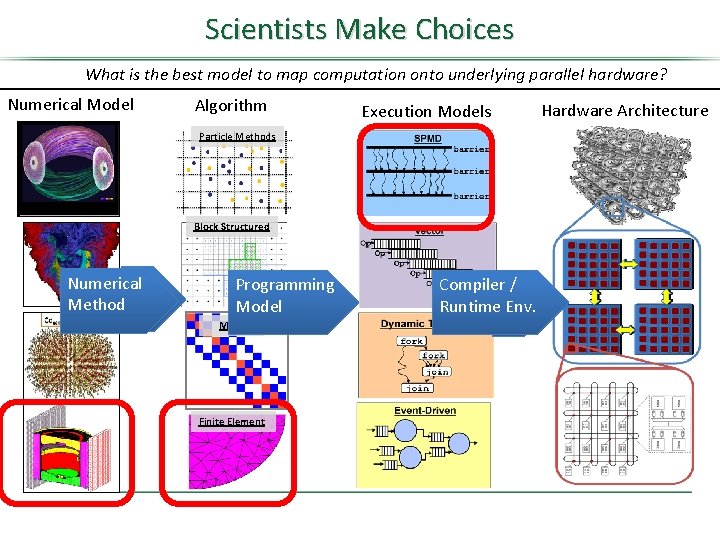

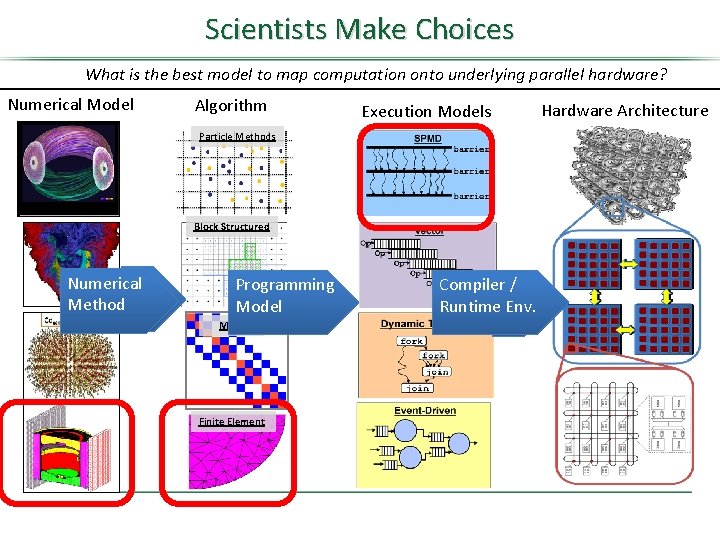

Scientists Make Choices

What is the best model to map computation onto underlying parallel hardware?

[Figure labels: Numerical Model; Algorithm; Execution Models; Particle Methods; Block Structured; Numerical Method; Programming Model; Matrix; Finite Element; Compiler / Runtime Env.; Hardware Architecture]

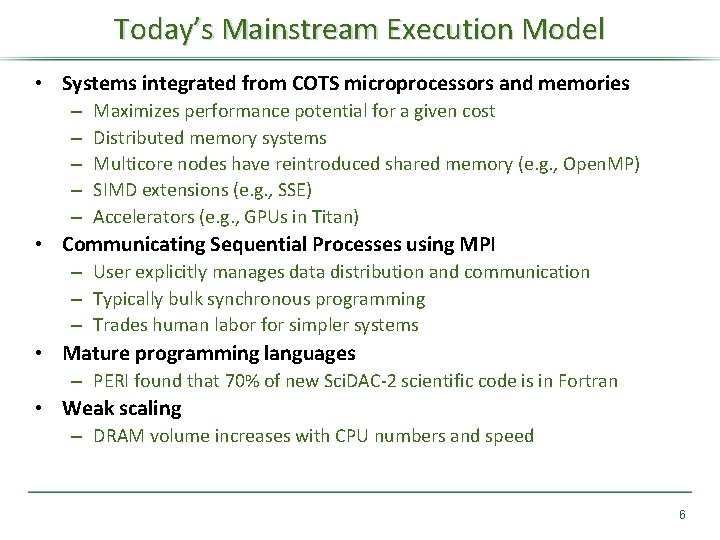

Today’s Mainstream Execution Model

• Systems integrated from COTS microprocessors and memories
  – Maximizes performance potential for a given cost
  – Distributed memory systems
  – Multicore nodes have reintroduced shared memory (e.g., OpenMP)
  – SIMD extensions (e.g., SSE)
  – Accelerators (e.g., GPUs in Titan)
• Communicating Sequential Processes using MPI
  – User explicitly manages data distribution and communication
  – Typically bulk synchronous programming
  – Trades human labor for simpler systems
• Mature programming languages
  – PERI found that 70% of new SciDAC-2 scientific code is in Fortran
• Weak scaling
  – DRAM volume increases with CPU numbers and speed

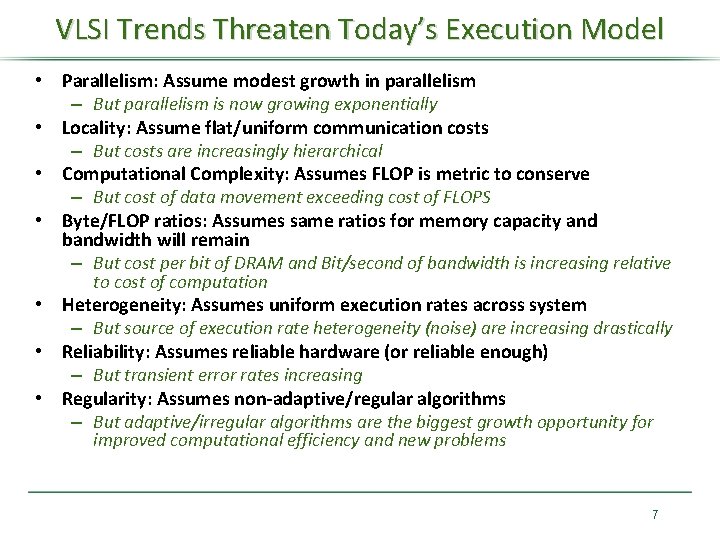

VLSI Trends Threaten Today’s Execution Model

• Parallelism: Assumes modest growth in parallelism
  – But parallelism is now growing exponentially
• Locality: Assumes flat/uniform communication costs
  – But costs are increasingly hierarchical
• Computational Complexity: Assumes the FLOP is the metric to conserve
  – But the cost of data movement is exceeding the cost of FLOPs
• Byte/FLOP ratios: Assumes the same ratios for memory capacity and bandwidth will remain
  – But cost per bit of DRAM and per bit/second of bandwidth is increasing relative to cost of computation
• Heterogeneity: Assumes uniform execution rates across the system
  – But sources of execution rate heterogeneity (noise) are increasing drastically
• Reliability: Assumes reliable hardware (or reliable enough)
  – But transient error rates are increasing
• Regularity: Assumes non-adaptive/regular algorithms
  – But adaptive/irregular algorithms are the biggest growth opportunity for improved computational efficiency and new problems

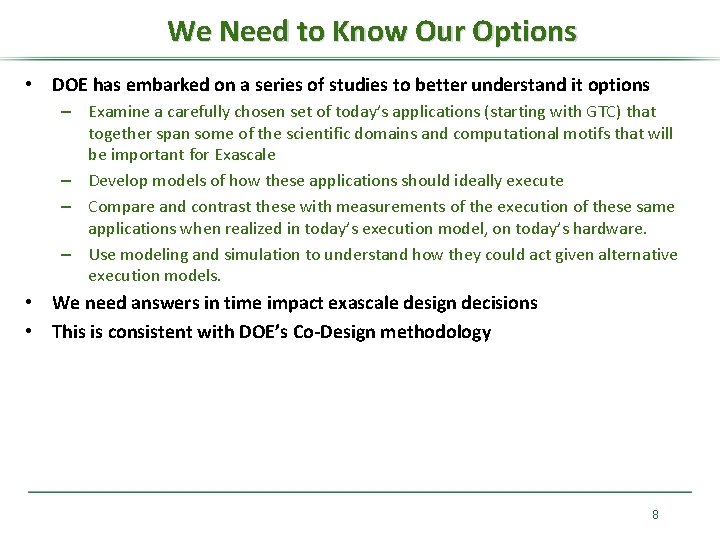

We Need to Know Our Options

• DOE has embarked on a series of studies to better understand its options
  – Examine a carefully chosen set of today’s applications (starting with GTC) that together span some of the scientific domains and computational motifs that will be important for Exascale
  – Develop models of how these applications should ideally execute
  – Compare and contrast these with measurements of the execution of these same applications when realized in today’s execution model, on today’s hardware
  – Use modeling and simulation to understand how they could act given alternative execution models
• We need answers in time to impact exascale design decisions
• This is consistent with DOE’s Co-Design methodology

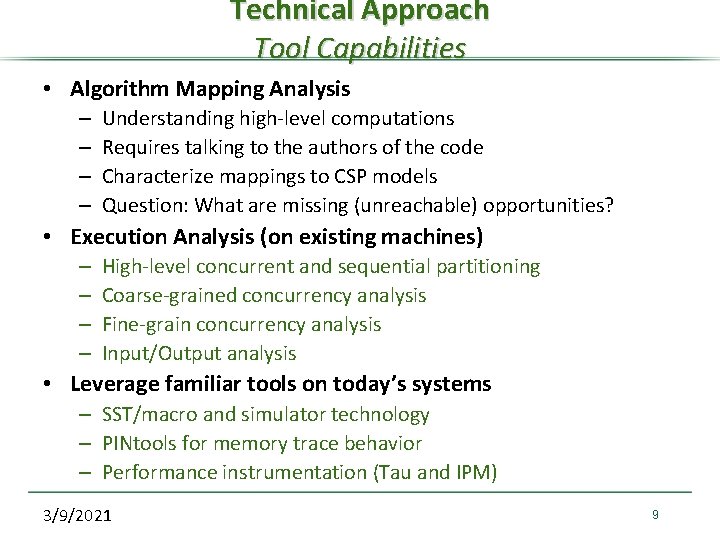

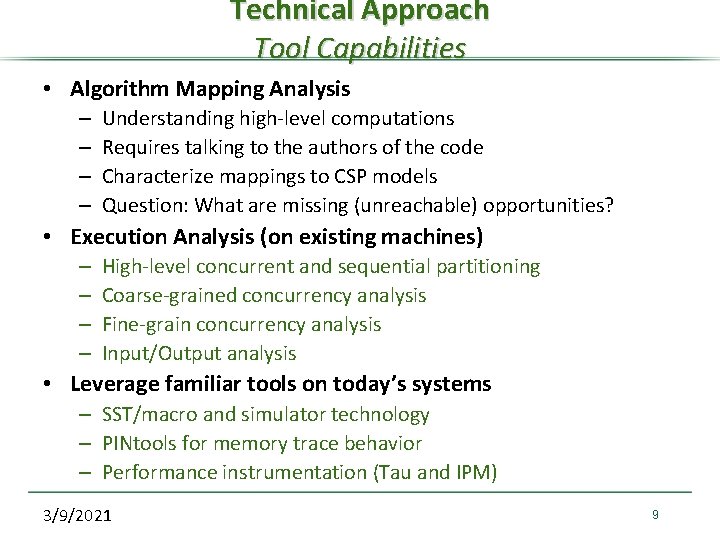

Technical Approach

• Algorithm Mapping Analysis
  – Understanding high-level computations
  – Requires talking to the authors of the code
  – Characterize mappings to CSP models
  – Question: What are missing (unreachable) opportunities?
• Execution Analysis (on existing machines)
  – High-level concurrent and sequential partitioning
  – Coarse-grained concurrency analysis
  – Fine-grain concurrency analysis
  – Input/Output analysis
• Leverage familiar tools on today’s systems
  – SST/macro and simulator technology
  – PINtools for memory trace behavior
  – Performance instrumentation (Tau and IPM)

[Figure: Tool Capabilities]

3/9/2021

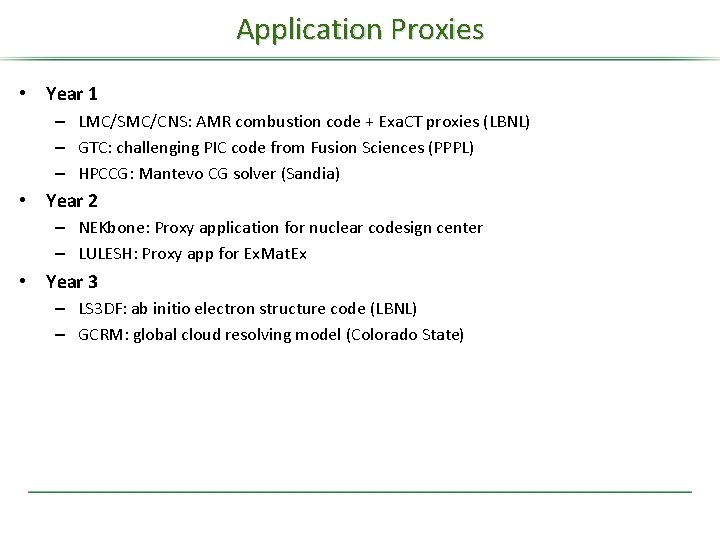

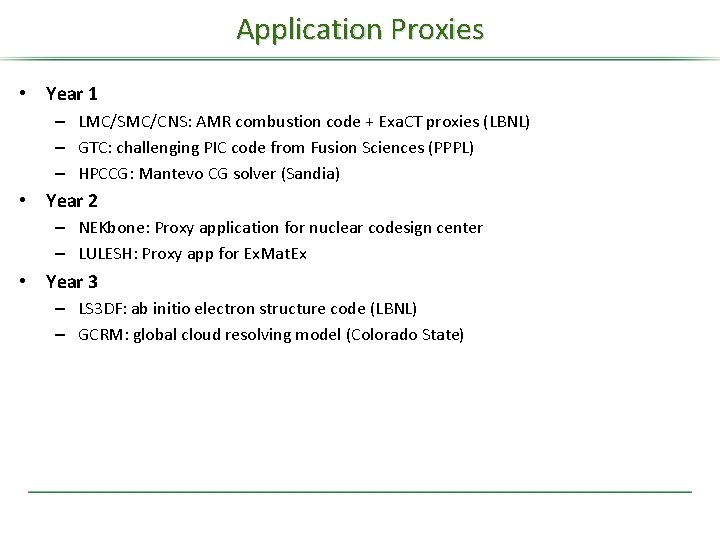

Application Proxies

• Year 1
  – LMC/SMC/CNS: AMR combustion code + ExaCT proxies (LBNL)
  – GTC: challenging PIC code from Fusion Sciences (PPPL)
  – HPCCG: Mantevo CG solver (Sandia)
• Year 2
  – NEKbone: Proxy application for nuclear codesign center
  – LULESH: Proxy app for ExMatEx
• Year 3
  – LS3DF: ab initio electron structure code (LBNL)
  – GCRM: global cloud resolving model (Colorado State)

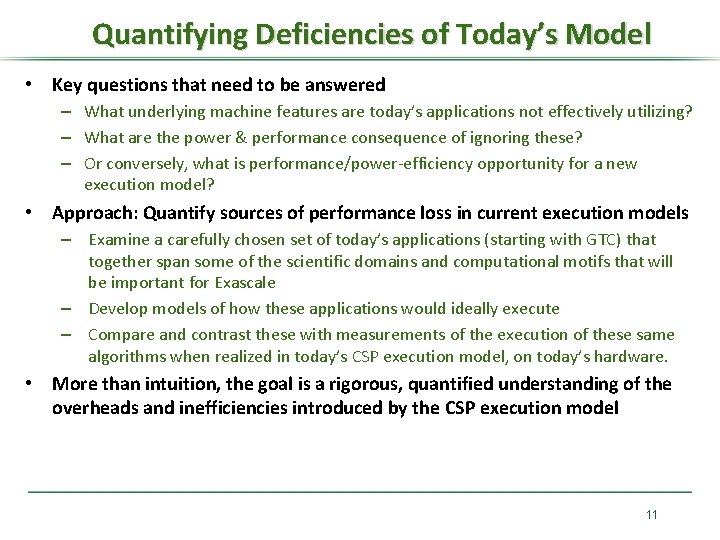

Quantifying Deficiencies of Today’s Model

• Key questions that need to be answered
  – What underlying machine features are today’s applications not effectively utilizing?
  – What are the power and performance consequences of ignoring these?
  – Or conversely, what is the performance/power-efficiency opportunity for a new execution model?
• Approach: Quantify sources of performance loss in current execution models
  – Examine a carefully chosen set of today’s applications (starting with GTC) that together span some of the scientific domains and computational motifs that will be important for Exascale
  – Develop models of how these applications would ideally execute
  – Compare and contrast these with measurements of the execution of these same algorithms when realized in today’s CSP execution model, on today’s hardware
• More than intuition, the goal is a rigorous, quantified understanding of the overheads and inefficiencies introduced by the CSP execution model

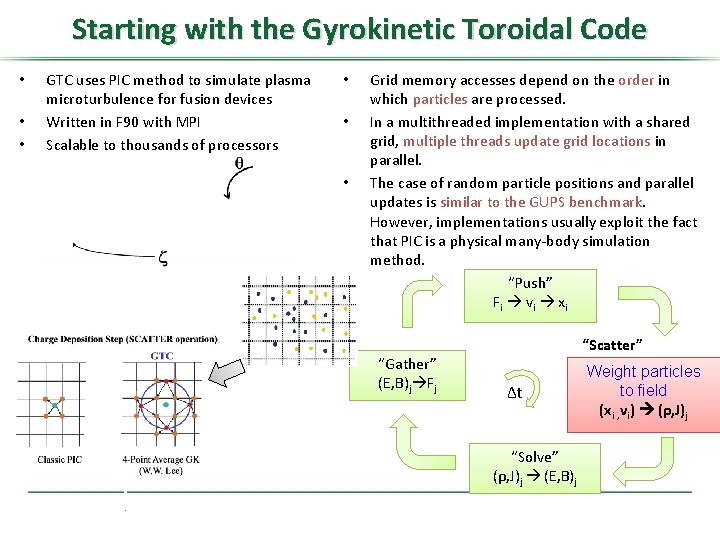

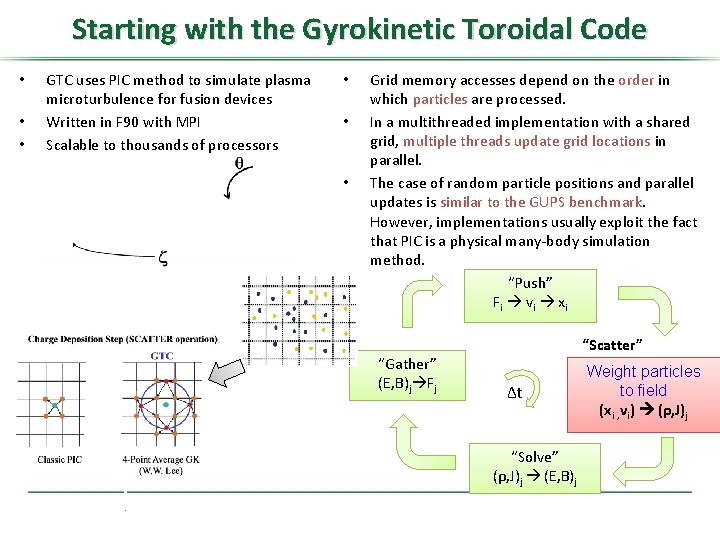

Starting with the Gyrokinetic Toroidal Code

• GTC uses the PIC method to simulate plasma microturbulence for fusion devices
• Written in F90 with MPI
• Scalable to thousands of processors

• Grid memory accesses depend on the order in which particles are processed.
• In a multithreaded implementation with a shared grid, multiple threads update grid locations in parallel.
• The case of random particle positions and parallel updates is similar to the GUPS benchmark. However, implementations usually exploit the fact that PIC is a physical many-body simulation method.

[Figure: PIC cycle per time step Δt: “Gather” (E, B)_j → F_i; “Push” F_i → v_i → x_i; “Scatter” (weight particles to field) (x_i, v_i) → (ρ, J)_j; “Solve” (ρ, J)_j → (E, B)_j]
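To make the point about particle order concrete, here is a minimal Python sketch of a scatter (charge deposition) step. It is not GTC code: the 1D periodic grid with unit spacing, linear weighting, and the names `deposit_charge` and `grid_touch_order` are assumptions chosen for illustration.

```python
import math

def deposit_charge(positions, charge, ngrid):
    """Scatter step: weight each particle's charge onto its two nearest grid points."""
    rho = [0.0] * ngrid
    for x in positions:
        j = math.floor(x) % ngrid      # left grid point (periodic grid, unit spacing)
        w = x - math.floor(x)          # fractional distance from the left grid point
        rho[j] += charge * (1.0 - w)
        rho[(j + 1) % ngrid] += charge * w
    return rho

def grid_touch_order(positions, ngrid):
    """Sequence of grid cells written, i.e. the memory access pattern of the scatter."""
    return [math.floor(x) % ngrid for x in positions]

positions = [5.25, 0.5, 3.75, 0.25, 5.5]               # hypothetical particle positions
unsorted_order = grid_touch_order(positions, 8)         # jumps around the grid
sorted_order = grid_touch_order(sorted(positions), 8)   # sweeps the grid in one direction
rho = deposit_charge(positions, 1.0, 8)
```

With the particles in arbitrary order the writes hop between distant cells, which is the GUPS-like case; sorting the same particles by position turns the same work into a monotonic sweep over the grid.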

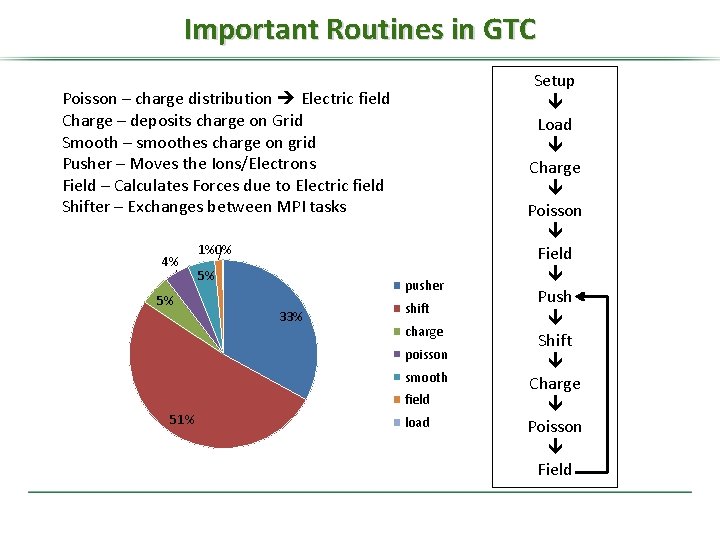

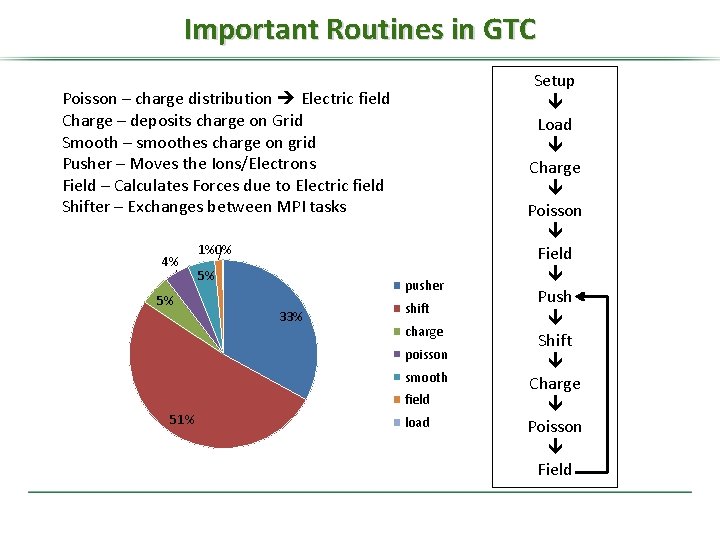

Important Routines in GTC

• Poisson – charge distribution to electric field
• Charge – deposits charge on grid
• Smooth – smooths charge on grid
• Pusher – moves the ions/electrons
• Field – calculates forces due to electric field
• Shifter – exchanges between MPI tasks

[Figure: pie chart of runtime share by routine (pusher, shift, charge, poisson, smooth, field, load); slice labels 51%, 33%, 5%, 5%, 4%, 1%, 0%]

[Figure: execution sequence: Setup, Load, Charge, Poisson, Field, Push, Shift, Charge, Poisson, Field]

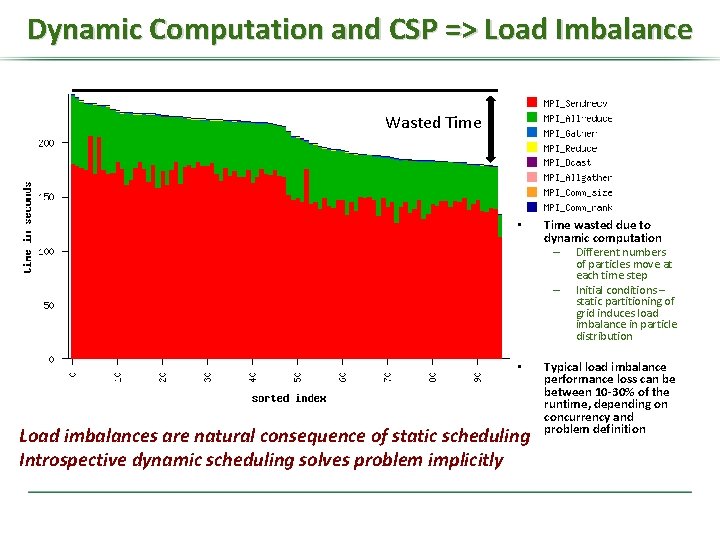

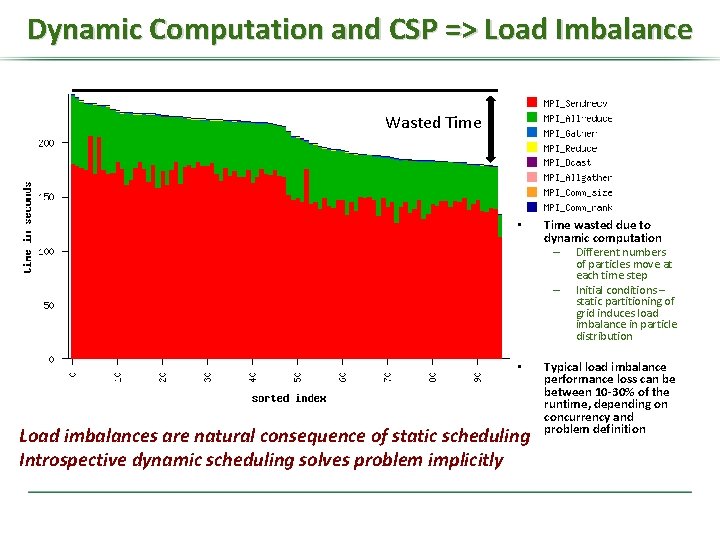

Dynamic Computation and CSP => Load Imbalance

• Time wasted due to dynamic computation
  – Different numbers of particles move at each time step
  – Initial conditions: static partitioning of grid induces load imbalance in particle distribution
• Load imbalances are a natural consequence of static scheduling
• Introspective dynamic scheduling solves the problem implicitly

Typical load imbalance performance loss can be between 10% and 30% of the runtime, depending on concurrency and problem definition

[Figure: wasted time]
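A small Python sketch of how such a loss can be estimated for one bulk-synchronous step. It assumes that step time is proportional to the number of particles a rank owns and that every rank waits at a barrier for the slowest one; the function name `imbalance_loss` and the particle counts are hypothetical.

```python
def imbalance_loss(work_per_rank):
    """Fraction of aggregate processor time spent idle while waiting for the slowest rank."""
    slowest = max(work_per_rank)
    mean = sum(work_per_rank) / len(work_per_rank)
    return (slowest - mean) / slowest

particles = [100, 120, 80, 100]   # hypothetical particles per rank after a time step
loss = imbalance_loss(particles)  # (120 - 100) / 120, about 17% of the step is idle time
```

Under these assumptions a 20% overload on a single rank already costs about 17% of the step, which falls inside the 10% to 30% range quoted above.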

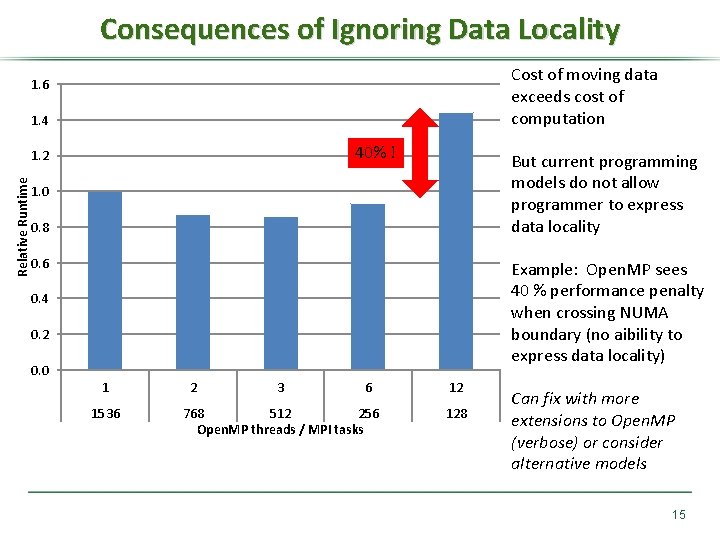

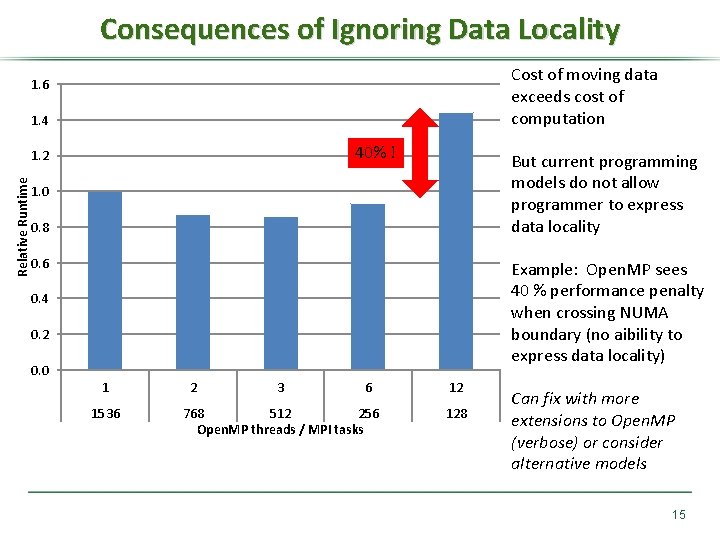

Consequences of Ignoring Data Locality

• Cost of moving data exceeds cost of computation
• But current programming models do not allow the programmer to express data locality
• Example: OpenMP sees a 40% performance penalty when crossing a NUMA boundary (no ability to express data locality)
• Can fix with more extensions to OpenMP (verbose) or consider alternative models

[Figure: relative runtime (0.0 to 1.6) vs. OpenMP threads / MPI tasks (1/1536, 2/768, 3/512, 6/256, 12/128), with the 40% penalty marked]

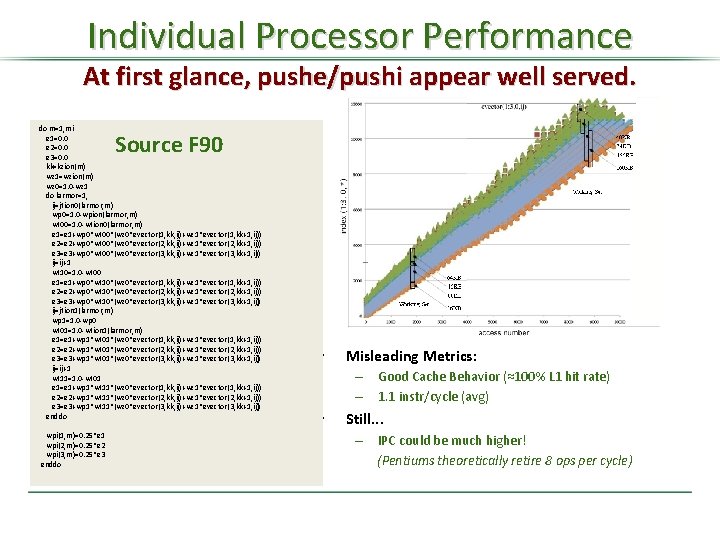

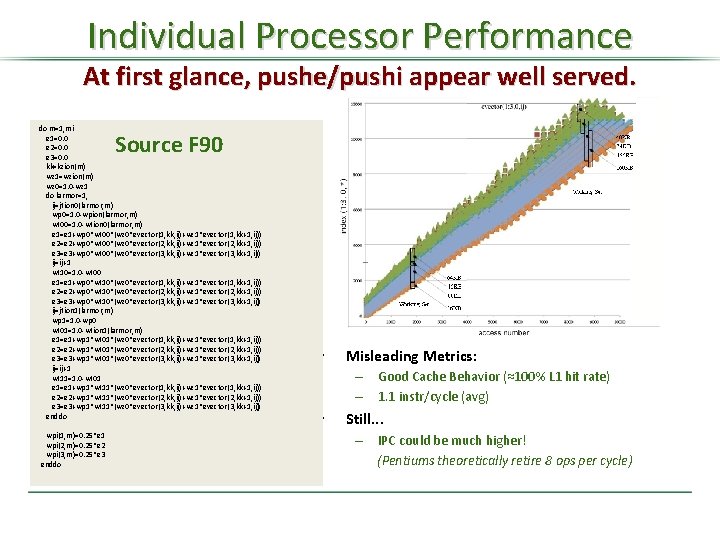

Individual Processor Performance

At first glance, pushe/pushi appear well served.

Source F90:

    do m=1,mi
       e1=0.0
       e2=0.0
       e3=0.0
       kk=kzion(m)
       wz1=wzion(m)
       wz0=1.0-wz1
       do larmor=1,4
          ij=jtion0(larmor,m)
          wp0=1.0-wpion(larmor,m)
          wt00=1.0-wtion0(larmor,m)
          e1=e1+wp0*wt00*(wz0*evector(1,kk,ij)+wz1*evector(1,kk+1,ij))
          e2=e2+wp0*wt00*(wz0*evector(2,kk,ij)+wz1*evector(2,kk+1,ij))
          e3=e3+wp0*wt00*(wz0*evector(3,kk,ij)+wz1*evector(3,kk+1,ij))
          ij=ij+1
          wt10=1.0-wt00
          e1=e1+wp0*wt10*(wz0*evector(1,kk,ij)+wz1*evector(1,kk+1,ij))
          e2=e2+wp0*wt10*(wz0*evector(2,kk,ij)+wz1*evector(2,kk+1,ij))
          e3=e3+wp0*wt10*(wz0*evector(3,kk,ij)+wz1*evector(3,kk+1,ij))
          ij=jtion1(larmor,m)
          wp1=1.0-wp0
          wt01=1.0-wtion1(larmor,m)
          e1=e1+wp1*wt01*(wz0*evector(1,kk,ij)+wz1*evector(1,kk+1,ij))
          e2=e2+wp1*wt01*(wz0*evector(2,kk,ij)+wz1*evector(2,kk+1,ij))
          e3=e3+wp1*wt01*(wz0*evector(3,kk,ij)+wz1*evector(3,kk+1,ij))
          ij=ij+1
          wt11=1.0-wt01
          e1=e1+wp1*wt11*(wz0*evector(1,kk,ij)+wz1*evector(1,kk+1,ij))
          e2=e2+wp1*wt11*(wz0*evector(2,kk,ij)+wz1*evector(2,kk+1,ij))
          e3=e3+wp1*wt11*(wz0*evector(3,kk,ij)+wz1*evector(3,kk+1,ij))
       enddo
       wpi(1,m)=0.25*e1
       wpi(2,m)=0.25*e2
       wpi(3,m)=0.25*e3
    enddo

• Misleading Metrics:
  – Good cache behavior (≈100% L1 hit rate)
  – 1.1 instr/cycle (avg)
• Still...
  – IPC could be much higher! (Pentiums theoretically retire 8 ops per cycle)

They Really Aren’t: There is a Semantic Gap

[Figure labels: Source F90 (the same loop as on the previous slide); Compiler DFG; x86 Binary]

• User Expected – “Mental Model”, per iteration of loop: FP MULTs: 195; FP ADD/SUBs: 121; Array Accesses: 34
• Compiler Representation – “Programming Model”, per iteration of loop: FP MULTs: 195; FP ADD/SUBs: 121; Array Accesses: 96
• In the Wild (PGF compiler), per iteration of loop: FP MULTs+ADD/SUB: 284; Data Accesses: 183
• 5x gap, exacerbated by the x86 ISA

[x86 binary excerpt: a run of movl, imulq, movq, movaps, subss, addq, mulss, and movslq instructions operating on general-purpose registers, %xmm registers, and stack slots such as 248(%rsp) and 264(%rsp)]
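The size of the gap can be checked directly from the per-iteration counts on this slide. The short Python calculation below uses only those counts; reading the "5x" label as the ratio of data accesses in the binary to the array accesses the user expects is an interpretation, not something the slide states explicitly.

```python
expected_accesses = 34    # user's mental model: array accesses per iteration
compiler_accesses = 96    # compiler representation: array accesses per iteration
binary_accesses = 183     # PGF-generated x86: data accesses per iteration

gap_compiler = compiler_accesses / expected_accesses   # about 2.8x
gap_binary = binary_accesses / expected_accesses       # about 5.4x

expected_flops = 195 + 121   # FP MULTs + FP ADD/SUBs in the mental model
binary_flops = 284           # FP MULTs+ADD/SUB counted in the binary
```

The floating-point work stays in the same range (316 expected operations versus 284 in the binary), while data accesses grow by roughly a factor of five, which is consistent with the slide's emphasis on addressing overhead and data movement.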

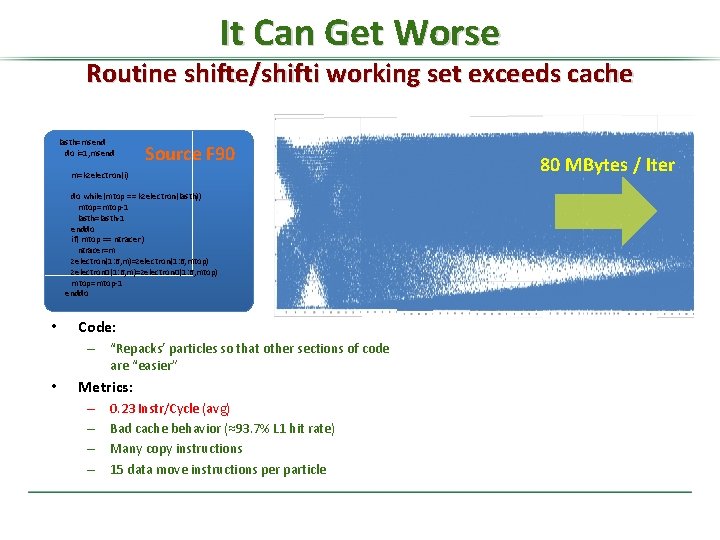

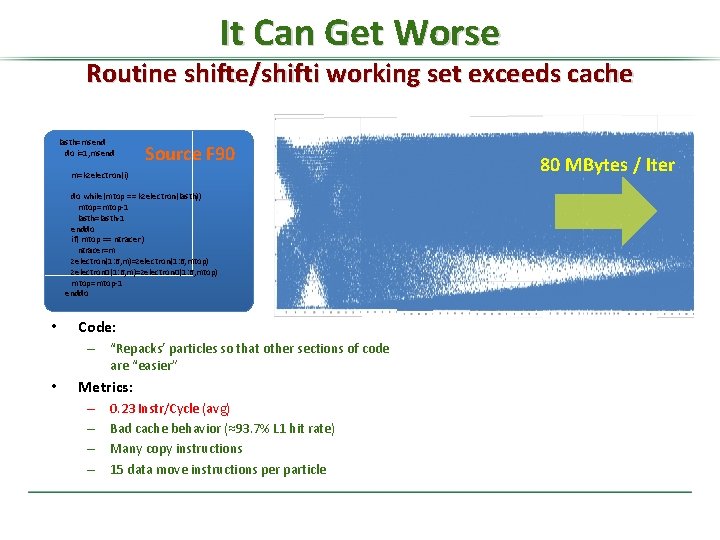

It Can Get Worse

Routine shifte/shifti: working set exceeds cache

Source F90:

    lasth=msend
    do i=1,msend
       m=kzelectron(i)
       do while(mtop == kzelectron(lasth))
          mtop=mtop-1
          lasth=lasth-1
       enddo
       if( mtop == ntracer ) ntracer=m
       zelectron(1:6,m)=zelectron(1:6,mtop)
       zelectron0(1:6,m)=zelectron0(1:6,mtop)
       mtop=mtop-1
    enddo

• Code:
  – “Repacks” particles so that other sections of code are “easier”
• Metrics:
  – 0.23 instr/cycle (avg)
  – Bad cache behavior (≈93.7% L1 hit rate)
  – Many copy instructions
  – 15 data move instructions per particle
  – 80 MBytes / iter
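The repacking idea can be sketched in Python as follows. This is an illustrative reimplementation of hole filling, not a translation of the GTC routine: the function name `repack`, the use of a Python list for the particle array, and the explicit set of hole indices are all assumptions.

```python
def repack(particles, holes):
    """Compact `particles` in place after the entries at indices `holes` were sent away.

    Each hole is filled with a live particle taken from the top of the array,
    so the surviving particles end up contiguous (their order is not preserved).
    """
    open_holes = set(holes)
    top = len(particles) - 1
    for h in sorted(holes):
        while top in open_holes:       # skip top slots that were themselves sent
            top -= 1
        if h > top:                    # every remaining hole lies above the live region
            break
        particles[h] = particles[top]  # one copy per filled hole: pure data movement
        open_holes.discard(h)
        top -= 1
    del particles[top + 1:]
    return particles

survivors = repack(list("abcdef"), [1, 4, 5])
```

Every filled hole costs a copy of a full particle record and no arithmetic at all, which is why a routine of this kind shows many data move instructions and a low instruction rate.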

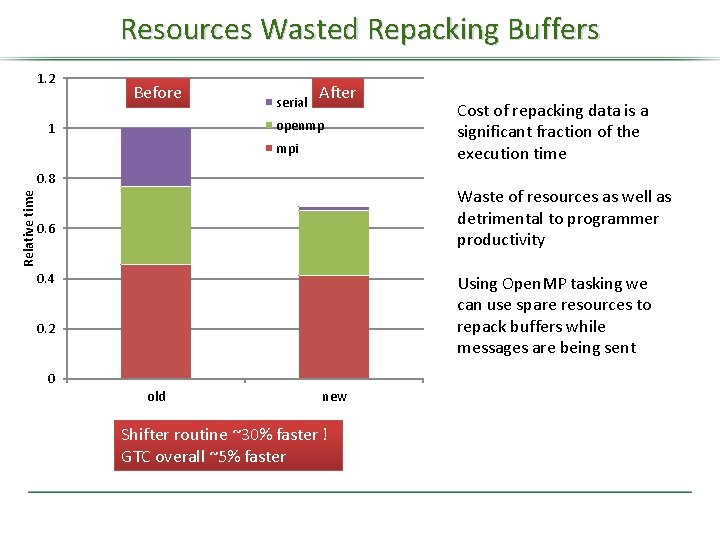

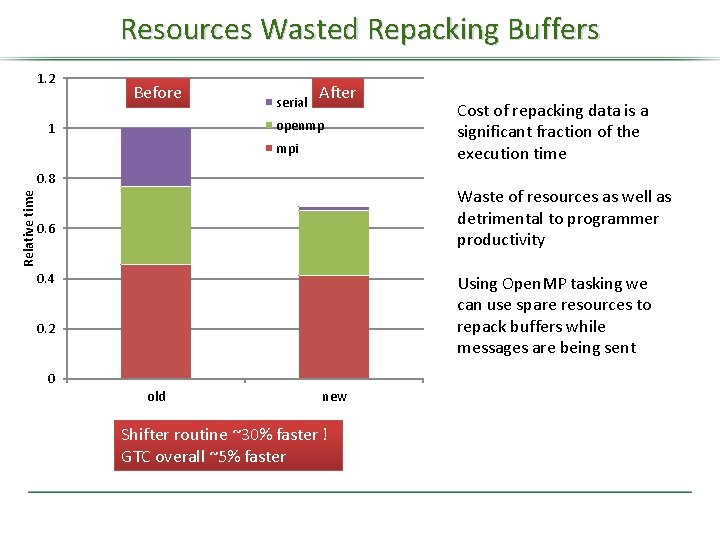

Resources Wasted Repacking Buffers

• Cost of repacking data is a significant fraction of the execution time
• Waste of resources as well as detrimental to programmer productivity
• Using OpenMP tasking we can use spare resources to repack buffers while messages are being sent
• Shifter routine ~30% faster! GTC overall ~5% faster

[Figure: relative time (0 to 1.2) before (old) and after (new), broken down into serial, openmp, and mpi]

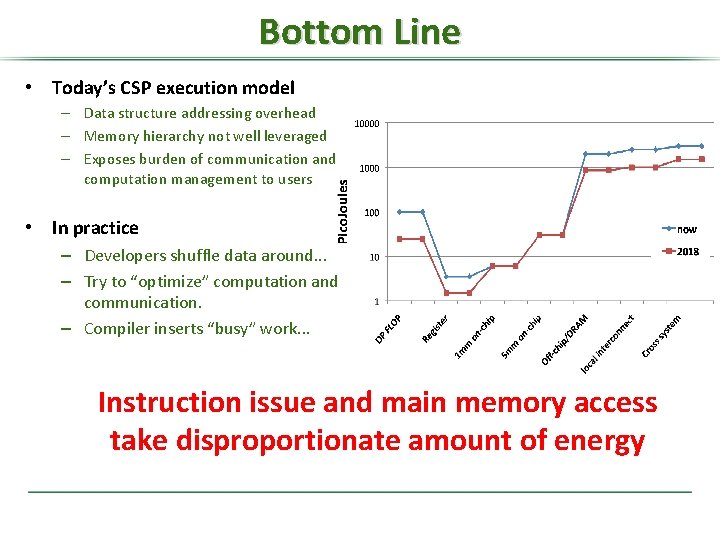

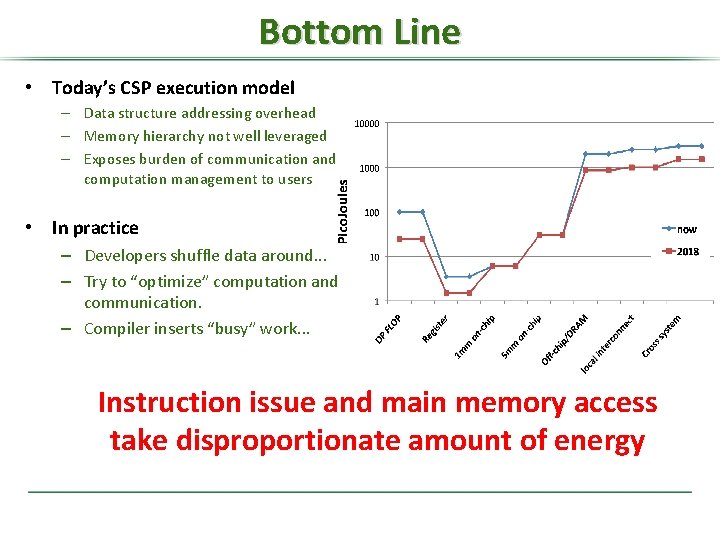

Bottom Line

• Today’s CSP execution model
  – Data structure addressing overhead
  – Memory hierarchy not well leveraged
  – Exposes burden of communication and computation management to users
• In practice
  – Developers shuffle data around...
  – Try to “optimize” computation and communication.
  – Compiler inserts “busy” work...

Instruction issue and main memory access take a disproportionate amount of energy

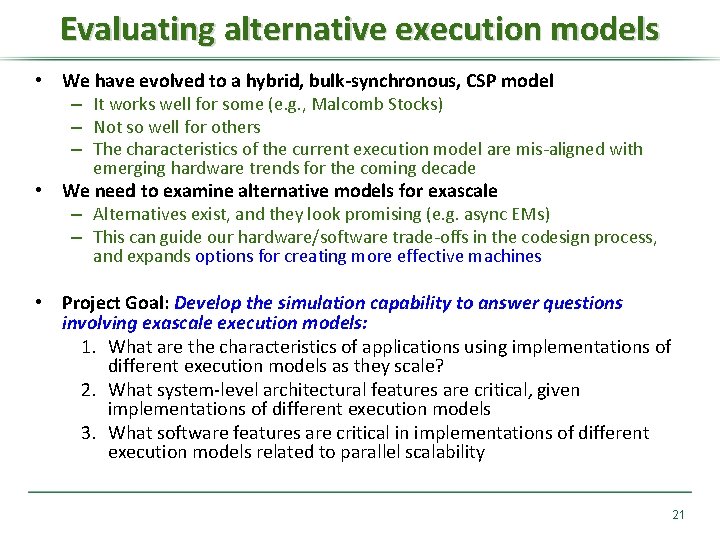

Evaluating alternative execution models

• We have evolved to a hybrid, bulk-synchronous, CSP model
  – It works well for some (e.g., Malcolm Stocks)
  – Not so well for others
  – The characteristics of the current execution model are misaligned with emerging hardware trends for the coming decade
• We need to examine alternative models for exascale
  – Alternatives exist, and they look promising (e.g., async EMs)
  – This can guide our hardware/software trade-offs in the codesign process, and expands options for creating more effective machines
• Project Goal: Develop the simulation capability to answer questions involving exascale execution models:
  1. What are the characteristics of applications using implementations of different execution models as they scale?
  2. What system-level architectural features are critical, given implementations of different execution models?
  3. What software features are critical in implementations of different execution models related to parallel scalability?

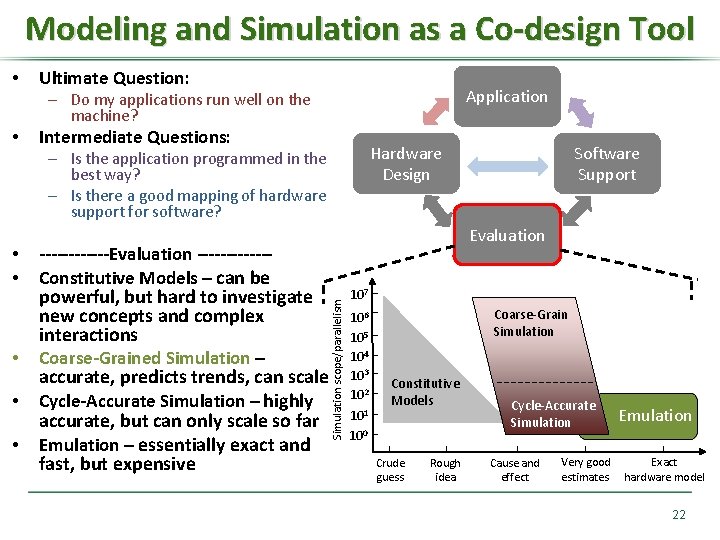

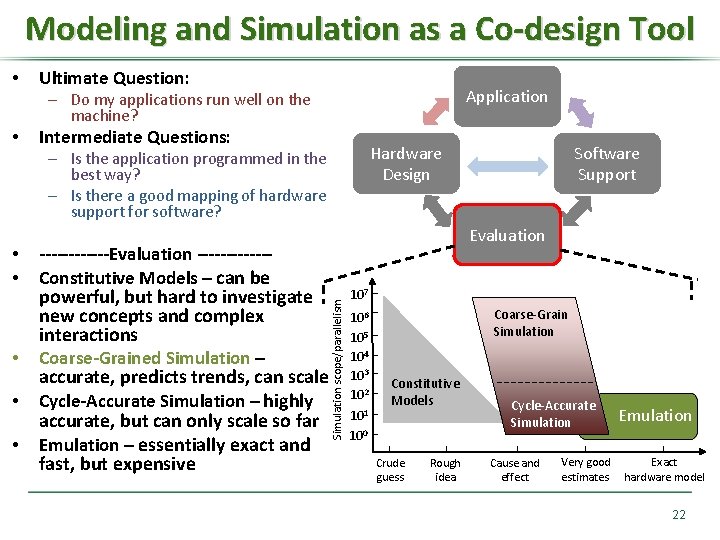

Modeling and Simulation as a Co-design Tool

• Ultimate Question:
  – Do my applications run well on the machine?
• Intermediate Questions:
  – Is the application programmed in the best way?
  – Is there a good mapping of hardware support for software?
• Evaluation
  – Constitutive Models – can be powerful, but hard to investigate new concepts and complex interactions
  – Coarse-Grained Simulation – accurate, predicts trends, can scale
  – Cycle-Accurate Simulation – highly accurate, but can only scale so far
  – Emulation – essentially exact and fast, but expensive

[Figure: Application, Hardware Design, and Software Support linked through evaluation]

[Figure: simulation scope/parallelism (10^0 to 10^7) for Constitutive Models, Coarse-Grain Simulation, Cycle-Accurate Simulation, and Emulation, against fidelity labels: crude guess, rough idea, cause and effect, very good estimates, exact hardware model]

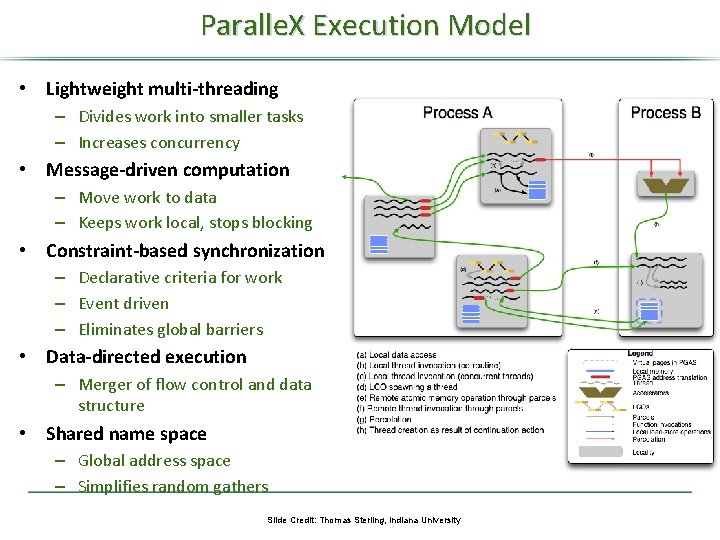

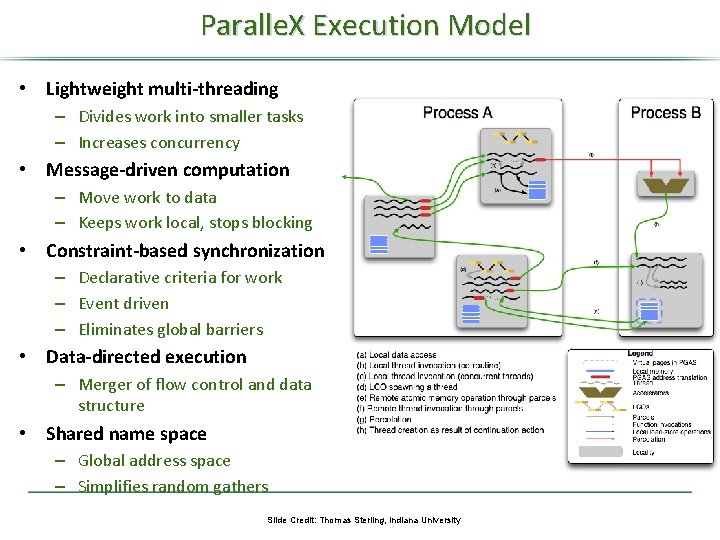

ParalleX Execution Model

• Lightweight multi-threading
  – Divides work into smaller tasks
  – Increases concurrency
• Message-driven computation
  – Move work to data
  – Keeps work local, stops blocking
• Constraint-based synchronization
  – Declarative criteria for work
  – Event driven
  – Eliminates global barriers
• Data-directed execution
  – Merger of flow control and data structure
• Shared name space
  – Global address space
  – Simplifies random gathers

Slide Credit: Thomas Sterling, Indiana University

About SST/macro HPX Implementation

• Almost identical API, so resulting application code is nearly the same as with real HPX
• However, the API is not as filled out as the real thing. Only the more commonly used functions/components are implemented
• Default AGAS implemented as a distributed hash
• We do have a model of the on-node thread manager for oversubscription, though the focus is on the parallel scalability aspects
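As a rough illustration of what "AGAS implemented as a distributed hash" can mean, the Python sketch below hashes a global name to the locality that owns its address record. It does not reproduce the SST/macro or HPX interfaces; `ToyAgas`, `home_locality`, `bind`, and `resolve` are hypothetical names, and the per-locality tables are plain dictionaries held in one process.

```python
import hashlib

def home_locality(global_id, num_localities):
    """Hash a global name to the locality that stores its address record."""
    digest = hashlib.sha256(global_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_localities

class ToyAgas:
    """Toy global address space: one directory shard per locality."""

    def __init__(self, num_localities):
        self.shards = [dict() for _ in range(num_localities)]

    def bind(self, global_id, locality, local_address):
        shard = self.shards[home_locality(global_id, len(self.shards))]
        shard[global_id] = (locality, local_address)

    def resolve(self, global_id):
        shard = self.shards[home_locality(global_id, len(self.shards))]
        return shard[global_id]

agas = ToyAgas(4)
agas.bind("particle_block/7", 2, 0x1000)   # hypothetical object living on locality 2
where = agas.resolve("particle_block/7")
```

In a distributed setting each lookup would be a message to the home locality, so the hash spreads directory traffic across localities instead of concentrating it on one server.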

Using HPCCG: conjugate gradient solver from Mantevo

• Use it because it is extremely simple
  – it’s not very predictive of any app, lacks real context around the conjugate gradient solver
  – but it’s easy to understand, and easy to work with
• We have GTC around, but it takes a while to simulate.

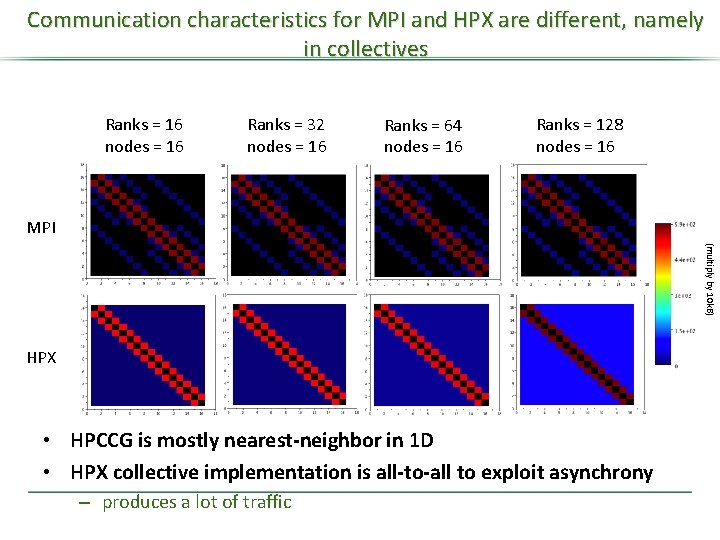

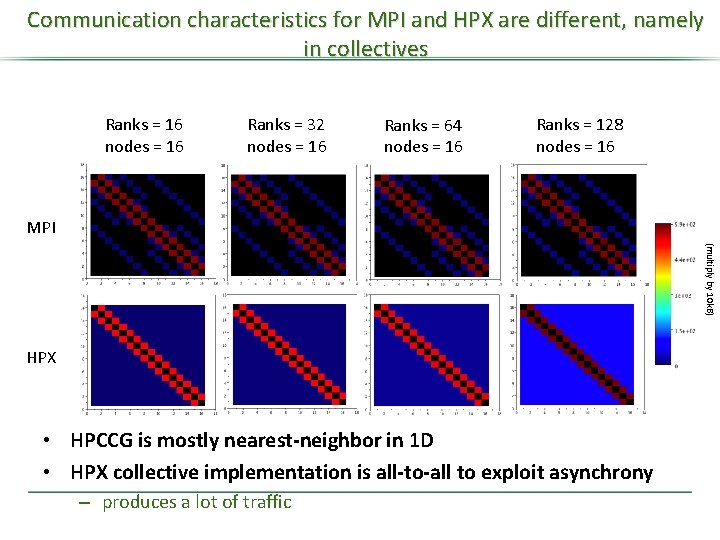

Communication characteristics for MPI and HPX are different, namely in collectives

[Figure: communication traffic plots for MPI (multiply by 10 kB) and HPX with 16, 32, 64, and 128 ranks on 16 nodes]

• HPCCG is mostly nearest-neighbor in 1D
• HPX collective implementation is all-to-all to exploit asynchrony – produces a lot of traffic
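A back-of-the-envelope Python sketch of why an all-to-all collective produces much more traffic. The tree-based count stands in for a traffic-minimizing collective and is an assumption; the slides do not say which algorithm the MPI model uses, and the function names are hypothetical.

```python
def tree_allreduce_messages(ranks):
    """Reduce up a tree, then broadcast back down: 2 * (P - 1) messages."""
    return 2 * (ranks - 1)

def all_to_all_messages(ranks):
    """Every rank sends its contribution directly to every other rank: P * (P - 1) messages."""
    return ranks * (ranks - 1)

counts = {p: (tree_allreduce_messages(p), all_to_all_messages(p))
          for p in (16, 32, 64, 128)}
```

The tree count grows linearly with the number of ranks and the all-to-all count grows quadratically, so at 128 ranks the two differ by a factor of 64 in this simple model.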

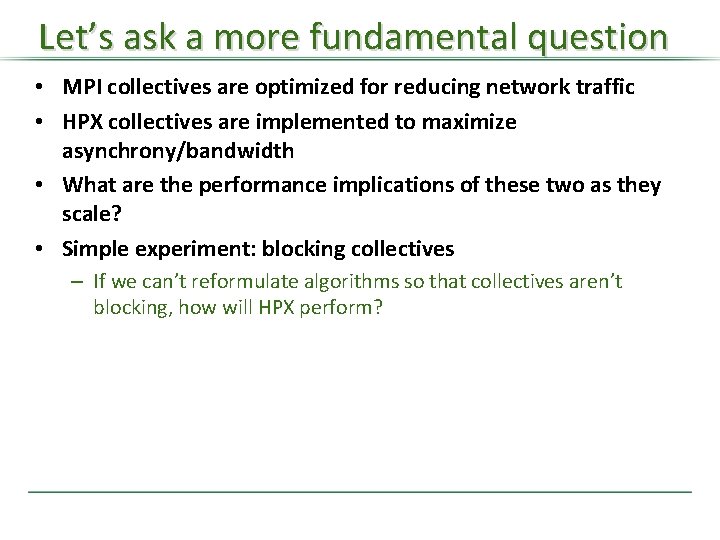

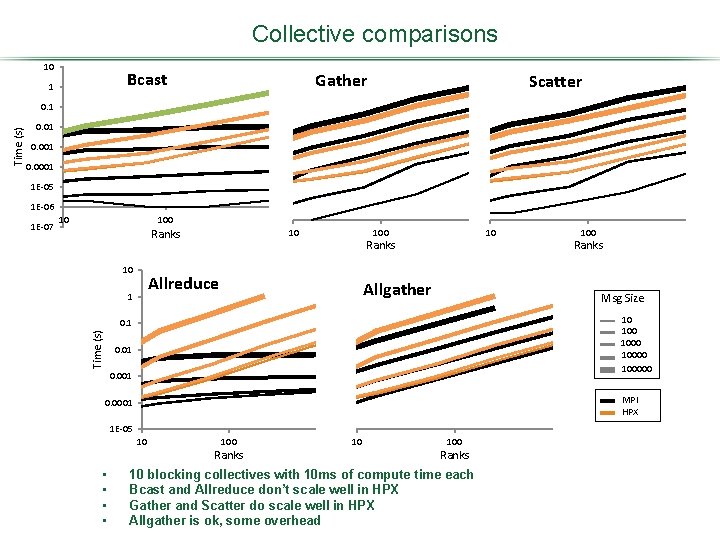

Let’s ask a more fundamental question

• MPI collectives are optimized for reducing network traffic
• HPX collectives are implemented to maximize asynchrony/bandwidth
• What are the performance implications of these two as they scale?
• Simple experiment: blocking collectives
  – If we can’t reformulate algorithms so that collectives aren’t blocking, how will HPX perform?

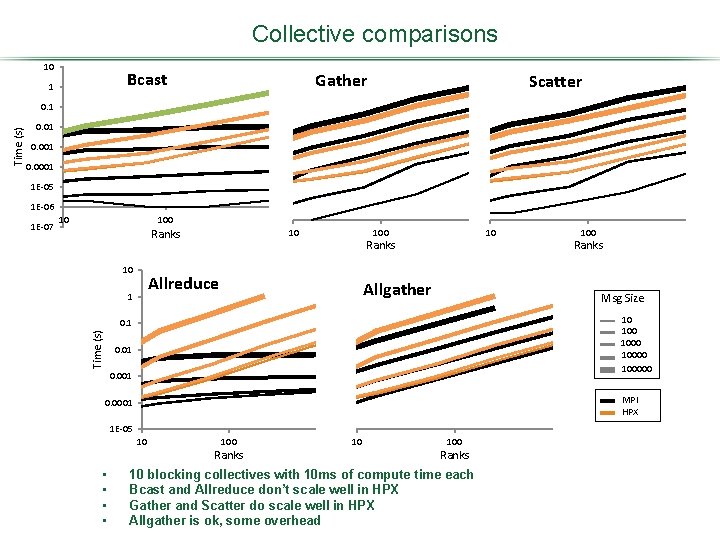

Collective comparisons

• 10 blocking collectives with 10 ms of compute time each
• Bcast and Allreduce don’t scale well in HPX
• Gather and Scatter do scale well in HPX
• Allgather is ok, some overhead

[Figure: time (s), log scale from 1E-07 to 10, vs. ranks (10 to 100) per collective; legend includes Bcast, Gather, Scatter]

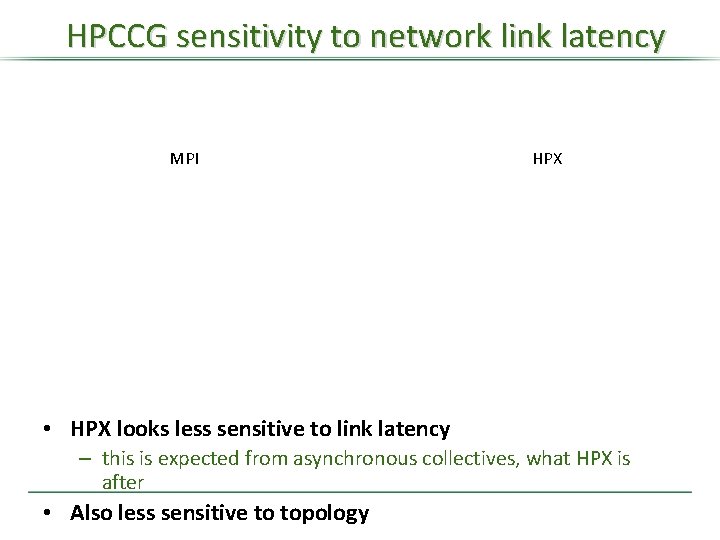

HPCCG sensitivity to network link latency

[Figure: MPI and HPX panels]

• HPX looks less sensitive to link latency – this is expected from asynchronous collectives, what HPX is after
• Also less sensitive to topology

HPCCG sensitivity to link BW

[Figure: MPI and HPX panels]

• HPCCG not really sensitive to link BW – could be limited by something else

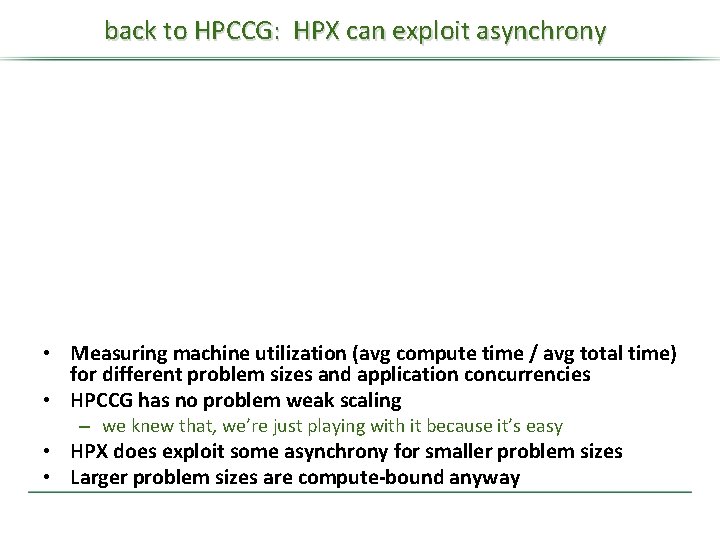

Back to HPCCG: HPX can exploit asynchrony

• Measuring machine utilization (avg compute time / avg total time) for different problem sizes and application concurrencies
• HPCCG has no problem weak scaling – we knew that, we’re just playing with it because it’s easy
• HPX does exploit some asynchrony for smaller problem sizes
• Larger problem sizes are compute-bound anyway
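The utilization metric defined on this slide can be written down directly. In the Python sketch below, the function name `machine_utilization` and the per-rank timings are hypothetical; only the ratio itself comes from the slide.

```python
def machine_utilization(compute_times, total_times):
    """Average compute time divided by average total time across ranks."""
    avg_compute = sum(compute_times) / len(compute_times)
    avg_total = sum(total_times) / len(total_times)
    return avg_compute / avg_total

# Hypothetical per-rank timings in seconds: two ranks, 10 s wall time each.
utilization = machine_utilization([8.0, 6.0], [10.0, 10.0])
```

A value near 1 means the run is compute-bound, which matches the slide's observation that larger problem sizes leave little for asynchrony to recover.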

Technical Summary for EMBU

• HPX is capable of exploiting global asynchrony to the extent possible
  – but avoid Allreduces and Bcasts or scalability will kill you
• HPX can be less sensitive to network parameters through latency hiding, which may be good considering the unknown exascale landscape

Summary

• We are beginning to collect the data needed to make design decisions for Exascale systems. This is what Co-Design is.
• These results will allow us to quantify technical challenges such as starvation, latency, overhead, and delays due to contention as well as the practical constraints of power, reliability, generality, and programmability.
• We will then be able to assess new paradigms in the form of new execution models to exploit runtime information, manage asynchrony, co-design processor architectures and applications, expose untapped logical and physical parallelism, and ensure continued operation by graceful degradation.

Future Work

• Validate HPX implementation
• More apps, reformulation of something
• Complete PGAS implementation in simulator (and validate) as a baseline alternative
  – PGAS is like an in-between point between MPI and HPX in that it has an asynchrony feature (one-sided communication) and a global naming feature (symmetric data). But it’s still structured, and porting is pretty easy.
• Formal UQ parameter sensitivity analysis

SC11, November 15, 2011

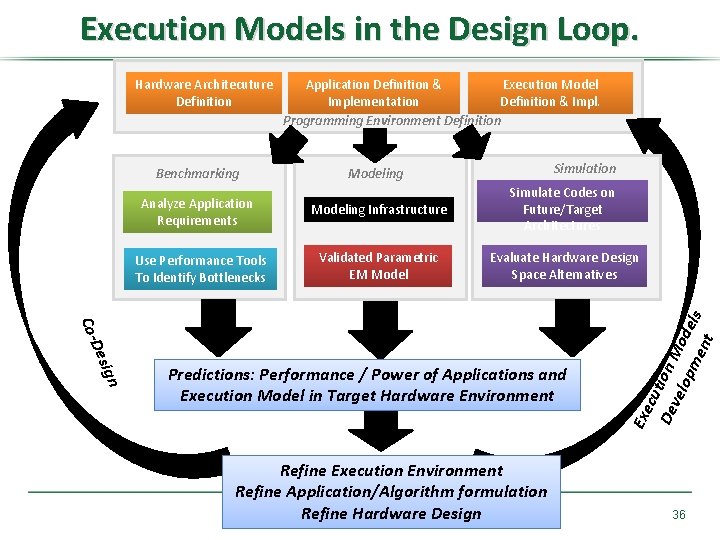

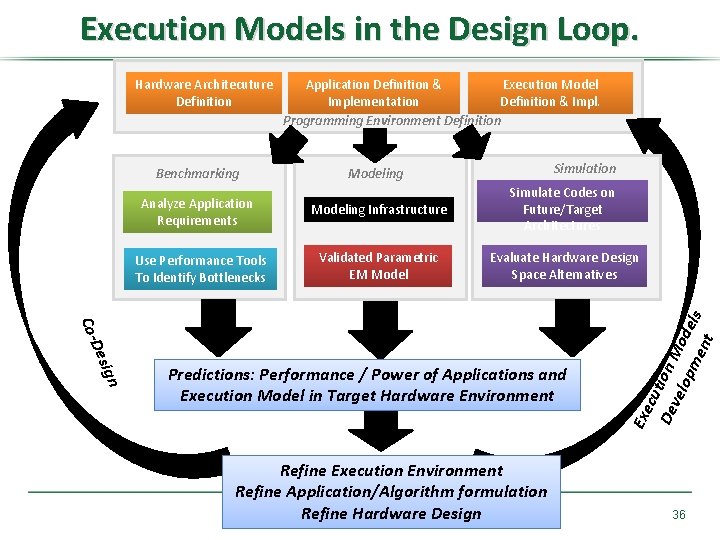

Execution Models in the Design Loop

[Figure: co-design loop. Box labels: Hardware Architecture Definition; Benchmarking; Application Definition & Implementation; Execution Model Definition & Impl.; Programming Environment Definition; Simulation Modeling Infrastructure; Simulate Codes on Future/Target Architectures; Use Performance Tools To Identify Bottlenecks; Validated Parametric EM Model; Evaluate Hardware Design Space Alternatives; Predictions: Performance / Power of Applications and Execution Model in Target Hardware Environment; Refine Execution Environment; Refine Application/Algorithm formulation; Refine Hardware Design; Analyze Application Requirements. Side labels: Co-Design; Execution Models Development]

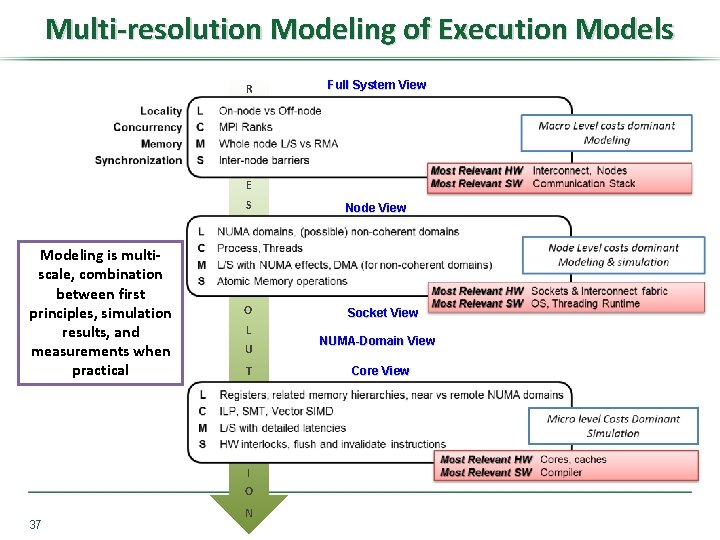

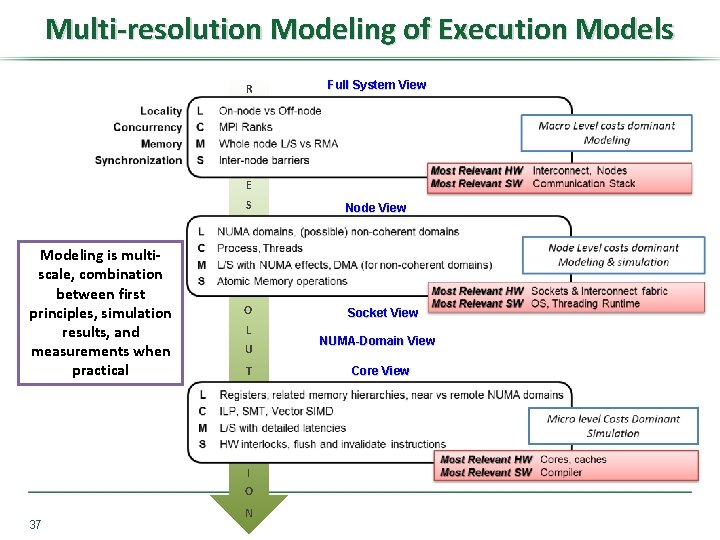

Multi-resolution Modeling of Execution Models

Modeling is multiscale: a combination of first principles, simulation results, and measurements when practical

[Figure: levels along the resolution axis: Full System View, Node View, Socket View, NUMA-Domain View, Core View]

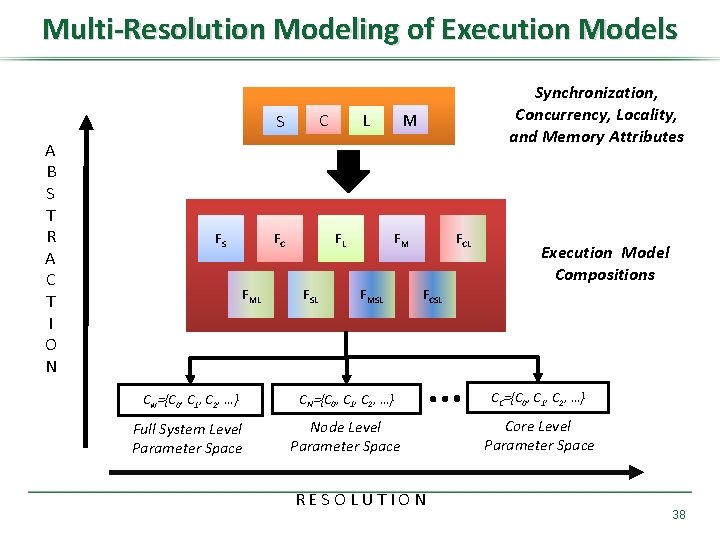

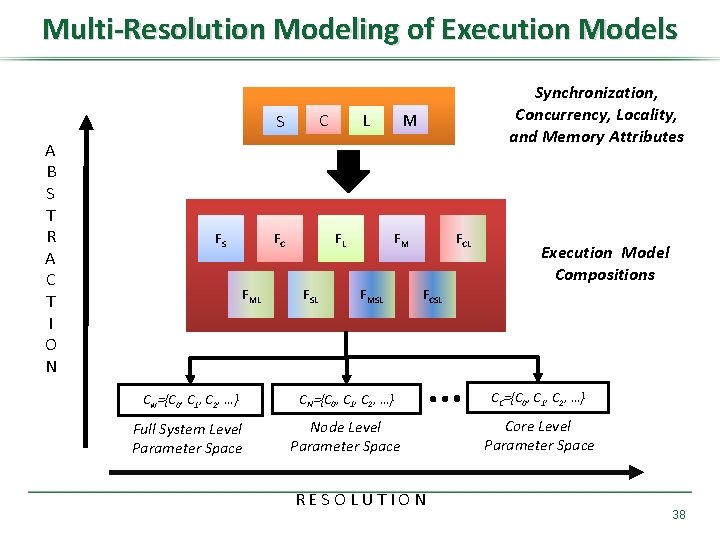

Multi-Resolution Modeling of Execution Models

[Figure: abstraction vs. resolution. Synchronization (S), Concurrency (C), Locality (L), and Memory (M) attributes; execution model compositions FS, FC, FM, FL, FML, FSL, FCL, FMSL, FCSL; full system level parameter space C_W = {C0, C1, C2, …}; node level parameter space C_N = {C0, C1, C2, …}; core level parameter space C_C = {C0, C1, C2, …}]

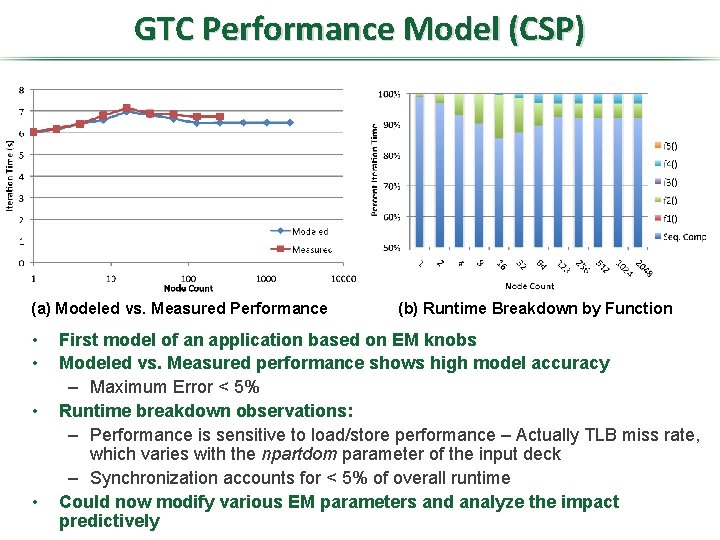

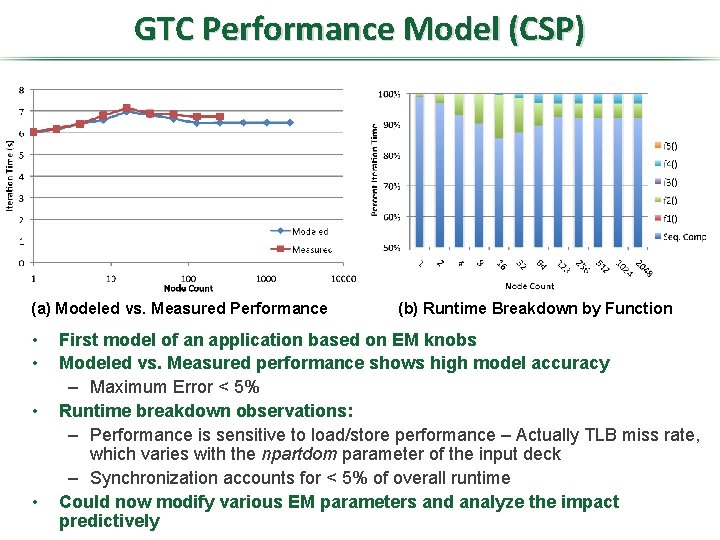

GTC Performance Model (CSP)

[Figure: (a) Modeled vs. Measured Performance; (b) Runtime Breakdown by Function]

• First model of an application based on EM knobs
• Modeled vs. measured performance shows high model accuracy
  – Maximum error < 5%
• Runtime breakdown observations:
  – Performance is sensitive to load/store performance
  – Actually TLB miss rate, which varies with the npartdom parameter of the input deck
  – Synchronization accounts for < 5% of overall runtime

Could now modify various EM parameters and analyze the impact predictively
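For reference, a "maximum error" figure of this kind is usually the largest relative deviation between modeled and measured runtimes. The Python sketch below shows that calculation; the function name and the runtime values are hypothetical and are not taken from the GTC model.

```python
def max_relative_error(modeled, measured):
    """Largest relative deviation of the model from the measurement."""
    return max(abs(m - t) / t for m, t in zip(modeled, measured))

# Hypothetical runtimes in seconds at three concurrencies (not data from the slides).
modeled = [10.2, 19.5, 41.0]
measured = [10.0, 20.0, 40.0]
error = max_relative_error(modeled, measured)   # 0.025, i.e. 2.5%
```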

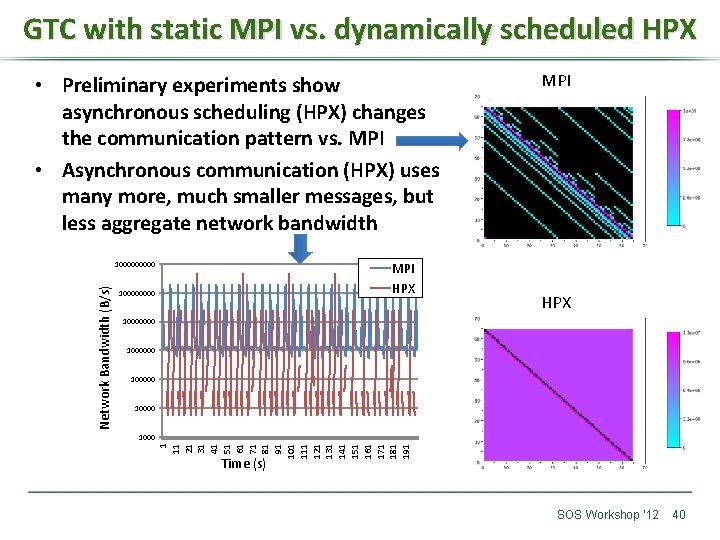

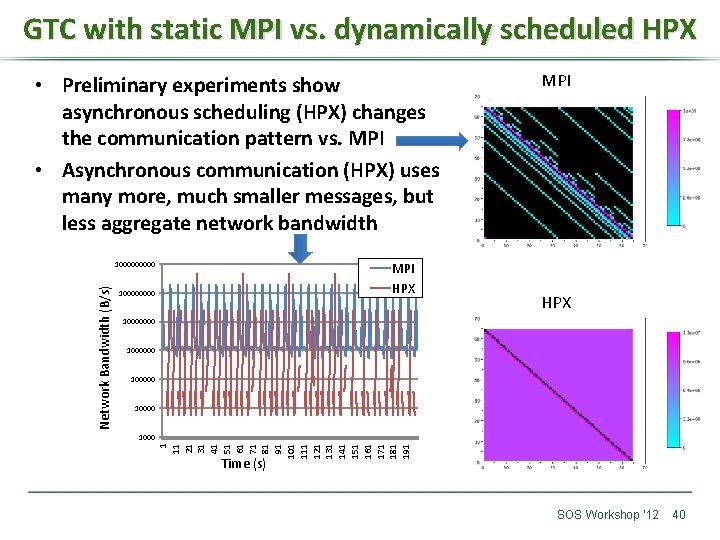

GTC with static MPI vs. dynamically scheduled HPX

• Preliminary experiments show asynchronous scheduling (HPX) changes the communication pattern vs. MPI
• Asynchronous communication (HPX) uses many more, much smaller messages, but less aggregate network bandwidth

[Figure: network bandwidth (B/s, log scale) vs. time (s) for MPI and HPX]

SOS Workshop ’12

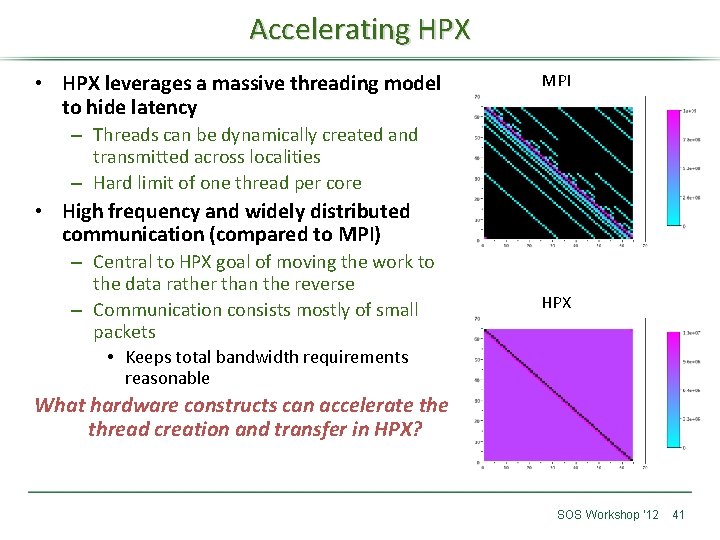

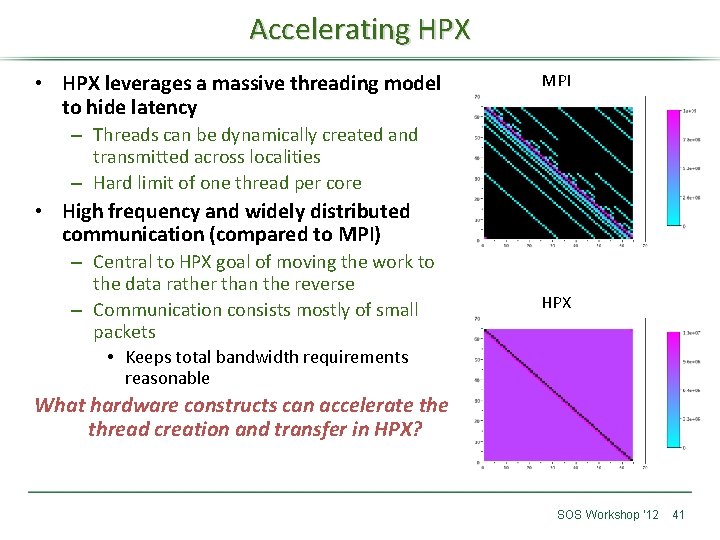

Accelerating HPX

• HPX leverages a massive threading model to hide latency
  – Threads can be dynamically created and transmitted across localities
  – Hard limit of one thread per core
• High frequency and widely distributed communication (compared to MPI)
  – Central to HPX goal of moving the work to the data rather than the reverse
  – Communication consists mostly of small packets
• Keeps total bandwidth requirements reasonable

What hardware constructs can accelerate thread creation and transfer in HPX?

[Figure: MPI and HPX panels]
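The latency-hiding argument can be illustrated with a textbook idealization, sketched here in Python. It assumes each thread alternates a fixed compute burst with a fixed remote-access latency and that switching between ready threads is free; none of these parameters come from HPX or from the slides, and `core_utilization` is a hypothetical name.

```python
def core_utilization(ready_threads, compute_time, latency):
    """Fraction of time a core is busy when threads overlap each other's latency."""
    return min(1.0, ready_threads * compute_time / (compute_time + latency))

single = core_utilization(1, 1.0, 9.0)    # 0.1: one thread mostly waits on the network
partial = core_utilization(5, 1.0, 9.0)   # 0.5: five threads hide half the latency
full = core_utilization(10, 1.0, 9.0)     # 1.0: enough threads to keep the core busy
```

In this model the number of ready threads needed to saturate a core grows with the ratio of latency to compute time, which is why cheap thread creation and transfer matter for the hardware question posed above.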

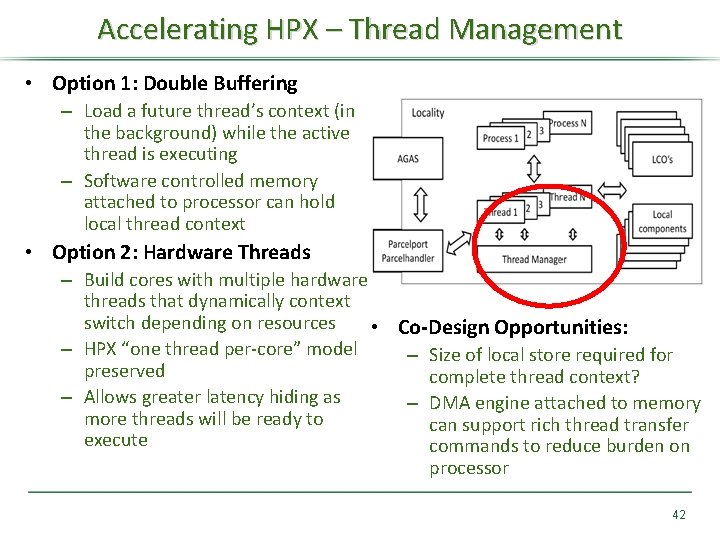

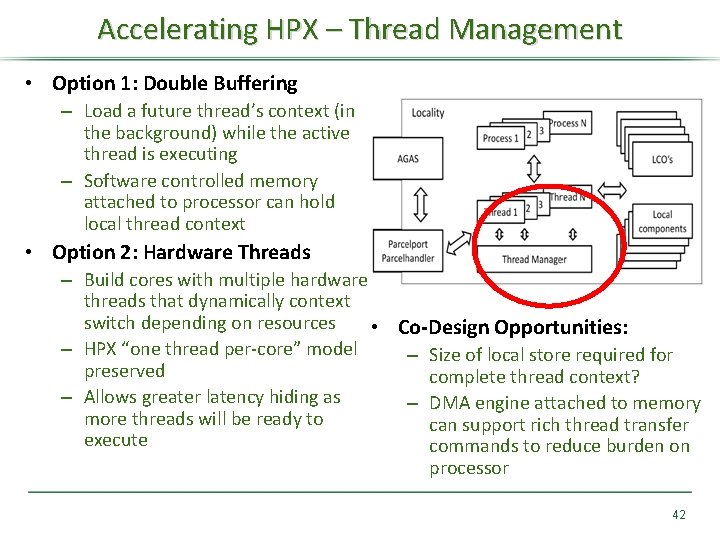

Accelerating HPX – Thread Management

• Option 1: Double Buffering
  – Load a future thread’s context (in the background) while the active thread is executing
  – Software controlled memory attached to processor can hold local thread context
• Option 2: Hardware Threads
  – Build cores with multiple hardware threads that dynamically context switch depending on resources
• Co-Design Opportunities:
  – HPX “one thread per-core” model
  – Allows greater latency hiding as more threads will be ready to execute
  – Size of local store required for preserved complete thread context?
  – DMA engine attached to memory can support rich thread transfer commands to reduce burden on processor
