- Slides: 40

QUALITY ASSURANCE OF ASSESSMENT

SANDRA KEMP

Essential Skills in Medical Education Assessment (ESMEA) Online 2017

2 Key questions

- What are the principles of ensuring your assessment works as intended?
- How do you ensure the quality of your assessment?

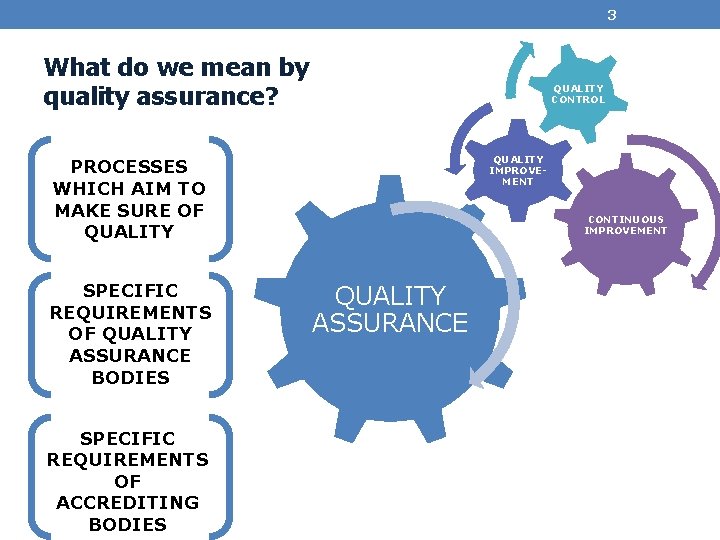

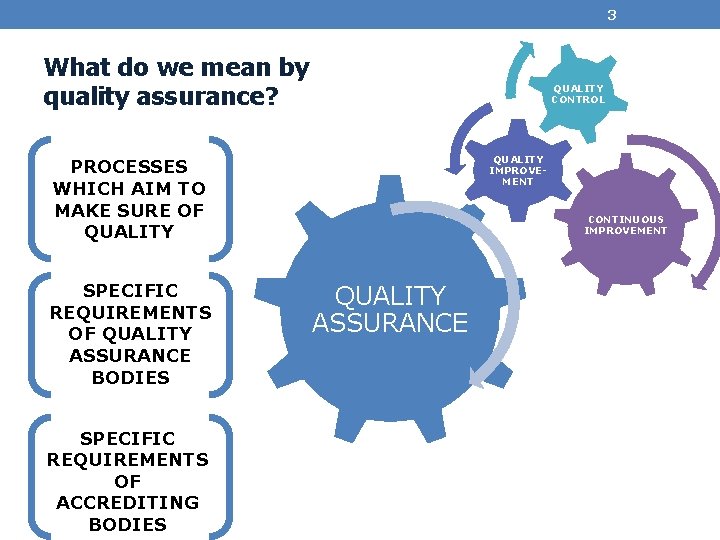

3 What do we mean by quality assurance?

QUALITY ASSURANCE:

- Quality control
- Quality improvement
- Processes which aim to make sure of quality
- Specific requirements of quality assurance bodies
- Specific requirements of accrediting bodies
- Continuous improvement

4 Let’s pause and hear from you… Why it matters… What happens if there is poor quality of assessment in training healthcare professionals (doctors, nurses…)?

5 Preparatory reading materials

For this module: AMEE ASPIRE award, ASPIRE Recognition of Excellence in Assessment in a Medical, Dental and Veterinary School.

Criterion 4, page 4: The assessment programme is subject to a rigorous and continuous quality control process.

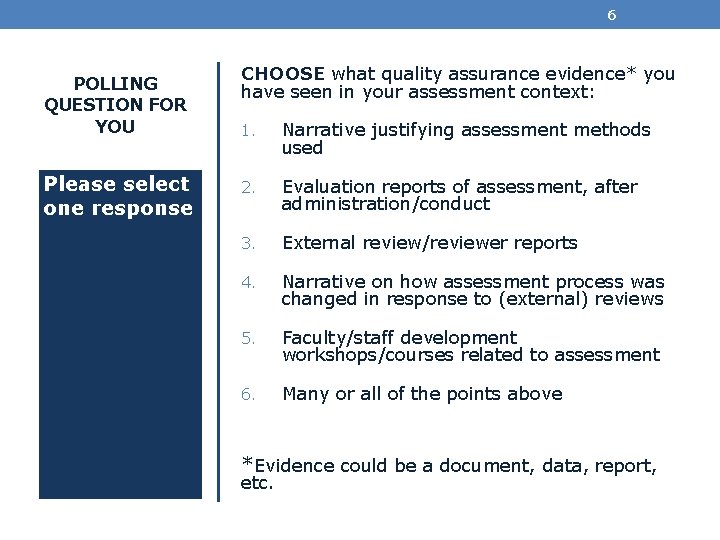

6 POLLING QUESTION FOR YOU

Please select one response. CHOOSE what quality assurance evidence* you have seen in your assessment context:

1. Narrative justifying assessment methods used
2. Evaluation reports of assessment, after administration/conduct
3. External review/reviewer reports
4. Narrative on how assessment process was changed in response to (external) reviews
5. Faculty/staff development workshops/courses related to assessment
6. Many or all of the points above

*Evidence could be a document, data, report, etc.

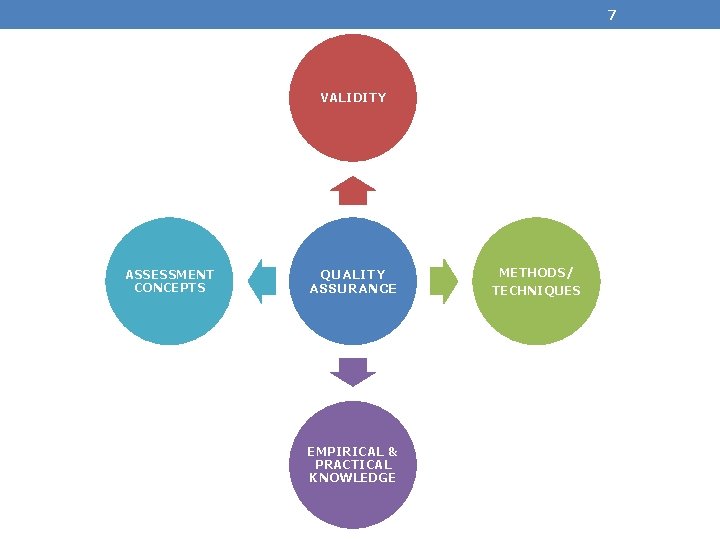

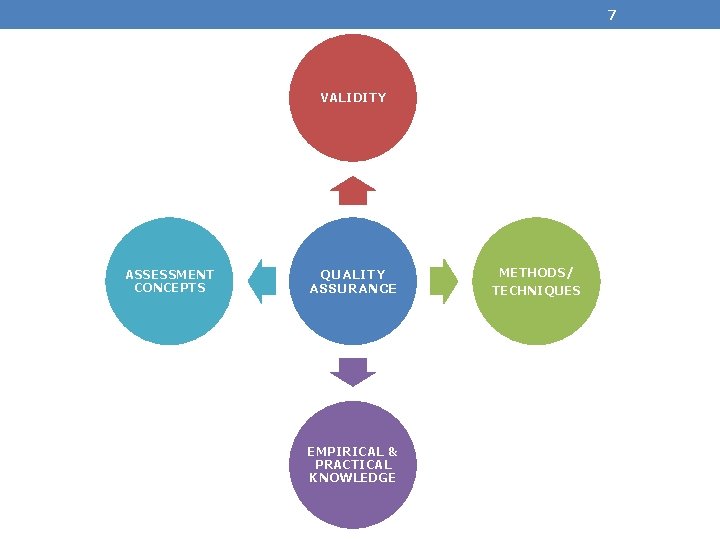

7 QUALITY ASSURANCE: VALIDITY, ASSESSMENT CONCEPTS, EMPIRICAL & PRACTICAL KNOWLEDGE, METHODS/TECHNIQUES

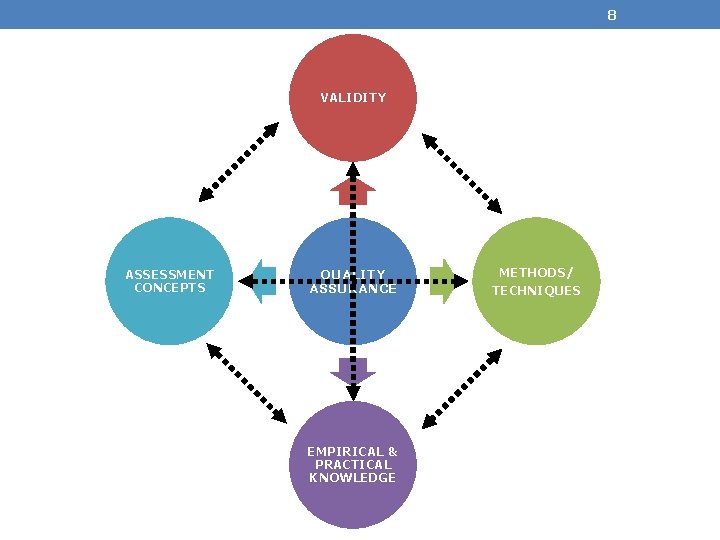

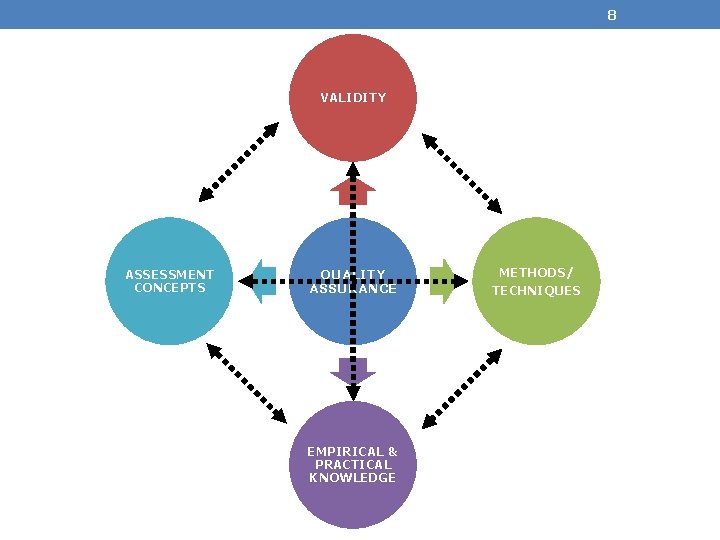

8 VALIDITY ASSESSMENT CONCEPTS QUALITY ASSURANCE EMPIRICAL & PRACTICAL KNOWLEDGE METHODS/ TECHNIQUES

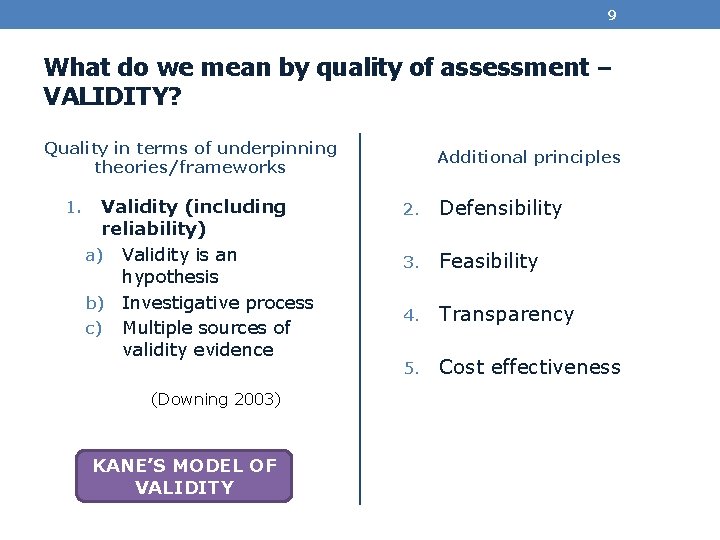

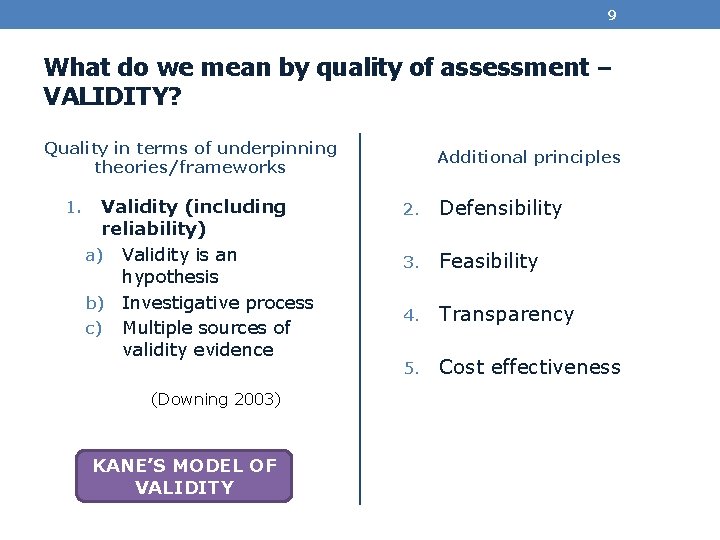

9 What do we mean by quality of assessment? VALIDITY

Quality in terms of underpinning theories/frameworks:

1. Validity (including reliability)
   a) Validity is a hypothesis
   b) Investigative process
   c) Multiple sources of validity evidence (Downing 2003)

KANE’S MODEL OF VALIDITY

Additional principles:

2. Defensibility
3. Feasibility
4. Transparency
5. Cost effectiveness

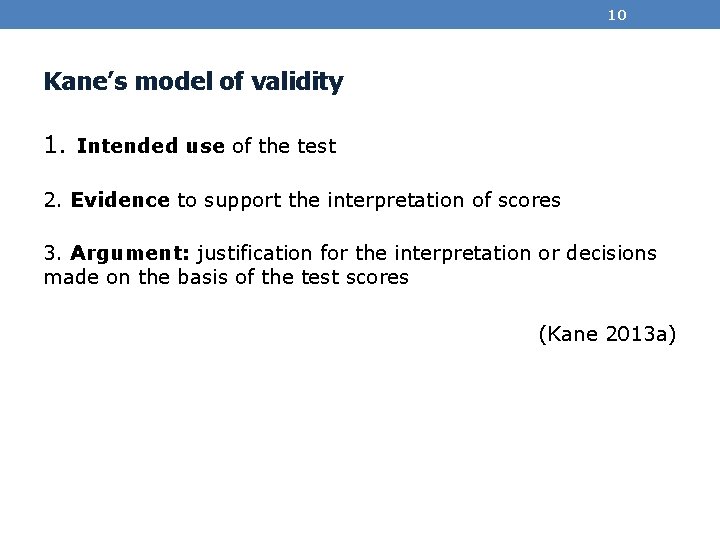

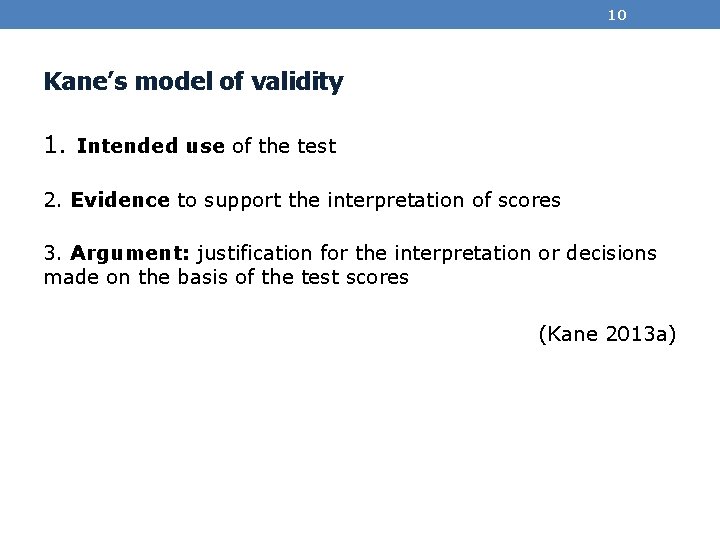

10 Kane’s model of validity

1. Intended use of the test
2. Evidence to support the interpretation of scores
3. Argument: justification for the interpretation or decisions made on the basis of the test scores

(Kane 2013a)

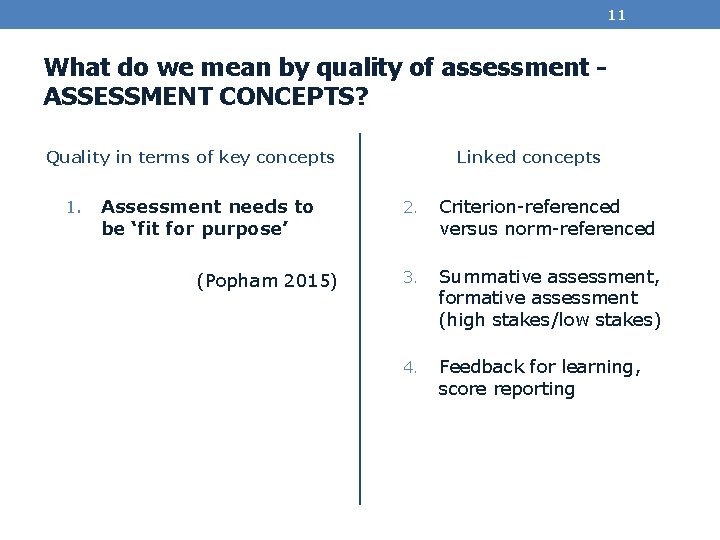

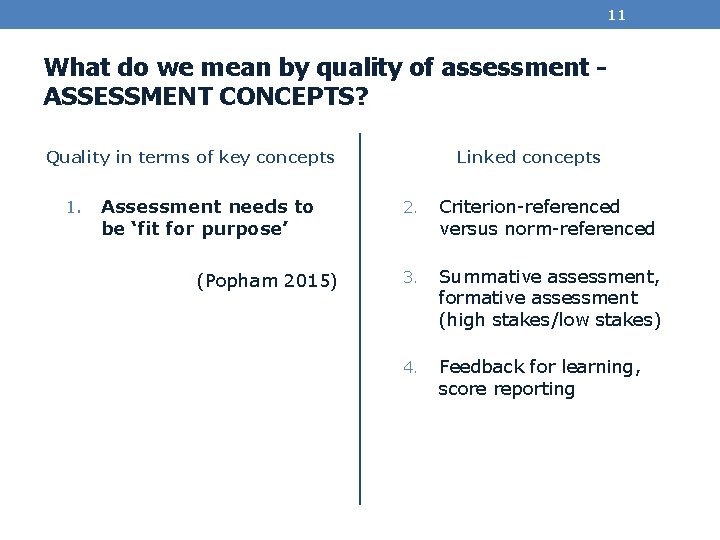

11 What do we mean by quality of assessment? ASSESSMENT CONCEPTS

Quality in terms of key concepts:

1. Assessment needs to be ‘fit for purpose’ (Popham 2015)

Linked concepts:

2. Criterion-referenced versus norm-referenced
3. Summative assessment, formative assessment (high stakes/low stakes)
4. Feedback for learning, score reporting

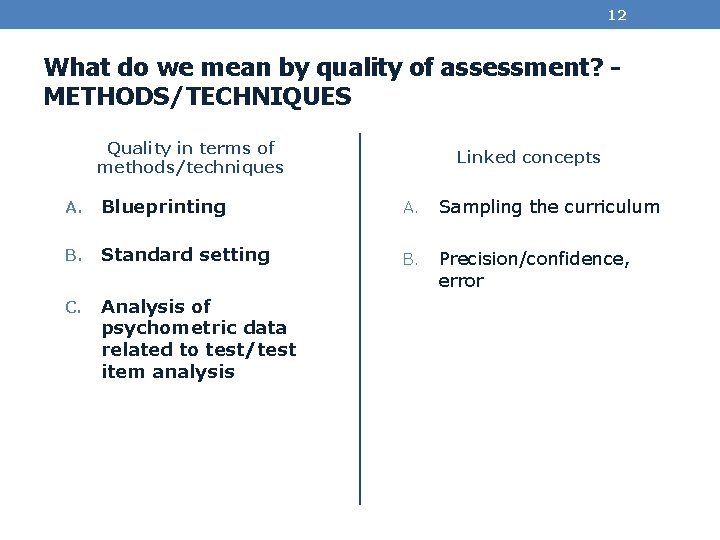

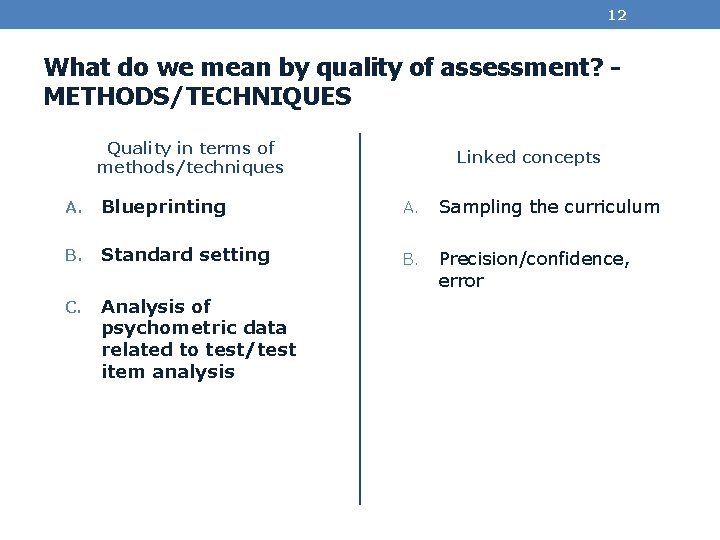

12 What do we mean by quality of assessment? METHODS/TECHNIQUES

Quality in terms of methods/techniques, with linked concepts:

- A. Blueprinting (linked concept: sampling the curriculum)
- B. Standard setting (linked concept: precision/confidence, error)
- C. Analysis of psychometric data related to test/test item analysis

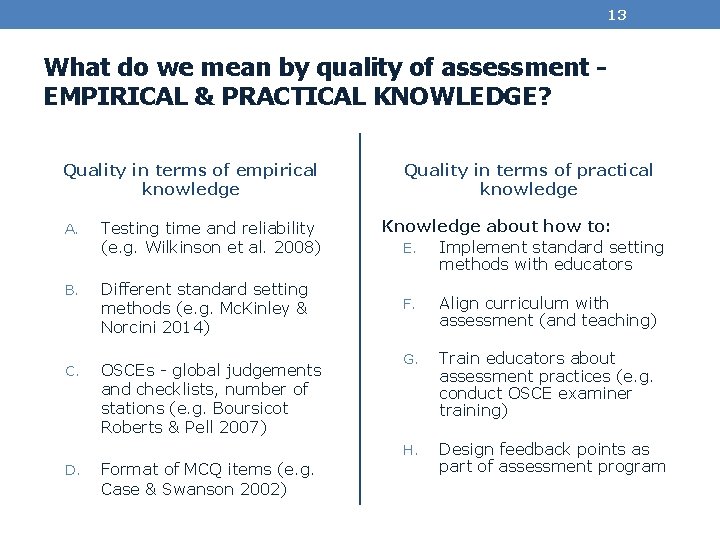

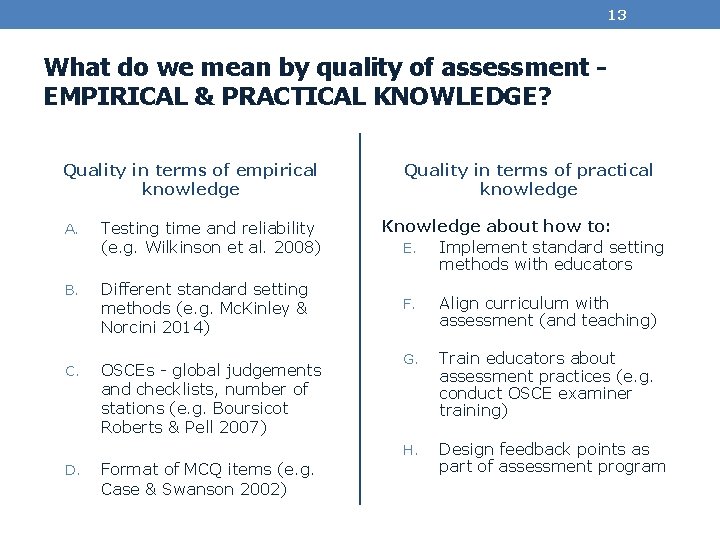

13 What do we mean by quality of assessment? EMPIRICAL & PRACTICAL KNOWLEDGE

Quality in terms of empirical knowledge:

- A. Testing time and reliability (e.g. Wilkinson et al. 2008)
- B. Different standard setting methods (e.g. McKinley & Norcini 2014)
- C. OSCEs: global judgements and checklists, number of stations (e.g. Boursicot, Roberts & Pell 2007)
- D. Format of MCQ items (e.g. Case & Swanson 2002)

Quality in terms of practical knowledge. Knowledge about how to:

- E. Implement standard setting methods with educators
- F. Align curriculum with assessment (and teaching)
- G. Train educators about assessment practices (e.g. conduct OSCE examiner training)
- H. Design feedback points as part of assessment program

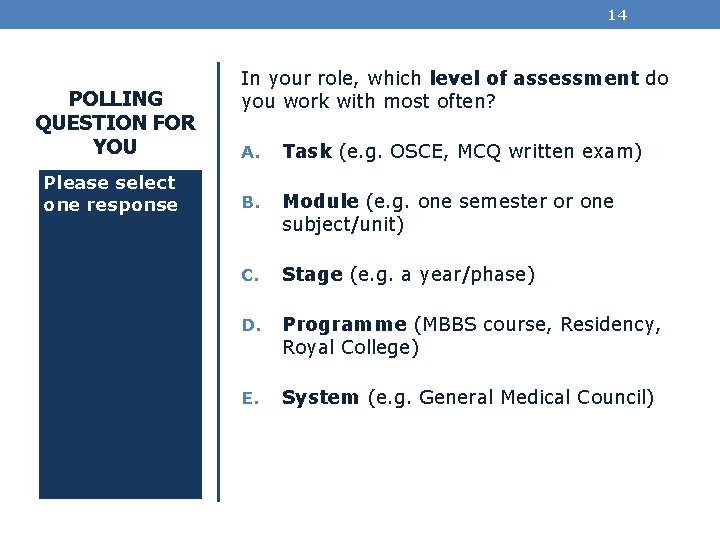

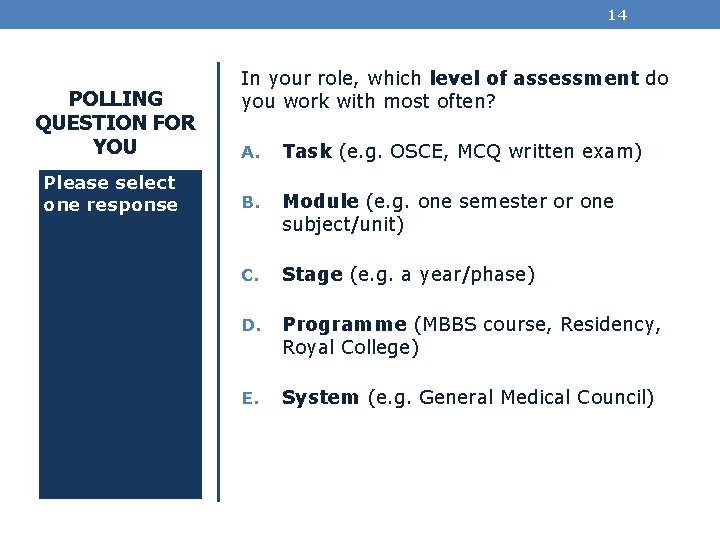

14 POLLING QUESTION FOR YOU

Please select one response. In your role, which level of assessment do you work with most often?

- A. Task (e.g. OSCE, MCQ written exam)
- B. Module (e.g. one semester or one subject/unit)
- C. Stage (e.g. a year/phase)
- D. Programme (MBBS course, Residency, Royal College)
- E. System (e.g. General Medical Council)

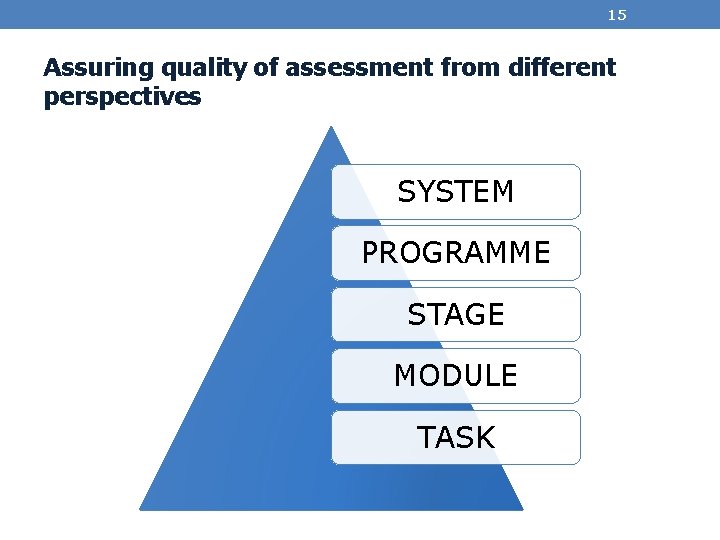

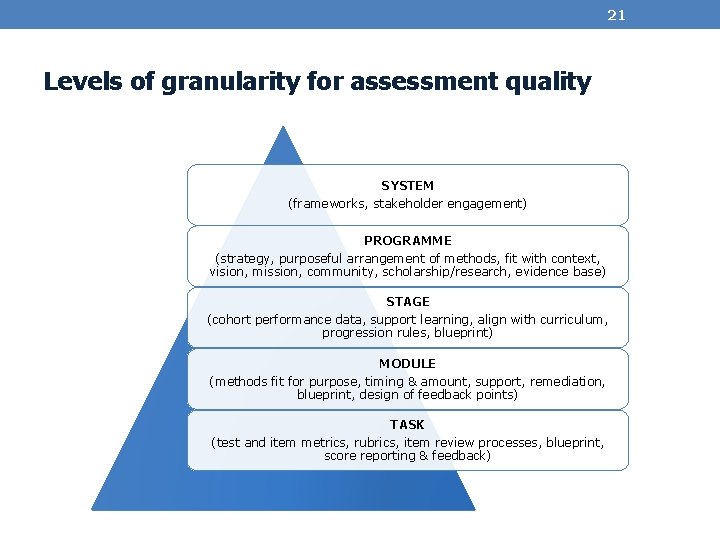

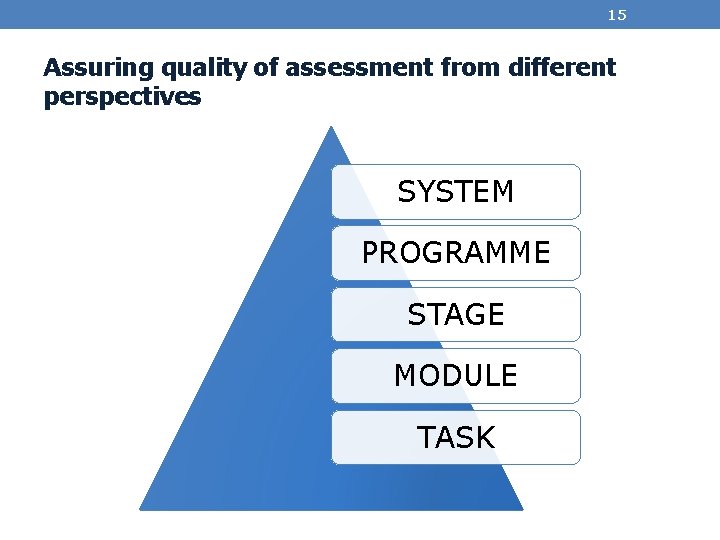

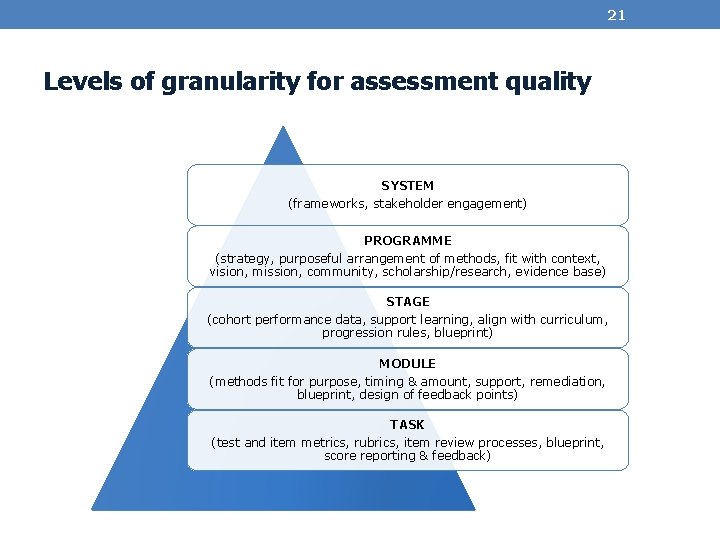

15 Assuring quality of assessment from different perspectives SYSTEM PROGRAMME STAGE MODULE TASK

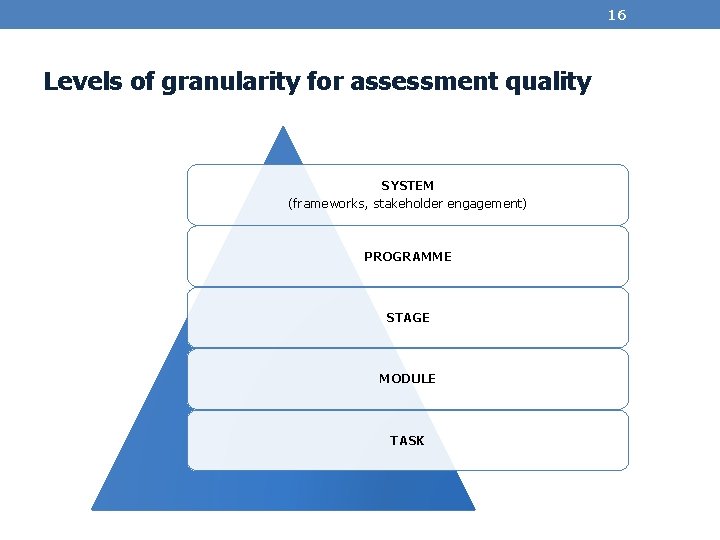

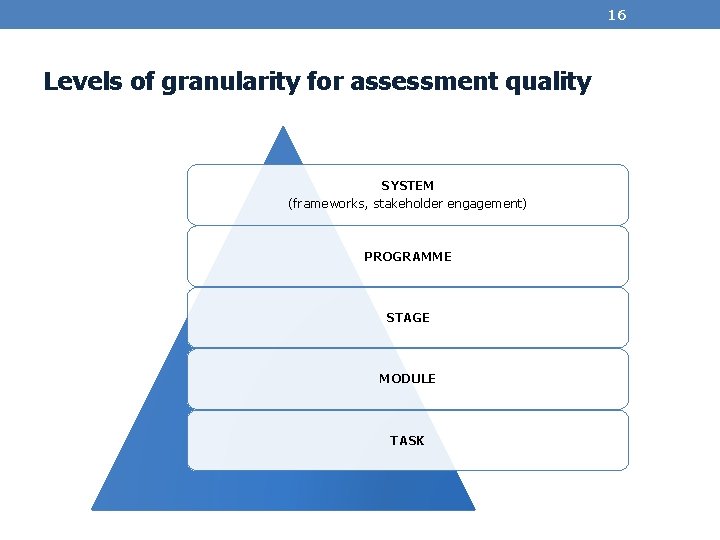

16 Levels of granularity for assessment quality SYSTEM (frameworks, stakeholder engagement) PROGRAMME STAGE MODULE TASK

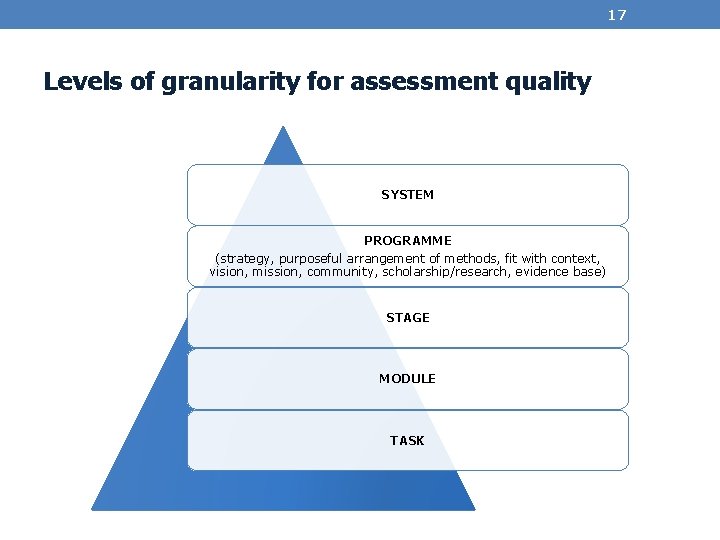

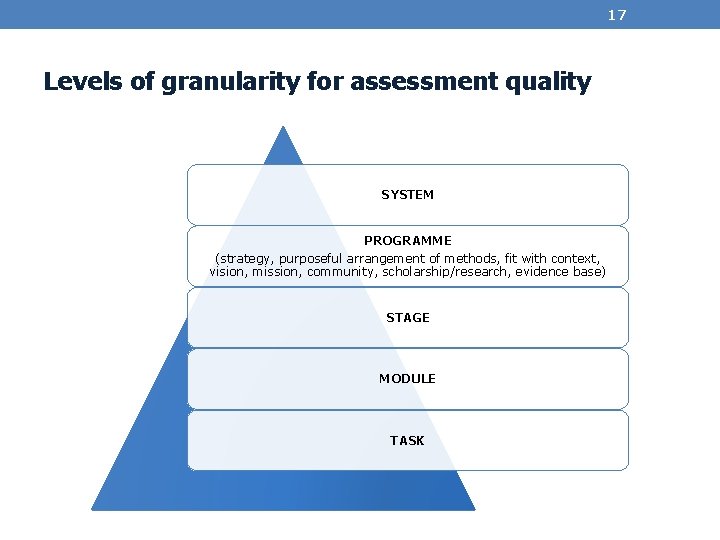

17 Levels of granularity for assessment quality SYSTEM PROGRAMME (strategy, purposeful arrangement of methods, fit with context, vision, mission, community, scholarship/research, evidence base) STAGE MODULE TASK

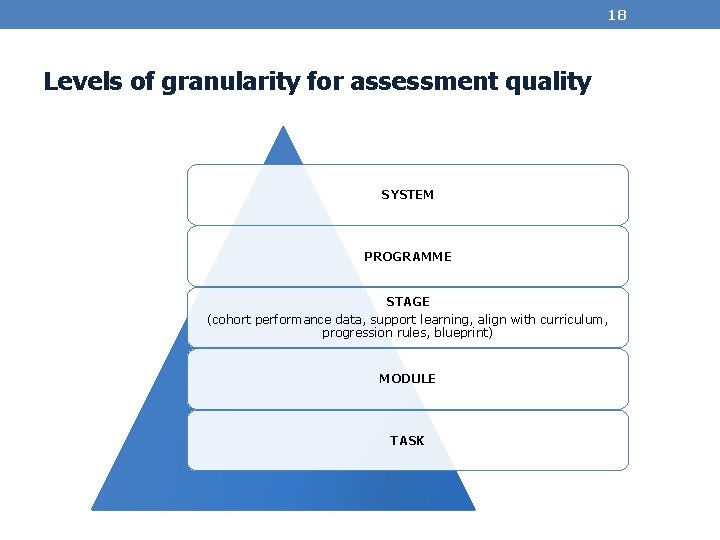

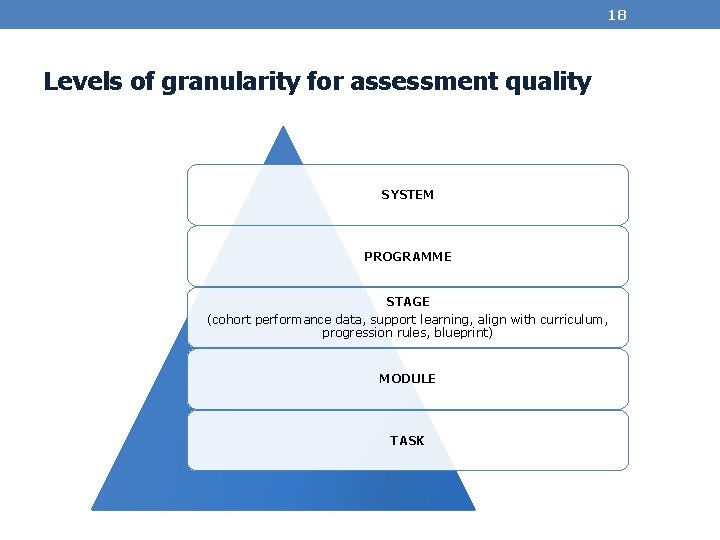

18 Levels of granularity for assessment quality SYSTEM PROGRAMME STAGE (cohort performance data, support learning, align with curriculum, progression rules, blueprint) MODULE TASK

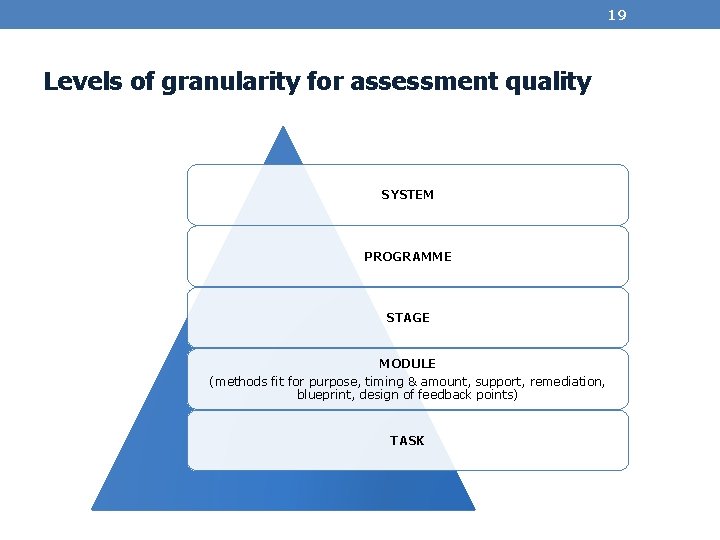

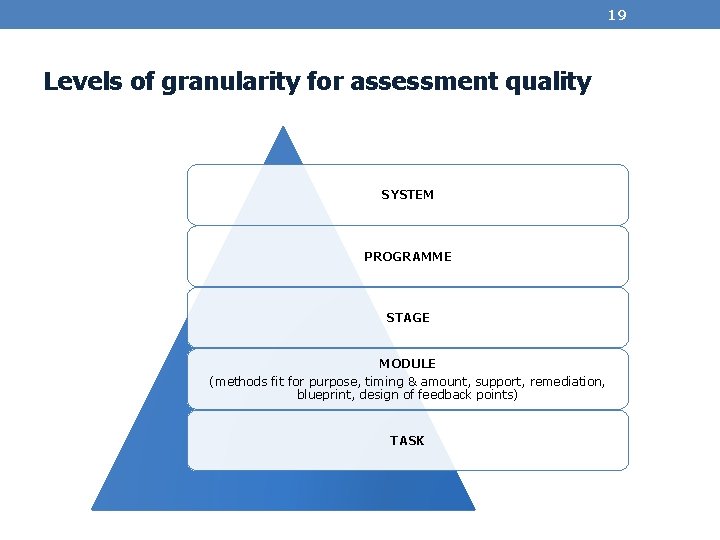

19 Levels of granularity for assessment quality SYSTEM PROGRAMME STAGE MODULE (methods fit for purpose, timing & amount, support, remediation, blueprint, design of feedback points) TASK

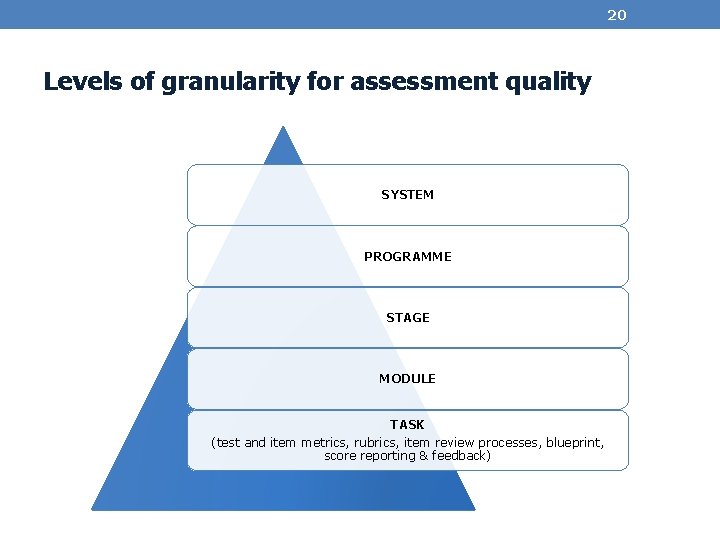

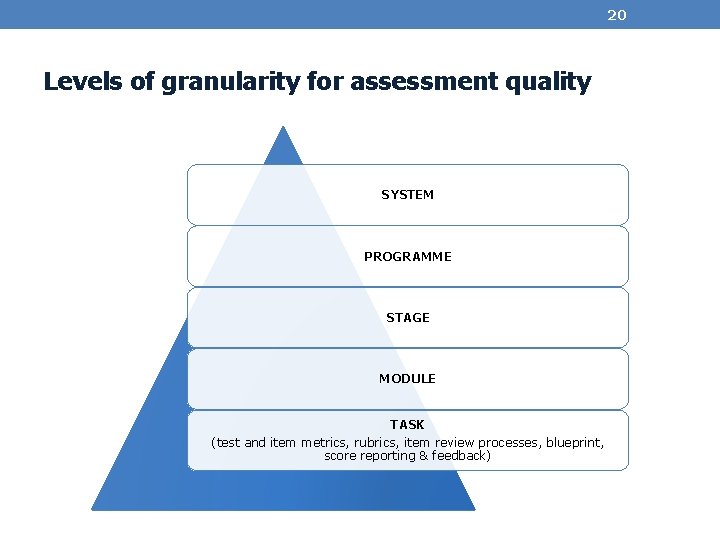

20 Levels of granularity for assessment quality SYSTEM PROGRAMME STAGE MODULE TASK (test and item metrics, rubrics, item review processes, blueprint, score reporting & feedback)

21 Levels of granularity for assessment quality

- SYSTEM (frameworks, stakeholder engagement)
- PROGRAMME (strategy, purposeful arrangement of methods, fit with context, vision, mission, community, scholarship/research, evidence base)
- STAGE (cohort performance data, support learning, align with curriculum, progression rules, blueprint)
- MODULE (methods fit for purpose, timing & amount, support, remediation, blueprint, design of feedback points)
- TASK (test and item metrics, rubrics, item review processes, blueprint, score reporting & feedback)

22 QA is a process

1. A series of activities as part of QA process
2. Each activity plays a different part to contribute to quality assurance
3. The series of activities as a whole reinforces the robustness of QA
4. Series of activities will change depending on level of assessment you are focused on:
   - Task/Module/Stage/Programme/System

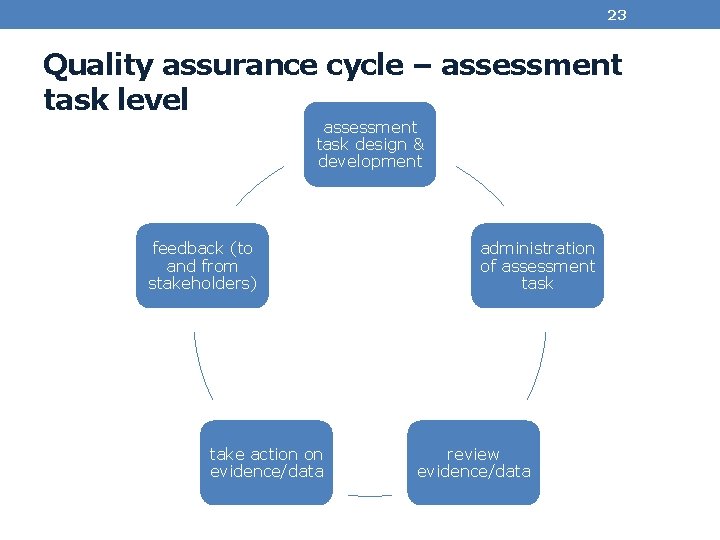

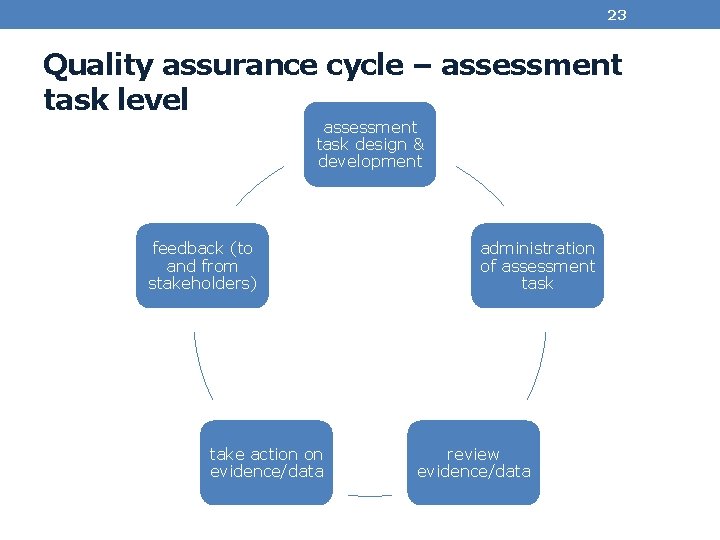

23 Quality assurance cycle: assessment task level

1. Assessment task design & development
2. Administration of assessment task
3. Review evidence/data
4. Take action on evidence/data
5. Feedback (to and from stakeholders), then back to design & development

24 Let’s pause and hear from you… When you are designing an assessment task, what will help with the quality of that assessment?

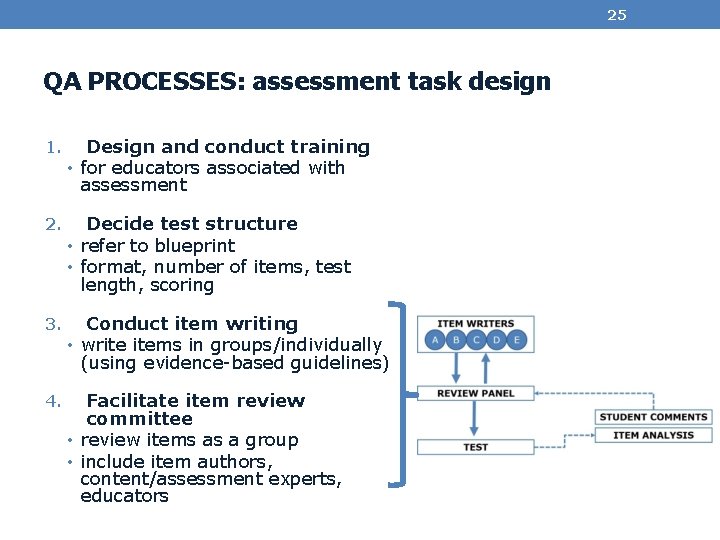

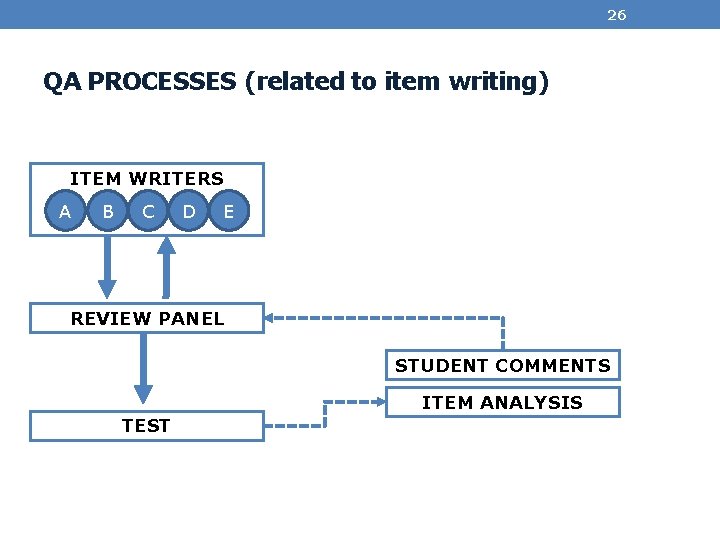

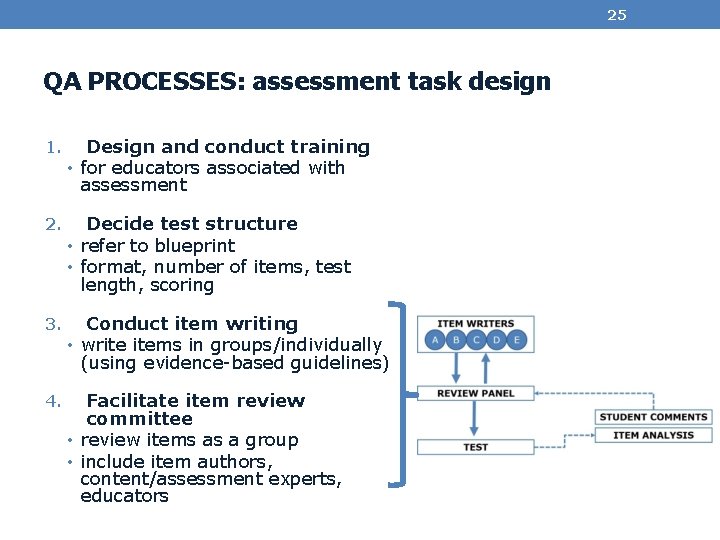

25 QA PROCESSES: assessment task design

1. Design and conduct training
   - for educators associated with assessment
2. Decide test structure
   - refer to blueprint
   - format, number of items, test length, scoring
3. Conduct item writing
   - write items in groups/individually (using evidence-based guidelines)
4. Facilitate item review committee
   - review items as a group
   - include item authors, content/assessment experts, educators

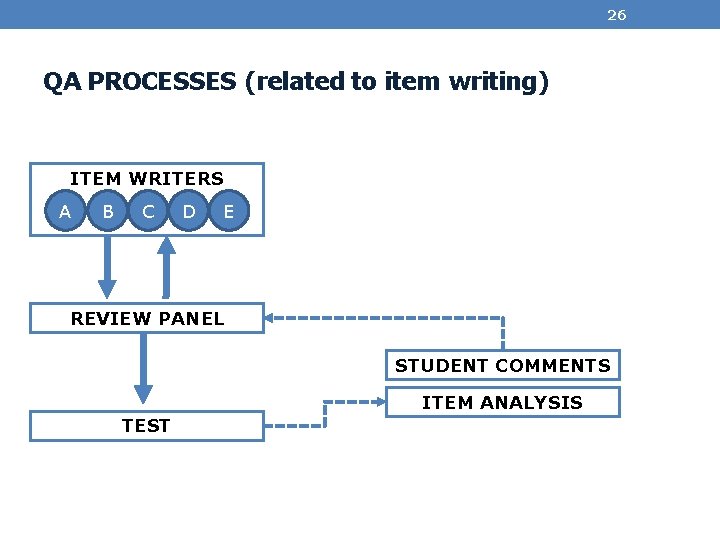

26 QA PROCESSES (related to item writing)

Diagram elements: item writers (A, B, C, D, E), review panel, test, student comments, item analysis.

27 Let’s pause and hear from you… What kind of information will help you think about the quality of an assessment task?

28 QA PROCESSES: what evidence/data is important

- Generate item and test statistics (psychometric data)
  - Convene Item Review Panel
  - Analyse test and item statistics, alongside exam items and correct answers
  - Make decisions about removing/keeping poorly performing items
  - Record keeping of all test and item data (database storage)
- Information (feedback) from stakeholders
  - Review and analyse qualitative feedback from students, examiners, etc.
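One way to prepare for the Item Review Panel is to pre-screen item statistics so that the panel's time goes to the items most likely to need a decision. The sketch below is illustrative only: `ItemStats`, `flag_for_review`, and all threshold values are assumptions made for this example, not figures from this module. The keep/remove decision itself stays with the panel, which reads the flags alongside the item text and the answer key.

```python
from dataclasses import dataclass


@dataclass
class ItemStats:
    """Hypothetical record of the statistics kept for one item."""
    item_id: str
    difficulty: float      # proportion of candidates answering correctly
    discrimination: float  # e.g. corrected item-total correlation


def flag_for_review(items, min_difficulty=0.20, max_difficulty=0.90,
                    min_discrimination=0.15):
    """Return {item_id: [reasons]} for items the panel may want to discuss.

    The default thresholds are placeholders; each institution would set
    its own in line with its assessment policy.
    """
    flagged = {}
    for item in items:
        reasons = []
        if item.difficulty < min_difficulty:
            reasons.append("very hard")
        if item.difficulty > max_difficulty:
            reasons.append("very easy")
        if item.discrimination < min_discrimination:
            reasons.append("low discrimination")
        if reasons:
            flagged[item.item_id] = reasons
    return flagged


# Example with made-up statistics for three items.
example_items = [
    ItemStats("Q1", difficulty=0.55, discrimination=0.35),
    ItemStats("Q2", difficulty=0.10, discrimination=0.30),
    ItemStats("Q3", difficulty=0.95, discrimination=0.05),
]
for item_id, reasons in flag_for_review(example_items).items():
    print(item_id, "->", ", ".join(reasons))
```

Storing the flags together with the panel's decision for each item would also support the record-keeping point above.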

29 QA PROCESSES: action on evidence

What action will you take to improve the assessment task/assessment program?

1. Evidence from psychometric data
   - Item re-writing with reference to item statistics
2. Feedback from stakeholders
   - Change the item review process
3. External review
   - More/different training needed

30 QA PROCESSES: communication to stakeholders

Communicate information to stakeholders related to assessment:

- Educators: curriculum and teaching
  - Did assessment highlight gaps in student understanding?
  - Does performance suggest need to shift emphasis in curriculum? Or redesign of outcomes? Or re-focus on a complex concept?
- Item Authors: item writing quality
  - Which items need to be re-written?
  - Which items performed unexpectedly?
  - Is further training in item-writing required?

31 QA PROCESSES: communication to stakeholders

Communicate information to stakeholders related to assessment:

- Students: post-assessment action
  - Do students need to be updated about what has been done in response to their feedback?
  - Does an aspect of the assessment need clarification with students?
- Accrediting bodies & leadership: all steps taken to assure quality of assessment task
  - What is the level of confidence that there are sufficient processes to assure quality?
  - Is the documentation of all the processes sufficient for communicating to accrediting bodies?

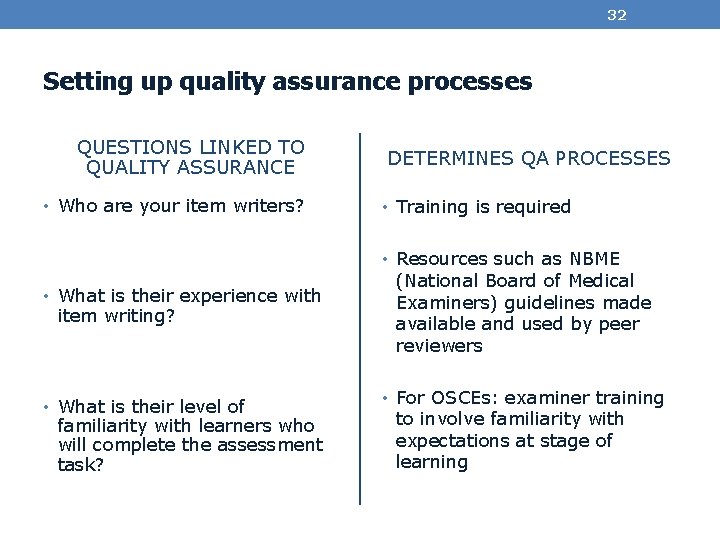

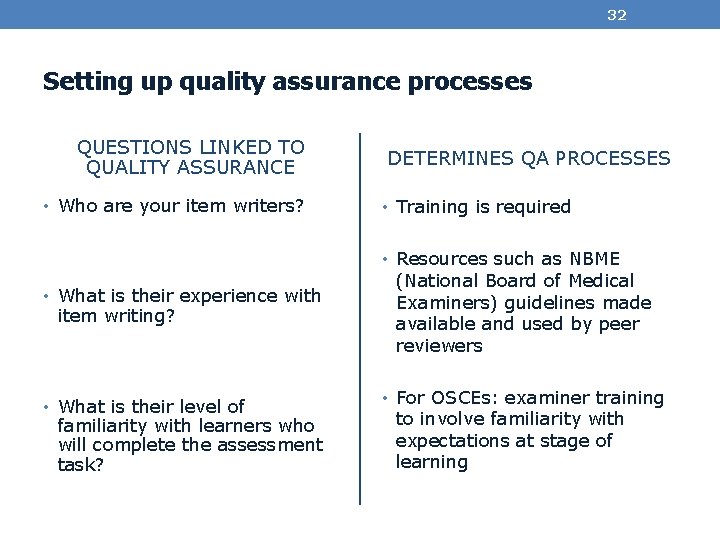

32 Setting up quality assurance processes

QUESTIONS LINKED TO QUALITY ASSURANCE

- Who are your item writers?
- What is their experience with item writing?
- What is their level of familiarity with learners who will complete the assessment task?

DETERMINES QA PROCESSES

- Training is required
- Resources such as NBME (National Board of Medical Examiners) guidelines made available and used by peer reviewers
- For OSCEs: examiner training to involve familiarity with expectations at stage of learning

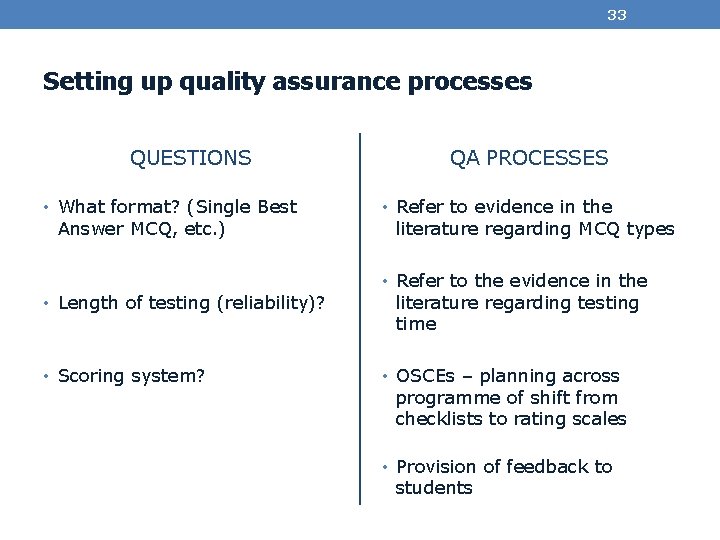

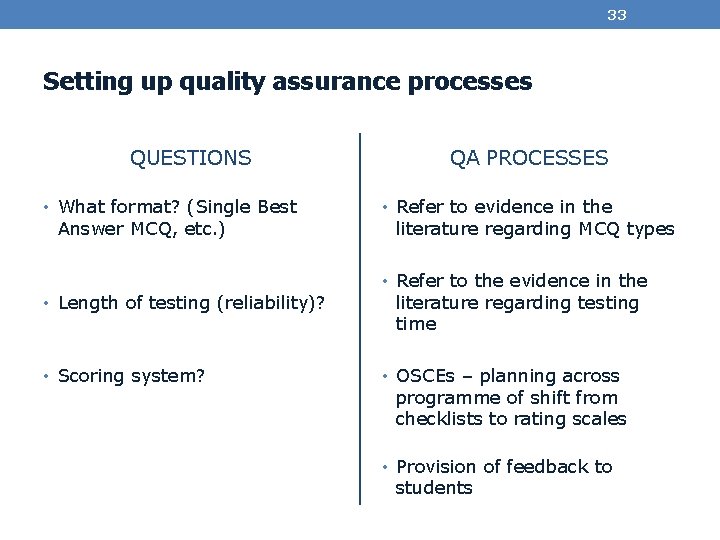

33 Setting up quality assurance processes

QUESTIONS

- What format? (Single Best Answer MCQ, etc.)
- Length of testing (reliability)?
- Scoring system?

QA PROCESSES

- Refer to evidence in the literature regarding MCQ types
- Refer to the evidence in the literature regarding testing time
- OSCEs: planning across programme of shift from checklists to rating scales
- Provision of feedback to students
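The link between length of testing and reliability is often approximated with the Spearman-Brown prediction formula from classical test theory. The slides do not name this formula; it is offered here as a rough planning aid that assumes any added testing time consists of items or stations comparable to the existing ones. The function names are hypothetical.

```python
def spearman_brown(reliability, length_factor):
    """Predicted reliability if the test is lengthened by `length_factor`.

    `length_factor` of 2.0 means twice as many comparable items/stations.
    Assumes 0 < reliability < 1.
    """
    return (length_factor * reliability) / (1 + (length_factor - 1) * reliability)


def length_factor_needed(current, target):
    """How many times longer the test must be to reach `target` reliability.

    Assumes 0 < current < 1 and 0 < target < 1.
    """
    return (target * (1 - current)) / (current * (1 - target))


# Example with made-up numbers: a test with reliability 0.60.
print(round(spearman_brown(0.60, 2.0), 2))         # doubling the length
print(round(length_factor_needed(0.60, 0.80), 2))  # factor needed to reach 0.80
```

A prediction like this is only a starting point; the published evidence on testing time for each format remains the reference for real decisions.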

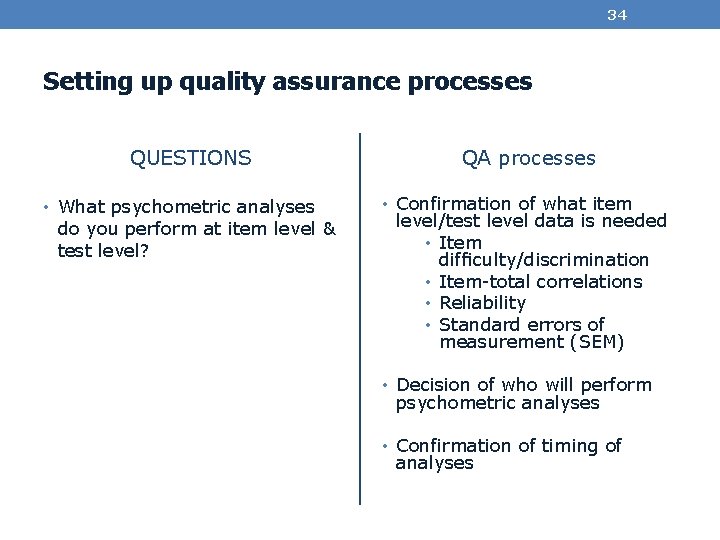

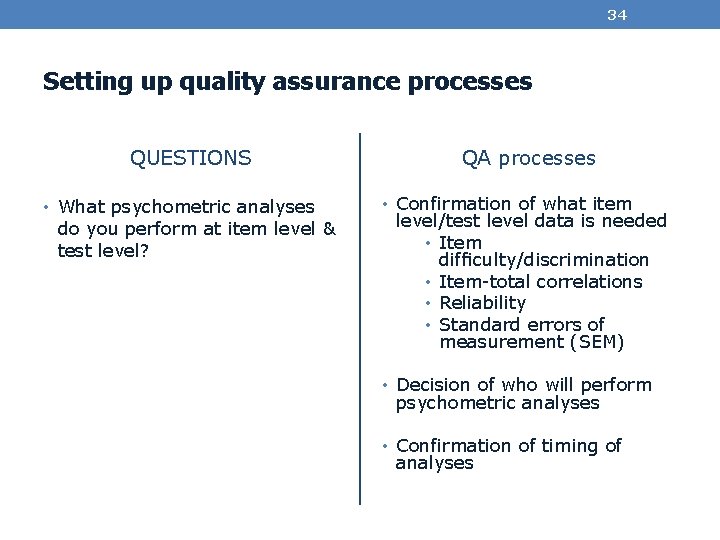

34 Setting up quality assurance processes

QUESTIONS

- What psychometric analyses do you perform at item level & test level?

QA PROCESSES

- Confirmation of what item level/test level data is needed
  - Item difficulty/discrimination
  - Item-total correlations
  - Reliability
  - Standard errors of measurement (SEM)
- Decision of who will perform psychometric analyses
- Confirmation of timing of analyses
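The item-level and test-level data listed above can be sketched for a small test scored 0/1. This is a minimal illustration under stated assumptions, not a prescribed method: the function names are hypothetical, reliability is estimated with Cronbach's alpha (one of several possible coefficients), population variances are used throughout, and the SEM is taken as the standard deviation of total scores multiplied by the square root of one minus reliability.

```python
from math import sqrt
from statistics import mean, pstdev, pvariance


def _pearson(x, y):
    """Pearson correlation; returns NaN if either variable is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else float("nan")


def item_difficulty(responses):
    """Proportion of candidates answering each item correctly."""
    return [mean(row[j] for row in responses) for j in range(len(responses[0]))]


def corrected_item_total(responses):
    """Correlation of each item with the total score of the other items."""
    totals = [sum(row) for row in responses]
    result = []
    for j in range(len(responses[0])):
        item = [row[j] for row in responses]
        rest = [t - i for t, i in zip(totals, item)]
        result.append(_pearson(item, rest))
    return result


def cronbach_alpha(responses):
    """Cronbach's alpha; needs at least 2 items and non-constant totals."""
    k = len(responses[0])
    item_vars = [pvariance([row[j] for row in responses]) for j in range(k)]
    total_var = pvariance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def standard_error_of_measurement(responses):
    """SEM in raw-score units: SD of totals * sqrt(1 - reliability)."""
    totals = [sum(row) for row in responses]
    return pstdev(totals) * sqrt(1 - cronbach_alpha(responses))


# Made-up data: rows are candidates, columns are items (1 = correct).
example = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
]
print("difficulty:", [round(p, 2) for p in item_difficulty(example)])
print("item-total:", [round(r, 2) for r in corrected_item_total(example)])
print("alpha:", round(cronbach_alpha(example), 2))
print("SEM:", round(standard_error_of_measurement(example), 2))
```

Real cohorts are far larger than six candidates, and many teams would run these analyses in dedicated psychometric software. The sketch only shows what each listed statistic measures.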

35 Let’s pause and hear from you… What is one example of a QA process that you do, to support the quality of assessment?

36 Return to the key questions

- What are the principles of ensuring your assessment works as intended?
- How do you ensure the quality of your assessment?

QUALITY ASSURANCE: VALIDITY, ASSESSMENT CONCEPTS, EMPIRICAL & PRACTICAL KNOWLEDGE, METHODS/TECHNIQUES

37 Your questions?

38 Where to now?

Assignment for ESMEA

A reminder: An AMEE-ESME Certificate in Medical Education Assessment is awarded on the basis of satisfactory participation in the six modules and completion of an end-of-course assignment.

39 References

- AMEE. ASPIRE-to-Excellence Award, Criteria for Excellence in Assessment. Accessed Dec 2016. http://www.aspire-to-excellence.org/Areas+of+Excellence/
- Boursicot KAM, Roberts TE, Pell G. Using borderline methods to compare passing standards for OSCEs at graduation across three medical schools. Medical Education. 2007; 41(11): 1024-31.
- Case S, Swanson D. Constructing written test questions for the basic and clinical sciences. 3rd ed. National Board of Medical Examiners, 2002.
- Downing SM, Lieska NG, Raible MD. Establishing passing standards for classroom achievement tests in medical education: A comparative study of four methods. Academic Medicine. 2003; 78(10).
- Kane MT. Validating the interpretations and uses of scores. Journal of Educational Measurement. 2013a; 50(1): 1-73.
- Kane MT. The argument-based approach to validation. School Psychology Review. 2013b; 42(4): 448-457.
- McKinley DW, Norcini JJ. How to set standards on performance-based examinations: AMEE Guide No. 85. Medical Teacher. 2014; 22: 120-30.
- Popham WJ. Robert L. Linn Distinguished Address Award: American Educational Research Association. 2015.
- Wilkinson TJ, Campbell PJ, Judd SJ. Reliability of the long case. Medical Education. 2008; 42(9): 887-93.

40 THANK YOU

CONTACT DETAILS:

Sandra Joy Kemp
Director, Learning and Teaching
Associate Professor
Curtin Medical School, Curtin University
Perth, Western Australia
Email: sandra.kemp@curtin.edu.au