Pursuing Faster I/O in COSMO


Pursuing Faster I/O in COSMO. POMPA Workshop, May 3rd 2010

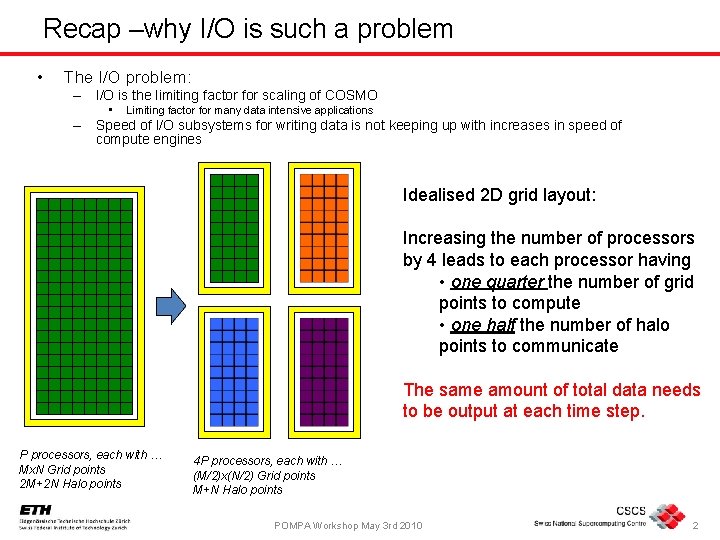

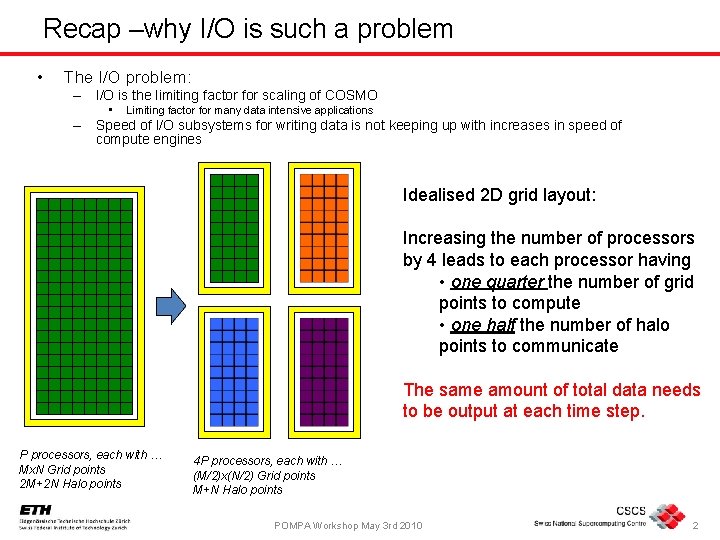

Recap: why I/O is such a problem

- The I/O problem:
  - I/O is the limiting factor for scaling of COSMO
    - Limiting factor for many data-intensive applications
  - Speed of I/O subsystems for writing data is not keeping up with increases in speed of compute engines

Idealised 2D grid layout: increasing the number of processors by a factor of 4 leads to each processor having

- one quarter the number of grid points to compute
- one half the number of halo points to communicate

The same amount of total data needs to be output at each time step.

- P processors, each with M x N grid points and 2M+2N halo points
- 4P processors, each with (M/2) x (N/2) grid points and M+N halo points
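The arithmetic of the idealised layout can be checked with a short sketch. The global grid size (1200 x 800) and the process counts are hypothetical values chosen only for illustration, and the halo is assumed to be one point wide, matching the 2M+2N count on the slide.

```python
def per_process_load(global_m, global_n, procs_x, procs_y):
    """Grid and halo points per process for an idealised 2D decomposition.

    Assumes the global grid divides evenly and the halo is one point wide,
    so a local M x N tile has 2M + 2N halo points.
    """
    m = global_m // procs_x
    n = global_n // procs_y
    return {
        "grid_points": m * n,
        "halo_points": 2 * m + 2 * n,
        "total_output_points": m * n * procs_x * procs_y,
    }


# Hypothetical 1200 x 800 global grid on P = 16 and 4P = 64 processes.
base = per_process_load(1200, 800, 4, 4)
quad = per_process_load(1200, 800, 8, 8)
print(base)
print(quad)
```

With four times as many processes, the grid points per process drop to a quarter and the halo points only to a half, while the total number of points to be written stays the same.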

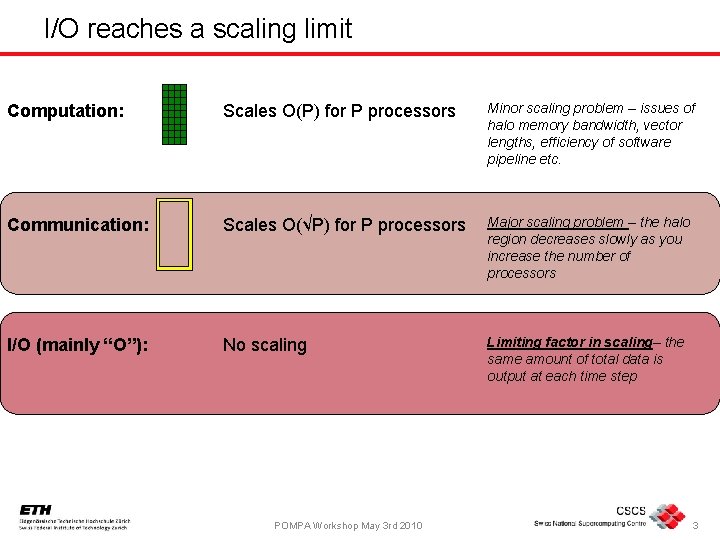

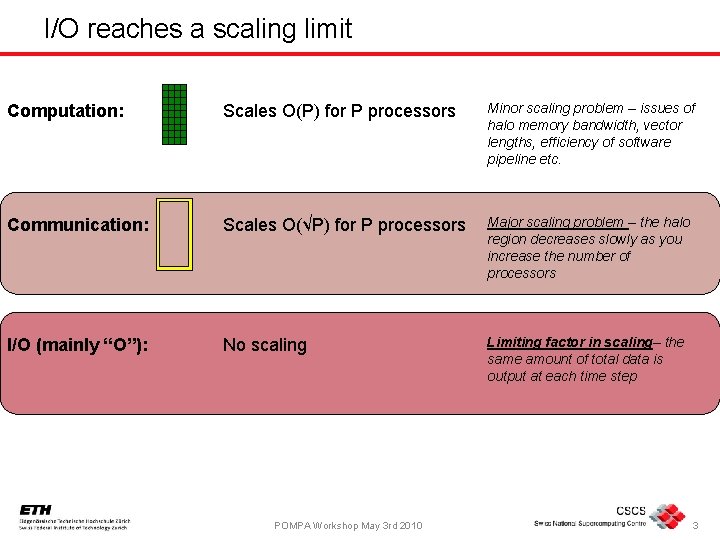

I/O reaches a scaling limit

- Computation: scales O(P) for P processors
  - Minor scaling problem: issues of halo memory bandwidth, vector lengths, efficiency of software pipeline etc.
- Communication: scales O(√P) for P processors
  - Major scaling problem: the halo region decreases slowly as you increase the number of processors
- I/O (mainly “O”): no scaling
  - Limiting factor in scaling: the same amount of total data is output at each time step
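A toy timing model illustrates why the non-scaling term ends up dominating. It reads the O(P) and O(√P) figures above as speedups, which is an interpretation, and the three cost constants are hypothetical, not measurements from COSMO.

```python
import math


def step_time(p, t_comp=100.0, t_comm=10.0, t_io=1.0):
    """Idealised time per output step on p processes.

    Computation speeds up by P, communication by sqrt(P), I/O not at all.
    All three constants are made-up units for illustration only.
    """
    return t_comp / p + t_comm / math.sqrt(p) + t_io


def io_fraction(p, t_comp=100.0, t_comm=10.0, t_io=1.0):
    """Share of the step time spent in I/O on p processes."""
    return t_io / step_time(p, t_comp, t_comm, t_io)


for p in (1, 100, 10000):
    print(p, round(step_time(p), 3), round(io_fraction(p), 3))
```

In this model the I/O share grows from under 1% on one process to a third on 100 processes, and the step time can never fall below the fixed I/O cost.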

Current I/O strategies in COSMO

- Two types of output format: GRIB and NetCDF
  - GRIB is dominant in operational weather forecasting
  - NetCDF is the main format used in climate research
- GRIB output has the possibility of using asynchronous I/O processes to improve parallel performance
- NetCDF is always ultimately serialised through process zero of the simulation
- In both cases, GRIB and NetCDF, the output actually uses a multi-level data collection approach

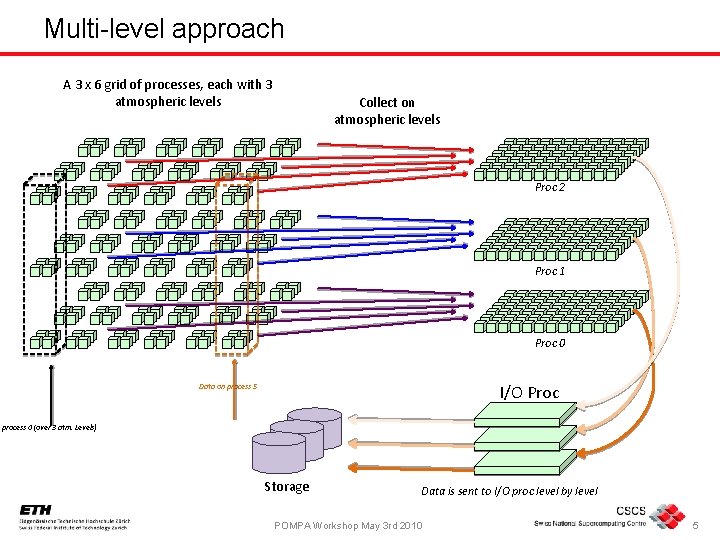

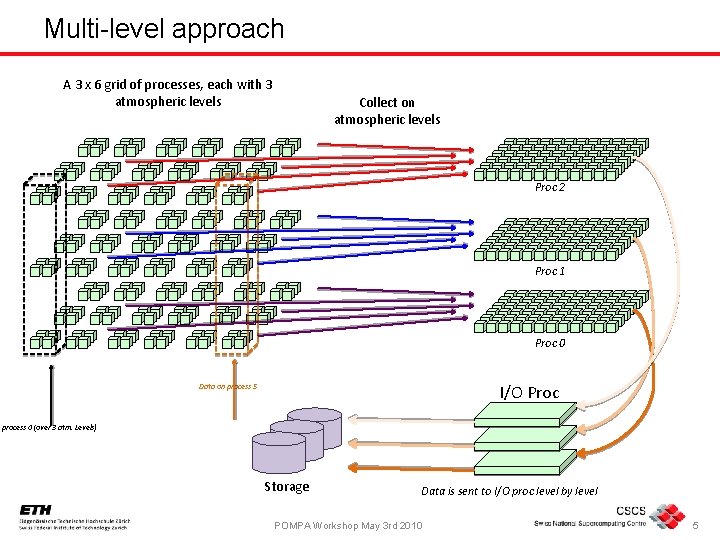

Multi-level approach

[Diagram: a 3 x 6 grid of processes, each with 3 atmospheric levels. Stage 1, collect on atmospheric levels: data from the process grid is gathered onto Proc 0, Proc 1 and Proc 2 (over 3 atmospheric levels). Stage 2: data is sent to the I/O proc level by level, and the I/O proc writes it to storage.]
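The two stages in the diagram can be mimicked in plain Python without MPI. This is only a sketch: the tile size, the rule that level k is gathered onto process k, and the in-memory list standing in for storage are all assumptions made for illustration, not details of the COSMO code.

```python
PROC_ROWS, PROC_COLS, LEVELS = 3, 6, 3  # layout from the slide
TILE = 2  # points per side of each process's local tile (hypothetical)


def local_tile(rank, level):
    """Fake field data owned by one process for one atmospheric level."""
    return [[(rank, level, i, j) for j in range(TILE)] for i in range(TILE)]


def gather_on_levels():
    """Stage 1: assemble each full level on one compute process.

    Assumption: level k is gathered onto compute process k.
    """
    nprocs = PROC_ROWS * PROC_COLS
    gathered = {}
    for level in range(LEVELS):
        field = [[None] * (PROC_COLS * TILE) for _ in range(PROC_ROWS * TILE)]
        for rank in range(nprocs):
            row, col = divmod(rank, PROC_COLS)
            tile = local_tile(rank, level)
            for i in range(TILE):
                for j in range(TILE):
                    field[row * TILE + i][col * TILE + j] = tile[i][j]
        gathered[level] = (level % nprocs, field)
    return gathered


def collect_and_store(gathered):
    """Stage 2: the I/O process receives complete levels one at a time."""
    storage = []  # stands in for the file on disk
    for level in sorted(gathered):
        owner, field = gathered[level]
        storage.append((level, owner, field))
    return storage


storage = collect_and_store(gather_on_levels())
print([(level, owner) for level, owner, _ in storage])
```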

Performance limitations and constraints

- Both GRIB and NetCDF formats carry out the gather-on-levels stage
- For GRIB-based weather simulations the final collect-and-store stage can deploy multiple I/O processes to deal with the data
  - Allows improved performance where real storage bandwidth is the bottleneck
  - Produces multiple files (one per I/O process) that can easily be concatenated together
- Only process 0 can currently act as an I/O proc for the collect-and-store stage with NetCDF
  - Serialises the I/O through one compute process
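The "one file per I/O process, concatenate afterwards" pattern can be sketched with ordinary files. The round-robin assignment of records to I/O processes, the file names and the fake record contents are assumptions for illustration; the real GRIB path in COSMO may distribute the work differently.

```python
import os
import tempfile


def write_with_io_procs(records, n_io, outdir):
    """Record i goes to hypothetical I/O process i % n_io, which owns one file."""
    paths = [os.path.join(outdir, f"out_io{k}.bin") for k in range(n_io)]
    files = [open(p, "wb") for p in paths]
    try:
        for i, rec in enumerate(records):
            files[i % n_io].write(rec)
    finally:
        for f in files:
            f.close()
    return paths


def concatenate(paths, target):
    """Join the per-I/O-process files into a single output file."""
    with open(target, "wb") as out:
        for p in paths:
            with open(p, "rb") as f:
                out.write(f.read())
    return target


with tempfile.TemporaryDirectory() as tmp:
    recs = [b"rec%d;" % i for i in range(5)]  # stand-ins for output records
    parts = write_with_io_procs(recs, 2, tmp)
    with open(concatenate(parts, os.path.join(tmp, "all.bin")), "rb") as f:
        combined = f.read()
print(combined)
```

The concatenated file holds every record, but grouped by I/O process rather than in the original order, so this only works for formats whose records are independent of one another.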

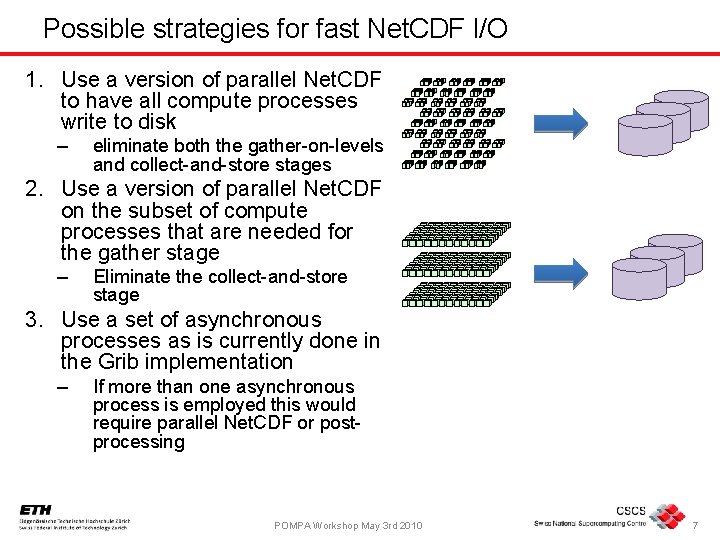

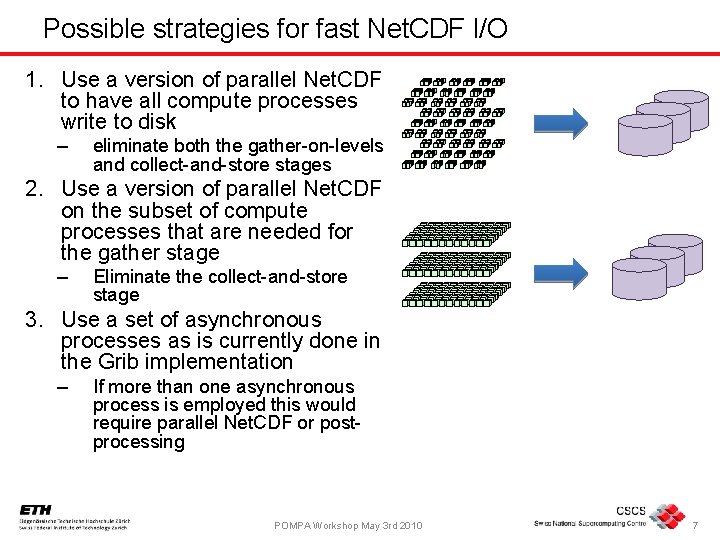

Possible strategies for fast NetCDF I/O

1. Use a version of parallel NetCDF to have all compute processes write to disk
   - Eliminates both the gather-on-levels and collect-and-store stages
2. Use a version of parallel NetCDF on the subset of compute processes that are needed for the gather stage
   - Eliminates the collect-and-store stage
3. Use a set of asynchronous processes as is currently done in the GRIB implementation
   - If more than one asynchronous process is employed this would require parallel NetCDF or postprocessing

Full parallel strategy

- A simple micro-benchmark of 3D data distributed on a 2D process grid showed reasonable results
- This was implemented in the RAPS code and tested with the IPCC benchmark at ~900 cores
  - No smoothing operations in this benchmark or in the code
- The results were poor
  - Much of the I/O in this benchmark is 2D fields
  - Not much data is written at each timestep
  - The current I/O performance is not bad
  - The parallel strategy became dominated by metadata operations
    - File writes for 3D fields were reasonably fast (~0.025 s for 50 Mbytes)
    - Opening the file took a long time (0.4 to 0.5 seconds)
- The strategy may be useful for high-resolution simulations writing large 3D blocks of data
  - Originally this strategy was expected to target 2000 x 1000 x 60+ grids
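A quick calculation with the timings quoted above shows how heavily the file open outweighs the write. The timings come from the slide; the 4 bytes per value and 1 Mbyte = 10^6 bytes used for the field-size estimate are assumptions.

```python
WRITE_SECONDS = 0.025       # from the slide: write time for one 3D field
WRITE_MBYTES = 50.0         # from the slide: size of that write
OPEN_SECONDS = (0.4, 0.5)   # from the slide: range of file-open times


def write_bandwidth(mbytes=WRITE_MBYTES, seconds=WRITE_SECONDS):
    """Effective write rate in Mbytes per second."""
    return mbytes / seconds


def open_fraction(open_s, write_s=WRITE_SECONDS):
    """Share of (open + write) time spent opening the file."""
    return open_s / (open_s + write_s)


def field_mbytes(nx, ny, nz, bytes_per_value=4):
    """Size of one 3D field, assuming 4-byte values and 1 Mbyte = 1e6 bytes."""
    return nx * ny * nz * bytes_per_value / 1e6


print(write_bandwidth())
print([round(open_fraction(t), 3) for t in OPEN_SECONDS])
print(field_mbytes(2000, 1000, 60))
```

Under these numbers the open costs 16 to 20 times as much as the write itself, so roughly 94 to 95 percent of the combined time is metadata. A single field on the originally targeted 2000 x 1000 x 60 grid would be about ten times larger than the 50 Mbyte write, which is why the balance may look better for high-resolution runs.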

Slowdown from metadata

- The first strategy has problems related to metadata scalability
- Most modern high-performance file systems use POSIX I/O to open/close/seek etc.
- This is not scalable: the time for file access operations becomes bounded by the time taken for metadata operations

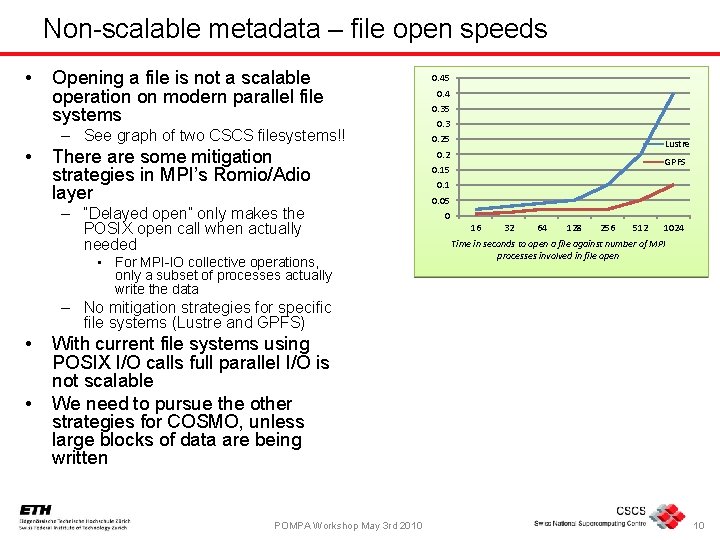

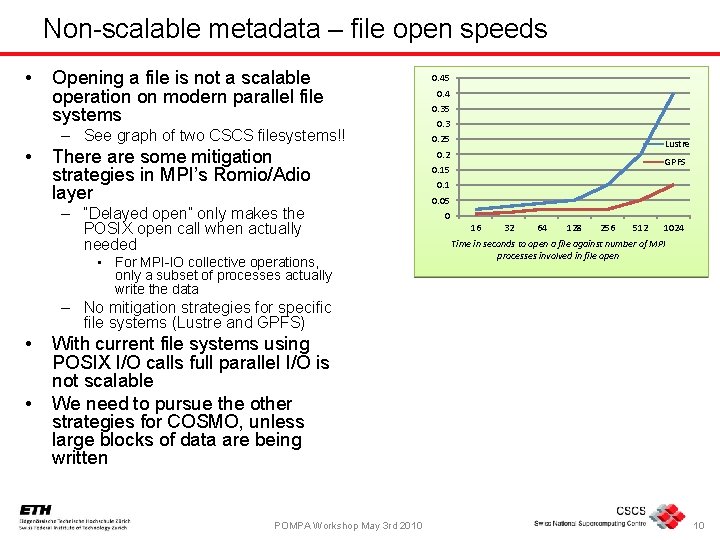

Non-scalable metadata: file open speeds

- Opening a file is not a scalable operation on modern parallel file systems
  - See graph of two CSCS filesystems
- There are some mitigation strategies in MPI’s ROMIO/ADIO layer
  - “Delayed open” only makes the POSIX open call when actually needed
    - For MPI-IO collective operations, only a subset of processes actually write the data
  - No mitigation strategies for specific file systems (Lustre and GPFS)

[Graph: time in seconds to open a file (y-axis, 0 to 0.45) against number of MPI processes involved in the file open (x-axis, 16 to 1024), for Lustre and GPFS]

- With current file systems using POSIX I/O calls, full parallel I/O is not scalable
- We need to pursue the other strategies for COSMO, unless large blocks of data are being written
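The idea behind "delayed open" can be illustrated with a small single-process sketch. This is a conceptual model only, not ROMIO's implementation: the class name, the way "processes" are simulated as objects in a list, and the choice of the first few ranks as writers are all hypothetical.

```python
import os
import tempfile


class DelayedOpenFile:
    """Defers the POSIX open() call until the first write (conceptual sketch)."""

    def __init__(self, path):
        self.path = path
        self.fd = None  # no metadata operation has happened yet

    def write_at(self, offset, data):
        if self.fd is None:
            # The real open() is issued only by handles that actually write.
            self.fd = os.open(self.path, os.O_WRONLY | os.O_CREAT, 0o644)
        os.lseek(self.fd, offset, os.SEEK_SET)
        os.write(self.fd, data)

    def close(self):
        opened = self.fd is not None
        if opened:
            os.close(self.fd)
            self.fd = None
        return opened


def collective_write(path, nprocs, nwriters, block):
    """All simulated processes 'open' the file; only nwriters of them write."""
    handles = [DelayedOpenFile(path) for _ in range(nprocs)]
    for rank in range(nwriters):
        handles[rank].write_at(rank * len(block), block)
    # Count how many real open() calls were made.
    return sum(h.close() for h in handles)


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "field.bin")
    real_opens = collective_write(target, nprocs=64, nwriters=4, block=b"x" * 8)
    size = os.path.getsize(target)
print(real_opens, size)
```

Here 64 simulated processes share the file but only 4 real open calls reach the file system, which is the saving the mitigation aims for when only a subset of processes write.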

Next steps

- We are looking at all 3 strategies for improving NetCDF I/O
- We are investigating the current state of metadata accesses in the MPI-IO layer and in file systems in general
  - Particularly Lustre and GPFS, but also others (e.g. OrangeFS)
- … but for some jobs the individual I/O operations might not be large enough to allow much speedup