PURE STORAGE OPENSTACK Eating your own Dog Food

- Slides: 12

PURE STORAGE & OPENSTACK: Eating your own Dog Food!!

© 2019 PURE STORAGE INC.

Introductions

- Simon Dodsley, Technical Director, Pure Storage
  - OpenStack advocate, Open Source contributor
- Daniel Leaberry, Senior Systems Administrator, Pure Storage: “I fix things, and build more things to fix”
  - Internal OpenStack cloud admin, Pure Cinder CI maintainer

Talking (mainly) Cinder…

So Much Choice in Cinder

As of Stein:

- 98 different drivers
- 31 different vendors
- 20 different functions (11 required)
  - 9 optional
    - relevant for your vendor?
    - valid for your applications?
- A few other features: front-end QoS, volume groups, replication type
- Even if you have a vendor in mind already, which data protocol and features should you use?
- Even if a feature is supported, does the implementation work well?
- Is development of your chosen driver ongoing?
- All the new stuff to come: NVMe

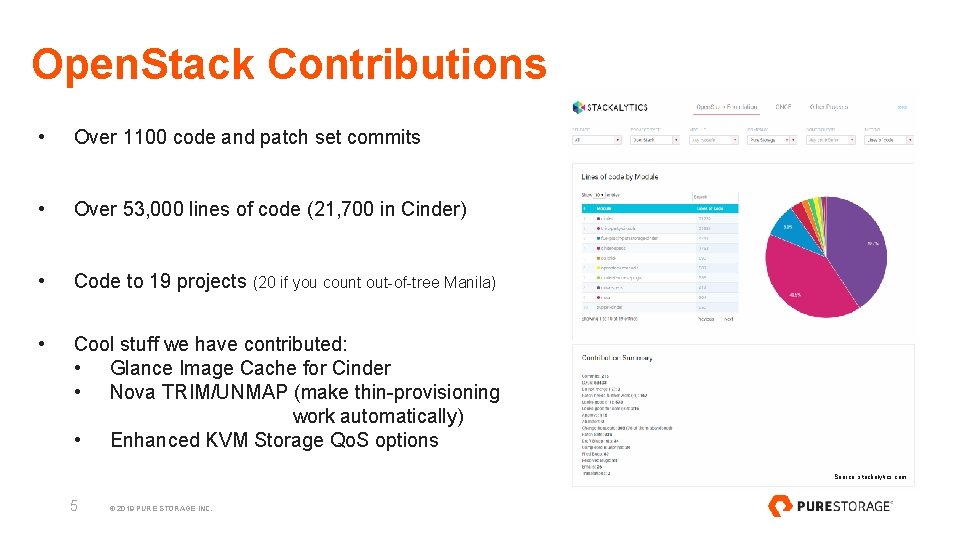

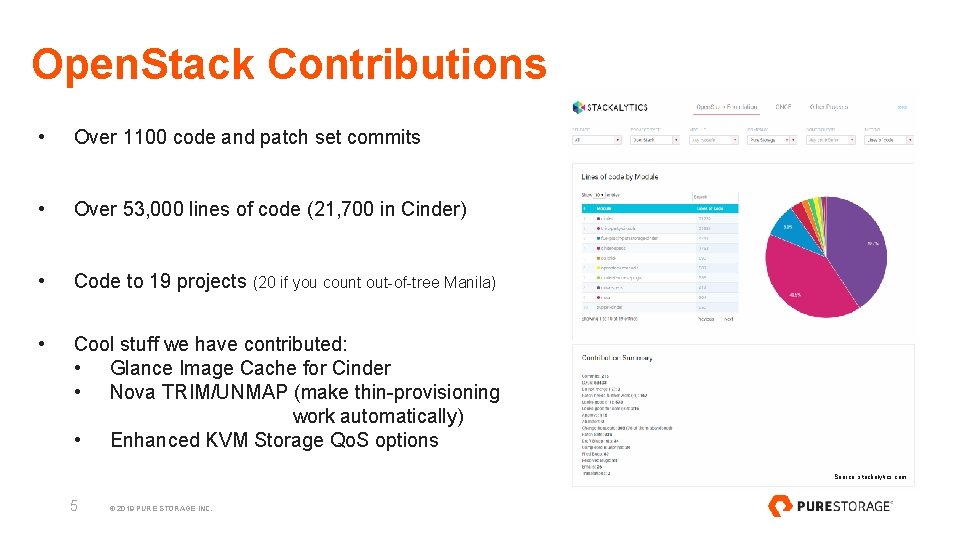

OpenStack Contributions

- Over 1,100 code and patch set commits
- Over 53,000 lines of code (21,700 in Cinder)
- Code to 19 projects (20 if you count out-of-tree Manila)
- Cool stuff we have contributed:
  - Glance Image Cache for Cinder
  - Nova TRIM/UNMAP (make thin-provisioning work automatically)
  - Enhanced KVM Storage QoS options

Source: stackalytics.com

What FlashArray does really well!!

- Thin-Provisioning
  - great data reduction using always-on compression and deduplication
  - averaging 5.5:1 across all workloads in the global fleet
- Encryption
  - always-on AES-256
- Snapshots
  - zero-space instant snapshots
  - instant recovery from any snapshot
- Glance Image Cache works like a dream (it should: Pure contributed this feature)
- Replication
  - both asynchronous and synchronous

So let’s eat that dogfood… Over to Daniel:

We don’t just write it; we use it!!

- Internal development teams have a large OpenStack cluster
- Currently on Ocata
  - upgrade planned to get Cinder Active/Active HA
- Deployed using Puppet and openstack-puppet modules
- 195 compute servers across 2 datacenters (60/40)
- 4 FlashArrays (2 per DC)

We don’t just write it; we use it!!

- Nova Availability Zones used to isolate internal teams on compute resources
- NUMA awareness and CPU pinning for some compute hosts
- Why use CPU pinning and NUMA awareness?
  - Some jobs are memory sensitive: pinning increases the memory bandwidth
  - Stable runtimes required: pinning gives dedicated cores, so no sharing
  - The stability helps us know if we introduced slowness instead of always debugging contention issues
- Issue: RetryFilter causing multiple IP addresses to be allocated to a single instance
  - Caused by the NUMA scheduling architecture
- Over 1,100 Nova instances
- Over 370 40-core, 128 GB RAM instances for developers
  - heavily oversubscribed
  - KVM memory dedupe helps reduce worries about memory consumption

We don’t just write it; we use it!!

- Cinder is flawless
- Only use Cinder volumes (no Nova ephemeral)
  - compute nodes only have 40 GB local disk (Nova Bug #1681658 - Won’t Fix!!)
- Use volume scheduler VolumeNumberWeigher with a 4,000-volume limit per array
- Use multipathing and Open vSwitch (OVS) on LACP-bonded trunks
- Requires a longer deploy timeout as the test Purity image is 14 GB, however…
- Use Glance Image Cache for Cinder so deploy time is only an issue the first time: “worth its weight in gold”. Subsequent deploys take less than 20 seconds, as each is just a clone of a LUN from the array.
- Over 700 volumes are created/deleted on the arrays per 24 hours
- Custom scripts to snapshot/reset using the Pure REST API for testing code that was originally running on VMware
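The volume placement described on this slide can be sketched roughly as follows. This is an illustration only, not Cinder's scheduler code: it assumes the weigher is left at its default spreading behaviour (prefer the backend holding the fewest volumes) and that the 4,000-volume limit acts as a simple cut-off. The slide does not say how the limit is enforced, and the `Backend` class, the `pick_backend` helper, and the array names and counts are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

VOLUME_LIMIT = 4000  # per-array cap quoted on the slide


@dataclass
class Backend:
    name: str          # hypothetical array name
    volume_count: int  # volumes currently provisioned on the array


def pick_backend(backends: List[Backend], limit: int = VOLUME_LIMIT) -> Optional[Backend]:
    """Return the backend with the fewest volumes that is still under the limit."""
    # Assumed enforcement: drop any array that has already reached the cap.
    eligible = [b for b in backends if b.volume_count < limit]
    if not eligible:
        return None
    # Spreading behaviour: fewer existing volumes wins.
    return min(eligible, key=lambda b: b.volume_count)


# Four arrays, two per datacenter, with made-up volume counts.
backends = [
    Backend("dc1-array-a", 3200),
    Backend("dc1-array-b", 4000),
    Backend("dc2-array-a", 2750),
    Backend("dc2-array-b", 3900),
]
chosen = pick_backend(backends)
print(chosen.name if chosen else "no backend available")
```

Spreading by volume count rather than by free capacity is a plausible fit for arrays with heavy data reduction, where reported free space says little about how many more volumes an array can comfortably hold.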

We don’t just write it; we use it!!

- Used as an AWS-like experience by one of our teams
  - Create/destroy hundreds of instances per day
  - Actually pulled back from AWS into OpenStack
- Heavily oversubscribed usage
  - 100 instances on a 48-core, 512 GB RAM server
- Saves money $$$
  - $1M+ saved in VMware licenses
- Going forward: more on OpenStack and less on VMware
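To put a number on "heavily oversubscribed", the arithmetic below works out allocation ratios for 100 instances on a 48-core, 512 GB RAM server. The slide does not state the instance size, so the 4 vCPU / 8 GB flavor used here is purely a hypothetical assumption, as is the `oversubscription` helper.

```python
def oversubscription(instances, vcpus_per_instance, ram_gb_per_instance,
                     host_cores, host_ram_gb):
    """Return (vCPU-to-core ratio, allocated-to-physical RAM ratio) for one host."""
    cpu_ratio = instances * vcpus_per_instance / host_cores
    ram_ratio = instances * ram_gb_per_instance / host_ram_gb
    return cpu_ratio, ram_ratio


# Host size and instance count are from the slide; the flavor is an assumption.
cpu_ratio, ram_ratio = oversubscription(
    instances=100,
    vcpus_per_instance=4,   # hypothetical flavor
    ram_gb_per_instance=8,  # hypothetical flavor
    host_cores=48,
    host_ram_gb=512,
)
print(f"vCPU allocation ratio: {cpu_ratio:.2f}:1")
print(f"RAM allocation ratio:  {ram_ratio:.2f}:1")
```

Any ratio above 1:1 means the host has promised more than it physically has, which only works when instances are mostly idle or, for memory, when page sharing between similar guests recovers some of the difference.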

THANK YOU

For more information, please drop by our booth #A10