PSM System Architecture Measurement

- Slides: 11


Continuation of NDIA Measurements Task

- The goal of last year's task was to:
  - Identify a set of leading indicators that provide insight into technical performance at major decision points for managing programs quantitatively across their life cycle, with emphasis on the Technology Development (TD) and Engineering and Manufacturing Development (EMD) phases.
  - Build upon objective measures in common practice in industry, government, and accepted standards. Do not define new measures unless currently available measures are inadequate to address the information needs.
  - Select objective measures based on essential attributes (e.g., relevance, completeness, timeliness, simplicity, cost effectiveness, repeatability, and accuracy).
- Measures should be commonly and readily available, with minimal additional effort needed for data collection and analysis.

Architecture Measurement

- Architecture was a high-priority area, but no measures met the criteria.
- Architectures must be complete, consistent, and correct:
  - Complete: all the elements required to describe the solution are present, and all the requirements/needs are addressed.
  - Consistent: the artifacts that describe the architecture are internally consistent and consistent with external constraints (e.g., external interfaces).
  - Correct: the architecture satisfies all requirements within the program constraints (cost, schedule, etc.), and it is the architecture that does so to the greatest extent.
- This results in a need for multiple types of measurement.

Architecture Measurement

- Two types of measurement are needed:
  - Completeness/maturity and consistency
    - Are all the required elements present for the current program phase?
    - Are all requirements accounted for?
    - Does it tie together? Within an architecture level? Between levels? Between artifact types?
  - Correctness (solution quality)
    - Does it meet the stakeholder needs?
    - Does it avoid known architecture deficiencies?
    - Does it do so better than the alternatives?
- Traditionally, this was determined at the milestone reviews and was a lagging indicator.
- Model-based architecting (or architecture modeling) makes evaluating completeness and consistency feasible as a leading indicator.
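Once the architecture lives in a model, the completeness and consistency questions above can be checked mechanically. The sketch below assumes a hypothetical model export in which each element records its parent element and each requirement lists the elements it is allocated to; `ArchitectureModel` and `check_model` are illustrative names, not part of any particular modeling tool. It reports unallocated requirements (completeness), allocations that point at elements not in the model (consistency between artifact types), and elements whose parent is missing (consistency between levels).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ArchitectureModel:
    # Hypothetical export format: element id -> parent element id (None at the top level).
    parents: dict[str, str | None]
    # Requirement id -> set of element ids the requirement is allocated to.
    allocations: dict[str, set[str]] = field(default_factory=dict)


def check_model(model: ArchitectureModel) -> dict[str, list]:
    elements = set(model.parents)
    # Completeness: requirements not allocated to any element.
    unallocated = sorted(r for r, elems in model.allocations.items() if not elems)
    # Consistency between artifact types: allocations to elements that do not exist.
    dangling = sorted(
        (r, e) for r, elems in model.allocations.items() for e in elems if e not in elements
    )
    # Consistency between levels: elements whose parent is not in the model.
    orphans = sorted(
        e for e, p in model.parents.items() if p is not None and p not in elements
    )
    return {
        "unallocated_requirements": unallocated,
        "dangling_allocations": dangling,
        "orphan_elements": orphans,
    }


# Hypothetical example model with one problem of each kind.
model = ArchitectureModel(
    parents={"SYS": None, "SS1": "SYS", "SS2": "SYS", "C1": "SS1", "C9": "SS3"},
    allocations={"R1": {"SS1"}, "R2": set(), "R3": {"C1", "C7"}},
)
report = check_model(model)
```

Tracking the length of each list from one reporting period to the next turns these checks into a trend that can serve as a leading indicator.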

Quantitative Measurement

- Two types of measures are required: quantitative and qualitative.
- Quantitative measurement:
  - Goal is to measure whether an architecture is complete and consistent.
  - This is easier with model-based architecting:
    - Anticipated artifacts / completed artifacts
    - Internal reports showing missing data and inconsistencies between artifacts
  - Such reports are supported by many of the architecture tools, but require effort on the part of the program to create and customize.
  - Models help visualize heuristics as well.
- Examples:
  - Progress chart
  - Requirements trace reports (SELI: Systems Engineering Leading Indicators Guide)
  - TBx (TBD/TBR) closure rate and TBx counts (SELI)
  - Empty data field counts
  - Visual reviews of artifacts
  - Other reports from the modeling tool database that address consistency

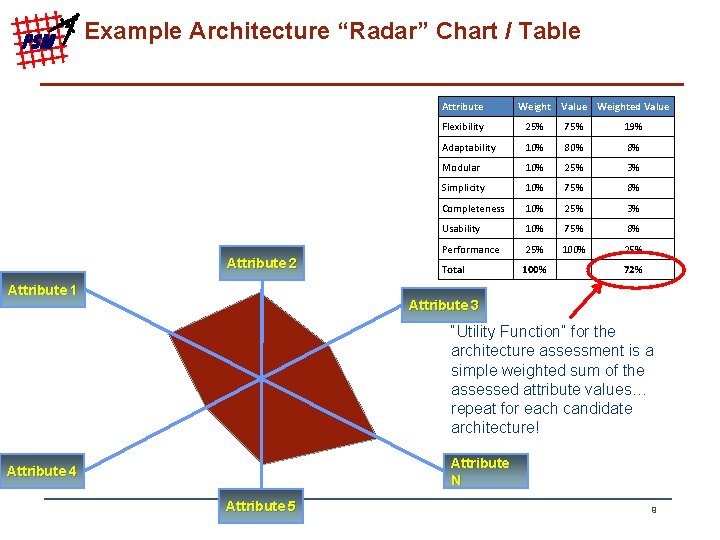
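Several of the quantitative examples reduce to simple counts over a modeling-tool export. This is a minimal sketch, assuming a hypothetical export in which each artifact is a dictionary with a `status` field plus data fields, and assuming the TBx markers are the strings TBD, TBR, and TBS. `artifact_metrics` and `tbx_closure_rate` are illustrative names. The closure-rate definition used here (fraction of previously open TBx items that are now closed) is one possible choice, not necessarily the definition used in SELI.

```python
from __future__ import annotations

import re

# Assumed TBx markers: TBD, TBR, TBS as whole words.
TBX_PATTERN = re.compile(r"\bTB[DRS]\b")


def artifact_metrics(artifacts: list[dict], anticipated: int) -> dict:
    """Completion percentage, empty-field count, and open-TBx count for a
    hypothetical export where each artifact is a dict with a 'status' key."""
    completed = sum(1 for a in artifacts if a.get("status") == "complete")
    # Empty data fields: any non-status field that is None or an empty string.
    empty_fields = sum(
        1 for a in artifacts for k, v in a.items() if k != "status" and v in (None, "")
    )
    # Number of fields that still contain a TBx marker.
    open_tbx = sum(
        1 for a in artifacts for v in a.values()
        if isinstance(v, str) and TBX_PATTERN.search(v)
    )
    return {
        "percent_complete": 100.0 * completed / anticipated,
        "empty_fields": empty_fields,
        "open_tbx": open_tbx,
    }


def tbx_closure_rate(open_before: set[str], open_now: set[str]) -> float:
    """Fraction of TBx items open at the previous snapshot that are now closed."""
    if not open_before:
        return 0.0
    return len(open_before - open_now) / len(open_before)


# Hypothetical export: 3 of an anticipated 6 artifacts exist so far.
artifacts = [
    {"name": "Context diagram", "status": "complete", "owner": "SE", "rate": "10 Hz"},
    {"name": "IF-101", "status": "in work", "owner": "", "rate": "TBD"},
    {"name": "IF-102", "status": "complete", "owner": None, "rate": "TBR 5 Hz"},
]
metrics = artifact_metrics(artifacts, anticipated=6)
closure = tbx_closure_rate({"TBD-1", "TBD-2", "TBR-3", "TBD-4"}, {"TBD-2"})
```

Here two of six anticipated artifacts are complete, two fields are empty, two fields still carry a TBx marker, and three of the four previously open TBx items have closed.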

Qualitative Measurement

- Goal is to ensure the architecture is correct and coherent:
  - Does it meet stakeholder needs within the program constraints?
  - Is it better than the alternative architectures at satisfying stakeholder needs?
- Still somewhat subjective, but has aspects that can be measured.
- Can only be determined in comparison to the alternatives:
  - Technical Performance Measure (TPM) and Measure of Effectiveness (MOE)/Key Performance Parameter (KPP) satisfaction are compared across alternatives.
- Examples:
  - TPM/MOE radar charts
  - Estimate at Completion (EAC) vs. TPM/MOE
  - Architecture design trade study records

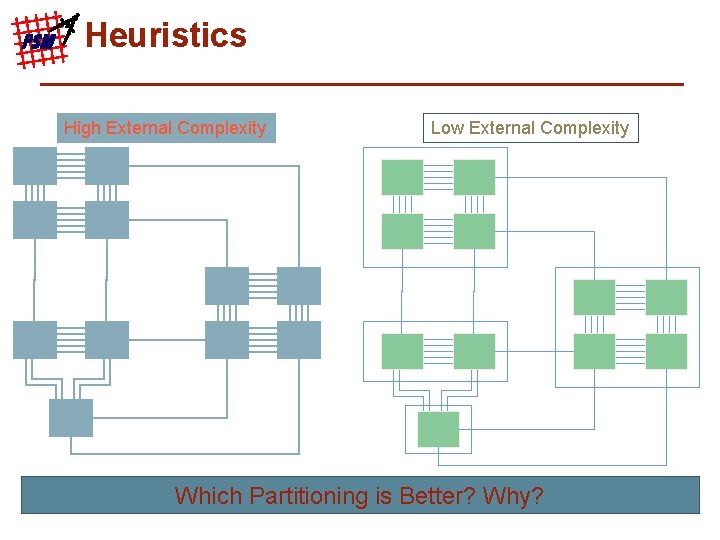

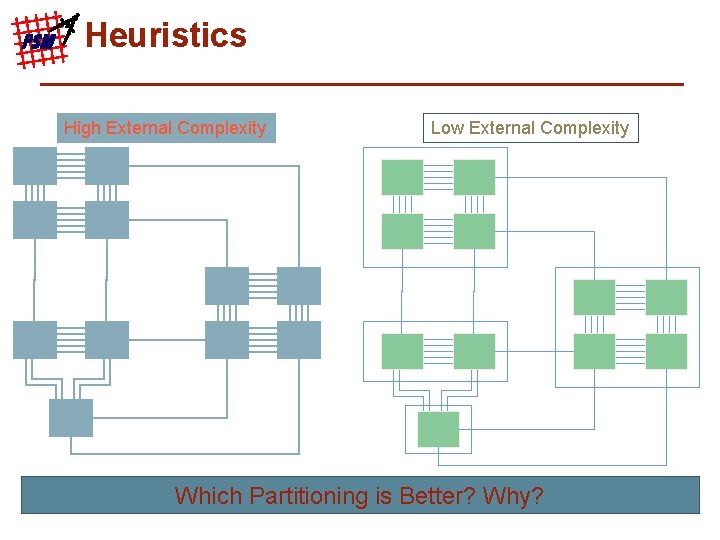
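Comparing TPM and MOE/KPP satisfaction across alternatives can be made concrete by normalizing each measure's margin against its threshold. This is a minimal sketch under stated assumptions: the `Measure` class, the `margins` and `compare` functions, and all names, thresholds, and achieved values are hypothetical. A margin of zero or more means the threshold is met, and each candidate is summarized by how many thresholds it meets and by its worst margin.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measure:
    """A TPM, MOE, or KPP with a threshold (hypothetical example values)."""
    name: str
    threshold: float  # must be nonzero, since margins are normalized by it
    higher_is_better: bool = True


def margins(measures, achieved):
    """Normalized margin per measure: a value >= 0 means the threshold is met."""
    result = {}
    for m in measures:
        delta = achieved[m.name] - m.threshold
        if not m.higher_is_better:
            delta = -delta  # e.g. mass or cost: coming in under the threshold is good
        result[m.name] = delta / abs(m.threshold)
    return result


def compare(measures, candidates):
    """For each candidate: (number of thresholds met, worst normalized margin)."""
    summary = {}
    for name, achieved in candidates.items():
        mg = margins(measures, achieved)
        met = sum(1 for v in mg.values() if v >= 0)
        summary[name] = (met, min(mg.values()))
    return summary


measures = [Measure("range_km", 500.0), Measure("mass_kg", 1200.0, higher_is_better=False)]
candidates = {
    "Arch A": {"range_km": 550.0, "mass_kg": 1300.0},
    "Arch B": {"range_km": 520.0, "mass_kg": 1100.0},
}
summary = compare(measures, candidates)
```

In this made-up case, Arch A has the larger range margin but misses the mass threshold, while Arch B meets both. The comparison with the alternative, not either candidate's numbers alone, is what carries the information.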

Internal Measurements/Heuristics

- Additional ways to measure architecture quality:
  - Heuristics ("Does it look right?"): a review of the model artifacts can sometimes indicate whether an architecture exhibits good or bad characteristics, such as low cohesion or high coupling.
  - Internal metrics:
    - Number of internal interfaces
    - Number of requirements per architecture element, which can indicate an imbalance

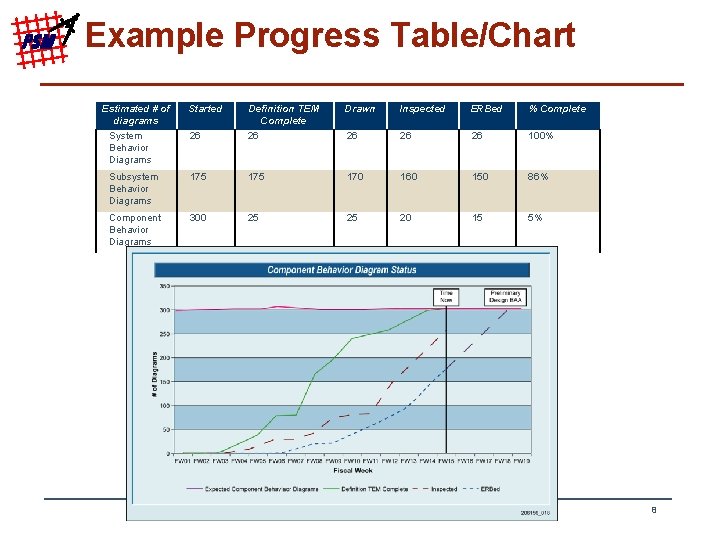

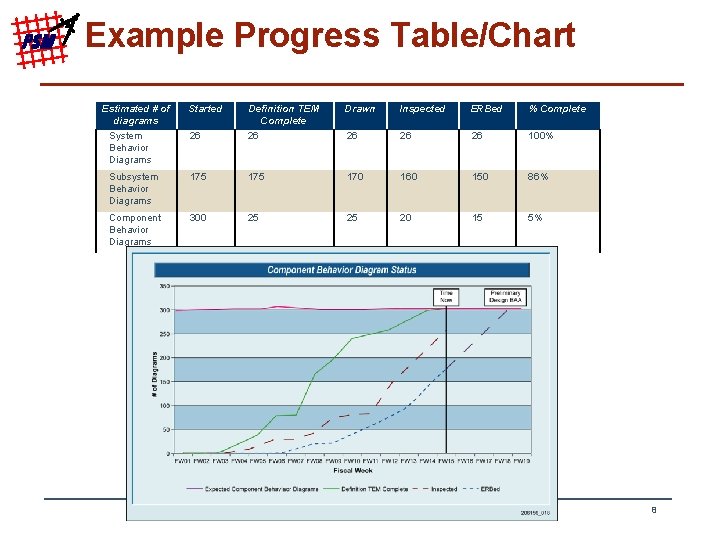
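The requirements-per-element metric can be computed directly from allocation data. This is a minimal sketch, assuming allocations are available as hypothetical (requirement, element) pairs. The rule used to flag an imbalance (more than `k` population standard deviations from the mean) is an illustrative choice, not one prescribed here. The element list is passed in explicitly so that elements with no requirements count as zero rather than disappearing.

```python
from collections import Counter
from statistics import mean, pstdev


def requirements_per_element(allocations, elements):
    """Count allocated requirements for every listed element (zero if none).
    allocations: iterable of (requirement_id, element_id) pairs."""
    tally = Counter(elem for _, elem in allocations)
    return {e: tally[e] for e in elements}


def flag_imbalance(counts, k=1.0):
    """Elements whose requirement count lies more than k population standard
    deviations from the mean; k is an arbitrary tuning choice."""
    values = list(counts.values())
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # perfectly balanced: nothing to flag
    return sorted(e for e, n in counts.items() if abs(n - mu) > k * sigma)


# Hypothetical allocation data: element A carries most of the requirements.
allocations = (
    [(f"R{i}", "A") for i in range(10)]
    + [(f"R{i}", "B") for i in range(10, 12)]
    + [(f"R{i}", "C") for i in range(12, 15)]
    + [("R15", "D")]
)
counts = requirements_per_element(allocations, ["A", "B", "C", "D", "E"])
flagged = flag_imbalance(counts)
```

A flagged element is a prompt to review the architecture, for example to check whether the element should be split. It does not prove a defect.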

Example Progress Table/Chart

| Diagram type | Estimated # of diagrams | Stage counts (Started Definition, TEM Complete, Drawn, Inspected, ERBed) | % Complete |
|---|---|---|---|
| System Behavior Diagrams | 26 | 26, 26 | 100% |
| Subsystem Behavior Diagrams | 175 | 170, 160, 150 | 86% |
| Component Behavior Diagrams | 300 | 25, 25, 20, 15 | 5% |

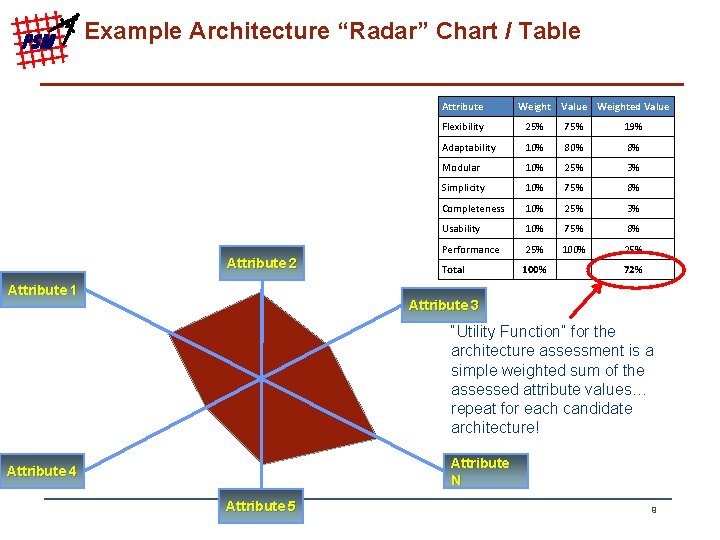
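In the example progress table, each row's % Complete matches the count at the most advanced stage reached, divided by the estimated number of diagrams: 26/26 = 100%, 150/175 ≈ 86%, and 15/300 = 5%. This is a minimal sketch, assuming that interpretation holds. The counts are transcribed from the table, and `PROGRESS` and `percent_complete` are illustrative names.

```python
# Counts transcribed from the example progress table: (estimated total,
# diagram counts at each stage reached so far, in the order the table lists them).
PROGRESS = {
    "System Behavior Diagrams": (26, [26, 26]),
    "Subsystem Behavior Diagrams": (175, [170, 160, 150]),
    "Component Behavior Diagrams": (300, [25, 25, 20, 15]),
}


def percent_complete(estimated, stage_counts):
    """Percent of the estimated diagrams that have reached the most advanced
    stage recorded (assumed interpretation of the % Complete column)."""
    if estimated <= 0:
        raise ValueError("estimated count must be positive")
    latest = stage_counts[-1] if stage_counts else 0
    return round(100 * latest / estimated)


summary = {name: percent_complete(est, counts) for name, (est, counts) in PROGRESS.items()}
```

Because the denominator is the estimate rather than the count of diagrams already started, the metric exposes work that has not begun, such as the 275 component diagrams that have not been started.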

Example Architecture "Radar" Chart / Table

[Radar chart: assessed attribute values plotted on axes Attribute 1, Attribute 2, … Attribute N]

| Attribute | Weight | Value | Weighted Value |
|---|---|---|---|
| Flexibility | 25% | 75% | 19% |
| Adaptability | 10% | | 8% |
| Modular | 10% | 25% | 3% |
| Simplicity | 10% | 75% | 8% |
| Completeness | 10% | 25% | 3% |
| Usability | 10% | 75% | 8% |
| Performance | 25% | 100% | 25% |
| Total | 100% | | 72% |

The "utility function" for the architecture assessment is a simple weighted sum of the assessed attribute values. Repeat for each candidate architecture!
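The utility function is U = Σ wᵢvᵢ, where the weights wᵢ sum to 1. Each weighted value in the table is weight × value; for example, Flexibility is 25% × 75% = 18.75%, shown rounded as 19%. This is a minimal sketch of applying the function to several candidates. The weights are taken from the example table, but the candidate names and attribute values are hypothetical. `utility` and `rank` are illustrative names.

```python
import math

# Weights from the example table; the candidate values further down are hypothetical.
WEIGHTS = {
    "Flexibility": 0.25, "Adaptability": 0.10, "Modular": 0.10, "Simplicity": 0.10,
    "Completeness": 0.10, "Usability": 0.10, "Performance": 0.25,
}


def utility(weights, values):
    """Weighted sum U = sum_i w_i * v_i; weights must sum to 1, values lie in [0, 1]."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights must sum to 1")
    return sum(w * values[attr] for attr, w in weights.items())


def rank(weights, candidates):
    """Order candidate architectures from highest to lowest utility."""
    return sorted(candidates, key=lambda c: utility(weights, candidates[c]), reverse=True)


baseline = {attr: 0.5 for attr in WEIGHTS}
candidates = {
    "X": baseline,
    "Y": {**baseline, "Flexibility": 0.75, "Performance": 1.0},
}
order = rank(WEIGHTS, candidates)
```

The score of a single architecture means little on its own. Running the same weights over every candidate, as the slide recommends, is what makes the result useful for comparison.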

Structural Heuristics

- "The eye is a fine architect. Believe it." (Wernher von Braun, 1950)
- "A good solution somehow looks nice." (Robert Spinrad, 1991)

Heuristics

[Figure: two alternative partitionings, labeled "High External Complexity" and "Low External Complexity"]

Which partitioning is better? Why?
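One way to make the "which partitioning is better?" question measurable is to count the interactions that cross subsystem boundaries, following the widely cited architecting heuristic of minimizing external interfaces. This is a minimal sketch, assuming the interactions are available as component pairs from a hypothetical model export. `boundary_interfaces` is an illustrative name, and a raw count ignores interface type and data volume.

```python
def boundary_interfaces(links, partition):
    """Split component-to-component links into external (crossing a subsystem
    boundary) and internal (within one subsystem) counts.

    links: iterable of (component_a, component_b) pairs
    partition: dict mapping each component to the subsystem it is assigned to
    """
    external = internal = 0
    for a, b in links:
        if partition[a] == partition[b]:
            internal += 1
        else:
            external += 1
    return external, internal


# Hypothetical five-component system and two candidate partitionings.
links = [("a", "b"), ("a", "c"), ("b", "c"), ("d", "e"), ("c", "d")]
partition_1 = {"a": 1, "b": 2, "c": 1, "d": 2, "e": 1}
partition_2 = {"a": 1, "b": 1, "c": 1, "d": 2, "e": 2}
result_1 = boundary_interfaces(links, partition_1)  # (4, 1): high external complexity
result_2 = boundary_interfaces(links, partition_2)  # (1, 4): low external complexity
```

The same components and links produce very different external complexity depending only on how they are grouped. This is the property the eye picks up when it judges that one partitioning "looks right."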