PSEIS, Blueprint for Parallel Processing

RSF/Madagascar School and Workshop: Reproducible Research in Computational Geophysics, Vancouver 2006

Randall L. Selzler* (RSelzler (at) Data-Warp.com) and Joseph A. Dellinger (Joseph.Dellinger (at) BP.com)

30 Aug 2006

Parallel Processing… Essential Capability!

PSEIS (Parallel Seismic Earth Imaging System) needs parallel execution and data transfer. Parallel processing is:

- Required by some stakeholders
- Needed for large problems
- A way to reduce turn-around time
- Known to be cost-effective

Challenges:

- Speed
- Scalability
- Granularity
- Hardware
- Software

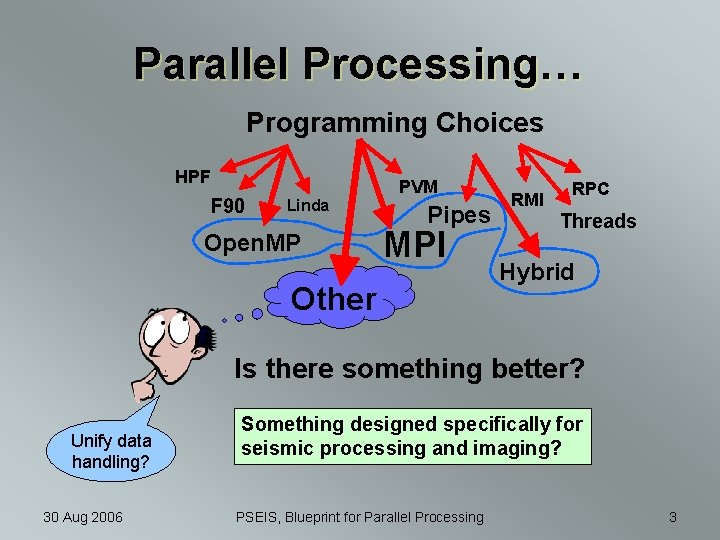

Parallel Processing… Programming Choices

HPF, F90, Linda, OpenMP, PVM, pipes, MPI, RMI, RPC, threads, hybrids, and others.

Is there something better? Can data handling be unified? Is there something designed specifically for seismic processing and imaging?

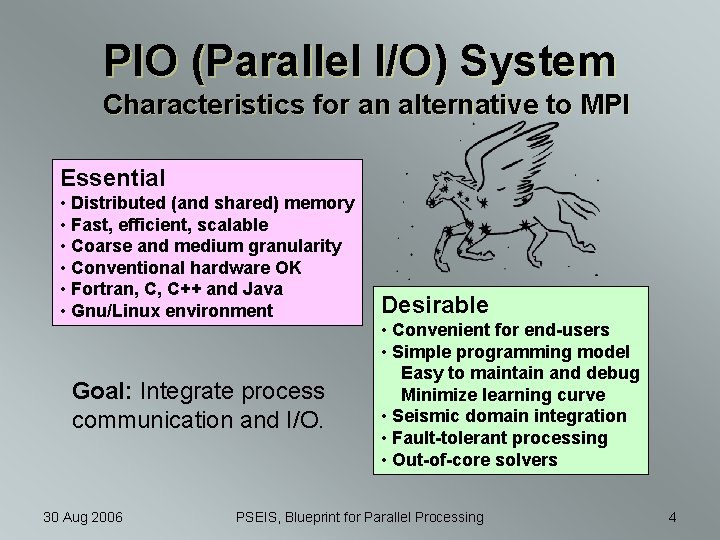

PIO (Parallel I/O) System

Characteristics for an alternative to MPI. Goal: integrate process communication and I/O.

Essential:

- Distributed (and shared) memory
- Fast, efficient, scalable
- Coarse and medium granularity
- Conventional hardware is OK
- Fortran, C, C++ and Java
- GNU/Linux environment

Desirable:

- Convenient for end users
- Simple programming model (easy to maintain and debug, minimal learning curve)
- Seismic domain integration
- Fault-tolerant processing
- Out-of-core solvers

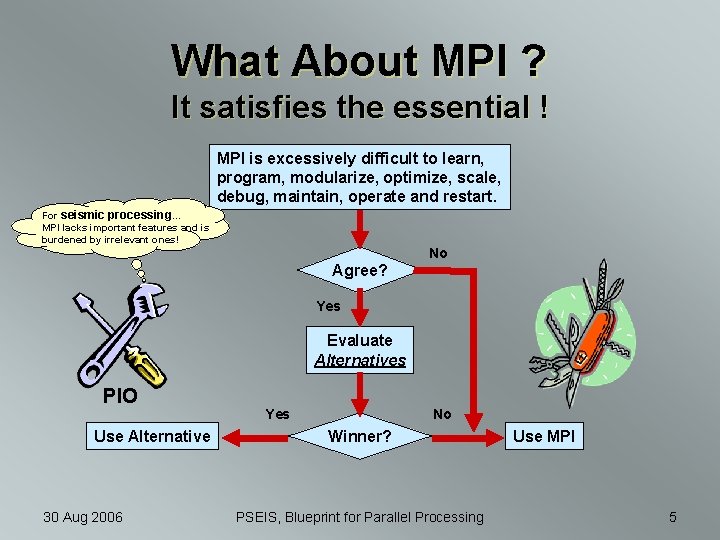

What About MPI?

It satisfies the essential characteristics! However, MPI is excessively difficult to learn, program, modularize, optimize, scale, debug, maintain, operate and restart. For seismic processing, MPI lacks important features and is burdened by irrelevant ones.

Decision flow: Agree? If not, use MPI. If so, evaluate alternatives such as PIO; if an alternative is the winner, use it, otherwise use MPI.

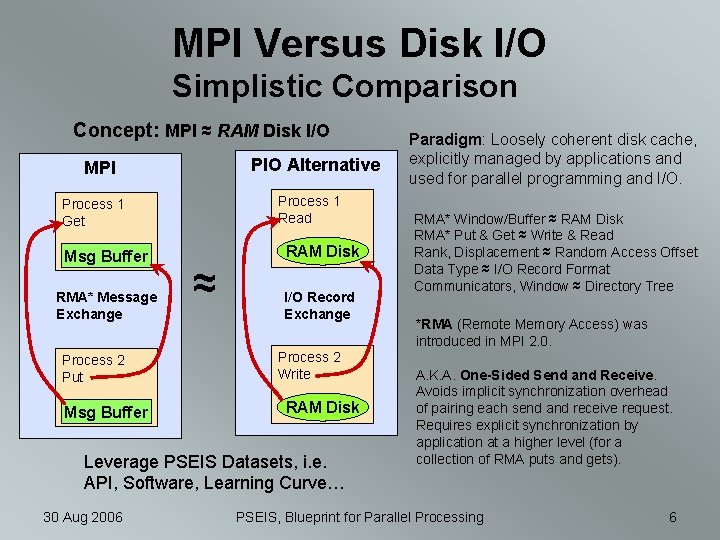

MPI Versus Disk I/O

A simplistic comparison. Concept: MPI ≈ RAM disk I/O.

With MPI, process 2 puts into a message buffer and process 1 gets from it (RMA* message exchange). In the PIO alternative, process 2 writes a record to a RAM disk and process 1 reads it (I/O record exchange). This leverages PSEIS datasets, i.e., their API, software and learning curve.

Paradigm: a loosely coherent disk cache, explicitly managed by applications and used for parallel programming and I/O.

- RMA* window/buffer ≈ RAM disk
- RMA* put and get ≈ write and read
- Rank, displacement ≈ random-access offset
- Data type ≈ I/O record format
- Communicators, window ≈ directory tree

*RMA (Remote Memory Access) was introduced in MPI 2.0, a.k.a. one-sided send and receive. It avoids the implicit synchronization overhead of pairing each send and receive request, but requires explicit synchronization by the application at a higher level (for a collection of RMA puts and gets).

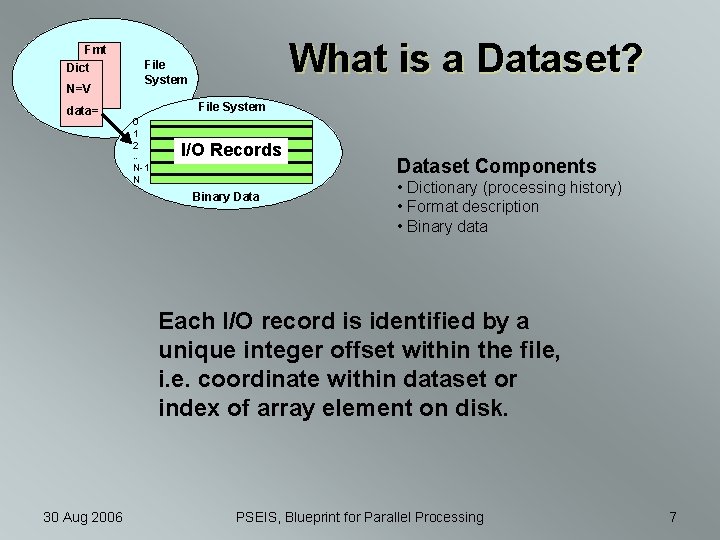
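
The analogy above can be made concrete with a small sketch. It assumes fixed-size records and a per-rank window size, and all names (`ram_disk`, `rma_offset`, `ram_disk_write`, `ram_disk_read`) are hypothetical illustrations, not PSEIS or MPI calls: an RMA target (rank, displacement) becomes a random-access record offset, and put/get become write/read.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical model: an MPI-2 RMA window viewed as a "RAM disk" of
 * fixed-size records. Sizes are arbitrary assumptions. */
enum { RECORD_BYTES = 16, MAX_RECORDS = 64 };

typedef struct {
    size_t records_per_rank;                  /* window size per rank, in records */
    unsigned char data[MAX_RECORDS][RECORD_BYTES];
} ram_disk;

/* Rank, displacement ~ random-access offset. */
static size_t rma_offset(const ram_disk *d, size_t rank, size_t disp)
{
    assert(disp < d->records_per_rank);
    return rank * d->records_per_rank + disp;
}

/* RMA put ~ write of one record at an offset. */
static void ram_disk_write(ram_disk *d, size_t offset, const void *rec)
{
    assert(offset < MAX_RECORDS);
    memcpy(d->data[offset], rec, RECORD_BYTES);
}

/* RMA get ~ read of one record from an offset. */
static void ram_disk_read(const ram_disk *d, size_t offset, void *rec)
{
    assert(offset < MAX_RECORDS);
    memcpy(rec, d->data[offset], RECORD_BYTES);
}
```

In this model, process 2 "putting" to (rank 2, displacement 3) and process 1 later "getting" from it is simply a write and a read of record 19 when each rank owns 8 records.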

What is a Dataset?

Dataset components, stored in the file system:

- Dictionary (processing history), holding name=value definitions (N=V), including `data=` for the binary data
- Format description
- Binary data: I/O records 0, 1, 2, …, N-1, N

Each I/O record is identified by a unique integer offset within the file, i.e., its coordinate within the dataset or the index of an array element on disk.

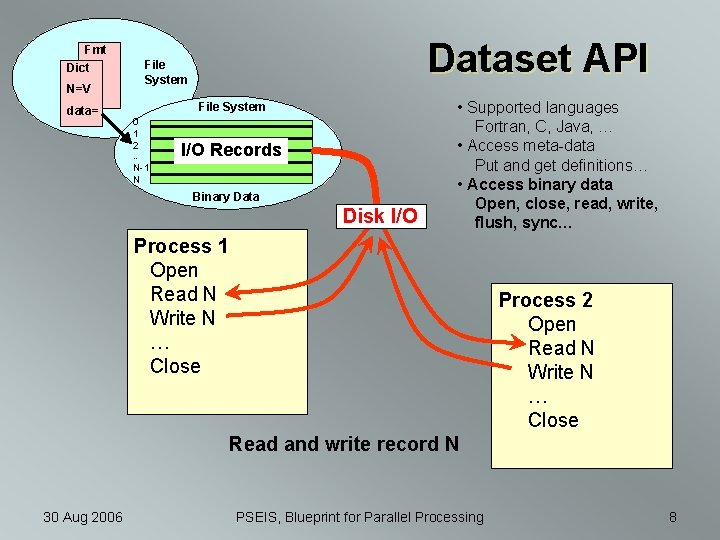
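
A minimal sketch of the two dataset ideas on this slide, under stated assumptions: the dictionary is taken to be whitespace-separated `name=value` tokens where a later definition overrides an earlier one (loosely mimicking an appended processing history), and records are fixed-size so record N starts at byte N times the record size. The dictionary syntax and the helper names `dict_get` and `record_byte_offset` are hypothetical, not the actual PSEIS/DDS format or API.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Look up NAME in a whitespace-separated "NAME=VALUE" dictionary.
 * Copies the value into out (truncated to outlen-1 chars) and returns 1,
 * or returns 0 if NAME is not defined. The last definition wins. */
static int dict_get(const char *dict, const char *name, char *out, size_t outlen)
{
    size_t nlen = strlen(name);
    int found = 0;
    const char *p = dict;
    assert(outlen > 0);
    while (*p) {
        while (*p == ' ' || *p == '\n' || *p == '\t') p++;        /* skip blanks */
        const char *tok = p;
        while (*p && *p != ' ' && *p != '\n' && *p != '\t') p++;  /* token end */
        size_t tlen = (size_t)(p - tok);
        if (tlen > nlen && strncmp(tok, name, nlen) == 0 && tok[nlen] == '=') {
            size_t vlen = tlen - nlen - 1;
            if (vlen >= outlen) vlen = outlen - 1;
            memcpy(out, tok + nlen + 1, vlen);
            out[vlen] = '\0';
            found = 1;   /* keep scanning: later definitions override */
        }
    }
    return found;
}

/* Byte position of I/O record n in the binary data, assuming fixed-size records. */
static long record_byte_offset(long n, long record_bytes)
{
    return n * record_bytes;
}
```

For example, with 1000 four-byte samples per record, record 3 begins at byte 12000 of the binary data.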

Dataset API

- Supported languages: Fortran, C, Java, …
- Access meta-data: put and get definitions, …
- Access binary data: open, close, read, write, flush, sync, …

Disk I/O: processes 1 and 2 each open the dataset, read and write record N, …, and close it.

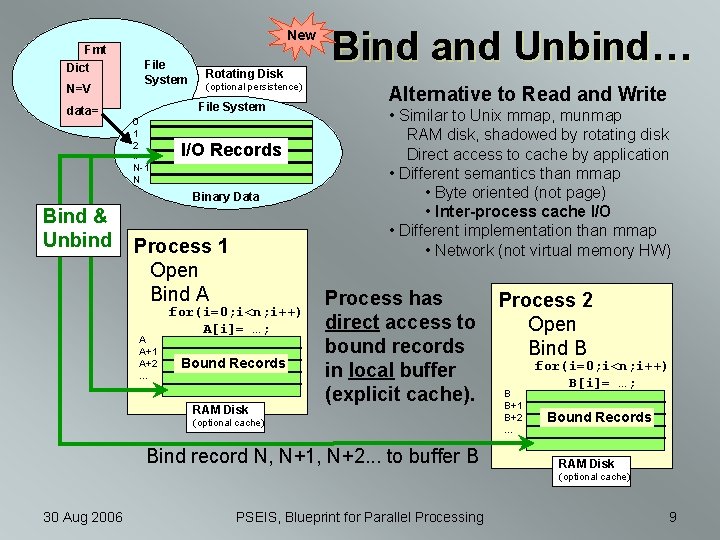
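
The "read and write record N" disk I/O above can be sketched with POSIX positioned I/O, assuming a plain binary file of fixed-length records. `read_record` and `write_record` are hypothetical names, not the PSEIS API; `pread` and `pwrite` are standard POSIX calls, and `stdio.h` is included only so the usage example can create a scratch file with `tmpfile()`.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdio.h>        /* tmpfile()/fileno() for a scratch dataset in the example */
#include <sys/types.h>
#include <unistd.h>

/* Write record n at byte offset n * record_bytes. Returns 0 or -1. */
static int write_record(int fd, long n, const void *buf, size_t record_bytes)
{
    off_t pos = (off_t)n * (off_t)record_bytes;
    ssize_t done = pwrite(fd, buf, record_bytes, pos);
    return done == (ssize_t)record_bytes ? 0 : -1;
}

/* Read record n; a short read (e.g. past end of file) is reported as -1. */
static int read_record(int fd, long n, void *buf, size_t record_bytes)
{
    off_t pos = (off_t)n * (off_t)record_bytes;
    ssize_t got = pread(fd, buf, record_bytes, pos);
    return got == (ssize_t)record_bytes ? 0 : -1;
}
```

Because each call carries its own offset, two processes can read and write different records of the same open dataset without sharing a file position.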

Bind and Unbind… Alternative to Read and Write (New)

Each process opens the dataset and binds a range of records (N, N+1, N+2, …) to a local buffer: A, A+1, A+2, … in process 1 and B, B+1, B+2, … in process 2. The process then has direct access to the bound records in its local buffer, which acts as an explicit cache (an optional RAM disk), e.g. `for(i=0; i<n; i++) A[i]= …;` in process 1 and `for(i=0; i<n; i++) B[i]= …;` in process 2. The rotating disk shadows the RAM disk and provides optional persistence.

- Similar to Unix mmap and munmap: a RAM disk shadowed by rotating disk, with direct application access to the cache
- Different semantics than mmap: byte-oriented (not page-oriented), with inter-process cache I/O
- Different implementation than mmap: uses the network (not virtual-memory hardware)

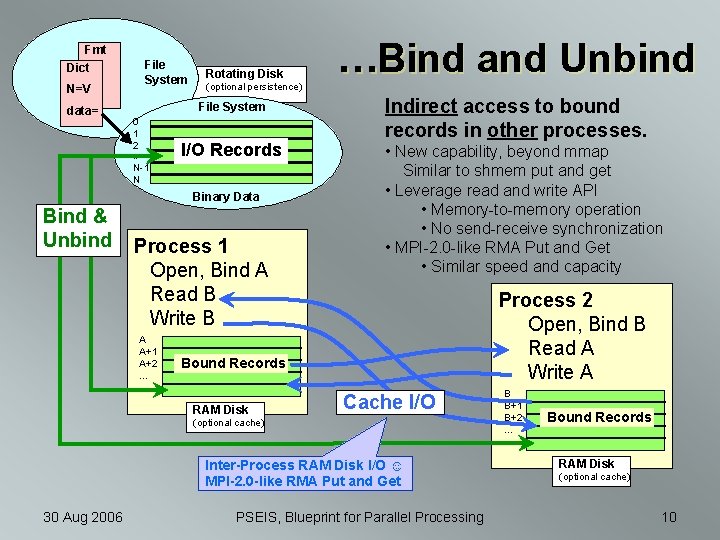
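
A single-process sketch of bind and unbind, assuming the "disk" is an in-memory array with one float per record (the slides describe byte-oriented records; one float keeps the example short). `binding`, `bind_records` and `unbind_records` are hypothetical names. Bind performs the implicit read, the application then writes the buffer directly, and unbind writes it back.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

typedef struct {
    long first;    /* first bound record offset */
    long count;    /* number of bound records */
    float *buf;    /* local buffer: direct access to the bound records */
} binding;

/* Bind initializes the buffer via an implicit read of the records. */
static binding bind_records(const float *disk, long nrec, long first, long count)
{
    binding b = { first, count, NULL };
    assert(first >= 0 && count > 0 && first + count <= nrec);
    b.buf = malloc((size_t)count * sizeof *b.buf);
    assert(b.buf != NULL);
    memcpy(b.buf, disk + first, (size_t)count * sizeof *b.buf);
    return b;
}

/* Unbind writes the buffer back and releases it. This model has only one
 * cache, so the write-back is unconditional. */
static void unbind_records(float *disk, binding *b)
{
    memcpy(disk + b->first, b->buf, (size_t)b->count * sizeof *b->buf);
    free(b->buf);
    b->buf = NULL;
}
```

Between bind and unbind, a loop such as `for (i = 0; i < a.count; i++) a.buf[i] = …;` touches only local memory; the disk sees the result when the records are unbound.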

…Bind and Unbind

Indirect access to bound records in other processes: process 1 binds buffer A and reads and writes records bound to B, while process 2 binds buffer B and reads and writes records bound to A. This is inter-process RAM disk I/O ☺, i.e., MPI-2.0-like RMA put and get. The rotating disk still provides optional persistence.

- New capability, beyond mmap; similar to shmem put and get
- Leverages the read and write API
- Memory-to-memory operation
- No send-receive synchronization
- MPI-2.0-like RMA put and get, with similar speed and capacity

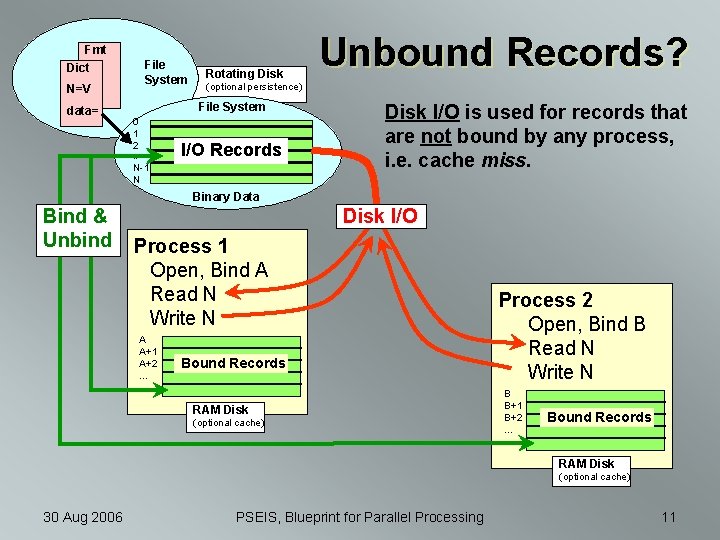

Unbound Records?

Disk I/O is used for records that are not bound by any process, i.e., on a cache miss. Process 1 (bound buffer A) and process 2 (bound buffer B) both read and write record N, which is held only on the rotating disk.

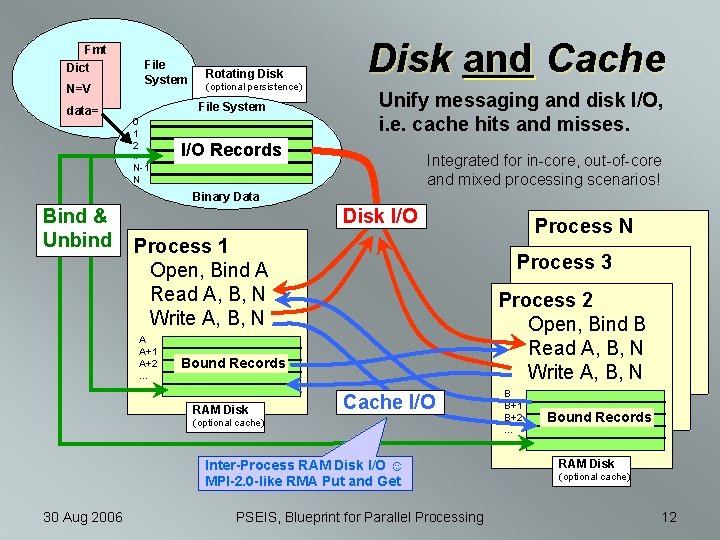
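
The hit-or-miss read path can be sketched as follows, assuming every process's bindings are visible in one table (standing in for the globally known cache) and one float per record. The names `binding`, `find_binding` and `read_record` are hypothetical; a real implementation would fetch a remote hit over the network rather than through a local pointer.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    long first, count;   /* bound record range [first, first + count) */
    float *buf;          /* owning process's local buffer */
} binding;

/* Returns the binding that holds record n, or NULL on a cache miss. */
static const binding *find_binding(const binding *tab, size_t nb, long n)
{
    for (size_t i = 0; i < nb; i++)
        if (n >= tab[i].first && n < tab[i].first + tab[i].count)
            return &tab[i];
    return NULL;
}

/* Read record n from any cache that has it; otherwise fall back to disk.
 * Returns 1 for a cache hit and 0 for a disk read. */
static int read_record(const binding *tab, size_t nb, const float *disk,
                       long n, float *out)
{
    const binding *b = find_binding(tab, nb, n);
    if (b != NULL) {
        *out = b->buf[n - b->first];
        return 1;
    }
    *out = disk[n];
    return 0;
}
```

The caller uses the same `read_record` call for bound and unbound records; whether the data comes from another process's buffer or from disk is decided inside.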

Disk and Cache

Messaging and disk I/O are unified as cache hits and misses, integrated for in-core, out-of-core and mixed processing scenarios! Processes 1, 2, 3, …, N each open the dataset, bind their own records (A, B, …), and read and write records A, B and N. Records bound by another process are accessed through cache I/O (inter-process RAM disk I/O ☺, MPI-2.0-like RMA put and get); unbound records are accessed through disk I/O.

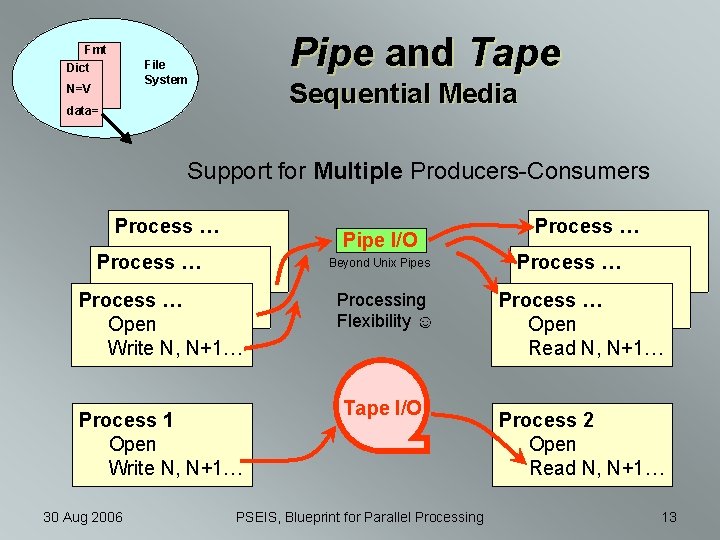

Pipe and Tape

Sequential media (pipe I/O and tape I/O) with support for multiple producers and consumers go beyond Unix pipes and add processing flexibility ☺. Producers (process 1, …) open the dataset and write records N, N+1, …; consumers (process 2, …) open it and read records N, N+1, …

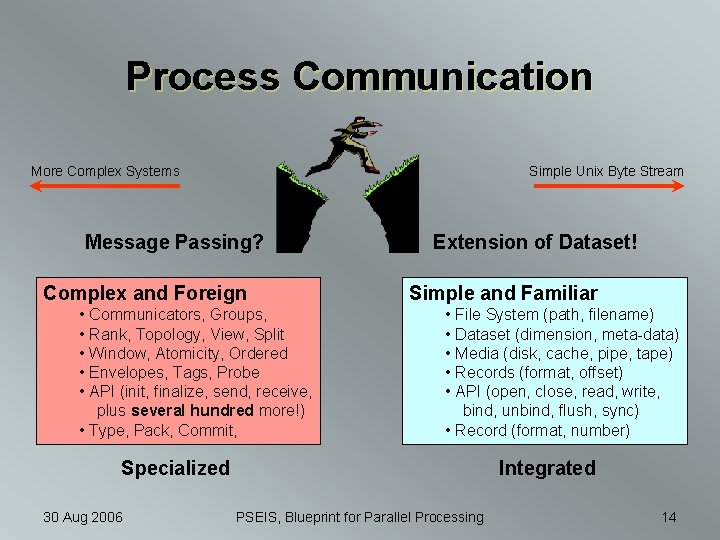
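
One way to picture multiple producers sharing sequential media is a plain POSIX pipe carrying fixed-size records. POSIX guarantees that a `write()` of at most `PIPE_BUF` bytes to a pipe is not interleaved with data from other writers, so each record arrives whole. The `record` layout and the helpers `put_record` and `get_record` are hypothetical, not how PSEIS implements pipe I/O.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <limits.h>
#include <unistd.h>

typedef struct {
    int producer;    /* which producer wrote the record */
    long number;     /* record number N, N+1, ... */
    float sample;    /* payload (illustrative) */
} record;

/* One write() per record; records no larger than PIPE_BUF are written atomically. */
static int put_record(int fd, const record *r)
{
    assert(sizeof *r <= (size_t)PIPE_BUF);
    return write(fd, r, sizeof *r) == (ssize_t)sizeof *r ? 0 : -1;
}

/* Read exactly one record; loop because read() may return fewer bytes. */
static int get_record(int fd, record *r)
{
    char *p = (char *)r;
    size_t left = sizeof *r;
    while (left > 0) {
        ssize_t got = read(fd, p, left);
        if (got <= 0) return -1;   /* end of stream or error */
        p += got;
        left -= (size_t)got;
    }
    return 0;
}
```

A consumer sees records in the order the producers' writes reached the pipe, so record numbers let it tell the streams apart.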

Process Communication: More Complex Systems

Beyond the simple Unix byte stream, what should be used?

Message passing? Complex, foreign and specialized:

- Communicators, groups
- Rank, topology, view, split
- Window, atomicity, ordered
- Envelopes, tags, probe
- Type, pack, commit
- API (init, finalize, send, receive, plus several hundred more!)

Extension of dataset! Simple, familiar and integrated:

- File system (path, filename)
- Dataset (dimension, meta-data)
- Media (disk, cache, pipe, tape)
- Records (format, offset or number)
- API (open, close, read, write, bind, unbind, flush, sync)

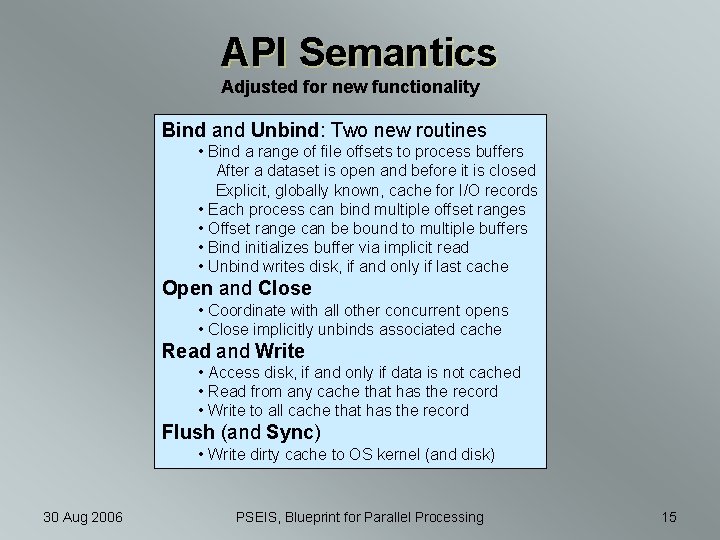

API Semantics

Adjusted for new functionality.

Bind and unbind (two new routines):

- Bind a range of file offsets to process buffers, after a dataset is opened and before it is closed; this gives an explicit, globally known cache for I/O records
- Each process can bind multiple offset ranges
- An offset range can be bound to multiple buffers
- Bind initializes the buffer via an implicit read
- Unbind writes to disk if and only if it releases the last cache of the records

Open and close:

- Coordinate with all other concurrent opens
- Close implicitly unbinds the associated cache

Read and write:

- Access disk if and only if the data is not cached
- Read from any cache that has the record
- Write to every cache that has the record

Flush (and sync):

- Write dirty cache to the OS kernel (and disk)

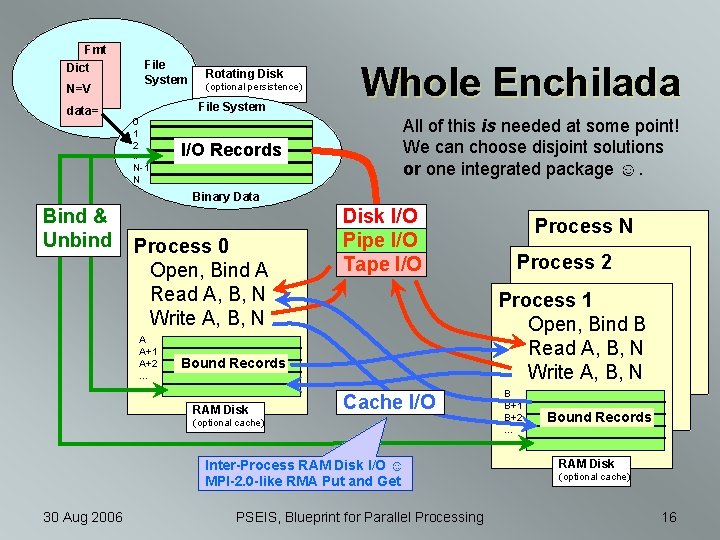
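
The write and unbind rules can be sketched under the same simplifying assumptions as before: one table holds every binding, and each record is a single float. `binding`, `covers`, `write_record` and `unbind` are hypothetical names. A write updates every cache that holds the record and touches disk only when none does; unbind writes a record to disk only if no other binding still caches it.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    long first, count;   /* bound range [first, first + count) */
    float *buf;          /* NULL once unbound */
} binding;

static int covers(const binding *b, long n)
{
    return b->buf != NULL && n >= b->first && n < b->first + b->count;
}

/* Write to every cache that holds record n; write disk only if none does.
 * Returns the number of caches updated. */
static int write_record(binding *tab, size_t nb, float *disk, long n, float v)
{
    int hits = 0;
    for (size_t i = 0; i < nb; i++)
        if (covers(&tab[i], n)) {
            tab[i].buf[n - tab[i].first] = v;
            hits++;
        }
    if (hits == 0)
        disk[n] = v;
    return hits;
}

/* Unbind binding k: write a record to disk only if this is its last cache. */
static void unbind(binding *tab, size_t nb, size_t k, float *disk)
{
    binding *b = &tab[k];
    for (long n = b->first; n < b->first + b->count; n++) {
        int others = 0;
        for (size_t i = 0; i < nb; i++)
            if (i != k && covers(&tab[i], n))
                others = 1;
        if (!others)
            disk[n] = b->buf[n - b->first];
    }
    b->buf = NULL;   /* the buffer memory itself stays owned by the caller */
}
```

Because every cache receives each write, all caches stay consistent, and any of them can safely be the one that finally writes through at unbind.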

Whole Enchilada

All of this is needed at some point! We can choose disjoint solutions or one integrated package ☺ covering disk I/O, pipe I/O, tape I/O and cache I/O (inter-process RAM disk I/O ☺, MPI-2.0-like RMA put and get). Processes 0, 1, 2, …, N each open the dataset, bind their own records (A, B, …), and read and write records A, B and N.

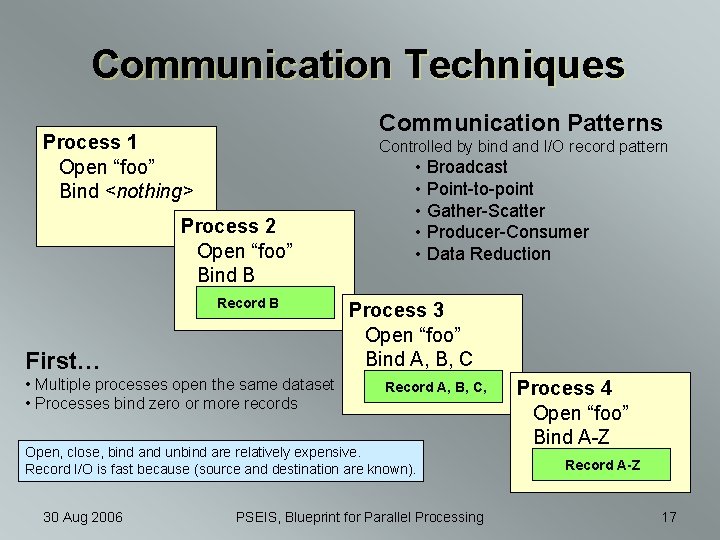

Communication Techniques: Communication Patterns

Patterns are controlled by the bind and I/O record pattern. First, multiple processes open the same dataset; then each process binds zero or more records. Supported patterns:

- Broadcast
- Point-to-point
- Gather-scatter
- Producer-consumer
- Data reduction

Example: processes 1 to 4 all open “foo”. Process 1 binds nothing; process 2 binds record B and accesses B; process 3 binds A, B and C and accesses them; process 4 binds A-Z and accesses them.

Open, close, bind and unbind are relatively expensive. Record I/O is fast because the source and destination are known.

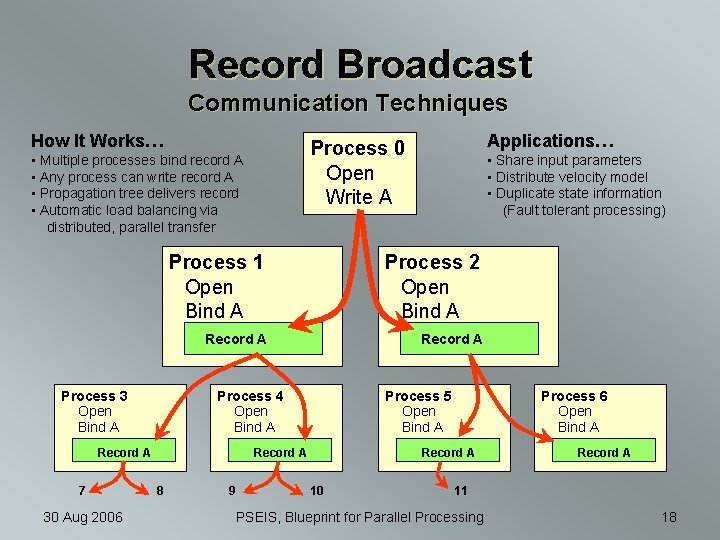
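
A sketch of how the bind pattern could determine the communication pattern for a single record: if nobody binds it, I/O goes to disk; one binder means point-to-point; several binders mean a broadcast. This assumes each process binds at most one contiguous range, and `bound_range`, `pattern` and `pattern_for_record` are hypothetical names. Letters A-Z from the example map to records 0-25.

```c
#include <assert.h>
#include <stddef.h>

/* One contiguous bound range per process; first > last means "binds nothing". */
typedef struct { long first, last; } bound_range;

typedef enum { TO_DISK, POINT_TO_POINT, BROADCAST } pattern;

static pattern pattern_for_record(const bound_range *procs, size_t nprocs, long rec)
{
    size_t binders = 0;
    for (size_t p = 0; p < nprocs; p++)
        if (rec >= procs[p].first && rec <= procs[p].last)
            binders++;
    if (binders == 0) return TO_DISK;          /* unbound: cache miss, disk I/O */
    if (binders == 1) return POINT_TO_POINT;   /* exactly one destination */
    return BROADCAST;                          /* deliver to every binder */
}
```

With the four processes of the example, record B is bound three times (broadcast), record K only by process 4 (point-to-point), and a record outside A-Z by no one (disk).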

Communication Techniques: Record Broadcast

How it works:

- Multiple processes bind record A
- Any process can write record A
- A propagation tree delivers the record
- Automatic load balancing via distributed, parallel transfer

Applications:

- Share input parameters
- Distribute a velocity model
- Duplicate state information (fault-tolerant processing)

In the example, process 0 opens the dataset and writes record A; processes 1 to 6 open it and bind A, and record A propagates to each of them through the tree.
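
The propagation tree can be sketched as a binary tree rooted at the writer. The slide does not specify the tree shape PSEIS uses, so this is only one simple assumption: the writer is node 0, binders are nodes 1 to nprocs-1, and node r forwards the record to nodes 2r+1 and 2r+2. `bcast_children` and `bcast_depth` are hypothetical names.

```c
#include <assert.h>

/* Children of node r in the binary propagation tree; returns 0, 1 or 2. */
static int bcast_children(int r, int nprocs, int children[2])
{
    int k = 0;
    for (int c = 2 * r + 1; c <= 2 * r + 2 && c < nprocs; c++)
        children[k++] = c;
    return k;
}

/* Forwarding steps until every node has the record (depth of the deepest node). */
static int bcast_depth(int nprocs)
{
    int depth = 0;
    for (int last = nprocs - 1; last > 0; last = (last - 1) / 2)
        depth++;
    return depth;
}
```

For the seven processes in the figure, process 0 sends to 1 and 2, which forward to 3 to 6, so the record arrives after two steps and no process sends more than two copies. This sharing of the sending work is what gives the load balancing.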

Communication Techniques: Point-to-Point

How it works:

- Each process binds unique records
- Publish the process-record association
- Read and write records as needed

The source (or destination) is determined by the record number within the dataset.

Applications:

- Computational grids
- Processing windows

In the finite-difference grid example, processes 1, 2 and 3 open the dataset, bind the top, middle and bottom of the grid respectively, and read the overlap from their neighbors. For restart, the cache is flushed and unbound to disk, then bound again.

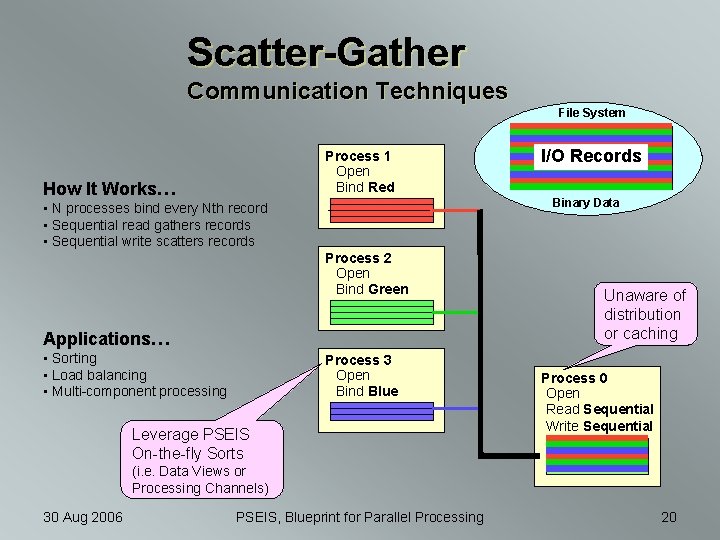
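
A sketch of the finite-difference case, assuming one I/O record per grid row and a contiguous block partition (top, middle, bottom, …) where the first `nrows % nprocs` slabs receive one extra row. `slab` and `row_owner` are hypothetical names. Because every row has exactly one owner, the owner is the source for any read of that row, including overlap rows.

```c
#include <assert.h>

/* Slab of process p: rows [*first, *first + *count). */
static void slab(long nrows, int nprocs, int p, long *first, long *count)
{
    long base = nrows / nprocs, extra = nrows % nprocs;
    *count = base + (p < extra ? 1 : 0);
    *first = p * base + (p < extra ? p : extra);
}

/* Process that binds a given row, or -1 if the row is out of range. */
static int row_owner(long nrows, int nprocs, long row)
{
    for (int p = 0; p < nprocs; p++) {
        long first, count;
        slab(nrows, nprocs, p, &first, &count);
        if (row >= first && row < first + count)
            return p;
    }
    return -1;
}
```

With 10 rows and 3 processes, the middle process binds rows 4 to 6; with a one-row overlap it would read row 3 from the top process and row 7 from the bottom process.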

Communication Techniques: Scatter-Gather

How it works:

- N processes bind every Nth record
- Sequential read gathers records
- Sequential write scatters records

Applications:

- Sorting
- Load balancing
- Multi-component processing

Example: processes 1, 2 and 3 bind the red, green and blue I/O records of the binary data, respectively. Process 0 opens the dataset and reads and writes sequentially, unaware of the distribution or caching. This leverages PSEIS on-the-fly sorts (i.e., data views or processing channels).

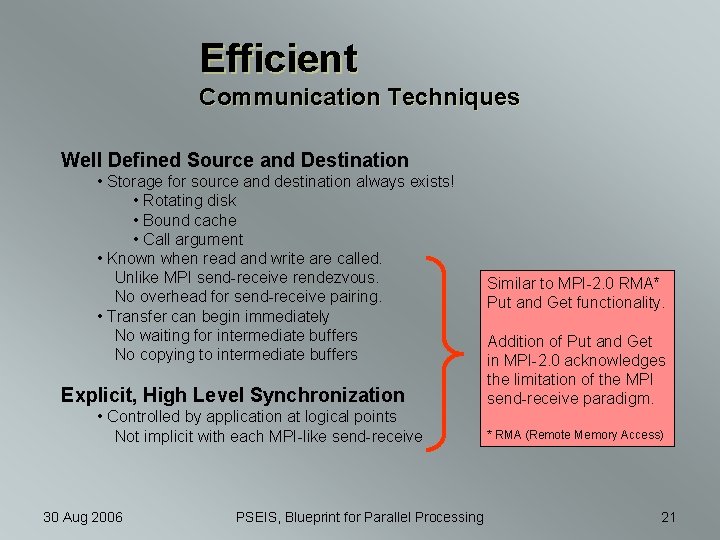
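
A sketch of the scatter-gather mapping, assuming nprocs processes each bind every nprocs-th record so that record r lives in process `r % nprocs` at local index `r / nprocs`, with one float per record. `locate`, `gather` and `scatter` are hypothetical names; the sequential loops stand in for process 0's plain reads and writes.

```c
#include <assert.h>
#include <stddef.h>

/* Owning process and local index of record r under a round-robin binding. */
static void locate(long r, int nprocs, int *proc, long *index)
{
    *proc = (int)(r % nprocs);
    *index = r / nprocs;
}

/* Sequential read of records 0..n-1 gathers them from all buffers. */
static void gather(float *const bufs[], int nprocs, float *out, long n)
{
    for (long r = 0; r < n; r++) {
        int p; long i;
        locate(r, nprocs, &p, &i);
        out[r] = bufs[p][i];
    }
}

/* Sequential write of records 0..n-1 scatters them to all buffers. */
static void scatter(float *const bufs[], int nprocs, const float *in, long n)
{
    for (long r = 0; r < n; r++) {
        int p; long i;
        locate(r, nprocs, &p, &i);
        bufs[p][i] = in[r];
    }
}
```

With three processes, records 0 and 3 land in the "red" buffer, 1 and 4 in "green", and 2 and 5 in "blue", while the sequential reader or writer only ever sees record numbers 0 to 5.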

Communication Techniques: Efficient

Well-defined source and destination:

- Storage for the source and destination always exists: rotating disk, bound cache, or call argument
- Both are known when read and write are called, unlike the MPI send-receive rendezvous, so there is no overhead for send-receive pairing
- Transfer can begin immediately, with no waiting for and no copying to intermediate buffers

Explicit, high-level synchronization:

- Controlled by the application at logical points, not implicit with each MPI-like send-receive

This is similar to MPI-2.0 RMA* put and get functionality. The addition of put and get in MPI-2.0 acknowledges the limitation of the MPI send-receive paradigm.

*RMA (Remote Memory Access)

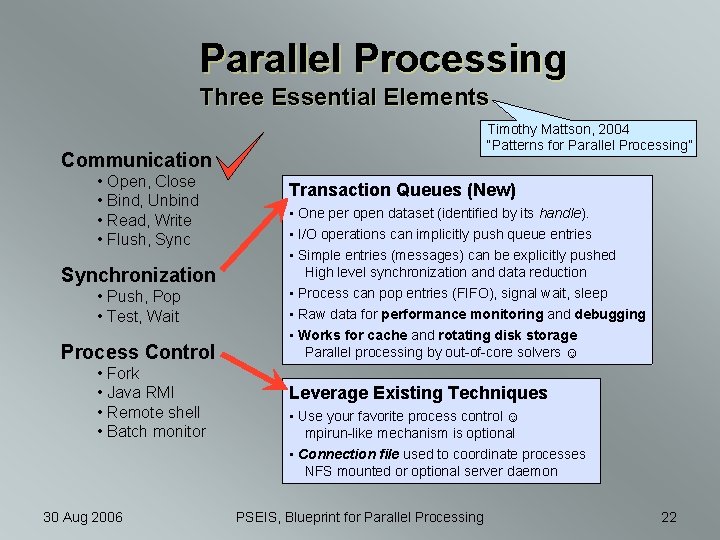

Parallel Processing: Three Essential Elements

(Timothy Mattson, 2004, “Patterns for Parallel Programming”)

Communication:

- Open, close
- Bind, unbind
- Read, write
- Flush, synchronization
- Push, pop
- Test, wait

Process control:

- Fork
- Java RMI
- Remote shell
- Batch monitor

Transaction queues (new):

- One per open dataset (identified by its handle)
- I/O operations can implicitly push queue entries
- Simple entries (messages) can be explicitly pushed, for high-level synchronization and data reduction
- A process can pop entries (FIFO), signal, wait, or sleep
- Raw data for performance monitoring and debugging
- Works for cache and rotating disk storage, enabling parallel processing by out-of-core solvers ☺

Leverage existing techniques:

- Use your favorite process control ☺; an mpirun-like mechanism is optional
- A connection file is used to coordinate processes (NFS-mounted, or an optional server daemon)
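
A transaction queue per open dataset could be as simple as a fixed-capacity FIFO ring. This sketch assumes an entry holds a kind (an implicit write notification or an explicit message), a record number and a small value; `tqueue`, `tq_push`, `tq_pop` and the capacity are hypothetical, not the PSEIS design.

```c
#include <assert.h>

enum { QCAP = 8 };                                  /* assumed capacity */
typedef enum { TQ_WRITE, TQ_MESSAGE } tq_kind;
typedef struct { tq_kind kind; long record; int value; } tq_entry;
typedef struct { tq_entry e[QCAP]; int head, size; } tqueue;

/* Push at the tail; returns -1 if the queue is full. */
static int tq_push(tqueue *q, tq_entry x)
{
    if (q->size == QCAP) return -1;
    q->e[(q->head + q->size) % QCAP] = x;
    q->size++;
    return 0;
}

/* Pop from the head (FIFO); returns -1 if empty, where a caller might wait or sleep. */
static int tq_pop(tqueue *q, tq_entry *x)
{
    if (q->size == 0) return -1;
    *x = q->e[q->head];
    q->head = (q->head + 1) % QCAP;
    q->size--;
    return 0;
}
```

For data reduction, a reducing process could pop `TQ_MESSAGE` entries and accumulate their `value` fields; the same entries, logged, would supply raw data for performance monitoring.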

Connection File…

The connection file is stored with the dataset and is used to find all processes that have a particular dataset open. It records, for each concurrent open:

- Host name
- Process ID
- IP address
- Port number

Open adds an entry and close deletes it. When opening, each process (1, 2, 3, …, N) locks, updates and unlocks the connection file, then creates socket connections as needed. Sockets provide direct communication between processes, and multiple datasets share sockets (consolidating transactions).

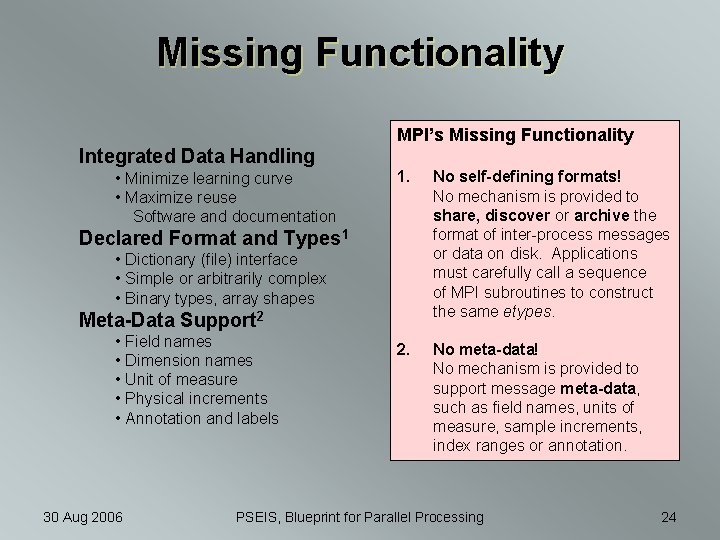
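
The lock, update and unlock step can be sketched with a POSIX `fcntl()` write lock on a text file holding one line per open. The line format `host pid ip port` and the helpers `add_connection` and `count_connections` are assumptions for illustration only.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>

/* Lock or unlock the whole file; F_SETLKW blocks until the lock is granted. */
static int set_lock(int fd, short type)
{
    struct flock fl;
    memset(&fl, 0, sizeof fl);
    fl.l_type = type;          /* F_WRLCK or F_UNLCK */
    fl.l_whence = SEEK_SET;    /* l_start = l_len = 0 covers the whole file */
    return fcntl(fd, F_SETLKW, &fl);
}

/* Open adds an entry: lock, append "host pid ip port", unlock. */
static int add_connection(const char *path, const char *host, long pid,
                          const char *ip, int port)
{
    char line[256];
    int len = snprintf(line, sizeof line, "%s %ld %s %d\n", host, pid, ip, port);
    if (len < 0 || (size_t)len >= sizeof line) return -1;
    int fd = open(path, O_WRONLY | O_CREAT | O_APPEND, 0644);
    if (fd < 0) return -1;
    int rc = -1;
    if (set_lock(fd, F_WRLCK) == 0) {
        if (write(fd, line, (size_t)len) == (ssize_t)len) rc = 0;
        set_lock(fd, F_UNLCK);
    }
    close(fd);
    return rc;
}

/* Number of concurrent opens = number of entry lines. */
static int count_connections(const char *path)
{
    FILE *f = fopen(path, "r");
    if (f == NULL) return -1;
    int n = 0, c;
    while ((c = fgetc(f)) != EOF)
        if (c == '\n') n++;
    fclose(f);
    return n;
}
```

Deleting an entry on close would follow the same pattern: take the lock, rewrite the file without that process's line, and release the lock. `fcntl()` locks are not guaranteed to work on every NFS setup, which is one reason an optional server daemon could be useful.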

MPI’s Missing Functionality

1. No self-defining formats! No mechanism is provided to share, discover or archive the format of inter-process messages or data on disk. Applications must carefully call a sequence of MPI subroutines to construct the same etypes.
2. No meta-data! No mechanism is provided to support message meta-data, such as field names, units of measure, sample increments, index ranges or annotation.

Integrated data handling minimizes the learning curve and maximizes reuse of software and documentation:

1. Declared format and types: a dictionary (file) interface; simple or arbitrarily complex; binary types and array shapes
2. Meta-data support: field names, dimension names, units of measure, physical increments, annotation and labels

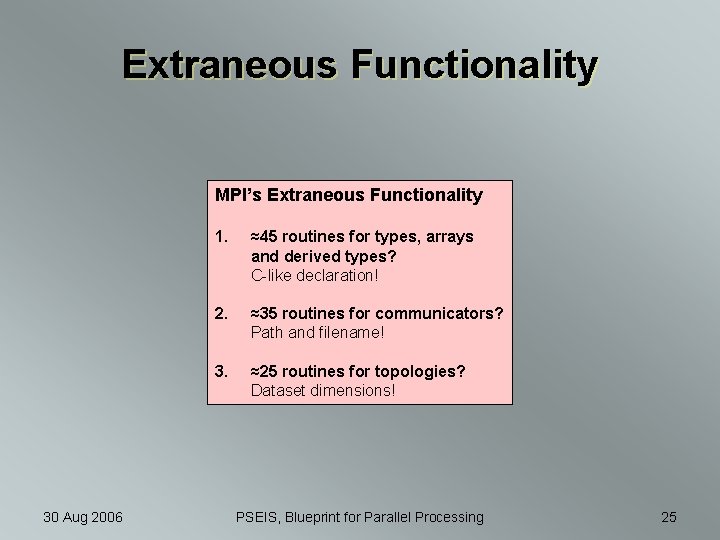
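
The meta-data items listed above can be pictured as a per-dimension descriptor. This sketch assumes a dimension is described by a name, unit, sample count, origin, physical increment and label; the `axis` struct and `axis_coord` are hypothetical, not the PSEIS dictionary schema. It shows the kind of information an MPI derived datatype, which describes only memory layout, does not carry.

```c
#include <assert.h>

typedef struct {
    const char *name;    /* dimension name, e.g. "time" */
    const char *unit;    /* unit of measure, e.g. "s" */
    long n;              /* number of samples (index range 0..n-1) */
    double origin;       /* physical coordinate of sample 0 */
    double delta;        /* physical increment between samples */
    const char *label;   /* annotation for plots */
} axis;

/* Physical coordinate of sample index i along the axis. */
static double axis_coord(const axis *a, long i)
{
    assert(i >= 0 && i < a->n);
    return a->origin + (double)i * a->delta;
}
```

For a time axis with a 0.004 s increment starting at 0 s, sample 250 sits at (approximately, in floating point) 1.0 s.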

MPI’s Extraneous Functionality

1. ≈45 routines for types, arrays and derived types? C-like declaration!
2. ≈35 routines for communicators? Path and filename!
3. ≈25 routines for topologies? Dataset dimensions!

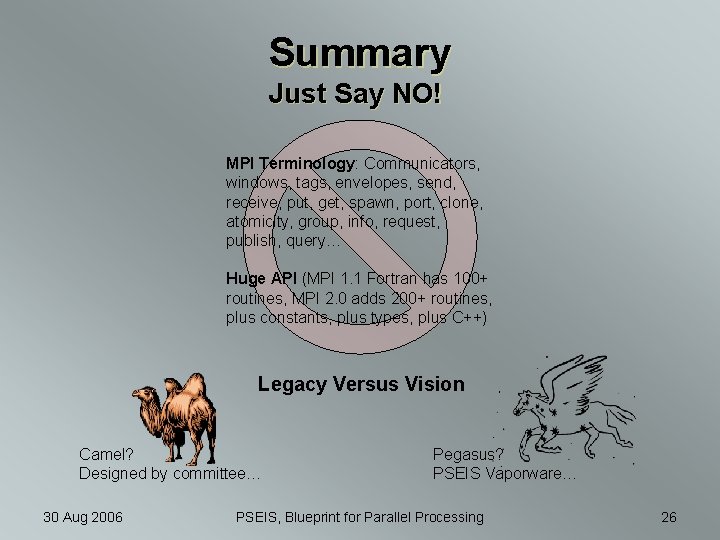

Summary: Just Say NO!

MPI terminology: communicators, windows, tags, envelopes, send, receive, put, get, spawn, port, clone, atomicity, group, info, request, publish, query…

Huge API: MPI 1.1 Fortran has 100+ routines, and MPI 2.0 adds 200+ routines, plus constants, plus types, plus C++.

Legacy versus vision: Camel? Designed by committee… Pegasus? PSEIS vaporware…

Acknowledgement

Many people have contributed to and supported DDS and PSEIS. The following deserve special thanks:

- Joe Dellinger, BP
- John Etgen, BP
- Jerry Ehlers, BP
- Jin Lee, BP
- Kurt Marfurt, University of Houston
- Dan Whitmore, ConocoPhillips

Thanks are also due to BP for releasing DDS as Open Source Software and for permission to publish this work.
