Project Name Preliminary Design Review (PDR) Software Section

- Slides: 21

Project Name Preliminary Design Review (PDR) Software Section

- Date of Review
- Name
- Organization
- Job Title
- Phone #
- E-mail

Overview

- Software Development Team
- Integrated Master Schedule (IMS) Highlighted with Software Milestones
- Software Entrance Criteria
- Software Development Process, Including the Problem Control Board
- Software Architecture
- Organization of CSCIs
- Functional Allocation to Increments
- Software Requirements Allocation
- Software Unit/CSCI Development Status/Type
- Test Procedures
- Software Integration Methodology
- Software Measurement
- Software Supplier Management Update
- Software Risk Assessment with Mitigation Strategies
- Issues and Concerns

11/9/2007 Preliminary Design Review (PDR)

Software Development Team

- Show Contractor and Government personnel.
- Show the Government counterpart to each member of the Contractor's key software leadership.
- Highlight personnel changes since SSR.
- Highlight organizational structure changes since SSR.

Integrated Master Schedule (IMS) Highlighted with Software Milestones

- Show a graphical representation of the IMS highlighting the software milestones.
- Show all dates critical to the software team, such as test periods, integration dates, hardware prototype availability, and laboratory completion dates. Include Non-Advocate Review dates and CMMI appraisal dates.
- If an incremental development approach is used, provide software milestones for each increment.

Software Entrance Criteria

- List the SETR Handbook entrance criteria applicable to the software.
- Show which criteria have been met (green) and which are yellow, red, or not applicable.
  - For criteria not yet met, indicate when they will be met or whether the Government has concurred and accepted the risk.

Functions Allocation to Each Increment

- Show the functional allocation to each increment, and highlight functions that depend on functions from previous increments.
- Show any movement of functions from one increment to another since SSR.

Software High-Level Design

- Functionally describe each software unit and/or CSCI, and identify the Software Requirements Specification (SwRS) requirements allocated to it.
- Show the preliminary interface design and the Interface Requirements Specification (IRS) requirements allocated to it.
- If an incremental approach is used, the expectation is that the CSCI and interface design for the first increment will be described at the System PDR. The follow-on incremental software CDRs will then show the detailed allocation of each increment's SwRS/IRS (as finalized at that increment's SSR) to the CSCI/interface design.

Software Unit/CSCI Development Status/Type

- Identify each software unit's/CSCI's development status/type, i.e., one of the following:
  - new development
  - existing design or software to be reused as is
  - existing design or software to be reengineered
  - software to be developed for reuse
- Identify challenges such as algorithms, timing, fusion, and interfacing.
- If an incremental approach is used, the expectation is that all CSCIs are identified, but status is reported only for the first increment's CSCIs.

Software Architecture

- Describe the architecture and the rationale for the chosen approach.
- Show the static ("consists of") relationships of the software units and/or CSCIs. Multiple relationships may be presented, depending on the selected software design methodology. For example, an object-oriented design might present the class and object structures as well as the module and process architectures of the CSCI.
- Describe the concept of execution among the software units and/or CSCIs. Include diagrams and descriptions showing the dynamic relationships of the software units and/or CSCIs, that is, how they interact during a use case.

Software Development Process, Including the Problem Control Board

- Identify development tools and how they are used during each phase of the development process.
- Describe any changes made to the processes since SFR and SSR as a result of lessons learned or process audits.
- Describe any changes to supporting processes such as Quality Assurance, Configuration Control (including Change Control), and Requirements Management.

Test Planning and Unit-Level Test Procedures

- Show the test schedule for all software testing, from unit-level testing through the integrated system testing that immediately precedes developmental test.
  - Show the schedule for developing the Test Plan and Test Procedures.
  - Describe how software development events depend on test planning and preparation.
- Show drop-dead dates for the labs and facilities needed for testing.
- Show the software drops planned for each test period.
- Show that unit-level test procedures will trace to the SwRS and IRS.

Software Integration Methodology

- Describe, in detail, the integration activities from the completion of unit-level testing to the completion of system-level testing.
- List the dependencies among the software configuration items.
- Show the schedule details for integration planning and activities.

Measures

- Present updates to the measures defined on the following five slides.

Measures

- Software Size
  - Software size is tracked using source lines of code (SLOC), equivalent SLOC (ESLOC), or function point indicators.
  - Total planned size by language and type (new, unmodified reused, and deleted)
  - Total actual SLOC by language and type (new, unmodified reused, and deleted)
- Development Progress Profile
  - Development progress is tracked as the percentage of work complete.
  - This measure tracks progress against initial plans and against builds, preferably as a function of requirements completed.
  - The measure tracks planned and actual work for the software requirements, software design, software implementation, and software test development activities.
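The size and development-progress indicators above reduce to sums and ratios. The sketch below shows one way they could be rolled up. It is illustrative only: the `SizeEntry` record, its field names, and the sample figures are hypothetical. Progress is counted in requirements completed, which is the basis the slide prefers.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SizeEntry:
    """Hypothetical size record for one component of a CSCI."""
    language: str
    kind: str          # assumed categories: "new", "unmodified_reused", "deleted"
    planned_sloc: int
    actual_sloc: int


def size_by_language_and_type(entries):
    """Total planned and actual SLOC for each (language, type) pair."""
    totals = defaultdict(lambda: [0, 0])
    for e in entries:
        key = (e.language, e.kind)
        totals[key][0] += e.planned_sloc
        totals[key][1] += e.actual_sloc
    return {key: (planned, actual) for key, (planned, actual) in totals.items()}


def percent_complete(done, total):
    """Work complete as a percentage of the total (e.g. requirements)."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * done / total


def progress_profile(activities):
    """Planned vs. actual percent complete for each development activity.

    `activities` maps an activity name (requirements, design, implementation,
    test development) to (planned_done, actual_done, total), counted here in
    requirements completed.
    """
    return {
        name: (percent_complete(planned, total), percent_complete(actual, total))
        for name, (planned, actual, total) in activities.items()
    }
```

For example, two C++ "new" entries with 12,000 and 3,000 planned SLOC roll up to one (C++, new) total of 15,000 planned SLOC. `progress_profile({"design": (80, 60, 100)})` returns `{"design": (80.0, 60.0)}`, which shows design running 20 points behind plan.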

Measures

- Computer Resource Utilization (CRU) Indicators
  - CRU indicators show the percentage of resource utilization at present and the estimate at completion.
  - The memory utilization measure tracks the percentage of target computer memory resources used.
  - The throughput utilization measure tracks the percentage of target computer throughput or CPU used under worst-case loading conditions.
  - The channel utilization measure tracks the percentage of target system channel and/or bus resources used.
- Build Release Indicators
  - The build plan measure tracks the capability of the software to satisfy the functionality of each scheduled incremental build.
  - Changes to the functionality of an incremental build may indicate potential problems such as requirements growth or schedule slip, particularly if capability is pushed to a subsequent build.

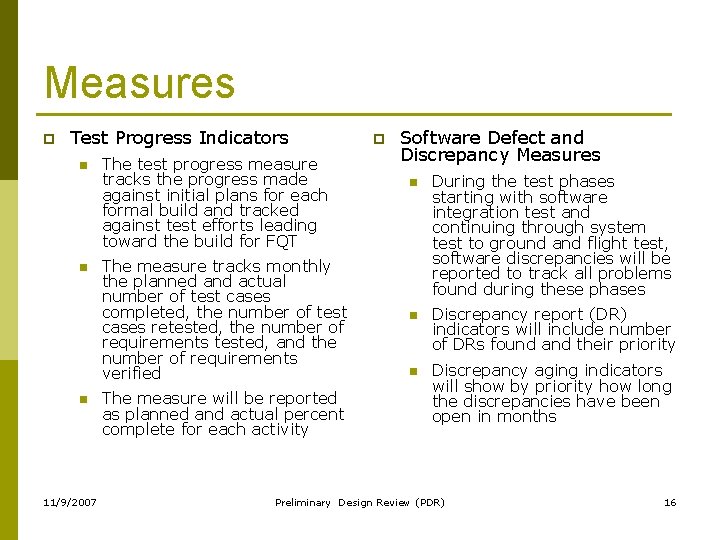
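The CRU and build release indicators are also simple ratios and comparisons. The sketch below is hypothetical throughout. The `limit_pct` reserve threshold is supplied by the caller and is not taken from this template, and resource names and integer build numbers are assumptions. The actual utilization limits come from the program's own requirements.

```python
def utilization_pct(used, capacity):
    """Percentage of a target resource (memory, CPU, channel/bus) in use."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    return 100.0 * used / capacity


def cru_report(resources, limit_pct):
    """Current and estimate-at-completion (EAC) utilization per resource.

    `resources` maps a resource name to (used_now, used_at_eac, capacity),
    all in the same unit. The flag is True when the EAC exceeds `limit_pct`,
    a hypothetical reserve threshold chosen by the caller.
    """
    report = {}
    for name, (used_now, used_eac, capacity) in resources.items():
        eac = utilization_pct(used_eac, capacity)
        report[name] = (utilization_pct(used_now, capacity), eac, eac > limit_pct)
    return report


def capability_slips(baseline_plan, current_plan):
    """Capabilities pushed to a later build than the baseline build plan.

    Both plans map a capability name to an integer build number. Capabilities
    missing from the current plan are also reported, because dropped
    functionality is a build-plan change as well.
    """
    slipped = []
    for capability, build in baseline_plan.items():
        new_build = current_plan.get(capability)
        if new_build is None or new_build > build:
            slipped.append(capability)
    return sorted(slipped)
```

Reporting both the current value and the EAC lets the review see when a resource is fine today but projected to breach its reserve.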

Measures

- Test Progress Indicators
  - The test progress measure tracks progress against initial plans for each formal build, and against the test efforts leading toward the build for Formal Qualification Test (FQT).
  - The measure tracks, monthly, the planned and actual numbers of test cases completed, test cases retested, requirements tested, and requirements verified.
  - The measure will be reported as planned and actual percent complete for each activity.
- Software Defect and Discrepancy Measures
  - During the test phases, from software integration test through system test to ground and flight test, software discrepancies will be reported so that all problems found during these phases are tracked.
  - Discrepancy report (DR) indicators will include the number of DRs found and their priority.
  - Discrepancy aging indicators will show, by priority, how long discrepancies have been open, in months.

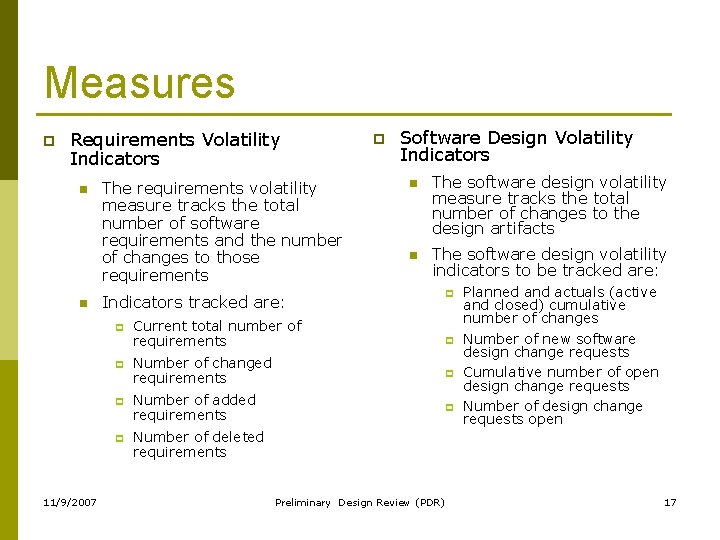

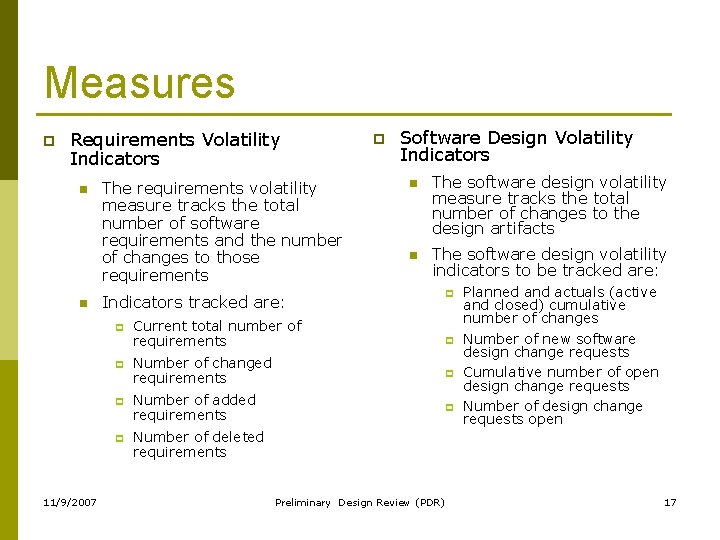
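The DR count and aging indicators can be derived from each DR's priority and open date. The sketch below is an illustration only. It assumes that DRs are represented as hypothetical `(priority, opened_date)` pairs and that age is counted in whole calendar months.

```python
from collections import Counter, defaultdict
from datetime import date


def months_open(opened: date, as_of: date) -> int:
    """Whole calendar months a discrepancy has been open as of a given date."""
    months = (as_of.year - opened.year) * 12 + (as_of.month - opened.month)
    if as_of.day < opened.day:
        months -= 1  # the current month has not been completed yet
    return max(months, 0)


def dr_counts_by_priority(drs):
    """Number of DRs found, by priority; `drs` is a list of (priority, opened_date)."""
    return dict(Counter(priority for priority, _ in drs))


def aging_by_priority(open_drs, as_of: date):
    """Months open for each still-open DR, grouped by priority (ascending)."""
    aging = defaultdict(list)
    for priority, opened in open_drs:
        aging[priority].append(months_open(opened, as_of))
    return {priority: sorted(ages) for priority, ages in aging.items()}
```

Counting whole months matches the slide's "in months" granularity. A DR opened on 15 January therefore shows as one month old until 15 March.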

Measures

- Requirements Volatility Indicators
  - The requirements volatility measure tracks the total number of software requirements and the number of changes to those requirements.
  - Indicators tracked are:
    - Current total number of requirements
    - Number of changed requirements
    - Number of added requirements
    - Number of deleted requirements
- Software Design Volatility Indicators
  - The software design volatility measure tracks the total number of changes to the design artifacts.
  - The software design volatility indicators to be tracked are:
    - Planned and actual (active and closed) cumulative number of changes
    - Number of new software design change requests
    - Cumulative number of open design change requests
    - Number of design change requests open

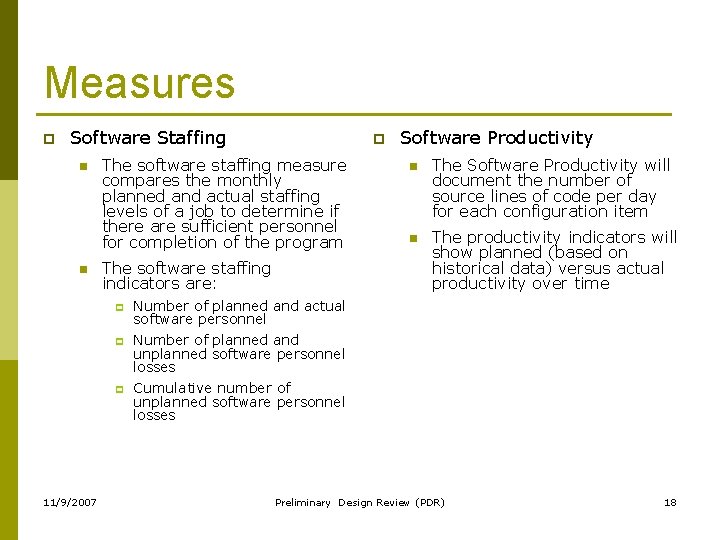

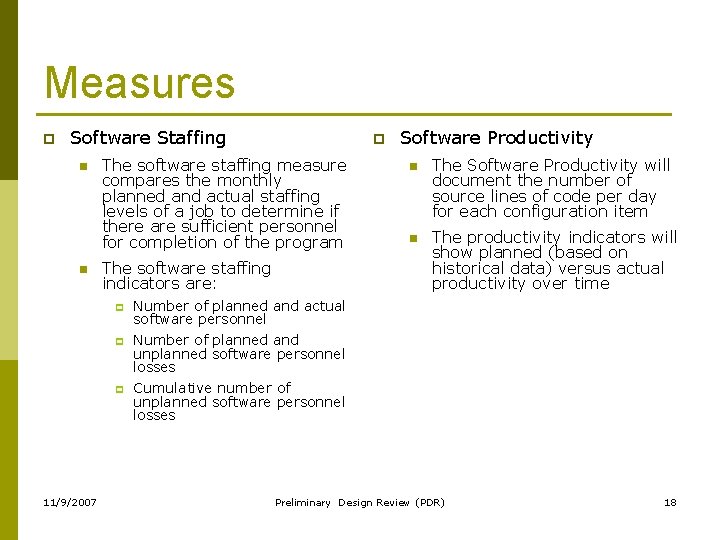
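The requirement counts listed above can be computed by comparing two snapshots of the requirements baseline. The sketch below makes several assumptions. Snapshots are hypothetical dictionaries mapping requirement ID to text. The percentage formula, (added + changed + deleted) divided by the baseline total, is one common formulation and is not defined on this slide. The open design change request count is a running total of new minus closed requests.

```python
from itertools import accumulate


def requirements_volatility(baseline, current):
    """Compare two requirement snapshots keyed by requirement ID.

    Each snapshot maps an ID to its requirement text. Volatility is taken
    here as (added + changed + deleted) / baseline total, in percent; this
    formula is an assumption, not prescribed by the measure definition.
    """
    base_ids, cur_ids = set(baseline), set(current)
    added = len(cur_ids - base_ids)
    deleted = len(base_ids - cur_ids)
    changed = sum(1 for rid in base_ids & cur_ids if baseline[rid] != current[rid])
    pct = 100.0 * (added + changed + deleted) / len(baseline) if baseline else 0.0
    return {"total": len(current), "added": added, "changed": changed,
            "deleted": deleted, "volatility_pct": pct}


def open_design_change_requests(new_per_month, closed_per_month):
    """Running count of open design change requests at the end of each month."""
    net = [new - closed for new, closed in zip(new_per_month, closed_per_month)]
    return list(accumulate(net))
```

For example, if four baseline requirements become four current ones through one added, one changed, and one deleted requirement, the volatility is 75 percent.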

Measures

- Software Staffing
  - The software staffing measure compares monthly planned and actual staffing levels to determine whether there are sufficient personnel to complete the program.
  - The software staffing indicators are:
    - Number of planned and actual software personnel
    - Number of planned and unplanned software personnel losses
    - Cumulative number of unplanned software personnel losses
- Software Productivity
  - Software productivity will be documented as the number of source lines of code per day for each configuration item.
  - The productivity indicators will show planned (based on historical data) versus actual productivity over time.

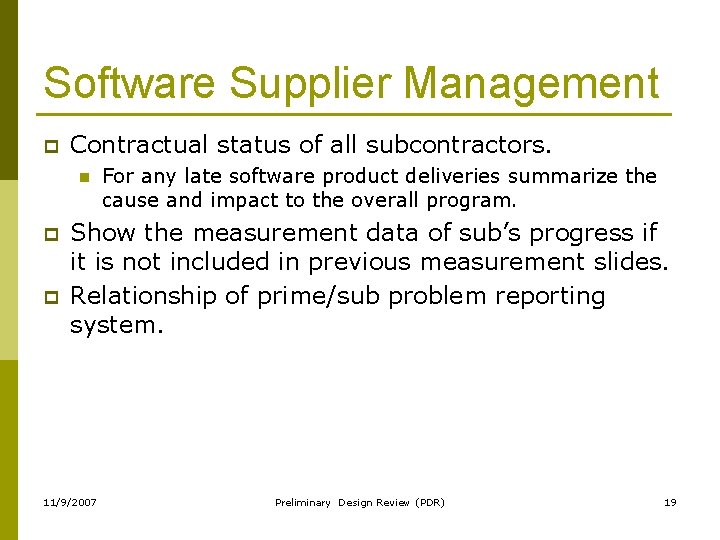
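The staffing and productivity indicators are monthly differences, running totals, and a ratio. The sketch below is a minimal illustration. It interprets "source lines of code per day" as SLOC per staff-day, which is an assumption. The configuration item names it accepts are arbitrary.

```python
from itertools import accumulate


def staffing_variance(planned, actual):
    """Actual minus planned head count per month (negative means understaffed)."""
    return [a - p for p, a in zip(planned, actual)]


def cumulative_unplanned_losses(losses_per_month):
    """Running total of unplanned software personnel losses."""
    return list(accumulate(losses_per_month))


def productivity(sloc_by_ci, staff_days_by_ci):
    """SLOC per staff-day for each configuration item (CI).

    Reading "per day" as "per staff-day" is an assumption.
    """
    return {ci: sloc / staff_days_by_ci[ci] for ci, sloc in sloc_by_ci.items()}
```

Comparing the productivity result against the planned, history-based rate for each CI gives the planned-versus-actual trend the slide calls for.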

Software Supplier Management

- Contractual status of all subcontractors.
  - For any late software product deliveries, summarize the cause and the impact on the overall program.
- Show measurement data on subcontractor progress if it is not included in the preceding measurement slides.
- Describe the relationship between the prime contractor's and subcontractors' problem reporting systems.

Risk Assessment with Mitigation Strategies

- Show software risk updates using the standard Risk Cube.
- For "red" and "yellow" risks, show the mitigation plan.
  - For each step, the mitigation plan should show the owner, the completion date, and the resulting consequence/likelihood value once that step is complete.
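A mitigation plan amounts to a sequence of steps, each of which moves a risk to a new likelihood/consequence cell and therefore to a new color. The sketch below shows that bookkeeping. The 5x5 color table in it is illustrative only and is not the standard Risk Cube. Substitute the program's official table. `MitigationStep` and its fields are hypothetical.

```python
from dataclasses import dataclass
from datetime import date

# ILLUSTRATIVE 5x5 table indexed [likelihood - 1][consequence - 1].
# Assumption: this is NOT the standard Risk Cube; replace it with the program's.
CUBE = [
    "GGGYY",  # likelihood 1
    "GGYYR",  # likelihood 2
    "GYYRR",  # likelihood 3
    "GYRRR",  # likelihood 4
    "YYRRR",  # likelihood 5
]
COLORS = {"G": "green", "Y": "yellow", "R": "red"}


def risk_color(likelihood: int, consequence: int) -> str:
    """Color of a likelihood/consequence cell in the illustrative table."""
    if not (1 <= likelihood <= 5 and 1 <= consequence <= 5):
        raise ValueError("likelihood and consequence must be in 1..5")
    return COLORS[CUBE[likelihood - 1][consequence - 1]]


def needs_mitigation_plan(likelihood: int, consequence: int) -> bool:
    """Red and yellow risks require a mitigation plan."""
    return risk_color(likelihood, consequence) in ("red", "yellow")


@dataclass
class MitigationStep:
    description: str
    owner: str
    completion_date: date
    likelihood: int    # expected likelihood once this step is complete
    consequence: int   # expected consequence once this step is complete


def burn_down(steps):
    """(completion date, resulting color) for each step, in date order."""
    ordered = sorted(steps, key=lambda s: s.completion_date)
    return [(s.completion_date, risk_color(s.likelihood, s.consequence)) for s in ordered]
```

Sorting by completion date yields the step-by-step burn-down of the risk that the slide asks each mitigation plan to show.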

Issues and Concerns

- List any issues and concerns that do not qualify as risks but require the attention of Government or Contractor leadership.
  - State the issue or concern and the expected outcome.
  - State the consequence if no action is taken.
  - Provide a need date for resolution.