Project 4: Enabling High Performance Application I/O

Parallel netCDF
A. Choudhary, W. Liao (Northwestern University)
W. Gropp, R. Ross, R. Thakur (Argonne National Lab)
SciDAC All Hands Meeting, September 11-13, 2002

Outline
• NetCDF overview
• Parallel netCDF and MPI-IO
• Progress on API implementation
• Preliminary performance evaluation using the LBNL test suite

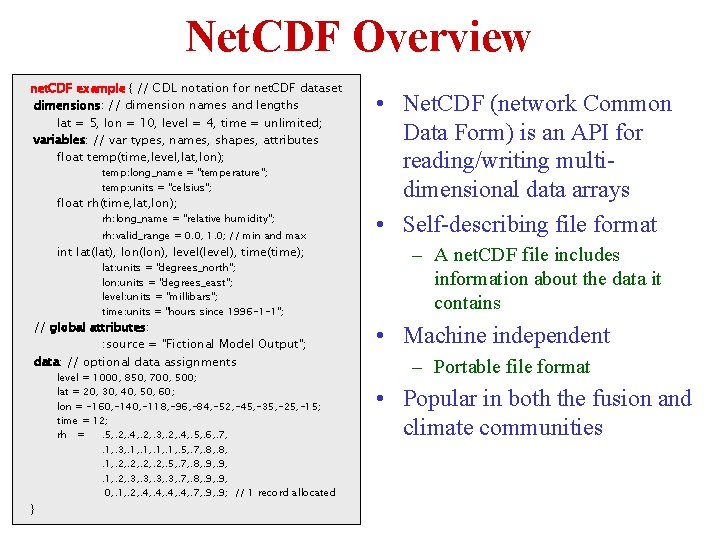

NetCDF Overview
• NetCDF (network Common Data Form) is an API for reading/writing multidimensional data arrays
• Self-describing file format
  – A netCDF file includes information about the data it contains
• Machine independent
  – Portable file format
• Popular in both the fusion and climate communities

NetCDF example, in CDL notation:

    netcdf example {    // CDL notation for a netCDF dataset
    dimensions:         // dimension names and lengths
        lat = 5, lon = 10, level = 4, time = unlimited;
    variables:          // var types, names, shapes, attributes
        float temp(time, level, lat, lon);
            temp:long_name = "temperature";
            temp:units = "celsius";
        float rh(time, lat, lon);
            rh:long_name = "relative humidity";
            rh:valid_range = 0.0, 1.0;    // min and max
        int lat(lat), lon(lon), level(level), time(time);
            lat:units = "degrees_north";
            lon:units = "degrees_east";
            level:units = "millibars";
            time:units = "hours since 1996-1-1";
        // global attributes:
            :source = "Fictional Model Output";
    data:               // optional data assignments
        level = 1000, 850, 700, 500;
        lat = 20, 30, 40, 50, 60;
        lon = -160, -140, -118, -96, -84, -52, -45, -35, -25, -15;
        time = 12;
        rh = .5, .2, .4, .2, .3, .2, .4, .5, .6, .7,
             .1, .3, .1, .1, .1, .1, .5, .7, .8, .8,
             .1, .2, .2, .2, .2, .5, .7, .8, .9, .9,
             .1, .2, .3, .3, .3, .3, .7, .8, .9, .9,
              0, .1, .2, .4, .4, .4, .4, .7, .9, .9;    // 1 record allocated
    }

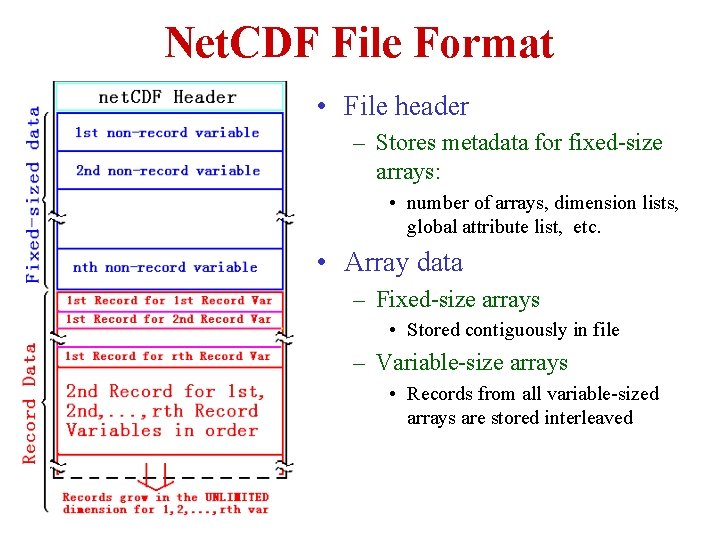

NetCDF File Format
• File header
  – Stores the dataset metadata: number of arrays, dimension list, global attribute list, array definitions, etc.
• Array data
  – Fixed-size arrays
    • Each is stored contiguously in the file
  – Variable-size (record) arrays
    • Records from all variable-size arrays are stored interleaved
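To make the interleaving concrete, here is a minimal sketch that computes where each record of a record variable lands, using the two record variables of the CDL example: temp contributes 4 x 5 x 10 floats (800 bytes) per record and rh contributes 5 x 10 floats (200 bytes). The type and function names (`record_var`, `record_size`, `record_offset`) are hypothetical, `begin_rec` (the start of the record section) is treated as a given input, and the real format's 4-byte padding rules are not modeled.

```c
#include <assert.h>

/* Hypothetical description of one record variable: the number of bytes it
 * contributes to each record (product of its non-record dimension lengths
 * times the element size). */
typedef struct {
    const char *name;
    long long slab_bytes;
} record_var;

/* Bytes in one full record: the slabs of all record variables, stored back
 * to back in definition order. Assumes every slab is already a multiple of
 * 4 bytes, so no alignment padding is modeled. */
long long record_size(const record_var *vars, int nvars)
{
    long long size = 0;
    for (int i = 0; i < nvars; i++)
        size += vars[i].slab_bytes;
    return size;
}

/* File offset of record `rec` of variable `v`. `begin_rec` is the offset
 * where the record section starts, after the header and the fixed-size
 * arrays; here it is simply a parameter. */
long long record_offset(const record_var *vars, int nvars, int v,
                        long long begin_rec, long long rec)
{
    assert(v >= 0 && v < nvars && rec >= 0);
    long long within = 0;
    for (int i = 0; i < v; i++)        /* skip earlier variables' slabs */
        within += vars[i].slab_bytes;
    return begin_rec + rec * record_size(vars, nvars) + within;
}
```

With an arbitrary `begin_rec` of 1024, record 0 of rh starts at byte 1824 and record 2 of rh at byte 3824. Consecutive records of the same variable are 1000 bytes apart, so reading one record variable across many time steps is a strided access rather than a contiguous one.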

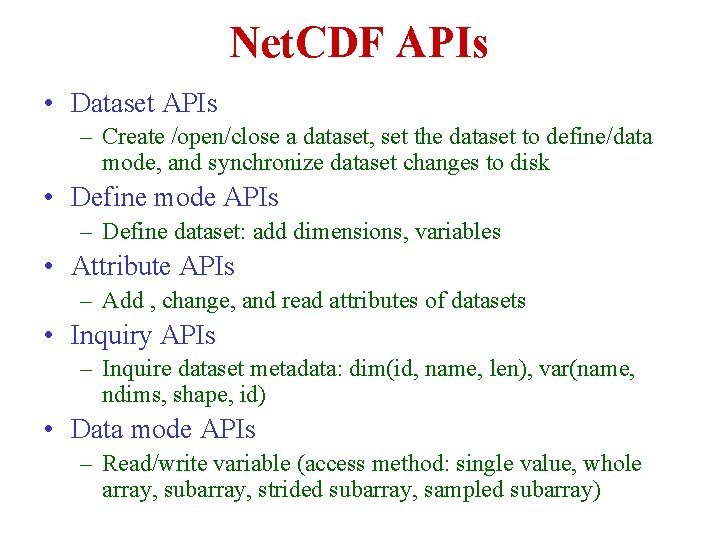

NetCDF APIs
• Dataset APIs
  – Create/open/close a dataset, switch the dataset between define and data mode, and synchronize dataset changes to disk
• Define mode APIs
  – Define the dataset: add dimensions and variables
• Attribute APIs
  – Add, change, and read attributes of datasets
• Inquiry APIs
  – Inquire dataset metadata: dim (id, name, len), var (name, ndims, shape, id)
• Data mode APIs
  – Read/write variables (access methods: single value, whole array, subarray, strided subarray, sampled subarray)
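The access methods above differ only in which elements they select. The following sketch, assuming a row-major variable layout, lists the linear element offsets picked out by a strided-subarray request (start, count, stride per dimension) in the order they are transferred. `strided_offsets` is a hypothetical helper for illustration, not a netCDF function, and the 8-dimension limit is a choice of this sketch.

```c
#include <assert.h>
#include <stddef.h>

/* Fills `out` with the row-major linear offsets (in elements) of the
 * elements selected by a strided-subarray access, in transfer order: the
 * last dimension varies fastest. `out` must hold the product of count[].
 * Returns the number of offsets written. Supports up to 8 dimensions,
 * a limit chosen only for this sketch. */
size_t strided_offsets(int ndims, const size_t shape[], const size_t start[],
                       const size_t count[], const ptrdiff_t stride[],
                       size_t out[])
{
    size_t idx[8] = {0};   /* per-dimension counter, 0 .. count[d]-1 */
    size_t total = 1, n;
    assert(ndims > 0 && ndims <= 8);
    for (int d = 0; d < ndims; d++) {
        assert(stride[d] > 0);
        total *= count[d];
    }
    for (n = 0; n < total; n++) {
        size_t off = 0;
        for (int d = 0; d < ndims; d++) {
            size_t coord = start[d] + idx[d] * (size_t)stride[d];
            assert(coord < shape[d]);
            off = off * shape[d] + coord;   /* row-major linearization */
        }
        out[n] = off;
        for (int d = ndims - 1; d >= 0; d--) {   /* advance, last dim first */
            if (++idx[d] < count[d])
                break;
            idx[d] = 0;
        }
    }
    return n;
}
```

The other methods are special cases: a single value is every count equal to 1, a subarray is every stride equal to 1, and the whole array is start 0 with count equal to the shape. For a 4 x 10 variable with start {1, 2}, count {2, 3}, and stride {2, 3}, the selected offsets are 12, 15, 18, 32, 35, 38. The sampled (mapped) method additionally remaps the in-memory layout and is not modeled here.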

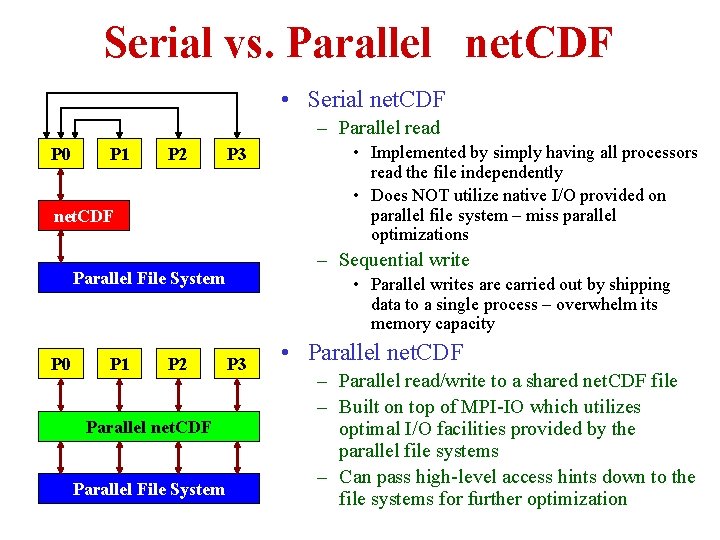

Serial vs. Parallel netCDF
[Figure: processes P0 to P3 accessing a parallel file system through serial netCDF and through parallel netCDF]
• Serial netCDF
  – Parallel read: implemented by simply having all processes read the file independently
  – Does NOT utilize the native I/O provided by the parallel file system, so parallel optimizations are missed
  – Sequential write: parallel writes are carried out by shipping data to a single process, which can overwhelm its memory capacity
• Parallel netCDF
  – Parallel read/write to a shared netCDF file
  – Built on top of MPI-IO, which utilizes the optimal I/O facilities provided by the parallel file system
  – Can pass high-level access hints down to the file system for further optimization

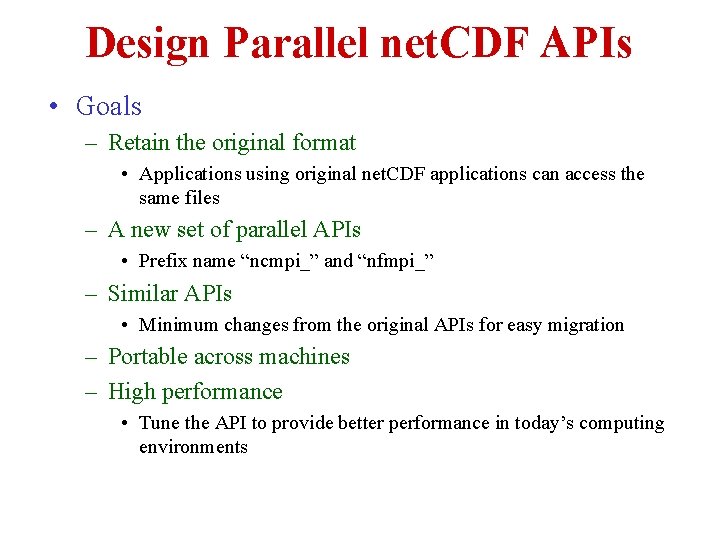

Design of the Parallel netCDF APIs
• Goals
  – Retain the original file format
    • Applications using the original netCDF library can access the same files
  – A new set of parallel APIs
    • Names prefixed with "ncmpi_" (C) and "nfmpi_" (Fortran)
  – Similar APIs
    • Minimal changes from the original APIs, for easy migration
  – Portable across machines
  – High performance
    • Tune the API to provide better performance in today's computing environments

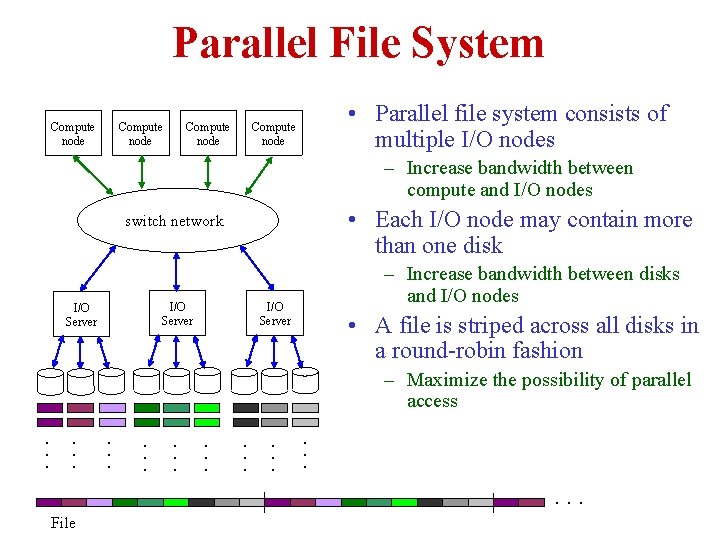

Parallel File System
[Figure: compute nodes connected through a switch network to I/O servers; a file striped across the servers' disks]
• A parallel file system consists of multiple I/O nodes
  – Increases bandwidth between compute and I/O nodes
• Each I/O node may contain more than one disk
  – Increases bandwidth between disks and I/O nodes
• A file is striped across all disks in a round-robin fashion
  – Maximizes the possibility of parallel access
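Round-robin striping can be sketched as a simple address mapping: stripe k of the file goes to server k mod (number of servers). This is a minimal model under stated assumptions; the names `stripe_loc` and `locate` are hypothetical, and the stripe size and server count are plain parameters, whereas a real parallel file system chooses them per file or per mount.

```c
#include <assert.h>

/* Where a byte of a striped file lives: which I/O server, and at what
 * offset inside that server's portion of the file. */
typedef struct {
    int server;
    long long local_offset;
} stripe_loc;

/* Round-robin striping: stripe k of the file is stored on server
 * k % nservers. Each server keeps its stripes back to back. */
stripe_loc locate(long long file_offset, long long stripe_size, int nservers)
{
    assert(file_offset >= 0 && stripe_size > 0 && nservers > 0);
    long long stripe = file_offset / stripe_size;   /* global stripe index */
    stripe_loc loc;
    loc.server = (int)(stripe % nservers);
    /* full stripes this server already holds, plus offset within stripe */
    loc.local_offset = (stripe / nservers) * stripe_size
                       + file_offset % stripe_size;
    return loc;
}
```

With 64 KiB stripes over 4 servers, a stripe-aligned contiguous 256 KiB request touches each server exactly once, so all four can serve it in parallel. This is why large contiguous requests, or many small ones merged by the I/O library, make the best use of the striping.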

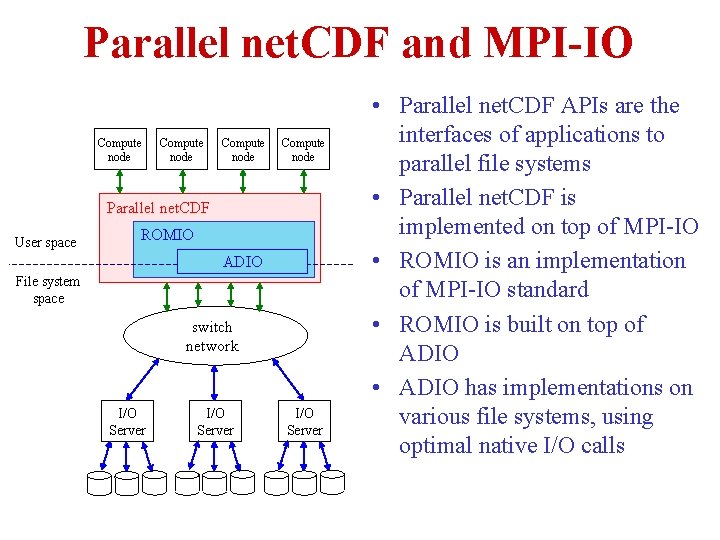

Parallel netCDF and MPI-IO
[Figure: software stack. In user space on the compute node: parallel netCDF on top of ROMIO, on top of ADIO; in file system space: I/O servers reached through the switch network]
• The parallel netCDF APIs are the interface between applications and parallel file systems
• Parallel netCDF is implemented on top of MPI-IO
• ROMIO is an implementation of the MPI-IO standard
• ROMIO is built on top of ADIO
• ADIO has implementations for various file systems, using the optimal native I/O calls of each

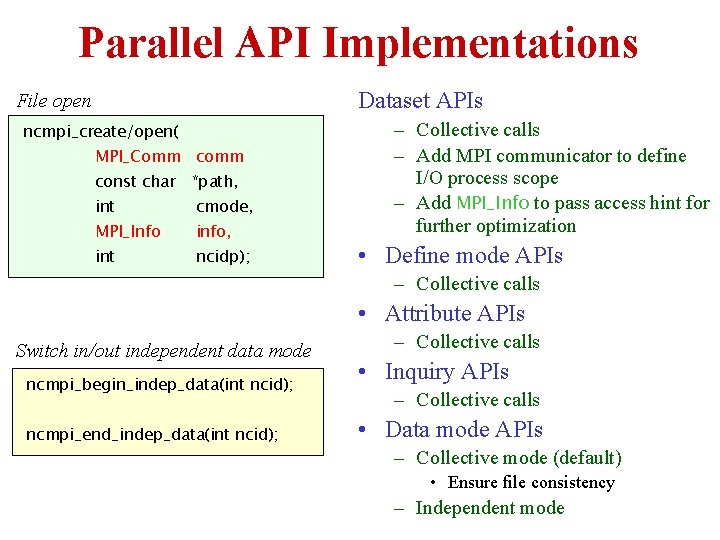

Parallel API Implementations
• Dataset APIs
  – Collective calls
  – Add an MPI communicator to define the scope of the I/O processes
  – Add MPI_Info to pass access hints for further optimization
  File open: ncmpi_create/open(MPI_Comm comm, const char *path, int cmode, MPI_Info info, int *ncidp);
• Define mode APIs
  – Collective calls
• Attribute APIs
  – Collective calls
• Inquiry APIs
  – Collective calls
• Data mode APIs
  – Collective mode (default)
    • Ensures file consistency
  – Independent mode
  Switch in/out of independent data mode: ncmpi_begin_indep_data(int ncid); ncmpi_end_indep_data(int ncid);
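The mode rules above can be read as a small state machine. The sketch below is a hypothetical model, not PnetCDF code: the enum and function names are invented, it assumes that leaving define mode (as `ncmpi_enddef` does) enters collective data mode by default, and as a simplifying assumption it only allows re-entering define mode (as `ncmpi_redef` does) from collective data mode.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model of the dataset modes described above. */
typedef enum { DEFINE_MODE, COLLECTIVE_DATA, INDEPENDENT_DATA } nc_mode;

typedef enum {
    OP_ENDDEF,       /* leave define mode, like ncmpi_enddef */
    OP_REDEF,        /* re-enter define mode, like ncmpi_redef */
    OP_BEGIN_INDEP,  /* like ncmpi_begin_indep_data */
    OP_END_INDEP     /* like ncmpi_end_indep_data */
} nc_op;

/* Returns 1 and updates *mode if `op` is legal in the current mode,
 * otherwise returns 0 and leaves *mode unchanged. */
int apply_op(nc_mode *mode, nc_op op)
{
    assert(mode != NULL);
    switch (op) {
    case OP_ENDDEF:
        if (*mode != DEFINE_MODE) return 0;
        *mode = COLLECTIVE_DATA;   /* collective data mode is the default */
        return 1;
    case OP_REDEF:
        if (*mode != COLLECTIVE_DATA) return 0;   /* assumption of sketch */
        *mode = DEFINE_MODE;
        return 1;
    case OP_BEGIN_INDEP:
        if (*mode != COLLECTIVE_DATA) return 0;
        *mode = INDEPENDENT_DATA;
        return 1;
    case OP_END_INDEP:
        if (*mode != INDEPENDENT_DATA) return 0;
        *mode = COLLECTIVE_DATA;
        return 1;
    }
    return 0;
}

/* "_all" (collective) data calls belong to collective data mode; the
 * calls without the suffix belong to independent data mode. */
int data_call_allowed(nc_mode mode, int is_collective_call)
{
    return is_collective_call ? mode == COLLECTIVE_DATA
                              : mode == INDEPENDENT_DATA;
}
```

Because the mode switches are themselves collective calls, every process moves through the same sequence of states, which is what lets the library keep the file consistent in the default collective mode.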

![Data Mode APIs](http://slidetodoc.com/presentation_image_h/97b05b1704b54cfedbf59c1b6266960f/image-11.jpg)

Data Mode APIs
• High-level APIs (shown for the unsigned char variant)
  ncmpi_put/get_vars_<type>_all(int ncid, int varid, const MPI_Offset start[], const MPI_Offset count[], const MPI_Offset stride[], const unsigned char *buf);
• Flexible APIs
  ncmpi_put/get_vars(int ncid, int varid, const MPI_Offset start[], const MPI_Offset count[], const MPI_Offset stride[], void *buf, int bufcount, MPI_Datatype buftype);
• Collective and independent calls
  – Collective calls carry the suffix "_all"; independent calls omit it
• High-level APIs
  – Mimic the original APIs
  – Easy migration path to the parallel interface
  – Map netCDF access types to MPI derived datatypes
• Flexible APIs
  – Better handling of internal data representations
  – More fully expose the capabilities of MPI-IO to the programmer
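One way to picture the mapping from netCDF access types to MPI derived datatypes: a stride-1 subarray of a row-major 2D variable flattens into a list of contiguous file runs, which is the kind of layout a datatype built with `MPI_Type_create_subarray` describes, so a single MPI-IO call can see every run at once. The helper below (`subarray_runs`, `run`) is hypothetical and only computes the runs; it makes no MPI calls.

```c
#include <assert.h>
#include <stddef.h>

/* One contiguous piece of a file access, measured in elements. */
typedef struct {
    size_t offset, length;
} run;

/* Flattens a stride-1 subarray of a row-major ny x nx variable into
 * contiguous runs, merging adjacent rows when whole rows are selected.
 * Returns the number of runs written to `out` (at most count_y). */
size_t subarray_runs(size_t ny, size_t nx, size_t start_y, size_t start_x,
                     size_t count_y, size_t count_x, run out[])
{
    size_t n = 0;
    assert(start_y + count_y <= ny && start_x + count_x <= nx);
    if (count_x == 0)
        return 0;
    for (size_t j = 0; j < count_y; j++) {
        size_t off = (start_y + j) * nx + start_x;
        if (n > 0 && out[n - 1].offset + out[n - 1].length == off) {
            out[n - 1].length += count_x;   /* continues previous run */
        } else {
            out[n].offset = off;
            out[n].length = count_x;
            n++;
        }
    }
    return n;
}
```

A 2 x 3 block at (1, 2) in a 4 x 10 variable yields two 3-element runs, while selecting two full rows collapses into one 20-element run. Handing the whole pattern to MPI-IO at once, rather than issuing one request per run, is what gives the library room to optimize.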

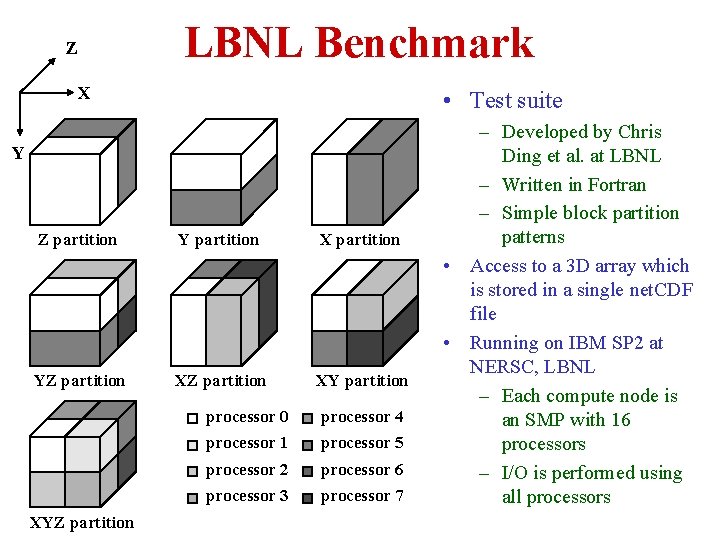

LBNL Benchmark
[Figure: Z, Y, X, YZ, and XYZ block partitions of a 3D array (axes X, Y, Z) across processors 0 to 7]
• Test suite
  – Developed by Chris Ding et al. at LBNL
  – Written in Fortran
  – Simple block partition patterns
    • Access to a 3D array stored in a single netCDF file
• Running on the IBM SP2 at NERSC, LBNL
  – Each compute node is an SMP with 16 processors
  – I/O is performed using all processors
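Each partition pattern boils down to giving every process a start/count pair for its block of the 3D array. The sketch below computes that pair on a process grid; `block_1d` and `block_3d` are hypothetical names, and the rank ordering (X varying fastest) is an assumption that may differ from the benchmark's own numbering.

```c
#include <assert.h>
#include <stddef.h>

/* Splits a dimension of length n over p processes; the first n % p
 * processes each get one extra element. */
static void block_1d(size_t n, int p, int coord, size_t *start, size_t *count)
{
    size_t base = n / (size_t)p, extra = n % (size_t)p;
    size_t c = (size_t)coord;
    *count = base + (c < extra ? 1 : 0);
    *start = c * base + (c < extra ? c : extra);
}

/* start/count (Z, Y, X order) of the block owned by `rank` on a
 * grid[0] x grid[1] x grid[2] process grid. A Z partition is {nprocs, 1, 1};
 * a ZY partition splits Z and Y; ZYX splits all three. Ranks are laid out
 * with X varying fastest (an assumption of this sketch). */
void block_3d(const size_t dims[3], const int grid[3], int rank,
              size_t start[3], size_t count[3])
{
    assert(rank >= 0 && rank < grid[0] * grid[1] * grid[2]);
    int coord[3];
    coord[2] = rank % grid[2];
    coord[1] = (rank / grid[2]) % grid[1];
    coord[0] = rank / (grid[2] * grid[1]);
    for (int d = 0; d < 3; d++)
        block_1d(dims[d], grid[d], coord[d], &start[d], &count[d]);
}
```

Each process would then pass its own start and count to a collective `_all` data call, so all processes access their blocks of the shared file in one coordinated operation.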

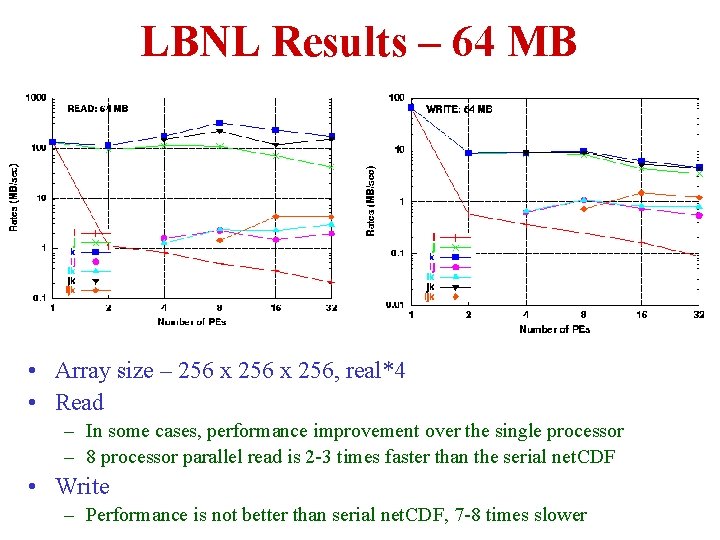

LBNL Results – 64 MB
• Array size: 256 x 256 x 256, real*4
• Read
  – In some cases, parallel reads outperform a single processor
  – An 8-processor parallel read is 2 to 3 times faster than serial netCDF
• Write
  – Parallel writes perform worse than serial netCDF: 7 to 8 times slower

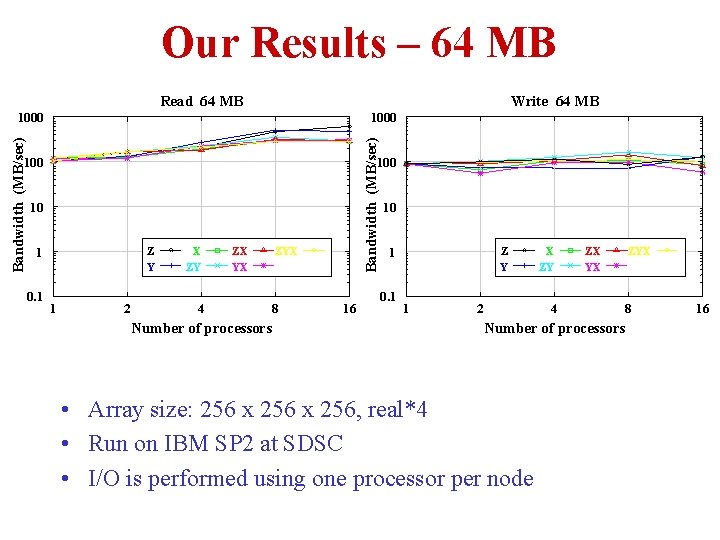

Our Results – 64 MB
[Figure: two panels, "Read 64 MB" and "Write 64 MB"; bandwidth (MB/sec, log scale from 0.1 to 1000) versus number of processors (1, 2, 4, 8, 16) for the Z, Y, X, ZY, ZX, YX, and ZYX partitions]
• Array size: 256 x 256 x 256, real*4
• Run on the IBM SP2 at SDSC
• I/O is performed using one processor per node

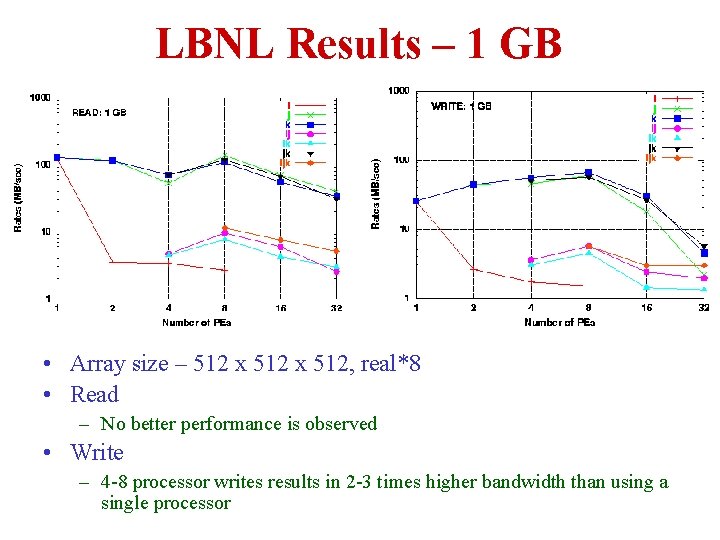

LBNL Results – 1 GB
• Array size: 512 x 512 x 512, real*8
• Read
  – No performance improvement is observed
• Write
  – Writes with 4 to 8 processors achieve 2 to 3 times the bandwidth of a single processor
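The quoted file sizes follow from the element counts if the arrays are three-dimensional, as the benchmark's 3D access pattern indicates: real*4 elements take 4 bytes and real*8 elements take 8 bytes. A two-dimensional 256 x 256 real*4 array, by contrast, would be only 256 KiB.

$$
256^3 \times 4\,\text{B} = 2^{24} \times 2^{2}\,\text{B} = 2^{26}\,\text{B} = 64\,\text{MiB}
$$

$$
512^3 \times 8\,\text{B} = 2^{27} \times 2^{3}\,\text{B} = 2^{30}\,\text{B} = 1\,\text{GiB}
$$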

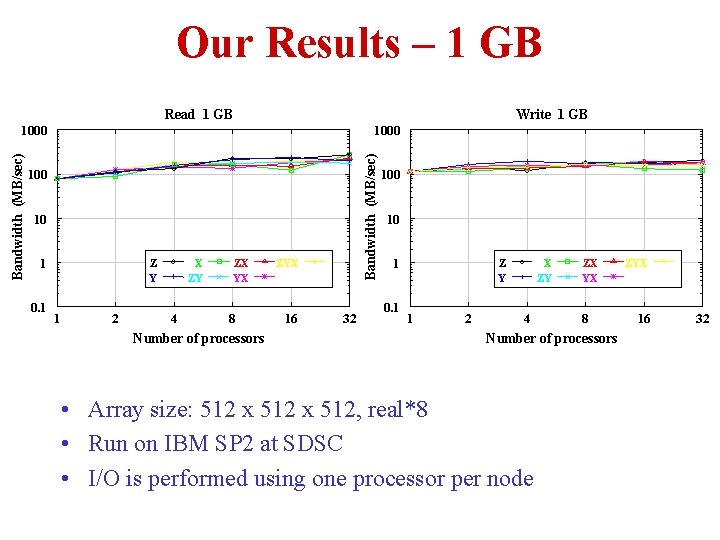

Our Results – 1 GB
[Figure: two panels, "Read 1 GB" and "Write 1 GB"; bandwidth (MB/sec, log scale from 0.1 to 1000) versus number of processors (1, 2, 4, 8, 16, 32) for the Z, Y, X, ZY, ZX, YX, and ZYX partitions]
• Array size: 512 x 512 x 512, real*8
• Run on the IBM SP2 at SDSC
• I/O is performed using one processor per node

Summary
• Complete the parallel C APIs
• Identify friendly users
  – ORNL, LBNL
• User reference manual
• Preliminary performance results
  – Using the LBNL test suite: typical access patterns
  – Obtained scalable results
