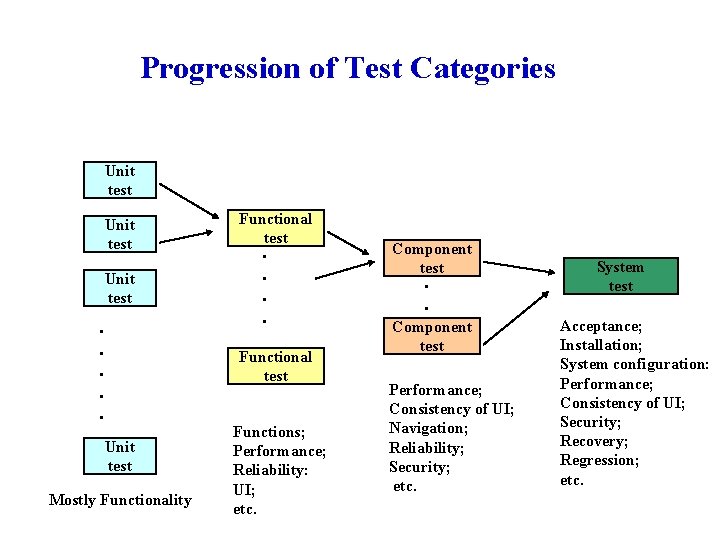

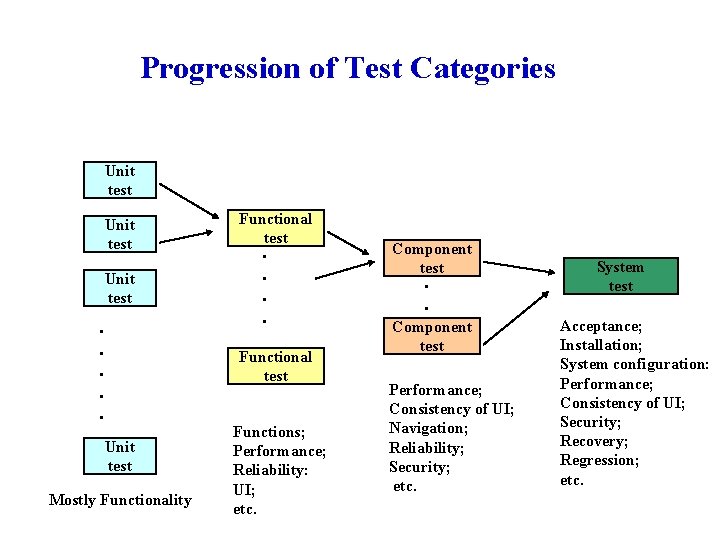



Progression of Test Categories

- Unit test: mostly functionality
- Functional test: functions; performance; reliability; UI; etc.
- Component test: performance; consistency of UI; navigation; reliability; security; etc.
- System test: acceptance; installation; system configuration; performance; consistency of UI; security; recovery; regression; etc.

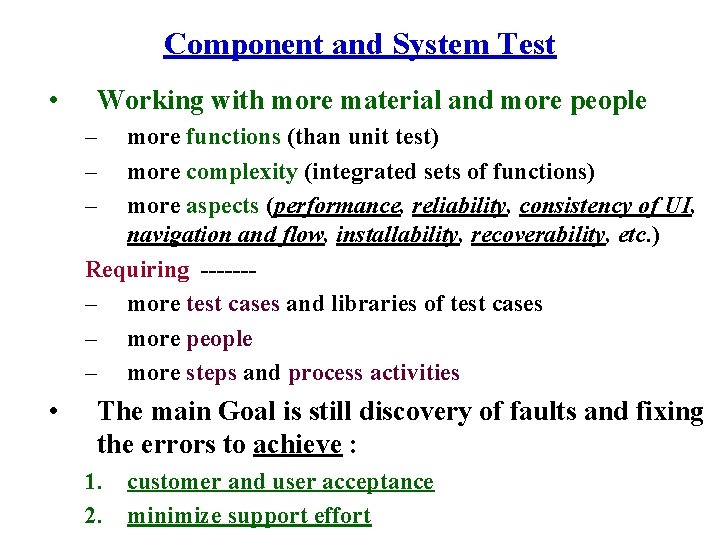

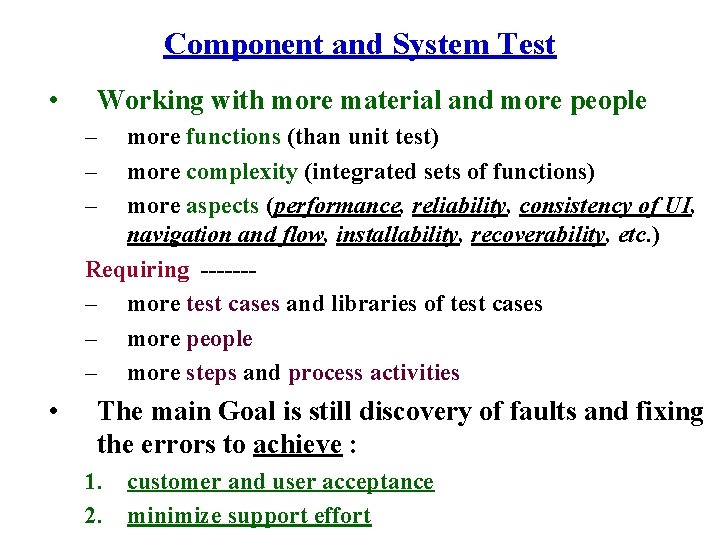

Component and System Test

- Working with more material and more people:
    - more functions (than unit test)
    - more complexity (integrated sets of functions)
    - more aspects (performance, reliability, consistency of UI, navigation and flow, installability, recoverability, etc.)
- Requiring:
    - more test cases and libraries of test cases
    - more people
    - more steps and process activities
- The main goal is still to discover faults and fix the errors in order to:
    1. achieve customer and user acceptance
    2. minimize support effort

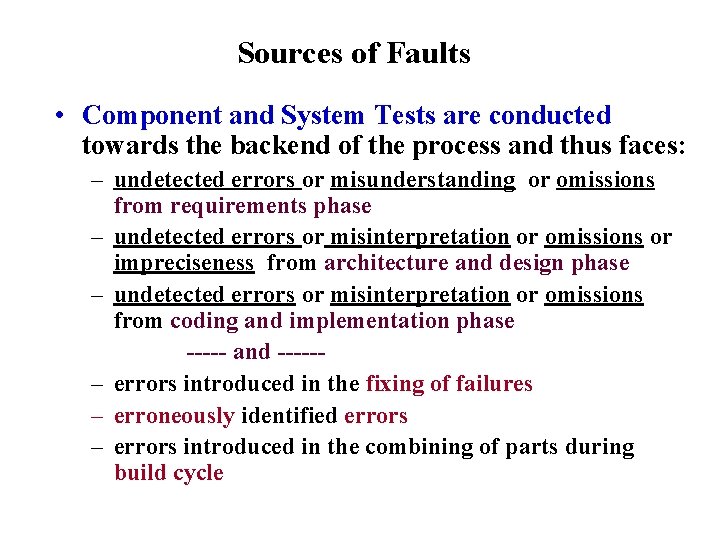

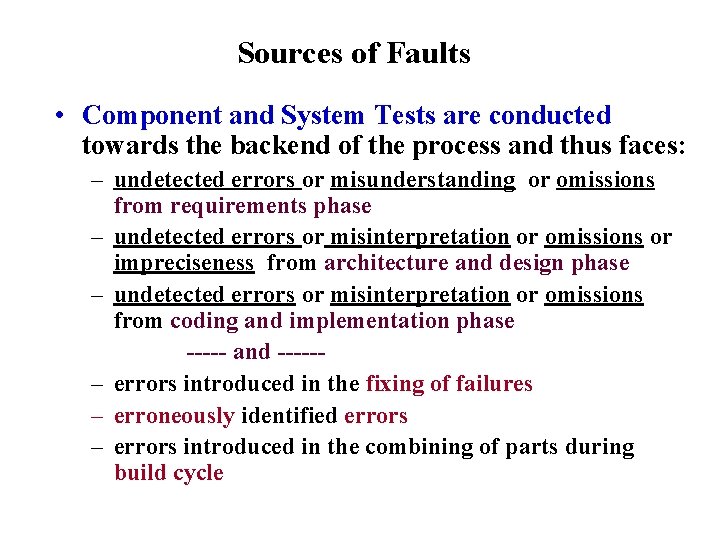

Sources of Faults

- Component and System Tests are conducted toward the back end of the process and thus face:
    - undetected errors, misunderstandings, or omissions from the requirements phase
    - undetected errors, misinterpretations, omissions, or imprecision from the architecture and design phase
    - undetected errors, misinterpretations, or omissions from the coding and implementation phase
- And, in addition:
    - errors introduced in the fixing of failures
    - erroneously identified errors
    - errors introduced in the combining of parts during the build cycle

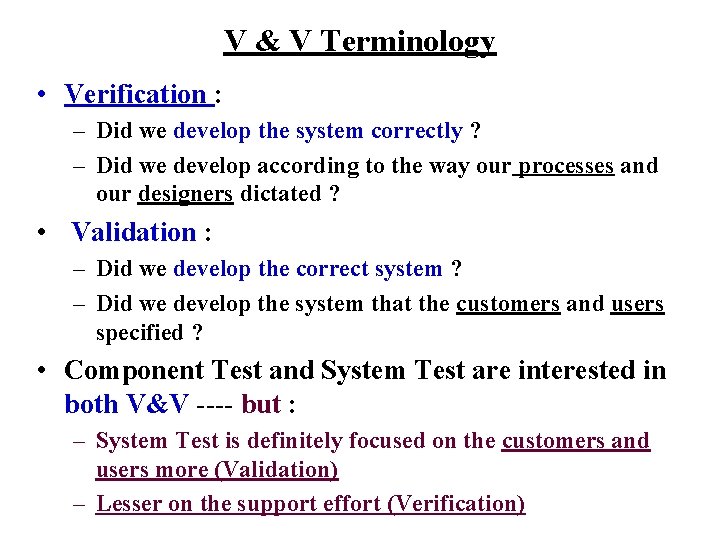

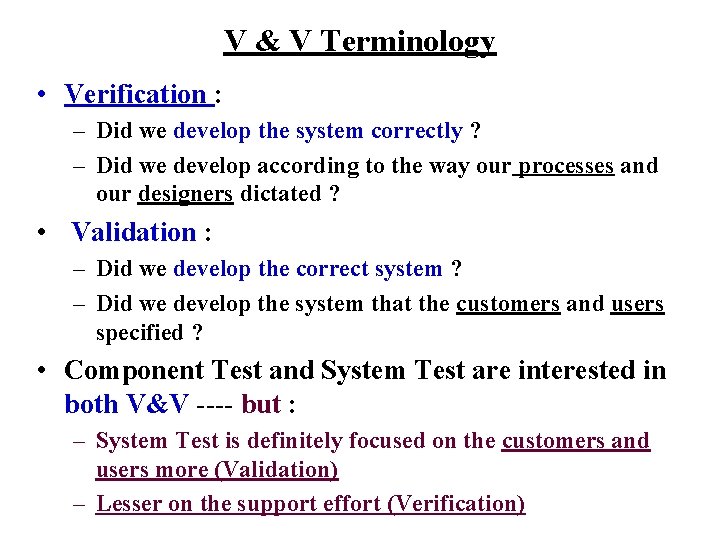

V & V Terminology

- Verification:
    - Did we develop the system correctly?
    - Did we develop it the way our processes and our designers dictated?
- Validation:
    - Did we develop the correct system?
    - Did we develop the system that the customers and users specified?
- Component Test and System Test are interested in both verification and validation, but:
    - System Test is definitely focused more on the customers and users (Validation)
    - and less on the support effort (Verification)

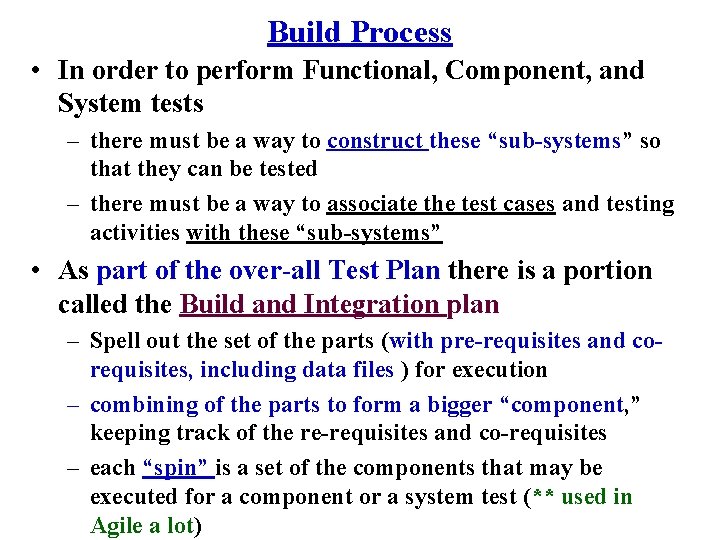

Build Process

- In order to perform Functional, Component, and System tests:
    - there must be a way to construct these "sub-systems" so that they can be tested
    - there must be a way to associate the test cases and testing activities with these "sub-systems"
- As part of the overall Test Plan there is a portion called the Build and Integration plan, which covers:
    - spelling out the set of parts (with pre-requisites and co-requisites, including data files) needed for execution
    - combining the parts to form a bigger "component," keeping track of the pre-requisites and co-requisites
    - each "spin," which is a set of components that may be executed for a component or a system test (used a lot in Agile)

Configuration Manager

- Build and Integration may be performed manually with compilers and files, but at any real level of complexity there is a need for a tool (a Configuration Management tool) that handles:
    - tracking a large number and a wide variety of parts
    - the speed of compilation versus accessing pre-compiled objects
    - the complexity of keeping track of pre-requisites and co-requisites
    - keeping track of libraries of "tested and locked" parts and "untested" parts
    - merging all the necessary parts to "build" an executable component
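The pre-requisite tracking described in the Build Process and Configuration Manager slides can be sketched as a small dependency graph. This is only an illustration, not how any particular Configuration Management tool works: the part names and the `prerequisites` table are hypothetical, co-requisites are not modeled, and the ordering relies on Python's standard `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter

# Hypothetical parts, each mapped to the parts it requires first (pre-requisites).
prerequisites = {
    "config_files": set(),
    "data_access": {"config_files"},
    "business_rules": {"data_access"},
    "ui": {"business_rules"},
    "reports": {"data_access"},
}


def parts_needed(target, prereqs):
    """Collect the target part plus everything it transitively requires."""
    needed, stack = set(), [target]
    while stack:
        part = stack.pop()
        if part not in needed:
            needed.add(part)
            stack.extend(prereqs.get(part, ()))
    return needed


def spin_build_order(target, prereqs):
    """List the parts of one 'spin' so that pre-requisites come first."""
    needed = parts_needed(target, prereqs)
    full_order = TopologicalSorter(prereqs).static_order()
    return [part for part in full_order if part in needed]


ui_spin = spin_build_order("ui", prerequisites)
print(ui_spin)
```

Here a "spin" for testing the hypothetical `ui` component pulls in only the parts it depends on and leaves `reports` out. `TopologicalSorter` raises `CycleError` if the pre-requisites are circular, which is one of the mistakes a build plan needs to catch.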

Some Definitions of Subtypes of Tests

- Stress Test: testing the system to the "limits" of all the items (users, transactions, etc.) specified in the requirements (performance)
- System Configuration Test: testing the overall hardware, software, and network combinations as specified in the requirements, including compatibility and interfacing with existing systems (installation)
- Recovery Test: testing the system's response to the loss of some resource and evaluating the response speed and results against the stated recovery requirement (back-up/recovery)
- Security Test: testing to ensure that the security requirements are met
- Human Factors and Usability Test: testing against the user interface and user performance requirements (UI consistency and navigation)

Reliability, Availability, Maintainability

- Some desirable characteristics that we would like our software system to have:
    - For Customer (valid):
        - Reliability: to function consistently and correctly (according to the requirements) over a period of time (the longer the better)
        - Availability: to be operational when we need it (every time, if possible)
    - For Support (verifiable):
        - Maintainability: to be repairable and modifiable quickly and easily (within some time frame every time, if possible)

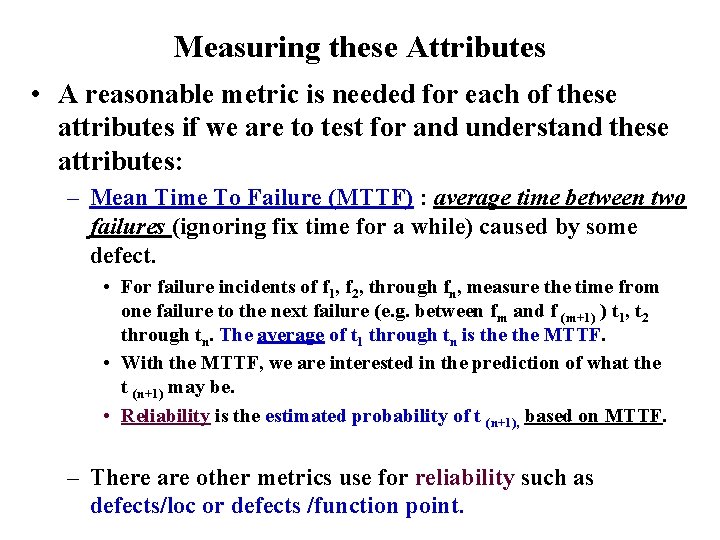

Measuring these Attributes

- A reasonable metric is needed for each of these attributes if we are to test for and understand them:
    - Mean Time To Failure (MTTF): the average time between two failures caused by some defect (ignoring fix time for now).
        - For failure incidents $f_1, f_2, \ldots, f_n$, measure the time from one failure to the next (e.g. between $f_m$ and $f_{m+1}$), giving $t_1, t_2, \ldots, t_n$. The average of $t_1$ through $t_n$ is the MTTF.
        - With the MTTF, we are interested in predicting what $t_{n+1}$ may be.
        - Reliability is the estimated probability of $t_{n+1}$, based on MTTF.
    - Other metrics are also used for reliability, such as defects/LOC or defects/function point.
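The MTTF calculation can be sketched in a few lines. The failure timestamps below are made-up values (hours since the start of a test run), and the function names are illustrative only; fix time is ignored, as on the slide.

```python
from statistics import mean


def inter_failure_times(failure_times):
    """Return the time from each failure to the next one."""
    return [later - earlier
            for earlier, later in zip(failure_times, failure_times[1:])]


def mttf(failure_times):
    """Average the inter-failure times; needs at least two failures."""
    gaps = inter_failure_times(failure_times)
    if not gaps:
        raise ValueError("need at least two failure timestamps")
    return mean(gaps)


# Hypothetical failure timestamps, in hours since the test started.
failures = [10, 30, 60, 100]
gaps = inter_failure_times(failures)   # times between consecutive failures
estimate = mttf(failures)              # average of those times
print(gaps, estimate)
```

With these sample values the gaps are 20, 30, and 40 hours, so the MTTF estimate is 30 hours. That average is the starting point for guessing when the next failure might occur.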

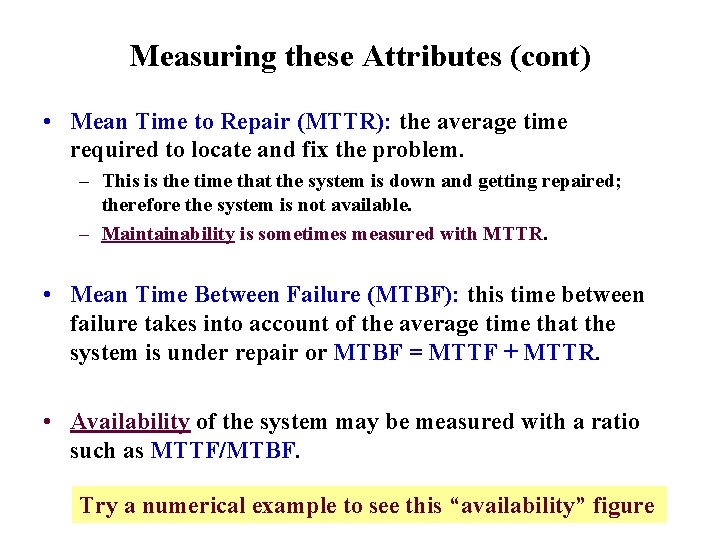

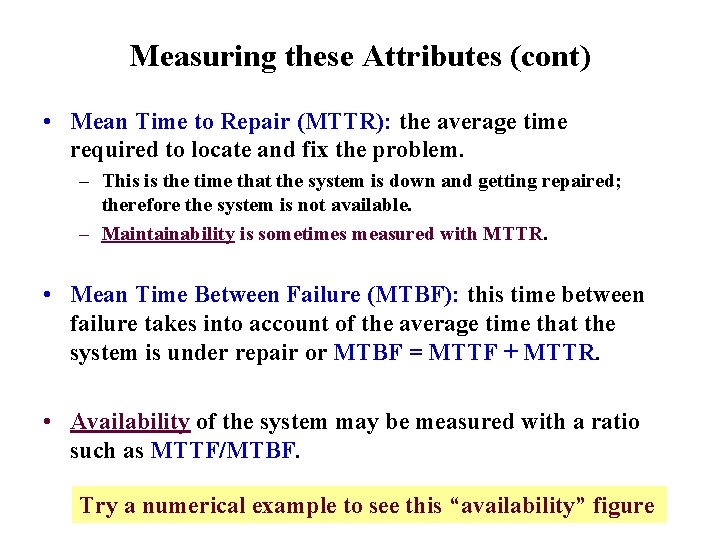

Measuring these Attributes (cont.)

- Mean Time To Repair (MTTR): the average time required to locate and fix the problem.
    - This is the time that the system is down and being repaired; the system is therefore not available.
    - Maintainability is sometimes measured with MTTR.
- Mean Time Between Failures (MTBF): this time between failures also takes into account the average time that the system is under repair: MTBF = MTTF + MTTR.
- Availability of the system may be measured with a ratio such as MTTF/MTBF. Try a numerical example to see this "availability" figure.
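One possible numerical example of the availability ratio, using the definitions MTBF = MTTF + MTTR and availability = MTTF/MTBF. The input figures (98 hours to failure, 2 hours to repair) are invented for illustration.

```python
def mtbf(mttf_hours, mttr_hours):
    """Mean time between failures: running time plus repair time."""
    return mttf_hours + mttr_hours


def availability(mttf_hours, mttr_hours):
    """Fraction of each failure-to-failure cycle that the system is up."""
    return mttf_hours / mtbf(mttf_hours, mttr_hours)


# Hypothetical figures: the system runs 98 hours on average, then takes 2 hours to repair.
cycle = mtbf(98.0, 2.0)
ratio = availability(98.0, 2.0)
print(f"MTBF = {cycle} hours, availability = {ratio:.2%}")
```

In this example the system is up for 98 of every 100 hours, an availability of 98%. Halving the repair time to 1 hour raises the ratio to 98/99, about 99%, which shows how MTTR feeds directly into availability.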

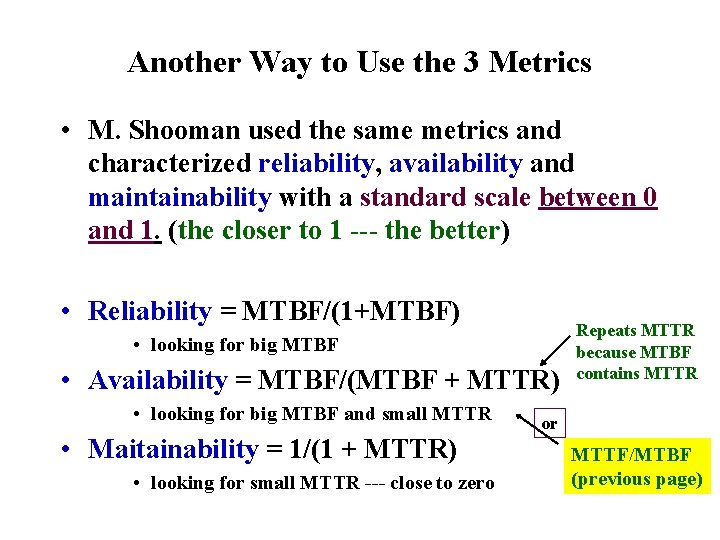

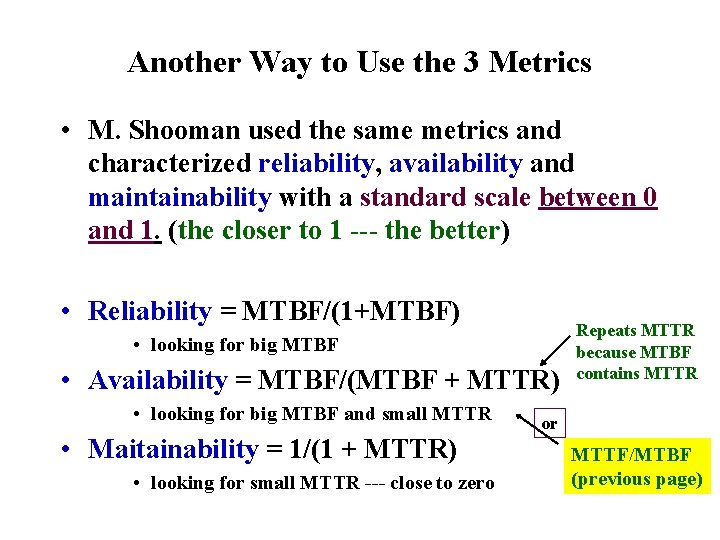

Another Way to Use the 3 Metrics

- M. Shooman used the same metrics and characterized reliability, availability, and maintainability on a standard scale between 0 and 1 (the closer to 1, the better):
    - Reliability = MTBF/(1 + MTBF): looking for a big MTBF
    - Availability = MTBF/(MTBF + MTTR): looking for a big MTBF and a small MTTR
        - Note: this form repeats MTTR, because MTBF already contains MTTR; the alternative is MTTF/MTBF (previous page).
    - Maintainability = 1/(1 + MTTR): looking for a small MTTR, close to zero