- Slides: 39

Progress Monitoring

Advance Organizer
• Overview of progress monitoring
  • Definition
  • Rationale/Benefits
  • Types
  • Research
• Activity: Possibilities and benefits of improved progress monitoring
• Learn the steps of progress monitoring and apply them to one student and his/her Intervention Plan

Progress Monitoring: Definition
• Progress monitoring, a type of formative assessment (i.e., frequent evaluation), is often used to evaluate student learning.
• Progress monitoring is the ongoing process of collecting and analyzing data to determine student progress.
http://iris.peabody.vanderbilt.edu

Progress Monitoring:
• Consists of frequent administration (for example, once per week) of brief probes or tests (e.g., one-minute reading passages that give teachers immediate feedback on the skills currently being taught).
• Uses probes (i.e., tests) that measure the critical skills that the student must master by the end of the year.
• Allows the teacher to assess student learning soon after instruction and to implement instructional changes based on these data.
http://iris.peabody.vanderbilt.edu

Some Benefits of Progress Monitoring
• Accelerated learning, because students are receiving more appropriate instruction
• More informed instructional decisions
• Documentation of student progress for accountability purposes
• More efficient communication with families and other professionals about students’ progress
NCSPM, n.d.

Progress Monitoring: What the Research and Resources Say…
• More than 30 years of research have proven the benefits of monitoring a student’s progress in reading.
• Students of teachers who use progress monitoring achieve higher grades than do those whose teachers do not. (Fuchs, Butterworth, & Fuchs, 1989)
• Students are more aware of their performance and view themselves as more responsible for their learning when they graph their progress monitoring data. (Davis, Fuchs, & Whinnery, 1995)
• Students learn more when teachers implement progress monitoring. (Safer & Fleischman, 2005)
• By monitoring students’ progress, teachers can make instructional changes to improve the academic growth of all students, including those who are struggling with reading. (Fuchs & Fuchs, 2007)
• Progress monitoring data are strongly predictive of student achievement on state and local standardized achievement tests. (Good, Simmons, & Kame’enui, 2001)
http://iris.peabody.vanderbilt.edu

Types of progress monitoring • Curriculum-based measurements (CBMs) • Specific subskill mastery measurement • Classroom assessment (system or teacher developed) • Performance assessments • Large scale assessments

• Progress monitoring can be especially useful with students who have difficulty showing what they know in typical assessments.
• Progress monitoring allows a real view of what skills and knowledge a student has.
National Center on Student Progress Monitoring, n.d.

Progress Monitoring for Informed Decision-Making

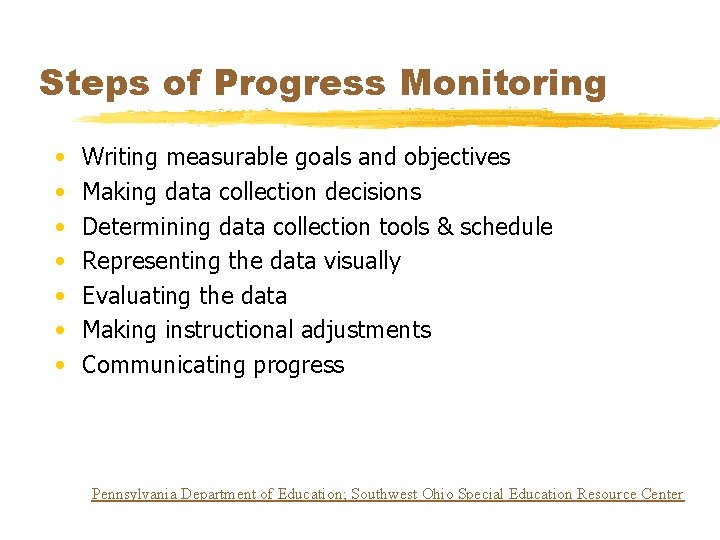

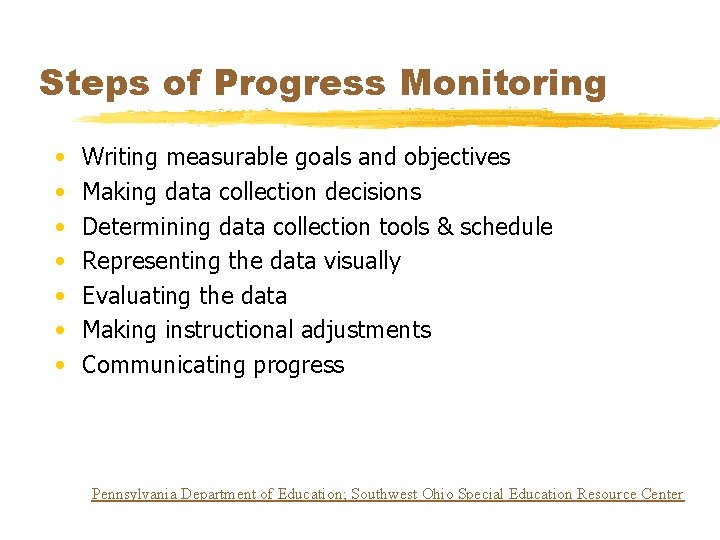

Steps of Progress Monitoring
• Writing measurable goals and objectives
• Making data collection decisions
• Determining data collection tools & schedule
• Representing the data visually
• Evaluating the data
• Making instructional adjustments
• Communicating progress
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 1: Writing goals and outcomes
• Make goals measurable:
  • Determine the purpose and outcome of the goal. (What do we need to observe this student doing? Clearly defined observable behaviors.)
  • Fill in the blanks. (Conditions? Criteria? Frequency of assessment?)
  • Check with the team. (What are parent, student, and other team member perceptions of purpose and outcome?)
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 2: Making data collection decisions
• Make the statement of progress specific:
  • Determine what type of data will be collected (e.g., frequency, percentage, duration, quality, level of assistance, fluency).
  • Determine where the data will be collected (e.g., classroom, cafeteria, playground, job site).
  • Determine how often evidence will be collected (e.g., daily, weekly, monthly, quarterly). How often is enough to truly show progress, or the lack of it?
  • Determine who will collect the data (e.g., classroom teacher, student, special education teacher, OT, school psychologist).
  • Check with the team. (What are parent, student, and other team member perceptions of data collection and reporting?)
Southwest Ohio Special Education Resource Center

Activity One: Filling in the Missing Pieces
• Consider your current practices.
  • Changes?
  • Questions?
  • Feedback?
  • Roadblocks?
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 3: Determining data collection tools and schedule • The tools used to collect data and ultimately measure progress provide evidence of student performance specific to goals or expected outcomes. • Data collection tools should represent different types of measurement in order to provide a clear picture of student progress.

Step 3: Determining data collection tools and schedule, continued
• DIRECT MEASUREMENT provides valid and reliable indications of student progress.
  • Behavior Observation can be documented in many different ways; behavior observation provides firsthand evidence of student performance as it occurs.
    • Observation Narratives
    • Data Charts
    • Frequency Recording
    • Duration Recording
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 3: Determining data collection tools and schedule, continued
• DIRECT MEASUREMENT (continued)
  • Curriculum Based Assessment (CBA) is the direct observation and recording of a student’s performance in the school curriculum.
    • Criterion Referenced Test (CRT)
      • Teacher constructed
      • Focuses on hierarchies of skills in the general education curriculum
    • Curriculum Based Measure (CBM)
      • Brief, standardized samples
      • Fluency based (accuracy and time)
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 3: Determining data collection tools and schedule, continued • INDIRECT MEASUREMENT can supplement direct measures. • Rubrics • Describes performance on a scale from desired performance to undesired performance using both qualitative and quantitative descriptions. Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 3: Determining data collection tools and schedule, continued • INDIRECT MEASUREMENT (continued) • Interviews • Provide a summary of student performance on a given behavior in a structured format; regular education teachers or other school personnel can informally conference with the teacher in charge of data collection; conferences are then summarized and added to the progress monitoring file. • Student Self-Monitoring • Documents student behaviors and performance through self recording given specific cues. Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 3: Determining data collection tools and schedule, continued
• AUTHENTIC MEASUREMENT provides evidence of student performance through genuine student input.
  • Work Samples
    • Provide evidence of student performance through “hard copies” of actual student work.
      • Writing
      • Math
      • Projects (cutting, drawing)
      • Pictures of student work
      • Audio recordings of student performance (reading, responding to questions)
  • Portfolios
    • Document student performance through a collection of work samples demonstrating specific outcomes.
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 3: Determining data collection tools and schedule, continued
• AUTHENTIC MEASUREMENT (continued)
  • Student Interviews
    • Assess student performance through informal conferences between the teacher and student; conversations are then summarized and included in the progress monitoring file.
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Activity Two: Filling in the Missing Pieces. • Consider your current practices around using data collection tools for direct measurement. • Questions? • Feedback? • Roadblocks? Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 3: Determining data collection tools and schedule, continued • The data collection schedule depends on how service is delivered. • Direct Instruction • Times for data collection should be worked into daily and weekly plans for instruction. • Data collection does not necessarily have to be separate from this instructional time; this situation can provide a real picture of student performance during a typical day. Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 3: Determining data collection tools and schedule, continued
• Indirect Instruction or Support
  • Times for data collection should be worked into the time when service is being delivered, if possible.
  • Data can also be collected remotely by regular education teachers or other service providers.
• Consultation
  • Regular education teachers and other service providers play a key role in data collection and input.
  • Times for data collection should also be scheduled when concerns have been brought up; this is a perfect opportunity for using direct measures (observations, data charts, etc.).
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

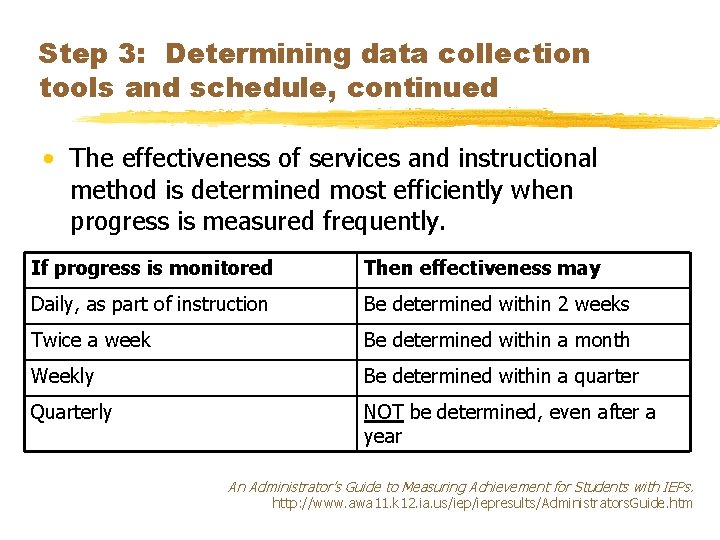

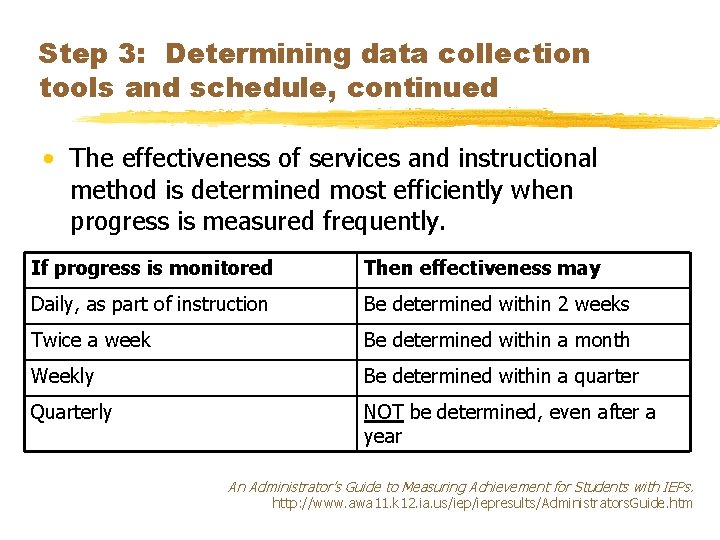

Step 3: Determining data collection tools and schedule, continued
• The effectiveness of services and instructional method is determined most efficiently when progress is measured frequently.

| If progress is monitored | Then effectiveness may |
| --- | --- |
| Daily, as part of instruction | Be determined within 2 weeks |
| Twice a week | Be determined within a month |
| Weekly | Be determined within a quarter |
| Quarterly | NOT be determined, even after a year |

An Administrator’s Guide to Measuring Achievement for Students with IEPs. http://www.awa11.k12.ia.us/iepresults/AdministratorsGuide.htm

Activity Three: Filling in the Missing Pieces. • Consider your practices around developing data collection schedules for the tools you use to measure behavior. • Questions? • Feedback? • Roadblocks? Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 4: Representing Data Visually • Compiling data is a critical component in progress monitoring. • Summarizes data collected periodically during the duration of an IEP. • Ultimately saves time; attempting to compile all data collected during the duration of a year long IEP would be an overwhelming task. • Provides the team with useful reference points in time. • Saves time and confusion during meetings. • To graph or not to graph. Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

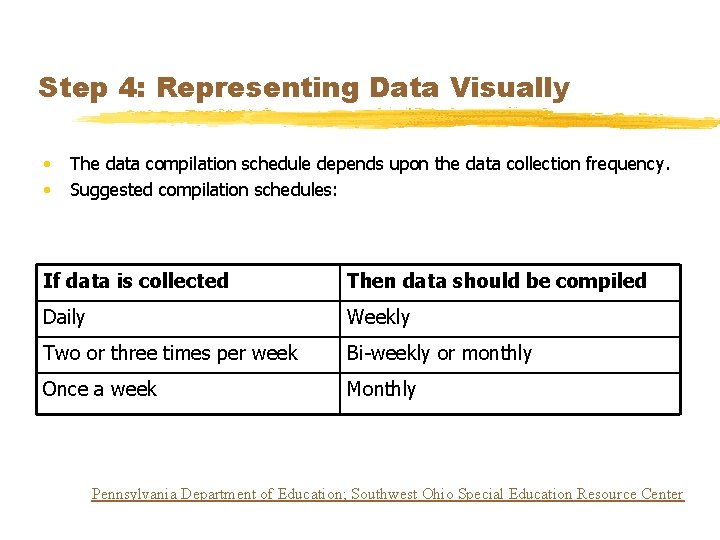

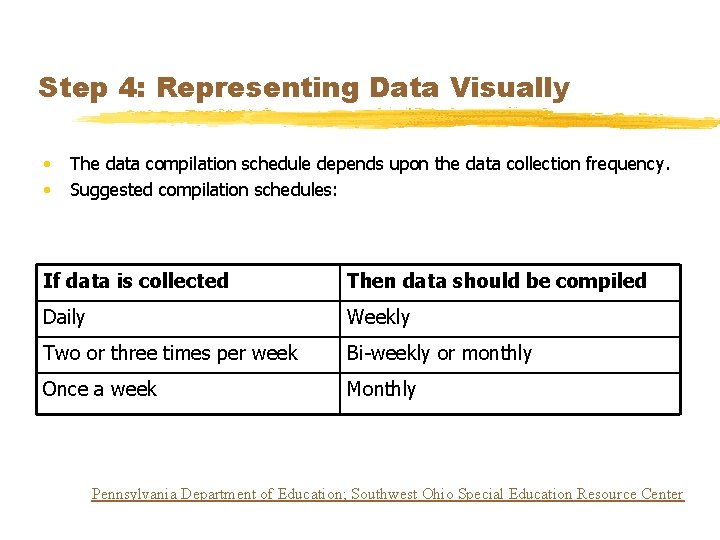

Step 4: Representing Data Visually
• The data compilation schedule depends upon the data collection frequency.
• Suggested compilation schedules:

| If data is collected | Then data should be compiled |
| --- | --- |
| Daily | Weekly |
| Two or three times per week | Bi-weekly or monthly |
| Once a week | Monthly |

Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center
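As one possible illustration of the "collected daily, compiled weekly" schedule, the sketch below groups daily scores by calendar week and averages them. The function name `compile_weekly`, the use of the mean as the summary, and the sample scores are all assumptions made for this example; the source does not prescribe a particular summary statistic or tool.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def compile_weekly(records):
    """Summarize daily (date, score) records as one mean score per ISO week.

    Hypothetical helper: the mean is an assumed summary, not one the
    source materials require.
    """
    by_week = defaultdict(list)
    for day, score in records:
        iso = day.isocalendar()              # (ISO year, ISO week, weekday)
        by_week[(iso[0], iso[1])].append(score)
    # Sort by (year, week) so the summary reads in time order.
    return {week: mean(scores) for week, scores in sorted(by_week.items())}


# Made-up daily probe scores for two school weeks (Monday to Friday).
daily_records = [
    (date(2024, 1, 8), 10), (date(2024, 1, 9), 12), (date(2024, 1, 10), 11),
    (date(2024, 1, 11), 13), (date(2024, 1, 12), 14),
    (date(2024, 1, 15), 14), (date(2024, 1, 16), 15), (date(2024, 1, 17), 15),
    (date(2024, 1, 18), 16), (date(2024, 1, 19), 15),
]
weekly_summary = compile_weekly(daily_records)
for (year, week), avg in weekly_summary.items():
    print(f"{year} week {week}: mean score {avg}")
```

Each weekly value can then serve as one point on a graph or one row on a compilation form.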

Activity Four: Compiling data and representing it visually • Consider data compilation: • If you already practice compiling data, how do you make it work along with all other responsibilities? • If you don’t usually compile data, how might it be worked in with everything else you do during school? Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 5: Evaluating the data
• Data collection provides information used to drive instruction.
• Collected data must be reviewed regularly and on a predetermined basis.
• The data must be evaluated to determine if the student is making progress toward goals/benchmarks/objectives, and how the child is responding to the intervention/treatment being implemented.
• Decision rules should be applied when analyzing data (e.g., four consecutive data points, or four out of the last six, below the aimline).
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center
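The example decision rule on this slide can be sketched in a few lines. This is only an illustration under stated assumptions: the slide does not define how the aimline is built, so `linear_aimline` assumes a straight line from a baseline score to a goal score, and the rule is checked against the most recent four and six data points. All names and sample scores are hypothetical.

```python
def linear_aimline(baseline, goal, n_points):
    """Assumed aimline: a straight line from baseline to goal over n_points probes."""
    if n_points < 2:
        raise ValueError("an aimline needs at least two points")
    step = (goal - baseline) / (n_points - 1)
    return [baseline + step * i for i in range(n_points)]


def needs_instructional_change(scores, aimline):
    """True if the last four points are all below the aimline,
    or at least four of the last six points are below it."""
    if len(scores) != len(aimline):
        raise ValueError("scores and aimline must have the same length")
    below = [score < target for score, target in zip(scores, aimline)]
    last_four, last_six = below[-4:], below[-6:]
    four_consecutive = len(last_four) == 4 and all(last_four)
    four_of_six = len(last_six) == 6 and sum(last_six) >= 4
    return four_consecutive or four_of_six


# Made-up weekly scores: baseline of 20, goal of 40 after 11 probes.
aimline = linear_aimline(20, 40, 11)           # 20, 22, 24, ... 40
scores = [20, 23, 24, 25, 26, 27, 28, 29]      # growth slower than the aimline
print(needs_instructional_change(scores, aimline[:len(scores)]))
```

A team would substitute its own agreed decision rule and aimline; the point of the sketch is that the rule is applied the same way every time the data are reviewed.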

Step 6: Making instructional decisions • Student progress is considered in relationship to each goal or expected outcome. Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 6: Making instructional decisions, continued
• Four aspects should be considered:
1. Progress: Did the student make the progress expected by the SAT/IEP team? (criteria)
2. Comparison to Peers or Standards: How does the student’s performance compare with the performance of general education students?
3. Independence: Is the student more independent in the goal area?
4. Goal Status: Will work in the goal be continued? Will the student be dismissed from this goal area?
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 6: Making instructional decisions, continued
• When the data patterns indicate the need to intervene, simple instructional interventions should be used. If these adjustments still do not yield results, moderate and then more intensive interventions should be tried.
• When instructional interventions do not result in the expected progress being made for students receiving special education and related services, the IEP team should be reconvened to reevaluate the goal and objectives.
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 6: Making instructional decisions, continued
Examples of data patterns and suggestions for interventions:
1. If the data patterns show that the student is making adequate progress or better progress, the program is working, and the teacher/related service staff should continue the present instructional program.
2. If the data patterns show that the student’s progress is stalled, and the student can do some but not all of the task, the teacher should provide more direct or intensive instruction on difficult steps.
3. If the data patterns show that the student’s progress is at or near zero, the task is too difficult. The teacher/related service staff should teach prerequisite skills.
4. If the data patterns show that the student’s progress is stalled close to the goal, the teacher should provide increased repetitions and frequent opportunities for practice.
5. If the data patterns show that the student’s goal has been accomplished, then the instructional program is successful, and the student should move on to a new goal.
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center
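The five pattern-and-suggestion pairs on this slide can be kept as a simple lookup, for instance on a compilation form or in a spreadsheet script. The sketch below is one hypothetical way to encode them; the names `DataPattern`, `SUGGESTED_ACTION`, and `suggest` are invented for illustration, and deciding which pattern a student's data actually show remains a team judgment that the code does not attempt.

```python
from enum import Enum


class DataPattern(Enum):
    """The five data patterns listed on the slide (labels are paraphrased)."""
    ADEQUATE_PROGRESS = "adequate or better progress"
    STALLED_PARTIAL = "stalled; student can do some but not all of the task"
    NEAR_ZERO = "progress at or near zero"
    STALLED_NEAR_GOAL = "stalled close to the goal"
    GOAL_MET = "goal accomplished"


# Suggested responses, condensed from the slide text.
SUGGESTED_ACTION = {
    DataPattern.ADEQUATE_PROGRESS: "Continue the present instructional program.",
    DataPattern.STALLED_PARTIAL: "Provide more direct or intensive instruction on difficult steps.",
    DataPattern.NEAR_ZERO: "Teach prerequisite skills.",
    DataPattern.STALLED_NEAR_GOAL: "Provide increased repetitions and frequent opportunities for practice.",
    DataPattern.GOAL_MET: "Move on to a new goal.",
}


def suggest(pattern):
    """Return the slide's suggested response for a pattern the team has identified."""
    return SUGGESTED_ACTION[pattern]


print(suggest(DataPattern.NEAR_ZERO))
```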

Activity Five: Evaluating the data and making instructional decisions
• Consider your current practices. How does the SAT/IEP team evaluate the compiled data and use it to inform instruction or service options?
• Questions?
• Feedback?
• Roadblocks?
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 7: Communicating progress
• Progress toward SAT/IEP goals and outcomes is reported to parents as agreed to on the SAT Intervention Plan or Behavior Intervention Plan.
• Timeline
  • Mid-Quarter (Interim Reports)
  • Quarterly
• Format
  • Compilation Forms
  • Graphs
  • Narratives
    • Accompany hard data
    • Explain any instructional changes or specific circumstances
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Step 7: Communicating progress, continued
• Communication about student progress should actively involve the parent and the student.
• Communication can be a motivational tool for students and can strengthen home-school bonds.
• Ways to keep lines of communication open include:
  • Communication books and data logs
  • Parent/teacher conferences
  • Progress reports and report cards
  • Phone calls
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Activity Six: Communicating progress
• Consider practices for communicating progress with teachers, students, and parents.
• Questions?
• Feedback?
• Roadblocks?
Pennsylvania Department of Education; Southwest Ohio Special Education Resource Center

Wrapping it up…
• Progress monitoring remains a required part of the SAT/IEP.
• Other provisions and regulations mandate greater accountability for student progress.
  • Results-oriented shift
  • Outcomes focus
Etscheidt, 2006

Resources
• Progress Monitoring. Pennsylvania Department of Education, 01.2009.
• The IEP: Progress Monitoring Process. Southwest Ohio Special Education Regional Resource Center.
• Selected resources

Advance organizer biologie

Advance organizer biologie Problem solving videos

Problem solving videos Advance organizer unterricht

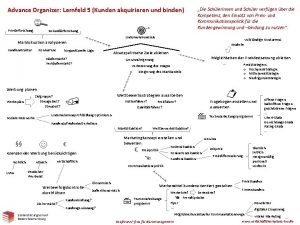

Advance organizer unterricht Kunden akquirieren und binden

Kunden akquirieren und binden Examples of advance organizers

Examples of advance organizers Advance organizer beispiele

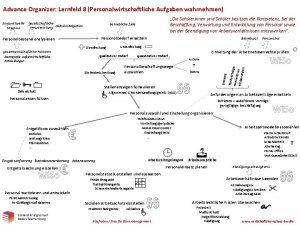

Advance organizer beispiele David ausubel

David ausubel Personalwirtschaftliche aufgaben wahrnehmen

Personalwirtschaftliche aufgaben wahrnehmen Cues and questions

Cues and questions Skimming advance organizer examples

Skimming advance organizer examples Ausubel cognitivisme

Ausubel cognitivisme Advance organizer

Advance organizer Physical progress and financial progress

Physical progress and financial progress Progress monitoring

Progress monitoring Knec monitoring learners' progress

Knec monitoring learners' progress Progress monitoring charts

Progress monitoring charts Progress monitoring system

Progress monitoring system Aimsweb progress monitoring

Aimsweb progress monitoring Progress monitoring

Progress monitoring Openedge database monitoring

Openedge database monitoring Progress monitoring google forms

Progress monitoring google forms Intervention central progress monitoring

Intervention central progress monitoring Franklin academy boynton

Franklin academy boynton Progress monitoring theory

Progress monitoring theory Cli engage dashboard

Cli engage dashboard Progress database monitoring

Progress database monitoring Action planning

Action planning Chart dog graph maker

Chart dog graph maker Apm testing florida

Apm testing florida Cambium progress monitoring tool

Cambium progress monitoring tool Progress monitoring examples

Progress monitoring examples Advance image search

Advance image search Advance care planning verpleegkundige

Advance care planning verpleegkundige Carlo renders

Carlo renders Economic advance and social unrest

Economic advance and social unrest Merchant cash advance direct mail

Merchant cash advance direct mail Cues questions and advance organizers

Cues questions and advance organizers Please order in advance

Please order in advance Planning is deciding in advance what is to be done

Planning is deciding in advance what is to be done Advance science

Advance science