Programming Massively Parallel Processors Lecture Slides for Chapter

![for (m = 0; m < M; m++) { phi. Mag[m] = r. Phi[m]*r. for (m = 0; m < M; m++) { phi. Mag[m] = r. Phi[m]*r.](https://slidetodoc.com/presentation_image_h2/b1517d282fa03c3ab6cb084a074ce7b9/image-10.jpg)

![Algorithms to Accelerate for (m = 0; m < M; m++) { r. Mu[m] Algorithms to Accelerate for (m = 0; m < M; m++) { r. Mu[m]](https://slidetodoc.com/presentation_image_h2/b1517d282fa03c3ab6cb084a074ce7b9/image-11.jpg)

![for (m = 0; m < M; m++) { r. Mu[m] = r. Phi[m]*r. for (m = 0; m < M; m++) { r. Mu[m] = r. Phi[m]*r.](https://slidetodoc.com/presentation_image_h2/b1517d282fa03c3ab6cb084a074ce7b9/image-19.jpg)

![Chunking k-space data to fit into constant memory __constant__ float kx_c[CHUNK_SIZE], ky_c[CHUNK_SIZE], kz_c[CHUNK_SIZE]; … Chunking k-space data to fit into constant memory __constant__ float kx_c[CHUNK_SIZE], ky_c[CHUNK_SIZE], kz_c[CHUNK_SIZE]; …](https://slidetodoc.com/presentation_image_h2/b1517d282fa03c3ab6cb084a074ce7b9/image-26.jpg)

- Slides: 51

Programming Massively Parallel Processors Lecture Slides for Chapter 8: Application Case Study – Advanced MRI Reconstruction © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 1

Objective • To learn about computational thinking skills through a concrete example – – Problem formulation Designing implementations to steer around limitations Validating results Understanding the impact of your improvements • A top to bottom experience! © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 2

Acknowledgements Sam S. Stone§, Haoran Yi§, Justin P. Haldar†, Deepthi Nandakumar, Bradley P. Sutton†, Zhi-Pei Liang†, Keith Thulburin* § Center for Reliable and High-Performance Computing † Beckman Institute for Advanced Science and Technology Department of Electrical and Computer Engineering University of Illinois at Urbana-Champaign * University of Illinois, Chicago Medical Center © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 3

Overview • • Magnetic resonance imaging Non-Cartesian Scanner Trajectory Least-squares (LS) reconstruction algorithm Optimizing the LS reconstruction on the G 80 – Overcoming bottlenecks – Performance tuning • Summary © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 4

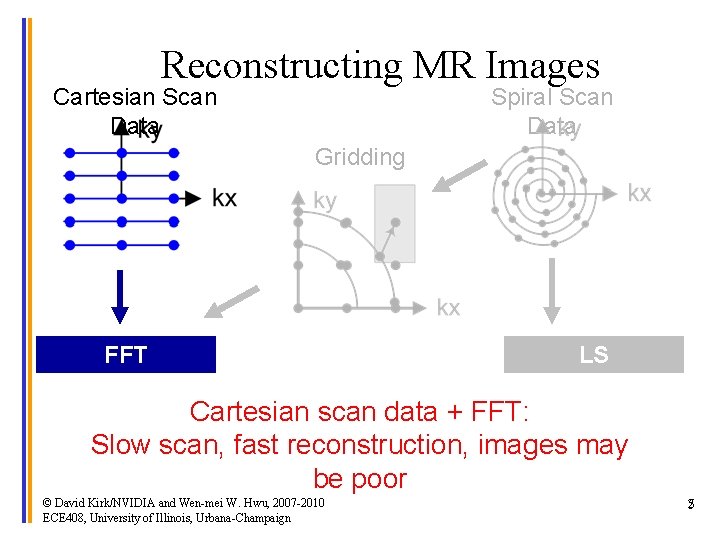

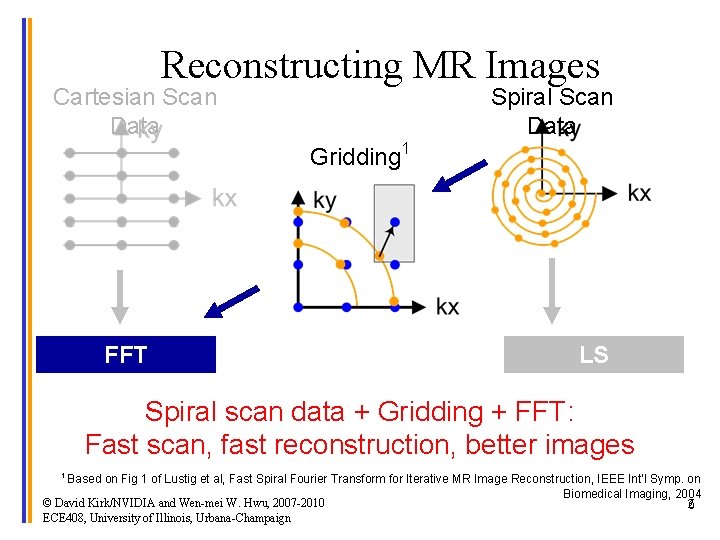

Reconstructing MR Images Cartesian Scan Data Spiral Scan Data Gridding FFT LS Cartesian scan data + FFT: Slow scan, fast reconstruction, images may be poor © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 25

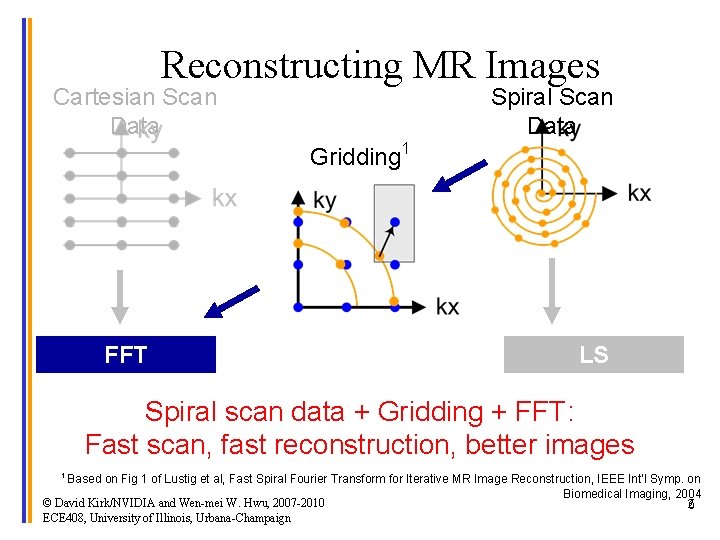

Reconstructing MR Images Cartesian Scan Data Spiral Scan Data Gridding 1 FFT LS Spiral scan data + Gridding + FFT: Fast scan, fast reconstruction, better images 1 Based on Fig 1 of Lustig et al, Fast Spiral Fourier Transform for Iterative MR Image Reconstruction, IEEE Int’l Symp. on Biomedical Imaging, 2004 © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 26 ECE 408, University of Illinois, Urbana-Champaign

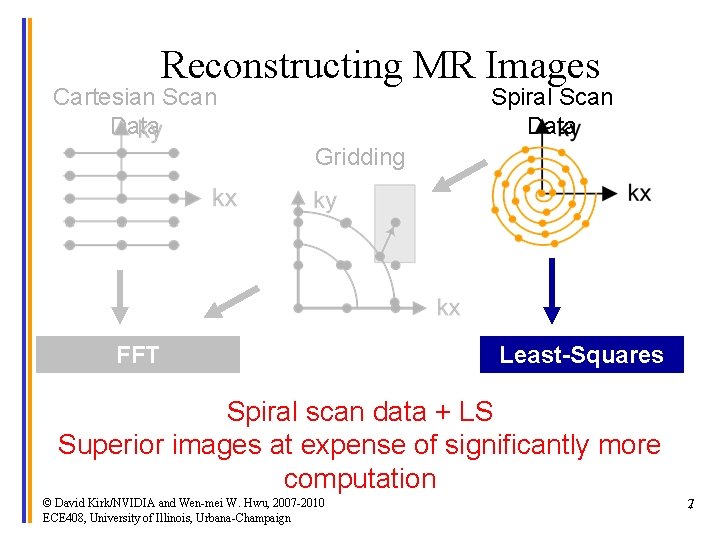

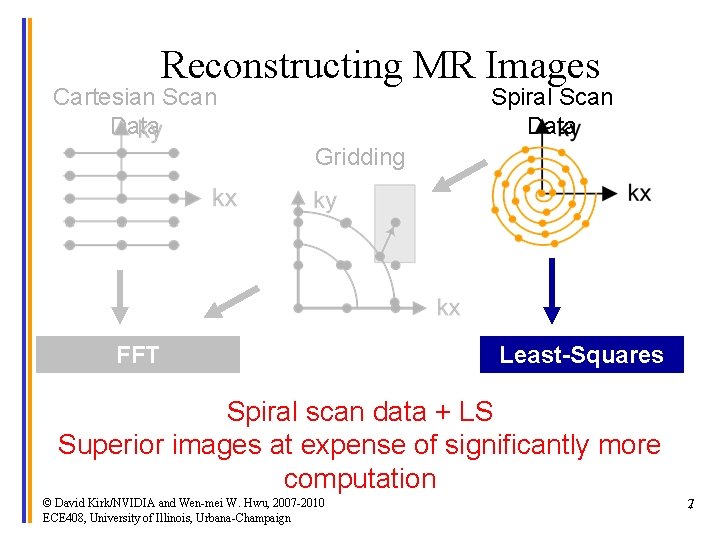

Reconstructing MR Images Cartesian Scan Data Spiral Scan Data Gridding FFT Least-Squares (LS) Spiral scan data + LS Superior images at expense of significantly more computation © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 27

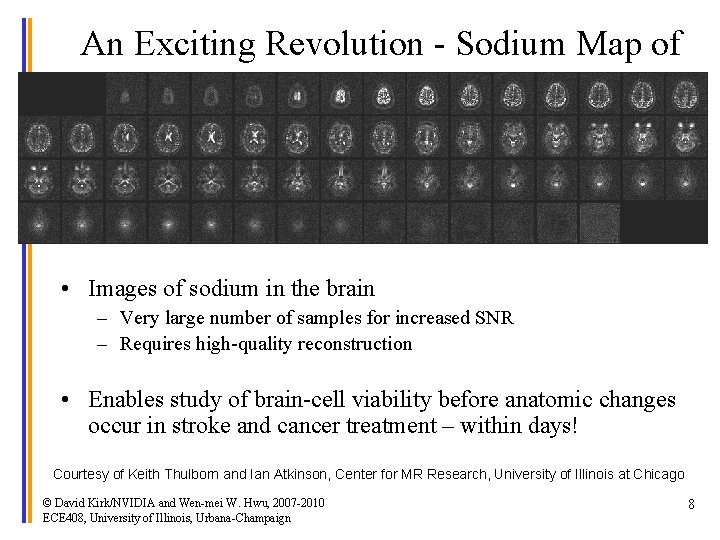

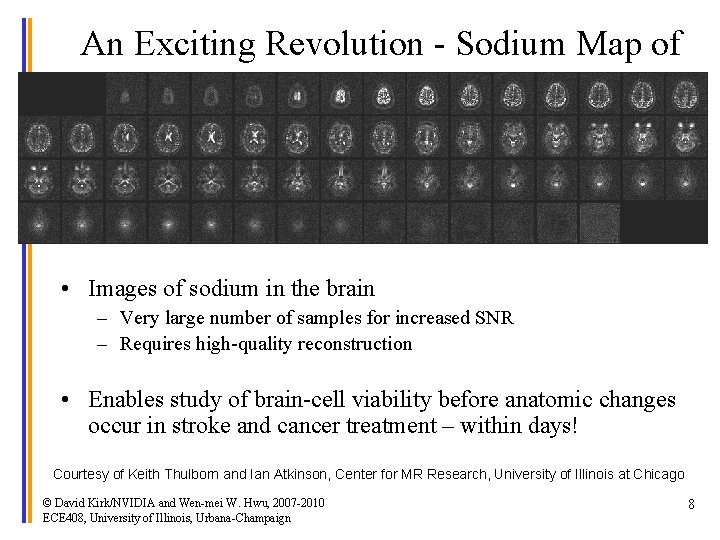

An Exciting Revolution - Sodium Map of the Brain • Images of sodium in the brain – Very large number of samples for increased SNR – Requires high-quality reconstruction • Enables study of brain-cell viability before anatomic changes occur in stroke and cancer treatment – within days! Courtesy of Keith Thulborn and Ian Atkinson, Center for MR Research, University of Illinois at Chicago © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 8

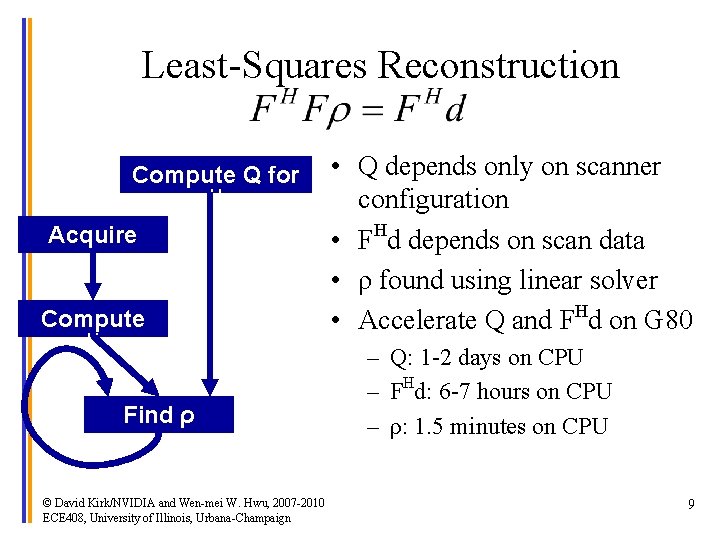

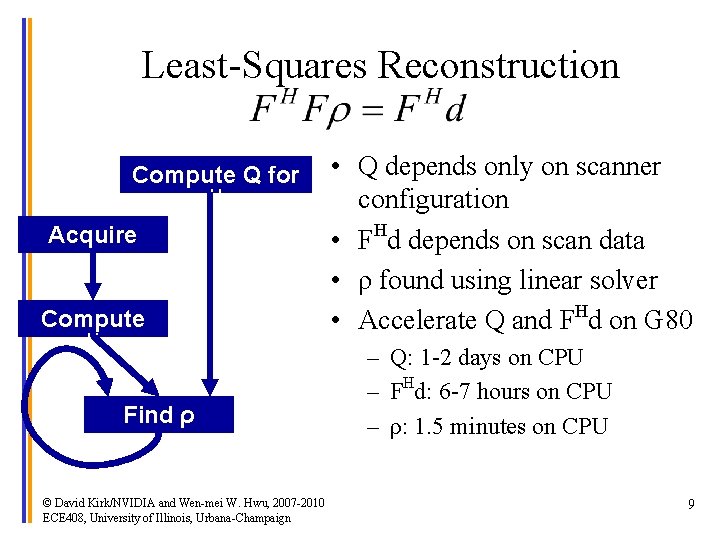

Least-Squares Reconstruction Compute Q for F HF Acquire Data Compute F Hd Find ρ © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign • Q depends only on scanner configuration H • F d depends on scan data • ρ found using linear solver • Accelerate Q and FHd on G 80 – Q: 1 -2 days on CPU – FHd: 6 -7 hours on CPU – ρ: 1. 5 minutes on CPU 9

![for m 0 m M m phi Magm r Phimr for (m = 0; m < M; m++) { phi. Mag[m] = r. Phi[m]*r.](https://slidetodoc.com/presentation_image_h2/b1517d282fa03c3ab6cb084a074ce7b9/image-10.jpg)

for (m = 0; m < M; m++) { phi. Mag[m] = r. Phi[m]*r. Phi[m] + i. Phi[m]*i. Phi[m]; r. Mu[m] = r. Phi[m]*r. D[m] + i. Phi[m]*i. D[m]; i. Mu[m] = r. Phi[m]*i. D[m] – i. Phi[m]*r. D[m]; for (n = 0; n < N; n++) { exp. Q = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); for (n = 0; n < N; n++) { exp. Fh. D = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); r. Q[n] +=phi. Mag[m]*cos(exp. Q); i. Q[n] +=phi. Mag[m]*sin(exp. Q); } c. Arg = cos(exp. Fh. D); s. Arg = sin(exp. Fh. D); } (a) Q computation r. Fh. D[n] += i. Fh. D[n] += Q v. s. FHD } } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; (b) FHd computation 10

![Algorithms to Accelerate for m 0 m M m r Mum Algorithms to Accelerate for (m = 0; m < M; m++) { r. Mu[m]](https://slidetodoc.com/presentation_image_h2/b1517d282fa03c3ab6cb084a074ce7b9/image-11.jpg)

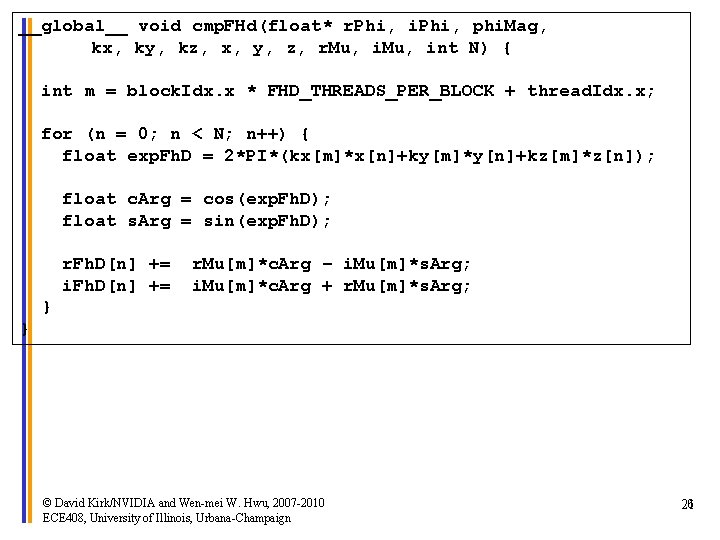

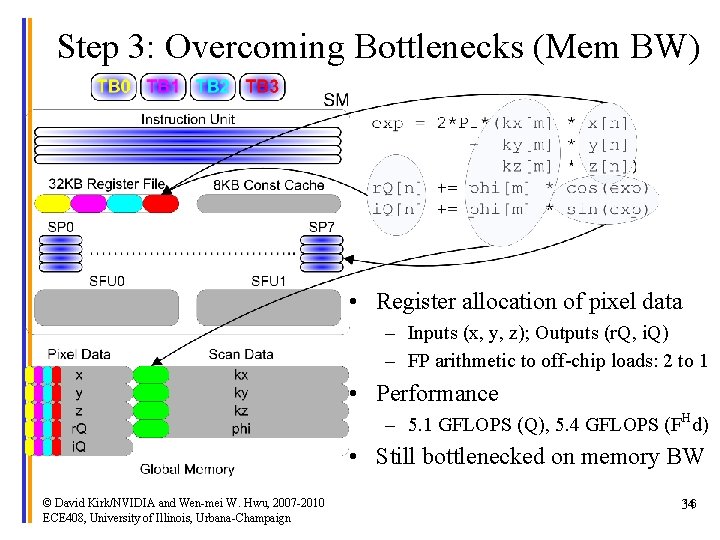

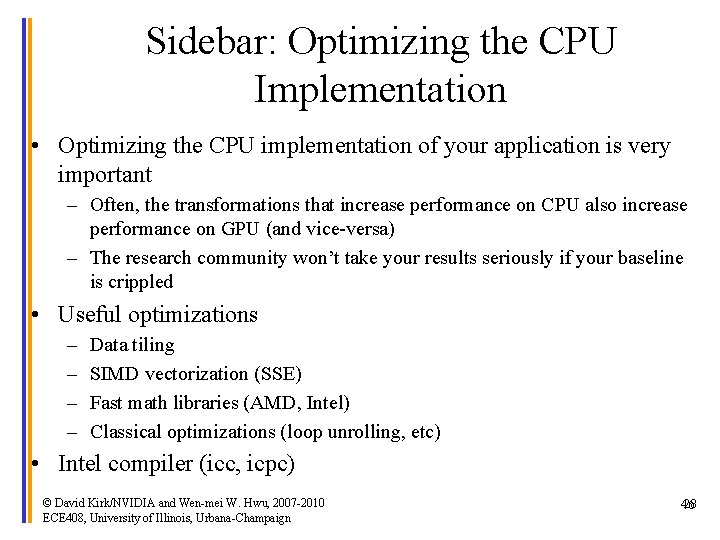

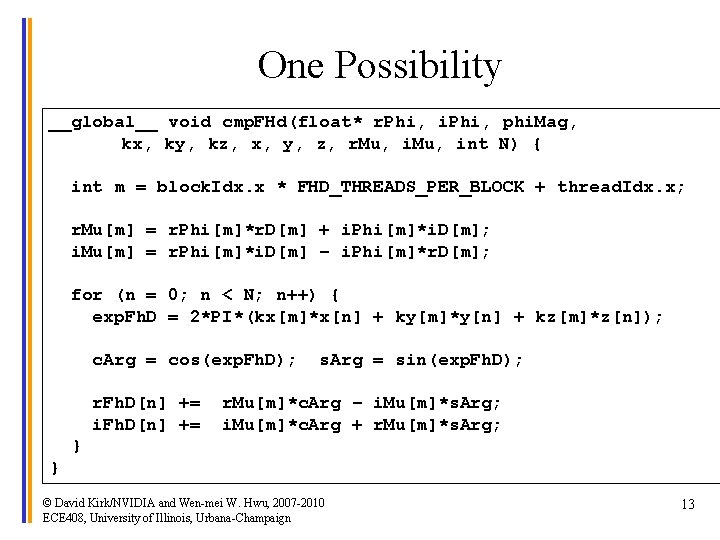

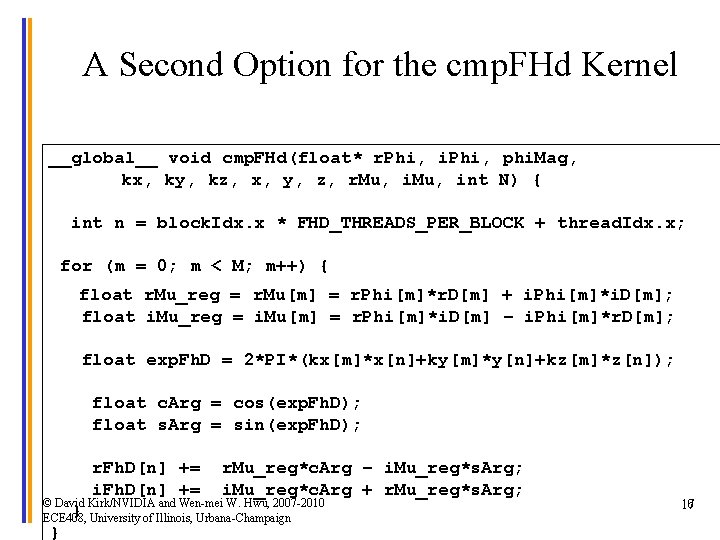

Algorithms to Accelerate for (m = 0; m < M; m++) { r. Mu[m] = r. Phi[m]*r. D[m] + i. Phi[m]*i. D[m]; i. Mu[m] = r. Phi[m]*i. D[m] – i. Phi[m]*r. D[m]; for (n = 0; n < N; n++) { exp. Fh. D = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); c. Arg = cos(exp. Fh. D); s. Arg = sin(exp. Fh. D); r. Fh. D[n] += i. Fh. D[n] += r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } ©}David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign • Scan data – M = # scan points – kx, ky, kz = 3 D scan data • Pixel data – N = # pixels – x, y, z = input 3 D pixel data – r. Fh. D, i. Fh. D= output pixel data • Complexity is O(MN) • Inner loop – 13 FP MUL or ADD ops – 2 FP trig ops – 12 loads, 2 stores 11

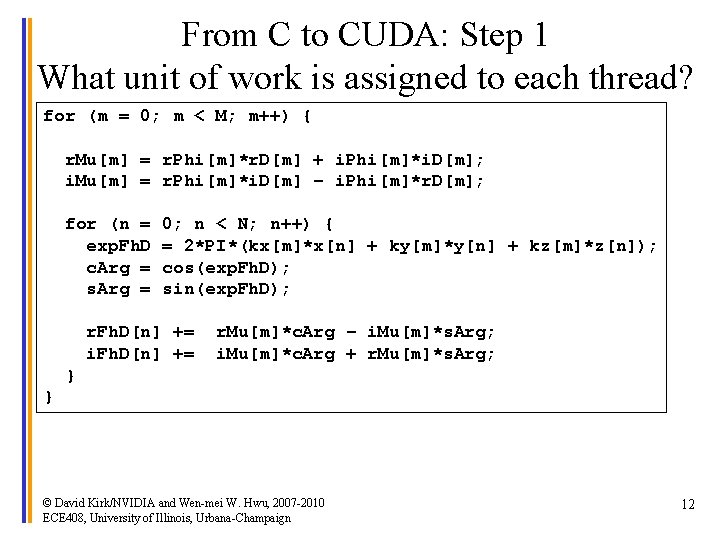

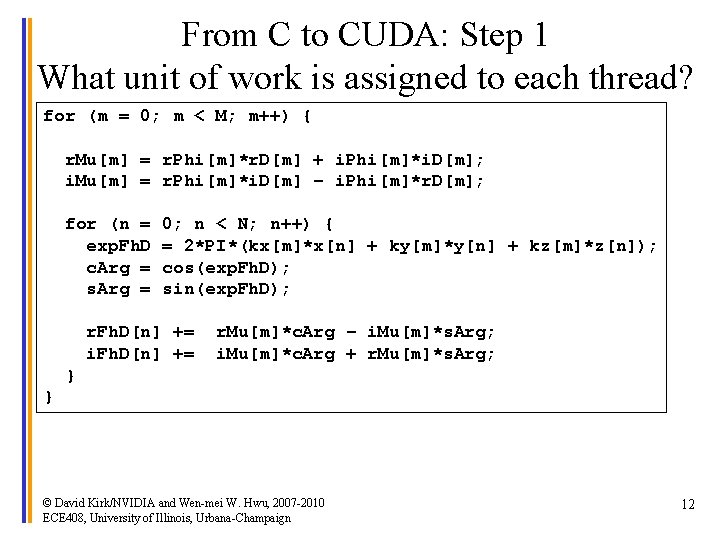

From C to CUDA: Step 1 What unit of work is assigned to each thread? for (m = 0; m < M; m++) { r. Mu[m] = r. Phi[m]*r. D[m] + i. Phi[m]*i. D[m]; i. Mu[m] = r. Phi[m]*i. D[m] – i. Phi[m]*r. D[m]; for (n = exp. Fh. D c. Arg = s. Arg = 0; n < N; n++) { = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); cos(exp. Fh. D); sin(exp. Fh. D); r. Fh. D[n] += i. Fh. D[n] += r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 12

One Possibility __global__ void cmp. FHd(float* r. Phi, i. Phi, phi. Mag, kx, ky, kz, x, y, z, r. Mu, int N) { int m = block. Idx. x * FHD_THREADS_PER_BLOCK + thread. Idx. x; r. Mu[m] = r. Phi[m]*r. D[m] + i. Phi[m]*i. D[m]; i. Mu[m] = r. Phi[m]*i. D[m] – i. Phi[m]*r. D[m]; for (n = 0; n < N; n++) { exp. Fh. D = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); c. Arg = cos(exp. Fh. D); r. Fh. D[n] += i. Fh. D[n] += s. Arg = sin(exp. Fh. D); r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 13

One Possibility - Improved __global__ void cmp. FHd(float* r. Phi, i. Phi, phi. Mag, kx, ky, kz, x, y, z, r. Mu, int N) { int m = block. Idx. x * FHD_THREADS_PER_BLOCK + thread. Idx. x; float r. Mu_reg, i. Mu_reg; r. Mu_reg = r. Mu[m] = r. Phi[m]*r. D[m] + i. Phi[m]*i. D[m]; i. Mu_reg = i. Mu[m] = r. Phi[m]*i. D[m] – i. Phi[m]*r. D[m]; for (n = 0; n < N; n++) { exp. Fh. D = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); c. Arg = cos(exp. Fh. D); r. Fh. D[n] += i. Fh. D[n] += s. Arg = sin(exp. Fh. D); r. Mu_reg*c. Arg – i. Mu_reg*s. Arg; i. Mu_reg*c. Arg + r. Mu_reg*s. Arg; } } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 14

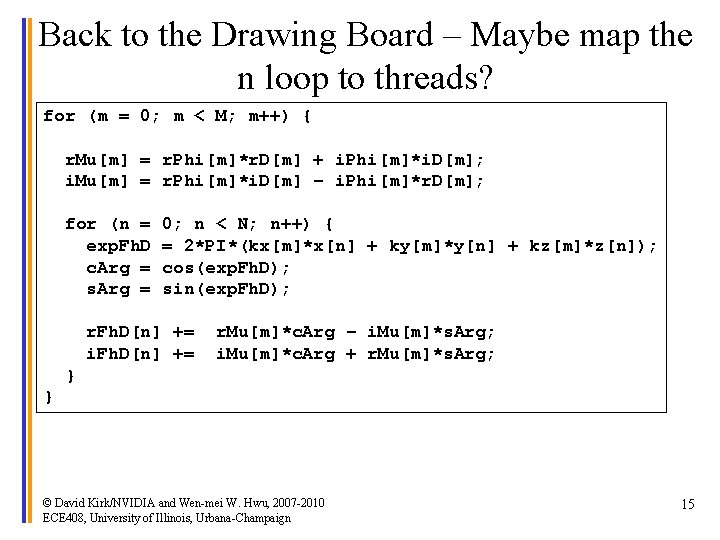

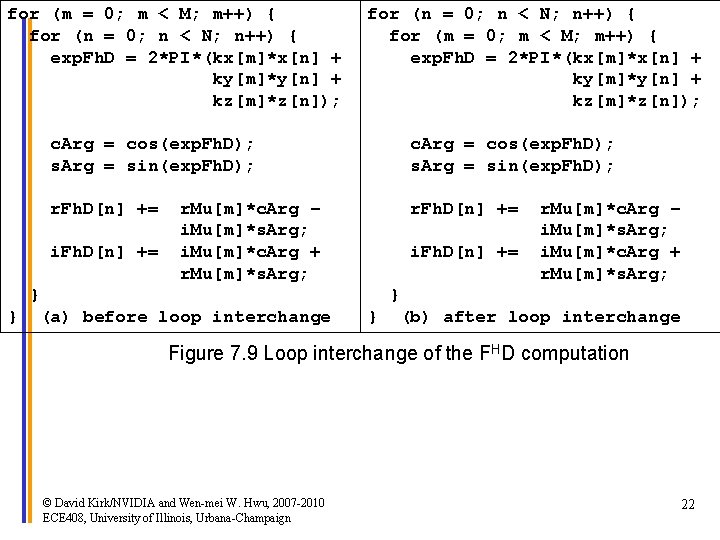

Back to the Drawing Board – Maybe map the n loop to threads? for (m = 0; m < M; m++) { r. Mu[m] = r. Phi[m]*r. D[m] + i. Phi[m]*i. D[m]; i. Mu[m] = r. Phi[m]*i. D[m] – i. Phi[m]*r. D[m]; for (n = exp. Fh. D c. Arg = s. Arg = 0; n < N; n++) { = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); cos(exp. Fh. D); sin(exp. Fh. D); r. Fh. D[n] += i. Fh. D[n] += r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 15

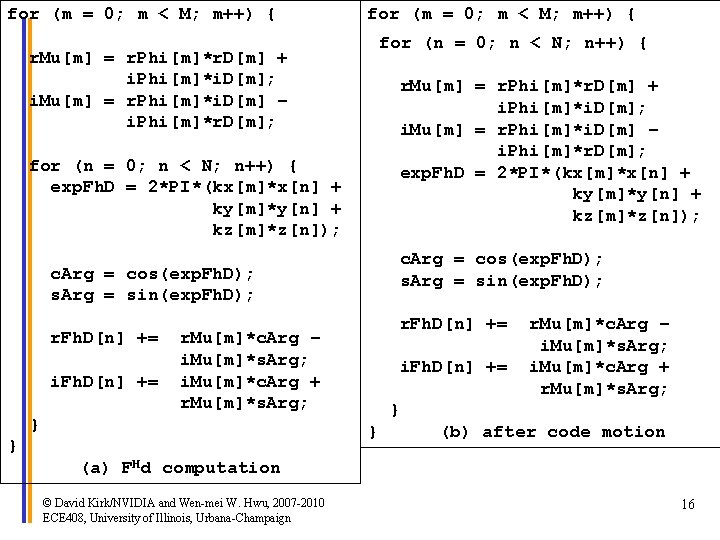

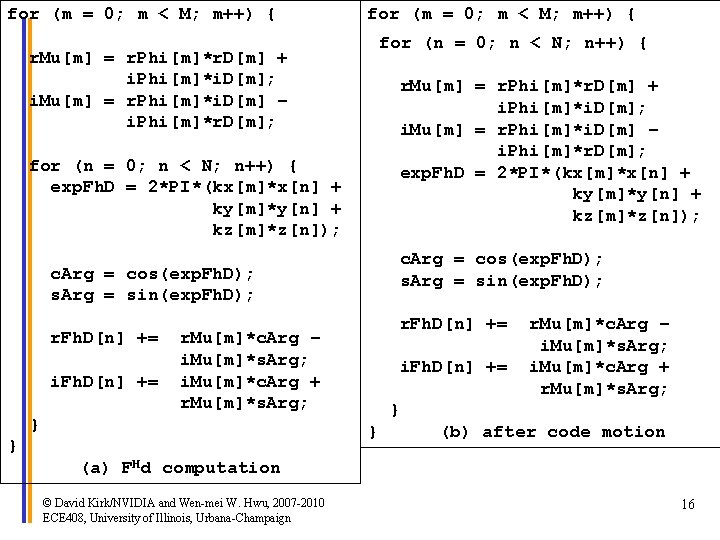

for (m = 0; m < M; m++) { for (n = 0; n < N; n++) { r. Mu[m] = r. Phi[m]*r. D[m] + i. Phi[m]*i. D[m]; i. Mu[m] = r. Phi[m]*i. D[m] – i. Phi[m]*r. D[m]; exp. Fh. D = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); for (n = 0; n < N; n++) { exp. Fh. D = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); c. Arg = cos(exp. Fh. D); s. Arg = sin(exp. Fh. D); r. Fh. D[n] += i. Fh. D[n] += r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } i. Fh. D[n] += } } } r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; (b) after code motion (a) FHd computation © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 16

A Second Option for the cmp. FHd Kernel __global__ void cmp. FHd(float* r. Phi, i. Phi, phi. Mag, kx, ky, kz, x, y, z, r. Mu, int N) { int n = block. Idx. x * FHD_THREADS_PER_BLOCK + thread. Idx. x; for (m = 0; m < M; m++) { float r. Mu_reg = r. Mu[m] = r. Phi[m]*r. D[m] + i. Phi[m]*i. D[m]; float i. Mu_reg = i. Mu[m] = r. Phi[m]*i. D[m] – i. Phi[m]*r. D[m]; float exp. Fh. D = 2*PI*(kx[m]*x[n]+ky[m]*y[n]+kz[m]*z[n]); float c. Arg = cos(exp. Fh. D); float s. Arg = sin(exp. Fh. D); r. Fh. D[n] += i. Fh. D[n] += r. Mu_reg*c. Arg – i. Mu_reg*s. Arg; i. Mu_reg*c. Arg + r. Mu_reg*s. Arg; © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 } University of Illinois, Urbana-Champaign ECE 408, } 6 17

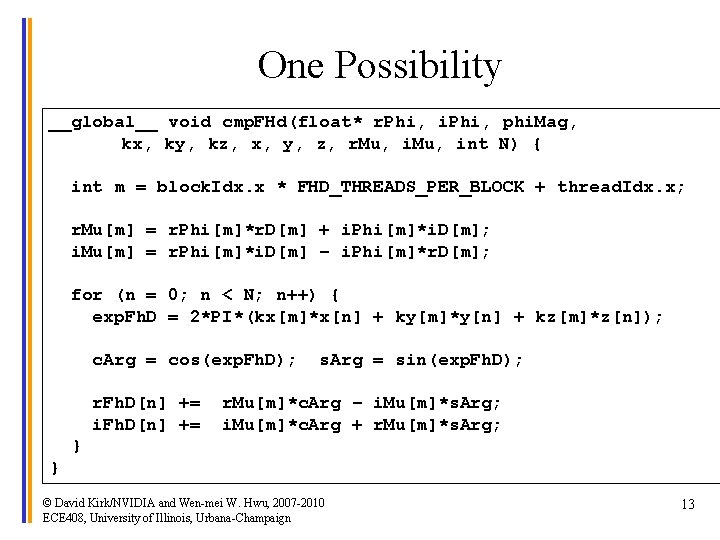

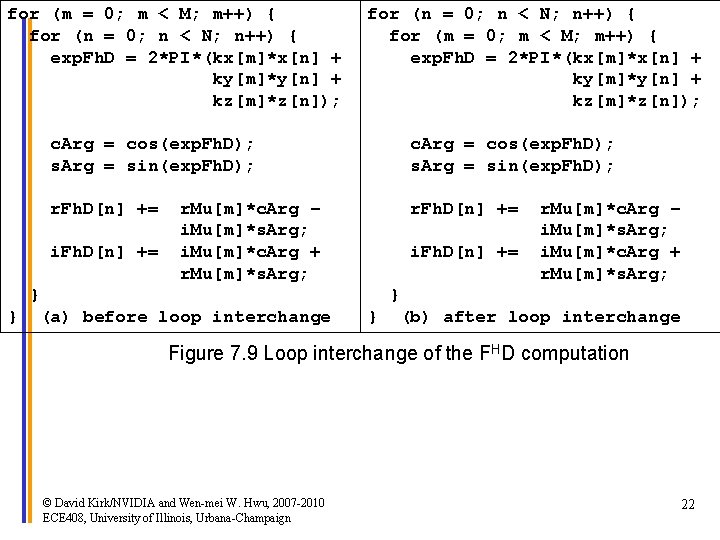

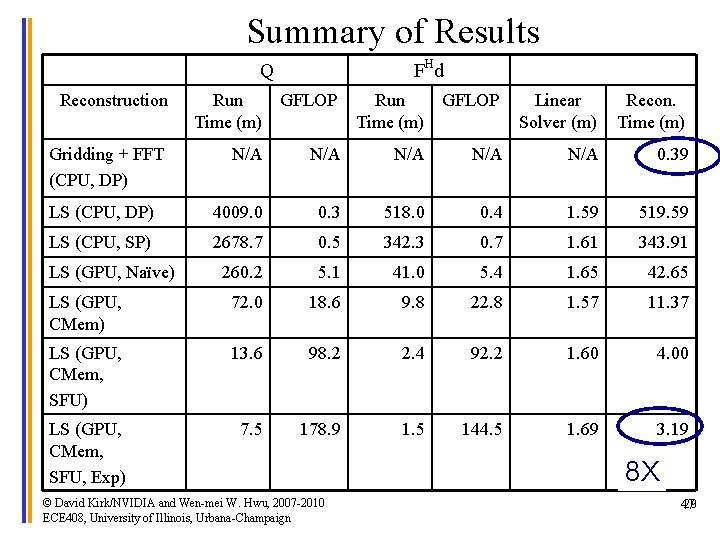

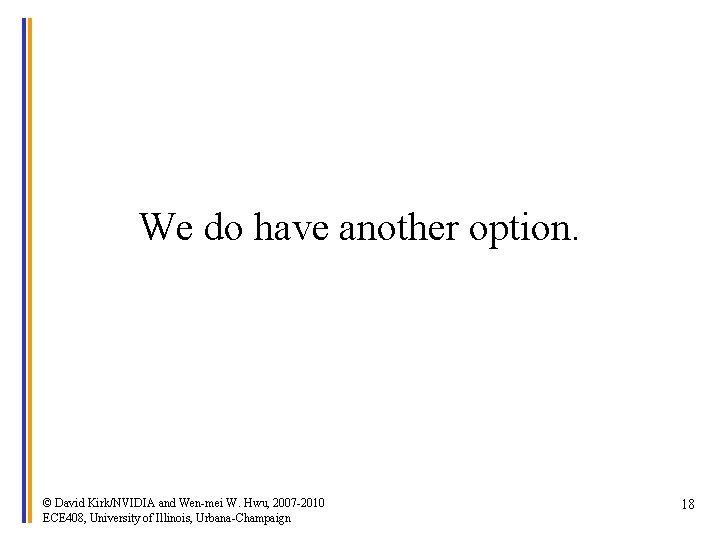

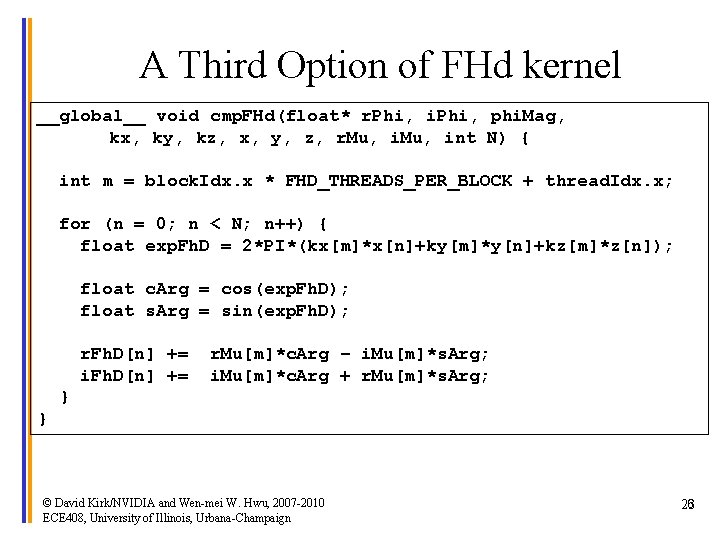

We do have another option. © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 18

![for m 0 m M m r Mum r Phimr for (m = 0; m < M; m++) { r. Mu[m] = r. Phi[m]*r.](https://slidetodoc.com/presentation_image_h2/b1517d282fa03c3ab6cb084a074ce7b9/image-19.jpg)

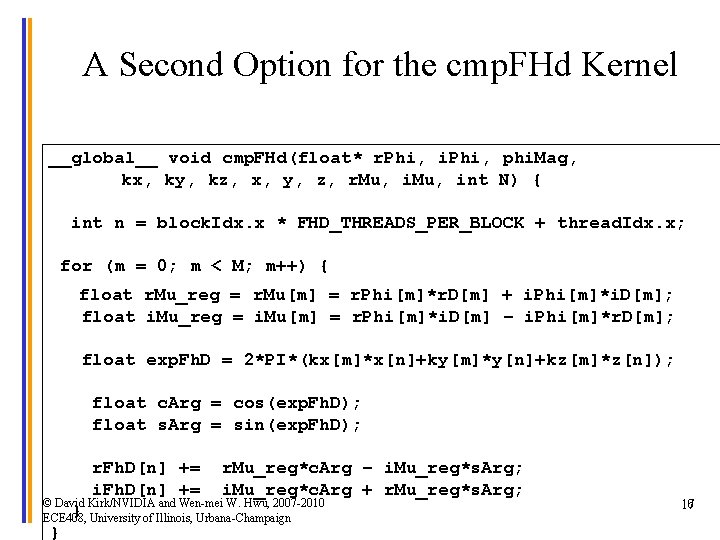

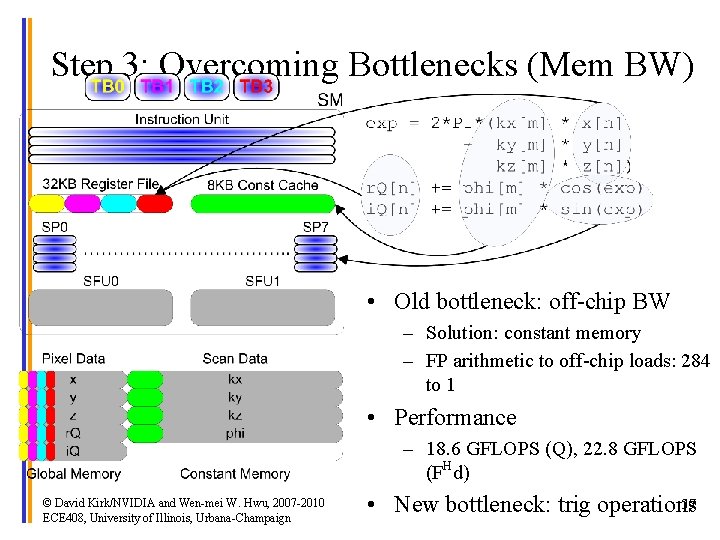

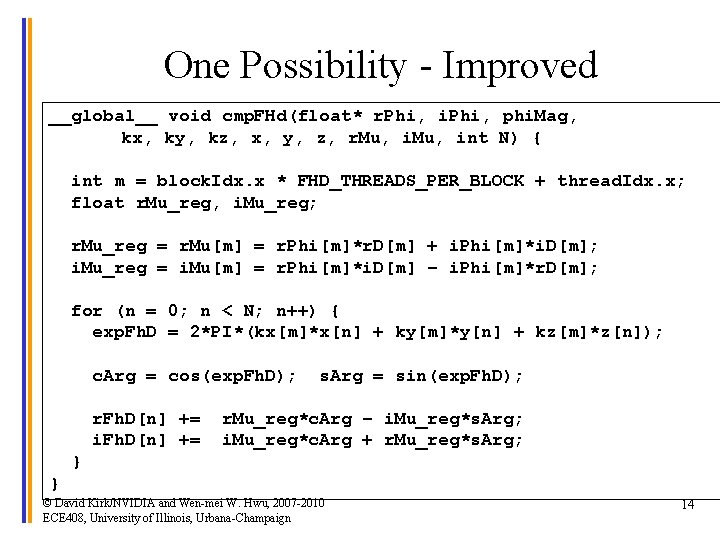

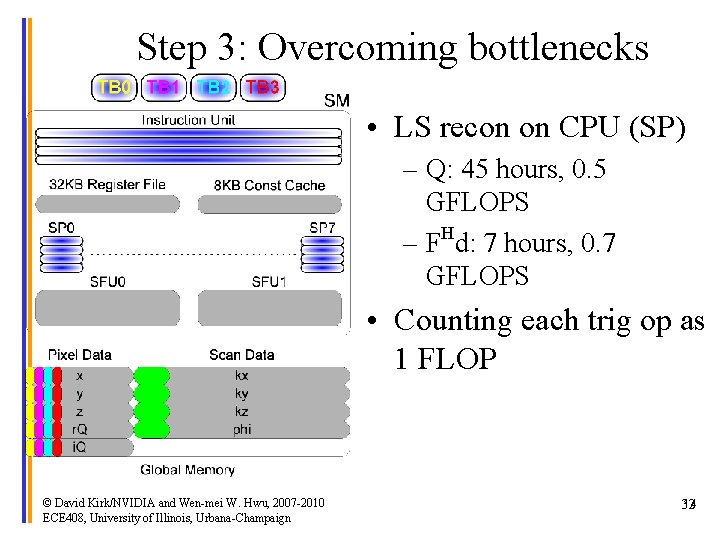

for (m = 0; m < M; m++) { r. Mu[m] = r. Phi[m]*r. D[m] + i. Phi[m]*i. D[m]; i. Mu[m] = r. Phi[m]*i. D[m] – i. Phi[m]*r. D[m]; for (n = 0; n < N; n++) { exp. Fh. D = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); r. Mu[m] = r. Phi[m]*r. D[m] + i. Phi[m]*i. D[m]; i. Mu[m] = r. Phi[m]*i. D[m] – i. Phi[m]*r. D[m]; } for (m = 0; m < M; m++) { for (n = 0; n < N; n++) { exp. Fh. D = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); c. Arg = cos(exp. Fh. D); s. Arg = sin(exp. Fh. D); r. Fh. D[n] += i. Fh. D[n] += c. Arg = cos(exp. Fh. D); s. Arg = sin(exp. Fh. D); r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; r. Fh. D[n] += i. Fh. D[n] += } } r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } (a) FHd computation © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign } (b) after loop fission 19

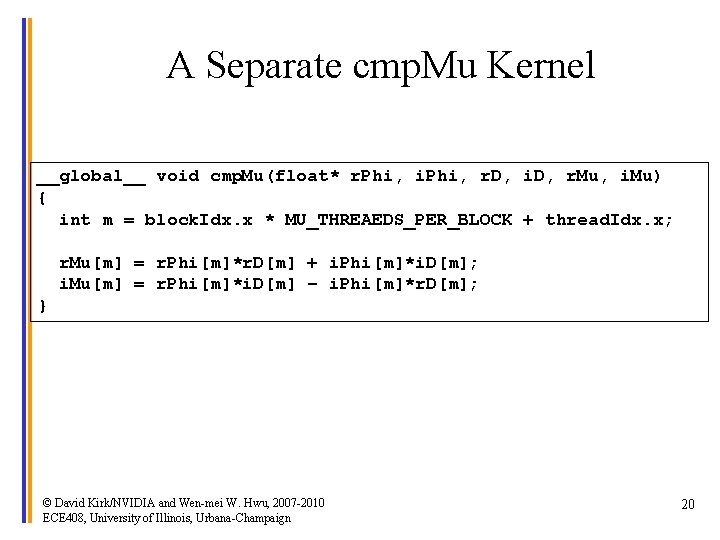

A Separate cmp. Mu Kernel __global__ void cmp. Mu(float* r. Phi, i. Phi, r. D, i. D, r. Mu, i. Mu) { int m = block. Idx. x * MU_THREAEDS_PER_BLOCK + thread. Idx. x; r. Mu[m] = r. Phi[m]*r. D[m] + i. Phi[m]*i. D[m]; i. Mu[m] = r. Phi[m]*i. D[m] – i. Phi[m]*r. D[m]; } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 20

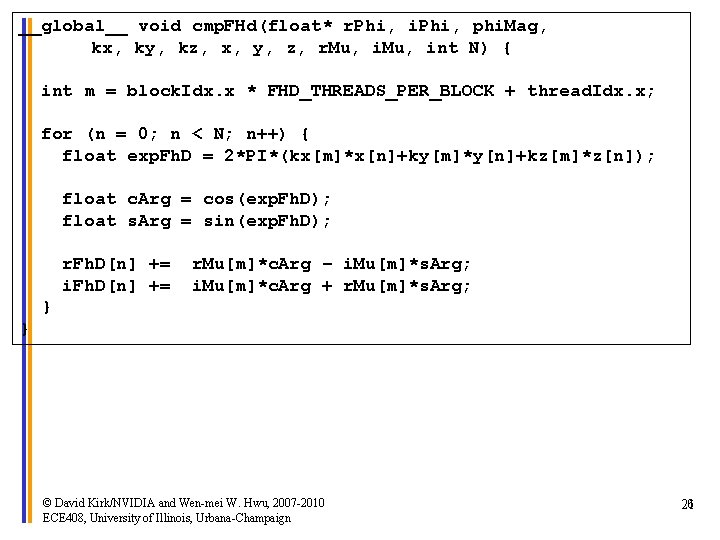

__global__ void cmp. FHd(float* r. Phi, i. Phi, phi. Mag, kx, ky, kz, x, y, z, r. Mu, int N) { int m = block. Idx. x * FHD_THREADS_PER_BLOCK + thread. Idx. x; for (n = 0; n < N; n++) { float exp. Fh. D = 2*PI*(kx[m]*x[n]+ky[m]*y[n]+kz[m]*z[n]); float c. Arg = cos(exp. Fh. D); float s. Arg = sin(exp. Fh. D); r. Fh. D[n] += i. Fh. D[n] += r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 6 21

for (m = 0; m < M; m++) { for (n = 0; n < N; n++) { exp. Fh. D = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); c. Arg = cos(exp. Fh. D); s. Arg = sin(exp. Fh. D); r. Fh. D[n] += i. Fh. D[n] += r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } } for (n = 0; n < N; n++) { for (m = 0; m < M; m++) { exp. Fh. D = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]); (a) before loop interchange i. Fh. D[n] += r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } } (b) after loop interchange Figure 7. 9 Loop interchange of the FHD computation © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 22

A Third Option of FHd kernel __global__ void cmp. FHd(float* r. Phi, i. Phi, phi. Mag, kx, ky, kz, x, y, z, r. Mu, int N) { int m = block. Idx. x * FHD_THREADS_PER_BLOCK + thread. Idx. x; for (n = 0; n < N; n++) { float exp. Fh. D = 2*PI*(kx[m]*x[n]+ky[m]*y[n]+kz[m]*z[n]); float c. Arg = cos(exp. Fh. D); float s. Arg = sin(exp. Fh. D); r. Fh. D[n] += i. Fh. D[n] += r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 6 23

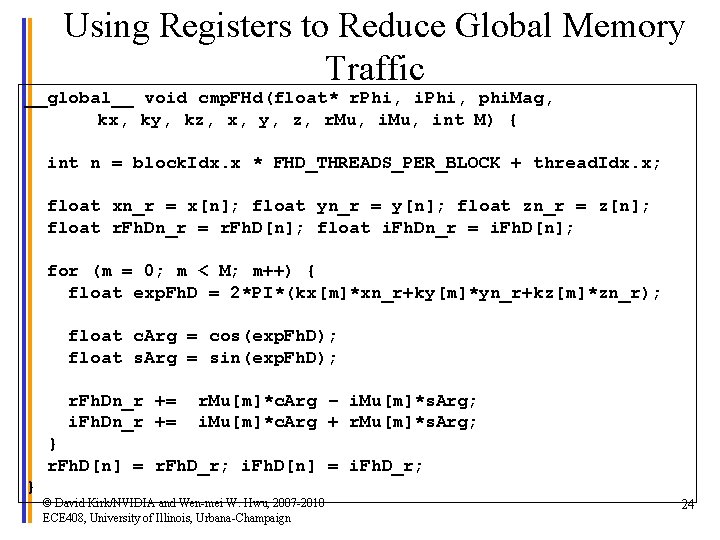

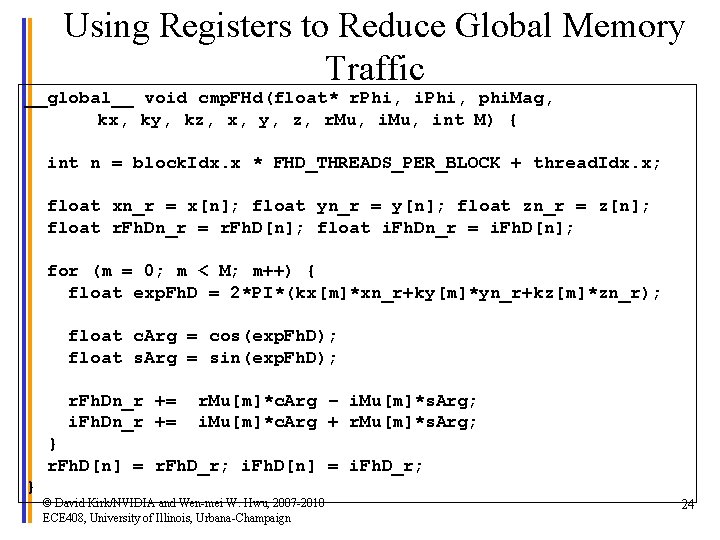

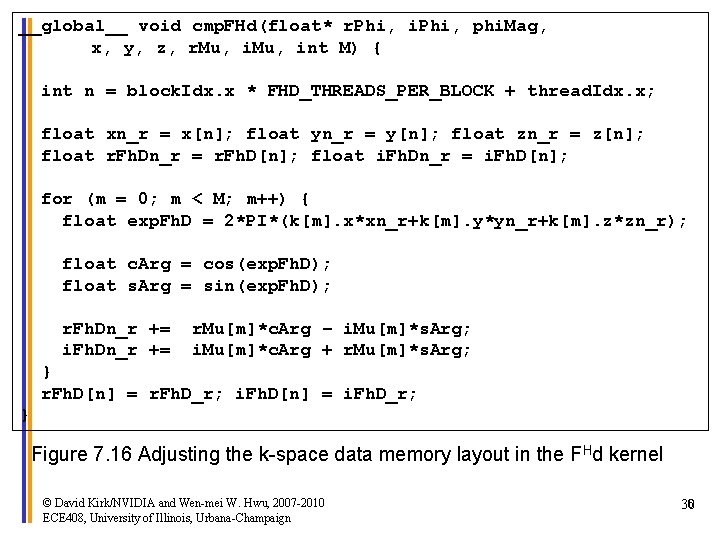

Using Registers to Reduce Global Memory Traffic __global__ void cmp. FHd(float* r. Phi, i. Phi, phi. Mag, kx, ky, kz, x, y, z, r. Mu, int M) { int n = block. Idx. x * FHD_THREADS_PER_BLOCK + thread. Idx. x; float xn_r = x[n]; float yn_r = y[n]; float zn_r = z[n]; float r. Fh. Dn_r = r. Fh. D[n]; float i. Fh. Dn_r = i. Fh. D[n]; for (m = 0; m < M; m++) { float exp. Fh. D = 2*PI*(kx[m]*xn_r+ky[m]*yn_r+kz[m]*zn_r); float c. Arg = cos(exp. Fh. D); float s. Arg = sin(exp. Fh. D); r. Fh. Dn_r += i. Fh. Dn_r += r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } r. Fh. D[n] = r. Fh. D_r; i. Fh. D[n] = i. Fh. D_r; } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 24

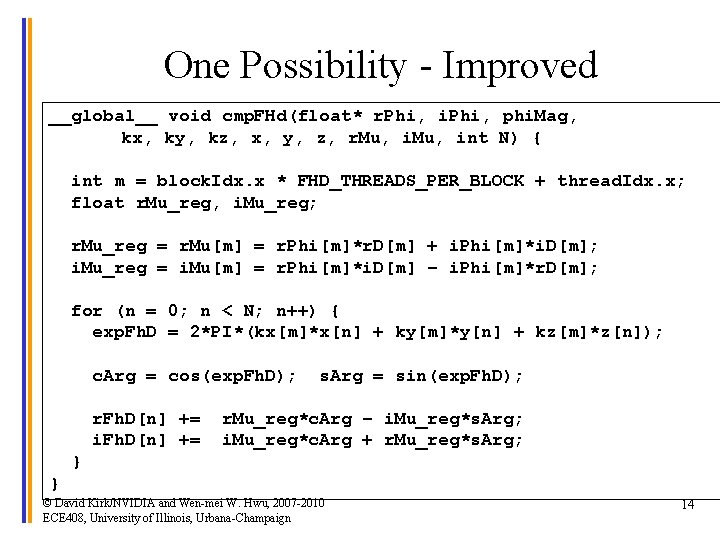

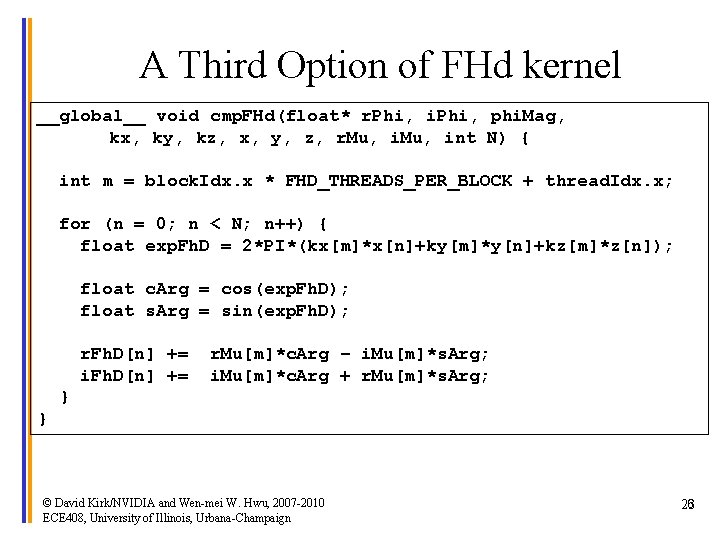

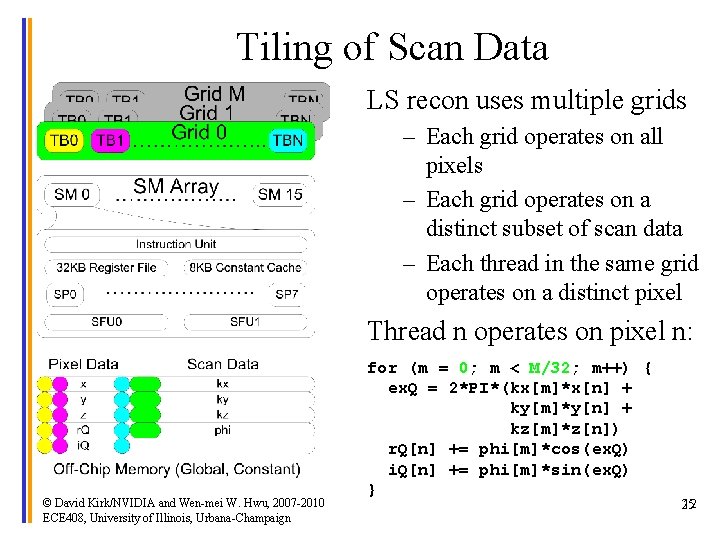

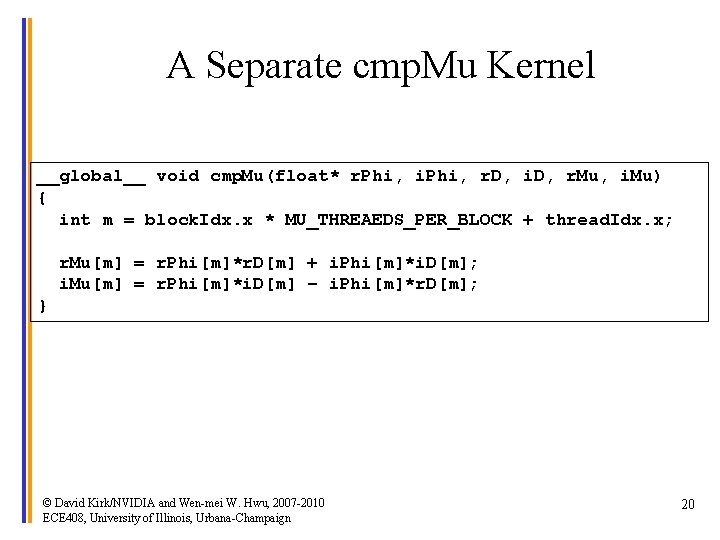

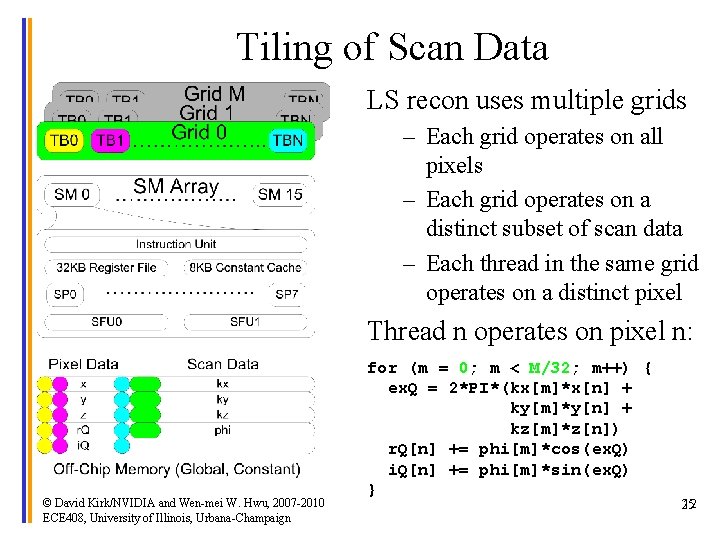

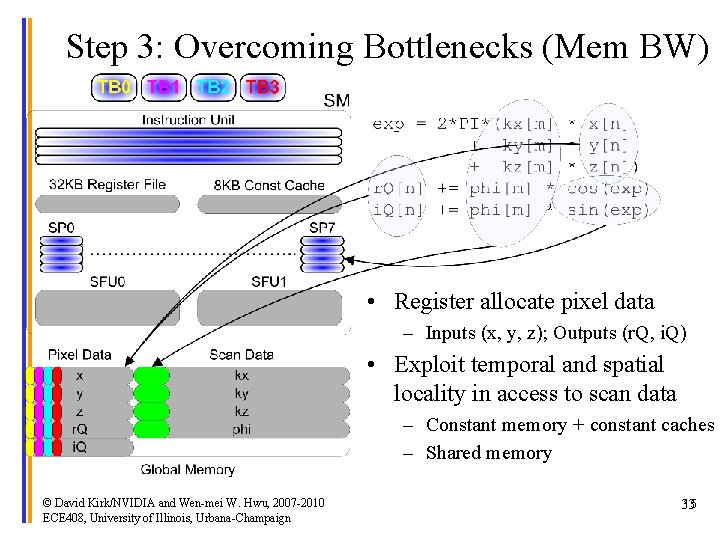

Tiling of Scan Data LS recon uses multiple grids – Each grid operates on all pixels – Each grid operates on a distinct subset of scan data – Each thread in the same grid operates on a distinct pixel Thread n operates on pixel n: © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign for (m = 0; m < M/32; m++) { ex. Q = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]) r. Q[n] += phi[m]*cos(ex. Q) i. Q[n] += phi[m]*sin(ex. Q) } 12 25

![Chunking kspace data to fit into constant memory constant float kxcCHUNKSIZE kycCHUNKSIZE kzcCHUNKSIZE Chunking k-space data to fit into constant memory __constant__ float kx_c[CHUNK_SIZE], ky_c[CHUNK_SIZE], kz_c[CHUNK_SIZE]; …](https://slidetodoc.com/presentation_image_h2/b1517d282fa03c3ab6cb084a074ce7b9/image-26.jpg)

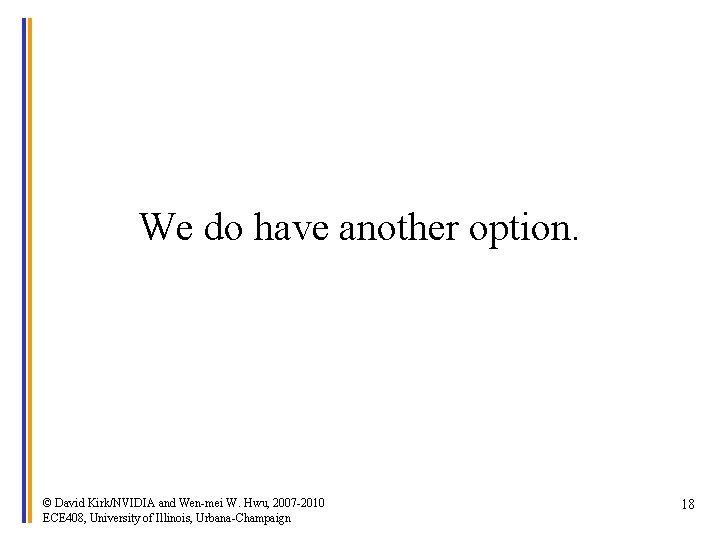

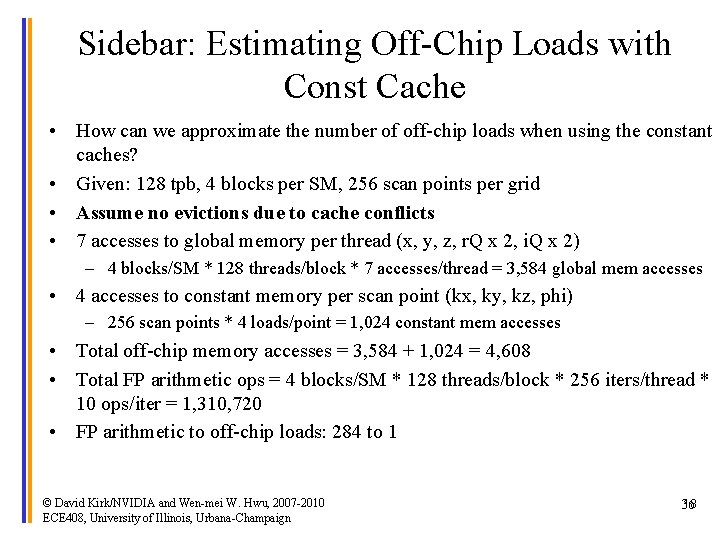

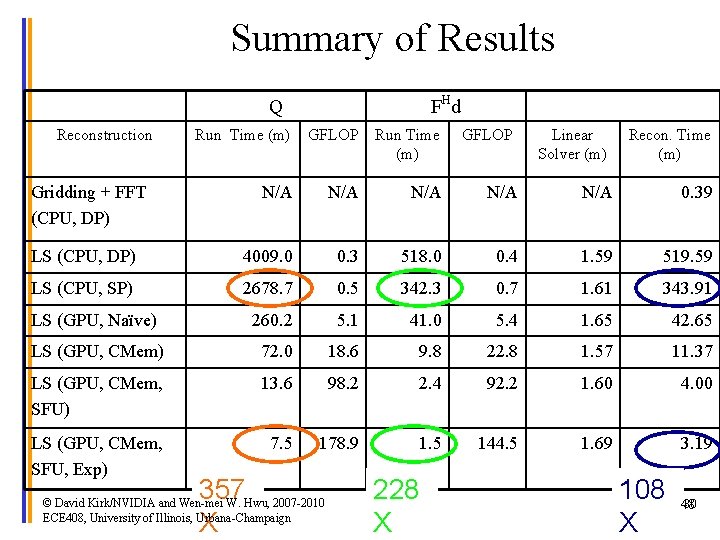

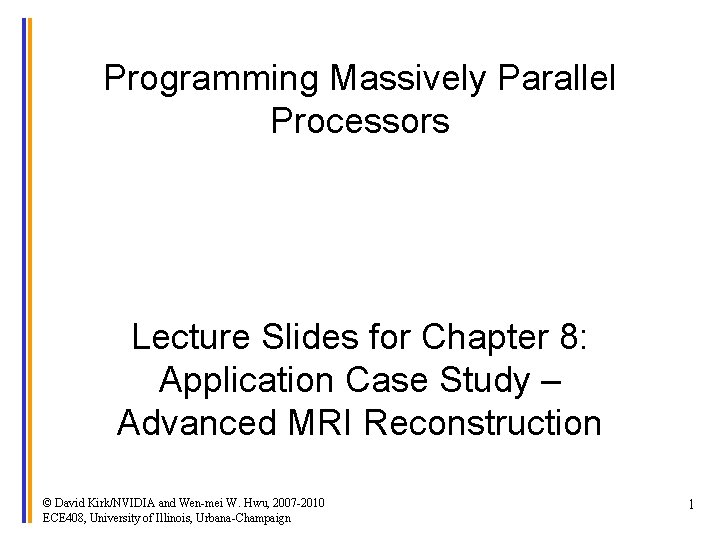

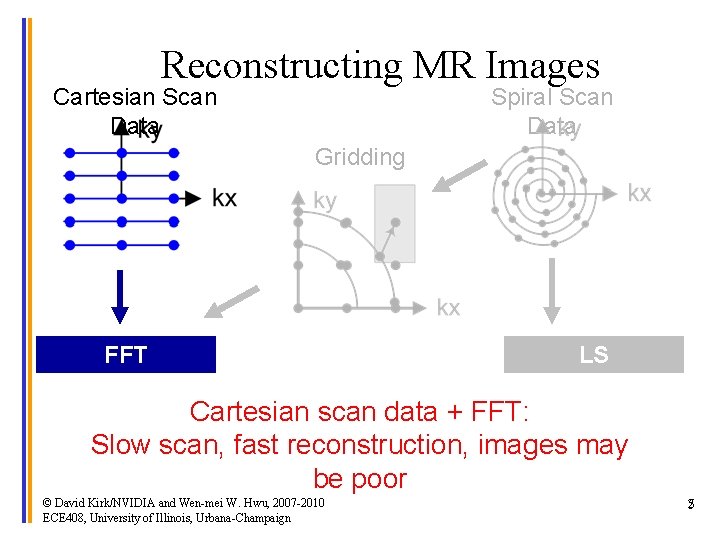

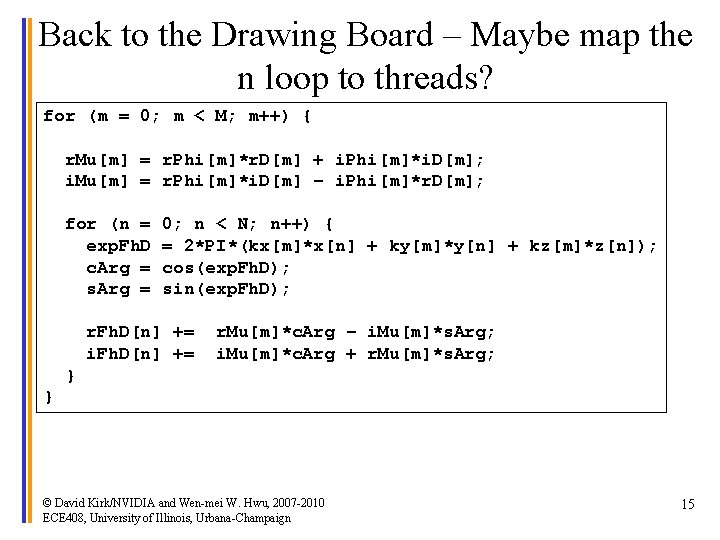

Chunking k-space data to fit into constant memory __constant__ float kx_c[CHUNK_SIZE], ky_c[CHUNK_SIZE], kz_c[CHUNK_SIZE]; … __ void main() { int i; for (i = 0; i < M/CHUNK_SIZE; i++); cuda. Memcpy(kx_c, &kx[i*CHUNK_SIZE], 4*CHUNK_SIZE, cuda. Mem. Cpy. Host. To. Device); cuda. Memcpy(ky_c, &ky[i*CHUNK_SIZE], 4*CHUNK_SIZE, cuda. Mem. Cpy. Host. To. Device); … cmp. FHD<<<FHD_THREADS_PER_BLOCK, N/FHD_THREADS_PER_BLOCK>>> (r. Phi, i. Phi, phi. Mag, x, y, z, r. Mu, int M); } /* Need to call kernel one more time if M is not */ © /* David perfect Kirk/NVIDIA andmultiple Wen-mei W. Hwu, of 2007 -2010 CHUNK SIZE */ 26 } ECE 408, University of Illinois, Urbana-Champaign

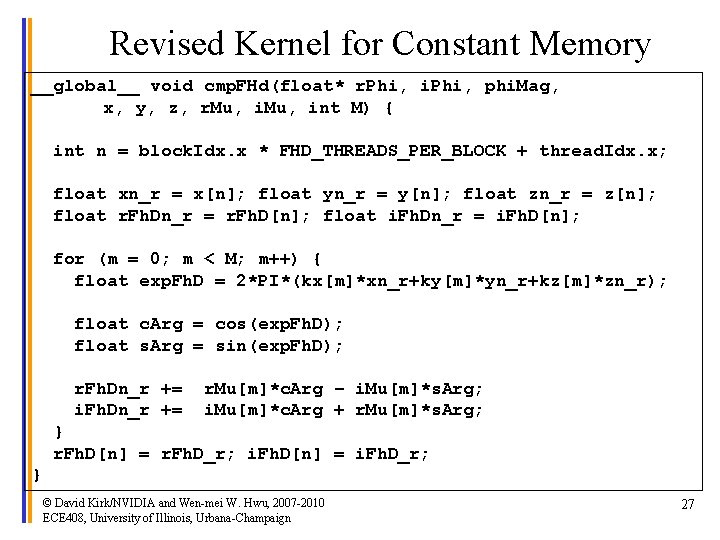

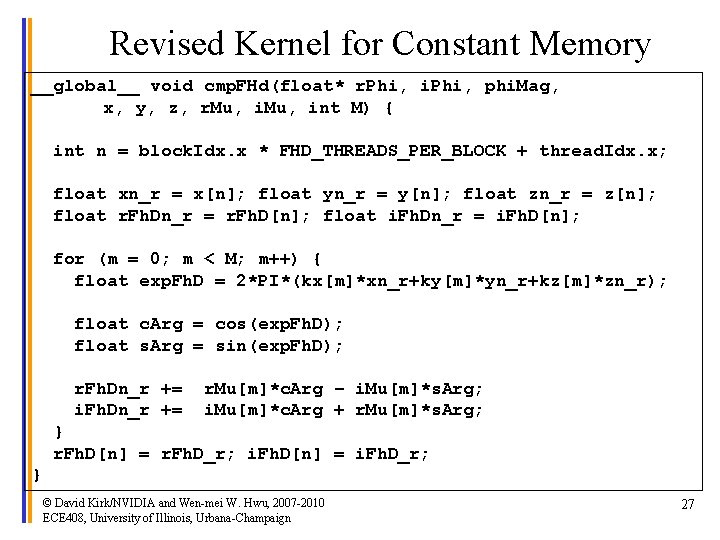

Revised Kernel for Constant Memory __global__ void cmp. FHd(float* r. Phi, i. Phi, phi. Mag, x, y, z, r. Mu, int M) { int n = block. Idx. x * FHD_THREADS_PER_BLOCK + thread. Idx. x; float xn_r = x[n]; float yn_r = y[n]; float zn_r = z[n]; float r. Fh. Dn_r = r. Fh. D[n]; float i. Fh. Dn_r = i. Fh. D[n]; for (m = 0; m < M; m++) { float exp. Fh. D = 2*PI*(kx[m]*xn_r+ky[m]*yn_r+kz[m]*zn_r); float c. Arg = cos(exp. Fh. D); float s. Arg = sin(exp. Fh. D); r. Fh. Dn_r += i. Fh. Dn_r += r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } r. Fh. D[n] = r. Fh. D_r; i. Fh. D[n] = i. Fh. D_r; } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 27

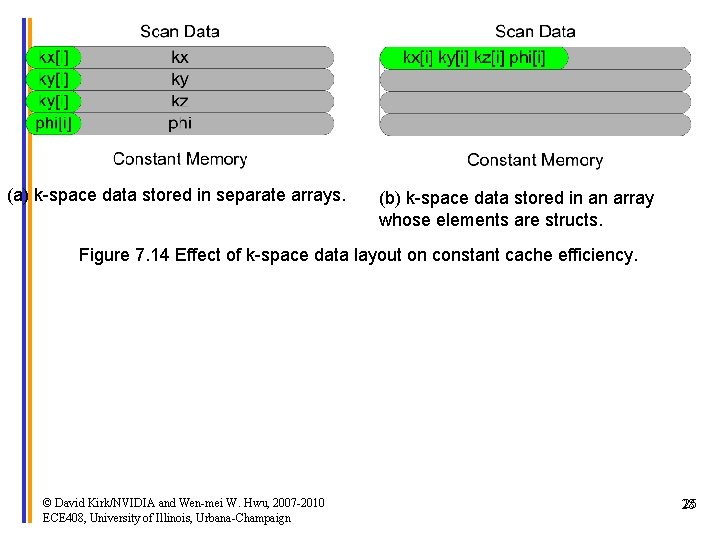

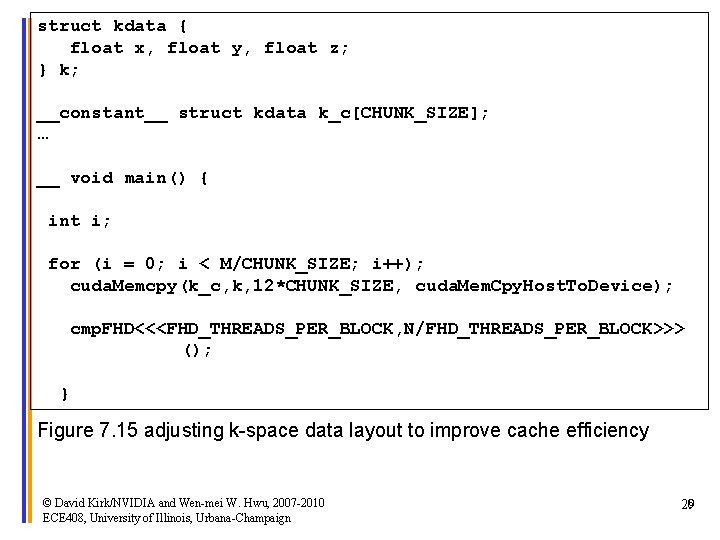

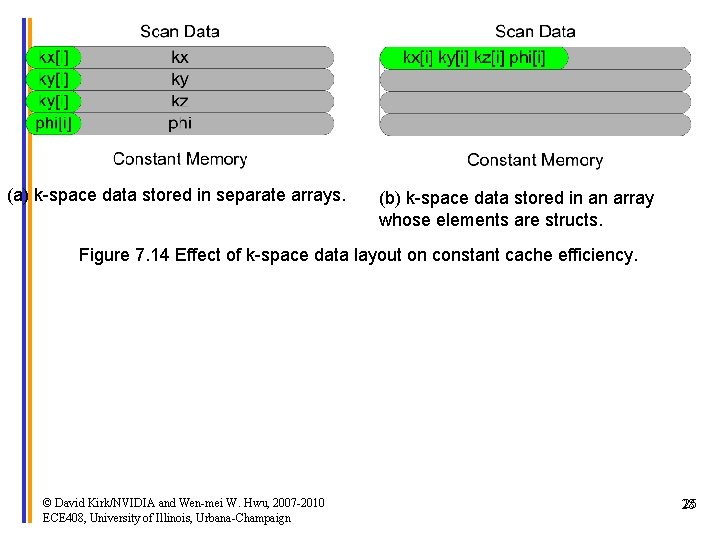

(a) k-space data stored in separate arrays. (b) k-space data stored in an array whose elements are structs. Figure 7. 14 Effect of k-space data layout on constant cache efficiency. © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 25 28

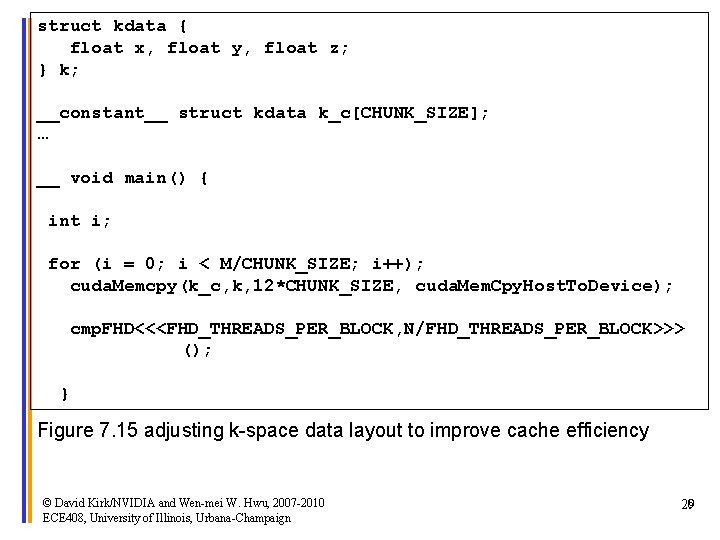

struct kdata { float x, float y, float z; } k; __constant__ struct kdata k_c[CHUNK_SIZE]; … __ void main() { int i; for (i = 0; i < M/CHUNK_SIZE; i++); cuda. Memcpy(k_c, k, 12*CHUNK_SIZE, cuda. Mem. Cpy. Host. To. Device); cmp. FHD<<<FHD_THREADS_PER_BLOCK, N/FHD_THREADS_PER_BLOCK>>> (); } Figure 7. 15 adjusting k-space data layout to improve cache efficiency © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 6 29

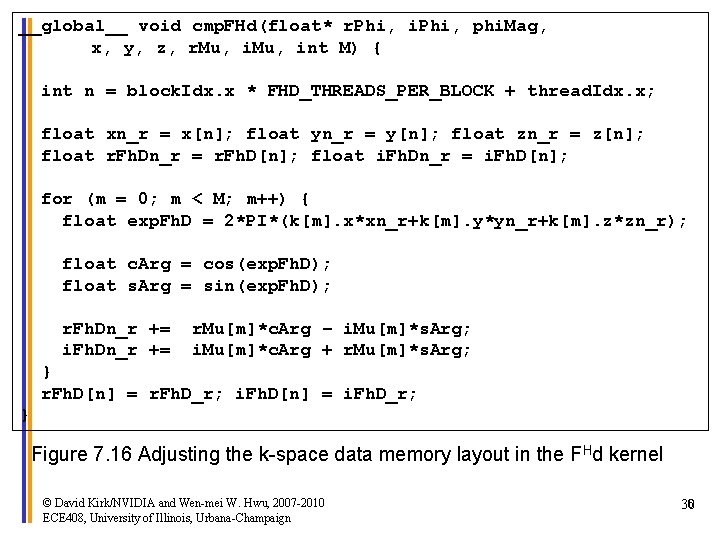

__global__ void cmp. FHd(float* r. Phi, i. Phi, phi. Mag, x, y, z, r. Mu, int M) { int n = block. Idx. x * FHD_THREADS_PER_BLOCK + thread. Idx. x; float xn_r = x[n]; float yn_r = y[n]; float zn_r = z[n]; float r. Fh. Dn_r = r. Fh. D[n]; float i. Fh. Dn_r = i. Fh. D[n]; for (m = 0; m < M; m++) { float exp. Fh. D = 2*PI*(k[m]. x*xn_r+k[m]. y*yn_r+k[m]. z*zn_r); float c. Arg = cos(exp. Fh. D); float s. Arg = sin(exp. Fh. D); r. Fh. Dn_r += i. Fh. Dn_r += r. Mu[m]*c. Arg – i. Mu[m]*s. Arg; i. Mu[m]*c. Arg + r. Mu[m]*s. Arg; } r. Fh. D[n] = r. Fh. D_r; i. Fh. D[n] = i. Fh. D_r; } Figure 7. 16 Adjusting the k-space data memory layout in the FHd kernel © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 6 30

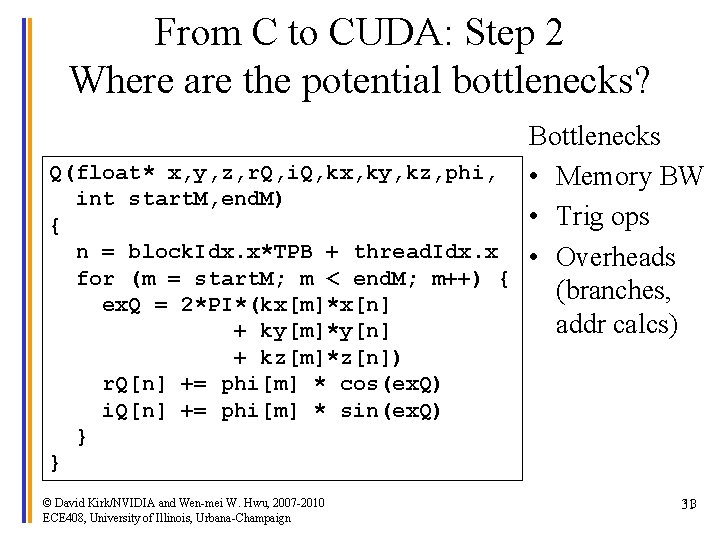

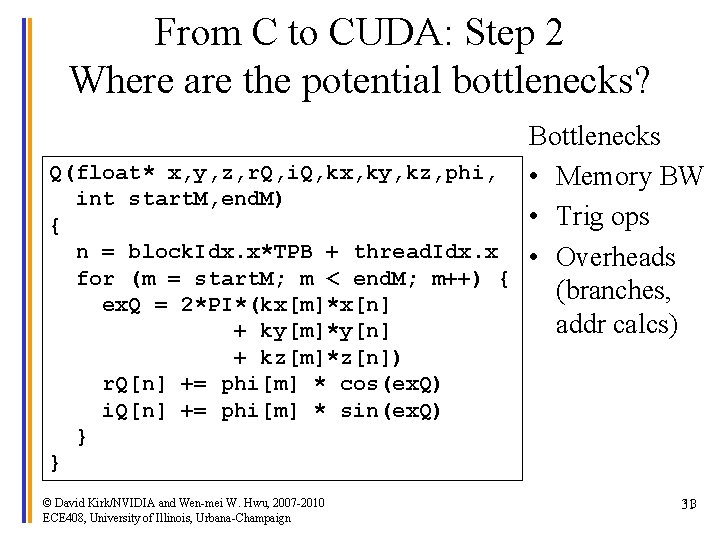

From C to CUDA: Step 2 Where are the potential bottlenecks? Q(float* x, y, z, r. Q, i. Q, kx, ky, kz, phi, int start. M, end. M) { n = block. Idx. x*TPB + thread. Idx. x for (m = start. M; m < end. M; m++) { ex. Q = 2*PI*(kx[m]*x[n] + ky[m]*y[n] + kz[m]*z[n]) r. Q[n] += phi[m] * cos(ex. Q) i. Q[n] += phi[m] * sin(ex. Q) } } © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign Bottlenecks • Memory BW • Trig ops • Overheads (branches, addr calcs) 13 31

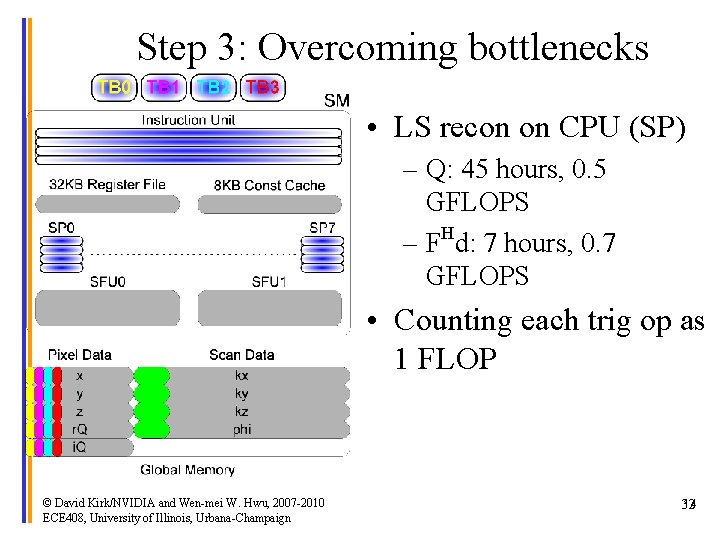

Step 3: Overcoming bottlenecks • LS recon on CPU (SP) – Q: 45 hours, 0. 5 GFLOPS – FHd: 7 hours, 0. 7 GFLOPS • Counting each trig op as 1 FLOP © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 14 32

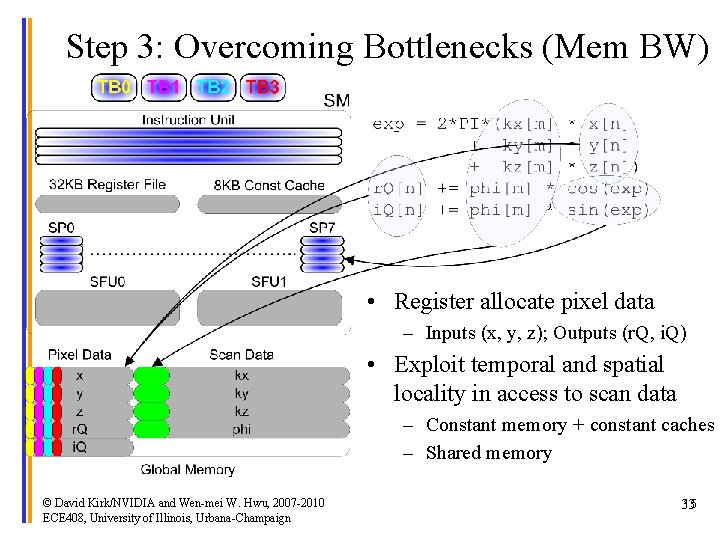

Step 3: Overcoming Bottlenecks (Mem BW) • Register allocate pixel data – Inputs (x, y, z); Outputs (r. Q, i. Q) • Exploit temporal and spatial locality in access to scan data – Constant memory + constant caches – Shared memory © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 15 33

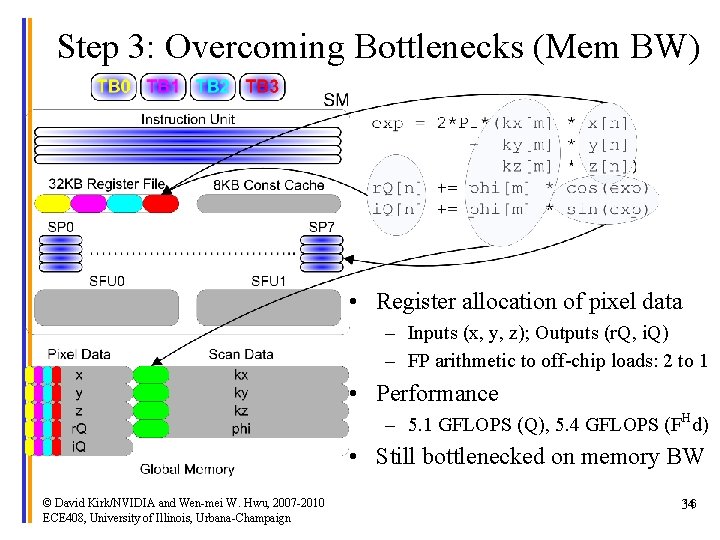

Step 3: Overcoming Bottlenecks (Mem BW) • Register allocation of pixel data – Inputs (x, y, z); Outputs (r. Q, i. Q) – FP arithmetic to off-chip loads: 2 to 1 • Performance – 5. 1 GFLOPS (Q), 5. 4 GFLOPS (FHd) • Still bottlenecked on memory BW © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 16 34

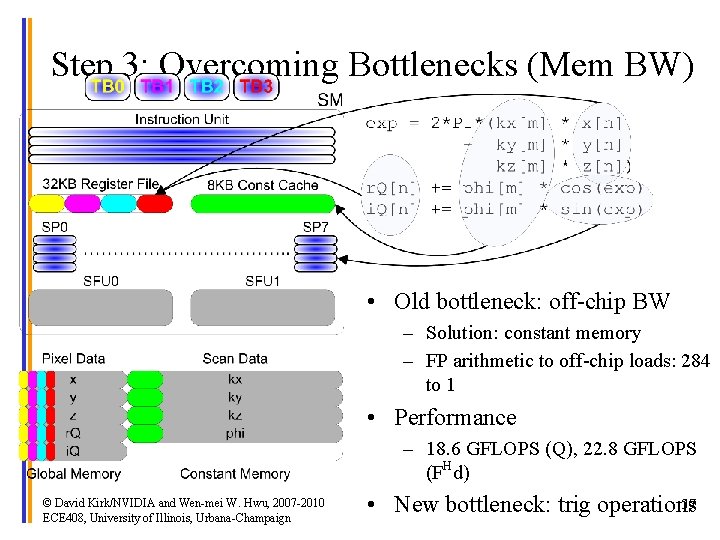

Step 3: Overcoming Bottlenecks (Mem BW) • Old bottleneck: off-chip BW – Solution: constant memory – FP arithmetic to off-chip loads: 284 to 1 • Performance – 18. 6 GFLOPS (Q), 22. 8 GFLOPS (FHd) © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 17 35 • New bottleneck: trig operations

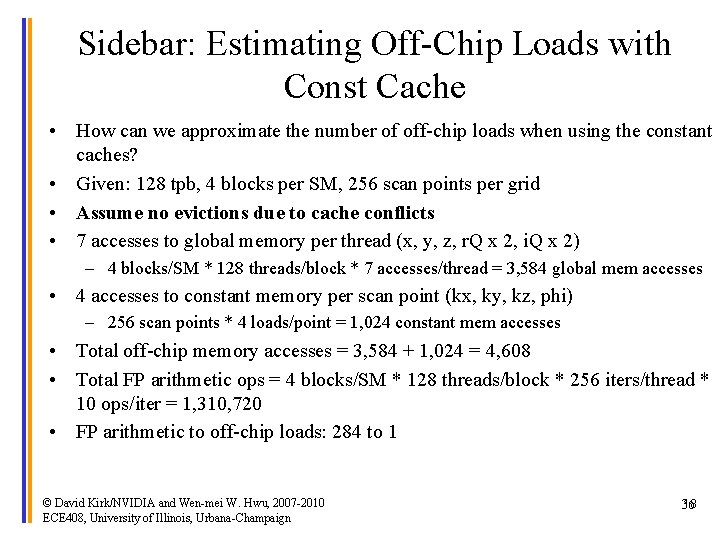

Sidebar: Estimating Off-Chip Loads with Const Cache • How can we approximate the number of off-chip loads when using the constant caches? • Given: 128 tpb, 4 blocks per SM, 256 scan points per grid • Assume no evictions due to cache conflicts • 7 accesses to global memory per thread (x, y, z, r. Q x 2, i. Q x 2) – 4 blocks/SM * 128 threads/block * 7 accesses/thread = 3, 584 global mem accesses • 4 accesses to constant memory per scan point (kx, ky, kz, phi) – 256 scan points * 4 loads/point = 1, 024 constant mem accesses • Total off-chip memory accesses = 3, 584 + 1, 024 = 4, 608 • Total FP arithmetic ops = 4 blocks/SM * 128 threads/block * 256 iters/thread * 10 ops/iter = 1, 310, 720 • FP arithmetic to off-chip loads: 284 to 1 © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 18 36

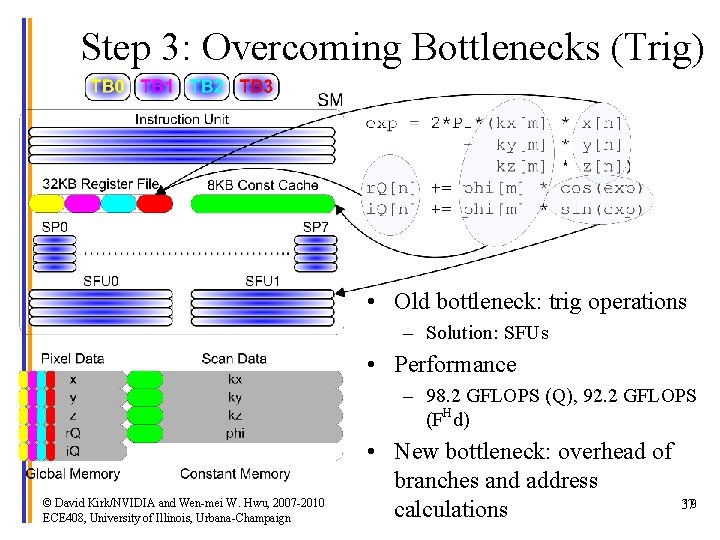

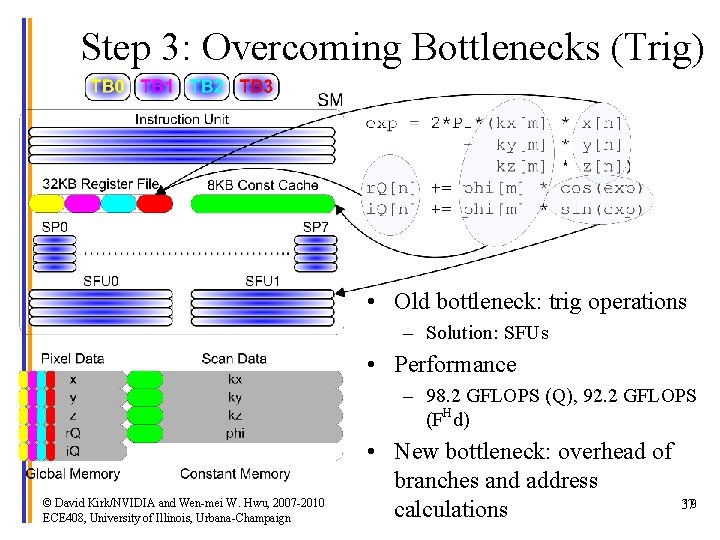

Step 3: Overcoming Bottlenecks (Trig) • Old bottleneck: trig operations – Solution: SFUs • Performance – 98. 2 GFLOPS (Q), 92. 2 GFLOPS (FHd) © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign • New bottleneck: overhead of branches and address calculations 19 37

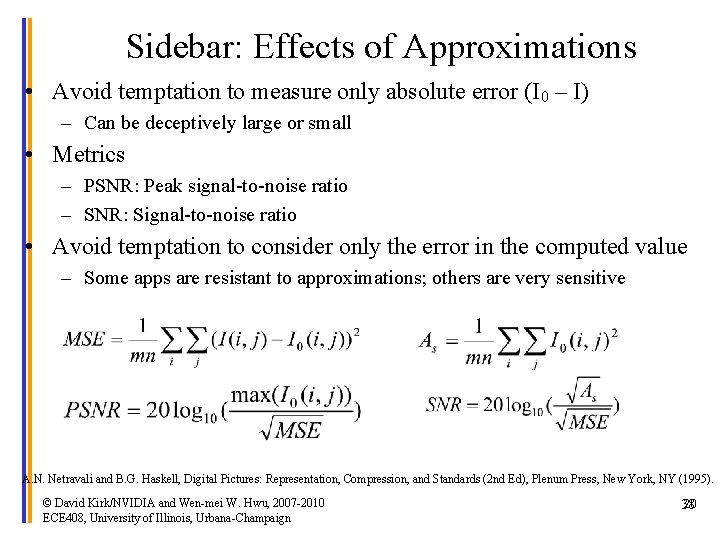

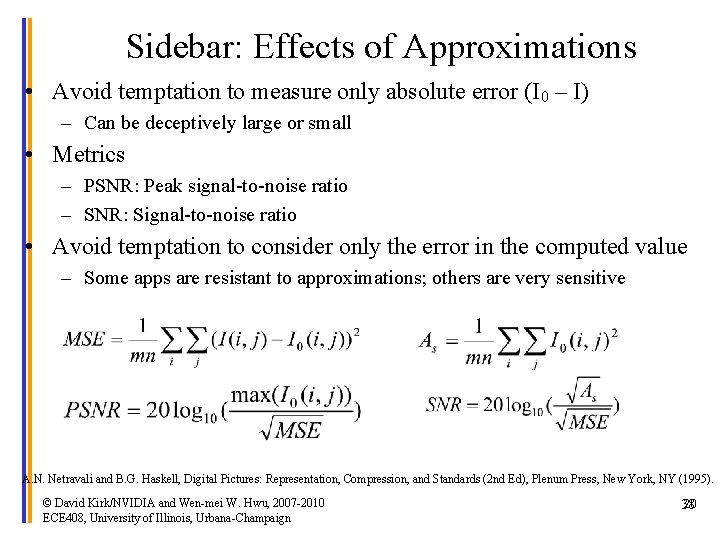

Sidebar: Effects of Approximations • Avoid temptation to measure only absolute error (I 0 – I) – Can be deceptively large or small • Metrics – PSNR: Peak signal-to-noise ratio – SNR: Signal-to-noise ratio • Avoid temptation to consider only the error in the computed value – Some apps are resistant to approximations; others are very sensitive A. N. Netravali and B. G. Haskell, Digital Pictures: Representation, Compression, and Standards (2 nd Ed), Plenum Press, New York, NY (1995). © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 20 38

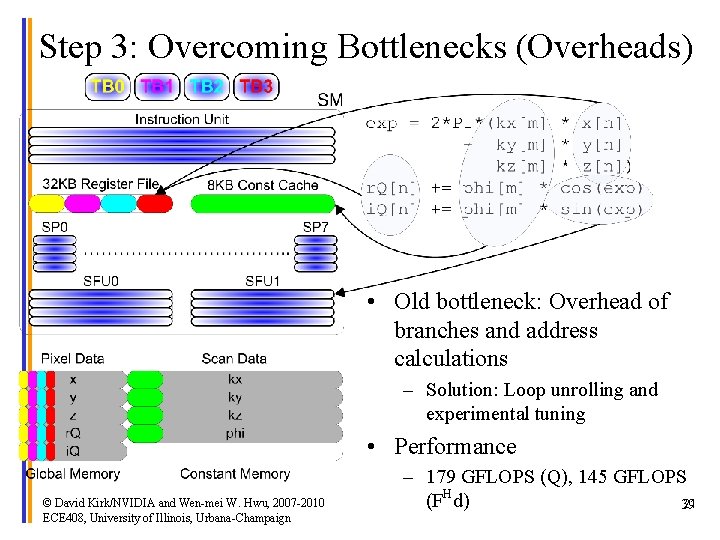

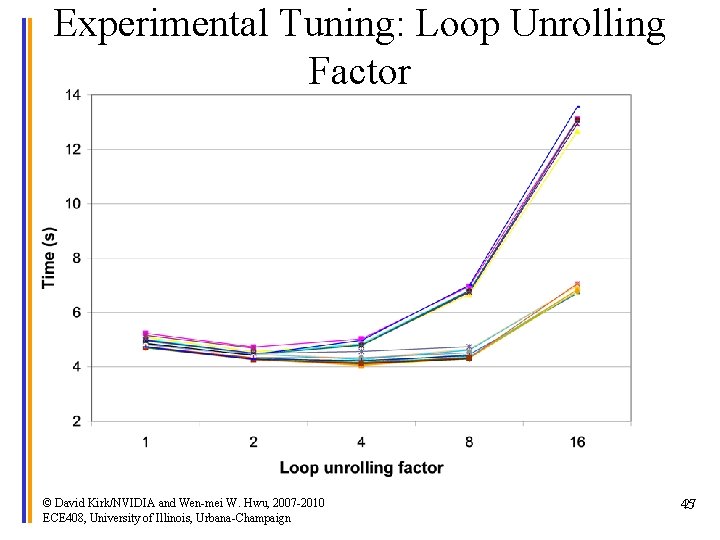

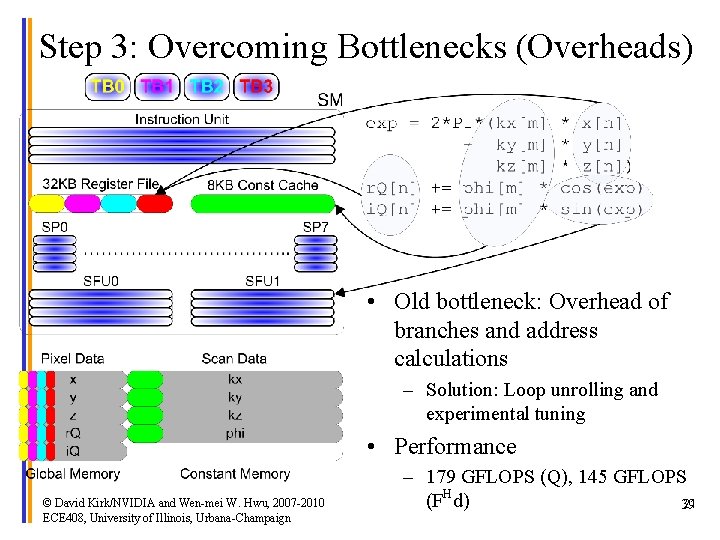

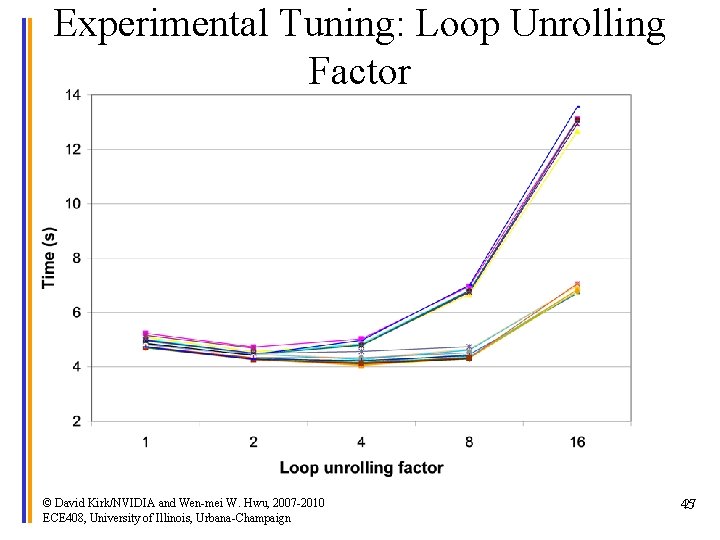

Step 3: Overcoming Bottlenecks (Overheads) • Old bottleneck: Overhead of branches and address calculations – Solution: Loop unrolling and experimental tuning • Performance © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign – 179 GFLOPS (Q), 145 GFLOPS (FHd) 21 39

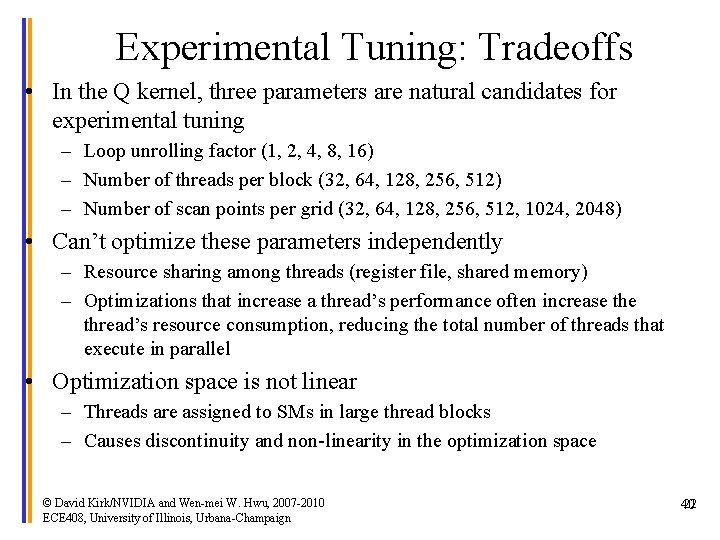

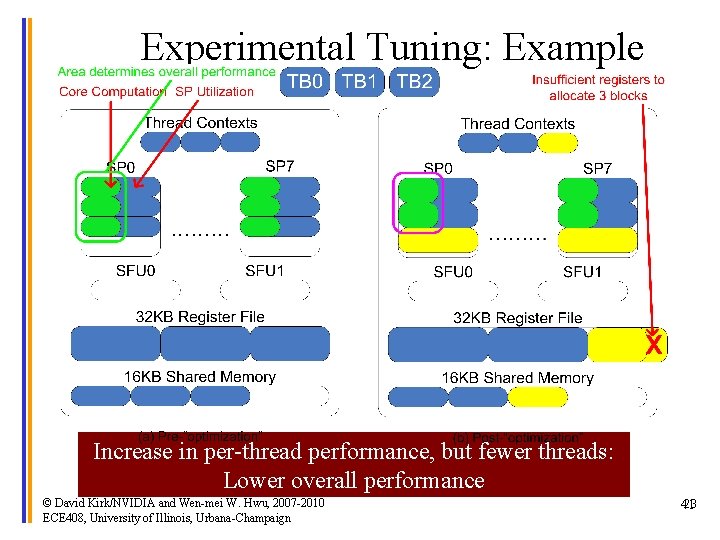

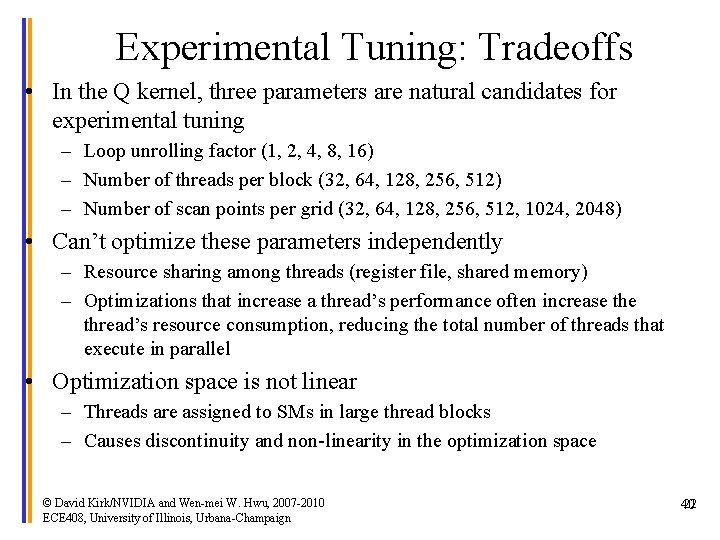

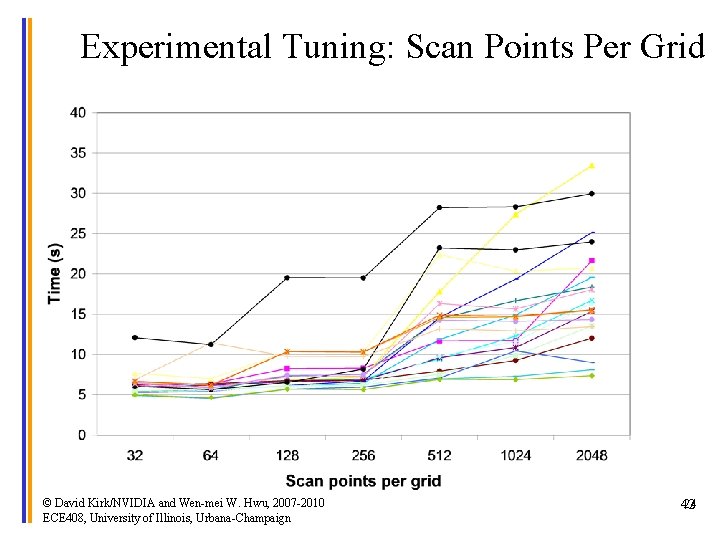

Experimental Tuning: Tradeoffs • In the Q kernel, three parameters are natural candidates for experimental tuning – Loop unrolling factor (1, 2, 4, 8, 16) – Number of threads per block (32, 64, 128, 256, 512) – Number of scan points per grid (32, 64, 128, 256, 512, 1024, 2048) • Can’t optimize these parameters independently – Resource sharing among threads (register file, shared memory) – Optimizations that increase a thread’s performance often increase thread’s resource consumption, reducing the total number of threads that execute in parallel • Optimization space is not linear – Threads are assigned to SMs in large thread blocks – Causes discontinuity and non-linearity in the optimization space © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 22 40

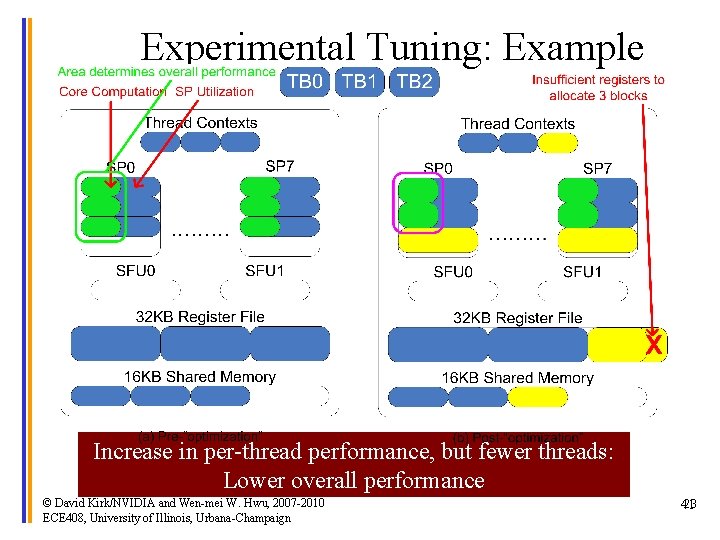

Experimental Tuning: Example Increase in per-thread performance, but fewer threads: Lower overall performance © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 23 41

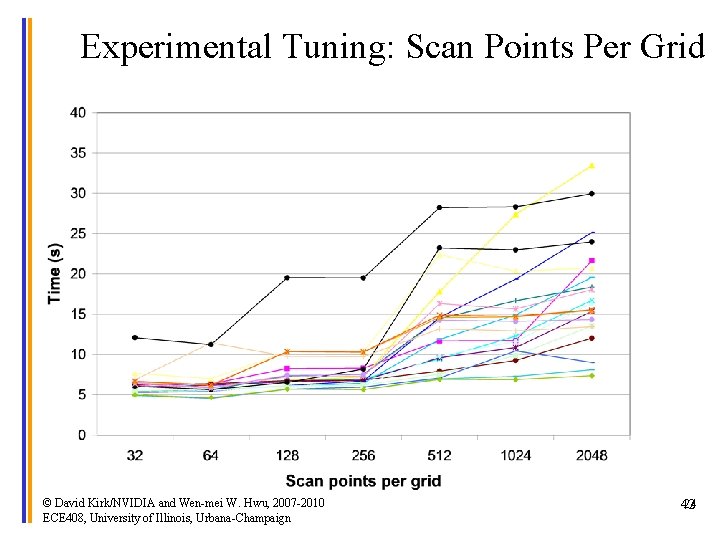

Experimental Tuning: Scan Points Per Grid © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 24 42

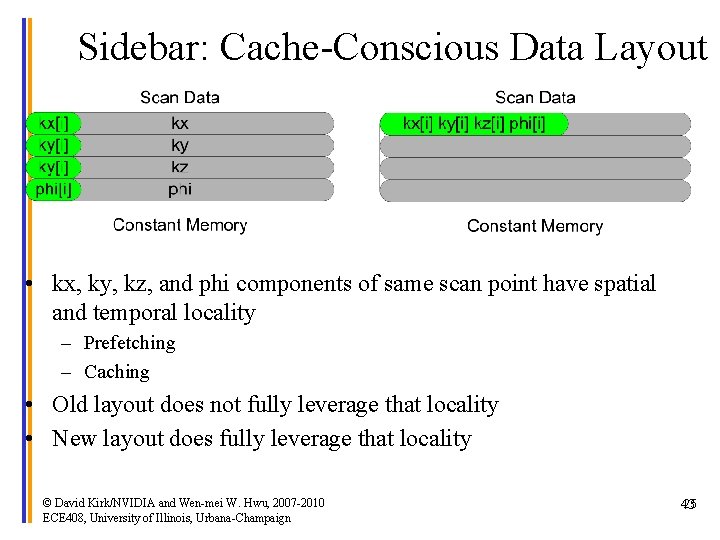

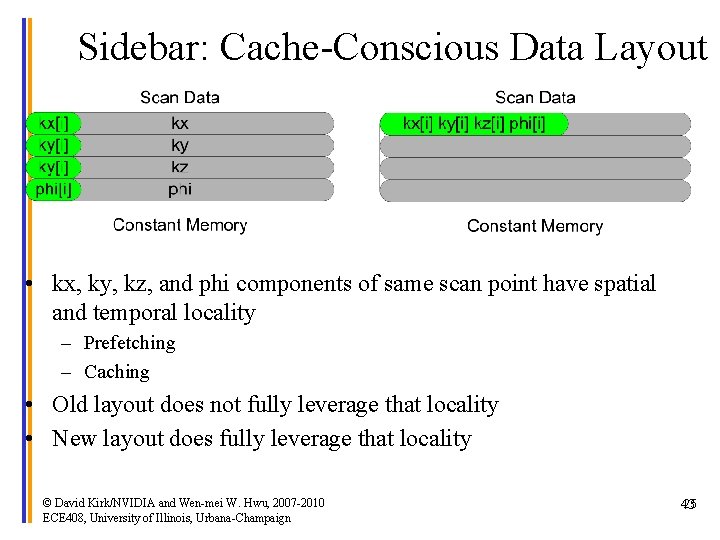

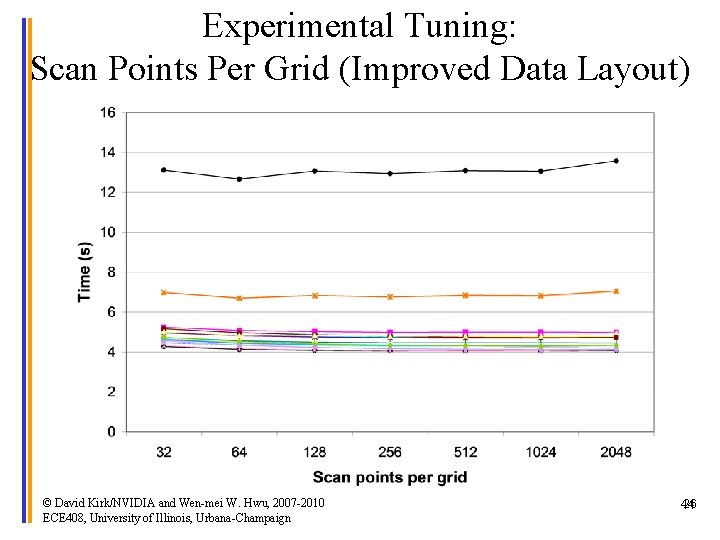

Sidebar: Cache-Conscious Data Layout • kx, ky, kz, and phi components of same scan point have spatial and temporal locality – Prefetching – Caching • Old layout does not fully leverage that locality • New layout does fully leverage that locality © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 25 43

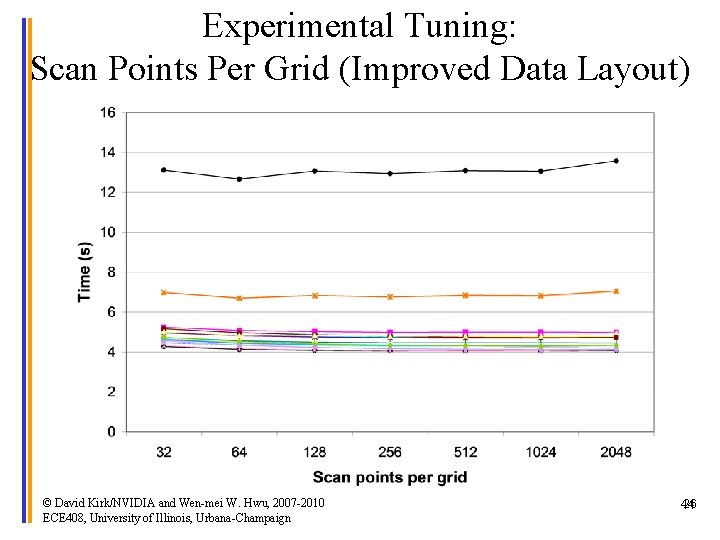

Experimental Tuning: Scan Points Per Grid (Improved Data Layout) © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 26 44

Experimental Tuning: Loop Unrolling Factor © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 27 45

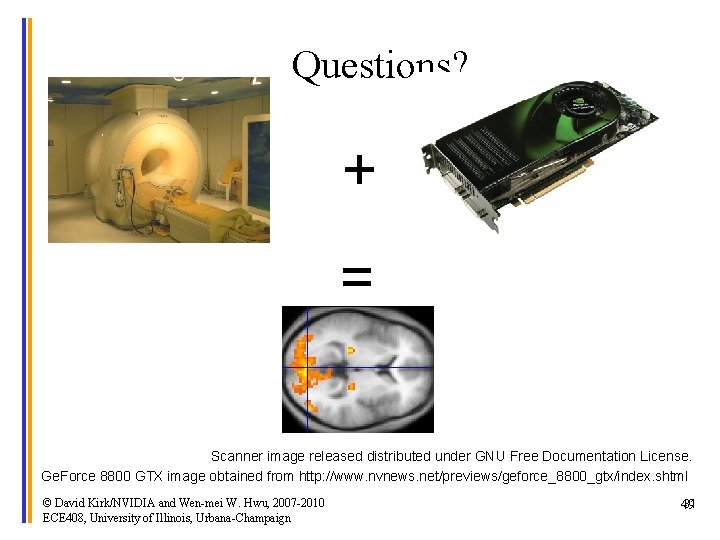

Sidebar: Optimizing the CPU Implementation • Optimizing the CPU implementation of your application is very important – Often, the transformations that increase performance on CPU also increase performance on GPU (and vice-versa) – The research community won’t take your results seriously if your baseline is crippled • Useful optimizations – – Data tiling SIMD vectorization (SSE) Fast math libraries (AMD, Intel) Classical optimizations (loop unrolling, etc) • Intel compiler (icc, icpc) © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 28 46

Summary of Results FHd Q Reconstruction Run Time (m) GFLOP Linear Solver (m) Recon. Time (m) Gridding + FFT (CPU, DP) N/A N/A N/A 0. 39 LS (CPU, DP) 4009. 0 0. 3 518. 0 0. 4 1. 59 519. 59 LS (CPU, SP) 2678. 7 0. 5 342. 3 0. 7 1. 61 343. 91 260. 2 5. 1 41. 0 5. 4 1. 65 42. 65 LS (GPU, CMem) 72. 0 18. 6 9. 8 22. 8 1. 57 11. 37 LS (GPU, CMem, SFU) 13. 6 98. 2 2. 4 92. 2 1. 60 4. 00 LS (GPU, CMem, SFU, Exp) 7. 5 178. 9 1. 5 144. 5 1. 69 3. 19 LS (GPU, Naïve) © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 8 X 29 47

Summary of Results FHd Q Reconstruction Run Time (m) GFLOP Run Time (m) N/A N/A N/A 0. 39 LS (CPU, DP) 4009. 0 0. 3 518. 0 0. 4 1. 59 519. 59 LS (CPU, SP) 2678. 7 0. 5 342. 3 0. 7 1. 61 343. 91 LS (GPU, Naïve) 260. 2 5. 1 41. 0 5. 4 1. 65 42. 65 LS (GPU, CMem) 72. 0 18. 6 9. 8 22. 8 1. 57 11. 37 LS (GPU, CMem, SFU) 13. 6 98. 2 2. 4 92. 2 1. 60 4. 00 LS (GPU, CMem, SFU, Exp) 7. 5 178. 9 1. 5 144. 5 1. 69 3. 19 Gridding + FFT (CPU, DP) 357 X © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 228 X GFLOP Linear Solver (m) Recon. Time (m) 108 X 30 48

Questions? + = Scanner image released distributed under GNU Free Documentation License. Ge. Force 8800 GTX image obtained from http: //www. nvnews. net/previews/geforce_8800_gtx/index. shtml © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign 31 49

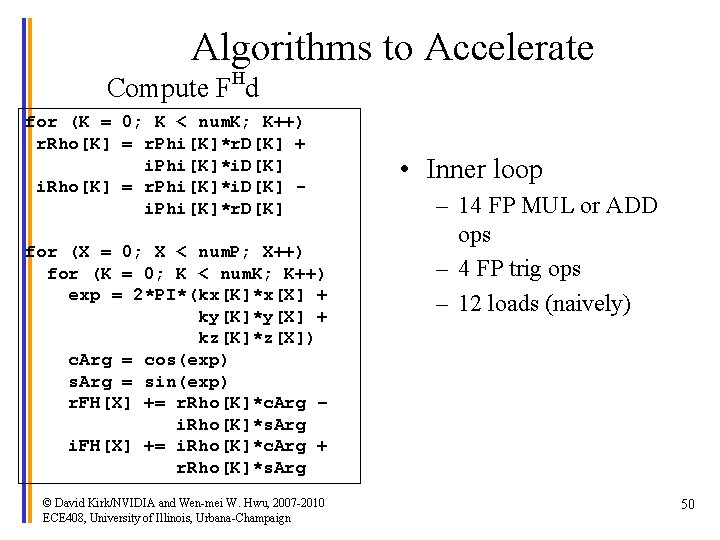

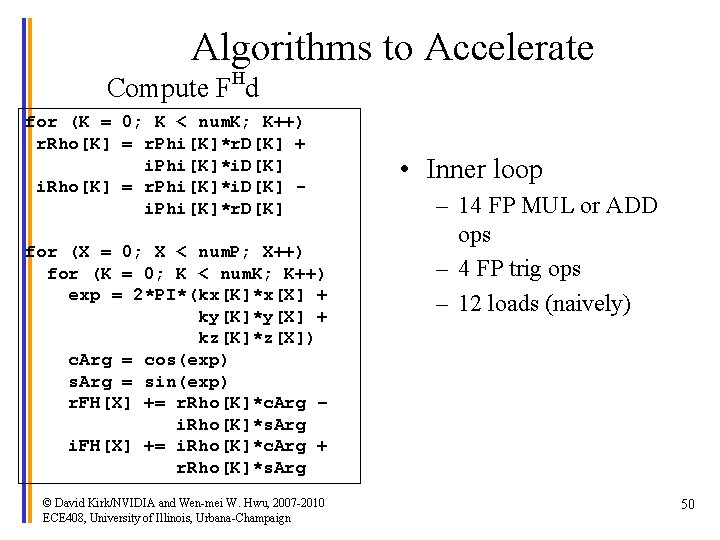

Algorithms to Accelerate Compute FHd for (K = 0; K < num. K; K++) r. Rho[K] = r. Phi[K]*r. D[K] + i. Phi[K]*i. D[K] i. Rho[K] = r. Phi[K]*i. D[K] i. Phi[K]*r. D[K] for (X = 0; X < num. P; X++) for (K = 0; K < num. K; K++) exp = 2*PI*(kx[K]*x[X] + ky[K]*y[X] + kz[K]*z[X]) c. Arg = cos(exp) s. Arg = sin(exp) r. FH[X] += r. Rho[K]*c. Arg – i. Rho[K]*s. Arg i. FH[X] += i. Rho[K]*c. Arg + r. Rho[K]*s. Arg © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 ECE 408, University of Illinois, Urbana-Champaign • Inner loop – 14 FP MUL or ADD ops – 4 FP trig ops – 12 loads (naively) 50

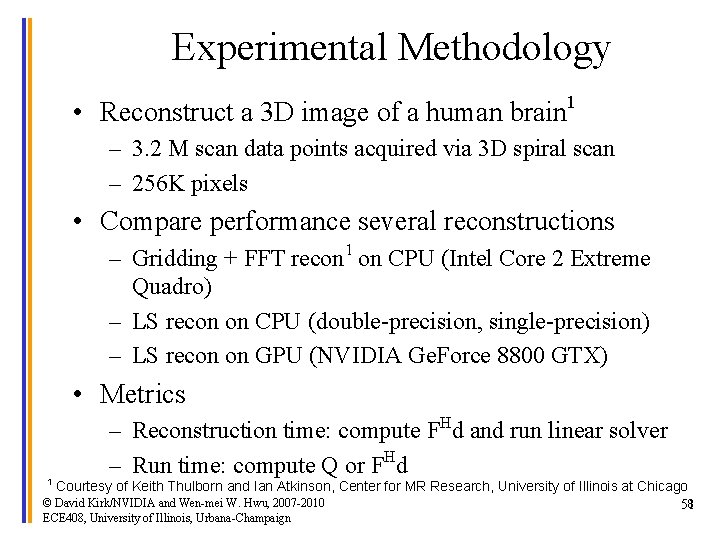

Experimental Methodology • Reconstruct a 3 D image of a human brain 1 – 3. 2 M scan data points acquired via 3 D spiral scan – 256 K pixels • Compare performance several reconstructions – Gridding + FFT recon 1 on CPU (Intel Core 2 Extreme Quadro) – LS recon on CPU (double-precision, single-precision) – LS recon on GPU (NVIDIA Ge. Force 8800 GTX) • Metrics 1 – Reconstruction time: compute FHd and run linear solver – Run time: compute Q or FHd Courtesy of Keith Thulborn and Ian Atkinson, Center for MR Research, University of Illinois at Chicago © David Kirk/NVIDIA and Wen-mei W. Hwu, 2007 -2010 8 51 ECE 408, University of Illinois, Urbana-Champaign