Programming Heterogeneous (GPU) Systems
Jeffrey Vetter
Presented to the Extreme Scale Computing Training Program
ANL: St. Charles, IL
2 August 2013
http://ft.ornl.gov
vetter@computer.org

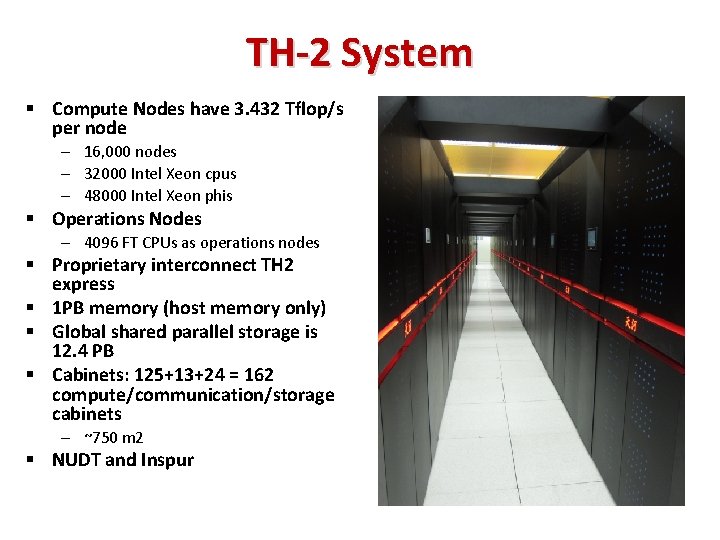

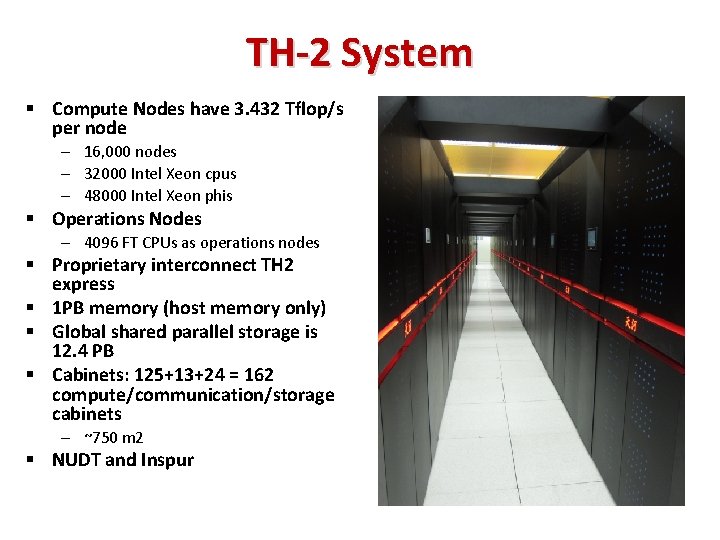

TH-2 System
§ Compute nodes have 3.432 Tflop/s per node
  – 16,000 nodes
  – 32,000 Intel Xeon CPUs
  – 48,000 Intel Xeon Phis
§ Operations nodes
  – 4,096 FT CPUs as operations nodes
§ Proprietary interconnect: TH Express-2
§ 1 PB memory (host memory only)
§ Global shared parallel storage is 12.4 PB
§ Cabinets: 125 + 13 + 24 = 162 compute/communication/storage cabinets
  – ~750 m²
§ NUDT and Inspur
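The per-node and node-count figures above imply the aggregate peak directly. The following is a minimal sketch of that arithmetic, using only the numbers on the slide; the variable and function names are illustrative, and it assumes every node contributes its full per-node peak.

```python
# Figures copied from the TH-2 slide; names are illustrative, not from any tool.
NODES = 16_000
PEAK_PER_NODE_TFLOPS = 3.432
XEON_CPUS = 32_000
XEON_PHIS = 48_000


def system_peak_pflops(nodes: int, tflops_per_node: float) -> float:
    """Aggregate peak in Pflop/s, assuming each node reaches its per-node peak."""
    return nodes * tflops_per_node / 1000.0


cpus_per_node = XEON_CPUS // NODES   # Xeon CPUs in each compute node
phis_per_node = XEON_PHIS // NODES   # Xeon Phi coprocessors in each compute node
peak_pf = system_peak_pflops(NODES, PEAK_PER_NODE_TFLOPS)

print(f"CPUs per node: {cpus_per_node}, Phis per node: {phis_per_node}")
print(f"Aggregate peak: {peak_pf:.1f} Pflop/s")
```

The result is consistent with the roughly 54 PF peak quoted for Tianhe-2 elsewhere in the deck.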

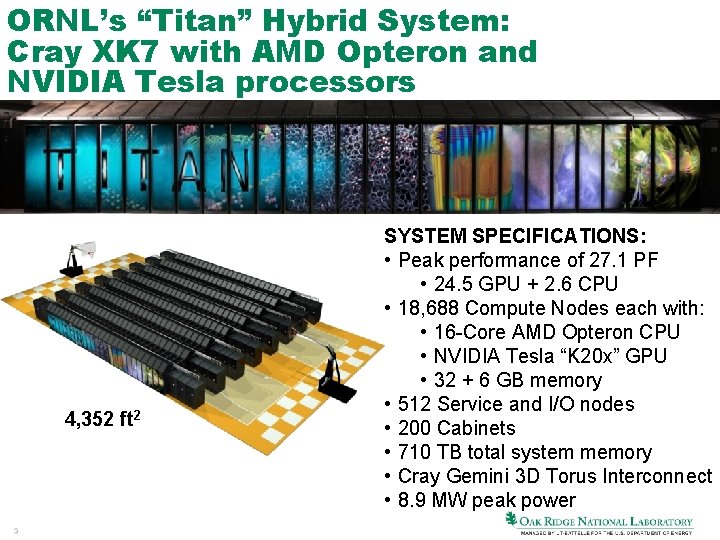

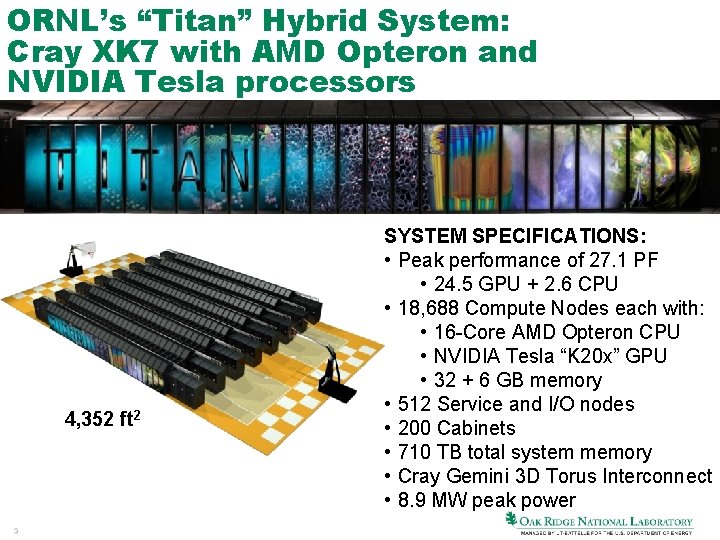

ORNL’s “Titan” Hybrid System: Cray XK7 with AMD Opteron and NVIDIA Tesla processors
SYSTEM SPECIFICATIONS:
• Peak performance of 27.1 PF
  • 24.5 GPU + 2.6 CPU
• 18,688 compute nodes, each with:
  • 16-core AMD Opteron CPU
  • NVIDIA Tesla “K20x” GPU
  • 32 + 6 GB memory
• 512 service and I/O nodes
• 200 cabinets
• 710 TB total system memory
• Cray Gemini 3D torus interconnect
• 8.9 MW peak power
• 4,352 ft²

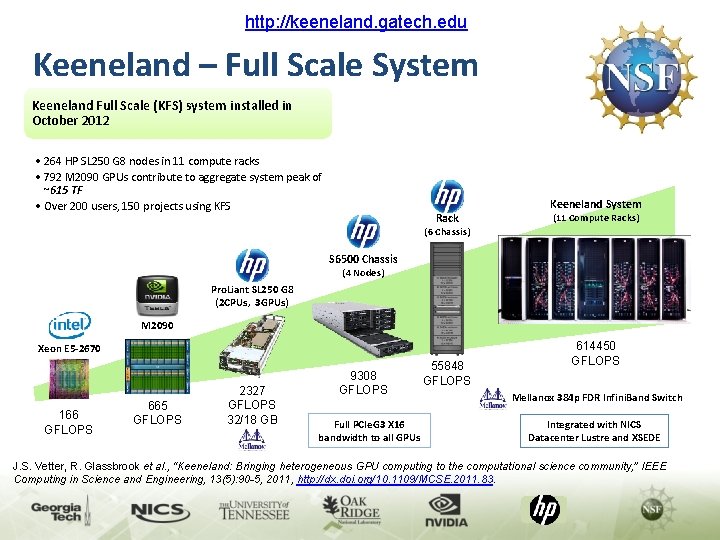

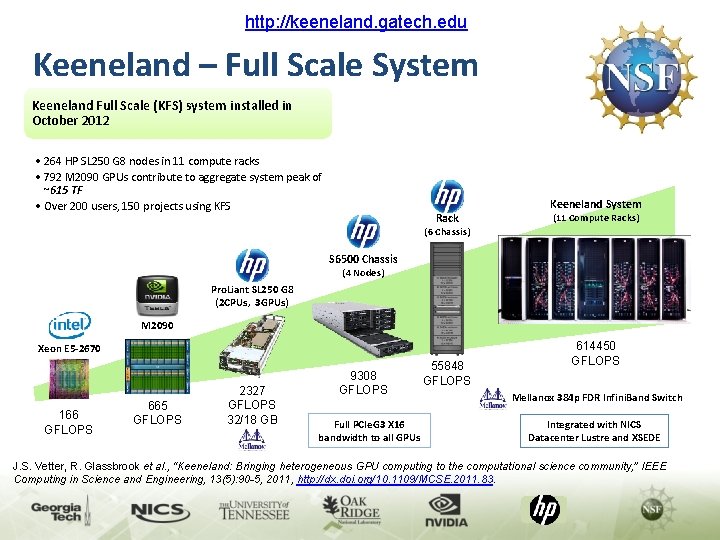

Keeneland – Full Scale System
http://keeneland.gatech.edu
Keeneland Full Scale (KFS) system installed in October 2012
• 264 HP SL250 G8 nodes in 11 compute racks
• 792 M2090 GPUs contribute to aggregate system peak of ~615 TF
• Over 200 users, 150 projects using KFS
System hierarchy (peak per level, from the slide diagram):
• Xeon E5-2670: 166 GFLOPS
• M2090: 665 GFLOPS
• ProLiant SL250 G8 node (2 CPUs, 3 GPUs): 2327 GFLOPS, 32/18 GB
• S6500 chassis (4 nodes): 9308 GFLOPS
• Rack (6 chassis): 55848 GFLOPS
• Keeneland system (11 compute racks): 614450 GFLOPS
• Full PCIe G3 x16 bandwidth to all GPUs
• Mellanox 384p FDR InfiniBand switch
• Integrated with NICS Datacenter Lustre and XSEDE
J. S. Vetter, R. Glassbrook et al., “Keeneland: Bringing heterogeneous GPU computing to the computational science community,” IEEE Computing in Science and Engineering, 13(5):90-5, 2011, http://dx.doi.org/10.1109/MCSE.2011.83.
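The peak figures in the Keeneland diagram compose level by level: device to node, node to chassis, chassis to rack, rack to system. The sketch below reproduces that roll-up from the rounded per-device numbers on the slide; the constant names are illustrative. Note that the product of the rounded values comes out slightly below the 614450 GFLOPS printed on the slide, presumably because the slide was computed from unrounded device peaks; both round to about 615 TF.

```python
# Per-device peaks and counts as printed on the Keeneland slide.
CPU_GFLOPS = 166          # Xeon E5-2670
GPU_GFLOPS = 665          # M2090
CPUS_PER_NODE = 2
GPUS_PER_NODE = 3
NODES_PER_CHASSIS = 4
CHASSIS_PER_RACK = 6
RACKS = 11

# Roll the peak up one level at a time.
node_gflops = CPUS_PER_NODE * CPU_GFLOPS + GPUS_PER_NODE * GPU_GFLOPS
chassis_gflops = NODES_PER_CHASSIS * node_gflops
rack_gflops = CHASSIS_PER_RACK * chassis_gflops
system_gflops = RACKS * rack_gflops

total_nodes = NODES_PER_CHASSIS * CHASSIS_PER_RACK * RACKS
total_gpus = total_nodes * GPUS_PER_NODE

print(f"node:    {node_gflops} GFLOPS")
print(f"chassis: {chassis_gflops} GFLOPS")
print(f"rack:    {rack_gflops} GFLOPS")
print(f"system:  {system_gflops} GFLOPS ({system_gflops / 1000:.0f} TF)")
print(f"nodes: {total_nodes}, GPUs: {total_gpus}")
```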

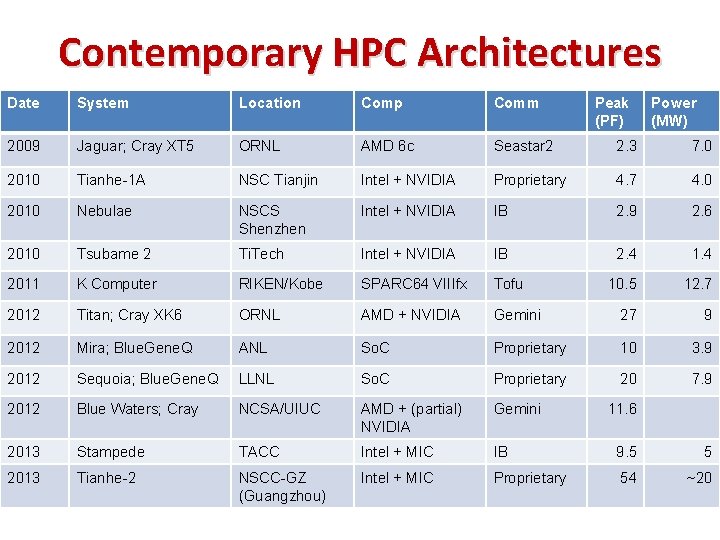

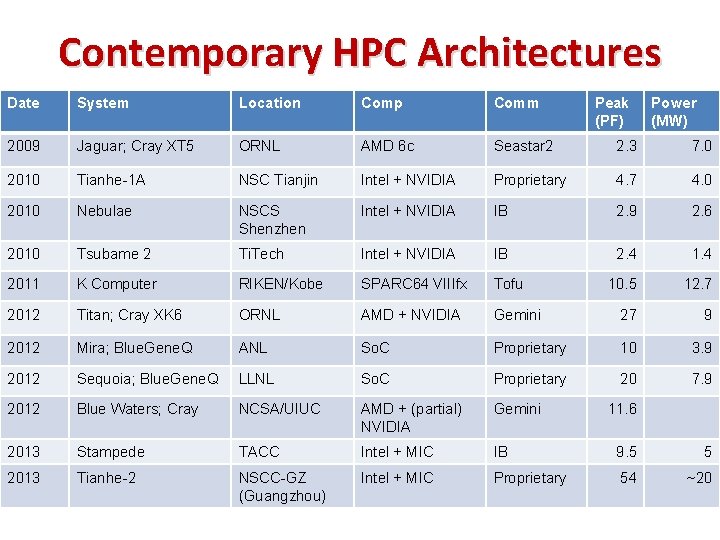

Contemporary HPC Architectures

| Date | System | Location | Comp | Comm | Peak (PF) | Power (MW) |
|------|--------|----------|------|------|-----------|------------|
| 2009 | Jaguar; Cray XT5 | ORNL | AMD 6c | Seastar2 | 2.3 | 7.0 |
| 2010 | Tianhe-1A | NSC Tianjin | Intel + NVIDIA | Proprietary | 4.7 | 4.0 |
| 2010 | Nebulae | NSCS Shenzhen | Intel + NVIDIA | IB | 2.9 | 2.6 |
| 2010 | Tsubame 2 | TiTech | Intel + NVIDIA | IB | 2.4 | 1.4 |
| 2011 | K Computer | RIKEN/Kobe | SPARC64 VIIIfx | Tofu | 10.5 | 12.7 |
| 2012 | Titan; Cray XK7 | ORNL | AMD + NVIDIA | Gemini | 27 | 9 |
| 2012 | Mira; BlueGeneQ | ANL | SoC | Proprietary | 10 | 3.9 |
| 2012 | Sequoia; BlueGeneQ | LLNL | SoC | Proprietary | 20 | 7.9 |
| 2012 | Blue Waters; Cray | NCSA/UIUC | AMD + (partial) NVIDIA | Gemini | 11.6 | |
| 2013 | Stampede | TACC | Intel + MIC | IB | 9.5 | 5 |
| 2013 | Tianhe-2 | NSCC-GZ (Guangzhou) | Intel + MIC | Proprietary | 54 | ~20 |

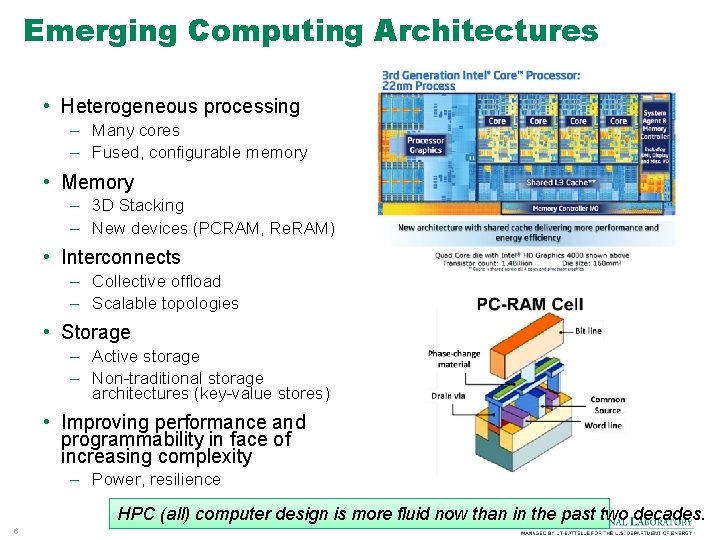

Emerging Computing Architectures
• Heterogeneous processing
  – Many cores
  – Fused, configurable memory
• Memory
  – 3D stacking
  – New devices (PCRAM, ReRAM)
• Interconnects
  – Collective offload
  – Scalable topologies
• Storage
  – Active storage
  – Non-traditional storage architectures (key-value stores)
• Improving performance and programmability in face of increasing complexity
  – Power, resilience
HPC (all) computer design is more fluid now than in the past two decades.

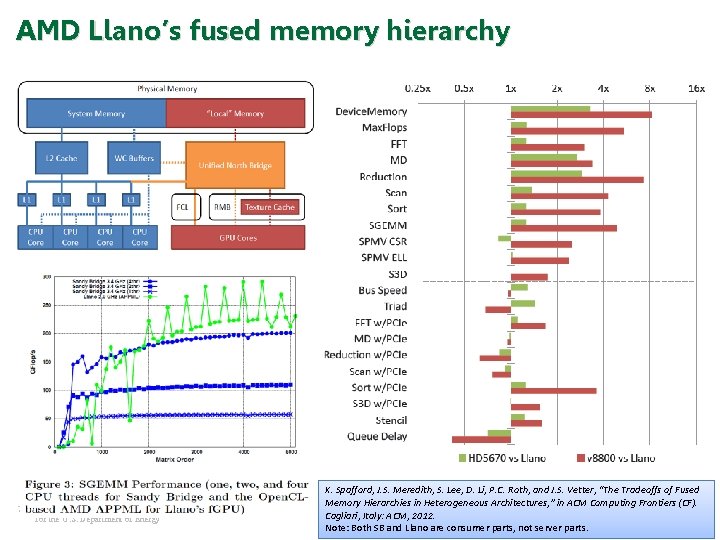

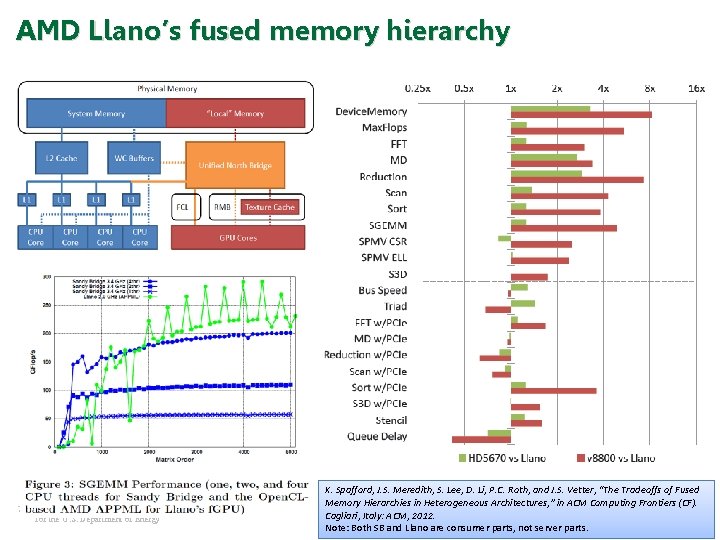

AMD Llano’s fused memory hierarchy
Note: Both SB and Llano are consumer parts, not server parts.
K. Spafford, J. S. Meredith, S. Lee, D. Li, P. C. Roth, and J. S. Vetter, “The Tradeoffs of Fused Memory Hierarchies in Heterogeneous Architectures,” in ACM Computing Frontiers (CF). Cagliari, Italy: ACM, 2012.
Managed by UT-Battelle for the U.S. Department of Energy

Future Directions in Heterogeneous Computing
• Over the next decade: heterogeneous computing will continue to increase in importance
• Manycore
• Hardware features
  – Transactional memory
  – Random number generators
  – Scatter/gather
  – Wider SIMD/AVX
• Synergies with big data, mobile markets, graphics
• Top 10 list of features to include from application perspective. Now is the time!

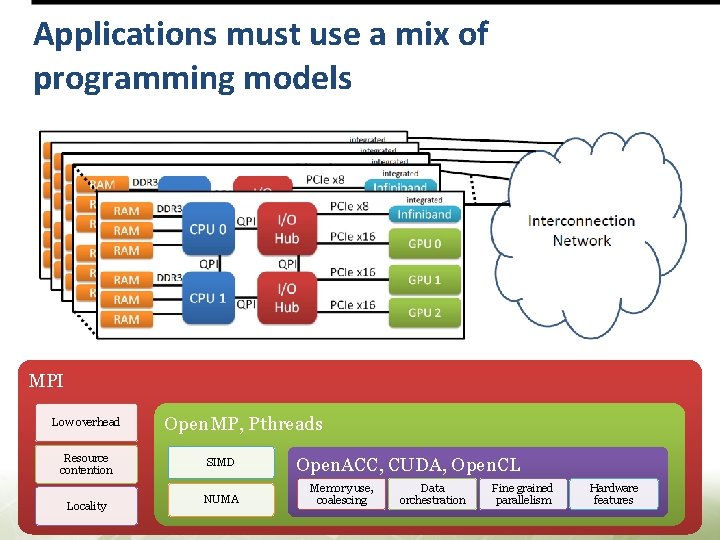

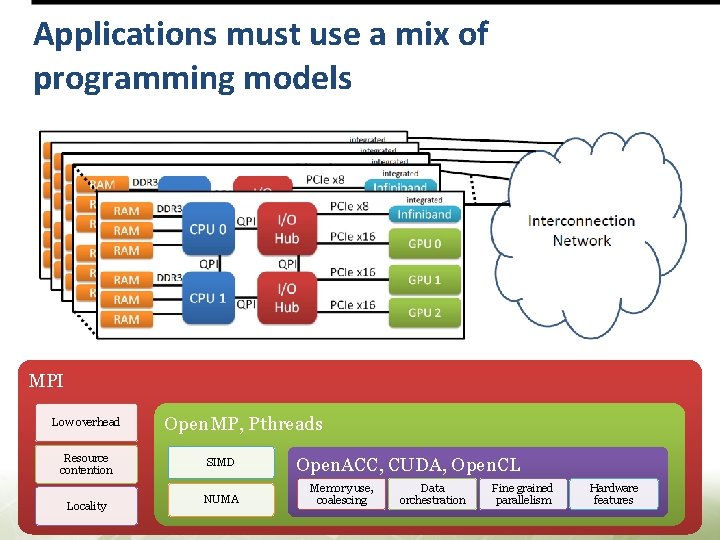

Applications must use a mix of programming models
• MPI: low overhead, resource contention, locality
• OpenMP, Pthreads: SIMD, NUMA
• OpenACC, CUDA, OpenCL: memory use, coalescing; data orchestration; fine-grained parallelism; hardware features

Communication MPI Profiling

Communication – MPI
§ MPI dominates HPC
§ Communication can severely restrict performance and scalability
§ Developer has explicit control of MPI in the application
  – Communication/computation overlap
  – Collectives
§ MPI tools provide a wealth of information
  – Statistics – number and size of messages sent in a given time
  – Tracing – event-based log per task of all communication events
Georgia Tech / Computational Science and Engineering / Vetter

MPI Provides the MPI Profiling Layer
§ The MPI specification provides the MPI Profiling Layer to allow interposition between the application and the MPI runtime
§ PERUSE is a recent attempt to provide more detailed information from the runtime for performance measurement
  – http://www.mpi-peruse.org/
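In real MPI, interposition works because every MPI_ routine is also reachable under a PMPI_ name, so a tool can define its own MPI_Send, record what it needs, and forward to PMPI_Send. The sketch below is only a Python analogy of that pattern, not MPI code: `_runtime_send`, `profiled_send`, and `stats` are hypothetical names invented for illustration, and the "runtime" is a stub that returns the payload size.

```python
import time
from collections import defaultdict


def _runtime_send(buf: bytes, dest: int) -> int:
    """Stand-in for the runtime's real entry point (the PMPI_ name in MPI)."""
    return len(buf)


# Per-call statistics collected by the interposed wrapper.
stats = defaultdict(lambda: {"calls": 0, "bytes": 0, "seconds": 0.0})


def profiled_send(buf: bytes, dest: int) -> int:
    """Wrapper playing the role of a tool-provided MPI_Send."""
    start = time.perf_counter()
    result = _runtime_send(buf, dest)          # forward to the real routine
    elapsed = time.perf_counter() - start
    entry = stats["send"]
    entry["calls"] += 1
    entry["bytes"] += len(buf)
    entry["seconds"] += elapsed
    return result


# The application keeps calling the public name; only the binding changes,
# which mirrors relinking against a profiling library without source changes.
send = profiled_send

send(b"halo-row", dest=1)
print(dict(stats["send"]))
```

The application-facing name stays the same while the tool sits in between, which is why profilers built on this layer need relinking but no source edits.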

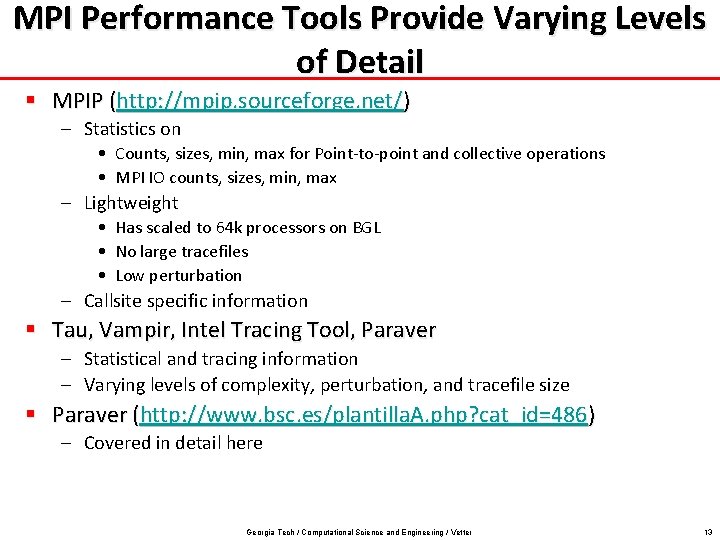

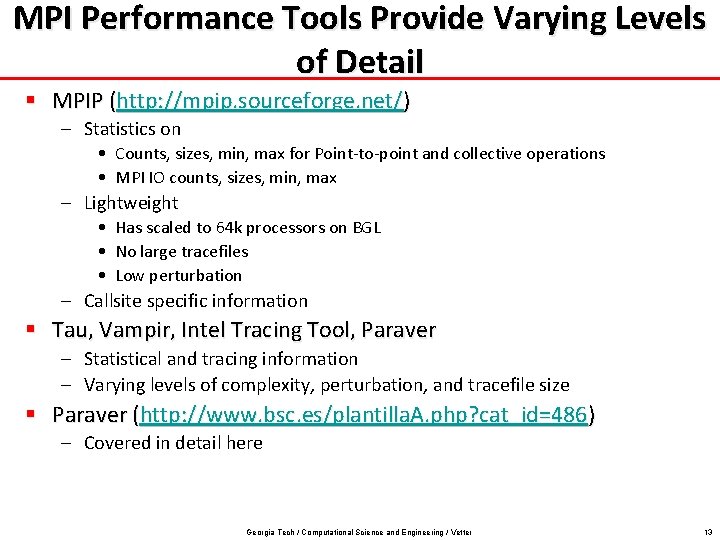

MPI Performance Tools Provide Varying Levels of Detail
§ mpiP (http://mpip.sourceforge.net/)
  – Statistics on
    • Counts, sizes, min, max for point-to-point and collective operations
    • MPI IO counts, sizes, min, max
  – Lightweight
    • Has scaled to 64k processors on BGL
    • No large tracefiles
    • Low perturbation
  – Callsite-specific information
§ Tau, Vampir, Intel Tracing Tool, Paraver
  – Statistical and tracing information
  – Varying levels of complexity, perturbation, and tracefile size
§ Paraver (http://www.bsc.es/plantillaA.php?cat_id=486)
  – Covered in detail here

MPI Profiling

Why do these systems have different performance on POP?

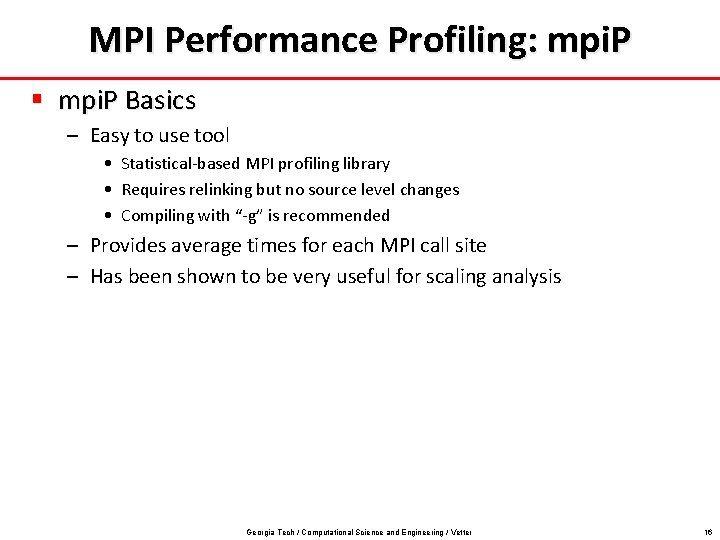

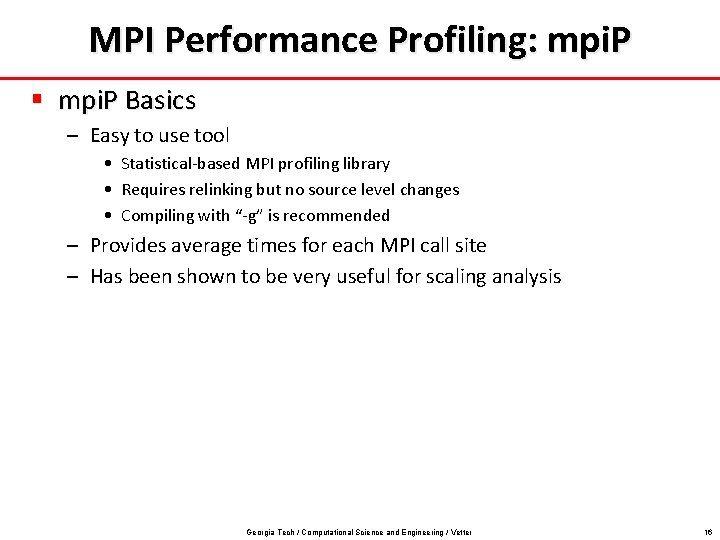

MPI Performance Profiling: mpiP
§ mpiP basics
  – Easy-to-use tool
    • Statistical-based MPI profiling library
    • Requires relinking but no source-level changes
    • Compiling with “-g” is recommended
  – Provides average times for each MPI call site
  – Has been shown to be very useful for scaling analysis

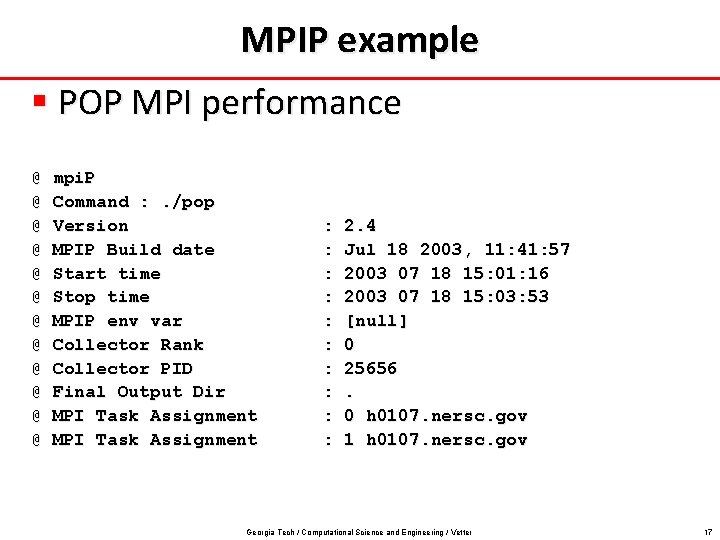

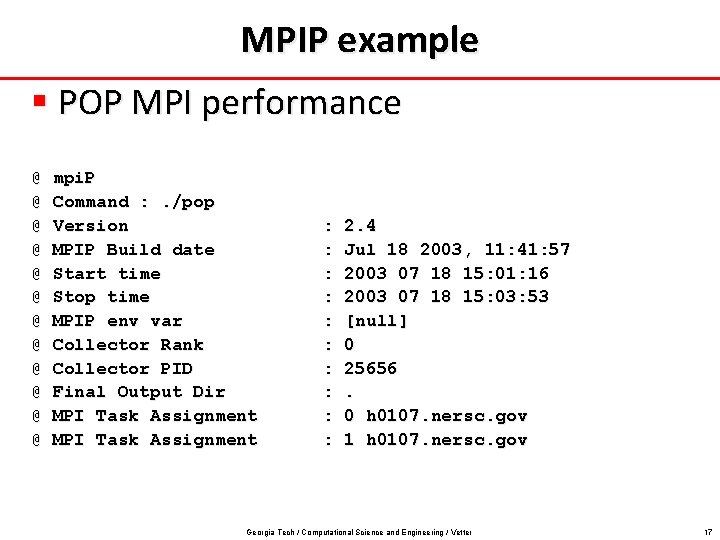

mpiP example
§ POP MPI performance
@ mpiP
@ Command             : ./pop
@ Version             : 2.4
@ MPIP Build date     : Jul 18 2003, 11:41:57
@ Start time          : 2003 07 18 15:01:16
@ Stop time           : 2003 07 18 15:03:53
@ MPIP env var        : [null]
@ Collector Rank      : 0
@ Collector PID       : 25656
@ Final Output Dir    : .
@ MPI Task Assignment : 0 h0107.nersc.gov
@ MPI Task Assignment : 1 h0107.nersc.gov

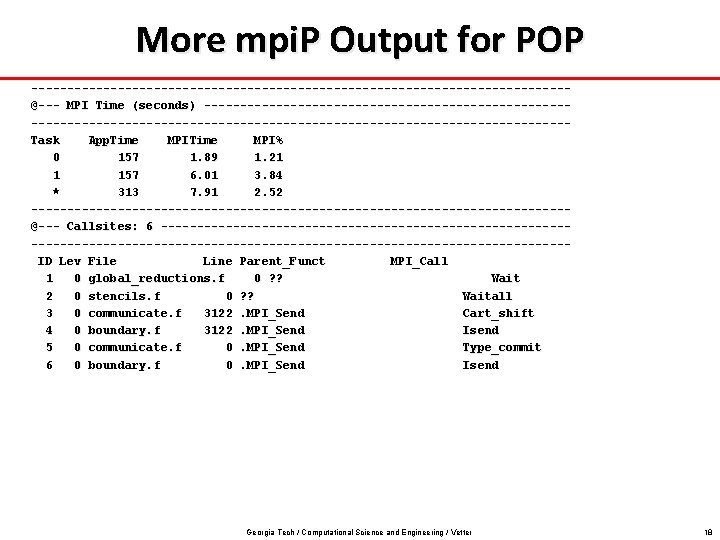

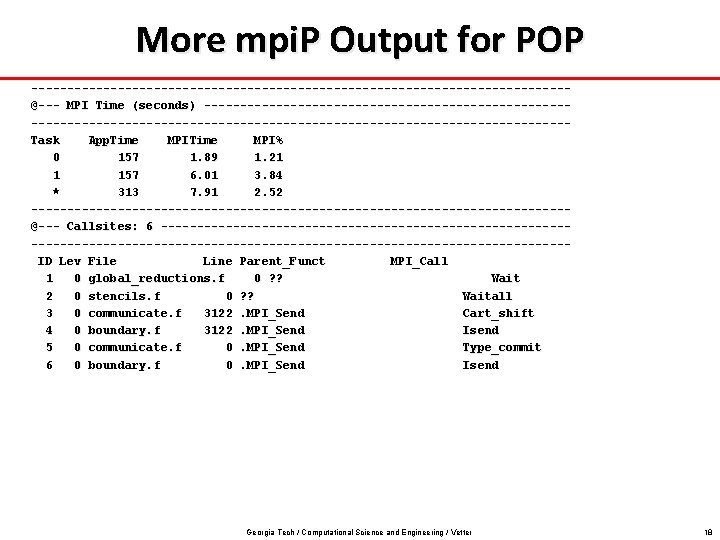

More mpiP Output for POP
@--- MPI Time (seconds) ---
Task    AppTime    MPITime    MPI%
   0        157       1.89    1.21
   1        157       6.01    3.84
   *        313       7.91    2.52
@--- Callsites: 6 ---
ID  Lev  File                 Line  Parent_Funct  MPI_Call
 1    0  global_reductions.f     0  ??            Wait
 2    0  stencils.f              0  ??            Waitall
 3    0  communicate.f        3122  .MPI_Send     Cart_shift
 4    0  boundary.f           3122  .MPI_Send     Isend
 5    0  communicate.f           0  .MPI_Send     Type_commit
 6    0  boundary.f              0  .MPI_Send     Isend
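The MPI% column in the MPI Time table is the fraction of each task's wall-clock time spent inside MPI. As a rough check, the sketch below recomputes it from the printed AppTime and MPITime values; the names are illustrative. Because the report prints AppTime rounded to three digits, the recomputed values agree with the printed MPI% only to within about 0.01 to 0.02 percentage points.

```python
# (AppTime s, MPITime s, reported MPI%) per task, copied from the table above.
MPI_TIME_TABLE = {
    "0": (157.0, 1.89, 1.21),
    "1": (157.0, 6.01, 3.84),
    "*": (313.0, 7.91, 2.52),   # '*' is the aggregate over all tasks
}


def mpi_percent(app_time_s: float, mpi_time_s: float) -> float:
    """Share of application wall-clock time spent in MPI, in percent."""
    return 100.0 * mpi_time_s / app_time_s


recomputed = {
    task: mpi_percent(app, mpi) for task, (app, mpi, _) in MPI_TIME_TABLE.items()
}

for task, (app, mpi, reported) in MPI_TIME_TABLE.items():
    print(f"task {task}: recomputed {recomputed[task]:.2f}%  reported {reported:.2f}%")
```

The point of the table is the imbalance: task 1 spends roughly three times as long in MPI as task 0, even though both have the same application time.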

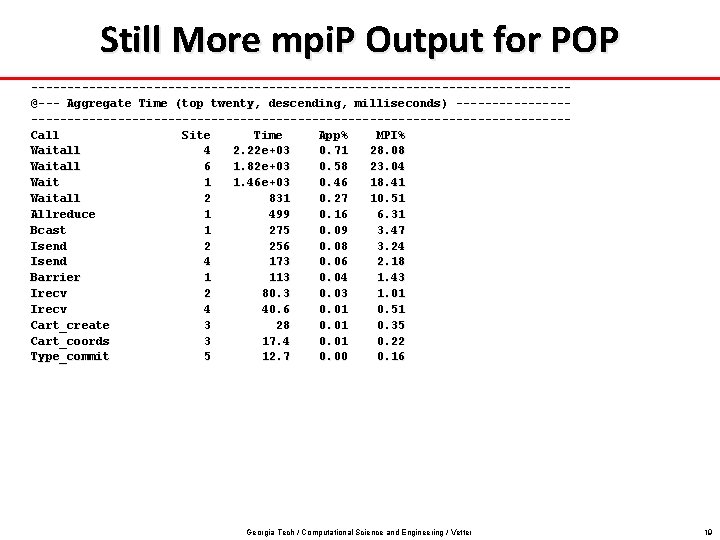

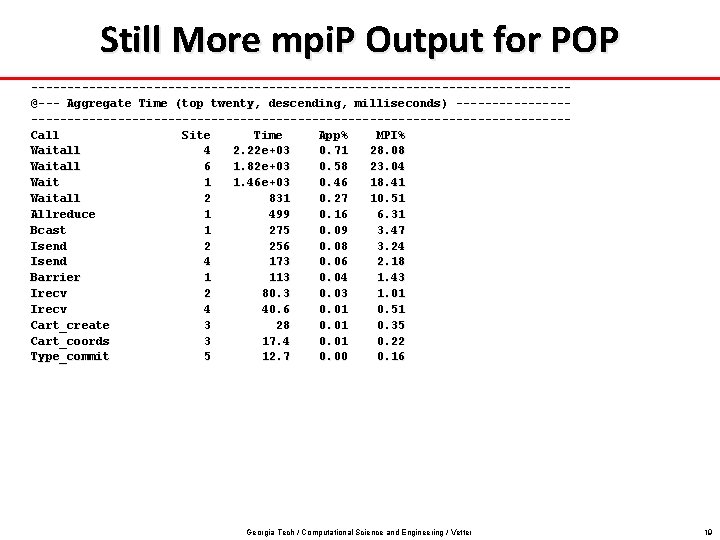

Still More mpiP Output for POP
@--- Aggregate Time (top twenty, descending, milliseconds) ---
Call          Site  Time      App%   MPI%
Waitall          4  2.22e+03  0.71  28.08
Waitall          6  1.82e+03  0.58  23.04
Wait             1  1.46e+03  0.46  18.41
Waitall          2  831       0.27  10.51
Allreduce        1  499       0.16   6.31
Bcast            1  275       0.09   3.47
Isend            2  256       0.08   3.24
Isend            4  173       0.06   2.18
Barrier          1  113       0.04   1.43
Irecv            2  80.3      0.03   1.01
Irecv            4  40.6      0.01   0.51
Cart_create      3  28        0.01   0.35
Cart_coords      3  17.4      0.01   0.22
Type_commit      5  12.7      0.00   0.16
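One way to read the aggregate table is to group call sites by MPI routine and ask which kind of call dominates. The sketch below parses the rows listed above (copied verbatim as whitespace-separated text) and sums the MPI% of all Wait and Waitall call sites; the parsing helper is an illustrative assumption, not part of mpiP.

```python
# Rows from the Aggregate Time table: call, site, time (ms), App%, MPI%.
AGGREGATE = """\
Waitall 4 2.22e+03 0.71 28.08
Waitall 6 1.82e+03 0.58 23.04
Wait 1 1.46e+03 0.46 18.41
Waitall 2 831 0.27 10.51
Allreduce 1 499 0.16 6.31
Bcast 1 275 0.09 3.47
Isend 2 256 0.08 3.24
Isend 4 173 0.06 2.18
Barrier 1 113 0.04 1.43
Irecv 2 80.3 0.03 1.01
Irecv 4 40.6 0.01 0.51
Cart_create 3 28 0.01 0.35
Cart_coords 3 17.4 0.01 0.22
Type_commit 5 12.7 0.00 0.16
"""


def parse_rows(text: str):
    """Turn each line into (call, site, time_ms, app_pct, mpi_pct)."""
    rows = []
    for line in text.strip().splitlines():
        call, site, time_ms, app_pct, mpi_pct = line.split()
        rows.append((call, int(site), float(time_ms), float(app_pct), float(mpi_pct)))
    return rows


def mpi_share(rows, prefix: str) -> float:
    """Sum of MPI% over call sites whose routine name starts with prefix."""
    return sum(mpi_pct for call, _, _, _, mpi_pct in rows if call.startswith(prefix))


rows = parse_rows(AGGREGATE)
wait_share = mpi_share(rows, "Wait")
print(f"Wait/Waitall share of MPI time: {wait_share:.2f}%")
```

For this run the four Wait and Waitall sites account for about 80% of MPI time, which suggests the time is going to waiting on nonblocking communication rather than to the sends and receives themselves.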

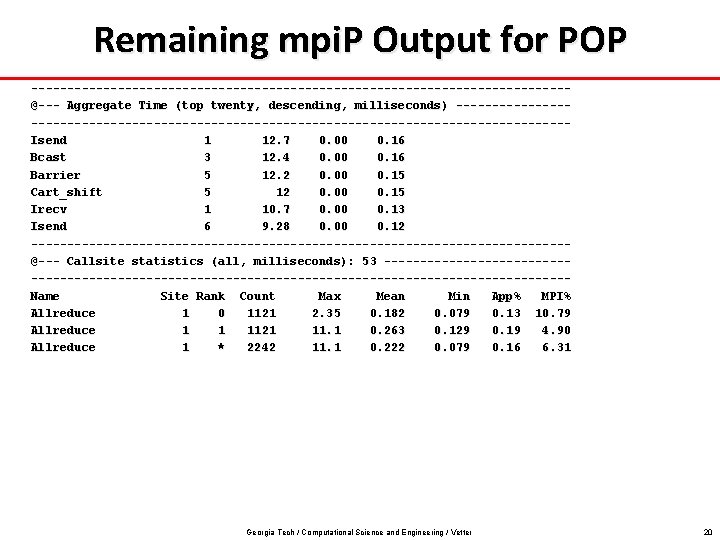

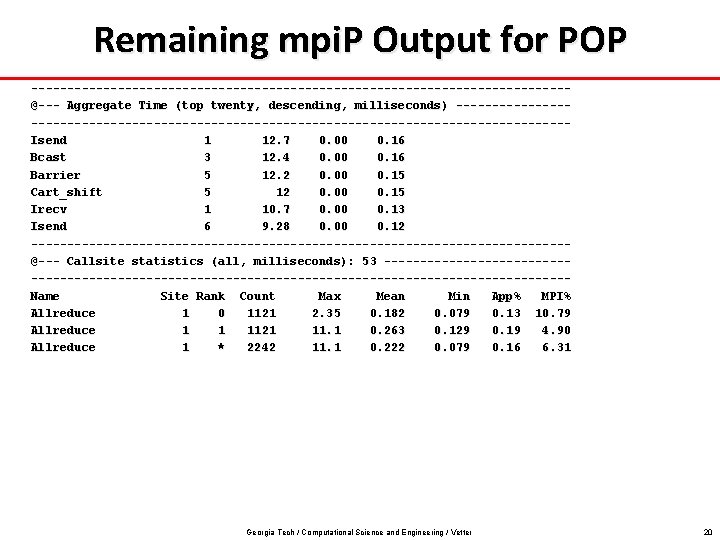

Remaining mpiP Output for POP
@--- Aggregate Time (top twenty, descending, milliseconds) ---
Isend            1  12.7      0.00   0.16
Bcast            3  12.4      0.00   0.16
Barrier          5  12.2      0.00   0.15
Cart_shift       5  12        0.00   0.15
Irecv            1  10.7      0.00   0.13
Isend            6  9.28      0.00   0.12
@--- Callsite statistics (all, milliseconds): 53 ---
Name       Site  Rank  Count   Max   Mean    Min    App%   MPI%
Allreduce     1     0   1121  2.35  0.182  0.079   0.13  10.79
Allreduce     1     1   1121  11.1  0.263  0.129   0.19   4.90
Allreduce     1     *   2242  11.1  0.222  0.079   0.16   6.31
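The callsite statistics can be tied back to the earlier sections of the report: count times mean gives the total time at a call site, and dividing by the task's application or MPI time should reproduce the App% and MPI% columns. The sketch below does this for the three Allreduce rows, using the per-task times from the MPI Time table (157 s, 157 s, 313 s application time; 1.89 s, 6.01 s, 7.91 s MPI time). Names are illustrative, and agreement is only approximate because the report prints rounded values.

```python
# Per-task totals from the MPI Time table, converted to milliseconds.
APP_TIME_MS = {"0": 157_000.0, "1": 157_000.0, "*": 313_000.0}
MPI_TIME_MS = {"0": 1_890.0, "1": 6_010.0, "*": 7_910.0}

# Allreduce, site 1: (rank, count, mean ms, reported App%, reported MPI%).
ALLREDUCE_SITE_1 = [
    ("0", 1121, 0.182, 0.13, 10.79),
    ("1", 1121, 0.263, 0.19, 4.90),
    ("*", 2242, 0.222, 0.16, 6.31),
]


def derived_percentages(rank: str, count: int, mean_ms: float):
    """Return (App%, MPI%) implied by count * mean for the given rank."""
    total_ms = count * mean_ms
    return (
        100.0 * total_ms / APP_TIME_MS[rank],
        100.0 * total_ms / MPI_TIME_MS[rank],
    )


derived = {
    rank: derived_percentages(rank, count, mean)
    for rank, count, mean, _, _ in ALLREDUCE_SITE_1
}

for rank, count, mean, app_rep, mpi_rep in ALLREDUCE_SITE_1:
    app_pct, mpi_pct = derived[rank]
    print(f"rank {rank}: App% {app_pct:.2f} (reported {app_rep}), "
          f"MPI% {mpi_pct:.2f} (reported {mpi_rep})")
```

The same Allreduce site is a larger share of MPI time on rank 0 than on rank 1 simply because rank 0 spends less total time in MPI.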