
Programming Clusters using Message-Passing Interface (MPI)

Dr. Rajkumar Buyya
Cloud Computing and Distributed Systems (CLOUDS) Laboratory
The University of Melbourne, Australia
www.cloudbus.org

Outline
- Introduction to Message Passing Environments
- HelloWorld MPI Program
- Compiling and Running MPI programs
  - On interactive clusters
  - On batch clusters
- Elements of Hello World Program
- MPI Routines Listing
- Communication in MPI programs
- Summary

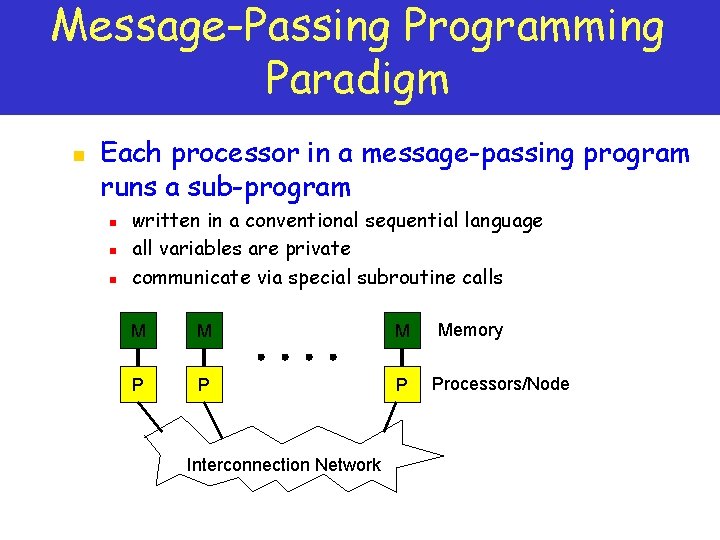

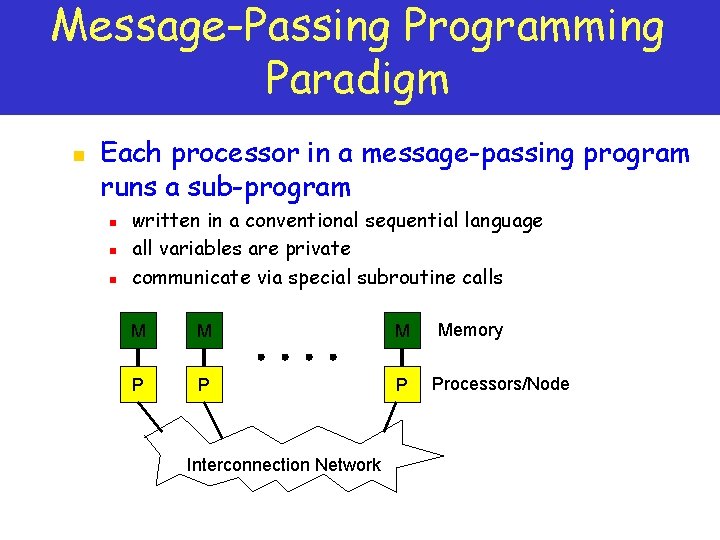

Message-Passing Programming Paradigm
- Each processor in a message-passing program runs a sub-program:
  - written in a conventional sequential language
  - all variables are private
  - sub-programs communicate via special subroutine calls

[Figure: nodes, each a processor (P) with its own memory (M), connected by an interconnection network]

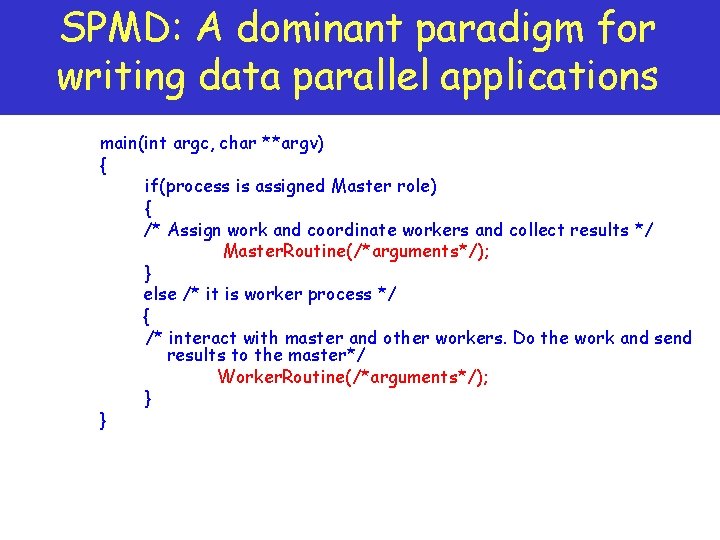

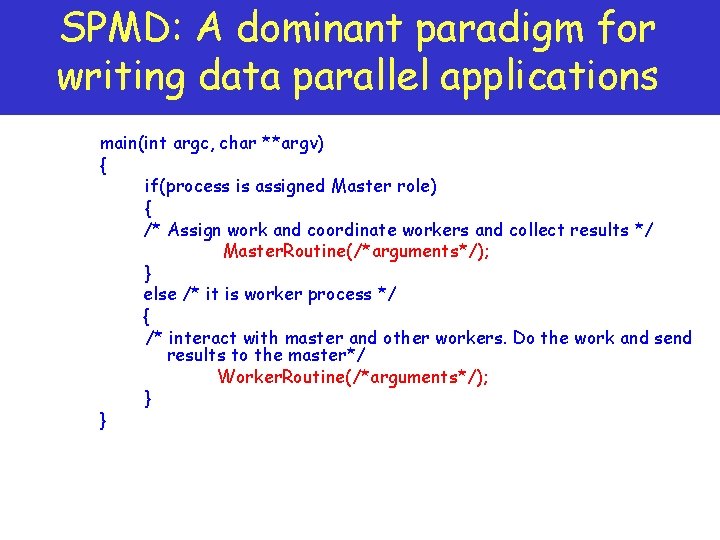

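SPMD: A dominant paradigm for writing data parallel applications

Every process runs the same program and chooses its role at run time. Here rank 0 takes the master role, which is one common convention; the routine names are placeholders.

```c
#include <mpi.h>

/* Application-specific routines (placeholders) */
void Master_Routine(void) { /* assign work, coordinate workers and collect results */ }
void Worker_Routine(void) { /* interact with master and other workers, do the work, send results to the master */ }

int main(int argc, char **argv)
{
    int rank;

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    if (rank == 0) {          /* process is assigned the Master role */
        Master_Routine();
    } else {                  /* it is a worker process */
        Worker_Routine();
    }
    MPI_Finalize();
    return 0;
}
```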

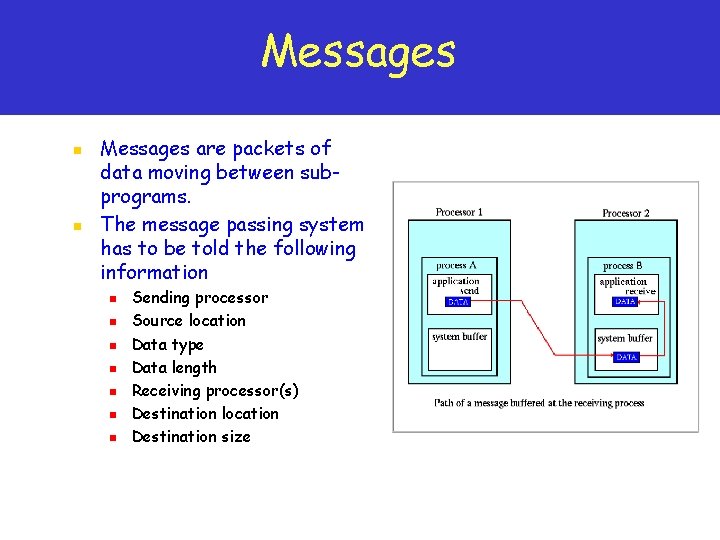

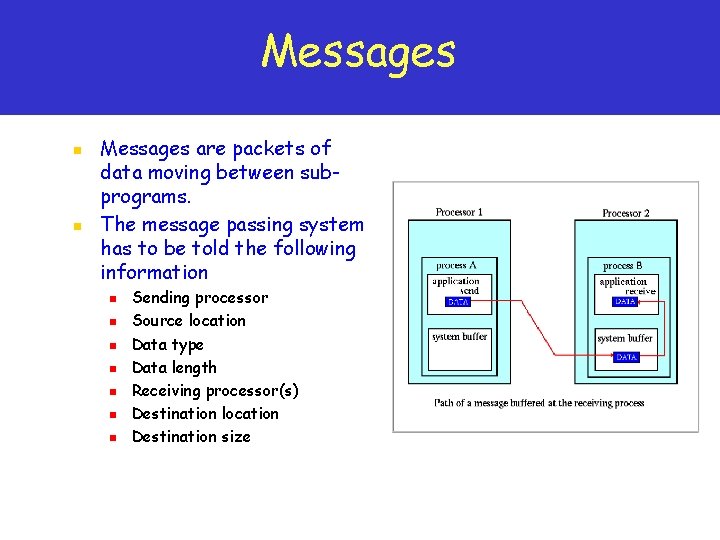

Messages
- Messages are packets of data moving between sub-programs.
- The message-passing system has to be told the following information:
  - Sending processor
  - Source location
  - Data type
  - Data length
  - Receiving processor(s)
  - Destination location
  - Destination size

Messages
- Access: each sub-program needs to be connected to a message-passing system.
- Addressing: messages need to have addresses to be sent to.
- Reception: it is important that the receiving process is capable of dealing with the messages it is sent.
- A message-passing system is similar to a post office, phone line, fax, e-mail, etc.
- Message types: point-to-point, collective, synchronous (telephone) / asynchronous (postal).

Message Passing Systems and MPI - www.mpi-forum.org
- Initially, each manufacturer developed their own message-passing interface, with a wide range of features that were often incompatible.
- The MPI Forum brought together several vendors and users of HPC systems from the US and Europe to overcome these limitations.
- It produced a document defining a standard, called the Message Passing Interface (MPI), derived from experience with the common features and issues addressed by many message-passing libraries. It aimed:
  - to provide source-code portability
  - to allow efficient implementation
  - to provide a high level of functionality
  - to support heterogeneous parallel architectures
  - to support parallel I/O (in MPI 2.0)
- MPI 1.0 contains over 115 routines/functions.

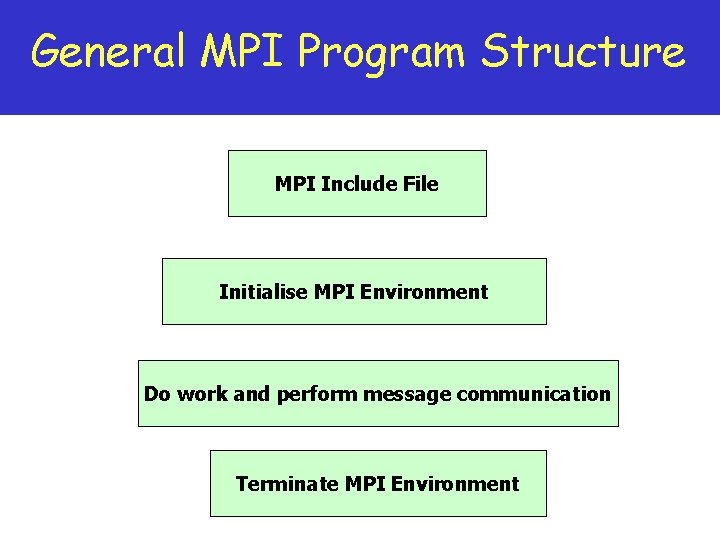

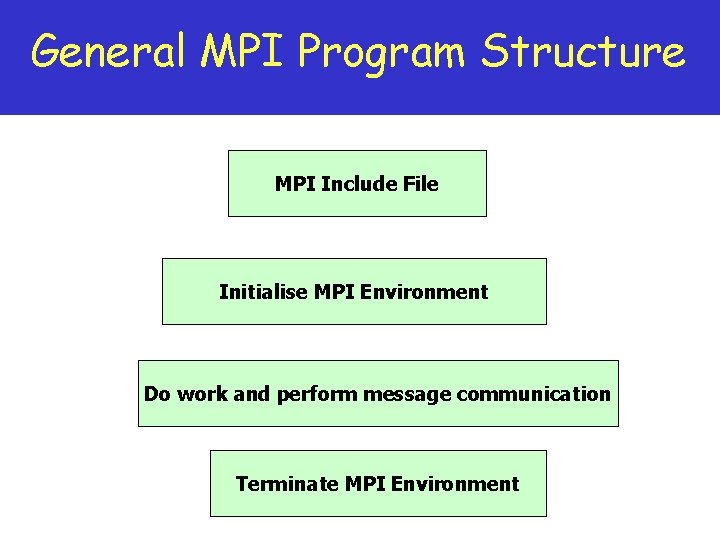

General MPI Program Structure
- MPI include file
- Initialise MPI environment
- Do work and perform message communication
- Terminate MPI environment

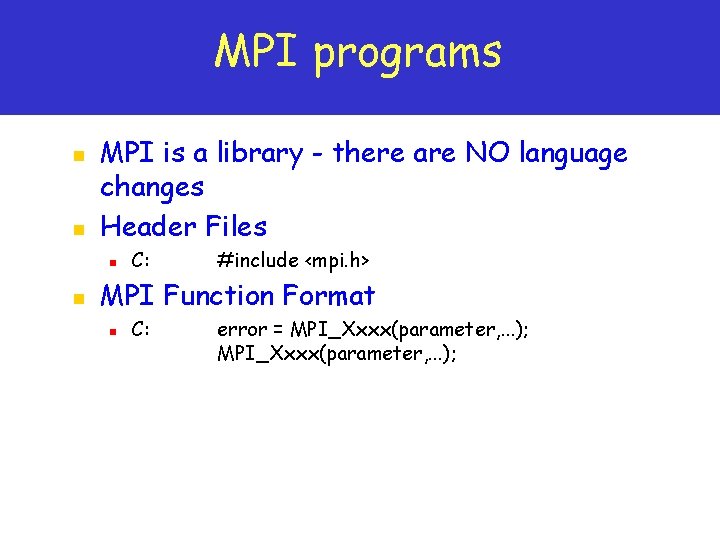

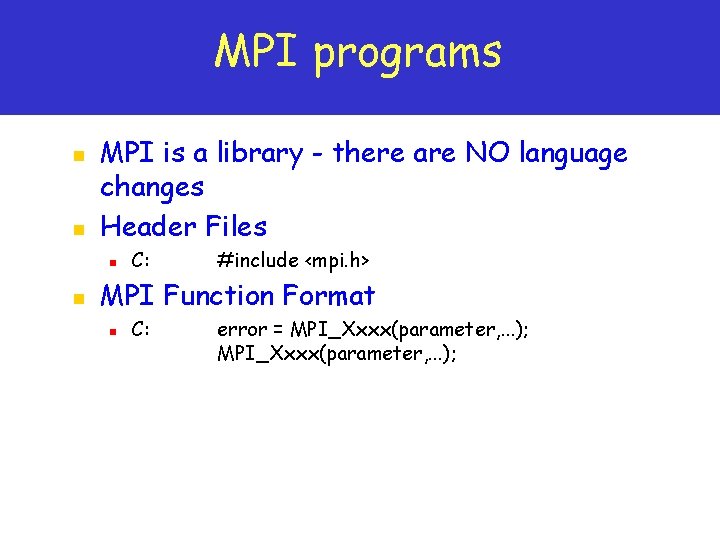

MPI programs
- MPI is a library: there are NO language changes.
- Header files
  - C: #include <mpi.h>
- MPI function format
  - C: error = MPI_Xxxx(parameter, ...);
  - The returned error code is MPI_SUCCESS when the call succeeds.

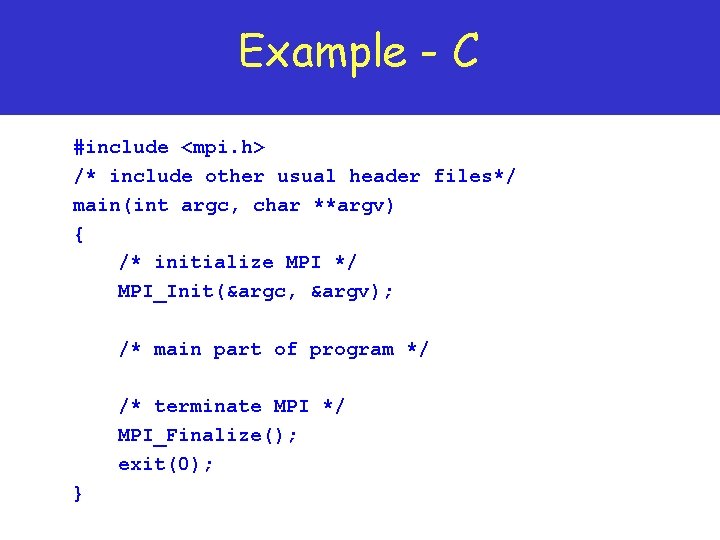

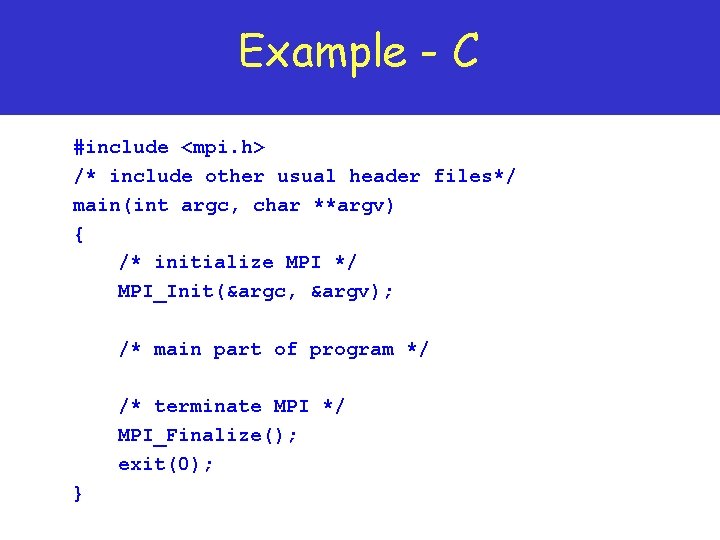

Example - C

```c
#include <mpi.h>
/* include other usual header files */

int main(int argc, char **argv)
{
    /* initialize MPI */
    MPI_Init(&argc, &argv);

    /* main part of program */

    /* terminate MPI */
    MPI_Finalize();
    return 0;
}
```

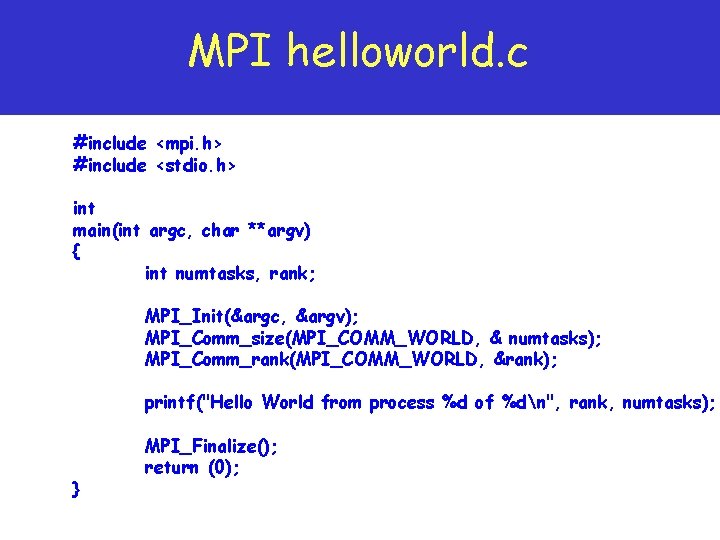

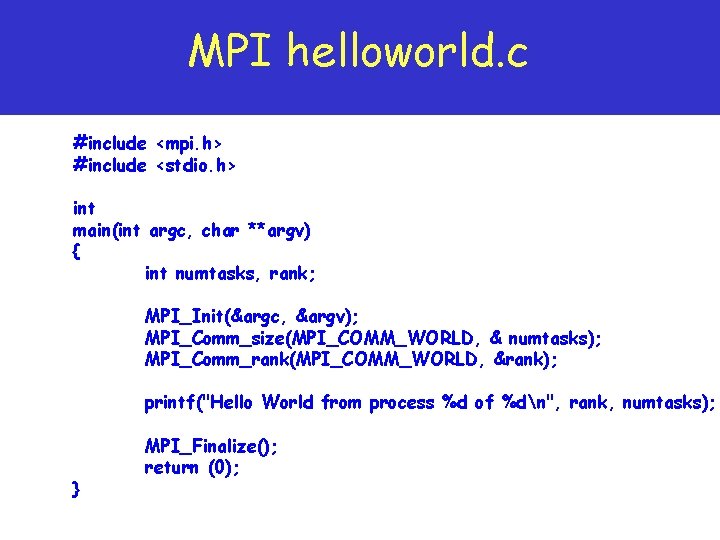

MPI helloworld.c

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char **argv)
{
    int numtasks, rank;

    MPI_Init(&argc, &argv);
    MPI_Comm_size(MPI_COMM_WORLD, &numtasks);  /* number of processes */
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);      /* rank of this process */
    printf("Hello World from process %d of %d\n", rank, numtasks);
    MPI_Finalize();
    return 0;
}
```

MPI Programs Compilation and Execution

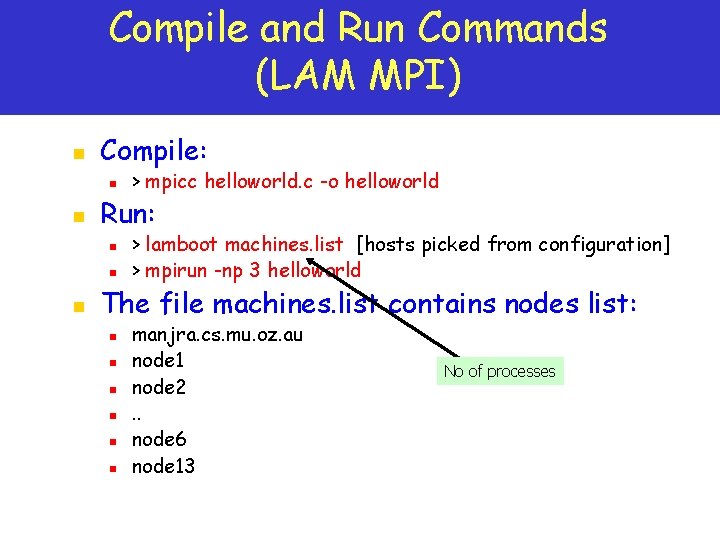

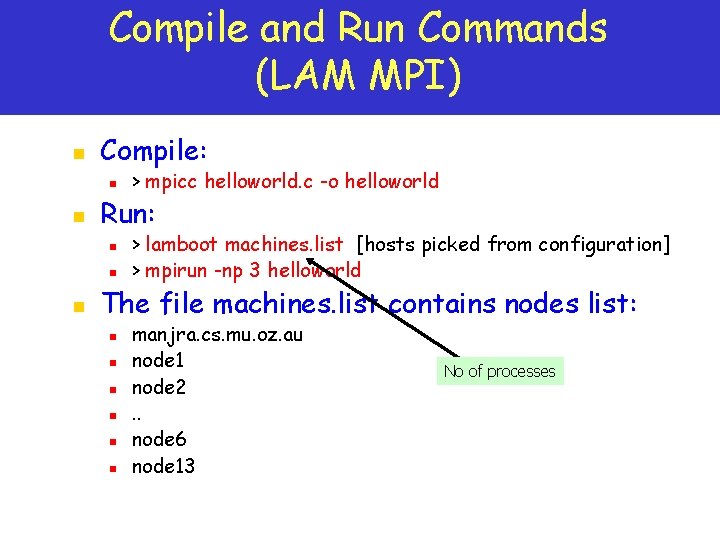

Compile and Run Commands (LAM MPI)
- Compile:
  > mpicc helloworld.c -o helloworld
- Run:
  > lamboot machines.list    [hosts picked from configuration]
  > mpirun -np 3 helloworld    [-np 3: number of processes]
- The file machines.list contains the list of nodes:
  manjra.cs.mu.oz.au
  node1
  node2
  ..
  node6
  node13

Sample Run and Output
- A run with 3 processes:
  > lamboot
  > mpirun -np 3 helloworld
  Hello World from process 0 of 3
  Hello World from process 1 of 3
  Hello World from process 2 of 3

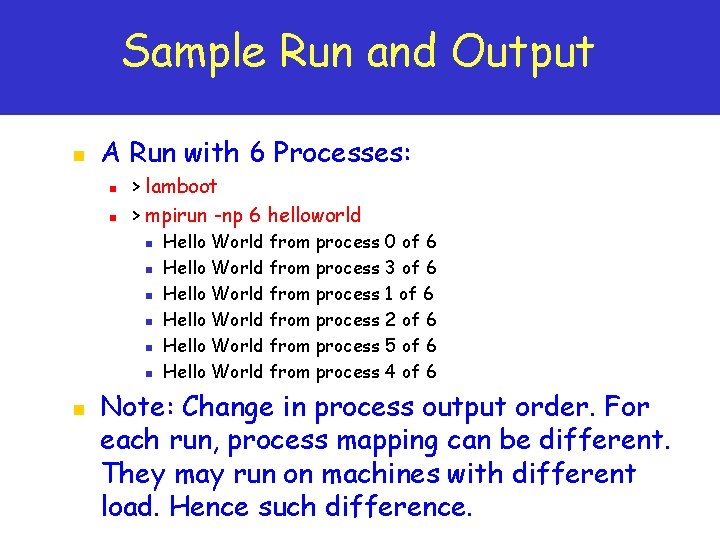

Sample Run and Output
- A run with 6 processes:
  > lamboot
  > mpirun -np 6 helloworld
  Hello World from process 0 of 6
  Hello World from process 3 of 6
  Hello World from process 1 of 6
  Hello World from process 5 of 6
  Hello World from process 4 of 6
  Hello World from process 2 of 6
- Note: process execution need not be in process number order.

Sample Run and Output
- A run with 6 processes:
  > lamboot
  > mpirun -np 6 helloworld
  Hello World from process 0 of 6
  Hello World from process 3 of 6
  Hello World from process 1 of 6
  Hello World from process 2 of 6
  Hello World from process 5 of 6
  Hello World from process 4 of 6
- Note: the output order differs from the previous run. The mapping of processes to machines can change from run to run, and the machines may carry different loads, so processes reach their printf at different times.

More on MPI Program Elements and Error Checking

Initializing MPI
- The first MPI routine called in any MPI program must be MPI_Init (the only exceptions are a few query routines such as MPI_Initialized). The C version accepts the arguments to main:
  int MPI_Init(int *argc, char ***argv);
- MPI_Init must be called by every MPI program.
- Making multiple MPI_Init calls is erroneous.

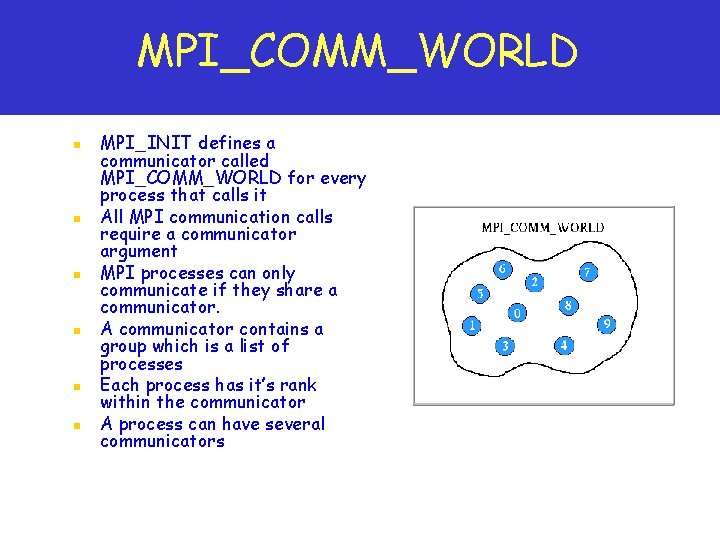

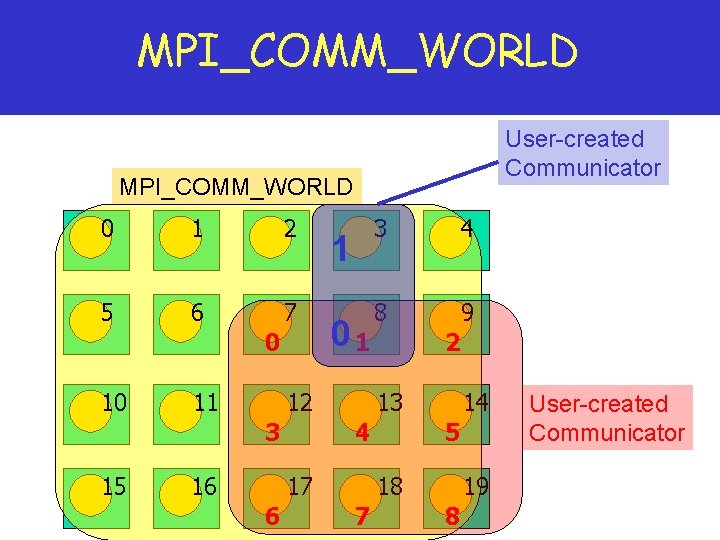

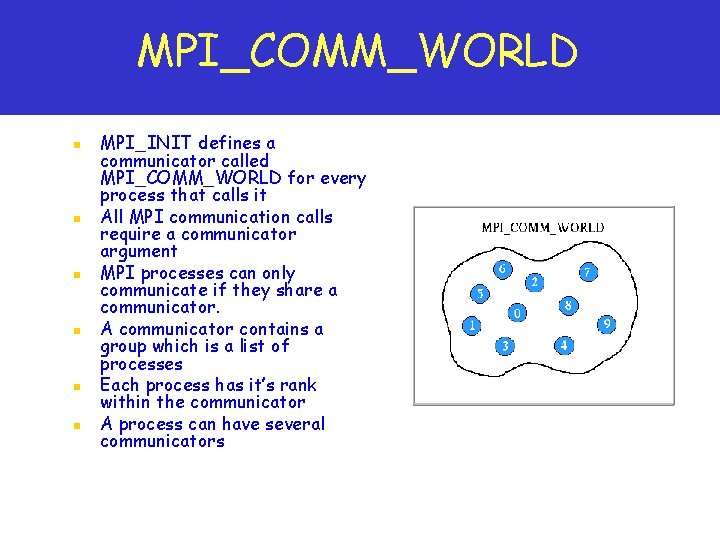

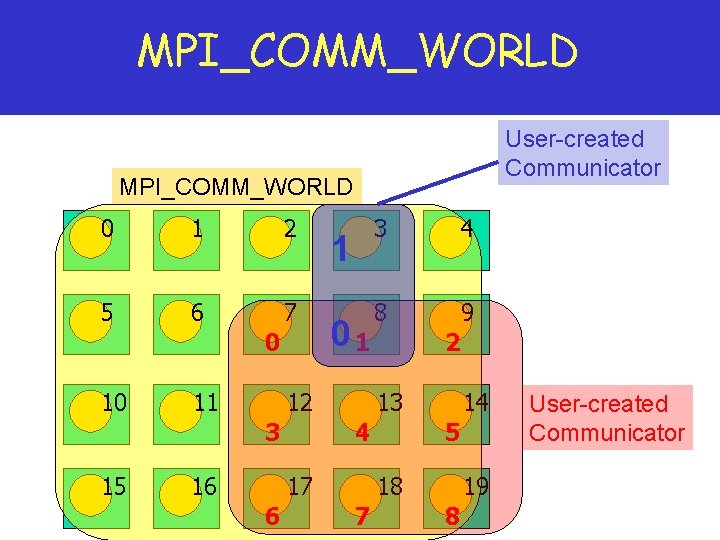

MPI_COMM_WORLD
- MPI_Init defines a communicator called MPI_COMM_WORLD for every process that calls it.
- All MPI communication calls require a communicator argument.
- MPI processes can only communicate if they share a communicator.
- A communicator contains a group, which is a list of processes.
- Each process has its rank within the communicator.
- A process can have several communicators.

[Figure: MPI_COMM_WORLD containing processes 0-19, with subsets of them grouped into user-created communicators that number their own members from 0]

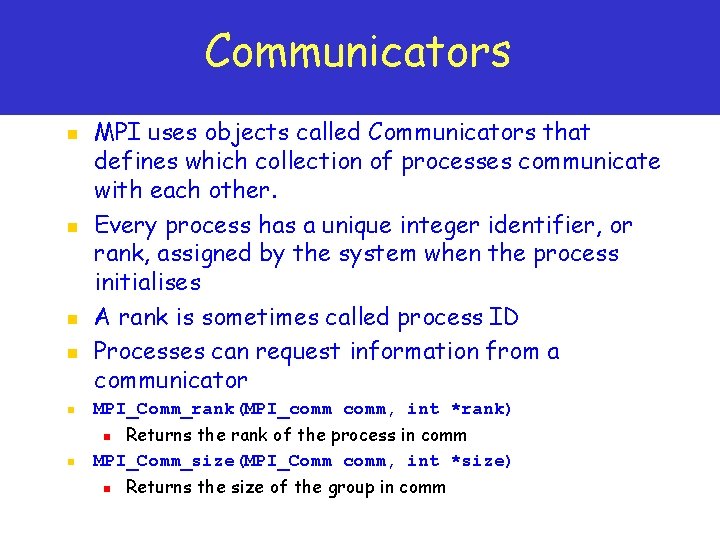

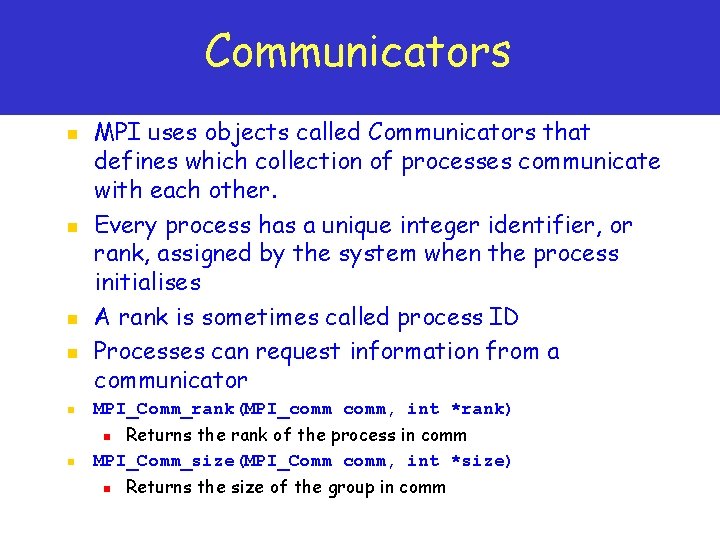

Communicators
- MPI uses objects called communicators that define which collection of processes communicate with each other.
- Every process has an integer identifier, or rank, unique within the communicator and assigned by the system when the process initialises. A rank is sometimes called a process ID.
- Processes can request information from a communicator:
  - MPI_Comm_rank(MPI_Comm comm, int *rank) returns the rank of the process in comm
  - MPI_Comm_size(MPI_Comm comm, int *size) returns the size of the group in comm

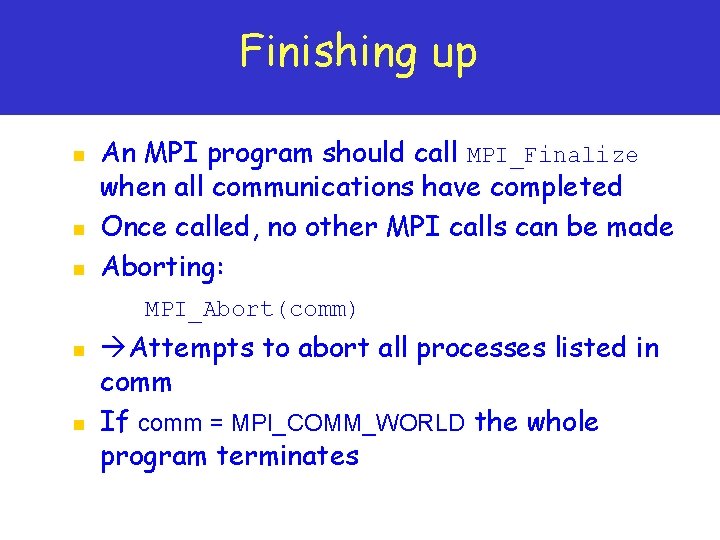

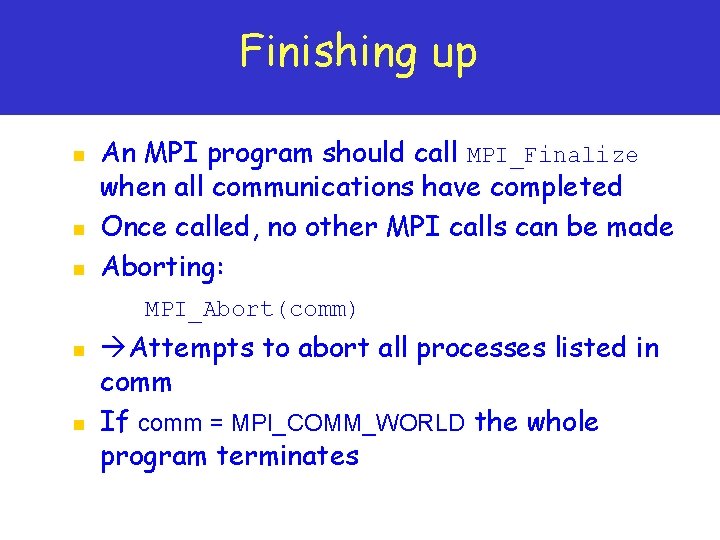

Finishing up
- An MPI program should call MPI_Finalize when all communications have completed.
- Once it has been called, no other MPI calls can be made.
- Aborting: MPI_Abort(comm, errorcode)
  - attempts to abort all processes listed in comm
  - if comm = MPI_COMM_WORLD, the whole program terminates

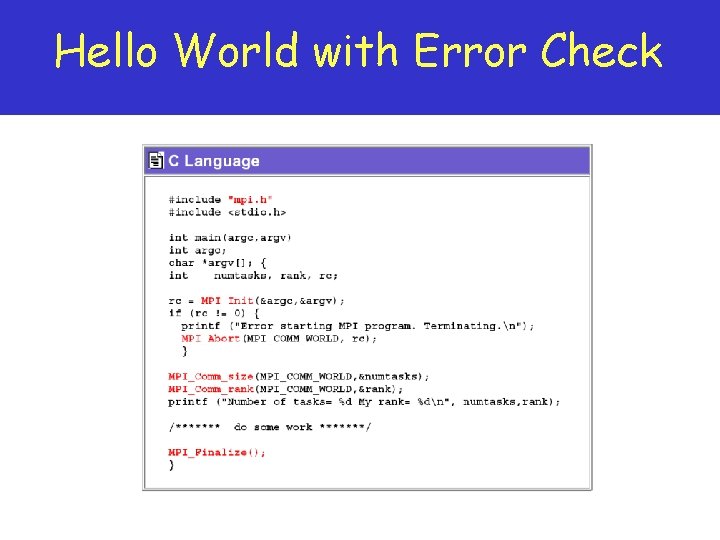

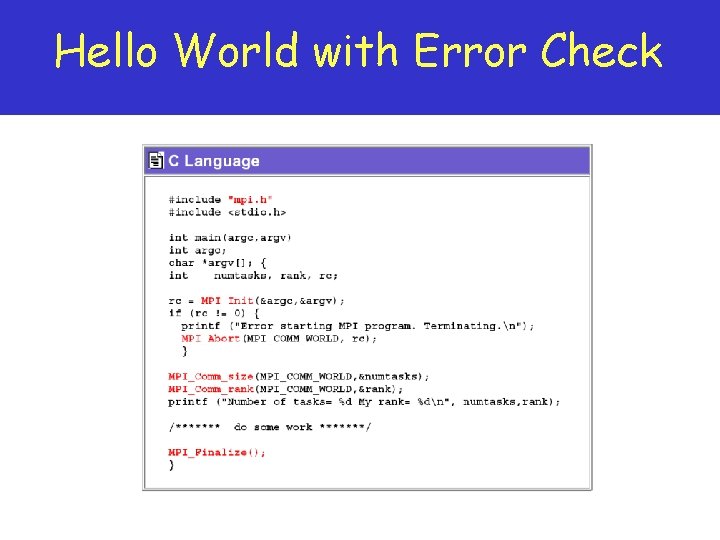

Hello World with Error Check

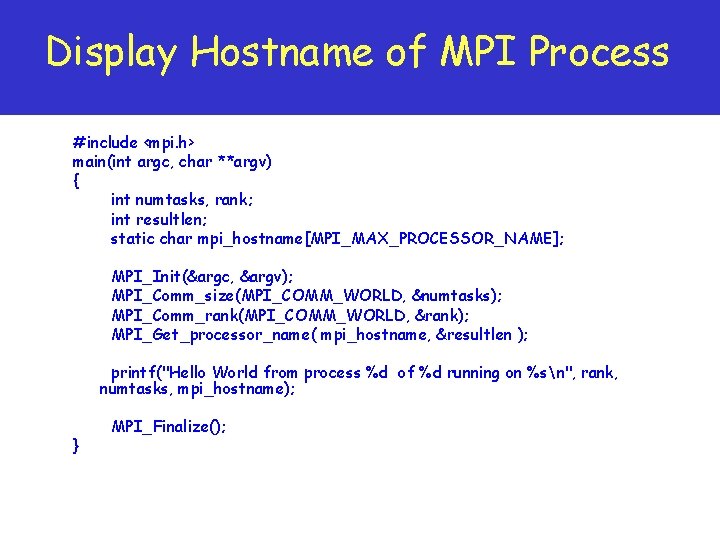

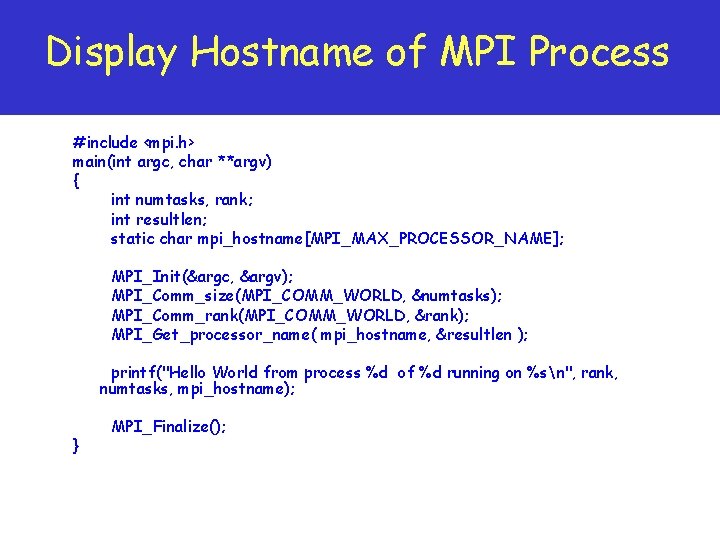

Display Hostname of MPI Process

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char **argv)
{
    int numtasks, rank;
    int resultlen;
    static char mpi_hostname[MPI_MAX_PROCESSOR_NAME];

    MPI_Init(&argc, &argv);
    MPI_Comm_size(MPI_COMM_WORLD, &numtasks);
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    MPI_Get_processor_name(mpi_hostname, &resultlen);  /* name of the node running this process */
    printf("Hello World from process %d of %d running on %s\n",
           rank, numtasks, mpi_hostname);
    MPI_Finalize();
    return 0;
}
```

MPI Routines

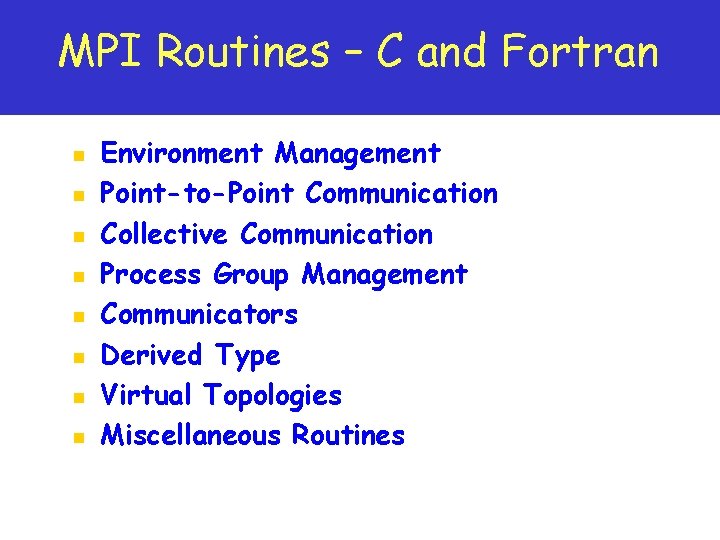

MPI Routines - C and Fortran
- Environment Management
- Point-to-Point Communication
- Collective Communication
- Process Group Management
- Communicators
- Derived Type
- Virtual Topologies
- Miscellaneous Routines

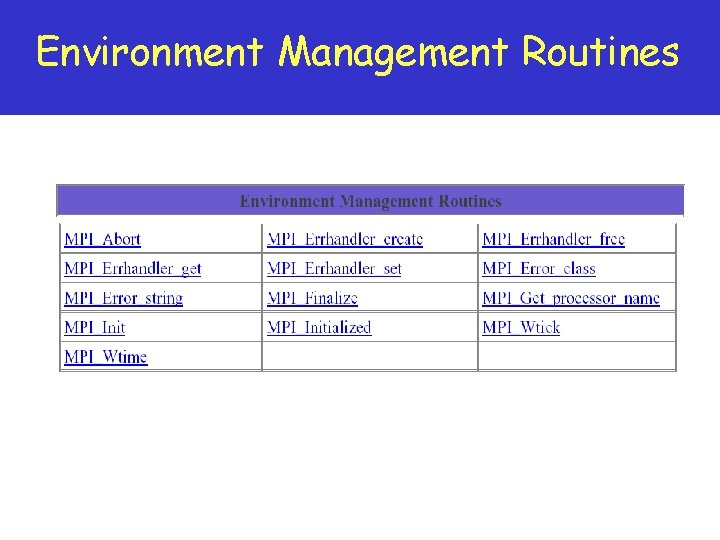

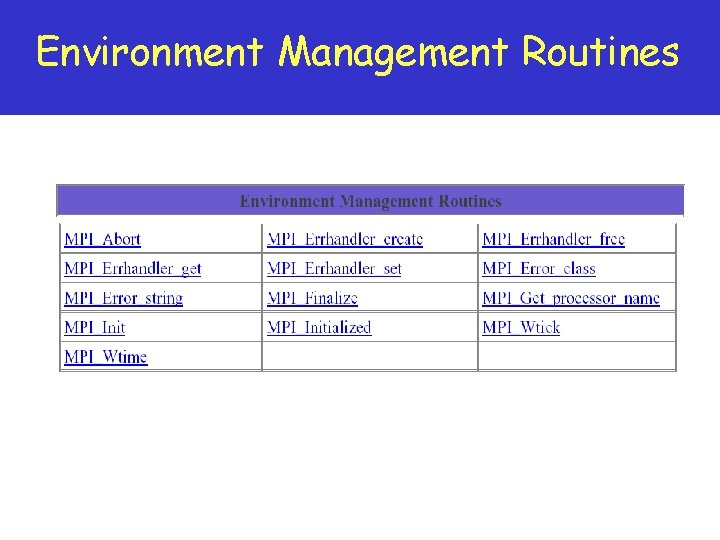

Environment Management Routines

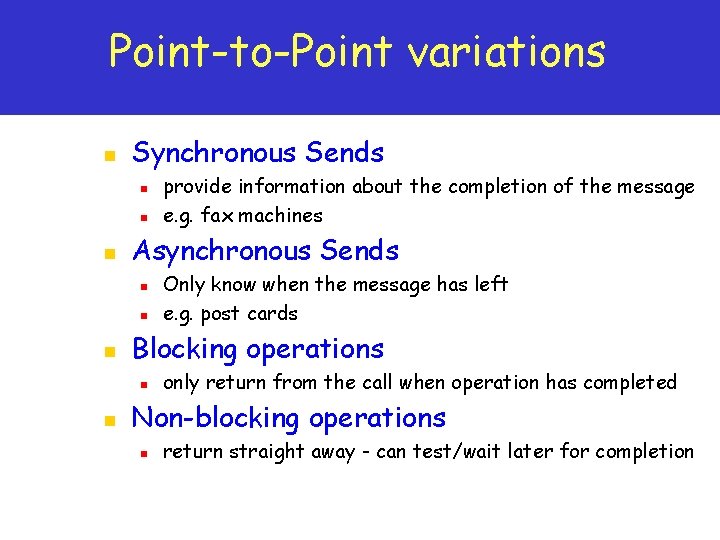

Point-to-Point Communication
- The simplest form of message passing.
- One process sends a message to another.
- There are several variations on how sending a message can interact with the execution of the sub-program.

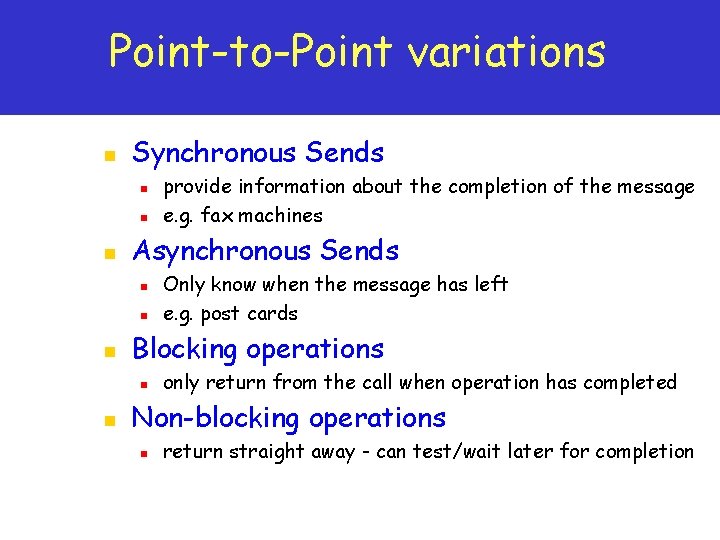

Point-to-Point variations
- Synchronous sends
  - provide information about the completion of the message
  - e.g. fax machines
- Asynchronous sends
  - only know when the message has left
  - e.g. post cards
- Blocking operations
  - only return from the call when the operation has completed
- Non-blocking operations
  - return straight away; can test/wait later for completion

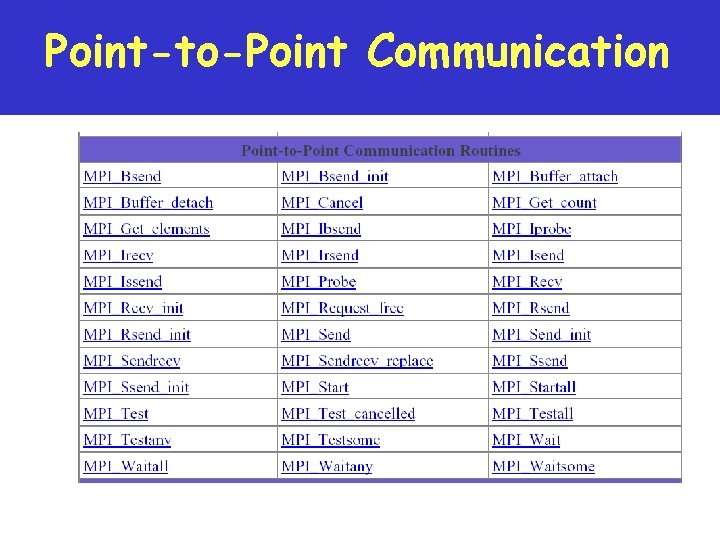

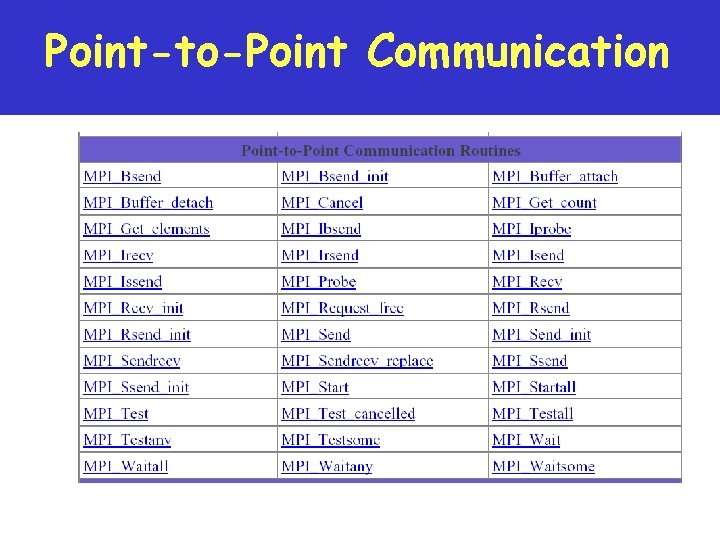

Point-to-Point Communication

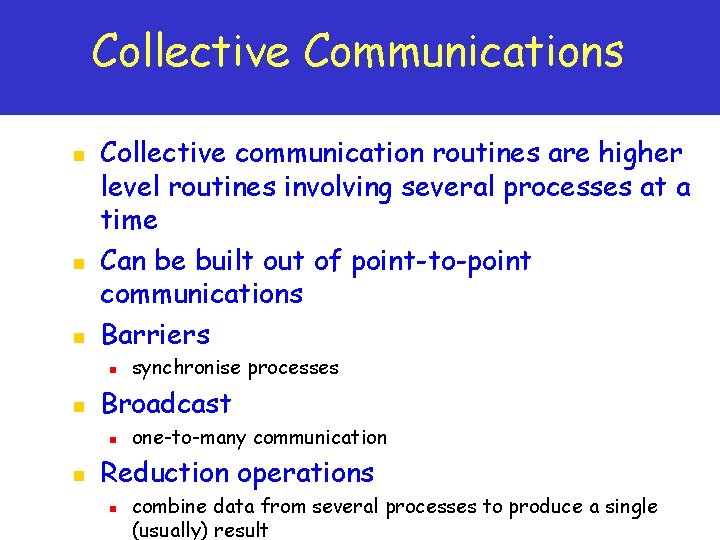

Collective Communications
- Collective communication routines are higher-level routines involving several processes at a time.
- They can be built out of point-to-point communications.
- Barriers
  - synchronise processes
- Broadcast
  - one-to-many communication
- Reduction operations
  - combine data from several processes to produce a single (usually) result

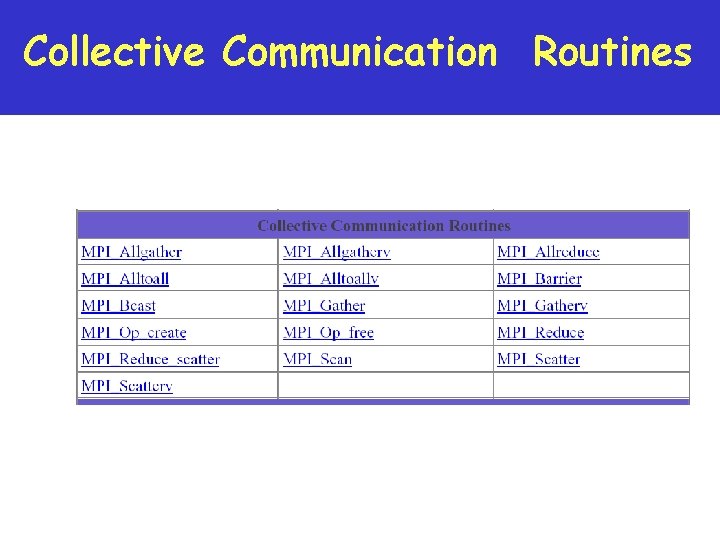

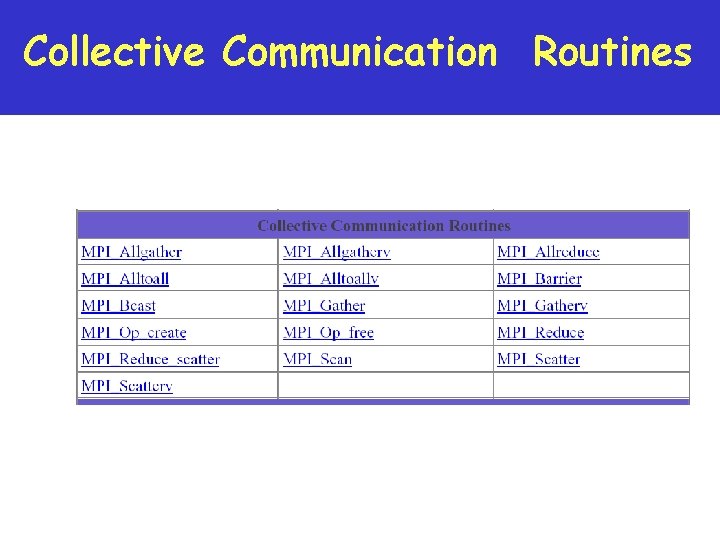

Collective Communication Routines

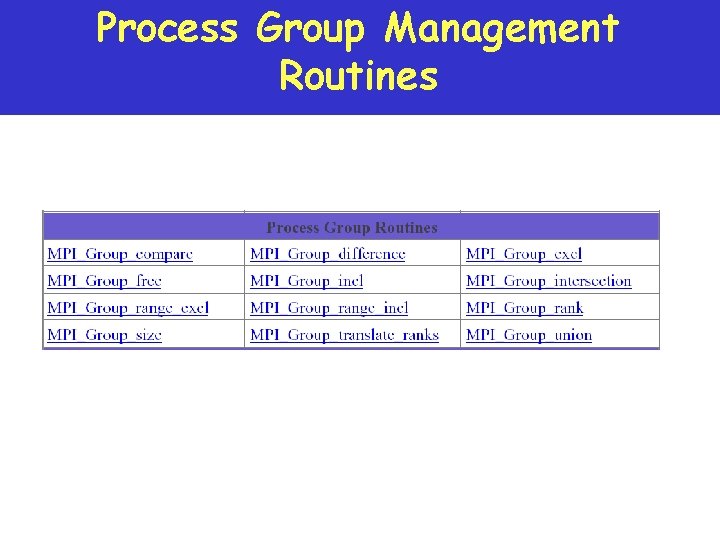

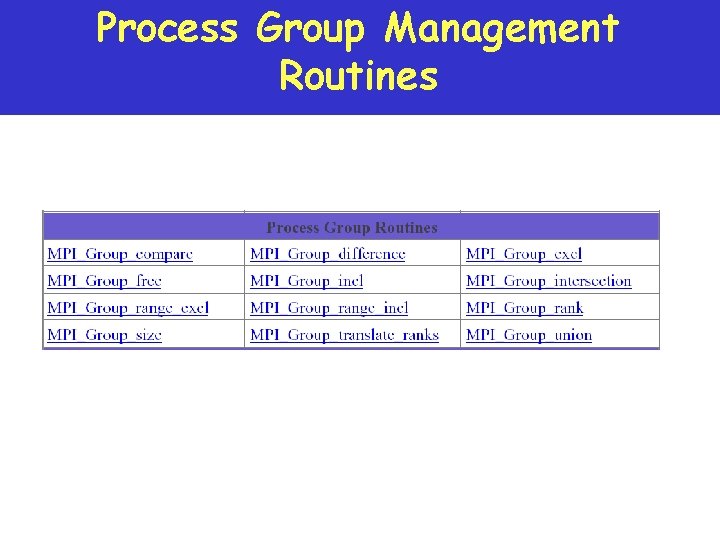

Process Group Management Routines

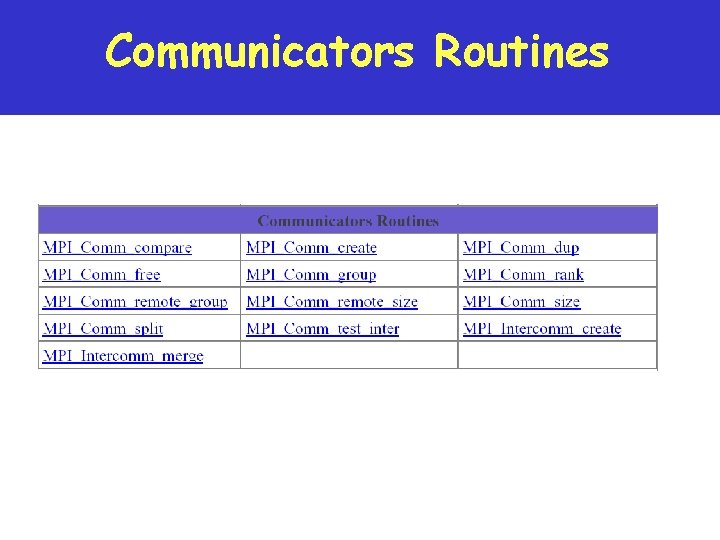

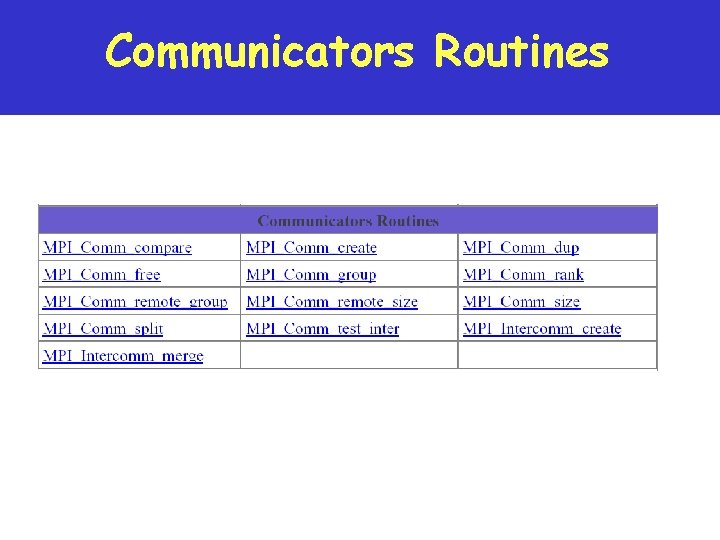

Communicators Routines

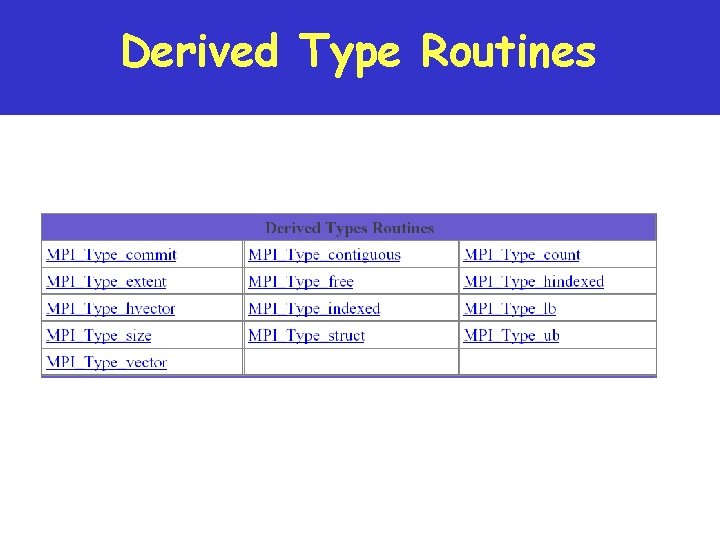

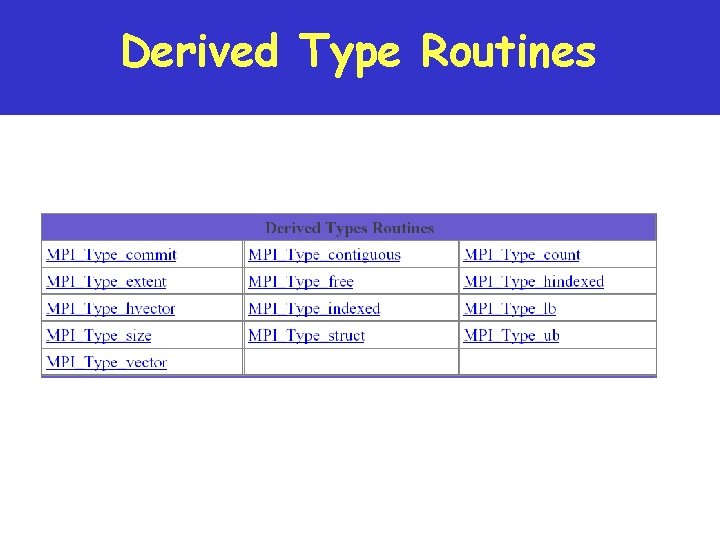

Derived Type Routines

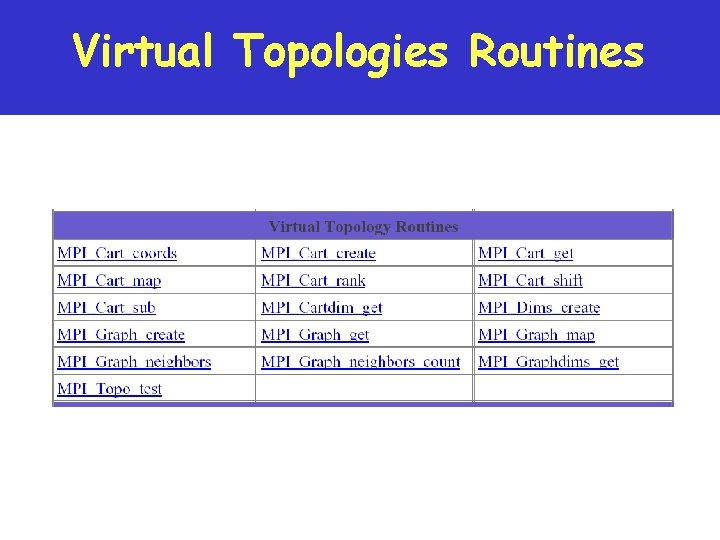

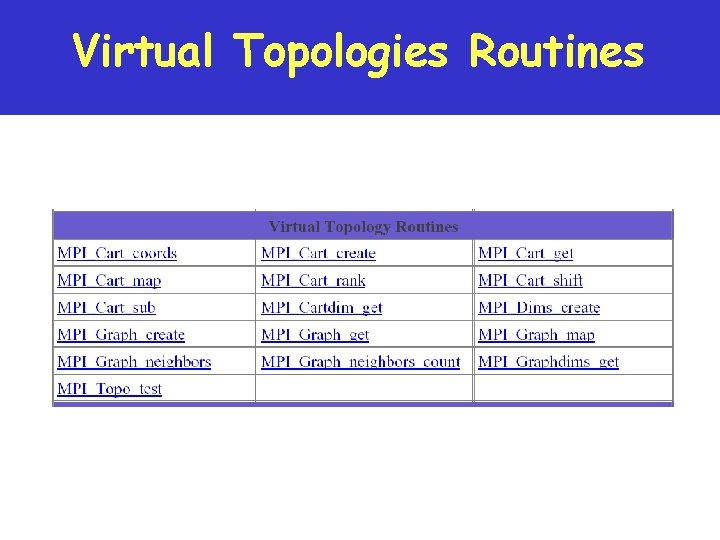

Virtual Topologies Routines

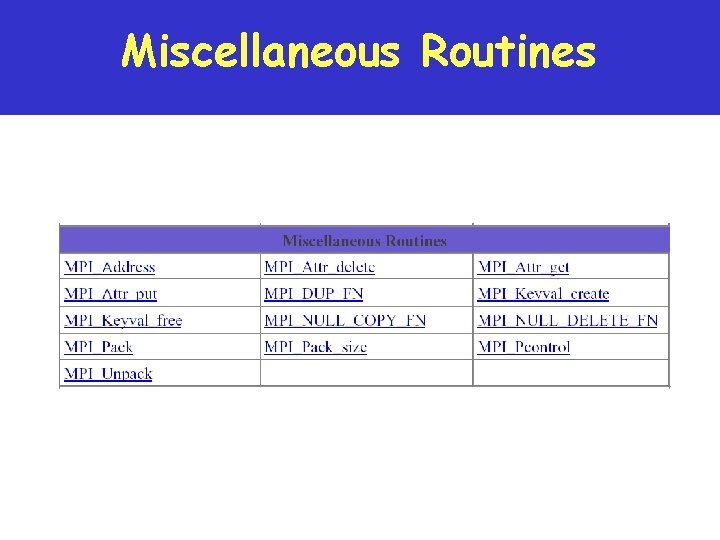

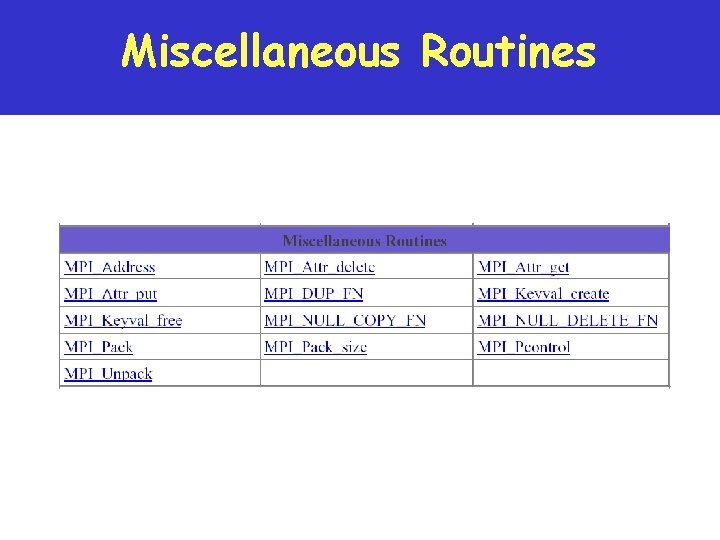

Miscellaneous Routines

MPI Communication Routines and Examples

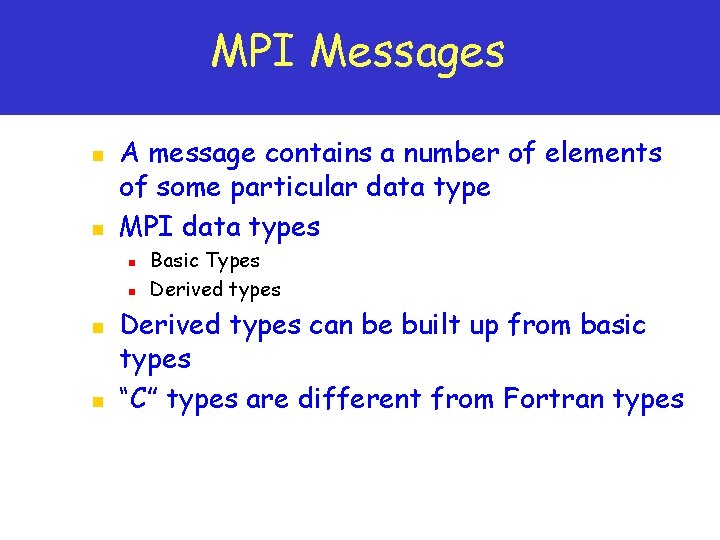

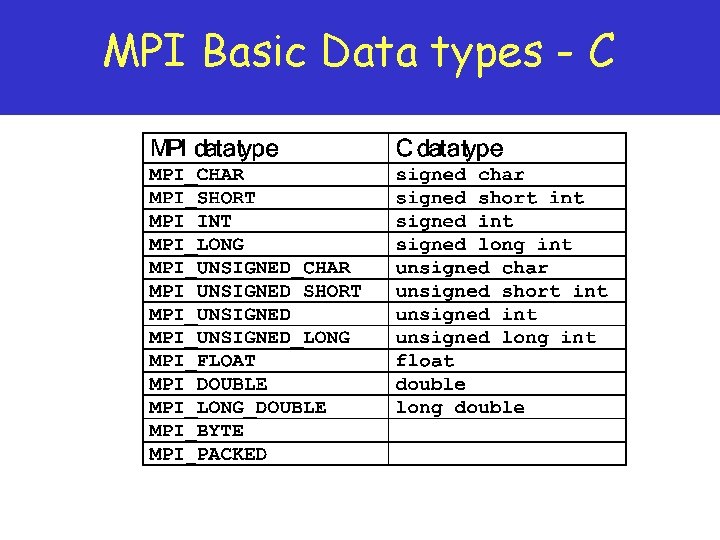

MPI Messages
- A message contains a number of elements of some particular data type.
- MPI data types:
  - Basic types
  - Derived types, which can be built up from basic types
- "C" types are different from Fortran types.

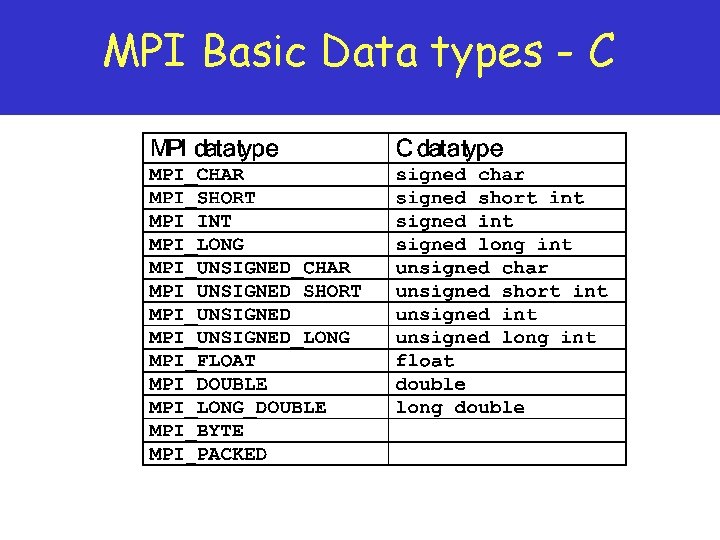

MPI Basic Data types - C

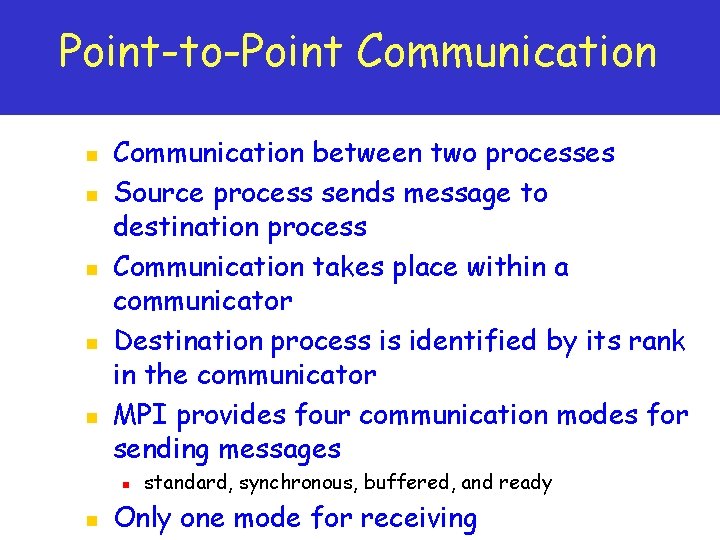

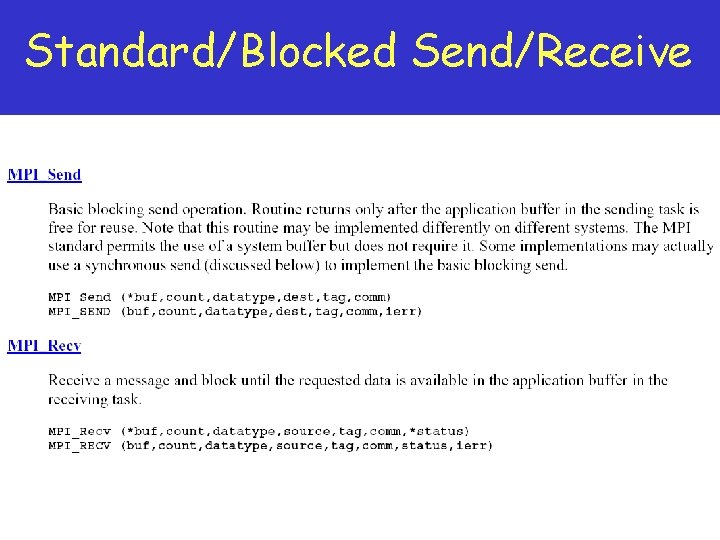

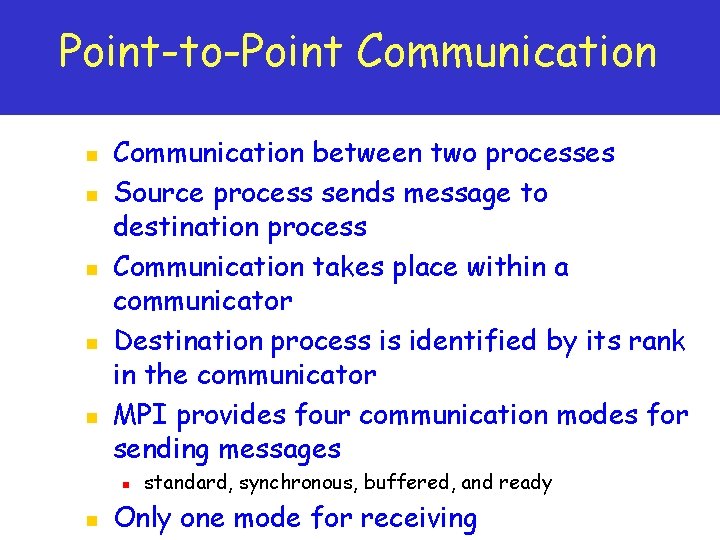

Point-to-Point Communication
- Communication between two processes.
- The source process sends a message to the destination process.
- Communication takes place within a communicator.
- The destination process is identified by its rank in the communicator.
- MPI provides four communication modes for sending messages:
  - standard, synchronous, buffered, and ready
- Only one mode for receiving.

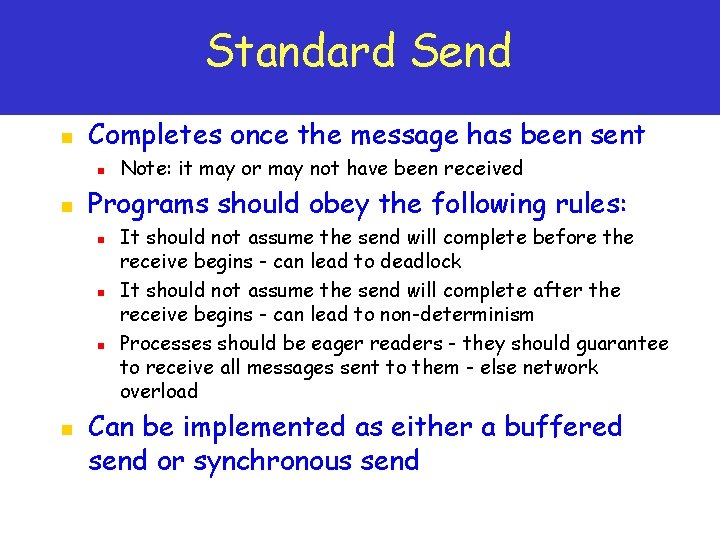

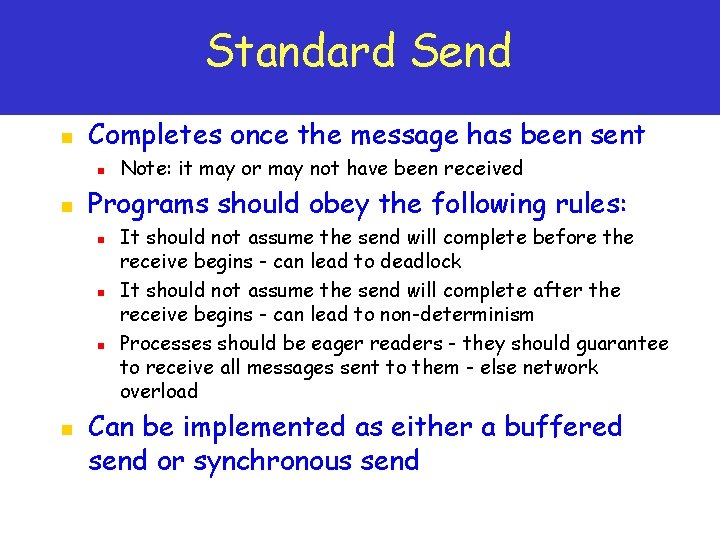

Standard Send
- Completes once the message has been sent.
  - Note: it may or may not have been received.
- Programs should obey the following rules:
  - They should not assume the send will complete before the receive begins: this can lead to deadlock.
  - They should not assume the send will complete after the receive begins: this can lead to non-determinism.
  - Processes should be eager readers: they should guarantee to receive all messages sent to them, otherwise the network can become overloaded.
- Can be implemented as either a buffered send or a synchronous send.

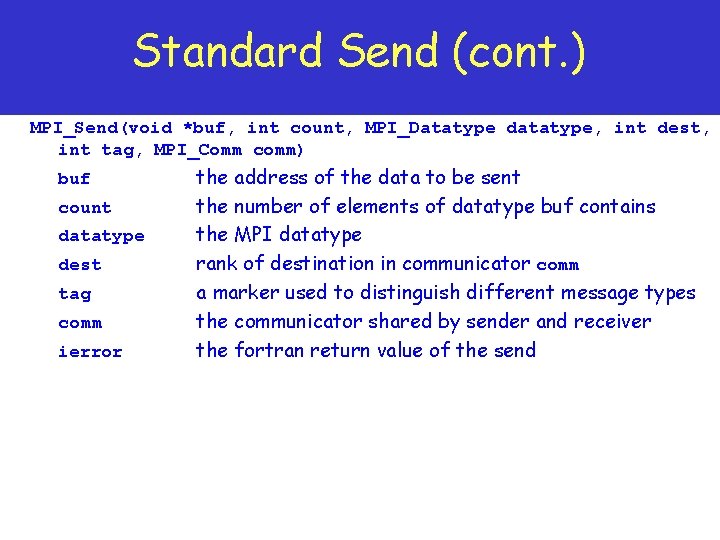

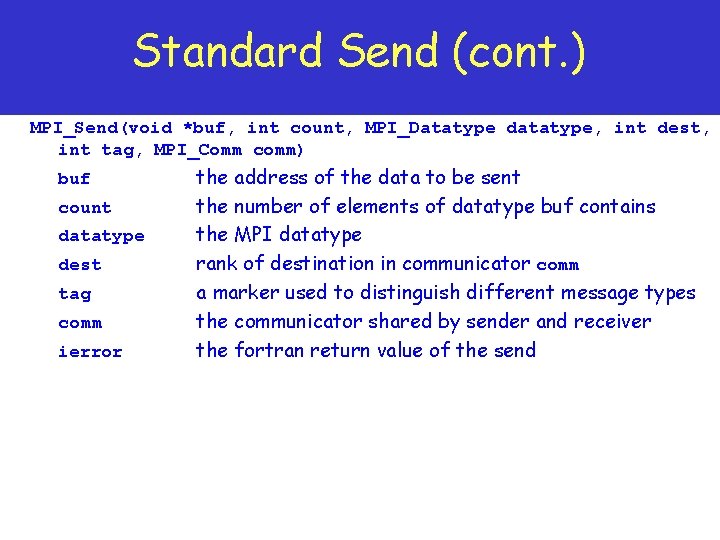

Standard Send (cont.)

int MPI_Send(void *buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)
- buf: the address of the data to be sent
- count: the number of elements of datatype that buf contains
- datatype: the MPI datatype
- dest: rank of the destination in communicator comm
- tag: a marker used to distinguish different message types
- comm: the communicator shared by sender and receiver
- ierror: the Fortran return value of the send (in C, the error code is the function's return value)

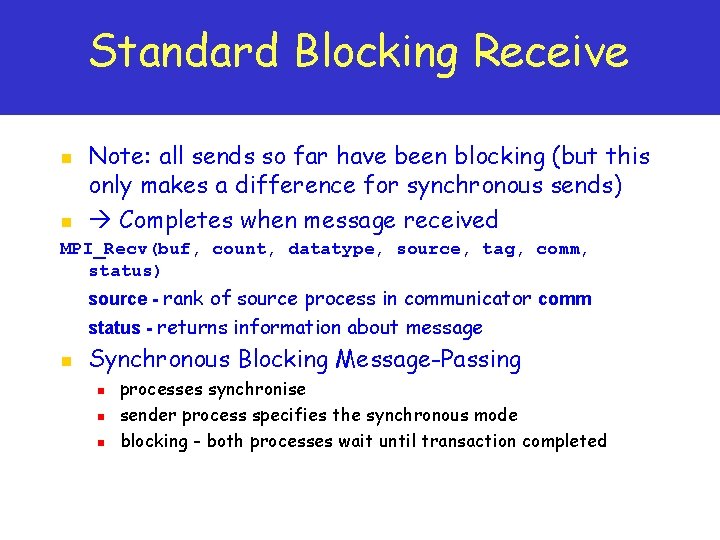

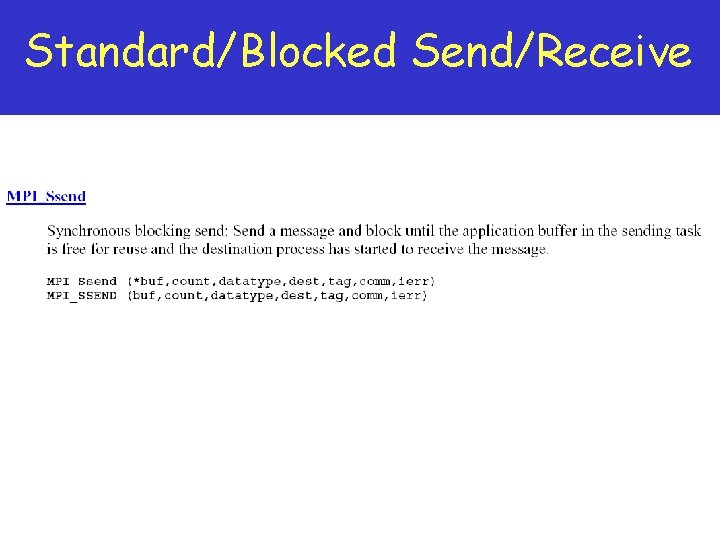

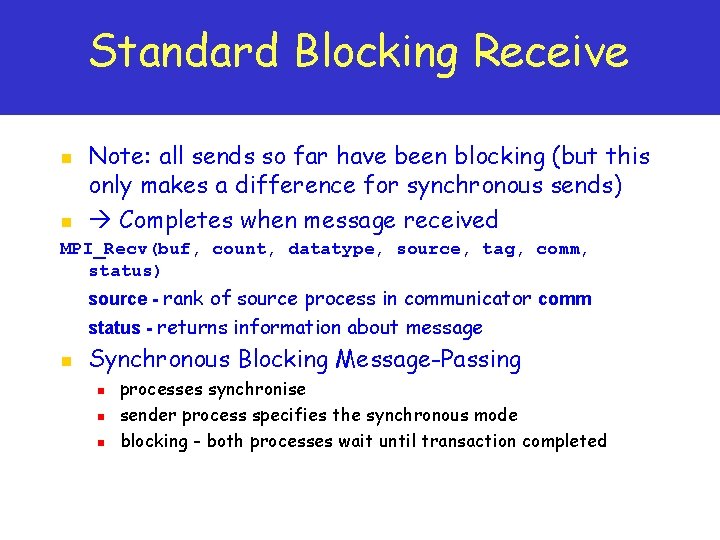

Standard Blocking Receive
- Note: all sends so far have been blocking (but this only makes a difference for synchronous sends).
- Completes when the message has been received.
  int MPI_Recv(void *buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status *status)
  - source: rank of the source process in communicator comm
  - status: returns information about the message
- Synchronous blocking message passing:
  - processes synchronise
  - the sender process specifies the synchronous mode
  - blocking: both processes wait until the transaction has completed

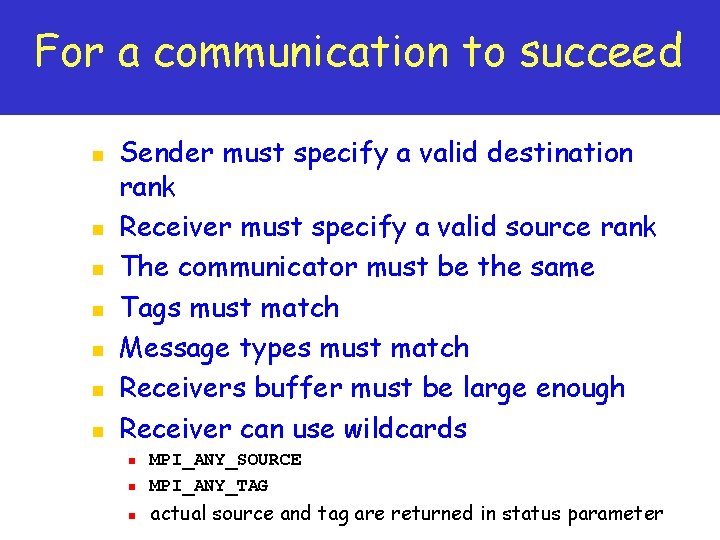

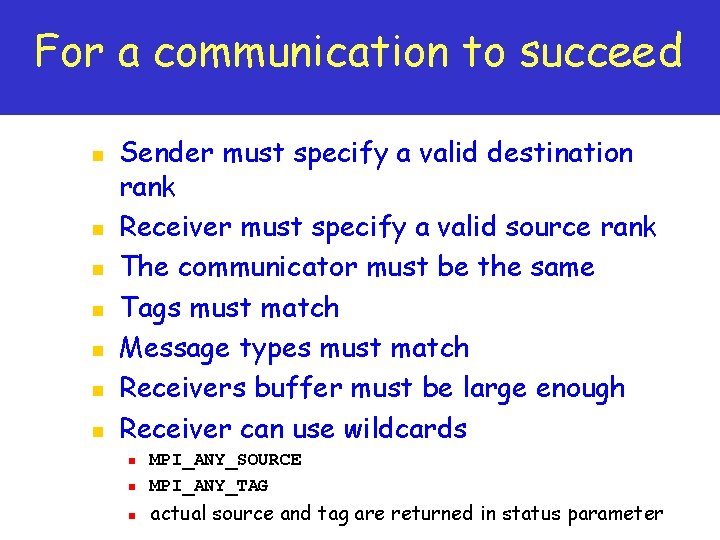

For a communication to succeed
- The sender must specify a valid destination rank.
- The receiver must specify a valid source rank.
- The communicator must be the same.
- Tags must match.
- Message types must match.
- The receiver's buffer must be large enough.
- The receiver can use wildcards:
  - MPI_ANY_SOURCE
  - MPI_ANY_TAG
  - the actual source and tag are returned in the status parameter

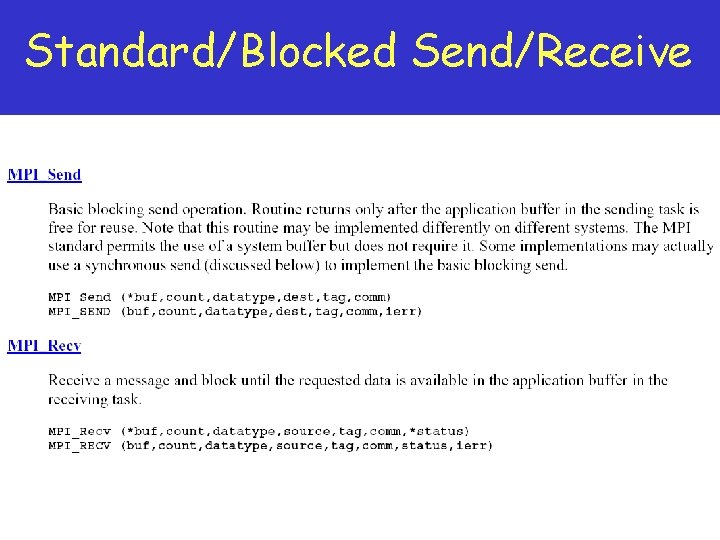

Standard/Blocked Send/Receive

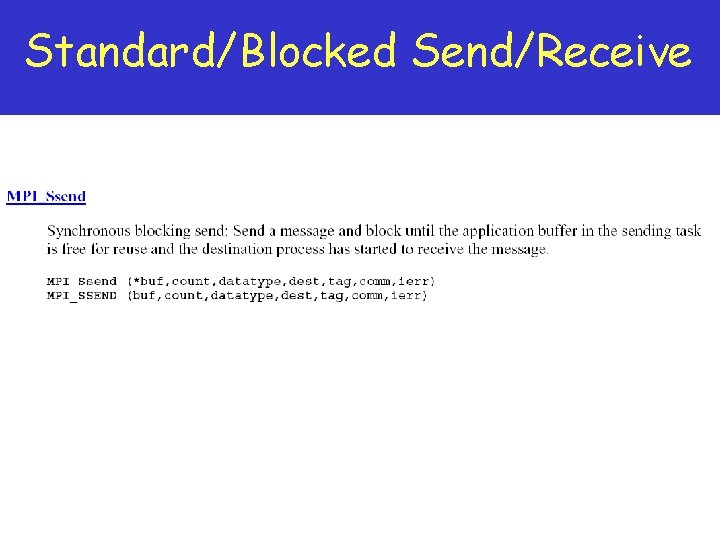

Standard/Blocked Send/Receive

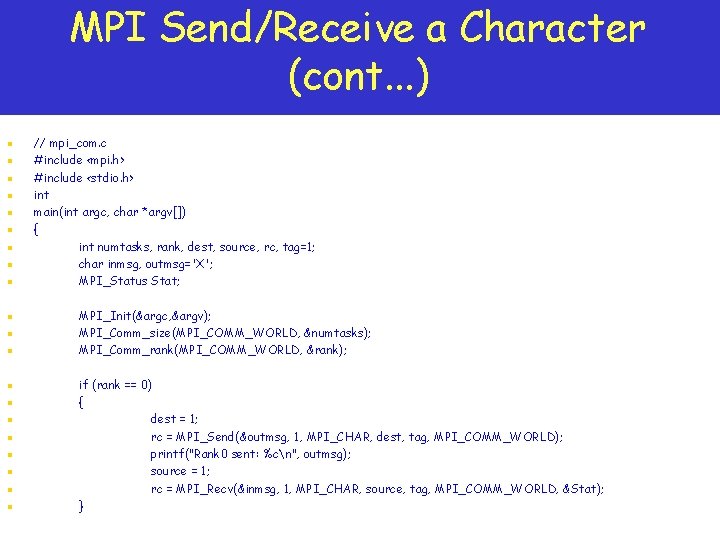

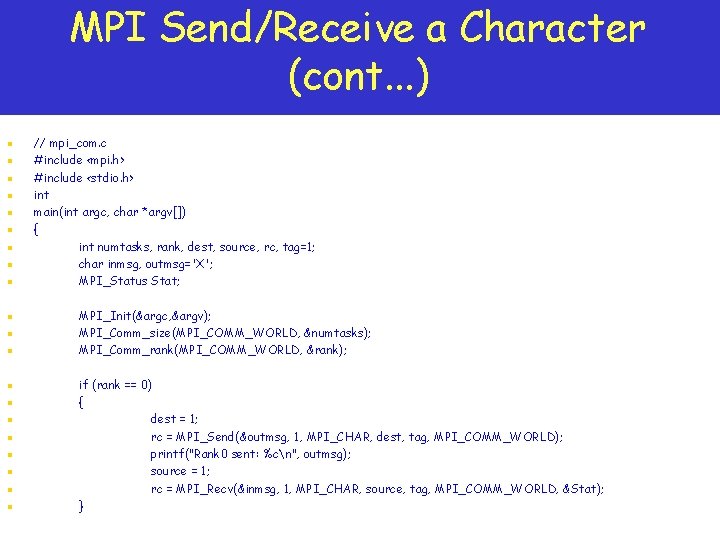

MPI Send/Receive a Character (cont. . . ) n n n n n // mpi_com. c #include <mpi. h> #include <stdio. h> int main(int argc, char *argv[]) { int numtasks, rank, dest, source, rc, tag=1; char inmsg, outmsg='X'; MPI_Status Stat; MPI_Init(&argc, &argv); MPI_Comm_size(MPI_COMM_WORLD, &numtasks); MPI_Comm_rank(MPI_COMM_WORLD, &rank); if (rank == 0) { dest = 1; rc = MPI_Send(&outmsg, 1, MPI_CHAR, dest, tag, MPI_COMM_WORLD); printf("Rank 0 sent: %cn", outmsg); source = 1; rc = MPI_Recv(&inmsg, 1, MPI_CHAR, source, tag, MPI_COMM_WORLD, &Stat); }

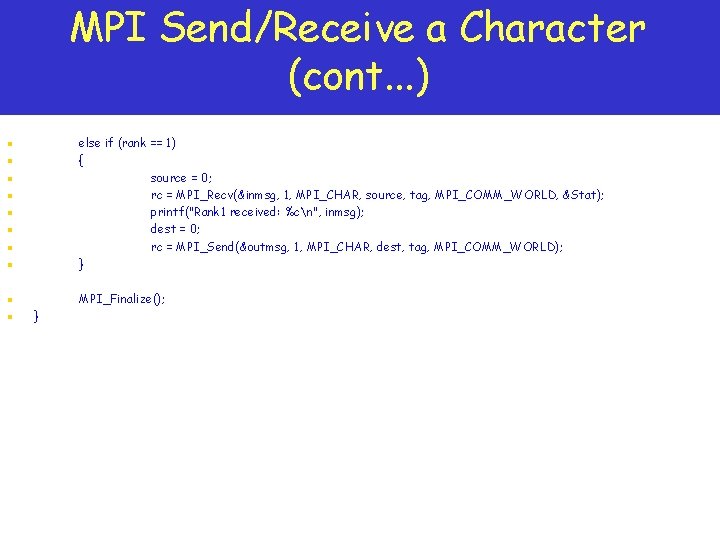

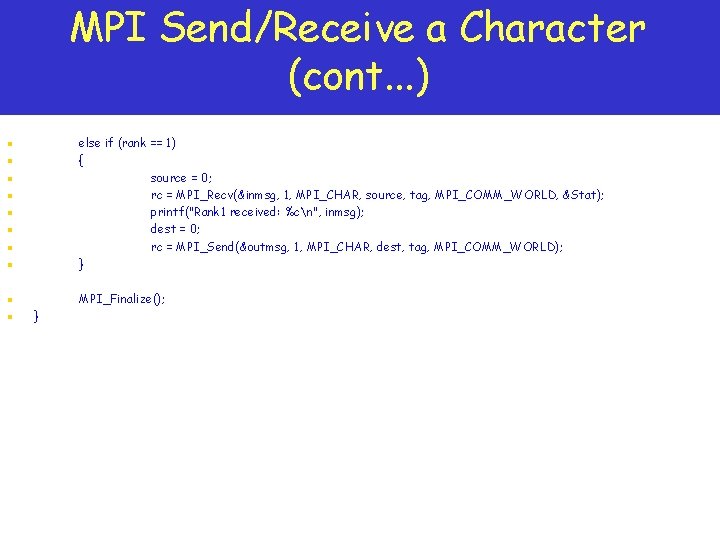

MPI Send/Receive a Character (cont. . . ) n else if (rank == 1) { source = 0; rc = MPI_Recv(&inmsg, 1, MPI_CHAR, source, tag, MPI_COMM_WORLD, &Stat); printf("Rank 1 received: %cn", inmsg); dest = 0; rc = MPI_Send(&outmsg, 1, MPI_CHAR, dest, tag, MPI_COMM_WORLD); } n MPI_Finalize(); n n n n }

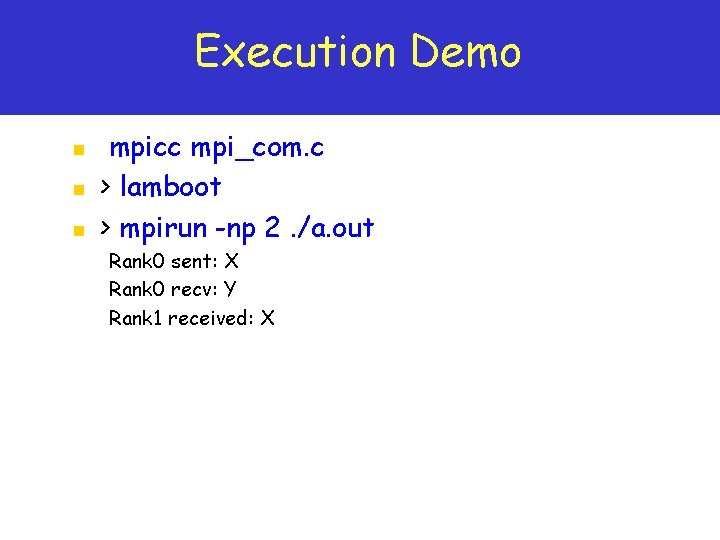

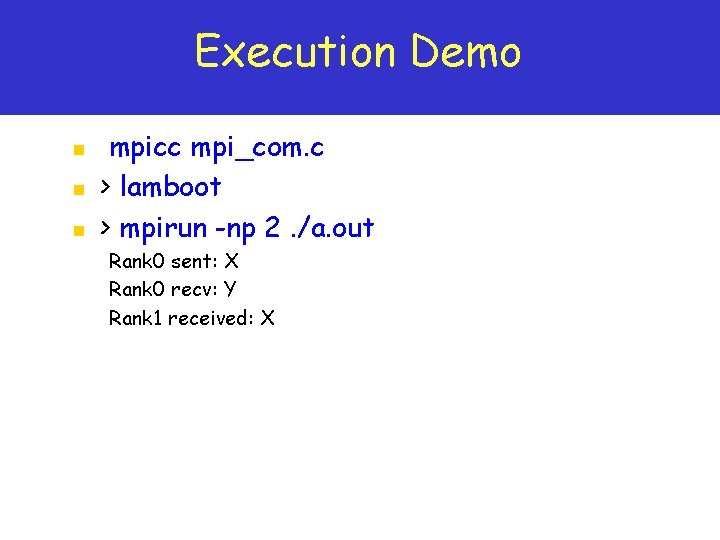

Execution Demo
  > mpicc mpi_com.c
  > lamboot
  > mpirun -np 2 ./a.out
  Rank 0 sent: X
  Rank 0 recv: Y
  Rank 1 received: X

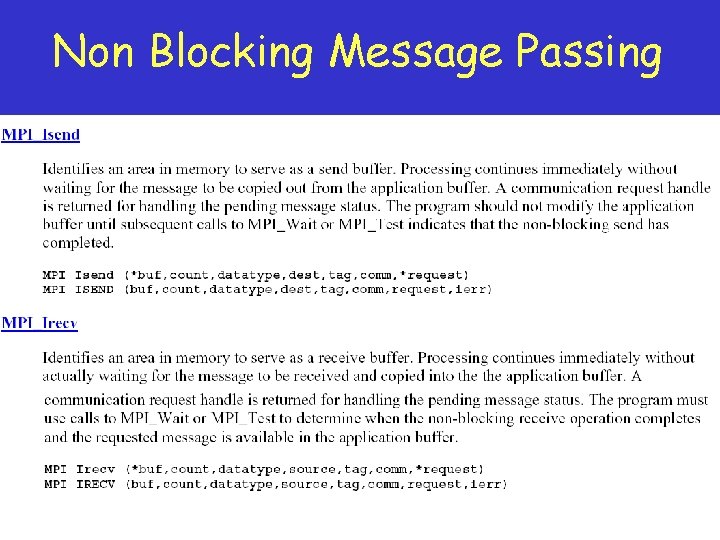

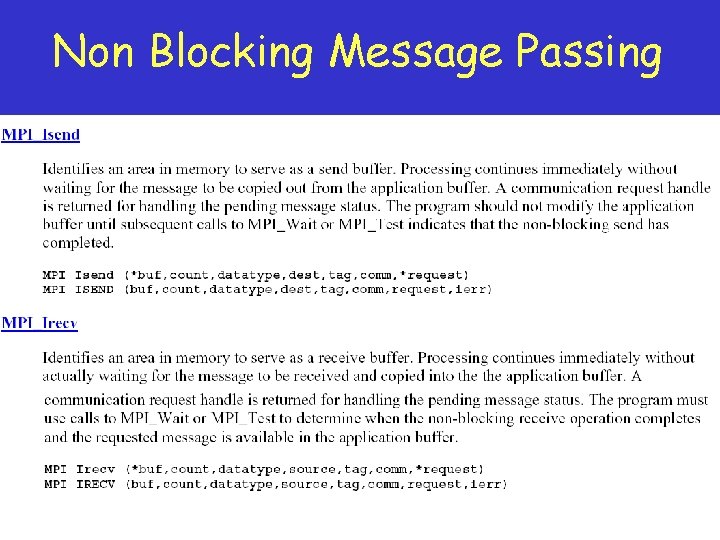

Non Blocking Message Passing

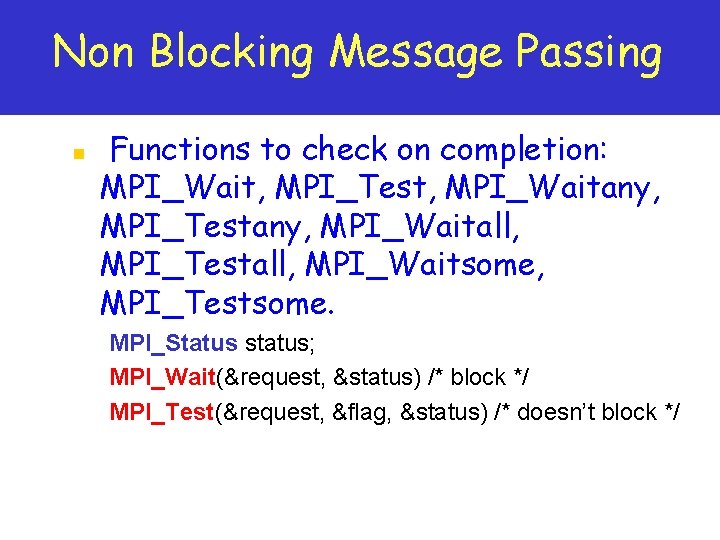

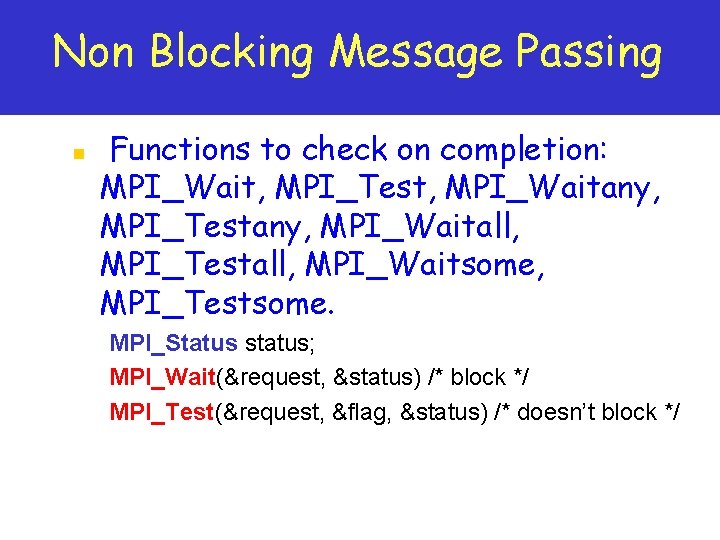

Non Blocking Message Passing
- Functions to check on completion: MPI_Wait, MPI_Test, MPI_Waitany, MPI_Testany, MPI_Waitall, MPI_Testall, MPI_Waitsome, MPI_Testsome.

```c
MPI_Status status;
MPI_Wait(&request, &status);          /* blocks */
MPI_Test(&request, &flag, &status);   /* doesn't block */
```

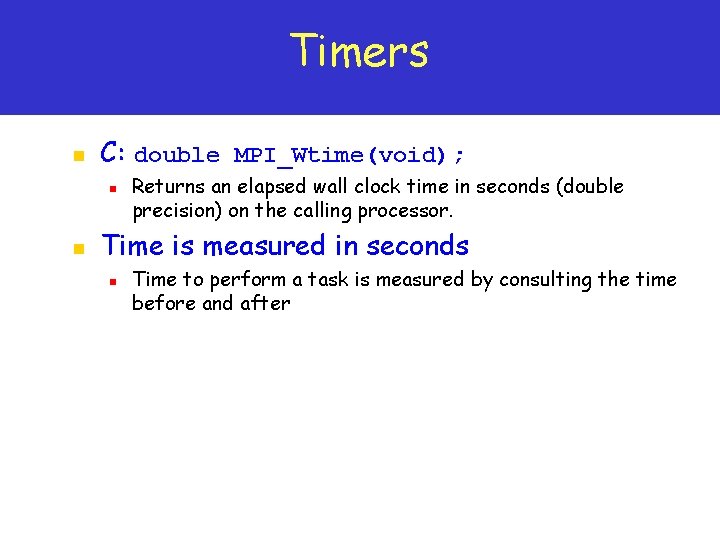

Timers
- C: double MPI_Wtime(void);
- Returns an elapsed wall-clock time in seconds (double precision) on the calling processor.
- The time to perform a task is measured by consulting the timer before and after it.