Program Evaluation and Quality Assurance HPR 322 Chapter

- Slides: 21

Program Evaluation and Quality Assurance HPR 322 Chapter 13 The process of evaluation

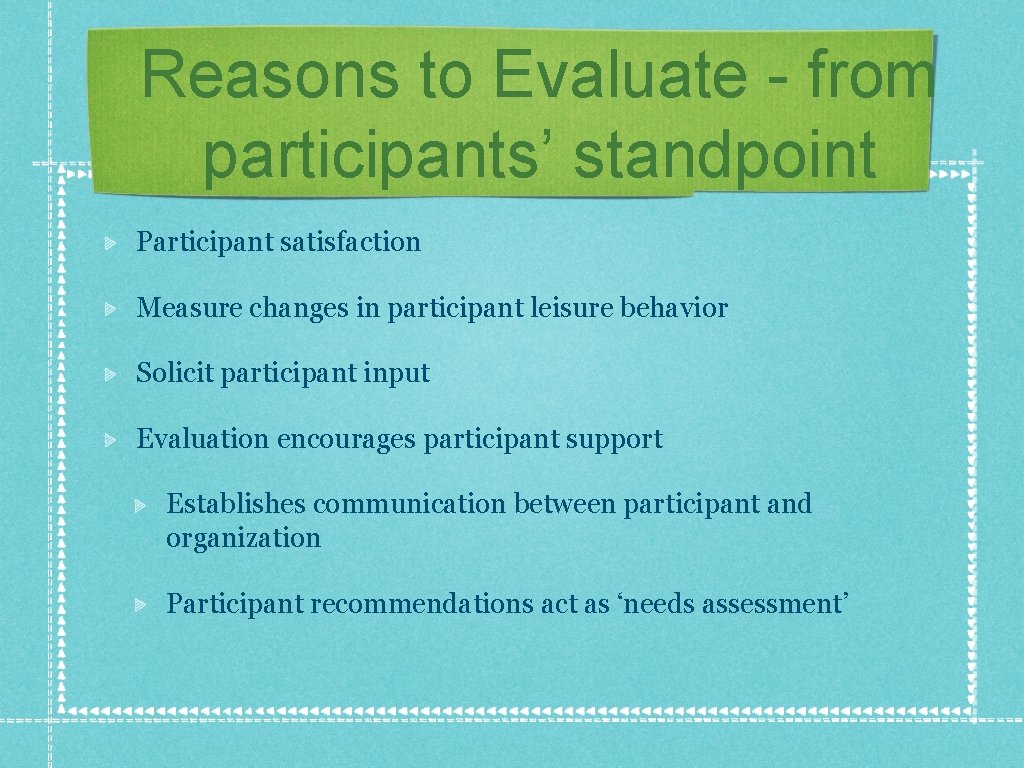

Reasons to evaluate - from participants’ standpoint

- Participant satisfaction
- Measure changes in participant leisure behavior
- Solicit participant input
- Evaluation encourages participant support
- Establishes communication between participant and organization
- Participant recommendations act as a ‘needs assessment’

Reasons to evaluate - from programmer’s standpoint

- Promotes relationship between recreation leader and participants
- Enables programmers to develop sensitivity to participants
- Enables programmers to determine program design effectiveness
- Reveals the need for program improvements and enhancements

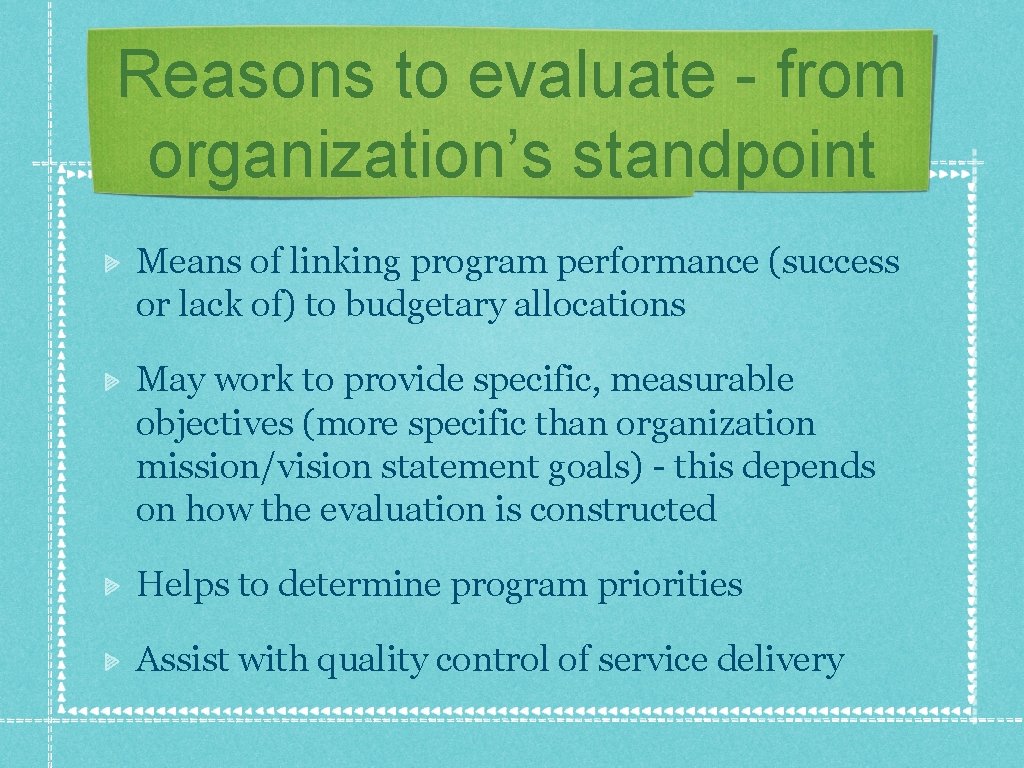

Reasons to evaluate - from organization’s standpoint

- Means of linking program performance (success or lack of it) to budgetary allocations
- May provide specific, measurable objectives (more specific than the goals in the organization's mission/vision statement) - this depends on how the evaluation is constructed
- Helps to determine program priorities
- Assists with quality control of service delivery

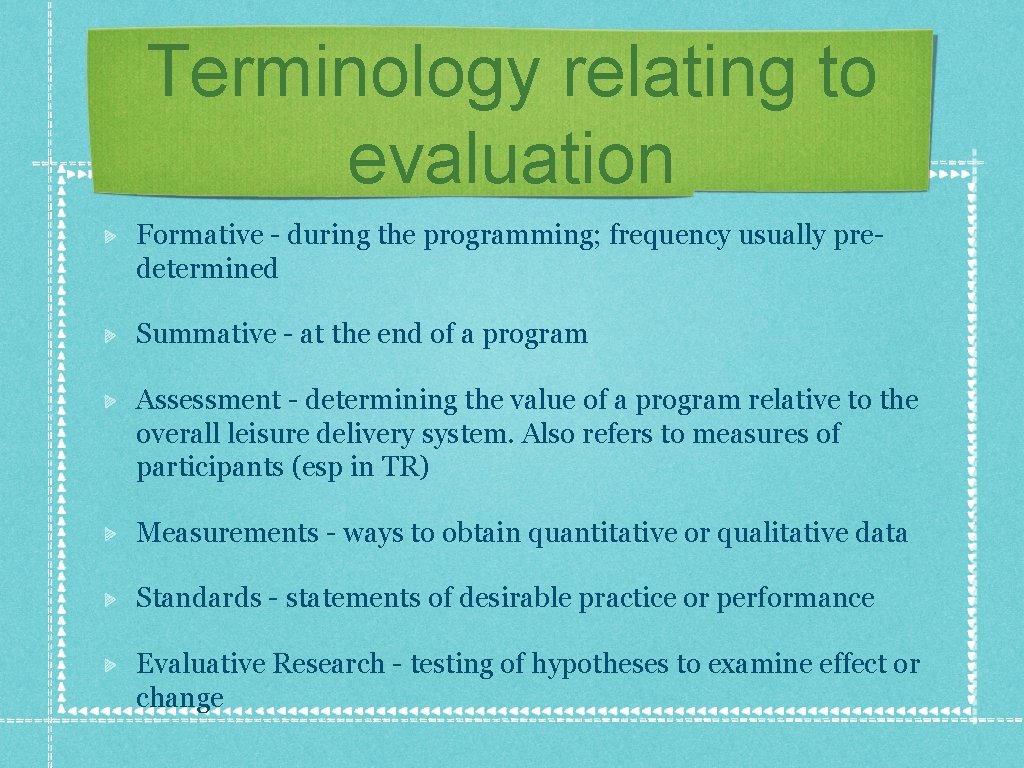

Terminology relating to evaluation

- Formative - during the programming; frequency usually predetermined
- Summative - at the end of a program
- Assessment - determining the value of a program relative to the overall leisure delivery system. Also refers to measures of participants (especially in TR)
- Measurements - ways to obtain quantitative or qualitative data
- Standards - statements of desirable practice or performance
- Evaluative research - testing of hypotheses to examine effect or change

Types of evaluative questions

- Questions relating to goals (what goals were chosen; what alternative goals might have been chosen)
- Questions relating to strategies (similar questions)
- Questions relating to program elements (design, implementation)
- Questions relating to results (results, long-term effects, etc.)

The 5 P’s of evaluation

- Participants
- Personnel
- Place
- Policies/administration
- Program

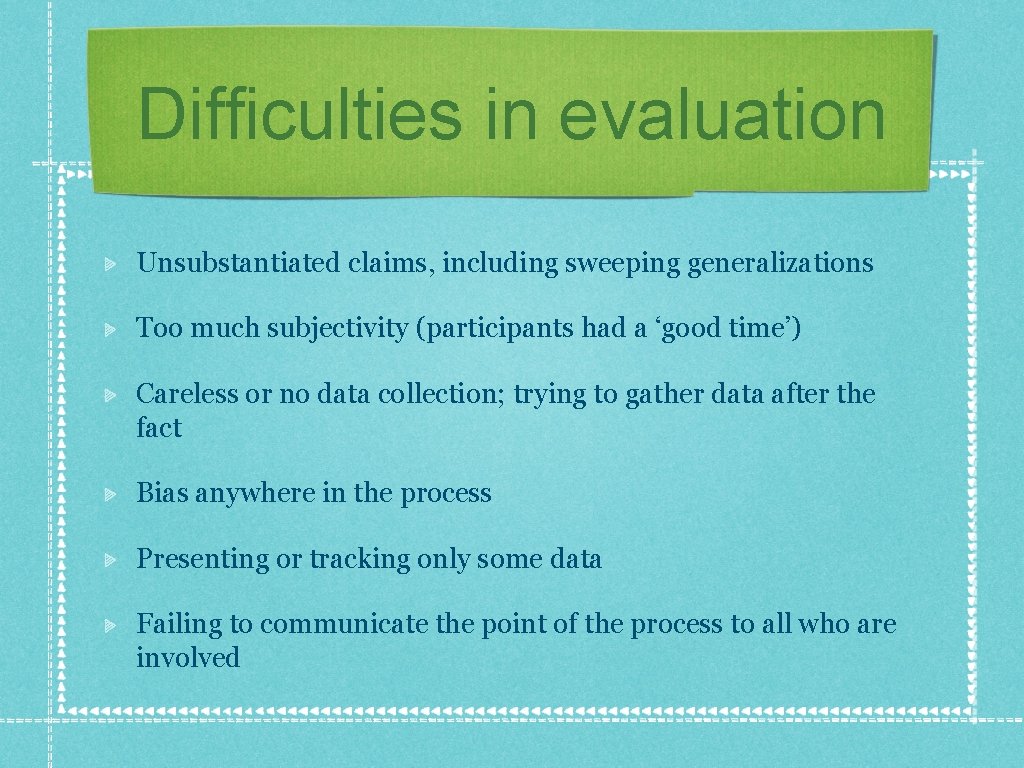

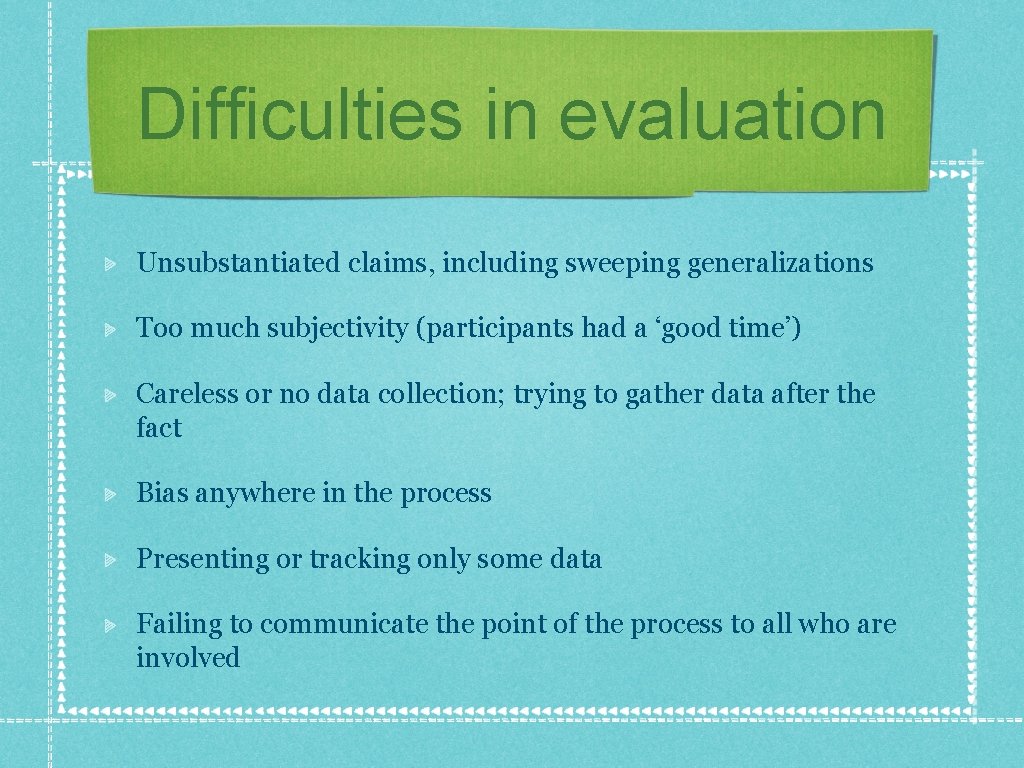

Difficulties in evaluation

- Unsubstantiated claims, including sweeping generalizations
- Too much subjectivity (participants had a ‘good time’)
- Careless or no data collection; trying to gather data after the fact
- Bias anywhere in the process
- Presenting or tracking only some data
- Failing to communicate the point of the process to all who are involved

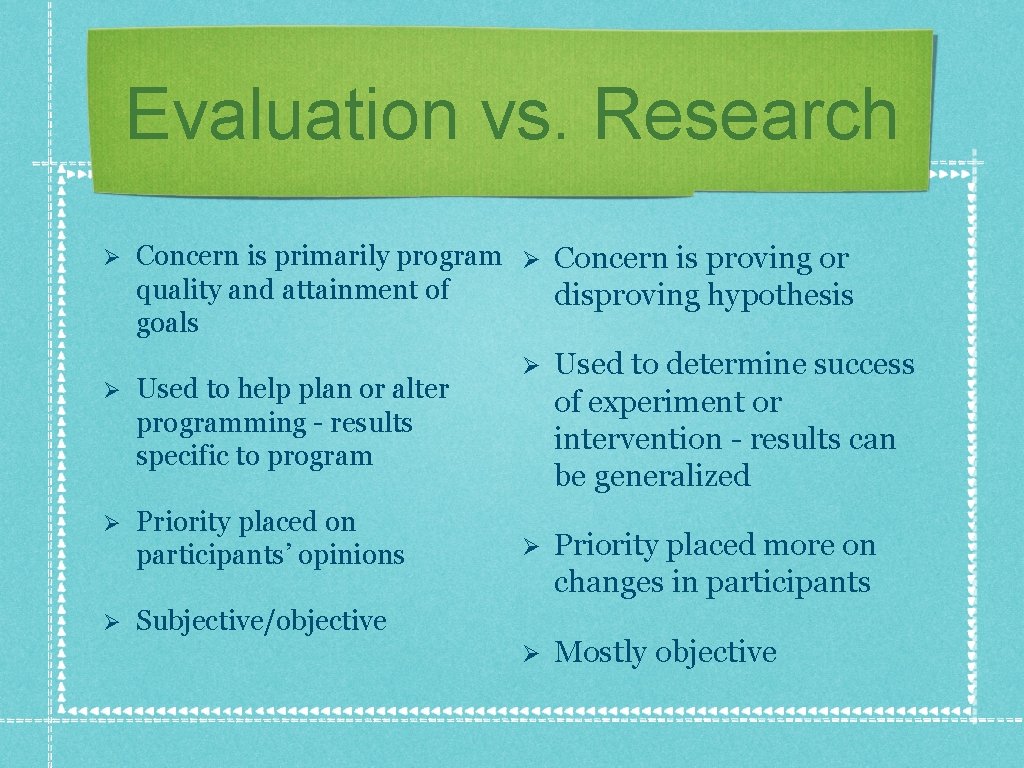

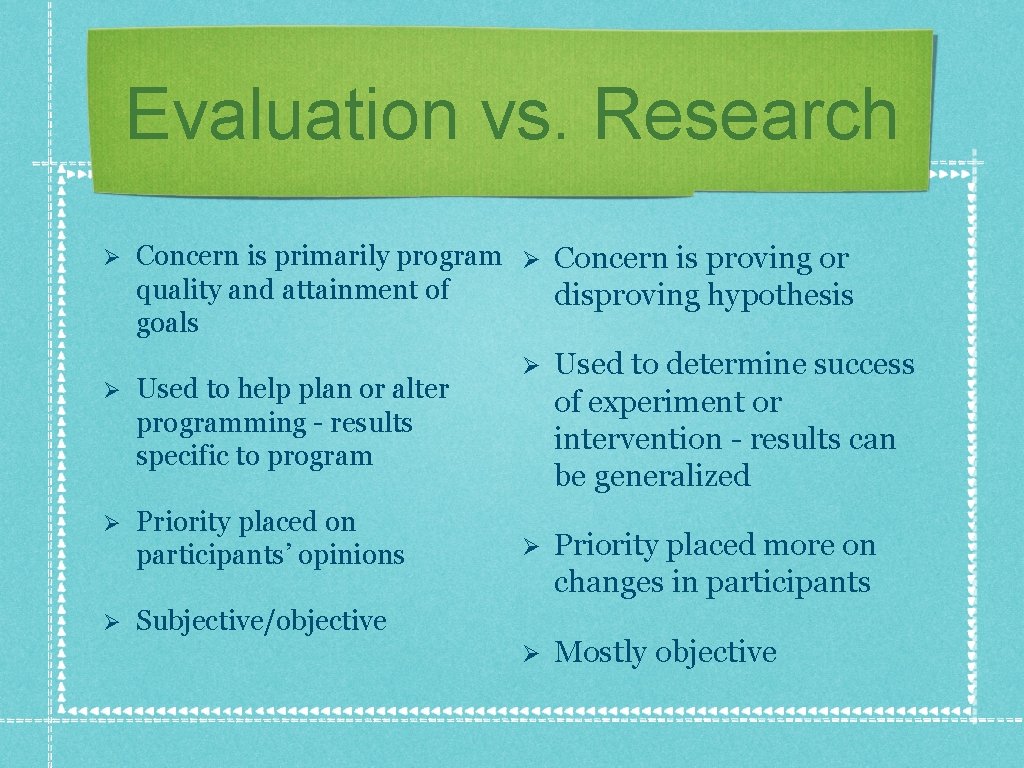

Evaluation vs. Research

| Evaluation | Research |
| --- | --- |
| Concern is primarily program quality and attainment of goals | Concern is proving or disproving hypothesis |
| Used to help plan or alter programming - results specific to program | Used to determine success of experiment or intervention - results can be generalized |
| Priority placed on participants’ opinions | Priority placed more on changes in participants |
| Subjective/objective | Mostly objective |

Quality assurance/quality control

- Lots of concern about ‘quality’
- Applies to programming, programmers, experience, facilities, etc.
- What makes a leisure experience ‘high quality’? What makes one ‘low quality’?
- Quality is a subjective term; objective measures must be devised

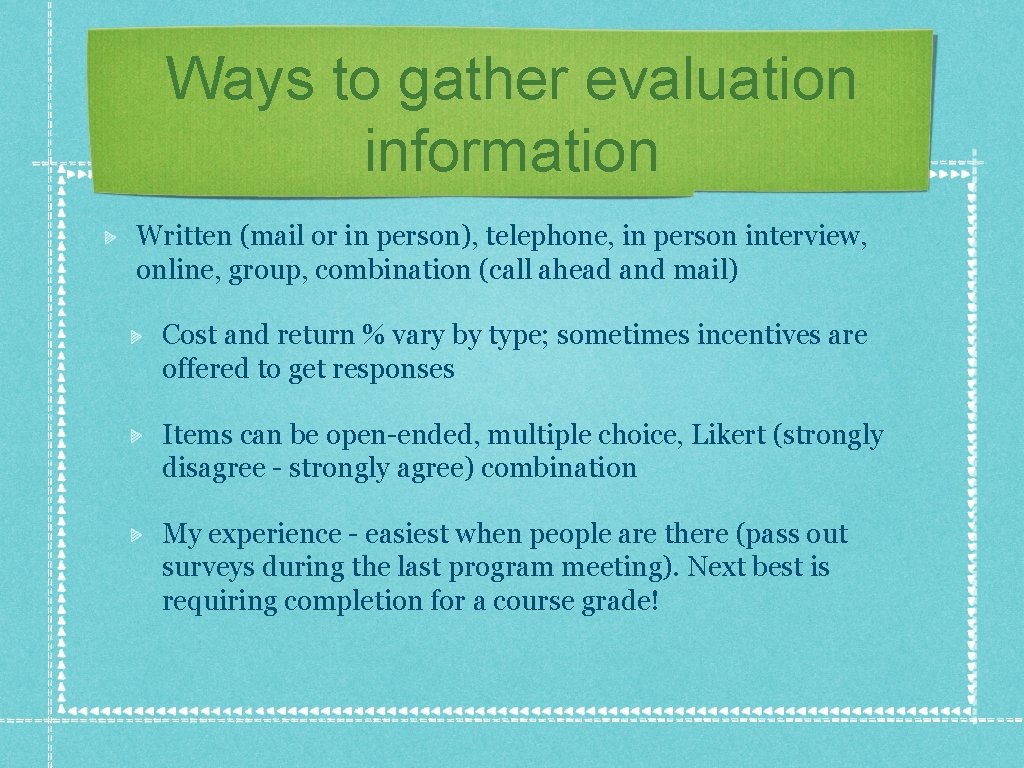

Ways to gather evaluation information

- Written (mail or in person), telephone, in-person interview, online, group, or a combination (call ahead and mail)
- Cost and return % vary by type; sometimes incentives are offered to get responses
- Items can be open-ended, multiple choice, Likert (strongly disagree - strongly agree), or a combination
- My experience - easiest when people are there (pass out surveys during the last program meeting). Next best is requiring completion for a course grade!
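Because cost and return % vary by collection method, it can help to compare them side by side before choosing one. The snippet below is a minimal sketch, not a prescribed method: the function names and every count and cost in it are made-up values for illustration only.

```python
def return_rate(distributed: int, returned: int) -> float:
    """Percentage of distributed evaluations that were completed and returned."""
    if distributed <= 0:
        raise ValueError("distributed must be a positive number")
    return 100.0 * returned / distributed


def cost_per_return(total_cost: float, returned: int) -> float:
    """Total cost of a method divided by the number of forms that came back."""
    if returned <= 0:
        raise ValueError("returned must be a positive number")
    return total_cost / returned


# Hypothetical figures: (forms distributed, forms returned, total cost in dollars)
methods = {
    "in person, last meeting": (25, 22, 5.00),
    "mail": (25, 8, 30.00),
    "online": (25, 11, 0.00),
}

for name, (distributed, returned, cost) in methods.items():
    rate = return_rate(distributed, returned)
    per_form = cost_per_return(cost, returned)
    print(f"{name}: {rate:.0f}% returned, ${per_form:.2f} per returned form")
```

A comparison like this only describes how many forms came back; it says nothing about whether the people who responded are typical of all participants.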

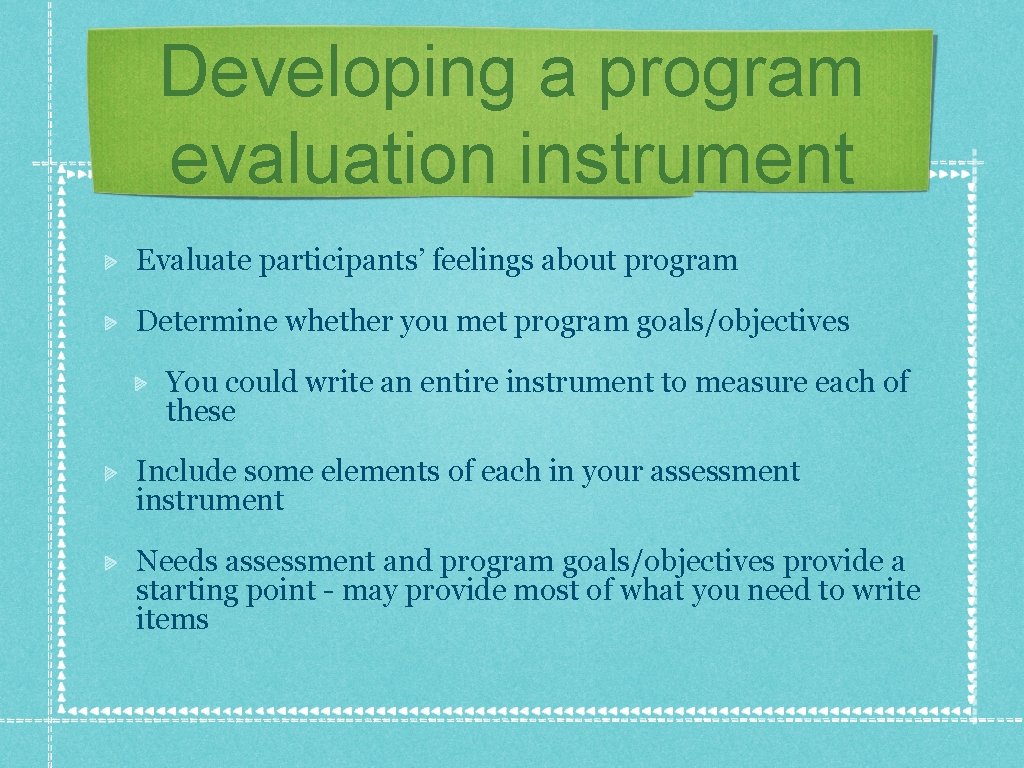

Developing a program evaluation instrument

- Evaluate participants’ feelings about program
- Determine whether you met program goals/objectives
- You could write an entire instrument to measure each of these
- Include some elements of each in your assessment instrument
- Needs assessment and program goals/objectives provide a starting point - may provide most of what you need to write items

What types of items are most effective?

- Always provide some place for comments; otherwise it looks like you don’t care
- Open-ended items take longer to complete and may result in incomplete responses
- Choices are good (multiple choice, Likert) when possible. (Before you use Likert scales, think about what you will do with your results; you may not need to know ‘degree’ of feeling)

A little more on ‘Likert’

- Most are 5-point - from strongly disagree to strongly agree (with ‘neutral’ in the middle)
- Developed by Rensis Likert for his thesis in the 1930s
- Agreement/disagreement is important, as is degree of agreement/disagreement (how important is the item to you or how strongly do you feel about it). This level of information may be important in research but not to evaluate how well your program was received
- A 5-point - poor to excellent - scale may be better for this type of program assessment
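One way to think about "what you will do with your results" on a 5-point poor-to-excellent item is to tally the responses before you write the form. The snippet below is a minimal sketch under assumptions: the slide names only the endpoints of the scale, so the three middle labels, the `summarize` function, and the sample responses are all hypothetical.

```python
from collections import Counter
from statistics import mean

# Assumed labels; only "poor" and "excellent" come from the slide.
SCALE = ["poor", "fair", "good", "very good", "excellent"]


def summarize(responses):
    """Count each rating and compute simple summaries for one item."""
    unknown = set(responses) - set(SCALE)
    if unknown:
        raise ValueError(f"unexpected ratings: {sorted(unknown)}")
    if not responses:
        raise ValueError("no responses to summarize")
    counts = Counter(responses)
    # Score ratings 1 (poor) through 5 (excellent).
    scores = [SCALE.index(r) + 1 for r in responses]
    good_or_better = sum(1 for s in scores if s >= 3)
    return {
        "counts": {label: counts.get(label, 0) for label in SCALE},
        "mean_score": mean(scores),
        "pct_good_or_better": 100 * good_or_better / len(scores),
    }


# Made-up responses to a single item such as "Rate the instruction".
sample = ["good", "excellent", "excellent", "fair", "very good"]
result = summarize(sample)
print(result["counts"])
print(result["mean_score"], result["pct_good_or_better"])
```

The counts and the share rating the program "good" or better are usually the easiest figures to report; the mean treats the labels as evenly spaced numbers, which is itself an assumption.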

How should your evaluation look?

- Overly long evaluations may be intimidating
- Evaluations that are too short may not give you enough information
- While consistency is good, forms probably should evolve over time
- Be prepared to use (or at least respond to) information you receive

Useful information

- Like needs assessment - name, address, age, etc. You should be able to more easily gather personal information from participants
- Identify program that is being assessed. If multiple times/sections are offered, identify which
- Rating/ranking/opinion of program, instructor, equipment, staff, facility, etc.
- Recommendations/suggestions about above
- Typical use patterns (are opinions of regular users more important?)

More useful information

- Other areas of interest or possible participation - existing or recommendations
- Interests/participation of other family members
- “Would you like to be contacted regarding this” or “May we contact you regarding this”
- Add to mail/email list
- Anything else that might help you with future programming
- Information for grant/fund providers

Back to my example

- This is a new program, so everyone is a first-time participant (no need to ask that). I could ask if individuals have done similar programs
- I have some funding, so I need to be certain I gather information for the funders (they should have told me what they wanted to know when they awarded the funding)
- I know that I would like to continue, so I can ask about possible future participation
- I needed financial help to keep from operating at a loss, but I may not receive it next year. I can ask if participants would be willing to pay more, or I can phrase a question about value for their money

More about my water exercise program

- I probably won’t ask a lot about the facility - I should be gathering that data from pool attendees. However, I may want to get input from people who had not attended the pool regularly before my class
- Can I get this completed during the last meeting? Depends on usual behavior of participants (do they shower and change, hang around, or just get out of the pool and leave?). Observing their behavior during regular sessions should give me some idea of how/when to administer the evaluation

Program Evaluation Assignment

- Participant information - name, address, etc.
- 10 items evaluating program - facility, instruction, type/format, etc. Can re-use needs assessment items; rephrase if necessary. (Not ‘what time is convenient,’ but ‘was the scheduled time for this program convenient’)
- At least one item relating to one of the objectives you outlined
- Tell me your plan for getting the evaluations completed (during classes, mail, phone and mail, go door to door, email, etc.)

Assignment

- One copy of blank evaluation form
- Directions for completion, if necessary. (If you are mailing, you would put directions about how/where to mail back; same for email. You can make up addresses, email addresses, phone #s)
- Indication of your plan for getting as many evaluations back as possible
- Due November 17th with complete program