
Production Grids: General Overview

Fabrizio Gagliardi
Director, Technical Computing, Microsoft EMEA

Research areas shown on the title graphic: Computer and Information Sciences; Earth Sciences; Life Sciences; Multidisciplinary Research; Math and Physical Science; Social Sciences; New Materials, Technologies and Processes

Talk Outline
• A few personal remarks
• Need for e-Infrastructures and Grids
• Background history
• Outlook to the future
• MS contribution

A few personal remarks
• GGF1 and EDG
• EGEE and GGF experience
• Acknowledgements
• Move to MS

Need for e-Infrastructures
• Science, industry and commerce are more and more digital, process vast amounts of data and need massive computing power
• We live in a “flat” world:
– Science is more and more an international collaboration and often requires a multidisciplinary approach
• Need to use technology for the good cause
– Fight the digital divide
• Industrial uptake has become essential

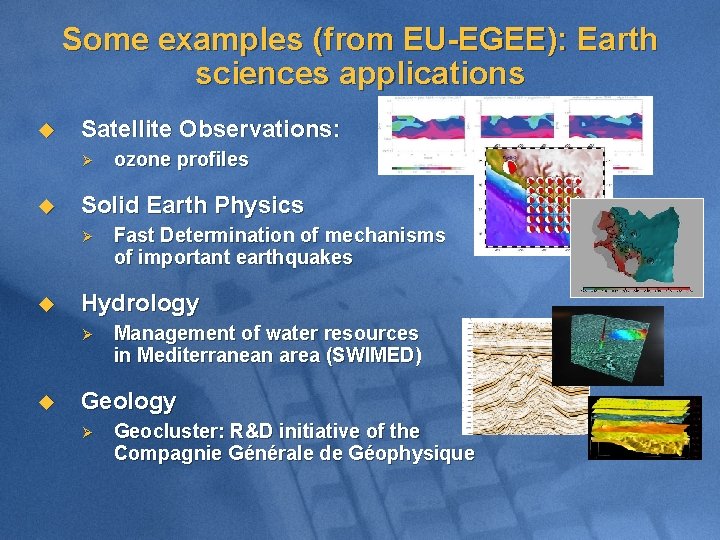

Some examples (from EU-EGEE): Earth sciences applications
• Satellite observations:
– Ozone profiles
• Solid Earth physics
– Fast determination of mechanisms of important earthquakes
• Hydrology
– Management of water resources in the Mediterranean area (SWIMED)
• Geology
– Geocluster: R&D initiative of the Compagnie Générale de Géophysique

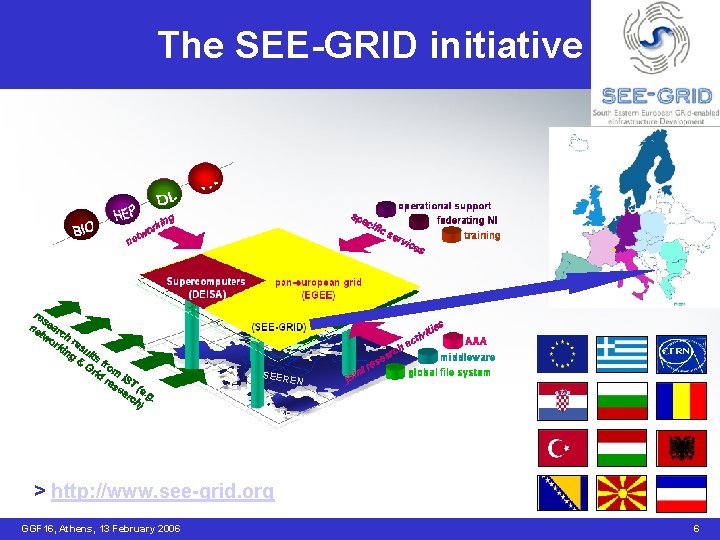

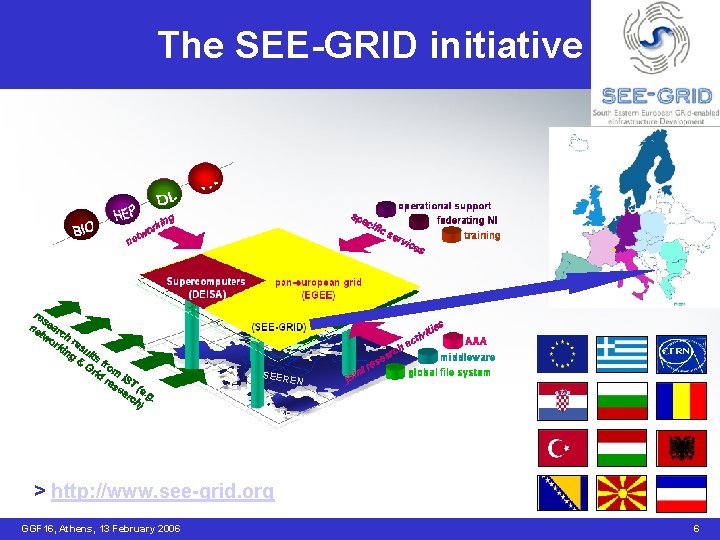

The SEE-GRID initiative
SEEREN
http://www.see-grid.org
GGF16, Athens, 13 February 2006

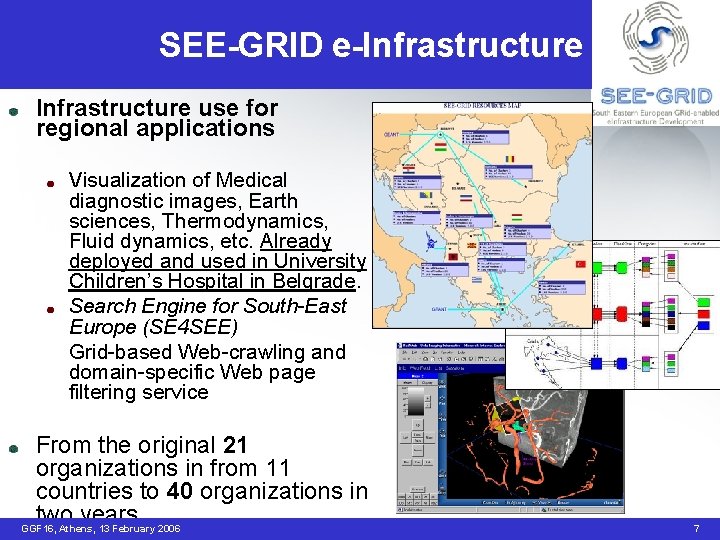

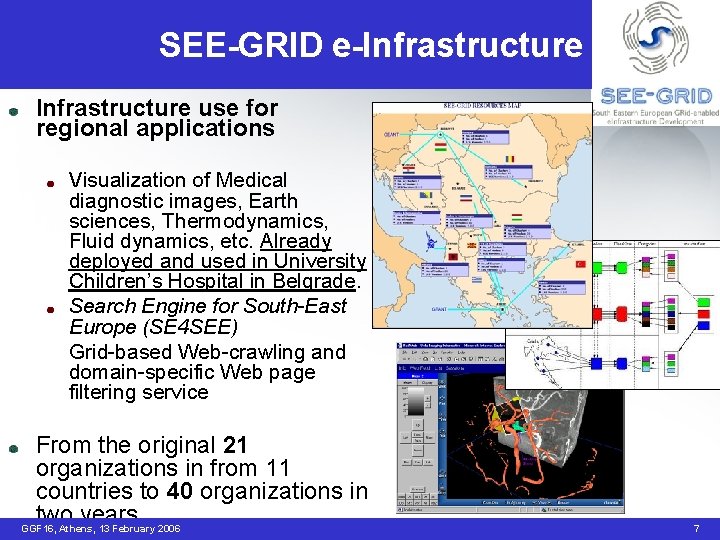

SEE-GRID e-Infrastructure use for regional applications
• Visualization of medical diagnostic images, Earth sciences, thermodynamics, fluid dynamics, etc. Already deployed and used in the University Children’s Hospital in Belgrade.
• Search Engine for South-East Europe (SE4SEE): Grid-based Web-crawling and domain-specific Web page filtering service
• Growth from the original 21 organizations in 11 countries to 40 organizations in two years

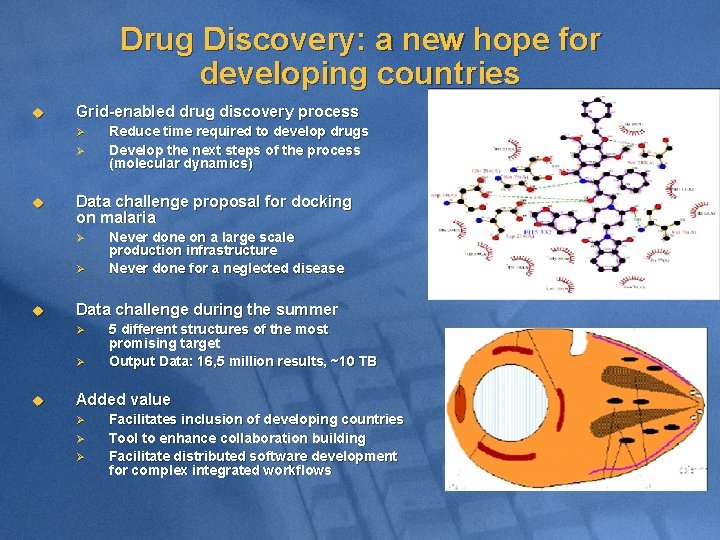

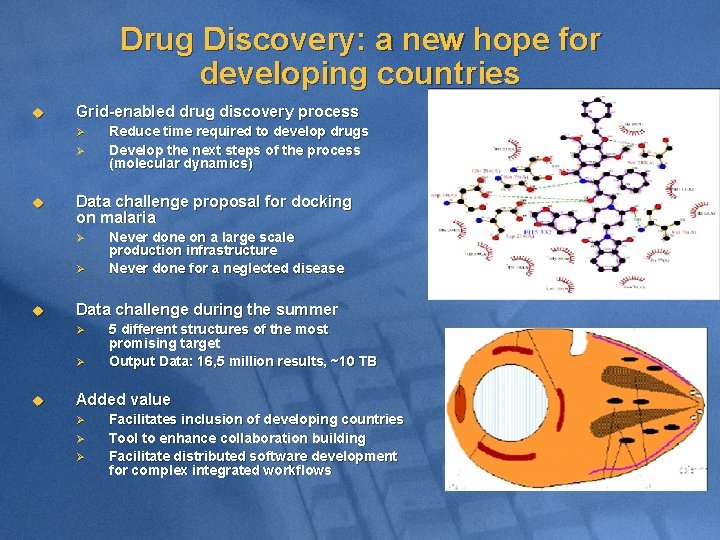

Drug Discovery: a new hope for developing countries
• Grid-enabled drug discovery process
– Reduce time required to develop drugs
– Develop the next steps of the process (molecular dynamics)
• Data challenge proposal for docking on malaria
– Never done on a large scale production infrastructure
– Never done for a neglected disease
• Data challenge during the summer
– 5 different structures of the most promising target
– Output data: 16.5 million results, ~10 TB
• Added value
– Facilitates inclusion of developing countries
– Tool to enhance collaboration building
– Facilitates distributed software development for complex integrated workflows

The underlying infrastructure

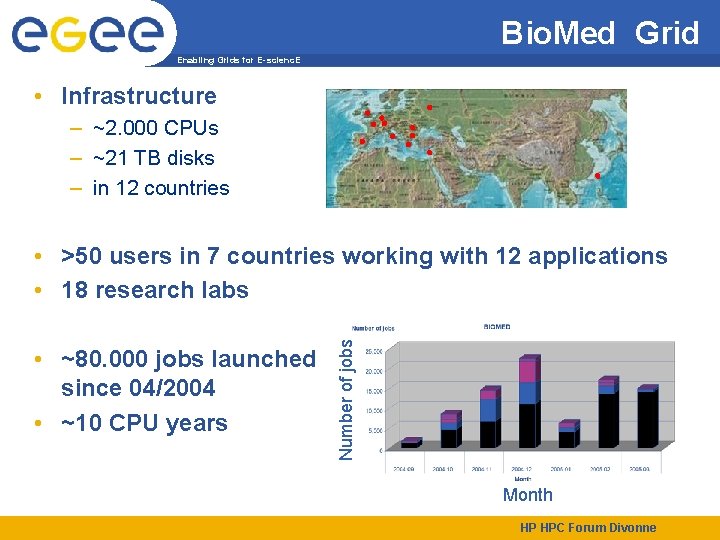

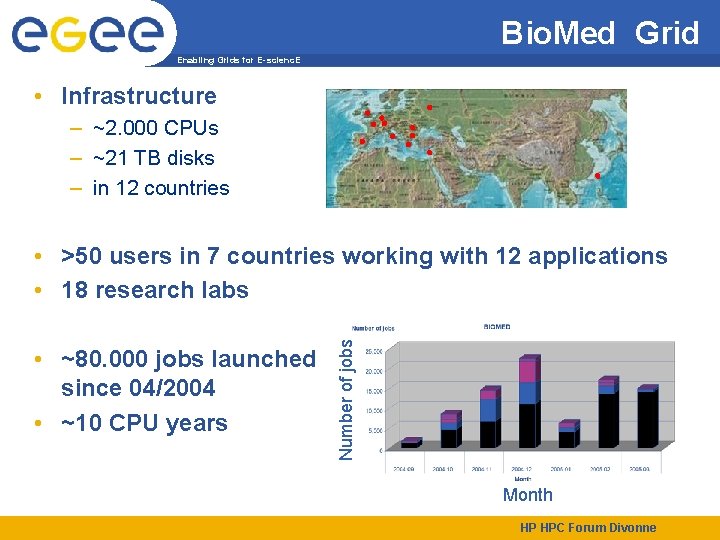

BioMed Grid
Enabling Grids for E-sciencE
• Infrastructure
– ~2,000 CPUs
– ~21 TB disks
– in 12 countries
• ~80,000 jobs launched since 04/2004
• ~10 CPU years
• >50 users in 7 countries working with 12 applications
• 18 research labs
Chart: number of jobs per month
HP HPC Forum Divonne

The EGEE Grid
www.eu-egee.org

The EU research network

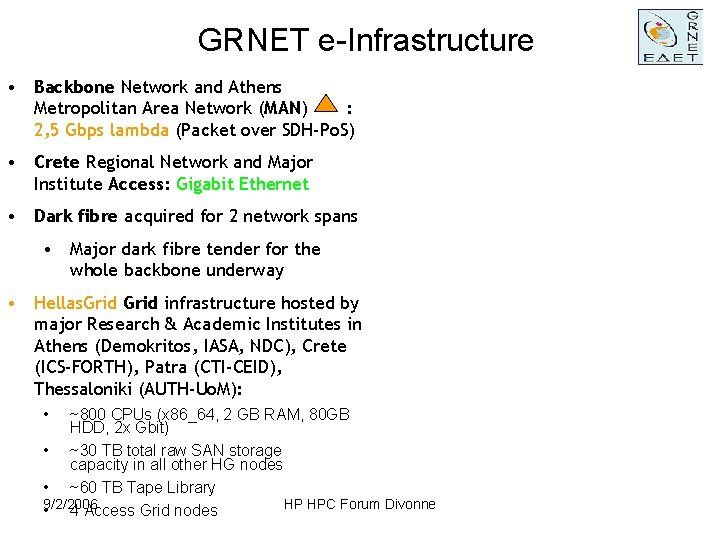

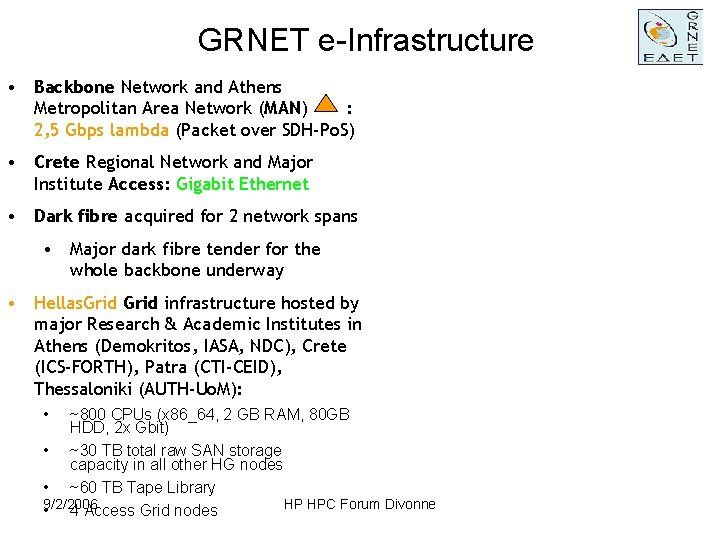

GRNET e-Infrastructure
• Backbone Network and Athens Metropolitan Area Network (MAN): 2.5 Gbps lambda (Packet over SDH, PoS)
• Crete Regional Network and Major Institute Access: Gigabit Ethernet
• Dark fibre acquired for 2 network spans
• Major dark fibre tender for the whole backbone underway
• HellasGrid infrastructure hosted by major Research & Academic Institutes in Athens (Demokritos, IASA, NDC), Crete (ICS-FORTH), Patra (CTI-CEID), Thessaloniki (AUTH-UoM):
– ~800 CPUs (x86_64, 2 GB RAM, 80 GB HDD, 2 x Gbit)
– ~30 TB total raw SAN storage capacity in all other HG nodes
– ~60 TB Tape Library
– 4 Access Grid nodes
HP HPC Forum Divonne, 9/2/2006

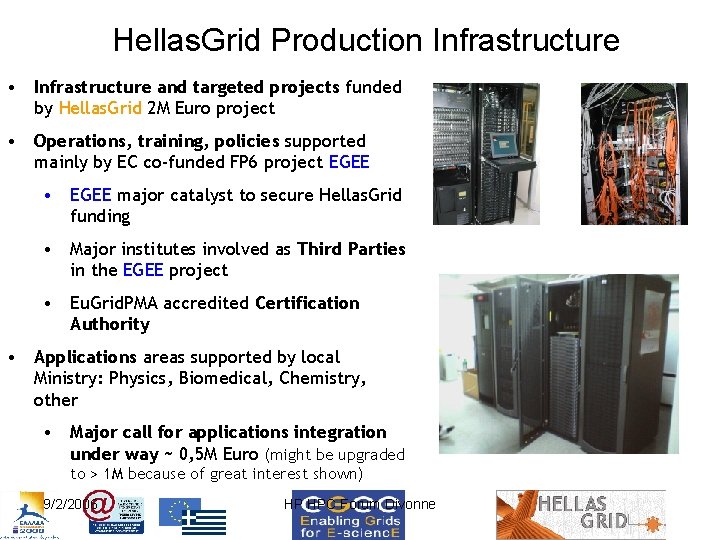

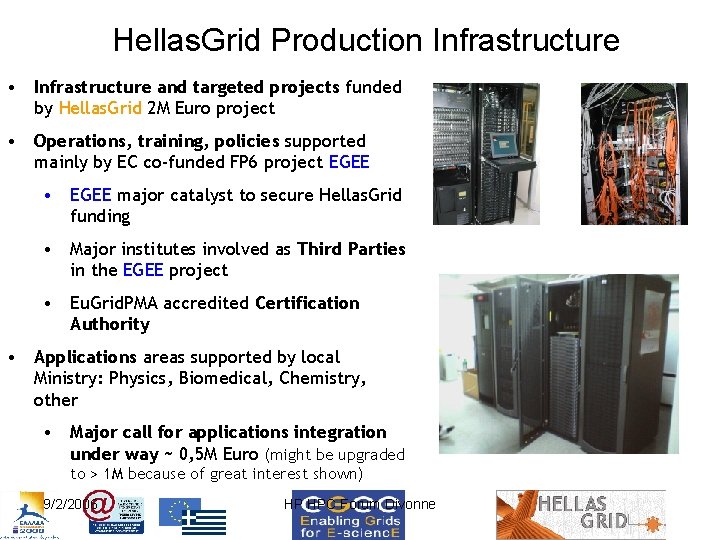

HellasGrid Production Infrastructure
• Infrastructure and targeted projects funded by the HellasGrid 2 M Euro project
• Operations, training and policies supported mainly by the EC co-funded FP6 project EGEE
• EGEE was a major catalyst to secure HellasGrid funding
• Major institutes involved as Third Parties in the EGEE project
• EUGridPMA accredited Certification Authority
• Application areas supported by the local Ministry: Physics, Biomedical, Chemistry, other
• Major call for applications integration under way, ~0.5 M Euro (might be upgraded to > 1 M because of the great interest shown)

Current situation: accomplishments and challenges
• Many Grids around the world, very few maintained as a persistent infrastructure
• Need for public and open Grids (OSG, EGEE and related projects, NAREGI, TeraGrid and DEISA are good prototypes)
• Persistence, support, sustainability, long-term funding and easy access are the major challenges

Key Challenges
• Security (see Blair Dillaway’s talk)
• Stable, accepted industrial standards (GGF and EGA converging)
• Learning curve for applications
• Complexity of running a Grid infrastructure across different administrative domains

How did we get here?
• Early examples of meta-computing and distributed computing in the 1980s and 1990s (CASA, I-WAY, UNICORE, Condor, etc.)
• EU FP5, US Trillium and national Grids
• EU FP6, US OSG, NAREGI/Japan…
• UK e-Science programme and other similar national programmes

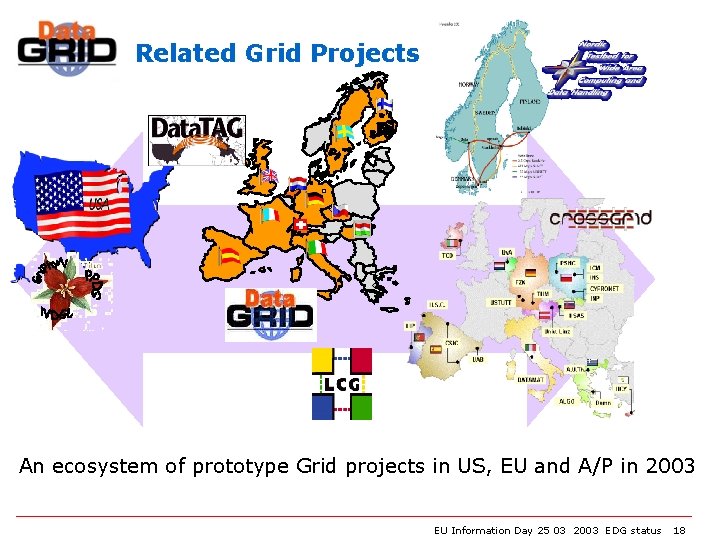

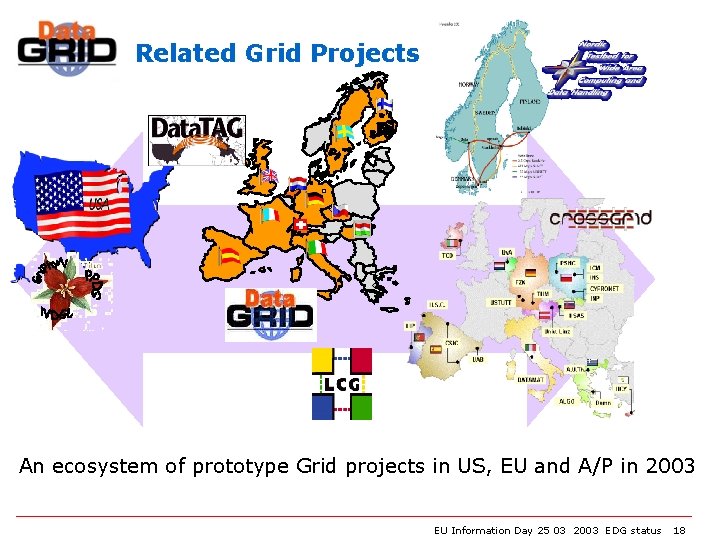

Related Grid Projects
An ecosystem of prototype Grid projects in US, EU and A/P in 2003
EU Information Day, 25/03/2003: EDG status

Where are we going?

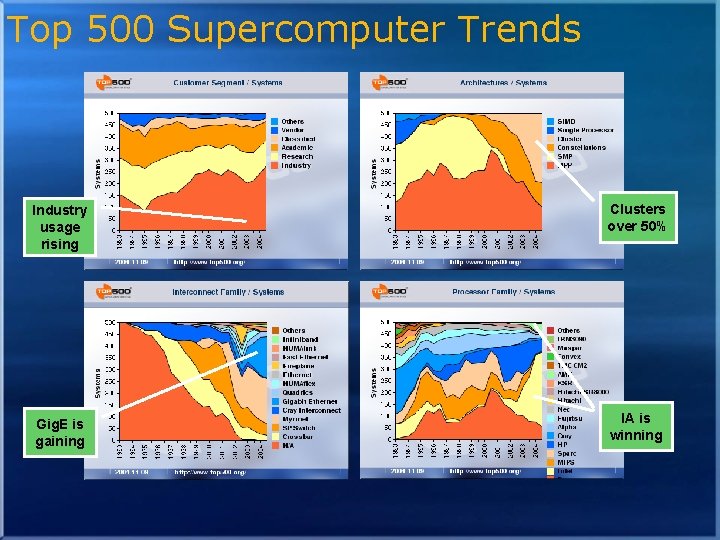

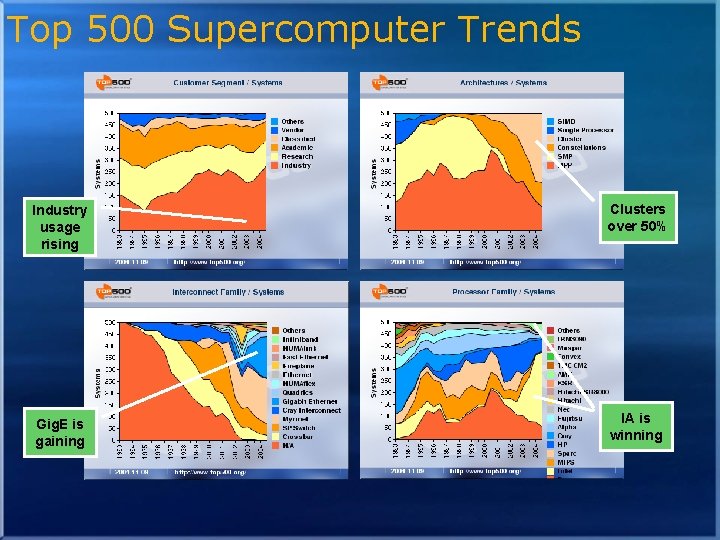

Top 500 Supercomputer Trends
• Industry usage rising
• Clusters over 50%
• GigE is gaining
• IA is winning

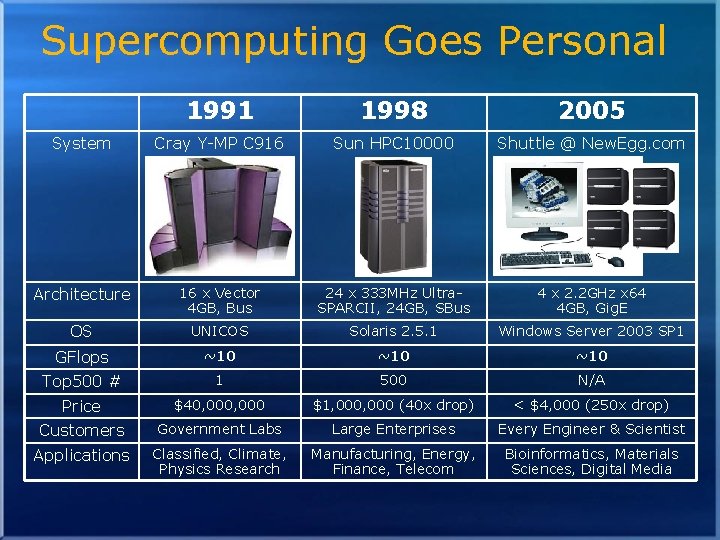

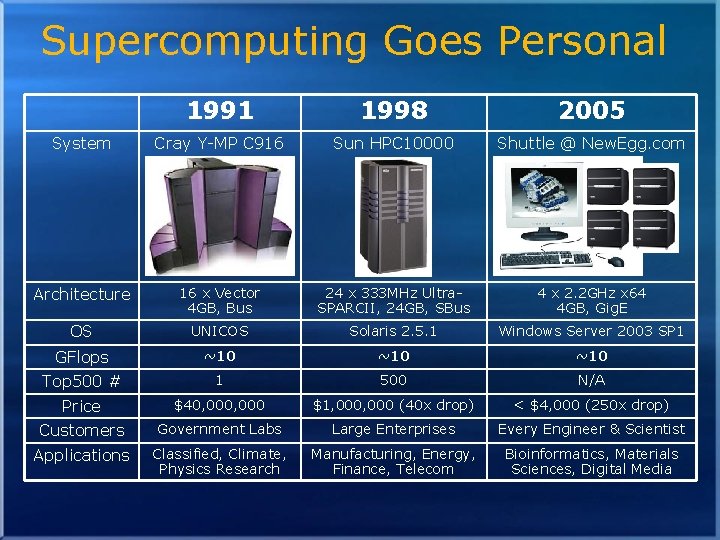

Supercomputing Goes Personal

| | 1991 | 1998 | 2005 |
|---|---|---|---|
| System | Cray Y-MP C916 | Sun HPC10000 | Shuttle @ NewEgg.com |
| Architecture | 16 x Vector, 4 GB, Bus | 24 x 333 MHz UltraSPARC II, 24 GB, SBus | 4 x 2.2 GHz x64, 4 GB, GigE |
| OS | UNICOS | Solaris 2.5.1 | Windows Server 2003 SP1 |
| GFlops | ~10 | ~10 | |
| Top 500 # | 1 | 500 | N/A |
| Price | $40,000,000 | $1,000,000 (40x drop) | < $4,000 (250x drop) |
| Customers | Government Labs | Large Enterprises | Every Engineer & Scientist |
| Applications | Classified, Climate, Physics Research | Manufacturing, Energy, Finance, Telecom | Bioinformatics, Materials Sciences, Digital Media |

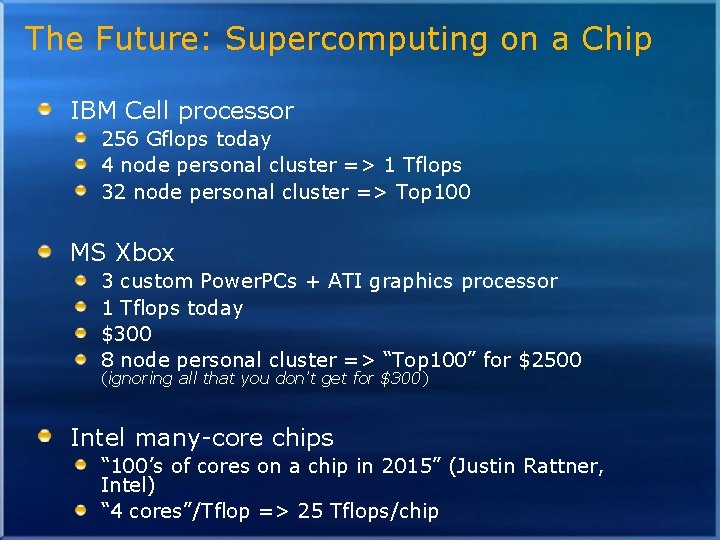

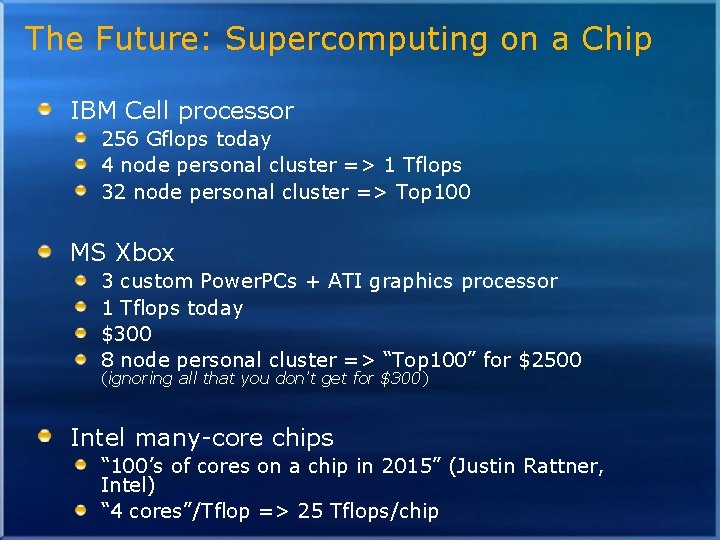

The Future: Supercomputing on a Chip
• IBM Cell processor
– 256 Gflops today
– 4 node personal cluster => 1 Tflops
– 32 node personal cluster => Top 100
• MS Xbox
– 3 custom PowerPCs + ATI graphics processor
– 1 Tflops today
– $300
– 8 node personal cluster => “Top 100” for $2500 (ignoring all that you don’t get for $300)
• Intel many-core chips
– “100’s of cores on a chip in 2015” (Justin Rattner, Intel)
– “4 cores”/Tflop => 25 Tflops/chip
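
The arithmetic behind these figures can be checked with a short sketch. It uses only the numbers quoted on the slide and assumes ideal (linear) scaling of peak performance across nodes, which real clusters do not achieve; the function names are illustrative.

```python
# Back-of-the-envelope check of the slide's peak-performance figures.
# Assumption: peak throughput adds up linearly across nodes (no overheads).

CELL_GFLOPS = 256        # IBM Cell processor, from the slide
XBOX_PRICE_USD = 300     # MS Xbox price, from the slide


def cluster_peak_tflops(nodes: int, gflops_per_node: float) -> float:
    """Aggregate peak in Tflops for `nodes` identical nodes."""
    return nodes * gflops_per_node / 1000.0


def chip_peak_tflops(cores: int, cores_per_tflop: int) -> float:
    """Peak per chip when `cores_per_tflop` cores deliver one Tflop."""
    return cores / cores_per_tflop


print(cluster_peak_tflops(4, CELL_GFLOPS))    # 1.024, the slide's "1 Tflops"
print(cluster_peak_tflops(32, CELL_GFLOPS))   # 8.192
print(8 * XBOX_PRICE_USD)                     # 2400, consoles only
print(chip_peak_tflops(100, 4))               # 25.0, the slide's "25 Tflops/chip"
```

Eight consoles at $300 come to $2400, in line with the roughly $2500 quoted for the 8 node cluster.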

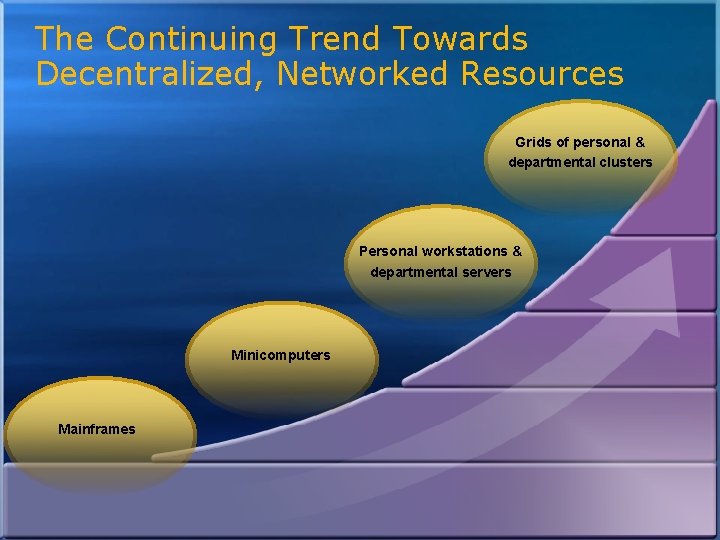

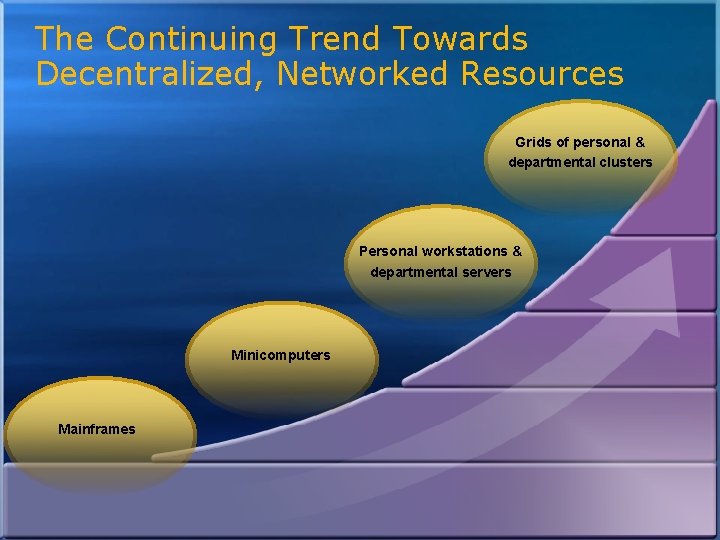

The Continuing Trend Towards Decentralized, Networked Resources
• Grids of personal & departmental clusters
• Personal workstations & departmental servers
• Minicomputers
• Mainframes

MS HPC road map
Compute Cluster Solution (CCS) V1:
• Beowulf-style compute cluster seamlessly integrated into a Windows-based infrastructure, including security infrastructure (Kerberos and Active Directory); user jobs run under the Windows user credentials
• ISV support for most common software applications
• Job scheduler accessible via command-line, COM and a published Web services protocol. It can be customized via sysadmin-defined admission and release filters that run in the scheduler when a job is submitted and when it becomes scheduled as ready-to-run. In V2 the scheduler will be made even more extensible.
• Performance will be comparable to Linux
• MPICH (incl. MPICH-2) supported (open-source version from ANL)
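
The idea of admission and release filters can be illustrated with a minimal, generic sketch. This is not the CCS scheduler or its API: the `Job` and `Scheduler` classes, their fields and the example filters are all hypothetical, chosen only to show one filter stage running at submission time and another running when a job is about to become ready-to-run.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Job:
    owner: str
    cpus: int
    state: str = "submitted"


# A filter returns True to let the job proceed (hypothetical convention).
JobFilter = Callable[[Job], bool]


@dataclass
class Scheduler:
    admission_filters: List[JobFilter] = field(default_factory=list)
    release_filters: List[JobFilter] = field(default_factory=list)
    queue: List[Job] = field(default_factory=list)

    def submit(self, job: Job) -> bool:
        """Run admission filters at submission; reject if any filter fails."""
        if not all(f(job) for f in self.admission_filters):
            job.state = "rejected"
            return False
        job.state = "queued"
        self.queue.append(job)
        return True

    def release_next(self) -> Optional[Job]:
        """Release the first queued job that passes every release filter."""
        for job in self.queue:
            if all(f(job) for f in self.release_filters):
                self.queue.remove(job)  # safe: we return right after removing
                job.state = "ready"
                return job
        return None


# Example policy: cap job size at admission, check a license pool at release.
licenses = {"free": 0}
sched = Scheduler(
    admission_filters=[lambda j: j.cpus <= 64],
    release_filters=[lambda j: licenses["free"] > 0],
)
sched.submit(Job("alice", 128))   # rejected at admission (too many CPUs)
sched.submit(Job("bob", 8))       # queued
print(sched.release_next())       # None: no license is free yet
licenses["free"] = 1
print(sched.release_next().state) # ready
```

Keeping the two stages separate lets an administrator reject unacceptable jobs early while deferring checks that depend on changing conditions until just before the job would run.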

MS HPC road map
CCS areas of focus for V2:
• Extend to forests of clusters and meta-schedulers
• Storage and parallel I/O issues, as well as possibly simple workflow support
• Development of new tools

Conclusions
• Production Grids are a reality and here to stay
• HPC is becoming a commodity, seamlessly stretching from desktop to back-end resources
• MS HPC products are coming soon
• Microsoft is participating in major standardisation bodies
• MS is present at this meeting and is its prime sponsor

I wish everybody a fruitful and positive week in Athens. You can contact me at: fabrig@microsoft.com