Processor Comparison

- Slides: 16

M. R. Smith, smithmr@ucalgary.ca, 10/2/2020

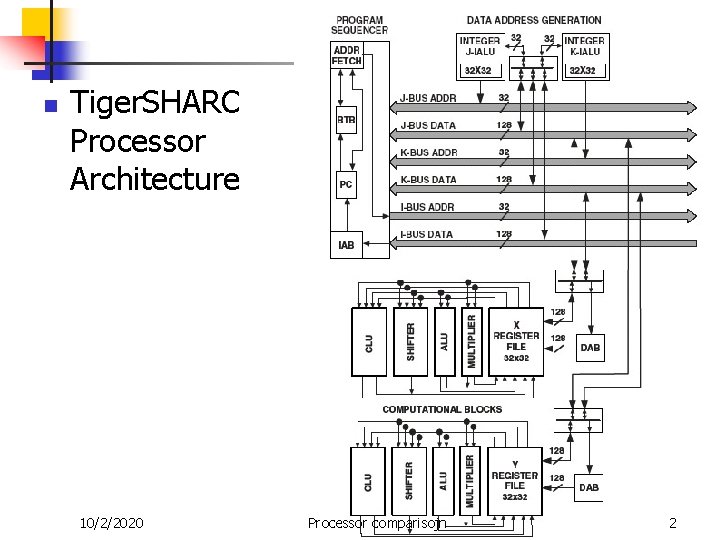

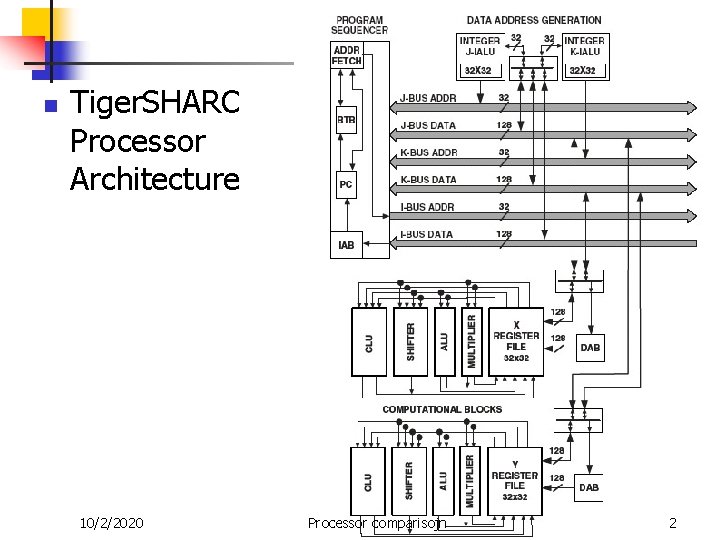

TigerSHARC Processor Architecture

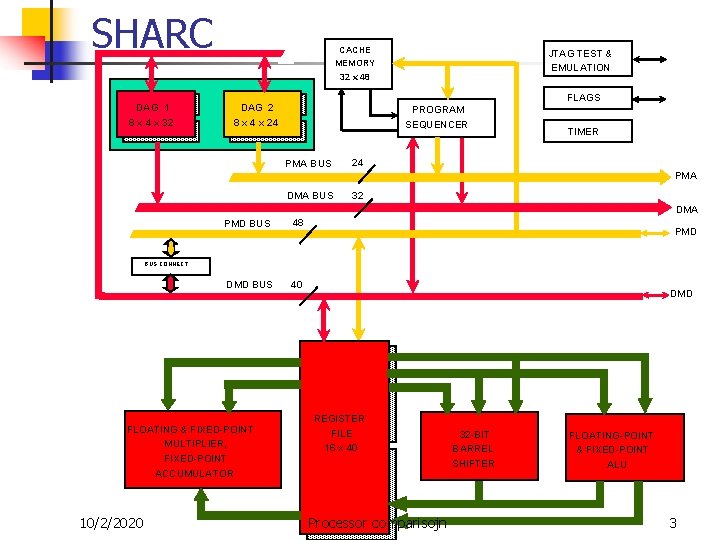

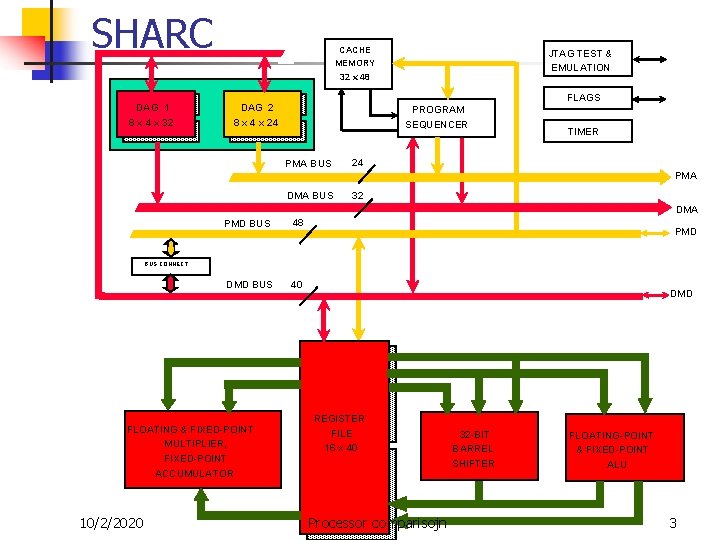

[Figure: SHARC block diagram showing DAG 1 (8 × 4 × 32), DAG 2 (8 × 4 × 24), the program sequencer, a 32 × 48 instruction cache, a 16 × 40 data register file, the floating- and fixed-point multiplier with fixed-point accumulator, a 32-bit barrel shifter, the floating- and fixed-point ALU, the PMA (24-bit), DMA (32-bit), PMD (48-bit) and DMD (40-bit) buses joined by a bus connect, a timer, JTAG test and emulation, and flags.]

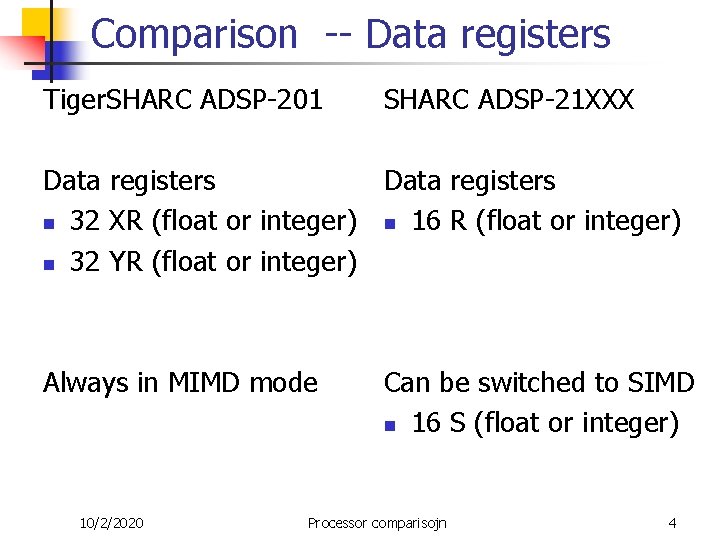

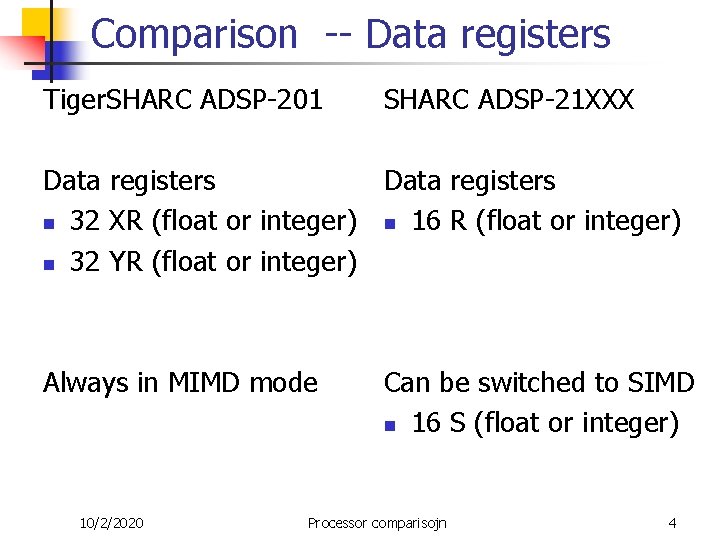

Comparison – Data registers

| | TigerSHARC (ADSP-TS201) | SHARC (ADSP-21xxx) |
|---|---|---|
| Data registers | 32 XR (float or integer) | 16 R (float or integer) |
| | 32 YR (float or integer) | 16 S (float or integer) |
| Mode | Always in MIMD mode | Can be switched to SIMD |
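
The SHARC column notes that the processor can be switched to SIMD mode, in which one instruction drives both the R and the S register files. The Python sketch below models only that idea. It assumes a single boolean `simd` flag standing in for the processor's mode bit, models only integer addition, and the class and method names are hypothetical.

```python
class SharcRegisters:
    """Hypothetical model: 16 R registers (PEx) and 16 S registers (PEy)."""

    def __init__(self, simd=False):
        self.r = [0] * 16   # processing element X
        self.s = [0] * 16   # processing element Y
        self.simd = simd    # stands in for the SIMD mode bit (assumption)

    def add(self, d, a, b):
        """Execute 'Rd = Ra + Rb'. In SIMD mode the same instruction
        also executes 'Sd = Sa + Sb' on the second processing element."""
        self.r[d] = self.r[a] + self.r[b]
        if self.simd:
            self.s[d] = self.s[a] + self.s[b]


regs = SharcRegisters(simd=True)
regs.r[1], regs.r[2] = 3, 4
regs.s[1], regs.s[2] = 10, 20
regs.add(3, 1, 2)
print(regs.r[3], regs.s[3])  # 7 30
```

With `simd=False` only `r[3]` changes, which is the SISD behaviour.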

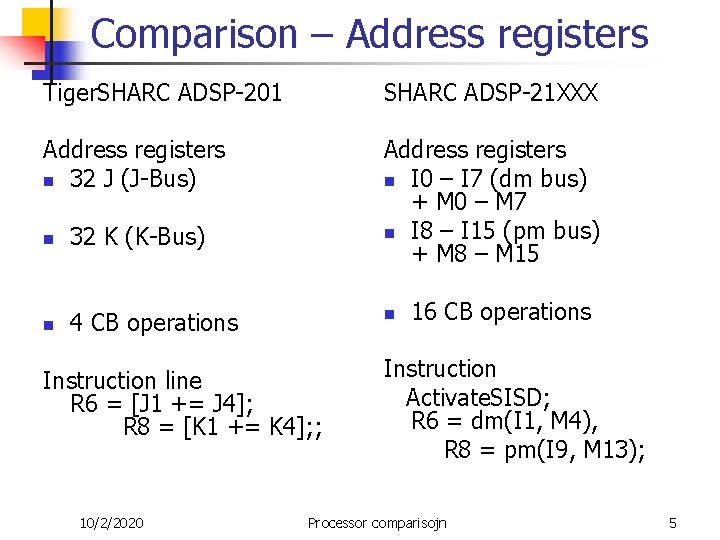

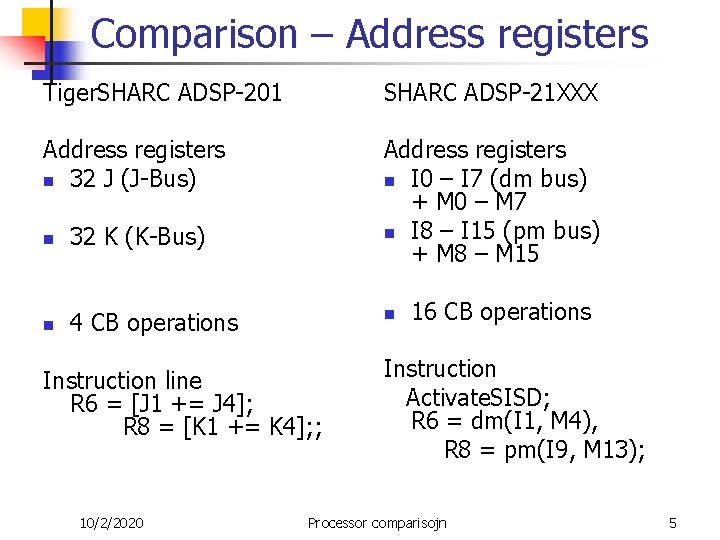

Comparison – Address registers

| | TigerSHARC (ADSP-TS201) | SHARC (ADSP-21xxx) |
|---|---|---|
| Address registers | 32 J (J-bus) | I0–I7 (DM bus) + M0–M7 |
| | 32 K (K-bus) | I8–I15 (PM bus) + M8–M15 |
| Circular-buffer (CB) operations | 4 | 16 |
| Example instruction line | `R6 = [J1 += J4]; R8 = [K1 += K4];;` | `R6 = dm(I1, M4), R8 = pm(I9, M13);` (SISD mode active) |
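
Both example lines use post-modify addressing: memory is read at the address held in the index register (`J1` or `I1`), and that register is then advanced by the modifier (`J4` or `M4`). With circular buffering, the updated address also wraps within a base and a length. The Python sketch below illustrates this under stated assumptions: the function name, the `base`/`length` arguments and the plain list standing in for word-addressed memory are all hypothetical.

```python
def post_modify_read(memory, index, modify, base=None, length=None):
    """Return (value, new_index) for a post-modify read such as dm(I, M).

    The access uses the current index; the index is then advanced by
    `modify`. If base/length are given, the new index wraps inside
    [base, base + length), as a circular buffer does.
    """
    value = memory[index]
    new_index = index + modify
    if base is not None and length is not None:
        new_index = base + (new_index - base) % length
    return value, new_index


memory = [10, 11, 12, 13, 14, 15]
i = 2  # circular buffer occupies addresses 2..5
seen = []
for _ in range(6):
    v, i = post_modify_read(memory, i, 1, base=2, length=4)
    seen.append(v)
print(seen)  # [12, 13, 14, 15, 12, 13]
```

Python's `%` operator always returns a non-negative result for a positive `length`, so a negative modifier also wraps correctly back to the top of the buffer.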

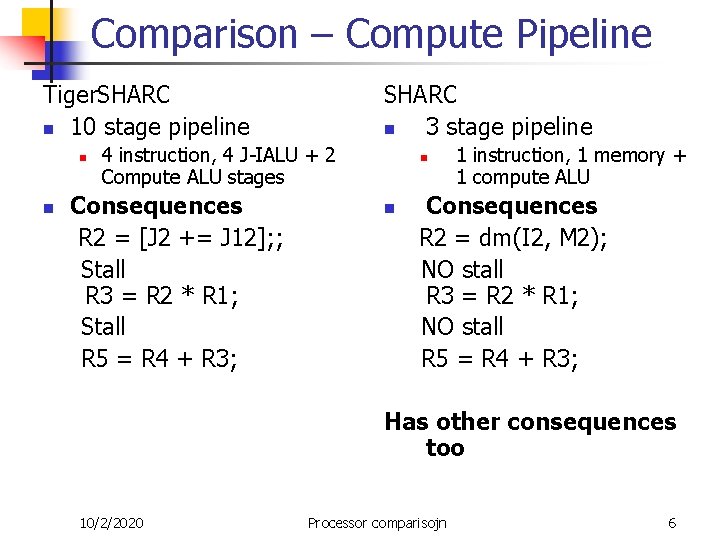

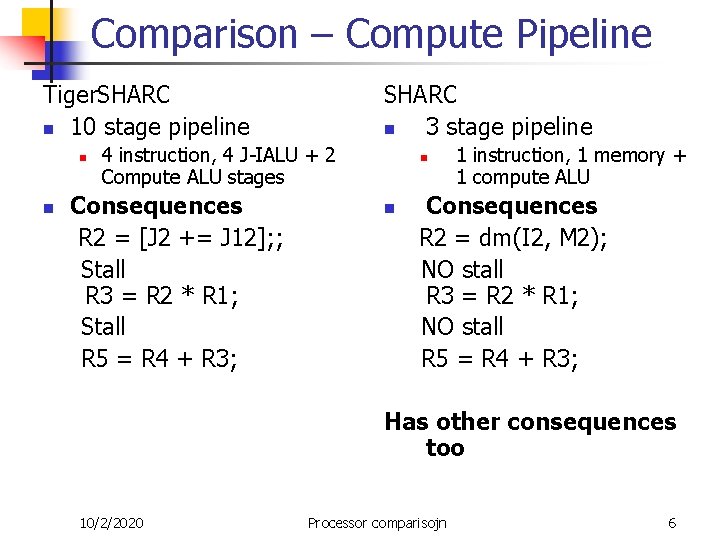

Comparison – Compute pipeline

TigerSHARC
- 10-stage pipeline: 4 instruction-fetch stages, 4 J-IALU stages and 2 compute-ALU stages.
- Consequences: `R2 = [J2 += J12];;` → stall → `R3 = R2 * R1;;` → stall → `R5 = R4 + R3;;` (each line waits for a result produced by the line before it).

SHARC
- 3-stage pipeline: 1 instruction-fetch stage, 1 memory stage and 1 compute-ALU stage.
- Consequences: `R2 = dm(I2, M2);` → no stall → `R3 = R2 * R1;` → no stall → `R5 = R4 + R3;`
- The short pipeline has other consequences too.
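
The stall pattern can be reproduced with a small dependency model. This Python sketch is an illustration, not a cycle-accurate simulator. Each result is given a fixed latency, and the TigerSHARC-like latency of 2 cycles is a hypothetical value picked only to match the "stall" markings above. Real penalties depend on the instruction pair and are documented by Analog Devices.

```python
from dataclasses import dataclass


@dataclass
class Instr:
    text: str
    dest: str
    srcs: tuple
    kind: str  # "load" or "compute"


def count_stalls(program, latency):
    """Return the stall cycles inserted before each instruction.

    latency[kind] is how many cycles after issue a result becomes usable;
    a latency of 1 means the very next instruction can use it.
    """
    ready = {}   # register -> first cycle its value can be read
    cycle = 0
    stalls = []
    for ins in program:
        need = max((ready.get(r, 0) for r in ins.srcs), default=0)
        stall = max(0, need - cycle)
        cycle += stall
        stalls.append(stall)
        ready[ins.dest] = cycle + latency[ins.kind]
        cycle += 1
    return stalls


program = [
    Instr("R2 = load", "R2", (), "load"),
    Instr("R3 = R2 * R1", "R3", ("R2", "R1"), "compute"),
    Instr("R5 = R4 + R3", "R5", ("R4", "R3"), "compute"),
]
# Assumed latencies, chosen only to reproduce the slide's markings.
print(count_stalls(program, {"load": 2, "compute": 2}))  # TigerSHARC-like: [0, 1, 1]
print(count_stalls(program, {"load": 1, "compute": 1}))  # SHARC-like: [0, 0, 0]
```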

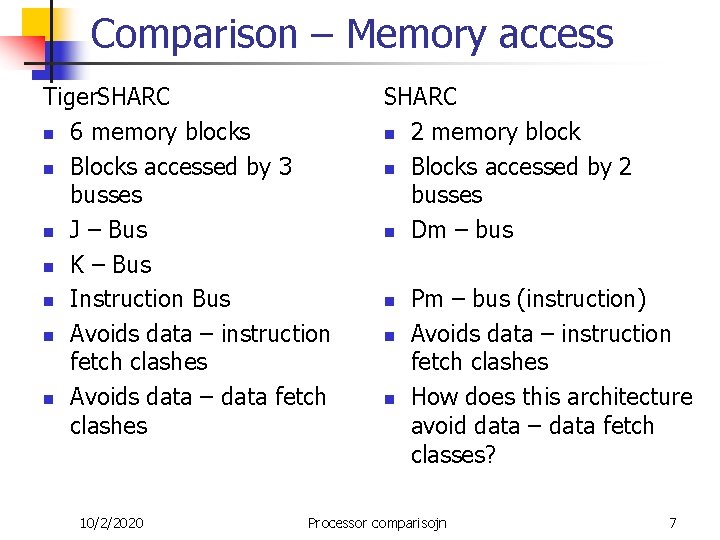

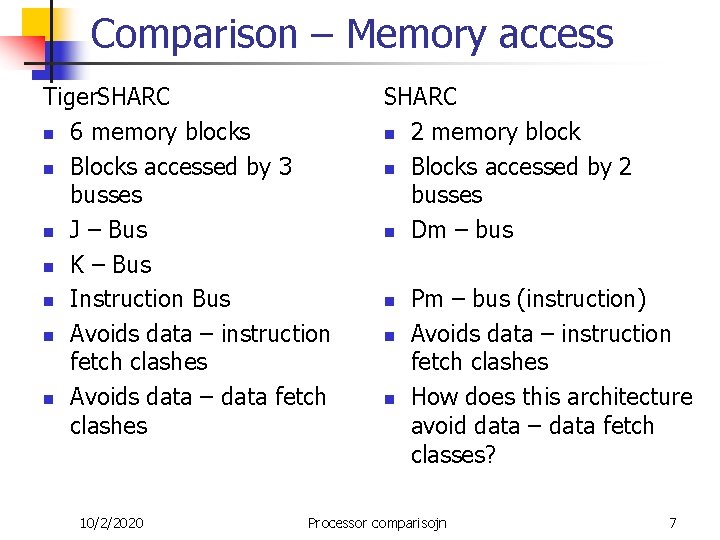

Comparison – Memory access

TigerSHARC
- 6 memory blocks
- Blocks accessed by 3 buses: J-bus, K-bus and instruction bus
- Avoids data–instruction fetch clashes
- Avoids data–data fetch clashes

SHARC
- 2 memory blocks
- Blocks accessed by 2 buses: DM bus and PM bus (instruction)
- Avoids data–instruction fetch clashes
- How does this architecture avoid data–data fetch clashes?

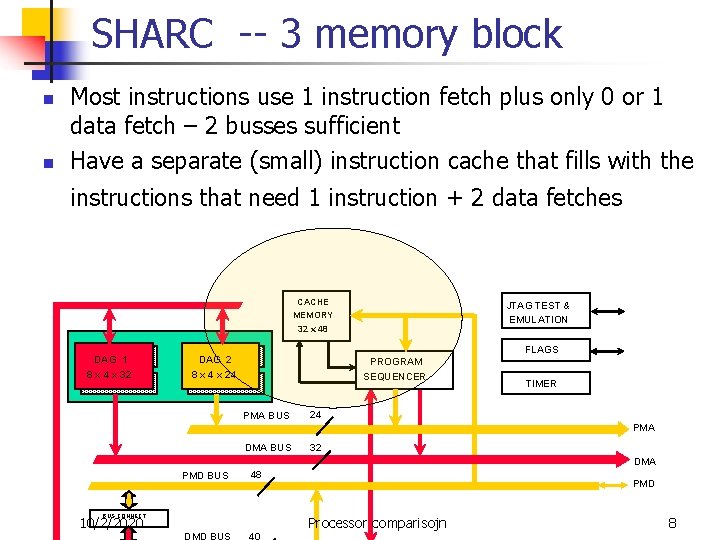

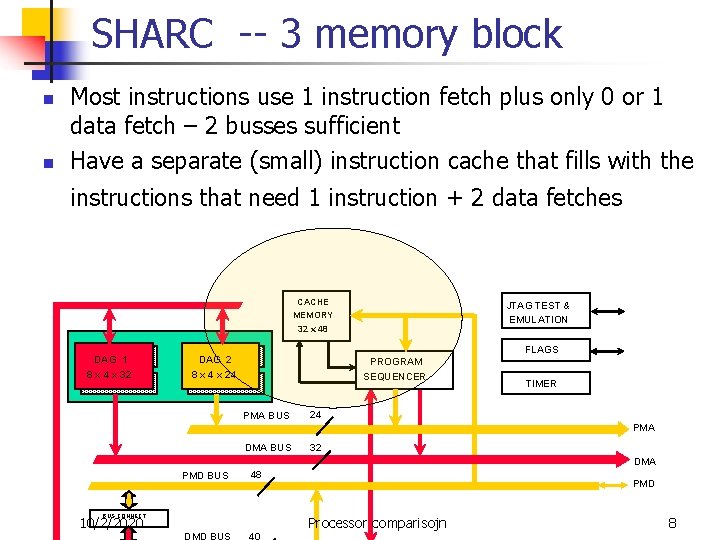

SHARC – a third "memory block": the instruction cache
- Most instructions need 1 instruction fetch plus only 0 or 1 data fetch, so 2 buses are sufficient.
- A separate (small, 32 × 48) instruction cache fills with the instructions that need 1 instruction fetch + 2 data fetches.

[Figure: SHARC block diagram]
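
The bus argument can be checked by counting how many transfers each bus must carry in one cycle. A minimal Python sketch, assuming (as the slide does) that the instruction fetch uses the PM bus unless the instruction cache supplies it; the function name and arguments are hypothetical.

```python
def bus_conflict(dm_accesses, pm_accesses, fetch_from_cache=False):
    """Return True if either SHARC bus must carry more than one transfer.

    The DM bus carries dm() data; the PM bus carries pm() data and,
    unless the cache supplies the instruction, the instruction fetch.
    """
    dm_load = dm_accesses
    pm_load = pm_accesses + (0 if fetch_from_cache else 1)
    return dm_load > 1 or pm_load > 1


print(bus_conflict(1, 0))                         # False: 1 fetch + 1 data fetch
print(bus_conflict(1, 1))                         # True: fetch and pm() data clash
print(bus_conflict(1, 1, fetch_from_cache=True))  # False: cache supplies the fetch
```

In this model the cache acts as a third source of instructions, which is how two buses can serve an instruction fetch plus two data fetches.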

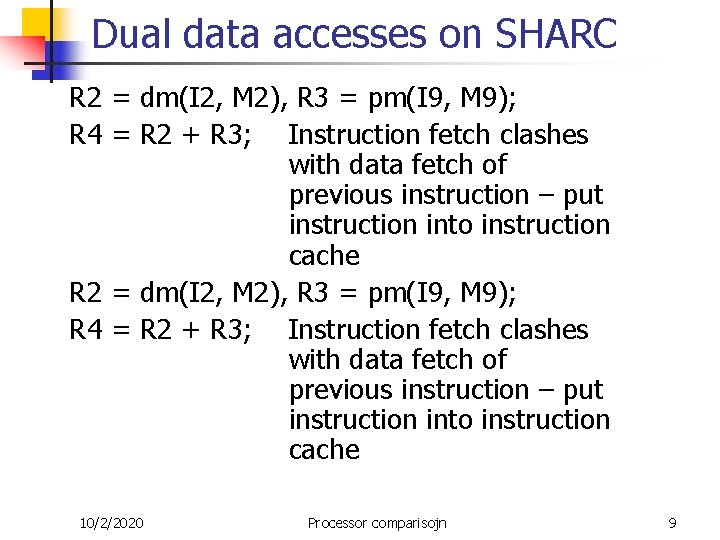

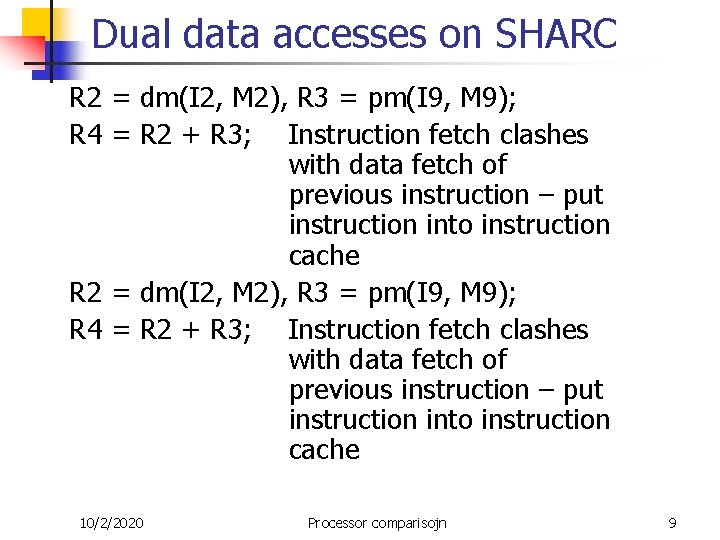

Dual data accesses on SHARC
- `R2 = dm(I2, M2), R3 = pm(I9, M9);`
- `R4 = R2 + R3;`
- The instruction fetch clashes with the PM-bus data fetch of the previous instruction, so the instruction is put into the instruction cache.

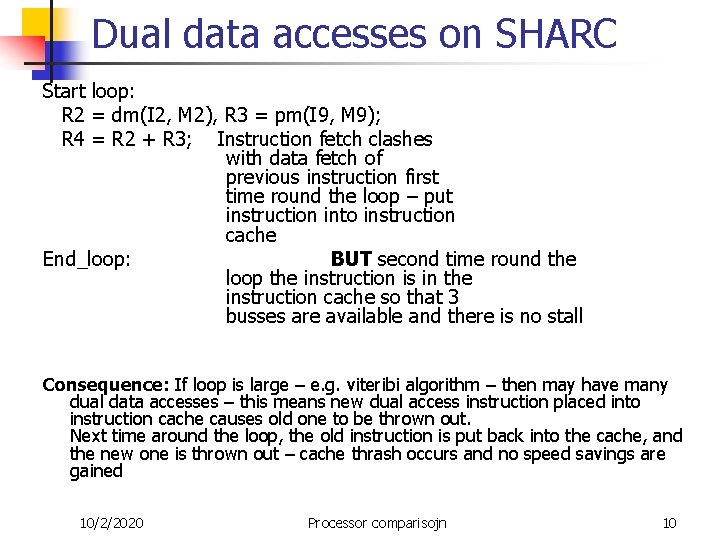

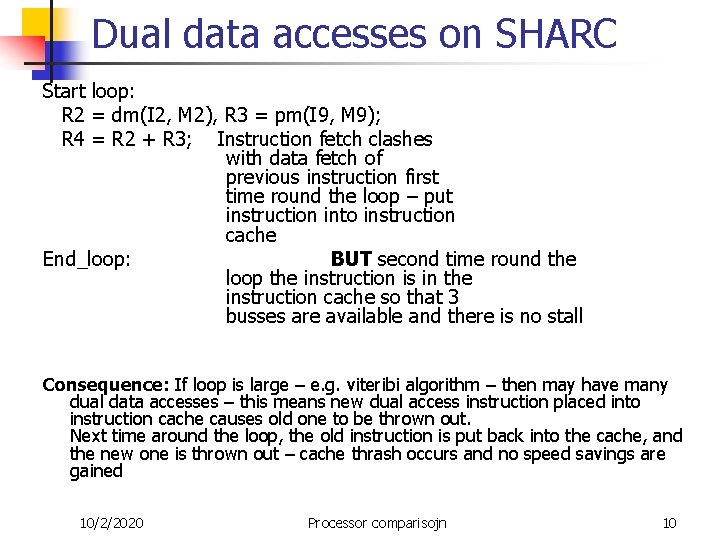

Dual data accesses on SHARC (inside a loop)
- Loop body: `Start_loop: R2 = dm(I2, M2), R3 = pm(I9, M9);` then `R4 = R2 + R3;` ... `End_loop:`
- First time round the loop, the instruction fetch clashes with the data fetch of the previous instruction, so the instruction is put into the instruction cache.
- BUT second time round the loop, the instruction is already in the instruction cache, so 3 buses are effectively available and there is no stall.
- Consequence: if the loop is large (e.g. the Viterbi algorithm), it may contain many dual-data-access instructions. Placing a new dual-access instruction in the instruction cache throws an old one out. Next time round the loop, the old instruction is put back into the cache and the new one is thrown out. The cache thrashes and no speed is gained.
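
The thrashing argument can be illustrated with a least-recently-used (LRU) cache model. This Python sketch simplifies heavily: it assumes a fully associative cache with LRU replacement (the real SHARC cache is organised differently, so the point at which thrashing starts will differ), and it models only the dual-access instructions that need caching. The function name and the instruction addresses are hypothetical.

```python
from collections import OrderedDict


def cache_misses_per_pass(addresses, capacity, passes):
    """Simulate a loop whose dual-access instructions sit at `addresses`.

    Returns the number of cache misses (i.e. stalls) on each pass.
    """
    cache = OrderedDict()  # address -> None, least recently used first
    result = []
    for _ in range(passes):
        misses = 0
        for addr in addresses:
            if addr in cache:
                cache.move_to_end(addr)        # hit: mark most recently used
            else:
                misses += 1                    # miss: the fetch stalls
                cache[addr] = None
                if len(cache) > capacity:
                    cache.popitem(last=False)  # evict least recently used
        result.append(misses)
    return result


print(cache_misses_per_pass([0, 5, 9], capacity=4, passes=3))          # [3, 0, 0]
print(cache_misses_per_pass([0, 5, 9, 12, 20], capacity=4, passes=3))  # [5, 5, 5]
```

The first loop fits in the cache, so only the first pass stalls. The second loop has one more dual-access instruction than the cache can hold, and with LRU replacement in a cyclic loop every access evicts exactly the entry that will be needed next, so every pass stalls.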

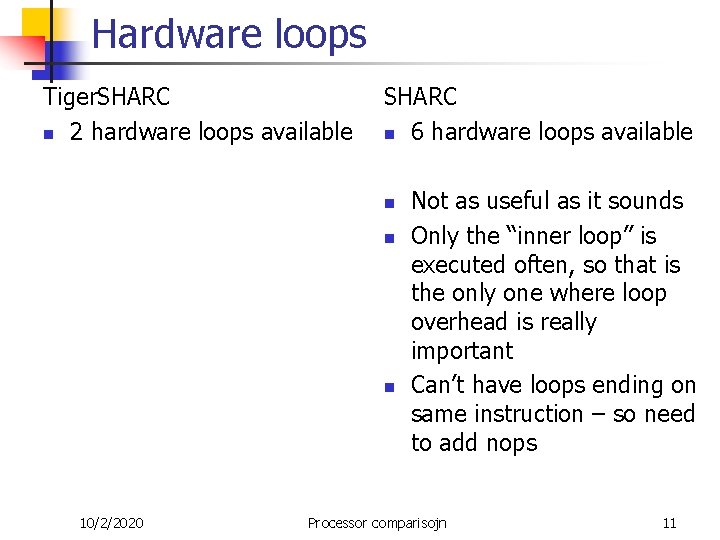

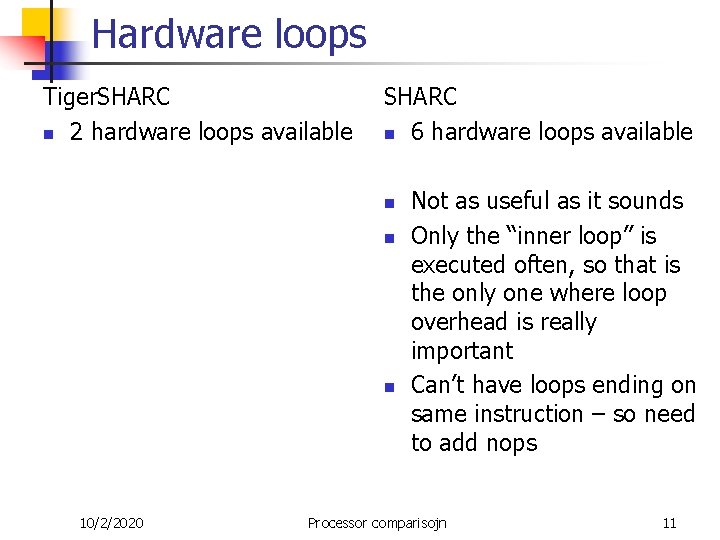

Hardware loops

TigerSHARC
- 2 hardware loops available

SHARC
- 6 hardware loops available
- Not as useful as it sounds: only the inner loop is executed often, so it is the only loop where loop overhead really matters.
- Loops cannot end on the same instruction, so NOPs must be added.
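
Why only the inner loop matters can be shown with simple arithmetic. The Python sketch below assumes a hypothetical software-loop cost of 3 cycles per iteration (decrement, test, branch) and zero overhead for a hardware loop. Both figures are illustrative, not measured values for either processor.

```python
def loop_overhead(outer_iters, inner_iters, cycles_per_iter=3):
    """Return (outer, inner) overhead cycles for a software-coded nested loop."""
    outer = outer_iters * cycles_per_iter
    inner = outer_iters * inner_iters * cycles_per_iter
    return outer, inner


outer, inner = loop_overhead(outer_iters=100, inner_iters=256)
print(outer, inner)  # 300 76800
```

Here the outer loop contributes less than half a percent of the total loop overhead, so putting only the inner loop in hardware recovers almost all of the savings.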

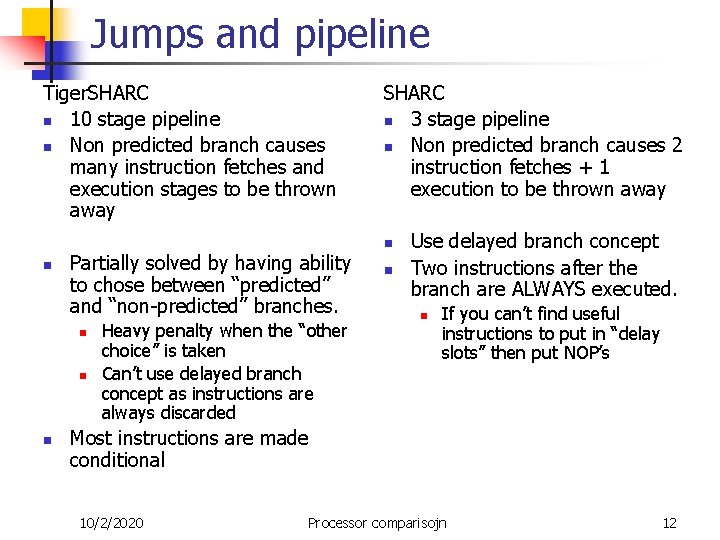

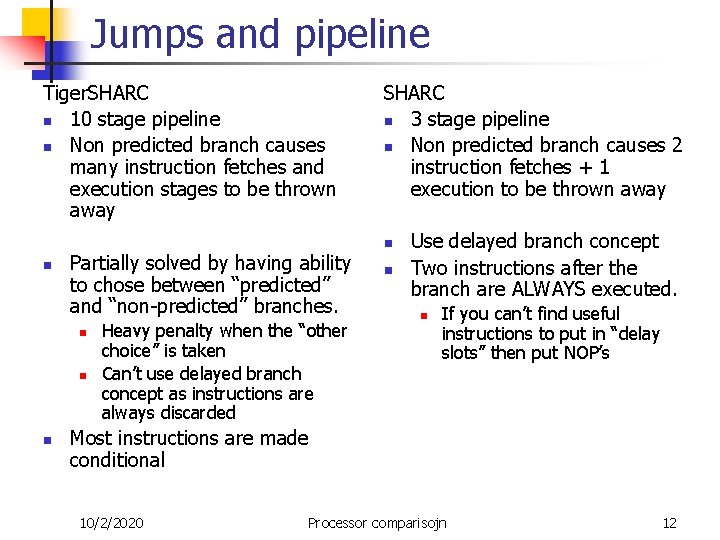

Jumps and pipeline

TigerSHARC
- 10-stage pipeline
- A non-predicted branch causes many instruction fetches and execution stages to be thrown away.
- Partially solved by the ability to choose between "predicted" and "non-predicted" branches.
  - Heavy penalty when the "other choice" is taken.
  - Cannot use the delayed-branch concept, because instructions are always discarded.

SHARC
- 3-stage pipeline
- A non-predicted branch causes 2 instruction fetches + 1 execution to be thrown away.
- Uses the delayed-branch concept: the two instructions after the branch are ALWAYS executed.
  - If you cannot find useful instructions to put in the "delay slots", put NOPs there.
- Most instructions are made conditional.
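
The trade-off between the two branch strategies can be expressed as wasted cycles per branch. In this Python sketch, the TigerSHARC penalty for taking the "other choice" is a hypothetical parameter (6 cycles in the example, not a documented figure). The SHARC delayed branch is modelled as two delay slots, where each slot that cannot be filled with useful work costs one NOP cycle. Function names are hypothetical.

```python
def expected_branch_cost(p_taken, predict_taken, wrong_choice_penalty):
    """Expected wasted cycles per branch when it is marked predicted / not predicted."""
    p_wrong = (1 - p_taken) if predict_taken else p_taken
    return p_wrong * wrong_choice_penalty


def delayed_branch_cost(useful_in_slots, delay_slots=2):
    """Wasted cycles for a delayed branch: each unfilled delay slot holds a NOP."""
    if not 0 <= useful_in_slots <= delay_slots:
        raise ValueError("useful_in_slots must be between 0 and delay_slots")
    return delay_slots - useful_in_slots


# Loop-closing branch taken 99% of the time, with an assumed 6-cycle penalty.
print(round(expected_branch_cost(0.99, True, 6), 2))   # 0.06 (marked predicted)
print(round(expected_branch_cost(0.99, False, 6), 2))  # 5.94 (marked non-predicted)
print(delayed_branch_cost(useful_in_slots=1))          # 1
```

Marking the branch the wrong way makes the heavy penalty the common case, while the delayed branch's cost depends only on how many delay slots the programmer can fill.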

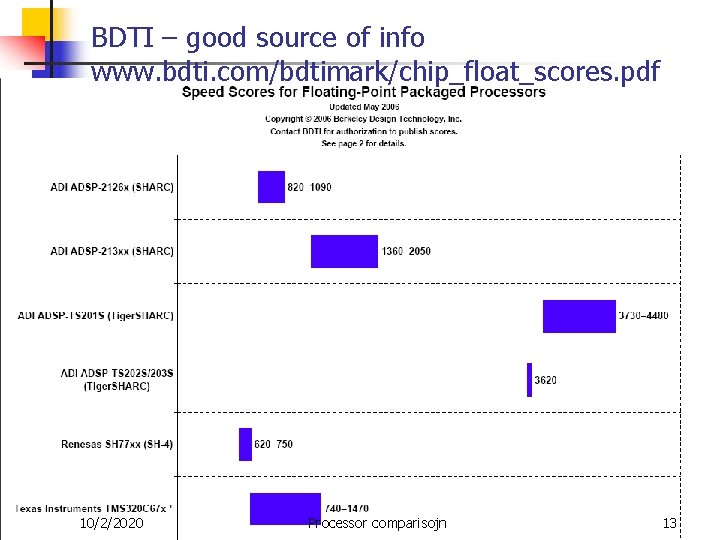

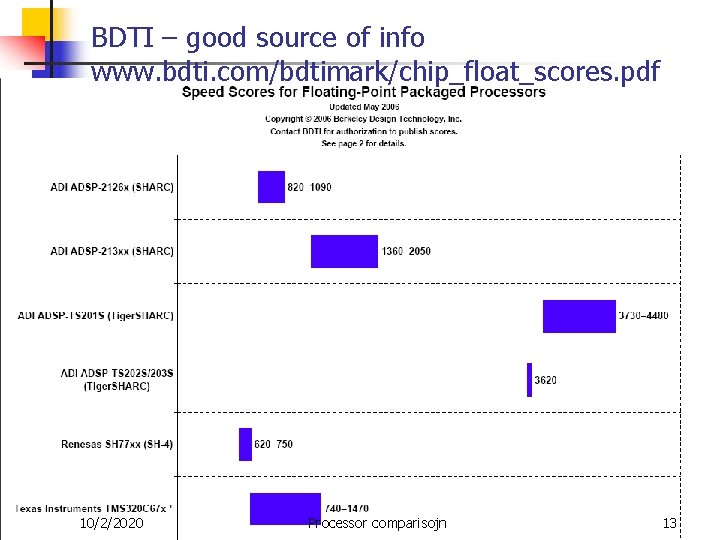

BDTI – good source of info: www.bdti.com/bdtimark/chip_float_scores.pdf

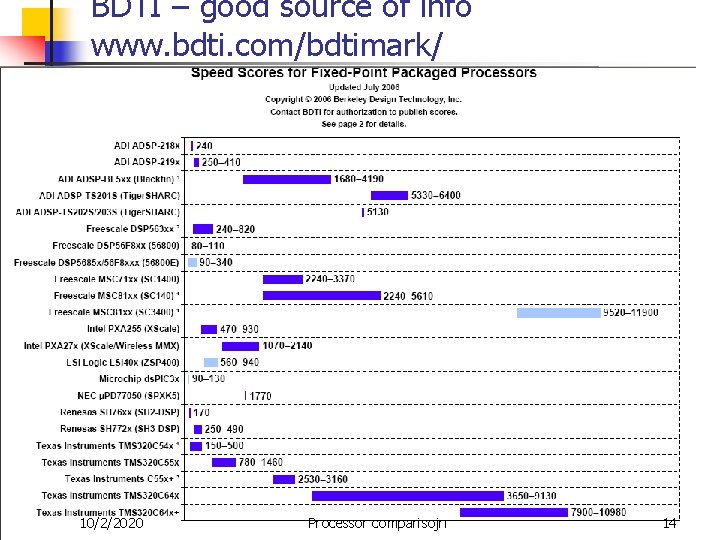

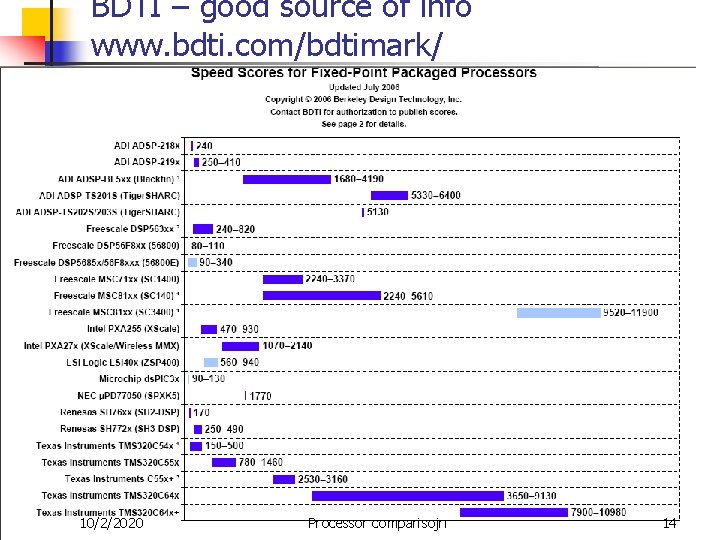

BDTI – good source of info: www.bdti.com/bdtimark/

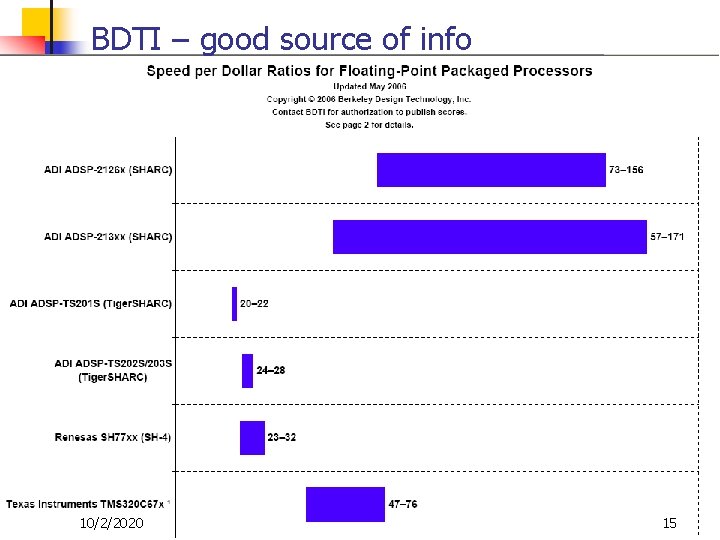

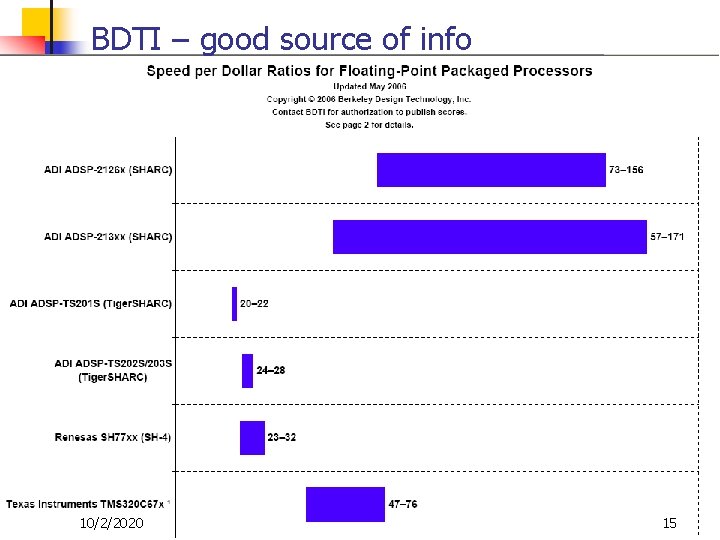

BDTI – good source of info

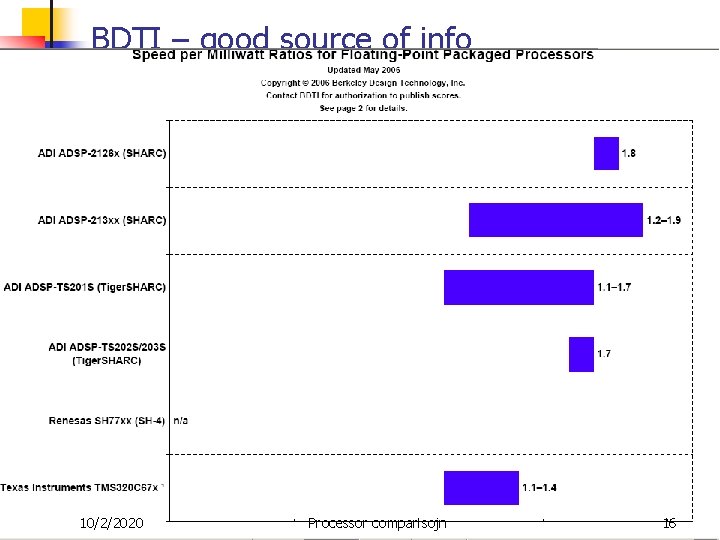

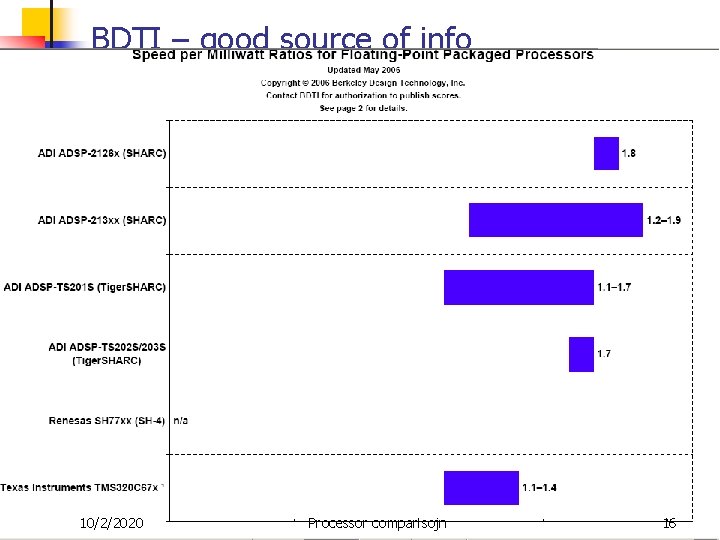

BDTI – good source of info