Processes

Outline • Contemporary computer systems allow multiple programs to be loaded into memory and executed concurrently. • A system therefore consists of a collection of processes (programs in execution): operating-system processes executing system code and user processes executing user code. • Process Concept • Process Scheduling • Operations on Processes • Interprocess Communication • Examples of IPC Systems • Communication in Client-Server Systems*

Process Concept • A process is an instance of a program in execution. • Batch systems work in terms of "jobs". Many modern process concepts are still expressed in terms of jobs (e.g., job scheduling), and the two terms are often used interchangeably.

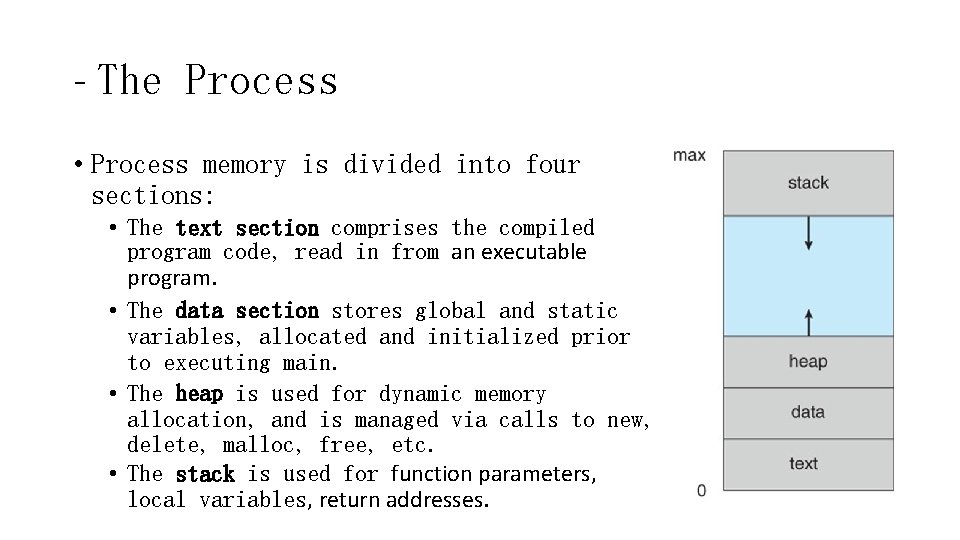

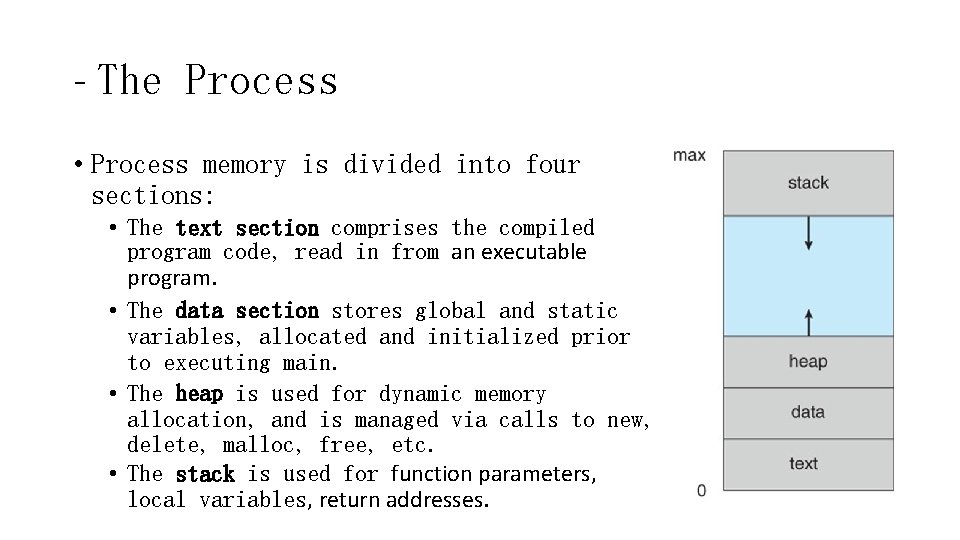

- The Process • Process memory is divided into four sections: • The text section comprises the compiled program code, read in from an executable program. • The data section stores global and static variables, allocated and initialized prior to executing main. • The heap is used for dynamic memory allocation, and is managed via calls to new, delete, malloc, free, etc. • The stack is used for function parameters, local variables, return addresses.

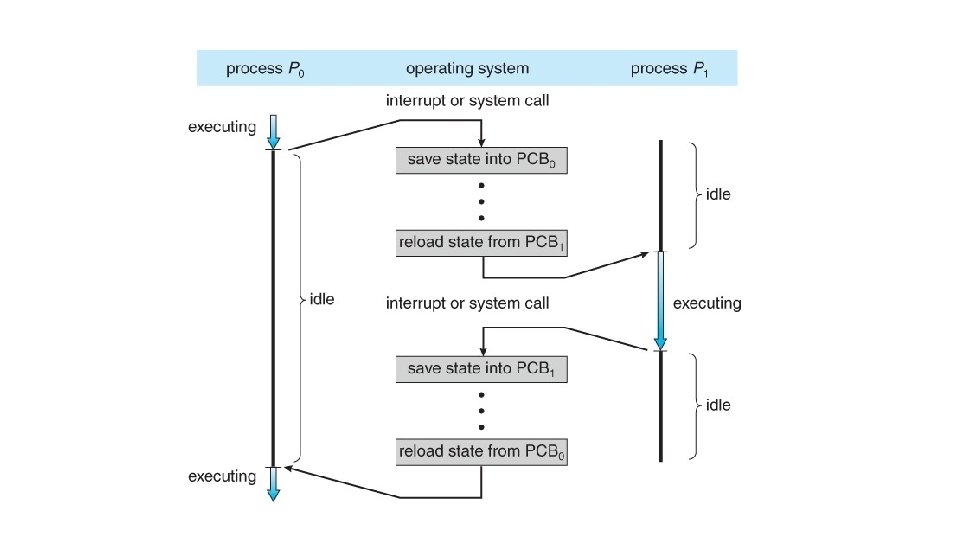

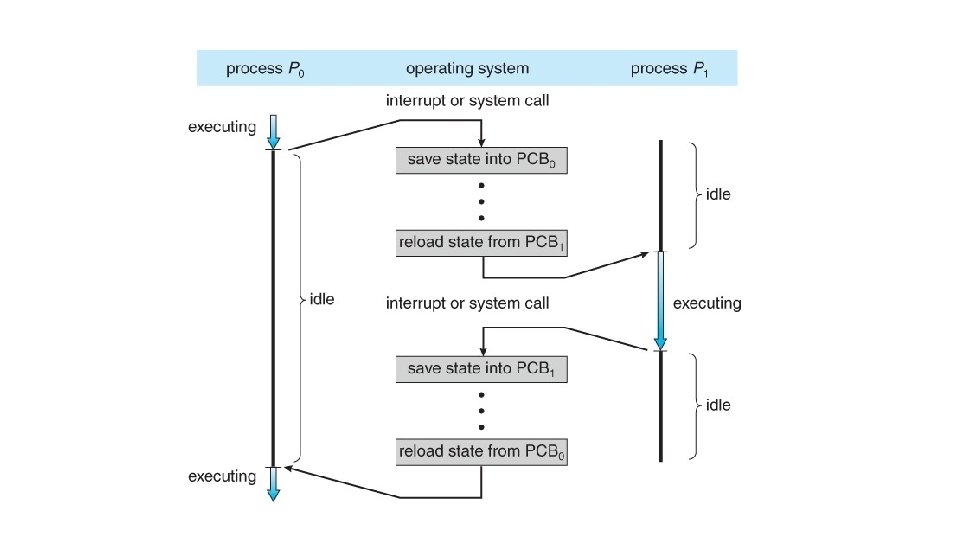

• When a process is switched off the CPU and later resumed, additional information must also be saved and restored. The most important items are the program counter and the values of all program registers.

Program vs Process • A program is a passive entity stored on disk (an executable file); a process is active. • A program becomes a process when its executable file is loaded into memory. • Execution of a program is started via GUI mouse clicks, command-line entry of its name, etc. • One program can be several processes. • Consider multiple users executing the same program.

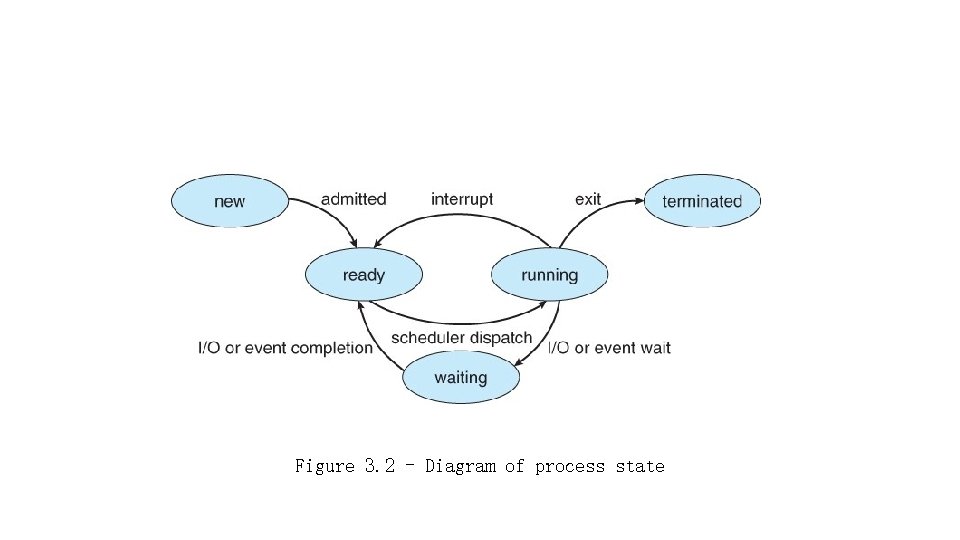

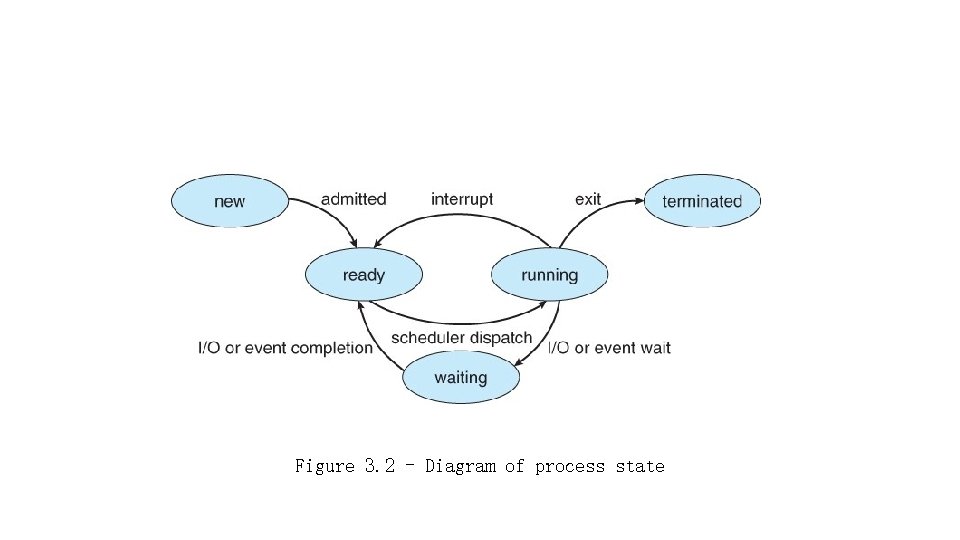

- Process State • Processes may be in one of 5 states. • New - The process is in the stage of being created. • Ready - The process has all the resources available that it needs to run, but the CPU is not currently working on this process's instructions. • Running - The CPU is working on this process's instructions. • Waiting - The process cannot run at the moment, because it is waiting for some resource to become available or for some event to occur. For example the process may be waiting for keyboard input, disk access request, inter-process messages, a timer to go off, or a child process to finish. • Terminated - The process has completed.
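The five states listed above can be modelled as a small state machine. The sketch below is only illustrative: the set of allowed transitions follows the conventional five-state diagram (admit, dispatch, interrupt, I/O wait, I/O completion, exit) and is an assumption here, since the text names the states but not the arcs. The names `State`, `TRANSITIONS`, and `move` are hypothetical.

```python
from enum import Enum


class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


# Allowed transitions in the conventional five-state model (assumed here;
# real kernels define more states and more arcs).
TRANSITIONS = {
    State.NEW: {State.READY},            # admitted
    State.READY: {State.RUNNING},        # scheduler dispatch
    State.RUNNING: {
        State.READY,                     # interrupt or expired time slice
        State.WAITING,                   # I/O or event wait
        State.TERMINATED,                # exit
    },
    State.WAITING: {State.READY},        # I/O or event completion
    State.TERMINATED: set(),
}


def move(current: State, target: State) -> State:
    """Return target if the transition is legal, otherwise raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition {current.name} -> {target.name}")
    return target
```

Note that in this model a waiting process never goes straight back to running: it must first become ready and be dispatched again.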

Figure 3.2 - Diagram of process state

• Process states can be observed with Task Manager in Windows, or with ps and top on UNIX. • The load average reported by the "w" command indicates the average number of processes in the "Ready" state over the last 1, 5, and 15 minutes, i.e., processes that have everything they need to run but cannot because the CPU is busy doing something else. • Some systems may have other states besides the ones listed here.
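The same three load-average figures can be read programmatically. A minimal sketch, assuming a UNIX-like system where Python's `os.getloadavg()` is available; the wrapper name `load_averages` is hypothetical.

```python
import os


def load_averages():
    """Return the 1-, 5-, and 15-minute load averages as a tuple of floats."""
    # os.getloadavg() raises OSError if the load average is unobtainable.
    return os.getloadavg()


if __name__ == "__main__":
    one, five, fifteen = load_averages()
    print(f"load average: {one:.2f}, {five:.2f}, {fifteen:.2f}")
```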

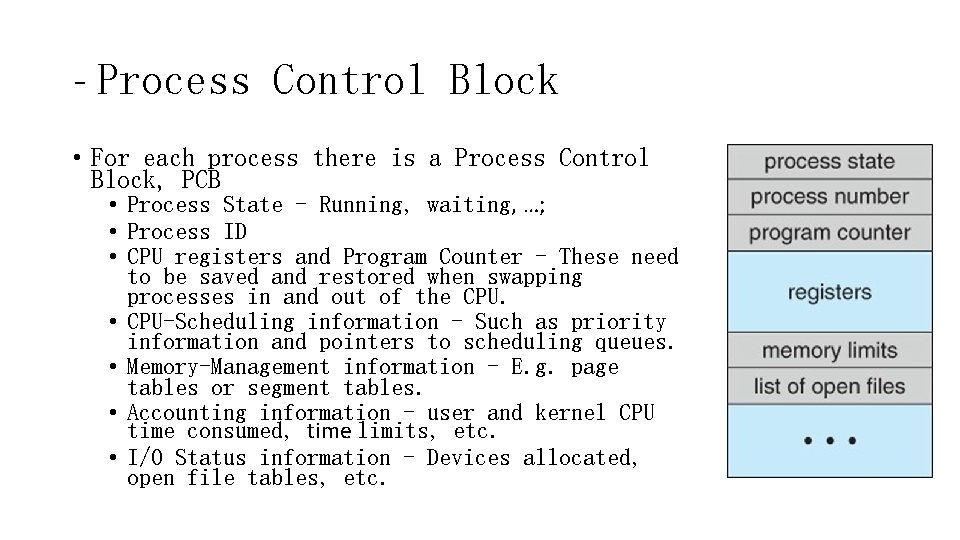

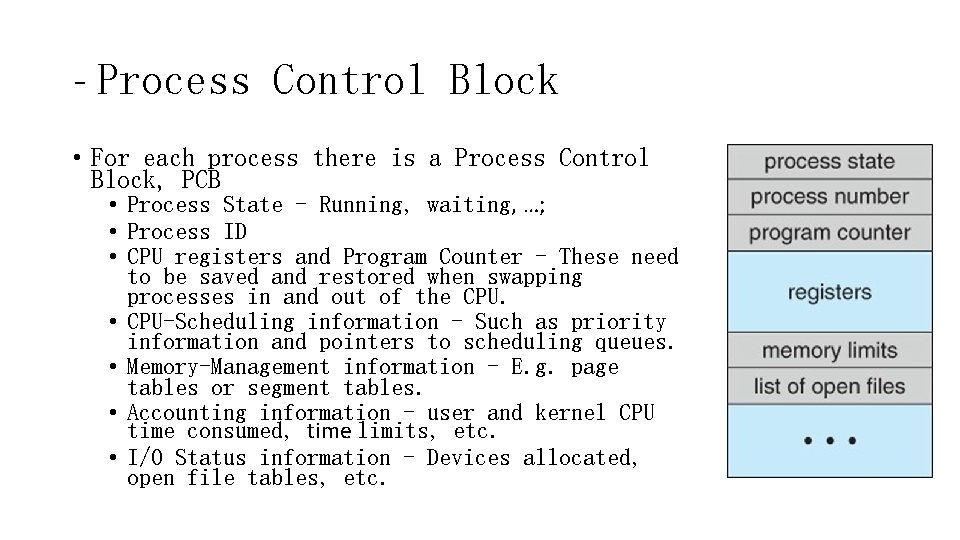

- Process Control Block • For each process there is a Process Control Block (PCB), which stores: • Process state - running, waiting, etc. • Process ID • CPU registers and program counter - These need to be saved and restored when switching processes on and off the CPU. • CPU-scheduling information - Such as priority information and pointers to scheduling queues. • Memory-management information - E.g., page tables or segment tables. • Accounting information - User and kernel CPU time consumed, time limits, etc. • I/O status information - Devices allocated, open file tables, etc.
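As a rough illustration of how these fields fit together, here is a toy PCB as a Python data class. Every field and function name is hypothetical and chosen only to mirror the list above; a real kernel structure is far larger.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PCB:
    """Toy process control block; every field name here is hypothetical."""
    pid: int
    state: str = "new"                                          # process state
    program_counter: int = 0                                    # next instruction
    registers: Dict[str, int] = field(default_factory=dict)     # CPU registers
    priority: int = 0                                           # scheduling info
    page_table: Dict[int, int] = field(default_factory=dict)    # memory management
    cpu_time_used: float = 0.0                                  # accounting info
    open_files: List[int] = field(default_factory=list)         # I/O status


# One PCB per process, indexed here by PID.
process_table: Dict[int, PCB] = {}


def create_process(pid: int, priority: int = 0) -> PCB:
    """Allocate a PCB for a new process and record it in the process table."""
    pcb = PCB(pid=pid, priority=priority)
    process_table[pid] = pcb
    return pcb
```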

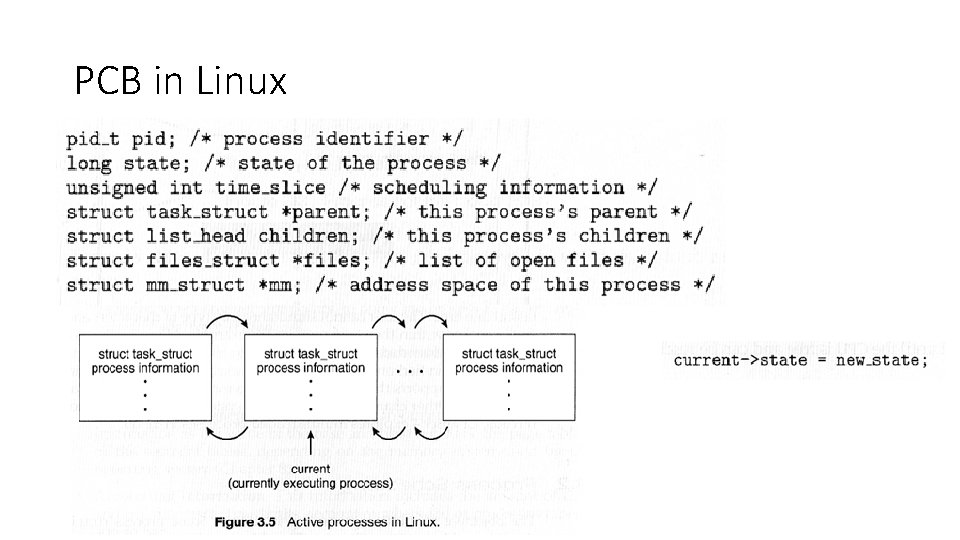

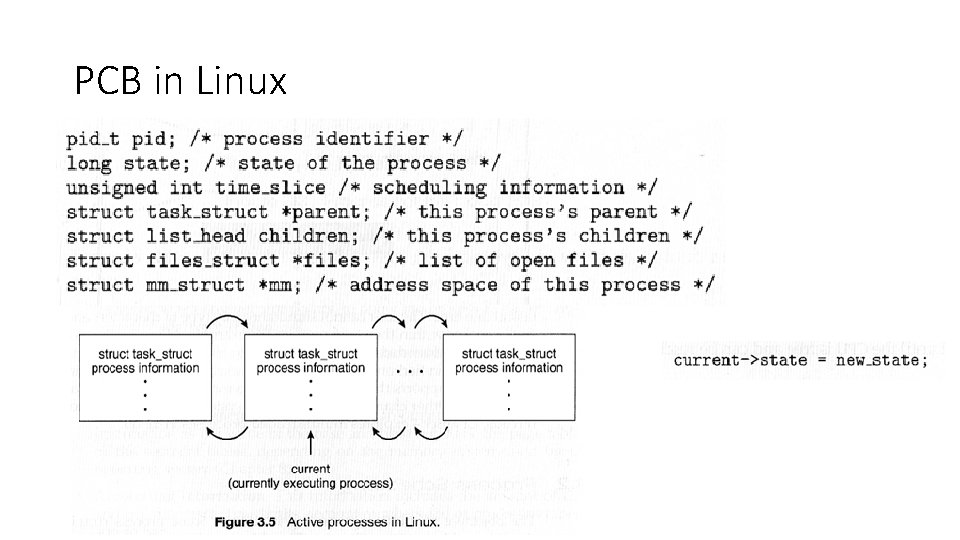

PCB in Linux

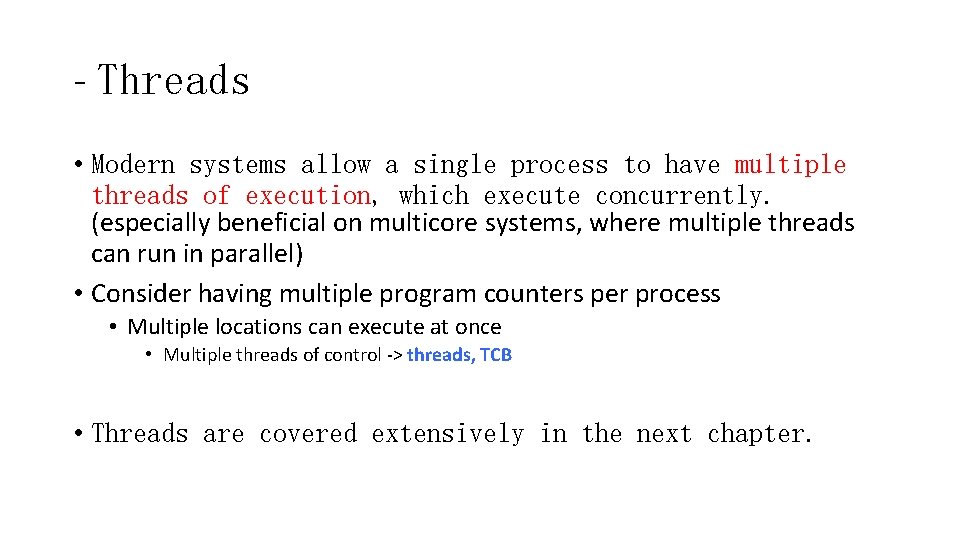

- Threads • Modern systems allow a single process to have multiple threads of execution, which execute concurrently. This is especially beneficial on multicore systems, where multiple threads can run in parallel. • Consider having multiple program counters per process. • Multiple locations in the program can then execute at once. • Each thread of control has its own state, kept in a thread control block (TCB). • Threads are covered extensively in the next chapter.

Process Scheduling • The two main objectives of the process scheduling system are to: • keep the CPU busy at all times • deliver "acceptable" response times for all programs, particularly interactive ones. • The process scheduler must meet these objectives by implementing suitable policies for switching processes on and off the CPU. • It selects, from among the available READY processes, the one to execute next on the CPU.

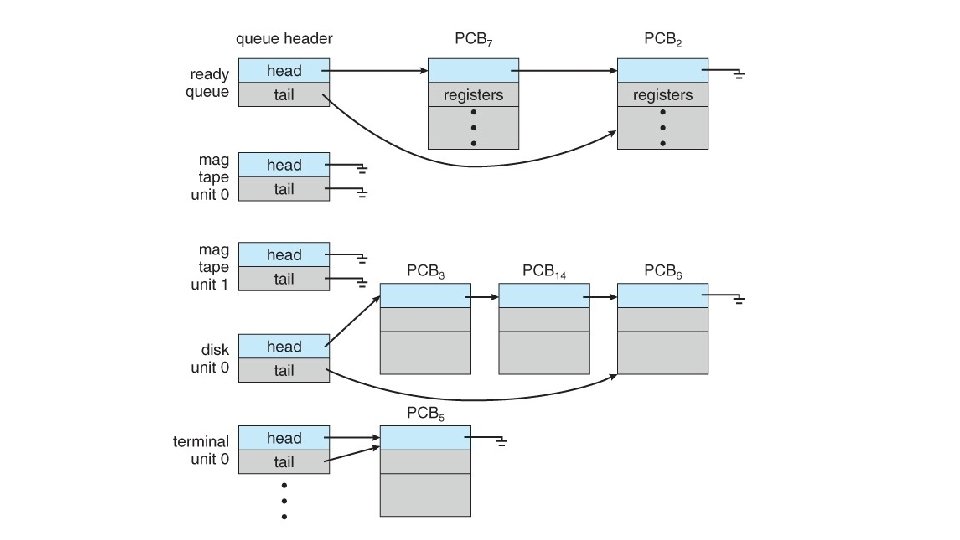

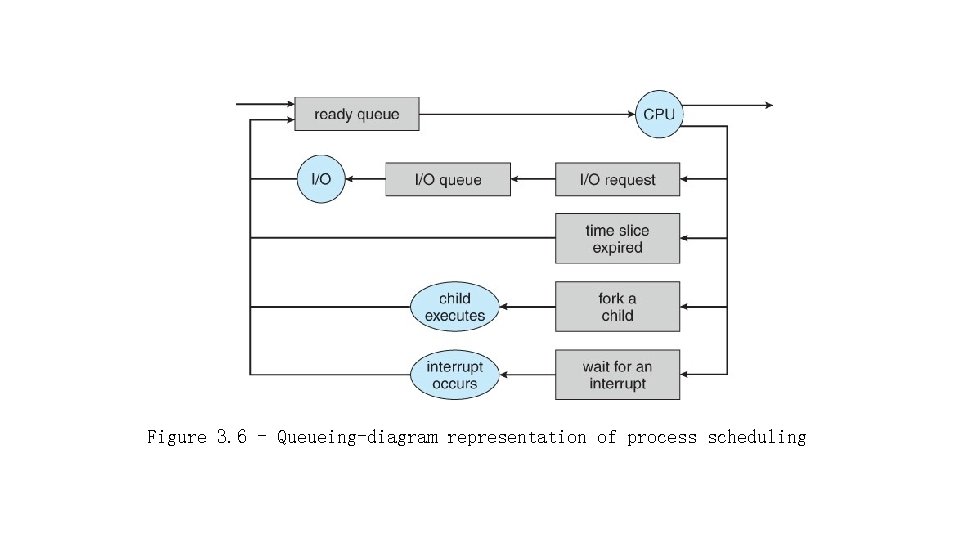

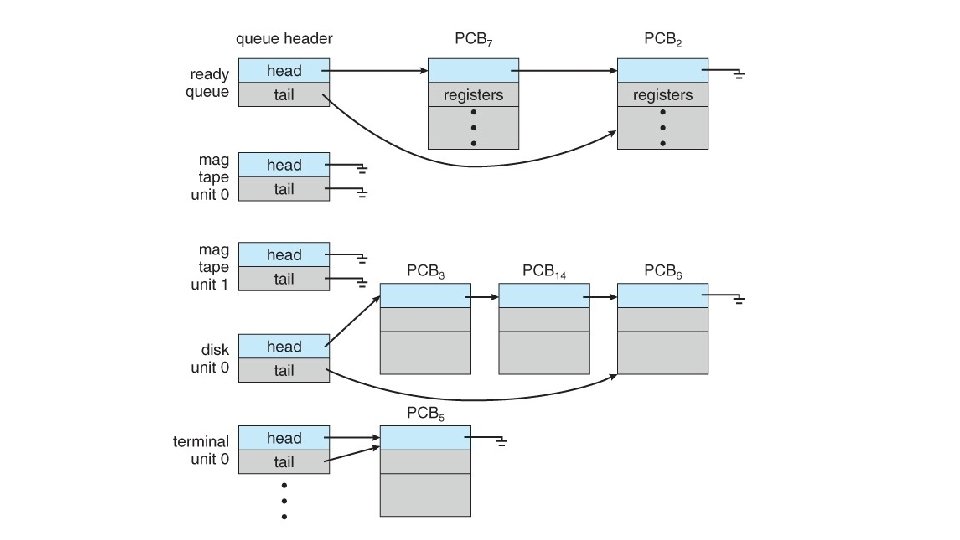

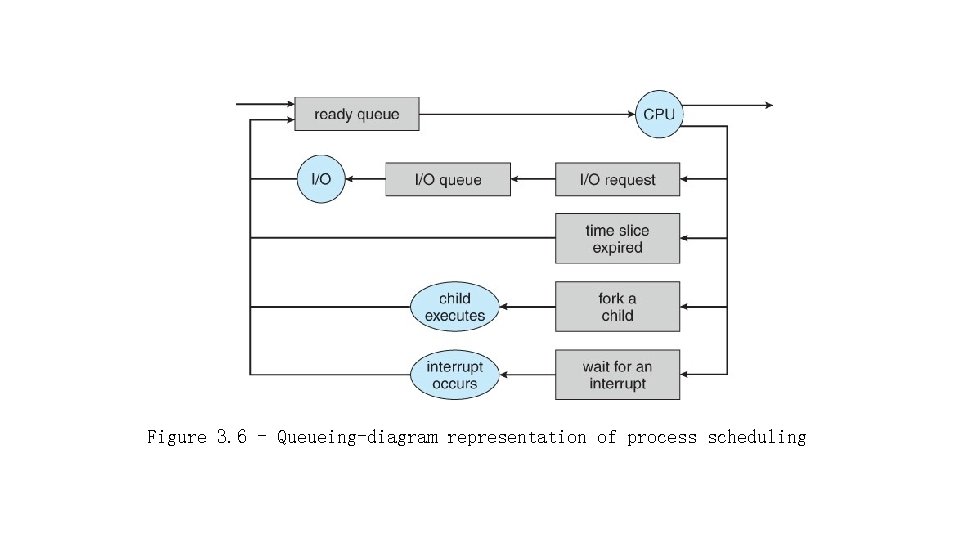

- Scheduling Queues • The operating system maintains scheduling queues of processes: • Job queue – set of all processes in the system • Ready queue – set of all processes residing in main memory, ready and waiting to execute • Device queues – set of processes waiting for an I/O device • Processes migrate among the various queues.
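The migration of processes between queues can be sketched with two FIFO queues. This is a simplified model, assuming a single I/O device and first-come, first-served ordering; the function names are hypothetical.

```python
from collections import deque

ready_queue = deque()    # processes in memory, ready to execute
device_queue = deque()   # processes waiting on one (hypothetical) I/O device


def dispatch():
    """Take the next process off the ready queue to run on the CPU."""
    return ready_queue.popleft()


def request_io(pid):
    """A running process asks for I/O and moves to the device queue."""
    device_queue.append(pid)


def io_complete():
    """The device finishes its oldest request; that process becomes ready."""
    pid = device_queue.popleft()
    ready_queue.append(pid)
    return pid


ready_queue.extend([1, 2, 3])
running = dispatch()        # process 1 gets the CPU
request_io(running)         # process 1 blocks on I/O
running = dispatch()        # process 2 gets the CPU
io_complete()               # process 1 rejoins the tail of the ready queue
```

At the end of this sequence process 2 is running, and the ready queue holds processes 3 and 1 in that order.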

Figure 3.6 - Queueing-diagram representation of process scheduling

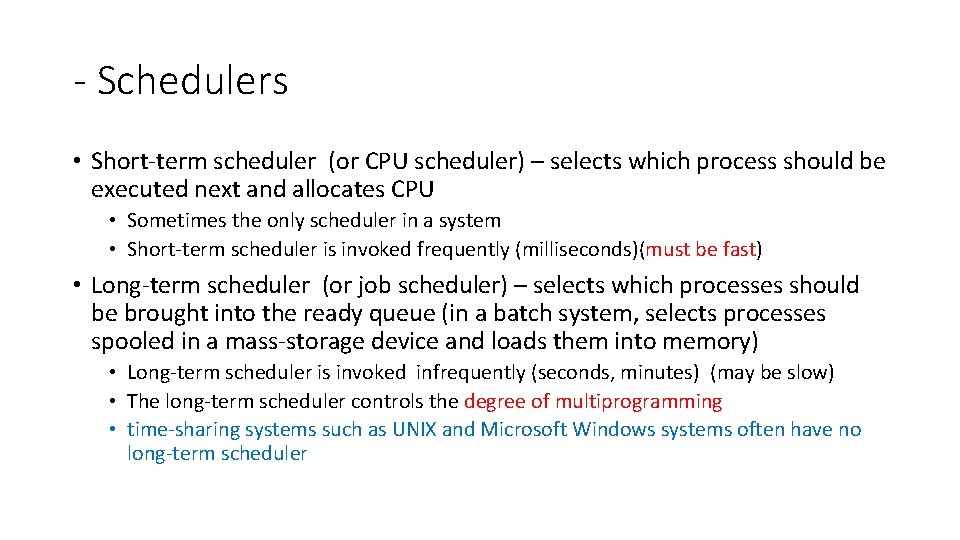

- Schedulers • Short-term scheduler (or CPU scheduler) – selects which process should be executed next and allocates the CPU • Sometimes the only scheduler in a system • The short-term scheduler is invoked frequently (every few milliseconds), so it must be fast • Long-term scheduler (or job scheduler) – selects which processes should be brought into the ready queue (in a batch system, it selects processes spooled on a mass-storage device and loads them into memory) • The long-term scheduler is invoked infrequently (seconds, minutes), so it may be slow • The long-term scheduler controls the degree of multiprogramming • Time-sharing systems such as UNIX and Microsoft Windows often have no long-term scheduler

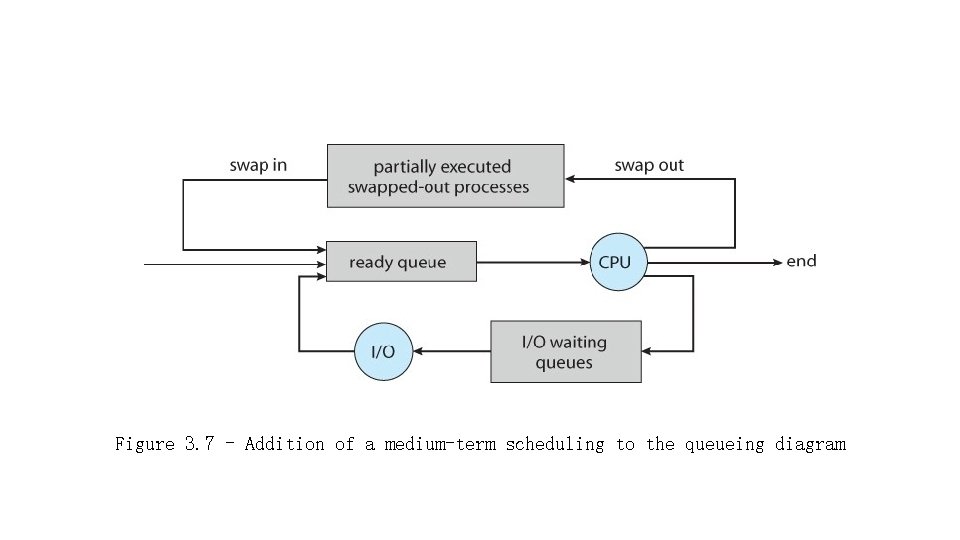

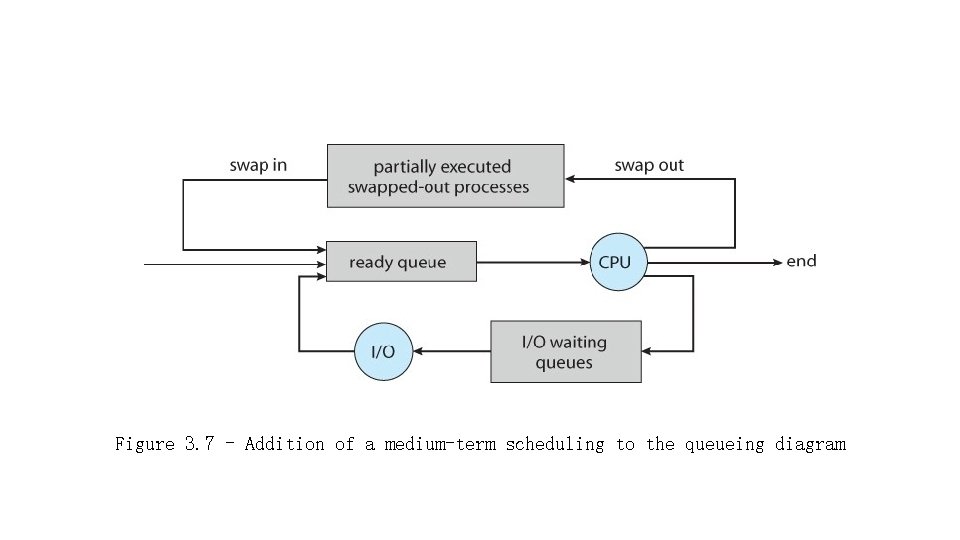

• Some systems also employ a medium-term scheduler. • It removes a process from memory, stores it on disk, and later brings it back in from disk to continue execution; this is called swapping. • An efficient scheduling system will select a good process mix of CPU-bound and I/O-bound processes. • I/O-bound process – spends more time doing I/O than computations; many short CPU bursts • CPU-bound process – spends more time doing computations; few very long CPU bursts

Figure 3.7 - Addition of medium-term scheduling to the queueing diagram

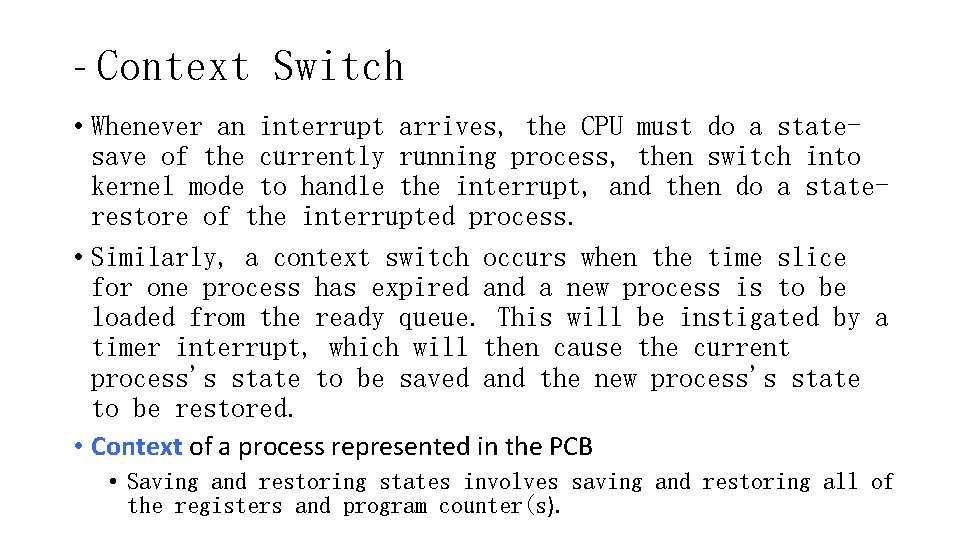

- Context Switch • Whenever an interrupt arrives, the CPU must do a state save of the currently running process, then switch into kernel mode to handle the interrupt, and then do a state restore of the interrupted process. • Similarly, a context switch occurs when the time slice for one process has expired and a new process is to be loaded from the ready queue. This is instigated by a timer interrupt, which causes the current process's state to be saved and the new process's state to be restored. • The context of a process is represented in its PCB. • Saving and restoring state involves saving and restoring all of the registers and the program counter(s).

• Context switching happens VERY frequently, and the overhead of switching is just lost CPU time, so context switches (state saves and restores) need to be as fast as possible. • Some hardware has special provisions for speeding this up, such as a single machine instruction for saving or restoring all registers at once.
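The state save and state restore described above can be sketched with a toy CPU and a stripped-down PCB. This is an illustration under simplifying assumptions (two registers, no kernel mode, no memory-management state); the names `CPU`, `PCB`, and `context_switch` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PCB:
    pid: int
    program_counter: int = 0
    registers: dict = field(default_factory=dict)


class CPU:
    """Toy CPU with a program counter and a small register file."""

    def __init__(self):
        self.program_counter = 0
        self.registers = {"r0": 0, "r1": 0}


def context_switch(cpu: CPU, old: PCB, new: PCB) -> None:
    # State save: copy the CPU context into the outgoing process's PCB.
    old.program_counter = cpu.program_counter
    old.registers = dict(cpu.registers)
    # State restore: load the incoming process's saved context onto the CPU.
    cpu.program_counter = new.program_counter
    cpu.registers = dict(new.registers)
```

Everything done inside `context_switch` is pure overhead from the point of view of the two processes, which is why the amount of state to copy matters.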

Operations on Processes • Process Creation • Process termination

- Process Creation • A parent process creates child processes, which in turn create other processes, forming a tree of processes. • Generally, a process is identified and managed via a process identifier (pid), which is used as an index to access various attributes of the process within the kernel. • Resource sharing options • Parent and children share all resources • Children share a subset of the parent’s resources • Parent and child share no resources

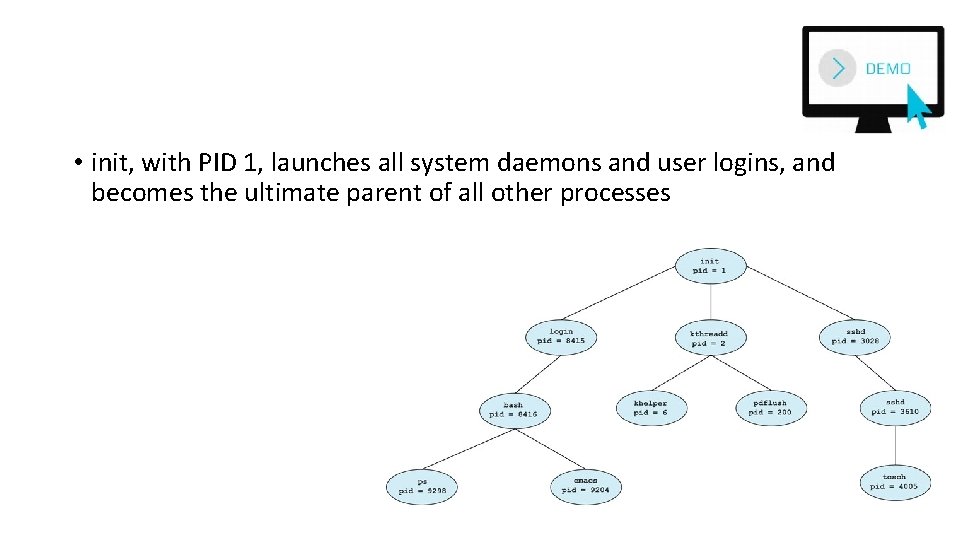

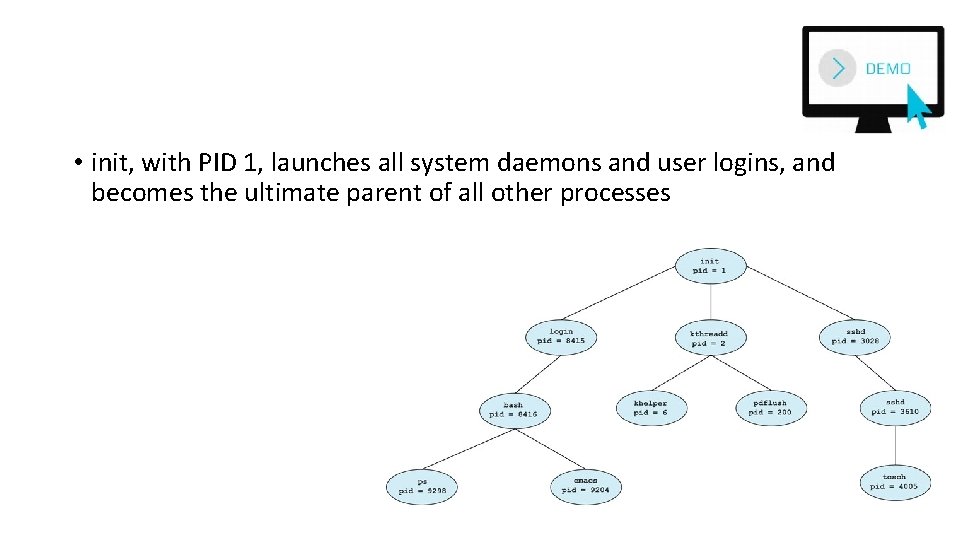

• init, with PID 1, launches all system daemons and user logins, and becomes the ultimate parent of all other processes

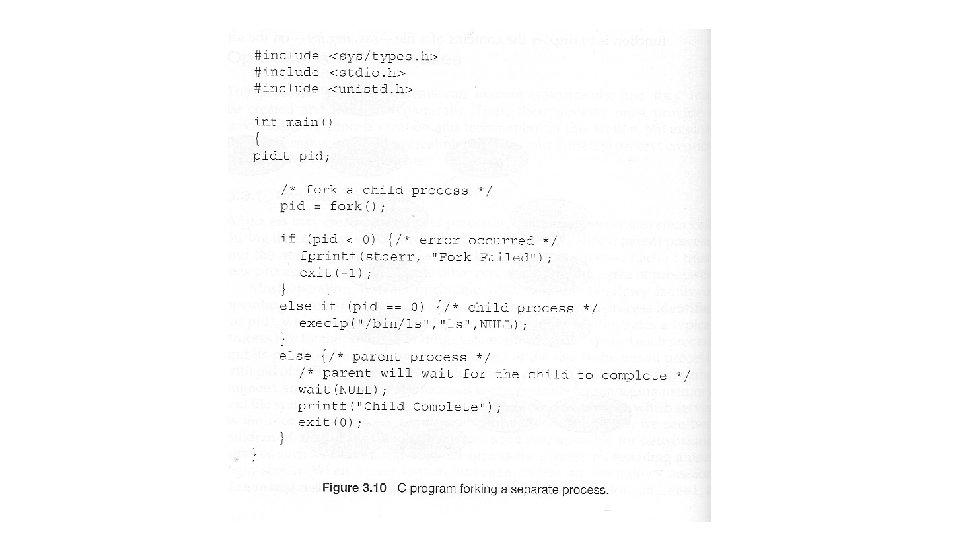

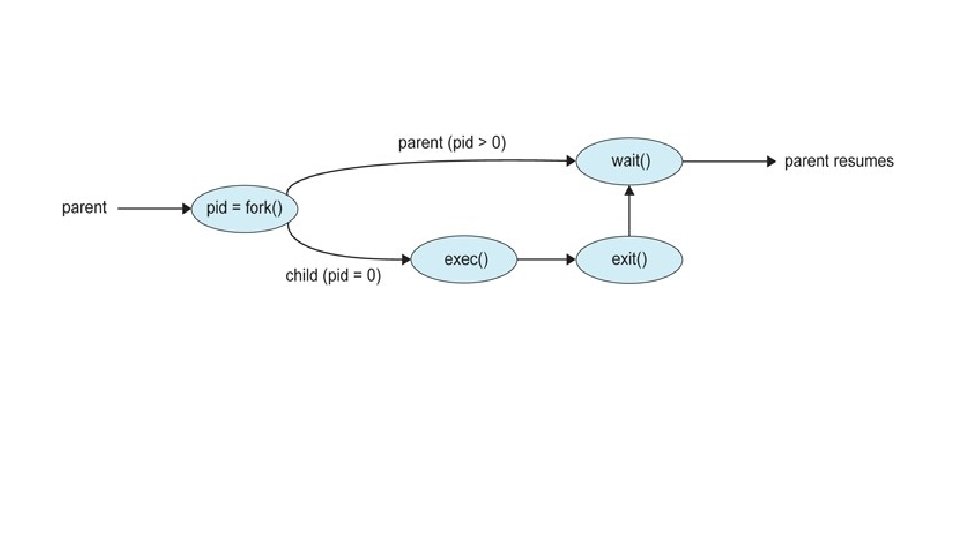

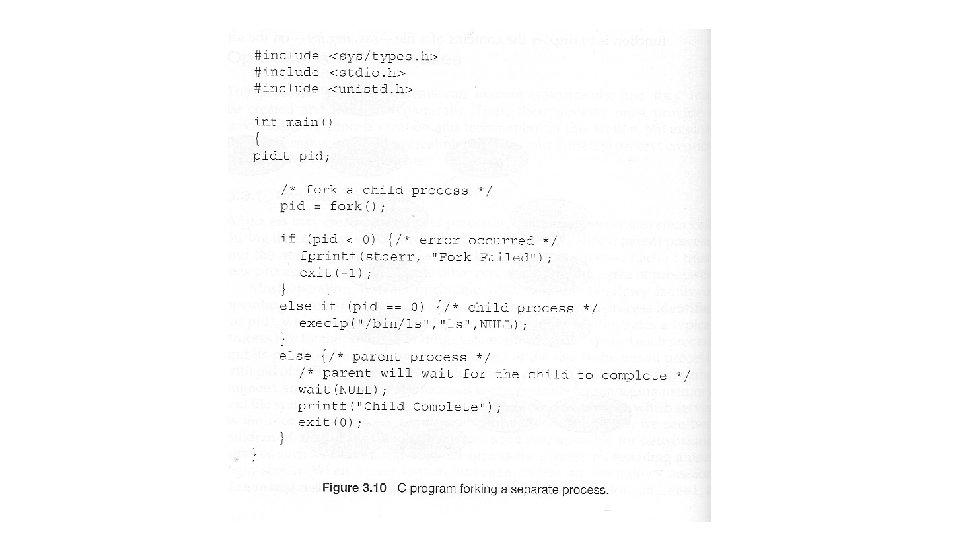

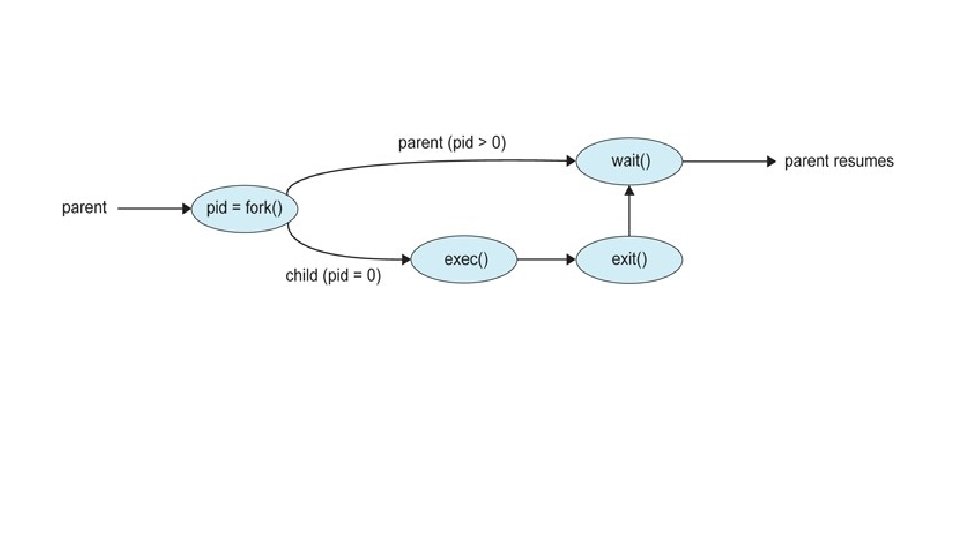

• There are two options for the parent process after creating the child: • Wait for the child process to terminate before proceeding. The parent makes a wait( ) system call, for either a specific child or for any child, which causes the parent process to block until the wait( ) returns. UNIX shells normally wait for their children to complete before issuing a new prompt. • Run concurrently with the child, continuing to process without waiting. This is the operation seen when a UNIX shell runs a process as a background task. It is also possible for the parent to run for a while and then wait for the child later, which might occur in a sort of parallel processing operation. (E.g., the parent may fork off a number of children without waiting for any of them, then do a little work of its own, and then wait for the children.)

• There are two possibilities for the address space of the child relative to the parent: • The child may be an exact duplicate of the parent, with a copy of the parent's program and data. Each process has its own PCB, including program counter, registers, and PID. This is the behavior of the fork system call in UNIX. • The child process may have a new program loaded into its address space, with all new code and data segments. This is the behavior of the spawn system calls (and of CreateProcess( )) in Windows. UNIX systems implement this as a second step, using the exec system call.
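The UNIX two-step pattern (fork, then exec in the child, while the parent waits) can be sketched with Python's thin wrappers over the same system calls. This assumes a UNIX-like system; the function name `run_child_program` and the exit code 127 used for a failed exec are illustrative choices, not part of the original notes.

```python
import os
import sys


def run_child_program(argv):
    """Fork a child, exec a new program in it, and wait for it to finish."""
    pid = os.fork()
    if pid == 0:
        # Child: replace this process image with a new program.
        try:
            os.execvp(argv[0], argv)
        finally:
            # Reached only if exec failed; leave without running parent code.
            os._exit(127)
    # Parent: block until that specific child terminates.
    _, status = os.waitpid(pid, 0)
    return os.WEXITSTATUS(status)


if __name__ == "__main__":
    code = run_child_program([sys.executable, "-c", "print('hello from child')"])
    print("child exited with", code)
```

Dropping the `os.waitpid` call (or deferring it) gives the second option described above, where the parent keeps running concurrently with the child.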

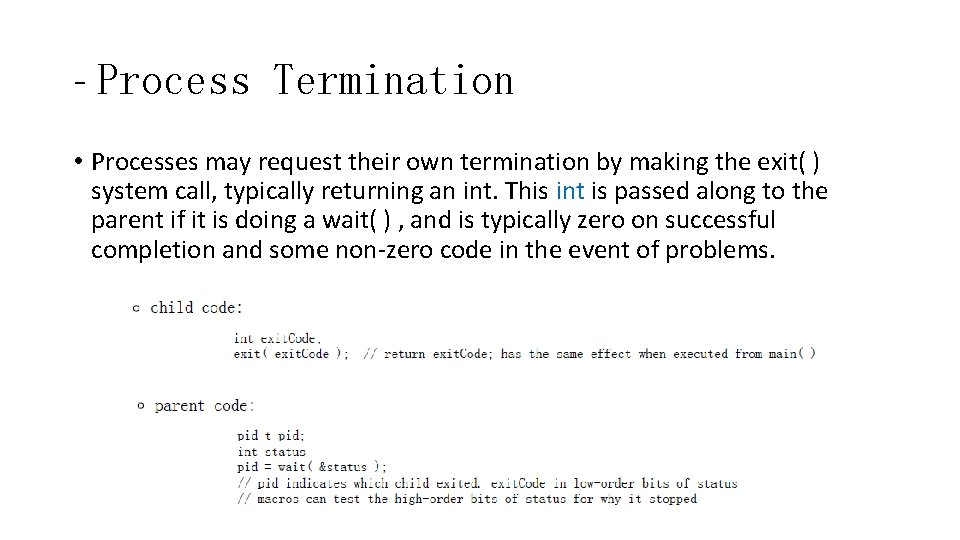

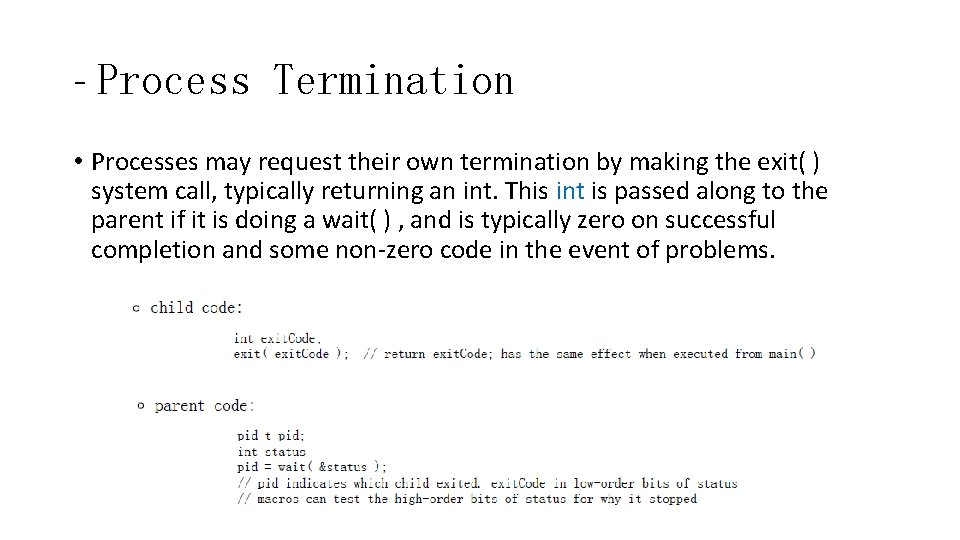

- Process Termination • Processes may request their own termination by making the exit( ) system call, typically returning an int. This int is passed along to the parent if it is doing a wait( ) , and is typically zero on successful completion and some non-zero code in the event of problems.
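How the exit value reaches a waiting parent can be shown in a few lines. A minimal sketch, assuming a UNIX-like system; `child_exit_status` is a hypothetical helper name.

```python
import os


def child_exit_status(code):
    """Fork a child that exits with `code`; return what the parent's wait sees."""
    pid = os.fork()
    if pid == 0:
        os._exit(code)               # child: terminate immediately with this status
    _, status = os.waitpid(pid, 0)   # parent: collect the child's status
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    return None                      # child ended some other way, e.g. by a signal
```

Only the low 8 bits of the exit value are reported to the parent, so codes should stay in the range 0 to 255.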

• A parent may terminate the execution of its child processes using the abort() system call. Some reasons for doing so: • The child has exceeded its allocated resources • The task assigned to the child is no longer required • The parent is exiting and the operating system does not allow a child to continue if its parent terminates

• Processes may also be terminated by the system for a variety of reasons, including: • In response to a KILL command, or other unhandled process interrupt (signal).

• Some operating systems do not allow a child to exist if its parent has terminated. If a process terminates, then all its children must also be terminated. • This is called cascading termination: all children, grandchildren, etc. are terminated. • The termination is initiated by the operating system. • When a process terminates, its resources are deallocated by the operating system. However, its entry in the process table must remain there until the parent calls wait(), because the process table contains the process’s exit status. • A process that has terminated but whose parent has not yet invoked wait() is a zombie. • If the parent terminated without invoking wait(), the process is an orphan.

Interprocess Communication • Processes within a system may be independent or cooperating. • Cooperating processes are those that can affect or be affected by other processes. There are several reasons for allowing processes to cooperate: • Information sharing - Allows concurrent access to shared information. • Computation speedup - A problem can often be solved faster if it can be broken down into subtasks that are solved simultaneously (particularly when multiple processors are involved). • Modularity - The most efficient architecture may be to break a system down into cooperating modules (processes). • Convenience - Even a single user may be multitasking, such as editing, compiling, printing, and running the same code in different windows.

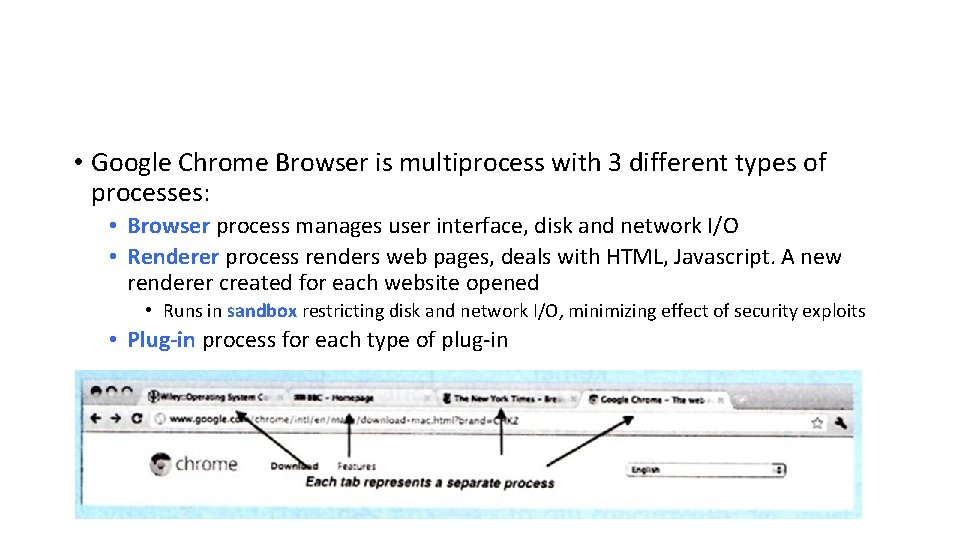

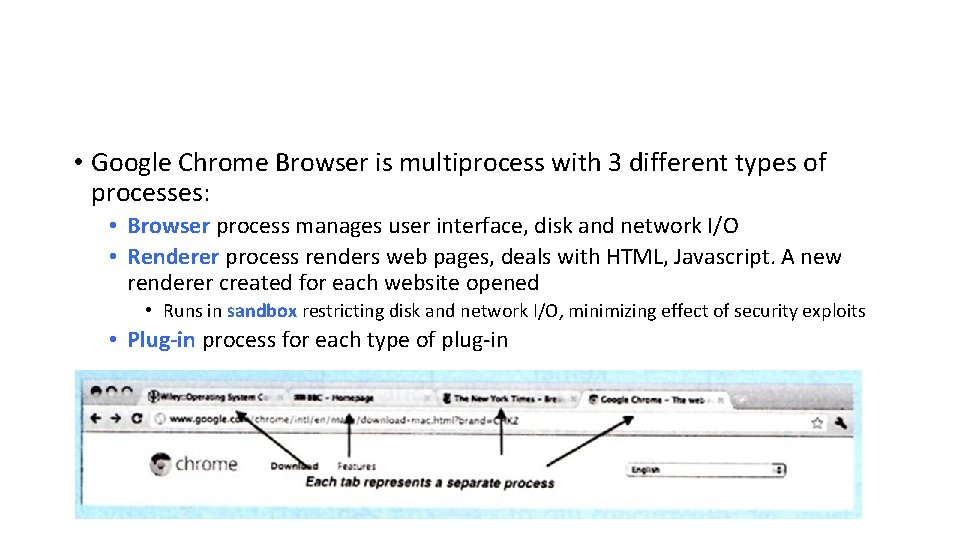

• The Google Chrome browser is multiprocess, with 3 different types of processes: • The browser process manages the user interface, disk and network I/O • A renderer process renders web pages and deals with HTML and JavaScript. A new renderer is created for each website opened • Each renderer runs in a sandbox that restricts disk and network I/O, minimizing the effect of security exploits • A plug-in process is created for each type of plug-in

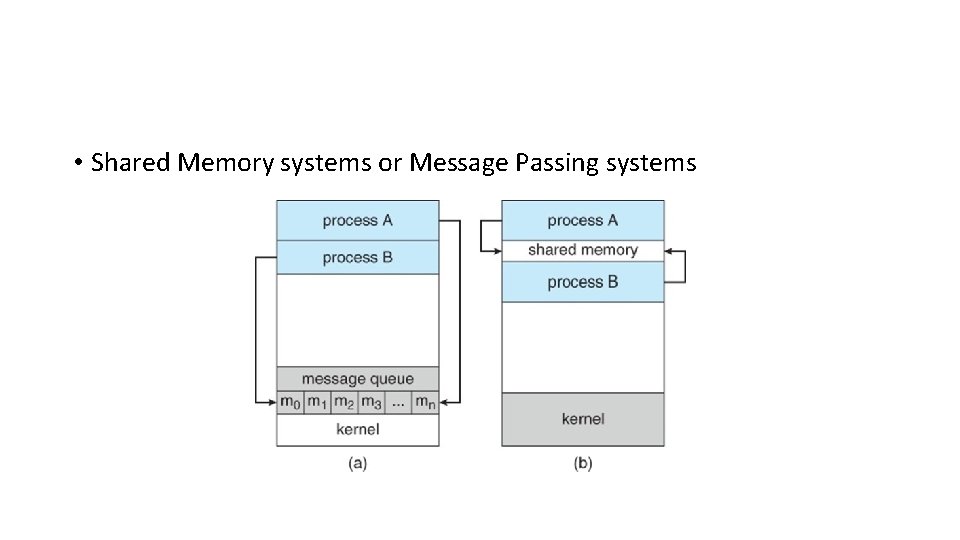

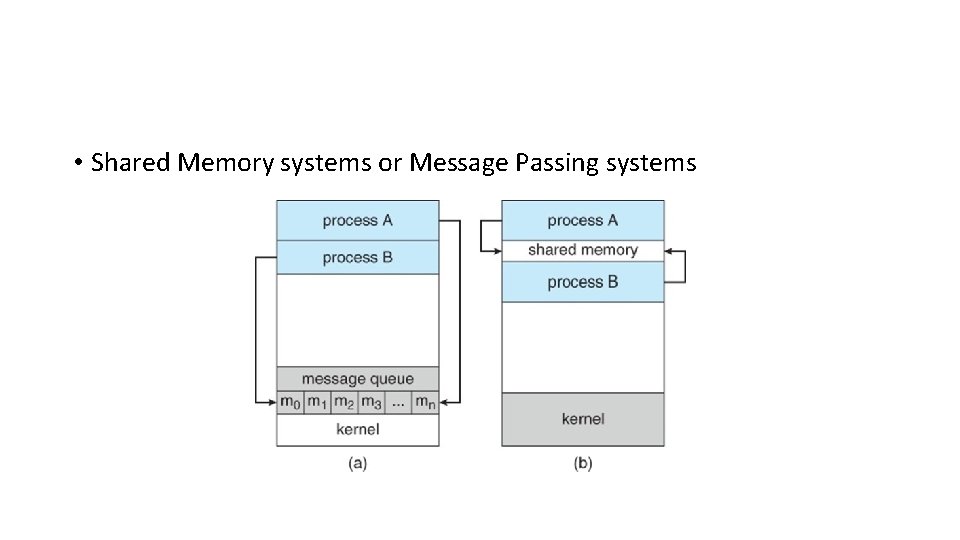

• Cooperating processes communicate using either shared-memory systems or message-passing systems.

• Shared memory is faster once it is set up, because no system calls are required and access occurs at normal memory speeds. However, it is more complicated to set up, and doesn't work as well across multiple computers. Shared memory is generally preferable when large amounts of information must be shared quickly on the same computer. • Message passing requires system calls for every message transfer, and is therefore slower, but it is simpler to set up and works well across multiple computers. Message passing is generally preferable when the amount and/or frequency of data transfers is small, or when multiple computers are involved.

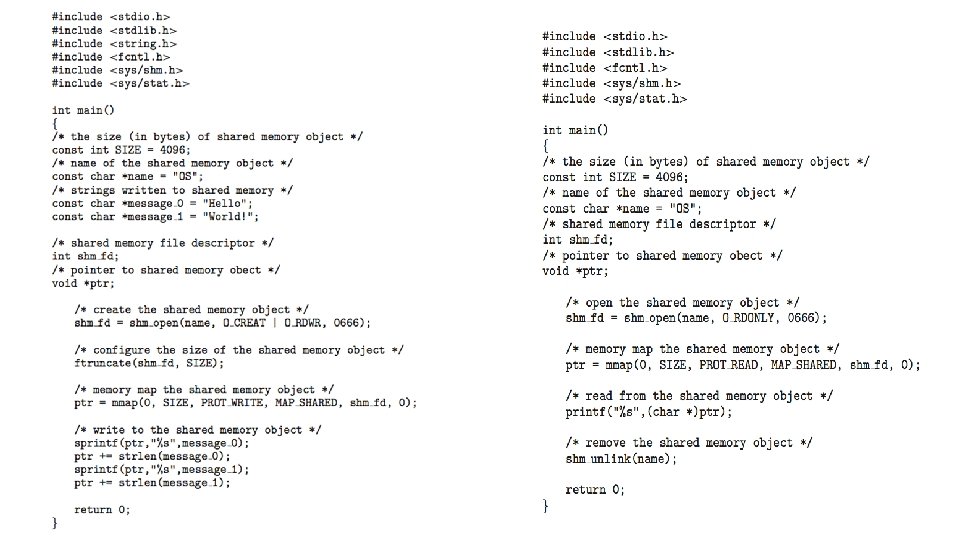

- Shared-Memory Systems • Normally, the operating system tries to prevent one process from accessing another process’s memory. • A shared-memory region resides in the address space of the process that creates the shared-memory segment. Other processes that wish to communicate using this segment must attach it to their own address space. • The processes exchange information by reading and writing data in the shared areas. • The major issue is to provide a mechanism that allows the user processes to synchronize their actions when they access shared memory.
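The create, attach, read/write, and detach steps can be sketched with Python's `multiprocessing.shared_memory` module (Python 3.8 or later). To stay self-contained, this sketch attaches a second handle inside the same process; in real use a different process would attach using the segment's name. It performs no synchronization, which is exactly the issue the last bullet raises.

```python
from multiprocessing import shared_memory

# Creator: allocate a named shared-memory segment and write into it.
segment = shared_memory.SharedMemory(create=True, size=16)
segment.buf[:5] = b"hello"

# Another process would attach using the segment's name; here a second
# handle is attached in the same process to keep the sketch self-contained.
attached = shared_memory.SharedMemory(name=segment.name)
received = bytes(attached.buf[:5])

attached.close()     # detach this handle
segment.close()
segment.unlink()     # the creator removes the segment when it is no longer needed
```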

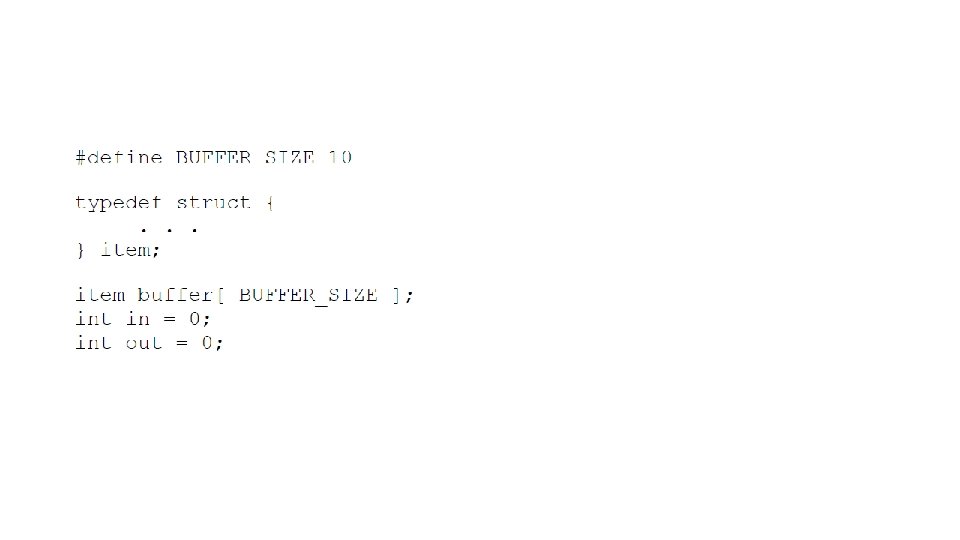

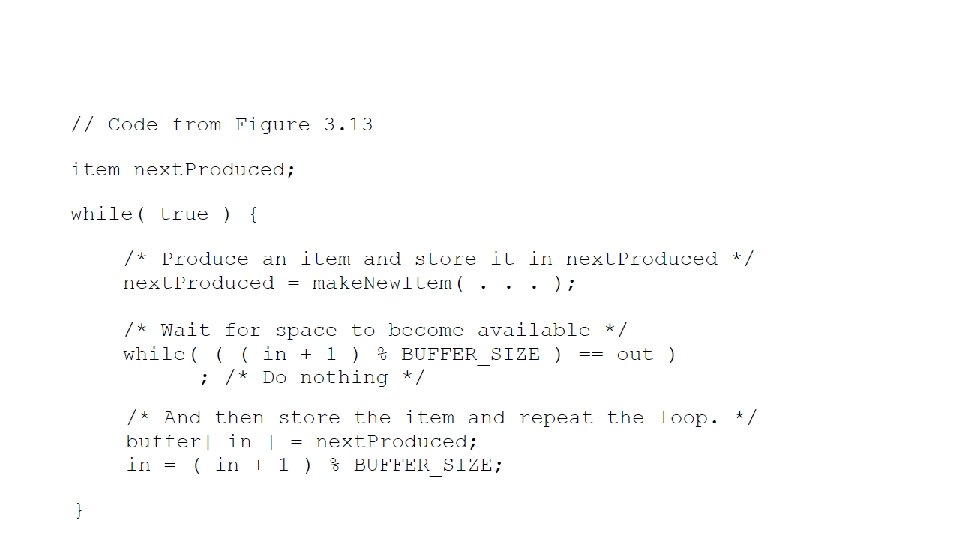

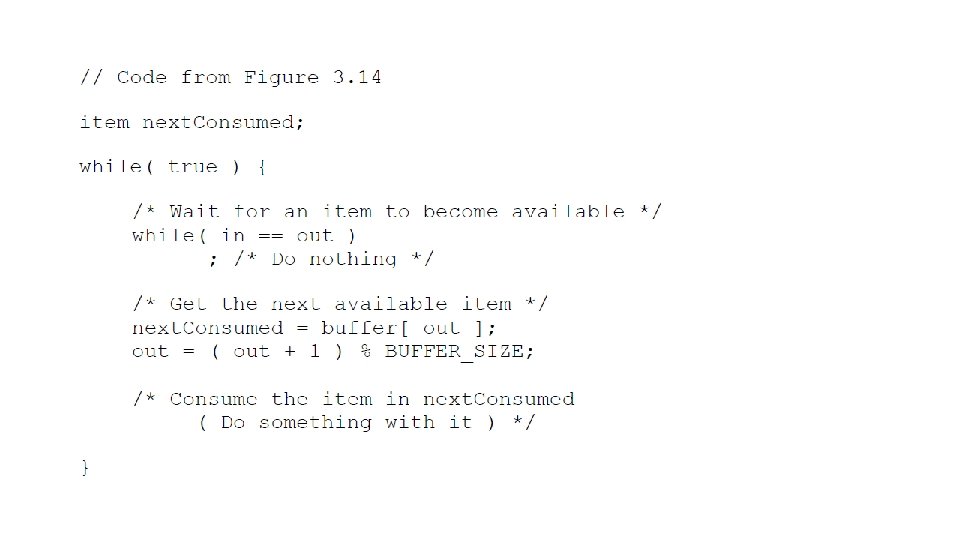

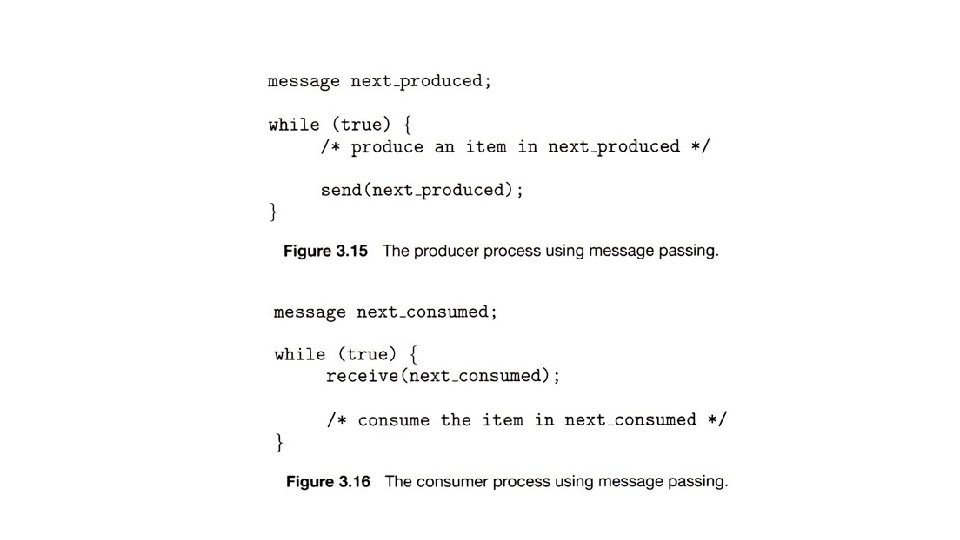

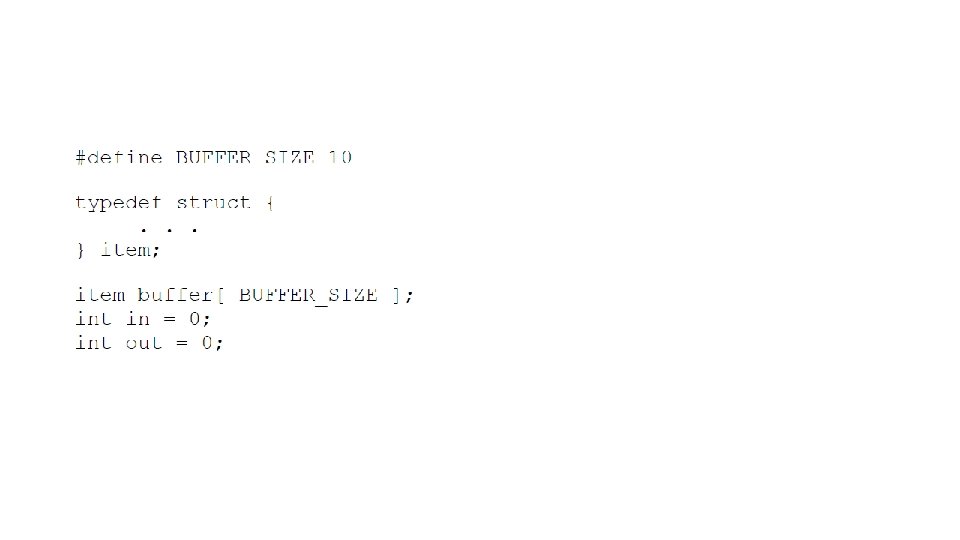

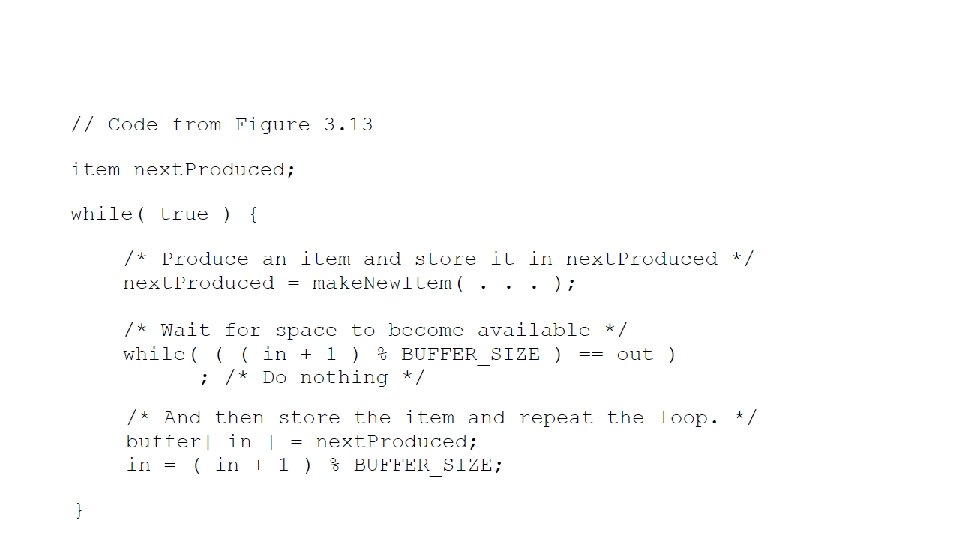

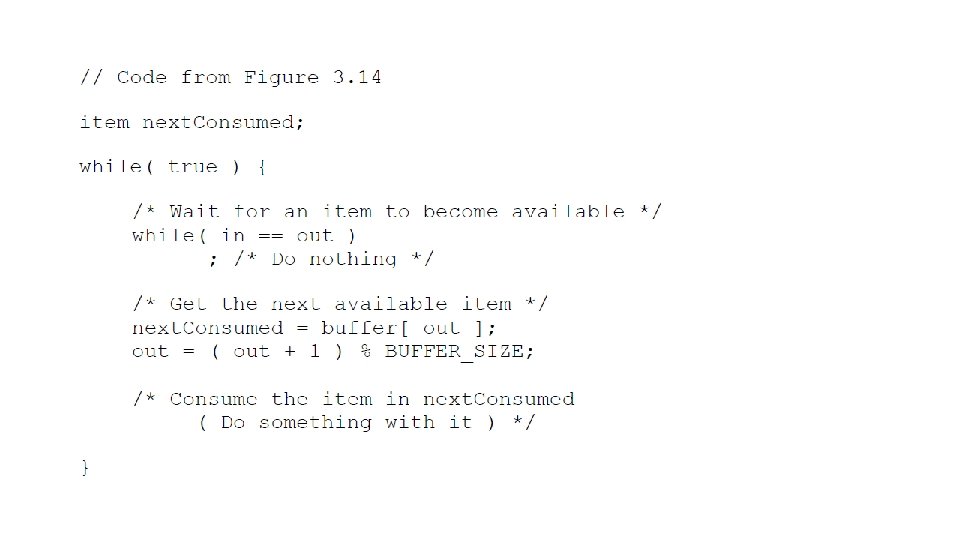

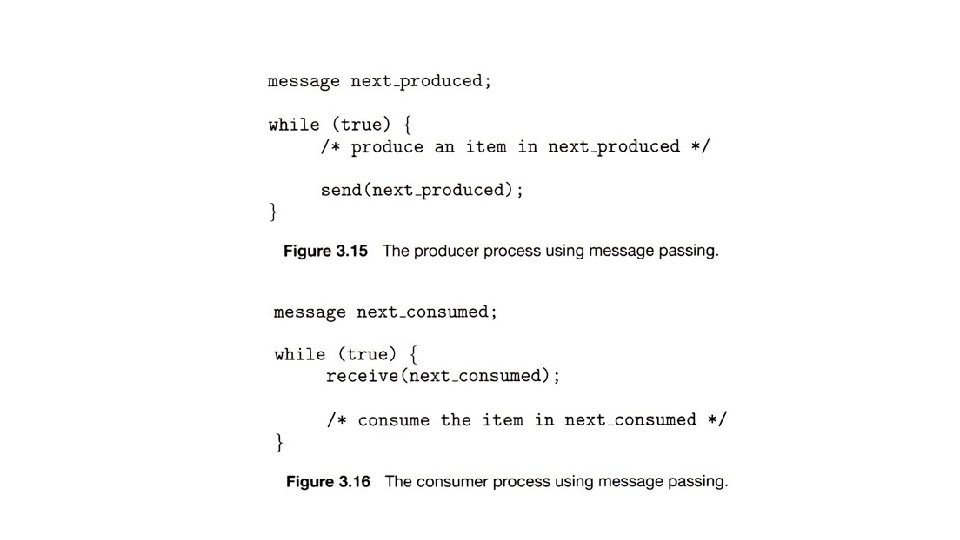

Producer-Consumer Example Using Shared Memory • This is a classic example, in which one process is producing data and another process is consuming the data. • The data is passed via an intermediary buffer, which may be either unbounded or bounded. • With a bounded buffer, the producer may have to wait until there is space available in the buffer. • In either case, the consumer may need to wait until there is data available.
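A bounded buffer with both waiting conditions can be sketched as a circular array. Note the substitutions: this sketch uses two threads within one process rather than two processes sharing a memory segment, and it waits on a condition variable rather than busy-waiting. All names are hypothetical.

```python
import threading

BUFFER_SIZE = 4
buffer = [None] * BUFFER_SIZE
count = 0            # number of filled slots
in_pos = 0           # next slot to fill
out_pos = 0          # next slot to empty
cond = threading.Condition()


def produce(item):
    global count, in_pos
    with cond:
        while count == BUFFER_SIZE:      # buffer full: producer waits
            cond.wait()
        buffer[in_pos] = item
        in_pos = (in_pos + 1) % BUFFER_SIZE
        count += 1
        cond.notify_all()


def consume():
    global count, out_pos
    with cond:
        while count == 0:                # buffer empty: consumer waits
            cond.wait()
        item = buffer[out_pos]
        out_pos = (out_pos + 1) % BUFFER_SIZE
        count -= 1
        cond.notify_all()
        return item


def run(n):
    """Produce 0..n-1 in one thread and consume them in another."""
    consumed = []

    def producer_task():
        for i in range(n):
            produce(i)

    def consumer_task():
        for _ in range(n):
            consumed.append(consume())

    producer = threading.Thread(target=producer_task)
    consumer = threading.Thread(target=consumer_task)
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return consumed
```

Because the buffer holds only four items, a call such as `run(10)` forces the producer to wait several times, yet the items still come out in the order they went in.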

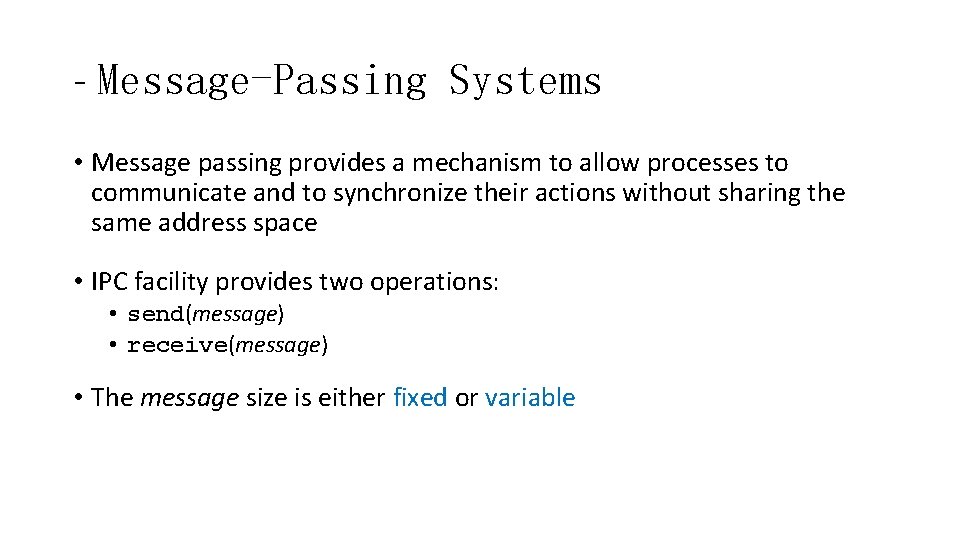

- Message-Passing Systems • Message passing provides a mechanism to allow processes to communicate and to synchronize their actions without sharing the same address space • IPC facility provides two operations: • send(message) • receive(message) • The message size is either fixed or variable

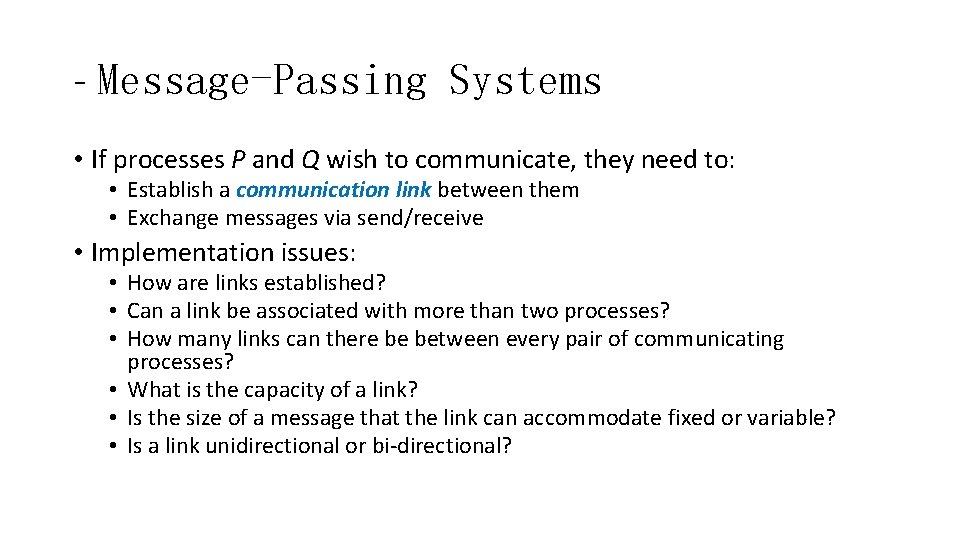

- Message-Passing Systems • If processes P and Q wish to communicate, they need to: • Establish a communication link between them • Exchange messages via send/receive • Implementation issues: • How are links established? • Can a link be associated with more than two processes? • How many links can there be between every pair of communicating processes? • What is the capacity of a link? • Is the size of a message that the link can accommodate fixed or variable? • Is a link unidirectional or bi-directional?

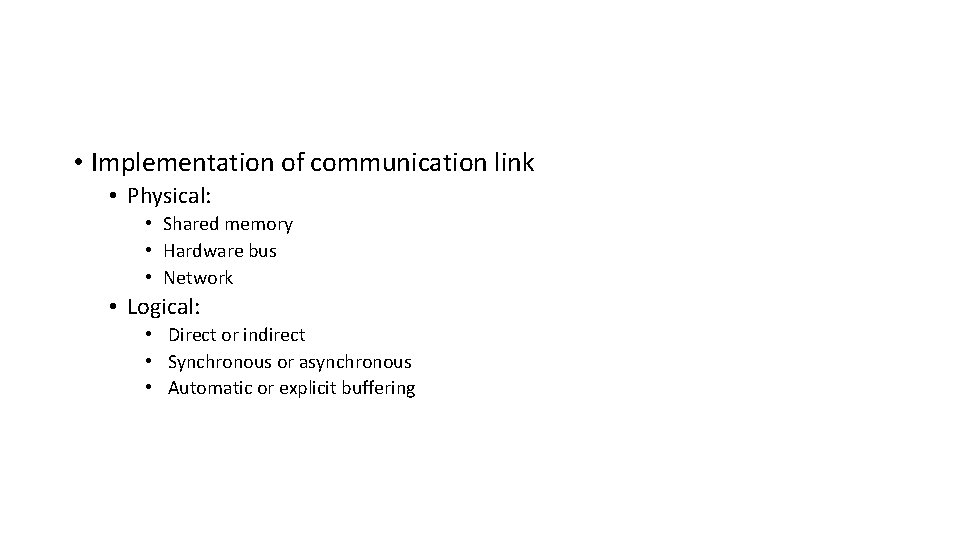

• Implementation of communication link • Physical: • Shared memory • Hardware bus • Network • Logical: • Direct or indirect • Synchronous or asynchronous • Automatic or explicit buffering

Direct Communication • Processes must name each other explicitly: • send (P, message) – send a message to process P • receive(Q, message) – receive a message from process Q • Properties of communication link • A link is established automatically between every pair of processes that want to communicate • A link is associated with exactly one pair of communicating processes • Between each pair there exists exactly one link • The link may be unidirectional, but is usually bi-directional

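• A variant uses asymmetry in addressing, in which only the sender names the recipient: • send(P, message) – send a message to process P • receive(id, message) – receive a message from any process; the variable id is set to the name of the process with which communication has taken place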

Indirect Communication • Messages are sent to and received from mailboxes (also referred to as ports) • Each mailbox has a unique id • Processes can communicate only if they share a mailbox • Properties of communication link • A link is established only if processes share a common mailbox • A link may be associated with many processes • Each pair of processes may share several communication links • A link may be unidirectional or bi-directional

• Operations • create a new mailbox (port) • send and receive messages through the mailbox • destroy a mailbox • Primitives are defined as: • send(A, message) – send a message to mailbox A • receive(A, message) – receive a message from mailbox A

• Mailbox sharing • P1, P2, and P3 share mailbox A • P1 sends; P2 and P3 receive • Who gets the message? • Solutions • Allow a link to be associated with at most two processes • Allow only one process at a time to execute a receive operation • Allow the system to select the receiver arbitrarily. The sender is notified who the receiver was.

• Indirect communication uses shared mailboxes, or ports. • Multiple processes can share the same mailbox or boxes. • Only one process can read any given message in a mailbox. Initially the process that creates the mailbox is the owner, and is the only one allowed to read mail in the mailbox, although this privilege may be transferred. • The OS must provide system calls to create and delete mailboxes, and to send and receive messages to/from mailboxes.

- Synchronization • Message passing may be either blocking or non-blocking • Blocking is considered synchronous • Blocking send -- the sender is blocked until the message is transferred or received • Blocking receive -- the receiver is blocked until a message is available • Non-blocking is considered asynchronous • Non-blocking send -- the sender sends the message and continues • Non-blocking receive -- the receiver receives either a valid message or a null message
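The difference between the two receive styles can be sketched with a thread-safe queue standing in for the message link. This is an analogy inside one process rather than true interprocess messaging; `mailbox`, `nonblocking_receive`, and `blocking_receive` are hypothetical names, and Python's `None` plays the role of the null message.

```python
import queue
import threading

mailbox = queue.Queue()


def nonblocking_receive(box):
    """Return a message if one is waiting, otherwise None (the null message)."""
    try:
        return box.get_nowait()
    except queue.Empty:
        return None


def blocking_receive(box):
    """Block the caller until a message is available."""
    return box.get()


first = nonblocking_receive(mailbox)     # nothing has been sent yet

# A sender thread delivers a message after a short delay; the blocking
# receive below waits for it.
sender = threading.Timer(0.1, mailbox.put, args=("ping",))
sender.start()
second = blocking_receive(mailbox)
sender.join()
```

Here `first` is `None` because the non-blocking receive returns immediately, while `second` is the message that the blocking receive waited for.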

- Buffering • Messages are passed via queues, which may have one of three capacity configurations: 1. Zero capacity - Messages cannot be stored in the queue, so senders must block until receivers accept the messages. 2. Bounded capacity - There is a certain pre-determined finite capacity in the queue. Senders must block if the queue is full, until space becomes available in the queue, but may be either blocking or non-blocking otherwise. 3. Unbounded capacity - The queue has a theoretically infinite capacity, so senders never need to block.

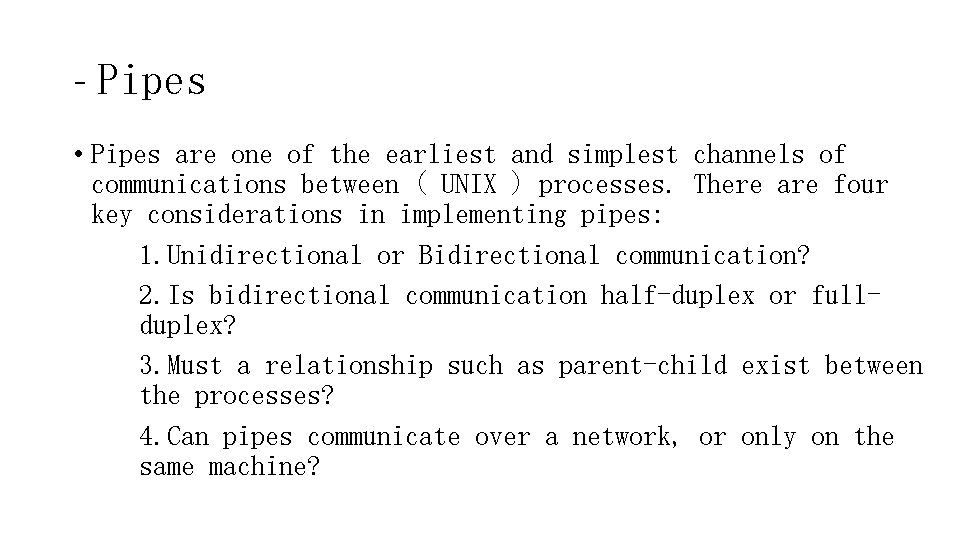

- Pipes • Pipes are one of the earliest and simplest channels of communication between (UNIX) processes. There are four key considerations in implementing pipes: 1. Is communication unidirectional or bidirectional? 2. Is bidirectional communication half-duplex or full-duplex? 3. Must a relationship such as parent-child exist between the processes? 4. Can pipes communicate over a network, or only on the same machine?

• Ordinary pipes – cannot be accessed from outside the process that created them. Typically, a parent process creates a pipe and uses it to communicate with a child process that it created. • Named pipes – can be accessed without a parent-child relationship.

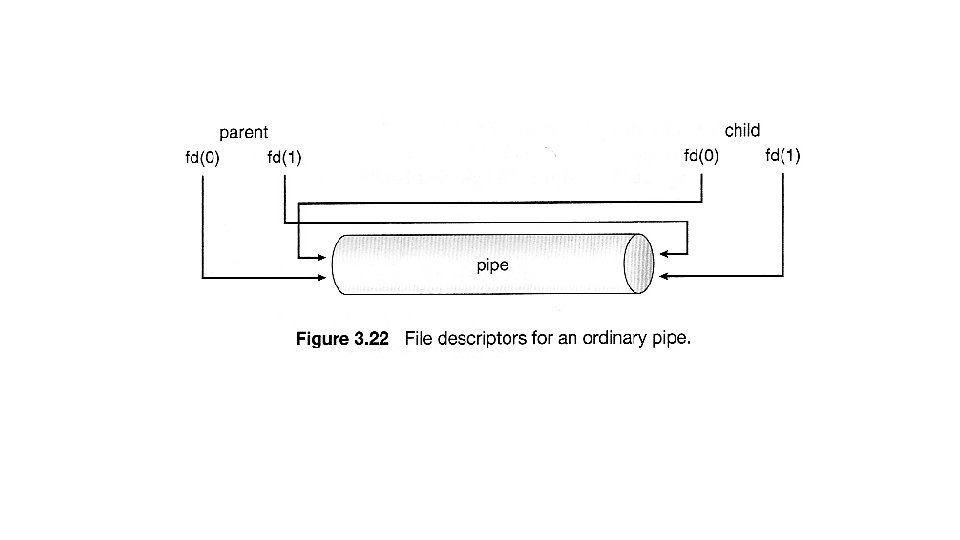

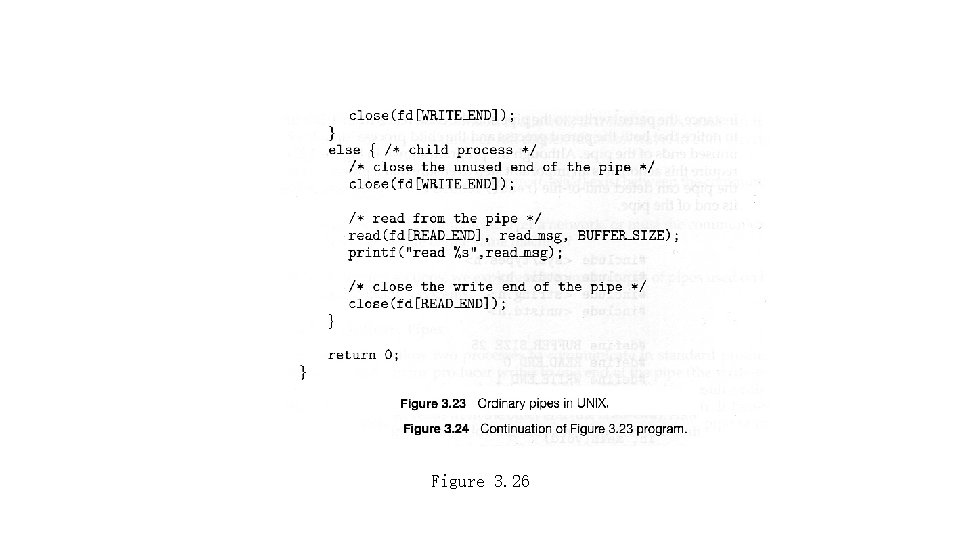

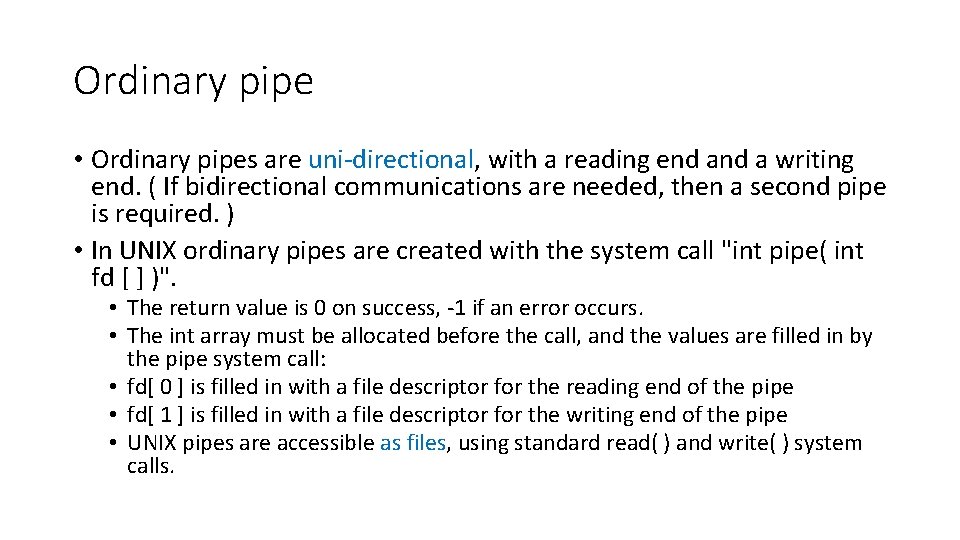

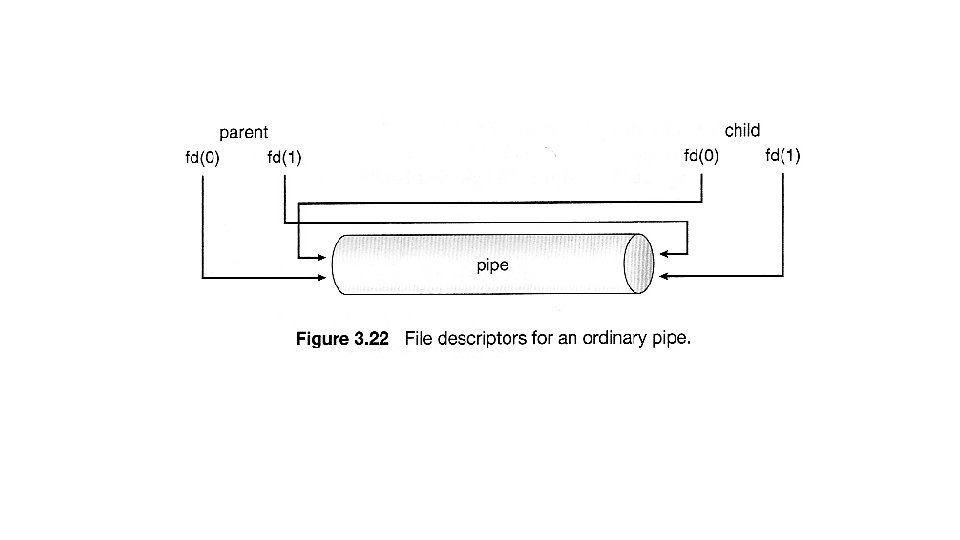

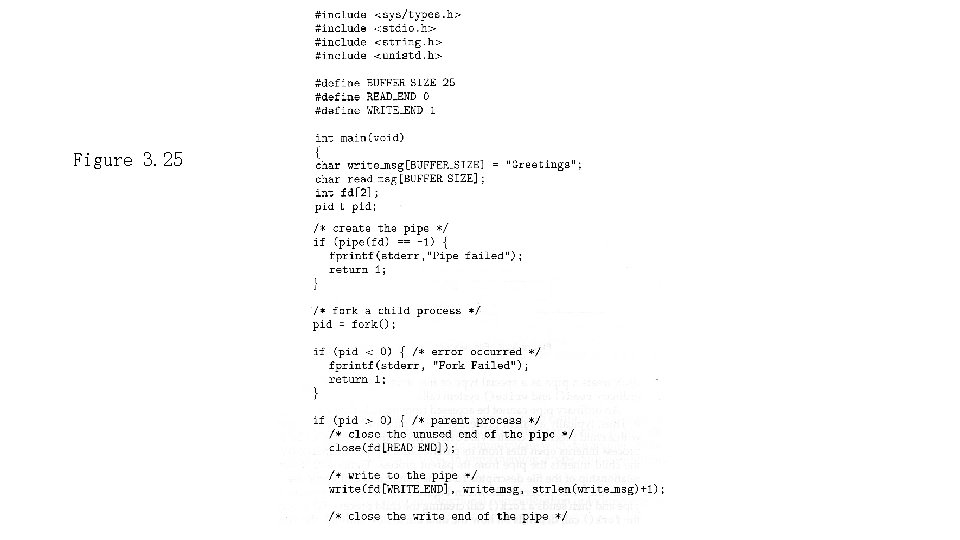

Ordinary pipe • Ordinary pipes are unidirectional, with a reading end and a writing end. (If bidirectional communication is needed, then a second pipe is required.) • In UNIX, ordinary pipes are created with the system call "int pipe( int fd [ ] )". • The return value is 0 on success, -1 if an error occurs. • The int array must be allocated before the call, and the values are filled in by the pipe system call: • fd[ 0 ] is filled in with a file descriptor for the reading end of the pipe • fd[ 1 ] is filled in with a file descriptor for the writing end of the pipe • UNIX pipes are accessible as files, using the standard read( ) and write( ) system calls.

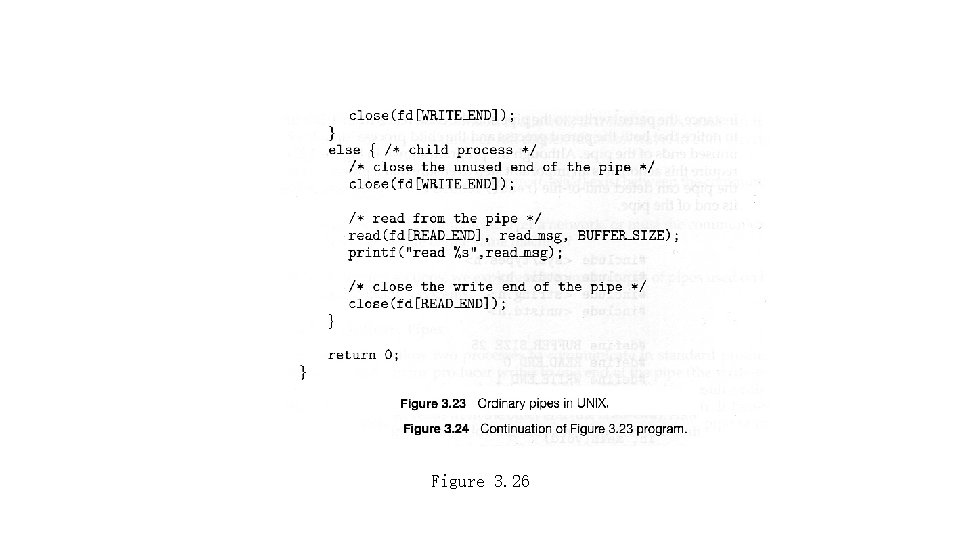

• Ordinary pipes are only accessible within the process that created them. • Typically a parent creates the pipe before forking off a child. • When the child inherits open files from its parent, including the pipe file(s), a channel of communication is established. • Each process ( parent and child ) should first close the ends of the pipe that they are not using. For example, if the parent is writing to the pipe and the child is reading, then the parent should close the reading end of its pipe after the fork and the child should close the writing end.
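The create, fork, close-unused-ends sequence can be sketched with Python's wrappers over the same UNIX calls. Because each pipe carries data in one direction only, this sketch uses two pipes so that the child can send a reply back; the function name and the upper-casing behavior are invented for illustration. It assumes a UNIX-like system.

```python
import os


def echo_via_child(message: bytes) -> bytes:
    """Send `message` to a forked child, which replies with it upper-cased."""
    to_child_r, to_child_w = os.pipe()      # parent -> child
    to_parent_r, to_parent_w = os.pipe()    # child -> parent

    pid = os.fork()
    if pid == 0:
        # Child: close the ends it does not use.
        os.close(to_child_w)
        os.close(to_parent_r)
        try:
            data = b""
            while True:
                chunk = os.read(to_child_r, 1024)
                if not chunk:               # EOF: parent closed its write end
                    break
                data += chunk
            os.write(to_parent_w, data.upper())
        finally:
            os._exit(0)

    # Parent: close the ends it does not use.
    os.close(to_child_r)
    os.close(to_parent_w)
    os.write(to_child_w, message)
    os.close(to_child_w)                    # signals end-of-data to the child
    reply = b""
    while True:
        chunk = os.read(to_parent_r, 1024)
        if not chunk:                       # EOF: child exited and closed its end
            break
        reply += chunk
    os.close(to_parent_r)
    os.waitpid(pid, 0)
    return reply
```

Closing the unused ends is not just tidiness: a reader only sees end-of-file once every copy of the write end has been closed, so a forgotten descriptor can leave the other side blocked forever.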

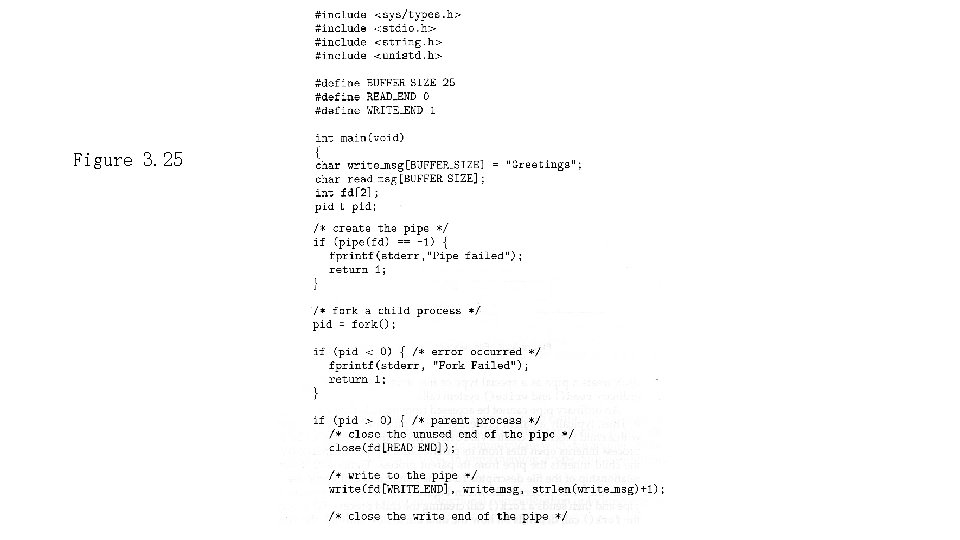

Figure 3.25

Figure 3.26

Named Pipe • Named Pipes are more powerful than ordinary pipes • Communication is bidirectional • No parent-child relationship is necessary between the communicating processes • Several processes can use the named pipe for communication

• In UNIX, named pipes are termed FIFOs, and appear as ordinary files in the file system (recognizable by a "p" as the first character of a long listing, e.g., /dev/initctl). • They are created with mkfifo( ) and manipulated with read( ), write( ), open( ), close( ), etc. • UNIX named pipes are bidirectional, but half-duplex, so two pipes are still typically used for bidirectional communication. • UNIX named pipes still require that all processes be running on the same machine. Otherwise sockets are used.
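A FIFO round trip can be sketched as follows, assuming a UNIX-like system. The writer here is a forked child only for convenience: both sides open the FIFO by its path, so two unrelated processes could do the same. The path name `demo_fifo` and the function name are hypothetical.

```python
import os
import tempfile


def fifo_roundtrip(message: bytes) -> bytes:
    """Create a FIFO, have a forked child write to it, and read it in the parent."""
    directory = tempfile.mkdtemp()
    path = os.path.join(directory, "demo_fifo")   # hypothetical name
    os.mkfifo(path)
    pid = os.fork()
    if pid == 0:
        try:
            # Opening for writing blocks until a reader opens the FIFO.
            with open(path, "wb") as fifo:
                fifo.write(message)
        finally:
            os._exit(0)
    try:
        with open(path, "rb") as fifo:     # blocks until the writer opens
            data = fifo.read()             # reads until the writer closes
        os.waitpid(pid, 0)
    finally:
        os.remove(path)                    # the FIFO persists until removed
        os.rmdir(directory)
    return data
```

Unlike an ordinary pipe, the FIFO stays in the file system after both processes close it, which is why the sketch removes it explicitly.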

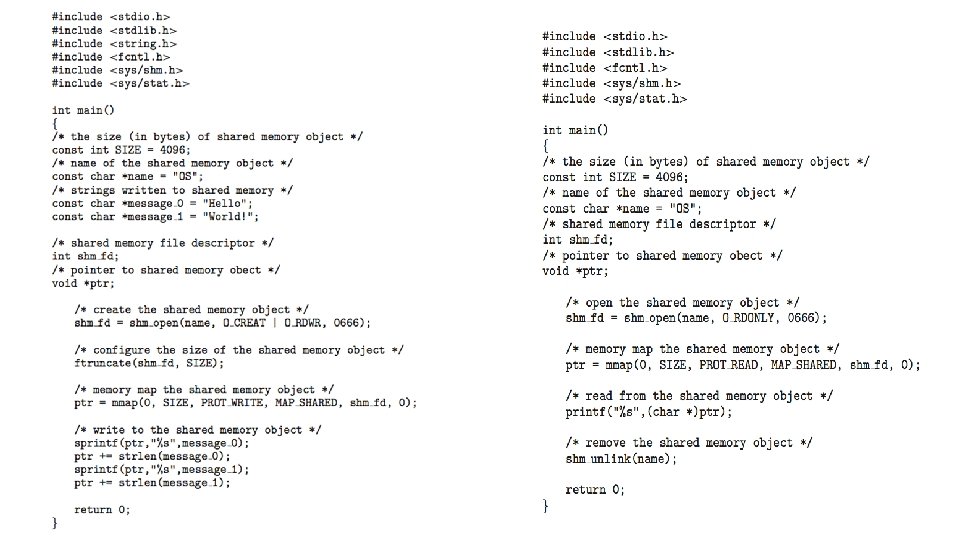

Examples of IPC Systems • Shared memory • Message queue

Communication in Client-Server Systems* • Socket programming • Remote Procedure Calls

Exercises • 3.1, 3.5 • 3.8, 3.9, 3.12, 3.13, 3.14, 3.17