Processes

Operating Systems, Fall 2002

- Slides: 42

Processes Operating Systems Fall 2002 OS Fall’ 02

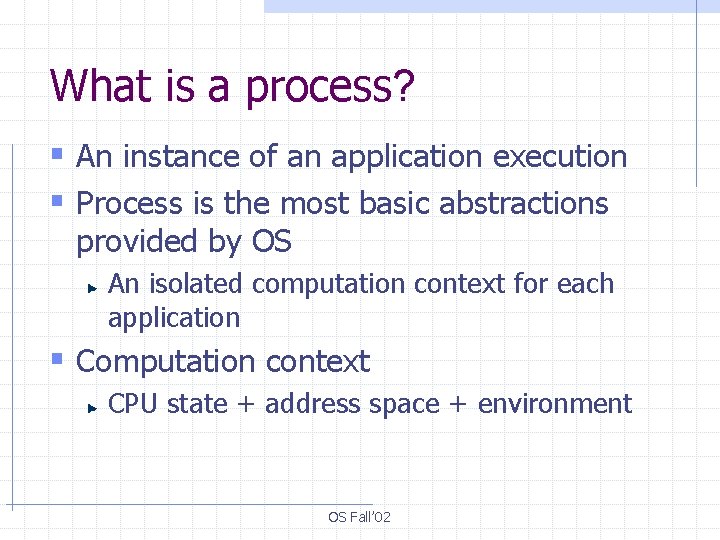

What is a process?

- An instance of an application in execution
- The process is the most basic abstraction provided by the OS
  - An isolated computation context for each application
- Computation context = CPU state + address space + environment

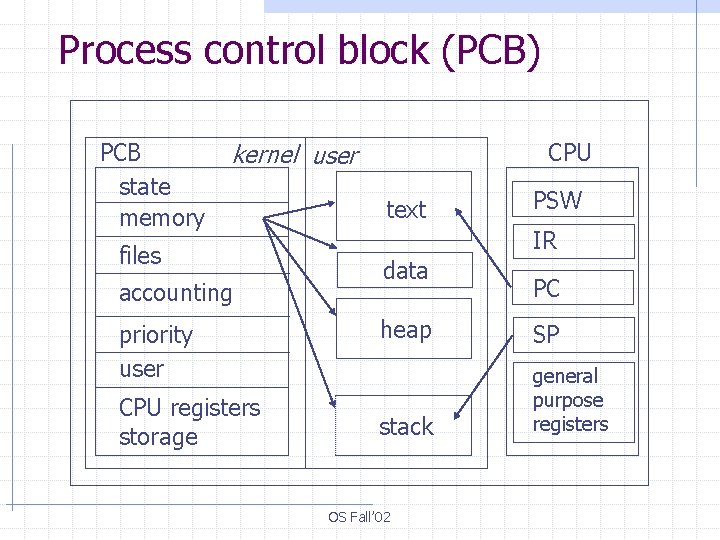

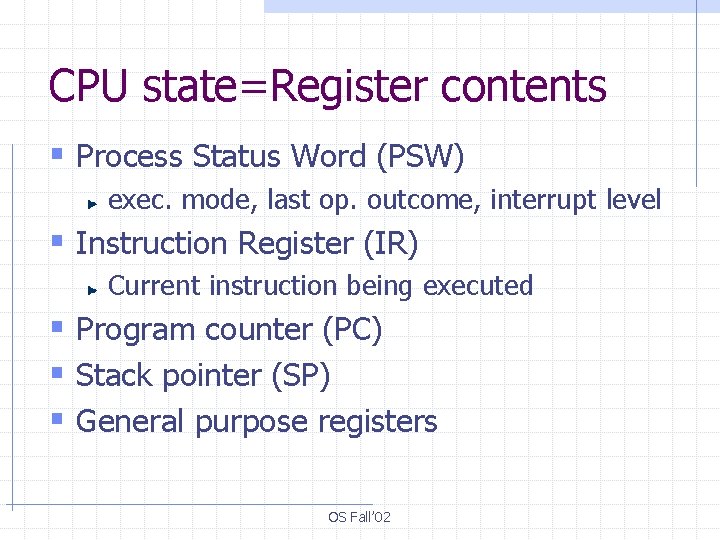

CPU state = register contents

- Process Status Word (PSW): execution mode, outcome of the last operation, interrupt level
- Instruction Register (IR): the instruction currently being executed
- Program Counter (PC)
- Stack Pointer (SP)
- General-purpose registers

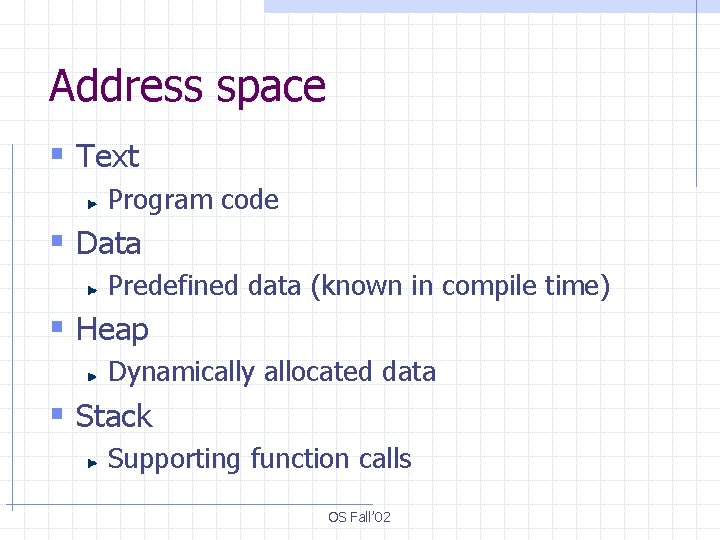

Address space

- Text: program code
- Data: predefined data (known at compile time)
- Heap: dynamically allocated data
- Stack: supports function calls

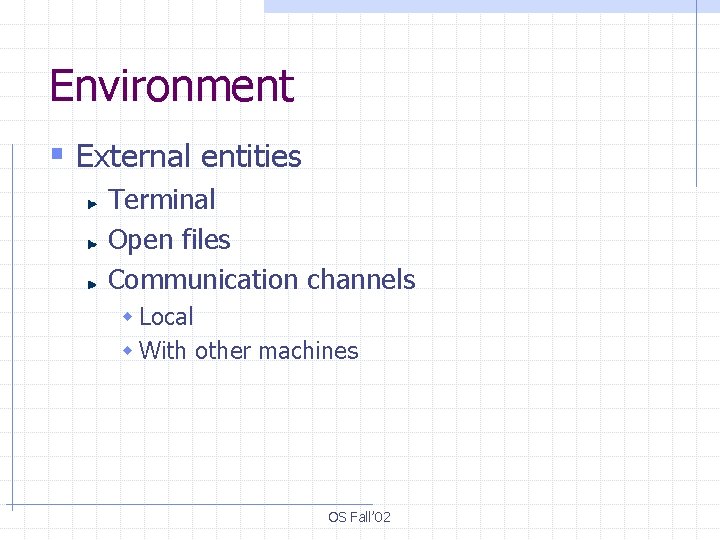

Environment

- External entities
  - Terminal
  - Open files
  - Communication channels
    - Local
    - With other machines

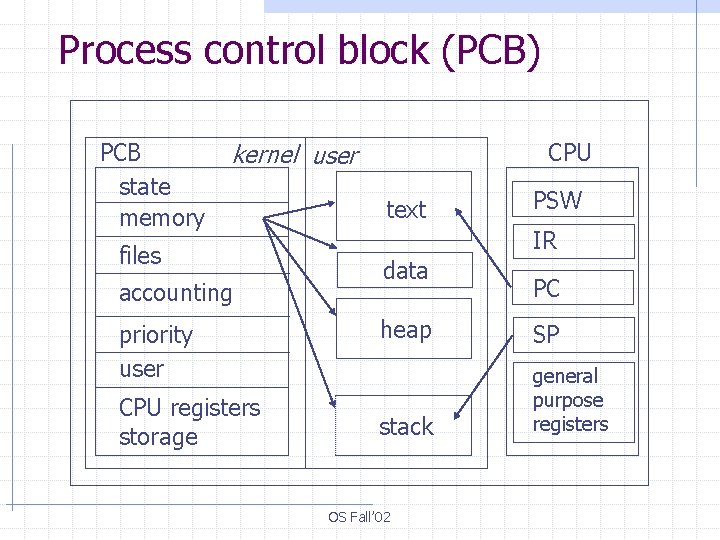

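Process control block (PCB)

[Figure: the PCB, kept by the kernel, holds the process's state, memory, files, accounting, priority, user, and saved CPU registers (PSW, IR, PC, SP, general-purpose registers); its memory entry describes the process's user-space storage: text, data, heap, stack.]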
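
The PCB can be pictured as one record per process. Below is a minimal Python sketch that mirrors the fields in the figure; the class names, field names, the `preempt` helper, and the choice of eight general-purpose registers are all hypothetical (real kernels use C structures with many more fields).

```python
from dataclasses import dataclass, field
from enum import Enum

class State(Enum):
    CREATED = "created"
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"
    TERMINATED = "terminated"

@dataclass
class CPUContext:
    """Register contents saved in the PCB while the process is not running."""
    psw: int = 0   # execution mode, last-op outcome, interrupt level (packed)
    ir: int = 0    # instruction register
    pc: int = 0    # program counter
    sp: int = 0    # stack pointer
    gpr: list = field(default_factory=lambda: [0] * 8)  # hypothetical: 8 general-purpose registers

@dataclass
class PCB:
    pid: int
    user: str
    state: State = State.CREATED
    priority: int = 0
    cpu: CPUContext = field(default_factory=CPUContext)
    memory: dict = field(default_factory=dict)     # e.g. text/data/heap/stack regions
    open_files: list = field(default_factory=list)
    cpu_time_used: float = 0.0                     # accounting

def preempt(pcb: PCB, regs: CPUContext) -> None:
    """Save the live registers into the PCB and put the process back in the ready state."""
    pcb.cpu = regs
    pcb.state = State.READY
```

Saving the registers into the PCB is what lets the process later resume exactly where it was stopped.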

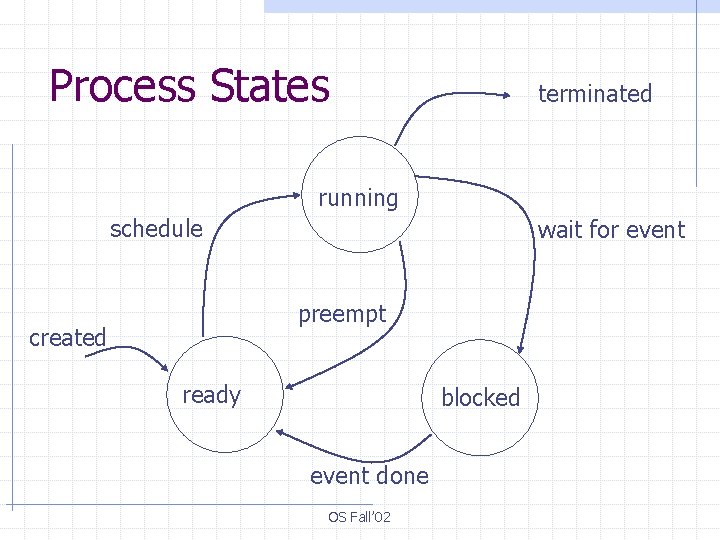

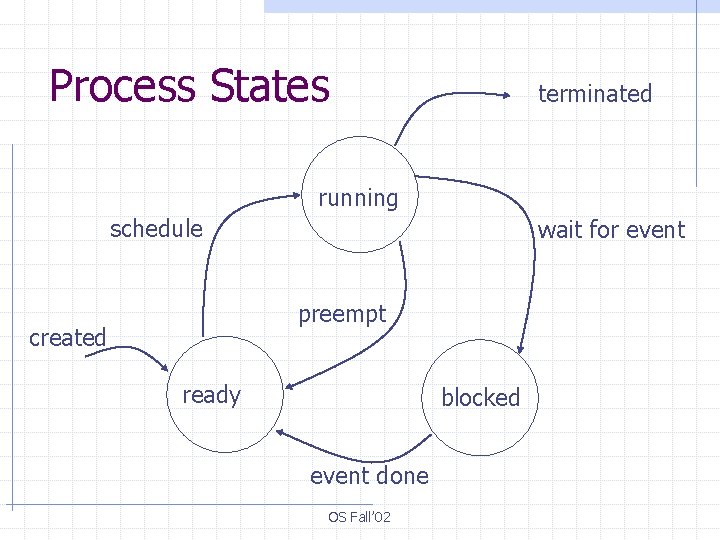

Process States

- created → ready
- ready → running: schedule
- running → ready: preempt
- running → blocked: wait for event
- blocked → ready: event done
- running → terminated
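
The legal moves of the five-state diagram can be encoded as a lookup table. This sketch (hypothetical names `TRANSITIONS` and `move`) rejects moves the diagram does not allow, such as blocked → running: a process whose event has occurred must first go back to the ready queue and wait to be scheduled.

```python
# (from, to) -> event label; arrows without a label in the diagram map to None.
TRANSITIONS = {
    ("created", "ready"): None,
    ("ready", "running"): "schedule",
    ("running", "ready"): "preempt",
    ("running", "blocked"): "wait for event",
    ("blocked", "ready"): "event done",
    ("running", "terminated"): None,
}

def move(state, new_state):
    """Return new_state if the diagram allows the transition, else raise ValueError."""
    if (state, new_state) not in TRANSITIONS:
        raise ValueError(f"illegal transition {state} -> {new_state}")
    return new_state
```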

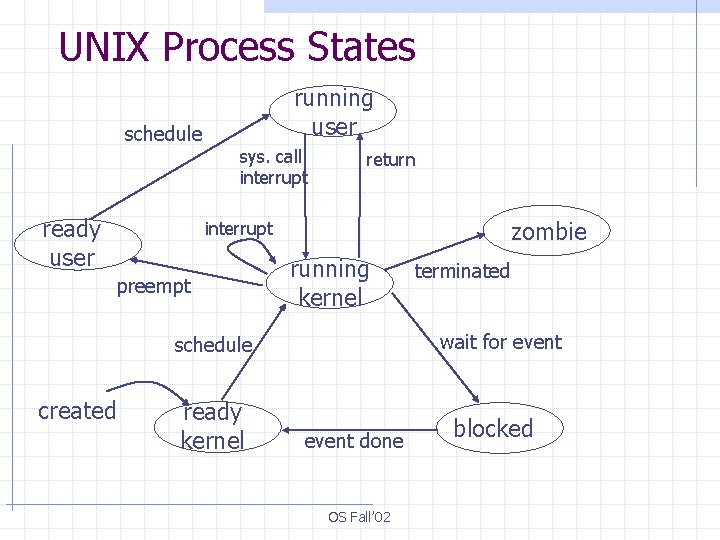

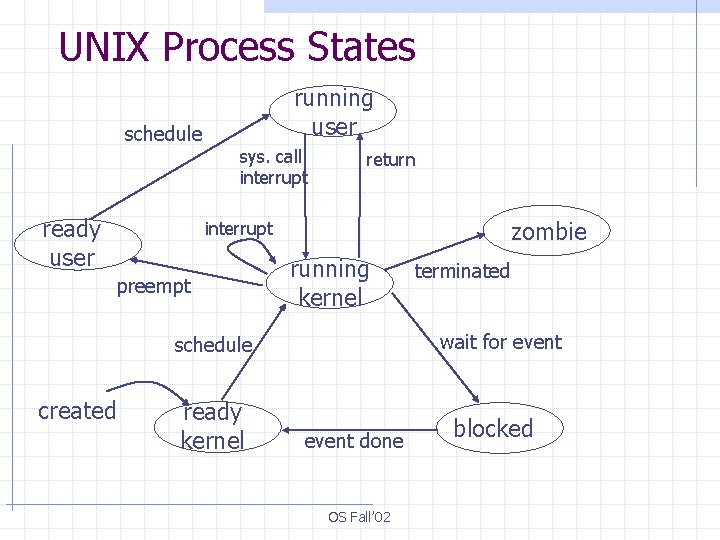

UNIX Process States

[Figure: UNIX process state diagram. States: created, ready (user), ready (kernel), running (user), running (kernel), blocked, zombie, terminated. Transition labels: schedule, system call, interrupt, return, preempt, wait for event, event done.]

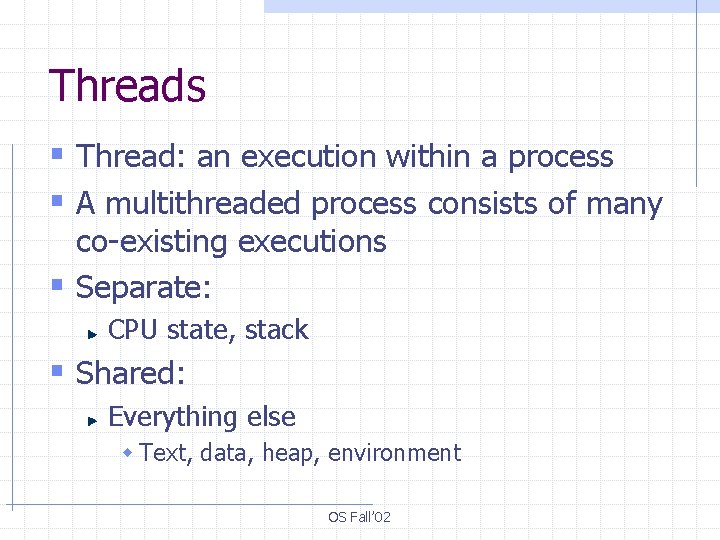

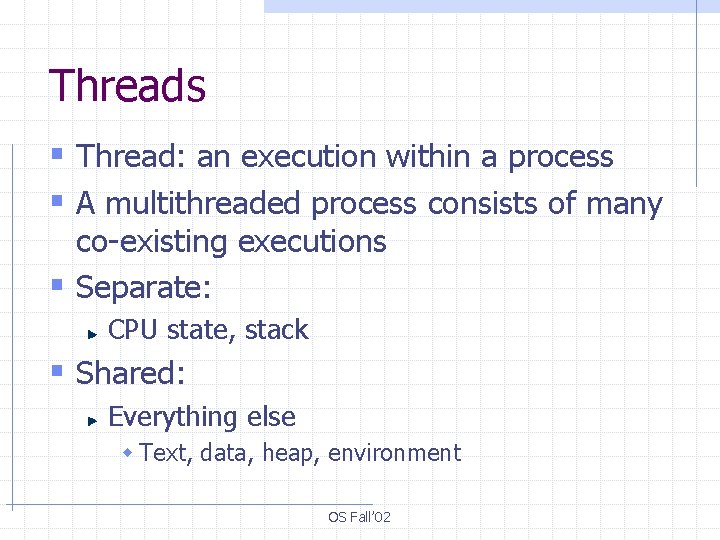

Threads

- Thread: an execution within a process
- A multithreaded process consists of many coexisting executions
- Separate for each thread: CPU state, stack
- Shared by all threads: everything else
  - Text, data, heap, environment
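
A small Python illustration of the separate/shared split, with hypothetical names. All Python objects live on the interpreter's heap, so this only mirrors the idea: each thread has its own call frames (its local variables), while module-level objects are visible to every thread and need a lock.

```python
import threading

results = []               # module-level object: shared by every thread
lock = threading.Lock()    # shared data needs synchronization

def worker(tid, n):
    total = 0              # local variable: belongs to this thread's own call frame
    for i in range(n):
        total += i
    with lock:
        results.append((tid, total))

threads = [threading.Thread(target=worker, args=(tid, 5)) for tid in range(3)]
for th in threads:
    th.start()
for th in threads:
    th.join()
print(sorted(results))     # [(0, 10), (1, 10), (2, 10)]
```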

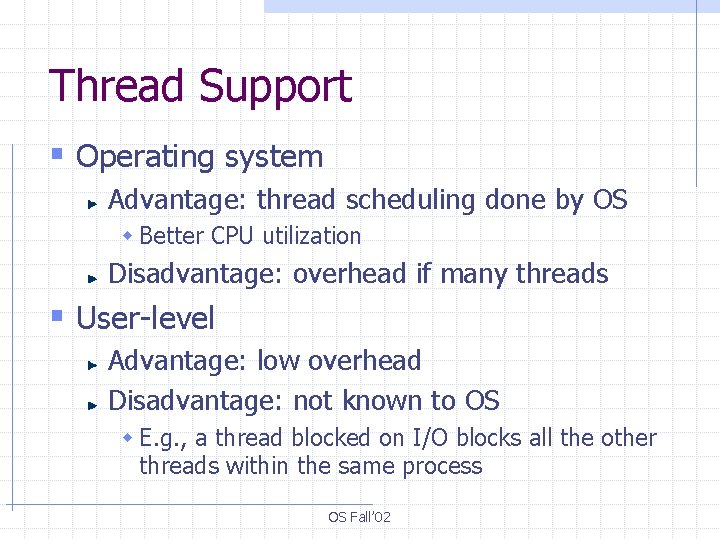

Thread Support

- Operating-system (kernel-level) threads
  - Advantage: thread scheduling is done by the OS
    - Better CPU utilization
  - Disadvantage: overhead if there are many threads
- User-level threads
  - Advantage: low overhead
  - Disadvantage: the threads are not known to the OS
    - E.g., a thread blocked on I/O blocks all the other threads within the same process
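
User-level threads can be sketched with Python generators acting as coroutines, scheduled round-robin by ordinary code inside one OS thread (the names `task` and `run` are hypothetical). Switching is just a function call, hence the low overhead. The OS sees only a single thread, so if any task made a blocking call such as a blocking read, every task would stall with it.

```python
from collections import deque

def task(name, steps, log):
    """A user-level 'thread': a generator that yields to give up the CPU."""
    for i in range(steps):
        log.append(f"{name}{i}")
        yield                      # voluntary switch back to the user-level scheduler

def run(tasks):
    """Round-robin scheduler running entirely inside one OS thread."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # resume the task until its next yield
            ready.append(current)
        except StopIteration:
            pass                   # task finished; drop it

log = []
run([task("a", 2, log), task("b", 3, log)])
print(log)  # ['a0', 'b0', 'a1', 'b1', 'b2']
```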

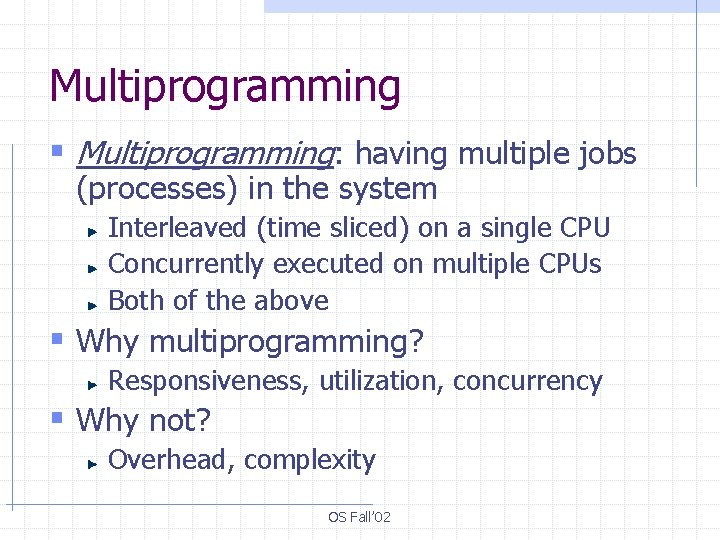

Multiprogramming

- Multiprogramming: having multiple jobs (processes) in the system
  - Interleaved (time-sliced) on a single CPU
  - Concurrently executed on multiple CPUs
  - Both of the above
- Why multiprogramming? Responsiveness, utilization, concurrency
- Why not? Overhead, complexity

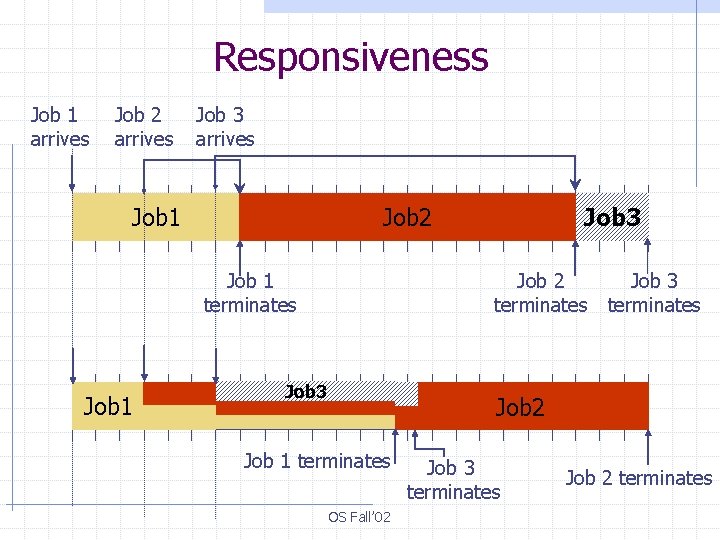

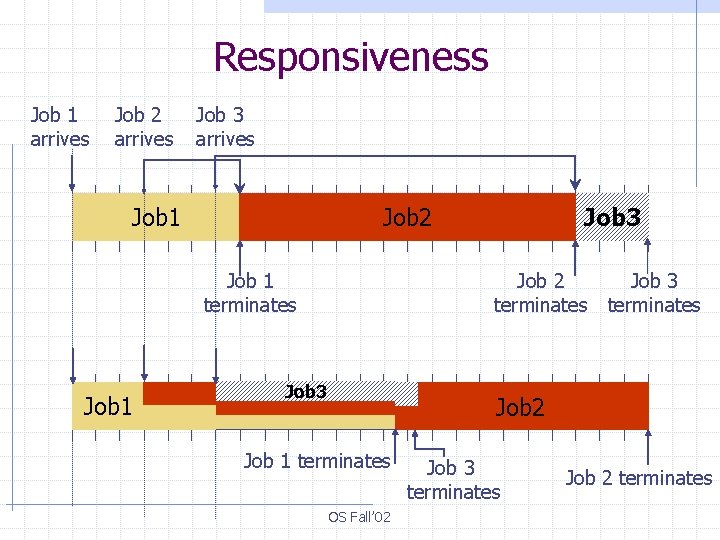

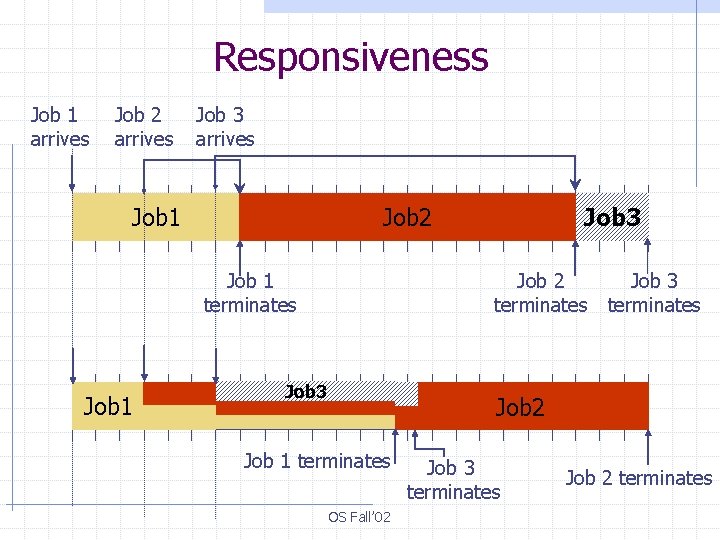

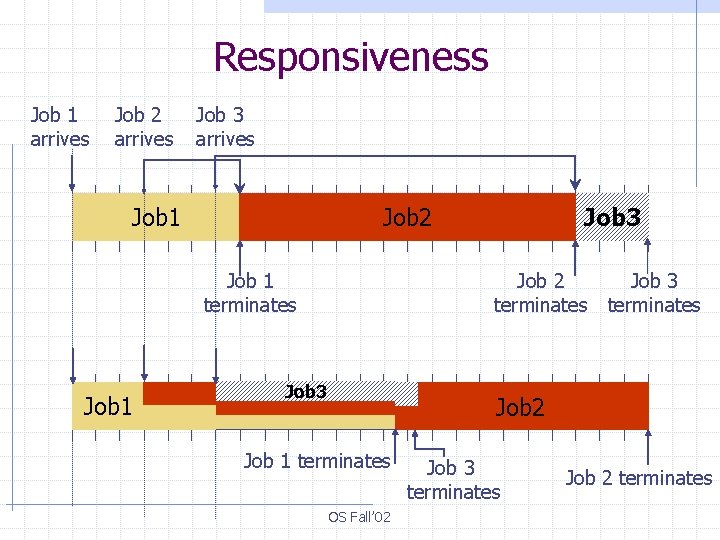

Responsiveness

[Figure: timelines of Job 1, Job 2, and Job 3 (arrival and termination of each) when the jobs run one at a time vs. when they share the CPU]

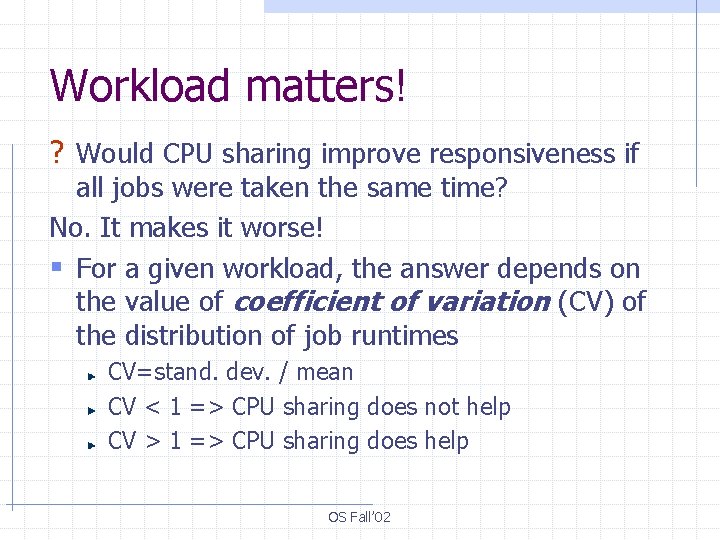

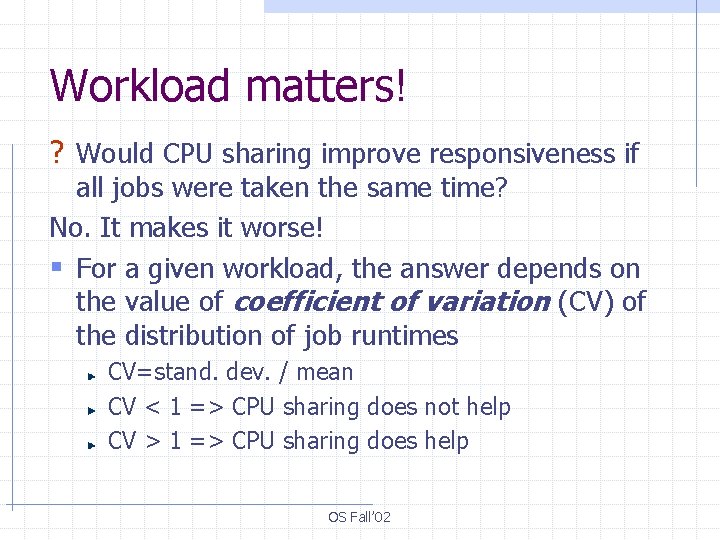

Workload matters!

- Would CPU sharing improve responsiveness if all jobs took the same time? No, it makes it worse!
- For a given workload, the answer depends on the coefficient of variation (CV) of the job runtime distribution
  - CV = standard deviation / mean
  - CV < 1 ⇒ CPU sharing does not help
  - CV > 1 ⇒ CPU sharing does help
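
A small worked check of the claim, assuming all jobs arrive at t = 0 and comparing run-to-completion in a fixed order with idealized processor sharing (every active job gets an equal slice of the CPU). The function names are hypothetical. With equal runtimes [1, 1, 1] (CV = 0), sequential execution gives a mean response time of 2 while sharing gives 3. With [10, 1, 1] (CV ≈ 1.06) in that order, sequential gives 11 and sharing gives 6.

```python
from statistics import mean, pstdev

def cv(runtimes):
    """Coefficient of variation: standard deviation / mean."""
    return pstdev(runtimes) / mean(runtimes)

def sequential_mean_response(runtimes):
    """All jobs arrive at t=0 and run to completion in the given order."""
    t = total = 0.0
    for r in runtimes:
        t += r
        total += t                  # response time = completion time (arrival is 0)
    return total / len(runtimes)

def sharing_mean_response(runtimes):
    """Idealized processor sharing: each of the n active jobs gets 1/n of the CPU."""
    n = len(runtimes)
    t = total = prev = 0.0
    for k, r in enumerate(sorted(runtimes)):
        t += (n - k) * (r - prev)   # n-k jobs still active share the CPU
        prev = r
        total += t                  # the k-th shortest job completes at time t
    return total / n
```

The fixed order matters: running [1, 1, 10] sequentially (shortest first) would give 5 and beat sharing. The CV argument is about runtimes that the scheduler does not know in advance.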

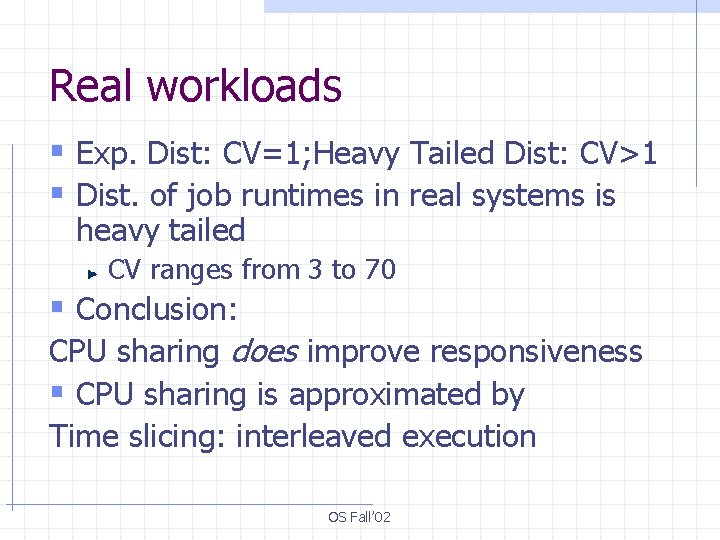

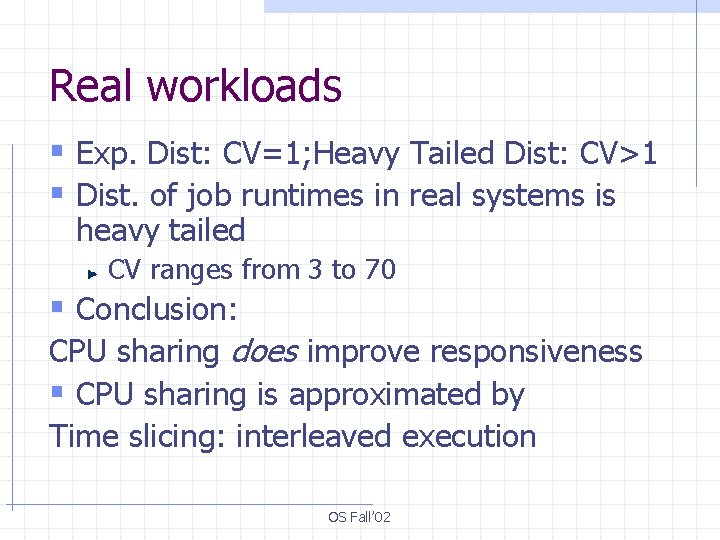

Real workloads

- Exponential distribution: CV = 1; heavy-tailed distributions: CV > 1
- The distribution of job runtimes in real systems is heavy-tailed
  - CV ranges from 3 to 70
- Conclusion: CPU sharing does improve responsiveness
- CPU sharing is approximated by time slicing: interleaved execution
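
The contrast between exponential and heavy-tailed runtimes can be checked empirically with Python's `random` module. This sketch uses a Pareto distribution with shape 1.5 as one example of a heavy tail (an assumption for illustration; the slide does not name a specific distribution). Its variance is infinite, so the sample CV grows with the sample size rather than settling near 1.

```python
import random
from statistics import mean, pstdev

def sample_cv(xs):
    """Sample coefficient of variation: standard deviation / mean."""
    return pstdev(xs) / mean(xs)

rng = random.Random(42)                                      # fixed seed for reproducibility
n = 100_000
exp_samples = [rng.expovariate(1.0) for _ in range(n)]       # exponential, mean 1
pareto_samples = [rng.paretovariate(1.5) for _ in range(n)]  # heavy-tailed, shape 1.5

print(round(sample_cv(exp_samples), 2))     # close to 1
print(round(sample_cv(pareto_samples), 2))  # well above 1
```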

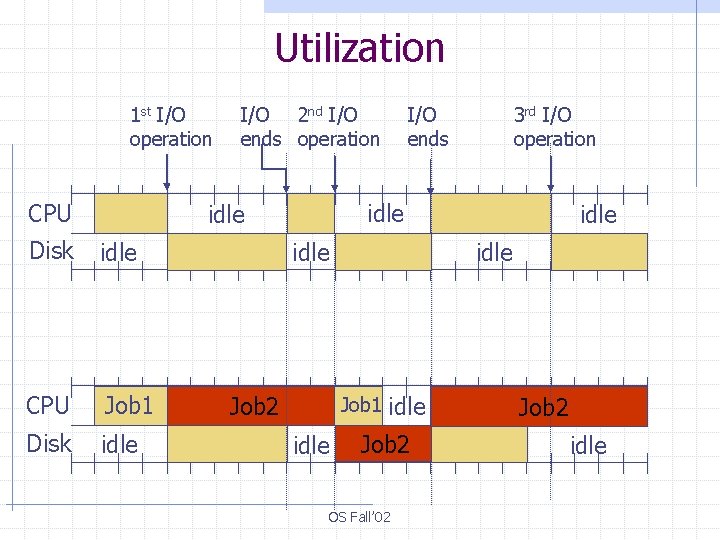

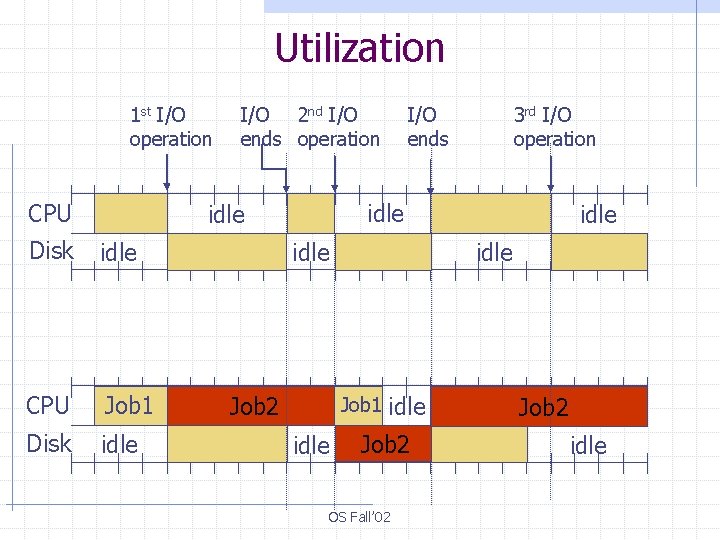

Utilization

[Figure: CPU and disk timelines for Job 1 alone and for Jobs 1 and 2 together. Alone, the CPU is idle during each of Job 1's I/O operations and the disk is idle while Job 1 computes; with two jobs, Job 2 uses the CPU while Job 1 waits for its I/O.]

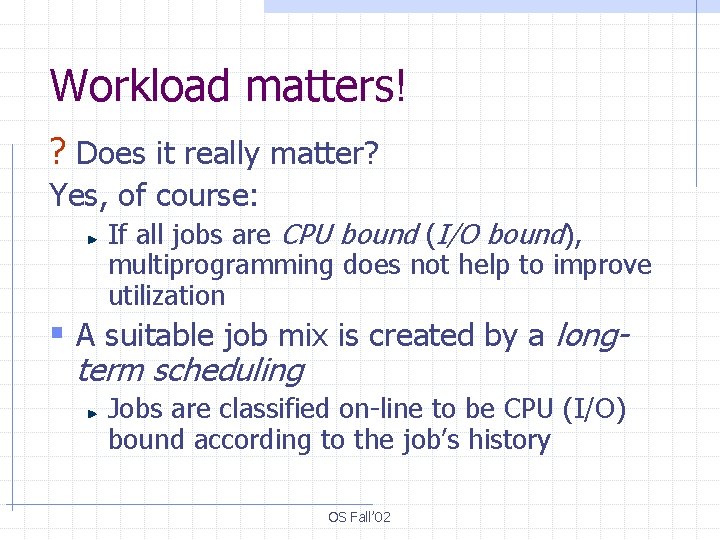

Workload matters!

- Does it really matter? Yes, of course: if all jobs are CPU-bound (or all are I/O-bound), multiprogramming does not help to improve utilization
- A suitable job mix is created by long-term scheduling
  - Jobs are classified on-line as CPU-bound or I/O-bound according to the job's history
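
One way the on-line classification and job-mix selection could look, as a minimal sketch: a job's history is a list of (CPU burst, I/O wait) durations. The 0.5 threshold, the alternating admission policy, and all names are hypothetical choices, not a documented algorithm.

```python
def classify(history, threshold=0.5):
    """history: list of (cpu_burst, io_wait) durations observed for one job.

    threshold is a hypothetical tuning knob: the fraction of time spent
    computing above which the job counts as CPU-bound.
    """
    cpu = sum(c for c, _ in history)
    io = sum(w for _, w in history)
    if cpu + io == 0:
        return "unknown"              # no history yet
    return "CPU-bound" if cpu / (cpu + io) > threshold else "I/O-bound"

def admit_mix(histories, k):
    """Long-term scheduler sketch: admit up to k jobs, alternating CPU-bound and I/O-bound."""
    cpu = [j for j, h in histories.items() if classify(h) == "CPU-bound"]
    io = [j for j, h in histories.items() if classify(h) == "I/O-bound"]
    mix = []
    while len(mix) < k and (cpu or io):
        if cpu:
            mix.append(cpu.pop(0))
        if io and len(mix) < k:
            mix.append(io.pop(0))
    return mix

histories = {
    "simulation": [(90, 10)],
    "editor": [(1, 50), (1, 40)],
    "compiler": [(80, 20)],
    "shell": [(2, 30)],
}
print(admit_mix(histories, 2))  # ['simulation', 'editor']
```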

Concurrency

- Concurrent programming: several processes interact to work on the same problem
  - E.g., `ls -l | more`
- Simultaneous execution of related applications
  - E.g., Word + Excel + PowerPoint
- Background execution
  - E.g., polling/receiving email while working on something else
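
A pipeline such as `ls -l | more` is two processes running concurrently and connected by a pipe. This sketch builds the same shape with Python's `subprocess` module, using two small Python child processes in place of `ls` and `more` so that it runs anywhere; the function name `run_pipeline` is hypothetical.

```python
import subprocess
import sys

def run_pipeline():
    """producer | consumer: two processes cooperating through a pipe."""
    producer = subprocess.Popen(
        [sys.executable, "-c", "for i in range(5): print(i)"],
        stdout=subprocess.PIPE,
    )
    consumer = subprocess.Popen(
        [sys.executable, "-c", "import sys; print(sum(int(line) for line in sys.stdin))"],
        stdin=producer.stdout,
        stdout=subprocess.PIPE,
        text=True,
    )
    producer.stdout.close()  # parent drops its read end so the producer gets SIGPIPE if the consumer exits early
    output, _ = consumer.communicate()
    producer.wait()
    return output.strip()

print(run_pipeline())  # 10
```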

The cost of multiprogramming

- Switching overhead
  - Saving/restoring the context wastes CPU cycles
- Degraded performance
  - Resource contention
  - Cache misses
- Complexity
  - Synchronization, concurrency control, deadlock avoidance/prevention

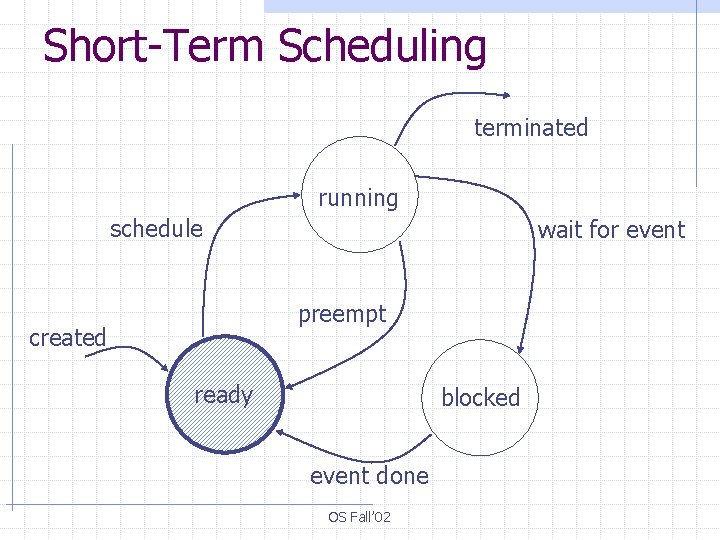

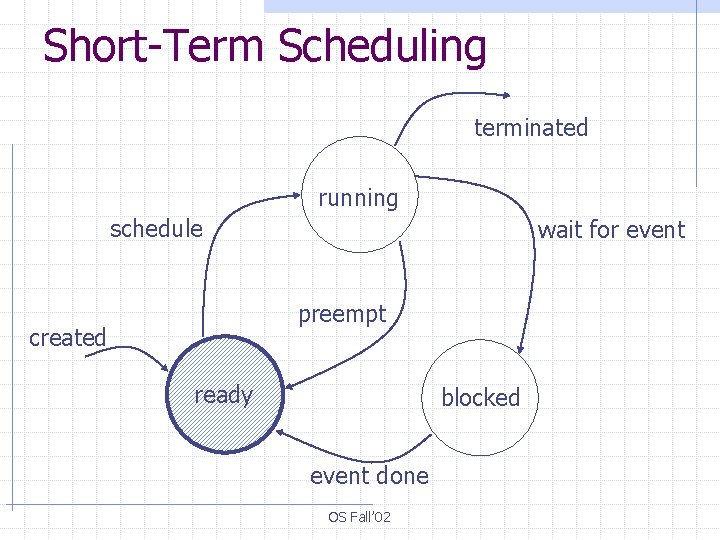

Short-Term Scheduling

[Figure: the process state diagram (created, ready, running, blocked, terminated; transitions schedule, preempt, wait for event, event done)]

Short-Term scheduling

- A process's execution consists of alternating CPU bursts and I/O waits
  - CPU burst, I/O burst, CPU burst, I/O burst, ...
- Processes ready for execution are held in a ready (run) queue
- The short-term scheduler (STS) picks a process from the ready queue whenever the CPU becomes idle

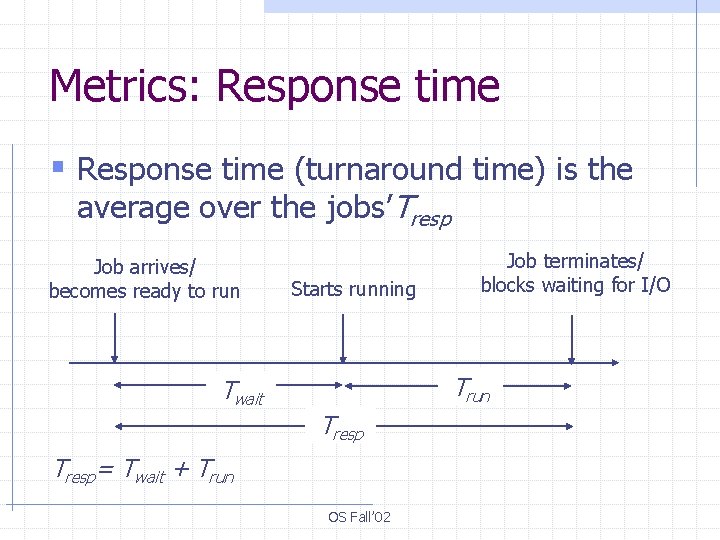

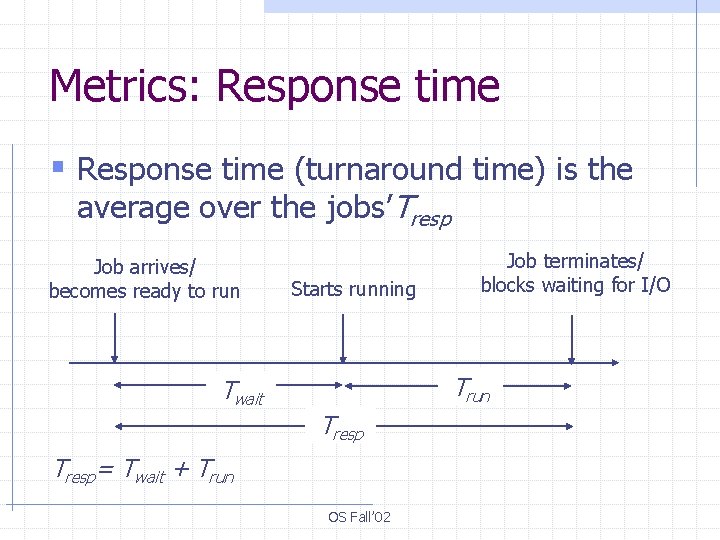

Metrics: Response time

- Response time (turnaround time) is the average of Tresp over all jobs
- Timeline of a job: it arrives (or becomes ready to run), waits for Twait, starts running, and runs for Trun until it terminates (or blocks waiting for I/O)
- Tresp = Twait + Trun

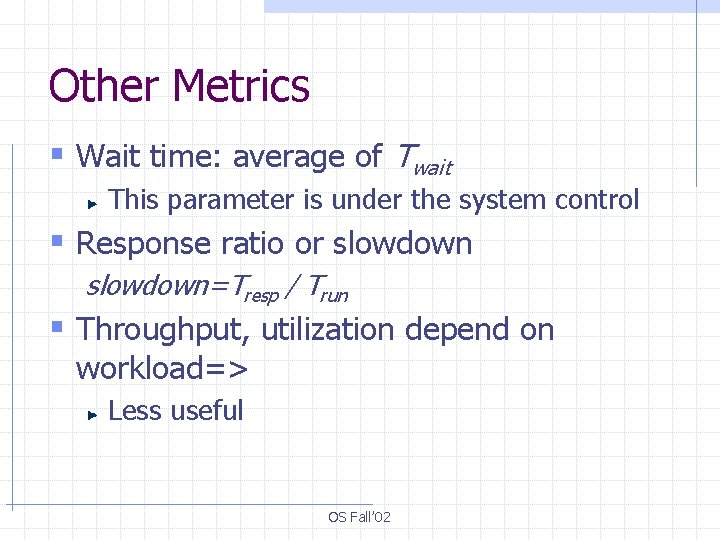

Other Metrics

- Wait time: the average of Twait
  - This parameter is under the system's control
- Response ratio, or slowdown = Tresp / Trun
- Throughput and utilization depend on the workload ⇒ less useful
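
The three job-level metrics can be computed from per-job (arrival, start, finish) times. This is a minimal sketch with a hypothetical `metrics` function, assuming each job runs without interruption from start to finish, so that Trun = finish - start as in the single-interval timeline.

```python
def metrics(records):
    """records: list of (arrival, start, finish) times, one per job."""
    resp = [f - a for a, s, f in records]   # Tresp = Twait + Trun
    wait = [s - a for a, s, f in records]   # Twait
    run = [f - s for a, s, f in records]    # Trun (no preemption assumed)
    n = len(records)
    return {
        "response": sum(resp) / n,
        "wait": sum(wait) / n,
        "slowdown": sum(r / t for r, t in zip(resp, run)) / n,
    }

# A long job followed by two short ones that had to wait behind it.
print(metrics([(0, 0, 10), (1, 10, 11), (2, 11, 12)]))
# {'response': 10.0, 'wait': 6.0, 'slowdown': 7.0}
```

The short jobs each have a slowdown of 10, which is what makes this schedule look bad even though the long job is unaffected.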

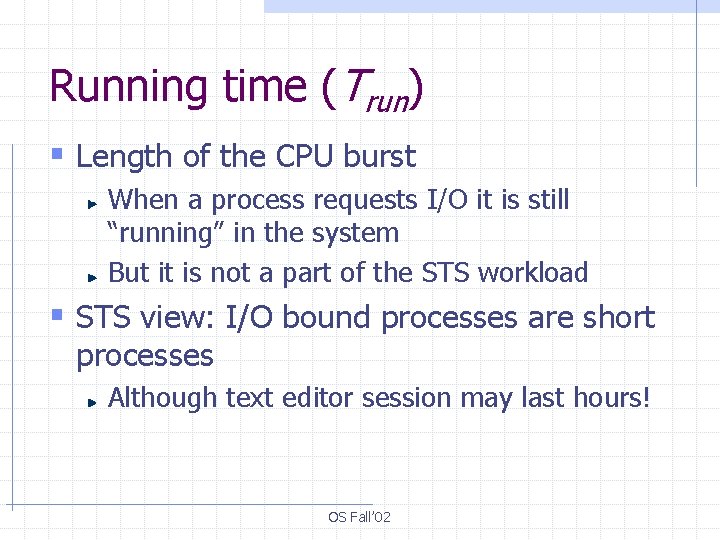

Running time (Trun)

- Trun is the length of the CPU burst
  - When a process requests I/O, it is still "running" in the system
  - But the I/O wait is not part of the STS workload
- STS view: I/O-bound processes are short processes
  - Even though a text-editor session may last hours!

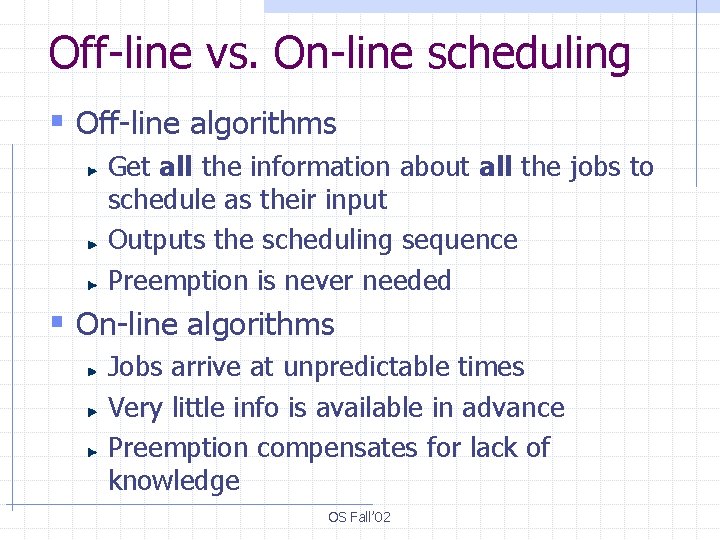

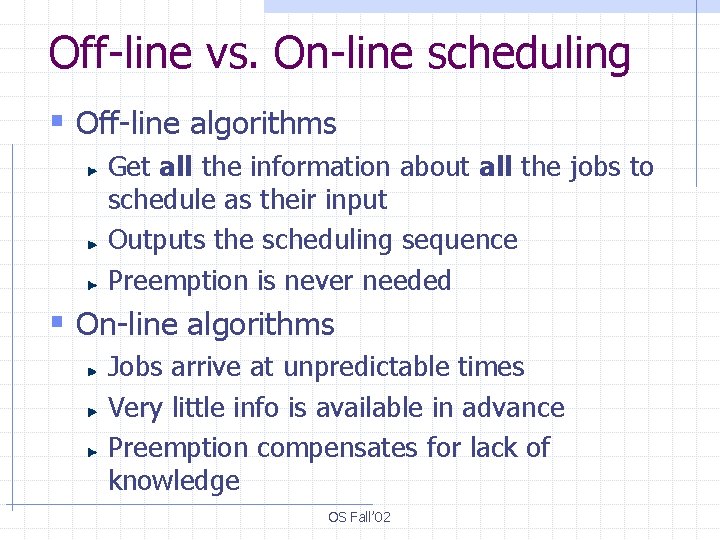

Off-line vs. On-line scheduling

- Off-line algorithms
  - Get all the information about all the jobs to schedule as their input
  - Output the scheduling sequence
  - Preemption is never needed
- On-line algorithms
  - Jobs arrive at unpredictable times
  - Very little information is available in advance
  - Preemption compensates for the lack of knowledge

First-Come-First-Served (FCFS)

- Schedules the jobs in the order in which they arrive
  - Off-line FCFS schedules in the order the jobs appear in the input
- Runs each job to completion
- Both on-line and off-line
- Simple; a base case for analysis
- Poor response time
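
A minimal FCFS sketch (the function name `fcfs` is hypothetical): jobs are taken in arrival order, and Python's stable sort keeps ties in input order. Each job runs to completion, and the CPU idles if the next job has not arrived yet.

```python
def fcfs(jobs):
    """jobs: list of (arrival, runtime). Returns a (start, finish) pair per job."""
    order = sorted(range(len(jobs)), key=lambda i: jobs[i][0])  # arrival order, ties stable
    result = [None] * len(jobs)
    t = 0
    for i in order:
        arrival, runtime = jobs[i]
        start = max(t, arrival)   # CPU may sit idle until the job arrives
        t = start + runtime       # run to completion, no preemption
        result[i] = (start, t)
    return result

print(fcfs([(0, 10), (1, 1), (2, 1)]))  # [(0, 10), (10, 11), (11, 12)]
```

Here every job has a response time of 10, so the mean is 10: the two short jobs pay for arriving just after a long one, which is the poor response time noted above.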

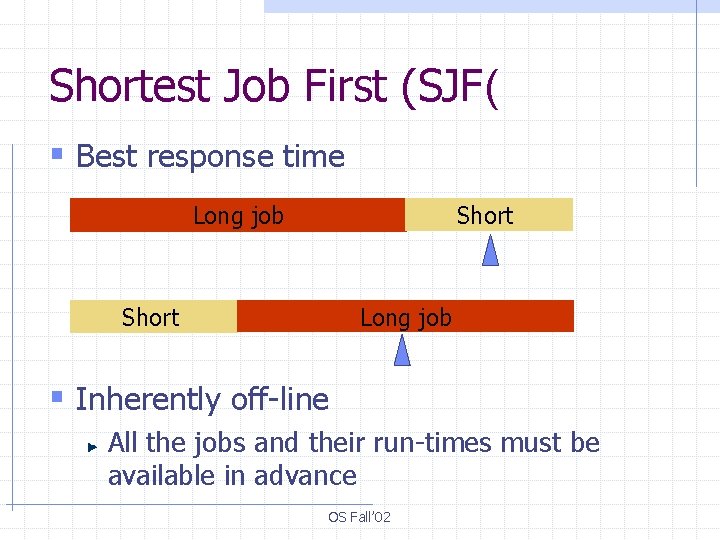

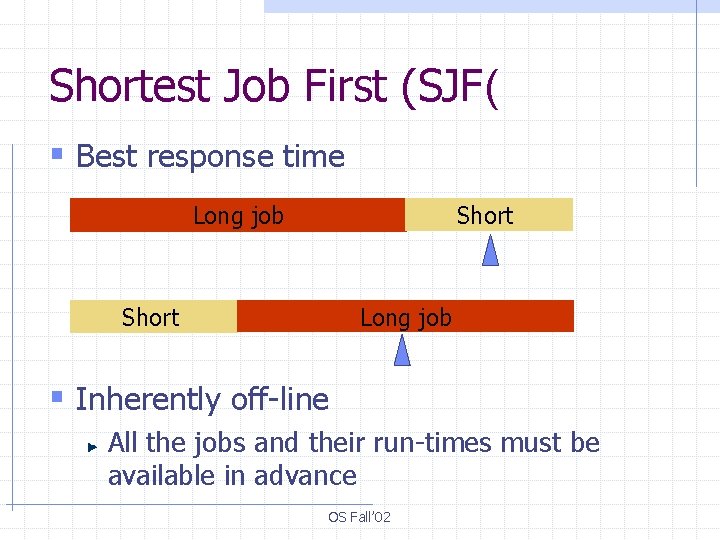

Shortest Job First (SJF)

- Best (lowest average) response time
  - [Figure: the short jobs run before the long job]
- Inherently off-line
  - All the jobs and their run-times must be available in advance
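
A sketch of off-line SJF, assuming all jobs are available at t = 0 (hypothetical function names). Each job's run time is added to the completion time of itself and of every job after it, so putting short jobs first minimizes the total.

```python
def mean_response_in_order(runtimes):
    """All jobs available at t=0, run to completion in the given order."""
    t = total = 0
    for r in runtimes:
        t += r
        total += t       # completion time equals response time when arrival is 0
    return total / len(runtimes)

def sjf_mean_response(runtimes):
    """Off-line SJF: the same, but the shortest job runs first."""
    return mean_response_in_order(sorted(runtimes))

print(mean_response_in_order([10, 1, 1]))  # 11.0
print(sjf_mean_response([10, 1, 1]))       # 5.0
```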

Preemption

- Preemption: stopping a running job and scheduling another in its place
- Context switch: switching from one job to another

Using preemption

- On-line short-term scheduling algorithms
  - Adapting to changing conditions
    - E.g., new jobs arrive
  - Compensating for the lack of knowledge
    - E.g., job run-times
- Periodic preemption keeps the system in control
- Improves fairness
  - Gives I/O-bound processes a chance to run

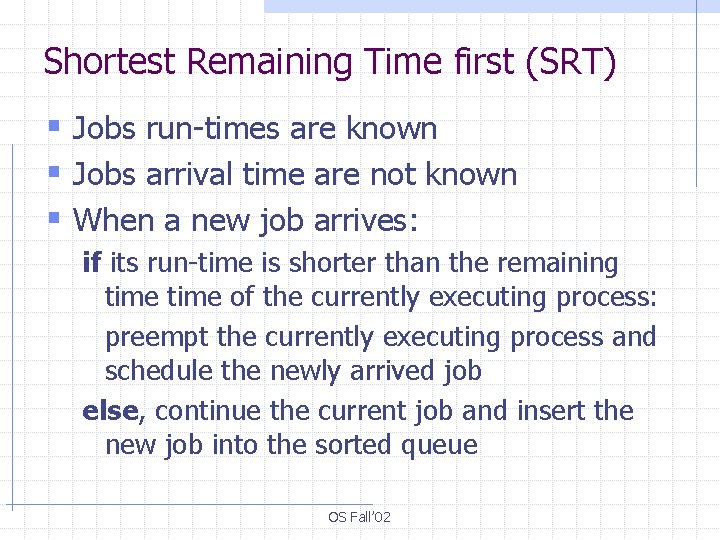

Shortest Remaining Time first (SRT)

- Job run-times are known
- Job arrival times are not known
- When a new job arrives:
  - If its run-time is shorter than the remaining time of the currently executing process: preempt the currently executing process and schedule the newly arrived job
  - Else: continue the current job and insert the new job into the queue, which is sorted by remaining time
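
An event-driven SRT sketch with a hypothetical function `srt`. A `heapq` min-heap stands in for the sorted queue. At each arrival the current job goes back into the heap and the shortest remaining time runs next. Ties go to the earlier arrival, so a newcomer only preempts when it is strictly shorter.

```python
import heapq

def srt(jobs):
    """Preemptive shortest-remaining-time scheduling.

    jobs: list of (arrival, runtime). Returns {job index: finish time}.
    """
    order = sorted(range(len(jobs)), key=lambda i: jobs[i][0])  # arrival order
    remaining = {i: float(r) for i, (_, r) in enumerate(jobs)}
    ready = []        # heap of (remaining, arrival, index): the sorted queue
    finish = {}
    t, k = 0.0, 0     # current time; next job (in arrival order) not yet arrived
    while k < len(order) or ready:
        if not ready:                            # CPU idle: jump to the next arrival
            t = max(t, jobs[order[k]][0])
        while k < len(order) and jobs[order[k]][0] <= t:
            i = order[k]
            heapq.heappush(ready, (remaining[i], jobs[i][0], i))
            k += 1
        rem, arrival, i = heapq.heappop(ready)   # shortest remaining time runs
        next_arrival = jobs[order[k]][0] if k < len(order) else float("inf")
        run = min(rem, next_arrival - t)         # run until done or the next arrival
        t += run
        remaining[i] = rem - run
        if remaining[i] == 0:
            finish[i] = t
        else:                                    # back into the queue; a shorter newcomer wins
            heapq.heappush(ready, (remaining[i], arrival, i))
    return finish

print(srt([(0, 10), (1, 1), (2, 1)]))  # {1: 2.0, 2: 3.0, 0: 12.0}
```

For the same jobs as the FCFS example, the response times become 12, 1, and 1 (mean about 4.67 instead of 10).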

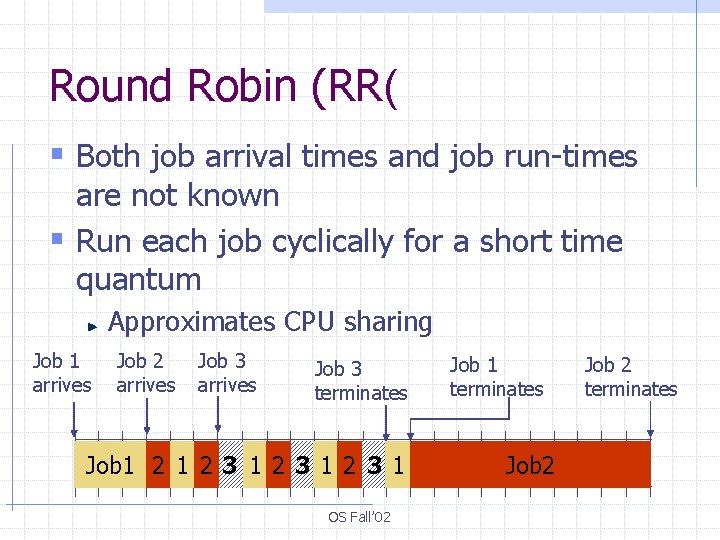

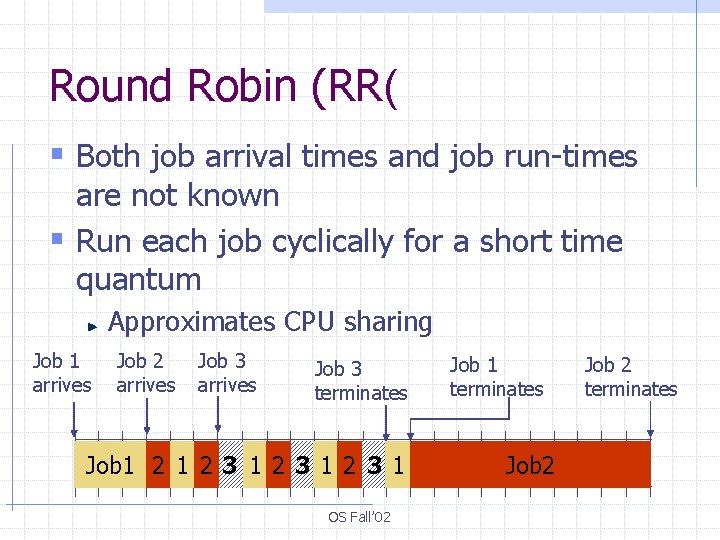

Round Robin (RR)

- Neither job arrival times nor job run-times are known
- Run each job cyclically for a short time quantum
  - Approximates CPU sharing

[Figure: Jobs 1, 2, and 3 take turns on the CPU (1, 2, 3, 1, ...) from their arrivals until each terminates]
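
A round-robin sketch using a `deque` as the ready queue (the function `round_robin` is hypothetical). One design choice is assumed here: jobs that arrive during a quantum join the tail before the preempted job does.

```python
from collections import deque

def round_robin(jobs, quantum):
    """jobs: list of (arrival, runtime). Returns {job index: finish time}."""
    order = sorted(range(len(jobs)), key=lambda i: jobs[i][0])  # arrival order
    remaining = [runtime for _, runtime in jobs]
    queue = deque()
    finish = {}
    t, k = 0, 0

    def admit():
        nonlocal k
        # move every job that has arrived by time t to the tail of the queue
        while k < len(order) and jobs[order[k]][0] <= t:
            queue.append(order[k])
            k += 1

    while k < len(order) or queue:
        if not queue:                  # CPU idle: jump to the next arrival
            t = max(t, jobs[order[k]][0])
        admit()
        i = queue.popleft()
        run = min(quantum, remaining[i])
        t += run
        remaining[i] -= run
        admit()                        # arrivals during the quantum queue up first
        if remaining[i] > 0:
            queue.append(i)            # preempted: back to the tail
        else:
            finish[i] = t
    return finish
```

With jobs (0, 10), (1, 1), (2, 1) and a quantum of 1, the jobs finish at 12, 2, and 4 (mean response 5). With three equal jobs of length 3 arriving together, they finish at 7, 8, and 9 (mean 8), compared with 3, 6, and 9 (mean 6) for FCFS. This matches the point that sharing hurts when all jobs take the same time.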

Responsiveness Job 1 arrives Job 2 arrives Job 3 arrives Job 1 terminates Job 1 Job 3 Job 2 terminates Job 3 terminates Job 2 Job 1 terminates OS Fall’ 02 Job 3 terminates Job 2 terminates

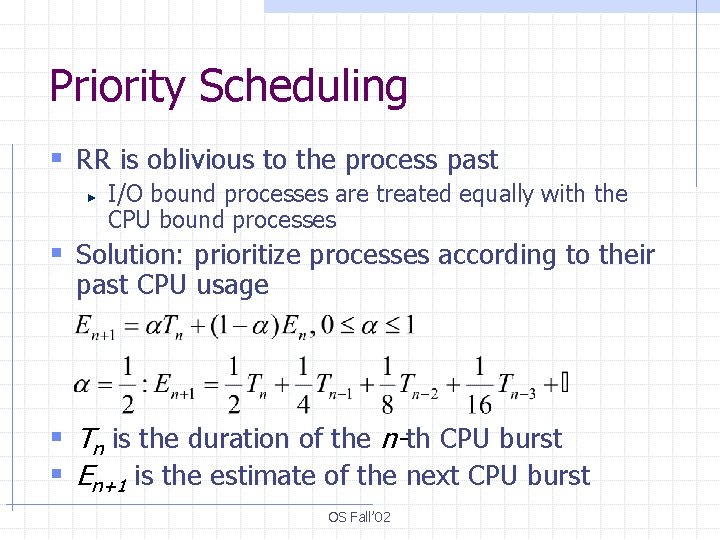

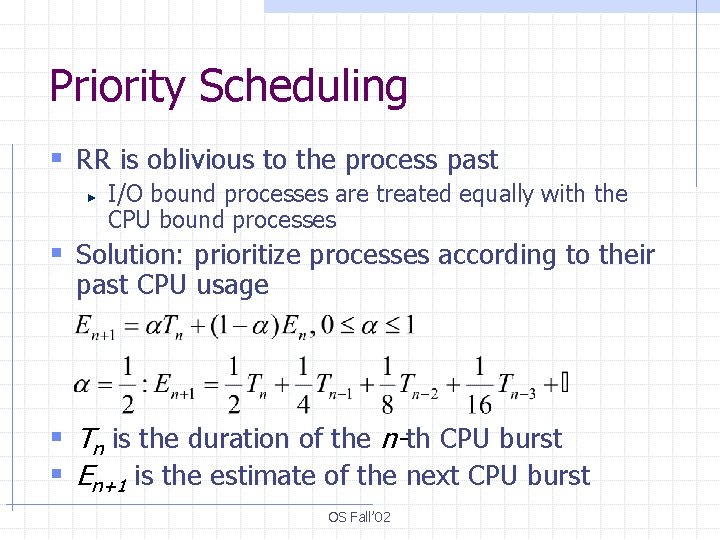

Priority Scheduling

- RR is oblivious to the process's past
  - I/O-bound processes are treated the same as CPU-bound processes
- Solution: prioritize processes according to their past CPU usage
  - Tn is the duration of the n-th CPU burst
  - En+1 is the estimate of the next CPU burst
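
The slide defines Tn and En+1. A common way to relate them, assumed here, is exponential averaging: En+1 = α·Tn + (1 - α)·En, with 0 ≤ α ≤ 1. The default α = 0.5, the initial estimate, and the function names are all hypothetical. The process with the smallest predicted burst (typically an I/O-bound one) gets the highest priority.

```python
def next_estimate(prev_estimate, last_burst, alpha=0.5):
    """Exponential average: E(n+1) = alpha * T(n) + (1 - alpha) * E(n)."""
    return alpha * last_burst + (1 - alpha) * prev_estimate

def estimates(bursts, initial, alpha=0.5):
    """Successive estimates after observing each measured CPU burst in turn."""
    e, out = initial, []
    for burst in bursts:
        e = next_estimate(e, burst, alpha)
        out.append(e)
    return out

def pick_next(ready):
    """ready: dict process -> current burst estimate; the shortest predicted burst wins."""
    return min(ready, key=ready.get)

print(estimates([6, 4, 6, 4], initial=10))            # [8.0, 6.0, 6.0, 5.0]
print(pick_next({"editor": 2.0, "compiler": 40.0}))  # editor
```

A larger α makes the estimate follow recent bursts more closely; a smaller α gives more weight to the longer history.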

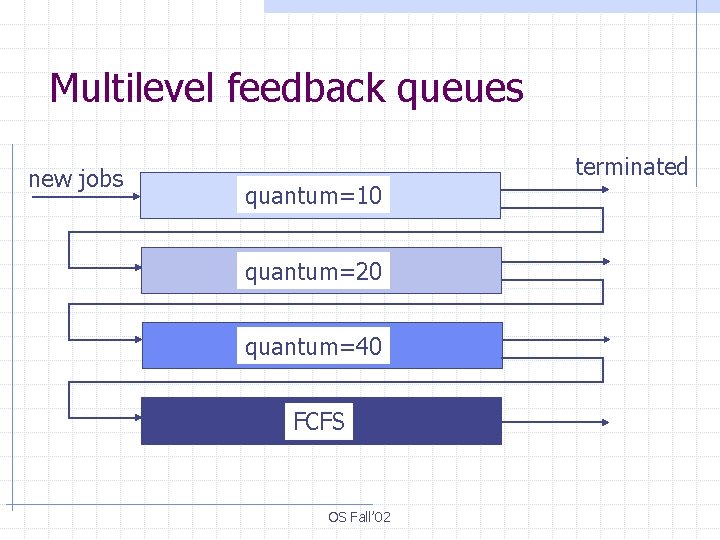

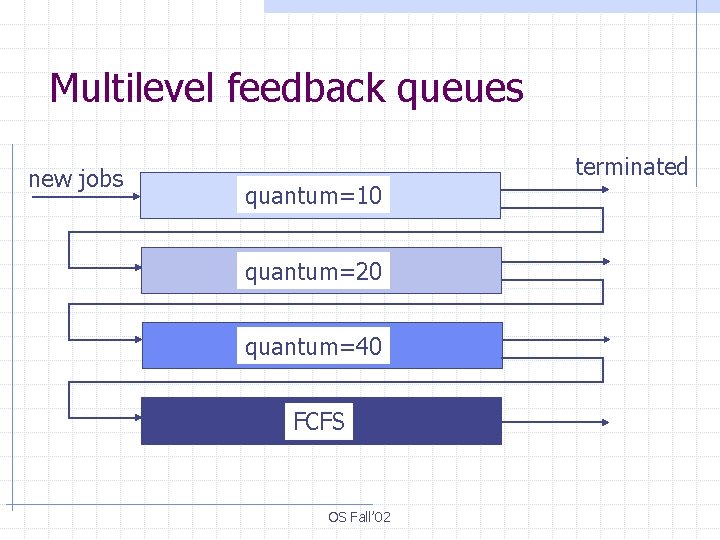

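Multilevel feedback queues

[Figure: new jobs enter the top queue (quantum = 10); a job that uses up its quantum moves down to the next queue (quantum = 20, then quantum = 40); the bottom queue is FCFS; jobs leave the system when they terminate]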

Multilevel feedback queues

- Priorities are implicit in this scheme
- Very flexible
- Starvation is possible
  - Short jobs keep arriving ⇒ long jobs starve
- Solutions:
  - Let it be
  - Aging
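
A multilevel feedback queue sketch with the quanta from the figure (10, 20, 40, then FCFS). It assumes all jobs arrive at t = 0 and uses no aging. A job that uses up its quantum is demoted one level, and the scheduler always serves the highest non-empty queue. The function name `mlfq` is hypothetical.

```python
from collections import deque

QUANTA = [10, 20, 40, None]  # None marks the bottom FCFS queue (run to completion)

def mlfq(runtimes, quanta=QUANTA):
    """All jobs arrive at t=0. Returns {job index: finish time}."""
    queues = [deque() for _ in quanta]
    queues[0].extend(range(len(runtimes)))       # new jobs enter the top queue
    remaining = list(runtimes)
    finish, t = {}, 0
    while any(queues):
        level = next(lv for lv, qu in enumerate(queues) if qu)  # highest non-empty queue
        i = queues[level].popleft()
        quantum = quanta[level]
        run = remaining[i] if quantum is None else min(quantum, remaining[i])
        t += run
        remaining[i] -= run
        if remaining[i] == 0:
            finish[i] = t
        else:                                    # used up its quantum: demote one level
            queues[min(level + 1, len(queues) - 1)].append(i)
    return finish

print(mlfq([100, 5, 30]))  # {1: 15, 2: 65, 0: 135}
```

The 5-unit job finishes in the top queue without ever being demoted. If short jobs kept arriving, the 100-unit job stuck in the lower queues could wait indefinitely, which is the starvation problem above.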

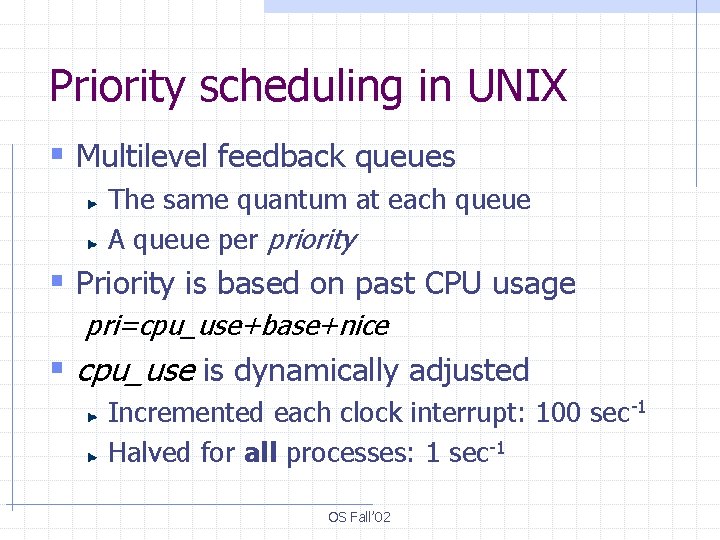

Priority scheduling in UNIX

- Multilevel feedback queues
  - The same quantum at each queue
  - A queue per priority
- Priority is based on past CPU usage
  - pri = cpu_use + base + nice
- cpu_use is dynamically adjusted
  - Incremented on each clock interrupt: 100 times per second
  - Halved for all processes: once per second
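
A toy model of the formula above, assuming 100 clock ticks per second and a once-per-second halving. It lumps a second's ticks together before halving, and the base value of 50 is hypothetical. In classic UNIX a numerically lower pri means a higher priority. A fully CPU-bound process settles near cpu_use = 99, while one that runs for only 5 ticks per second settles near 4, so the I/O-bound process is favored.

```python
HZ = 100  # clock interrupts per second, as on the slide

def priority(cpu_use, base, nice):
    """pri = cpu_use + base + nice; a lower value means a higher priority."""
    return cpu_use + base + nice

def cpu_use_after(seconds, ticks_running_per_sec):
    """cpu_use of a process that is running during ticks_running_per_sec of the HZ ticks each second."""
    if not 0 <= ticks_running_per_sec <= HZ:
        raise ValueError("a process cannot run for more ticks than occur")
    cpu_use = 0
    for _ in range(seconds):
        cpu_use += ticks_running_per_sec  # +1 on every clock interrupt while running
        cpu_use //= 2                     # once per second: halved for all processes
    return cpu_use

BASE = 50  # hypothetical base priority
cpu_bound = priority(cpu_use_after(10, 100), BASE, 0)  # 149
io_bound = priority(cpu_use_after(10, 5), BASE, 0)     # 54
```

Because of the halving, old CPU usage fades quickly, so a process that stops computing regains a good priority within a few seconds.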

Fair Share scheduling

- Achieving predefined goals
  - Administrative considerations
    - Paying for machine usage, importance of the project, personal importance, etc.
  - Quality of service, soft real-time
    - Video, audio

Fair Share scheduling: algorithms

- A term in the priority formula
  - Reflects cumulative CPU usage, with aging when the CPU is not used
- Lottery scheduling
  - Each process gets a number of lottery tickets proportional to its CPU allocation
  - The scheduler picks a ticket at random and gives the CPU to the client holding it
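
A lottery-scheduling sketch (the function `lottery_pick` is hypothetical). Each draw picks one ticket uniformly at random, so over many scheduling decisions a client's share of the CPU approaches its share of the tickets.

```python
import random
from collections import Counter

def lottery_pick(tickets, rng):
    """tickets: dict client -> number of tickets (proportional to its CPU allocation)."""
    winner = rng.randrange(sum(tickets.values()))  # draw one ticket uniformly at random
    for client, count in tickets.items():
        if winner < count:          # the winning ticket belongs to this client
            return client
        winner -= count
    raise ValueError("ticket counts must be non-negative")

rng = random.Random(2002)           # seeded so runs are reproducible
wins = Counter(lottery_pick({"A": 75, "B": 25}, rng) for _ in range(10_000))
print(wins["A"] / 10_000)           # close to 0.75
```

The allocation is only proportional on average: over a short interval a client can win more or less than its share.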

Fair Share scheduling: VTRR

- Virtual Time Round Robin (VTRR)
  - Order the ready queue by decreasing share (the highest share at the head)
  - Run Round Robin as usual
  - Once a process that has exhausted its share is encountered:
    - Go back to the head of the queue
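
A sketch of the simplified rule stated above, not of the full VTRR algorithm, which uses per-client virtual time to decide when to return to the head. Here, as an assumption, each share is an integer number of quanta per round, and a new round starts when even the head of the queue has used its share. Because the queue is sorted by decreasing share, once one process is exhausted, every process behind it is exhausted too, so returning to the head is safe. The function name is hypothetical.

```python
def vtrr_schedule(shares, slots):
    """Simplified VTRR as described on the slide.

    shares: dict process -> quanta it should receive per round (positive ints).
    Returns the first `slots` scheduling decisions.
    """
    queue = sorted(shares, key=shares.get, reverse=True)  # highest share at the head
    used = {p: 0 for p in queue}
    picks, pos = [], 0
    while len(picks) < slots:
        p = queue[pos]
        if used[p] >= shares[p]:          # p has exhausted its share
            if pos == 0:                  # even the head has: start a new round
                used = {q: 0 for q in queue}
            pos = 0                       # go back to the head of the queue
            continue
        picks.append(p)
        used[p] += 1
        pos = (pos + 1) % len(queue)      # ordinary round robin step
    return picks

print(vtrr_schedule({"A": 3, "B": 2, "C": 1}, 6))  # ['A', 'B', 'C', 'A', 'B', 'A']
```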

Multiprocessor Scheduling

- Homogeneous vs. heterogeneous processors
- Homogeneity allows for load sharing
  - A separate ready queue for each processor, or a common ready queue?
- Scheduling
  - Symmetric
  - Master/slave

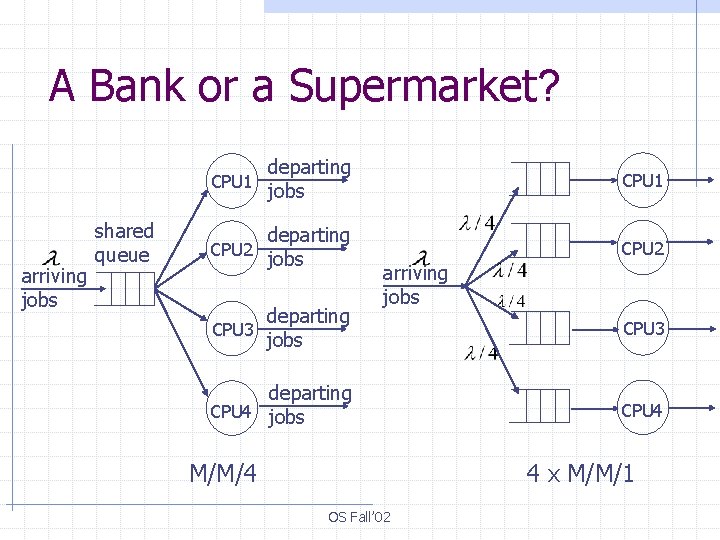

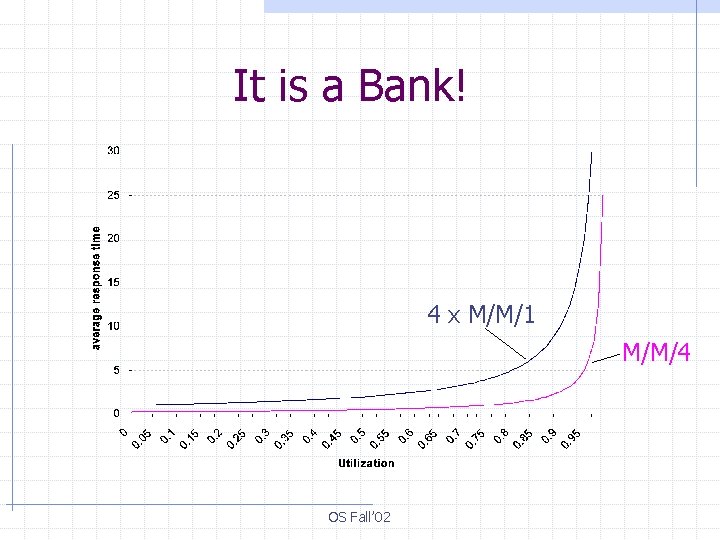

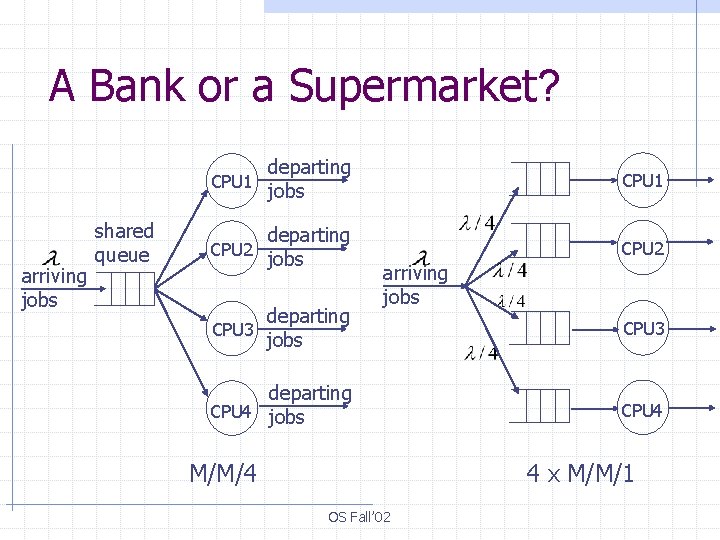

A Bank or a Supermarket?

[Figure: M/M/4 (bank): arriving jobs wait in one shared queue served by CPUs 1 to 4. 4 × M/M/1 (supermarket): each of CPUs 1 to 4 has its own queue of arriving jobs.]

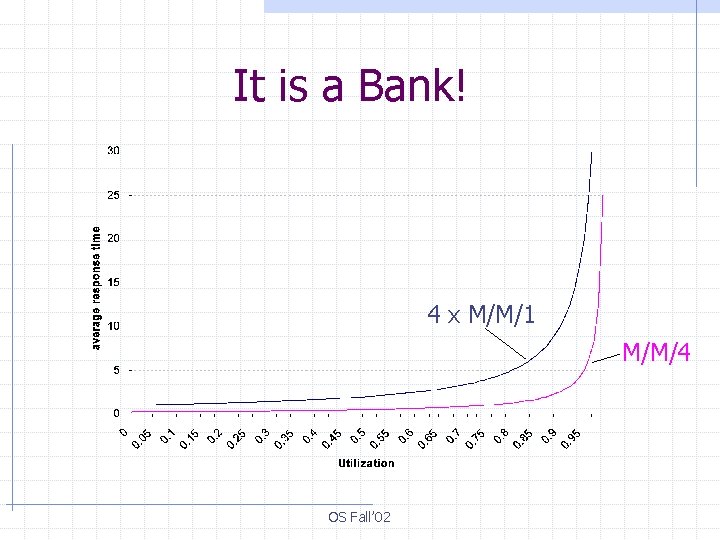

It is a Bank!

- A single shared queue (M/M/4) gives a lower mean response time than four separate queues (4 × M/M/1)
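
The comparison can be computed with standard queueing formulas, assuming Poisson arrivals and exponential service times (the M/M assumptions). The M/M/c mean time in system uses the Erlang C waiting probability. In the supermarket setup, each of the four M/M/1 queues receives a quarter of the arrivals. The function names and the example load (λ = 3.2, μ = 1, so each CPU is 80% busy) are hypothetical.

```python
from math import factorial

def mm1_response(lam, mu):
    """Mean time in system for an M/M/1 queue (requires lam < mu)."""
    return 1 / (mu - lam)

def mmc_response(lam, mu, c):
    """Mean time in system for M/M/c, via the Erlang C waiting probability."""
    a = lam / mu                                  # offered load
    if a >= c:
        raise ValueError("unstable: need lam < c * mu")
    top = a**c / factorial(c) * c / (c - a)
    p_wait = top / (sum(a**k / factorial(k) for k in range(c)) + top)
    return p_wait / (c * mu - lam) + 1 / mu       # queueing delay + service time

lam, mu = 3.2, 1.0
bank = mmc_response(lam, mu, 4)             # one shared queue, 4 CPUs
supermarket = mm1_response(lam / 4, mu)     # 4 separate queues, each gets lam/4
print(round(bank, 2), round(supermarket, 2))  # 1.75 5.0
```

With a shared queue, no CPU sits idle while a job waits in another CPU's line, which is where the roughly threefold difference comes from.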

Next:

- Concurrency