
• Probability theory provides a mathematical framework

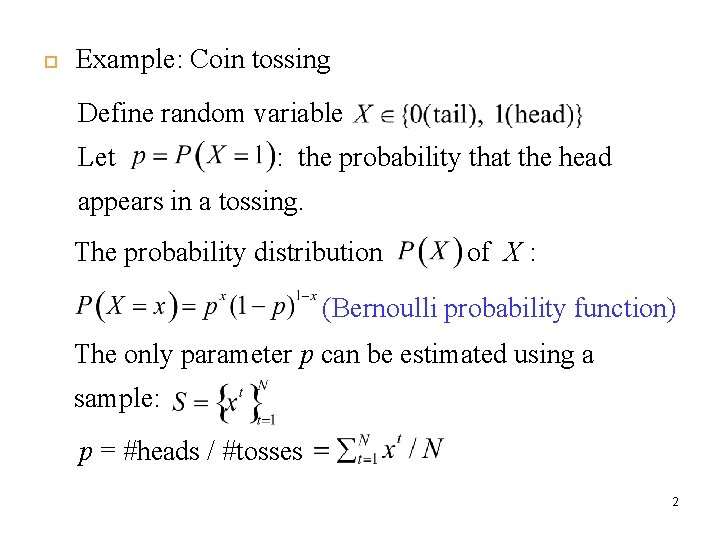

Example: Coin tossing

Define random variable $X \in \{0, 1\}$ (1: head, 0: tail).

Let $p$: the probability that the head appears in a toss.

The probability distribution of $X$ (Bernoulli probability function): $P(X) = p^{X} (1 - p)^{1 - X}$

The only parameter $p$ can be estimated using a sample: $\hat{p} = \#\text{heads} / \#\text{tosses}$
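The estimate #heads / #tosses can be sketched in a few lines of Python. The function names and the sample below are made up for illustration; outcomes are assumed to be coded as 1 for heads and 0 for tails.

```python
def estimate_bernoulli_p(tosses):
    """Estimate p = #heads / #tosses from a list of 0/1 outcomes (1 = heads)."""
    if not tosses:
        raise ValueError("need at least one toss")
    return sum(tosses) / len(tosses)


def bernoulli_pmf(x, p):
    """Bernoulli probability function P(X = x) = p**x * (1 - p)**(1 - x)."""
    return p ** x * (1 - p) ** (1 - x)


# Made-up sample: 6 heads in 10 tosses.
sample = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
p_hat = estimate_bernoulli_p(sample)
print(p_hat)                    # 0.6
print(bernoulli_pmf(1, p_hat))  # 0.6
```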

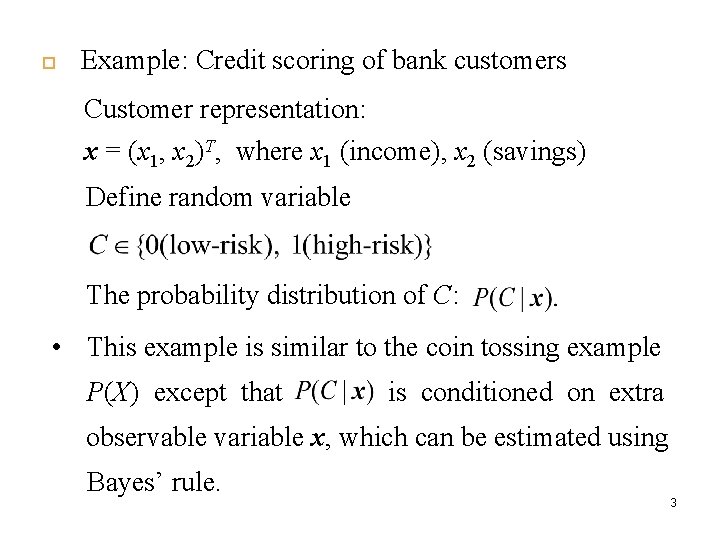

Example: Credit scoring of bank customers

Customer representation: $\mathbf{x} = (x_1, x_2)^T$, where $x_1$: income, $x_2$: savings.

Define random variable $C$: the class of a customer (e.g., high-risk or low-risk).

The probability distribution of $C$: $P(C \mid \mathbf{x})$

• This example is similar to the coin tossing example $P(X)$, except that $P(C \mid \mathbf{x})$ is conditioned on an extra observable variable $\mathbf{x}$ and can be estimated using Bayes' rule.

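Bayes' Rule:

$$P(C \mid \mathbf{x}) = \frac{p(\mathbf{x} \mid C)\, P(C)}{p(\mathbf{x})}, \qquad \text{posterior} = \frac{\text{likelihood} \times \text{prior}}{\text{evidence}}$$

prior probability $P(C)$ -- The probability that a customer belongs to class C regardless of observation x.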

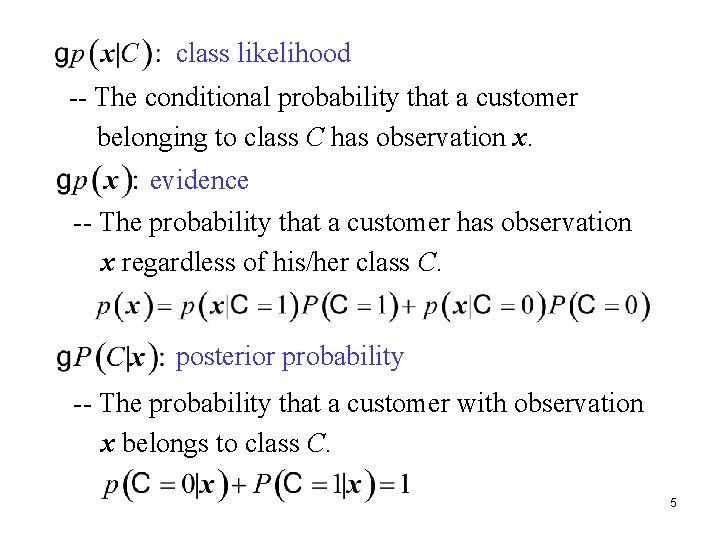

class likelihood $p(\mathbf{x} \mid C)$ -- The conditional probability that a customer belonging to class C has observation x.

evidence $p(\mathbf{x})$ -- The probability that a customer has observation x regardless of his/her class C.

posterior probability $P(C \mid \mathbf{x})$ -- The probability that a customer with observation x belongs to class C.
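To make the four quantities concrete, here is a minimal sketch of Bayes' rule for a discrete set of classes: the posterior is the prior times the class likelihood, divided by the evidence, where the evidence is the sum of prior times likelihood over all classes. The class names and all numbers are hypothetical, chosen only for illustration.

```python
def posterior(priors, likelihoods):
    """Return P(C | x) for each class via Bayes' rule.

    priors[c]      = P(C = c)
    likelihoods[c] = p(x | C = c), evaluated at the observed x
    """
    joint = {c: priors[c] * likelihoods[c] for c in priors}
    evidence = sum(joint.values())  # p(x) = sum over classes of p(x | C) P(C)
    if evidence == 0:
        raise ValueError("observation has zero probability under every class")
    return {c: joint[c] / evidence for c in joint}


# Hypothetical numbers, for illustration only.
priors = {"high_risk": 0.2, "low_risk": 0.8}
likelihoods = {"high_risk": 0.6, "low_risk": 0.1}
post = posterior(priors, likelihoods)
print(post)  # roughly 0.6 for high_risk and 0.4 for low_risk
```

Note how a class that is rare a priori (0.2) can still end up more probable once the observation is much more likely under it.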

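3.2 Classification

Prior probability $P(C)$: Given a sample S, $P(C)$ can be estimated.

Class likelihood $p(\mathbf{x} \mid C)$: can also be estimated from the sample S.

e.g., ML: choose $C = 1$ if $p(\mathbf{x} \mid C = 1) > p(\mathbf{x} \mid C = 0)$, otherwise choose $C = 0$.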

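Posterior probability: $P(C \mid \mathbf{x}) = \dfrac{p(\mathbf{x} \mid C)\, P(C)}{p(\mathbf{x})}$

e.g., MAP: choose $C = 1$ if $P(C = 1 \mid \mathbf{x}) > P(C = 0 \mid \mathbf{x})$, otherwise choose $C = 0$.

Probability of error (PE): $PE = 1 - \max\big(P(C = 1 \mid \mathbf{x}),\; P(C = 0 \mid \mathbf{x})\big)$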
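The difference between the ML and MAP decisions can be seen in a small sketch. ML compares class likelihoods only; MAP weights them by the priors and compares posteriors, and the probability of error is one minus the largest posterior. Function names and numbers are hypothetical.

```python
def ml_decision(likelihoods):
    """Maximum likelihood: pick the class with the largest p(x | C)."""
    return max(likelihoods, key=likelihoods.get)


def map_decision(priors, likelihoods):
    """Maximum a posteriori: pick the class with the largest P(C | x).

    Returns the chosen class and the probability of error 1 - max posterior.
    """
    joint = {c: priors[c] * likelihoods[c] for c in priors}
    evidence = sum(joint.values())
    post = {c: joint[c] / evidence for c in joint}
    best = max(post, key=post.get)
    return best, 1.0 - post[best]


# Hypothetical two-class problem: class 1 is rare but fits x better.
priors = {1: 0.1, 0: 0.9}
likelihoods = {1: 0.6, 0: 0.2}

ml_choice = ml_decision(likelihoods)                   # class 1
map_choice, prob_error = map_decision(priors, likelihoods)  # class 0, PE about 0.25
print(ml_choice, map_choice, prob_error)
```

With these numbers the two rules disagree: the likelihood favours class 1, but the strong prior for class 0 gives it the larger posterior.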

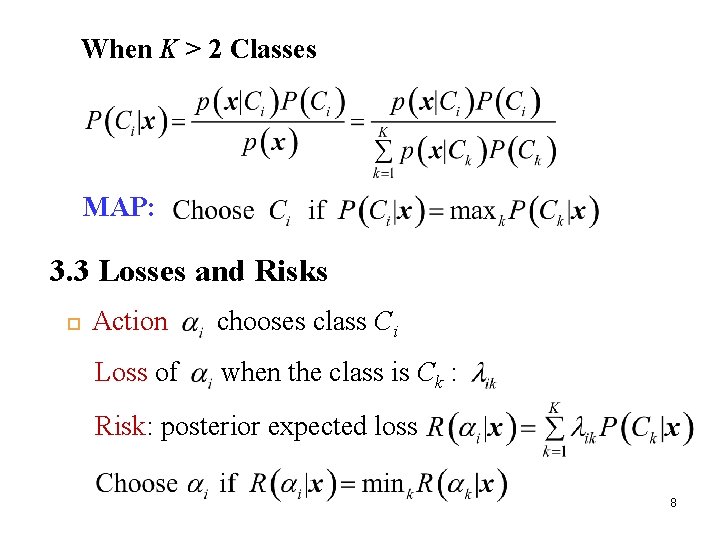

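When K > 2 classes:

$$P(C_i \mid \mathbf{x}) = \frac{p(\mathbf{x} \mid C_i)\, P(C_i)}{\sum_{k=1}^{K} p(\mathbf{x} \mid C_k)\, P(C_k)}$$

MAP: choose $C_i$ if $P(C_i \mid \mathbf{x}) = \max_k P(C_k \mid \mathbf{x})$

3.3 Losses and Risks

Action $\alpha_i$: chooses class $C_i$.

Loss of $\alpha_i$ when the class is $C_k$: $\lambda_{ik}$

Risk (posterior expected loss): $R(\alpha_i \mid \mathbf{x}) = \sum_{k=1}^{K} \lambda_{ik}\, P(C_k \mid \mathbf{x})$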

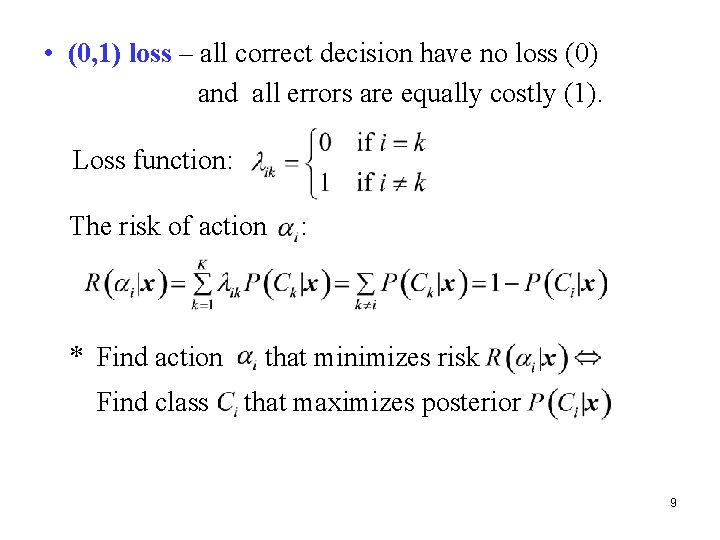

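• (0, 1) loss: all correct decisions have no loss (0) and all errors are equally costly (1).

Loss function: $\lambda_{ik} = \begin{cases} 0 & \text{if } i = k \\ 1 & \text{if } i \neq k \end{cases}$

The risk of action $\alpha_i$: $R(\alpha_i \mid \mathbf{x}) = \sum_{k \neq i} P(C_k \mid \mathbf{x}) = 1 - P(C_i \mid \mathbf{x})$

* Finding the action $\alpha_i$ that minimizes risk is equivalent to finding the class $C_i$ that maximizes the posterior.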
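A short sketch can check that, under (0, 1) loss, the minimum-risk action is the class with the maximum posterior. The risk of each action is computed as the loss-weighted sum of the posteriors; the helper names and the posterior values are illustrative assumptions.

```python
def expected_risks(loss, posteriors):
    """R(a_i | x) = sum_k loss[i][k] * P(C_k | x), for every action i."""
    return [sum(l_ik * p_k for l_ik, p_k in zip(row, posteriors)) for row in loss]


def zero_one_loss(k):
    """K x K loss matrix: 0 on the diagonal (correct), 1 elsewhere (error)."""
    return [[0 if i == j else 1 for j in range(k)] for i in range(k)]


# Hypothetical posteriors for three classes.
posteriors = [0.2, 0.5, 0.3]
risks = expected_risks(zero_one_loss(3), posteriors)

best_action = min(range(len(risks)), key=risks.__getitem__)
best_class = max(range(len(posteriors)), key=posteriors.__getitem__)
print(risks, best_action, best_class)  # each risk equals 1 - posterior
```

Passing a different loss matrix to `expected_risks` gives the general case, where the minimum-risk action need not be the most probable class.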

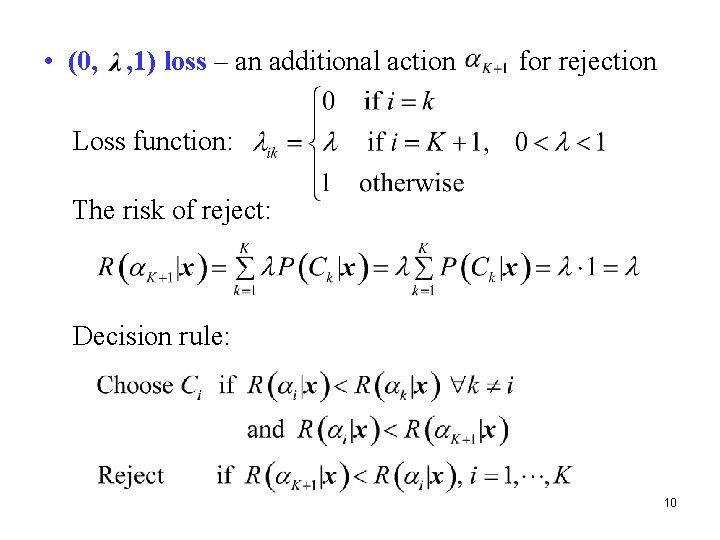

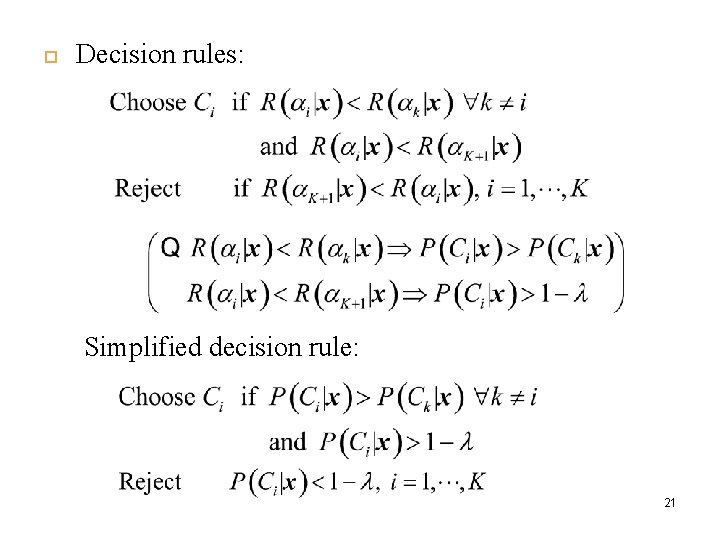

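• (0, λ, 1) loss: an additional action $\alpha_{K+1}$ for rejection.

Loss function: $\lambda_{ik} = \begin{cases} 0 & \text{if } i = k \\ \lambda & \text{if } i = K + 1 \\ 1 & \text{otherwise} \end{cases}, \quad 0 < \lambda < 1$

The risk of reject: $R(\alpha_{K+1} \mid \mathbf{x}) = \sum_{k=1}^{K} \lambda\, P(C_k \mid \mathbf{x}) = \lambda$

Decision rule: choose $C_i$ if $R(\alpha_i \mid \mathbf{x}) < R(\alpha_k \mid \mathbf{x})$ for all $k \neq i$ and $R(\alpha_i \mid \mathbf{x}) < R(\alpha_{K+1} \mid \mathbf{x})$; reject otherwise.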

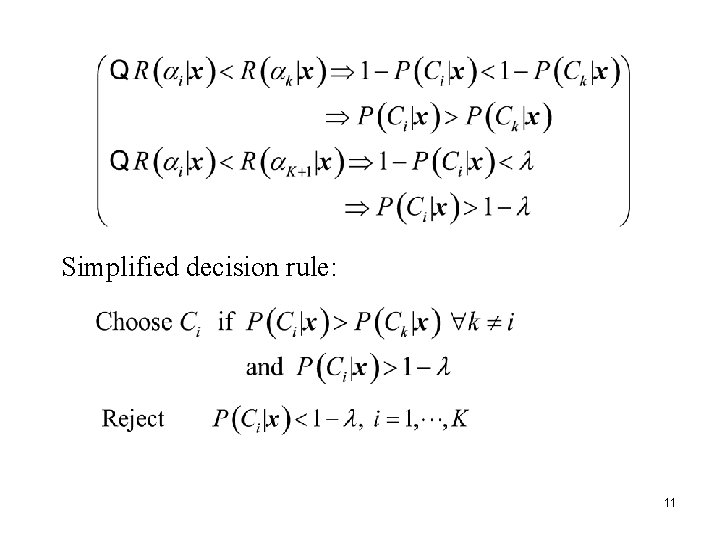

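Simplified decision rule: choose $C_i$ if $P(C_i \mid \mathbf{x}) > P(C_k \mid \mathbf{x})$ for all $k \neq i$ and $P(C_i \mid \mathbf{x}) > 1 - \lambda$; reject otherwise.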
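In its simplified form, the rule with a reject option picks the class with the largest posterior, but only if that posterior exceeds 1 − λ, where λ is the loss of rejecting; otherwise it rejects. A minimal sketch, assuming ties are broken by taking the first maximal class and using made-up posteriors:

```python
def decide_with_reject(posteriors, reject_loss):
    """Return the index of the chosen class, or None to reject.

    reject_loss is the loss of the reject action and must satisfy
    0 < reject_loss < 1.
    """
    if not 0 < reject_loss < 1:
        raise ValueError("reject_loss must lie strictly between 0 and 1")
    best = max(range(len(posteriors)), key=posteriors.__getitem__)
    if posteriors[best] > 1 - reject_loss:
        return best
    return None  # not confident enough: reject


# Hypothetical posteriors, reject loss 0.3 (so the threshold is 0.7).
confident = decide_with_reject([0.9, 0.1], 0.3)    # 0
uncertain = decide_with_reject([0.55, 0.45], 0.3)  # None
print(confident, uncertain)
```

A small reject loss makes rejection cheap, so the classifier commits only when it is very sure; as the loss approaches 1, it almost never rejects.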

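3.4 Discriminant Functions

• Discriminant functions: $g_i(\mathbf{x}),\; i = 1, \dots, K$; choose $C_i$ if $g_i(\mathbf{x}) = \max_k g_k(\mathbf{x})$.

Example: For the Bayes' classifier, $g_i(\mathbf{x}) = -R(\alpha_i \mid \mathbf{x})$; with (0, 1) loss this reduces to $g_i(\mathbf{x}) = P(C_i \mid \mathbf{x})$, or equivalently $g_i(\mathbf{x}) = p(\mathbf{x} \mid C_i)\, P(C_i)$.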

• The above examples define a discriminant function for each class. Discriminant functions may also be defined for boundaries between classes.

Example: Two classes. A single discriminant function is defined for the two classes as $g(\mathbf{x}) = g_1(\mathbf{x}) - g_2(\mathbf{x})$; choose $C_1$ if $g(\mathbf{x}) > 0$, and $C_2$ otherwise.
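For two classes, one common construction takes the single discriminant as the difference of the per-class discriminants and decides by its sign. The sketch below assumes that form, with each per-class discriminant equal to prior times likelihood; the discrete observation values and all probabilities are hypothetical.

```python
def two_class_discriminant(g1, g2):
    """Combine per-class discriminants into a single g(x) = g1(x) - g2(x)."""
    return lambda x: g1(x) - g2(x)


def classify(g, x):
    """Choose C1 where g(x) > 0 and C2 otherwise."""
    return "C1" if g(x) > 0 else "C2"


# Hypothetical per-class discriminants g_i(x) = P(C_i) * p(x | C_i),
# with x a discrete savings level.
g1 = lambda x: 0.3 * {"low": 0.8, "high": 0.2}[x]
g2 = lambda x: 0.7 * {"low": 0.25, "high": 0.75}[x]
g = two_class_discriminant(g1, g2)

print(classify(g, "low"))   # C1
print(classify(g, "high"))  # C2
```

The set of points where g(x) = 0 is the decision boundary between the two classes.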

3.5 Association Rules

An association rule is often expressed as a conditional probability $P(Y \mid X)$, graphically $X \rightarrow Y$, where X: antecedent (predecessor), Y: consequent (successor). It describes the dependency of Y on X.

Three measures of preference of rules: support, confidence, and interest.

Consider customers of a supermarket. Let X: buying milk, Y: buying chocolate.

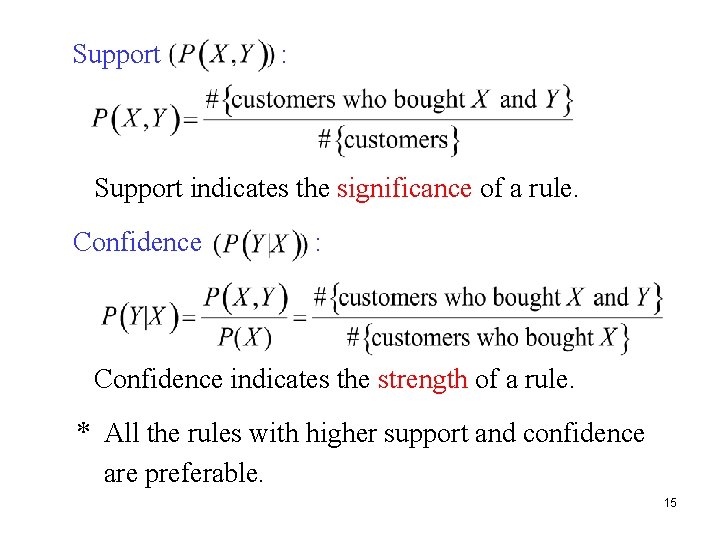

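Support: $\text{Support}(X, Y) = P(X, Y) = \dfrac{\#\{\text{customers who bought X and Y}\}}{\#\{\text{customers}\}}$. Support indicates the significance of a rule.

Confidence: $\text{Confidence}(X \rightarrow Y) = P(Y \mid X) = \dfrac{P(X, Y)}{P(X)} = \dfrac{\#\{\text{customers who bought X and Y}\}}{\#\{\text{customers who bought X}\}}$. Confidence indicates the strength of a rule.

* Rules with higher support and higher confidence are preferable.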

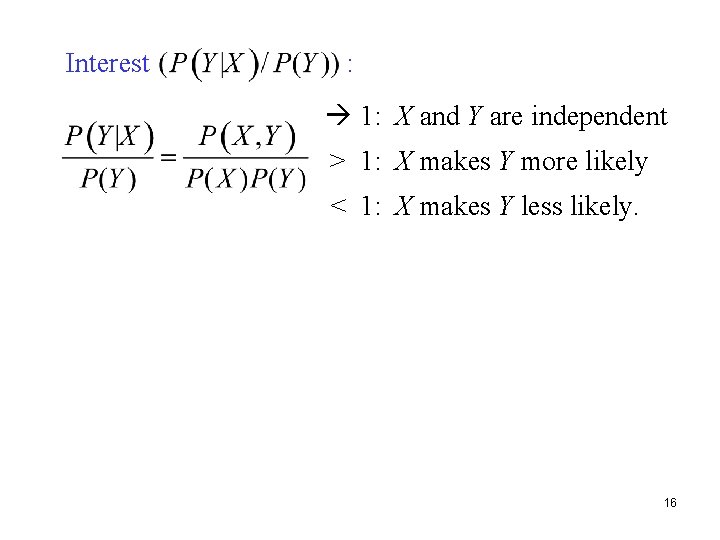

Interest: $\text{Interest}(X \rightarrow Y) = \dfrac{P(X, Y)}{P(X)\, P(Y)} = \dfrac{P(Y \mid X)}{P(Y)}$

= 1: X and Y are independent.
> 1: X makes Y more likely.
< 1: X makes Y less likely.
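All three measures can be computed by counting baskets. The sketch below uses the standard definitions: support is the fraction of baskets containing both X and Y, confidence is that count divided by the number of baskets containing X, and interest is support divided by P(X) P(Y). The eight baskets are made up for illustration.

```python
def rule_measures(transactions, x, y):
    """Return (support, confidence, interest) for the rule x -> y."""
    n = len(transactions)
    n_x = sum(1 for t in transactions if x in t)
    n_y = sum(1 for t in transactions if y in t)
    n_xy = sum(1 for t in transactions if x in t and y in t)
    if n == 0 or n_x == 0 or n_y == 0:
        raise ValueError("x and y must each occur in at least one transaction")
    support = n_xy / n                        # P(X, Y)
    confidence = n_xy / n_x                   # P(Y | X)
    interest = support / ((n_x / n) * (n_y / n))  # P(X, Y) / (P(X) P(Y))
    return support, confidence, interest


# Made-up supermarket baskets.
baskets = [
    {"milk", "chocolate"},
    {"milk", "chocolate", "bread"},
    {"milk"},
    {"milk", "bread"},
    {"chocolate"},
    {"bread"},
    {"milk", "chocolate"},
    {"bread", "eggs"},
]
support, confidence, interest = rule_measures(baskets, "milk", "chocolate")
print(support, confidence, interest)  # 3/8, 3/5, and a value above 1
```

Here milk appears in 5 baskets, chocolate in 4, and both in 3, so the interest is above 1: in this toy data, buying milk makes buying chocolate more likely.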

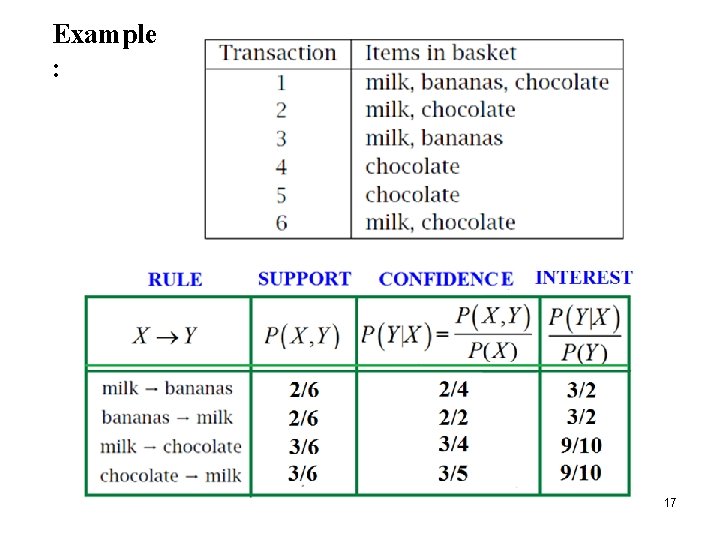

Example:

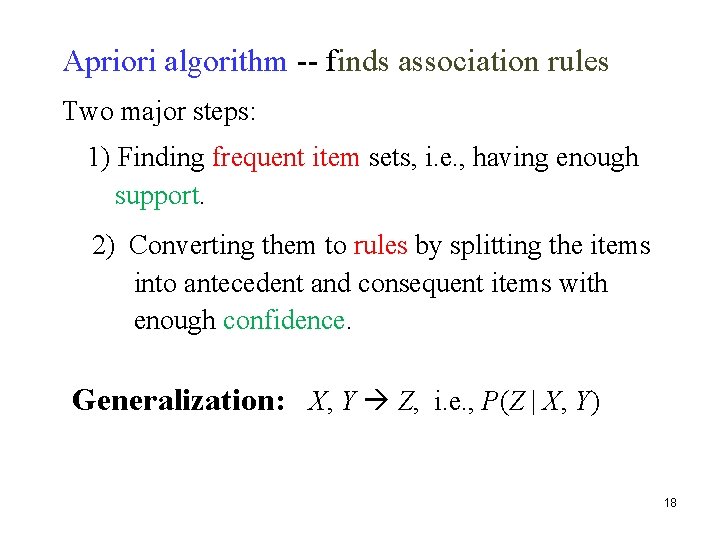

Apriori algorithm -- finds association rules

Two major steps:

1) Finding frequent item sets, i.e., those having enough support.

2) Converting them to rules with enough confidence, by splitting the items into antecedent and consequent items.

Generalization: $X, Y \rightarrow Z$, i.e., $P(Z \mid X, Y)$
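The two steps can be sketched as follows. This is a simplified, unoptimized illustration rather than a reference implementation: candidate item sets are grown level by level and kept only if all their subsets are frequent, then each frequent set is split into antecedent and consequent. The baskets and both thresholds are made up.

```python
from itertools import combinations


def frequent_itemsets(transactions, min_support):
    """Step 1: level-wise search for item sets with support >= min_support."""
    baskets = [frozenset(t) for t in transactions]
    n = len(baskets)

    def support(itemset):
        return sum(1 for b in baskets if itemset <= b) / n

    current = {frozenset([item]) for b in baskets for item in b}
    frequent = {}
    k = 1
    while current:
        level = {c: support(c) for c in current}
        level = {c: s for c, s in level.items() if s >= min_support}
        frequent.update(level)
        k += 1
        # Join frequent (k-1)-sets into k-sets; keep a candidate only if
        # every (k-1)-subset is frequent (the Apriori pruning property).
        current = set()
        for a in level:
            for b in level:
                u = a | b
                if len(u) == k and all(
                    frozenset(s) in level for s in combinations(u, k - 1)
                ):
                    current.add(u)
    return frequent


def association_rules(frequent, min_confidence):
    """Step 2: split each frequent set into antecedent -> consequent."""
    rules = []
    for itemset, s in frequent.items():
        for r in range(1, len(itemset)):
            for antecedent in map(frozenset, combinations(itemset, r)):
                confidence = s / frequent[antecedent]
                if confidence >= min_confidence:
                    rules.append((antecedent, itemset - antecedent, s, confidence))
    return rules


# Made-up supermarket baskets and thresholds.
baskets = [
    {"milk", "chocolate"}, {"milk", "chocolate", "bread"}, {"milk"},
    {"milk", "bread"}, {"chocolate"}, {"bread"},
    {"milk", "chocolate"}, {"bread", "eggs"},
]
found = association_rules(frequent_itemsets(baskets, 0.3), 0.7)
for antecedent, consequent, s, c in found:
    print(set(antecedent), "->", set(consequent), s, c)
```

On this data the only frequent pair is {milk, chocolate} (support 3/8). The rule chocolate → milk has confidence 0.75 and passes the 0.7 threshold, while milk → chocolate (confidence 0.6) does not.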

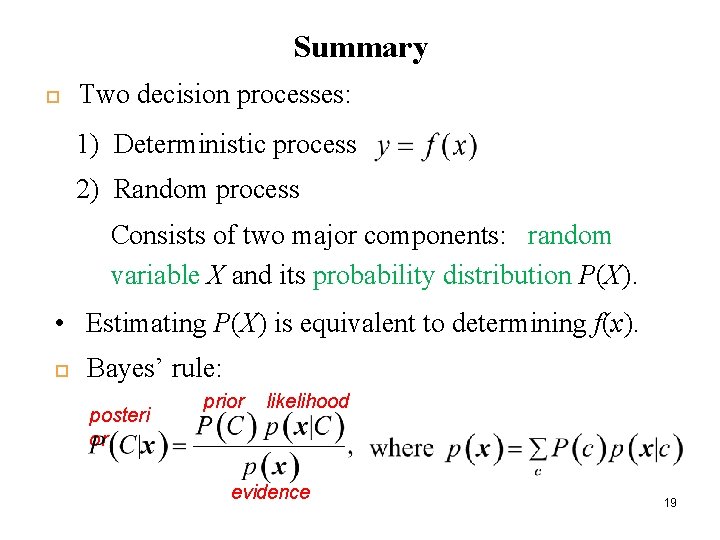

Summary

Two decision processes:

1) Deterministic process

2) Random process: consists of two major components, a random variable X and its probability distribution P(X).

• Estimating P(X) is equivalent to determining f(x).

Bayes' rule: $P(C \mid \mathbf{x}) = \dfrac{P(C)\, p(\mathbf{x} \mid C)}{p(\mathbf{x})}$, i.e., posterior = prior × likelihood / evidence

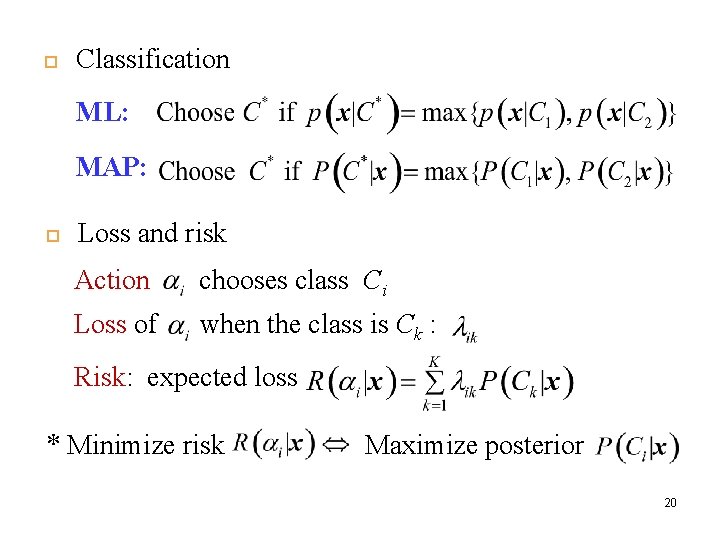

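Classification

ML: choose the class $C_i$ that maximizes the class likelihood $p(\mathbf{x} \mid C_i)$.

MAP: choose the class $C_i$ that maximizes the posterior $P(C_i \mid \mathbf{x})$.

Loss and risk

Action $\alpha_i$: chooses class $C_i$.

Loss of $\alpha_i$ when the class is $C_k$: $\lambda_{ik}$

Risk (expected loss): $R(\alpha_i \mid \mathbf{x}) = \sum_{k=1}^{K} \lambda_{ik}\, P(C_k \mid \mathbf{x})$

* Under (0, 1) loss, minimizing risk is equivalent to maximizing the posterior.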

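Decision rule (with reject action $\alpha_{K+1}$): choose $C_i$ if $R(\alpha_i \mid \mathbf{x}) < R(\alpha_k \mid \mathbf{x})$ for all $k \neq i$ and $R(\alpha_i \mid \mathbf{x}) < R(\alpha_{K+1} \mid \mathbf{x})$; reject otherwise.

Simplified decision rule: choose $C_i$ if $P(C_i \mid \mathbf{x}) > P(C_k \mid \mathbf{x})$ for all $k \neq i$ and $P(C_i \mid \mathbf{x}) > 1 - \lambda$; reject otherwise.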

Discriminant functions

Measures of preference of association rules:

Support: indicates the significance of a rule.

Confidence: indicates the strength of a rule.

Interest:
= 1: X and Y are independent.
> 1: X makes Y more likely.
< 1: X makes Y less likely.

Apriori algorithm -- looks for association rules

Two major steps:

1) Finding frequent item sets, i.e., those having enough support.

2) Converting them to rules with enough confidence, by splitting the items into antecedent and consequent items.

Generalization: $X, Y \rightarrow Z$, i.e., $P(Z \mid X, Y)$
