Priority layered approach to Transport protocol for Long fat networks

- Slides: 37

Priority layered approach to Transport protocol for Long fat networks
Vidhyashankar Venkataraman, Cornell University
18th December 2007

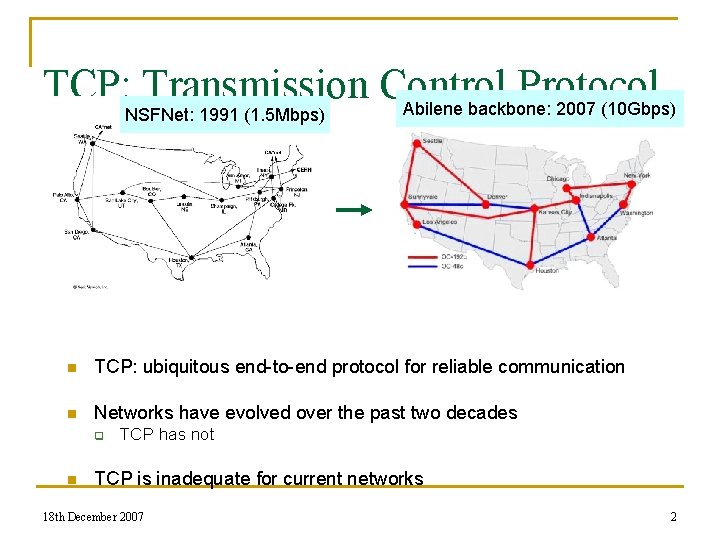

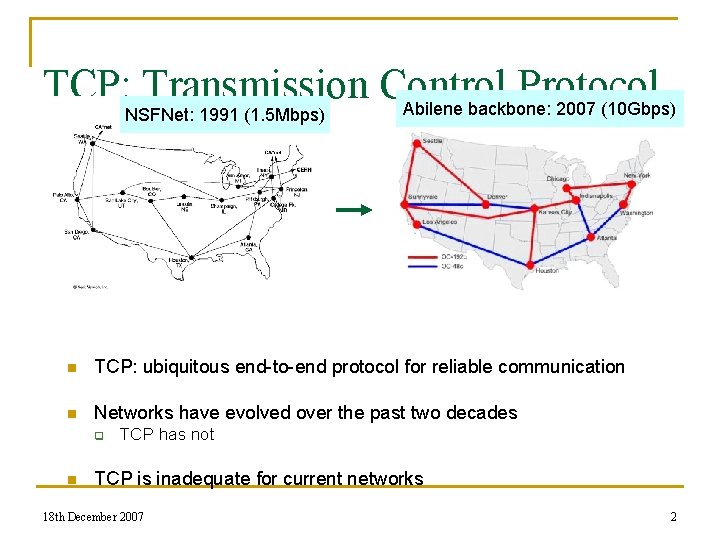

TCP: Transmission Control Protocol
(Figures: NSFNet, 1991, 1.5 Mbps; Abilene backbone, 2007, 10 Gbps)
- TCP: ubiquitous end-to-end protocol for reliable communication
- Networks have evolved over the past two decades
  - TCP has not
- TCP is inadequate for current networks

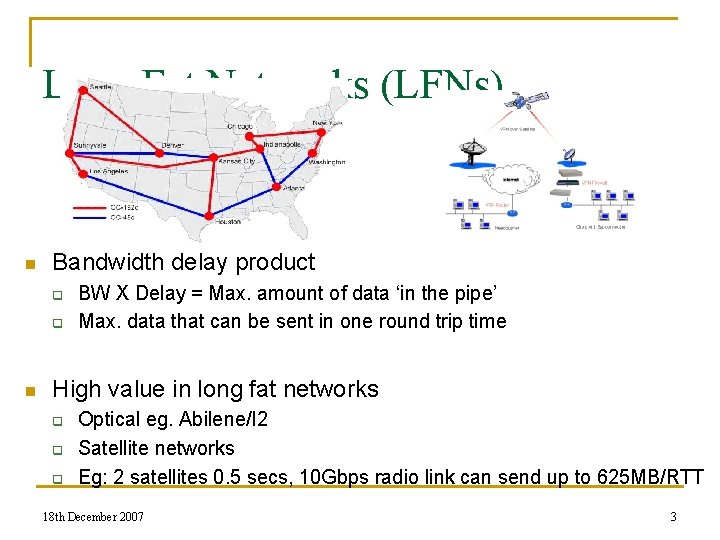

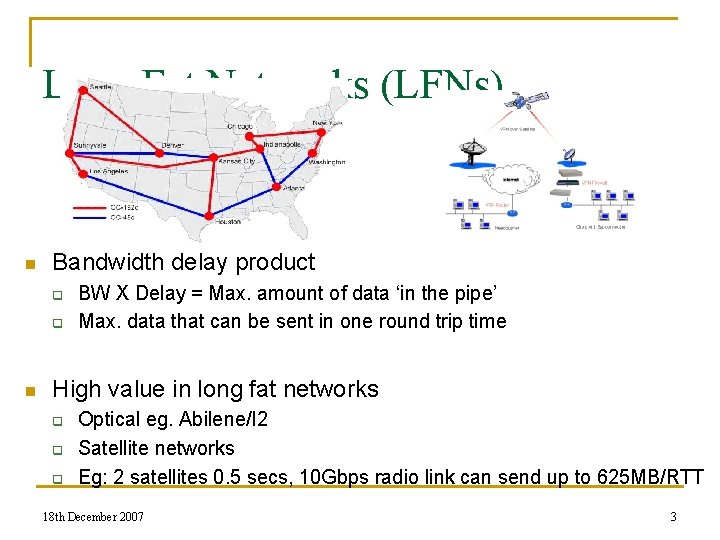

Long Fat Networks (LFNs)
- Bandwidth-delay product
  - BW × Delay = max. amount of data 'in the pipe'
  - Max. data that can be sent in one round-trip time
- High value in long fat networks
  - Optical, e.g. Abilene/I2
  - Satellite networks
  - E.g.: 2 satellites, 0.5 s, 10 Gbps radio link: can send up to 625 MB/RTT
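As a quick check of the satellite example, the bandwidth-delay product can be computed directly. This sketch assumes, as the slide does, that the 0.5 s figure is the round-trip time, and that MB means 10^6 bytes; `bdp_bytes` is a hypothetical helper name.

```python
def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that can be 'in the pipe' during one RTT."""
    return bandwidth_bps * rtt_s / 8  # convert bits to bytes


# Satellite example from the slide: 10 Gbps radio link, 0.5 s round trip
satellite = bdp_bytes(10e9, 0.5)
print(f"{satellite / 1e6:.0f} MB per RTT")  # prints "625 MB per RTT"
```

The same helper gives 125 MB for a 10 Gbps path with a 100 ms RTT, which is why TCP windows in LFNs must be so large.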

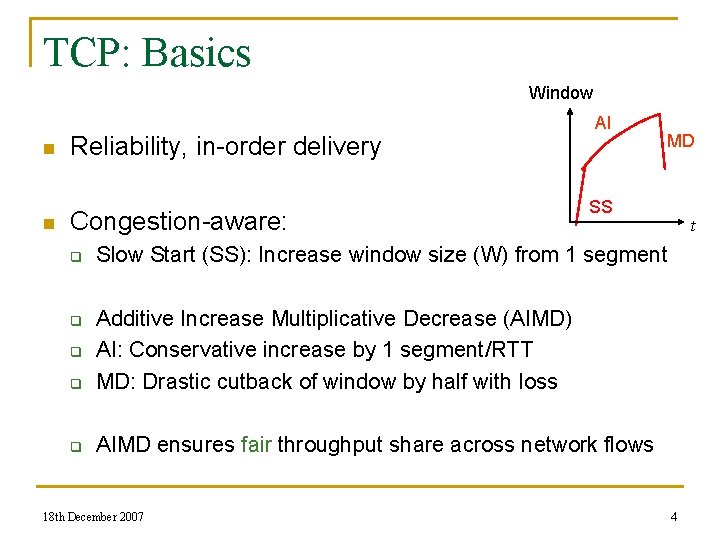

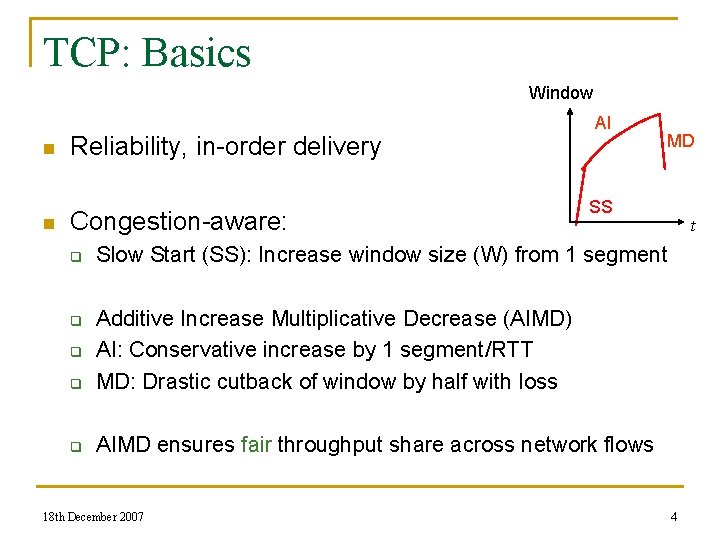

TCP: Basics
(Figure: window size vs. time, showing the SS, AI and MD phases)
- Reliability, in-order delivery
- Congestion-aware:
  - Slow Start (SS): increase window size (W) from 1 segment, doubling it every RTT
  - Additive Increase Multiplicative Decrease (AIMD)
    - AI: conservative increase by 1 segment/RTT
    - MD: drastic cutback of window by half on loss
  - AIMD ensures a fair throughput share across network flows
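A minimal per-RTT sketch of how SS and AIMD shape the window over time. The `ssthresh` value and the RTT at which a loss is detected are hypothetical inputs, and the model ignores timeouts and fast-recovery details.

```python
def window_trace(rtts, ssthresh, loss_rtts):
    """Congestion window (in segments) at the start of each RTT.

    ssthresh: window at which slow start hands over to additive increase.
    loss_rtts: RTT indices at which a loss is detected.
    """
    w = 1.0  # slow start begins from 1 segment
    trace = []
    for t in range(rtts):
        trace.append(w)
        if t in loss_rtts:
            w = max(1.0, w / 2)       # MD: halve the window on loss
            ssthresh = w              # continue in additive increase afterwards
        elif w < ssthresh:
            w = min(2 * w, ssthresh)  # SS: double every RTT, capped at ssthresh
        else:
            w += 1                    # AI: one segment per RTT
    return trace


print(window_trace(12, ssthresh=16, loss_rtts={8}))
# [1.0, 2.0, 4.0, 8.0, 16.0, 17.0, 18.0, 19.0, 20.0, 10.0, 11.0, 12.0]
```

The trace shows the characteristic sawtooth: exponential growth, a slow linear climb, and a halving on loss.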

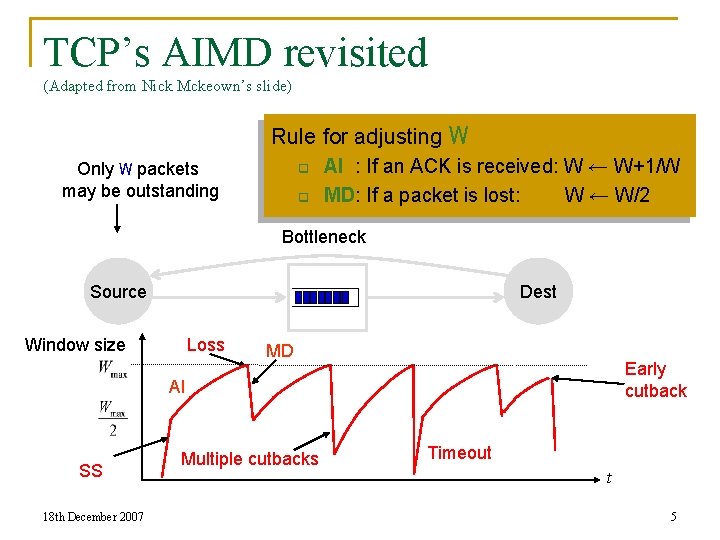

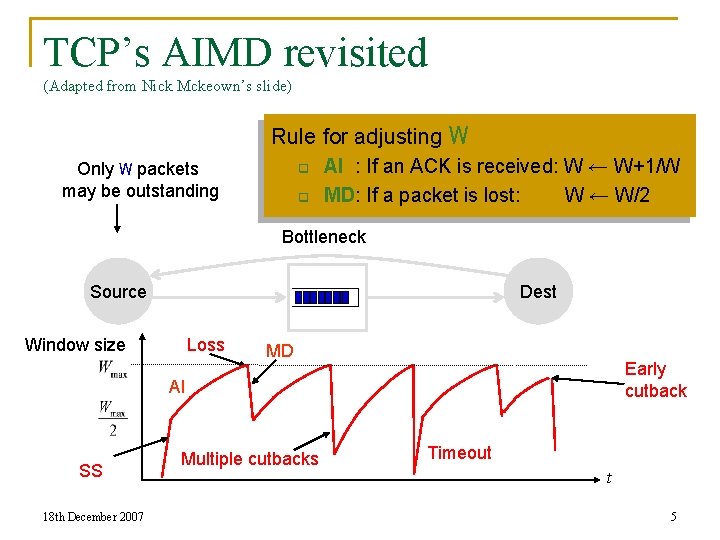

TCP's AIMD revisited (adapted from Nick McKeown's slide)
(Figure: source, bottleneck and destination; window size vs. time showing SS, AI, MD on loss, early cutback, multiple cutbacks and a timeout)
- Only W packets may be outstanding
- Rule for adjusting W:
  - AI: if an ACK is received: W ← W + 1/W
  - MD: if a packet is lost: W ← W/2
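Because roughly W ACKs arrive per RTT when W packets are outstanding, the per-ACK rule W ← W + 1/W adds about one segment per RTT. A small sketch with hypothetical helper names:

```python
def one_rtt_of_acks(w: float) -> float:
    """Apply the AI rule W <- W + 1/W once per ACK, for one window's worth of ACKs."""
    acks = int(w)  # about W ACKs arrive per RTT when W packets are outstanding
    for _ in range(acks):
        w += 1.0 / w
    return w


def on_loss(w: float) -> float:
    """MD rule: halve the window when a packet is lost."""
    return w / 2


print(one_rtt_of_acks(100.0))  # slightly below 101: about +1 segment per RTT
print(on_loss(100.0))          # 50.0
```

The increment per RTT is slightly less than one because W grows as the ACKs arrive, but for large windows the difference is negligible.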

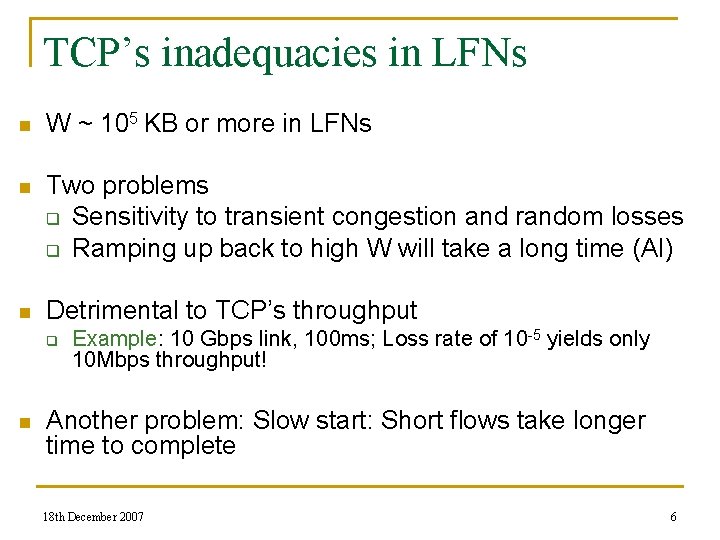

TCP's inadequacies in LFNs
- $W \sim 10^5$ KB or more in LFNs
- Two problems
  - Sensitivity to transient congestion and random losses
  - Ramping back up to a high W takes a long time (AI)
- Detrimental to TCP's throughput
  - Example: 10 Gbps link, 100 ms; a loss rate of $10^{-5}$ yields only 10 Mbps throughput!
- Another problem: slow start: short flows take longer to complete
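To see why ramping back up with AI takes so long, the sketch below estimates how many RTTs additive increase needs to regrow a window from W/2 to W after a single loss. It assumes 1500-byte segments, a window exactly equal to the bandwidth-delay product of a 10 Gbps path with a 100 ms RTT, and no further losses during recovery; `ai_recovery_seconds` is a hypothetical helper.

```python
import math


def ai_recovery_seconds(link_bps, rtt_s, segment_bytes=1500):
    """Time for AI (+1 segment per RTT) to regrow the window from W/2 back to W."""
    w_segments = link_bps * rtt_s / (8 * segment_bytes)  # window that just fills the pipe
    rtts_needed = math.ceil(w_segments / 2)              # gain 1 segment per RTT
    return rtts_needed * rtt_s


t = ai_recovery_seconds(10e9, 0.1)
print(f"{t / 60:.0f} minutes")  # prints "69 minutes"
```

Under these assumptions W is about 83,000 segments, so recovery takes about 41,700 RTTs, a little over an hour spent below capacity after one loss.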

Alternate Transport Solutions
Taxonomy based on the congestion signal to the end host:
- Congestion control in LFNs
  - Explicit
    - Explicit notification from routers
    - XCP
  - End-to-end (like TCP)
    - Loss
      - Loss: signal for congestion
      - CUBIC, HS-TCP, STCP
    - Implicit: delay
      - RTT increase: signal for congestion (queue builds up)
      - FAST

General idea: a window growth curve 'better' than AIMD

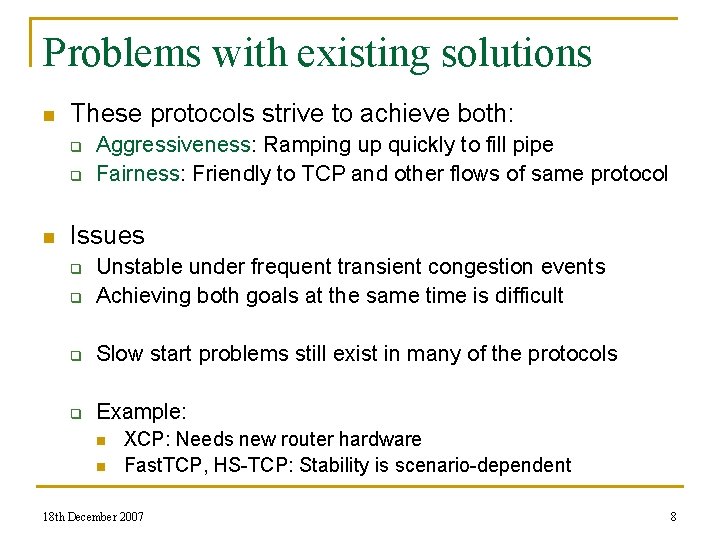

Problems with existing solutions
- These protocols strive to achieve both:
  - Aggressiveness: ramping up quickly to fill the pipe
  - Fairness: friendly to TCP and to other flows of the same protocol
- Issues
  - Unstable under frequent transient congestion events
  - Achieving both goals at the same time is difficult
  - Slow-start problems still exist in many of the protocols
  - Examples:
    - XCP: needs new router hardware
    - FAST TCP, HS-TCP: stability is scenario-dependent

A new transport protocol
- Need: "good" aggressiveness without loss in fairness
  - "good": near-100% bottleneck utilization
- Strike this balance without requiring any new network support

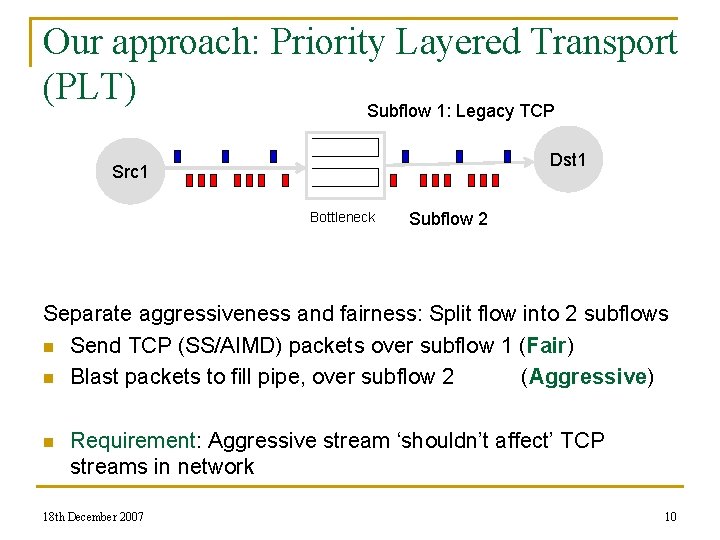

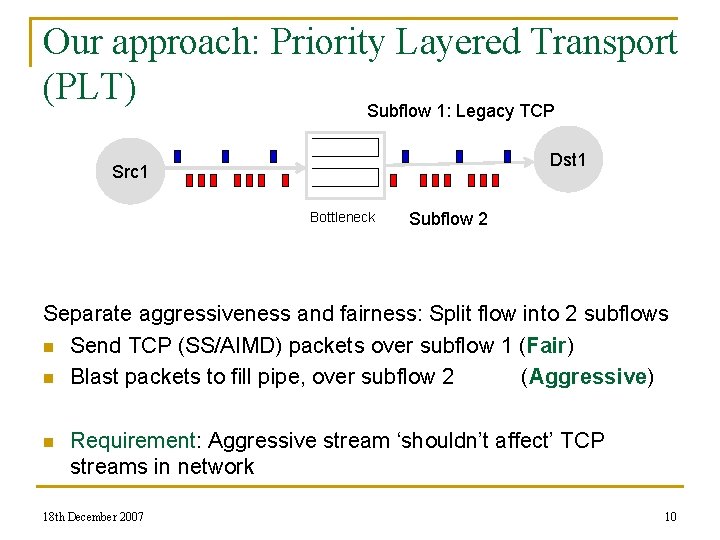

Our approach: Priority Layered Transport (PLT)
(Figure: Src 1 to Dst 1 through a bottleneck, carrying subflow 1 (legacy TCP) and subflow 2)
- Separate aggressiveness and fairness: split the flow into 2 subflows
- Send TCP (SS/AIMD) packets over subflow 1 (fair)
- Blast packets to fill the pipe over subflow 2 (aggressive)
- Requirement: the aggressive stream 'shouldn't affect' TCP streams in the network

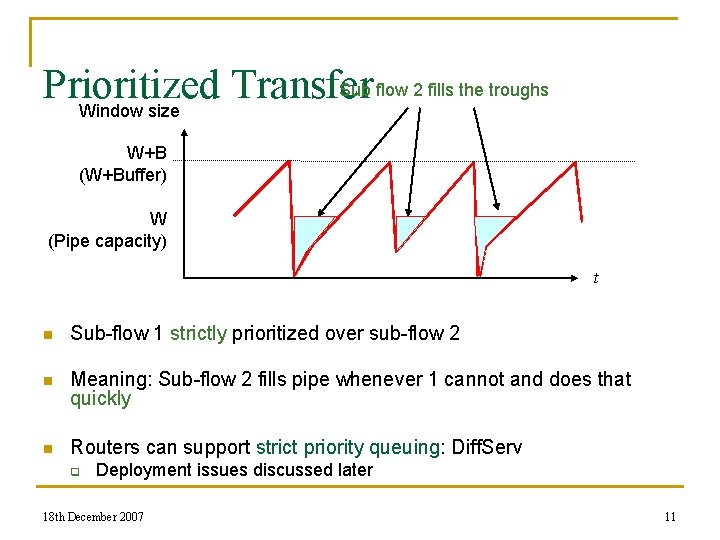

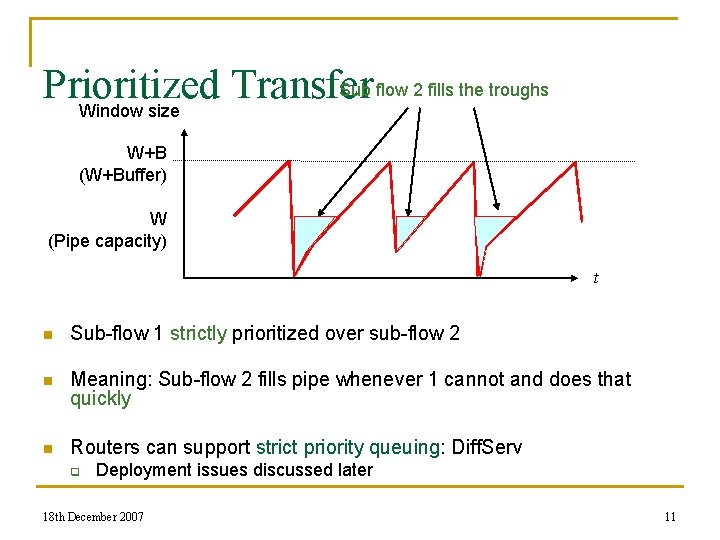

Subflow 2 fills the troughs
(Figure: prioritized transfer; window size vs. time, with levels W (pipe capacity) and W+B (pipe capacity plus buffer))
- Subflow 1 is strictly prioritized over subflow 2
- Meaning: subflow 2 fills the pipe whenever subflow 1 cannot, and does so quickly
- Routers can support strict priority queuing: DiffServ
  - Deployment issues discussed later
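Strict priority queuing at the bottleneck is what lets subflow 2 use only the capacity that subflow 1 leaves unused. A minimal sketch of such a router queue, assuming per-class drop-tail buffers and a fixed number of transmissions per tick (both hypothetical simplifications of a DiffServ-style priority class); `StrictPriorityLink` is an illustrative name.

```python
from collections import deque


class StrictPriorityLink:
    """Bottleneck link with two FIFO queues; the high-priority queue is always served first."""

    def __init__(self, capacity_per_tick, buffer_per_queue):
        self.capacity = capacity_per_tick  # packets the link can send per tick
        self.buffer = buffer_per_queue     # drop-tail limit for each queue
        self.queues = {"high": deque(), "low": deque()}
        self.dropped = {"high": 0, "low": 0}

    def enqueue(self, prio, pkt):
        q = self.queues[prio]
        if len(q) >= self.buffer:
            self.dropped[prio] += 1  # drop tail: discard arrivals to a full queue
        else:
            q.append(pkt)

    def tick(self):
        """Send up to `capacity` packets; low priority only gets leftover slots."""
        sent = []
        for prio in ("high", "low"):
            q = self.queues[prio]
            while q and len(sent) < self.capacity:
                sent.append((prio, q.popleft()))
        return sent


link = StrictPriorityLink(capacity_per_tick=10, buffer_per_queue=100)
for i in range(6):
    link.enqueue("high", i)   # subflow 1 (HCM) leaves a trough
for i in range(10):
    link.enqueue("low", i)    # subflow 2 (LCM) offers more than it can get
first = link.tick()           # 6 high-priority packets, then 4 low-priority ones
```

When the high-priority queue alone can saturate the link, a tick sends no low-priority packets at all, which is the starvation the LCM must tolerate.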

Evident Benefits from PLT
- Fairness
  - Inter-protocol fairness: TCP-friendly
  - Intra-protocol fairness: as fair as TCP
- Aggression
  - Overcomes TCP's limitations with slow start
- Requires no new network support
- Congestion control independence at subflow 1
  - Subflow 2 supplements the performance of subflow 1

PLT Design
- Scheduler assigns packets to subflows
  - High-priority Congestion Module (HCM): TCP
    - Module handling subflow 1
  - Low-priority Congestion Module (LCM)
    - Module handling subflow 2
- LCM is lossy
  - Packets could get lost or starved when HCM saturates the pipe
  - The LCM sender learns from the receiver which packets were lost and which were received
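The slides do not say how the scheduler decides which subflow gets a packet. One plausible policy, sketched here purely as an assumption, hands each new packet to the HCM while its window has room and only then to the LCM, so the LCM carries whatever the TCP subflow cannot send. `Module` and `schedule` are hypothetical names, and windows freeing up on ACKs is not modeled.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    cwnd: int             # congestion window, in packets
    outstanding: int = 0  # packets sent but not yet acknowledged

    def has_room(self) -> bool:
        return self.outstanding < self.cwnd


def schedule(next_seq: int, hcm: Module, lcm: Module):
    """Hypothetical PLT scheduler: prefer HCM, fall back to LCM, else hold the packet."""
    for module in (hcm, lcm):
        if module.has_room():
            module.outstanding += 1
            return module.name, next_seq
    return None  # both windows are full; wait for ACKs


hcm, lcm = Module("HCM", cwnd=3), Module("LCM", cwnd=5)
assignments = [schedule(seq, hcm, lcm) for seq in range(10)]
# First 3 packets go to HCM, the next 5 to LCM, the last 2 must wait
```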

The LCM
- Is naïve no-holds-barred sending enough?
  - No! It can lead to congestion collapse
  - Wastage of bandwidth in non-bottleneck links
  - Outstanding windows could get large and simply cripple the flow
- Congestion control is necessary…

Congestion control at LCM
- Simple, loss-based, aggressive
  - Multiplicative Increase Multiplicative Decrease (MIMD)
- Loss-rate based:
  - Sender keeps ramping up if it incurs tolerable loss rates
  - More robust to transient congestion
- LCM sender monitors the loss rate $p$ periodically
  - Max. tolerable loss rate $\mu$
  - $p < \mu \Rightarrow \mathit{cwnd} \leftarrow \alpha \cdot \mathit{cwnd}$ (MI, $\alpha > 1$)
  - $p \geq \mu \Rightarrow \mathit{cwnd} \leftarrow \beta \cdot \mathit{cwnd}$ (MD, $\beta < 1$)
  - A timeout also results in MD
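The LCM control rule can be sketched as a small controller that accumulates loss feedback over a monitoring period and then applies MI or MD. The values of α, β and μ below are hypothetical, since the slides do not give them, and `LcmController` is an illustrative name.

```python
class LcmController:
    """Loss-rate-based MIMD window control for the low-priority subflow.

    alpha (>1), beta (<1) and mu are hypothetical values, not taken from PLT.
    """

    def __init__(self, cwnd=1.0, alpha=2.0, beta=0.5, mu=0.05, min_cwnd=1.0):
        self.cwnd, self.alpha, self.beta = cwnd, alpha, beta
        self.mu, self.min_cwnd = mu, min_cwnd
        self.sent = 0
        self.lost = 0

    def record(self, sent: int, lost: int):
        """Accumulate receiver feedback for the current monitoring period."""
        self.sent += sent
        self.lost += lost

    def end_of_period(self) -> float:
        """Compute the period's loss rate p and apply MI (p < mu) or MD (p >= mu)."""
        if self.sent == 0:
            return self.cwnd  # nothing sent, nothing to measure
        p = self.lost / self.sent
        if p < self.mu:
            self.cwnd *= self.alpha                                # MI
        else:
            self.cwnd = max(self.min_cwnd, self.cwnd * self.beta)  # MD
        self.sent = self.lost = 0  # start a new monitoring period
        return self.cwnd

    def on_timeout(self):
        """A timeout also triggers MD."""
        self.cwnd = max(self.min_cwnd, self.cwnd * self.beta)


c = LcmController(cwnd=100.0)
c.record(sent=100, lost=2)   # p = 0.02 < mu: window doubles to 200
c.end_of_period()
c.record(sent=200, lost=20)  # p = 0.10 >= mu: window halves to 100
c.end_of_period()
c.on_timeout()               # timeout: window halves to 50
```

Reacting to a loss *rate* rather than to individual losses is what lets the LCM ignore isolated random drops below μ.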

Choice of μ
- Too high: wastage of bandwidth
- Too low: LCM is less aggressive, less robust
- Decide from the expected loss rate over the Internet
  - Preferably kernel-tuned in the implementation
  - Predefined in simulations
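The trade-off in choosing μ can be seen in a toy deterministic model, which is an assumption and not the simulation setup of the study: the LCM sees a fixed random loss rate plus overflow loss whenever its window exceeds a hypothetical pipe capacity of 1000 packets per period, and it applies the MIMD rule with hypothetical α = 1.5 and β = 0.5. `simulate_lcm` is an illustrative name.

```python
def simulate_lcm(loss_rate, mu, capacity=1000.0, alpha=1.5, beta=0.5, periods=200):
    """Toy fluid model of the LCM; returns the window after each monitoring period."""
    cwnd = 1.0
    history = []
    for _ in range(periods):
        overflow = max(0.0, cwnd - capacity) / cwnd  # fraction beyond the pipe
        p = loss_rate + (1 - loss_rate) * overflow   # measured loss rate
        if p < mu:
            cwnd *= alpha                 # MI: loss is tolerable
        else:
            cwnd = max(1.0, cwnd * beta)  # MD: loss rate at or above mu
        history.append(cwnd)
    return history


tolerant = simulate_lcm(loss_rate=0.01, mu=0.05)  # mu above the ambient loss rate
strict = simulate_lcm(loss_rate=0.01, mu=0.005)   # mu below the ambient loss rate
utilization = [min(w, 1000.0) / 1000.0 for w in tolerant[-50:]]
```

With μ above the ambient loss rate, the window oscillates around the capacity and never falls below half of it; with μ below it, every period triggers MD and the LCM stays at its minimum window. Raising μ further lets the window overshoot the capacity by more before backing off, which is the wasted bandwidth the slide warns about.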

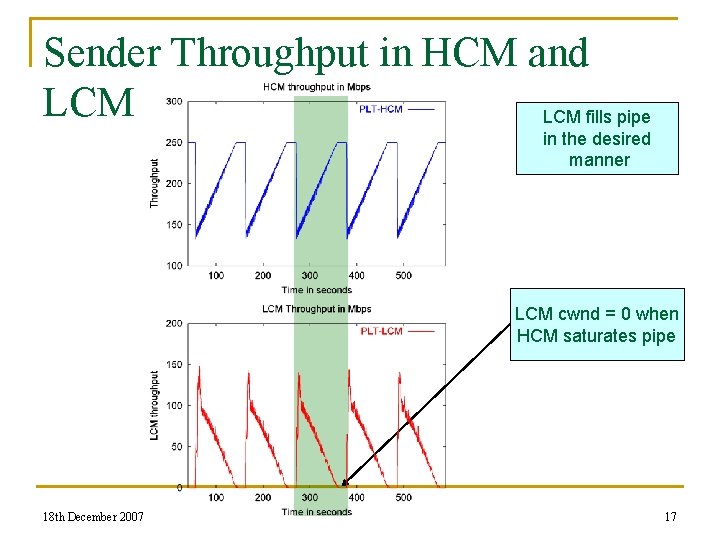

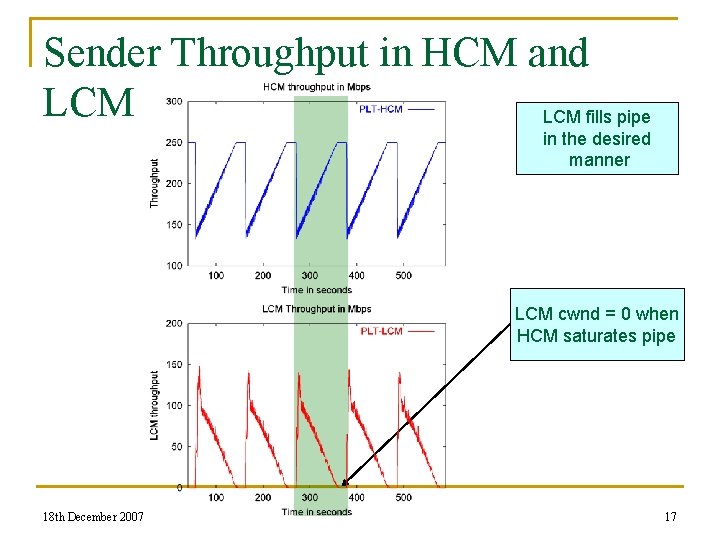

Sender throughput in HCM and LCM
- PLT fills the pipe in the desired manner
- LCM cwnd = 0 when HCM saturates the pipe

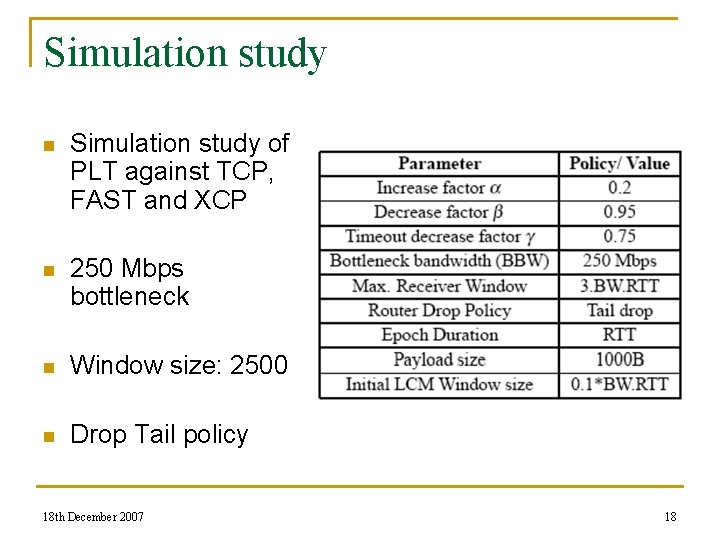

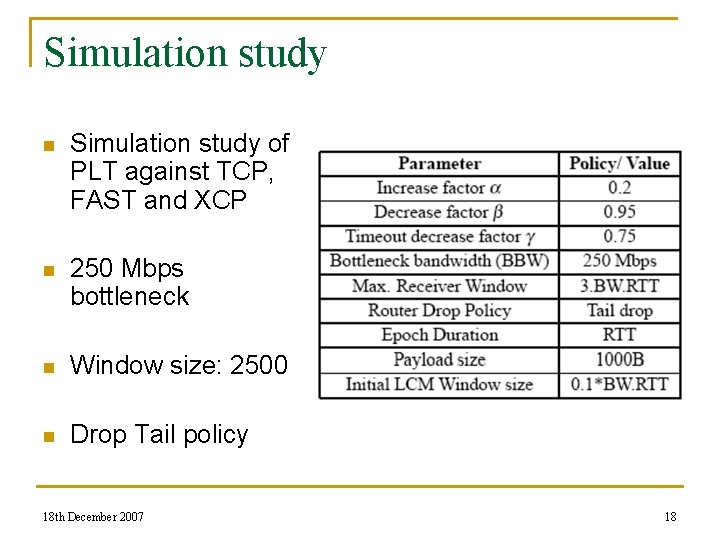

Simulation study
- Simulation study of PLT against TCP, FAST and XCP
- 250 Mbps bottleneck
- Window size: 2500
- Drop-tail policy

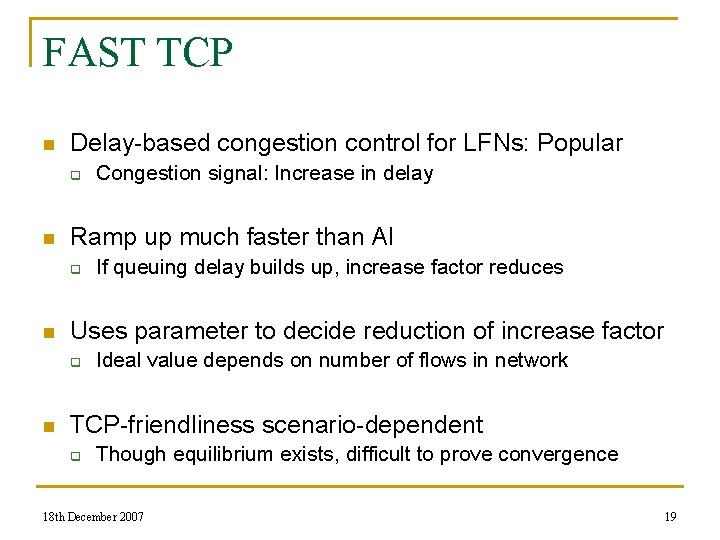

FAST TCP
- Delay-based congestion control for LFNs: popular
  - Congestion signal: increase in delay
- Ramps up much faster than AI
  - If queuing delay builds up, the increase factor is reduced
- Uses a parameter to decide the reduction of the increase factor
  - Its ideal value depends on the number of flows in the network
- TCP-friendliness is scenario-dependent
  - Though an equilibrium exists, convergence is difficult to prove
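For reference, the published FAST TCP window update is w ← min(2w, (1 − γ)w + γ(baseRTT/RTT · w + α)), where α is the parameter mentioned above. This sketch holds α constant (a simplification) and closes the loop with a hypothetical single-flow bottleneck whose RTT grows with the standing queue; `single_flow` and its capacity, base RTT and α values are illustrative assumptions.

```python
def fast_update(w, base_rtt, rtt, alpha, gamma=0.5):
    """One FAST TCP window update with a constant alpha."""
    return min(2 * w, (1 - gamma) * w + gamma * (base_rtt / rtt * w + alpha))


def single_flow(capacity_pps=1000.0, base_rtt=0.1, alpha=10.0, steps=100):
    """Toy bottleneck: packets beyond the BDP sit in the queue and add delay."""
    bdp = capacity_pps * base_rtt  # packets that fit in the pipe
    w = 1.0
    for _ in range(steps):
        queue = max(0.0, w - bdp)               # standing queue, in packets
        rtt = base_rtt + queue / capacity_pps   # queuing delay raises the RTT
        w = fast_update(w, base_rtt, rtt, alpha)
    return w


print(single_flow())  # converges to BDP + alpha = 110 packets
```

At equilibrium the flow keeps about α packets in the bottleneck queue, which is why the ideal α depends on how many flows share the link.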

XCP: Baseline
- Requires explicit feedback from routers
- Routers are equipped to provide the cwnd increment
- Converges quite fast
- TCP-friendliness requires extra router support
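In XCP, the router's efficiency controller computes an aggregate feedback per control interval, φ = α·d·S − β·Q, where d is the average RTT, S the spare bandwidth and Q the persistent queue; the constants 0.4 and 0.226 are the values proposed in the XCP paper. This sketch covers only that aggregate term (the fairness controller that splits φ among flows is not shown), and `xcp_aggregate_feedback` is a hypothetical name.

```python
def xcp_aggregate_feedback(avg_rtt_s, capacity_bps, input_rate_bps, queue_bytes,
                           alpha=0.4, beta=0.226):
    """Aggregate XCP feedback in bytes: positive asks senders to grow, negative to shrink."""
    spare_bytes_per_s = (capacity_bps - input_rate_bps) / 8  # S, in bytes per second
    return alpha * avg_rtt_s * spare_bytes_per_s - beta * queue_bytes


# 250 Mbps bottleneck (as in the simulation study) with 50 Mbps unused and no queue
print(xcp_aggregate_feedback(0.1, 250e6, 200e6, 0))  # about 250000 bytes of extra window
```

Because the router states the increment explicitly, senders need no probing, which is why XCP converges quickly but needs new router support.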

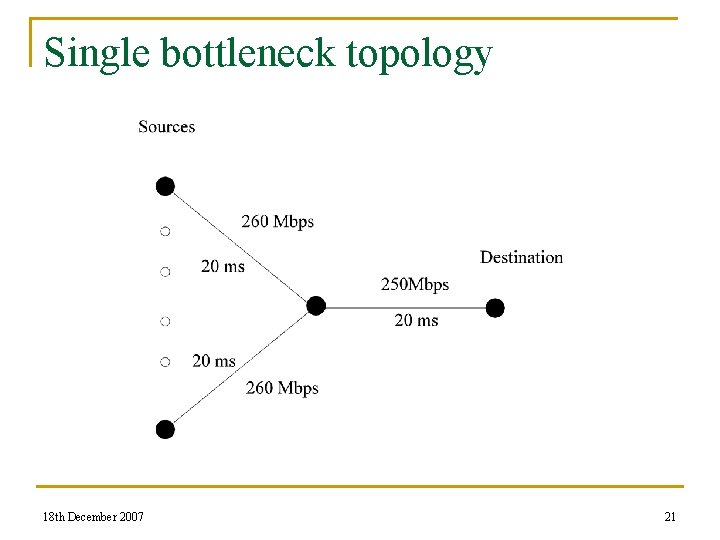

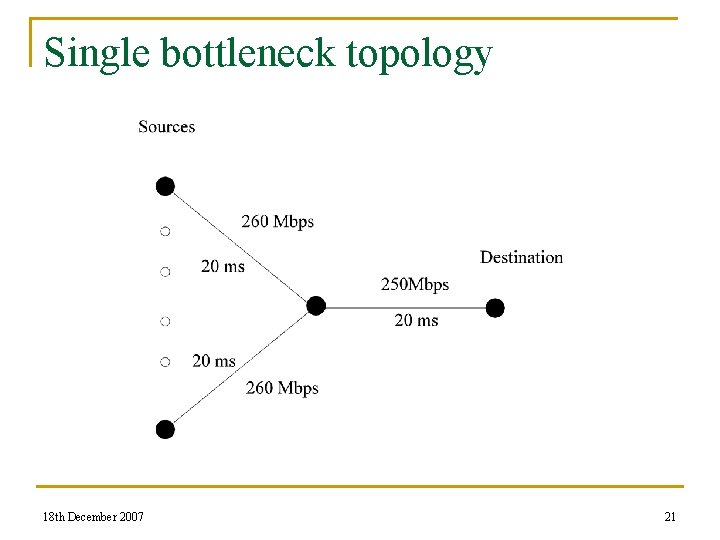

Single bottleneck topology

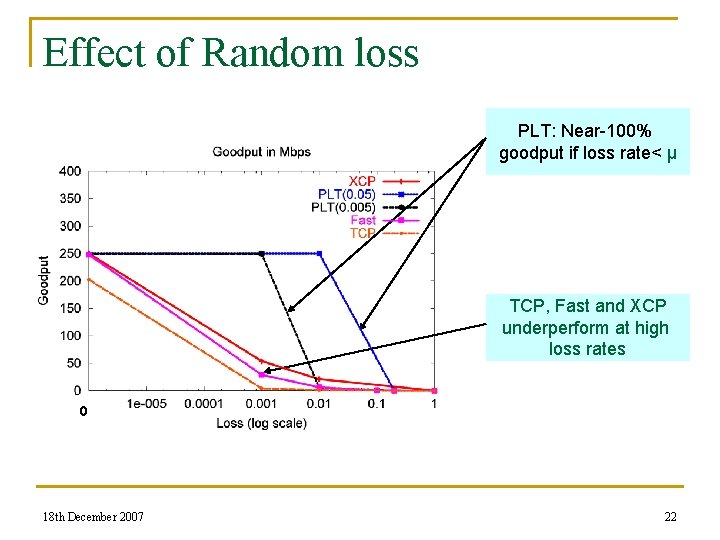

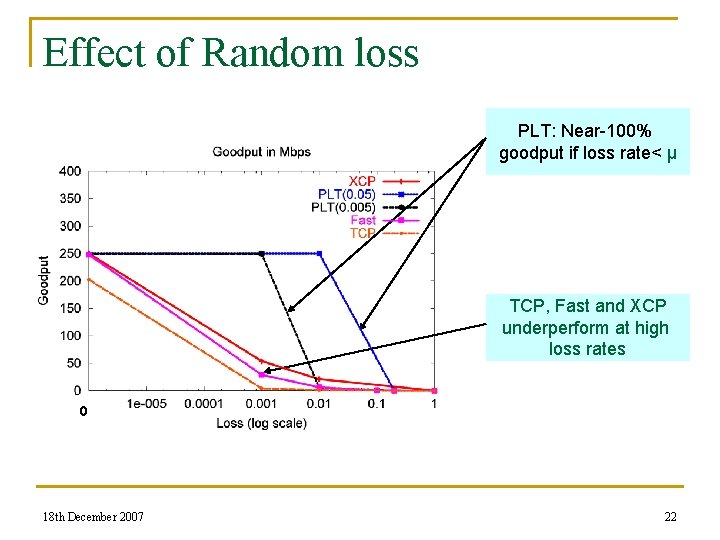

Effect of random loss
- PLT: near-100% goodput if loss rate < μ
- TCP, FAST and XCP underperform at high loss rates

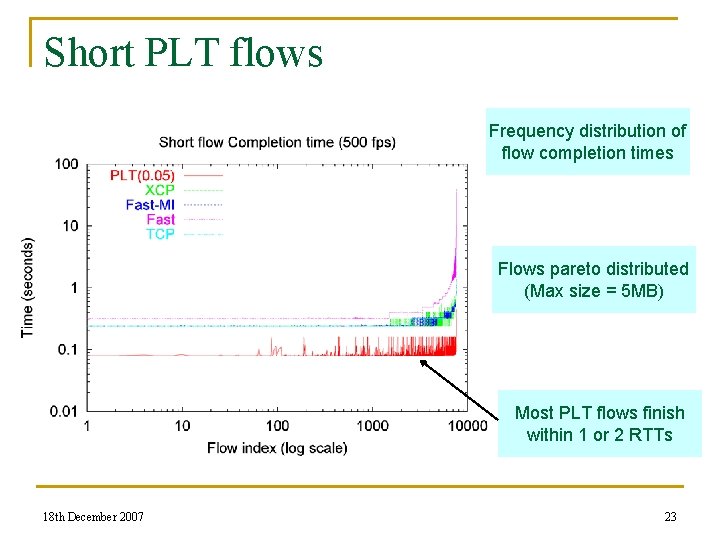

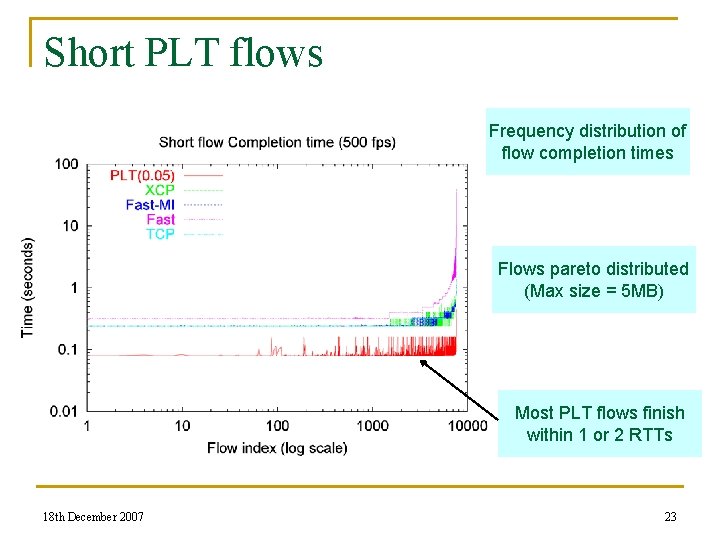

Short PLT flows
- Frequency distribution of flow completion times
- Flows Pareto-distributed (max. size = 5 MB)
- Most PLT flows finish within 1 or 2 RTTs
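To see why short flows suffer under plain slow start, this sketch counts the RTTs a flow needs when the window starts at one segment and doubles every RTT, assuming no losses, a 1460-byte MSS and no handshake overhead. The 5 MB size matches the largest flow in the distribution above; `slow_start_rtts` is a hypothetical helper.

```python
def slow_start_rtts(flow_bytes, mss=1460, initial_window=1):
    """RTTs to send a flow when the window starts small and doubles every RTT."""
    segments = -(-flow_bytes // mss)  # ceiling division: segments in the flow
    sent, window, rtts = 0, initial_window, 0
    while sent < segments:
        sent += window  # one window's worth of segments per RTT
        window *= 2     # slow start doubles the window each RTT
        rtts += 1
    return rtts


print(slow_start_rtts(5_000_000))  # 12
```

A 5 MB flow needs 12 RTTs this way, whereas a sender able to fill the pipe immediately could finish a flow that fits within the bandwidth-delay product in one or two RTTs.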

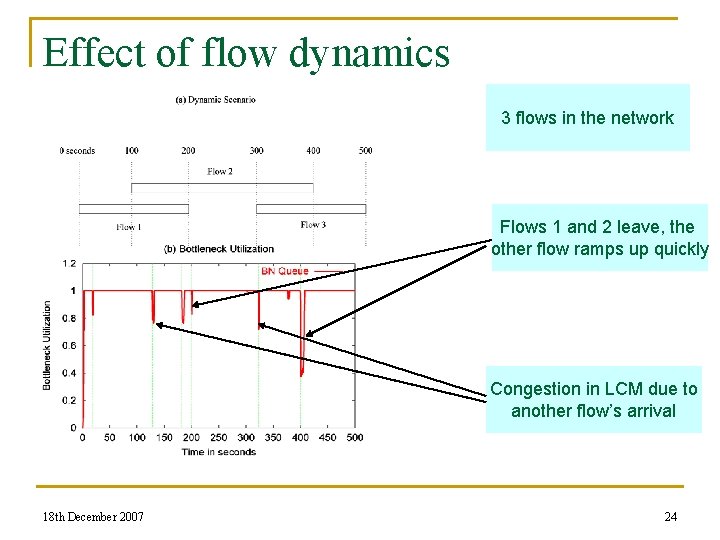

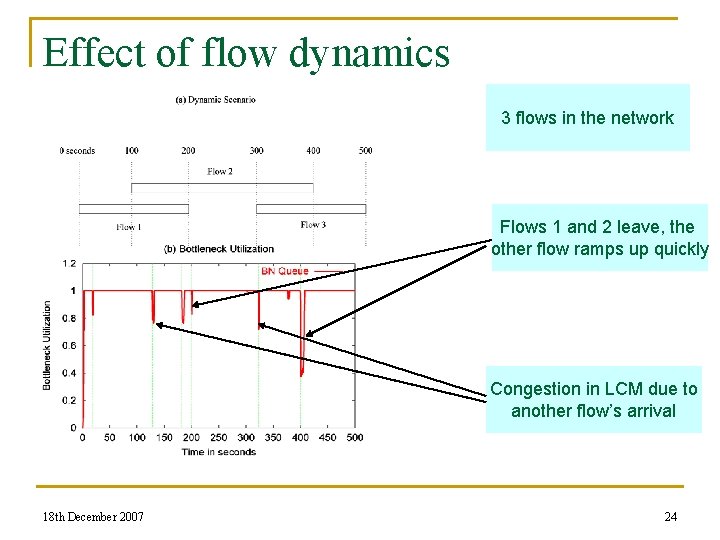

Effect of flow dynamics
- 3 flows in the network
- Flows 1 and 2 leave; the remaining flow ramps up quickly
- Congestion in LCM due to another flow's arrival

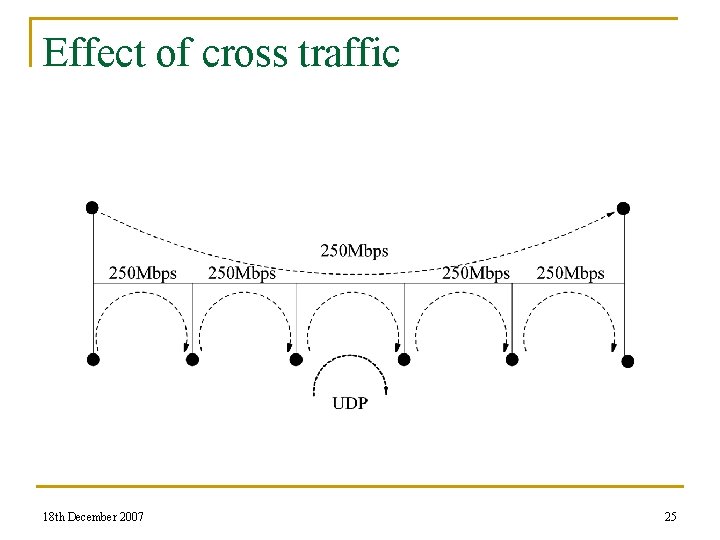

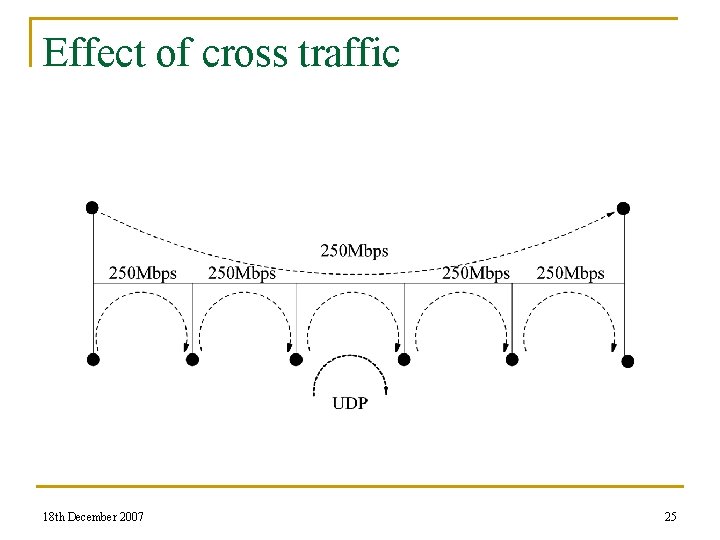

Effect of cross traffic

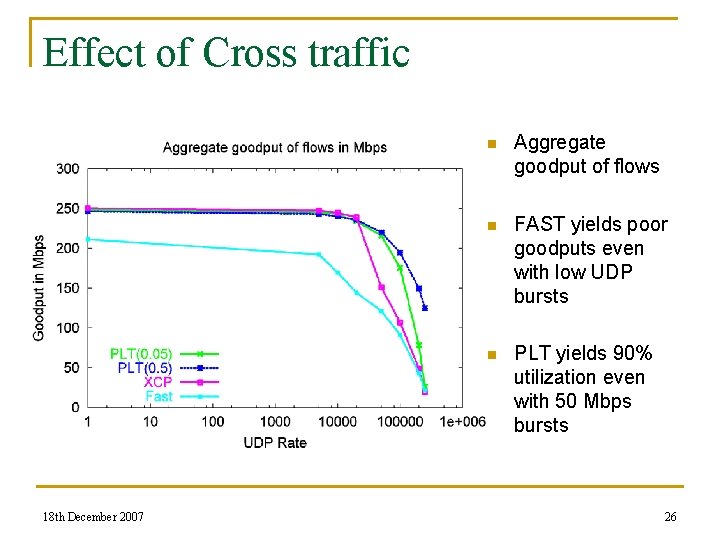

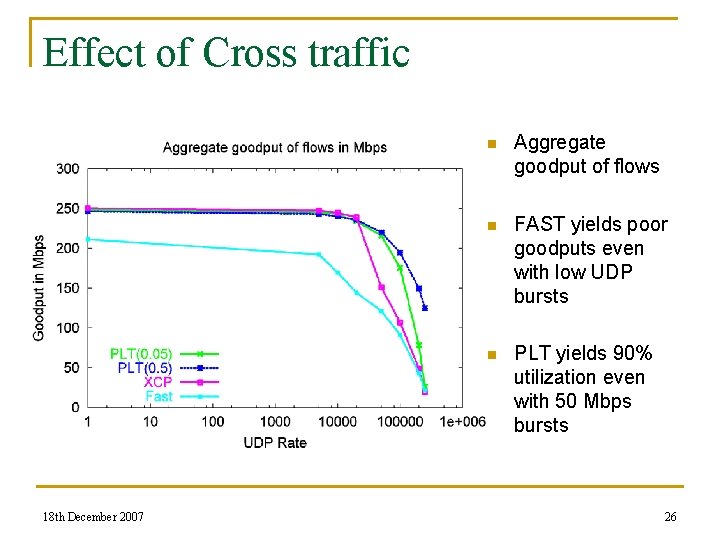

Effect of cross traffic
- Aggregate goodput of flows
- FAST yields poor goodput even with low UDP bursts
- PLT yields 90% utilization even with 50 Mbps bursts

Conclusion
- PLT: a layered approach to transport
  - Prioritizes fairness over aggressiveness
  - Adds aggressiveness on top of a legacy congestion control
- Simulation results are promising
  - PLT is robust to random losses and transient congestion
  - We have also tested PLT-Fast, and the results are promising!

Issues and Challenges ahead
- Deployability challenges
  - PEPs in VPNs
  - Applications over PLT
  - PLT-shutdown
- Other issues
  - Fairness issues
  - Receiver window dependencies

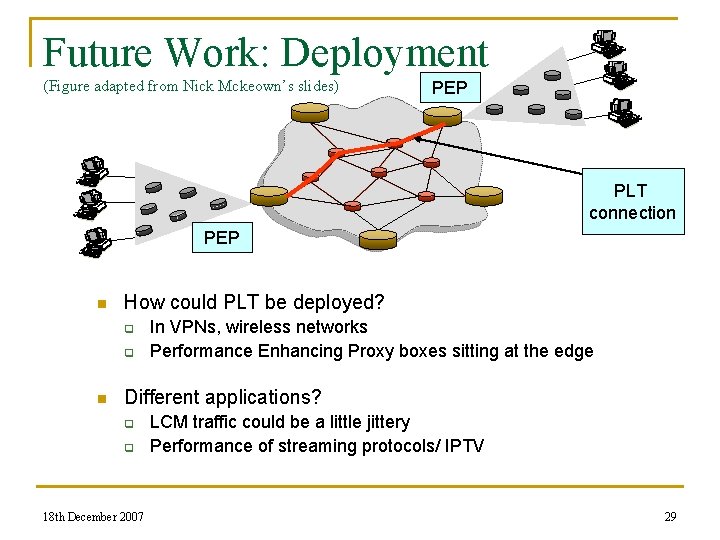

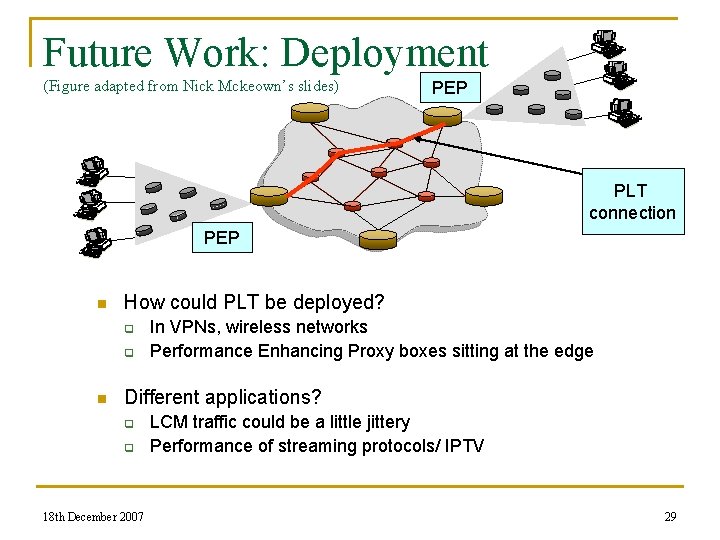

Future Work: Deployment (figure adapted from Nick McKeown's slides)
(Figure: a PLT connection between two PEPs)
- How could PLT be deployed?
  - In VPNs and wireless networks
  - Performance Enhancing Proxy (PEP) boxes sitting at the edge
- Different applications?
  - LCM traffic could be a little jittery
  - Performance of streaming protocols/IPTV

Deployment: PLT-SHUTDOWN
- In the wide area, PLT should be disabled if there is no priority queuing
  - Otherwise it is unfriendly to fellow TCP flows!
- We need methods to detect priority queuing at the bottleneck in an end-to-end manner
- To be implemented and tested on the real Internet

Receive Window dependency
- PLT needs larger outstanding windows
  - LCM is lossy: aggression & starvation
  - Waiting time for retransmitting lost LCM packets
- Receive window could be the bottleneck
  - LCM should cut back if HCM is restricted
  - Should be explored more

Fairness considerations
- Inter-protocol fairness: TCP friendliness
- Intra-protocol fairness: HCM fairness
- Is LCM fairness necessary?
  - LCM is more dominant in loss-prone networks
  - Can provide relaxed fairness
  - Effect of queuing disciplines

EXTRA SLIDES

Analyses of TCP in LFNs
- Some known analytical results (Padhye et al. and Lakshman et al.)
  - At loss rate $p$: $p \cdot (BW \cdot RTT)^2 > 1 \Rightarrow$ small throughputs
  - Throughput $\propto 1/RTT$
  - Throughput $\propto 1/\sqrt{p}$
- Several solutions proposed for modified transport
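The 1/RTT and 1/√p scaling can be illustrated with the simplified square-root formula of Mathis et al., throughput ≈ (MSS/RTT)·√(3/2)/√p; the Padhye et al. model cited above refines it by accounting for timeouts. This is a sketch: the 1460-byte MSS and 1500-byte segment size are assumptions, and the helper names are hypothetical.

```python
import math


def sqrt_model_throughput_bps(mss_bytes, rtt_s, loss_rate):
    """Simplified square-root model: (MSS / RTT) * sqrt(3/2) / sqrt(p), in bits/s."""
    return (mss_bytes * 8 / rtt_s) * math.sqrt(1.5) / math.sqrt(loss_rate)


def window_condition(link_bps, rtt_s, loss_rate, segment_bytes=1500):
    """p * (BW * RTT)^2 with BW * RTT expressed in segments; > 1 means small throughput."""
    bdp_segments = link_bps * rtt_s / (8 * segment_bytes)
    return loss_rate * bdp_segments ** 2


rate = sqrt_model_throughput_bps(1460, 0.1, 1e-5)
print(f"{rate / 1e6:.0f} Mbps")           # tens of Mbps on a 10 Gbps link
print(window_condition(10e9, 0.1, 1e-5))  # far above 1
```

The exact number depends on the model and segment size, but for the 10 Gbps, 100 ms, $10^{-5}$ example either way it is orders of magnitude below the link rate, and halving the RTT or quartering the loss rate only doubles it.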

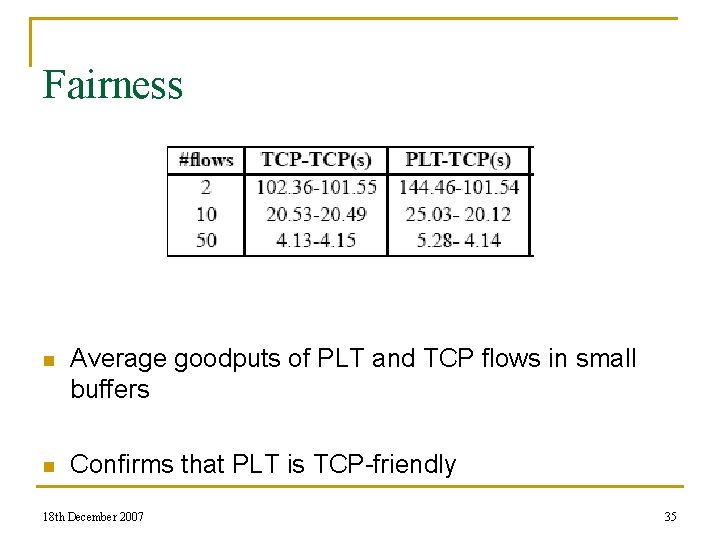

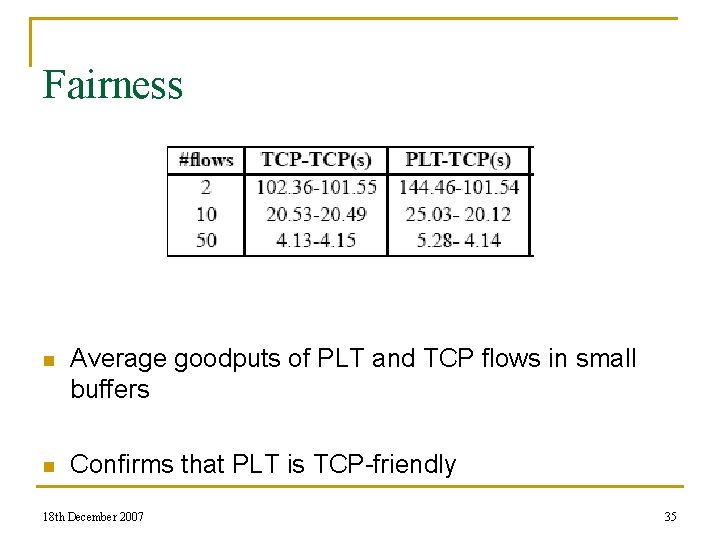

Fairness
- Average goodputs of PLT and TCP flows in small buffers
- Confirms that PLT is TCP-friendly

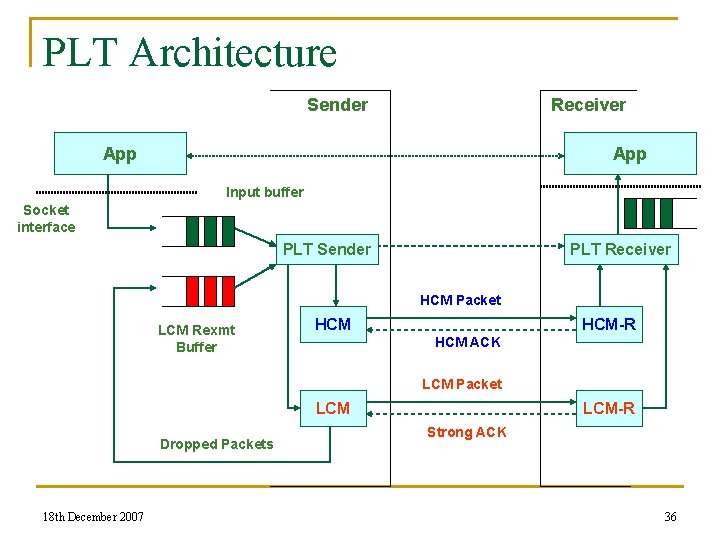

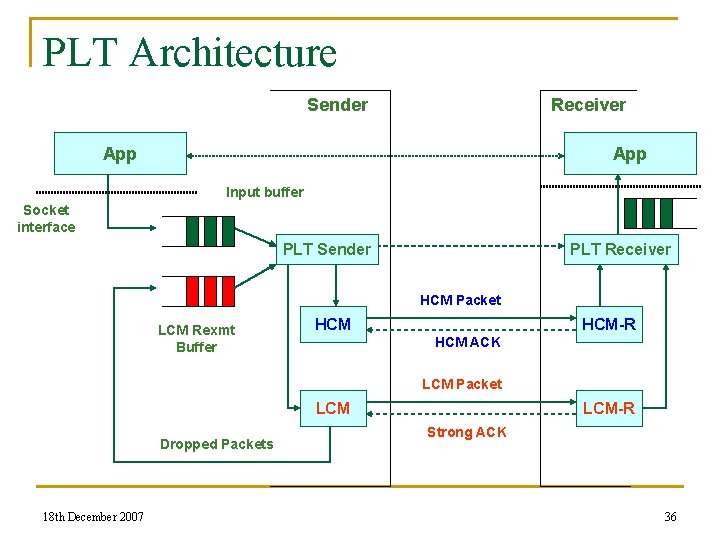

PLT Architecture
(Figure: sender side with App, input buffer, socket interface and PLT sender containing HCM, LCM and a retransmit (Rexmt) buffer; receiver side with PLT receiver containing HCM-R and LCM-R; exchanged messages: HCM packet, HCM ACK, LCM packet, LCM dropped packets, strong ACK)

Other work: Chunkyspread
- Bandwidth-sensitive peer-to-peer multicast for live streaming
- Scalable solution:
  - Robustness to churn, latency and bandwidth
  - Heterogeneity-aware random graph
  - Multiple trees provided: robustness to churn
- Balances load across peers
- IPTPS'06, ICNP'06