Presentation Outline
- A word or two about our program
- Our HPC system acquisition process
- Program benchmark suite
- Evolution of benchmark-based performance metrics
- Where do we go from here?

HPC Modernization Program

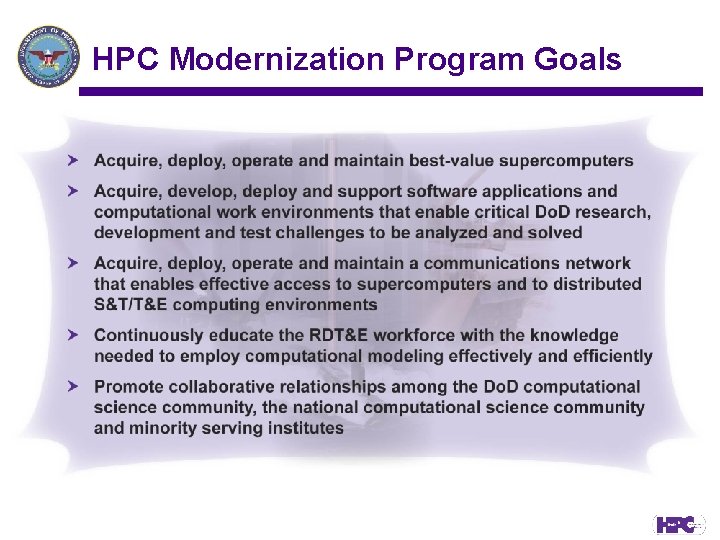

HPC Modernization Program Goals

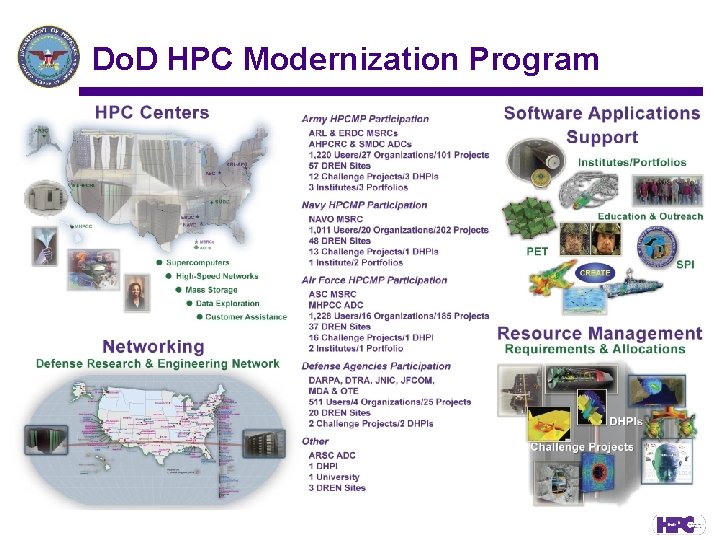

DoD HPC Modernization Program

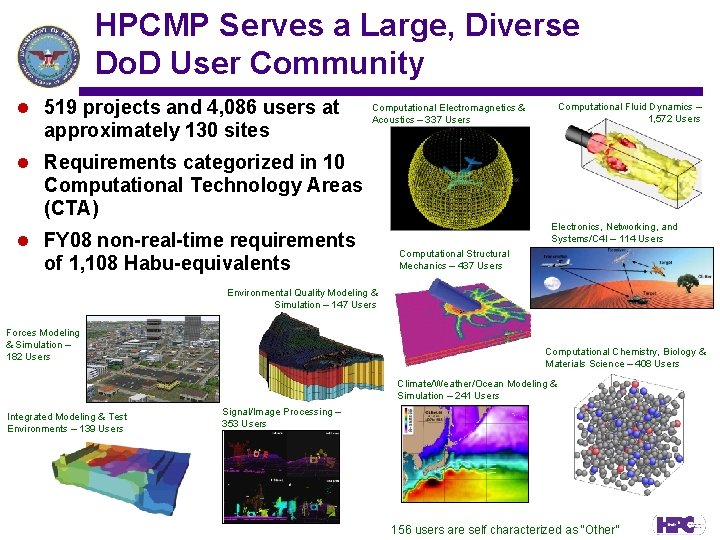

HPCMP Serves a Large, Diverse DoD User Community
- 519 projects and 4,086 users at approximately 130 sites
- Requirements categorized in 10 Computational Technology Areas (CTAs)
- FY08 non-real-time requirements of 1,108 Habu-equivalents

Users by Computational Technology Area:
- Computational Fluid Dynamics – 1,572 users
- Computational Structural Mechanics – 437 users
- Computational Chemistry, Biology & Materials Science – 408 users
- Signal/Image Processing – 353 users
- Computational Electromagnetics & Acoustics – 337 users
- Climate/Weather/Ocean Modeling & Simulation – 241 users
- Forces Modeling & Simulation – 182 users
- Environmental Quality Modeling & Simulation – 147 users
- Integrated Modeling & Test Environments – 139 users
- Electronics, Networking, and Systems/C4I – 114 users
- 156 users are self-characterized as “Other”

High Performance Computing Centers: Strategic Consolidation of Resources
- 4 Major Shared Resource Centers (MSRCs)
- 4 Allocated Distributed Centers (ADCs)

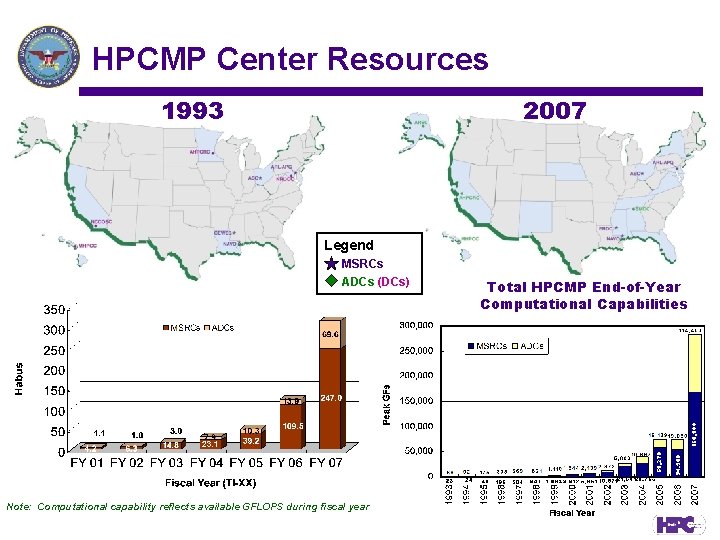

HPCMP Center Resources: Total HPCMP End-of-Year Computational Capabilities, 1993 to 2007 (legend: MSRCs, ADCs (DCs))
Note: Computational capability reflects available GFLOPS during fiscal year

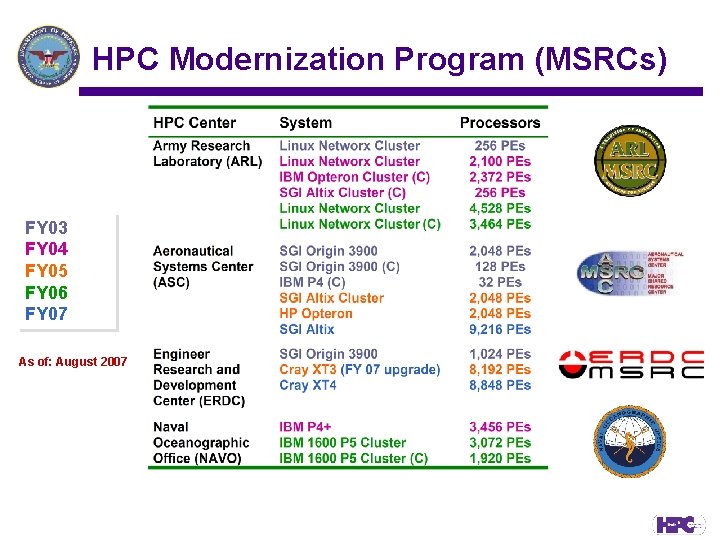

HPC Modernization Program (MSRCs), FY03 to FY07 (as of August 2007)

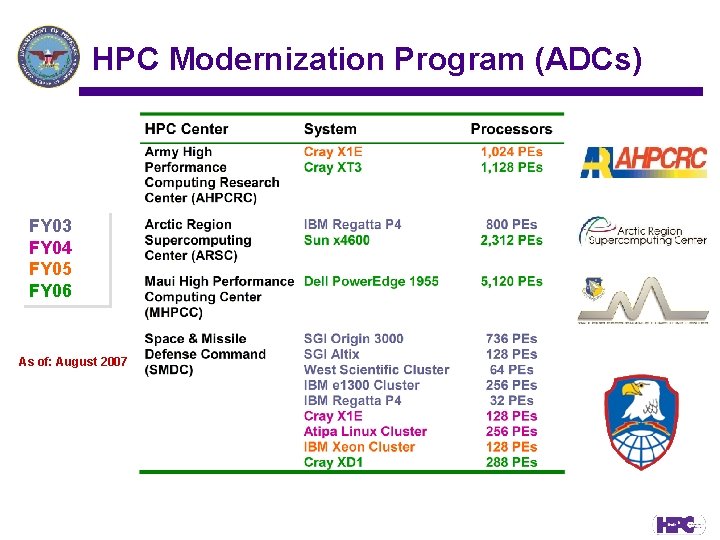

HPC Modernization Program (ADCs), FY03 to FY06 (as of August 2007)

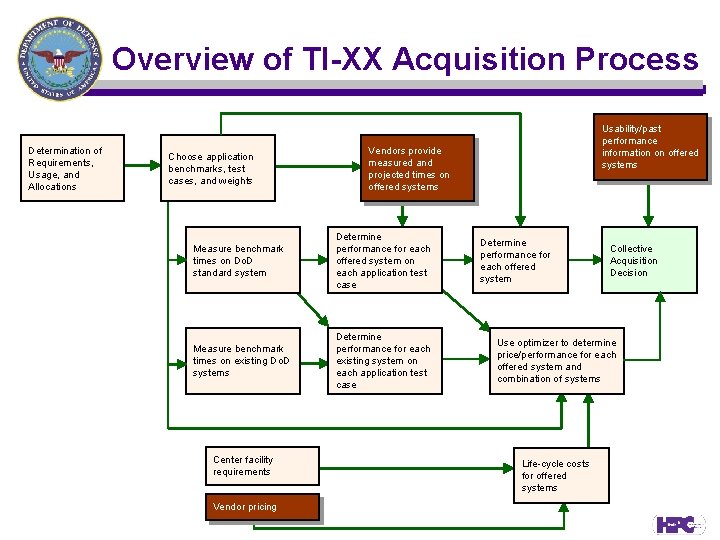

Overview of TI-XX Acquisition Process
- Determination of requirements, usage, and allocations
- Choose application benchmarks, test cases, and weights
- Vendors provide measured and projected times on offered systems
- Measure benchmark times on DoD standard system
- Determine performance for each offered system on each application test case
- Measure benchmark times on existing DoD systems
- Determine performance for each existing system on each application test case
- Center facility requirements
- Vendor pricing
- Usability/past performance information on offered systems
- Determine performance for each offered system
- Collective acquisition decision
- Use optimizer to determine price/performance for each offered system and combination of systems
- Life-cycle costs for offered systems

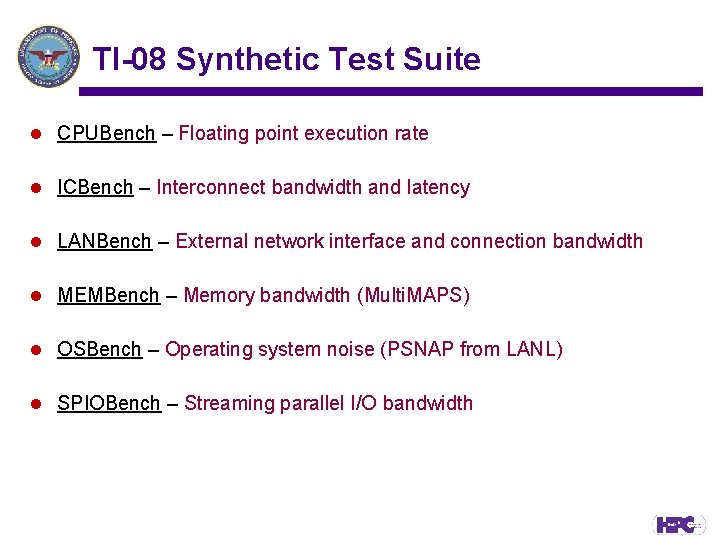

TI-08 Synthetic Test Suite
- CPUBench – Floating point execution rate
- ICBench – Interconnect bandwidth and latency
- LANBench – External network interface and connection bandwidth
- MEMBench – Memory bandwidth (MultiMAPS)
- OSBench – Operating system noise (PSNAP from LANL)
- SPIOBench – Streaming parallel I/O bandwidth

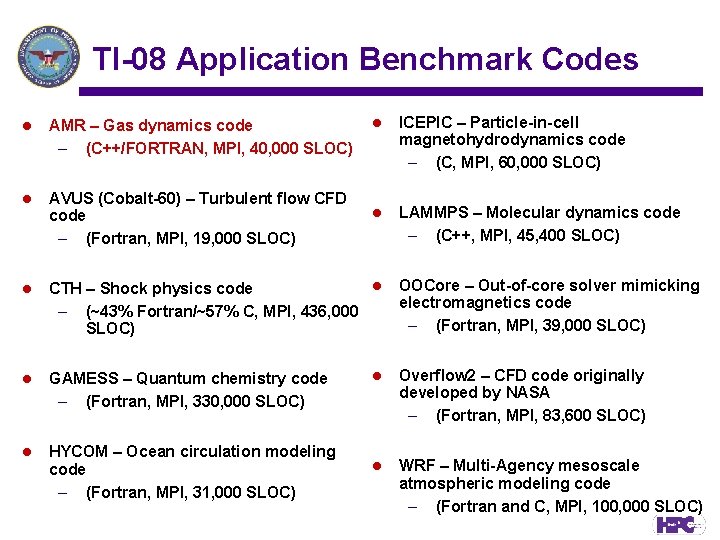

TI-08 Application Benchmark Codes
- ICEPIC – Particle-in-cell magnetohydrodynamics code (C, MPI, 60,000 SLOC)
- LAMMPS – Molecular dynamics code (C++, MPI, 45,400 SLOC)
- AMR – Gas dynamics code (C++/Fortran, MPI, 40,000 SLOC)
- AVUS (Cobalt-60) – Turbulent flow CFD code (Fortran, MPI, 19,000 SLOC)
- CTH – Shock physics code (~43% Fortran/~57% C, MPI, 436,000 SLOC)
- GAMESS – Quantum chemistry code (Fortran, MPI, 330,000 SLOC)
- HYCOM – Ocean circulation modeling code (Fortran, MPI, 31,000 SLOC)
- OOCore – Out-of-core solver mimicking electromagnetics code (Fortran, MPI, 39,000 SLOC)
- Overflow 2 – CFD code originally developed by NASA (Fortran, MPI, 83,600 SLOC)
- WRF – Multi-agency mesoscale atmospheric modeling code (Fortran and C, MPI, 100,000 SLOC)

Application Benchmark History, FY 2003 to FY 2008: codes used, by Computational Technology Area
- Computational Structural Mechanics: CTH, RFCTH
- Computational Fluid Dynamics: Cobalt-60, LESLIE3D, Aero, AVUS, Overflow 2, AMR
- Computational Chemistry, Biology, and Materials Science: GAMESS, NAMD, LAMMPS
- Computational Electromagnetics and Acoustics: OOCore, ICEPIC
- Climate/Weather/Ocean Modeling and Simulation: NLOM, HYCOM, WRF

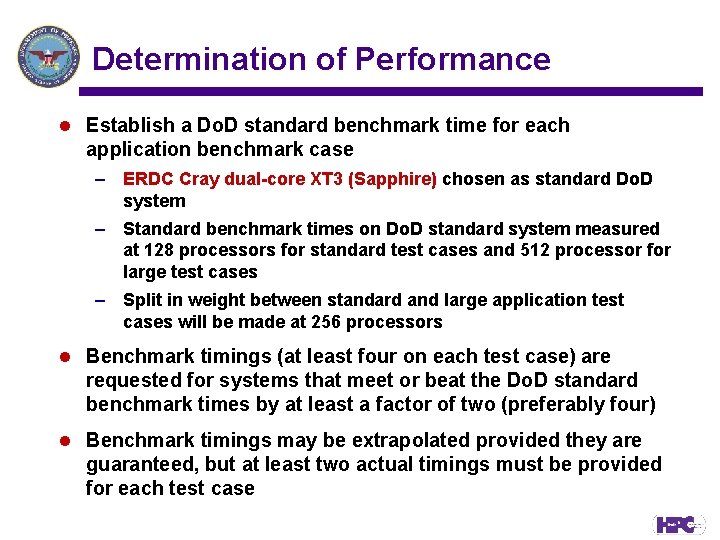

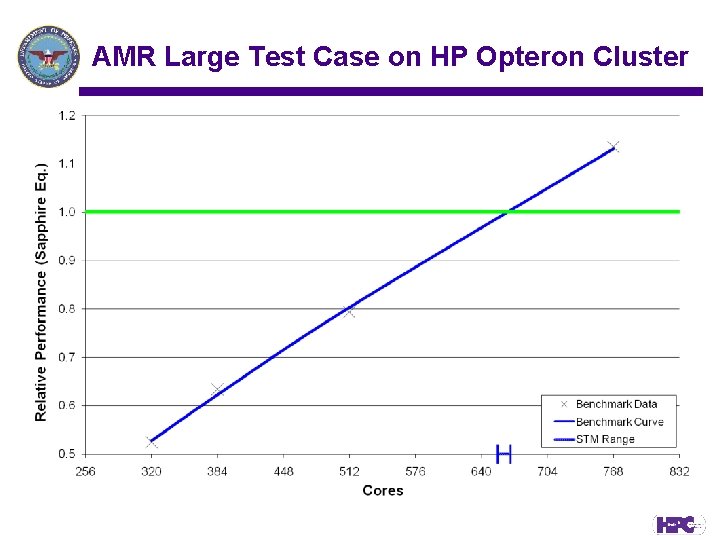

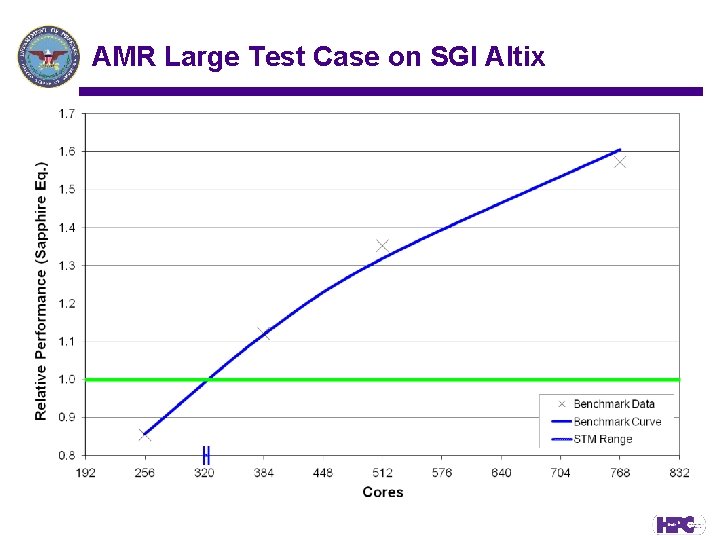

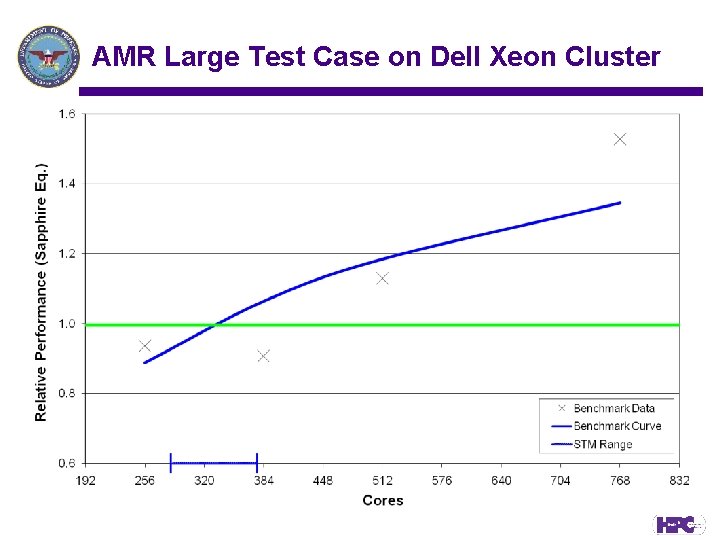

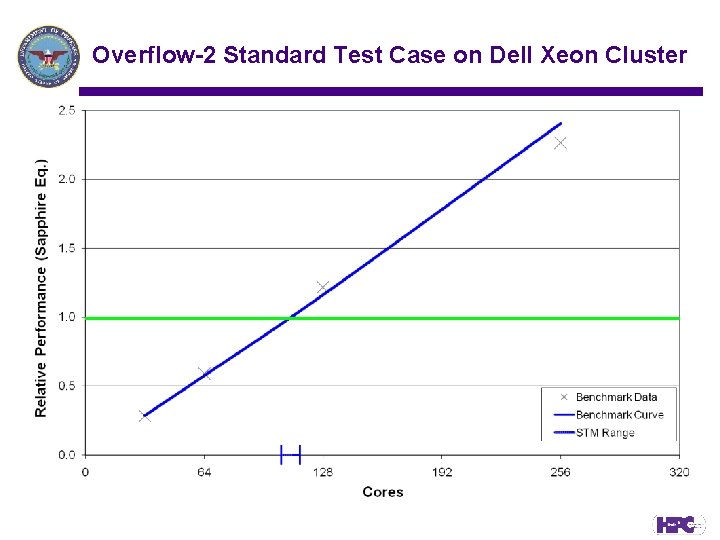

Determination of Performance
- Establish a DoD standard benchmark time for each application benchmark case
  - ERDC Cray dual-core XT3 (Sapphire) chosen as standard DoD system
  - Standard benchmark times on the DoD standard system measured at 128 processors for standard test cases and 512 processors for large test cases
  - Split in weight between standard and large application test cases will be made at 256 processors
- Benchmark timings (at least four on each test case) are requested for systems that meet or beat the DoD standard benchmark times by at least a factor of two (preferably four)
- Benchmark timings may be extrapolated provided they are guaranteed, but at least two actual timings must be provided for each test case

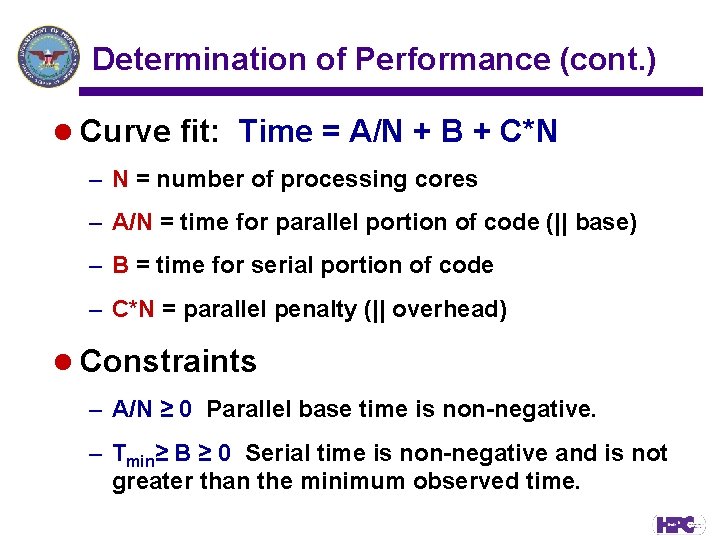

Determination of Performance (cont.)
- Curve fit: Time = A/N + B + C*N
  - N = number of processing cores
  - A/N = time for parallel portion of code (parallel base)
  - B = time for serial portion of code
  - C*N = parallel penalty (parallel overhead)
- Constraints
  - A/N ≥ 0: parallel base time is non-negative
  - Tmin ≥ B ≥ 0: serial time is non-negative and is not greater than the minimum observed time
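The model above can be explored numerically. The sketch below evaluates Time = A/N + B + C*N and, as a consequence of the formula rather than anything stated on the slide, computes the core count at which the modeled time is smallest: setting the derivative -A/N² + C to zero gives N = sqrt(A/C), where the time is B + 2*sqrt(A*C). The function names and the coefficient values are hypothetical, chosen only for illustration.

```python
import math

def model_time(n, a, b, c):
    """Modeled run time on n cores: parallel part + serial part + overhead."""
    return a / n + b + c * n

def best_core_count(a, c):
    """Core count that minimizes model_time; defined only for a > 0 and c > 0."""
    return math.sqrt(a / c)

# Hypothetical coefficients (not taken from any benchmark in the talk).
A, B, C = 1000.0, 5.0, 0.01

n_star = best_core_count(A, C)        # about 316 cores
t_star = model_time(n_star, A, B, C)  # equals B + 2*sqrt(A*C)
```

Beyond `n_star`, adding cores makes the modeled time grow again, because the C*N penalty outweighs the shrinking A/N term.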

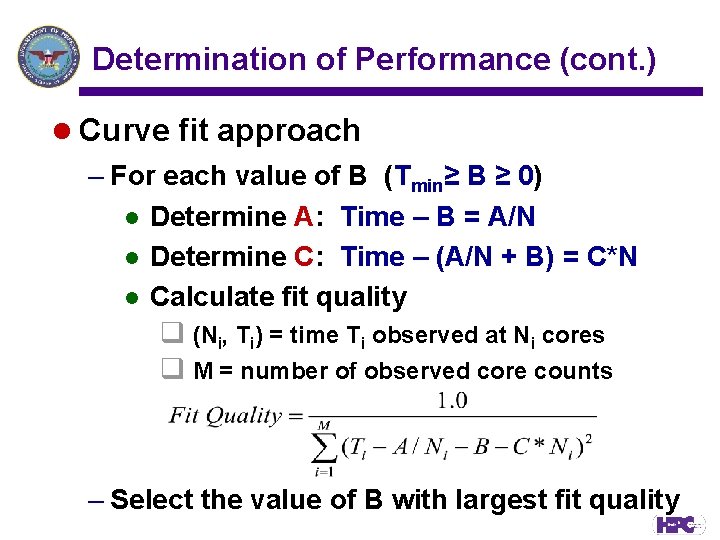

Determination of Performance (cont.)
- Curve fit approach
  - For each value of B (Tmin ≥ B ≥ 0)
    - Determine A: Time - B = A/N
    - Determine C: Time - (A/N + B) = C*N
    - Calculate fit quality
      - (Ni, Ti) = time Ti observed at Ni cores
      - M = number of observed core counts
  - Select the value of B with largest fit quality
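A minimal sketch of this scan over B is given below. Several details are assumptions, because the slide text does not specify them: A and C are each obtained by a one-parameter least-squares fit, B is scanned on a uniform grid of `steps` intervals, and the fit quality is taken to be the negative sum of squared residuals (the program's actual fit-quality formula is not reproduced in the text). All function names are hypothetical.

```python
def fit_for_b(cores, times, b):
    """For a trial serial time b, fit A and then C by least squares."""
    # Step 1: Time - B = A/N. Least-squares A, clamped so that A/N >= 0.
    a = (sum((t - b) / n for n, t in zip(cores, times))
         / sum(1.0 / n ** 2 for n in cores))
    a = max(a, 0.0)
    # Step 2: Time - (A/N + B) = C*N. Least-squares C on what is left over.
    c = (sum((t - (a / n + b)) * n for n, t in zip(cores, times))
         / sum(n ** 2 for n in cores))
    return a, c

def fit_quality(cores, times, a, b, c):
    """ASSUMED metric: negative sum of squared residuals (larger is better)."""
    return -sum((t - (a / n + b + c * n)) ** 2 for n, t in zip(cores, times))

def curve_fit(cores, times, steps=200):
    """Scan B over [0, Tmin] and keep the (A, B, C) with the best quality."""
    t_min = min(times)
    best = None
    for k in range(steps + 1):
        b = t_min * k / steps
        a, c = fit_for_b(cores, times, b)
        q = fit_quality(cores, times, a, b, c)
        if best is None or q > best[0]:
            best = (q, a, b, c)
    _, a, b, c = best
    return a, b, c

# Synthetic timings generated from A = 1000, B = 5, C = 0 (illustration only).
cores = [32, 64, 128, 256]
times = [1000.0 / n + 5.0 for n in cores]
a_fit, b_fit, c_fit = curve_fit(cores, times)
```

On this synthetic data the scan recovers values close to the generating coefficients, limited by the grid spacing in B. A finer grid or a different quality metric would change the details but not the structure of the search.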

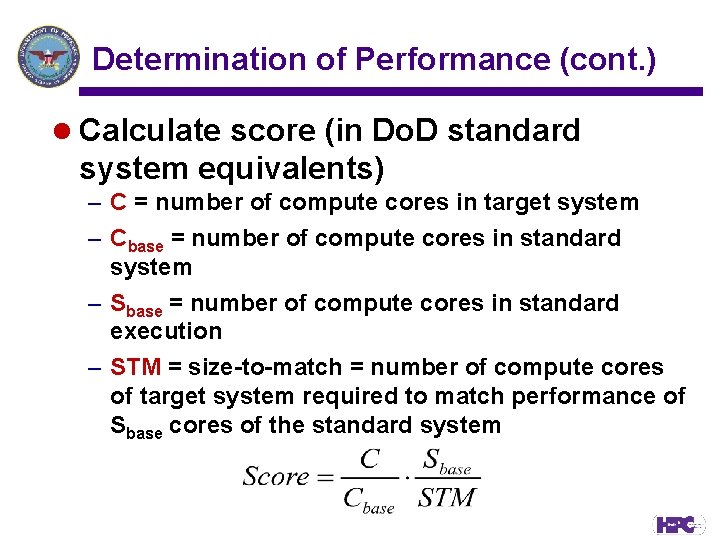

Determination of Performance (cont.)
- Calculate score (in DoD standard system equivalents)
  - C = number of compute cores in target system
  - Cbase = number of compute cores in standard system
  - Sbase = number of compute cores in standard execution
  - STM = size-to-match = number of compute cores of target system required to match performance of Sbase cores of the standard system
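One way the size-to-match could be obtained from a fitted curve is sketched below. This is an assumption about how the pieces connect, not the program's documented procedure: it takes the fitted coefficients (A, B, C) for the target system and the standard system's time at Sbase cores, and solves A/N + B + C*N = T_standard for the smallest N. The function name and the sample values are hypothetical, and the score formula itself is not reproduced here because it does not appear in the slide text.

```python
import math

def size_to_match(a, b, c, t_standard):
    """Smallest real-valued core count n with a/n + b + c*n == t_standard.

    Assumes a >= 0 and c >= 0. Returns None when the fitted curve never
    gets down to t_standard, i.e. the target cannot match the standard.
    """
    d = t_standard - b            # time left after the serial part
    if d <= 0:
        return None
    disc = d * d - 4.0 * a * c    # discriminant of c*n^2 - d*n + a = 0
    if disc < 0:
        return None
    # Smaller root, written so that it also works when c == 0.
    return 2.0 * a / (d + math.sqrt(disc))

# Hypothetical fitted coefficients and standard time (illustration only).
stm_no_overhead = size_to_match(1000.0, 5.0, 0.0, 15.0)
stm_with_overhead = size_to_match(1000.0, 5.0, 0.01, 15.0)
unreachable = size_to_match(1000.0, 5.0, 0.01, 11.0)
```

The result is real-valued, so a caller would round up to a whole number of cores. The parallel penalty C*N raises the size-to-match and can make the standard time unreachable at any core count.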

AMR Large Test Case on HP Opteron Cluster

AMR Large Test Case on SGI Altix

AMR Large Test Case on Dell Xeon Cluster

Overflow-2 Standard Test Case on Dell Xeon Cluster

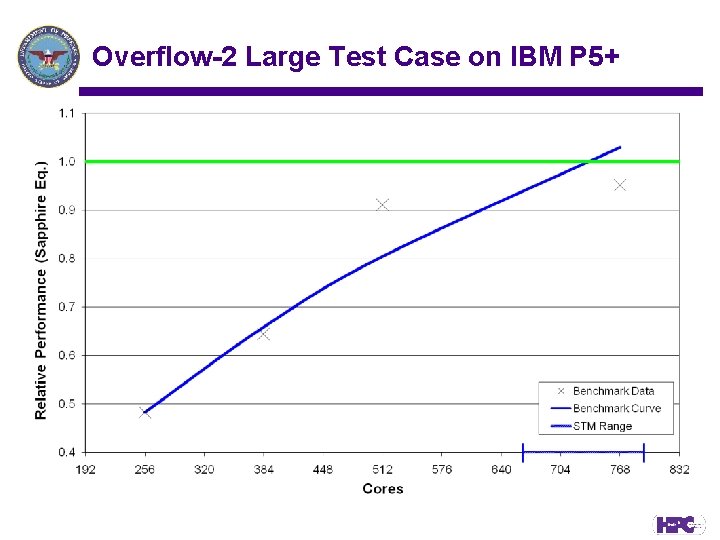

Overflow-2 Large Test Case on IBM P5+

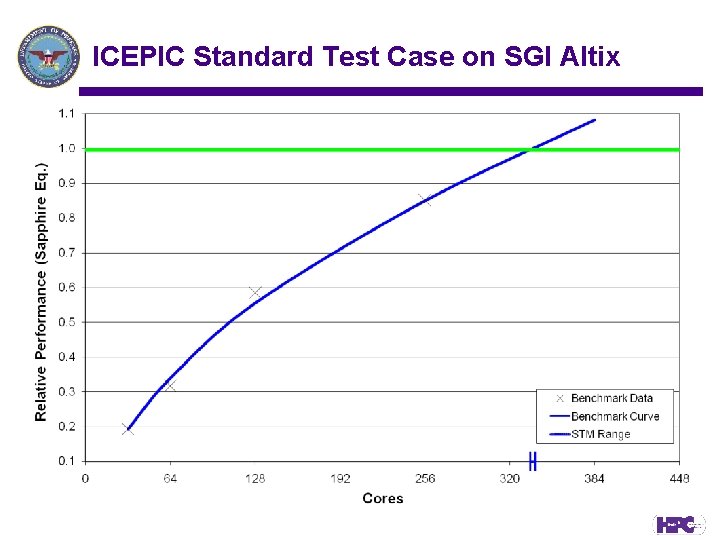

ICEPIC Standard Test Case on SGI Altix

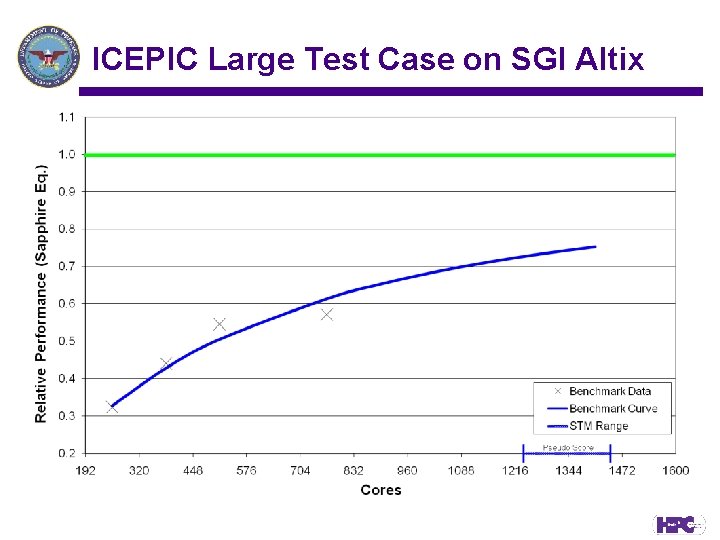

ICEPIC Large Test Case on SGI Altix

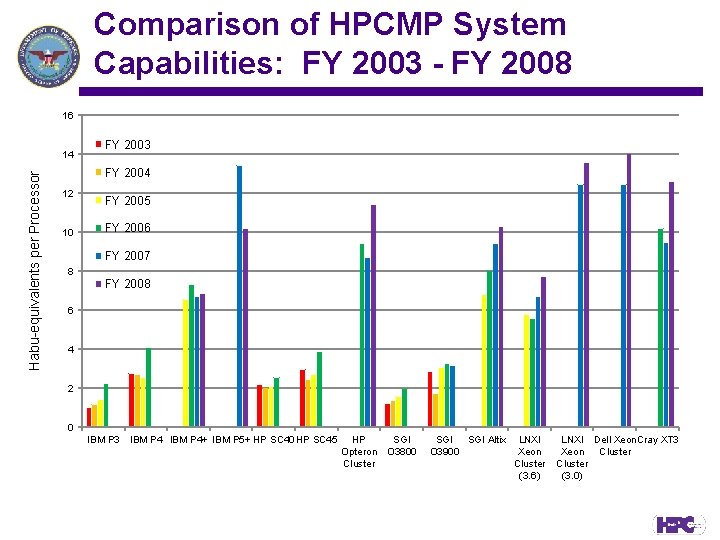

Comparison of HPCMP System Capabilities: FY 2003 - FY 2008
[Bar chart: Habu-equivalents per processor (axis from 0 to 16), one series per fiscal year from FY 2003 to FY 2008. Systems shown: IBM P3, IBM P4+, IBM P5+, HP SC40, HP SC45, HP Opteron Cluster, SGI O3800, SGI O3900, SGI Altix, LNXI Xeon Cluster (3.6), LNXI Xeon Cluster (3.0), Dell Xeon Cluster, Cray XT3]

What’s Next?
- Continue to evolve application benchmarks to represent the HPCMP computational workload accurately
- Increase profiling and performance modeling to understand application performance better
- Use performance predictions to supplement application benchmark measurements and guide vendors in designing more efficient systems

- Slides: 26