Presentation on SurfNoC: A Low Latency

- Slides: 26

Presentation on SurfNoC: A Low Latency and Provably Non-Interfering Approach to Secure Networks-On-Chip
DEPARTMENT OF COMPUTER SCIENCE AND ENGINEERING
Guided by: Basavraj Talwar, Asst. Professor, Computer Science and Engineering
Submitted by: Meejuru Siva Kumar (13 IS 12 F), Nuresh Kumar Dewangan (13 IS 14 F)

- Title: SurfNoC: A Low Latency and Provably Non-Interfering Approach to Secure Networks-On-Chip
- Authors: Hassan M. G. Wassel, Jason K. Oberg, Ted Huffmire, Ying Gao, Ryan Kastner, Frederic T. Chong, Timothy Sherwood
- Affiliations: Naval Postgraduate School, UC San Diego, UC Santa Barbara
- Conference: ACM/IEEE International Symposium on Computer Architecture (ISCA), 2013

INTRODUCTION
- Multicore processors are in growing demand and are being adopted in many domains, such as aerospace and medical devices.
- In these domains, strong isolation and security are first-class design requirements.
- When cores execute different sets of programs, strong time and space partitioning can provide such guarantees. But as the number of partitions or the asymmetry in partition bandwidth allocations grows, additional problems arise that significantly affect the network.

Contd…
- The paper introduces SurfNoC, an on-chip network that reduces the latency incurred by partitioning by avoiding the overhead associated with time multiplexing.
- It schedules the network into non-interfering waves and uses these waves to carry data from different domains.
- The main problem in a typical network-on-chip is that many resources are shared: the buffers that hold packets, the crossbar switches, and the channels. This sharing is what lets one domain's traffic affect the performance seen by another.

THE STATE OF THE ART
First Paper-
- Low Latency and Energy Efficient Scalable Architecture for Massive NoCs Using Generalized de Bruijn Graph.
- The authors propose the generalized binary de Bruijn graph as a reliable and efficient network topology for a large NoC.
- They also propose a new routing algorithm to detour around a faulty channel between two adjacent switches.
- Because the de Bruijn graph has low energy consumption, the scheme suits portable devices with limited battery capacity.
- Problem: when the two papers are compared on latency, the SurfNoC approach is more suitable.

Contd…
Second Paper-
- Prediction Router: A Low-Latency On-Chip Router Architecture with Multiple Predictors.
- The authors propose a new router architecture that predicts the output channel to be used by the next packet transfer.
- If the prediction hits, packets coming into the prediction router are transferred without waiting for the routing computation. If the prediction fails, they are transferred through the original pipeline stages.

Contd…
- Problem:
  - The router architecture is complex and hard to design.
  - It requires more hardware for prediction, which increases the router size and the processor size and ultimately leads to more energy consumption.
- Non-interference means that the injection of packets from one domain cannot affect the delivery timing of packets from other domains.
- Non-interference can be achieved by statically scheduling the domains on the network over time, but simple time multiplexing of the data flow significantly increases latency.

SPECIFICALLY IN THIS PAPER
1. The paper specifies a channel scheduling scheme and a network router design that simultaneously support low latency and non-interference between domains.
2. The authors show that as the network grows in size, as the number of domains increases, and as the asymmetry between domains becomes larger, the benefit of a surf-scheduled network over TDMA continues to increase.
3. They evaluate the latency, throughput, area, and power consumption of these approaches through a detailed network simulation, and compare all of these properties with time-division multiplexing.

BACKGROUND
- Time-division multiplexing.
- Basics of networks-on-chip.
- Topologies.
- Routing protocol: dimension-order routing.
- Flow control: flit-based flow control.
- Router microarchitecture: virtual channels and the pipelined router microarchitecture.

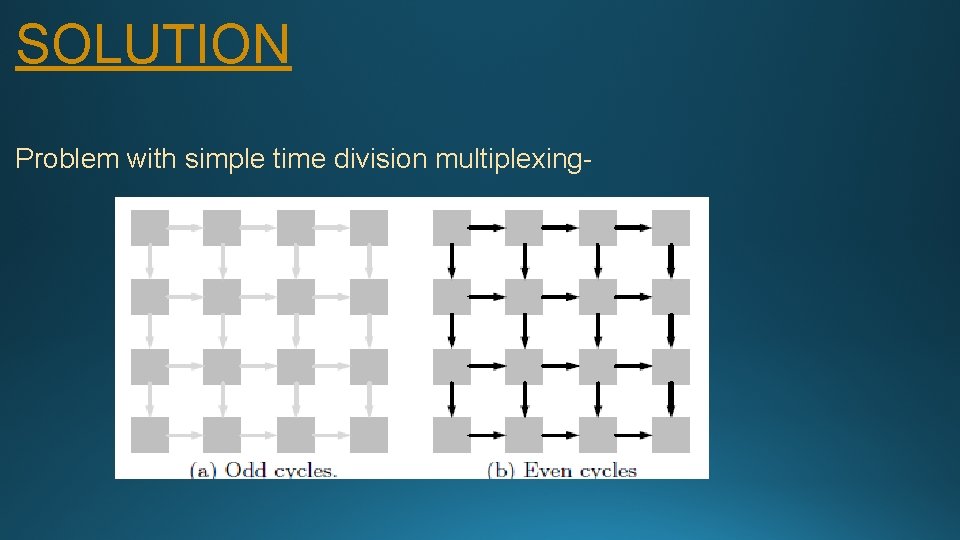

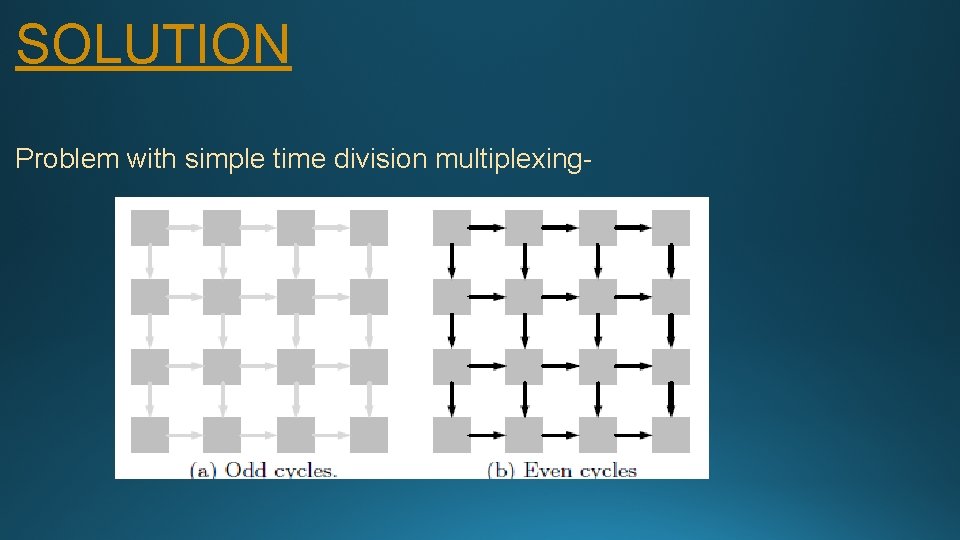

SOLUTION
Problem with simple time division multiplexing-

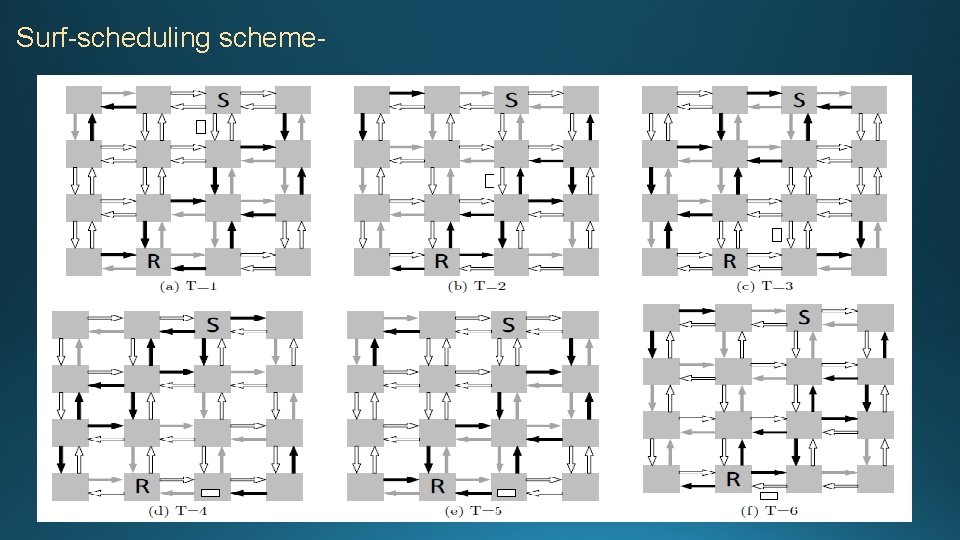

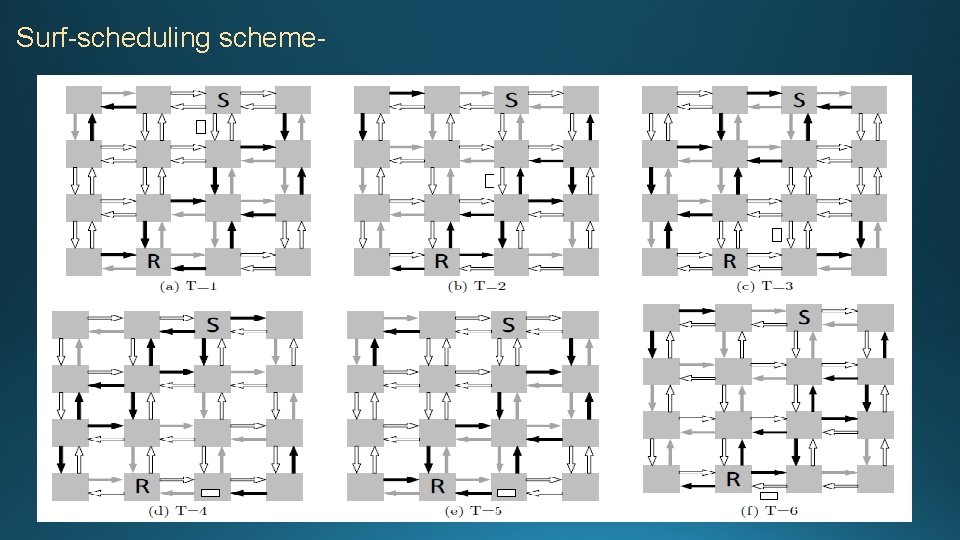

Surf-scheduling scheme-

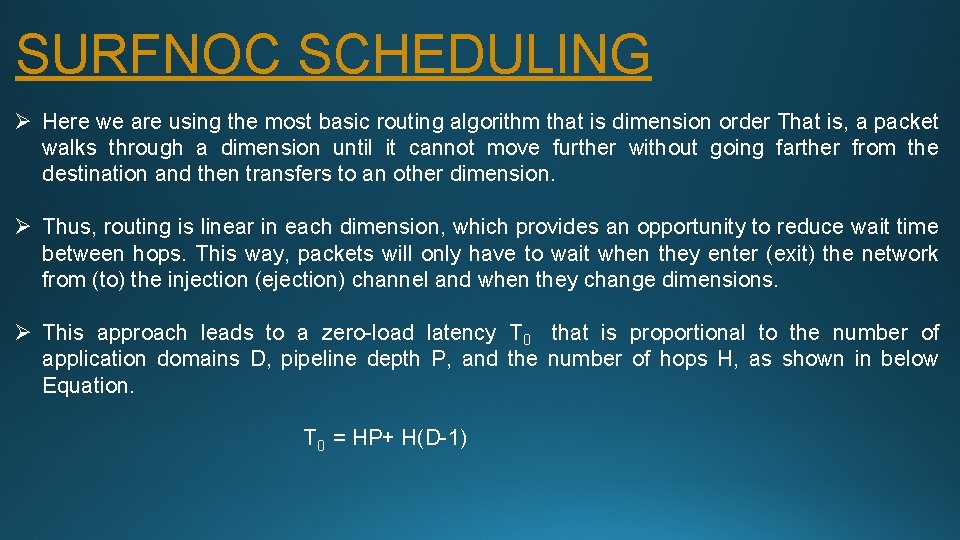

SURFNOC SCHEDULING
- SurfNoC uses the most basic routing algorithm, dimension-order routing: a packet travels along one dimension until it cannot move further without going farther from the destination, and then transfers to another dimension.
- Routing is therefore linear in each dimension, which provides an opportunity to reduce the wait time between hops. Packets only have to wait when they enter (exit) the network from (to) the injection (ejection) channel and when they change dimensions.
- With simple time-division multiplexing, by contrast, a packet may have to wait for its domain's turn at every hop. That approach leads to a zero-load latency $T_0$ that grows with the number of application domains $D$, the pipeline depth $P$, and the number of hops $H$: $T_0 = HP + H(D-1)$
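The idea that a packet waits only at injection and at a dimension change can be illustrated with a small sketch. The schedule function below is a hypothetical wave schedule chosen for illustration (each output channel's owner is offset by `P` cycles per router position); it is not taken from the paper, and ejection waits are not modelled. The names `xy_route`, `scheduled_domain`, and `walk` are likewise assumptions.

```python
def xy_route(src, dst):
    """Dimension-order (XY) routing: finish all X hops, then all Y hops."""
    x, y = src
    hops = []
    while x != dst[0]:
        step = 1 if dst[0] > x else -1
        hops.append(((x, y), "E" if step > 0 else "W"))
        x += step
    while y != dst[1]:
        step = 1 if dst[1] > y else -1
        hops.append(((x, y), "N" if step > 0 else "S"))
        y += step
    return hops


def scheduled_domain(router, direction, cycle, D, P):
    """Hypothetical wave schedule: the domain that owns this output channel
    at this cycle. The owner shifts by P cycles per router position, so a
    packet moving one hop every P cycles stays inside its own wave."""
    x, y = router
    offset = {"E": -x, "W": x, "N": -y, "S": y}[direction]
    return (cycle + P * offset) % D


def walk(src, dst, domain, D, P, start_cycle=0):
    """Return the number of cycles the packet waits before each hop."""
    t = start_cycle
    waits = []
    for router, direction in xy_route(src, dst):
        wait = 0
        while scheduled_domain(router, direction, t + wait, D, P) != domain:
            wait += 1
        waits.append(wait)
        t += wait + P  # P cycles until the packet can leave the next router
    return waits


# Three hops east, then two hops north, for domain 1 of 4 with P = 2.
print(walk((0, 0), (3, 2), domain=1, D=4, P=2))  # → [1, 0, 0, 2, 0]
```

In this run the only non-zero waits are at the first hop (injection) and at the fourth hop, where the packet turns from X to Y.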

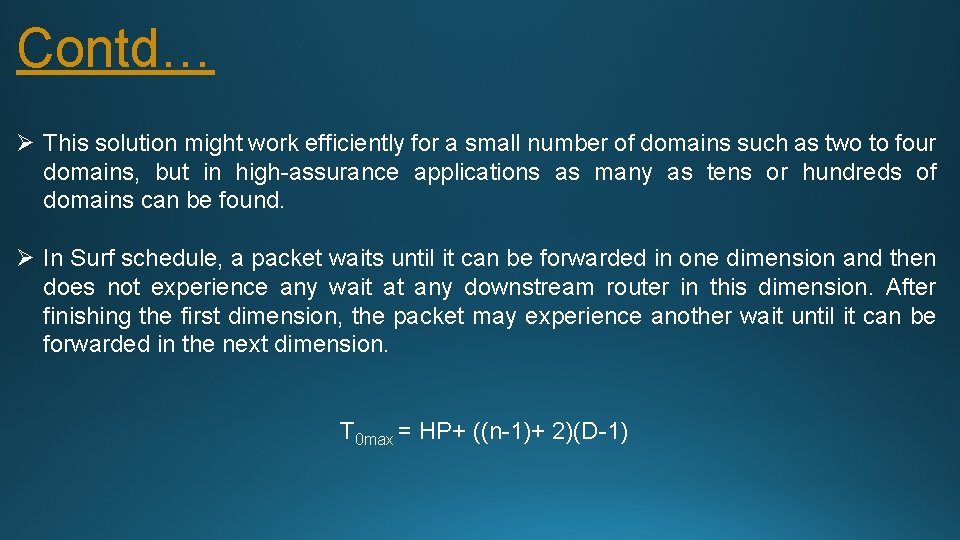

Contd…
- The simple time-multiplexed solution might work efficiently for a small number of domains, such as two to four, but high-assurance applications can have tens or even hundreds of domains.
- In the surf schedule, a packet waits until it can be forwarded in one dimension and then experiences no wait at any downstream router in that dimension. After finishing the first dimension, the packet may wait again until it can be forwarded in the next dimension. With $n$ the number of network dimensions, the maximum zero-load latency is $T_0^{\max} = HP + ((n-1) + 2)(D-1)$
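A quick way to see how the two expressions diverge is to evaluate them side by side. The sketch below reads $HP + H(D-1)$ as the simple TDMA case (an interpretation of the slides) and $HP + ((n-1)+2)(D-1)$ as the surf bound. The values `H = 14` (corner to corner in an 8×8 mesh), `P = 4`, and `n = 2` are assumptions picked for illustration, not parameters reported in the paper.

```python
def per_hop_wait_latency(H, P, D):
    """Zero-load latency when a packet can wait up to D-1 cycles at every hop."""
    return H * P + H * (D - 1)


def surf_max_latency(H, P, D, n):
    """Maximum zero-load latency under the surf schedule: waits occur at
    injection, at ejection, and at each of the n-1 dimension changes."""
    return H * P + ((n - 1) + 2) * (D - 1)


# Assumed example: 8x8 mesh (n = 2), longest minimal path H = 14, P = 4, D = 32.
H, P, D, n = 14, 4, 32, 2
tdma = per_hop_wait_latency(H, P, D)
surf = surf_max_latency(H, P, D, n)
tdma_overhead = tdma - H * P   # waiting cycles only
surf_overhead = surf - H * P
print(tdma, surf)                                # → 490 149
print(round(1 - surf_overhead / tdma_overhead, 3))  # → 0.786
```

The waiting term grows with the hop count in the first expression but only with the number of dimensions in the second, so the gap widens as the network or the number of domains grows.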

ROUTER MICROARCHITECTURE
- The SurfNoC router has two main goals:
  - Ensuring that contention between packets is free of timing channels, i.e., contention can occur between packets from the same domain but not between packets from different domains.
  - Scheduling the output channels of each router in a way that maintains the surf schedule across the whole network.
- The SurfNoC router has two allocators:
  - The VC allocator
  - The SW allocator

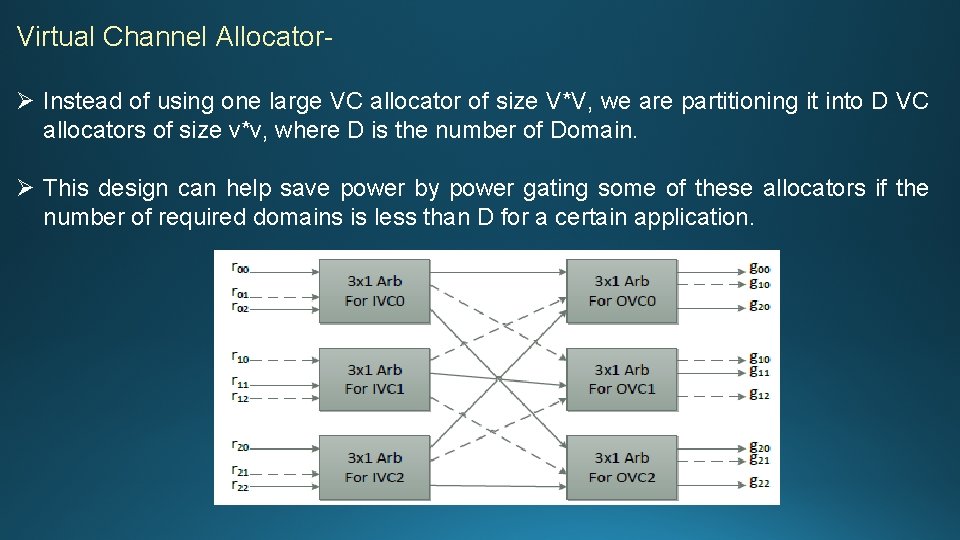

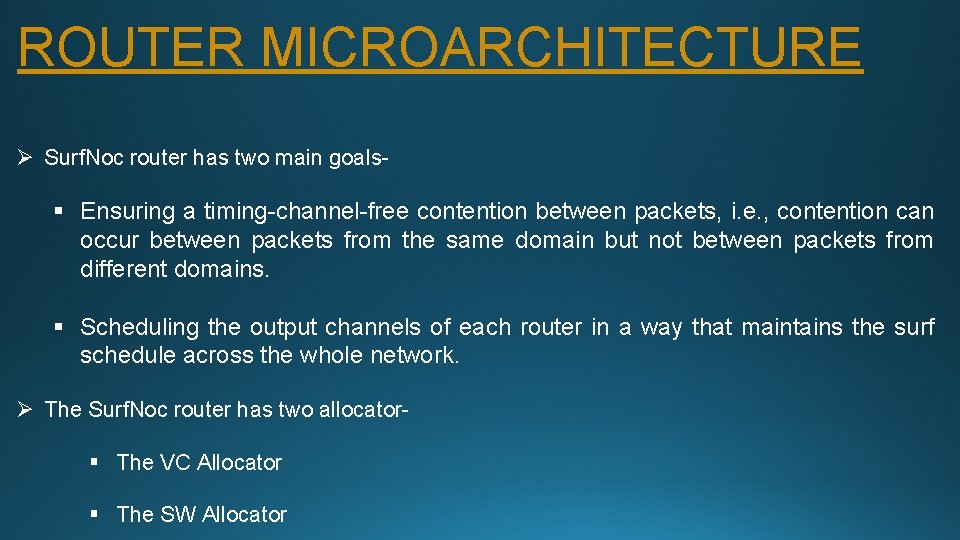

Virtual Channel Allocator
- Instead of using one large VC allocator of size V×V, the design partitions it into D VC allocators of size v×v, where D is the number of domains.
- This design can help save power by power gating some of these allocators if a given application requires fewer than D domains.
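The effect of the partitioning can be sketched with a little arithmetic. The sketch assumes that the V virtual channels are split evenly so that v = V / D, and it uses the number of request/grant pairs as a rough proxy for allocator size; both are assumptions for illustration, and the function names are hypothetical.

```python
def partition_vcs(total_vcs, domains):
    """Assign each domain a contiguous, equally sized block of VC indices."""
    if total_vcs % domains != 0:
        raise ValueError("total_vcs must be a multiple of domains")
    v = total_vcs // domains
    return {d: list(range(d * v, (d + 1) * v)) for d in range(domains)}


def allocator_pairs(total_vcs, domains):
    """Request/grant pairs: one V x V allocator versus D allocators of v x v."""
    v = total_vcs // domains
    return total_vcs * total_vcs, domains * v * v


print(partition_vcs(8, 4))    # → {0: [0, 1], 1: [2, 3], 2: [4, 5], 3: [6, 7]}
print(allocator_pairs(8, 4))  # → (64, 16)
```

Because a packet only ever requests VCs inside its own domain's block, each small allocator can be gated off independently when its domain is unused.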

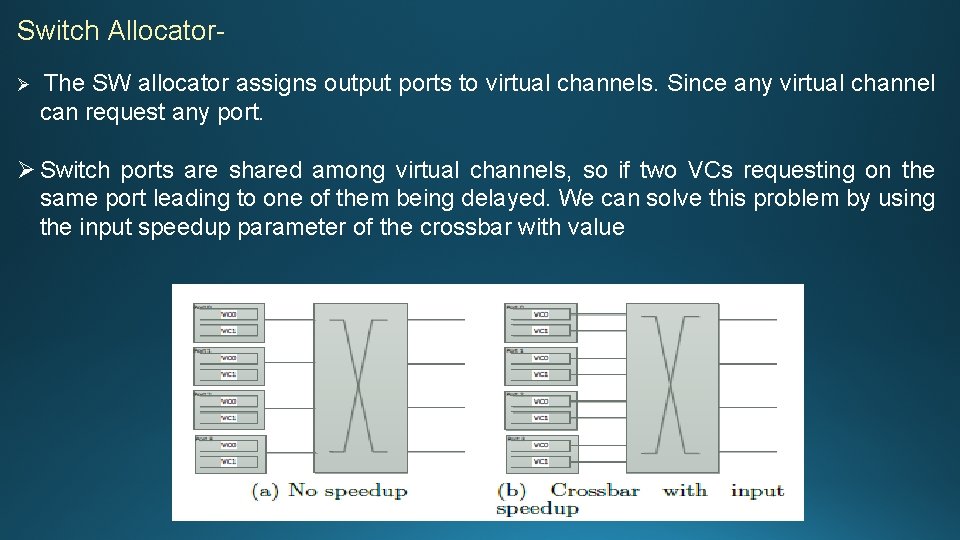

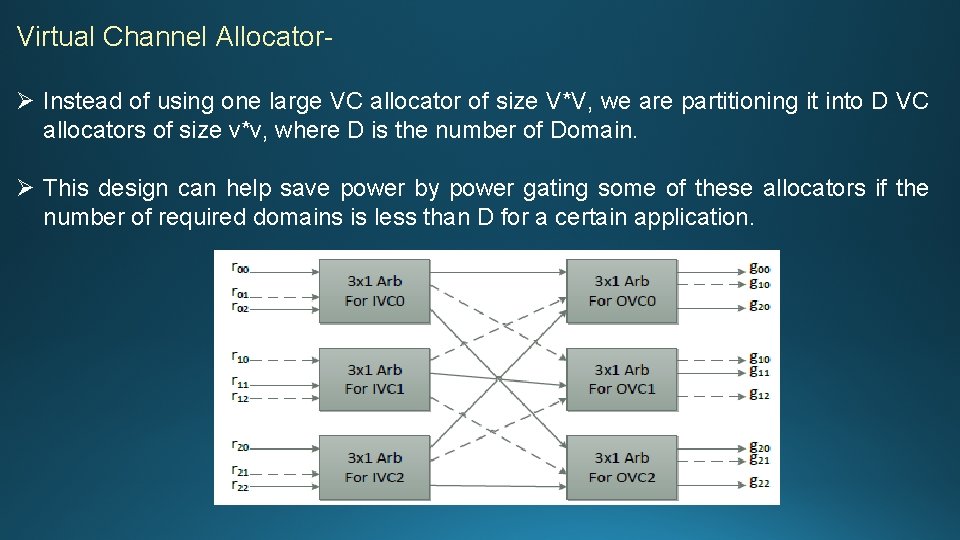

Switch Allocator
- The SW allocator assigns output ports to virtual channels; any virtual channel can request any port.
- Switch ports are shared among virtual channels, so if two VCs request the same port, one of them is delayed. This problem can be solved by using the input speedup parameter of the crossbar with value

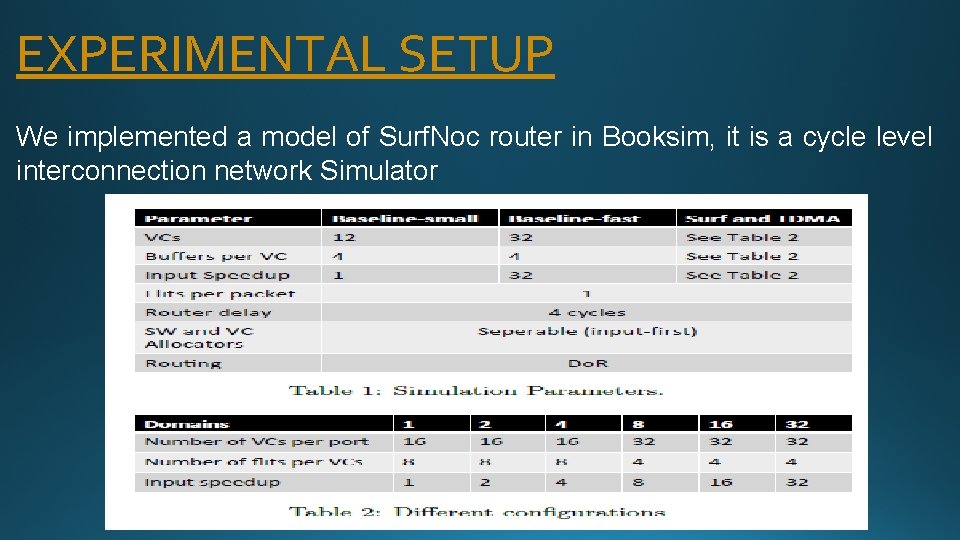

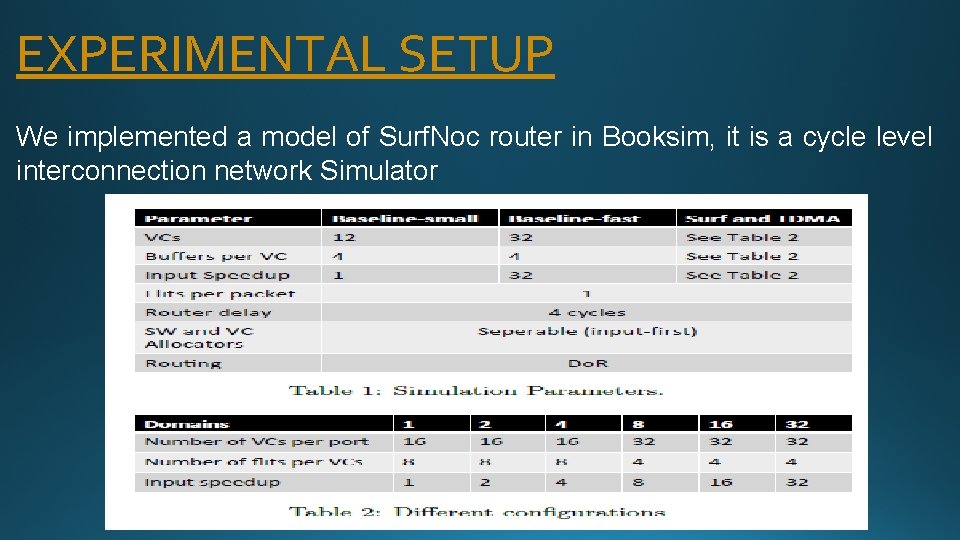

EXPERIMENTAL SETUP
We implemented a model of the SurfNoC router in BookSim, a cycle-level interconnection network simulator.

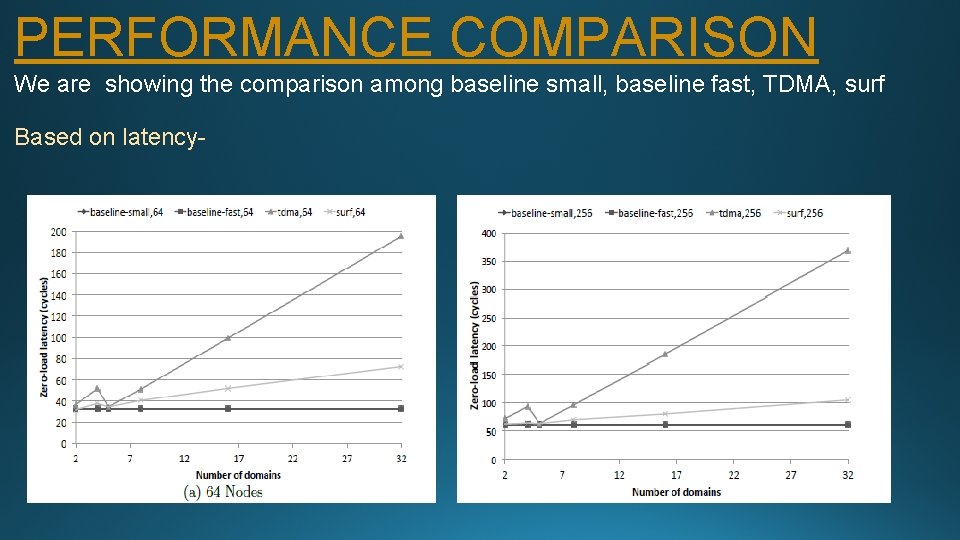

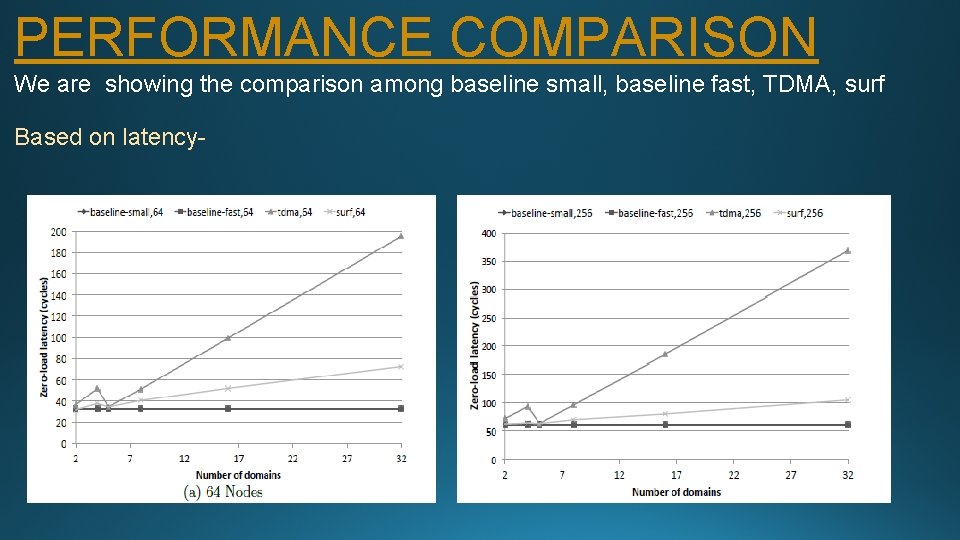

PERFORMANCE COMPARISON
The comparison covers four schemes: baseline-small, baseline-fast, TDMA, and surf.
Based on latency-

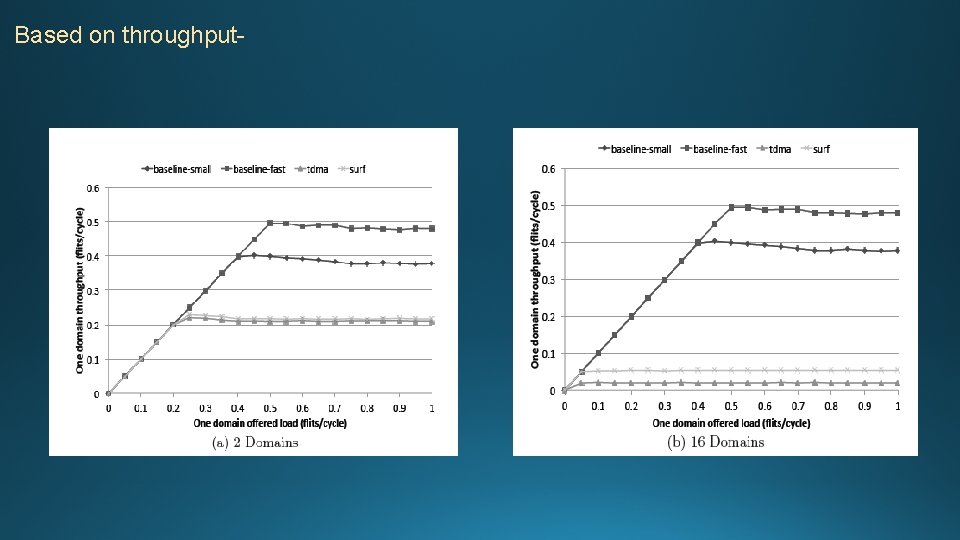

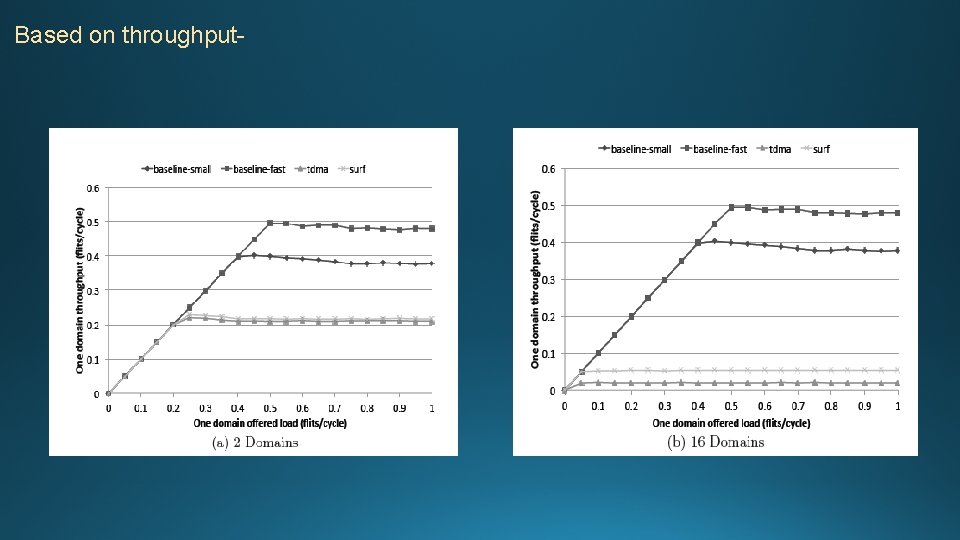

Based on throughput

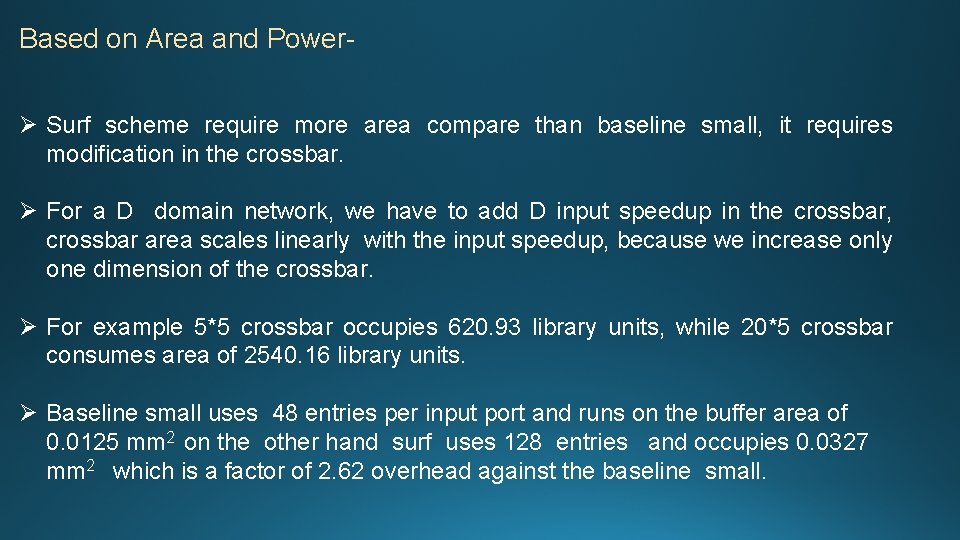

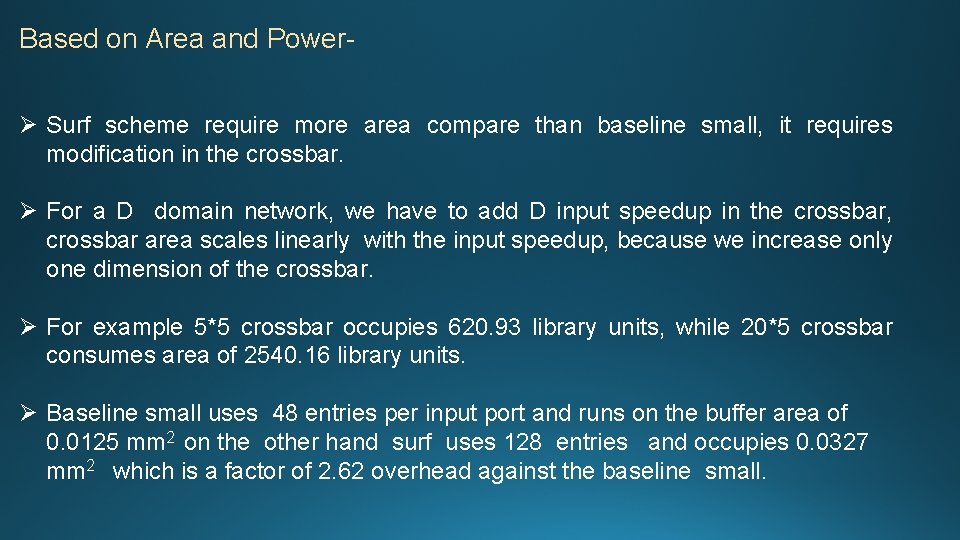

Based on Area and Power
- The surf scheme requires more area than baseline-small because it requires a modification to the crossbar.
- For a D-domain network, an input speedup of D has to be added to the crossbar. Crossbar area scales linearly with the input speedup, because only one dimension of the crossbar increases.
- For example, a 5×5 crossbar occupies 620.93 library units, while a 20×5 crossbar occupies 2540.16 library units.
- Baseline-small uses 48 buffer entries per input port, with a buffer area of 0.0125 mm². Surf uses 128 entries and occupies 0.0327 mm², an overhead factor of 2.62 over baseline-small.

Contd…
- The surf scheme also requires more power than baseline-small.
- The power consumption of a crossbar with input speedup D scales linearly with D, because dynamic power consumption is directly proportional to capacitance.
- Design Compiler estimates that a 5×5 crossbar consumes 74.26 W of power, while a 20×5 crossbar consumes 309.26 W.
- The power consumption of the buffers increases from 11.9 mW for baseline-small to 29.3 mW for the baseline-fast and surf schemes, an overhead of 146%.
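The quoted area and power figures can be cross-checked with simple arithmetic. The sketch below only recomputes ratios from the numbers on the slides (units as given there); the variable and function names are illustrative.

```python
# Figures quoted on the slides.
crossbar_area = {"5x5": 620.93, "20x5": 2540.16}          # library units
buffer_area_mm2 = {"baseline_small": 0.0125, "surf": 0.0327}
crossbar_power = {"5x5": 74.26, "20x5": 309.26}           # unit as quoted
buffer_power_mw = {"baseline_small": 11.9, "surf": 29.3}


def overhead_percent(after, before):
    """Relative increase of `after` over `before`, in percent."""
    return 100.0 * (after - before) / before


crossbar_area_ratio = crossbar_area["20x5"] / crossbar_area["5x5"]
buffer_area_ratio = buffer_area_mm2["surf"] / buffer_area_mm2["baseline_small"]
crossbar_power_ratio = crossbar_power["20x5"] / crossbar_power["5x5"]
buffer_power_overhead = overhead_percent(
    buffer_power_mw["surf"], buffer_power_mw["baseline_small"]
)

print(round(crossbar_area_ratio, 2))   # → 4.09
print(round(buffer_area_ratio, 2))     # → 2.62
print(round(crossbar_power_ratio, 2))  # → 4.16
print(round(buffer_power_overhead))    # → 146
```

Going from 5×5 to 20×5 is a 4× input speedup, and both crossbar ratios come out close to 4, which matches the linear-scaling claim. The buffer figures reproduce the quoted 2.62 factor and 146% overhead.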

NON-INTERFERENCE VERIFICATION
- To prove that the arbitration scheme is non-interfering between domains, the authors used gate-level information-flow tracking (GLIFT) logic.
- GLIFT logic captures all digital information flows, including implicit and timing-channel flows, because all information flows show up in decision-making circuit constructs such as multiplexers.
- For example, an arbitration operation leaks information if the control bits of the multiplexers depend on one of the two domains, but it does not leak information (or cause interference) if arbitration is based on a static schedule.
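The arbitration example can be made concrete with a toy model. The sketch below is a brute-force reading of the taint rule (an output is tainted if changing only the tainted inputs can change it), not the gate-level shadow logic used in the paper. The two arbiter functions and all names are hypothetical.

```python
from itertools import product


def is_tainted(fn, values, taints):
    """Return True if some assignment to the tainted inputs changes fn's
    output while the untainted inputs keep their current values."""
    tainted_idx = [i for i, t in enumerate(taints) if t]
    baseline = fn(*values)
    for combo in product([0, 1], repeat=len(tainted_idx)):
        trial = list(values)
        for i, bit in zip(tainted_idx, combo):
            trial[i] = bit
        if fn(*trial) != baseline:
            return True
    return False


def priority_grant_a(req_a, req_b, slot_is_a):
    """Demand-driven arbiter: domain A is granted only if B is not requesting."""
    return req_a & (1 - req_b)


def scheduled_grant_a(req_a, req_b, slot_is_a):
    """Statically scheduled arbiter: A is granted only in A's own time slot."""
    return req_a & slot_is_a


values = (1, 0, 1)   # req_a, req_b, slot_is_a
taints = (0, 1, 0)   # only domain B's request is marked as tainted
print(is_tainted(priority_grant_a, values, taints))   # → True
print(is_tainted(scheduled_grant_a, values, taints))  # → False
```

In the demand-driven arbiter, domain B's request reaches domain A's grant signal, so B can influence A's timing. With the static schedule the grant depends only on A's request and the slot counter.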

CONCLUSION
- The paper proposes a low-latency, time-division-multiplexed, packet-switched, statically scheduled network.
- SurfNoC exploits dimension-ordered routing, in which packets have to wait for their domain's turn only when they enter, when they exit, and potentially when changing dimensions.
- The latency overhead of the surf schedule scales efficiently compared with synchronous TDMA: it saves up to 75% of the latency overhead in the case of a 64-node network with 32 domains.

FUTURE WORK
- The paper uses the dimension-order routing algorithm. Latency increases as the number of turns increases, so better-optimized designs need to be explored.
- The work considers the mesh topology. Surf scheduling might be directly applicable to other topologies (e.g., flattened butterflies), but non-minimal routing is usually required there to improve throughput, and enforcing a surf-like schedule with adaptive routing might increase latency.

![Reference slide](https://slidetodoc.com/presentation_image/4d5c0cc531eb0354aac9c4db24d75ac4/image-25.jpg)

REFERENCE

[1] Hassan M. G. Wassel, Ying Gao, Jason K. Oberg, Ted Huffmire, Ryan Kastner, Frederic T. Chong, Timothy Sherwood, “SurfNoC: A Low Latency and Provably Non-Interfering Approach to Secure Networks-On-Chip”, ISCA 2013.

[2] Natalie Enright Jerger, Li-Shiuan Peh, “On-Chip Networks”, Morgan & Claypool Publishers, 2009.

[3] Mohammad Hosseinabady, Mohammad Reza Kakoee, Jimson Mathew, and Dhiraj K. Pradhan, “Low Latency and Energy Efficient Scalable Architecture for Massive NoCs Using Generalized de Bruijn Graph”, IEEE Transactions on Very Large Scale Integration (VLSI) Systems, vol. 19, no. 8, August 2011.

[4] Hiroki Matsutani, Michihiro Koibuchi, Hideharu Amano, and Tsutomu Yoshinaga, “Prediction Router: A Low-Latency On-Chip Router Architecture with Multiple Predictors”, IEEE Transactions on Computers, vol. 60, no. 6, June 2011.

THANK YOU