Prepared for the 2005 Software Assurance Symposium (SAS)

- Slides: 18

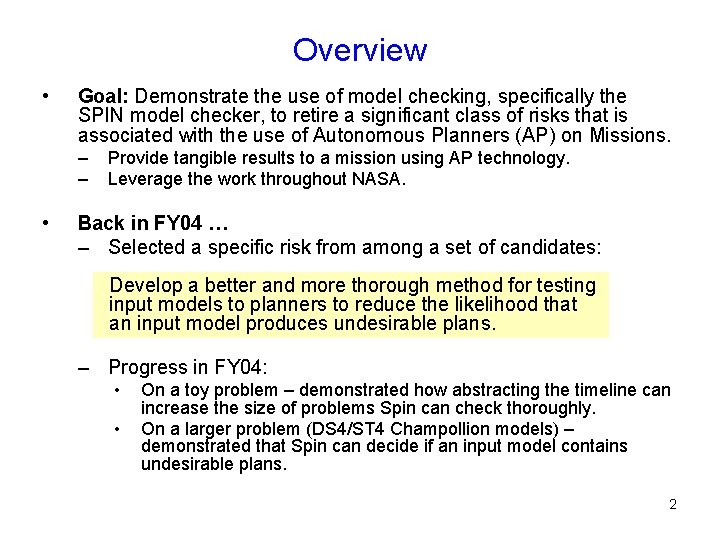

Verifying Autonomous Planning Systems

Even the best laid plans need to be verified

Gordon Cucullu, Gerard Holzmann, Rajeev Joshi, Benjamin Smith, Margaret Smith (PI)

Affiliation: Jet Propulsion Laboratory

Image labels: DS 1, MSL, EO 1

Overview

- Goal: Demonstrate the use of model checking, specifically the SPIN model checker, to retire a significant class of risks associated with the use of Autonomous Planners (AP) on missions.
  - Provide tangible results to a mission using AP technology.
  - Leverage the work throughout NASA.
- Back in FY 04:
  - Selected a specific risk from among a set of candidates: develop a better and more thorough method for testing input models to planners, to reduce the likelihood that an input model produces undesirable plans.
  - Progress in FY 04:
    - On a toy problem, demonstrated how abstracting the timeline can increase the size of problems Spin can check thoroughly.
    - On a larger problem (DS 4/ST 4 Champollion models), demonstrated that Spin can decide whether an input model contains undesirable plans.

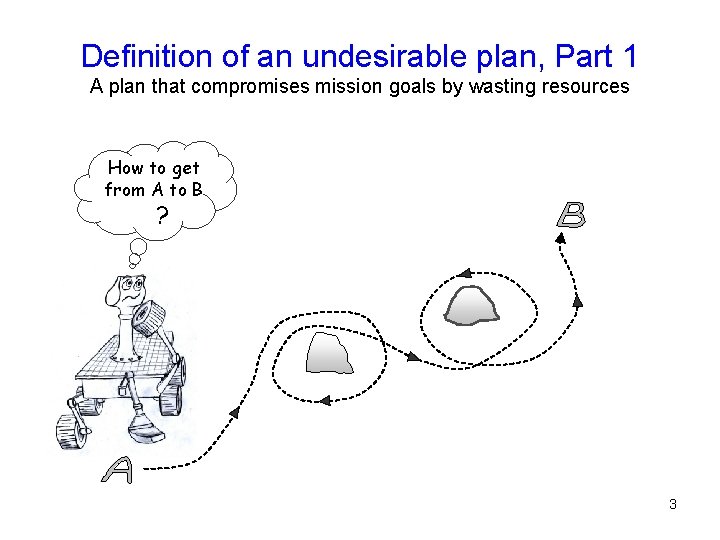

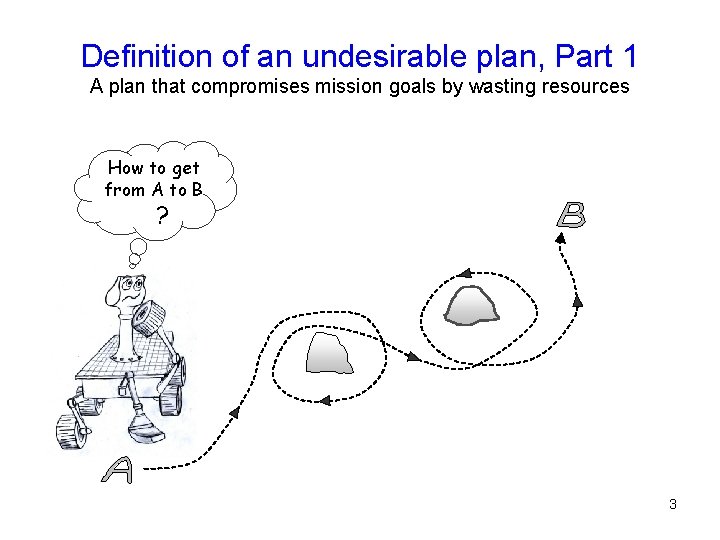

Definition of an undesirable plan, Part 1

A plan that compromises mission goals by wasting resources.

How to get from A to B?

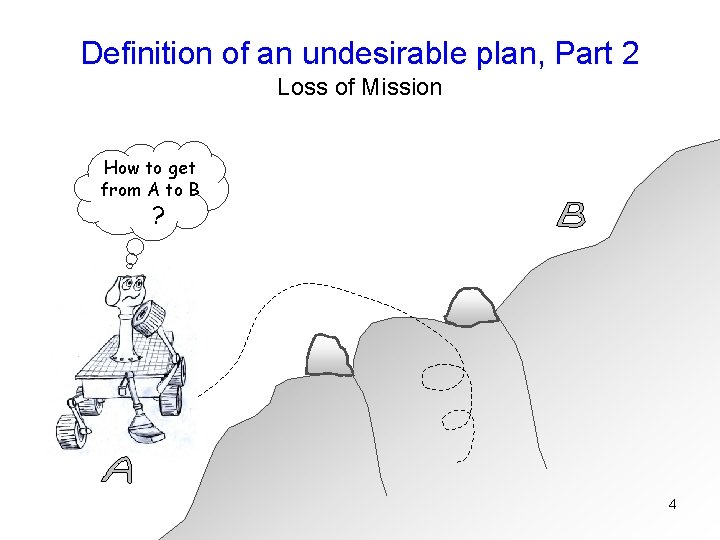

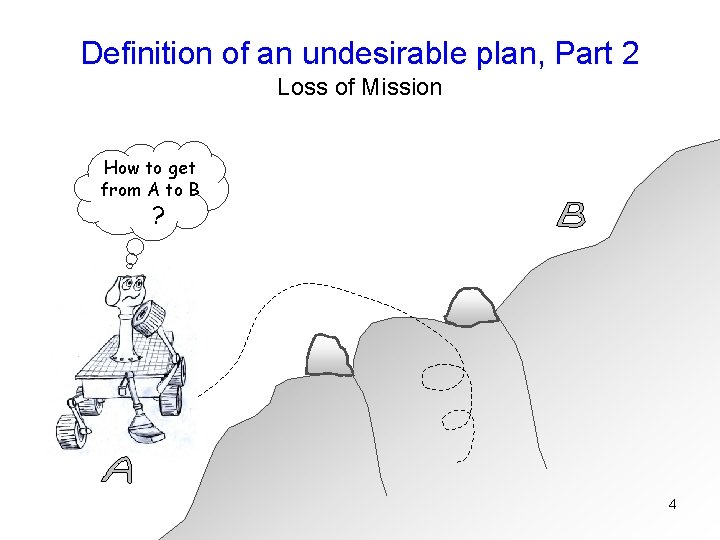

Definition of an undesirable plan, Part 2

Loss of mission.

How to get from A to B?

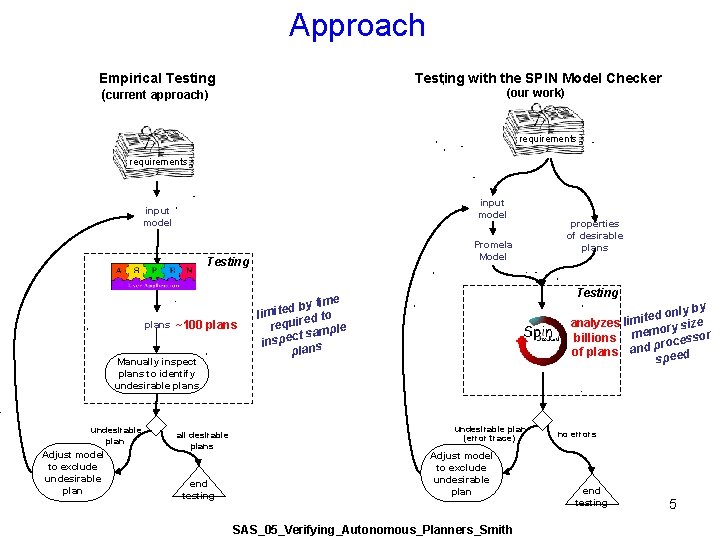

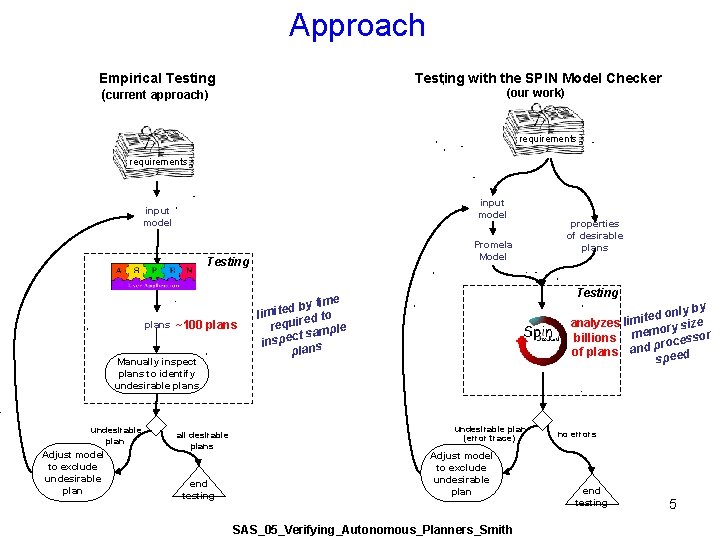

Approach

Empirical testing (current approach):

- Inputs: requirements and the input model.
- Testing produces ~100 plans.
- Manually inspect plans to identify undesirable plans.
- If an undesirable plan is found, adjust the model to exclude it and test again.
- If all plans are desirable, end testing.
- Limited by the time required to inspect sample plans.

Testing with the SPIN model checker (our work):

- Inputs: properties of desirable plans (from the requirements) and a Promela model (from the input model).
- Testing analyzes billions of plans.
- If an undesirable plan (error trace) is found, adjust the model to exclude it and test again.
- If there are no errors, end testing.
- Limited only by memory size and processor speed.

SAS_05_Verifying_Autonomous_Planners_Smith
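The difference between inspecting a sample of plans and checking all of them can be illustrated with a toy sketch in Python. This is not Spin, ASPEN, or any project tool: the activities, costs, fuel budget, and function names are all hypothetical, chosen only to show exhaustive enumeration returning a violating plan in the role of an error trace.

```python
from itertools import product

# Hypothetical toy model: a plan is a fixed-length sequence of activities,
# and each activity consumes some fuel.
ACTIVITY_COST = {"drive_direct": 2, "drive_detour": 5, "wait": 0}
PLAN_LENGTH = 3
FUEL_BUDGET = 12  # assumed property: a desirable plan never exceeds this


def is_desirable(plan):
    """Property of desirable plans: total fuel stays within the budget."""
    return sum(ACTIVITY_COST[a] for a in plan) <= FUEL_BUDGET


def all_plans():
    """Enumerate every plan the toy model can produce."""
    return product(sorted(ACTIVITY_COST), repeat=PLAN_LENGTH)


def find_undesirable_plan():
    """Check every plan; return the first violation, or None if there is none."""
    for plan in all_plans():
        if not is_desirable(plan):
            return plan  # plays the role of the model checker's error trace
    return None


def count_undesirable_plans():
    """Count how many of the enumerated plans violate the property."""
    return sum(1 for plan in all_plans() if not is_desirable(plan))
```

In this toy model only one of the 27 possible plans violates the budget, so a small random sample could easily miss it, while exhaustive enumeration cannot.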

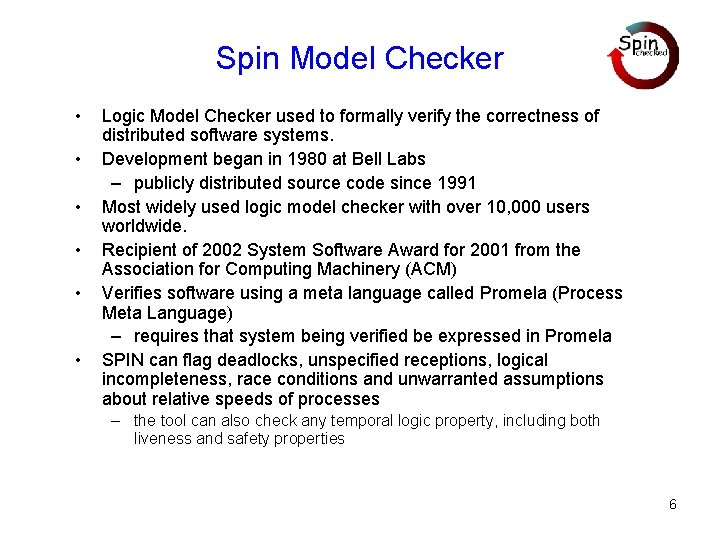

Spin Model Checker

- Logic model checker used to formally verify the correctness of distributed software systems.
- Development began in 1980 at Bell Labs; the source code has been publicly distributed since 1991.
- Most widely used logic model checker, with over 10,000 users worldwide.
- Recipient of the 2002 Software System Award (for 2001) from the Association for Computing Machinery (ACM).
- Verifies software using a meta language called Promela (Process Meta Language); the system being verified must be expressed in Promela.
- SPIN can flag deadlocks, unspecified receptions, logical incompleteness, race conditions, and unwarranted assumptions about the relative speeds of processes. The tool can also check any linear temporal logic (LTL) property, including both liveness and safety properties.

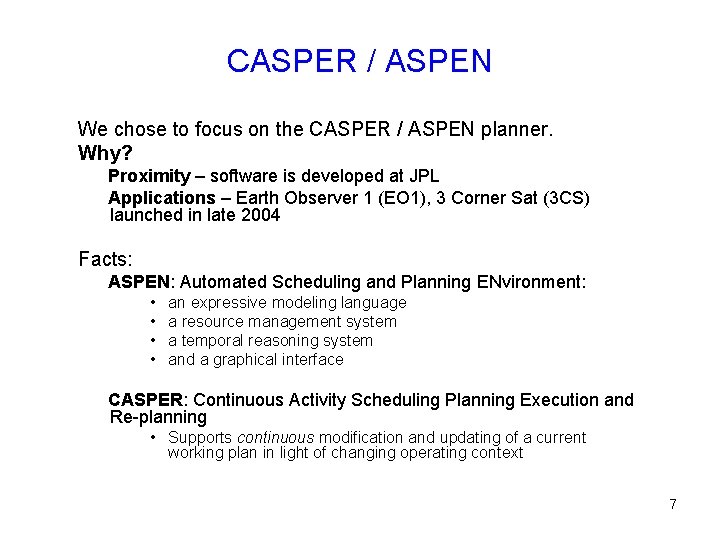

CASPER / ASPEN

We chose to focus on the CASPER / ASPEN planner. Why?

- Proximity: the software is developed at JPL.
- Applications: Earth Observing 1 (EO 1); 3 Corner Sat (3 CS), launched in late 2004.

Facts:

- ASPEN: Automated Scheduling and Planning ENvironment:
  - an expressive modeling language
  - a resource management system
  - a temporal reasoning system
  - a graphical interface
- CASPER: Continuous Activity Scheduling Planning Execution and Re-planning
  - Supports continuous modification and updating of a current working plan in light of a changing operating context.

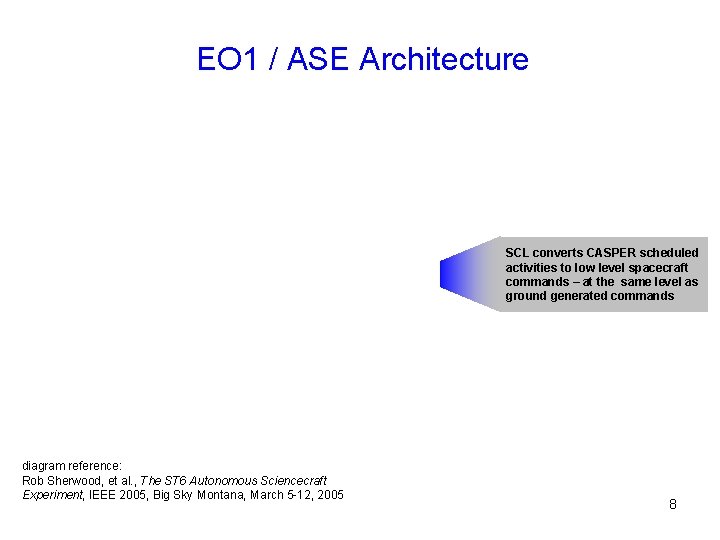

EO 1 / ASE Architecture

SCL converts CASPER-scheduled activities to low-level spacecraft commands, at the same level as ground-generated commands.

Diagram reference: Rob Sherwood et al., The ST 6 Autonomous Sciencecraft Experiment, IEEE 2005, Big Sky, Montana, March 5-12, 2005.

Focus of work in FY 05

- Apply this work to a mission (the Earth Observing 1 mission, EO 1).
  - Adaptation of CASPER for EO 1 was performed at JPL.
  - Testing of the EO 1 adaptation was performed at JPL.
- Build the tools necessary to make our technique scale.
  - The Spin models constructed in FY 04 were built by hand.
  - We need tools to automate the generation of the Spin models from AP input models to:
    - improve the fidelity of the Spin models (avoid human translation errors)
    - make our technique available to testers who may not be experts in both ASPEN's input language and Spin's Promela language
    - speed up the whole process: testing of each EO 1 build was on the order of months

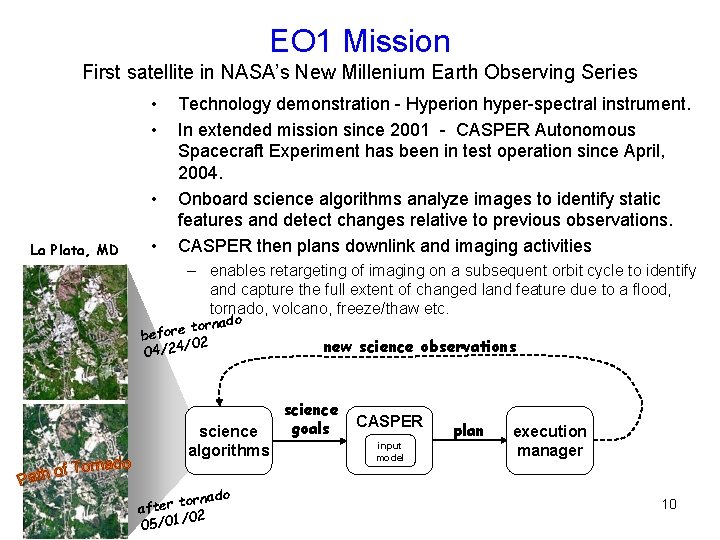

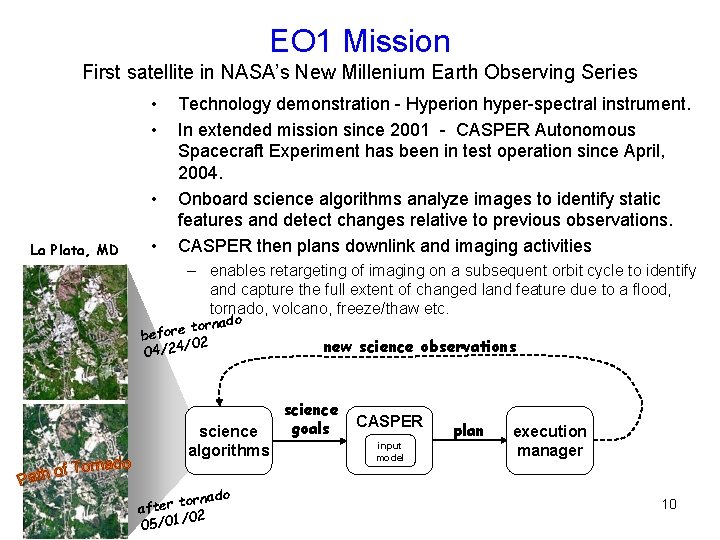

EO 1 Mission

First satellite in NASA's New Millennium Earth Observing Series

- Technology demonstration: Hyperion hyper-spectral instrument.
- In extended mission since 2001. The CASPER Autonomous Sciencecraft Experiment has been in test operation since April 2004.
- Onboard science algorithms analyze images to identify static features and detect changes relative to previous observations.
- CASPER then plans downlink and imaging activities. This enables retargeting of imaging on a subsequent orbit cycle to identify and capture the full extent of a changed land feature due to a flood, tornado, volcano, freeze/thaw, etc.

Figure: images of La Plata, MD before the tornado (04/24/02) and after the tornado (05/01/02).

Diagram labels: science algorithms, new science observations, science goals, CASPER, input model, plan, execution manager.

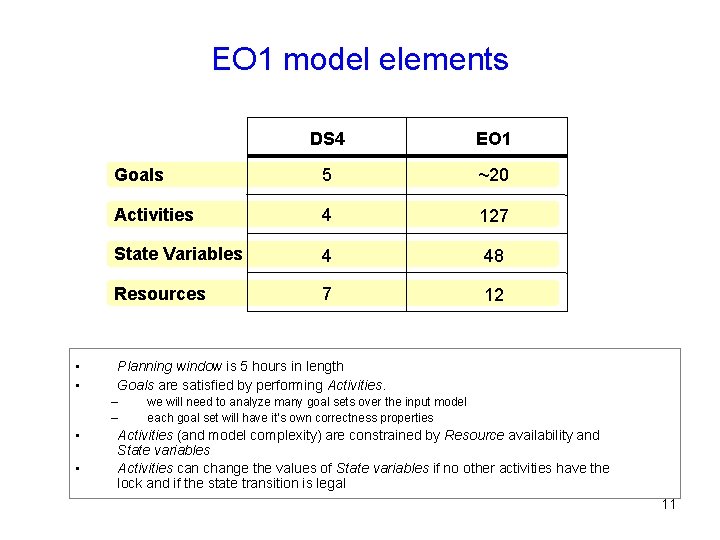

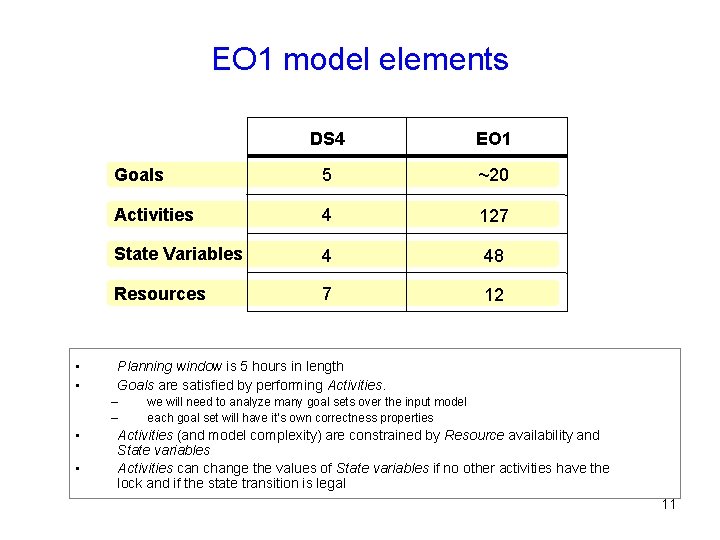

EO 1 model elements

| Element | DS 4 | EO 1 |
| --- | --- | --- |
| Goals | 5 | ~20 |
| Activities | 4 | 127 |
| State variables | 4 | 48 |
| Resources | 7 | 12 |

- The planning window is 5 hours in length.
- Goals are satisfied by performing Activities.
  - We will need to analyze many goal sets over the input model.
  - Each goal set will have its own correctness properties.
- Activities (and model complexity) are constrained by Resource availability and State variables.
- Activities can change the values of State variables if no other activities have the lock and if the state transition is legal.
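The last rule, that a change needs both a free (or owned) lock and a legal transition, can be sketched as follows. This is a minimal illustration in Python under assumed semantics, not ASPEN's actual locking model; the class, method names, and the on/off example values are hypothetical.

```python
class StateVariable:
    """Hypothetical state variable guarded by a lock and a set of legal transitions."""

    def __init__(self, value, legal_transitions):
        self.value = value
        self.legal = set(legal_transitions)  # set of (from_value, to_value) pairs
        self.lock_holder = None              # name of the activity holding the lock

    def acquire(self, activity):
        """Take the lock if it is free; report whether `activity` now holds it."""
        if self.lock_holder is None:
            self.lock_holder = activity
        return self.lock_holder == activity

    def release(self, activity):
        """Release the lock, but only if `activity` is the current holder."""
        if self.lock_holder == activity:
            self.lock_holder = None

    def change(self, activity, new_value):
        """Change the value only if no other activity holds the lock
        and the transition from the current value is legal."""
        if self.lock_holder not in (None, activity):
            return False
        if (self.value, new_value) not in self.legal:
            return False
        self.value = new_value
        return True
```

For example, with `StateVariable("off", {("off", "on"), ("on", "off")})`, an activity that does not hold the lock is refused while another activity holds it, and a request for an unlisted transition such as on to on is refused regardless of the lock.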

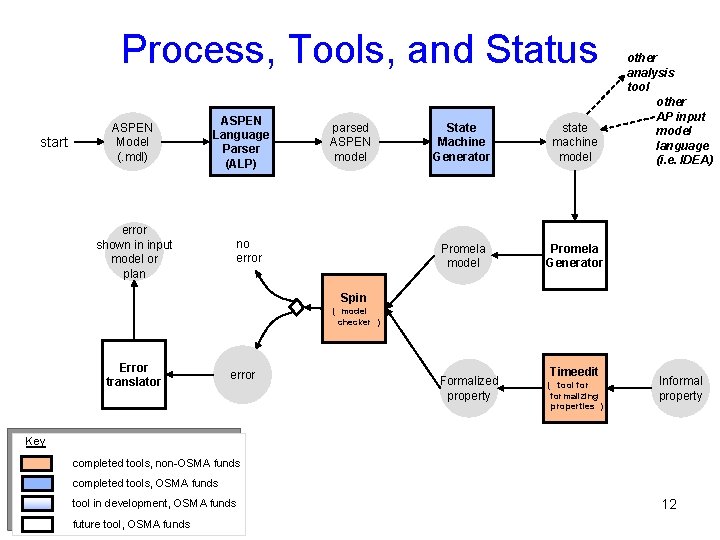

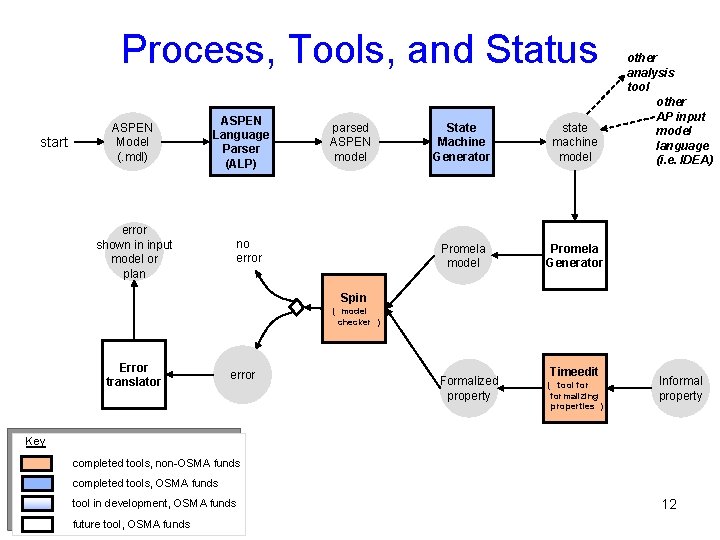

Process, Tools, and Status

Elements of the tool chain diagram:

- start: ASPEN Model (.mdl)
- ASPEN Language Parser (ALP), producing a parsed ASPEN model
- State Machine Generator, producing a state machine model
- Promela Generator
- Spin (model checker)
- Timeedit (tool for formalizing properties): takes an informal property and produces a formalized property
- Spin outcomes: no error, or error
- Error translator: the error is shown in the input model or plan
- Other AP input model language (e.g. IDEA)
- Other analysis tool

Key:

- completed tools, non-OSMA funds
- completed tools, OSMA funds
- tool in development, OSMA funds
- future tool, OSMA funds
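To give a feel for what a step from a state machine model to Promela might look like, here is a small Python sketch. It is not the project's Promela Generator and makes no claim about its design; the function name, the input representation, and the shape of the emitted Promela (one `mtype` variable and one process with a guarded `do` loop) are assumptions made for illustration.

```python
def to_promela(name, states, initial, transitions):
    """Emit Promela text for one state variable (hypothetical sketch).

    name        -- identifier for the state variable
    states      -- list of state names
    initial     -- initial state name (must be in `states`)
    transitions -- list of (source, destination) pairs of legal transitions
    """
    if initial not in states:
        raise ValueError("initial state must be one of the declared states")
    lines = [
        "mtype = { " + ", ".join(states) + " };",
        f"mtype {name} = {initial};",
        "",
        f"active proctype {name}_machine() {{",
        "  do",
    ]
    for src, dst in transitions:
        if src not in states or dst not in states:
            raise ValueError(f"unknown state in transition {src} -> {dst}")
        # One guarded option per legal transition.
        lines.append(f"  :: {name} == {src} -> {name} = {dst}")
    lines += ["  od", "}"]
    return "\n".join(lines)
```

Calling `to_promela("warp", ["record", "standby", "play"], "standby", [("standby", "record"), ("record", "standby"), ("standby", "play"), ("play", "standby")])` yields a small model in which Spin's nondeterministic choice among enabled options explores every legal sequence of transitions.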

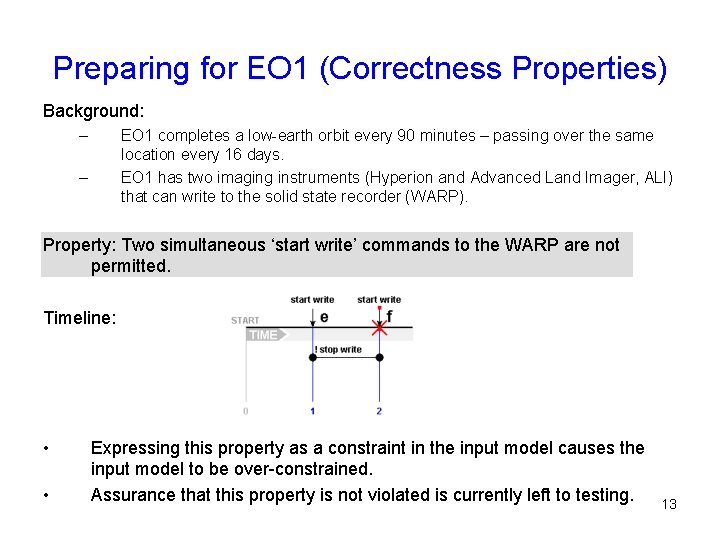

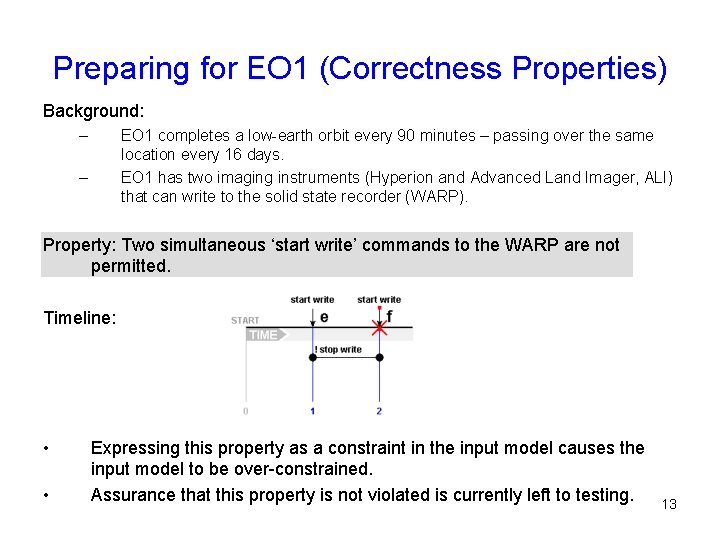

Preparing for EO 1 (Correctness Properties)

Background:

- EO 1 completes a low-earth orbit every 90 minutes, passing over the same location every 16 days.
- EO 1 has two imaging instruments (Hyperion and the Advanced Land Imager, ALI) that can write to the solid state recorder (WARP).

Property: Two simultaneous 'start write' commands to the WARP are not permitted.

Timeline: (shown in the slide image)

- Expressing this property as a constraint in the input model causes the input model to be over-constrained.
- Assurance that this property is not violated is currently left to testing.
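As a rough illustration of what this property means for a single plan, the Python sketch below scans a plan for 'start write' commands issued at the same time. The plan representation (a list of time, instrument, command tuples) and the function name are assumptions for the example. A model checker would check the property over all plans the input model allows, not just one.

```python
from collections import defaultdict


def simultaneous_start_writes(plan):
    """Return {time: [instruments]} for every time at which more than one
    'start write' command is issued to the WARP.

    `plan` is a list of (time_seconds, instrument, command) tuples; this
    representation is an assumption made for the sketch.
    """
    writers = defaultdict(list)
    for time_s, instrument, command in plan:
        if command == "start write":
            writers[time_s].append(instrument)
    return {t: names for t, names in writers.items() if len(names) > 1}
```

An empty result means the plan satisfies the property. A non-empty result identifies the conflicting commands, which is the kind of information an error trace would carry.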

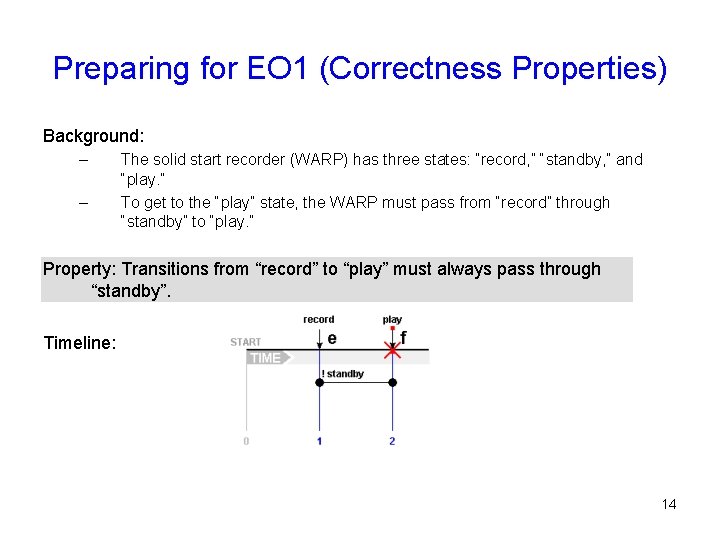

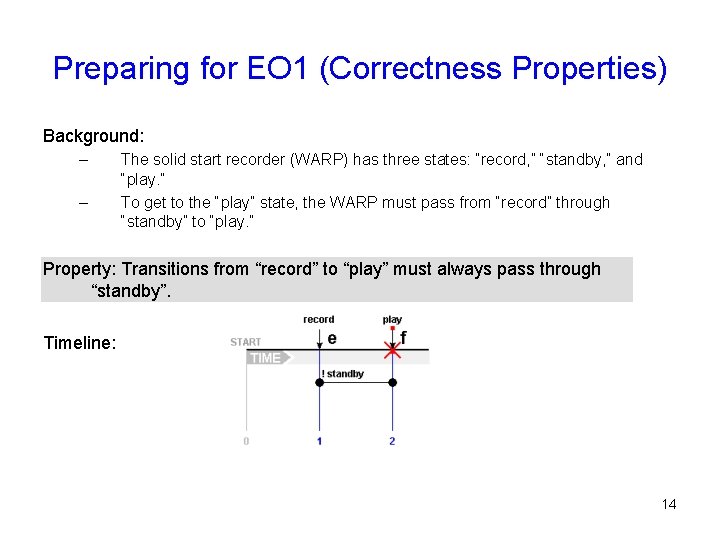

Preparing for EO 1 (Correctness Properties)

Background:

- The solid state recorder (WARP) has three states: "record," "standby," and "play."
- To get to the "play" state, the WARP must pass from "record" through "standby" to "play."

Property: Transitions from "record" to "play" must always pass through "standby".

Timeline: (shown in the slide image)
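The property can be read as a check on a sequence of WARP states: once "record" has been seen, "play" must not appear until "standby" has. A minimal Python sketch of that check on one trace follows. The trace representation and function name are assumptions, and this is a single-trace check, not the exhaustive search a model checker performs.

```python
def passes_through_standby(trace):
    """Return True if 'play' never follows 'record' without 'standby' in between.

    `trace` is a list of WARP state names in time order (assumed representation).
    """
    standby_needed = False
    for state in trace:
        if state == "record":
            standby_needed = True
        elif state == "standby":
            standby_needed = False
        elif state == "play" and standby_needed:
            return False  # reached 'play' from 'record' without 'standby'
    return True
```

For example, `["record", "standby", "play"]` satisfies the property, while `["record", "play"]` violates it.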

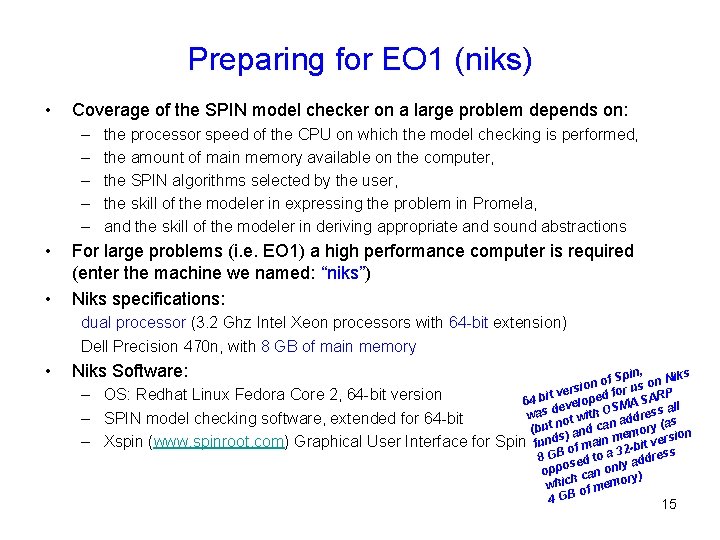

Preparing for EO 1 (niks)

- Coverage of the SPIN model checker on a large problem depends on:
  - the processor speed of the CPU on which the model checking is performed,
  - the amount of main memory available on the computer,
  - the SPIN algorithms selected by the user,
  - the skill of the modeler in expressing the problem in Promela, and
  - the skill of the modeler in deriving appropriate and sound abstractions.
- For large problems (e.g. EO 1) a high-performance computer is required (enter the machine we named "niks").
- Niks specifications: dual-processor (3.2 GHz Intel Xeon processors with 64-bit extension) Dell Precision 470n, with 8 GB of main memory.
- Niks software:
  - OS: Redhat Linux Fedora Core 2, 64-bit version
  - SPIN model checking software, extended for 64-bit
  - Xspin (www.spinroot.com), graphical user interface for Spin

Callout: A 64-bit version of Spin for use on Niks was developed (but not with OSMA SARP funds) and can address all 8 GB of main memory, as opposed to a 32-bit version, which can only address 4 GB of memory.
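The 4 GB versus 8 GB remark follows from pointer width: a 32-bit address can name 2^32 distinct bytes. The arithmetic is sketched below in Python; the function names are illustrative, and the sketch ignores operating-system limits that further reduce the usable address space of a process.

```python
GIB = 2 ** 30  # bytes per gibibyte


def addressable_bytes(pointer_bits):
    """Number of distinct byte addresses a pointer of this width can express."""
    return 2 ** pointer_bits


def can_address(pointer_bits, memory_gib):
    """True if a pointer of this width can reach every byte of the given memory."""
    return addressable_bytes(pointer_bits) >= memory_gib * GIB
```

Here `addressable_bytes(32) // GIB` evaluates to 4, so a 32-bit build cannot reach all 8 GB of main memory, while a 64-bit build can.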

Preparing for EO 1 (Test cases)

- Test cases are needed to test that our tools are generating high-fidelity Promela models.
- We have developed a set of Promela models and properties by hand from the CASPER input model test suite.
- This permits us to compare model checking results between the hand-generated models and our auto-generated Promela models.

Challenges

- The ASPEN language structure is not rigorously defined in the documentation.
  - Parser debugging may have to be revisited as we obtain new ASPEN models.
- Automated abstraction
  - Resource abstraction can help to reduce the search space and thus further optimize the model checking process.
- Schedule
  - The EO 1 extended mission may end this summer, possibly before we complete our tools.

Conclusions

Our goal: Develop a better and more thorough method for testing input models to planners, to reduce the likelihood that an input model produces undesirable plans.

- We have made significant progress in defining and implementing the tools necessary to auto-generate Spin models from ASPEN models.
- These tools will be applied to model check the EO 1 input models as well as other ASPEN models (e.g. 3 CS and future applications).
- Next steps:
  - complete and test our tool suite
  - work with the EO 1 project to develop a suite of EO 1 properties to check
  - perform logic model checking and report results