Prefetching Challenges in Distributed Memories for CMPs
Martí Torrents, Raúl Martínez, and Carlos Molina
Computer Architecture Department, UPC – BarcelonaTech

Outline
• Introduction
• Naming the challenges
• Challenge evaluation methodology
• Experimental framework
• Challenge Quantification
• Facing the Challenges
• Conclusions

Prefetching
• Reduces memory latency
• Brings the data the CPU will need next into a nearer cache
• Increases the hit ratio
• Implemented in most commercial processors
• Erroneous prefetching may cause
  – Cache pollution
  – Resource consumption (queues, bandwidth, etc.)
  – Power consumption

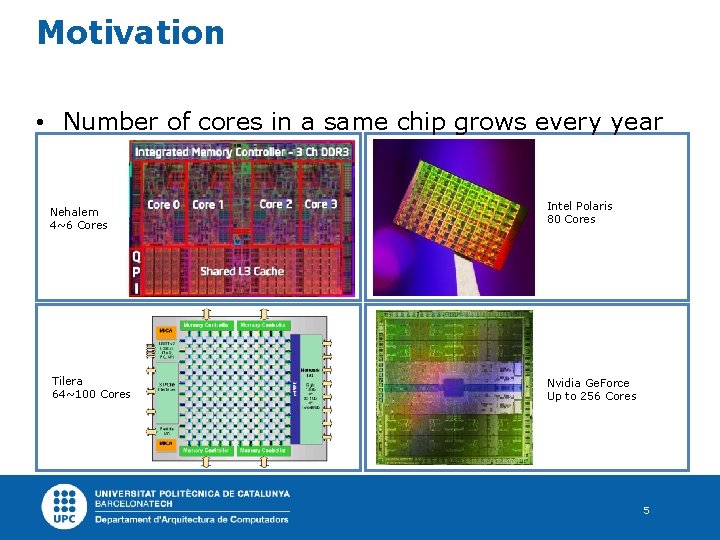

Motivation
• The number of cores on a single chip grows every year
  – Nehalem: 4~6 cores
  – Tilera: 64~100 cores
  – Intel Polaris: 80 cores
  – Nvidia GeForce: up to 256 cores

Prefetch in CMPs
• Useful prefetching implies more performance
  – Avoids network latency
  – Reduces memory access latency
• Useless prefetching implies less performance
  – More power consumption
  – More NoC congestion
  – Interference with other cores' requests

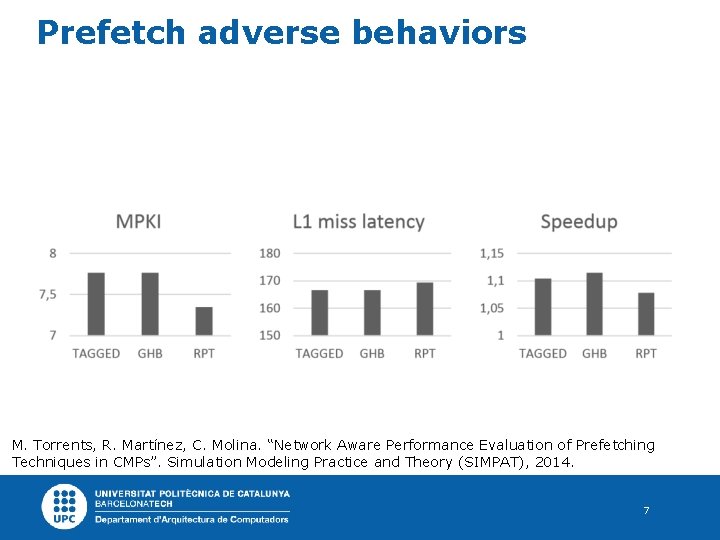

Prefetch adverse behaviors
M. Torrents, R. Martínez, C. Molina. “Network Aware Performance Evaluation of Prefetching Techniques in CMPs”. Simulation Modeling Practice and Theory (SIMPAT), 2014.

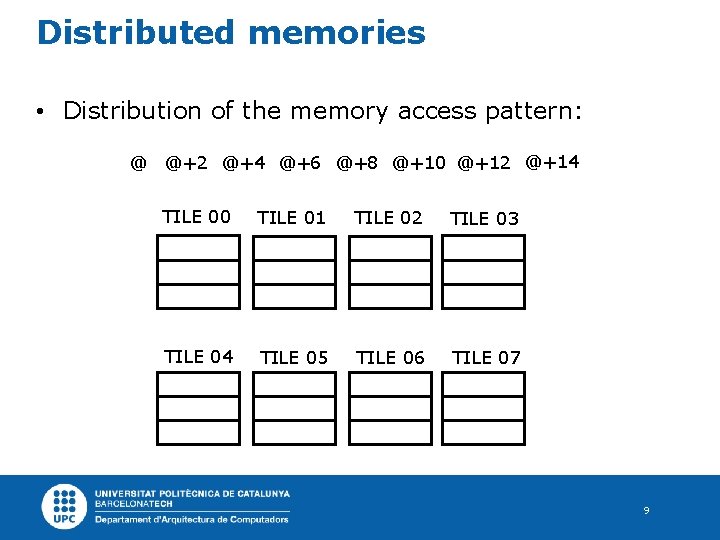

Distributed memories
• Distribution of the memory access pattern: @, @+2, @+4, @+6, @+8, @+10

Distributed memories
• Distribution of the memory access pattern: @, @+2, @+4, @+6, @+8, @+10, @+12, @+14
  – TILE 00: @
  – TILE 01: @+2
  – TILE 02: @+4
  – TILE 03: @+6
  – TILE 04: @+8
  – TILE 05: @+10
  – TILE 06: @+12
  – TILE 07: @+14
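
The distribution on this slide can be sketched in a few lines of Python. This is only an illustration under assumptions that are not in the slides: `NUM_TILES`, `INTERLEAVE`, and `home_tile` are hypothetical names, and the mapping (interleaving granularity of 2 address units over 8 tiles) is chosen so that consecutive accesses of the stream land in consecutive tiles, as in the figure.

```python
# Assumed, illustrative mapping: 8 tiles, interleaving granularity of 2 address units.
NUM_TILES = 8
INTERLEAVE = 2


def home_tile(addr: int) -> int:
    """Return the index of the L2 tile assumed to hold `addr`."""
    return (addr // INTERLEAVE) % NUM_TILES


def split_stream(stream):
    """Group a global access stream by home tile, preserving access order."""
    per_tile = {t: [] for t in range(NUM_TILES)}
    for addr in stream:
        per_tile[home_tile(addr)].append(addr)
    return per_tile


base = 0  # stands in for "@"
stream = [base + off for off in range(0, 16, 2)]  # @, @+2, ..., @+14
per_tile = split_stream(stream)

for tile, addrs in per_tile.items():
    print(f"TILE {tile:02d}: {addrs}")
```

Under this mapping every tile receives exactly one access of the stride-2 stream, so no single tile sees two consecutive elements of the pattern.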

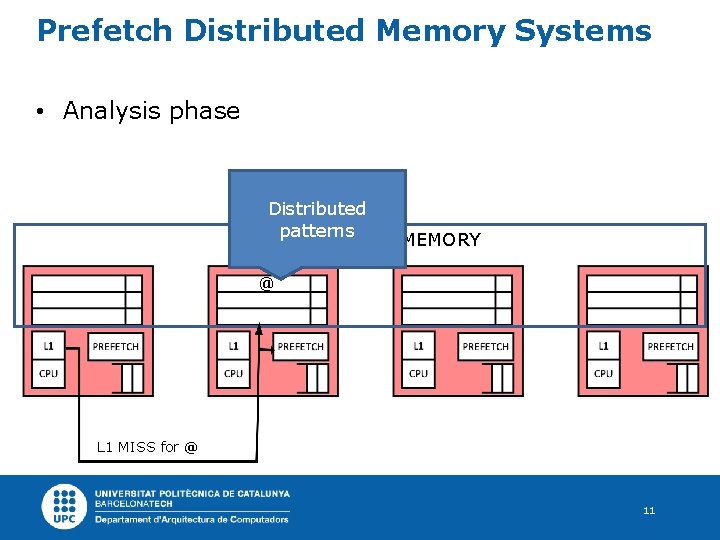

Prefetch Distributed Memory Systems
• Analysis phase: distributed patterns
[Figure: distributed L2 memory; L1 miss for @]

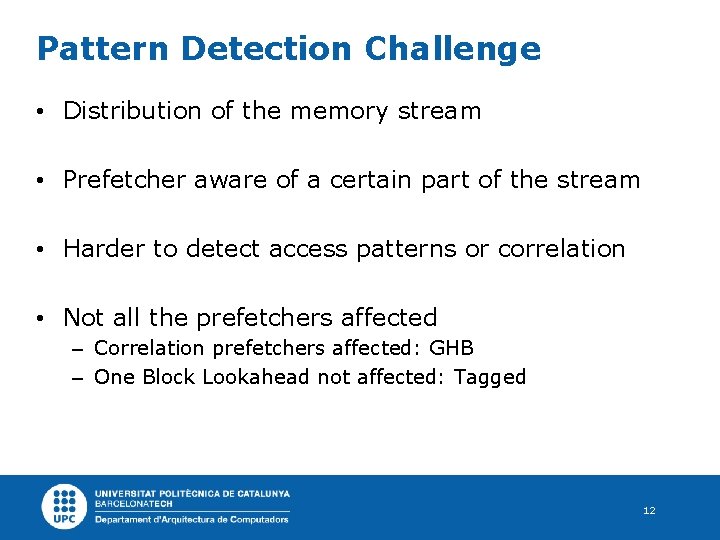

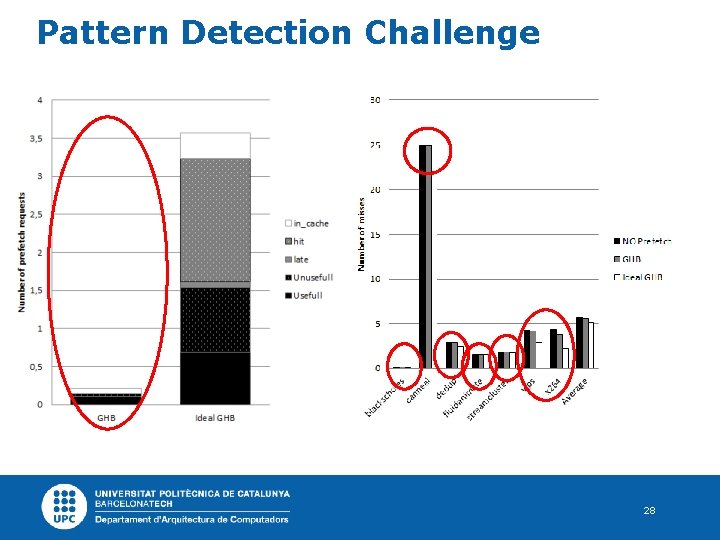

Pattern Detection Challenge
• The memory stream is distributed across tiles
• Each prefetcher is aware of only part of the stream
• Access patterns and correlations are harder to detect
• Not all prefetchers are affected
  – Correlation prefetchers are affected: GHB
  – One Block Lookahead is not affected: Tagged
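
A minimal sketch of why detection gets harder, assuming a toy stride detector in place of a real correlation prefetcher. `detect_stride` is a hypothetical helper and is not GHB; the tile mapping (8 tiles, interleaving granularity 2) is also an assumption made for illustration.

```python
NUM_TILES = 8    # assumed tile count
INTERLEAVE = 2   # assumed interleaving granularity


def detect_stride(addrs, confirmations=2):
    """Return the stride if the last `confirmations` deltas agree, else None.

    Simplified stand-in for the analysis step of a correlation prefetcher.
    """
    if len(addrs) < confirmations + 1:
        return None  # not enough history to confirm any pattern
    deltas = [b - a for a, b in zip(addrs, addrs[1:])]
    recent = deltas[-confirmations:]
    if len(set(recent)) == 1 and recent[0] != 0:
        return recent[0]
    return None


stream = list(range(0, 16, 2))  # @, @+2, ..., @+14 with @ = 0

# A prefetcher that observes the whole stream confirms the stride.
global_view = detect_stride(stream)

# Each tile only observes the accesses that map to it.
tile_history = {}
for addr in stream:
    tile_history.setdefault((addr // INTERLEAVE) % NUM_TILES, []).append(addr)
tile_views = {t: detect_stride(h) for t, h in tile_history.items()}

print("global:", global_view)
print("per tile:", tile_views)
```

In this sketch the global view finds the stride while every per-tile view returns `None`, because each tile holds a single access. A one-block-lookahead scheme needs only the current access, which is consistent with the slide's note that Tagged is not affected.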

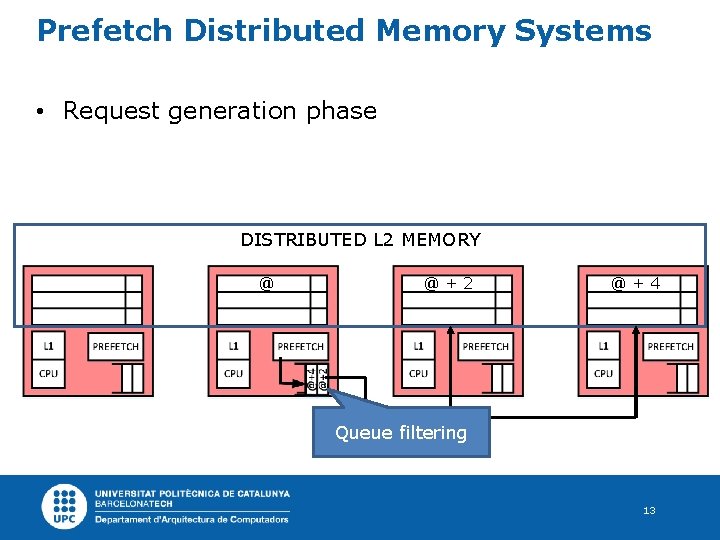

Prefetch Distributed Memory Systems
• Request generation phase: queue filtering
[Figure: distributed L2 memory; @, @+2, @+4]

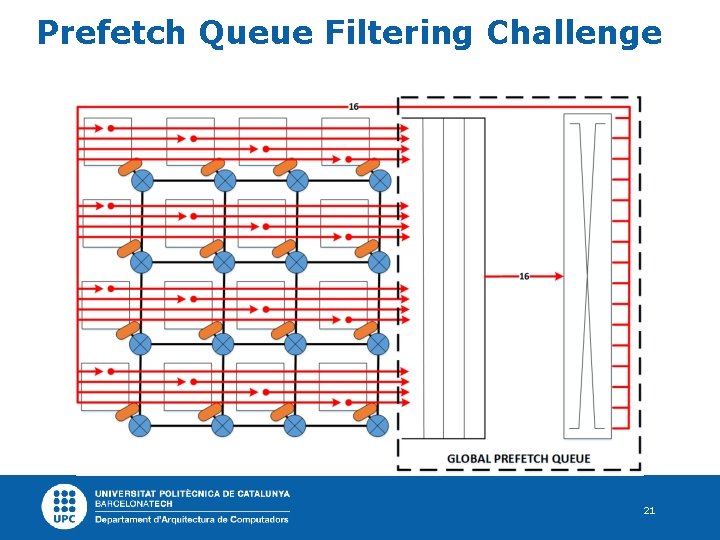

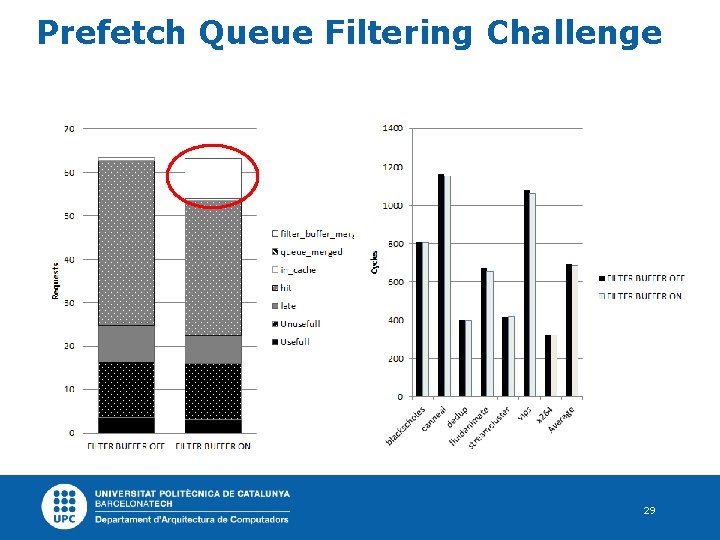

Prefetch Queue Filtering Challenge
• Prefetch requests are queued in distributed queues
• Independent engines generate the requests
• Repeated requests can be queued
• In a centralized queue, those would be merged
• Adverse effects:
  – Power consumption
  – Network contention
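
The difference between distributed and centralized filtering can be sketched as follows. The `PrefetchQueue` class and the request trace are hypothetical, chosen only to show the effect; they do not model the queues used in the evaluation.

```python
from collections import deque


class PrefetchQueue:
    """FIFO prefetch queue that drops a request already pending in this queue."""

    def __init__(self):
        self.pending = deque()
        self.dropped = 0

    def push(self, addr):
        if addr in self.pending:
            self.dropped += 1  # duplicate filtered locally
            return False
        self.pending.append(addr)
        return True


# Hypothetical requests from independent engines: (generating tile, address).
requests = [(0, 0x40), (1, 0x40), (0, 0x80), (2, 0x40), (1, 0x80)]

# Distributed queues: each tile filters only against its own pending requests.
distributed = {}
for tile, addr in requests:
    distributed.setdefault(tile, PrefetchQueue()).push(addr)
issued_distributed = sum(len(q.pending) for q in distributed.values())

# Centralized queue: every request is checked against all pending requests.
central = PrefetchQueue()
for _, addr in requests:
    central.push(addr)
issued_central = len(central.pending)

print("distributed:", issued_distributed, "centralized:", issued_central)
```

With this trace the distributed queues keep all five requests, while the centralized queue keeps two and merges three. The extra requests are the source of the power and network costs listed on the slide.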

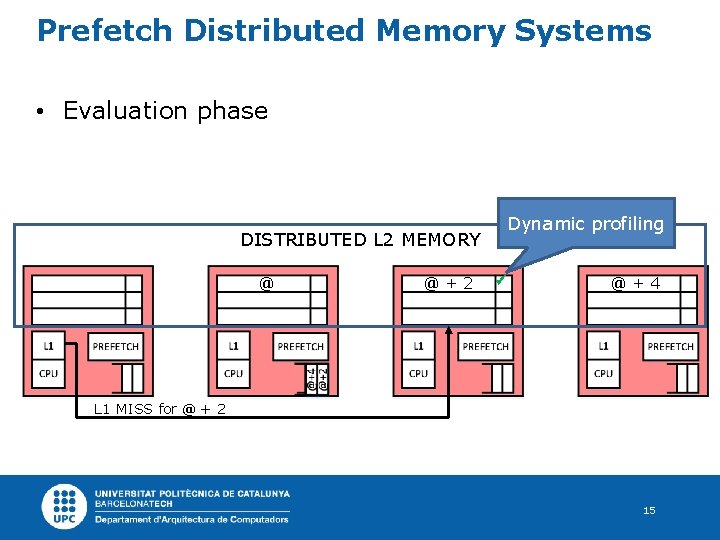

Prefetch Distributed Memory Systems
• Evaluation phase: dynamic profiling
[Figure: distributed L2 memory; @, @+2, @+4; L1 miss for @+2]

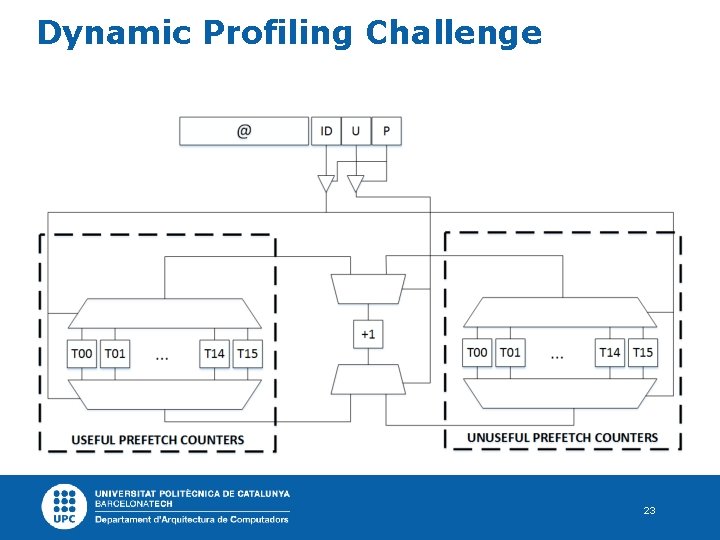

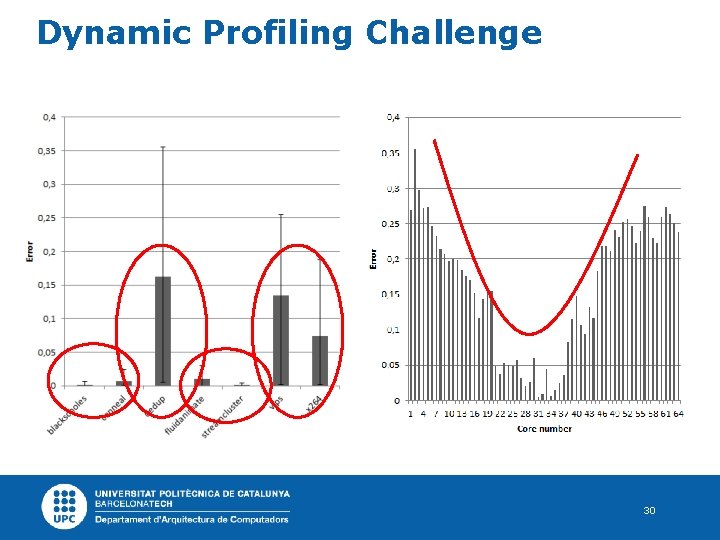

Dynamic Profiling Challenge
• Prefetch requests are generated in one tile
• The dynamic profiling information ends up in another tile
• The generating tile's own profiling is therefore erroneous
• Techniques that use this information may work erroneously
  – Filtering
  – Throttling
  – Concrete prefetching engines
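
The mismatch can be illustrated with a small simulation. Everything here is an assumption made for the sketch: the tile mapping, the prefetch degree of 2, and the counters `issued`, `useful_seen`, and `useful_true` are hypothetical and do not describe any specific profiling technique.

```python
from collections import Counter

NUM_TILES = 8    # assumed tile count
INTERLEAVE = 2   # assumed interleaving granularity

issued = Counter()       # prefetches issued, counted at the issuing tile
useful_seen = Counter()  # useful prefetches, counted at the tile that observes the hit
useful_true = Counter()  # useful prefetches credited to the issuer (oracle view)
prefetched_by = {}       # addr -> issuing tile; no single tile has this knowledge


def home_tile(addr):
    return (addr // INTERLEAVE) % NUM_TILES


def on_l1_miss(addr, degree=2):
    """Process an L1 miss arriving at the home tile of `addr`."""
    tile = home_tile(addr)
    if addr in prefetched_by:
        useful_seen[tile] += 1                      # observed here ...
        useful_true[prefetched_by.pop(addr)] += 1   # ... but earned by the issuer
    for k in range(1, degree + 1):
        target = addr + k * INTERLEAVE
        if target not in prefetched_by:
            prefetched_by[target] = tile
            issued[tile] += 1


for addr in (0, 2, 4, 6):  # @, @+2, @+4, @+6 with @ = 0
    on_l1_miss(addr)

local_accuracy = useful_seen[0] / issued[0]
true_accuracy = useful_true[0] / issued[0]
print("tile 0 local:", local_accuracy, "true:", true_accuracy)
```

In this run tile 0 issues two prefetches that are both used, yet it observes none of the resulting hits, so its local accuracy is 0.0 while the oracle accuracy is 1.0. A throttling or filtering scheme driven by the local counter would wrongly penalize a useful prefetcher.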

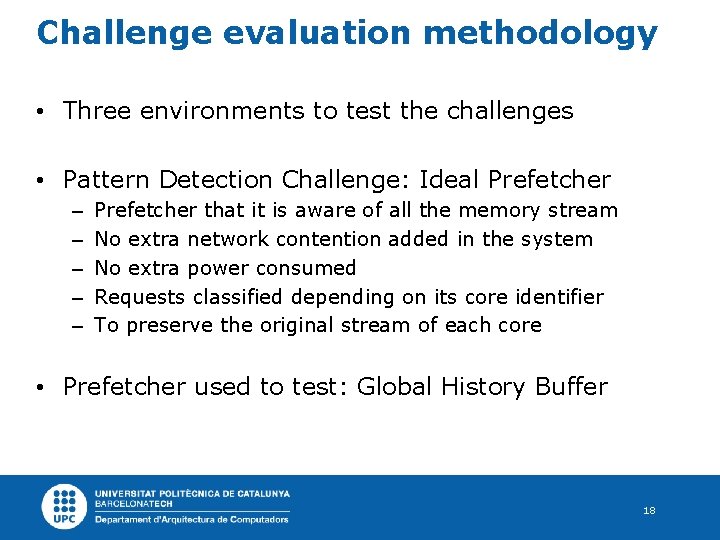

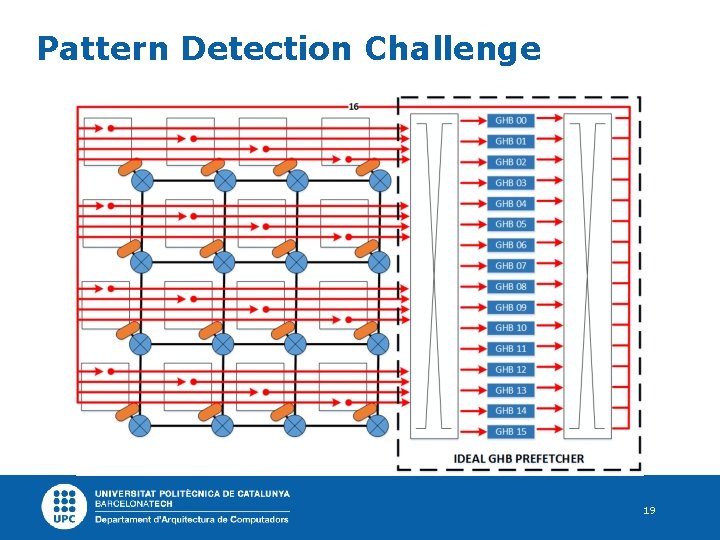

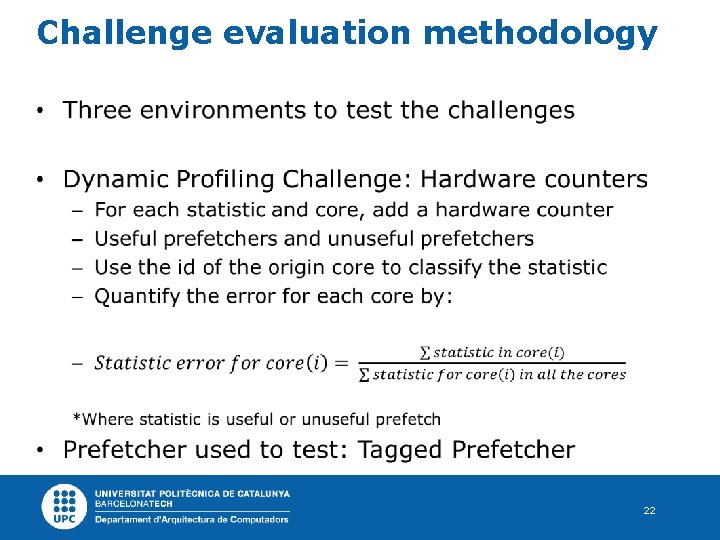

Challenge evaluation methodology
• Three environments to test the challenges
• Pattern Detection Challenge: Ideal Prefetcher
  – Prefetcher that is aware of the whole memory stream
  – No extra network contention added to the system
  – No extra power consumed
  – Requests classified by their core identifier, to preserve the original stream of each core
• Prefetcher used to test: Global History Buffer
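
The classification step of the ideal prefetcher might look like the sketch below. The `(core_id, addr)` trace format and the `per_core_streams` helper are assumptions for illustration, not the simulator's interface.

```python
from collections import defaultdict


def per_core_streams(requests):
    """Split a global request trace into per-core streams, preserving order."""
    streams = defaultdict(list)
    for core_id, addr in requests:
        streams[core_id].append(addr)
    return dict(streams)


# Hypothetical interleaved trace: core 0 strides by 2, core 1 strides by 4.
trace = [(0, 0), (1, 100), (0, 2), (1, 104), (0, 4), (1, 108)]
streams = per_core_streams(trace)

for core_id, addrs in streams.items():
    print(f"core {core_id}: {addrs}")
```

Without this step the interleaved trace would mix the two strides; grouping by core identifier restores each core's original stream before pattern analysis.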

Pattern Detection Challenge

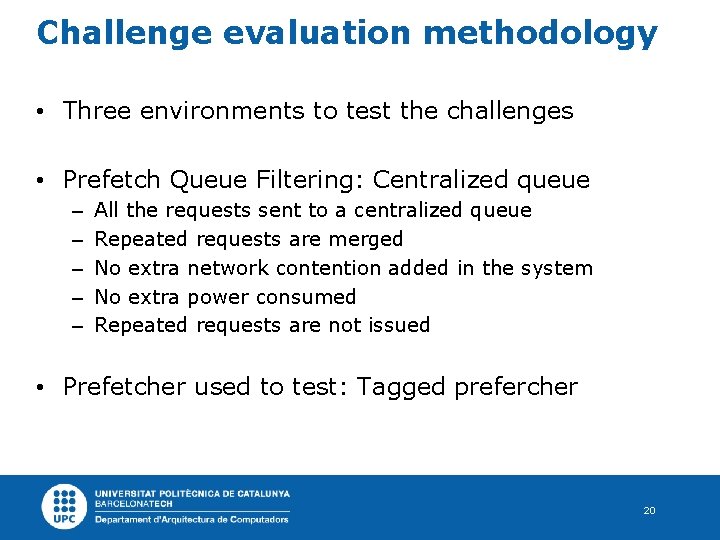

Challenge evaluation methodology
• Three environments to test the challenges
• Prefetch Queue Filtering: Centralized queue
  – All the requests are sent to a centralized queue
  – Repeated requests are merged
  – No extra network contention added to the system
  – No extra power consumed
  – Repeated requests are not issued
• Prefetcher used to test: Tagged prefetcher

Prefetch Queue Filtering Challenge

Challenge evaluation methodology

Dynamic Profiling Challenge

Experimental framework
• gem5
  – 64 x86 CPUs
  – Ruby memory system
  – L2 prefetchers
  – MOESI coherence protocol
  – Garnet network simulator
• PARSEC 2.1

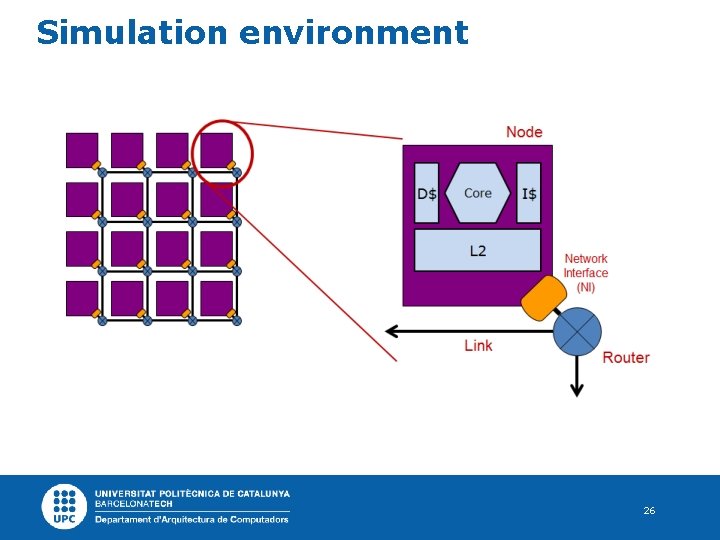

Simulation environment

Pattern Detection Challenge

Prefetch Queue Filtering Challenge

Dynamic Profiling Challenge

Facing the challenges
• There are two main options
  – Redesign the entire prefetch philosophy
  – Adapt the current techniques to work with DSMs
• Moreover, there are two main directions
  – Centralize the information (drawback: increased communication)
  – Distribute the prefetcher (drawback: the prefetcher must be distributed smartly)

Conclusions
• Three challenges when prefetching in DSMs
  – Pattern Detection Challenge
  – Prefetch Queue Filtering Challenge
  – Dynamic Profiling Challenge
• Challenge evaluation methodology
• Directions for future investigators
• There are no evident solutions for them
• Not solving them -> limited prefetch performance

Q&A
