Pre-Execution via Speculative Data-Driven Multithreading, Amir Roth

Pre-Execution via Speculative Data-Driven Multithreading
Amir Roth
University of Wisconsin, Madison
August 10, 2001

Explanation of Title Slide
• pre-execution: a new way of extracting additional instruction-level parallelism (ILP), and hence performance, from ordinary sequential programs
• speculative data-driven multithreading (DDMT): an implementation of pre-execution
• thesis: pre-execution and DDMT are good

Summary of Contributions
• pre-execution (concept)
  – idea: execute to get unpredictable but performance critical values
  – technology: proactive out-of-order sequencing, decoupling
• DDMT (proposed implementation)
  – idea: extend superscalar design, siphon pre-execution bandwidth
  – technology: register integration
• algorithm for selecting what to pre-execute (framework)
  – idea: automatically select from computations executed by program

Why Am I Doing This?
• still need higher performance
  – new apps, better performance on existing apps
• still need higher sequential-program performance
  – many out there, parallel/MT programs composed of sequential code
• need parallelism to complement frequency
  – frequency getting harder, performance returns diminishing
• need ILP to complement [program, thread, bit]LP

Outline
• motivation and introduction to pre-execution
  – problem we are solving and potential gain of solving it (3 slides)
  – pre-execution basics (5 slides)
• pre-execution DDMT style
• automated computation selection
• DDMT microarchitecture
• performance evaluation
• terrace

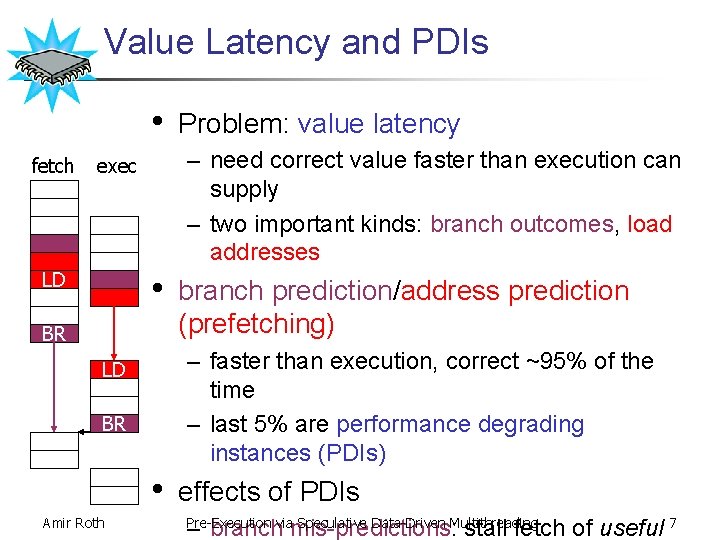

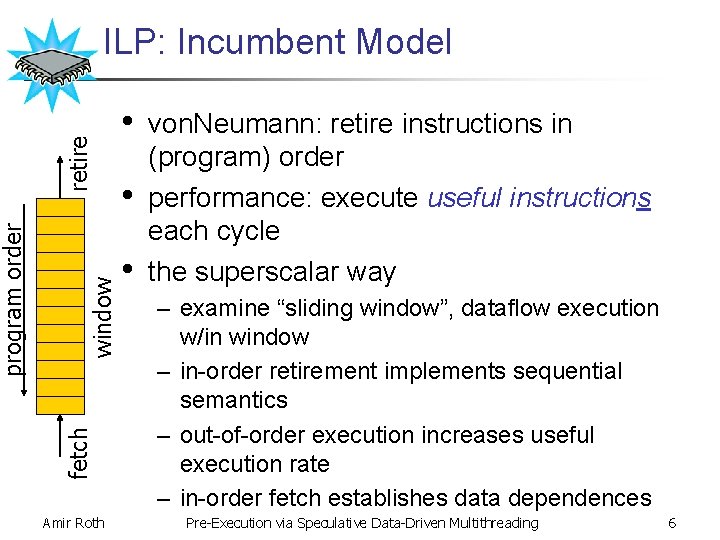

ILP: Incumbent Model
(figure: fetch, window, and retire shown along program order)
• von Neumann: retire instructions in (program) order
• performance: execute useful instructions each cycle
• the superscalar way
  – examine “sliding window”, dataflow execution w/in window
  – in-order retirement implements sequential semantics
  – out-of-order execution increases useful execution rate
  – in-order fetch establishes data dependences

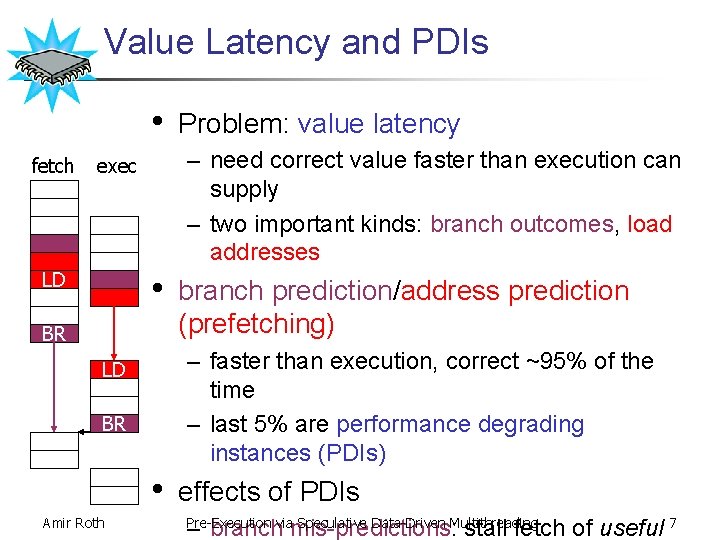

Value Latency and PDIs
(figure: fetch and exec timelines with a load (LD) and a branch (BR))
• problem: value latency
  – need correct value faster than execution can supply
  – two important kinds: branch outcomes, load addresses
• branch prediction/address prediction (prefetching)
  – faster than execution, correct ~95% of the time
  – last 5% are performance degrading instances (PDIs)
• effects of PDIs
  – branch mis-predictions: stall fetch of useful …

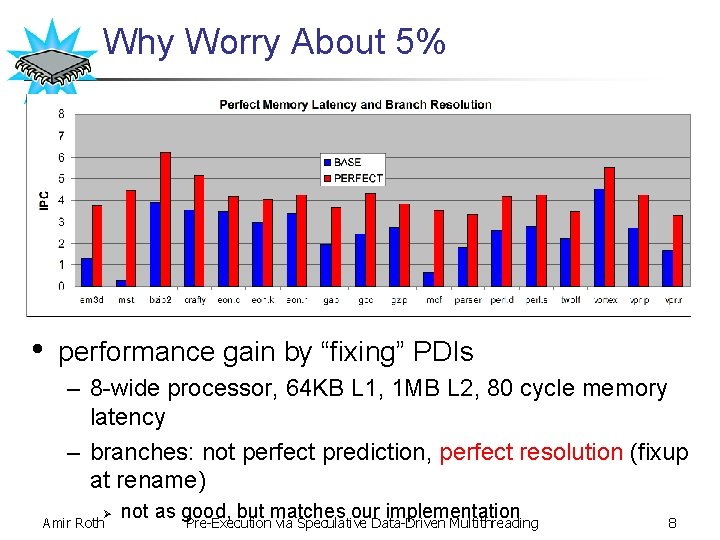

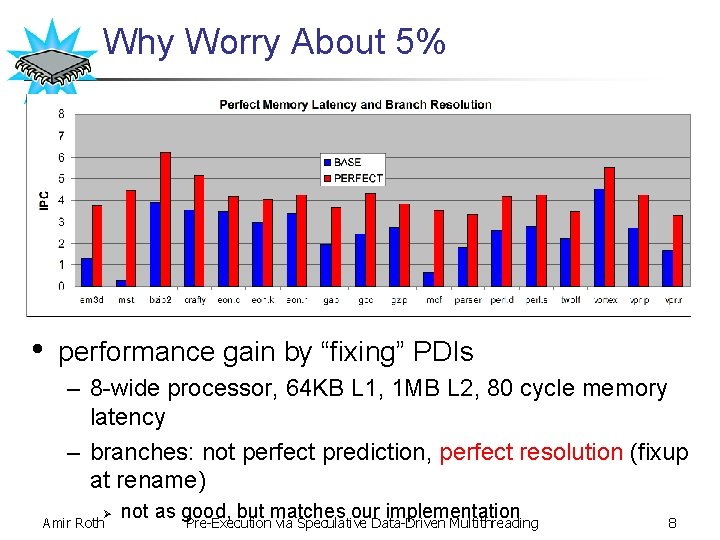

Why Worry About 5%
• performance gain by “fixing” PDIs
  – 8-wide processor, 64 KB L1, 1 MB L2, 80 cycle memory latency
  – branches: not perfect prediction, perfect resolution (fixup at rename)
    ➢ not as good, but matches our implementation

Pre-Execution
• dilemma
  – need PDI values faster than execution
  – can only accurately get PDI values using execution
• pre-execution: execution that is faster than execution
  – part I: (pre-)execute PDIs faster than original program
  – part II: communicate pre-executed PDI values to original program
  – part II: cache for loads, ?? for branches (implementation dependent)
  – part I: the crux
  – important: pre-execute PDI computations, otherwise just …

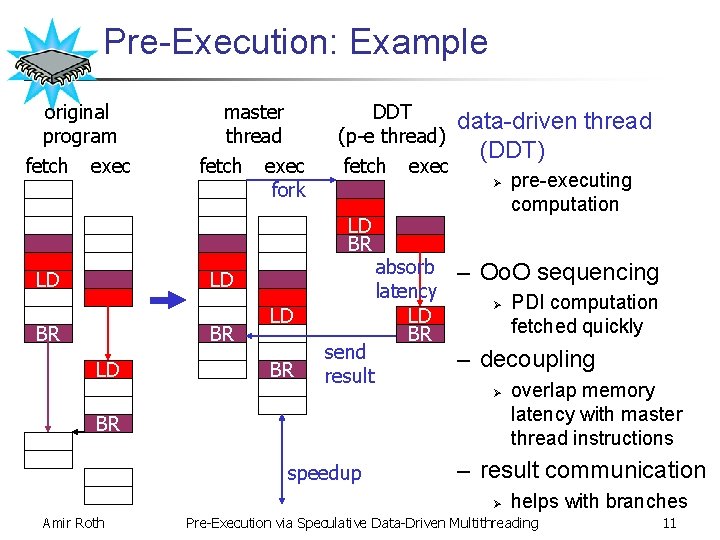

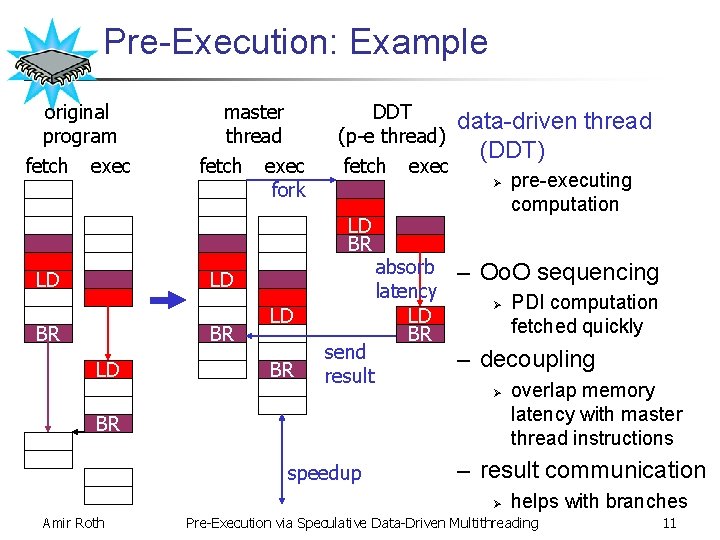

Q: How to Execute Faster than Execution?
• A part I: proactive, out-of-order sequencing (fetch)
  – execute (and fetch) fewer instructions (fetch >> battle/2)
  – out-of-order: PDI computation only (not full program)
  – proactive: “know” PDI is coming and its computation
  – hoist computation (and its latency) arbitrary distances
• A part II: decoupling
  – pre-execute PDI computation in separate “thread”
  – “move” stalls to pre-execution thread (inter-thread overlapping)
• proactive OoO sequencing + decoupling = pre-execution

Pre-Execution: Example
(figure: fetch/exec timelines for the original program and for the master thread plus a DDT (p-e thread); the master thread forks the DDT, the DDT absorbs the load latency and sends its result back, and the branch resolves earlier for a speedup)
• data-driven thread (DDT)
  – OoO sequencing
    ➢ PDI computation fetched quickly
  – decoupling
    ➢ overlap memory latency with master thread instructions
  – result communication
    ➢ pre-executing computation helps with branches

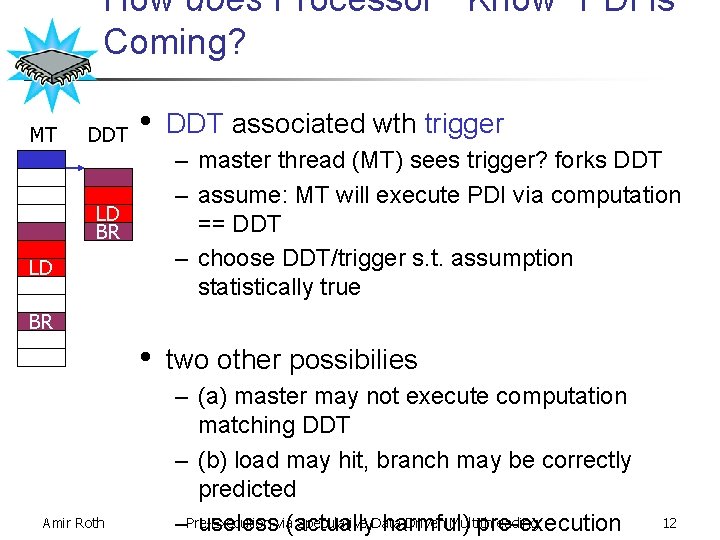

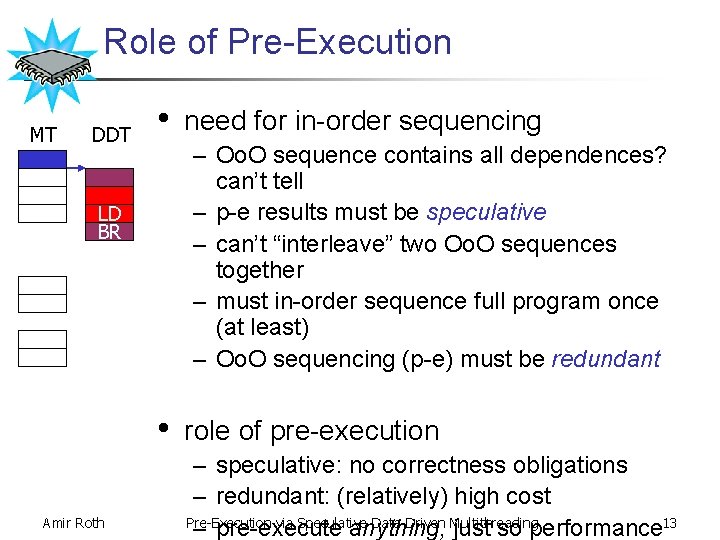

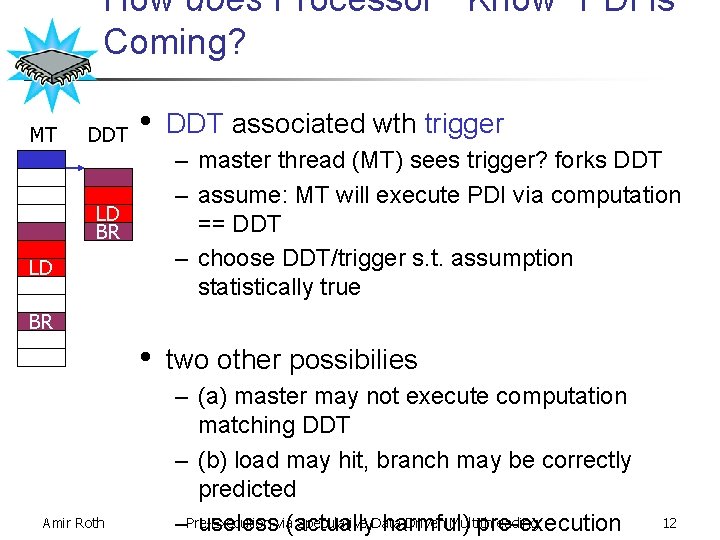

How does Processor “Know” PDI is Coming?
(figure: master thread (MT) and DDT with a load (LD) and a branch (BR))
• DDT associated with trigger
  – master thread (MT) sees trigger → forks DDT
  – assume: MT will execute PDI via computation == DDT
  – choose DDT/trigger s.t. assumption statistically true
• two other possibilities
  – (a) master may not execute computation matching DDT
  – (b) load may hit, branch may be correctly predicted
  – useless (actually harmful) pre-execution

Role of Pre-Execution
• need for in-order sequencing
  – OoO sequence contains all dependences? can’t tell
  – p-e results must be speculative
  – can’t “interleave” two OoO sequences together
  – must in-order sequence full program once (at least)
  – OoO sequencing (p-e) must be redundant
• role of pre-execution
  – speculative: no correctness obligations
  – redundant: (relatively) high cost
  – pre-execute anything, just so performance …

Outline
• intro to pre-execution
• DDMT style
  – DDMT basics (1 slide)
  – intro to register integration (2 slides)
  – implicit data-driven sequencing (2 slides)
• automated computation selection
• DDMT microarchitecture
• experimental evaluation

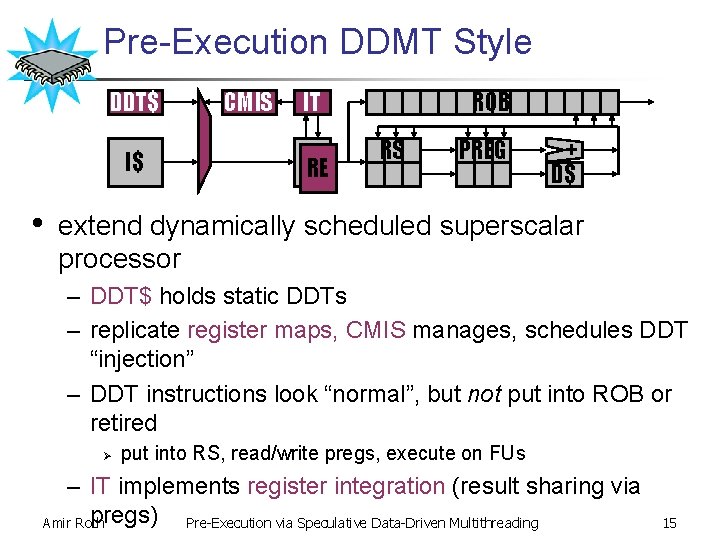

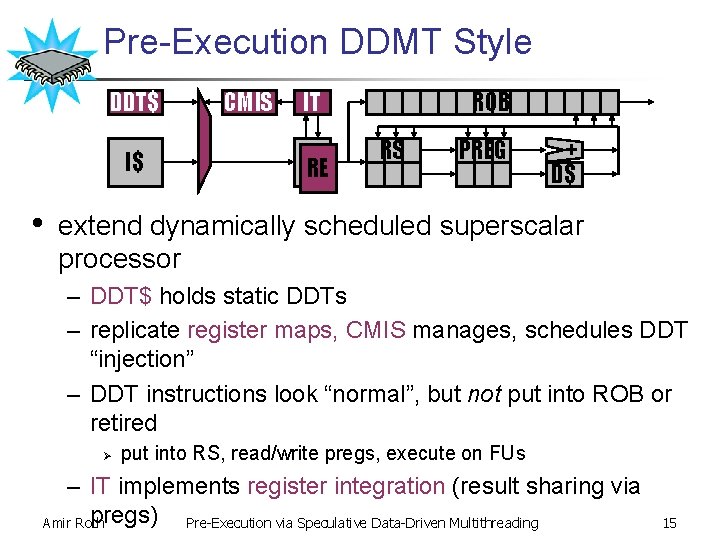

Pre-Execution DDMT Style
(figure: superscalar pipeline with I$, DDT$, CMIS, rename (RE), IT, ROB, RS, PREG, D$)
• extend dynamically scheduled superscalar processor
  – DDT$ holds static DDTs
  – replicate register maps, CMIS manages, schedules DDT “injection”
  – DDT instructions look “normal”, but not put into ROB or retired
    ➢ put into RS, read/write pregs, execute on FUs
  – IT implements register integration (result sharing via pregs)

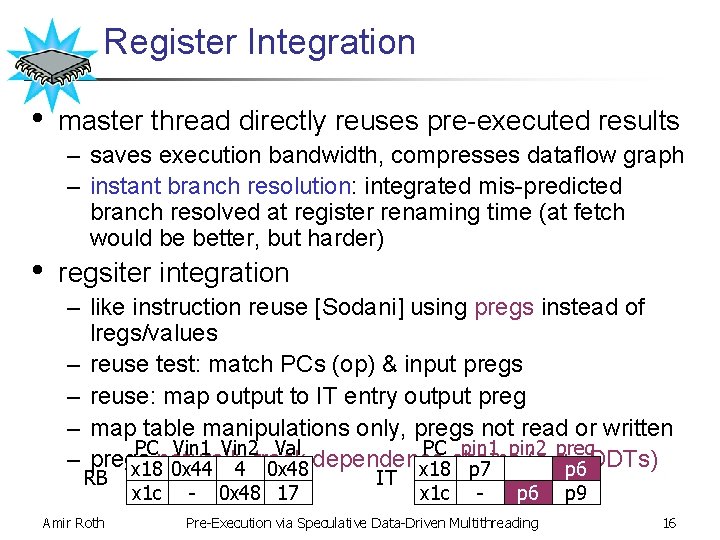

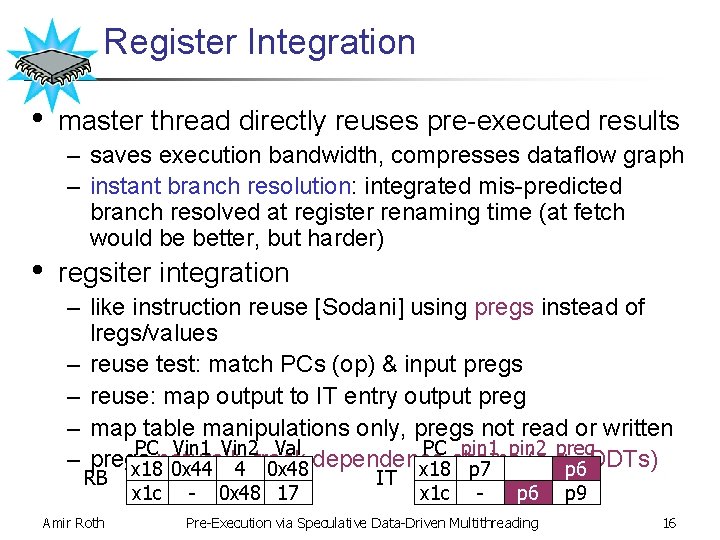

Register Integration
• master thread directly reuses pre-executed results
  – saves execution bandwidth, compresses dataflow graph
  – instant branch resolution: integrated mis-predicted branch resolved at register renaming time (at fetch would be better, but harder)
• register integration
  – like instruction reuse [Sodani] using pregs instead of lregs/values
  – reuse test: match PCs (op) & input pregs
  – reuse: map output to IT entry output preg
  – map table manipulations only, pregs not read or written
  – pregs naturally track dependence chains (e.g., DDTs)
(figure: a reuse buffer (RB) with columns PC, Vin1, Vin2, Val next to an integration table (IT) with columns PC, pin1, pin2, preg, each holding entries for x18 and x1c)

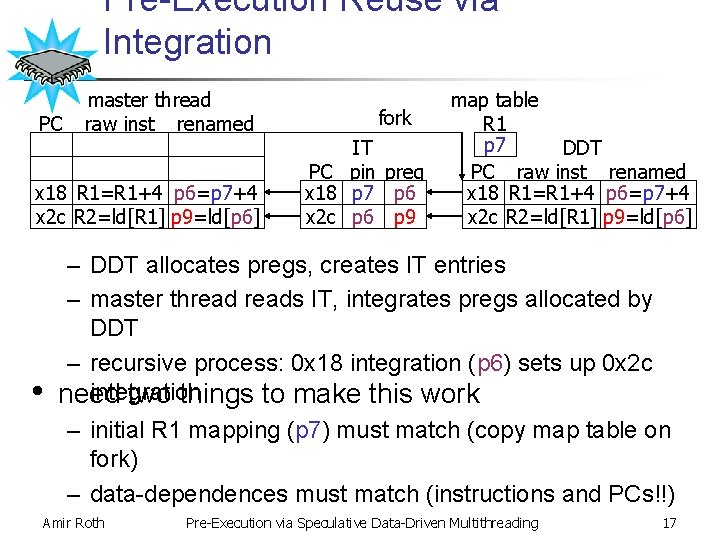

Pre-Execution Reuse via Integration
(figure: the DDT renames x18 R1=R1+4 as p6=p7+4 and x2c R2=ld[R1] as p9=ld[p6], creating IT entries (x18, p7 → p6) and (x2c, p6 → p9); the master thread, whose map table holds R1 → p7 at the fork, later renames the same instructions as p?=p7+4 and p?=ld[p6])
• integration
  – DDT allocates pregs, creates IT entries
  – master thread consults IT, integrates pregs allocated by DDT
  – recursive process: 0x18 integration (p6) sets up 0x2c integration
• need two things to make this work
  – initial R1 mapping (p7) must match (copy map table on fork)
  – data-dependences must match (instructions and PCs!!)
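The integration example above can be mimicked with a toy renamer. This is only a sketch under stated assumptions: the `Renamer` class, its `fork` and `rename` methods, and the shared free list are hypothetical names introduced for illustration, and real hardware would also handle IT associativity, evictions, and squashes, which are ignored here.

```python
from dataclasses import dataclass, field


@dataclass
class Renamer:
    """Toy register renamer with an integration table (hypothetical model)."""
    map_table: dict                          # logical register -> physical register (preg)
    free: list                               # free list of preg names, shared by all threads
    it: dict = field(default_factory=dict)   # (pc, input pregs) -> output preg

    def fork(self):
        # A DDT starts from a copy of the map table; free list and IT are shared.
        return Renamer(dict(self.map_table), self.free, self.it)

    def rename(self, pc, dst, srcs, integrate=True):
        # Reuse test: same PC and same input pregs as an existing IT entry.
        key = (pc, tuple(self.map_table[r] for r in srcs))
        reused = integrate and key in self.it
        if reused:
            preg = self.it[key]              # map output to the entry's preg
        else:
            preg = self.free.pop(0)          # allocate a fresh preg
            self.it[key] = preg              # and record it for later integration
        self.map_table[dst] = preg
        return preg, reused


master = Renamer({"R1": "p7"}, free=["p6", "p9", "p10", "p11"])
ddt = master.fork()
ddt_results = [
    ddt.rename(0x18, "R1", ["R1"], integrate=False),   # p6 = p7 + 4
    ddt.rename(0x2C, "R2", ["R1"], integrate=False),   # p9 = ld[p6]
]
master_results = [
    master.rename(0x18, "R1", ["R1"]),   # integrates p6
    master.rename(0x2C, "R2", ["R1"]),   # integrates p9 because R1 now maps to p6
]
# A thread whose initial R1 mapping differs fails the reuse test.
other = Renamer({"R1": "p8"}, free=master.free, it=master.it)
mismatch = other.rename(0x18, "R1", ["R1"])
```

The second master rename only succeeds because the first one installed p6 as the mapping for R1, which is the recursive behaviour the slide describes.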

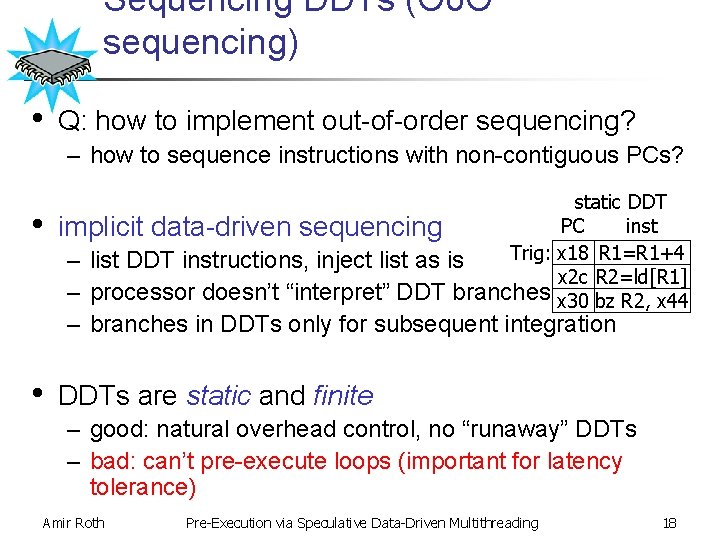

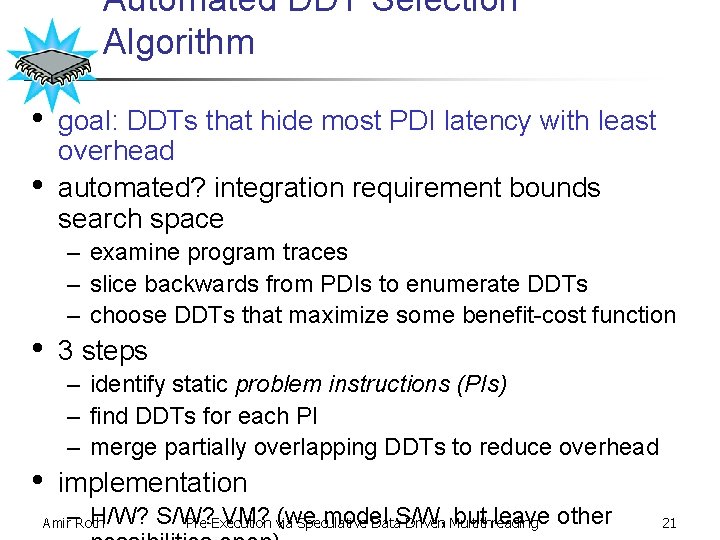

Sequencing DDTs (OoO sequencing)
• Q: how to implement out-of-order sequencing?
  – how to sequence instructions with non-contiguous PCs?
• implicit data-driven sequencing
  – list DDT instructions, inject list as is
  – processor doesn’t “interpret” DDT branches
  – branches in DDTs only for subsequent integration
• DDTs are static and finite
  – good: natural overhead control, no “runaway” DDTs
  – bad: can’t pre-execute loops (important for latency tolerance)
(figure: static DDT, columns PC and inst: Trig: x18 R1=R1+4; x2c R2=ld[R1]; x30 bz R2, x44)
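A minimal sketch of implicit data-driven sequencing, assuming a DDT cache keyed by trigger PC. The `DDT_CACHE` contents loosely follow the slide's example, and the `sequence` function and the master PC stream are hypothetical. The point is that the DDT body is injected exactly as listed, with non-contiguous PCs, and its branch is never followed.

```python
# Hypothetical DDT cache: trigger PC -> static list of (pc, instruction text).
DDT_CACHE = {
    0x18: [(0x2C, "R2=ld[R1]"), (0x30, "bz R2, x44")],
}


def sequence(master_pcs, ddt_cache):
    """Return (thread, pc) events: master in program order, DDT bodies as listed."""
    events = []
    for pc in master_pcs:
        events.append(("MT", pc))
        # Seeing a trigger forks the DDT; its list is injected as is.
        for ddt_pc, _inst in ddt_cache.get(pc, ()):
            events.append(("DDT", ddt_pc))
    return events


events = sequence([0x10, 0x18, 0x1C], DDT_CACHE)
```

Because each DDT is a finite static list, the injected work per trigger is bounded, which is the overhead-control property noted above.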

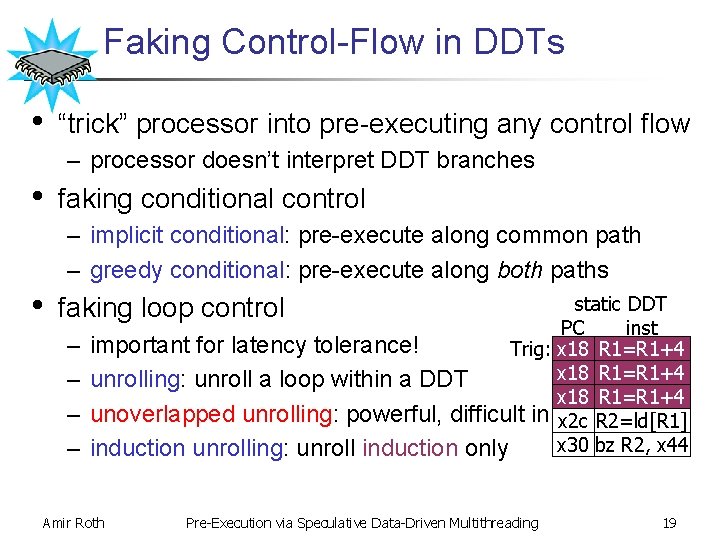

Faking Control-Flow in DDTs
• “trick” processor into pre-executing any control flow
  – processor doesn’t interpret DDT branches
• faking conditional control
  – implicit conditional: pre-execute along common path
  – greedy conditional: pre-execute along both paths
• faking loop control: important for latency tolerance!
  – unrolling: unroll a loop within a DDT
  – unoverlapped unrolling: powerful, difficult in DDMT
  – induction unrolling: unroll induction only
(figure: static DDT, columns PC and inst: Trig: x18 R1=R1+4; x18 R1=R1+4; x2c R2=ld[R1]; x30 bz R2, x44)

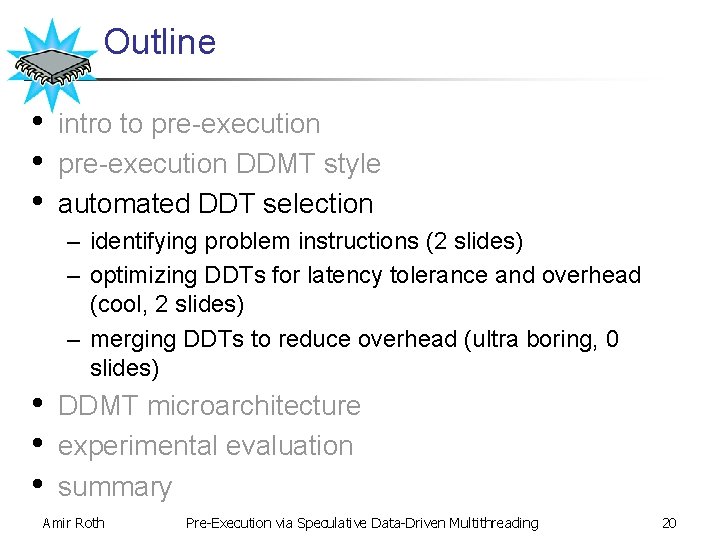

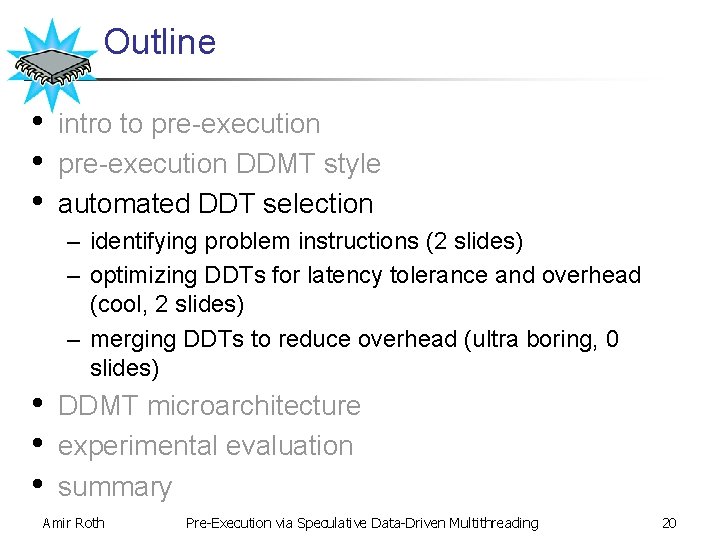

Outline
• intro to pre-execution
• DDMT style
• automated DDT selection
  – identifying problem instructions (2 slides)
  – optimizing DDTs for latency tolerance and overhead (cool, 2 slides)
  – merging DDTs to reduce overhead (ultra boring, 0 slides)
• DDMT microarchitecture
• experimental evaluation
• summary

Automated DDT Selection Algorithm
• goal: DDTs that hide most PDI latency with least overhead
• automated? integration requirement bounds search space
  – examine program traces
  – slice backwards from PDIs to enumerate DDTs
  – choose DDTs that maximize some benefit-cost function
• 3 steps
  – identify static problem instructions (PIs)
  – find DDTs for each PI
  – merge partially overlapping DDTs to reduce overhead
• implementation
  – H/W? S/W? VM? (we model S/W, but leave other …
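The "slice backwards from PDIs" step can be sketched as a register-dependence walk over a trace. This is an illustration under assumptions, not the thesis algorithm: the trace format `(pc, dst, srcs)`, the `backward_slice` name, and the `window` parameter are hypothetical, and memory dependences are ignored.

```python
def backward_slice(trace, pdi_index, window):
    """Indices of trace entries the PDI depends on, within `window` instructions.

    trace: list of (pc, dst, srcs) tuples; dst may be None (e.g. for branches).
    Only register dependences are followed in this sketch.
    """
    needed = set(trace[pdi_index][2])        # registers still waiting for a producer
    picked = [pdi_index]
    first = max(pdi_index - window, 0)
    for i in range(pdi_index - 1, first - 1, -1):
        _pc, dst, srcs = trace[i]
        if dst in needed:
            needed.discard(dst)              # producer found
            needed.update(srcs)              # now its inputs are needed
            picked.append(i)
    return picked[::-1]                      # program order


trace = [
    (0x18, "R1", ["R1"]),    # R1 = R1 + 4
    (0x1C, "R3", ["R4"]),    # unrelated work
    (0x2C, "R2", ["R1"]),    # R2 = ld[R1]
    (0x30, None, ["R2"]),    # bz R2, x44  (the PDI)
]
full_slice = backward_slice(trace, pdi_index=3, window=8)
short_slice = backward_slice(trace, pdi_index=3, window=1)
```

Each suffix of such a slice is one candidate DDT, with the instruction just before the suffix as a possible trigger; a longer suffix hoists the computation further at the cost of more pre-executed instructions.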

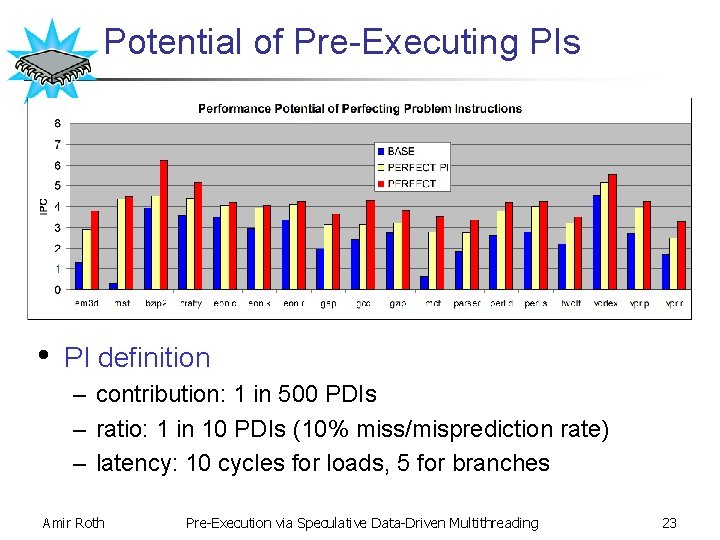

Problem Instructions (PIs)
• impractical to pre-execute all PDIs
  – divide PDIs by static instruction
  – choose static problem instructions (PIs) with good “pre-executability”
  – only find-DDTs-for / pre-execute-PDIs-of PIs
• good pre-executability criteria
  – problem ratio: high ratio of PDIs (high miss/misprediction rate)
  – problem contribution: high PDI representation
  – problem latency: high latency per PDI

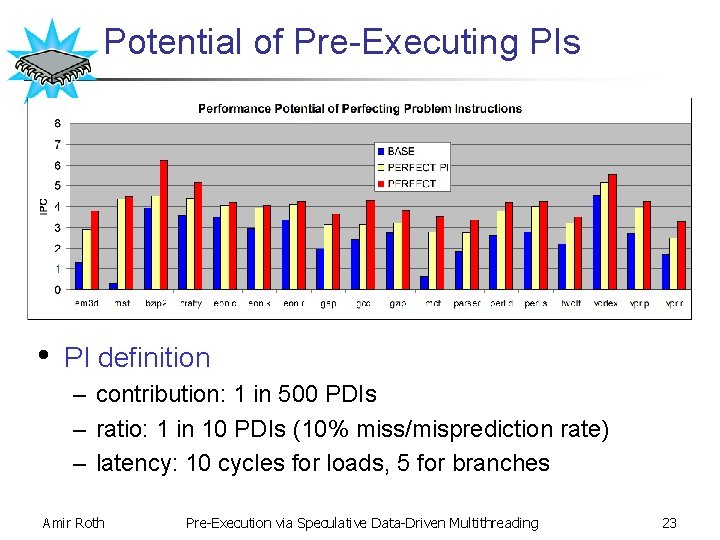

Potential of Pre-Executing PIs
• PI definition
  – contribution: 1 in 500 PDIs
  – ratio: 1 in 10 PDIs (10% miss/misprediction rate)
  – latency: 10 cycles for loads, 5 for branches
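One way to read the PI definition is as three minimum thresholds applied to per-static-instruction profile counts. The sketch below assumes that reading; the `StaticInst` record, its field names, and the `select_pis` function are hypothetical, and the profile numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class StaticInst:
    pc: int
    kind: str                # "load" or "branch"
    executions: int          # dynamic executions in the profile
    pdis: int                # executions that missed or were mispredicted
    avg_pdi_latency: float   # cycles of latency per PDI


def select_pis(insts, min_contribution=1 / 500, min_ratio=1 / 10, min_latency=None):
    """Return PCs of static instructions that meet all three criteria."""
    if min_latency is None:
        min_latency = {"load": 10, "branch": 5}
    total_pdis = sum(i.pdis for i in insts)
    picked = []
    for i in insts:
        if total_pdis == 0 or i.executions == 0:
            continue
        contribution = i.pdis / total_pdis    # share of all PDIs
        ratio = i.pdis / i.executions         # miss/misprediction rate
        if (contribution >= min_contribution and ratio >= min_ratio
                and i.avg_pdi_latency >= min_latency[i.kind]):
            picked.append(i.pc)
    return picked


profile = [
    StaticInst(0x2C, "load", executions=1000, pdis=300, avg_pdi_latency=70.0),
    StaticInst(0x30, "branch", executions=1000, pdis=50, avg_pdi_latency=12.0),  # ratio too low
    StaticInst(0x40, "load", executions=10, pdis=5, avg_pdi_latency=4.0),        # latency too low
]
pis = select_pis(profile)
```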

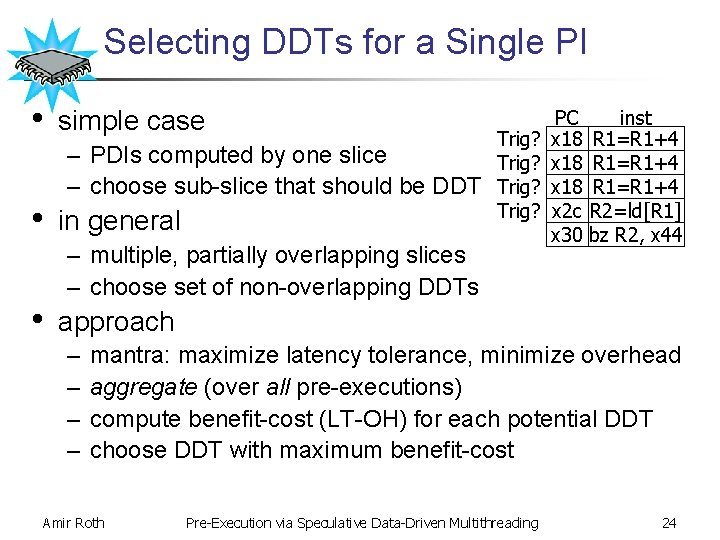

Selecting DDTs for a Single PI
• simple case
  – PDIs computed by one slice
  – choose sub-slice that should be DDT
• in general
  – multiple, partially overlapping slices
  – choose set of non-overlapping DDTs
• approach
  – mantra: maximize latency tolerance, minimize overhead
  – aggregate (over all pre-executions)
  – compute benefit-cost (LT-OH) for each potential DDT
  – choose DDT with maximum benefit-cost
(figure: candidate slice with an undetermined trigger “Trig?”, columns PC and inst: x18 R1=R1+4; x2c R2=ld[R1]; x30 bz R2, x44)

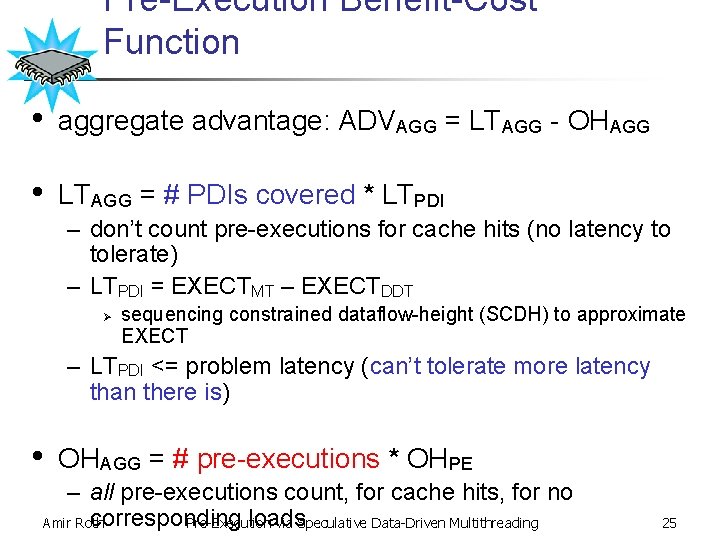

Pre-Execution Benefit-Cost Function
• aggregate advantage: $ADV_{AGG} = LT_{AGG} - OH_{AGG}$
• $LT_{AGG} = \#\,\text{PDIs covered} \times LT_{PDI}$
  – don’t count pre-executions for cache hits (no latency to tolerate)
  – $LT_{PDI} = EXECT_{MT} - EXECT_{DDT}$
    ➢ sequencing constrained dataflow-height (SCDH) to approximate EXECT
  – $LT_{PDI} \le$ problem latency (can’t tolerate more latency than there is)
• $OH_{AGG} = \#\,\text{pre-executions} \times OH_{PE}$
  – all pre-executions count, for cache hits, for no corresponding loads
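The benefit-cost function above translates directly into a few lines of arithmetic. In this sketch the function name, the parameter names, and the two candidate DDTs with their numbers are hypothetical; only the formulas come from the slide.

```python
def aggregate_advantage(pdis_covered, exec_mt, exec_ddt, problem_latency,
                        pre_executions, oh_pe):
    """ADV_AGG = LT_AGG - OH_AGG, following the slide's definitions."""
    lt_pdi = min(exec_mt - exec_ddt, problem_latency)   # capped by the problem latency
    lt_agg = pdis_covered * lt_pdi                       # only covered PDIs count as benefit
    oh_agg = pre_executions * oh_pe                      # every pre-execution costs overhead
    return lt_agg - oh_agg


# Two made-up candidate DDTs for the same problem instruction.
candidates = {
    "short": dict(pdis_covered=90, exec_mt=13, exec_ddt=5, problem_latency=70,
                  pre_executions=100, oh_pe=3),
    "long": dict(pdis_covered=60, exec_mt=93, exec_ddt=8, problem_latency=70,
                 pre_executions=100, oh_pe=8),
}
scores = {name: aggregate_advantage(**c) for name, c in candidates.items()}
best = max(scores, key=scores.get)
```

With these numbers the longer DDT covers fewer PDIs and costs more per pre-execution, but it still scores higher because it tolerates the full problem latency for each PDI it covers.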

Outline
• intro to pre-execution
• DDMT style
• automated DDT selection
• DDMT microarchitecture
  – implementing register integration (1 slide)
  – other implementation notes (1 slide)
• experimental evaluation
• summary

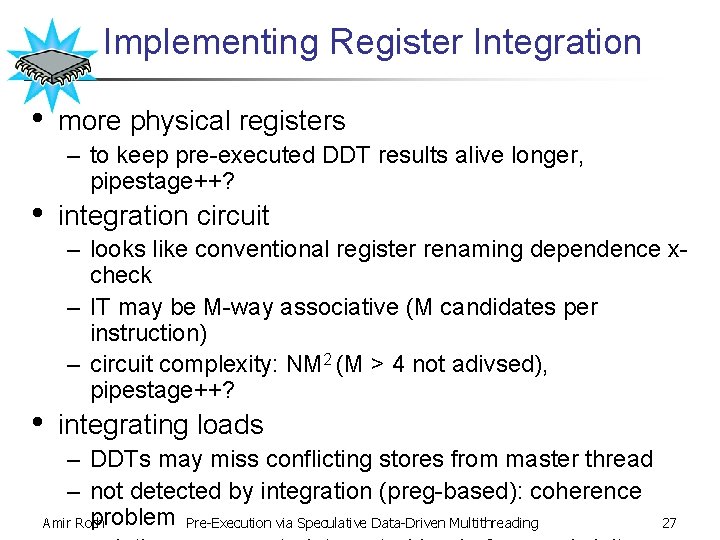

Implementing Register Integration
• more physical registers
  – to keep pre-executed DDT results alive longer, pipestage++?
• integration circuit
  – looks like conventional register renaming dependence x-check
  – IT may be M-way associative (M candidates per instruction)
  – circuit complexity: NM² (M > 4 not advised), pipestage++?
• integrating loads
  – DDTs may miss conflicting stores from master thread
  – not detected by integration (preg-based): coherence problem

Other Implementation Notes
• forking
  – only master thread can fork, no “chaining”
• injection scheduling
  – Q: how fast should DDTs be “injected”?
  – A: at dataflow speed, but no faster (approximate with DDT-1)
• stores and DDT memory communication
  – DDTs can contain stores, can’t write DDT stores into D$
  – small queue (DDSQ): write DDT stores, direct “right” DDT loads
• exceptions
  – buffer until instruction integrated (potentially abort rest of …

Outline
• intro to pre-execution
• DDMT style
• automated DDT selection
• DDMT microarchitecture
• experimental evaluation
  – numbers (3 slides)
  – more numbers (6 slides)
  – explanations of numbers (2 slides)

Experimental Framework
• SPECint2K, 2 Olden microbenchmarks, Alpha EV6, –O3 –fast
  – training runs, 10% sampling
• SimpleScalar-based simulation environment
  – 8-wide, superscalar, out-of-order
  – 128 ROB, 64 LDQ, 32 STQ, 80 RS
  – pipe: 3 fetch, 2 rename/integrate, 2 schedule, 2 reg read, 3 L1 hit
  – 32 KB IL1/64 KB DL1 (2-way), 1 MB L2$ (4-way), mem b/w: 8 b/cyc.
  – 1024 pregs, 1024-entry, 4-way IT (baseline does squash reuse)

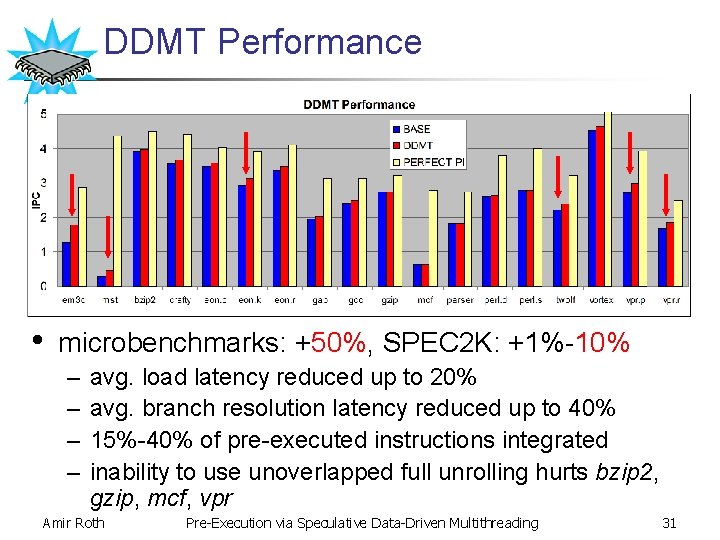

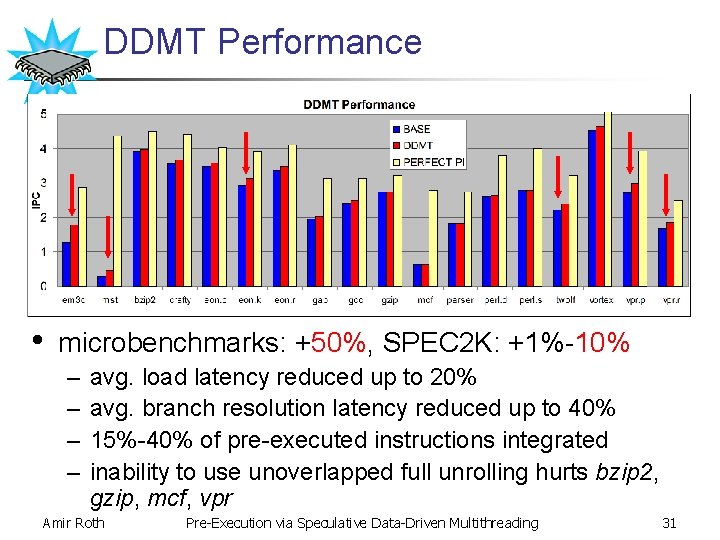

DDMT Performance
• microbenchmarks: +50%, SPEC2K: +1%-10%
  – avg. load latency reduced up to 20%
  – avg. branch resolution latency reduced up to 40%
  – 15%-40% of pre-executed instructions integrated
  – inability to use unoverlapped full unrolling hurts bzip2, gzip, mcf, vpr

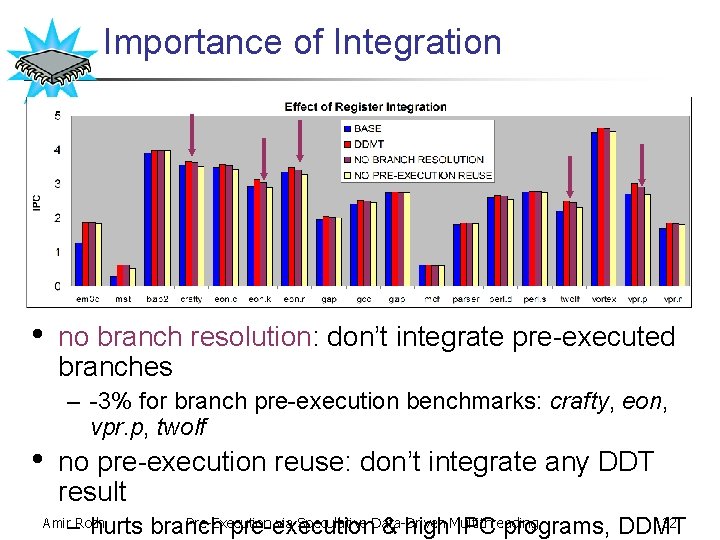

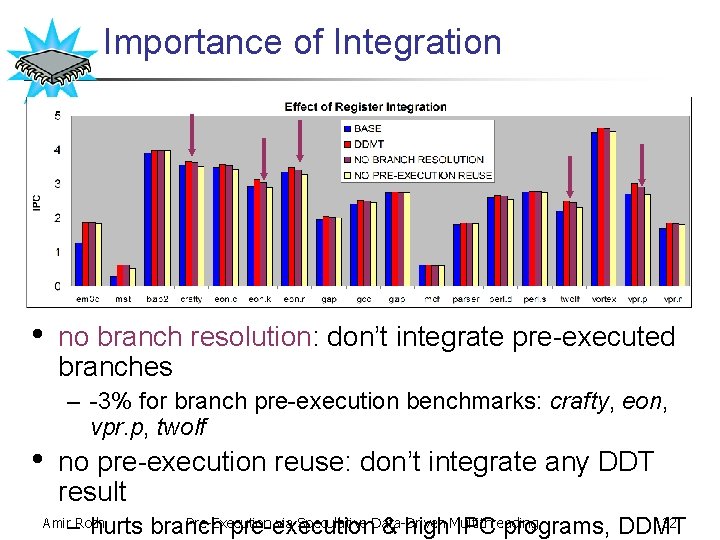

Importance of Integration
• no branch resolution: don’t integrate pre-executed branches
  – -3% for branch pre-execution benchmarks: crafty, eon, vpr.p, twolf
• no pre-execution reuse: don’t integrate any DDT result
  – hurts branch pre-execution & high IPC programs, DDMT …

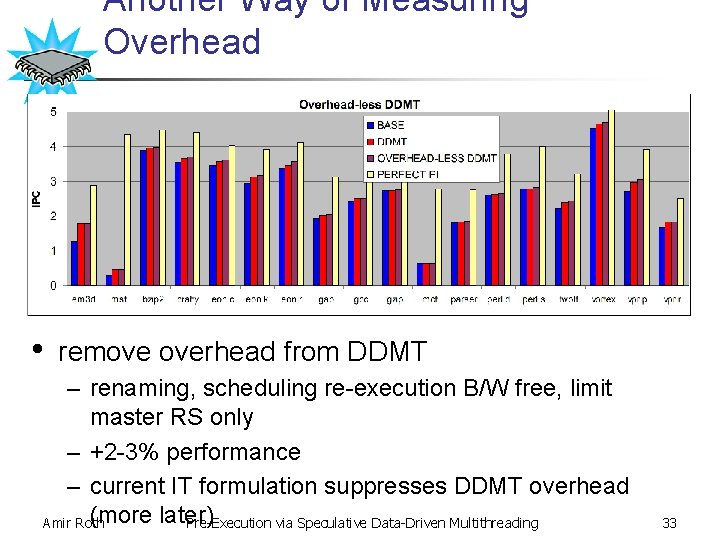

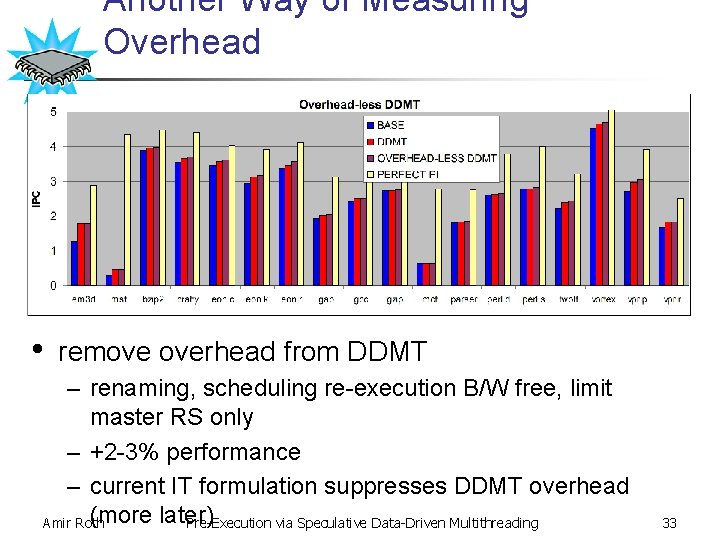

Another Way of Measuring Overhead
• remove overhead from DDMT
  – renaming, scheduling re-execution B/W free, limit master RS only
  – +2-3% performance
  – current IT formulation suppresses DDMT overhead (more later)

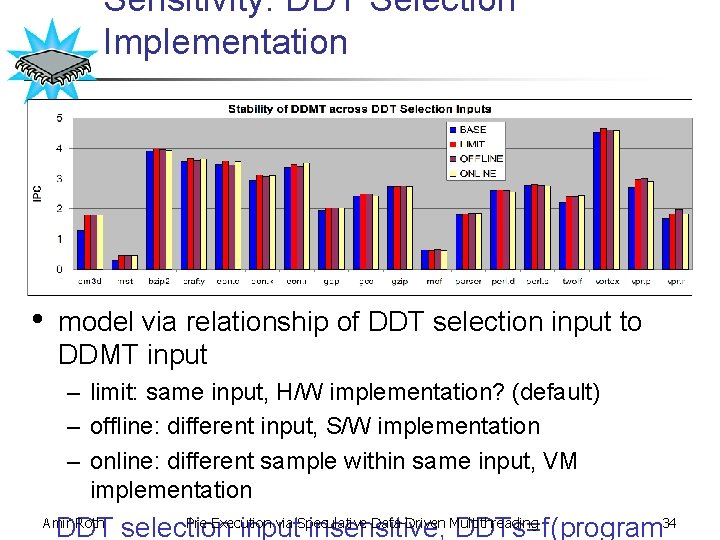

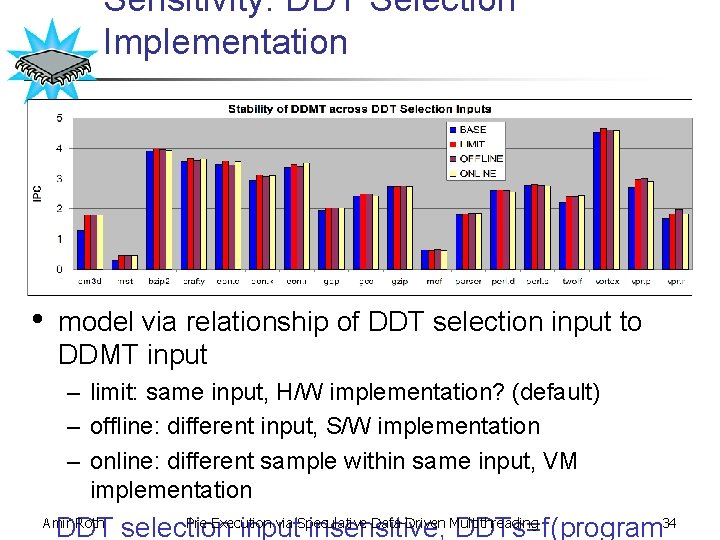

Sensitivity: DDT Selection Implementation
• model via relationship of DDT selection input to DDMT input
  – limit: same input, H/W implementation? (default)
  – offline: different input, S/W implementation
  – online: different sample within same input, VM implementation
• DDT selection input insensitive, DDTs=f(program …

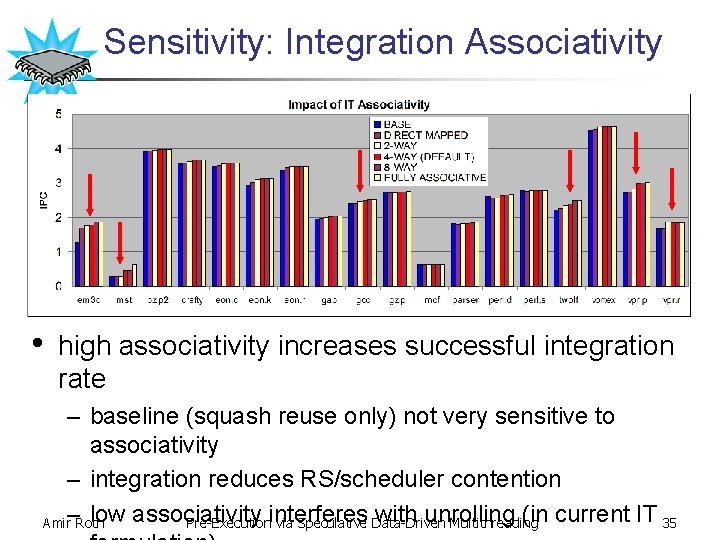

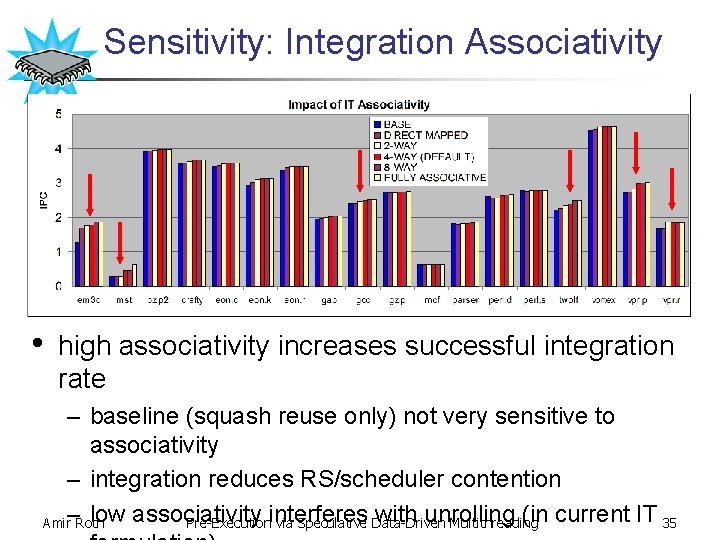

Sensitivity: Integration Associativity
• high associativity increases successful integration rate
  – baseline (squash reuse only) not very sensitive to associativity
  – integration reduces RS/scheduler contention
  – low associativity interferes with unrolling (in current IT …

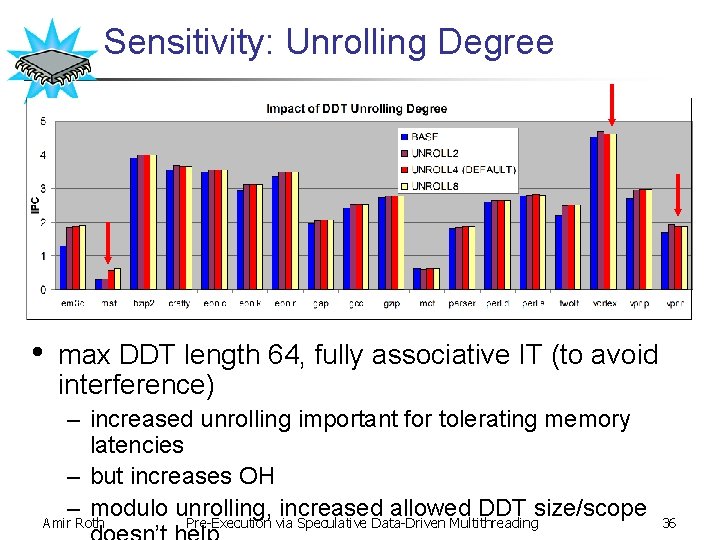

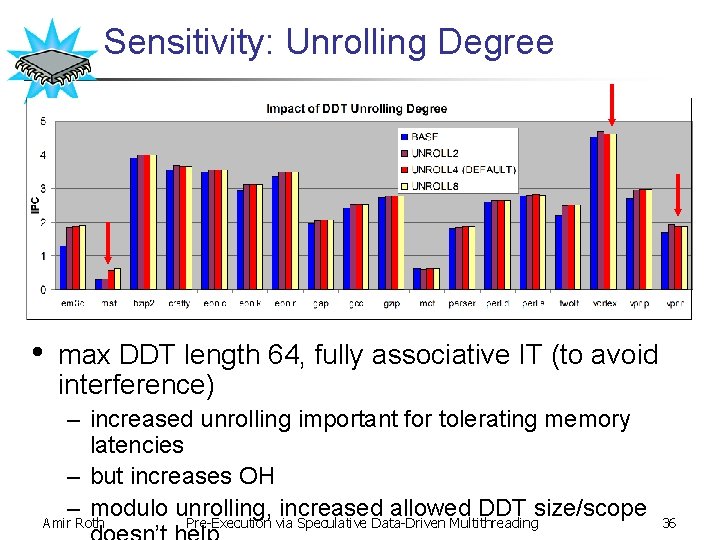

Sensitivity: Unrolling Degree
• max DDT length 64, fully associative IT (to avoid interference)
  – increased unrolling important for tolerating memory latencies
  – but increases OH
  – modulo unrolling, increased allowed DDT size/scope

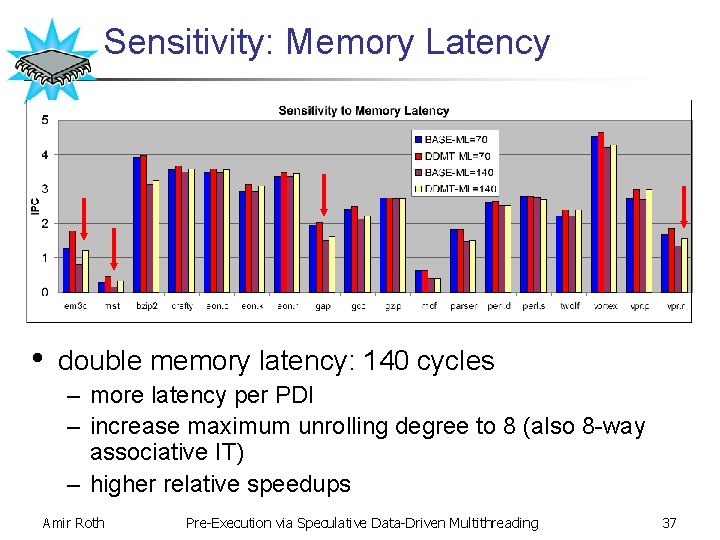

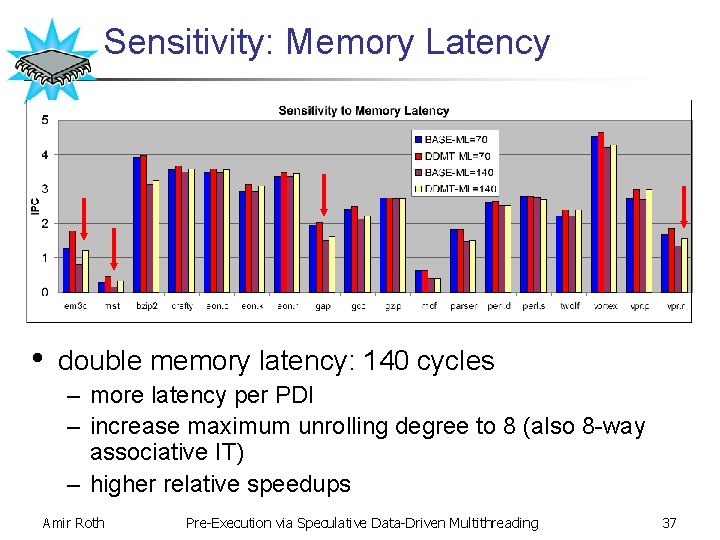

Sensitivity: Memory Latency
• double memory latency: 140 cycles
  – more latency per PDI
  – increase maximum unrolling degree to 8 (also 8-way associative IT)
  – higher relative speedups

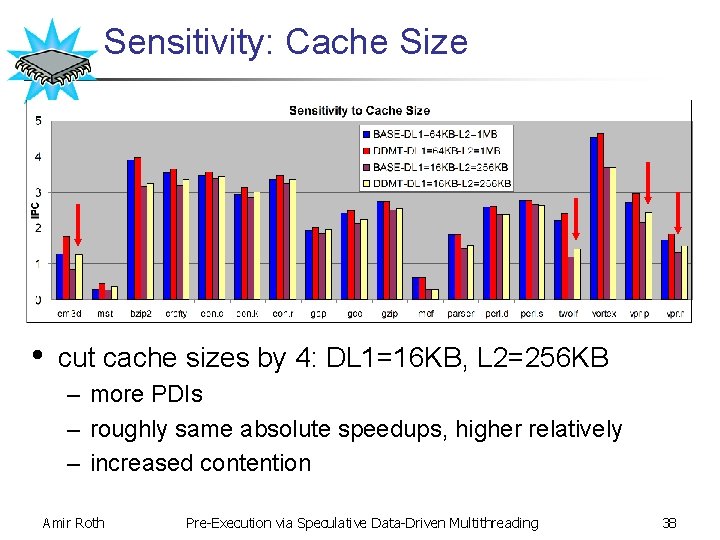

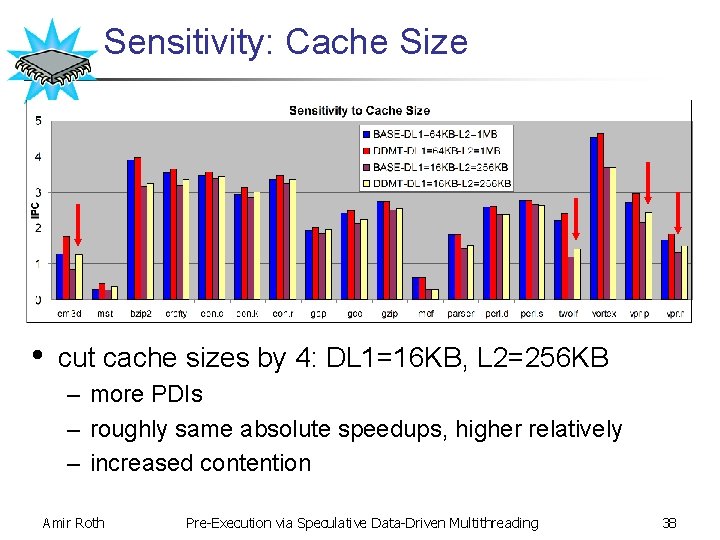

Sensitivity: Cache Size
• cut cache sizes by 4: DL1=16 KB, L2=256 KB
  – more PDIs
  – roughly same absolute speedups, higher relatively
  – increased contention

IT Formulation?
• old: IT doubles as ledger for pregs allocated by DDTs
  – if evicted from IT, preg is freed, downstream DDT destroyed
  – keeps overhead (RS contention) down
  – only need to re-execute integrated loads
  – restricts effective unrolling degree to IT associativity
  – requires incremental invalidations on IT (associative matches)
• new: decouple pre-execution from presence in IT
  – re-execute all integrated instructions
  – no incremental invalidations

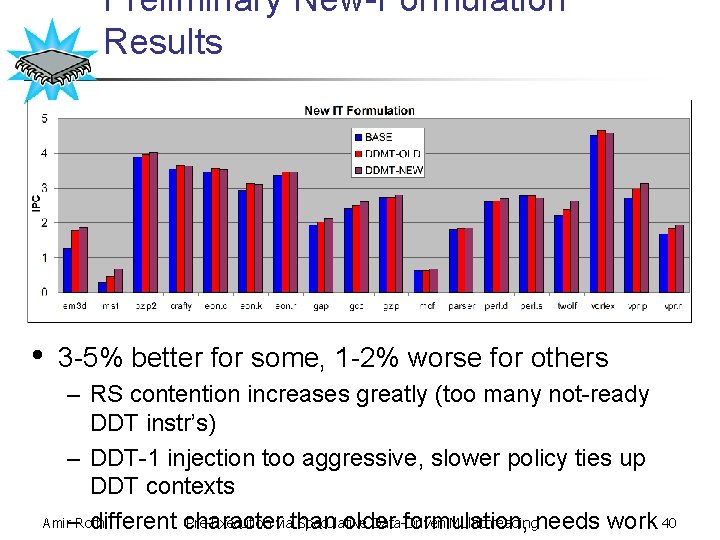

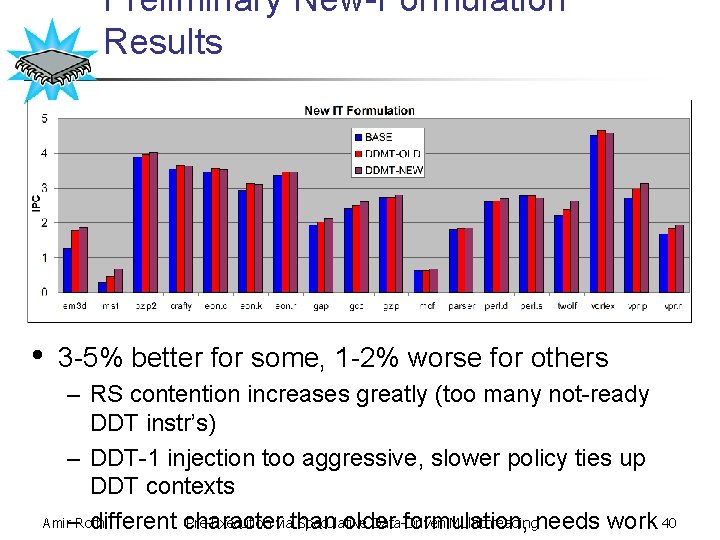

Preliminary New-Formulation Results
• 3-5% better for some, 1-2% worse for others
  – RS contention increases greatly (too many not-ready DDT instr’s)
  – DDT-1 injection too aggressive, slower policy ties up DDT contexts
  – different character than older formulation, needs work

Evaluation Summary
• performance
  – does well on microbenchmarks (like it’s supposed to)
  – modest to moderate gains on SPEC2K + aggressive baseline
  – relatively better the more there is to do (PDIs or latency per PDI)
• limitations (future work?)
  – pre-execution/branch predictor interface would be nice
    ➢ others and I have looked at this
  – difficulties with unoverlapped unrolling
    ➢ external unrolling mechanism, static selection framework
  – need more RS entries
    ➢ clustered RS queues should be good, DDTs are dependence …

The End
• pre-execution: more ILP from sequential programs
  – attacks performance problems directly
  – key technologies: proactive out-of-order sequencing + decoupling
• DDMT: a superscalar-friendly implementation
  – no dedicated pre-execution bandwidth
  – register integration for pre-execution reuse
• automated DDT selection
  – stable, has the right knobs

Outline
• motivation and introduction to pre-execution
• DDMT style
• automated computation selection
• DDMT microarchitecture
• performance evaluation
• terrace

Tuning DDT Selection
• can’t change DDT structure, can control length
  – longer DDTs tolerate more latency, more overhead, fewer PDIs
• from below
  – minimal latency tolerance
• from above
  – maximum length, slicing window size, unrolling degree
• upshot: maximum $ADV_{AGG}$ DDT has characteristic length
  – loosening controls doesn’t make much difference

![Related Work Architectures dataflow architectures Ø Dennis 75 Manchester Gurd85 TTDA Arvind90 ETS Related Work: Architectures • dataflow architectures Ø [Dennis 75], Manchester [Gurd+85], TTDA [Arvind+90], ETS](https://slidetodoc.com/presentation_image_h2/505d5bc508c24e66fd50c577da465dbb/image-45.jpg)

Related Work: Architectures
• dataflow architectures
  ➢ [Dennis 75], Manchester [Gurd+85], TTDA [Arvind+90], ETS [Culler+90]
  – decoupling, data-driven fetch to the limit, but no sequential interface
  – pre-execution: sequential interface with speculative dataflow helper
  ➢ [UW-CSTR-#1411]
• decoupled access/execute architecture
  ➢ [Smith 82]
  – decoupling, single execution, proactive ooo?
  – pre-execution: speculative decoupled miss/execute micro-architecture

![Related Work Microarchitectures decoupled runahead Ø slipstream Rotenberg00 decoupled not proactive outoforder Related Work: Microarchitectures • decoupled runahead Ø slipstream [Rotenberg+00] – decoupled, not proactive out-of-order](https://slidetodoc.com/presentation_image_h2/505d5bc508c24e66fd50c577da465dbb/image-46.jpg)

Related Work: Microarchitectures
• decoupled runahead
  ➢ slipstream [Rotenberg+00]
  – decoupled, not proactive out-of-order
• speculative thread-level parallelism
  ➢ Multiscalar [Franklin+93], SPSM [Dubey+95], DMT [Akkary+98]
  – decoupled, not proactive out-of-order

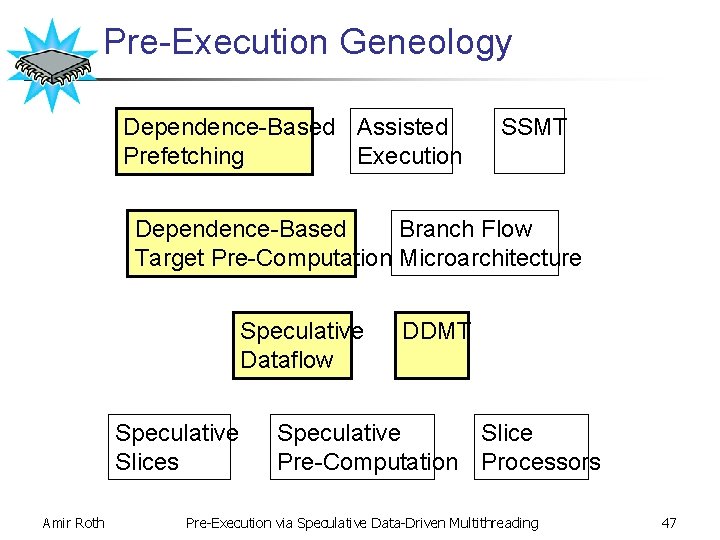

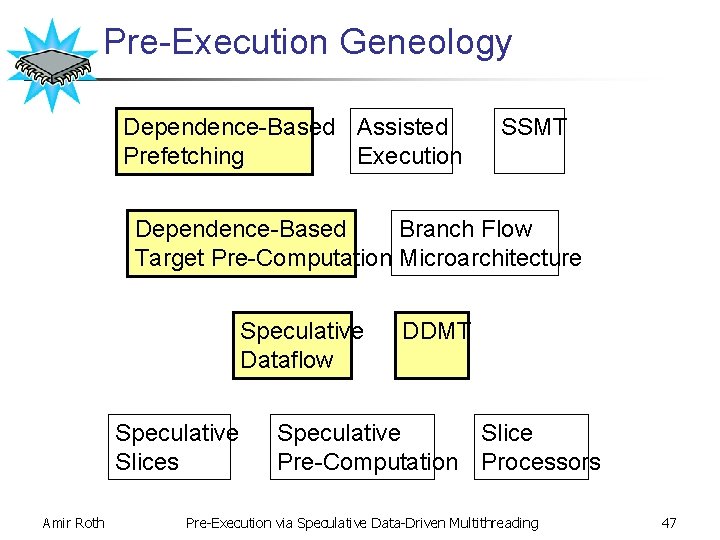

Pre-Execution Genealogy
(figure: genealogy linking Dependence-Based Prefetching, Assisted Execution, SSMT, Dependence-Based Target Pre-Computation, Branch Flow Microarchitecture, Speculative Dataflow, Speculative Slices, DDMT, Speculative Pre-Computation, and Slice Processors)

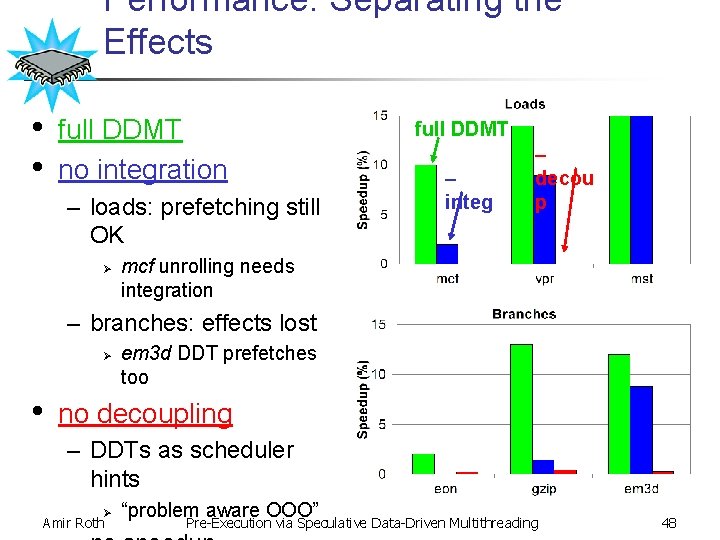

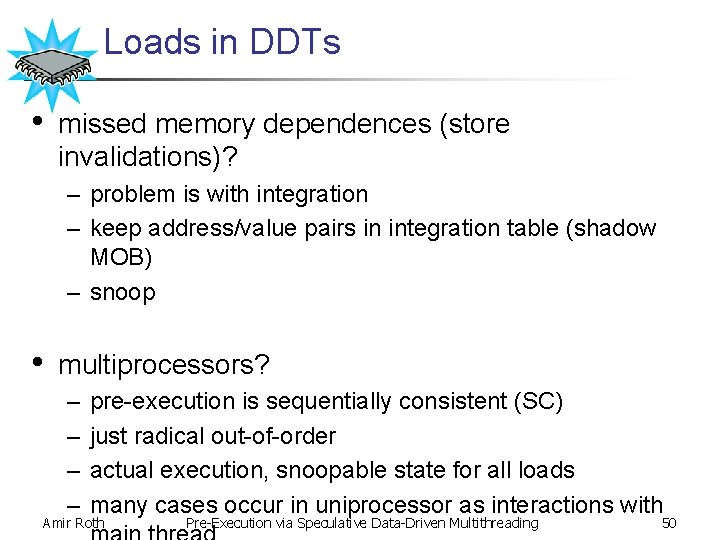

Performance: Separating the Effects
(figure legend: full DDMT, – integ, – decoup)
• full DDMT
• no integration
  – loads: prefetching still OK
    ➢ mcf unrolling needs integration
  – branches: effects lost
    ➢ em3d DDT prefetches too
• no decoupling
  – DDTs as scheduler hints
    ➢ “problem aware OOO”

Pre-Execution vs. Inlined Helper Code
• must hoist computation
  – must copy, straight hoist will create WAR dependences
  – hoist past/out-of/into procedures?
  – schedule past/out-of/into procedures?
  – non-binding prefetches or inline stalls
  – branch pre-execution?
• pre-execution vs. Itanium®
  – speculative loads ease hoisting past procedures
  – but that’s it

Loads in DDTs
• missed memory dependences (store invalidations)?
  – problem is with integration
  – keep address/value pairs in integration table (shadow MOB)
  – snoop
• multiprocessors?
  – pre-execution is sequentially consistent (SC)
  – just radical out-of-order actual execution, snoopable state for all loads
  – many cases occur in uniprocessor as interactions with …

Contributions: Prelim vs. Thesis
• Prelim
  – implementation: Speculative Dataflow (TTDA), DDMT (SMT base)
  – applications: prefetch data, pre-compute branches, “smooth” ILP
  – automated pre-execution computation selection (enough to get by)
• Dissertation
  – implementation: DDMT (superscalar based)
  – applications: prefetch data, pre-compute branches
  – automated computation selection

Superscalar: Obstacles to ILP
• backpressure, backpressure…
  – out-of-order retirement? can’t do
  – larger window (ROB)? hard (engineering)
  – bigger useful window? harder (P[no mis-pred] shrinks)
  – out-of-order fetch? getting to that

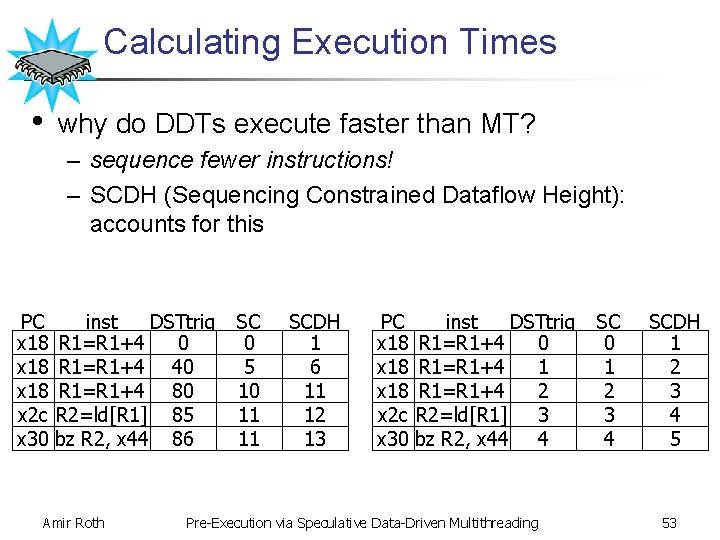

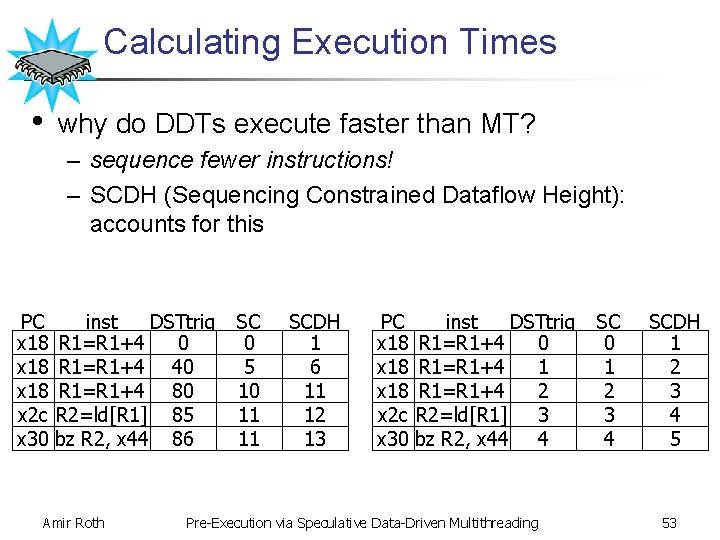

Calculating Execution Times
• why do DDTs execute faster than MT?
  – sequence fewer instructions!
  – SCDH (Sequencing Constrained Dataflow Height): accounts for this

MT:

| PC | inst | DSTtrig | SC | SCDH |
|-----|------------|----|----|----|
| x18 | R1=R1+4 | 0 | 0 | 1 |
| x18 | R1=R1+4 | 40 | 5 | 6 |
| x18 | R1=R1+4 | 80 | 10 | 11 |
| x2c | R2=ld[R1] | 85 | 11 | 12 |
| x30 | bz R2, x44 | 86 | 11 | 13 |

DDT:

| PC | inst | DSTtrig | SC | SCDH |
|-----|------------|---|---|---|
| x18 | R1=R1+4 | 0 | 0 | 1 |
| x18 | R1=R1+4 | 1 | 1 | 2 |
| x18 | R1=R1+4 | 2 | 2 | 3 |
| x2c | R2=ld[R1] | 3 | 3 | 4 |
| x30 | bz R2, x44 | 4 | 4 | 5 |
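The SCDH numbers in the two tables can be reproduced with a short recurrence. The assumptions here are inferred from the numbers rather than stated on the slide: the master thread sequences 8 instructions per cycle (so SC is DSTtrig divided by 8, rounded up), the DDT is injected one instruction per cycle, every instruction has unit latency, and each instruction depends on the one before it. The function name `scdh` and the tuple layout are hypothetical.

```python
from math import ceil


def scdh(insts, sequencing_cycle, latency=1):
    """Sequencing constrained dataflow height of each instruction.

    insts: list of (dst_trig, deps), deps being indices of earlier producers.
    An instruction starts once it has been sequenced and its inputs are done.
    """
    heights = []
    for dst_trig, deps in insts:
        sc = sequencing_cycle(dst_trig)                      # sequencing constraint
        start = max([sc] + [heights[d] for d in deps])       # dataflow constraint
        heights.append(start + latency)
    return heights


FETCH_WIDTH = 8   # assumed master-thread sequencing width

# (distance from trigger, producer indices) for the five instructions in the tables.
mt = [(0, []), (40, [0]), (80, [1]), (85, [2]), (86, [3])]
ddt = [(0, []), (1, [0]), (2, [1]), (3, [2]), (4, [3])]

mt_scdh = scdh(mt, lambda d: ceil(d / FETCH_WIDTH))
ddt_scdh = scdh(ddt, lambda d: d)
lt_pdi = mt_scdh[-1] - ddt_scdh[-1]   # estimated latency tolerance for the branch
```

Under these assumptions the master thread is limited by sequencing (the instructions are 40 apart), while the DDT is limited only by the dependence chain, which is where the latency tolerance estimate comes from.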