Prediction of Protein Secondary Structure from Sequence using Machine Learning Approach

- Slides: 1

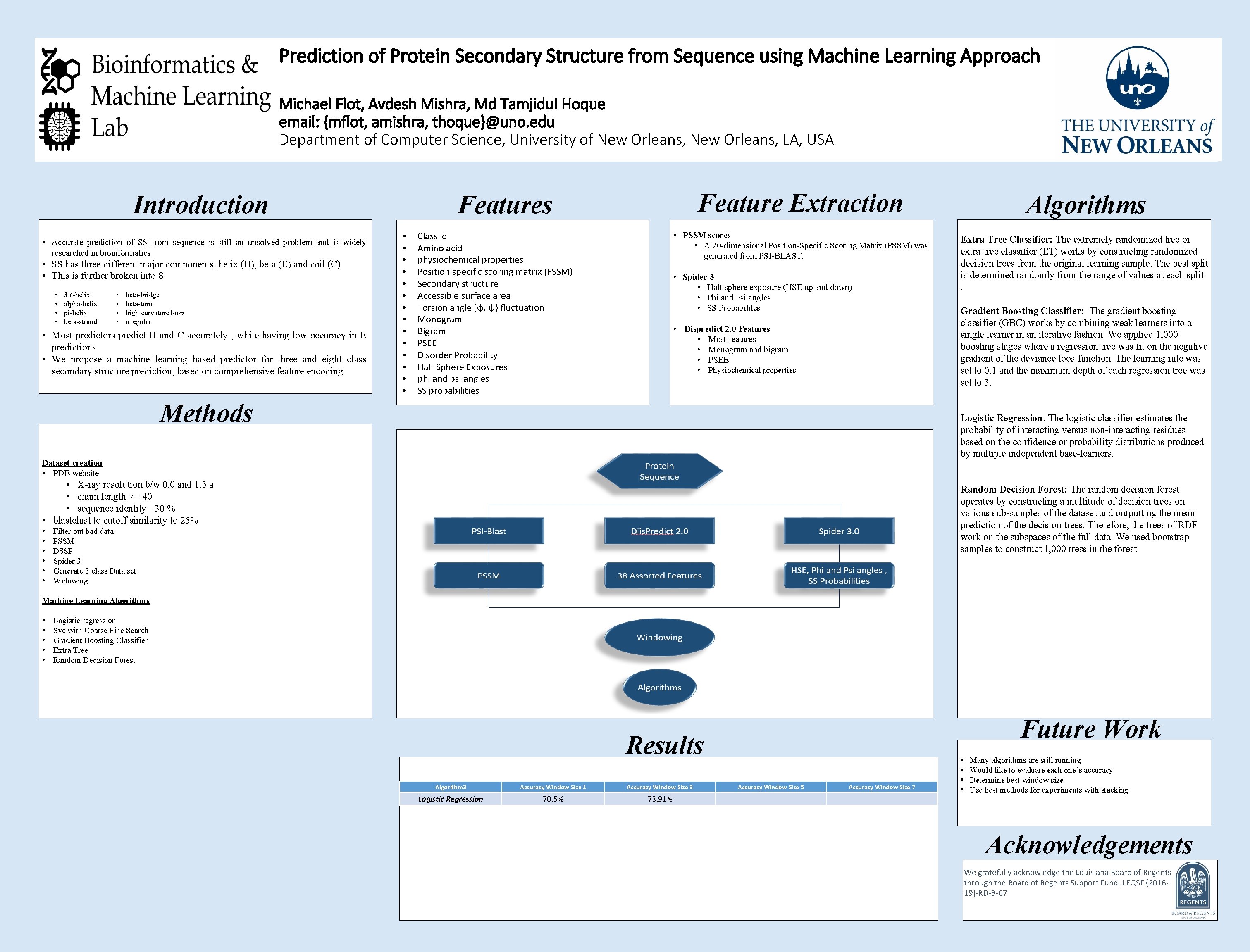

Prediction of Protein Secondary Structure from Sequence using Machine Learning Approach

Michael Flot, Avdesh Mishra, Md Tamjidul Hoque
email: {mflot, amishra, thoque}@uno.edu
Department of Computer Science, University of New Orleans, LA, USA

Introduction

- Accurate prediction of secondary structure (SS) from sequence is still an unsolved problem and is widely researched in bioinformatics.
- SS has three major components: helix (H), beta (E) and coil (C).
- These are further broken into 8 classes: 3-10 helix, alpha-helix, pi-helix, beta-strand, beta-bridge, beta-turn, high curvature loop and irregular.
- Most predictors predict H and C accurately, while having low accuracy in E predictions.
- We propose a machine learning based predictor for three- and eight-class secondary structure prediction, based on comprehensive feature encoding.

Features

- Class id
- Amino acid physiochemical properties
- Position specific scoring matrix (PSSM)
- Secondary structure
- Accessible surface area
- Torsion angle (φ, ψ) fluctuation
- Monogram
- Bigram
- PSEE
- Disorder probability
- Half sphere exposures
- Phi and psi angles
- SS probabilities

Feature Extraction Algorithms

- PSSM scores
  - A 20-dimensional Position-Specific Scoring Matrix (PSSM) was generated from PSI-BLAST.
- Spider 3
  - Half sphere exposure (HSE up and down)
  - Phi and psi angles
  - SS probabilities
- Dispredict 2.0 features
  - Most features
  - Monogram and bigram
  - PSEE
  - Physiochemical properties

Dataset creation

- Source: PDB website
- X-ray resolution between 0.0 and 1.5 Å
- Chain length >= 40
- Sequence identity = 30%
- blastclust used to cut off similarity at 25%

Processing steps, in order:

- Filter out bad data
- PSSM
- DSSP
- Spider 3
- Generate 3-class data set
- Windowing

Machine Learning Algorithms

- Logistic regression
- SVC with coarse/fine search
- Gradient Boosting Classifier
- Extra Tree
- Random Decision Forest

Methods

Logistic Regression: The logistic classifier estimates the probability of interacting versus non-interacting residues based on the confidence or probability distributions produced by multiple independent base-learners.

Extra Tree Classifier: The extremely randomized tree or extra-tree classifier (ET) works by constructing randomized decision trees from the original learning sample. The best split is determined randomly from the range of values at each split.

Gradient Boosting Classifier: The gradient boosting classifier (GBC) works by combining weak learners into a single learner in an iterative fashion. We applied 1,000 boosting stages, where a regression tree was fit on the negative gradient of the deviance loss function. The learning rate was set to 0.1 and the maximum depth of each regression tree was set to 3.

Random Decision Forest: The random decision forest (RDF) operates by constructing a multitude of decision trees on various sub-samples of the dataset and outputting the mean prediction of the decision trees. Therefore, the trees of the RDF work on subspaces of the full data. We used bootstrap samples to construct 1,000 trees in the forest.

Results

| Algorithm | Accuracy, window size 1 | Accuracy, window size 3 | Accuracy, window size 5 | Accuracy, window size 7 |
| --- | --- | --- | --- | --- |
| Logistic Regression | 70.5% | 73.91% | | |

Future Work

- Many algorithms are still running.
- We would like to evaluate each one's accuracy.
- Determine the best window size.
- Use the best methods for experiments with stacking.

Acknowledgements

We gratefully acknowledge the Louisiana Board of Regents through the Board of Regents Support Fund, LEQSF(2016-19)-RD-B-07.
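
The windowing step listed in the dataset pipeline, and the window sizes 1, 3, 5 and 7 in the results, can be illustrated with a small sketch. It assumes that windowing means concatenating each residue's feature vector with those of its neighbours on both sides, and that positions past the ends of the chain are zero-padded; the poster does not state the padding scheme, and the function name `window_features` is hypothetical.

```python
from typing import List


def window_features(features: List[List[float]], window_size: int) -> List[List[float]]:
    """Concatenate each residue's features with those of its neighbours.

    Assumption: positions beyond the chain ends are filled with zeros.
    """
    if window_size < 1 or window_size % 2 == 0:
        raise ValueError("window_size must be a positive odd integer")
    half = window_size // 2
    n_feat = len(features[0]) if features else 0
    pad = [0.0] * n_feat
    windowed = []
    for i in range(len(features)):
        row: List[float] = []
        # Walk from the left neighbour to the right neighbour of residue i.
        for j in range(i - half, i + half + 1):
            row.extend(features[j] if 0 <= j < len(features) else pad)
        windowed.append(row)
    return windowed


# Toy chain of 4 residues with 2 features each (made-up values).
toy = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
win3 = window_features(toy, 3)
```

With a window size of 3, each residue here ends up with 6 features instead of 2; a window size of 1 leaves the features unchanged. The widened rows would then be passed to whichever classifier is being evaluated.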