Practical Formal Verification of MPI and Thread Programs

- Slides: 111

Presented by Ganesh Gopalakrishnan, Robert M. Kirby, and Anh Vo

Based on work by many, notably Sarvani Vakkalanka, Anh Vo, Michael DeLisi, Alan Humphrey, Chris Derrick, and Sriram Aananthakrishnan

School of Computing, University of Utah, Salt Lake City, UT 84112, USA

Special thanks to Rajeev Thakur, Bill Gropp, Robert Palmer

A FULL DAY TUTORIAL at EuroPVM/MPI 2009

http://www.cs.utah.edu/formal_verification

Supported by NSF CNS 0509379 and Microsoft

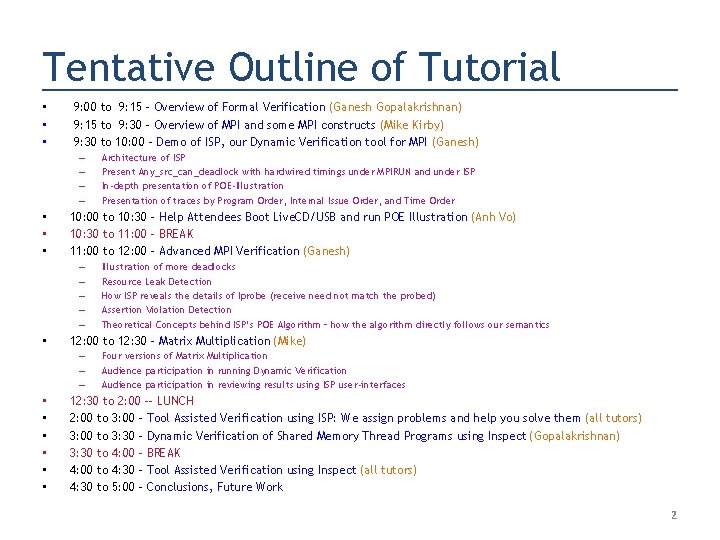

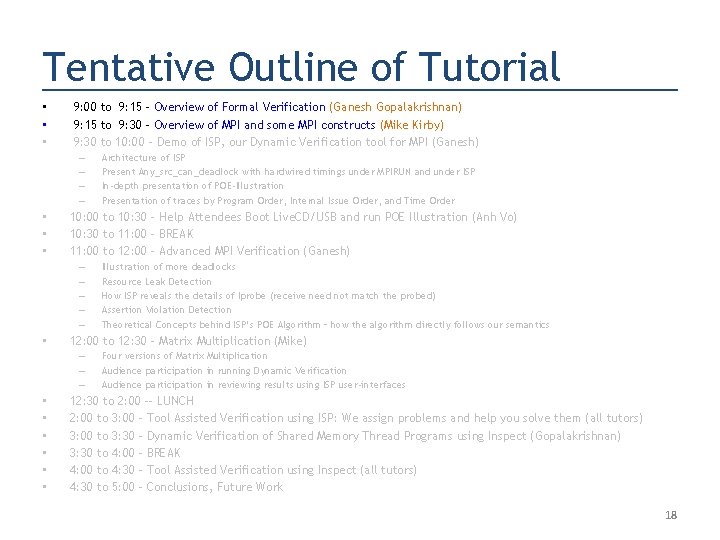

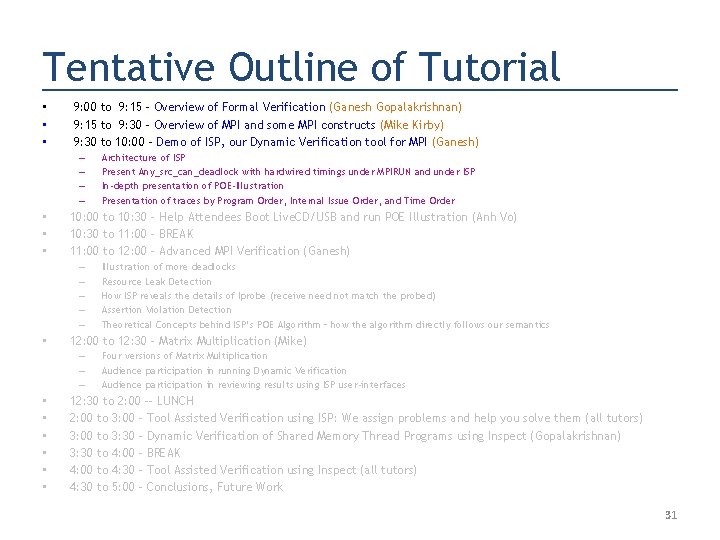

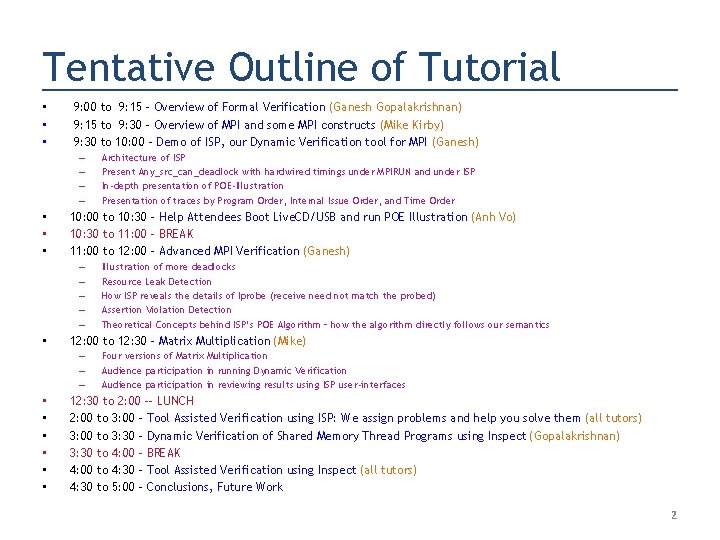

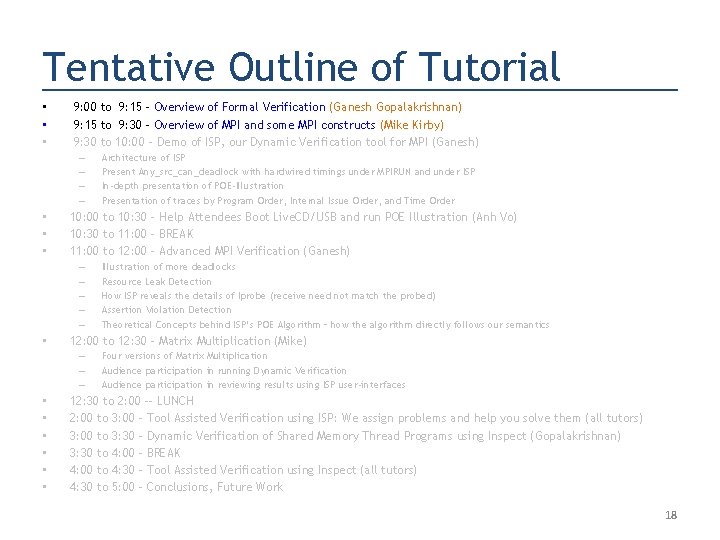

Tentative Outline of Tutorial

- 9:00 to 9:15: Overview of Formal Verification (Ganesh Gopalakrishnan)
- 9:15 to 9:30: Overview of MPI and some MPI constructs (Mike Kirby)
- 9:30 to 10:00: Demo of ISP, our Dynamic Verification tool for MPI (Ganesh)
- 10:00 to 10:30: Help attendees boot LiveCD/USB and run POE Illustration (Anh Vo)
- 10:30 to 11:00: BREAK
- 11:00 to 12:00: Advanced MPI Verification (Ganesh)
- 12:00 to 12:30: Matrix Multiplication (Mike)
- 12:30 to 2:00: LUNCH
- 2:00 to 3:00: Tool Assisted Verification using ISP: we assign problems and help you solve them (all tutors)
- 3:00 to 3:30: Dynamic Verification of Shared Memory Thread Programs using Inspect (Gopalakrishnan)
- 3:30 to 4:00: BREAK
- 4:00 to 4:30: Tool Assisted Verification using Inspect (all tutors)
- 4:30 to 5:00: Conclusions, Future Work

Topics covered within these sessions:

- Illustration of more deadlocks
- Resource Leak Detection
- How ISP reveals the details of Iprobe (receive need not match the probed)
- Assertion Violation Detection
- Theoretical concepts behind ISP’s POE Algorithm: how the algorithm directly follows our semantics
- Architecture of ISP
- Present Any_src_can_deadlock with hardwired timings under MPIRUN and under ISP
- In-depth presentation of POE-Illustration
- Presentation of traces by Program Order, Internal Issue Order, and Time Order
- Four versions of Matrix Multiplication
- Audience participation in running Dynamic Verification
- Audience participation in reviewing results using ISP user-interfaces

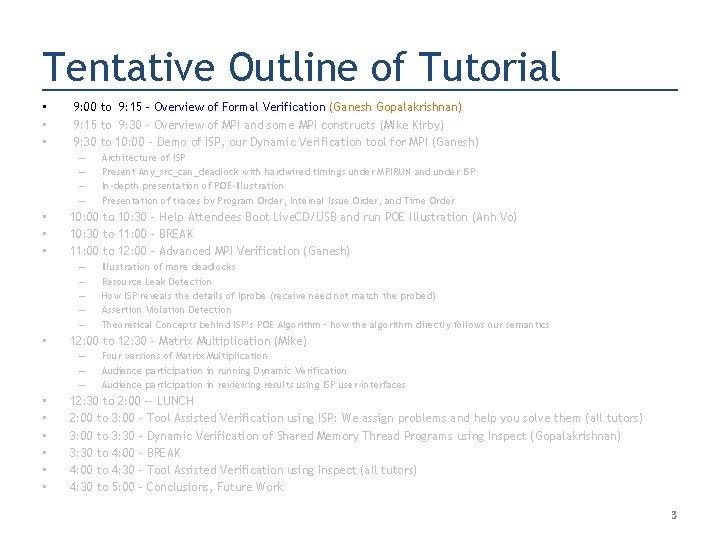

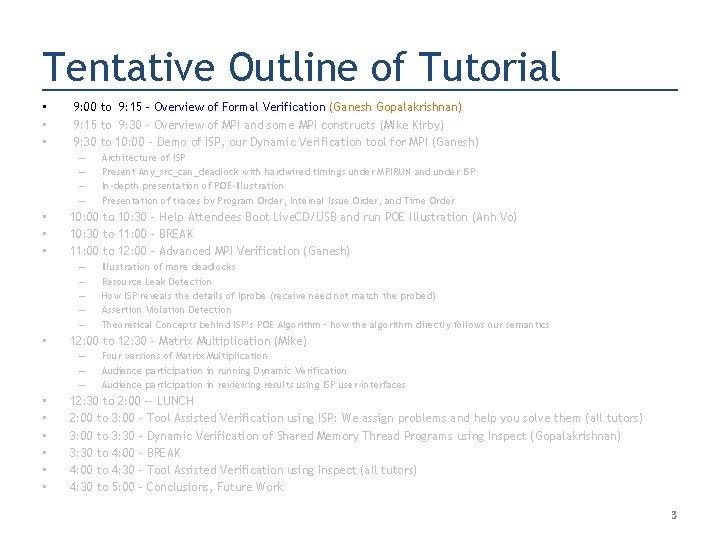


Correctness Concerns Will Loom Everywhere… Debug concurrent systems, providing rigorous guarantees

Correctness of Concurrent Systems

- “Sequential programming is really hard, and parallel programming is a step beyond that” (Tanenbaum, USENIX 2008 Lifetime Achievement Award talk)
- “Formal methods provide the only truly scalable approach to developing correct code in this complex programming environment.” (Rusty Lusk, in his EC2 2009 invited talk entitled “Slouching Towards Exascale: Programming Models for High Performance Computing”)

Why Formal Verification for Concurrency?

- Importance of concurrency verification
  - Increasing use of concurrency
  - Difficulties of locating concurrency bugs
- Why is testing ineffective?
  - Inadequate coverage
  - Techniques to increase coverage are unreliable and slow down execution
- Ideal FV tool for modern concurrency practices
  - DEPENDABLE (D): finds bugs in its range each time
  - PARSIMONIOUS (P): does so without redundant searches
  - EDUCATIONAL (E): educates users on concurrency nuances
- Tail wags the dog: complexity of API semantics

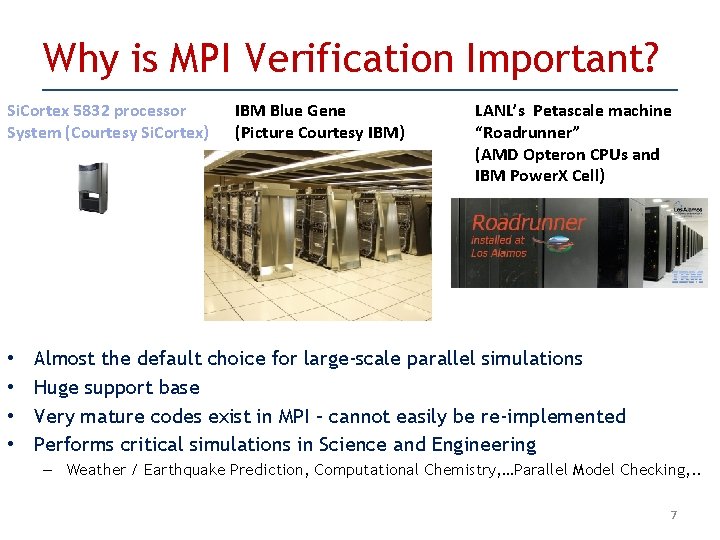

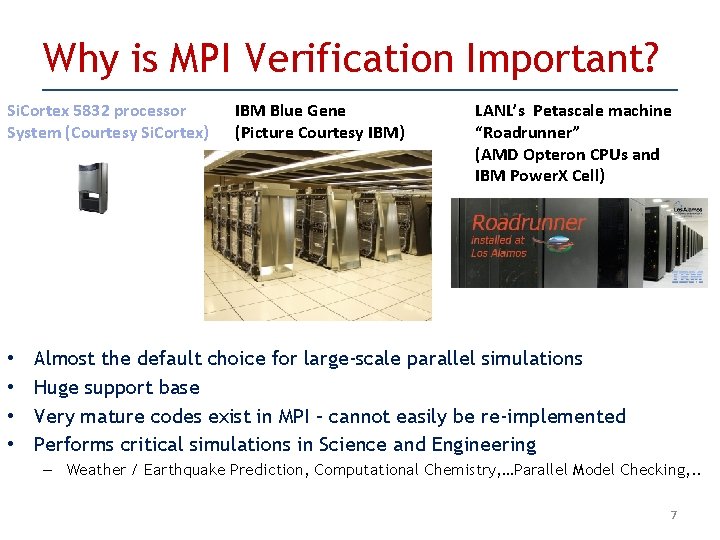

Why is MPI Verification Important?

- Almost the default choice for large-scale parallel simulations
- Huge support base
- Very mature codes exist in MPI; they cannot easily be re-implemented
- Performs critical simulations in science and engineering: weather / earthquake prediction, computational chemistry, …, parallel model checking, …

(Images: SiCortex 5832-processor system, courtesy SiCortex; IBM Blue Gene, picture courtesy IBM; LANL’s petascale machine “Roadrunner”, with AMD Opteron CPUs and IBM PowerXCell.)

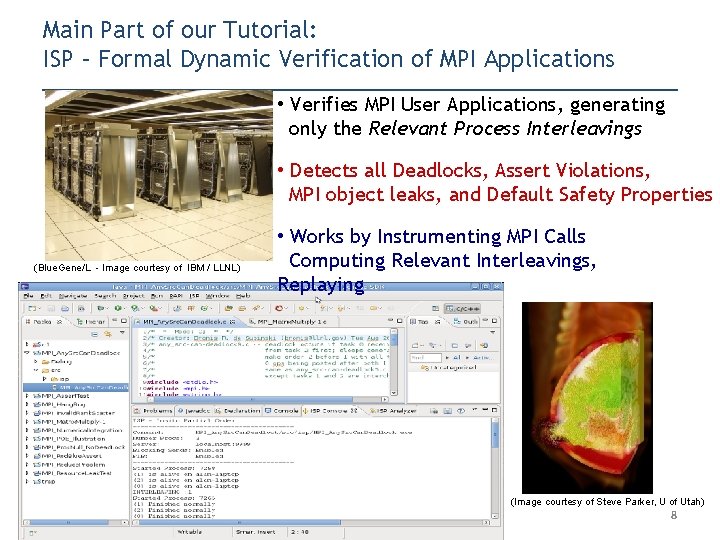

Main Part of our Tutorial: ISP, Formal Dynamic Verification of MPI Applications

- Verifies MPI user applications, generating only the relevant process interleavings
- Detects all deadlocks, assert violations, MPI object leaks, and default safety properties
- Works by instrumenting MPI calls, computing relevant interleavings, and replaying

(Images: BlueGene/L, courtesy of IBM / LLNL; image courtesy of Steve Parker, U of Utah.)

Overview of Formal Verification

- Disadvantages of conventional testing
- Disadvantages of model-based verification and static analysis
- Advantages of dynamic formal analysis

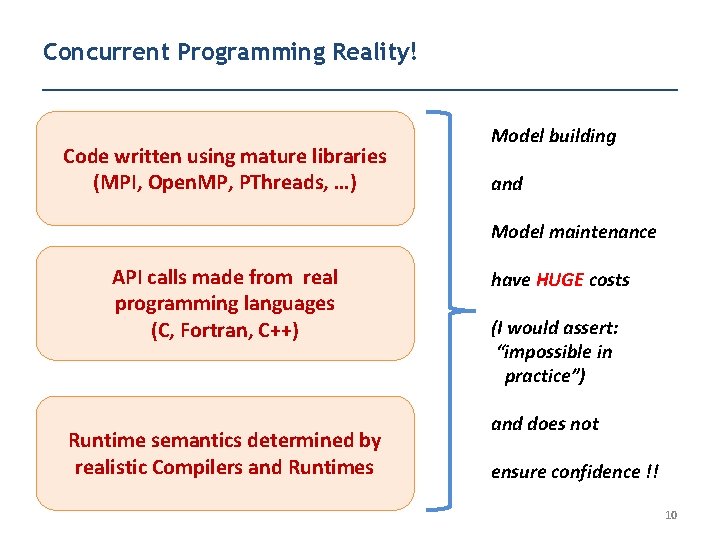

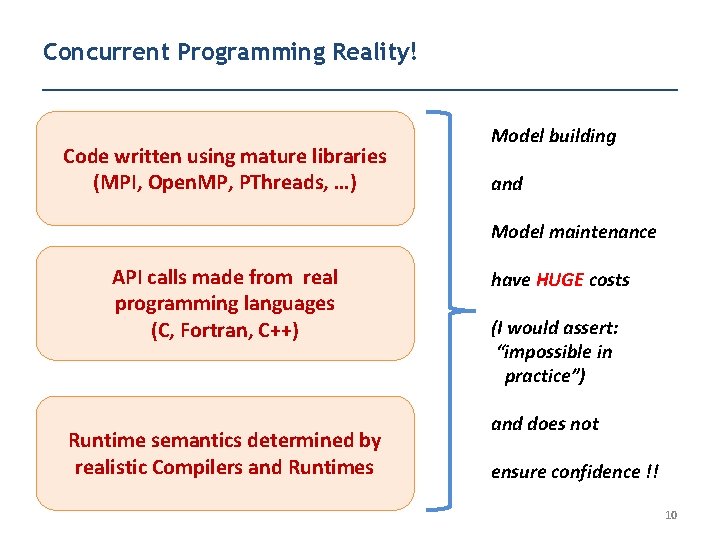

Concurrent Programming Reality!

- Code written using mature libraries (MPI, OpenMP, PThreads, …)
- API calls made from real programming languages (C, Fortran, C++)
- Runtime semantics determined by realistic compilers and runtimes
- Model building and model maintenance have HUGE costs (I would assert: “impossible in practice”) and do not ensure confidence!

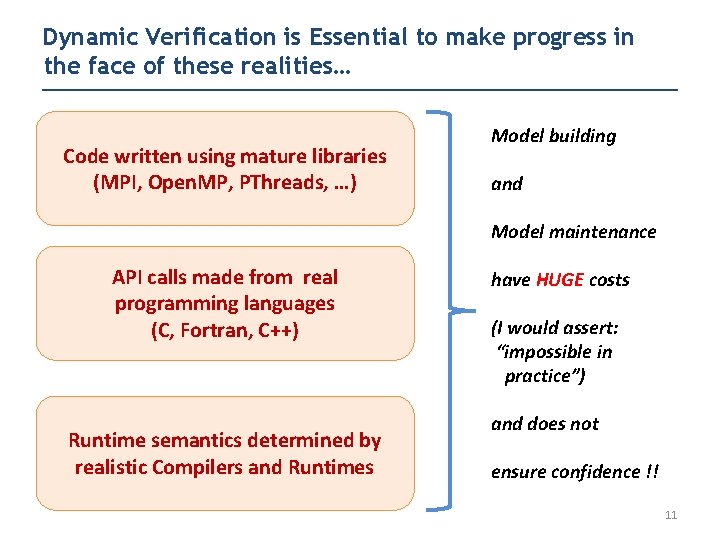

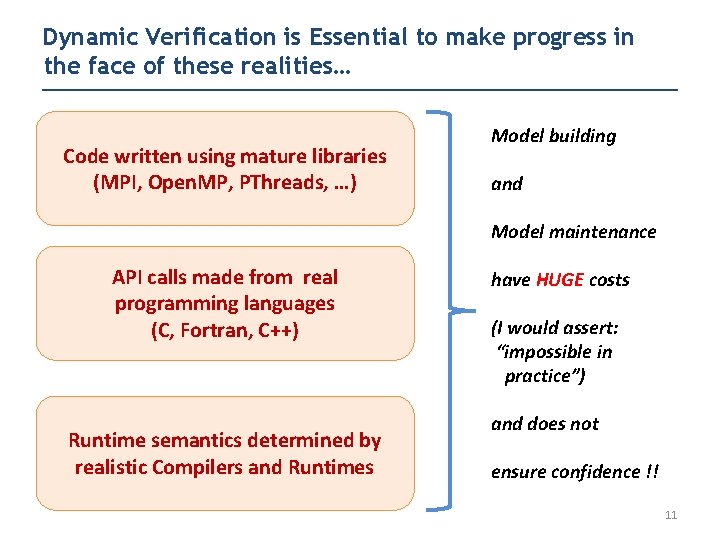

Dynamic Verification is essential to make progress in the face of these realities.

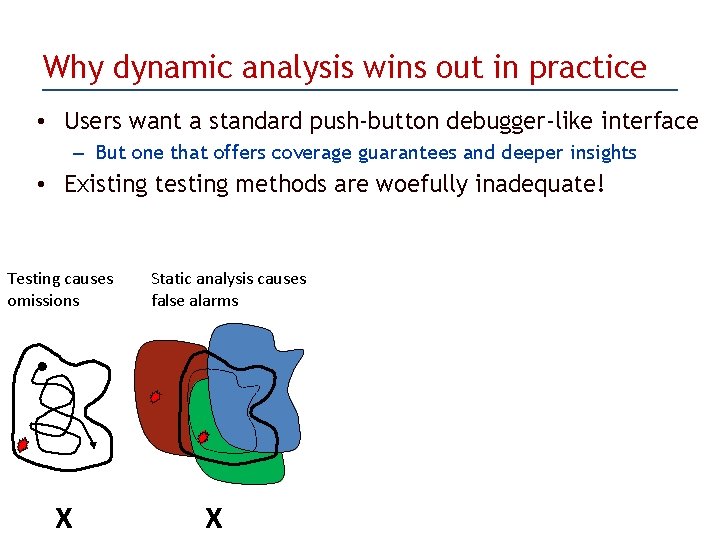

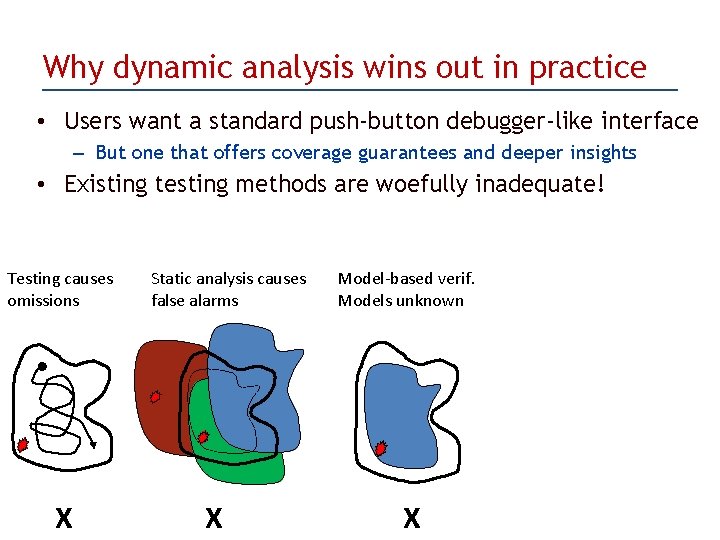

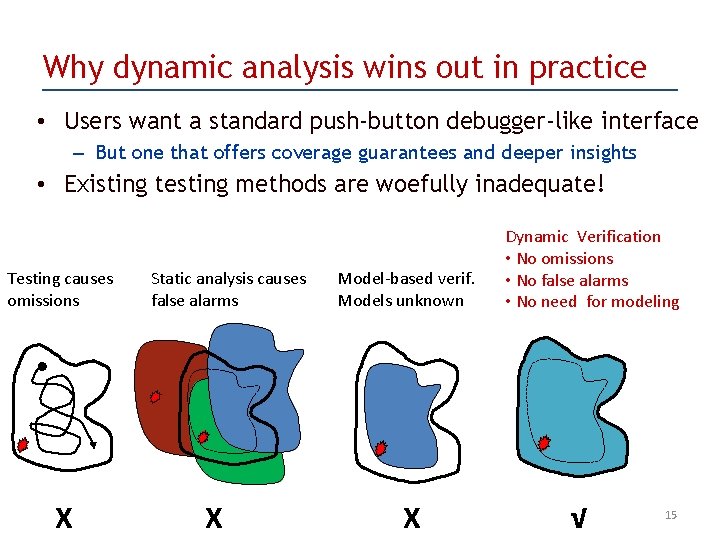

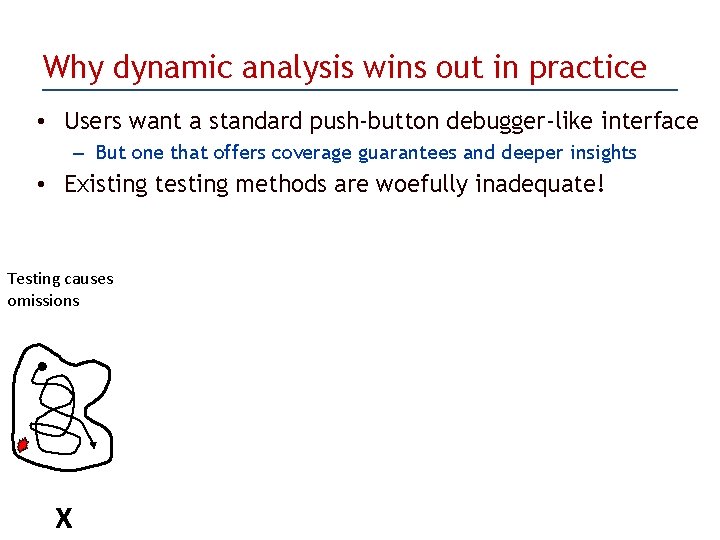

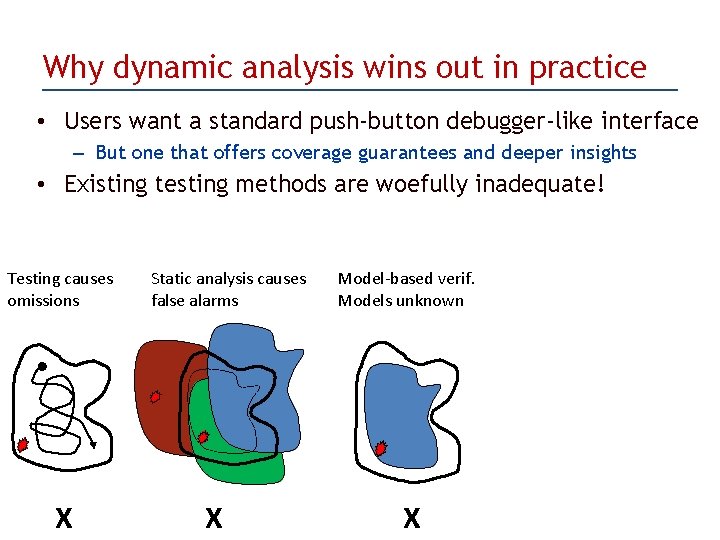

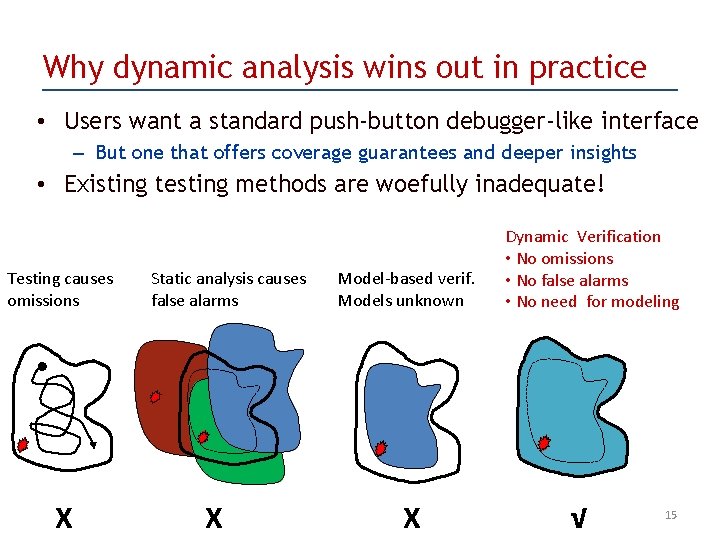


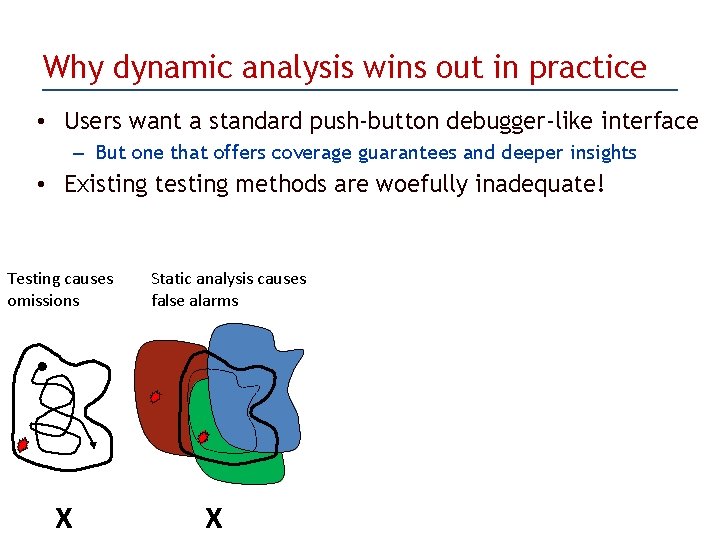


Why dynamic analysis wins out in practice

- Users want a standard push-button debugger-like interface, but one that offers coverage guarantees and deeper insights
- Existing testing methods are woefully inadequate!

How the approaches compare:

- Testing: causes omissions ✗
- Static analysis: causes false alarms ✗
- Model-based verification: models unknown ✗
- Dynamic verification: no omissions, no false alarms, no need for modeling ✓

Dynamic Verification is catching on!

- MODIST
- Backtrackable VMs
- Testing using FPGA hardware (Simics)
- CHESS project of Microsoft Research
- ISP (our work, this tutorial)
- Inspect (our work, this tutorial)
- MCC (under construction in our group for a multi-core communications API)

Also included in our tutorial: Dynamic Formal Verification of Pthread applications

- Shared memory threads are still widely used to organize many parallel computations
- Judiciously mixing multiple paradigms (MPI and threading) is becoming important
  - Different paradigms suit different communication regimes (latency, throughput, programming approach); for instance:
    - At the fine grain, use shared memory threads
    - At the coarse grain, use message passing


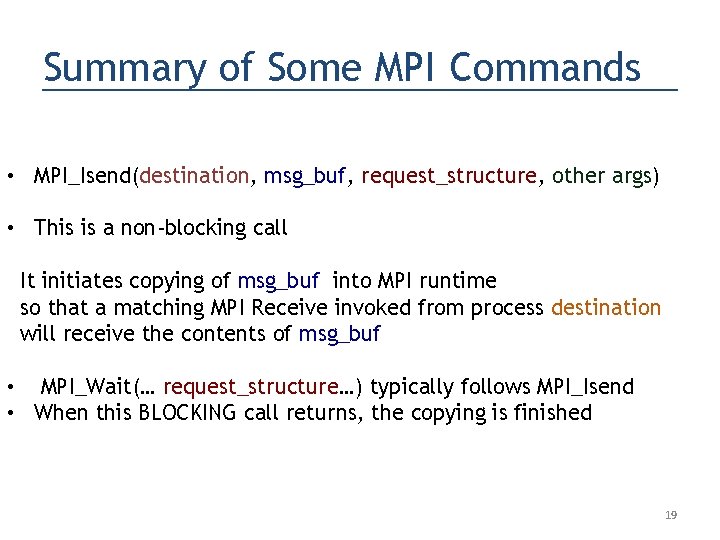

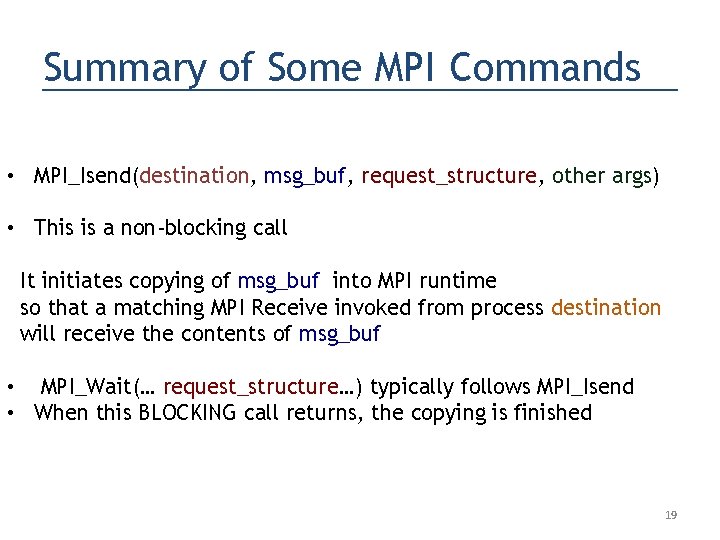

Summary of Some MPI Commands

- MPI_Isend(destination, msg_buf, request_structure, other args)
  - This is a non-blocking call. It initiates copying of msg_buf into the MPI runtime so that a matching MPI receive invoked from process destination will receive the contents of msg_buf
- MPI_Wait(… request_structure …) typically follows MPI_Isend
  - When this BLOCKING call returns, the copying is finished

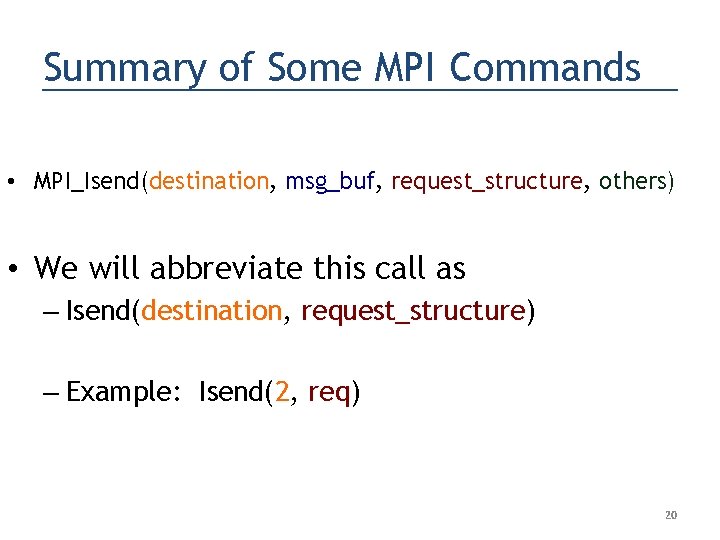

Summary of Some MPI Commands

- MPI_Isend(destination, msg_buf, request_structure, other args)
- We will abbreviate this call as Isend(destination, request_structure)
  - Example: Isend(2, req)

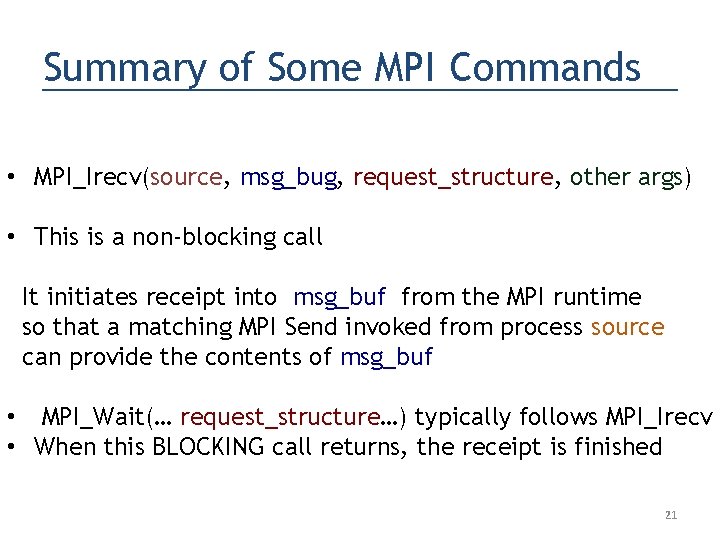

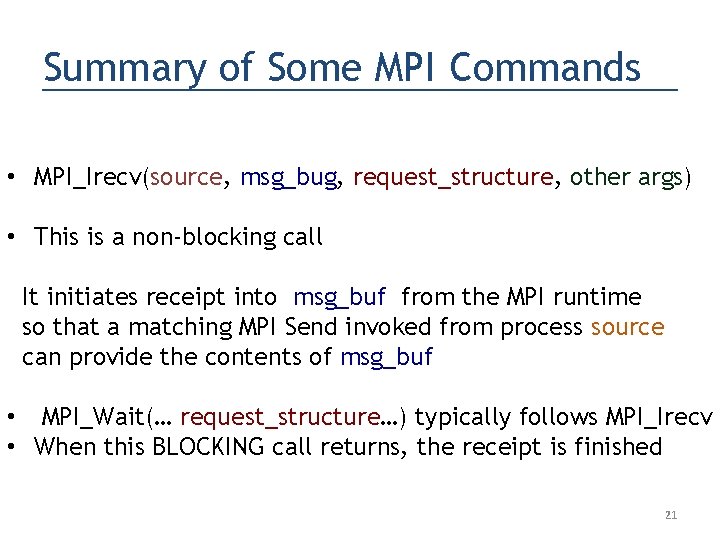

Summary of Some MPI Commands

- MPI_Irecv(source, msg_buf, request_structure, other args)
  - This is a non-blocking call. It initiates receipt into msg_buf from the MPI runtime so that a matching MPI send invoked from process source can provide the contents of msg_buf
- MPI_Wait(… request_structure …) typically follows MPI_Irecv
  - When this BLOCKING call returns, the receipt is finished

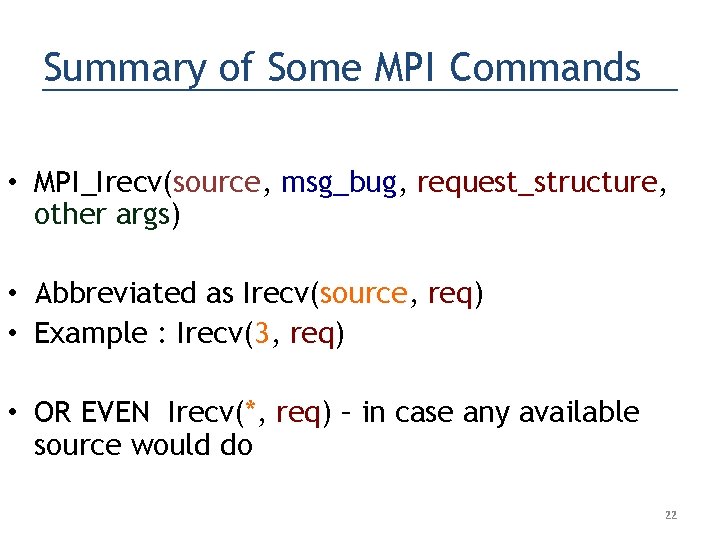

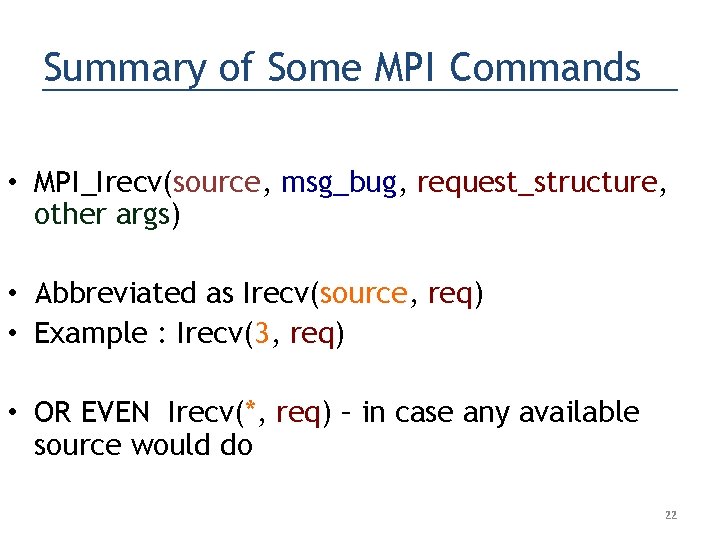

Summary of Some MPI Commands

- MPI_Irecv(source, msg_buf, request_structure, other args)
- Abbreviated as Irecv(source, req)
  - Example: Irecv(3, req)
  - Or even Irecv(*, req), in case any available source would do

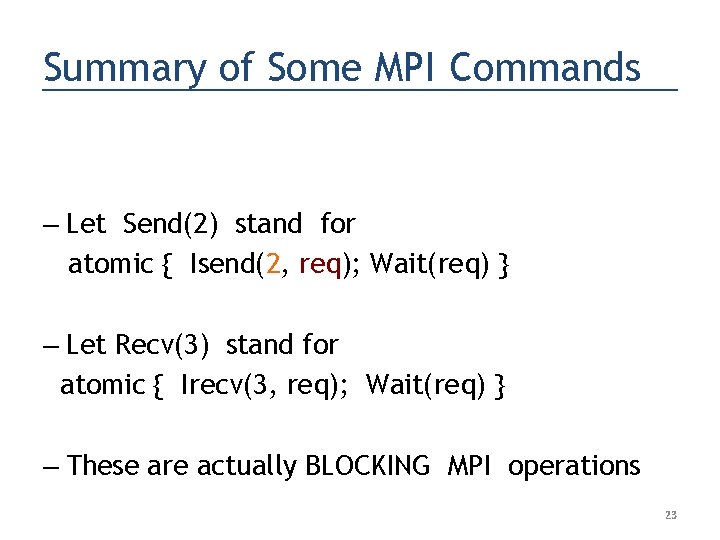

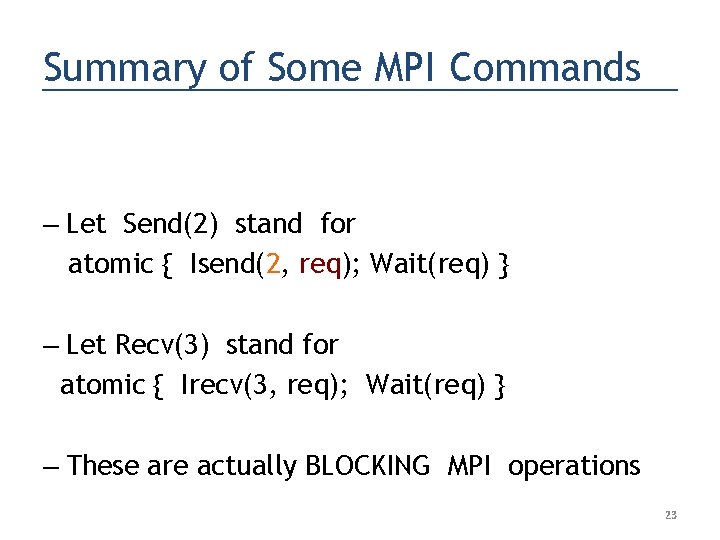

Summary of Some MPI Commands

- Let Send(2) stand for atomic { Isend(2, req); Wait(req) }
- Let Recv(3) stand for atomic { Irecv(3, req); Wait(req) }
- These are actually BLOCKING MPI operations

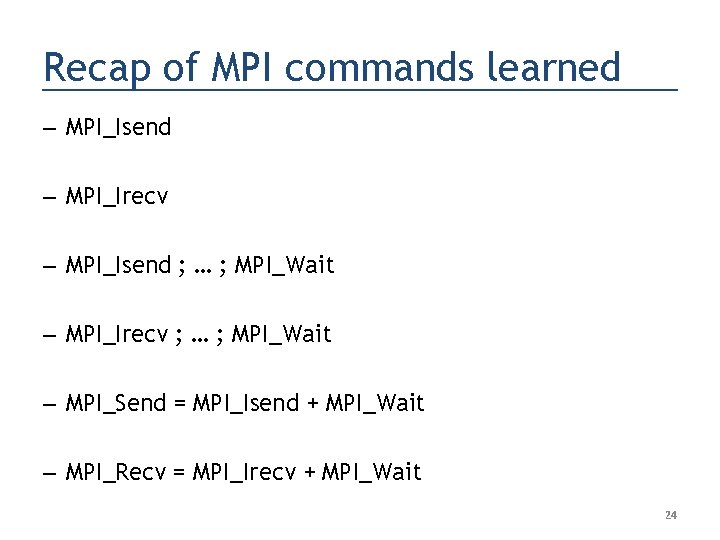

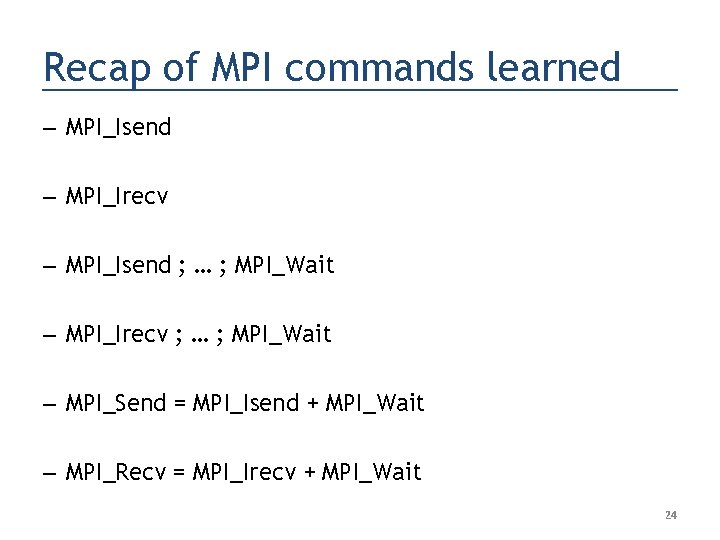

Recap of MPI commands learned

- MPI_Isend
- MPI_Irecv
- MPI_Isend ; … ; MPI_Wait
- MPI_Irecv ; … ; MPI_Wait
- MPI_Send = MPI_Isend + MPI_Wait
- MPI_Recv = MPI_Irecv + MPI_Wait

More MPI Commands

- MPI_Barrier(…) is abbreviated as Barrier() or even Barrier
- All processes must invoke Barrier before any process can return from the Barrier invocation

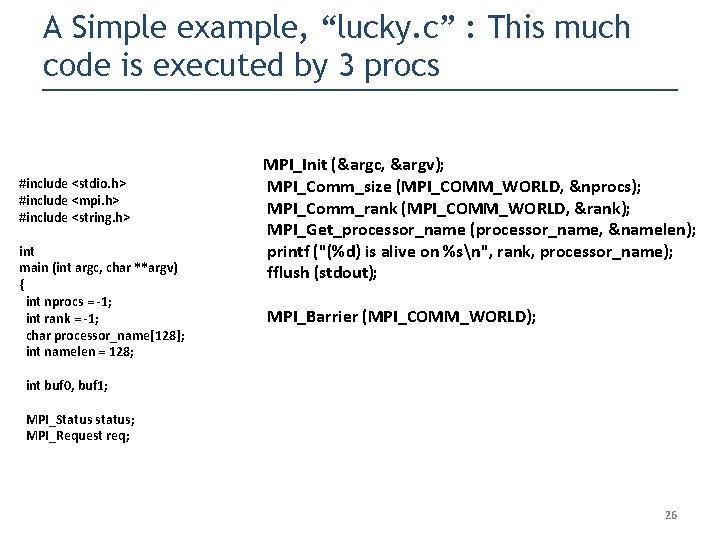

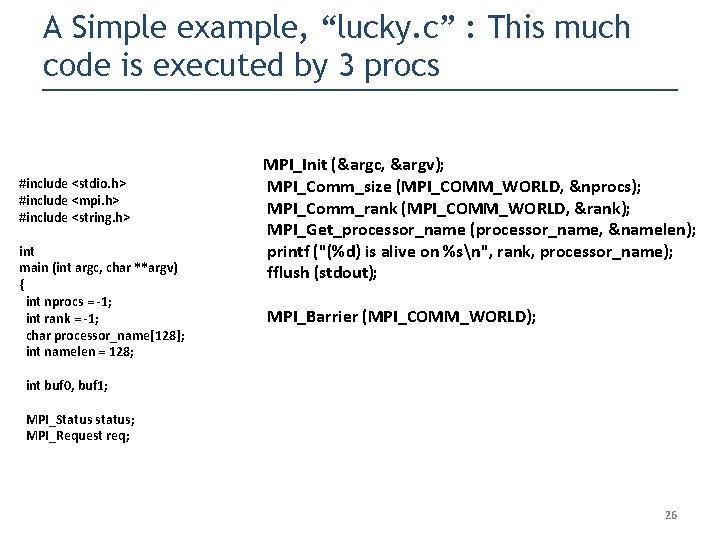

A simple example, “lucky.c”. This much code is executed by all 3 processes:

    #include <stdio.h>
    #include <mpi.h>
    #include <string.h>
    #include <unistd.h>   /* for sleep(), used in the rank-specific code */

    int main (int argc, char **argv)
    {
      int nprocs = -1;
      int rank = -1;
      char processor_name[128];
      int namelen = 128;

      MPI_Init (&argc, &argv);
      MPI_Comm_size (MPI_COMM_WORLD, &nprocs);
      MPI_Comm_rank (MPI_COMM_WORLD, &rank);
      MPI_Get_processor_name (processor_name, &namelen);
      printf ("(%d) is alive on %s\n", rank, processor_name);
      fflush (stdout);
      MPI_Barrier (MPI_COMM_WORLD);

      int buf0, buf1;
      MPI_Status status;
      MPI_Request req;

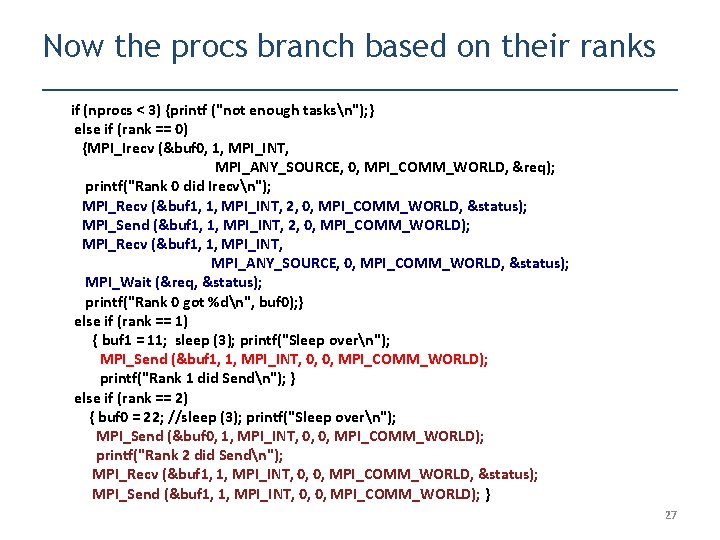

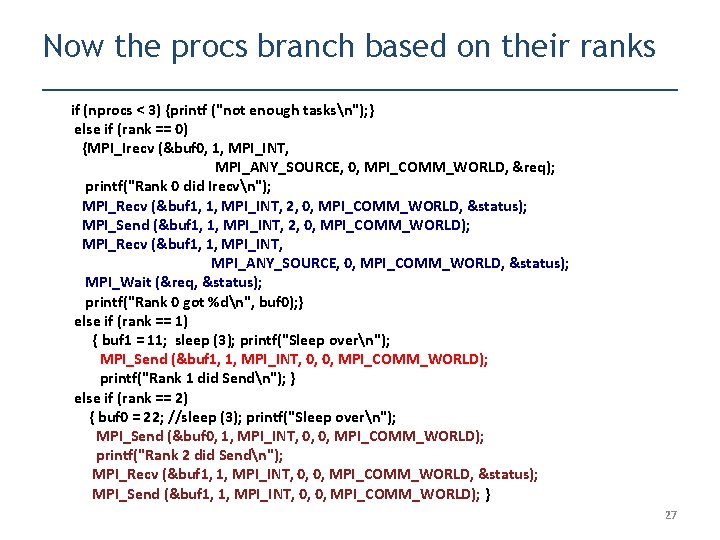

Now the processes branch based on their ranks:

      if (nprocs < 3) {
        printf ("not enough tasks\n");
      } else if (rank == 0) {
        /* Wildcard receive: may match the send from rank 1 or from rank 2 */
        MPI_Irecv (&buf0, 1, MPI_INT, MPI_ANY_SOURCE, 0, MPI_COMM_WORLD, &req);
        printf ("Rank 0 did Irecv\n");
        MPI_Recv (&buf1, 1, MPI_INT, 2, 0, MPI_COMM_WORLD, &status);
        MPI_Send (&buf1, 1, MPI_INT, 2, 0, MPI_COMM_WORLD);
        MPI_Recv (&buf1, 1, MPI_INT, MPI_ANY_SOURCE, 0, MPI_COMM_WORLD, &status);
        MPI_Wait (&req, &status);
        printf ("Rank 0 got %d\n", buf0);
      } else if (rank == 1) {
        buf1 = 11;
        sleep (3);
        printf ("Sleep over\n");
        MPI_Send (&buf1, 1, MPI_INT, 0, 0, MPI_COMM_WORLD);
        printf ("Rank 1 did Send\n");
      } else if (rank == 2) {
        buf0 = 22;
        //sleep (3); printf("Sleep over\n");
        MPI_Send (&buf0, 1, MPI_INT, 0, 0, MPI_COMM_WORLD);
        printf ("Rank 2 did Send\n");
        MPI_Recv (&buf1, 1, MPI_INT, 0, 0, MPI_COMM_WORLD, &status);
        MPI_Send (&buf1, 1, MPI_INT, 0, 0, MPI_COMM_WORLD);
      }

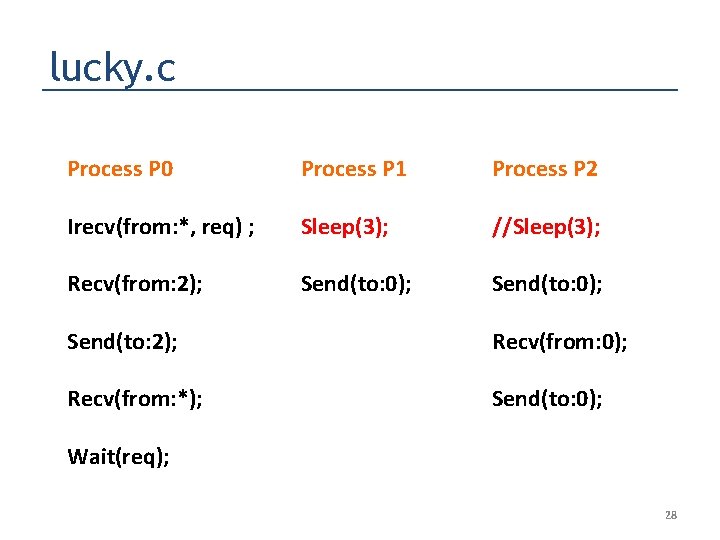

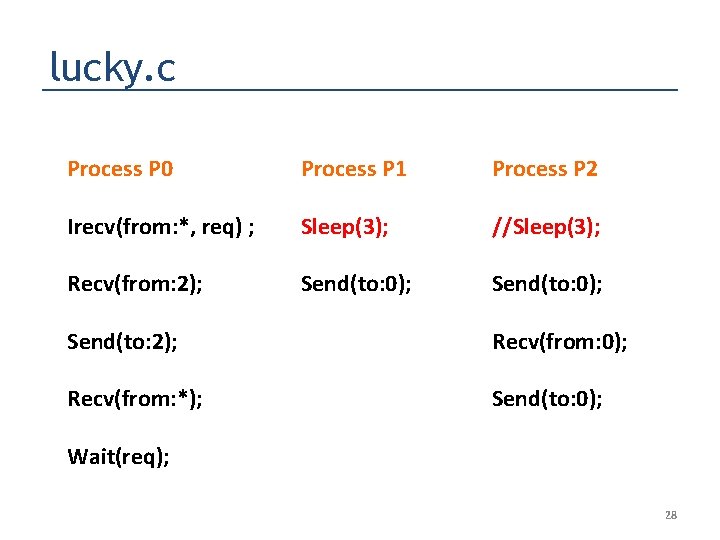

lucky.c

- Process P0: Irecv(from: *, req); Recv(from: 2); Send(to: 2); Recv(from: *); Wait(req);
- Process P1: Sleep(3); Send(to: 0);
- Process P2: //Sleep(3); Send(to: 0); Recv(from: 0); Send(to: 0);

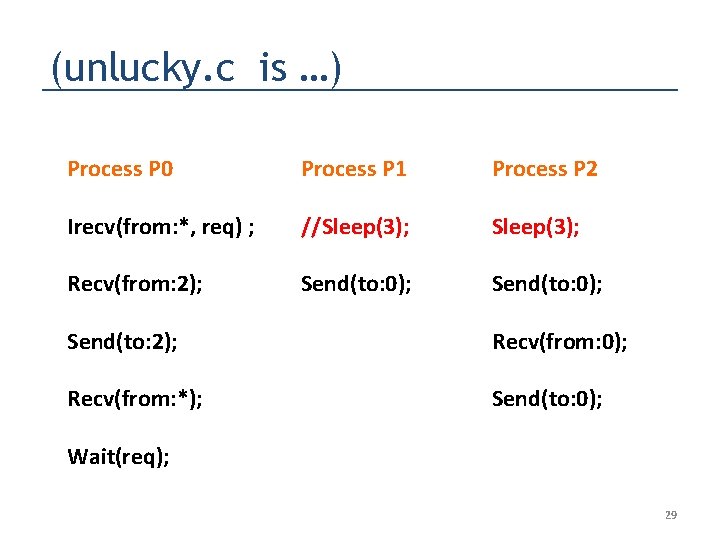

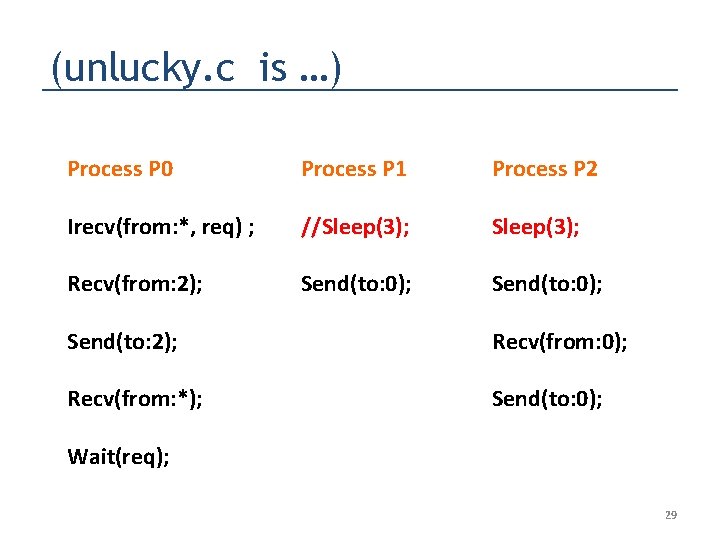

(unlucky.c is …) Process P0, Process P1, Process P2: Irecv(from: *, req); //Sleep(3); Recv(from: 2); Send(to: 0); Send(to: 2); Recv(from: 0); Recv(from: *); Send(to: 0); Wait(req);

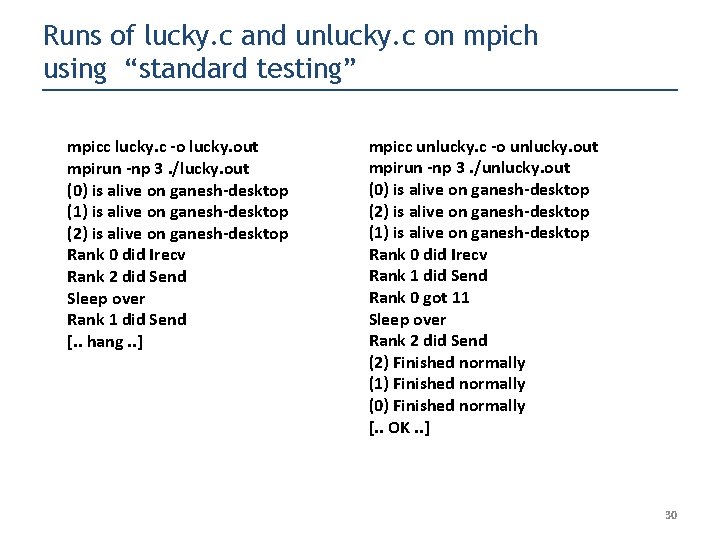

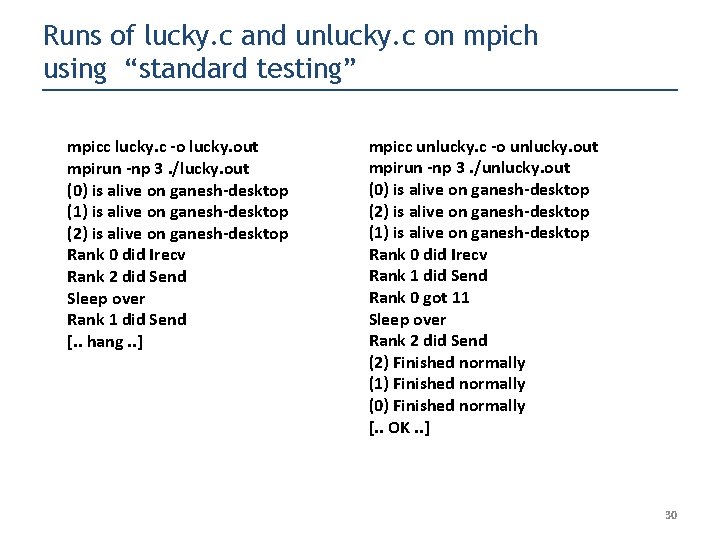

Runs of lucky.c and unlucky.c on mpich using “standard testing”

lucky.c:

    mpicc lucky.c -o lucky.out
    mpirun -np 3 ./lucky.out
    (0) is alive on ganesh-desktop
    (1) is alive on ganesh-desktop
    (2) is alive on ganesh-desktop
    Rank 0 did Irecv
    Rank 2 did Send
    Sleep over
    Rank 1 did Send
    [.. hang ..]

unlucky.c:

    mpicc unlucky.c -o unlucky.out
    mpirun -np 3 ./unlucky.out
    (0) is alive on ganesh-desktop
    (2) is alive on ganesh-desktop
    (1) is alive on ganesh-desktop
    Rank 0 did Irecv
    Rank 1 did Send
    Rank 0 got 11
    Sleep over
    Rank 2 did Send
    (2) Finished normally
    (1) Finished normally
    (0) Finished normally
    [.. OK ..]


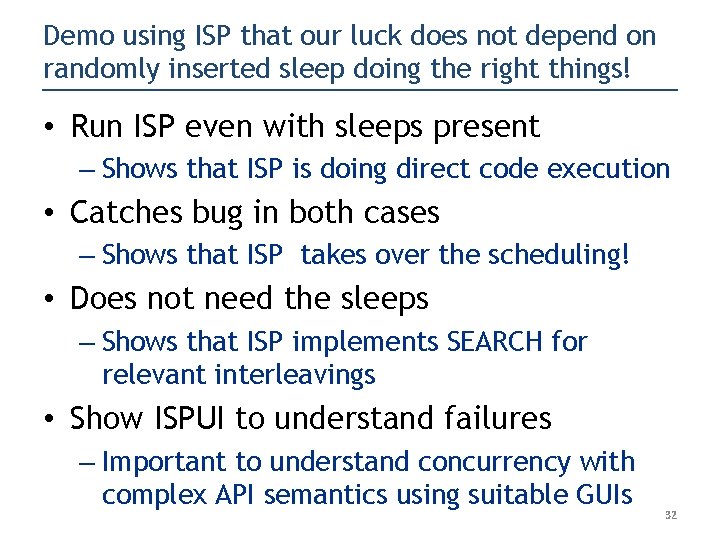

Demo using ISP that our luck does not depend on randomly inserted sleeps doing the right things!

- Run ISP even with sleeps present
  - Shows that ISP is doing direct code execution
- Catches the bug in both cases
  - Shows that ISP takes over the scheduling!
- Does not need the sleeps
  - Shows that ISP implements SEARCH for relevant interleavings
- Show ISPUI to understand failures
  - Suitable GUIs are important for understanding concurrency with complex API semantics

ISP’s Range of bugs: for an MPI program and an input, ISP guarantees to detect these bug classes by generating only relevant interleavings

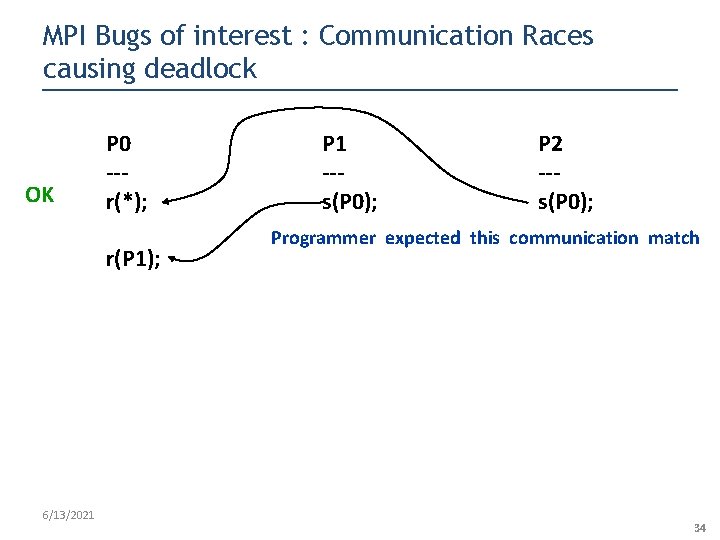

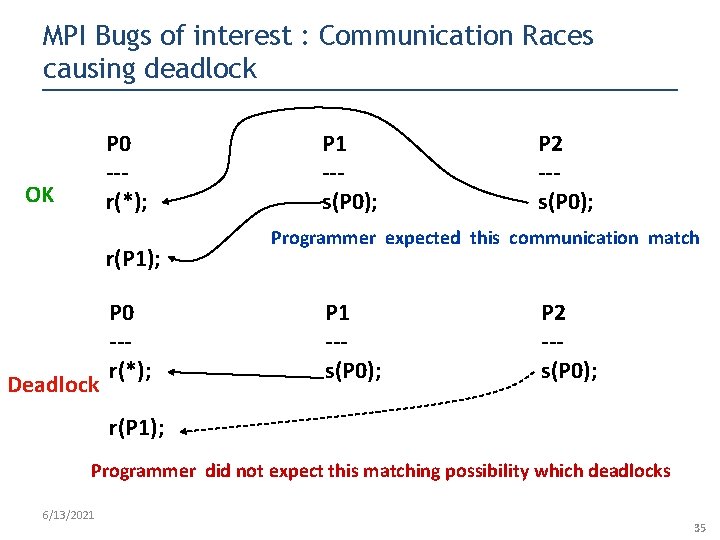

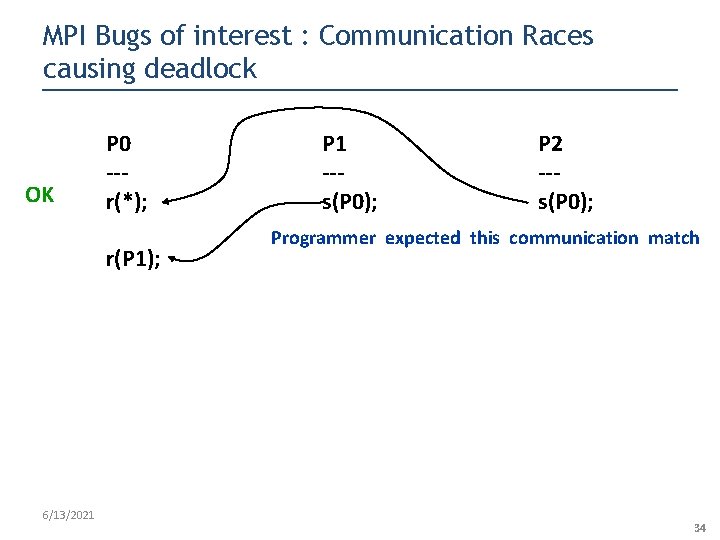


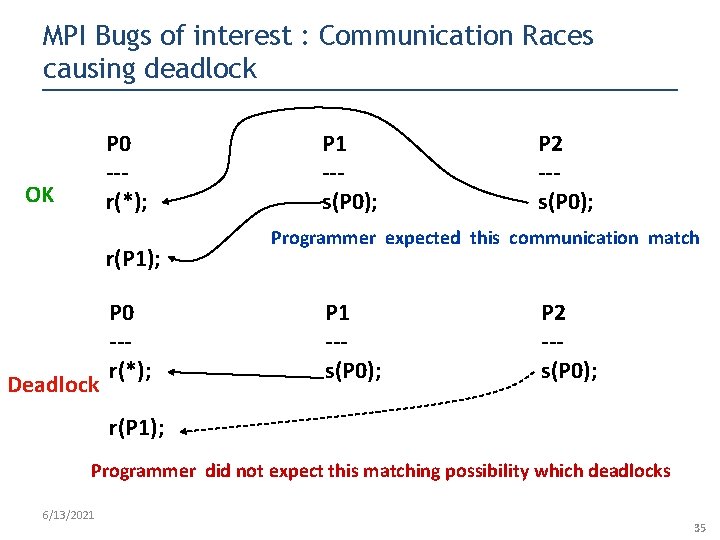

MPI Bugs of interest: Communication Races causing deadlock

- P0: r(*); r(P1);
- P1: s(P0);
- P2: s(P0);

- OK: the programmer expected r(*) to match P2’s send, leaving P1’s send for r(P1)
- Deadlock: the programmer did not expect the other matching possibility, in which r(*) matches P1’s send and r(P1) is left with no matching send
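The two matching possibilities above can be enumerated mechanically. The following is a minimal sketch, not ISP's implementation: it models only the receiver's blocking receives and a count of pending sends per rank, and it tries every sender a wildcard could bind to. The names `ANY` and `count_deadlocks` are hypothetical, introduced only for this illustration.

```c
#include <assert.h>
#include <stddef.h>

#define ANY (-1)   /* hypothetical stand-in for a wildcard source, i.e. r(*) */

/* Count the wildcard match choices that end in deadlock.
 * recv_src[i] is the source named by the receiver's i-th blocking receive
 * (ANY for a wildcard); sends_left[p] is how many sends rank p still has
 * pending toward the receiver. sends_left is restored before returning. */
int count_deadlocks(const int *recv_src, size_t nrecv,
                    int *sends_left, int nprocs)
{
    assert(recv_src != NULL && sends_left != NULL && nprocs > 0);
    if (nrecv == 0)
        return 0;                      /* every receive was matched */

    int src = recv_src[0];
    if (src != ANY) {
        if (sends_left[src] == 0)
            return 1;                  /* this receive can never complete */
        sends_left[src]--;
        int d = count_deadlocks(recv_src + 1, nrecv - 1, sends_left, nprocs);
        sends_left[src]++;             /* backtrack */
        return d;
    }

    int deadlocks = 0, candidates = 0;
    for (int p = 0; p < nprocs; p++) {
        if (sends_left[p] == 0)
            continue;
        candidates++;
        sends_left[p]--;               /* explore: wildcard bound to rank p */
        deadlocks += count_deadlocks(recv_src + 1, nrecv - 1,
                                     sends_left, nprocs);
        sends_left[p]++;               /* backtrack */
    }
    return candidates ? deadlocks : 1; /* a wildcard with no sender also hangs */
}
```

For the example above, the receives are `{ANY, 1}` and the pending sends are `{0, 1, 1}` for P0, P1, P2; one of the two wildcard bindings deadlocks. The model ignores sends that remain unmatched, which is a simplification.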

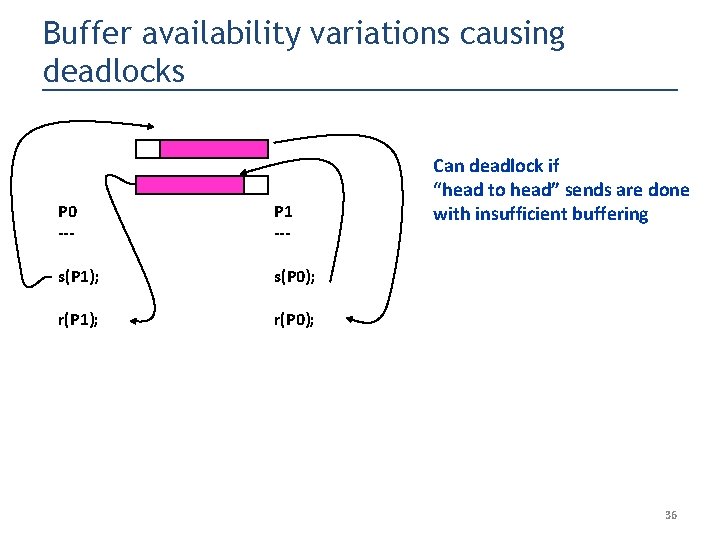

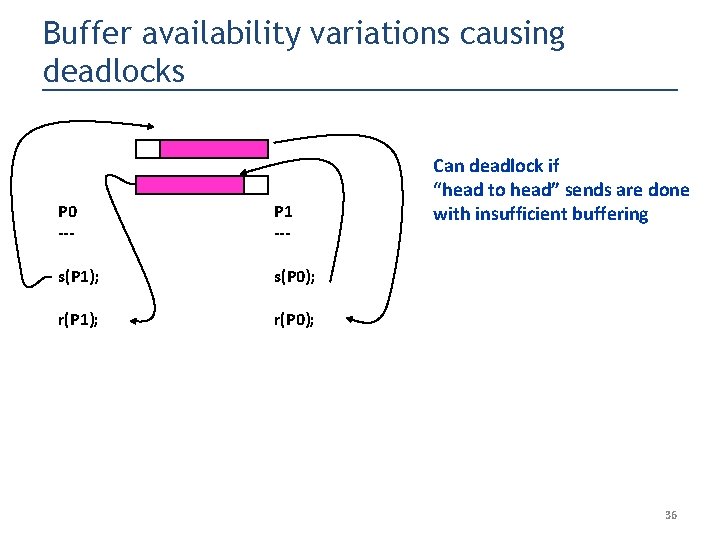

Buffer availability variations causing deadlocks

- P0: s(P1); r(P1);
- P1: s(P0); r(P0);

Can deadlock if “head to head” sends are done with insufficient buffering
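To make the dependence on buffering concrete, here is a small sketch that simulates two processes with blocking sends and receives under a per-channel buffer capacity. It is an illustrative model under stated assumptions, not how any MPI runtime is implemented: a send completes either by rendezvous with a posted matching receive or by occupying a free buffer slot. All type and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

enum op_kind { OP_SEND, OP_RECV };
struct op { enum op_kind kind; int peer; };

#define NPROCS 2

/* Returns true if the program deadlocks when each channel can buffer at
 * most `capacity` messages (capacity 0 models purely synchronous sends). */
bool deadlocks(const struct op *prog[NPROCS], const size_t len[NPROCS],
               int capacity)
{
    assert(capacity >= 0);
    size_t pc[NPROCS] = {0};              /* next operation of each process */
    int buffered[NPROCS][NPROCS] = {{0}}; /* messages in flight, src -> dst */
    bool progress = true;

    while (progress) {
        progress = false;
        for (int p = 0; p < NPROCS; p++) {
            if (pc[p] >= len[p])
                continue;                 /* process p has finished */
            struct op cur = prog[p][pc[p]];
            int q = cur.peer;
            if (cur.kind == OP_SEND) {
                bool peer_receiving = pc[q] < len[q]
                    && prog[q][pc[q]].kind == OP_RECV
                    && prog[q][pc[q]].peer == p
                    && buffered[p][q] == 0;   /* keep message order */
                if (peer_receiving) {         /* rendezvous: both advance */
                    pc[p]++; pc[q]++; progress = true;
                } else if (buffered[p][q] < capacity) {
                    buffered[p][q]++; pc[p]++; progress = true;
                }
            } else if (buffered[q][p] > 0) {  /* receive a buffered message */
                buffered[q][p]--; pc[p]++; progress = true;
            }
        }
    }
    for (int p = 0; p < NPROCS; p++)
        if (pc[p] < len[p])
            return true;                  /* someone is stuck forever */
    return false;
}

/* The "head to head" pattern: both processes send first, then receive. */
bool head_to_head_deadlocks(int capacity)
{
    const struct op p0[] = { { OP_SEND, 1 }, { OP_RECV, 1 } };
    const struct op p1[] = { { OP_SEND, 0 }, { OP_RECV, 0 } };
    const struct op *prog[NPROCS] = { p0, p1 };
    const size_t len[NPROCS] = { 2, 2 };
    return deadlocks(prog, len, capacity);
}
```

Calling `head_to_head_deadlocks(0)` reports a deadlock, while a capacity of 1 lets both sends complete. This is why the same program can pass on one MPI platform and hang on another.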

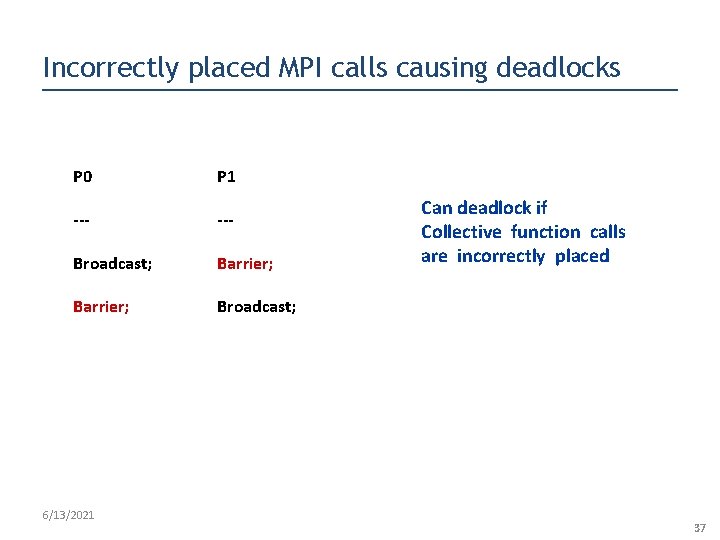

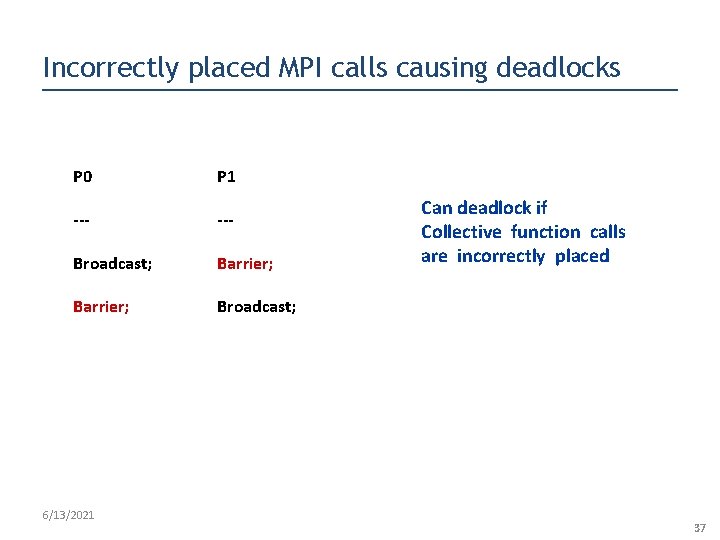

Incorrectly placed MPI calls causing deadlocks. P0, P1: Broadcast; Barrier; Broadcast; Can deadlock if collective function calls are incorrectly placed

Resource Leak Pattern… P0: some_allocation_operation, followed by a FORGOTTEN DEALLOCATION!

Assertion Violations: assert statements planted in sequential code

On the exponential nature of dynamic verification: many things tend to explode with exponential complexity during dynamic verification… we must combat these!

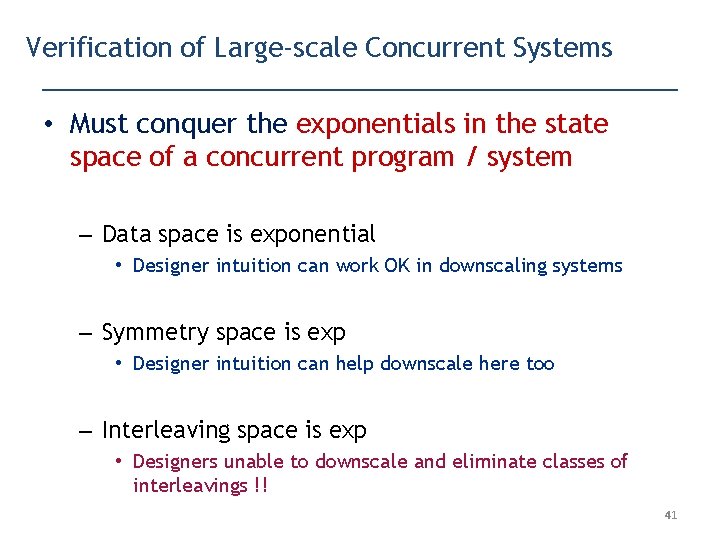

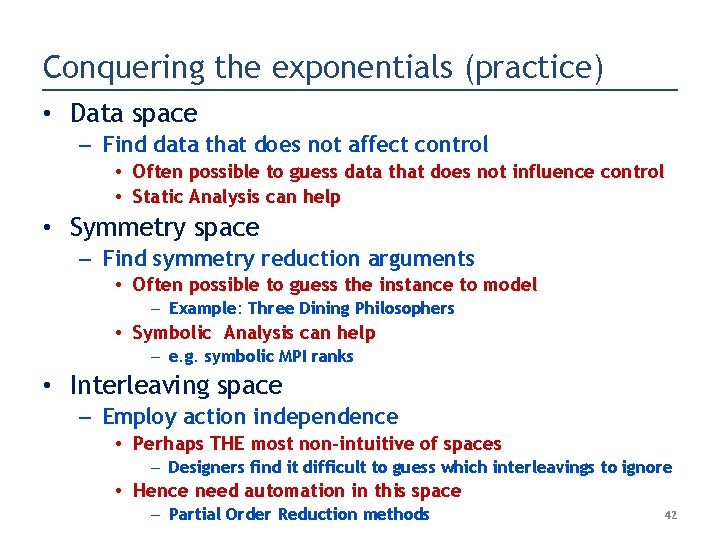

Verification of Large-scale Concurrent Systems

- Must conquer the exponentials in the state space of a concurrent program / system
  - Data space is exponential
    - Designer intuition can work OK in downscaling systems
  - Symmetry space is exponential
    - Designer intuition can help downscale here too
  - Interleaving space is exponential
    - Designers are unable to downscale and eliminate classes of interleavings!

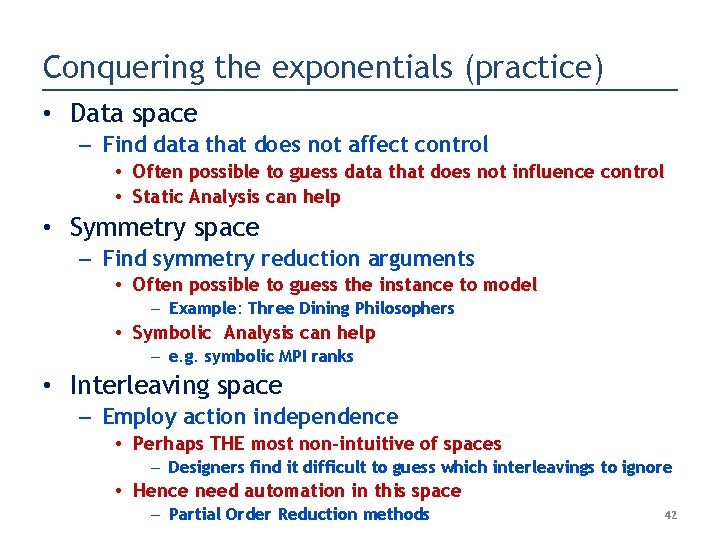

Conquering the exponentials (practice)

- Data space: find data that does not affect control
  - Often possible to guess data that does not influence control
  - Static analysis can help
- Symmetry space: find symmetry reduction arguments
  - Often possible to guess the instance to model (example: three Dining Philosophers)
  - Symbolic analysis can help, e.g. symbolic MPI ranks
- Interleaving space: employ action independence
  - Perhaps THE most non-intuitive of spaces: designers find it difficult to guess which interleavings to ignore
  - Hence need automation in this space: Partial Order Reduction methods

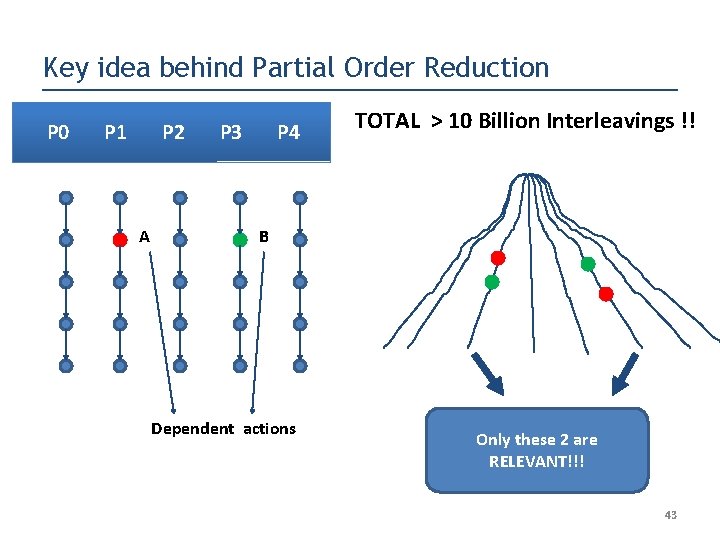

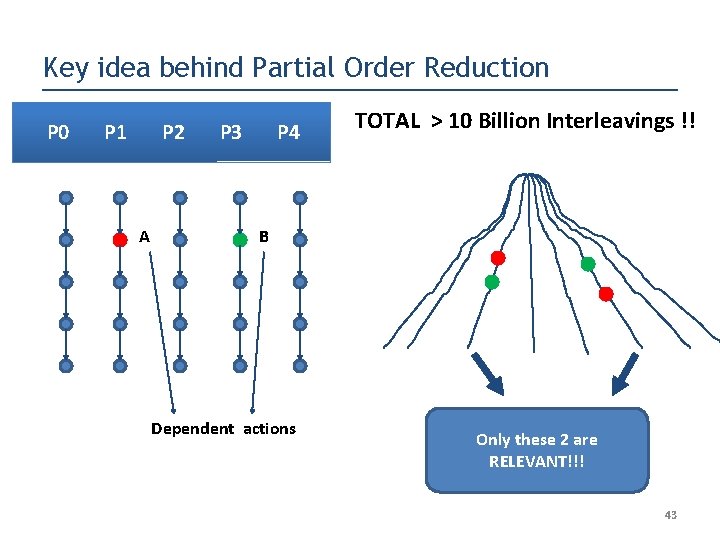

Key idea behind Partial Order Reduction (figure: processes P0 to P4, with dependent actions A and B): in TOTAL there are more than 10 billion interleavings, but only the 2 that order the dependent actions A and B differently are RELEVANT!

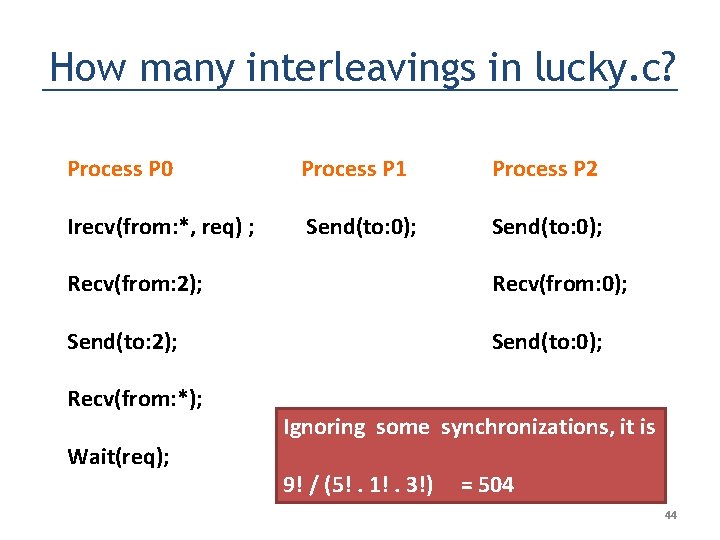

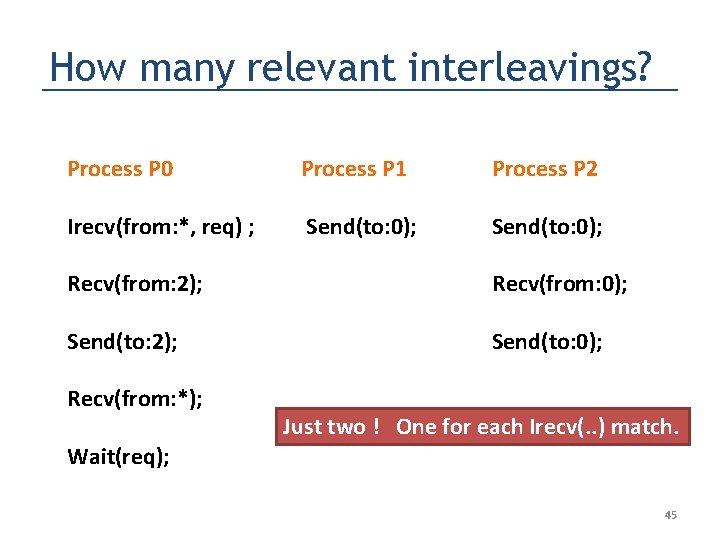

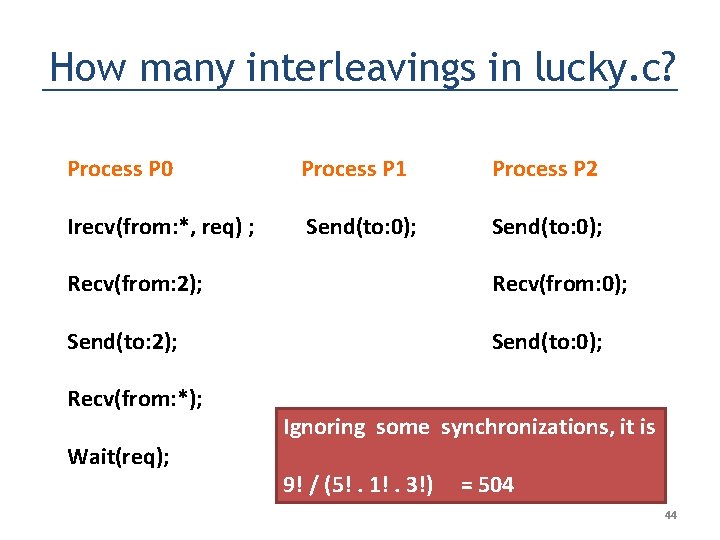

How many interleavings in lucky.c?

- Process P0: Irecv(from: *, req); Recv(from: 2); Send(to: 2); Recv(from: *); Wait(req);
- Process P1: Send(to: 0);
- Process P2: Send(to: 0); Recv(from: 0); Send(to: 0);

Ignoring some synchronizations, it is 9! / (5! · 1! · 3!) = 504
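The count above is a multinomial coefficient: 9 operations in total, with the 5, 1, and 3 operations of the individual processes kept in program order. A minimal sketch of the computation follows; the function names are hypothetical, and the product-of-binomials form is chosen only to keep intermediate values small.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Binomial coefficient C(n, k). After step i the running value equals
 * C(n - k + i, i), so every division is exact. */
uint64_t choose(unsigned n, unsigned k)
{
    assert(k <= n);
    uint64_t result = 1;
    for (unsigned i = 1; i <= k; i++)
        result = result * (n - k + i) / i;
    return result;
}

/* Number of interleavings of independent processes, where process p
 * contributes ops[p] operations that must stay in program order:
 * (sum of ops)! / (ops[0]! * ops[1]! * ...). */
uint64_t count_interleavings(const unsigned *ops, size_t nprocs)
{
    assert(ops != NULL);
    uint64_t total = 1;
    unsigned placed = 0;
    for (size_t p = 0; p < nprocs; p++) {
        placed += ops[p];
        total *= choose(placed, ops[p]);  /* choose slots for process p */
    }
    return total;
}
```

With `ops = {5, 1, 3}` the function returns 504, matching the figure on the slide. As the slide notes, this ignores synchronization, so it is an upper bound on what can actually be scheduled.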

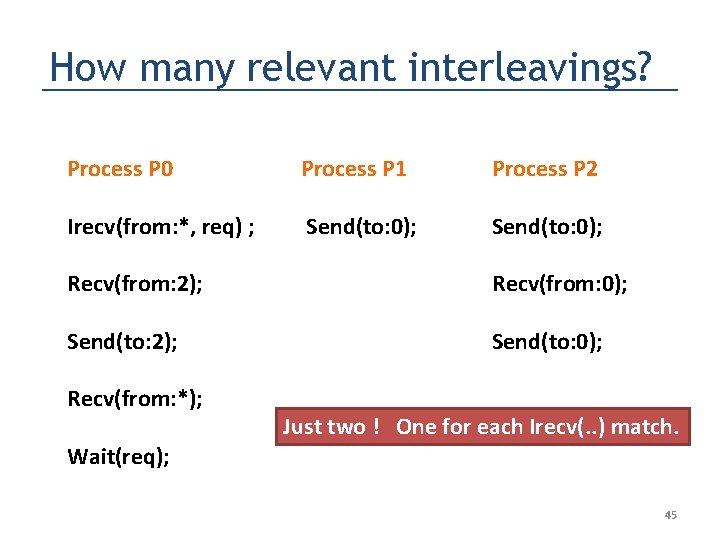

How many relevant interleavings in lucky.c? Just two! One for each Irecv(..) match.

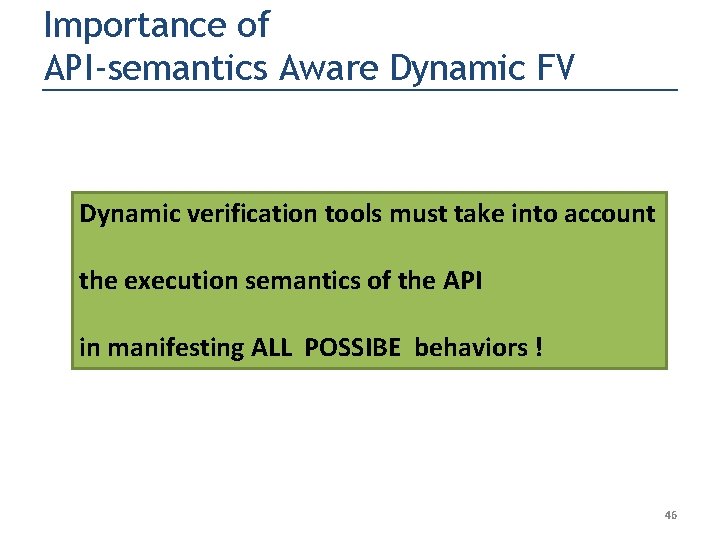

Importance of API-semantics Aware Dynamic FV: dynamic verification tools must take into account the execution semantics of the API in manifesting ALL POSSIBLE behaviors!

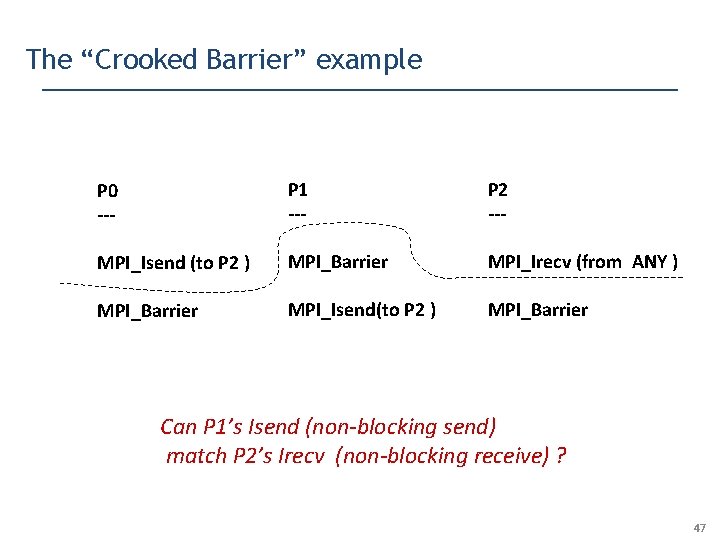

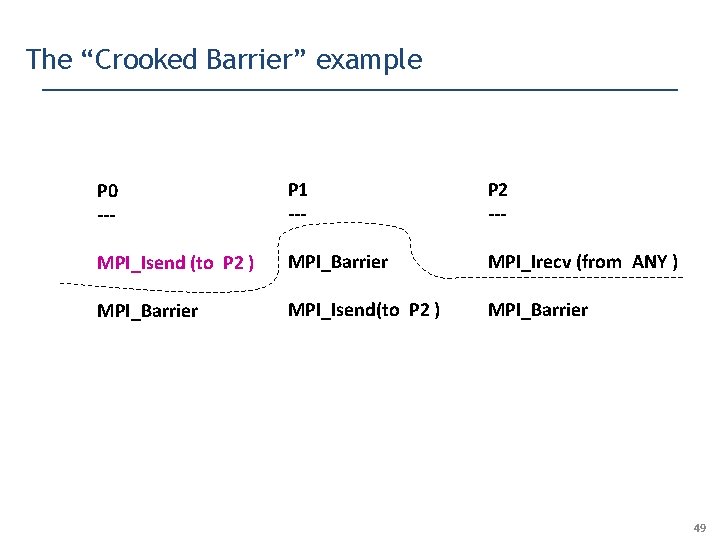

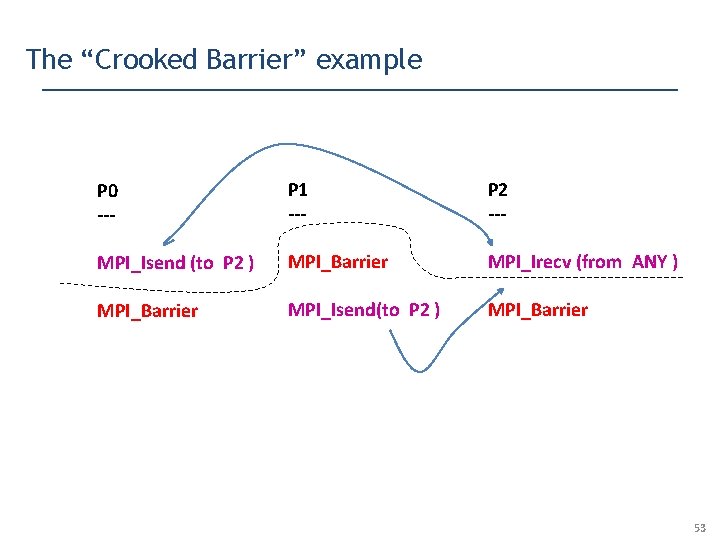

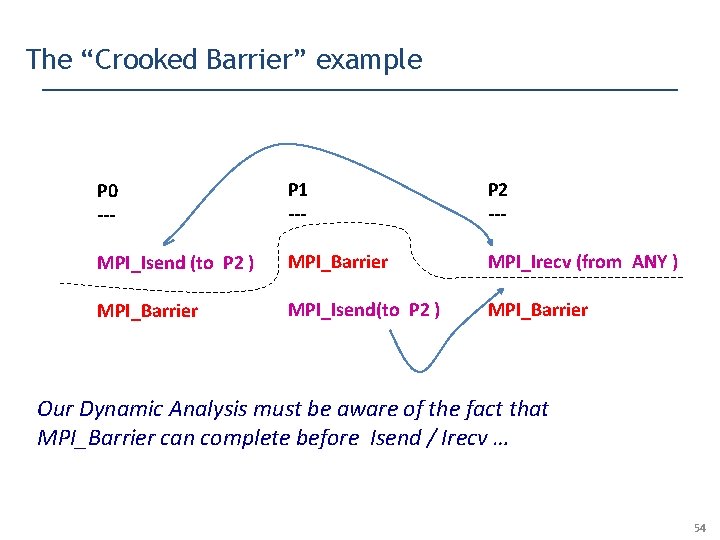

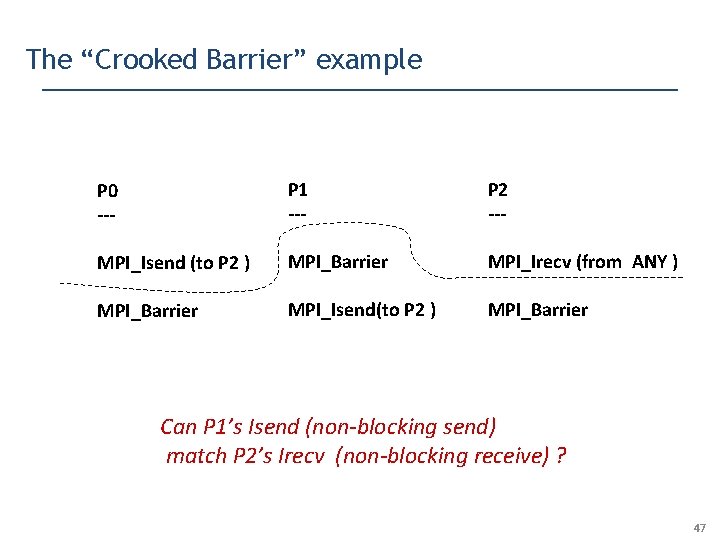

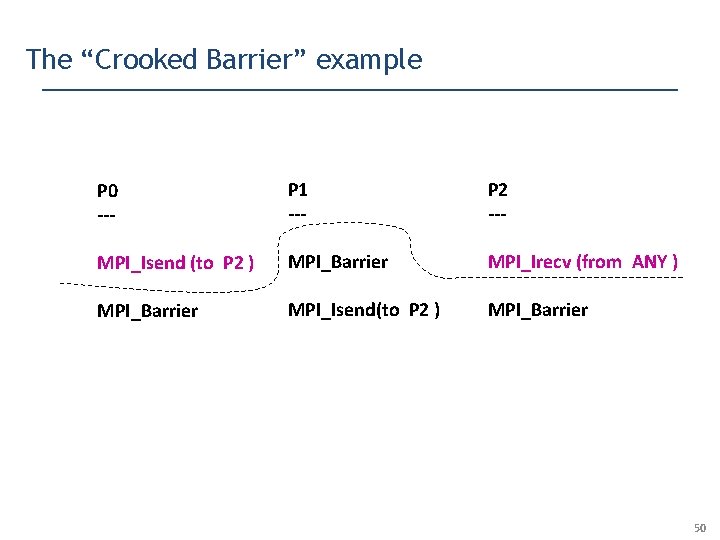

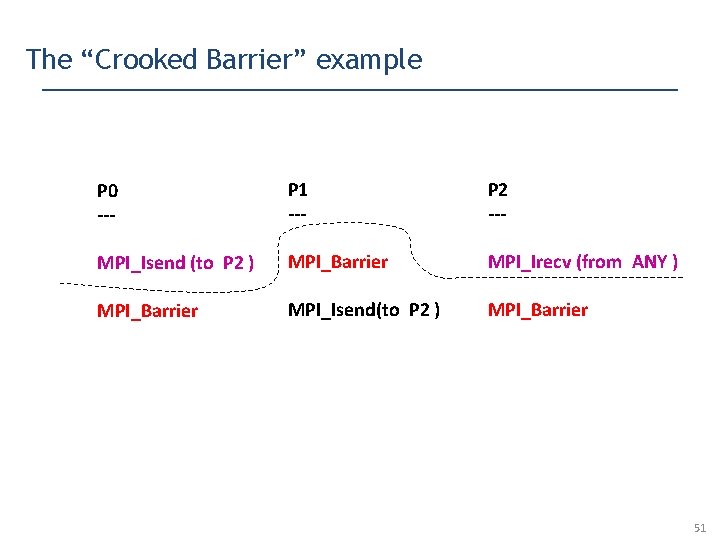

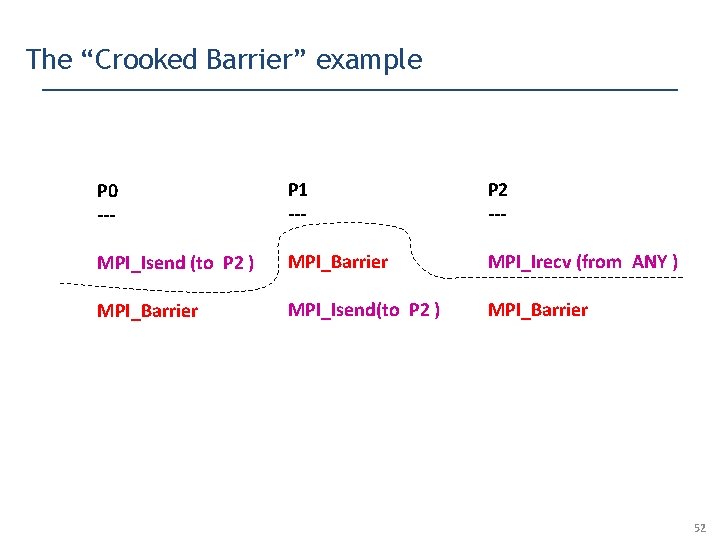

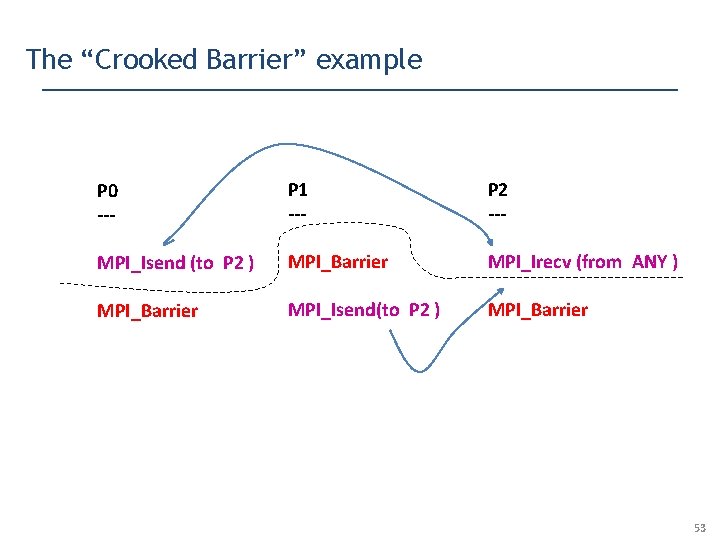

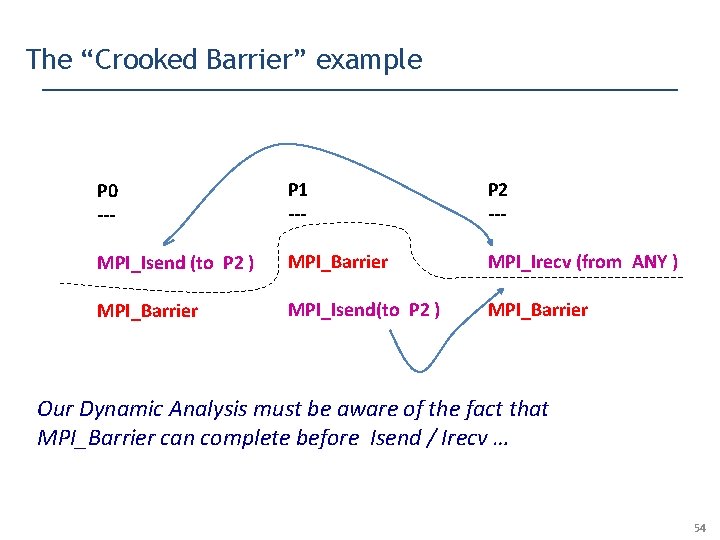

The “Crooked Barrier” example

- P0: MPI_Isend(to P2); MPI_Barrier
- P1: MPI_Barrier; MPI_Isend(to P2)
- P2: MPI_Irecv(from ANY); MPI_Barrier

Can P1’s Isend (non-blocking send) match P2’s Irecv (non-blocking receive)?

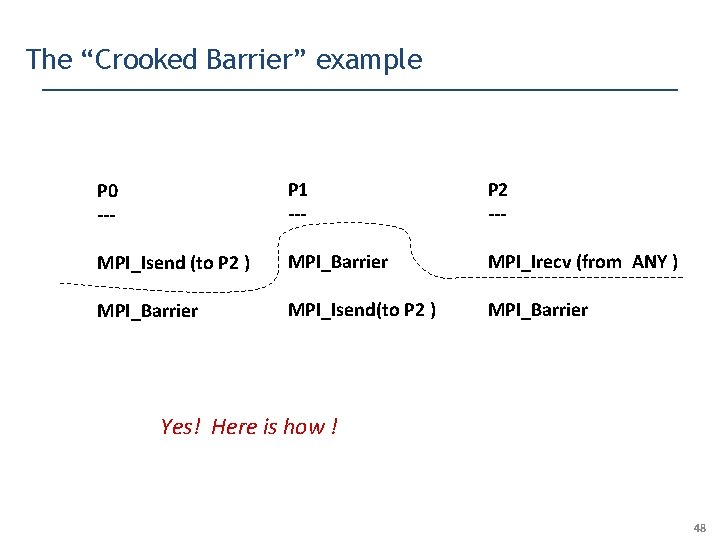

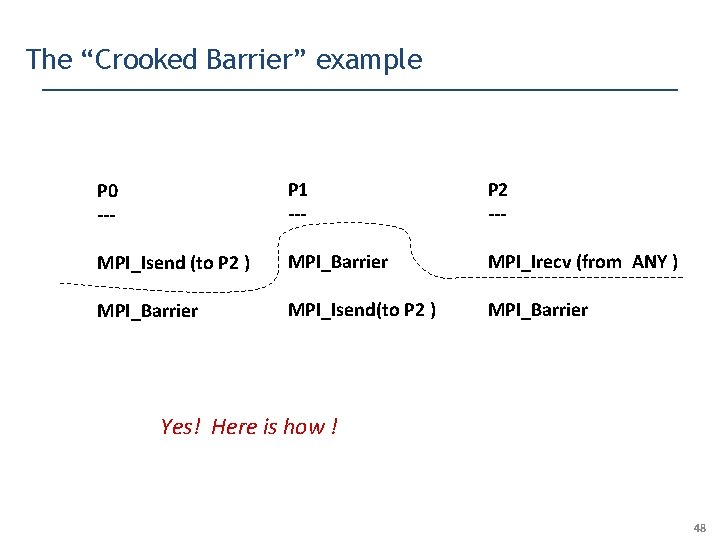

The “Crooked Barrier” example: Yes! Here is how!

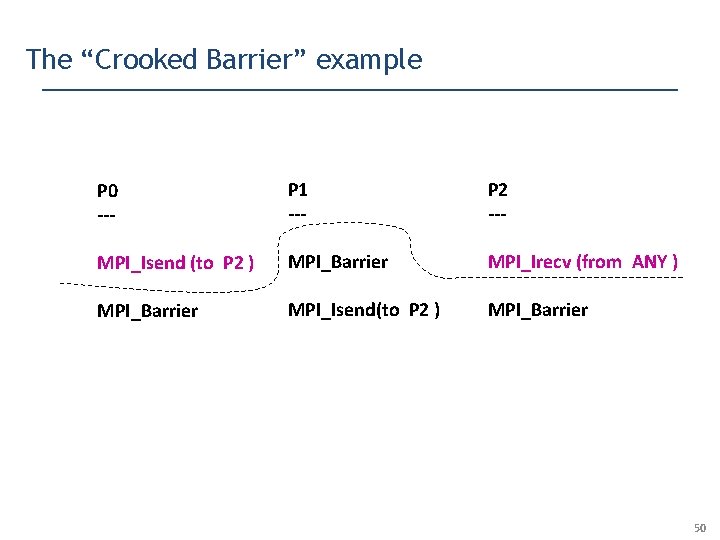

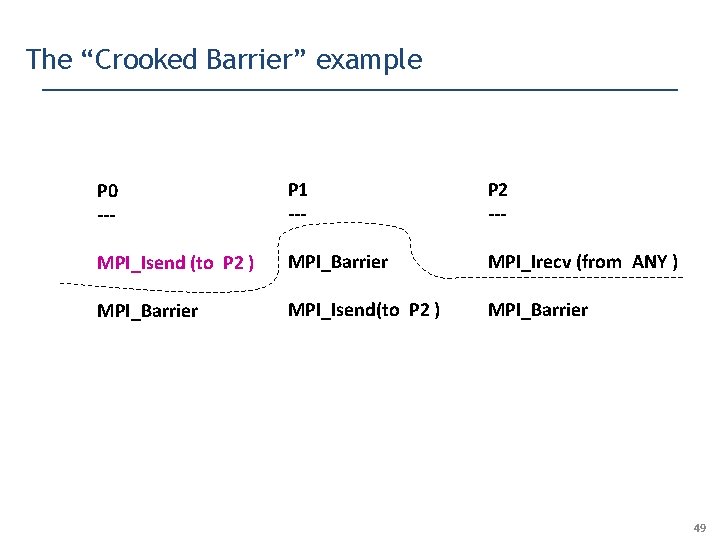


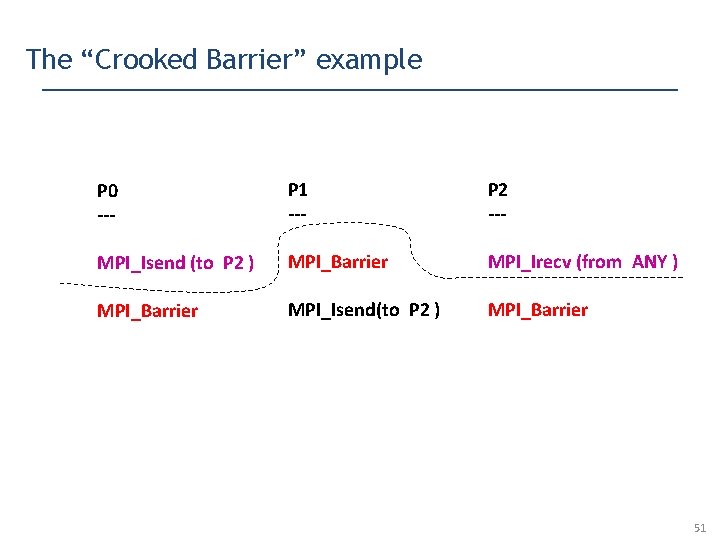


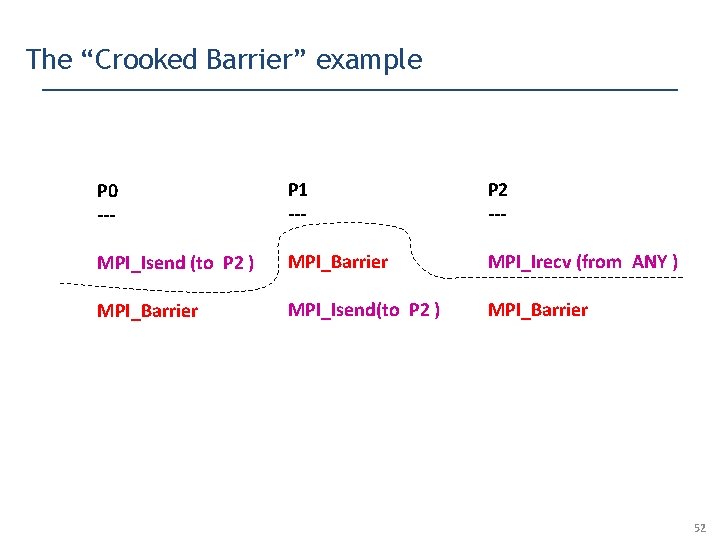


The “Crooked Barrier” example: our dynamic analysis must be aware of the fact that MPI_Barrier can complete before Isend / Irecv …

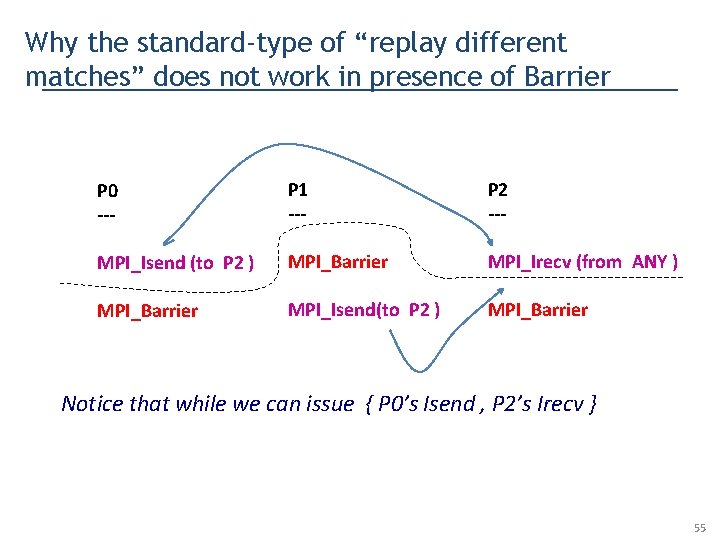

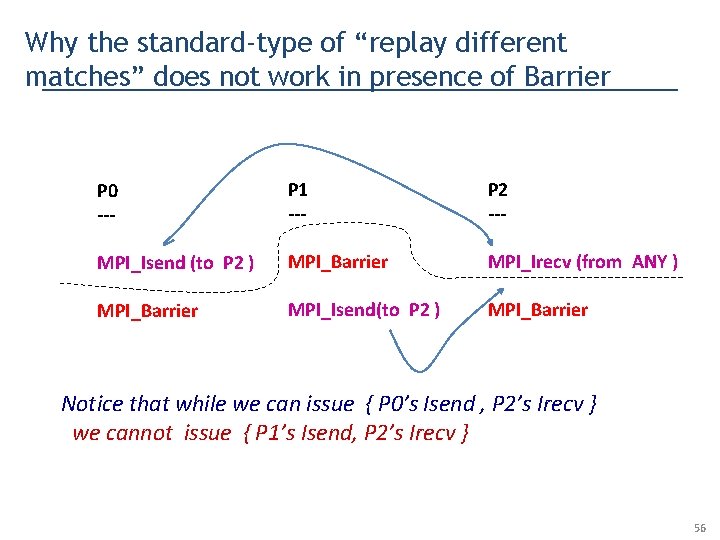

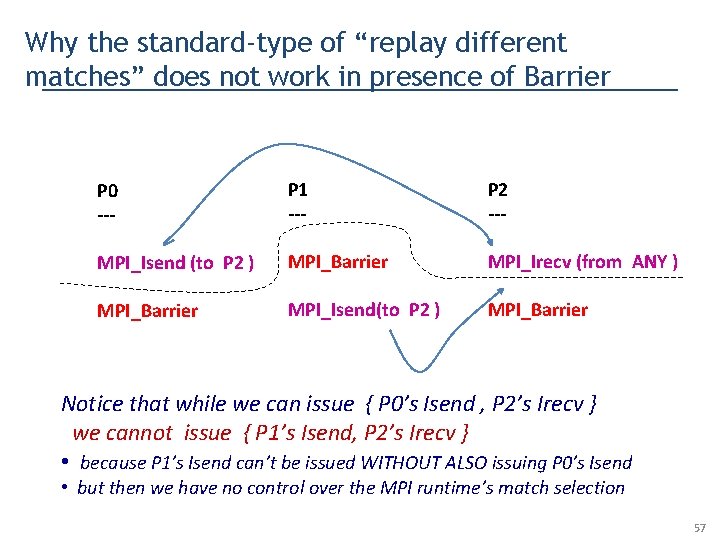

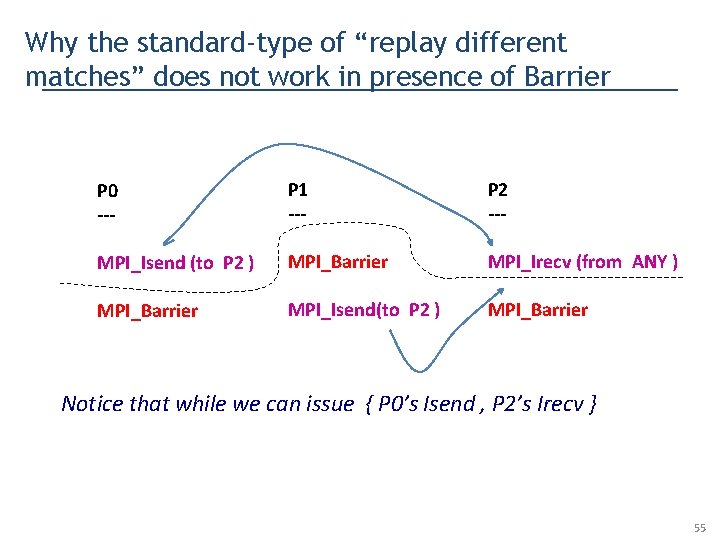

Why the standard-type of “replay different matches” does not work in presence of Barrier P 0 --- P 1 --- P 2 --- MPI_Isend (to P 2 ) MPI_Barrier MPI_Irecv (from ANY ) MPI_Barrier MPI_Isend(to P 2 ) MPI_Barrier Notice that while we can issue { P 0’s Isend , P 2’s Irecv } 55

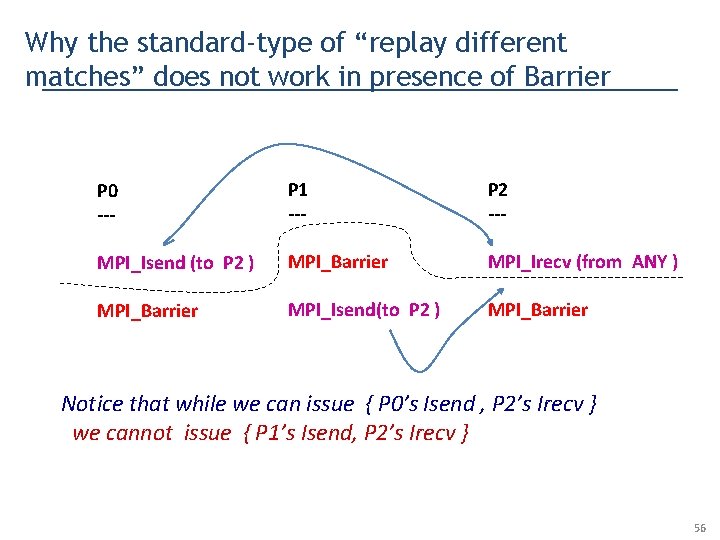

Why the standard-type of “replay different matches” does not work in presence of Barrier P 0 --- P 1 --- P 2 --- MPI_Isend (to P 2 ) MPI_Barrier MPI_Irecv (from ANY ) MPI_Barrier MPI_Isend(to P 2 ) MPI_Barrier Notice that while we can issue { P 0’s Isend , P 2’s Irecv } we cannot issue { P 1’s Isend, P 2’s Irecv } 56

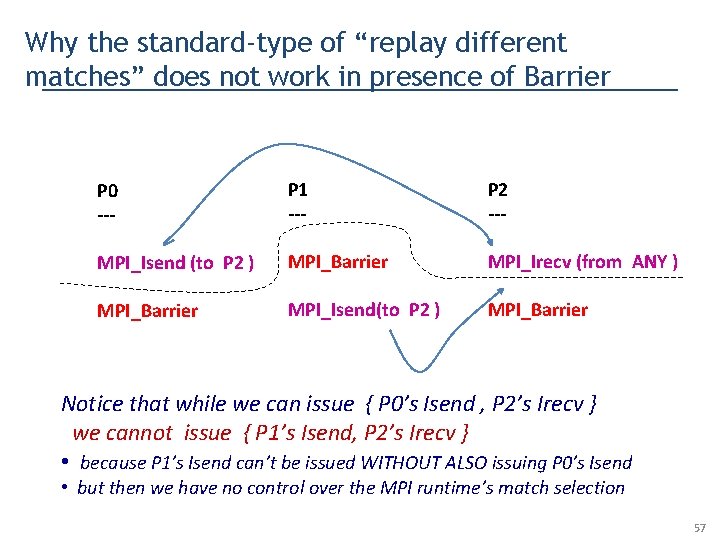

Why the standard type of “replay different matches” does not work in the presence of Barrier

In the “Crooked Barrier” example, notice that while we can issue { P0’s Isend, P2’s Irecv }, we cannot issue { P1’s Isend, P2’s Irecv }

- because P1’s Isend can’t be issued WITHOUT ALSO issuing P0’s Isend
- but then we have no control over the MPI runtime’s match selection

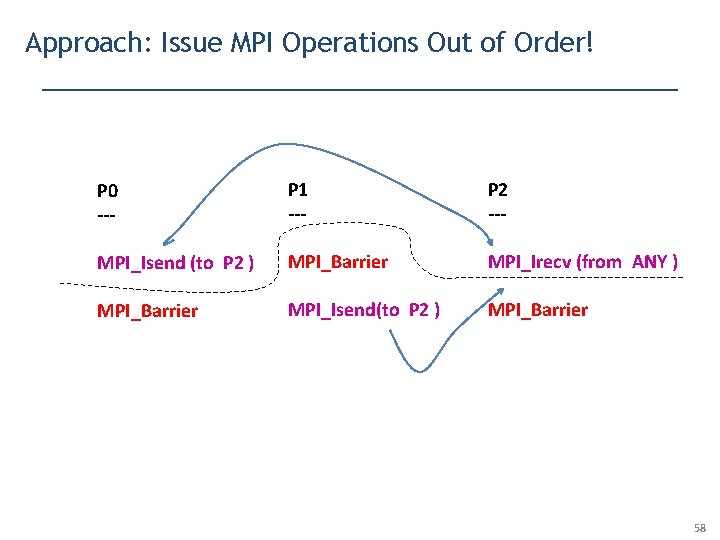

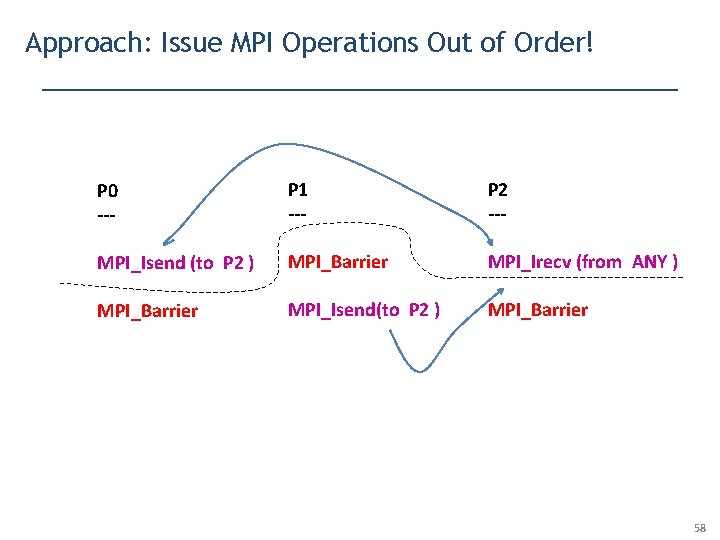

Approach: Issue MPI Operations Out of Order!

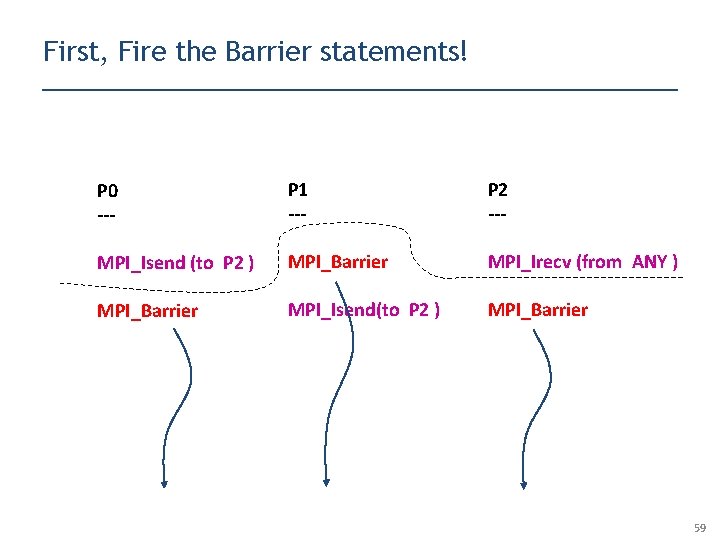

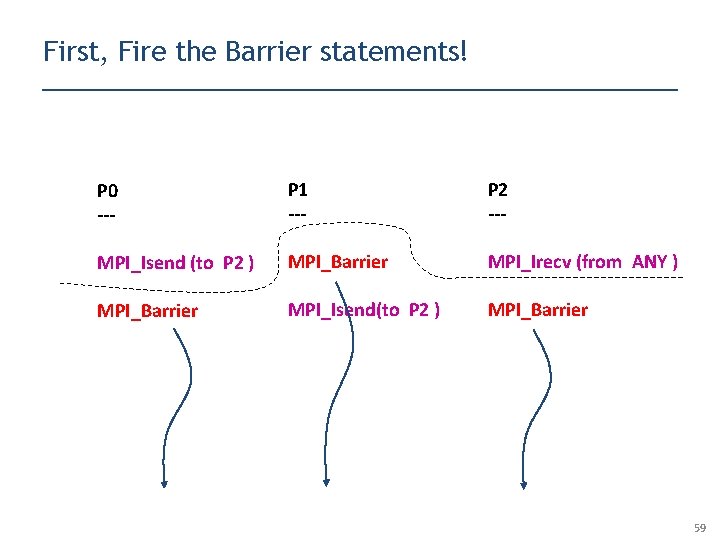

First, fire the Barrier statements!

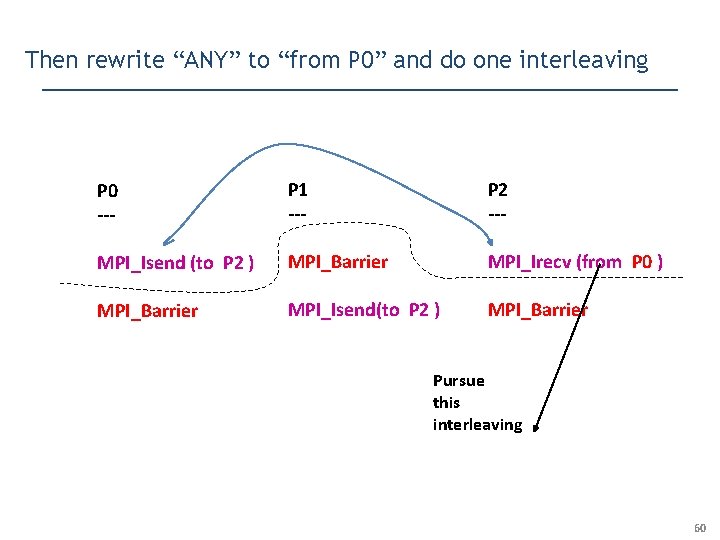

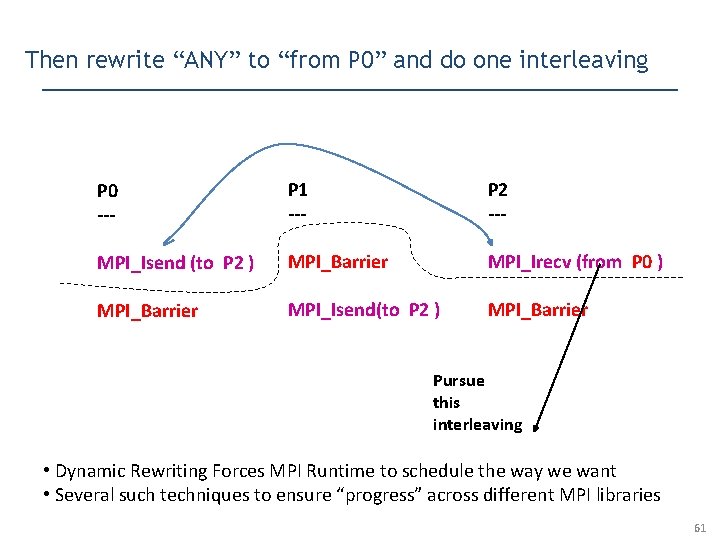

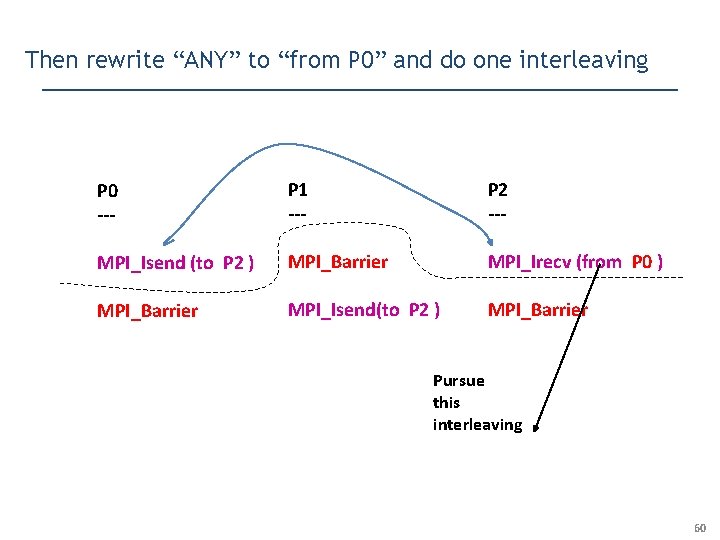


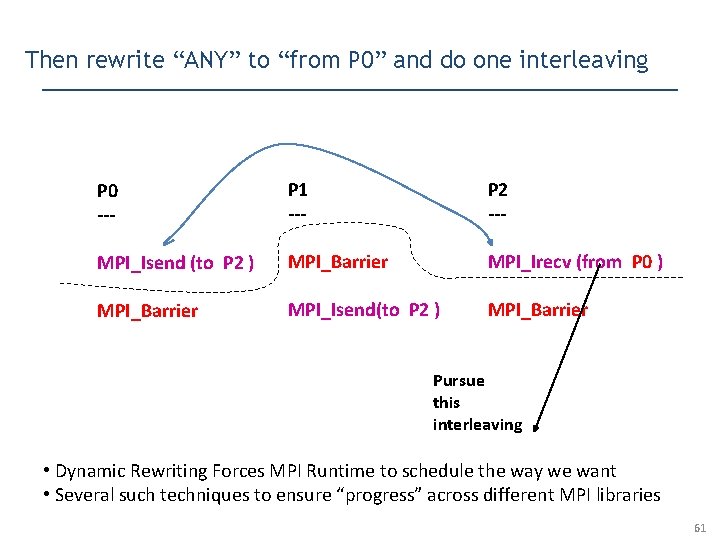

Then rewrite “ANY” to “from P0” and do one interleaving

- P0: MPI_Isend(to P2); MPI_Barrier
- P1: MPI_Barrier; MPI_Isend(to P2)
- P2: MPI_Irecv(from P0); MPI_Barrier

Pursue this interleaving.

- Dynamic rewriting forces the MPI runtime to schedule the way we want
- Several such techniques ensure “progress” across different MPI libraries

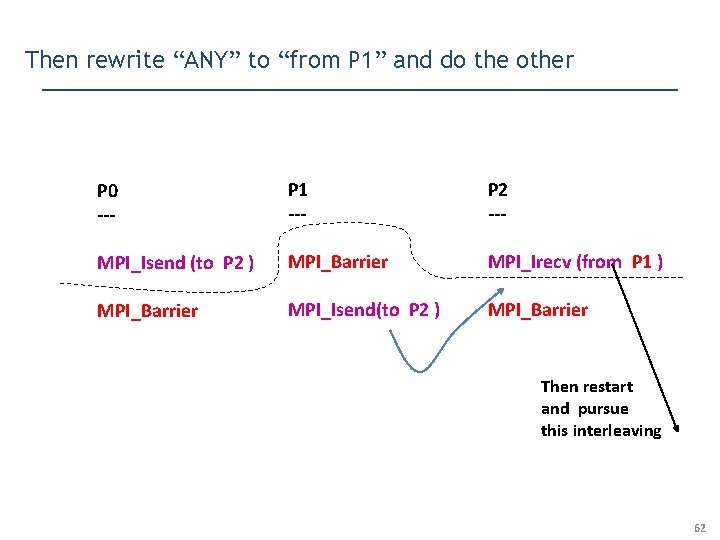

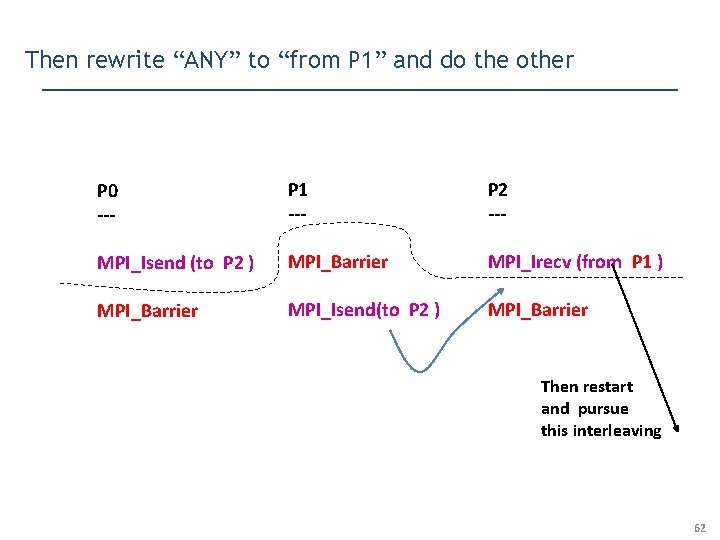

Then rewrite “ANY” to “from P1” and do the other

- P0: MPI_Isend(to P2); MPI_Barrier
- P1: MPI_Barrier; MPI_Isend(to P2)
- P2: MPI_Irecv(from P1); MPI_Barrier

Then restart and pursue this interleaving.
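The rewriting step can be sketched as two small helpers: one collects the senders that a wildcard receive could match once the barriers have been fired, and the other replaces the wildcard with a chosen sender for one replay. This is an illustrative sketch only; `ANY_SOURCE`, `struct pending_send`, `match_set`, and `rewrite_source` are hypothetical names and are not part of ISP or of the MPI API.

```c
#include <assert.h>
#include <stddef.h>

#define ANY_SOURCE (-1)   /* hypothetical stand-in for MPI_ANY_SOURCE */

/* A send that has been collected by the scheduler but not yet issued. */
struct pending_send { int src; int dst; };

/* Collect the ranks whose pending sends target `receiver`; these are the
 * candidates a wildcard receive posted by `receiver` could match.
 * Returns the number of candidates written into `candidates`. */
size_t match_set(const struct pending_send *sends, size_t nsends,
                 int receiver, int *candidates, size_t max)
{
    assert(sends != NULL && candidates != NULL);
    size_t n = 0;
    for (size_t i = 0; i < nsends && n < max; i++)
        if (sends[i].dst == receiver)
            candidates[n++] = sends[i].src;
    return n;
}

/* Rewrite a receive's source for one replay: a wildcard becomes the chosen
 * sender, while a deterministic receive is left unchanged. */
int rewrite_source(int posted_source, int chosen_sender)
{
    return posted_source == ANY_SOURCE ? chosen_sender : posted_source;
}
```

For the “Crooked Barrier” example, the pending sends `{0 -> 2}` and `{1 -> 2}` give P2's wildcard receive the candidates 0 and 1, so one replay is run with the source rewritten to P0 and a second with it rewritten to P1.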

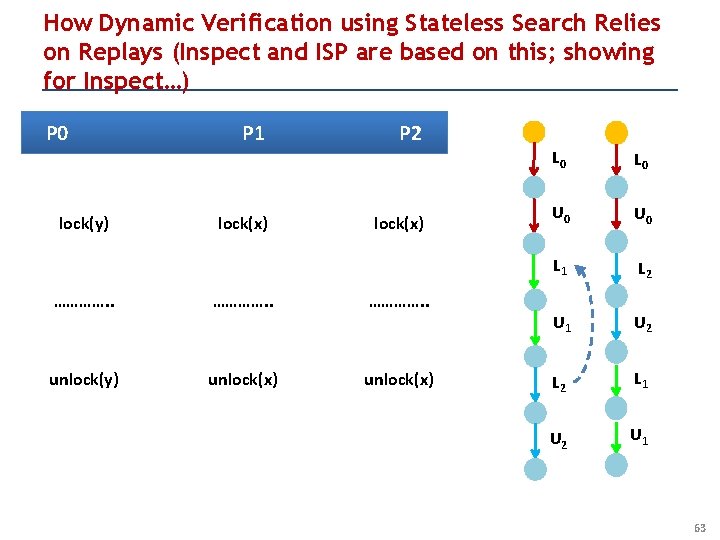

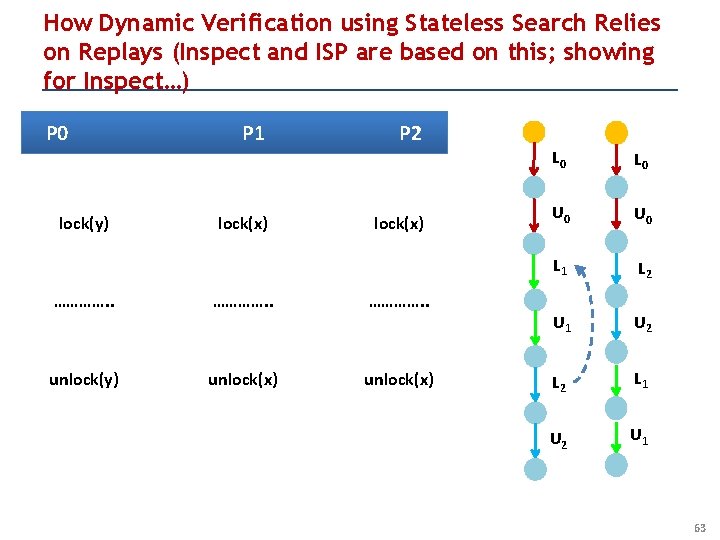

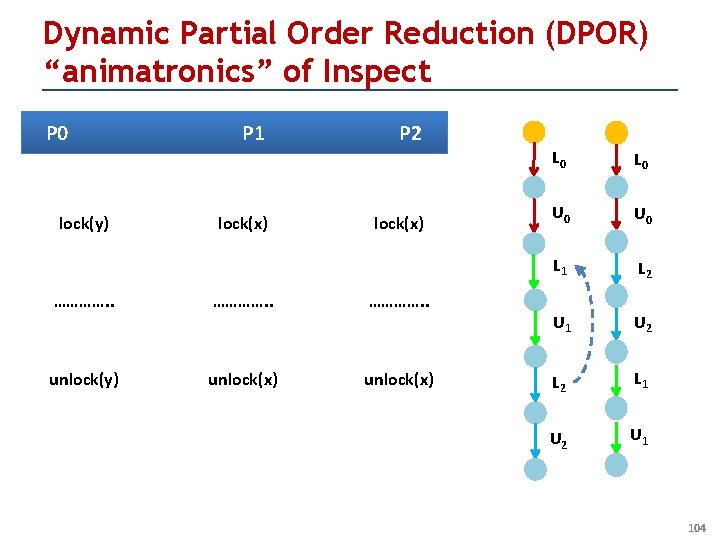

How Dynamic Verification using Stateless Search Relies on Replays (Inspect and ISP are based on this; showing for Inspect…)

- P0: lock(y); …; unlock(y)
- P1: lock(x); …; unlock(x)
- P2: lock(x); …

(Figure: search tree over the lock and unlock events L0, U0, L1, U1, L2, U2, with alternative orderings explored by replay.)

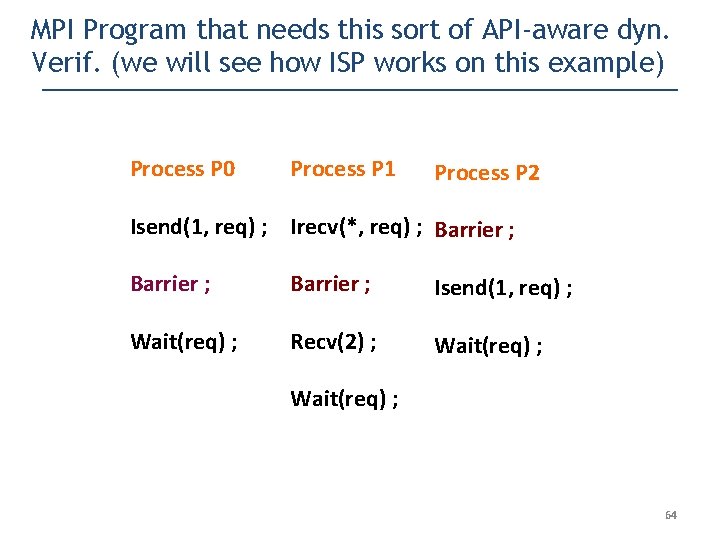

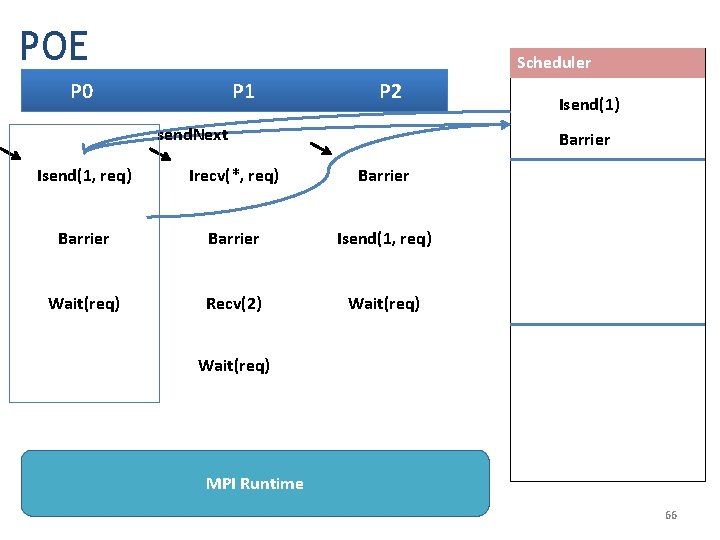

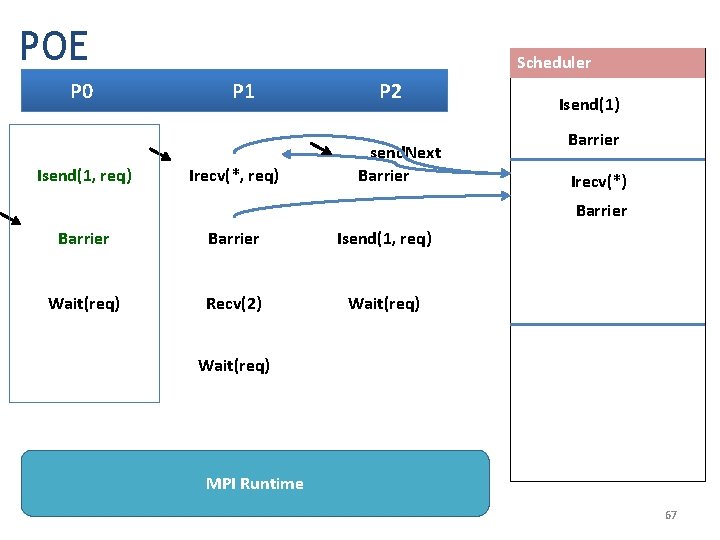

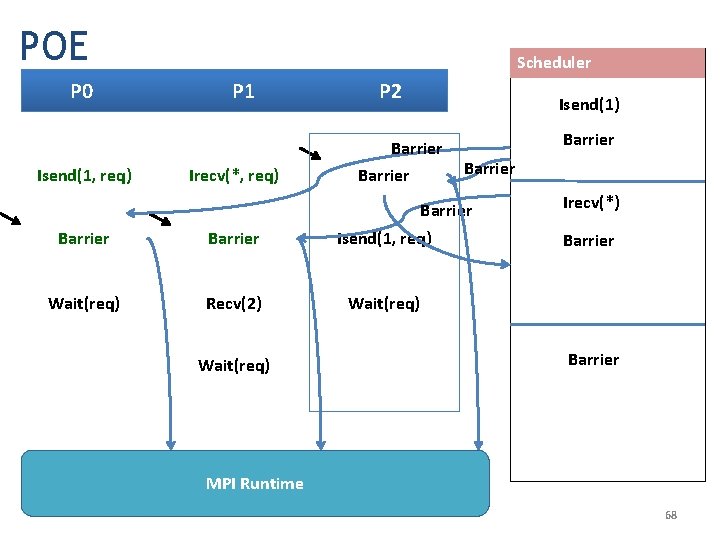

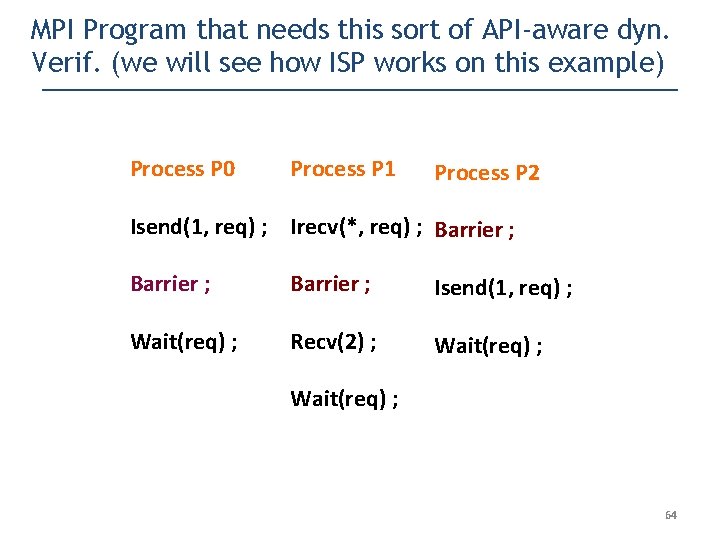

MPI program that needs this sort of API-aware dynamic verification (we will see how ISP works on this example)

- Process P0: Isend(1, req); Barrier; Wait(req);
- Process P1: Irecv(*, req); Barrier; Recv(2); Wait(req);
- Process P2: Barrier; Isend(1, req); Wait(req);

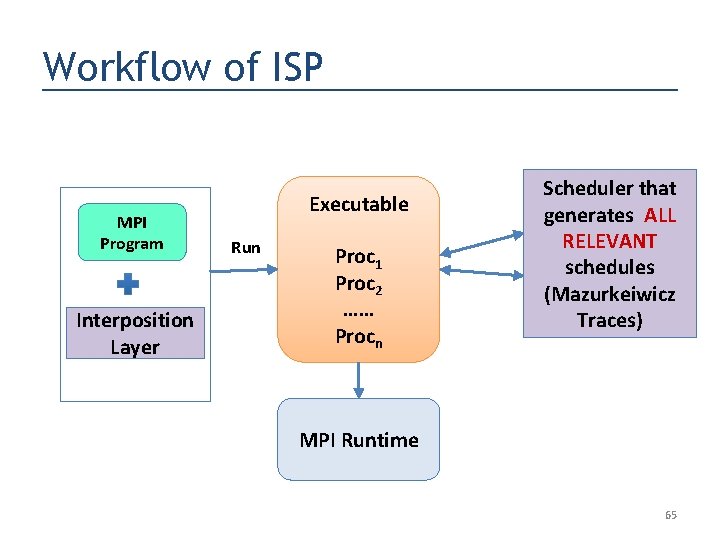

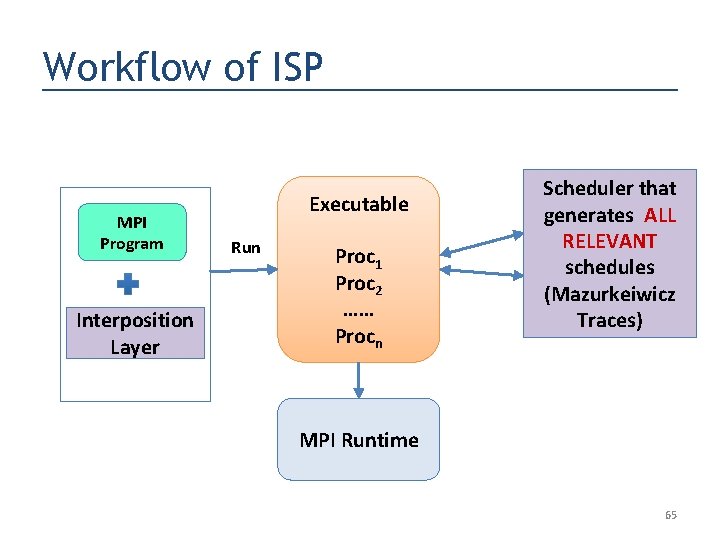

Workflow of ISP (figure): the MPI program is compiled with the interposition layer into an executable; running it starts Proc1, Proc2, …, Procn, which interact with a scheduler that generates ALL RELEVANT schedules (Mazurkiewicz traces) and with the MPI runtime.

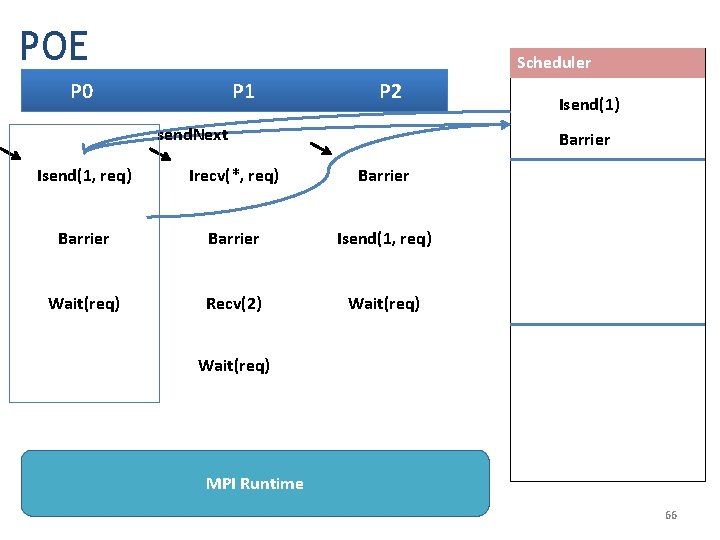

POE (figure, frame 1): the scheduler sits between processes P0, P1, P2 and the MPI runtime; it has collected Isend(1) from P0 and replies sendNext.

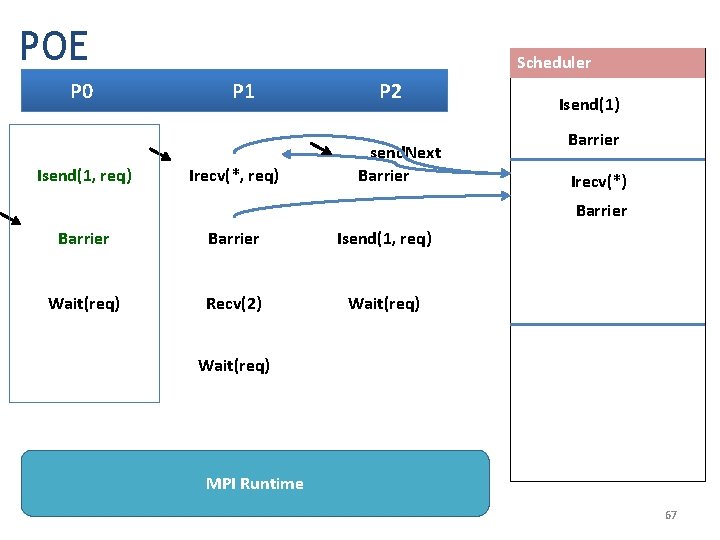

POE (figure, frame 2): the scheduler has collected Isend(1) and Barrier from P0 and Irecv(*) and Barrier from P1, and replies sendNext to P2.

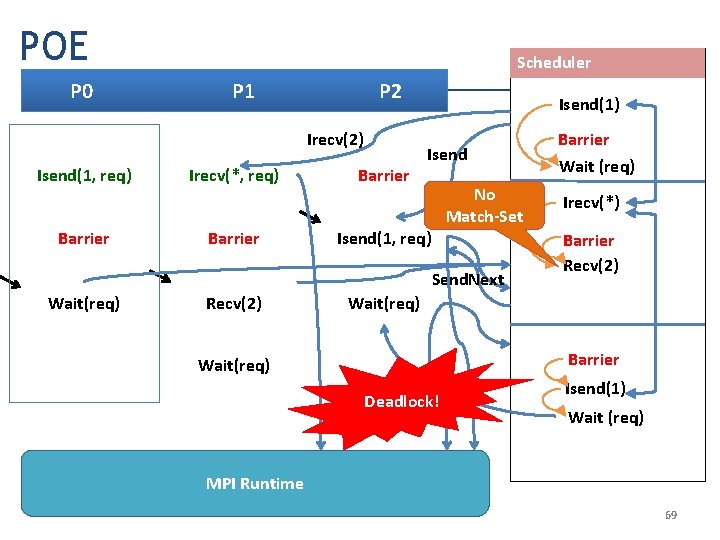

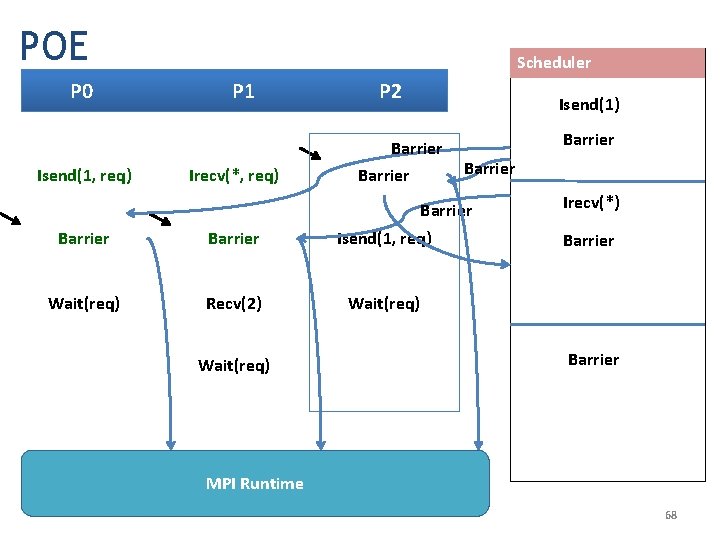

POE (figure, frame 3): the scheduler now also holds P2’s Barrier, so the Barrier operations of all three processes are available to be issued to the MPI runtime.

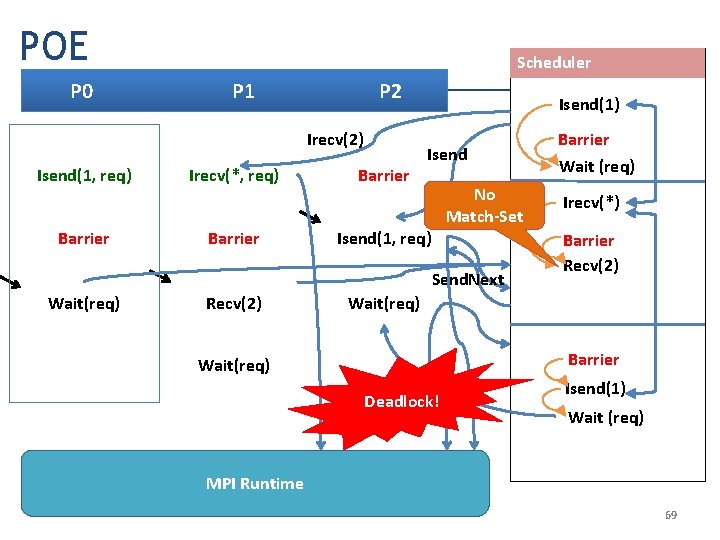

POE (figure, final frame): with Irecv(*) rewritten to Irecv(2), P2’s Isend(1) is consumed, and P1’s later Recv(2) has no match-set. Deadlock!

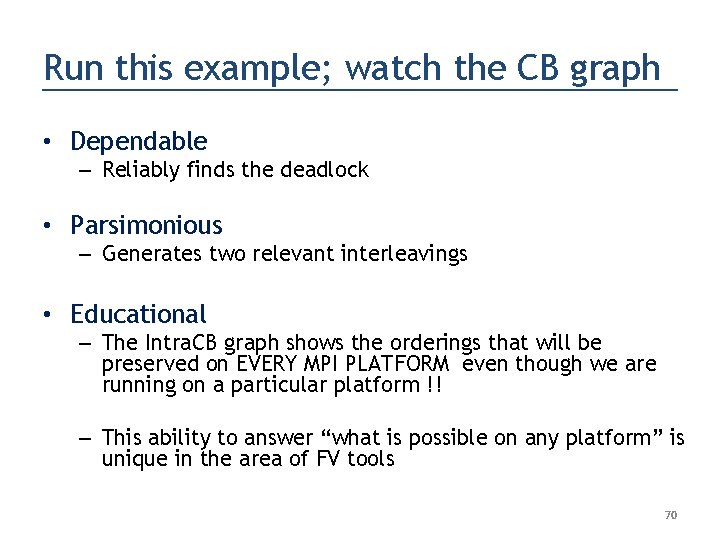

Run this example; watch the CB graph

- Dependable: reliably finds the deadlock
- Parsimonious: generates two relevant interleavings
- Educational: the IntraCB graph shows the orderings that will be preserved on EVERY MPI PLATFORM even though we are running on a particular platform!
  - This ability to answer “what is possible on any platform” is unique in the area of FV tools

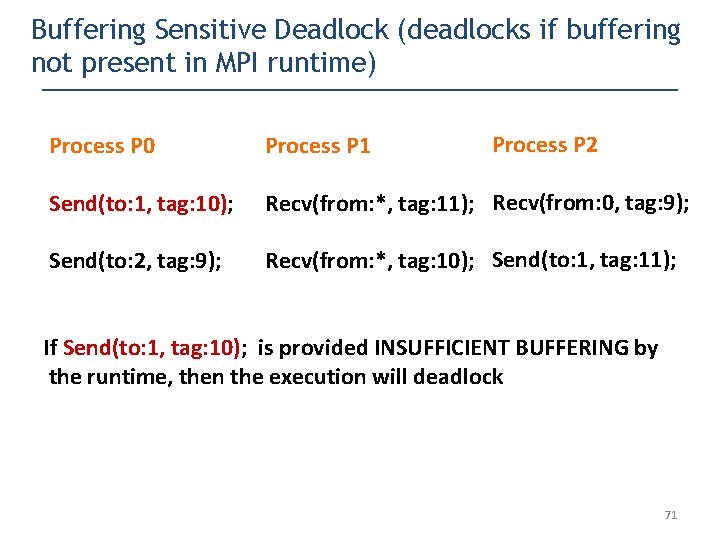

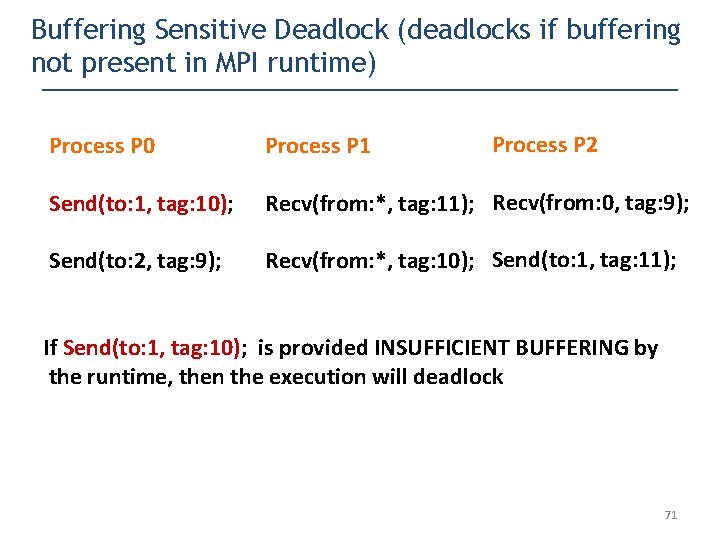

Buffering Sensitive Deadlock (deadlocks if buffering is not present in the MPI runtime)

- Process P0: Send(to: 1, tag: 10); Send(to: 2, tag: 9);
- Process P1: Recv(from: *, tag: 11); Recv(from: *, tag: 10);
- Process P2: Recv(from: 0, tag: 9); Send(to: 1, tag: 11);

If Send(to: 1, tag: 10) is provided INSUFFICIENT BUFFERING by the runtime, then the execution will deadlock

Visual Studio and Java GUI (available with ISP distribution)

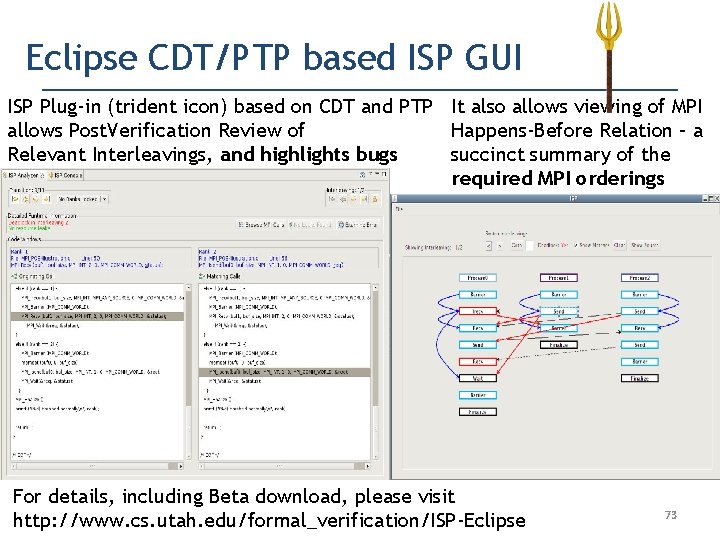

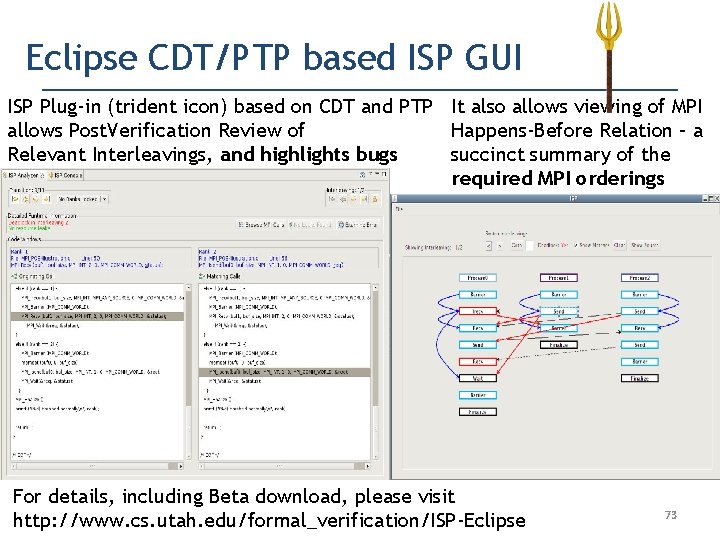

Eclipse CDT/PTP based ISP GUI

- The ISP plug-in (trident icon), based on CDT and PTP, allows post-verification review of relevant interleavings and highlights bugs
- It also allows viewing of the MPI happens-before relation, a succinct summary of the required MPI orderings

For details, including Beta download, please visit http://www.cs.utah.edu/formal_verification/ISP-Eclipse

Present Situation

- ISP: a push-button dynamic verifier for MPI programs
- Finds deadlocks, resource leaks, assertion violations
  - Code level model checking: no manual model building
  - Guarantees soundness for one test input
  - Works for Mac OS, Linux, Windows
  - Works for MPICH2, OpenMPI, MS MPI
  - Verifies 14 KLOC in seconds
- ISP is available for download: http://cs.utah.edu/formal_verification/ISP-release

RESULTS USING ISP

- The only push-button model checker for MPI/C programs (the only other model checking approach is MPI-SPIN)
- Testing misses deadlocks even on a page of code
  - See http://www.cs.utah.edu/formal_verification/ISP_Tests
- ISP is meant as a safety net during manual optimizations
  - A programmer takes liberties that they would otherwise not
  - Value amply evident even when tuning the Matrix Mult code
- Deadlock found in one test of MADRE (3K LOC)
  - Later found to be a known deadlock

RESULTS USING ISP

- Handled these examples: IRS (Sequoia Benchmarks), ParMETIS (14K LOC), MADRE (3K LOC)
- Working on these examples: MPI-BLAST, ADLB
- There is significant outreach work remaining
  - The user community of MPI is receptive to FV
  - But they really have no experience evaluating a model checker
  - We are offering many tutorials this year
    - ICS 2009
    - EuroPVM/MPI 2009 (likely)
    - Applying for Cluster 2009
    - Applying for Supercomputing 2009

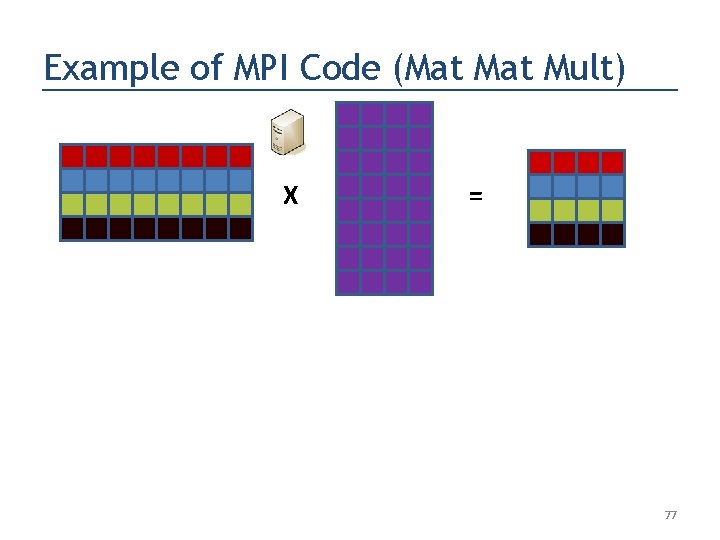

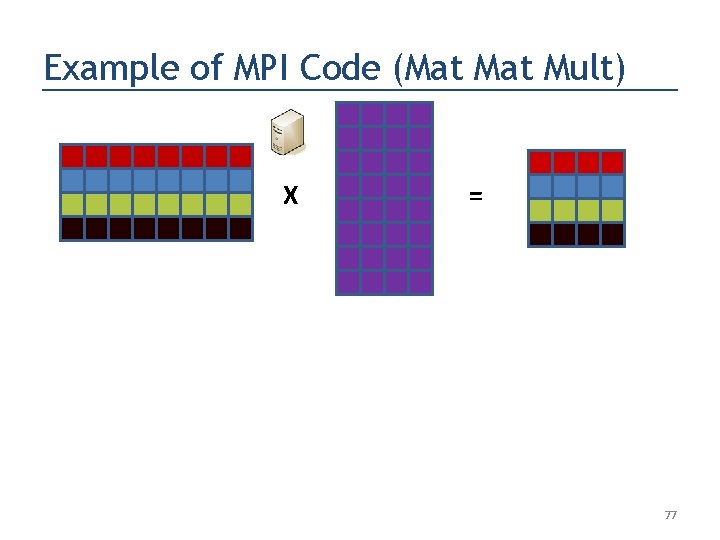

Example of MPI Code (Mat Mult) (figure: matrix × matrix = matrix)

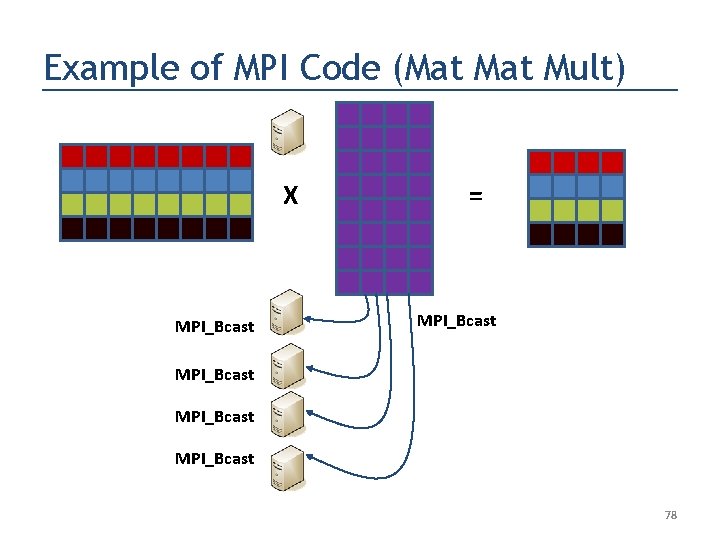

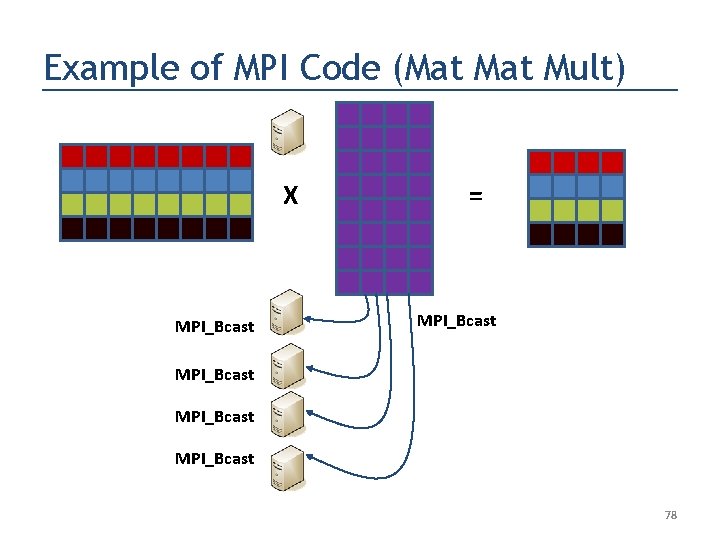

Example of MPI Code (Mat Mult) (figure: the matrix product with MPI_Bcast labels)

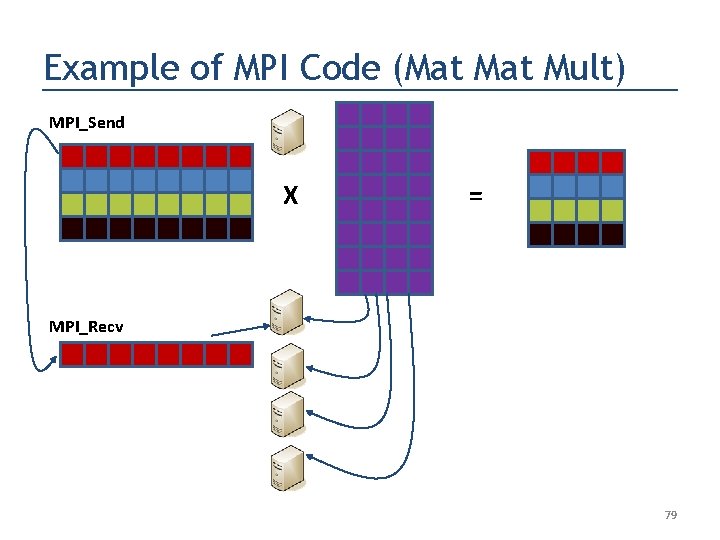

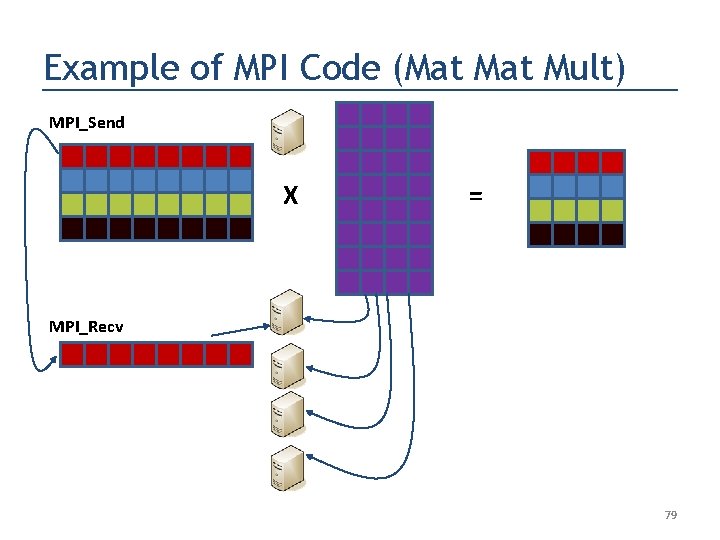

Example of MPI Code (Mat Mult) (figure: the matrix product with MPI_Send and MPI_Recv labels)

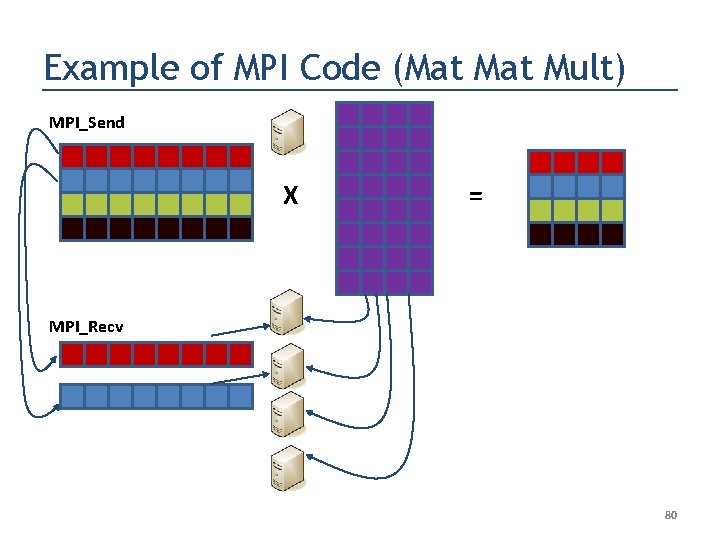

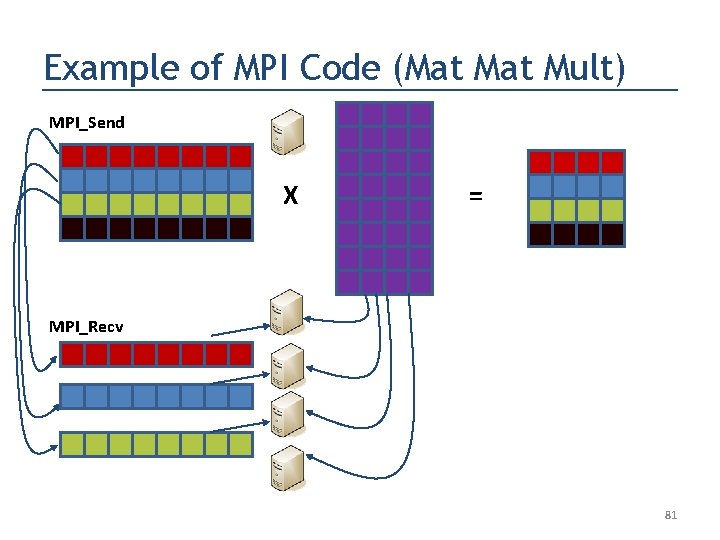

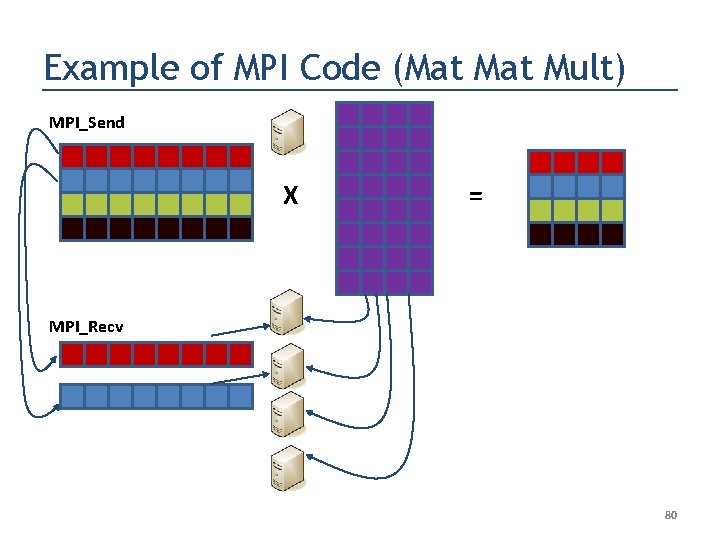


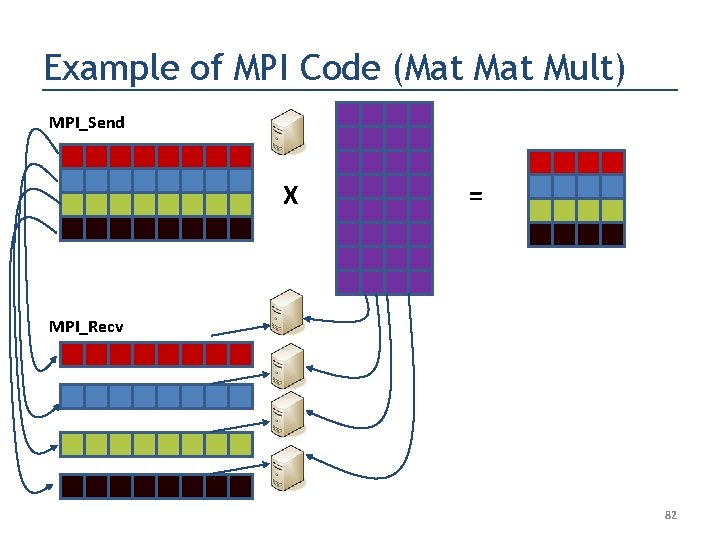

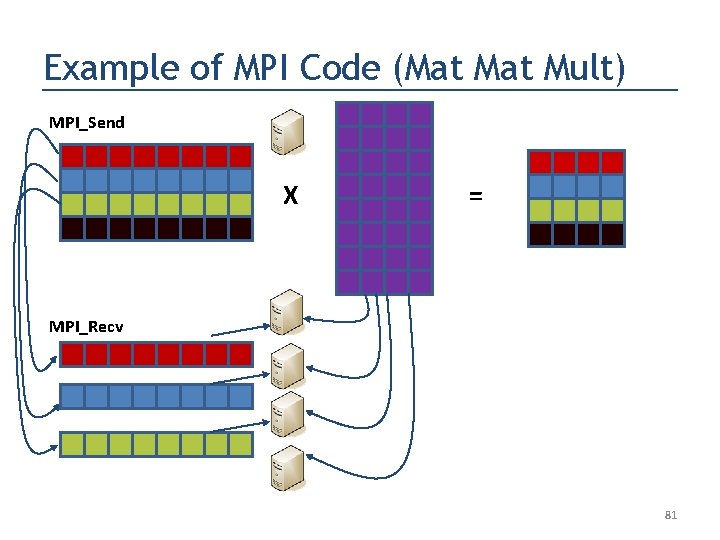

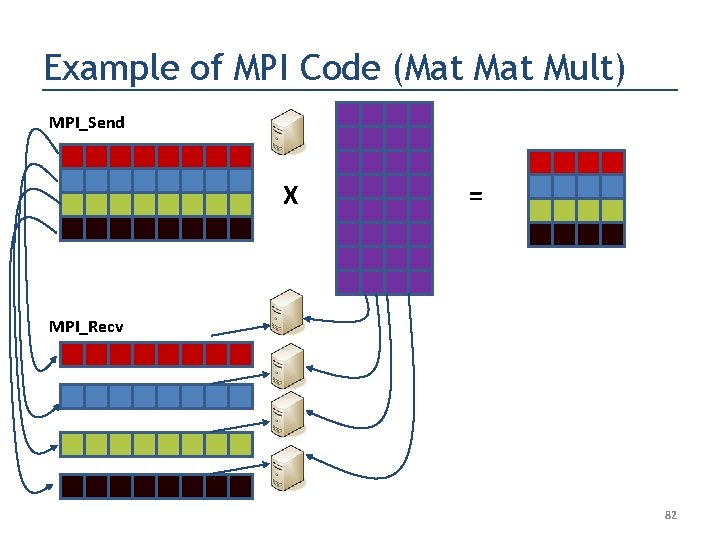


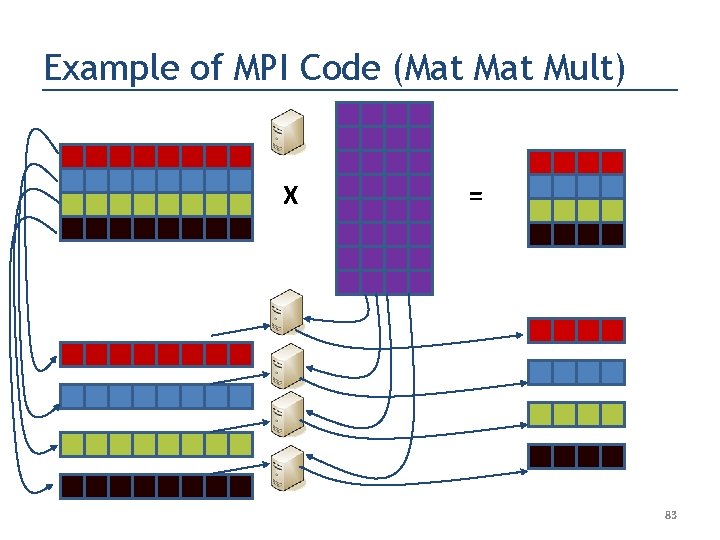

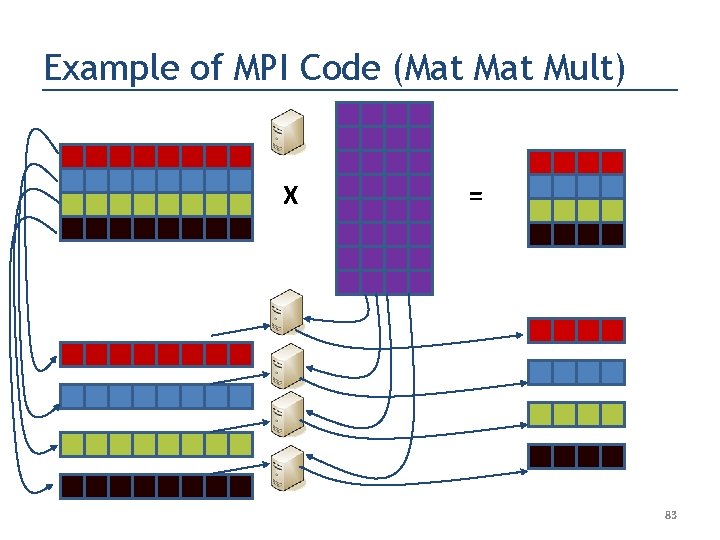


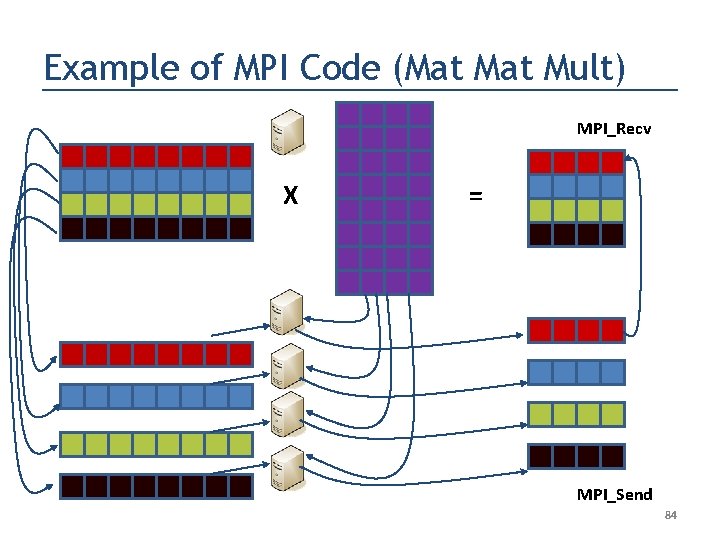

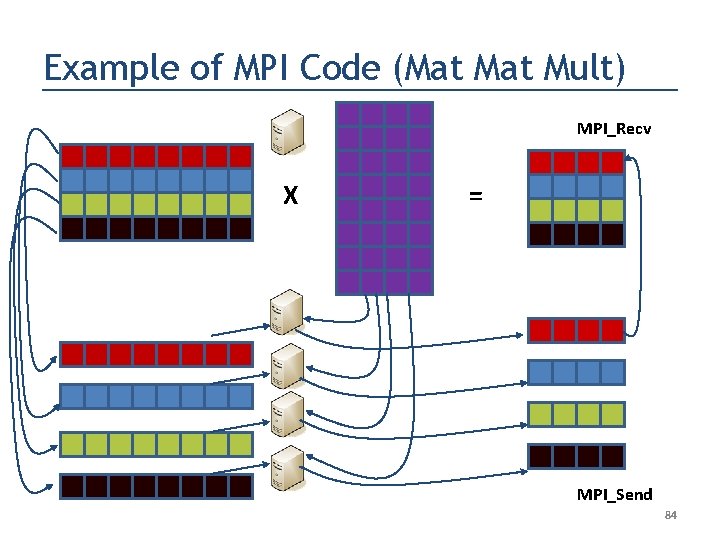


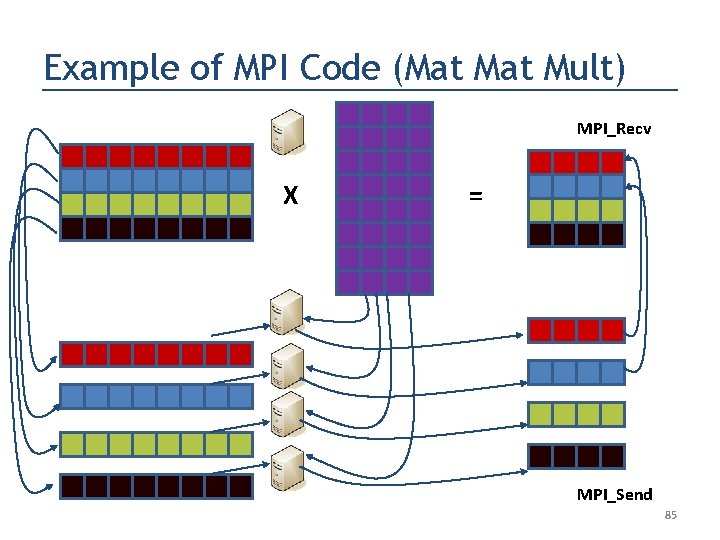

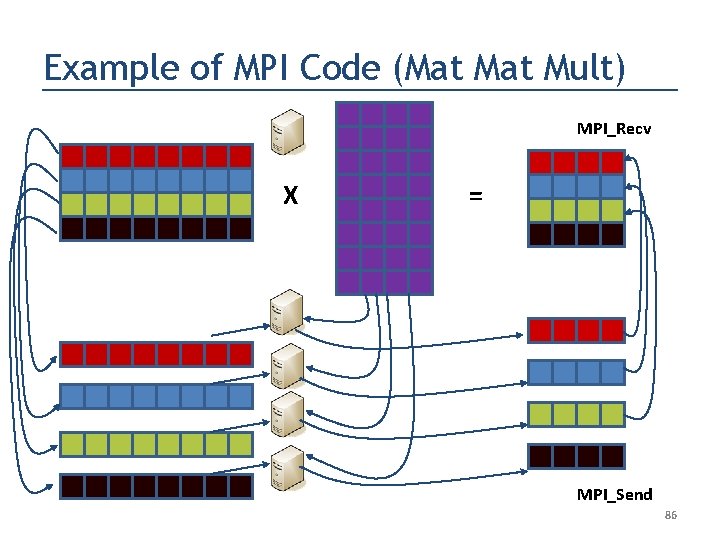

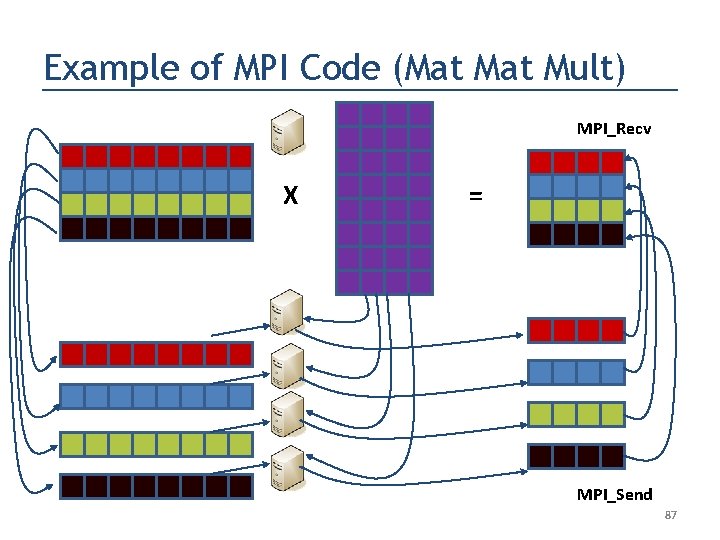

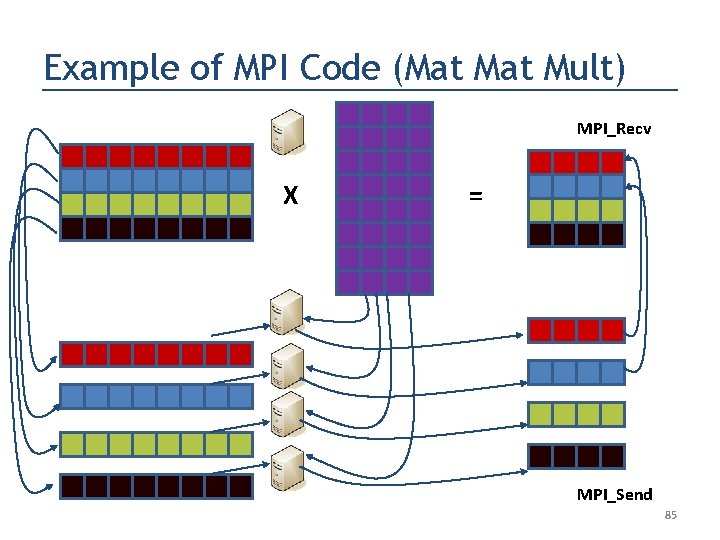

Example of MPI Code (Mat Mult) (figure: the matrix product with MPI_Recv and MPI_Send labels)


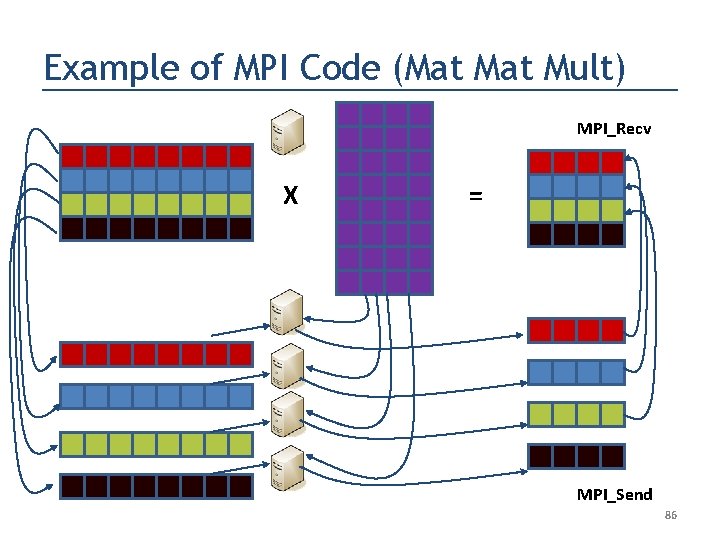

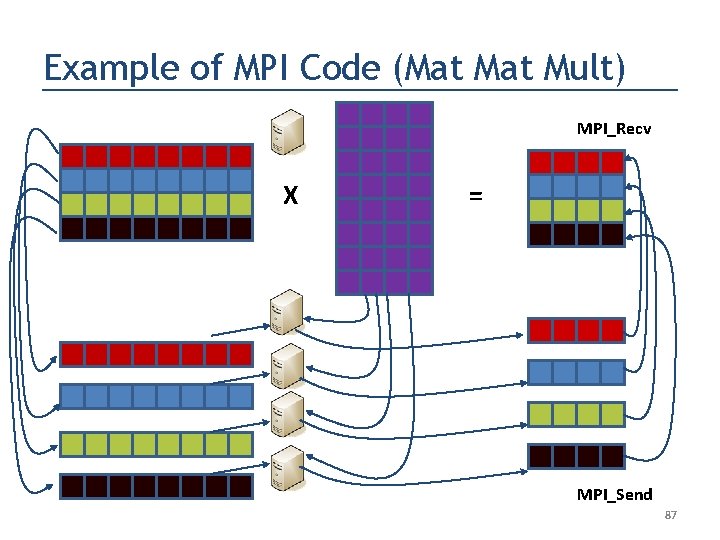


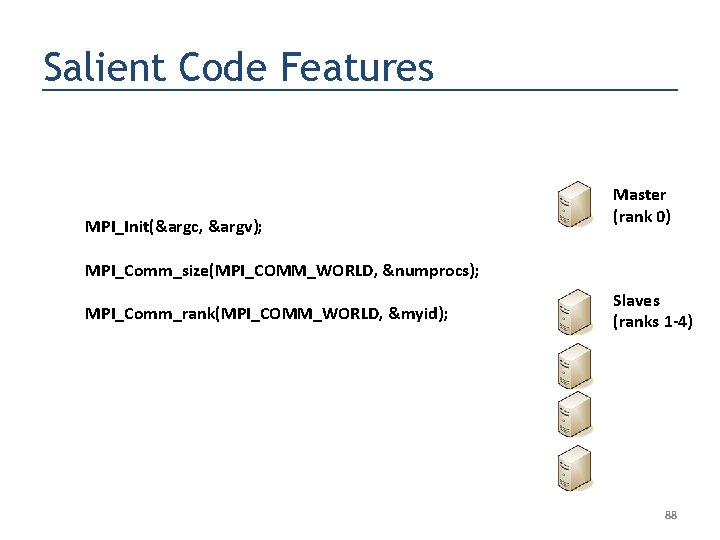

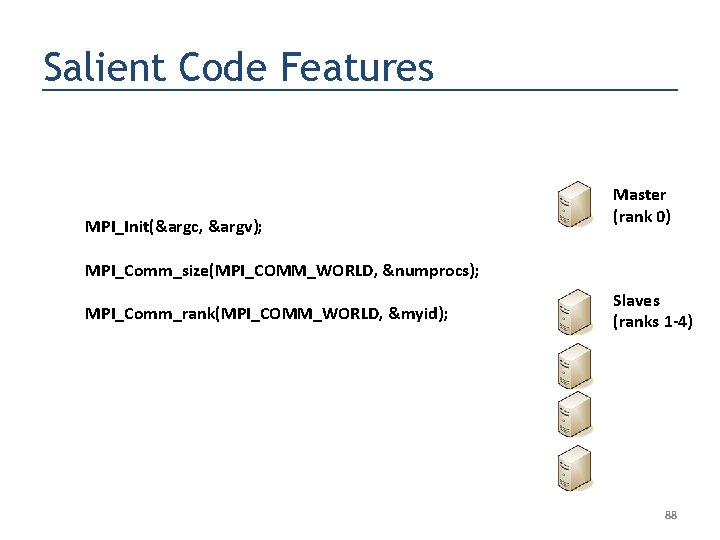

Salient Code Features

    MPI_Init(&argc, &argv);
    MPI_Comm_size(MPI_COMM_WORLD, &numprocs);
    MPI_Comm_rank(MPI_COMM_WORLD, &myid);

(Figure labels: Master (rank 0); Slaves (ranks 1-4).)

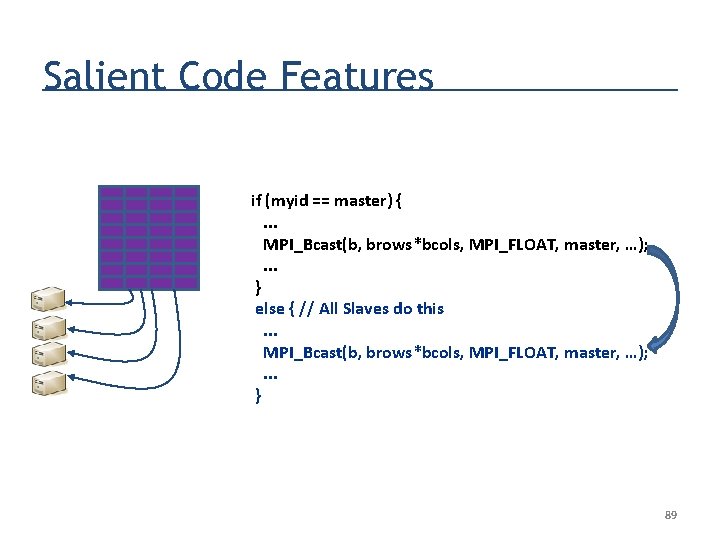

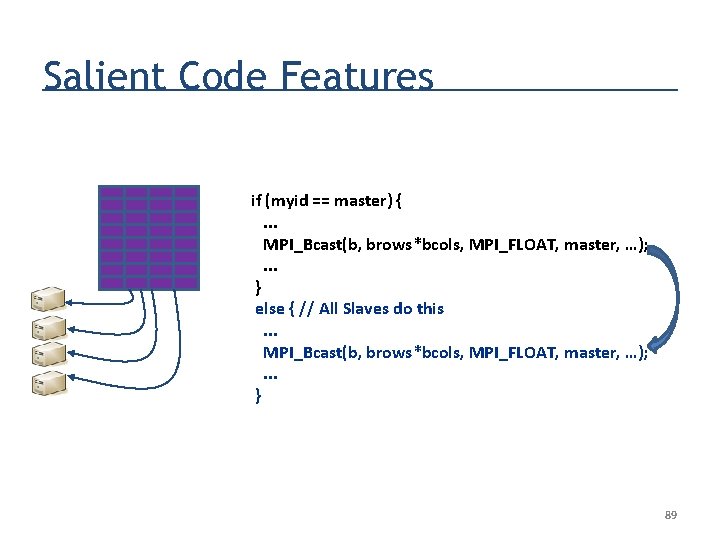

Salient Code Features

    if (myid == master) {
      ...
      MPI_Bcast(b, brows*bcols, MPI_FLOAT, master, …);
      ...
    } else {
      // All slaves do this
      ...
      MPI_Bcast(b, brows*bcols, MPI_FLOAT, master, …);
      ...
    }

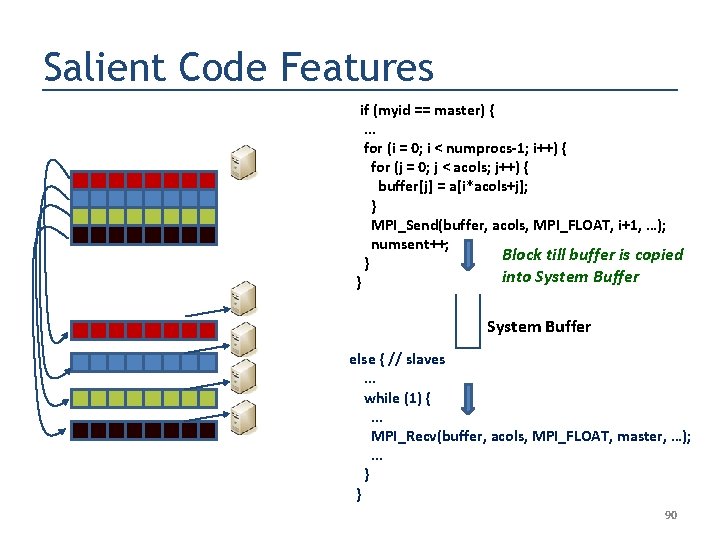

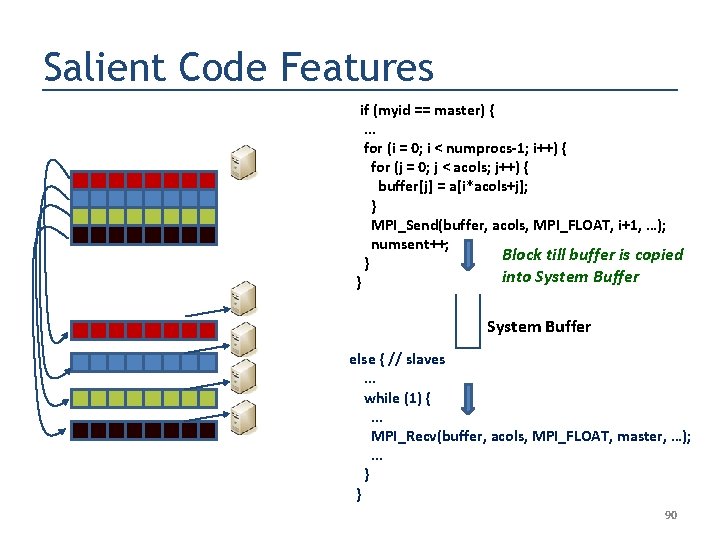

Salient Code Features

    if (myid == master) {
      ...
      for (i = 0; i < numprocs-1; i++) {
        for (j = 0; j < acols; j++) {
          buffer[j] = a[i*acols+j];
        }
        // Blocks till buffer is copied into the system buffer
        MPI_Send(buffer, acols, MPI_FLOAT, i+1, …);
        numsent++;
      }
    }
    else { // slaves
      ...
      while (1) {
        ...
        MPI_Recv(buffer, acols, MPI_FLOAT, master, …);
        ...
      }
    }

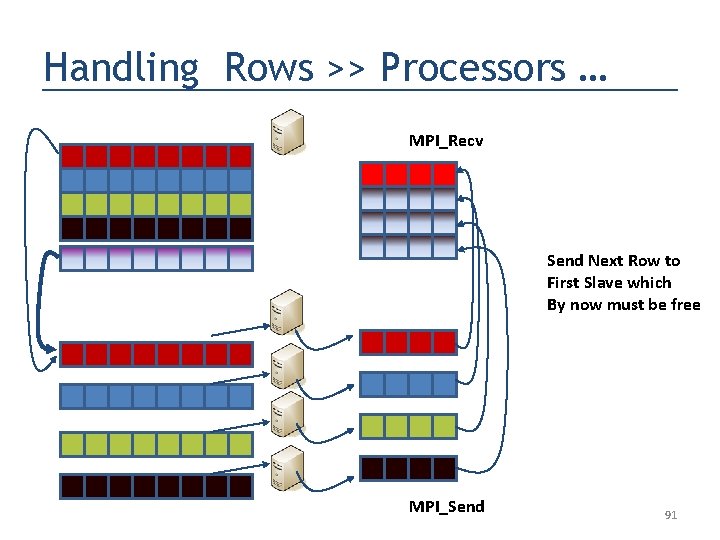

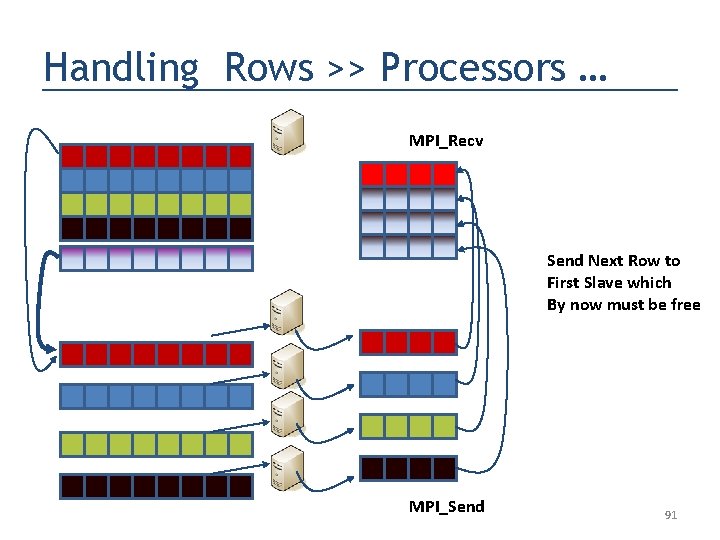

Handling Rows >> Processors … MPI_Recv: send the next row to the first slave, which by now must be free (MPI_Send) 91

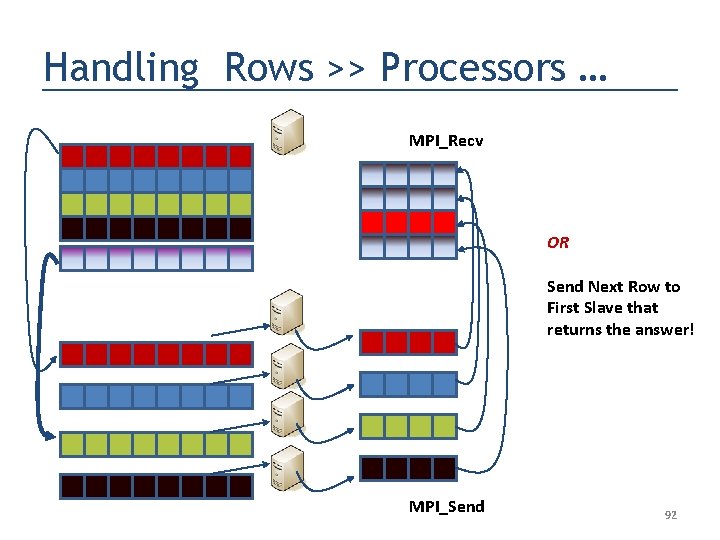

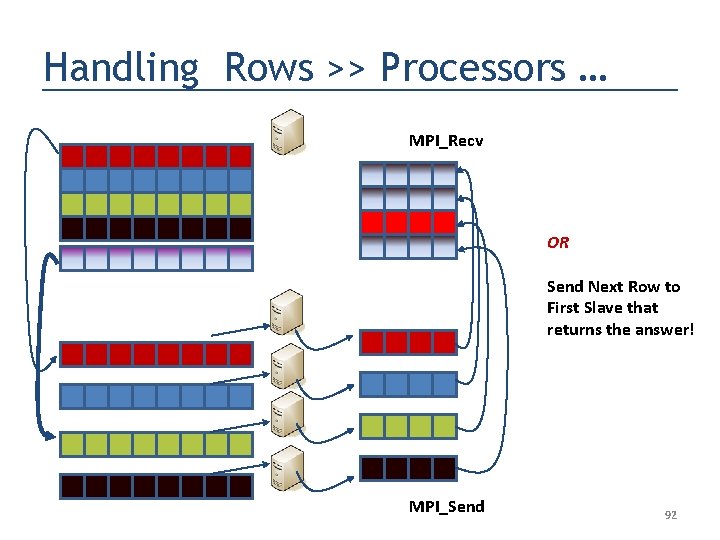

Handling Rows >> Processors … MPI_Recv: OR send the next row to the first slave that returns the answer! (MPI_Send) 92

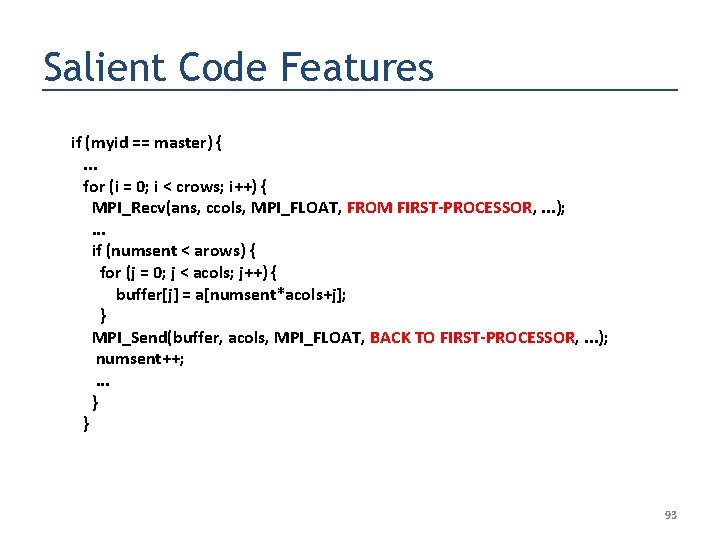

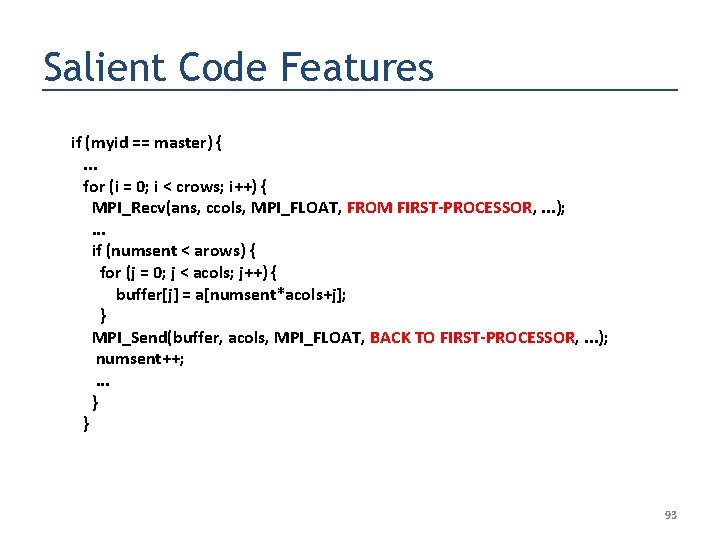

Salient Code Features if (myid == master) { ... for (i = 0; i < crows; i++) { MPI_Recv(ans, ccols, MPI_FLOAT, FROM FIRST-PROCESSOR, ...); ... if (numsent < arows) { for (j = 0; j < acols; j++) { buffer[j] = a[numsent*acols+j]; } MPI_Send(buffer, acols, MPI_FLOAT, BACK TO FIRST-PROCESSOR, ...); numsent++; ... } } 93

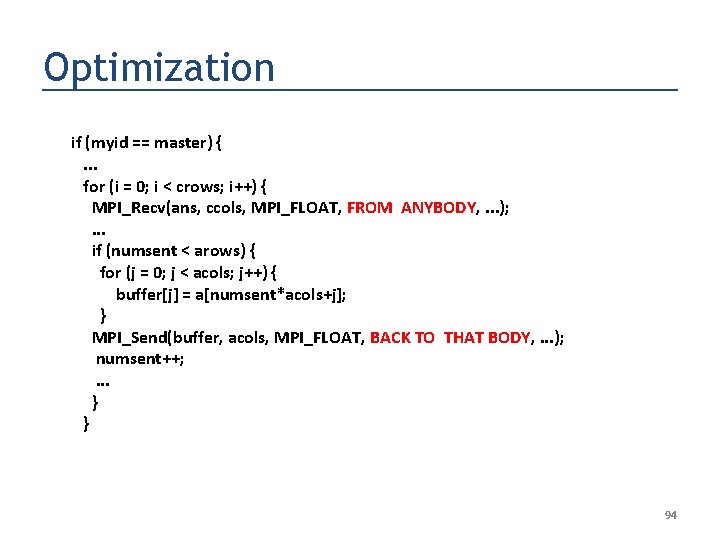

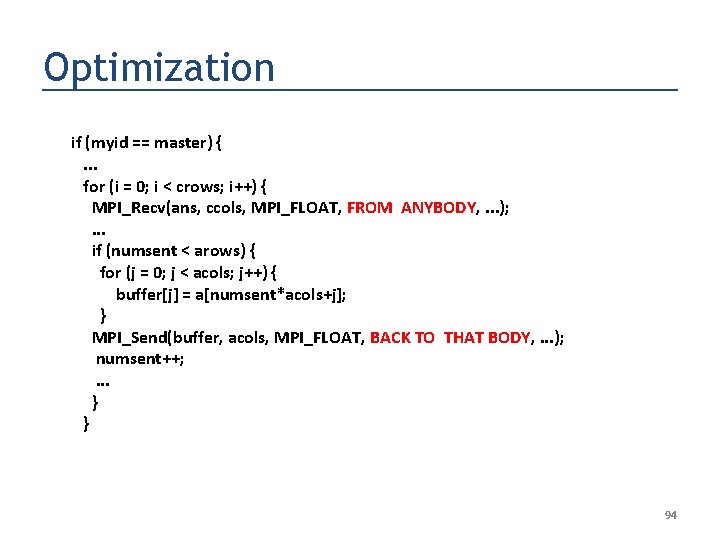

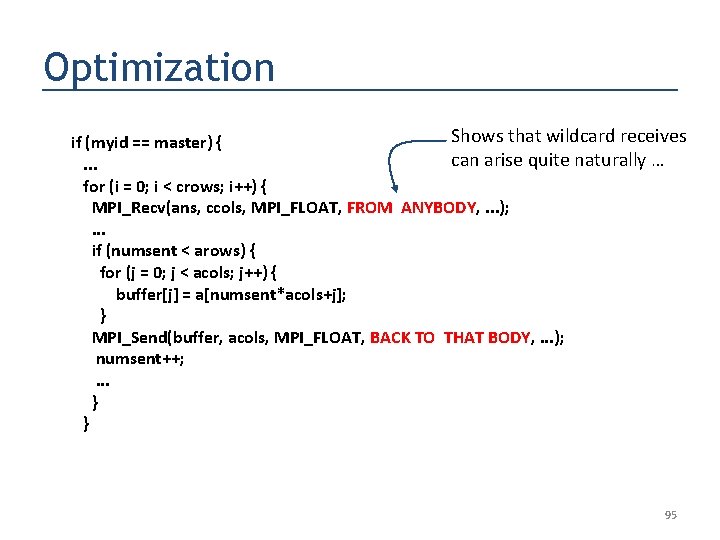

Optimization if (myid == master) { ... for (i = 0; i < crows; i++) { MPI_Recv(ans, ccols, MPI_FLOAT, FROM ANYBODY, ...); ... if (numsent < arows) { for (j = 0; j < acols; j++) { buffer[j] = a[numsent*acols+j]; } MPI_Send(buffer, acols, MPI_FLOAT, BACK TO THAT BODY, ...); numsent++; ... } } 94

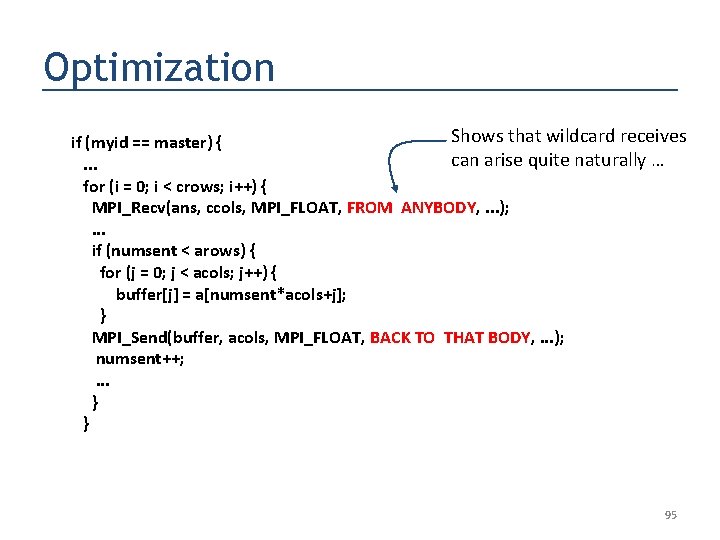

Optimization (shows that wildcard receives can arise quite naturally) if (myid == master) { ... for (i = 0; i < crows; i++) { MPI_Recv(ans, ccols, MPI_FLOAT, FROM ANYBODY, ...); ... if (numsent < arows) { for (j = 0; j < acols; j++) { buffer[j] = a[numsent*acols+j]; } MPI_Send(buffer, acols, MPI_FLOAT, BACK TO THAT BODY, ...); numsent++; ... } } 95

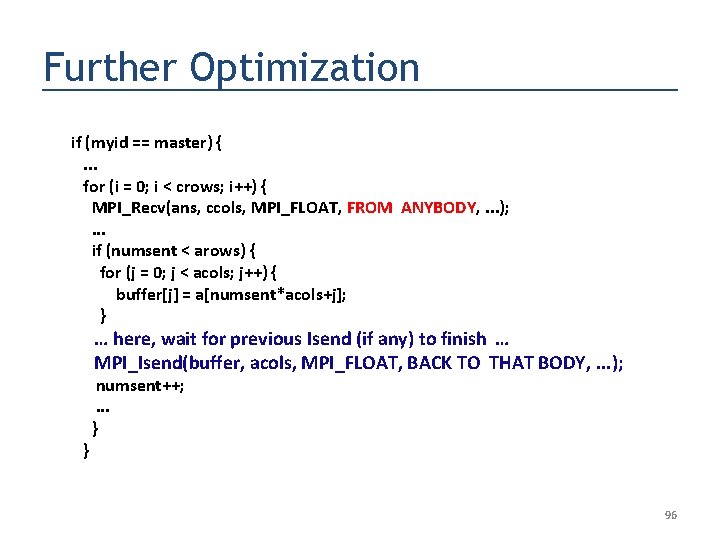

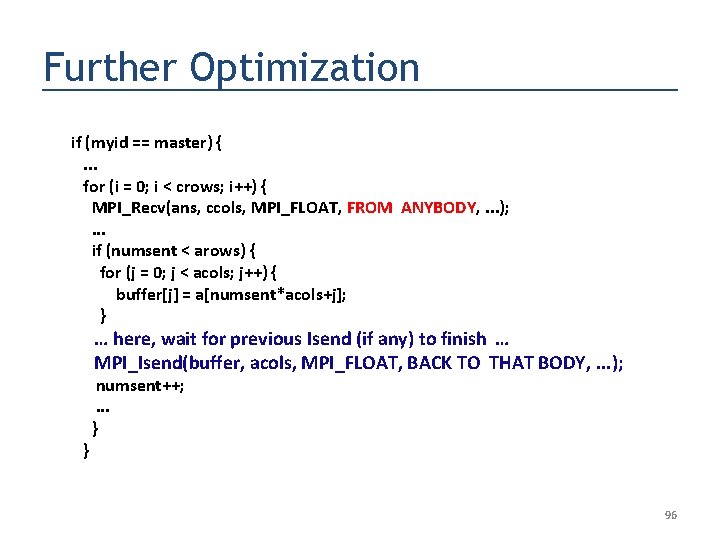

Further Optimization if (myid == master) { ... for (i = 0; i < crows; i++) { MPI_Recv(ans, ccols, MPI_FLOAT, FROM ANYBODY, ...); ... if (numsent < arows) { for (j = 0; j < acols; j++) { buffer[j] = a[numsent*acols+j]; } … here, wait for previous Isend (if any) to finish … MPI_Isend(buffer, acols, MPI_FLOAT, BACK TO THAT BODY, ...); } numsent++; ... } 96

Run Visual Studio ISP Plug-in Demo 97

Slides on Inspect 98

Inspect is a tool for finding (in a guaranteed manner) 1) deadlocks, 2) data races, and 3) assertion violations in C/Pthread programs. It is the only DPOR-based tool of its kind and is available for free download. (Only a brief overview is attempted in this tutorial.) 99

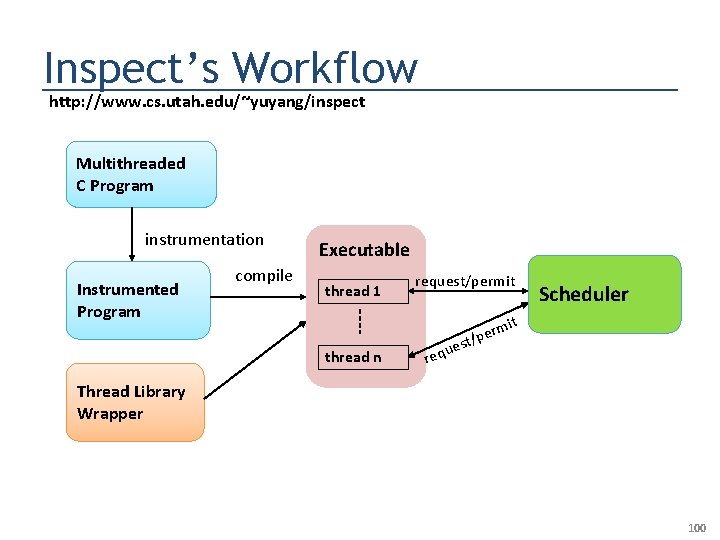

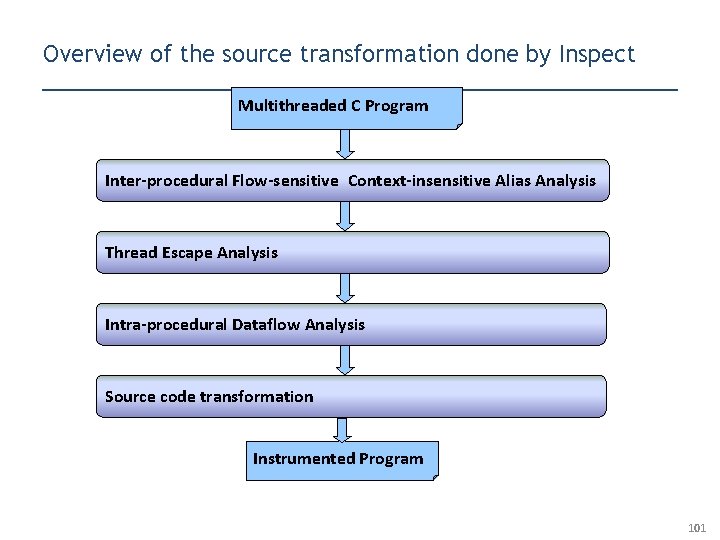

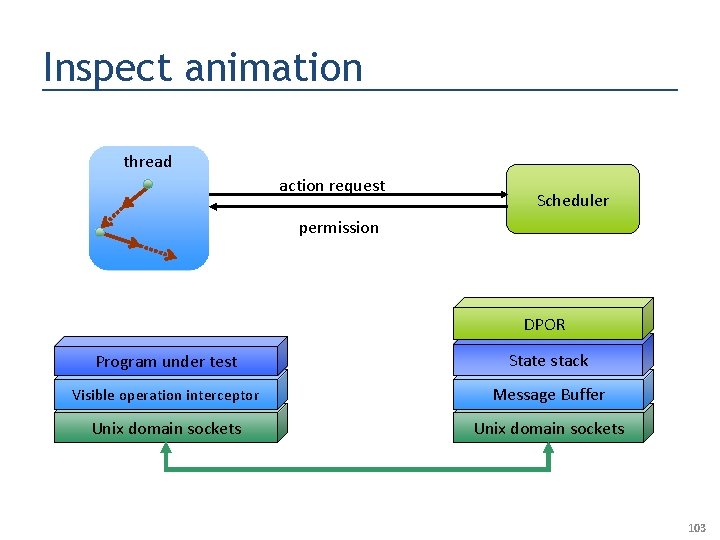

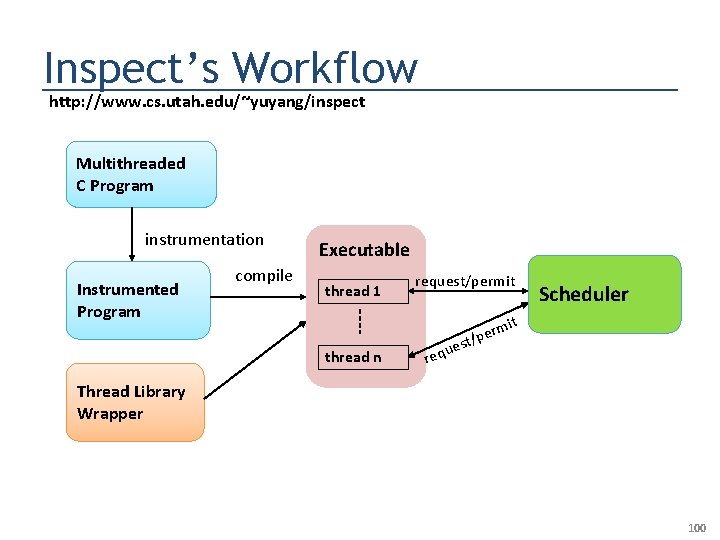

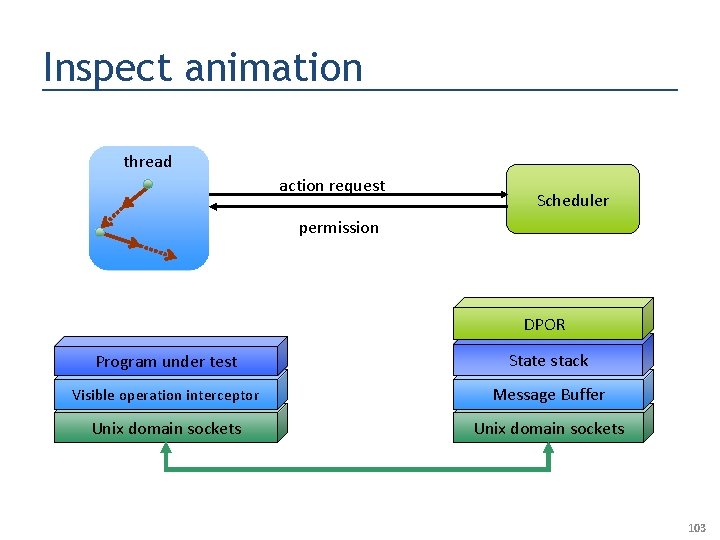

Inspect’s Workflow http://www.cs.utah.edu/~yuyang/inspect [Diagram: Multithreaded C Program → instrumentation → Instrumented Program → compile → Executable; thread 1 … thread n exchange request/permit messages with the Scheduler; Thread Library Wrapper] 100

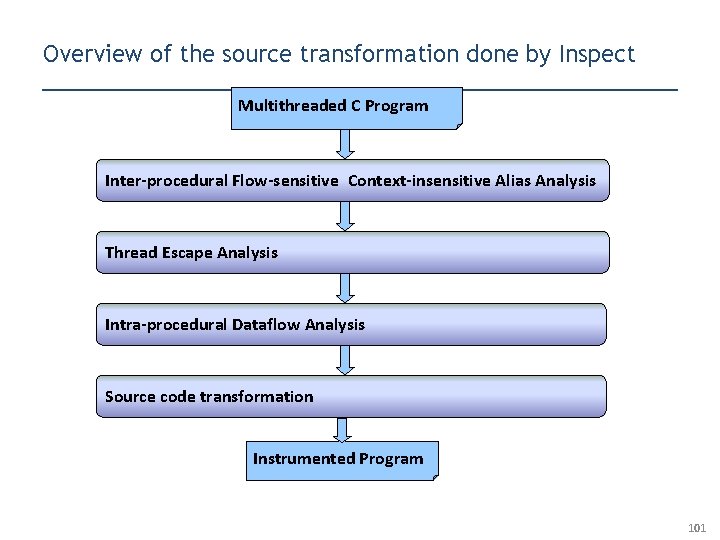

Overview of the source transformation done by Inspect: Multithreaded C Program → Inter-procedural Flow-sensitive Context-insensitive Alias Analysis → Thread Escape Analysis → Intra-procedural Dataflow Analysis → Source code transformation → Instrumented Program 101

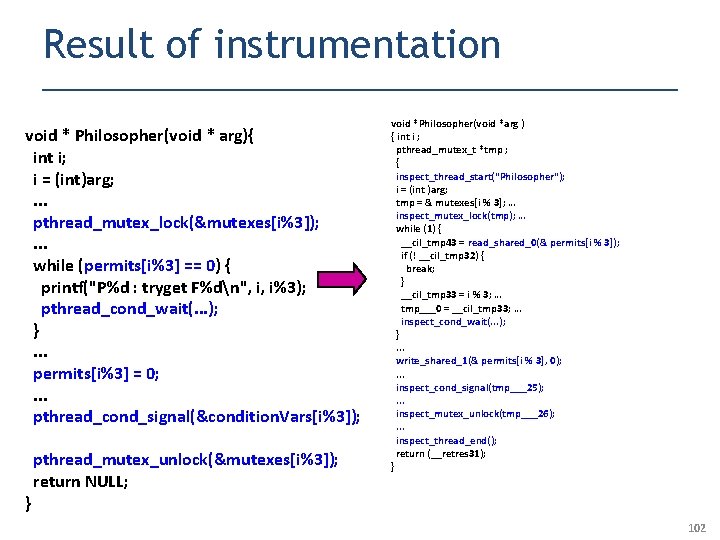

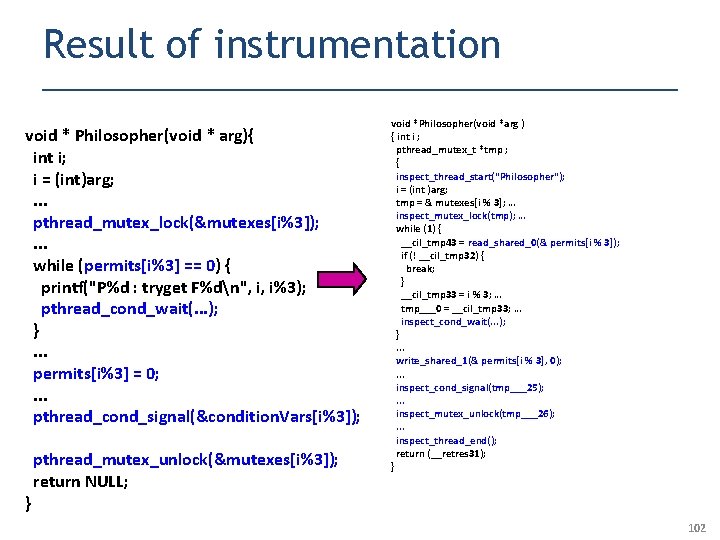

Result of instrumentation. Original: void *Philosopher(void *arg) { int i; i = (int)arg; ... pthread_mutex_lock(&mutexes[i%3]); ... while (permits[i%3] == 0) { printf("P%d : tryget F%d\n", i, i%3); pthread_cond_wait(...); } ... permits[i%3] = 0; ... pthread_cond_signal(&conditionVars[i%3]); pthread_mutex_unlock(&mutexes[i%3]); return NULL; } Instrumented: void *Philosopher(void *arg) { int i; pthread_mutex_t *tmp; { inspect_thread_start("Philosopher"); i = (int)arg; tmp = &mutexes[i % 3]; … inspect_mutex_lock(tmp); … while (1) { __cil_tmp43 = read_shared_0(&permits[i % 3]); if (!__cil_tmp32) { break; } __cil_tmp33 = i % 3; … tmp___0 = __cil_tmp33; … inspect_cond_wait(...); } ... write_shared_1(&permits[i % 3], 0); ... inspect_cond_signal(tmp___25); ... inspect_mutex_unlock(tmp___26); ... inspect_thread_end(); return (__retres31); } 102
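To make the idea of the transformation concrete, here is a small sketch of what an interposed wrapper could look like: each visible operation is announced before the real operation runs. This is not Inspect's implementation. The names `traced_mutex_lock`, `traced_mutex_unlock`, `traced_read_int`, `traced_write_int`, `announce`, and the in-memory `event_log` are hypothetical; a real tool would send a request to its scheduler and wait for permission, where this sketch only records the event.

```c
#include <pthread.h>
#include <stddef.h>

/* Hypothetical event record standing in for a message to a scheduler. */
enum event_kind { EV_LOCK, EV_UNLOCK, EV_READ, EV_WRITE };
struct event { enum event_kind kind; const void *addr; };

#define MAX_EVENTS 64
static struct event event_log[MAX_EVENTS];
static size_t event_count = 0;

/* Announce a visible operation. A real tool would block here until the
 * scheduler permits the operation; this sketch only logs it, and the log
 * itself is not thread-safe (single-threaded demonstration only). */
static void announce(enum event_kind kind, const void *addr)
{
    if (event_count < MAX_EVENTS) {
        event_log[event_count].kind = kind;
        event_log[event_count].addr = addr;
        event_count++;
    }
}

/* Wrappers: announce first, then perform the real operation. */
int traced_mutex_lock(pthread_mutex_t *m)
{
    announce(EV_LOCK, m);
    return pthread_mutex_lock(m);
}

int traced_mutex_unlock(pthread_mutex_t *m)
{
    announce(EV_UNLOCK, m);
    return pthread_mutex_unlock(m);
}

int traced_read_int(const int *p)
{
    announce(EV_READ, p);
    return *p;
}

void traced_write_int(int *p, int value)
{
    announce(EV_WRITE, p);
    *p = value;
}
```

Replacing `pthread_mutex_lock(&m)` with `traced_mutex_lock(&m)` and a read of a shared variable with `traced_read_int(&v)` mirrors, in spirit, the substitution shown on the slide, where every operation that other threads can observe passes through a call the tool controls.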

Inspect animation [Diagram labels: thread, action request, Scheduler, permission, DPOR, Program under test, State stack, Visible operation interceptor, Message Buffer, Unix domain sockets] 103

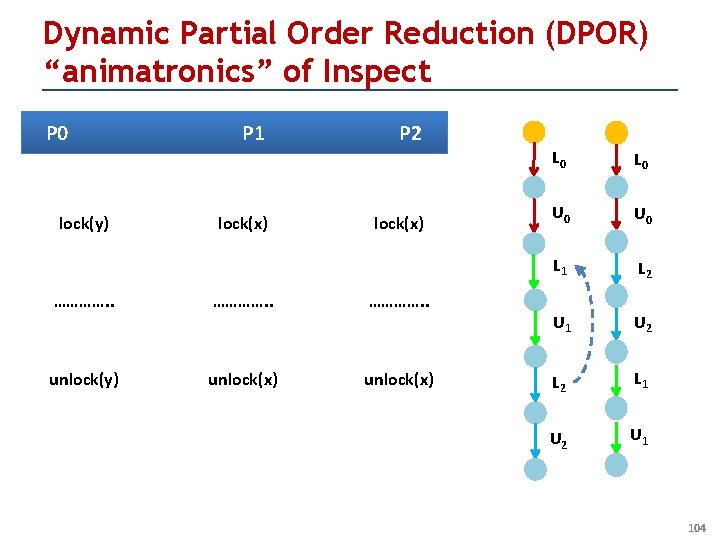

Dynamic Partial Order Reduction (DPOR) “animatronics” of Inspect. P0: lock(y) … unlock(y); P1: lock(x) … unlock(x); P2: lock(x) … unlock(x). [Search-tree labels: L0 U0 L1 L2 U1 U2 L1 U2 U1] 104
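The saving that partial order reduction aims for can be illustrated by counting. The sketch below models the three threads on this slide as a lock followed by an unlock (P0 on y, P1 and P2 on x) and enumerates every interleaving that mutex semantics allow. It then counts how many of them differ in the one thing that matters here, namely which of P1 and P2 acquires x first. This is a brute-force count written for this example, not the DPOR algorithm itself, and the names `explore` and `count_interleavings` are made up for the illustration.

```c
#define NTHREADS 3

/* Mutex used by each thread: index 0 stands for y, index 1 for x. */
static const int mutex_of[NTHREADS] = {0, 1, 1};   /* P0: y, P1: x, P2: x */

struct counts {
    long feasible;  /* complete interleavings allowed by mutex semantics */
    int classes;    /* distinct answers to "who took x first?" */
};

/* pc[t]: 0 = before lock, 1 = holding the mutex, 2 = finished.
 * first_x: thread that acquired x first on this path, or -1 if none yet. */
static void explore(int pc[NTHREADS], int held[2], int first_x,
                    long *feasible, int seen_first[NTHREADS])
{
    int finished = 1;
    for (int t = 0; t < NTHREADS; t++) {
        int m = mutex_of[t];
        if (pc[t] == 2)
            continue;
        finished = 0;
        if (pc[t] == 0) {
            if (held[m])
                continue;                    /* lock is not enabled */
            pc[t] = 1; held[m] = 1;
            explore(pc, held, (m == 1 && first_x < 0) ? t : first_x,
                    feasible, seen_first);
            pc[t] = 0; held[m] = 0;          /* backtrack */
        } else {
            pc[t] = 2; held[m] = 0;          /* unlock is always enabled */
            explore(pc, held, first_x, feasible, seen_first);
            pc[t] = 1; held[m] = 1;          /* backtrack */
        }
    }
    if (finished) {
        (*feasible)++;
        if (first_x >= 0)
            seen_first[first_x] = 1;
    }
}

struct counts count_interleavings(void)
{
    int pc[NTHREADS] = {0, 0, 0};
    int held[2] = {0, 0};
    int seen_first[NTHREADS] = {0, 0, 0};
    struct counts c = {0, 0};

    explore(pc, held, -1, &c.feasible, seen_first);
    for (int t = 0; t < NTHREADS; t++)
        c.classes += seen_first[t];
    return c;
}
```

For this program the count is 30 feasible interleavings but only 2 classes, because P0's operations on y commute with everything P1 and P2 do on x. An ideal reduction would therefore need one representative run per class; how many runs a particular tool actually performs is not something this sketch claims.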

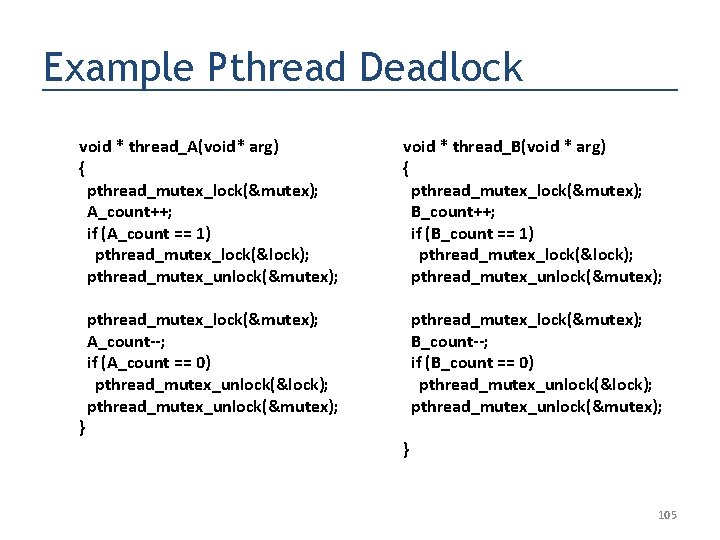

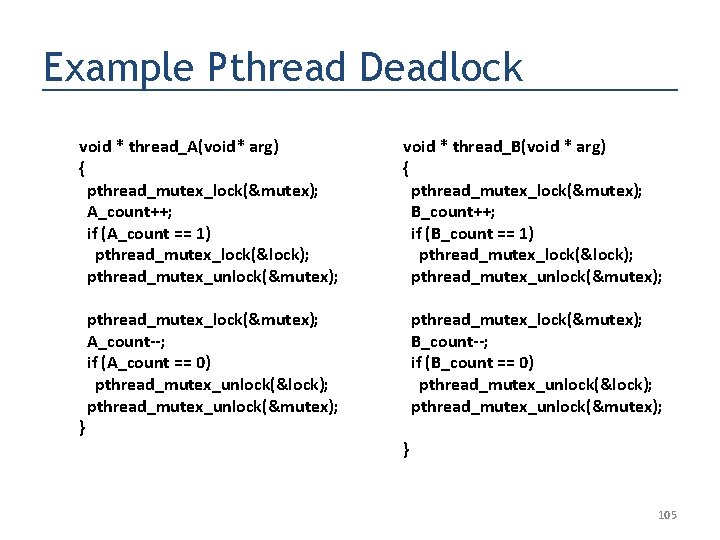

Example Pthread Deadlock void *thread_A(void *arg) { pthread_mutex_lock(&mutex); A_count++; if (A_count == 1) pthread_mutex_lock(&lock); pthread_mutex_unlock(&mutex); pthread_mutex_lock(&mutex); A_count--; if (A_count == 0) pthread_mutex_unlock(&lock); pthread_mutex_unlock(&mutex); } void *thread_B(void *arg) { pthread_mutex_lock(&mutex); B_count++; if (B_count == 1) pthread_mutex_lock(&lock); pthread_mutex_unlock(&mutex); pthread_mutex_lock(&mutex); B_count--; if (B_count == 0) pthread_mutex_unlock(&lock); pthread_mutex_unlock(&mutex); } 105
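The deadlock in this example can be found by exhaustively exploring a small model of the two threads. The sketch below assumes exactly one instance of `thread_A` and one of `thread_B`, so each counter reaches 1 on entry and 0 on exit and both conditionals are always taken; each thread then reduces to the same six-step script on the two mutexes. The names `script`, `explore`, and `find_deadlock` are hypothetical, and this toy search only illustrates the idea of systematic schedule exploration, not how Inspect is implemented.

```c
enum { MUTEX = 0, LOCK = 1 };          /* models `mutex` and `lock` */
struct op { int is_lock; int m; };

#define NOPS 6
/* Script shared by both threads under the one-instance assumption. */
static const struct op script[NOPS] = {
    {1, MUTEX}, {1, LOCK}, {0, MUTEX},   /* entry section */
    {1, MUTEX}, {0, LOCK}, {0, MUTEX}    /* exit section  */
};

/* Depth-first search over schedules. owner[m] is -1 when mutex m is free.
 * Returns the length of a schedule that ends in deadlock, or 0 if none is
 * reachable from this state; trace[0..len-1] holds the thread ids chosen. */
static int explore(int pc[2], int owner[2], int trace[], int depth)
{
    int enabled = 0, unfinished = 0;

    for (int t = 0; t < 2; t++) {
        if (pc[t] == NOPS)
            continue;
        unfinished = 1;
        struct op o = script[pc[t]];
        if (o.is_lock && owner[o.m] != -1)
            continue;                        /* thread t is blocked */
        enabled = 1;

        int saved = owner[o.m];
        owner[o.m] = o.is_lock ? t : -1;     /* take one step */
        trace[depth] = t;
        pc[t]++;
        int found = explore(pc, owner, trace, depth + 1);
        pc[t]--;                             /* backtrack */
        owner[o.m] = saved;
        if (found)
            return found;
    }
    /* Deadlock: someone still has work to do, but nobody can move. */
    return (unfinished && !enabled) ? depth : 0;
}

int find_deadlock(int trace[2 * NOPS])
{
    int pc[2] = {0, 0};
    int owner[2] = {-1, -1};
    return explore(pc, owner, trace, 0);
}
```

With thread 0 standing for `thread_A` and thread 1 for `thread_B`, the search reports the schedule A, A, A, B: A takes `mutex`, takes `lock`, and releases `mutex`; B then takes `mutex` and blocks on `lock` while still holding `mutex`; A can no longer re-acquire `mutex` to release `lock`.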

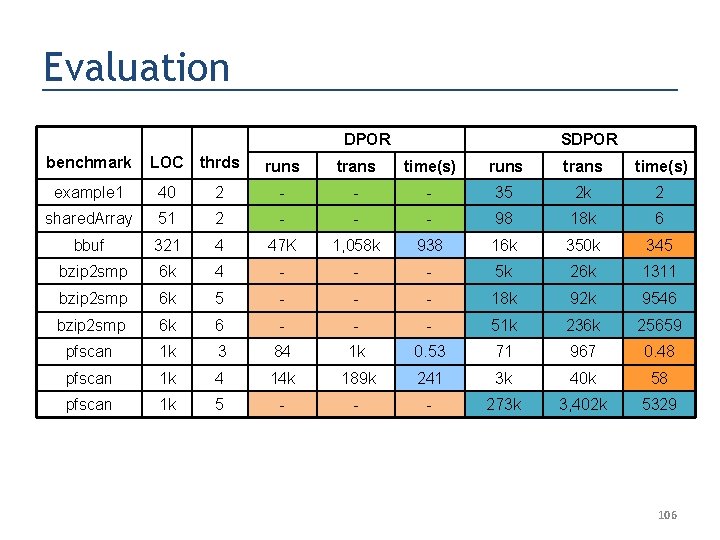

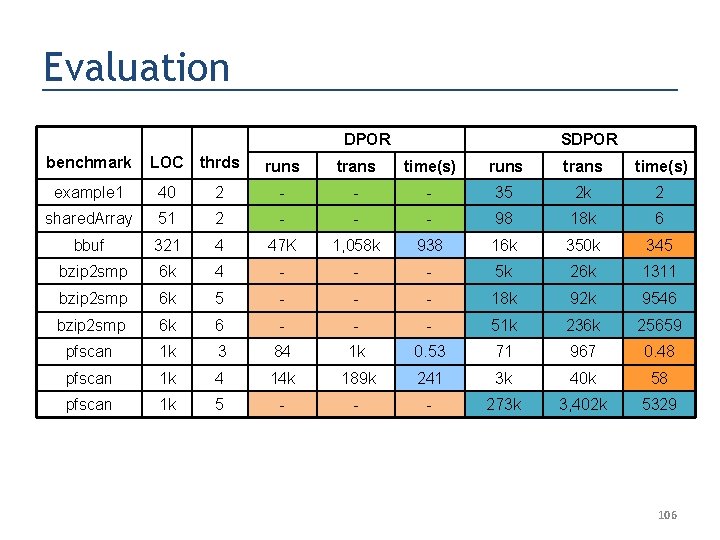

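Evaluation

| benchmark | LOC | thrds | DPOR runs | DPOR trans | DPOR time(s) | SDPOR runs | SDPOR trans | SDPOR time(s) |
|---|---|---|---|---|---|---|---|---|
| example1 | 40 | 2 | - | - | - | 35 | 2k | 2 |
| sharedArray | 51 | 2 | - | - | - | 98 | 18k | 6 |
| bbuf | 321 | 4 | 47K | 1,058k | 938 | 16k | 350k | 345 |
| bzip2smp | 6k | 4 | - | - | - | 5k | 26k | 1311 |
| bzip2smp | 6k | 5 | - | - | - | 18k | 92k | 9546 |
| bzip2smp | 6k | 6 | - | - | - | 51k | 236k | 25659 |
| pfscan | 1k | 3 | 84 | 1k | 0.53 | 71 | 967 | 0.48 |
| pfscan | 1k | 4 | 14k | 189k | 241 | 3k | 40k | 58 |
| pfscan | 1k | 5 | - | - | - | 273k | 3,402k | 5329 |

106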

Summary • Importance of Concurrency Verification • Dynamic formal verification of MPI and Pthread programs • Ideal FV tool for modern concurrency practices – DEPENDABLE (D): Finds Bugs in its range Each Time – PARSIMONIOUS (P): Does so without redundant searches – EDUCATIONAL (E): Educates users on concurrency nuances • Demonstration of ISP and Inspect • Please teach your classes using these tools ! 107

Pedagogical Material • All examples from Pacheco's MPI book are being “solved” using ISP: http://www.cs.utah.edu/formal_verification/geof/pacheco/PachecoTests.html • Similarly, we are assembling course material from our examination of all examples in the Herlihy / Shavit book using the MSR tool CHESS • For Inspect, we are assembling a case study of verifying a work-stealing queue 108

Combined Thread / Message Passing Verification • Tool for Multicore Communications API (MCAPI) is under construction • See http://www.multicore-association.org • Extending ISP to handle MPI_THREAD_MULTIPLE is planned 109

User-Interface Work around ISP • Microsoft Visual Studio Front-end of ISP available • Eclipse / PTP integration of ISP is in progress – Can show you screenshots of tool prototype that is operational 110

Concluding Remarks • Formal Verification for Concurrency serves many purposes – Helps find bugs – Helps understand programs – Helps improve efficiency of code with FV serving as safety-net • One of the biggest remaining challenges – Efficient DEBUGGING – Safe Design Practices – Exploitation of Concurrency Patterns to reduce verification complexity 111