Powering Data Science with Azure and Databricks ABC

Powering Data Science with Azure and Databricks ABC Supply for Fox. Pass (with Justin Stadler and Turner Kunkel) September 4 th, 2019

Turner Kunkel - Introduction turner. kunkel@talavant. com • Education • B. S. in Computer Science from UW-Milwaukee • M. S. in Science of Management from Cardinal Stritch • Professional • Senior Consultant @ Talavant • Data Engineering • Consultant mentoring • President @ Fox. Pass • Hobbies • Home brewing • Tinkering with 3 D printing, Raspberry Pi, other things to ruin

Given Assumption Before Starting Source data is organized in the Data Lake already. Storage from Databricks during model building is using Databricks Delta This type of project enables data science efforts to be successful.

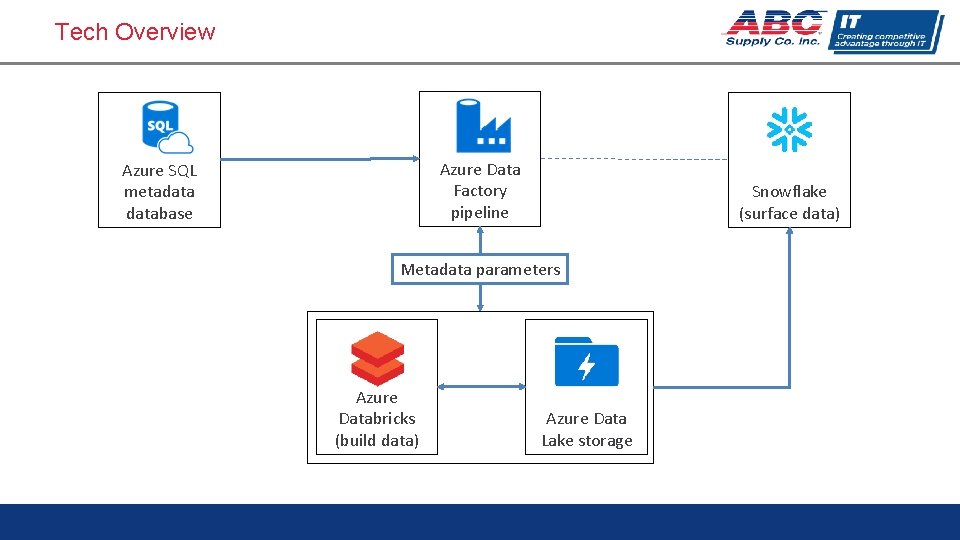

Tech Overview Azure Data Factory pipeline Azure SQL metadatabase Snowflake (surface data) Metadata parameters Azure Databricks (build data) Azure Data Lake storage

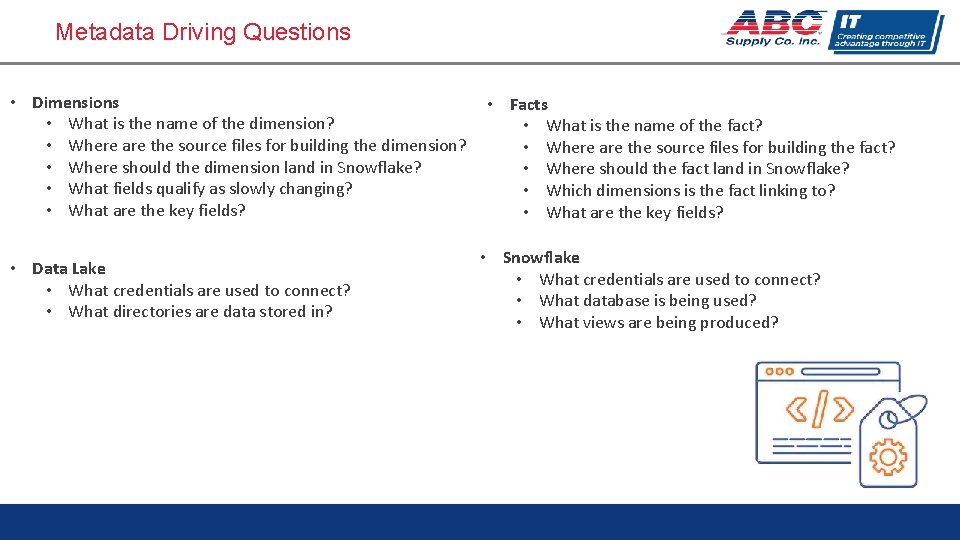

Metadata Driving Questions • Dimensions • What is the name of the dimension? • Where are the source files for building the dimension? • Where should the dimension land in Snowflake? • What fields qualify as slowly changing? • What are the key fields? • Data Lake • What credentials are used to connect? • What directories are data stored in? • Facts • What is the name of the fact? • Where are the source files for building the fact? • Where should the fact land in Snowflake? • Which dimensions is the fact linking to? • What are the key fields? • Snowflake • What credentials are used to connect? • What database is being used? • What views are being produced?

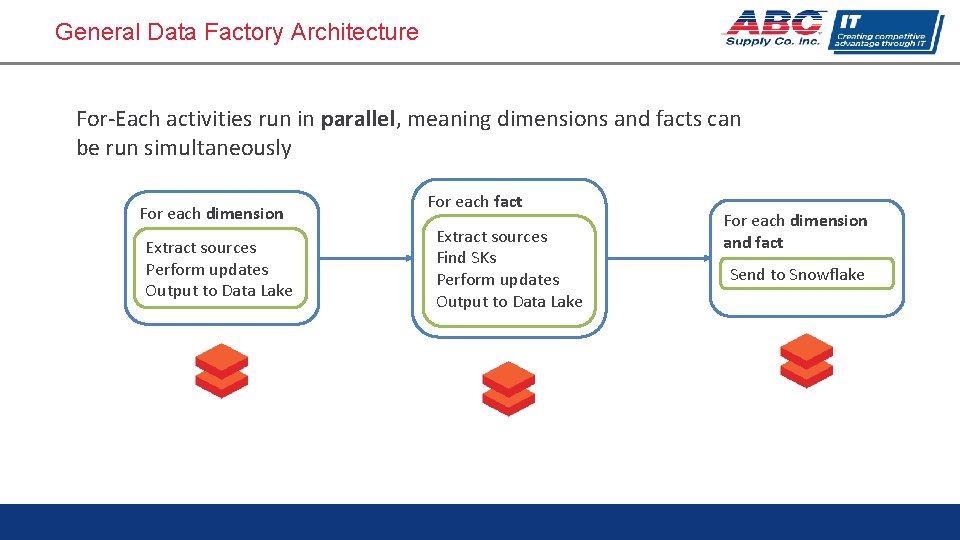

General Data Factory Architecture For-Each activities run in parallel, meaning dimensions and facts can be run simultaneously For each dimension Extract sources Perform updates Output to Data Lake For each fact Extract sources Find SKs Perform updates Output to Data Lake For each dimension and fact Send to Snowflake

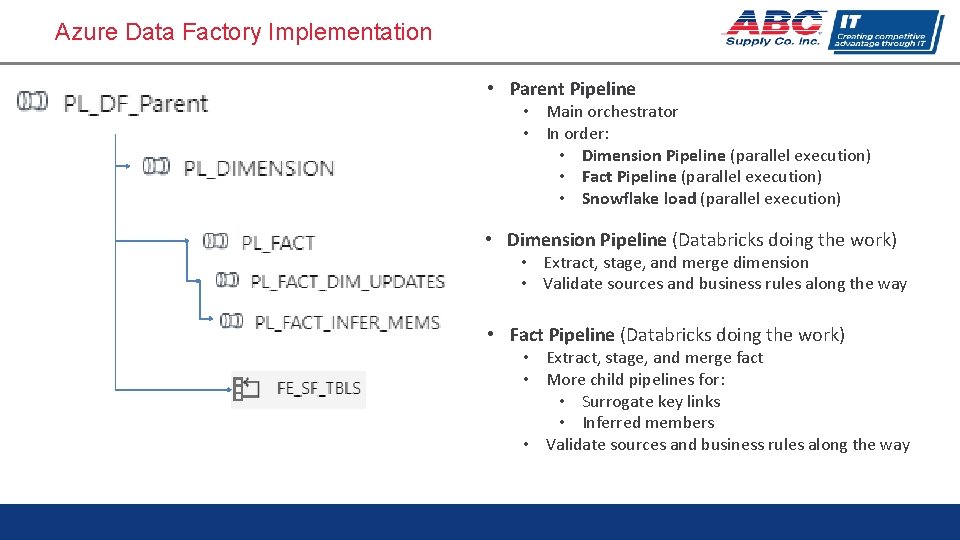

Azure Data Factory Implementation • Parent Pipeline • Main orchestrator • In order: • Dimension Pipeline (parallel execution) • Fact Pipeline (parallel execution) • Snowflake load (parallel execution) • Dimension Pipeline (Databricks doing the work) • Extract, stage, and merge dimension • Validate sources and business rules along the way • Fact Pipeline (Databricks doing the work) • Extract, stage, and merge fact • More child pipelines for: • Surrogate key links • Inferred members • Validate sources and business rules along the way

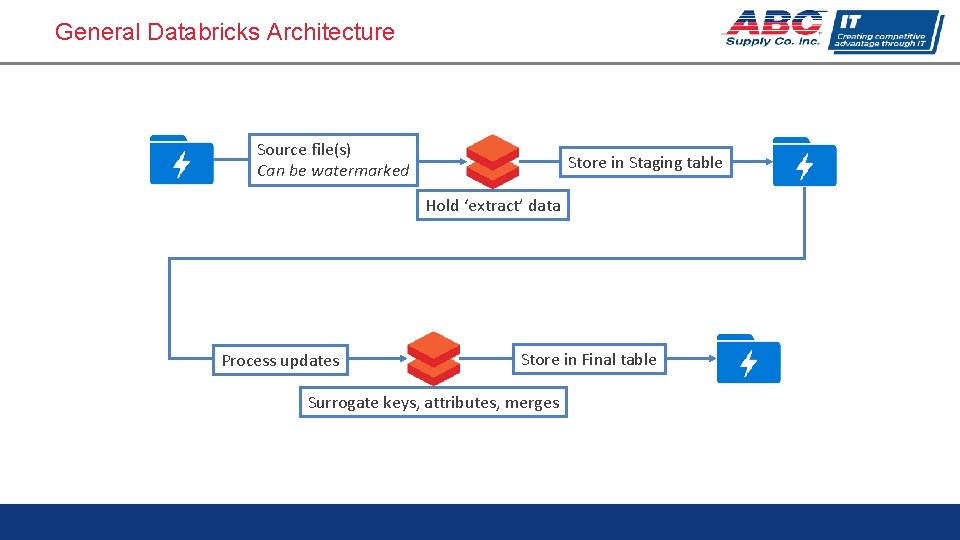

General Databricks Architecture Source file(s) Can be watermarked Store in Staging table Hold ‘extract’ data Process updates Store in Final table Surrogate keys, attributes, merges

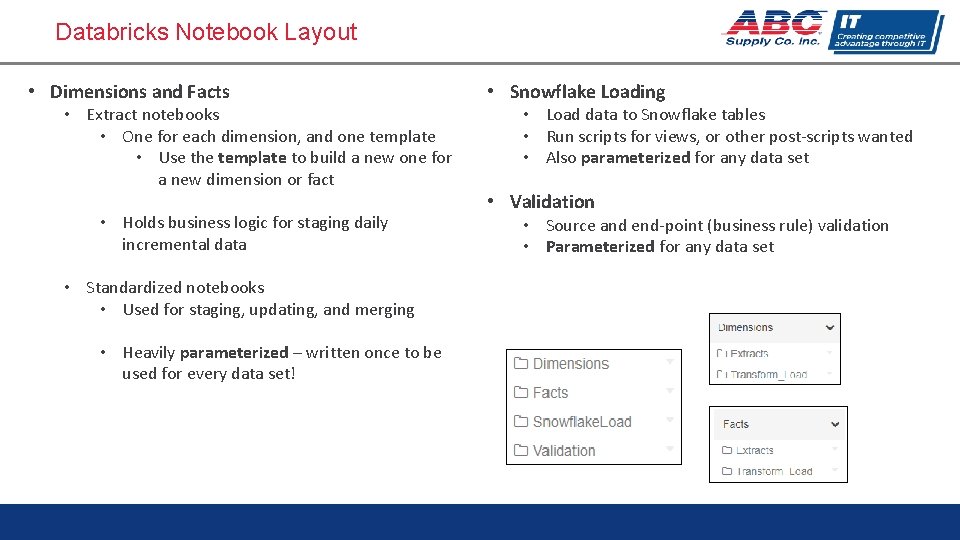

Databricks Notebook Layout • Dimensions and Facts • Extract notebooks • One for each dimension, and one template • Use the template to build a new one for a new dimension or fact • Holds business logic for staging daily incremental data • Standardized notebooks • Used for staging, updating, and merging • Heavily parameterized – written once to be used for every data set! • Snowflake Loading • Load data to Snowflake tables • Run scripts for views, or other post-scripts wanted • Also parameterized for any data set • Validation • Source and end-point (business rule) validation • Parameterized for any data set

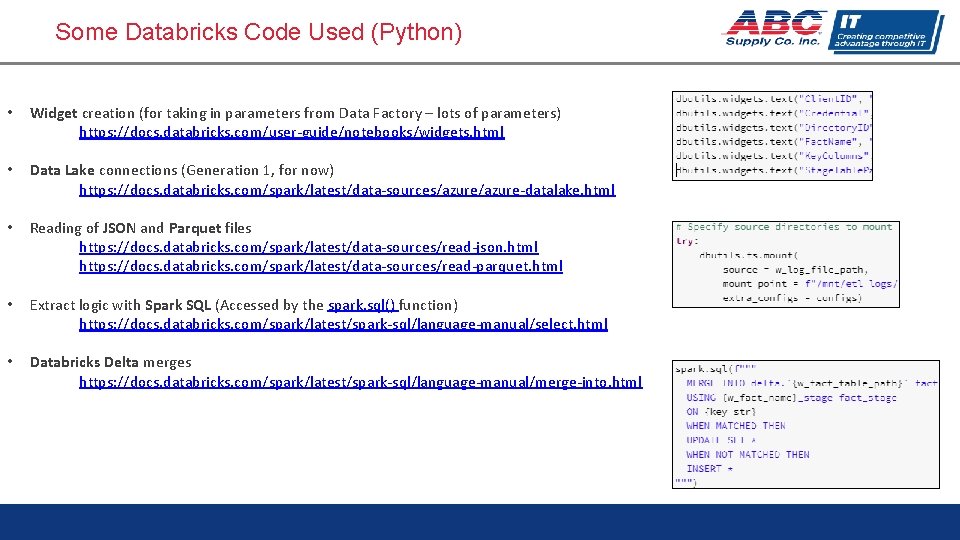

Some Databricks Code Used (Python) • Widget creation (for taking in parameters from Data Factory – lots of parameters) https: //docs. databricks. com/user-guide/notebooks/widgets. html • Data Lake connections (Generation 1, for now) https: //docs. databricks. com/spark/latest/data-sources/azure-datalake. html • Reading of JSON and Parquet files https: //docs. databricks. com/spark/latest/data-sources/read-json. html https: //docs. databricks. com/spark/latest/data-sources/read-parquet. html • Extract logic with Spark SQL (Accessed by the spark. sql() function) https: //docs. databricks. com/spark/latest/spark-sql/language-manual/select. html • Databricks Delta merges https: //docs. databricks. com/spark/latest/spark-sql/language-manual/merge-into. html

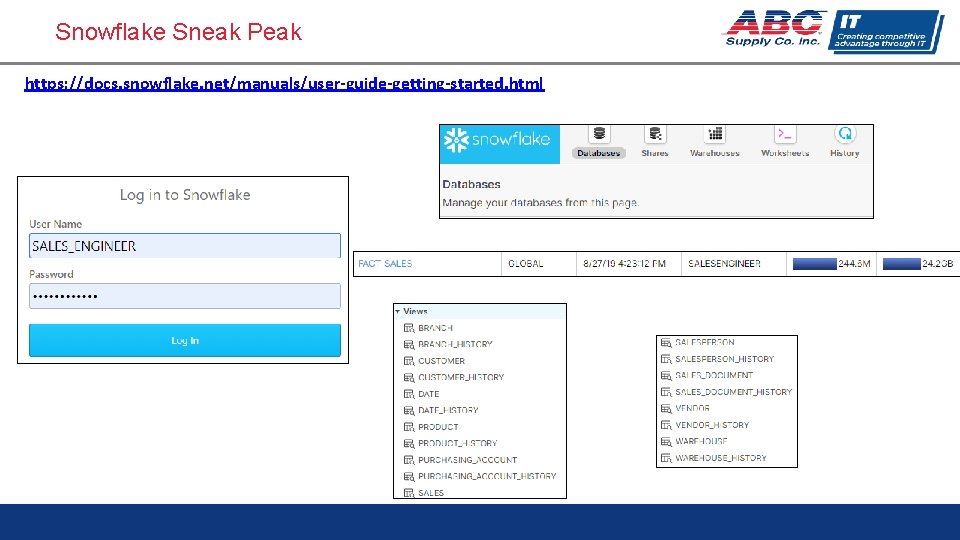

Snowflake Sneak Peak https: //docs. snowflake. net/manuals/user-guide-getting-started. html

Summary • Key take-aways • Metadata and configuration-driven development • Extensible for other data sets • Enables data science projects • Gives business confidence in data • Not a silver bullet! • Used well with big data and scaling needs • Extended thoughts • Concepts can be ported for other cloudbased services (such as AWS) • Data can be surfaced in different database systems/warehouses (such as Azure SQL Data Warehouse) • Features • Scalable • Data Factory, Data Lake, Databricks, Snowflake • Logging baked in • Validation baked in • Runtime diagnostics can be sent to Azure Log Analytics • Future Potential • Data Lake Generation 2

Questions? Comments?

- Slides: 13