POSIX Threads (Pthreads) for Shared Address Space Programming

Pthreads

- POSIX threads package
- Available on almost all machines (portable standard)
- Sort of like doing “parallel” (not “parallel for”) in OpenMP, explicitly
- Basic calls:
  - pthread_create: creates a thread to execute a given function
  - pthread_join
  - barrier, lock, mutex
  - Thread-private variables
- Many online resources:
  - E.g., https://computing.llnl.gov/tutorials/pthreads/

L. V. Kale

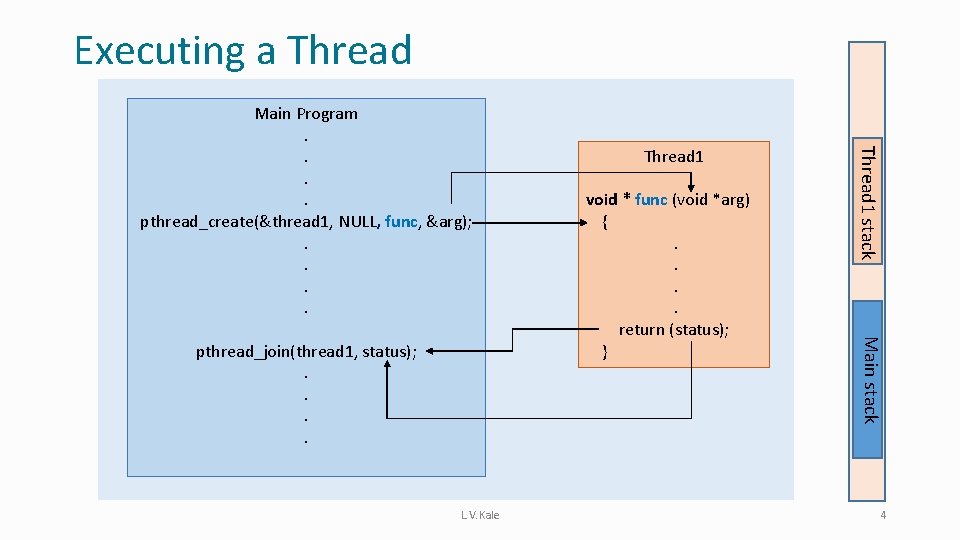

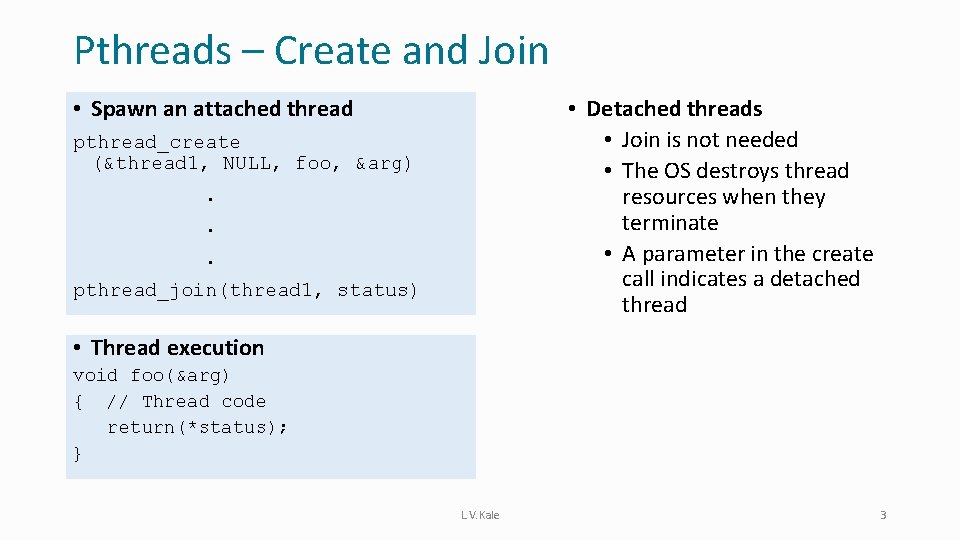

Pthreads – Create and Join

- Spawn an attached thread with pthread_create and wait for it with pthread_join
- Detached threads
  - Join is not needed
  - The OS destroys thread resources when they terminate
  - A parameter in the create call indicates a detached thread

Spawning and joining:

    pthread_create(&thread1, NULL, foo, &arg);
    ...
    pthread_join(thread1, status);   // status has type void **

Thread execution:

    void *foo(void *arg) {
      // Thread code
      return (*status);
    }
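
The create/join pattern above can be sketched as a small, compilable C++ helper. The names `square` and `run_square_in_thread` are made up for this illustration; only `pthread_create` and `pthread_join` come from the slide.

```cpp
#include <pthread.h>

// Hypothetical worker: squares the long that arg points to and hands the
// same pointer back through the thread's void* return value.
static void *square(void *arg) {
    long *value = static_cast<long *>(arg);
    *value = (*value) * (*value);
    return value;  // picked up by pthread_join in the creating thread
}

// Creates one joinable (attached) thread, waits for it, and returns the
// value it produced. Returns -1 if creating or joining the thread fails.
long run_square_in_thread(long input) {
    pthread_t thread;
    long slot = input;        // must outlive the thread; we join before returning
    void *status = nullptr;   // receives the worker's return value

    if (pthread_create(&thread, nullptr, square, &slot) != 0) return -1;
    if (pthread_join(thread, &status) != 0) return -1;
    return *static_cast<long *>(status);
}
```

Calling `run_square_in_thread(7)` from `main` runs the worker on a second thread and returns after the join. On most Linux toolchains this needs the `-pthread` compiler flag.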

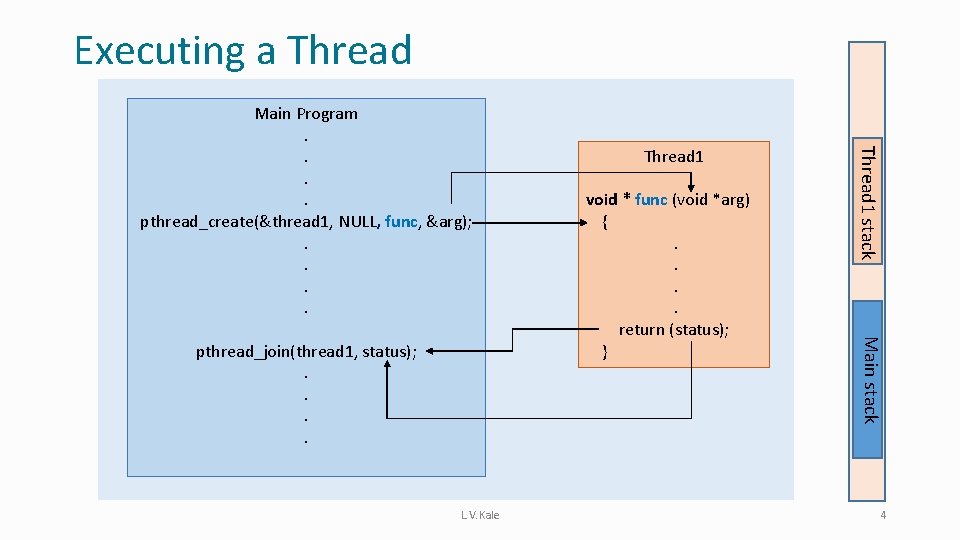

Executing a Thread

Main program (main stack):

    ...
    pthread_create(&thread1, NULL, func, &arg);
    ...
    pthread_join(thread1, status);
    ...

Thread 1 (thread 1 stack):

    void *func(void *arg) {
      ...
      return (status);
    }

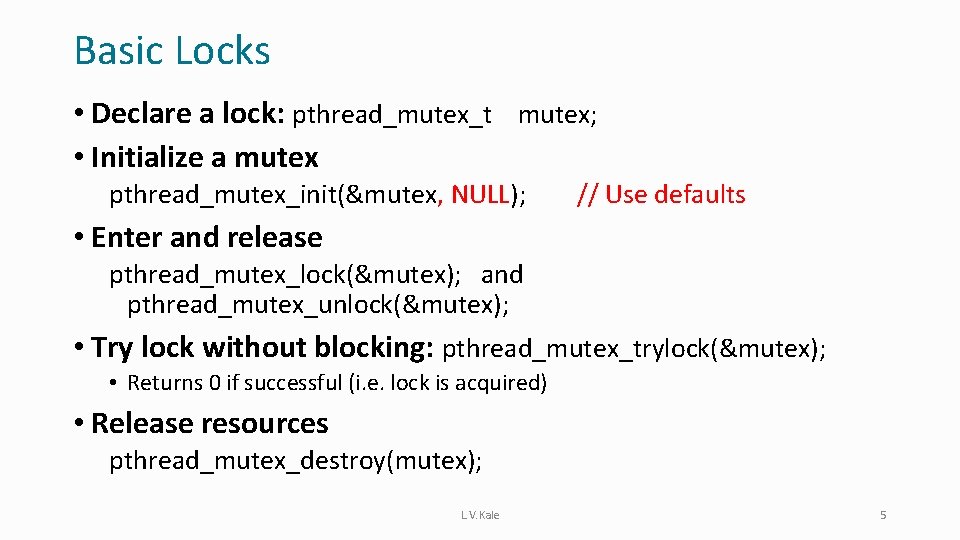

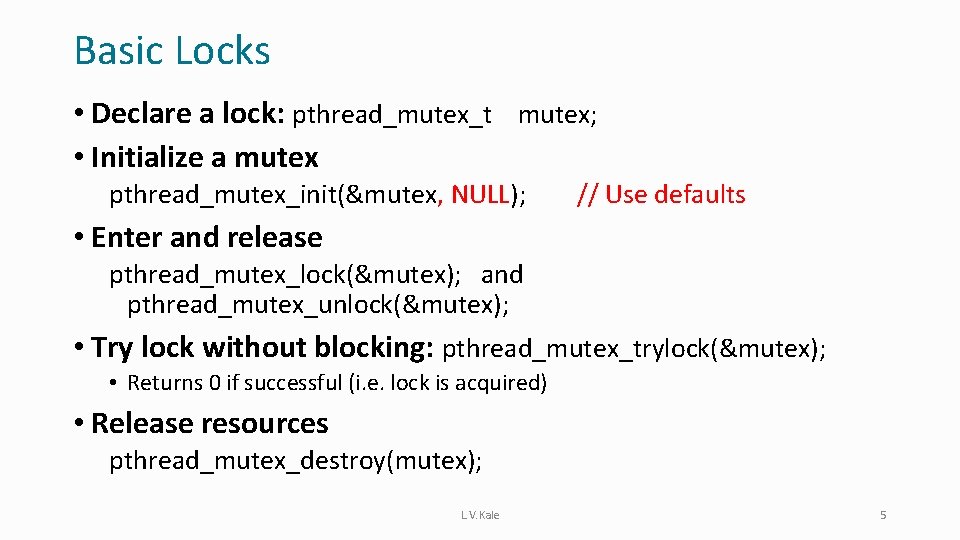

Basic Locks

- Declare a lock: pthread_mutex_t mutex;
- Initialize a mutex: pthread_mutex_init(&mutex, NULL); // use defaults
- Enter and release: pthread_mutex_lock(&mutex); and pthread_mutex_unlock(&mutex);
- Try to lock without blocking: pthread_mutex_trylock(&mutex);
  - Returns 0 if successful (i.e., the lock is acquired)
- Release resources: pthread_mutex_destroy(&mutex);
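
A minimal sketch of these calls working together: several threads increment one shared counter, and the mutex makes each increment a critical section. The function names and the iteration count are assumptions chosen for the example.

```cpp
#include <pthread.h>
#include <vector>

static pthread_mutex_t counter_mutex;
static long counter = 0;
static const int kIncrementsPerThread = 10000;  // illustrative value

// Each thread adds kIncrementsPerThread to the shared counter.
static void *add_many(void *) {
    for (int i = 0; i < kIncrementsPerThread; ++i) {
        pthread_mutex_lock(&counter_mutex);    // enter critical section
        ++counter;
        pthread_mutex_unlock(&counter_mutex);  // leave critical section
    }
    return nullptr;
}

// Runs num_threads workers and returns the final counter value.
long count_with_mutex(int num_threads) {
    counter = 0;
    pthread_mutex_init(&counter_mutex, nullptr);  // default attributes
    std::vector<pthread_t> threads(num_threads);
    for (auto &t : threads) pthread_create(&t, nullptr, add_many, nullptr);
    for (auto &t : threads) pthread_join(t, nullptr);
    pthread_mutex_destroy(&counter_mutex);
    return counter;
}
```

Without the lock/unlock pair the increments would race and the total would typically come out too small.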

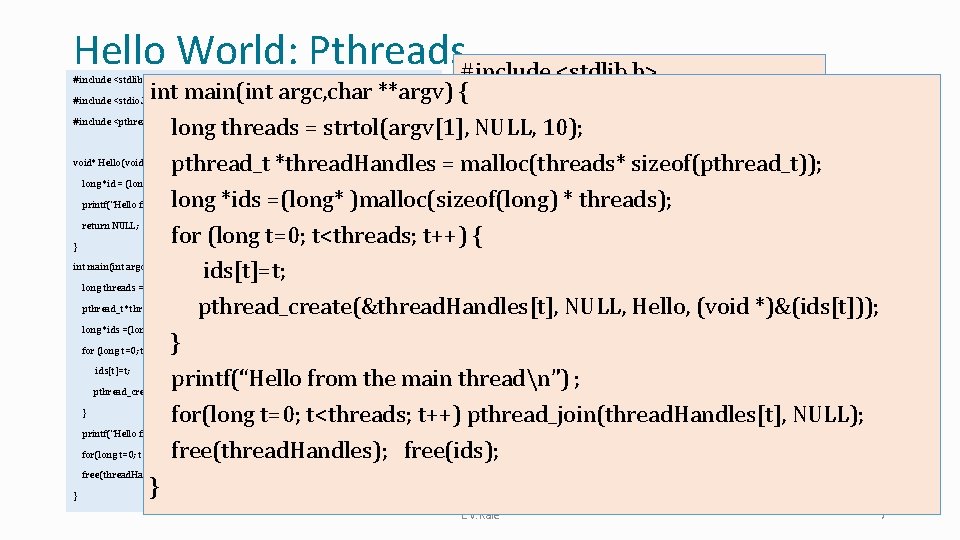

Hello World: Pthreads

    #include <stdio.h>
    #include <stdlib.h>
    #include <pthread.h>

    void* Hello(void* myRank) {
      long *id = (long*)(myRank);
      printf("Hello from thread %ld\n", *id);
      return NULL;
    }

    int main(int argc, char **argv) {
      long threads = strtol(argv[1], NULL, 10);
      pthread_t *threadHandles = malloc(threads * sizeof(pthread_t));
      long *ids = (long*)malloc(sizeof(long) * threads);
      for (long t = 0; t < threads; t++) {
        ids[t] = t;
        pthread_create(&threadHandles[t], NULL, Hello, (void *)&(ids[t]));
      }
      printf("Hello from the main thread\n");
      for (long t = 0; t < threads; t++)
        pthread_join(threadHandles[t], NULL);
      free(threadHandles);
      free(ids);
    }

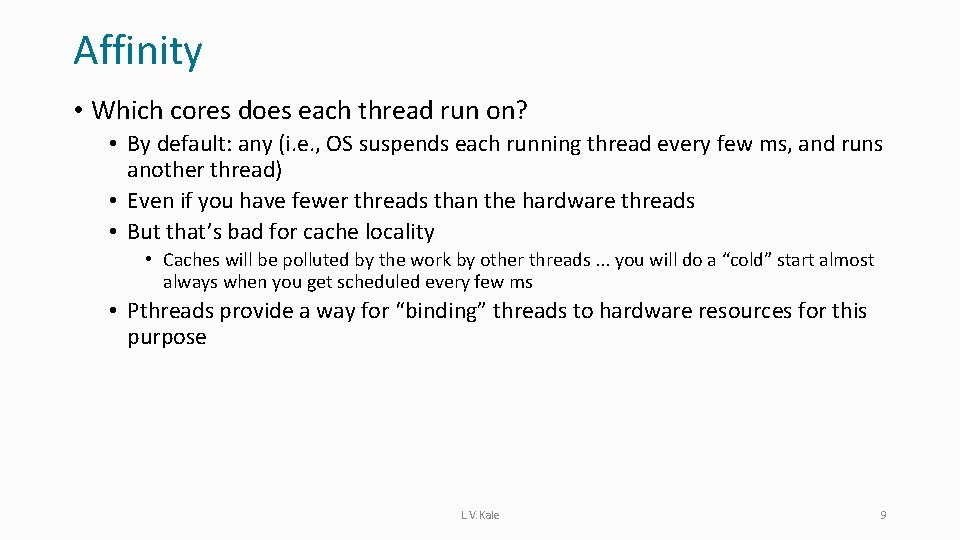

Threads and Resources

- Suppose you are running on a machine with K cores
  - Each core may have 2 “hardware threads” (SMT: simultaneous multi-threading)
  - This is often called hyperthreading
- How many pthreads can you create?
  - Unlimited (well … the system may run out of resources like memory)
  - Can be smaller or larger than K
  - In performance-oriented programs, it’s rarely more than 2K (assuming 2-way SMT)
  - We want to prevent the OS from swapping out our threads
- Which cores does each thread run on?
  - By default: any (i.e., the OS suspends each running thread every few ms and runs another thread)

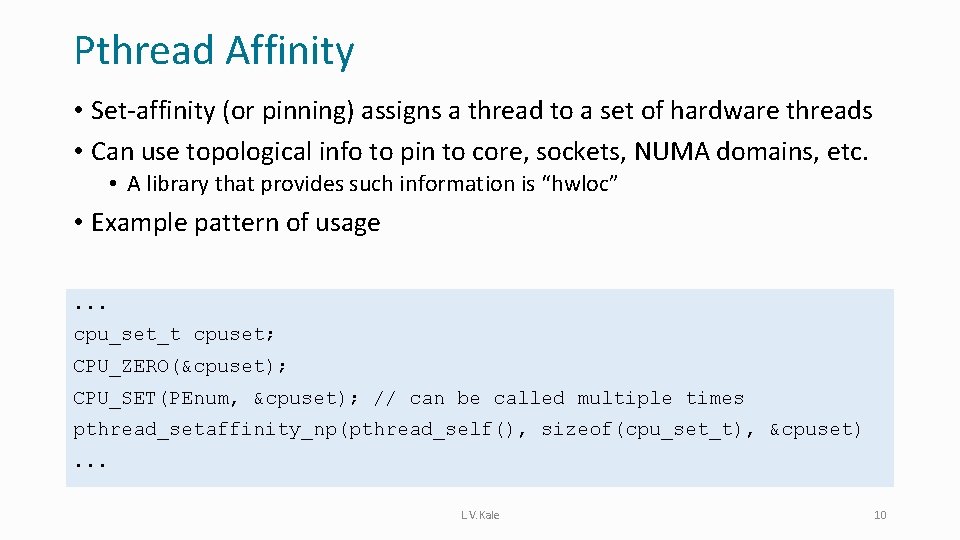

Affinity

- Which cores does each thread run on?
  - By default: any (i.e., the OS suspends each running thread every few ms and runs another thread)
  - Even if you have fewer threads than hardware threads
- But that’s bad for cache locality
  - Caches will be polluted by the work of other threads ... you will do a “cold” start almost always when you get scheduled every few ms
- Pthreads provide a way of “binding” threads to hardware resources for this purpose

Pthread Affinity

- Set-affinity (or pinning) assigns a thread to a set of hardware threads
- Can use topological info to pin to cores, sockets, NUMA domains, etc.
- A library that provides such information is “hwloc”
- Example pattern of usage:

      ...
      cpu_set_t cpuset;
      CPU_ZERO(&cpuset);
      CPU_SET(PEnum, &cpuset); // can be called multiple times
      pthread_setaffinity_np(pthread_self(), sizeof(cpu_set_t), &cpuset);
      ...
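
The usage pattern above, wrapped into a function that can be compiled on Linux with glibc (the `_np` calls are non-portable extensions). Rather than hard-coding a `PEnum`, this sketch pins the calling thread to the first CPU it is currently allowed to run on; that choice and the function name are assumptions made for the example.

```cpp
#ifndef _GNU_SOURCE
#define _GNU_SOURCE  // needed for cpu_set_t macros and the *_np calls
#endif
#include <pthread.h>
#include <sched.h>

// Pins the calling thread to the first CPU in its current affinity mask.
// Returns the chosen CPU index, or -1 on failure.
int pin_self_to_first_allowed_cpu() {
    cpu_set_t allowed;
    CPU_ZERO(&allowed);
    if (pthread_getaffinity_np(pthread_self(), sizeof(cpu_set_t), &allowed) != 0)
        return -1;

    for (int cpu = 0; cpu < CPU_SETSIZE; ++cpu) {
        if (!CPU_ISSET(cpu, &allowed)) continue;
        cpu_set_t cpuset;
        CPU_ZERO(&cpuset);
        CPU_SET(cpu, &cpuset);  // could be called again to allow more CPUs
        if (pthread_setaffinity_np(pthread_self(), sizeof(cpu_set_t), &cpuset) != 0)
            return -1;
        return cpu;
    }
    return -1;  // empty mask: nothing to pin to
}
```

In a real program each worker would typically call something like this once at startup, with the CPU chosen from topology information such as hwloc provides.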

OpenMP vs. Pthreads

- OpenMP is great for parallel loops
  - And for many simple situations with just “#pragma omp parallel” as well
- But when there is complicated synchronization, and performance is important, pthreads are (currently) better
- However, pthreads are not available on all machines/OSes
  - Especially Windows

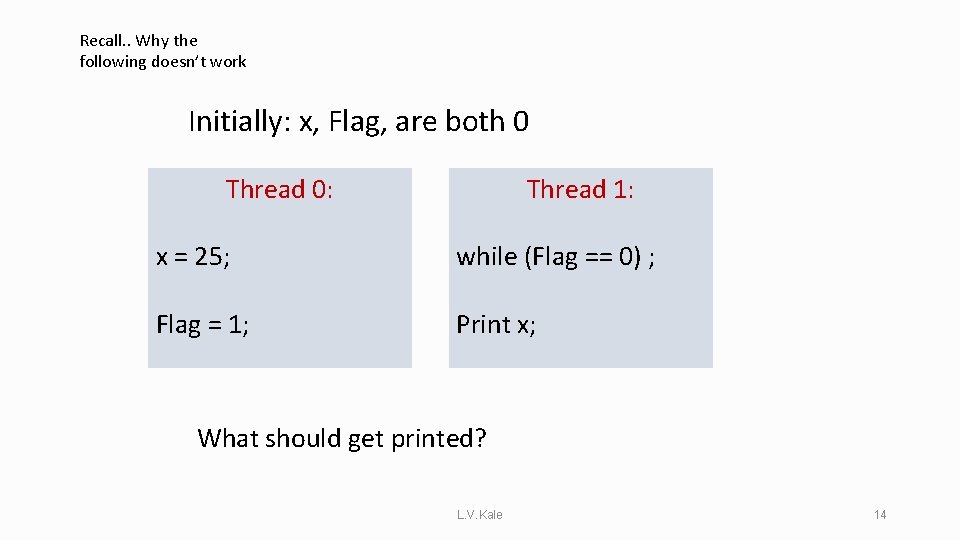

Performance Oriented Programming in Pthreads

- Pthreads as used in OS programming don’t need to be as performance oriented as what we need in HPC
  - E.g., “synchronizing” every few microseconds
  - I.e., exchanging data or waiting for signals
- Improving performance:
  - Always use affinity
  - Decide the number of pthreads so as to avoid any over-subscription, and use SMT only if memory bandwidth (and floating point intensity) permit
  - Minimize barriers, using point-to-point synchronization as much as possible (say, between a producer and a consumer, as in Gauss-Seidel)
  - Reduce cross-core communication (it’s much better to use the data produced on one core on the same core if/when possible)
  - Locks cause serialization of computation across threads

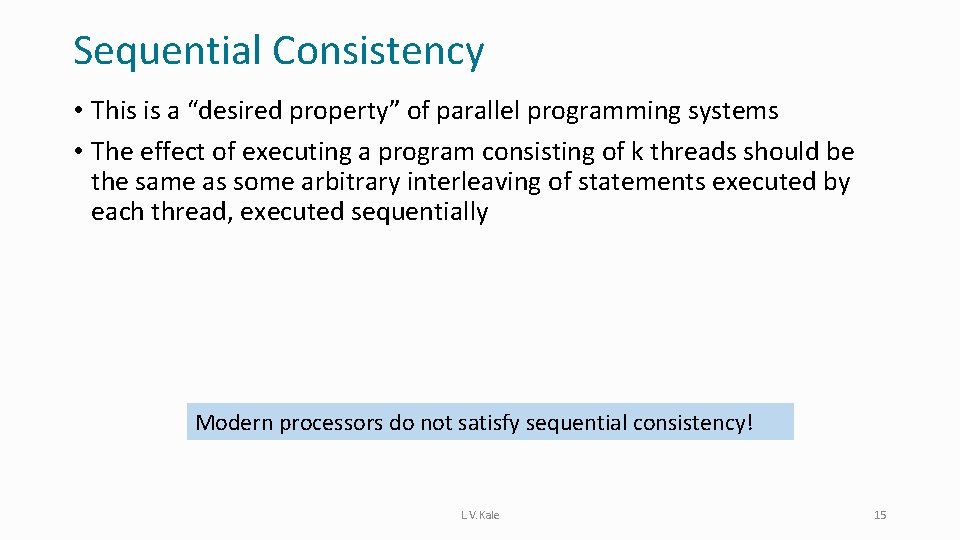

C++11 Atomics: Wait-free Synchronization and Queues

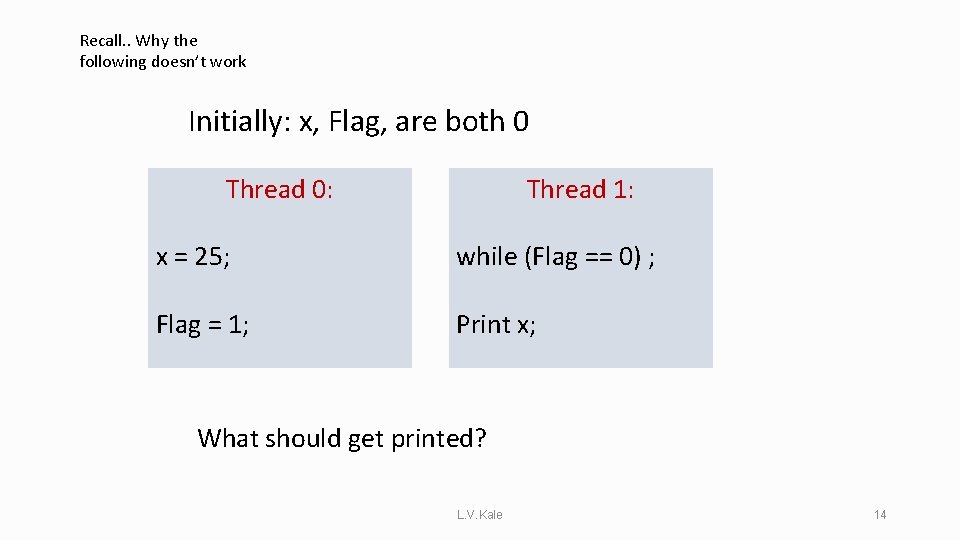

Recall: Why the Following Doesn’t Work

Initially, x and Flag are both 0.

    Thread 0:        Thread 1:
    x = 25;          while (Flag == 0) ;
    Flag = 1;        print x;

What should get printed?
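
For comparison, here is a sketch of the same two-thread program with the flag declared as a C++11 atomic, using the default (sequentially consistent) loads and stores. The function name is hypothetical. Because the write to `x` happens before the atomic store, and the atomic load that observes 1 happens before the read of `x`, the consumer is guaranteed to see 25.

```cpp
#include <atomic>
#include <thread>

// Runs "Thread 0" and "Thread 1" from the slide with an atomic flag and
// returns the value of x that Thread 1 observed.
int publish_and_read() {
    int x = 0;                  // ordinary, non-atomic payload
    std::atomic<int> flag{0};   // the synchronization variable
    int seen = -1;

    std::thread reader([&] {    // Thread 1
        while (flag.load() == 0) std::this_thread::yield();
        seen = x;               // ordered after the store to flag
    });
    std::thread writer([&] {    // Thread 0
        x = 25;
        flag.store(1);          // publishes the write to x
    });

    writer.join();
    reader.join();
    return seen;
}
```

With a plain `int` flag the same code would contain a data race, and both the compiler and the hardware would be free to reorder or cache the accesses.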

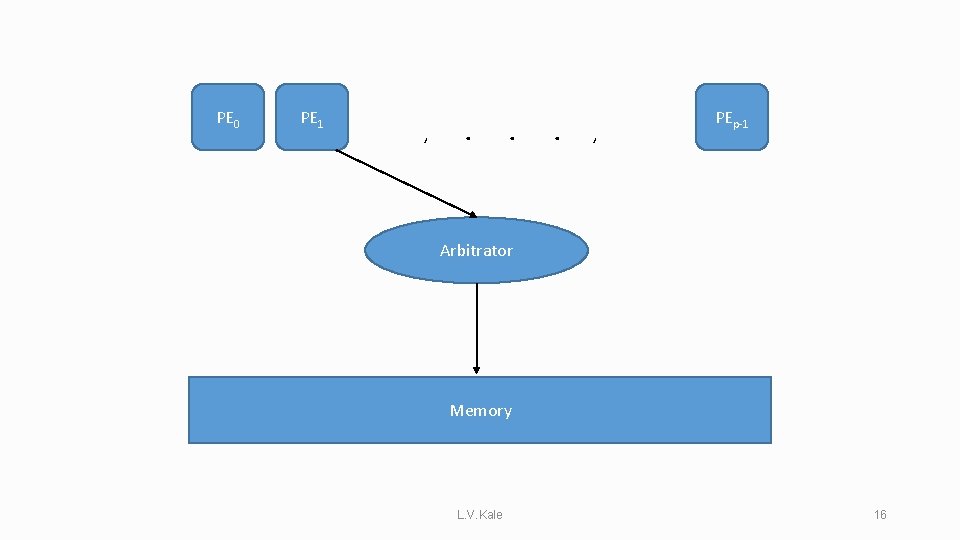

Sequential Consistency

- This is a “desired property” of parallel programming systems
- The effect of executing a program consisting of k threads should be the same as some arbitrary interleaving of statements executed by each thread, executed sequentially

Modern processors do not satisfy sequential consistency!

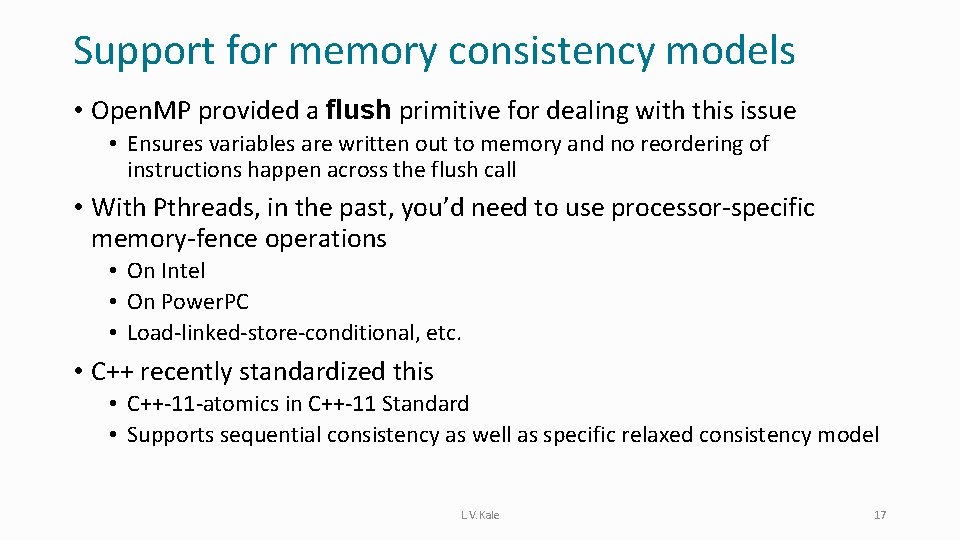

[Figure labels: PE0, PE1, ..., PEp-1; Arbitrator; Memory]

Support for Memory Consistency Models

- OpenMP provided a flush primitive for dealing with this issue
  - Ensures variables are written out to memory and no reordering of instructions happens across the flush call
- With Pthreads, in the past, you’d need to use processor-specific memory-fence operations
  - On Intel
  - On PowerPC
  - Load-linked/store-conditional, etc.
- C++ recently standardized this
  - C++11 atomics in the C++11 standard
  - Supports sequential consistency as well as specific relaxed consistency models

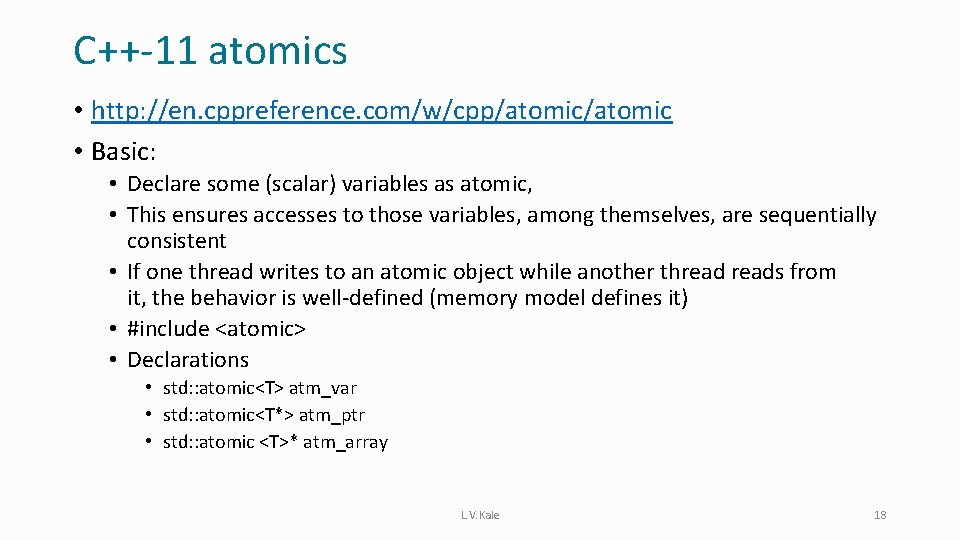

C++11 Atomics

- http://en.cppreference.com/w/cpp/atomic
- Basic:
  - Declare some (scalar) variables as atomic
  - This ensures accesses to those variables, among themselves, are sequentially consistent
  - If one thread writes to an atomic object while another thread reads from it, the behavior is well-defined (the memory model defines it)
- #include <atomic>
- Declarations:
  - std::atomic<T> atm_var
  - std::atomic<T*> atm_ptr
  - std::atomic<T>* atm_array

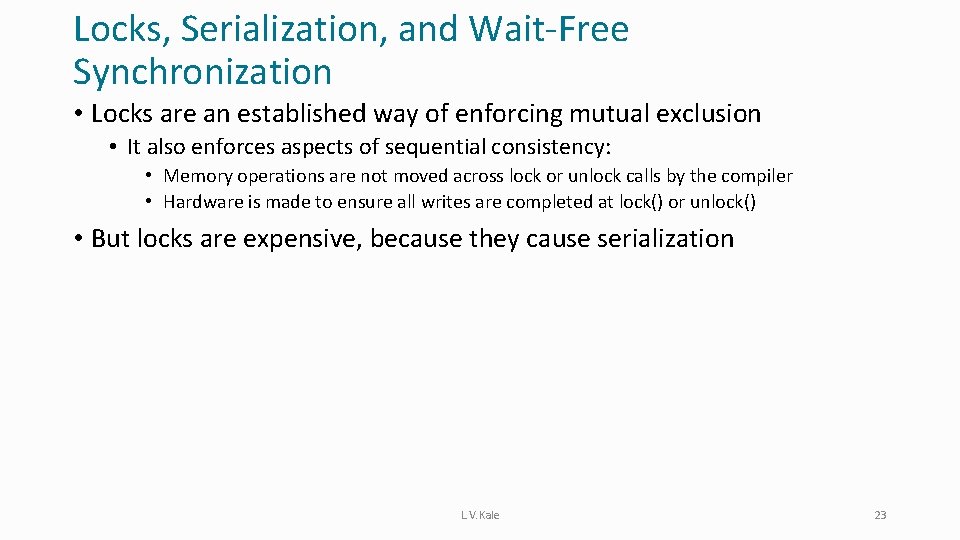

Atomic: C++11

- atomic: class template and specializations for bool, int, and pointer types
- atomic_store: atomically replaces the value of the atomic object with a non-atomic argument
- atomic_load: atomically obtains the value stored in an atomic object
- atomic_fetch_add: adds a non-atomic value to an atomic object and obtains the previous value of the atomic
- atomic_compare_exchange_strong: atomically compares the value of the atomic object with a non-atomic argument and performs an atomic exchange if equal or an atomic load if not

Source: https://en.cppreference.com/w/cpp/atomic

atomic_compare_exchange_strong(a, b, c)

[Figure: two cases with b = 0 and c = 7. With a = 5, the test a = b fails (✗); with a = 0, the test a = b succeeds (✔).]
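
The two cases can be checked with a short sketch. `try_cas` and `CasResult` are names invented for this example; `std::atomic_compare_exchange_strong` is the standard free function, which takes pointers to the atomic object and to the expected value.

```cpp
#include <atomic>

// What we can observe after one compare-exchange attempt.
struct CasResult {
    bool swapped;        // did the exchange happen?
    int atomic_value;    // value of the atomic object afterwards
    int expected_after;  // value left in the "expected" argument
};

// Builds an atomic holding `initial`, then tries to replace `expected`
// with `desired`.
CasResult try_cas(int initial, int expected, int desired) {
    std::atomic<int> a{initial};
    bool ok = std::atomic_compare_exchange_strong(&a, &expected, desired);
    // On failure, `expected` has been overwritten with the current value of a.
    return {ok, a.load(), expected};
}
```

With a = 5, b = 0, c = 7 the call fails, leaves a at 5, and loads 5 into b. With a = 0 it succeeds and a becomes 7.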

C++11 Atomics: Avoiding Locks and Serialization; Queues with Atomics

Locks, Serialization, and Wait-Free Synchronization

- Locks are an established way of enforcing mutual exclusion
- They also enforce aspects of sequential consistency:
  - Memory operations are not moved across lock or unlock calls by the compiler
  - Hardware is made to ensure all writes are completed at lock() or unlock()
- But locks are expensive, because they cause serialization

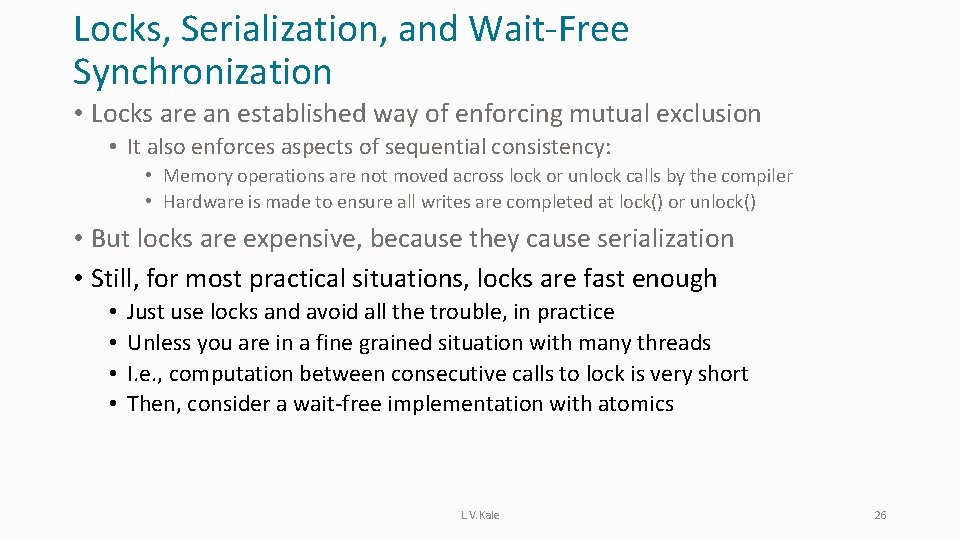

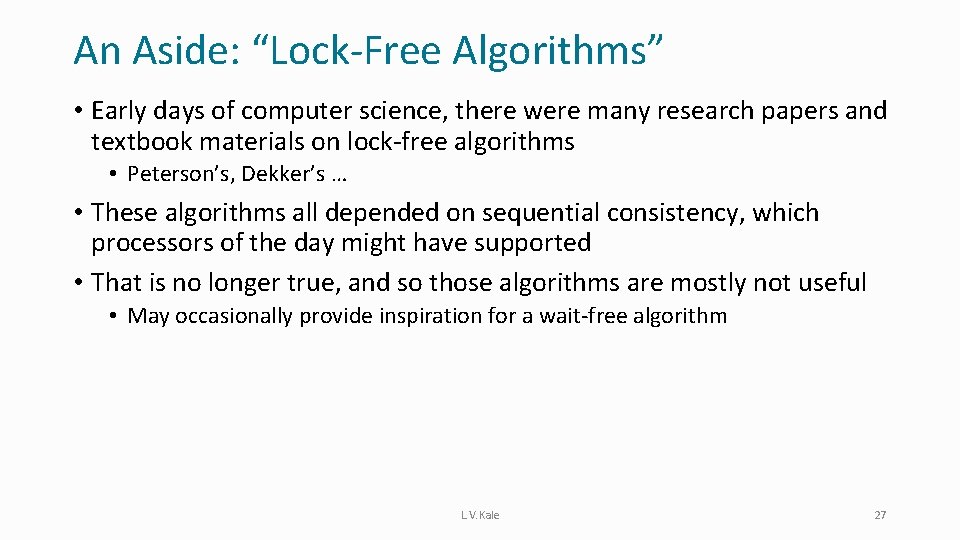

Locks, Critical Sections, and Serialization

- Suppose all threads are doing the following:

      for I = 0, N
        dowork();
        lock(x);
        critical ...;
        unlock(x)

- The work in dowork() is tw
- The time in critical is tc
- The serialization cost becomes a problem as the number of threads increases, but can be small as long as #threads < tw/tc
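
A rough way to see where the tw/tc threshold comes from, under the simplifying assumptions (not stated on the slide) that every iteration costs exactly tw + tc and that the lock itself is free: with P threads, the lock is requested for a total of P·tc out of every tw + tc of wall-clock time, and it can serve at most one thread at a time.

```latex
\[
P \cdot \frac{t_c}{t_w + t_c} \le 1
\quad\Longleftrightarrow\quad
P \le 1 + \frac{t_w}{t_c}
\]
\[
\text{speedup}(P) \;\le\; \min\!\left(P,\; \frac{t_w + t_c}{t_c}\right)
\]
```

For example, with tw = 100 µs and tc = 5 µs the lock saturates at about 21 threads; beyond that, extra threads only wait.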

[Figure: timelines of several threads alternating work segments (tw) and critical sections (tc)]

Locks, Serialization, and Wait-Free Synchronization

- Locks are an established way of enforcing mutual exclusion
- They also enforce aspects of sequential consistency:
  - Memory operations are not moved across lock or unlock calls by the compiler
  - Hardware is made to ensure all writes are completed at lock() or unlock()
- But locks are expensive, because they cause serialization
- Still, for most practical situations, locks are fast enough
  - Just use locks and avoid all the trouble, in practice
  - Unless you are in a fine-grained situation with many threads
    - I.e., computation between consecutive calls to lock is very short
  - Then, consider a wait-free implementation with atomics

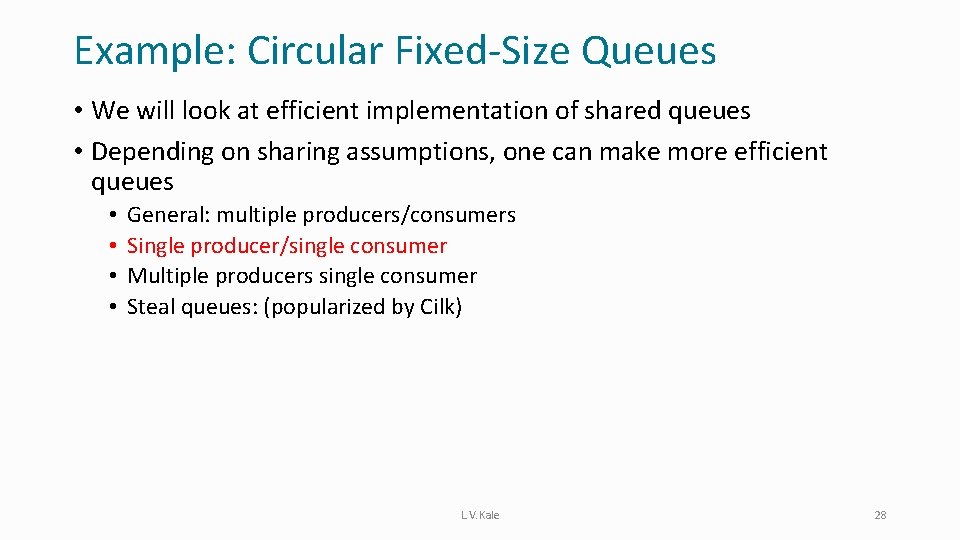

An Aside: “Lock-Free Algorithms”

- In the early days of computer science, there were many research papers and textbook materials on lock-free algorithms
  - Peterson’s, Dekker’s …
- These algorithms all depended on sequential consistency, which processors of the day might have supported
- That is no longer true, and so those algorithms are mostly not useful
  - May occasionally provide inspiration for a wait-free algorithm

Example: Circular Fixed-Size Queues

- We will look at efficient implementations of shared queues
- Depending on sharing assumptions, one can make more efficient queues
  - General: multiple producers/consumers
  - Single producer/single consumer
  - Multiple producers, single consumer
  - Steal queues (popularized by Cilk)

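Circular Queues: Implementation

- Array of fixed size 2^n
- Masking of indices

[Figure: ever-increasing indices 0, 1, 2, 3, ..., 1021, 1022, 1023, 1024, 1025, ... mapped onto array slots 0, 1, 2, 3, ..., 1021, 1022, 1023, 0, 1, ...]

Masking of indices, for a 1024-entry array: 1025 (binary 10000000001) is masked to 1 (binary 00000000001).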
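
The `mask` helper used by the queue code on the following slides is not shown there; a plausible definition, assuming a capacity of 1024 as in the figure, is a bitwise AND with capacity - 1:

```cpp
#include <cstddef>

// Assumed queue capacity; it must be a power of two for masking to work.
constexpr std::size_t kCapacity = 1024;
static_assert((kCapacity & (kCapacity - 1)) == 0,
              "capacity must be a power of two");

// Maps an ever-increasing logical index onto an array slot.
// Equivalent to index % kCapacity, but a single AND instruction.
constexpr std::size_t mask(std::size_t index) {
    return index & (kCapacity - 1);
}
```

Because head and tail are only ever incremented, the mask is applied at each array access rather than when the index is updated.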

Single Producer Single Consumer Queue

- We will look at a fixed-size queue
  - Not allowed to grow beyond a fixed limit
- Single producer accesses the tail
- Single consumer accesses the head
  - No contention on head and tail
- Count the number of elements in the queue: used to safeguard against empty and full conditions on the queue
- Three implementations
  - Lockless, thread unsafe
  - Locking, thread safe
  - Lockless, thread safe

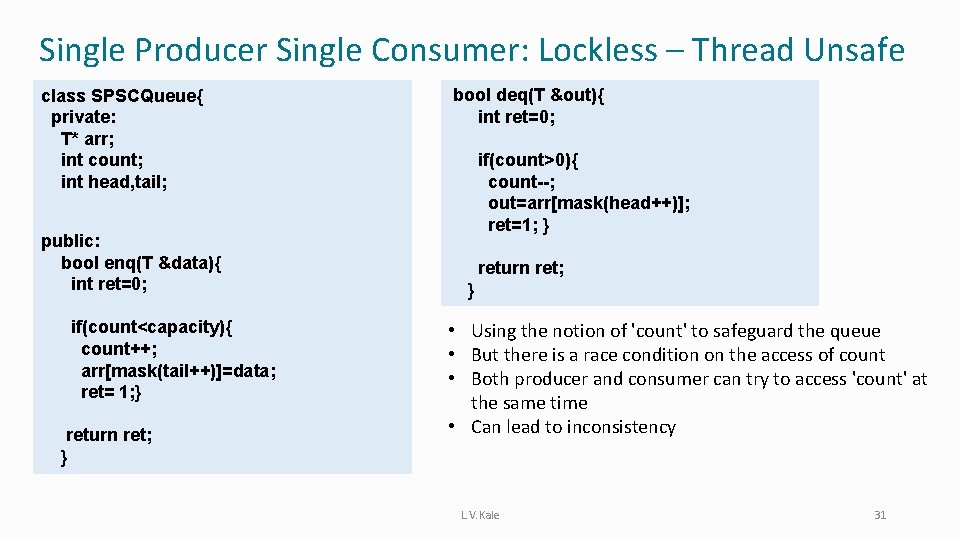

Single Producer Single Consumer: Lockless – Thread Unsafe

    class SPSCQueue {
    private:
      T* arr;
      int count;
      int head, tail;
    public:
      bool enq(T &data) {
        int ret = 0;
        if (count < capacity) {
          count++;
          arr[mask(tail++)] = data;
          ret = 1;
        }
        return ret;
      }
      bool deq(T &out) {
        int ret = 0;
        if (count > 0) {
          count--;
          out = arr[mask(head++)];
          ret = 1;
        }
        return ret;
      }
    };

- Using the notion of 'count' to safeguard the queue
- But there is a race condition on the access of count
  - Both producer and consumer can try to access 'count' at the same time
  - Can lead to inconsistency

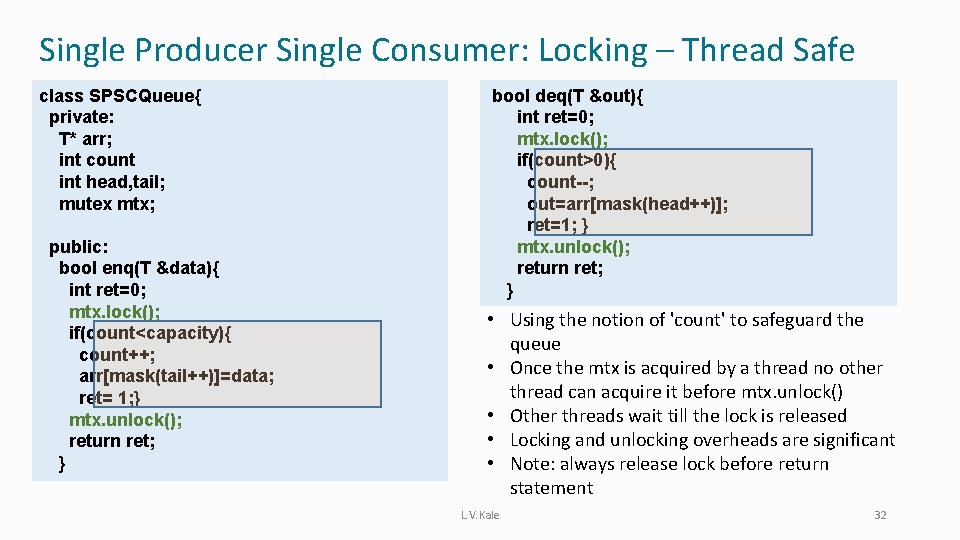

Single Producer Single Consumer: Locking – Thread Safe

    class SPSCQueue {
    private:
      T* arr;
      int count;
      int head, tail;
      mutex mtx;
    public:
      bool enq(T &data) {
        int ret = 0;
        mtx.lock();
        if (count < capacity) {
          count++;
          arr[mask(tail++)] = data;
          ret = 1;
        }
        mtx.unlock();
        return ret;
      }
      bool deq(T &out) {
        int ret = 0;
        mtx.lock();
        if (count > 0) {
          count--;
          out = arr[mask(head++)];
          ret = 1;
        }
        mtx.unlock();
        return ret;
      }
    };

- Using the notion of 'count' to safeguard the queue
- Once the mtx is acquired by a thread, no other thread can acquire it before mtx.unlock()
  - Other threads wait till the lock is released
- Locking and unlocking overheads are significant
- Note: always release the lock before the return statement
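
One way to make "always release the lock before returning" automatic in C++ is `std::lock_guard`, which unlocks in its destructor on every return path. The sketch below is a variation on the slide's class, not the slide's own code: the class name, the constructor, and the use of `std::vector` for storage are assumptions.

```cpp
#include <cstddef>
#include <mutex>
#include <vector>

// Fixed-size circular queue protected by one mutex.
// capacity_pow2 is assumed to be a power of two.
template <typename T>
class LockedQueue {
public:
    explicit LockedQueue(std::size_t capacity_pow2)
        : arr_(capacity_pow2), capacity_(capacity_pow2) {}

    bool enq(const T &data) {
        std::lock_guard<std::mutex> guard(mtx_);  // released at any return
        if (count_ == capacity_) return false;    // full
        arr_[mask(tail_++)] = data;
        ++count_;
        return true;
    }

    bool deq(T &out) {
        std::lock_guard<std::mutex> guard(mtx_);
        if (count_ == 0) return false;            // empty
        out = arr_[mask(head_++)];
        --count_;
        return true;
    }

private:
    std::size_t mask(std::size_t i) const { return i & (capacity_ - 1); }

    std::vector<T> arr_;
    std::size_t capacity_;
    std::size_t count_ = 0, head_ = 0, tail_ = 0;
    std::mutex mtx_;
};
```

With the guard in place, early returns no longer need the `ret` variable that the slide uses to funnel everything through a single unlock.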


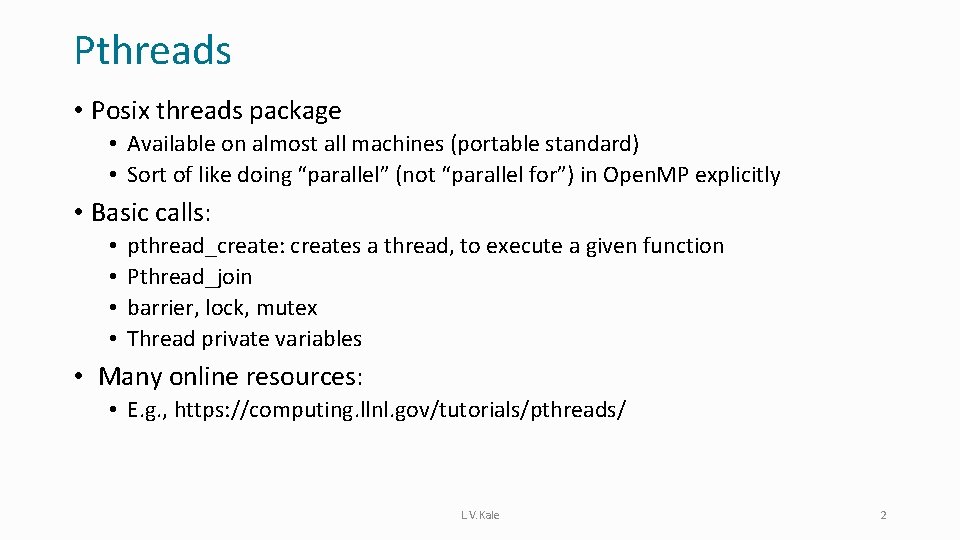

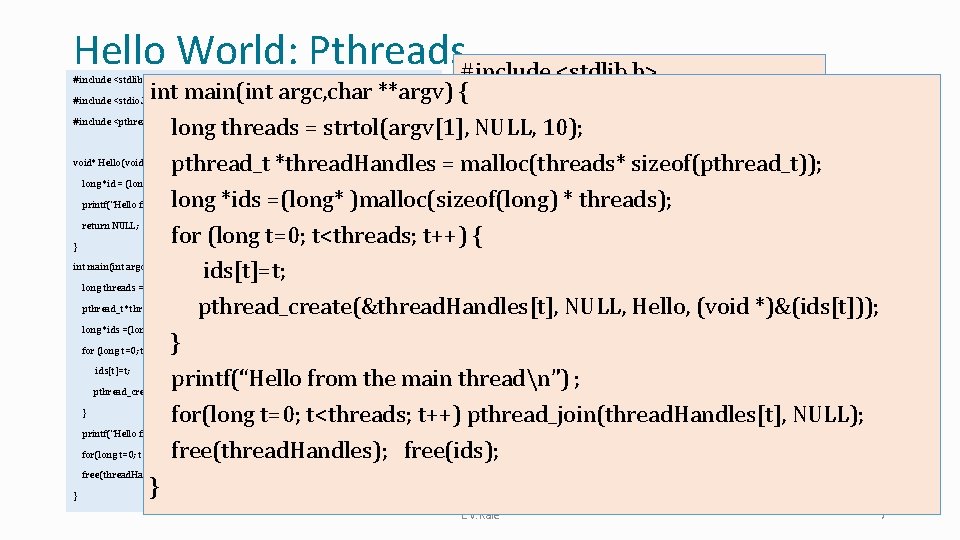

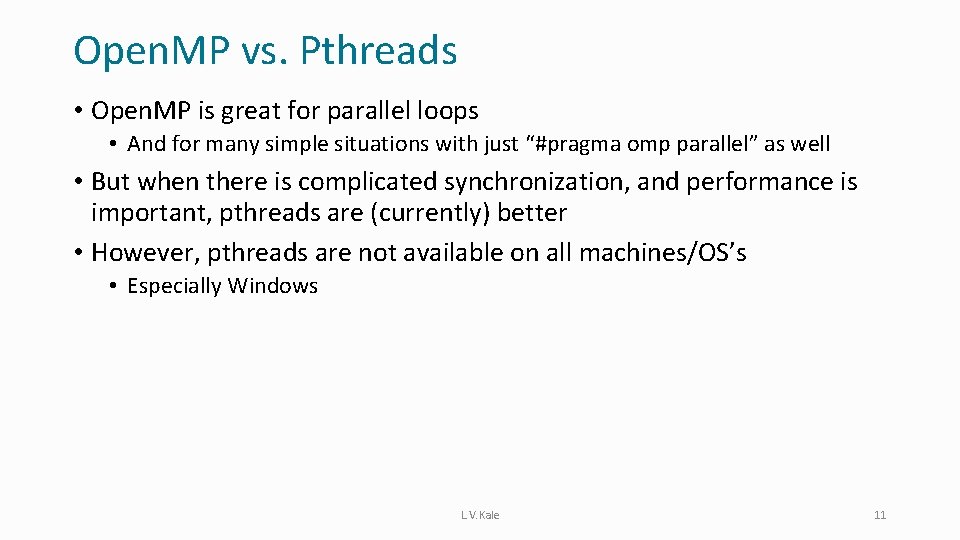

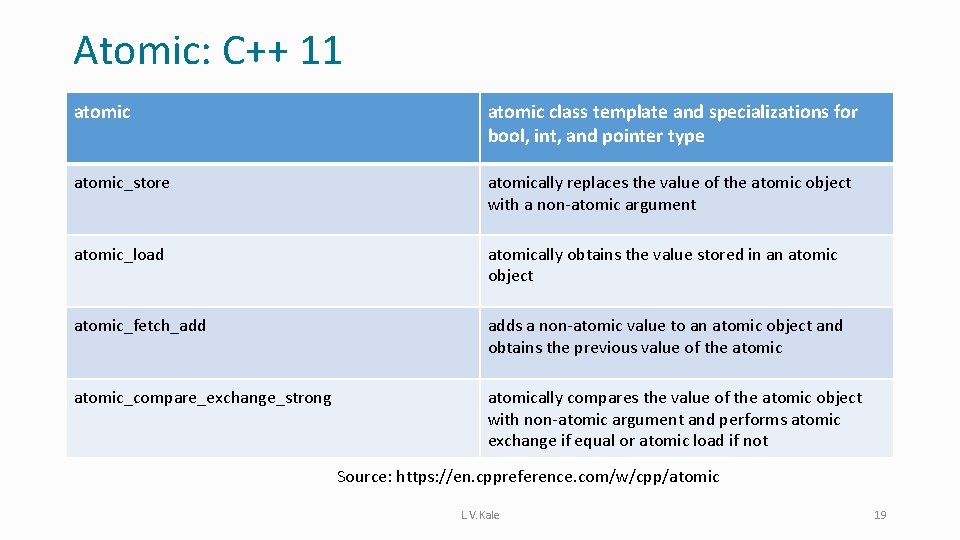

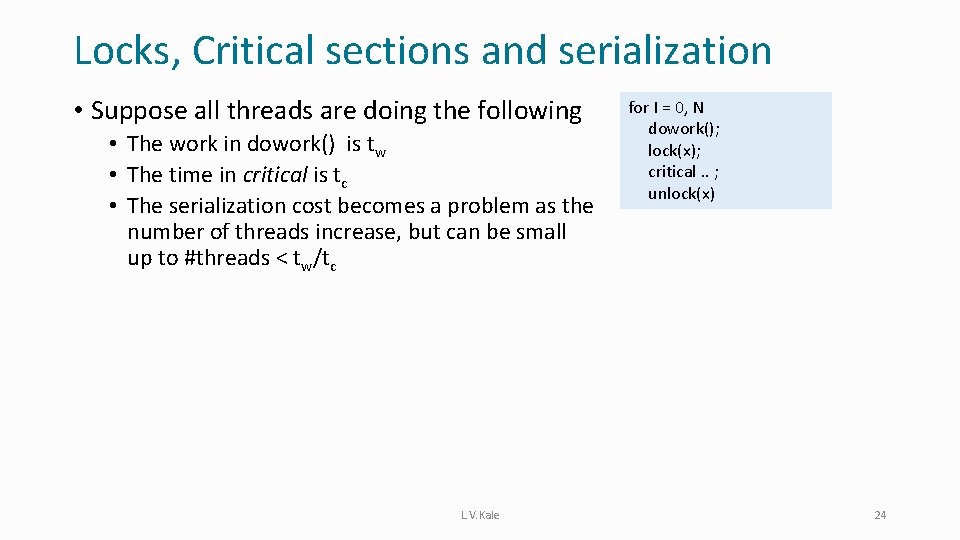

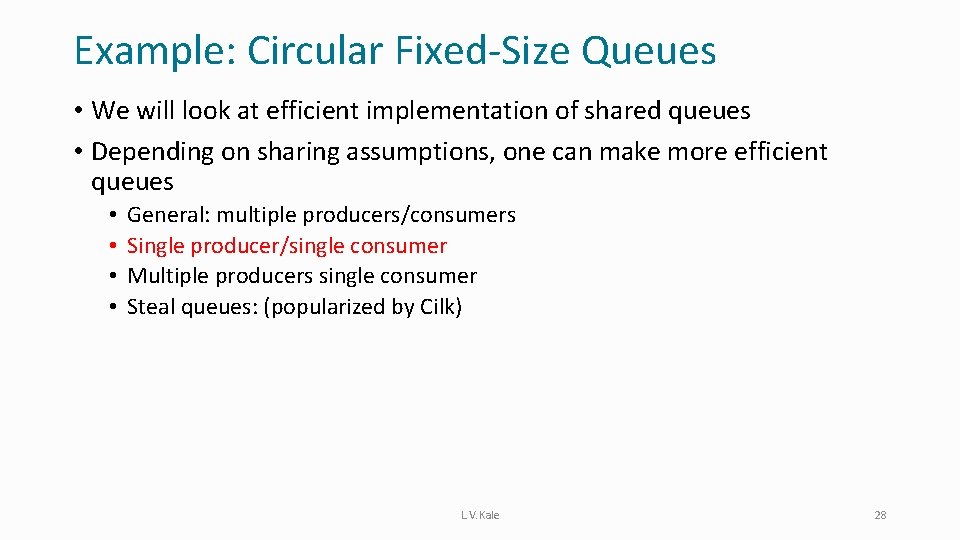

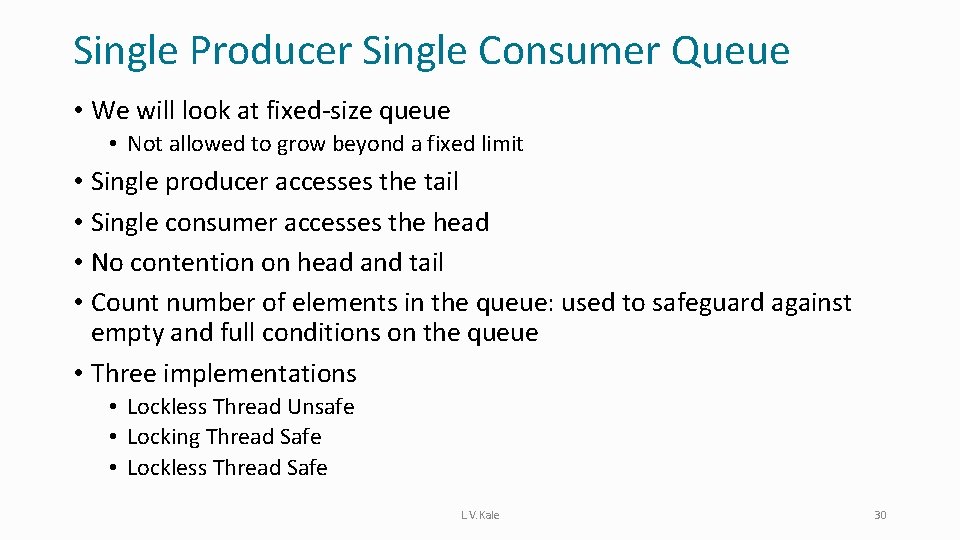

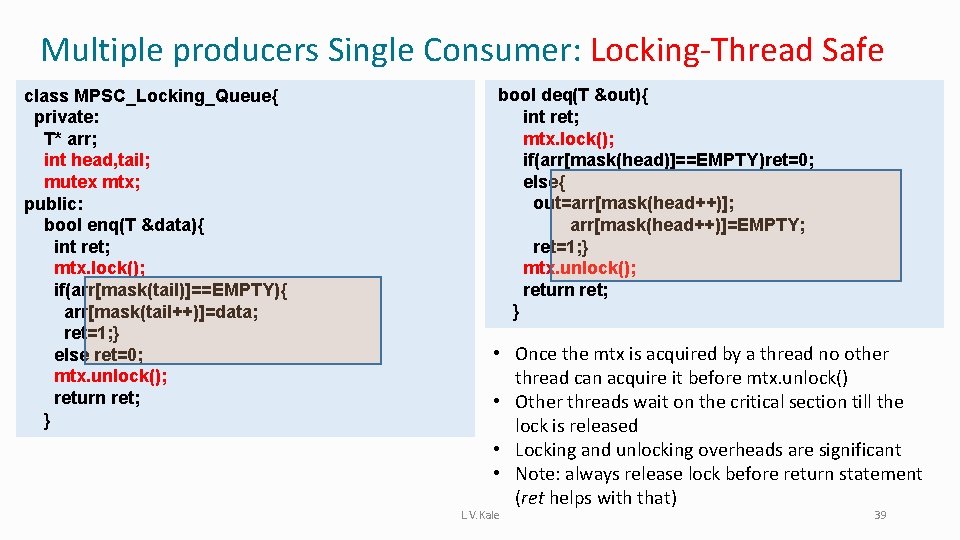

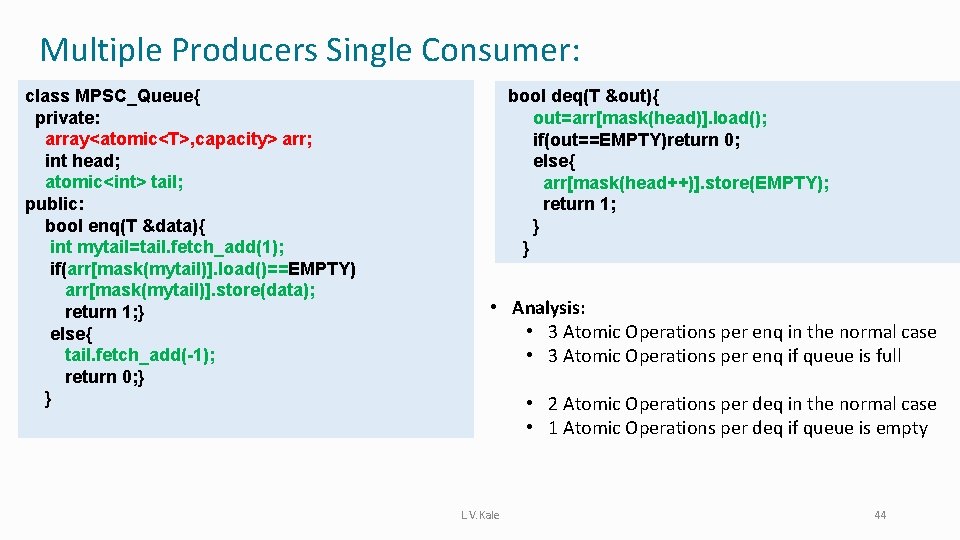

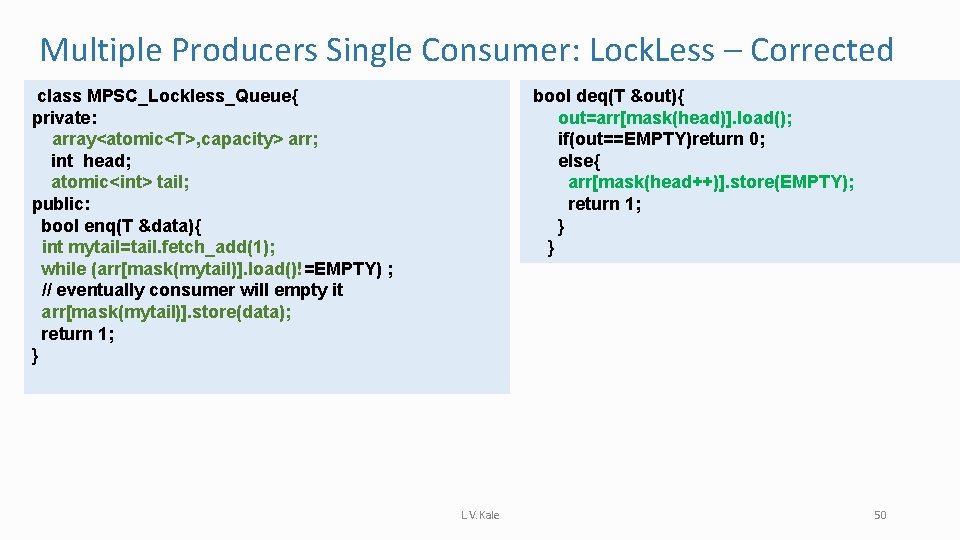

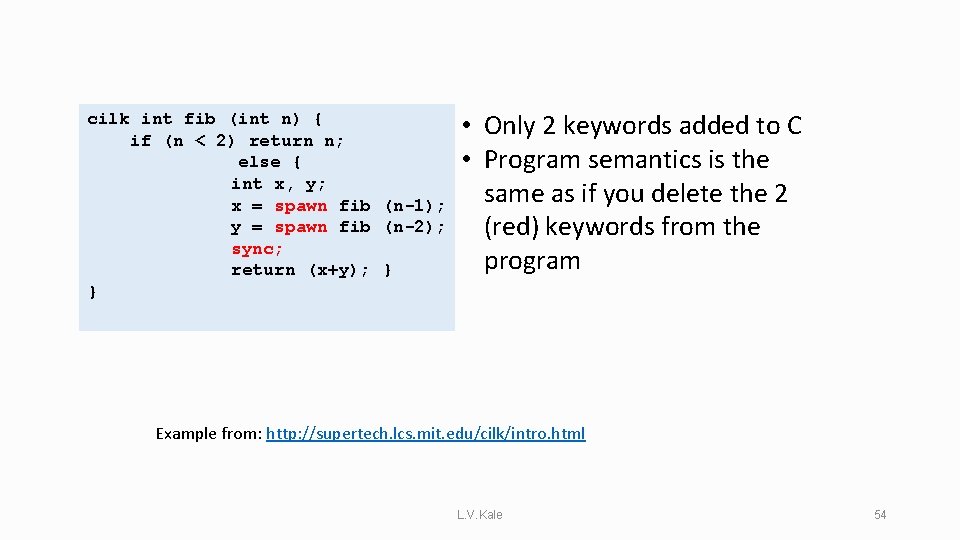

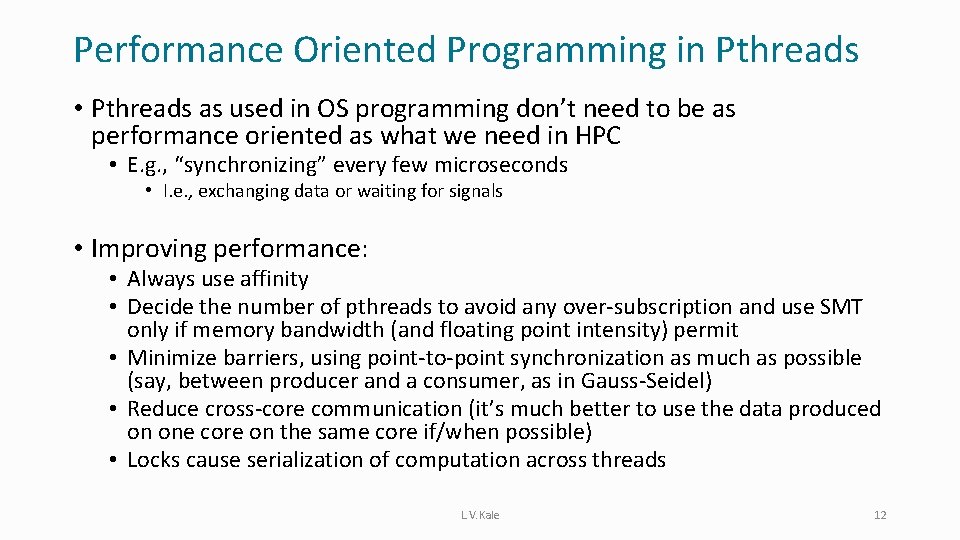

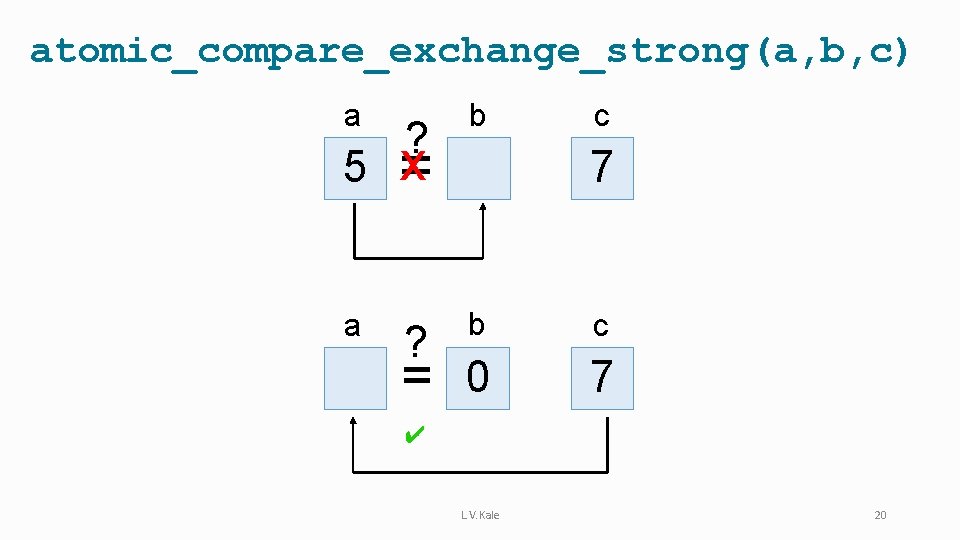

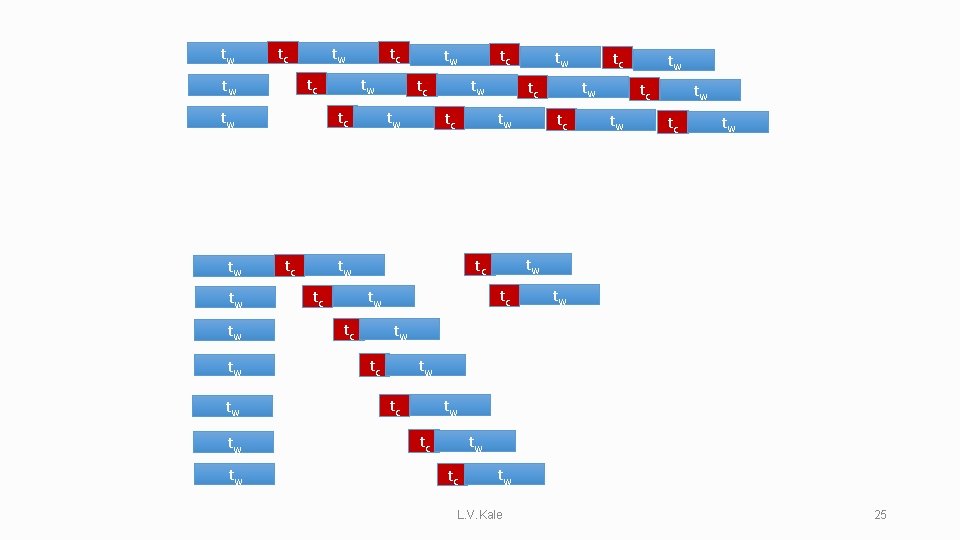

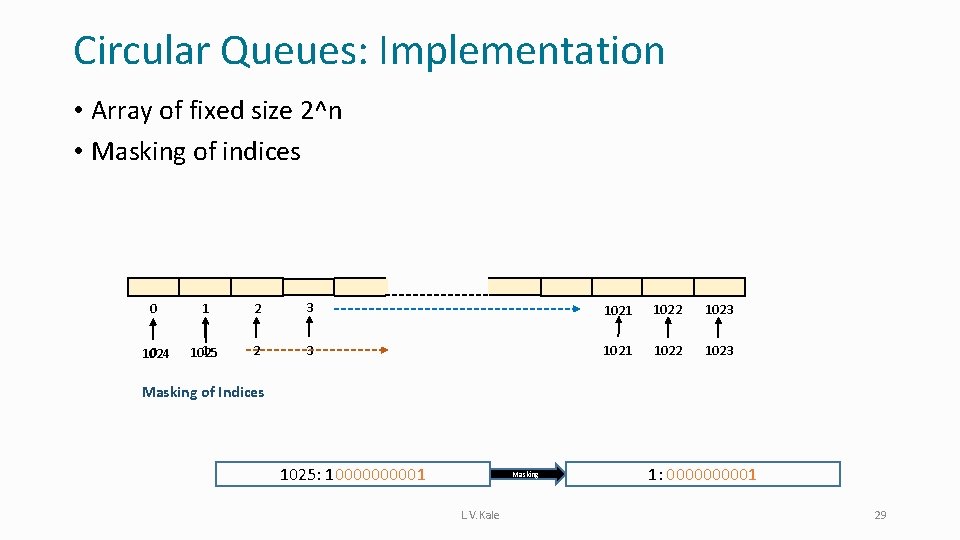

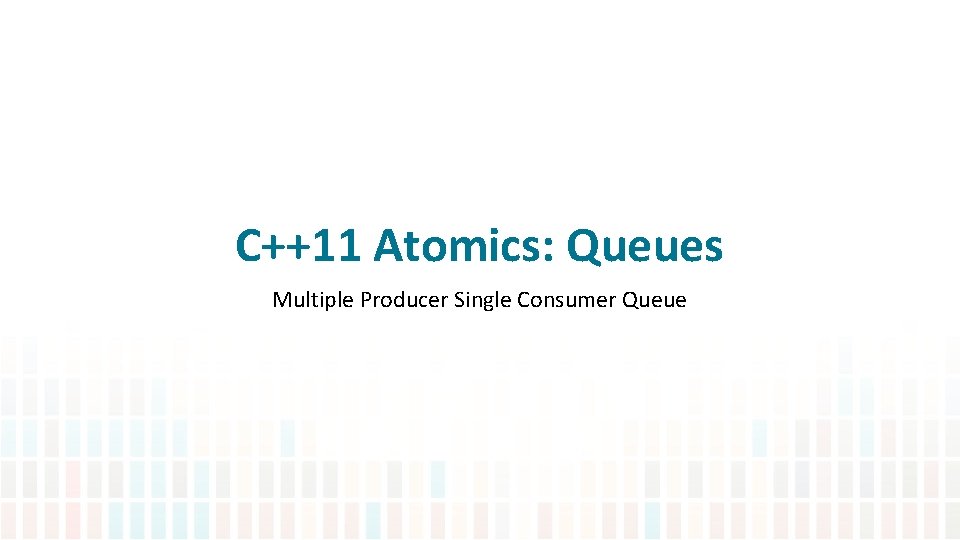

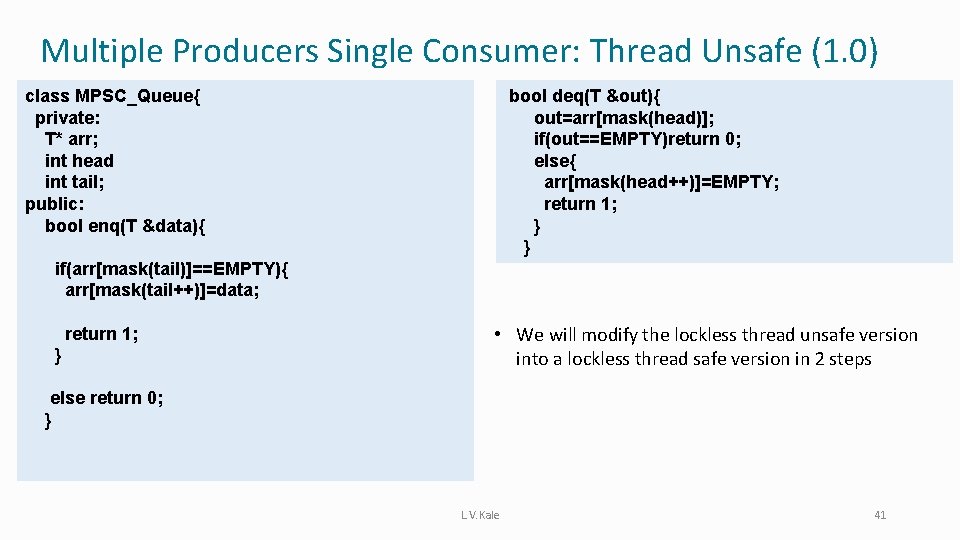

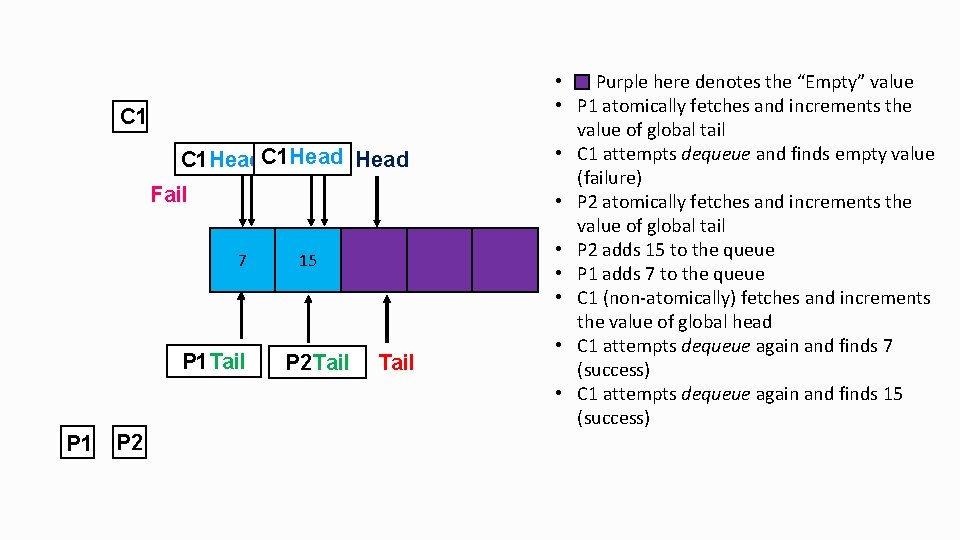

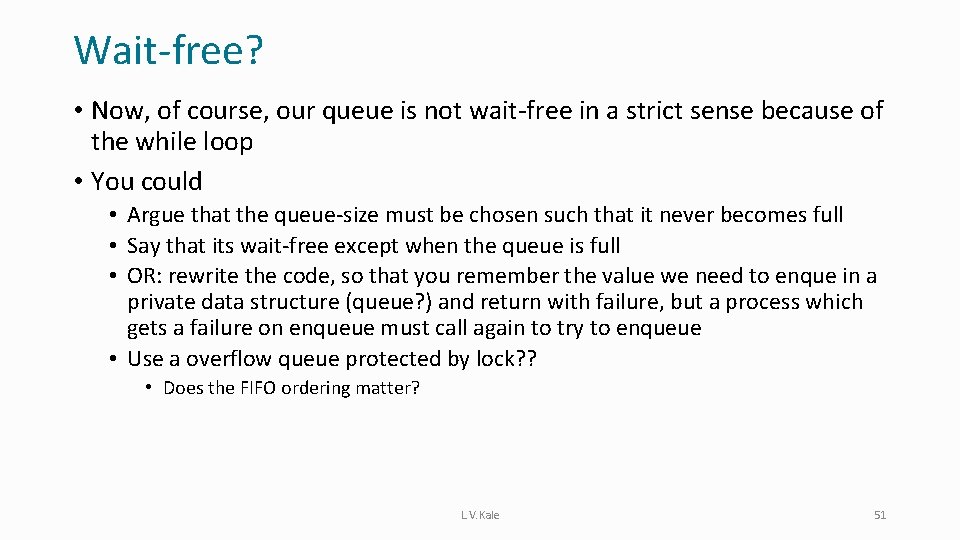

Single Producer Single Consumer: Wait-Free and Thread Safe

    class SPSCQueue {
    private:
      array<atomic<T>, capacity> arr;
      atomic<int> count;
      int head, tail;
    public:
      bool enq(T &data) {
        if (count.load() < capacity) {
          arr[mask(tail++)].store(data);
          count.fetch_add(1);
          return 1;
        }
        return 0;
      }
      bool deq(T &out) {
        if (count.load() > 0) {
          out = arr[mask(head++)].load();
          count.fetch_add(-1);
          return 1;
        }
        return 0;
      }
    };

- Make count atomic (it is accessed by both producer and consumer) to prevent the race on it
- 2 atomic operations on count per enq or deq operation in the normal case
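
A compilable version of this queue, as a sketch: the template parameters, the `mask` helper, and the `spsc_transfer_sum` driver are assumptions added to make the slide's fragment self-contained. `T` must be trivially copyable for `std::atomic<T>` to be valid.

```cpp
#include <array>
#include <atomic>
#include <cstddef>
#include <thread>

template <typename T, std::size_t Capacity>
class SPSCQueue {
    static_assert((Capacity & (Capacity - 1)) == 0,
                  "Capacity must be a power of two");
public:
    bool enq(const T &data) {            // call from the producer thread only
        if (count_.load() < static_cast<int>(Capacity)) {
            arr_[mask(tail_++)].store(data);
            count_.fetch_add(1);         // publish the new element
            return true;
        }
        return false;                    // full
    }
    bool deq(T &out) {                   // call from the consumer thread only
        if (count_.load() > 0) {
            out = arr_[mask(head_++)].load();
            count_.fetch_add(-1);        // free the slot
            return true;
        }
        return false;                    // empty
    }
private:
    static std::size_t mask(std::size_t i) { return i & (Capacity - 1); }

    std::array<std::atomic<T>, Capacity> arr_{};
    std::atomic<int> count_{0};
    std::size_t head_ = 0, tail_ = 0;    // each touched by one thread only
};

// Hypothetical driver: one producer sends 0..n-1 through an 8-slot queue,
// one consumer adds up what it receives.
long spsc_transfer_sum(int n) {
    SPSCQueue<int, 8> q;
    long sum = 0;
    std::thread producer([&] {
        for (int i = 0; i < n; ++i)
            while (!q.enq(i)) std::this_thread::yield();  // retry when full
    });
    std::thread consumer([&] {
        int v = 0;
        for (int received = 0; received < n; ++received) {
            while (!q.deq(v)) std::this_thread::yield();  // retry when empty
            sum += v;
        }
    });
    producer.join();
    consumer.join();
    return sum;
}
```

Neither `enq` nor `deq` ever waits on the other thread; a full or empty queue is reported to the caller, which decides whether to retry.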

C++11 Atomics: Queues – Multiple Producer Single Consumer Queue

Multiple Producer Single Consumer Queue

- Assume a fixed-size, power-of-2 queue
- We will use the notion of an 'EMPTY' element
  - A specific value denotes empty in the queue (say, -1)
- A producer thread checks whether a position contains EMPTY before inserting into it
- The consumer thread makes sure a position does not contain EMPTY before extracting a value from it
  - After extracting the value, it inserts EMPTY in that position

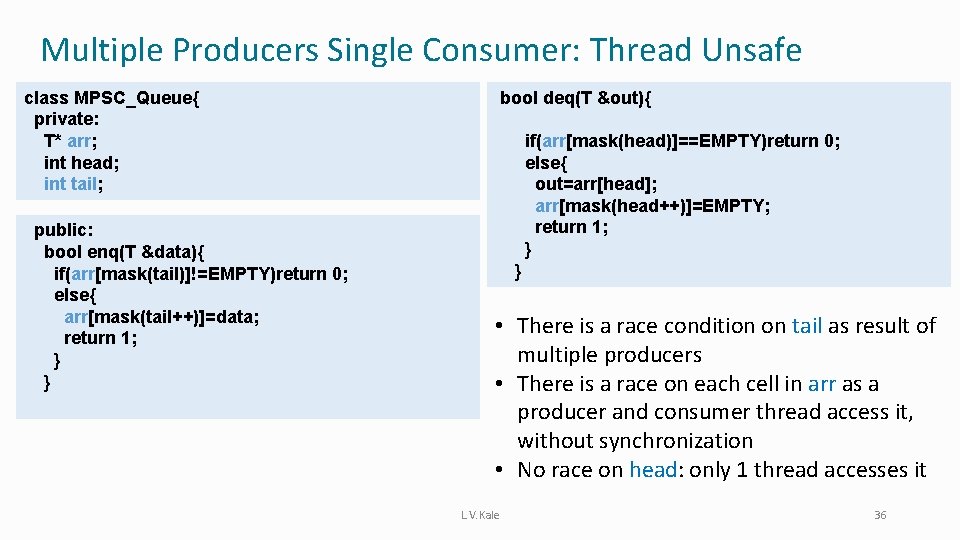

Multiple Producers Single Consumer: Thread Unsafe

    class MPSC_Queue {
    private:
      T* arr;
      int head;
      int tail;
    public:
      bool enq(T &data) {
        if (arr[mask(tail)] != EMPTY) return 0;
        else {
          arr[mask(tail++)] = data;
          return 1;
        }
      }
      bool deq(T &out) {
        if (arr[mask(head)] == EMPTY) return 0;
        else {
          out = arr[mask(head)];
          arr[mask(head++)] = EMPTY;
          return 1;
        }
      }
    };

- There is a race condition on tail as a result of multiple producers
- There is a race on each cell in arr, as a producer and the consumer thread access it without synchronization
- No race on head: only 1 thread accesses it

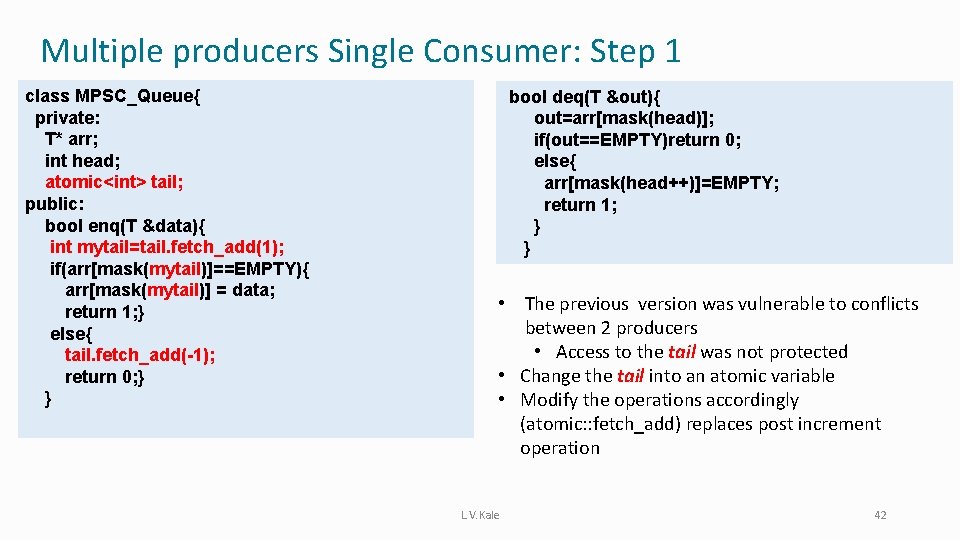

Multiple Producers Single Consumer: Locking – Thread Safe

    class MPSC_Locking_Queue {
    private:
      T* arr;
      int head, tail;
      mutex mtx;
    public:
      bool enq(T &data) {
        int ret;
        mtx.lock();
        if (arr[mask(tail)] == EMPTY) {
          arr[mask(tail++)] = data;
          ret = 1;
        }
        else ret = 0;
        mtx.unlock();
        return ret;
      }
      bool deq(T &out) {
        int ret;
        mtx.lock();
        if (arr[mask(head)] == EMPTY) ret = 0;
        else {
          out = arr[mask(head)];
          arr[mask(head++)] = EMPTY;   // advance head once, after clearing the slot
          ret = 1;
        }
        mtx.unlock();
        return ret;
      }
    };

- Once the mtx is acquired by a thread, no other thread can acquire it before mtx.unlock()
  - Other threads wait on the critical section till the lock is released
- Locking and unlocking overheads are significant
- Note: always release the lock before the return statement (ret helps with that)

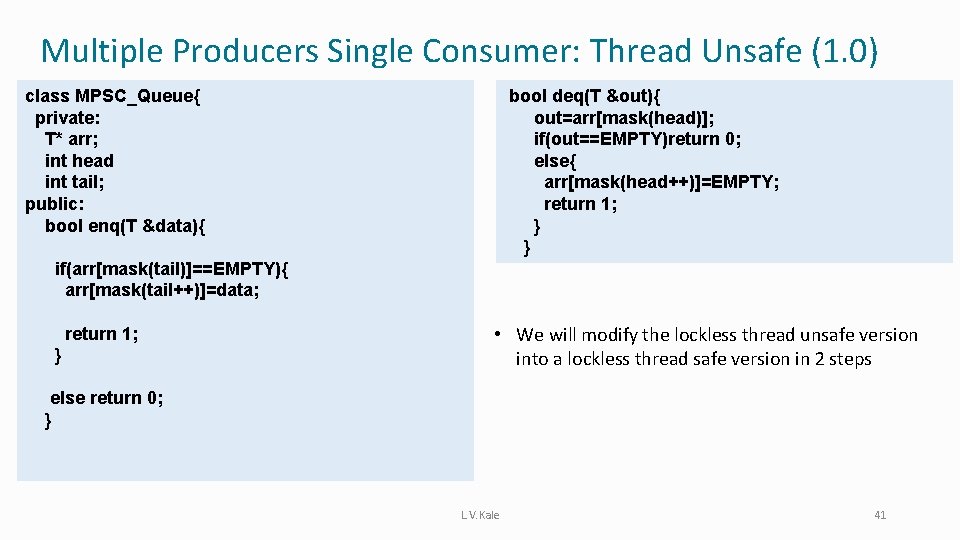

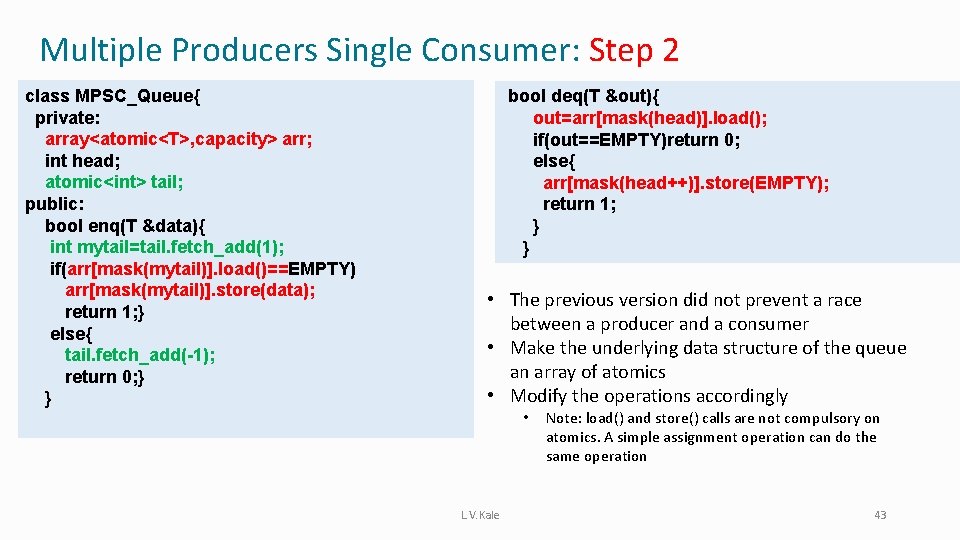

Multiple Producers Single Consumer: Thread Unsafe (1.0)

    class MPSC_Queue {
    private:
      T* arr;
      int head;
      int tail;
    public:
      bool enq(T &data) {
        if (arr[mask(tail)] == EMPTY) {
          arr[mask(tail++)] = data;
          return 1;
        }
        else return 0;
      }
      bool deq(T &out) {
        out = arr[mask(head)];
        if (out == EMPTY) return 0;
        else {
          arr[mask(head++)] = EMPTY;
          return 1;
        }
      }
    };

- We will modify the lockless thread-unsafe version into a lockless thread-safe version in 2 steps

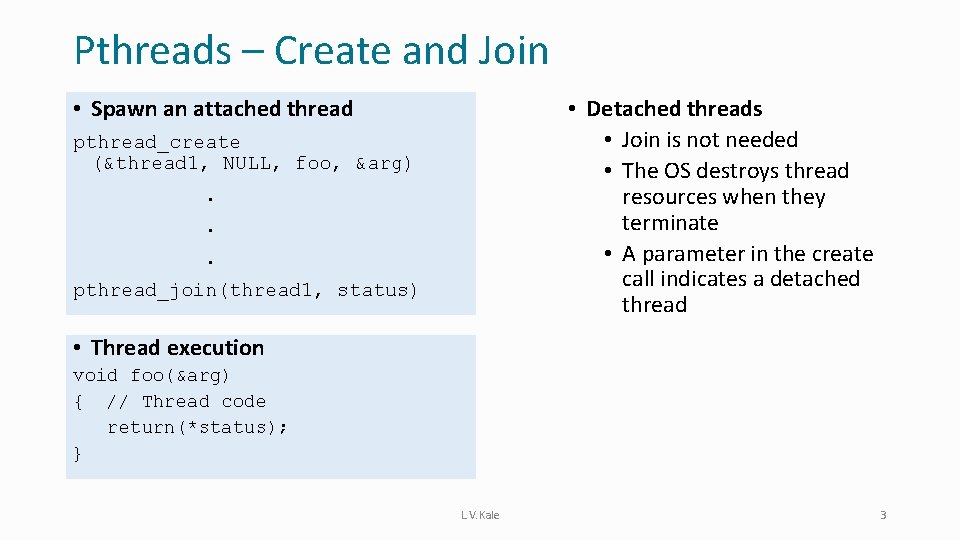

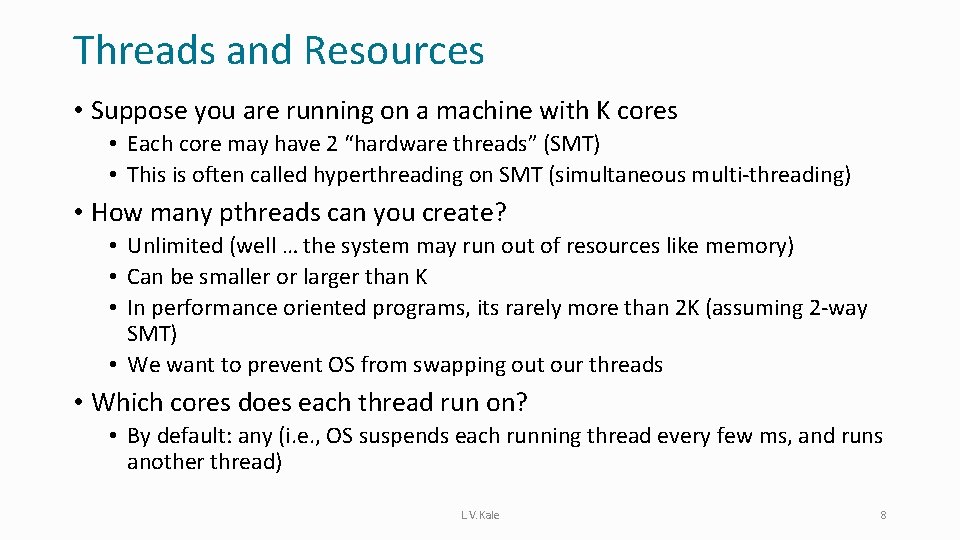

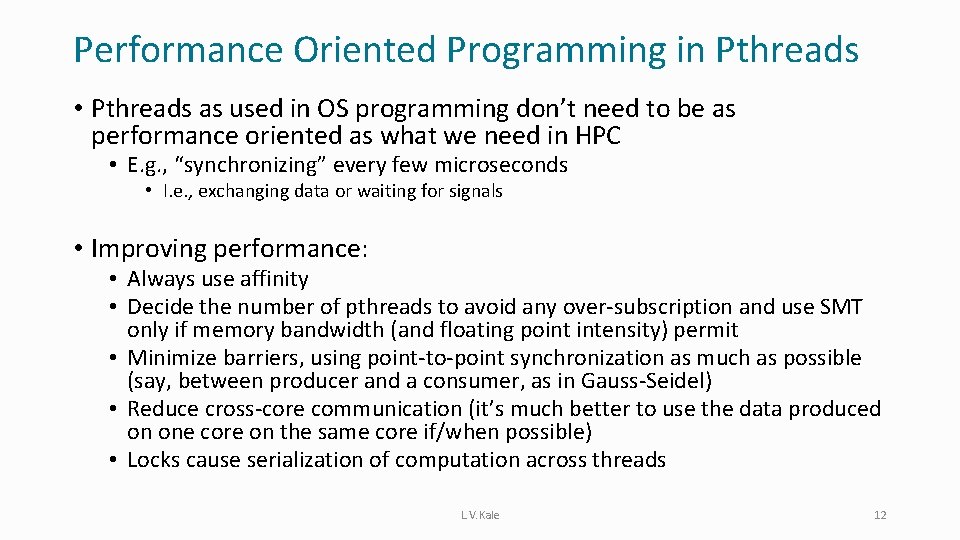

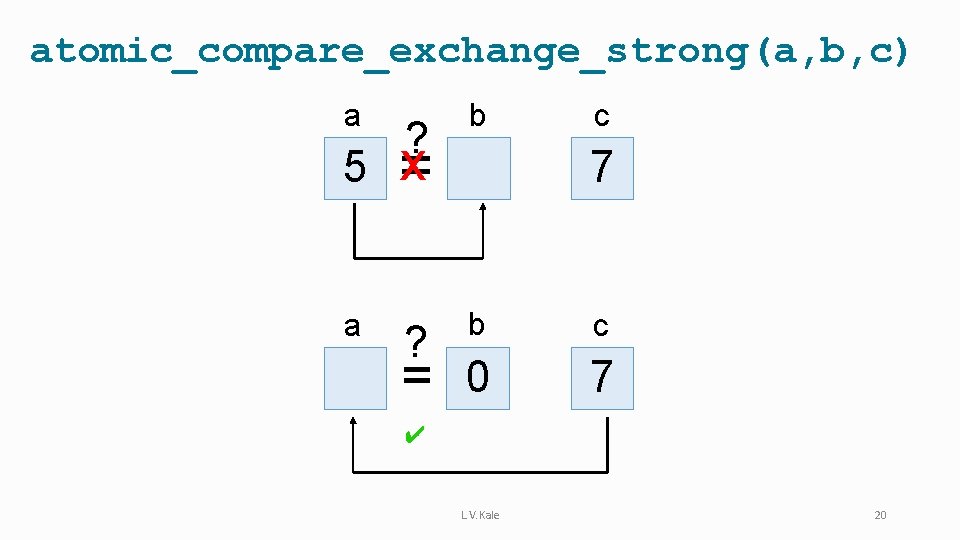

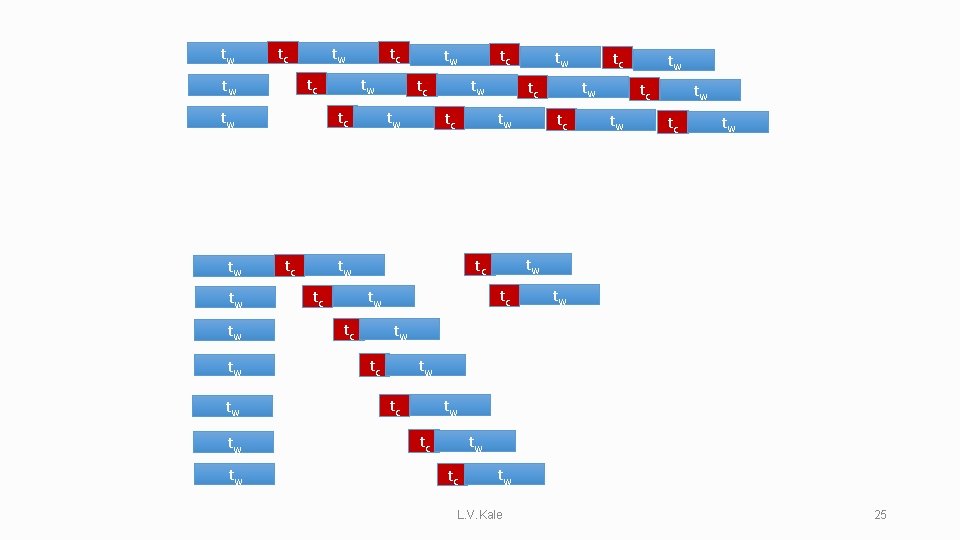

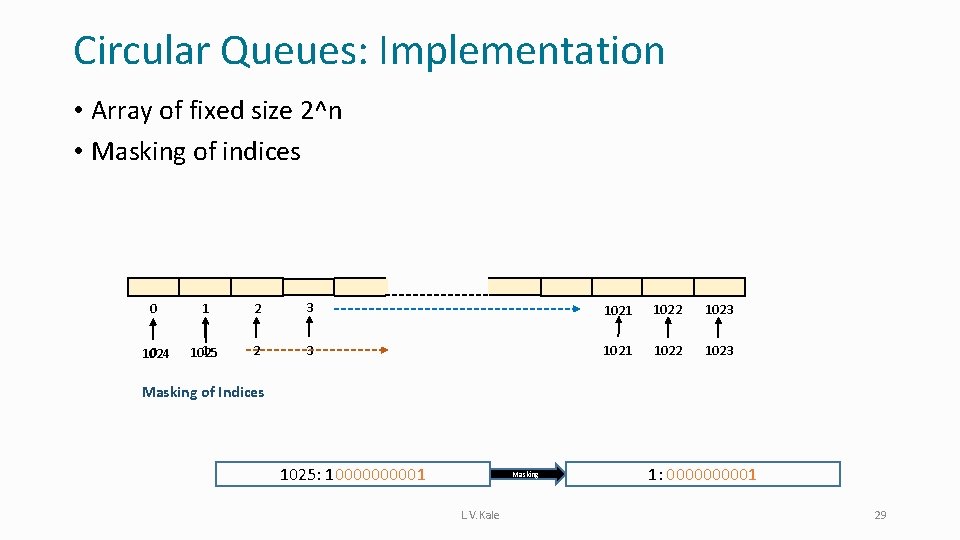

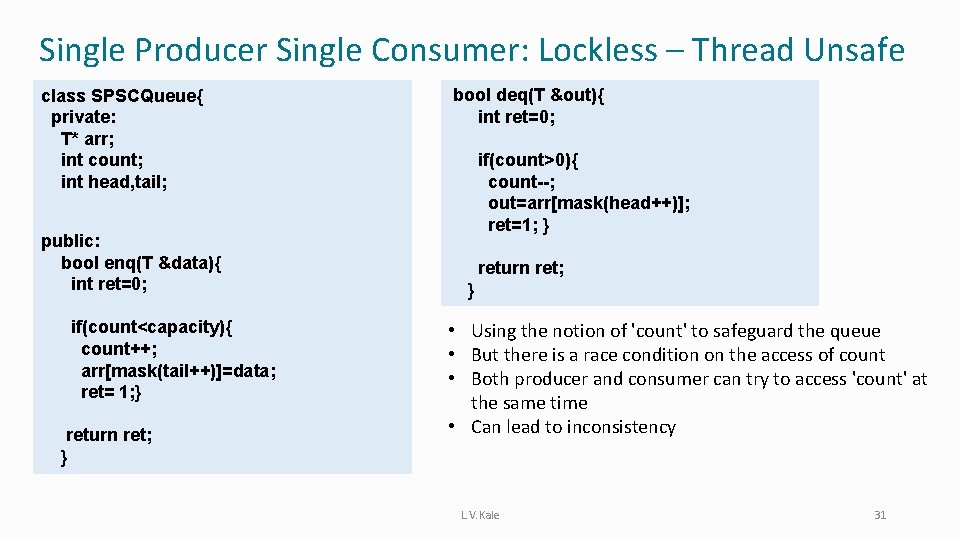

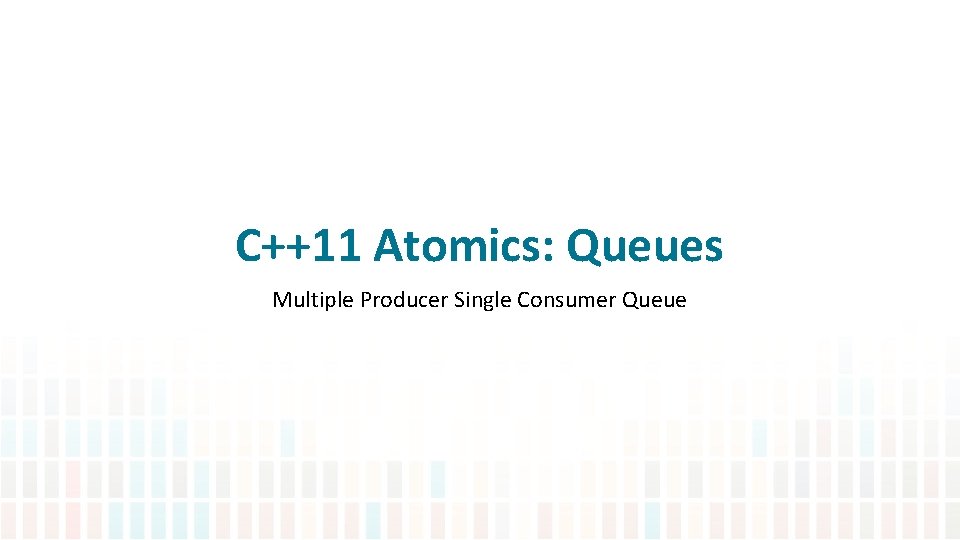

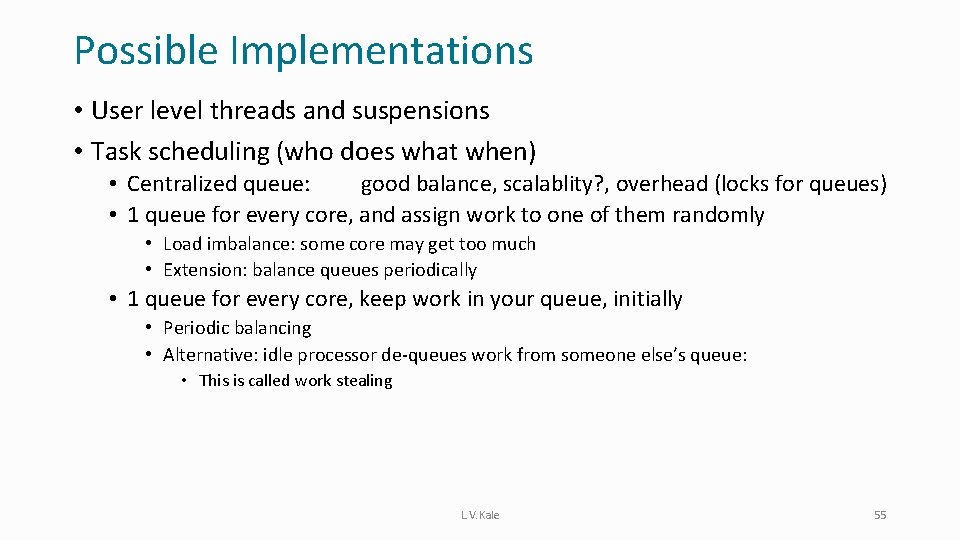

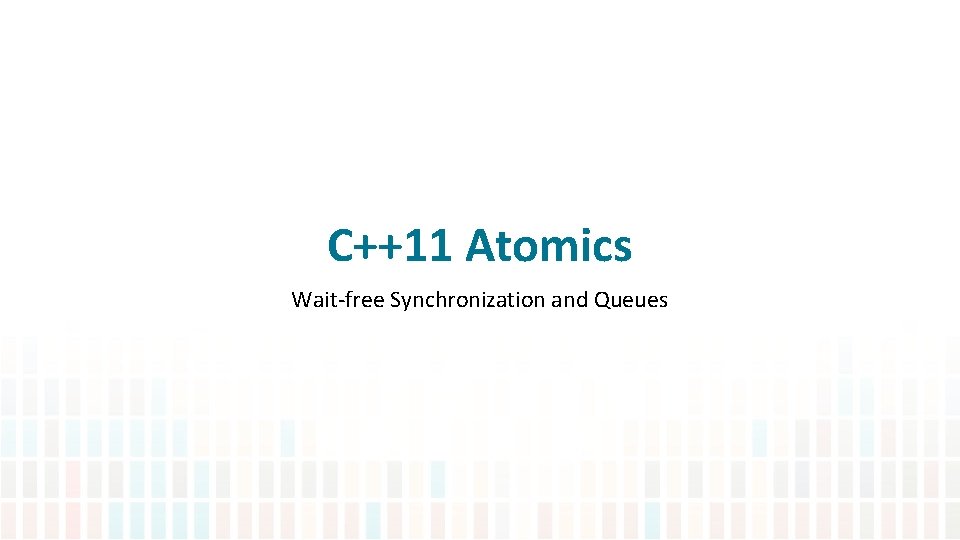

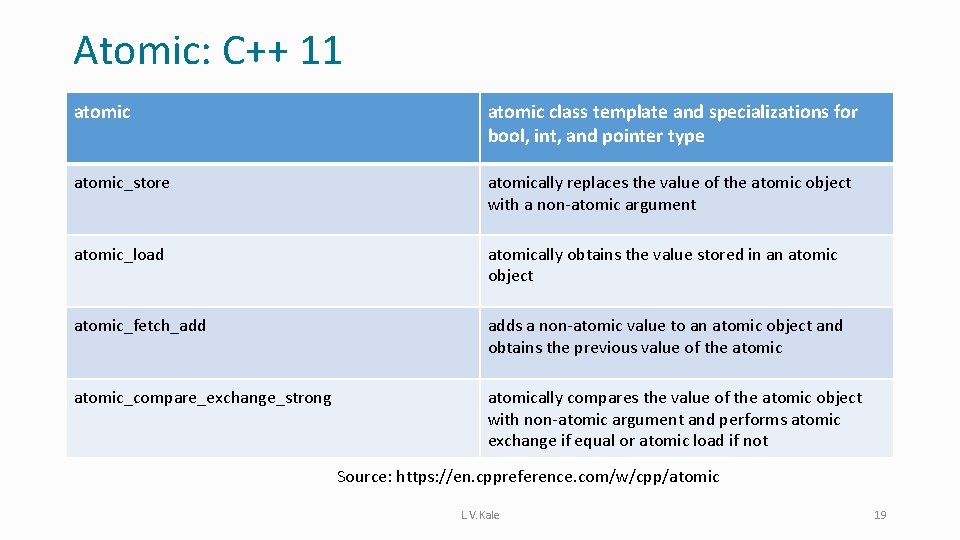

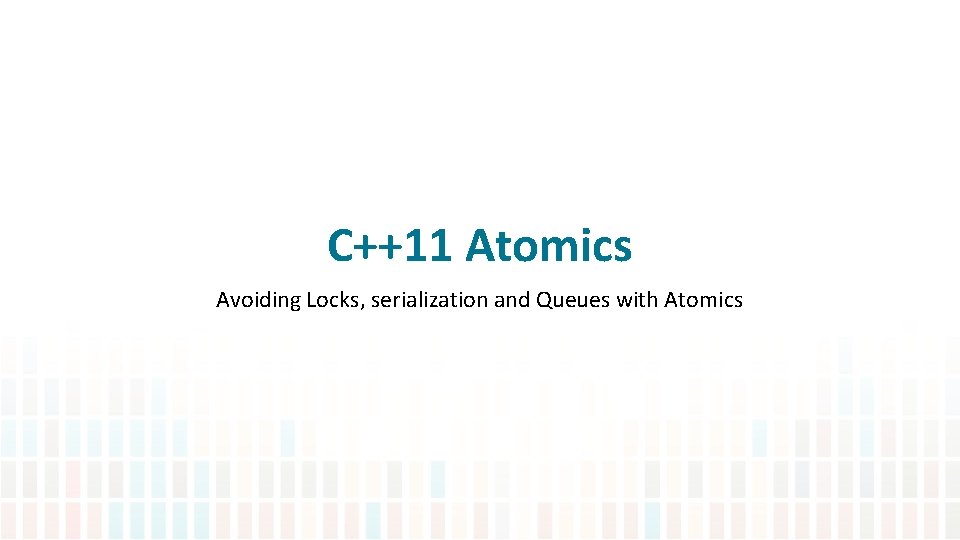

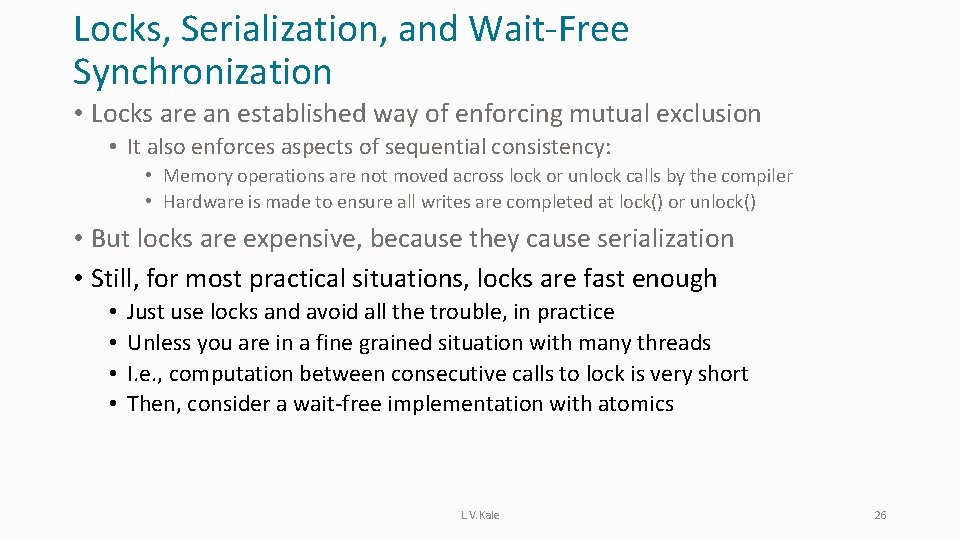

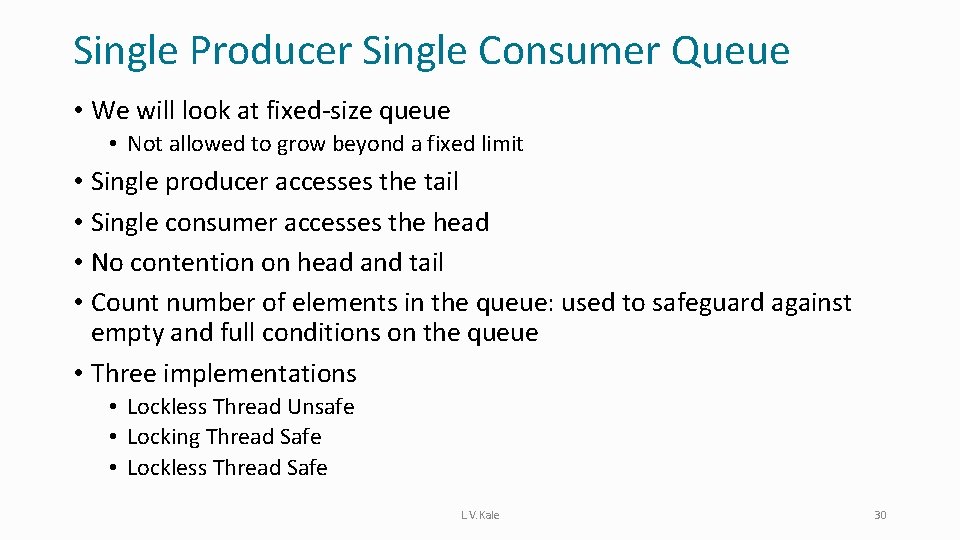

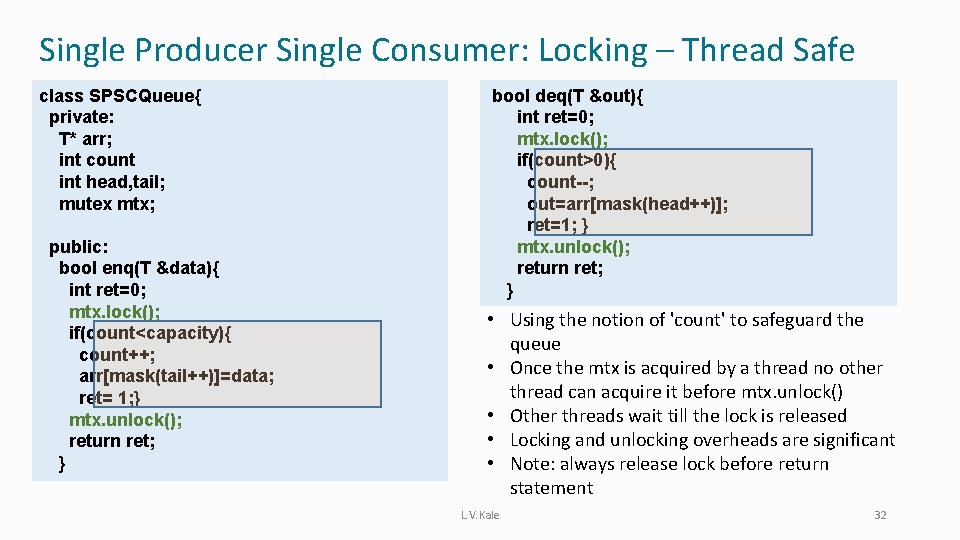

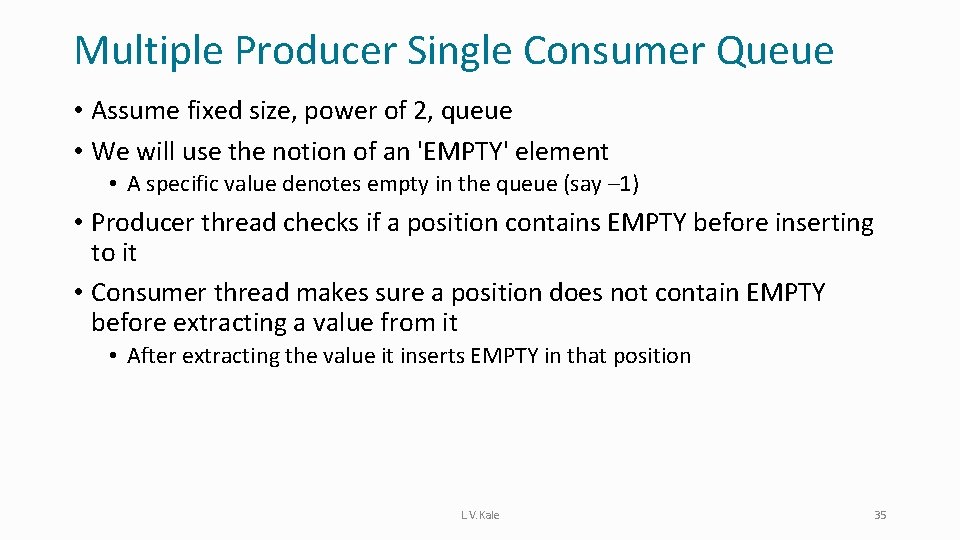

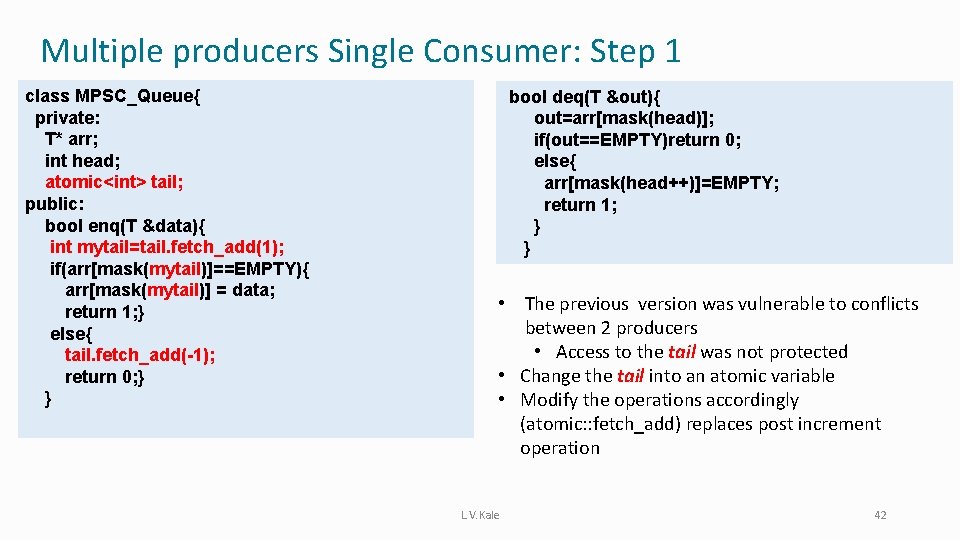

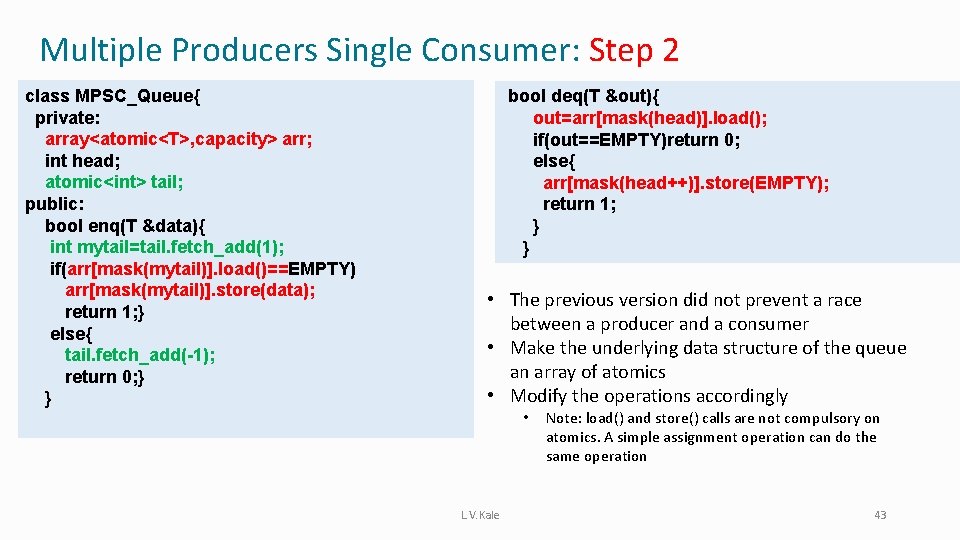

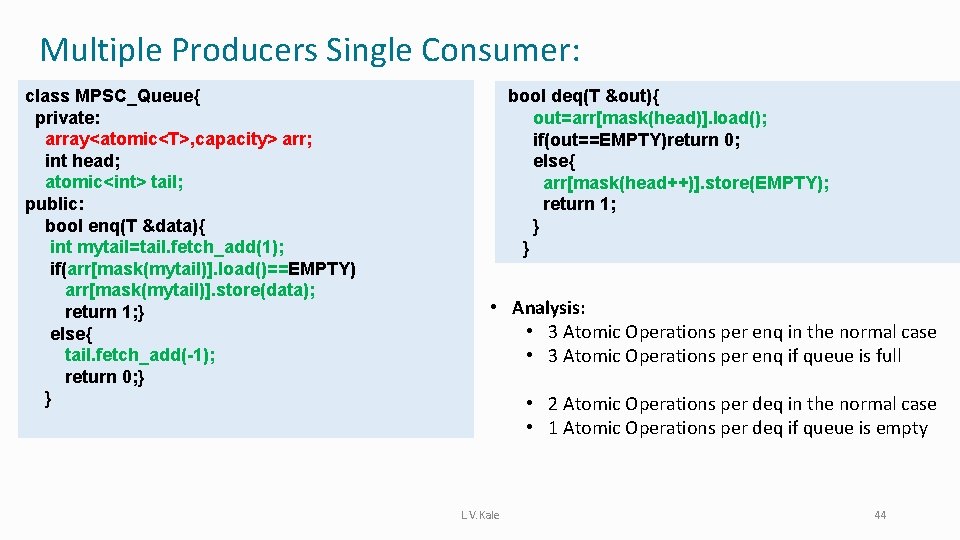

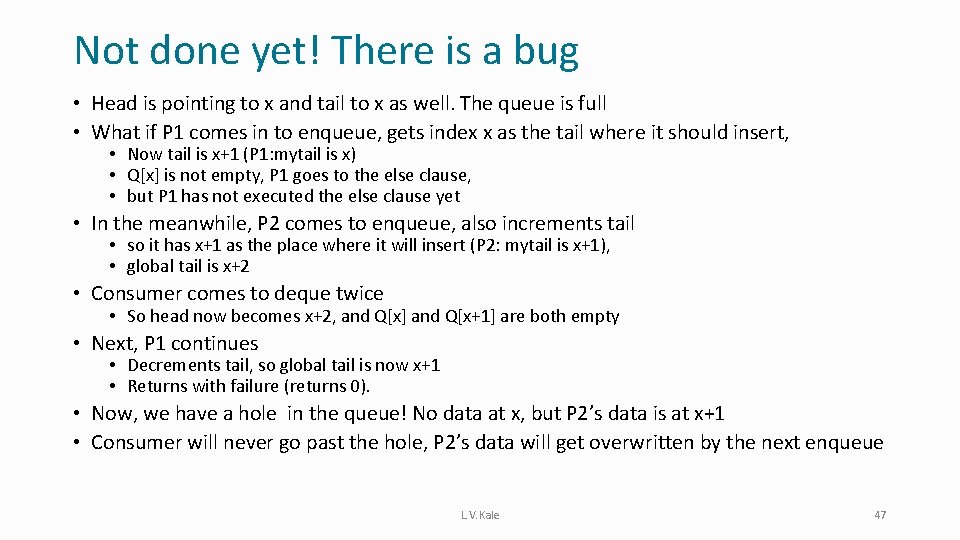

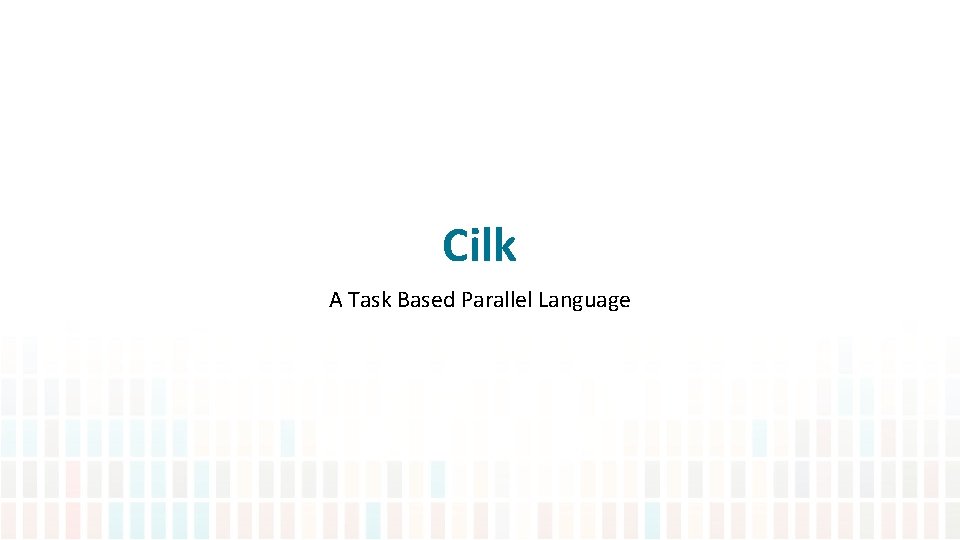

Multiple Producers Single Consumer: Step 1

    class MPSC_Queue {
    private:
      T* arr;
      int head;
      atomic<int> tail;
    public:
      bool enq(T &data) {
        int mytail = tail.fetch_add(1);
        if (arr[mask(mytail)] == EMPTY) {
          arr[mask(mytail)] = data;
          return 1;
        }
        else {
          tail.fetch_add(-1);
          return 0;
        }
      }
      bool deq(T &out) {
        out = arr[mask(head)];
        if (out == EMPTY) return 0;
        else {
          arr[mask(head++)] = EMPTY;
          return 1;
        }
      }
    };

- The previous version was vulnerable to conflicts between 2 producers
  - Access to the tail was not protected
- Change the tail into an atomic variable
- Modify the operations accordingly (atomic::fetch_add replaces the post-increment operation)

Multiple Producers Single Consumer: Step 2

    class MPSC_Queue {
    private:
      array<atomic<T>, capacity> arr;
      int head;
      atomic<int> tail;
    public:
      bool enq(T &data) {
        int mytail = tail.fetch_add(1);
        if (arr[mask(mytail)].load() == EMPTY) {
          arr[mask(mytail)].store(data);
          return 1;
        }
        else {
          tail.fetch_add(-1);
          return 0;
        }
      }
      bool deq(T &out) {
        out = arr[mask(head)].load();
        if (out == EMPTY) return 0;
        else {
          arr[mask(head++)].store(EMPTY);
          return 1;
        }
      }
    };

- The previous version did not prevent a race between a producer and the consumer
- Make the underlying data structure of the queue an array of atomics
- Modify the operations accordingly
- Note: explicit load() and store() calls are not compulsory on atomics. A plain read or assignment of an atomic variable performs the same operation

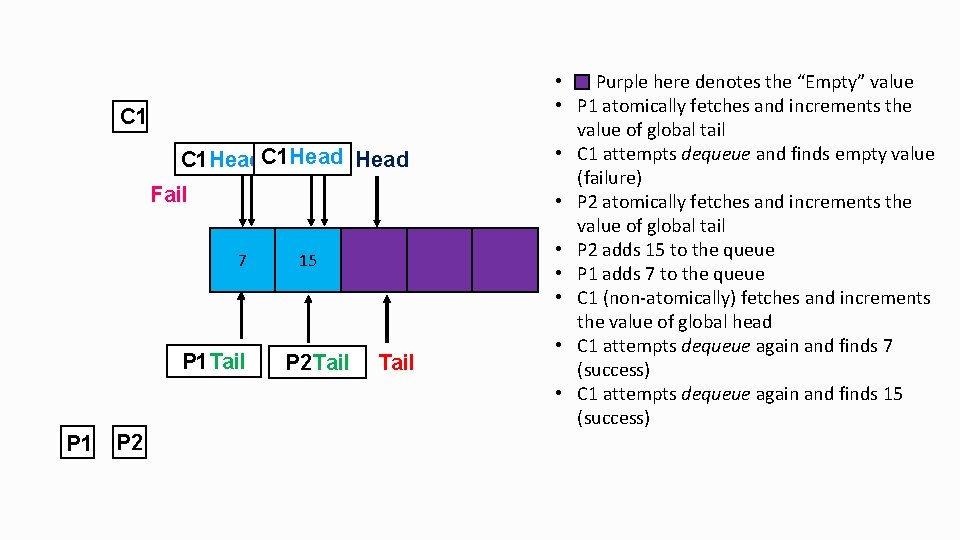

Multiple Producers Single Consumer: Analysis

For the Step 2 queue (same code as on the previous slide):

- 3 atomic operations per enq in the normal case
- 3 atomic operations per enq if the queue is full
- 2 atomic operations per deq in the normal case
- 1 atomic operation per deq if the queue is empty

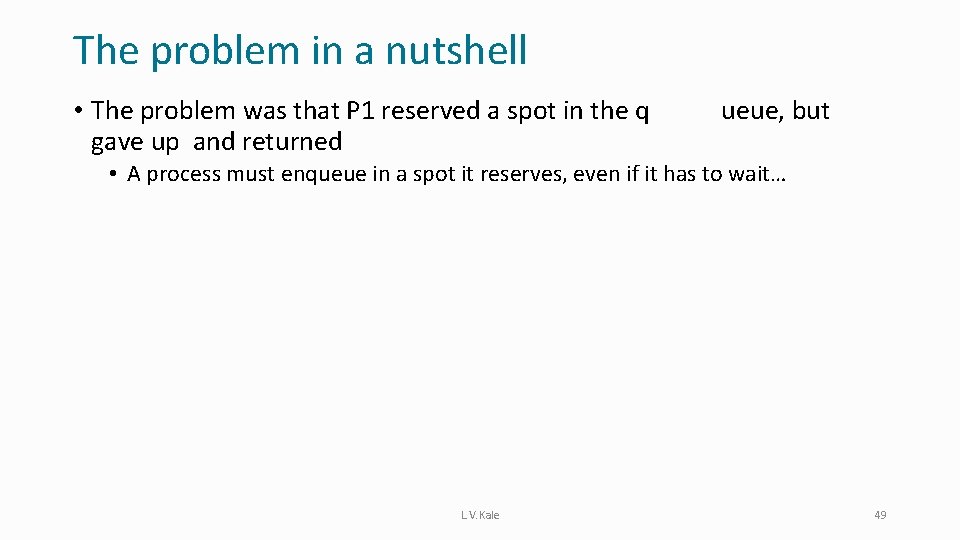

[Figure: snapshots of the queue showing Head (consumer C1), Tail (producers P1 and P2), the enqueued values 7 and 15, and a failed dequeue]

- Purple here denotes the “Empty” value
- P1 atomically fetches and increments the value of global tail
- C1 attempts a dequeue and finds the empty value (failure)
- P2 atomically fetches and increments the value of global tail
- P2 adds 15 to the queue
- P1 adds 7 to the queue
- C1 (non-atomically) fetches and increments the value of global head
- C1 attempts a dequeue again and finds 7 (success)
- C1 attempts a dequeue again and finds 15 (success)

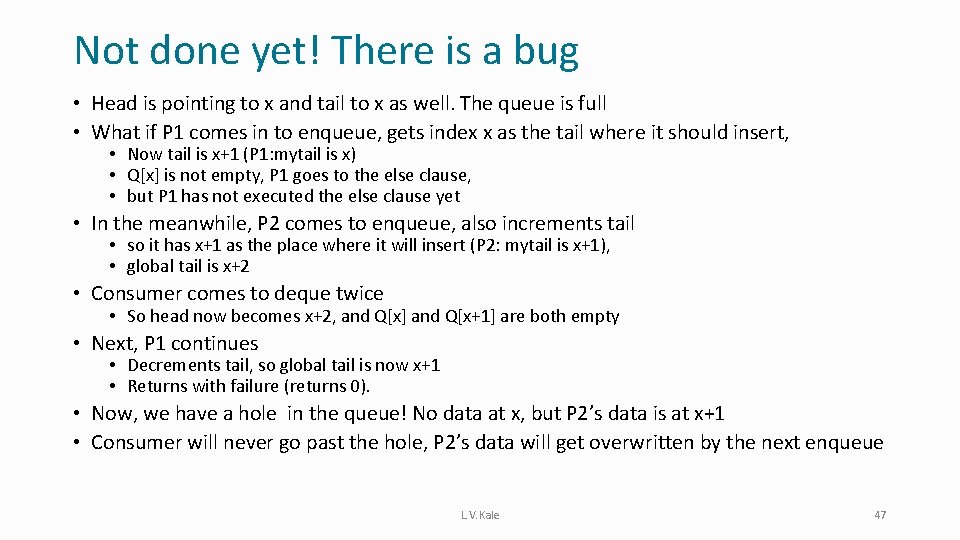

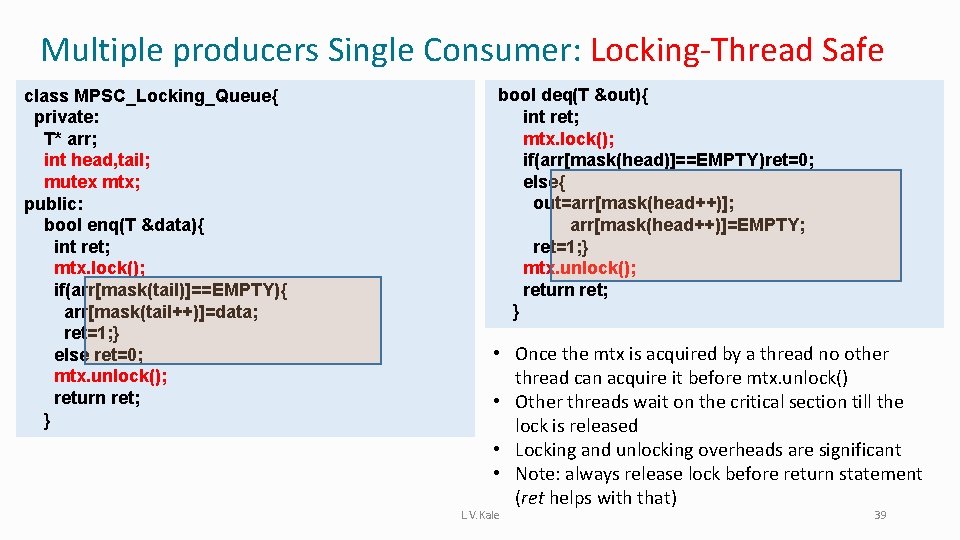

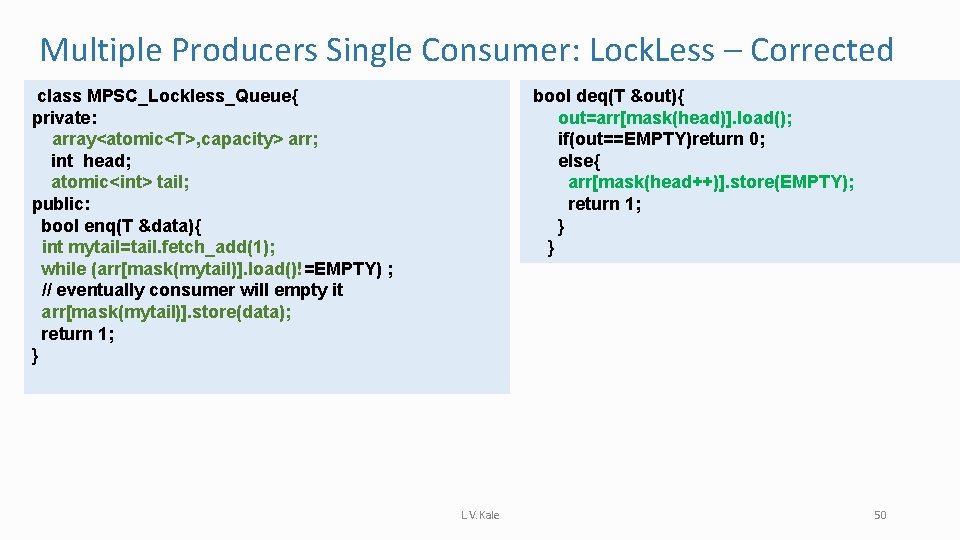

Not done yet! There is a bug

- Head is pointing to x and tail to x as well. The queue is full
- What if P1 comes in to enqueue and gets index x as the tail where it should insert?
  - Now tail is x+1 (P1: mytail is x)
  - Q[x] is not empty, so P1 goes to the else clause,
  - but P1 has not executed the else clause yet
- In the meanwhile, P2 comes to enqueue and also increments tail
  - So it has x+1 as the place where it will insert (P2: mytail is x+1),
  - and global tail is x+2
- The consumer comes to dequeue twice
  - So head now becomes x+2, and Q[x] and Q[x+1] are both empty
  - P2 can now store its data at x+1
- Next, P1 continues
  - Decrements tail, so global tail is now x+1
  - Returns with failure (returns 0)
- Now we have a hole in the queue! No data at x, but P2’s data is at x+1
- The consumer will never get past the hole once it comes around to x, so P2’s data at x+1 is never delivered, and a later enqueue that reserves index x+1 finds the slot occupied and fails


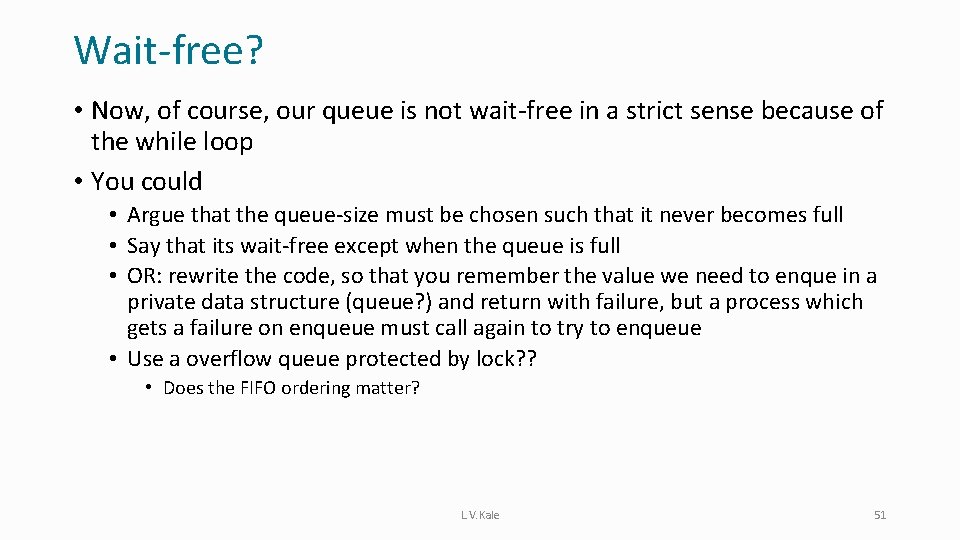

    bool enq(T &data) {
      int mytail = tail.fetch_add(1);
      if (arr[mask(mytail)].load() == EMPTY) {
        arr[mask(mytail)].store(data);
        return 1;
      }
      else {
        tail.fetch_add(-1);
        return 0;
      }
    }

[Figure: full 8-slot array (indices 0 to 7) with Head and Tail both at index 2; values shown: 76 12 82 43 90 25 31 69 52]

- Tail is 2, Head is 2, the queue is full
- Process P1 comes to enqueue
  - P1.mytail == 2
  - tail == 3
- P1 discovers arr[2] is not empty
  - But before it can execute the else
- P2 comes to enqueue (say, the value 90)
  - P2.mytail == 3
  - tail == 4
  - But before it executes the if ...
- Consumer C dequeues twice
  - Dequeues 82 and 43; replaces them with empty values
- Now P2 executes the if, finds arr[3] empty, and enqueues its value there
- P1 executes the else, decrements tail, and leaves an empty value (hole) at arr[2]!
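
The interleaving above can be replayed deterministically in a single thread, one step per line, to confirm the final state. This is a sketch: the array contents are an assumed reading of the figure (with 90 as P2's value), plain ints stand in for the atomics, and masking is omitted because every index stays below 8.

```cpp
#include <array>

constexpr int EMPTY = -1;  // sentinel, as on the MPSC slides

struct ReplayResult {
    int slot2;  // contents of arr[2] at the end
    int slot3;  // contents of arr[3] at the end
    int head;
    int tail;
};

// Replays P1, P2 and the consumer C in the order described on the slide.
ReplayResult replay_hole_scenario() {
    std::array<int, 8> arr = {76, 12, 82, 43, 25, 31, 69, 52};  // full queue
    int head = 2, tail = 2;

    int p1_mytail = tail++;                        // P1: mytail 2, tail 3
    bool p1_slot_free = (arr[p1_mytail] == EMPTY); // P1 sees 82: will fail
    int p2_mytail = tail++;                        // P2: mytail 3, tail 4

    arr[head++] = EMPTY;                           // C dequeues 82
    arr[head++] = EMPTY;                           // C dequeues 43

    if (arr[p2_mytail] == EMPTY) arr[p2_mytail] = 90;  // P2 stores at slot 3
    if (!p1_slot_free) --tail;                     // P1 runs its else branch

    return {arr[2], arr[3], head, tail};
}
```

The replay ends with arr[2] empty, arr[3] holding 90, head at 4 and tail at 3: the hole sits at index 2 and the next enqueue will reserve the occupied slot 3.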

The problem in a nutshell

- The problem was that P1 reserved a spot in the queue, but gave up and returned
- A process must enqueue in a spot it reserves, even if it has to wait…

Multiple Producers Single Consumer: Lockless – Corrected

    class MPSC_Lockless_Queue {
    private:
      array<atomic<T>, capacity> arr;
      int head;
      atomic<int> tail;
    public:
      bool enq(T &data) {
        int mytail = tail.fetch_add(1);
        while (arr[mask(mytail)].load() != EMPTY)
          ; // eventually the consumer will empty it
        arr[mask(mytail)].store(data);
        return 1;
      }
      bool deq(T &out) {
        out = arr[mask(head)].load();
        if (out == EMPTY) return 0;
        else {
          arr[mask(head++)].store(EMPTY);
          return 1;
        }
      }
    };
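
A self-contained sketch of the corrected queue for `int` payloads, with a small multi-producer driver. The names `MPSCQueue`, `kEmpty` and `mpsc_transfer_sum`, the constructor that fills the array with the sentinel, and the `yield()` inside the spin loop are assumptions added here. The driver keeps the total number of items below the capacity, so tail never wraps and the full-queue wait is not exercised.

```cpp
#include <array>
#include <atomic>
#include <cstddef>
#include <thread>
#include <vector>

constexpr int kEmpty = -1;  // sentinel: payloads must never equal this

template <std::size_t Capacity>
class MPSCQueue {
    static_assert((Capacity & (Capacity - 1)) == 0,
                  "Capacity must be a power of two");
public:
    MPSCQueue() { for (auto &cell : arr_) cell.store(kEmpty); }

    void enq(int data) {                       // any producer thread
        std::size_t mytail = tail_.fetch_add(1);       // reserve a slot
        while (arr_[mask(mytail)].load() != kEmpty)    // wait for it to drain
            std::this_thread::yield();
        arr_[mask(mytail)].store(data);
    }
    bool deq(int &out) {                       // the single consumer thread
        out = arr_[mask(head_)].load();
        if (out == kEmpty) return false;
        arr_[mask(head_++)].store(kEmpty);
        return true;
    }
private:
    static std::size_t mask(std::size_t i) { return i & (Capacity - 1); }

    std::array<std::atomic<int>, Capacity> arr_;
    std::size_t head_ = 0;                     // consumer-private
    std::atomic<std::size_t> tail_{0};
};

// Hypothetical driver: each producer enqueues 1..items_each; the calling
// thread acts as the consumer and returns the sum of everything received.
// Assumes producers * items_each <= 1024.
long mpsc_transfer_sum(int producers, int items_each) {
    MPSCQueue<1024> q;
    std::vector<std::thread> threads;
    for (int p = 0; p < producers; ++p)
        threads.emplace_back([&q, items_each] {
            for (int i = 1; i <= items_each; ++i) q.enq(i);
        });

    long sum = 0;
    int value = 0;
    for (int received = 0; received < producers * items_each;) {
        if (q.deq(value)) { sum += value; ++received; }
        else std::this_thread::yield();
    }
    for (auto &t : threads) t.join();
    return sum;
}
```

Since a producer never gives back a reserved index, every slot between head and tail is eventually filled and the consumer cannot get stuck behind a hole.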

Wait-free?

- Now, of course, our queue is not wait-free in a strict sense because of the while loop
- You could:
  - Argue that the queue size must be chosen such that it never becomes full
  - Say that it’s wait-free except when the queue is full
  - OR: rewrite the code so that you remember the value you need to enqueue in a private data structure (queue?) and return with failure; a process that gets a failure on enqueue must call again to try to enqueue
  - Use an overflow queue protected by a lock??
- Does the FIFO ordering matter?

Cilk: A Task-Based Parallel Language

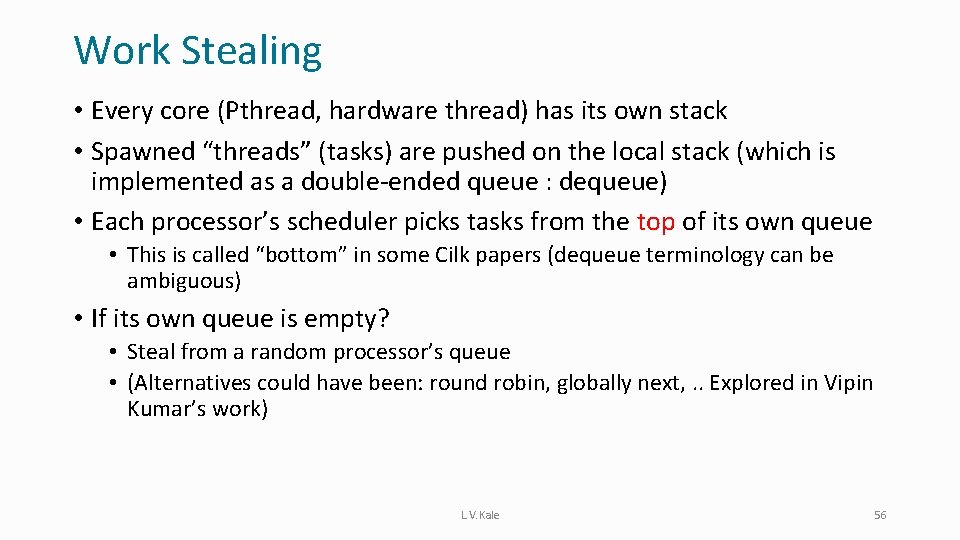

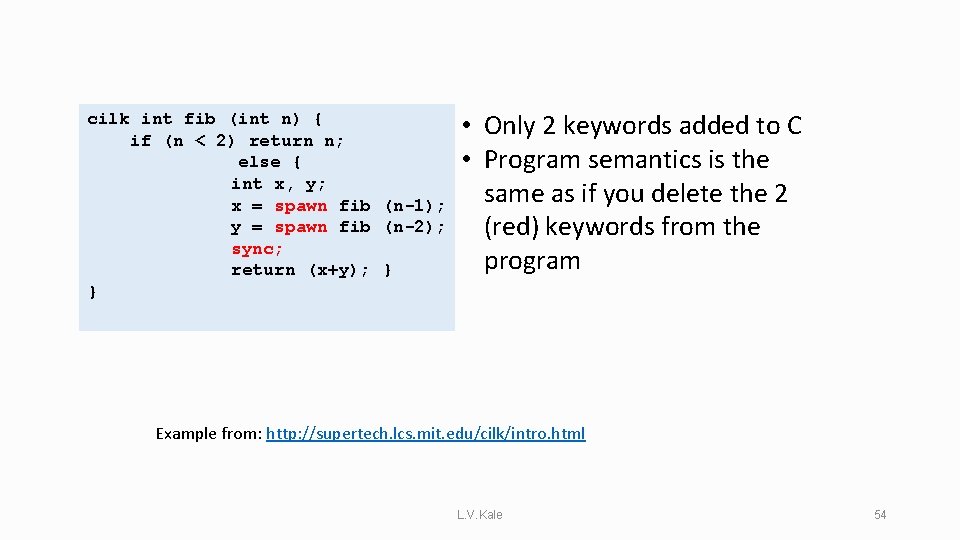

Cilk Language

- Developed over 15+ years
- Two calls added to C:
- Popularized the idea of work stealing
  - But the idea is older:
    - Multilisp (Halstead)
    - State-space search: Vipin Kumar
- Formalized the idea of work stealing
  - Proofs on optimality / time and space complexity
- Intel’s Cilk++: via Cilk Arts

    cilk int fib (int n) {
      if (n < 2) return n;
      else {
        int x, y;
        x = spawn fib (n-1);
        y = spawn fib (n-2);
        sync;
        return (x+y);
      }
    }

- Only 2 keywords added to C
- Program semantics is the same as if you delete the 2 (red) keywords from the program

Example from: http://supertech.lcs.mit.edu/cilk/intro.html
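
Standard C++ has no spawn/sync, but the shape of the program can be approximated with `std::async` standing in for spawn and `future::get` for sync. This is only an analogy: `std::launch::async` starts a real thread per task rather than using a work-stealing scheduler, so the sketch adds a `spawn_depth` cutoff (an assumption, not part of Cilk) to keep the thread count small.

```cpp
#include <future>

// Serial elision: what remains when the parallel keywords are deleted.
long fib_serial(int n) {
    return n < 2 ? n : fib_serial(n - 1) + fib_serial(n - 2);
}

// Parallel version: spawns tasks only for the top spawn_depth levels.
long fib_parallel(int n, int spawn_depth) {
    if (n < 2) return n;
    if (spawn_depth == 0) return fib_serial(n);

    // "spawn": fib(n-1) may run concurrently with the code below
    std::future<long> x =
        std::async(std::launch::async, fib_parallel, n - 1, spawn_depth - 1);
    long y = fib_parallel(n - 2, spawn_depth - 1);

    return x.get() + y;  // "sync": wait for the spawned child
}
```

A call such as `fib_parallel(20, 3)` creates at most a handful of threads and returns the same value as `fib_serial(20)`.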

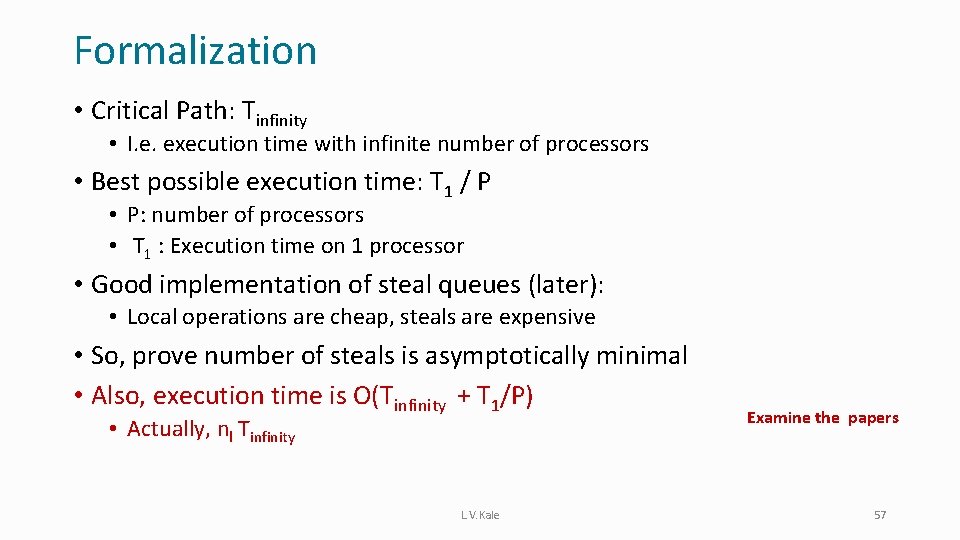

Possible Implementations

- User-level threads and suspensions
- Task scheduling (who does what when)
  - Centralized queue: good balance, scalability?, overhead (locks for queues)
  - 1 queue for every core, and assign work to one of them randomly
    - Load imbalance: some core may get too much
    - Extension: balance queues periodically
  - 1 queue for every core; keep work in your own queue, initially
    - Periodic balancing
    - Alternative: an idle processor dequeues work from someone else’s queue
    - This is called work stealing

Work Stealing

- Every core (Pthread, hardware thread) has its own stack
- Spawned “threads” (tasks) are pushed on the local stack (which is implemented as a double-ended queue: a deque)
- Each processor’s scheduler picks tasks from the top of its own queue
  - This is called “bottom” in some Cilk papers (deque terminology can be ambiguous)
- If its own queue is empty?
  - Steal from a random processor’s queue
  - (Alternatives could have been: round robin, globally next, ... Explored in Vipin Kumar’s work)
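
The owner/thief interface can be sketched with a mutex-protected `std::deque`. This shows only the access pattern, assuming the usual Cilk-style convention that the owner works at one end (newest task first) while thieves take from the other end (oldest task); it takes the lock on every operation and so is not the optimized protocol in which local operations avoid synchronization. The class name and the `int` task type are placeholders.

```cpp
#include <deque>
#include <mutex>

// One of these per worker. Tasks are plain ints for illustration.
class StealQueue {
public:
    // Owner: push a newly spawned task.
    void push(int task) {
        std::lock_guard<std::mutex> guard(mtx_);
        tasks_.push_back(task);
    }
    // Owner: take the most recently pushed task (stack order).
    bool pop(int &task) {
        std::lock_guard<std::mutex> guard(mtx_);
        if (tasks_.empty()) return false;
        task = tasks_.back();
        tasks_.pop_back();
        return true;
    }
    // Thief: take the oldest task from the opposite end.
    bool steal(int &task) {
        std::lock_guard<std::mutex> guard(mtx_);
        if (tasks_.empty()) return false;
        task = tasks_.front();
        tasks_.pop_front();
        return true;
    }
private:
    std::deque<int> tasks_;
    std::mutex mtx_;
};
```

An idle worker would pick a victim at random and call `steal` on that worker's queue, falling back to another victim when the call returns false.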

Formalization

- Critical path: T∞
  - I.e., execution time with an infinite number of processors
- Best possible execution time: T1 / P
  - P: number of processors
  - T1: execution time on 1 processor
- Good implementation of steal queues (later):
  - Local operations are cheap, steals are expensive
  - So, prove the number of steals is asymptotically minimal
- Also, execution time is O(T∞ + T1/P)
  - Actually, nl T∞
- Examine the papers

Intel’s Cilk Plus

- Somewhat different syntax for spawn and sync
- Provides a cilk_for parallel loop
  - Implemented by divide-and-conquer task spawns
  - 0:N => 0:N/2, N/2:N, and recurse
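
The divide-and-conquer idea behind such a loop can be sketched in standard C++: split the range in half, run one half as a separate task, recurse on the other, and stop splitting below a grain size. `parallel_for_dc`, `squares`, and the grain parameter are names and choices made for this illustration, and `std::async` is used in place of a Cilk spawn.

```cpp
#include <cstddef>
#include <future>
#include <vector>

// Applies body(i) for every i in [lo, hi), splitting the range recursively.
// Ranges of at most `grain` iterations run serially; grain must be >= 1.
template <typename Body>
void parallel_for_dc(std::size_t lo, std::size_t hi, std::size_t grain,
                     const Body &body) {
    if (hi - lo <= grain) {
        for (std::size_t i = lo; i < hi; ++i) body(i);
        return;
    }
    std::size_t mid = lo + (hi - lo) / 2;
    // "spawn" the left half, recurse on the right half in this thread
    auto left = std::async(std::launch::async,
                           [&] { parallel_for_dc(lo, mid, grain, body); });
    parallel_for_dc(mid, hi, grain, body);
    left.get();  // "sync"
}

// Example use: fill a vector with i*i, splitting into about four chunks.
std::vector<int> squares(std::size_t n) {
    std::vector<int> out(n);
    parallel_for_dc(0, n, n / 4 + 1,
                    [&](std::size_t i) { out[i] = static_cast<int>(i * i); });
    return out;
}
```

Each iteration writes a distinct element, so the loop body needs no synchronization of its own.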

Steal Queues

- “Agenda” parallelism (set of tasks)
- The idea of work stealing
- Cilk, and some previous systems

Steal Queues (deque)

- Basic idea:
  - Local enqueue should be cheap
  - Local dequeue (pop) should be fast in the common case, and only occasionally use synchronization
  - Steal (non-local pop) will always use synchronization (locks or atomics)