POS Tagging HMM Taggers (continued)

Today
• Walk through the guts of an HMM tagger
• Address problems with HMM taggers, specifically unknown words

HMM Tagger
• What is the goal of a Markov tagger?
• To maximize the following expression: $P(w_i \mid t_i) \times P(t_i \mid t_1, \ldots, t_{i-1})$
• Or, in words: P(word|tag) x P(tag|previous n tags)
• By the Markov assumption, this simplifies to: $P(w_i \mid t_i) \times P(t_i \mid t_{i-1})$

HMM Tagger: P(word|tag) x P(tag|previous n tags)
• P(word|tag)
  – The probability of the word given a tag (not vice versa)
  – We model this with a word-tag matrix (the emission probabilities)
  – Familiar? HW 4 (3)

HMM Tagger: P(word|tag) x P(tag|previous n tags)
• P(tag|previous n tags)
  – How likely a tag is given the n tags before it
  – Simplified to just the previous tag
  – Modeled with a tag-tag matrix (the transition probabilities)
  – Familiar? HW 4 (2)

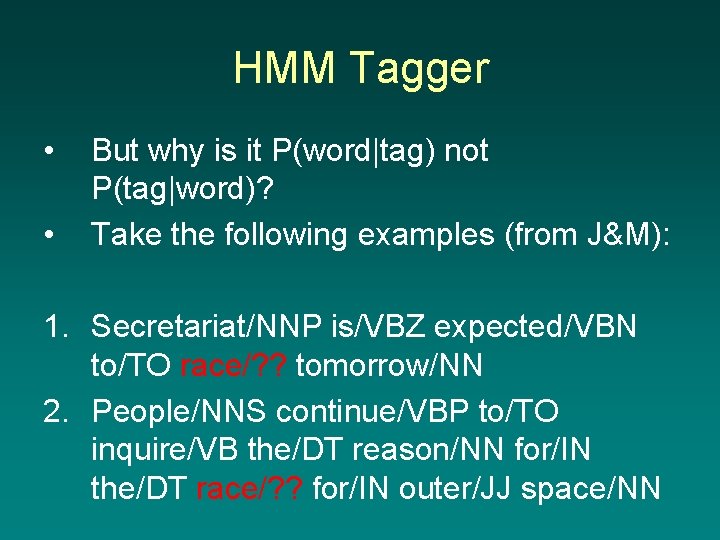

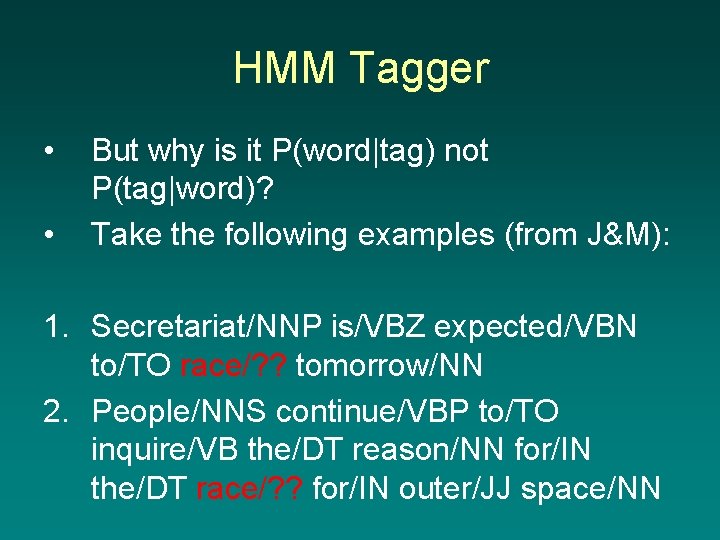

HMM Tagger
• But why is it P(word|tag) and not P(tag|word)? Take the following examples (from J&M):
  1. Secretariat/NNP is/VBZ expected/VBN to/TO race/?? tomorrow/NN
  2. People/NNS continue/VBP to/TO inquire/VB the/DT reason/NN for/IN the/DT race/?? for/IN outer/JJ space/NN

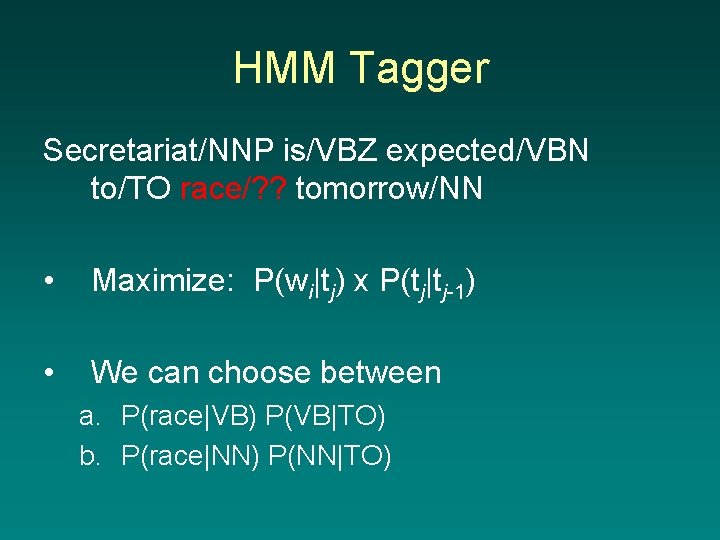

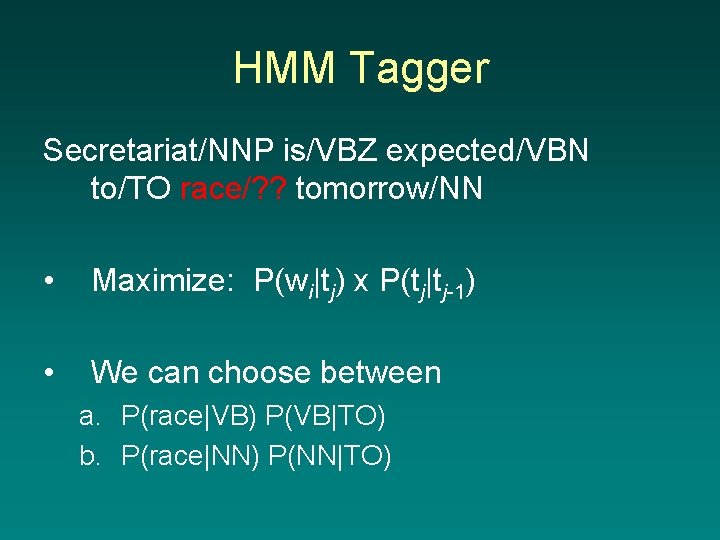

HMM Tagger
Secretariat/NNP is/VBZ expected/VBN to/TO race/?? tomorrow/NN
• Maximize: $P(w_i \mid t_i) \times P(t_i \mid t_{i-1})$
• We can choose between:
  a. P(race|VB) P(VB|TO)
  b. P(race|NN) P(NN|TO)

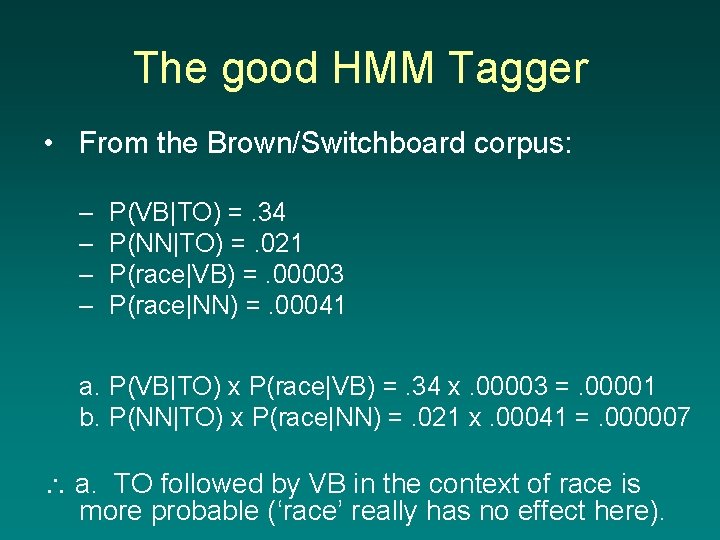

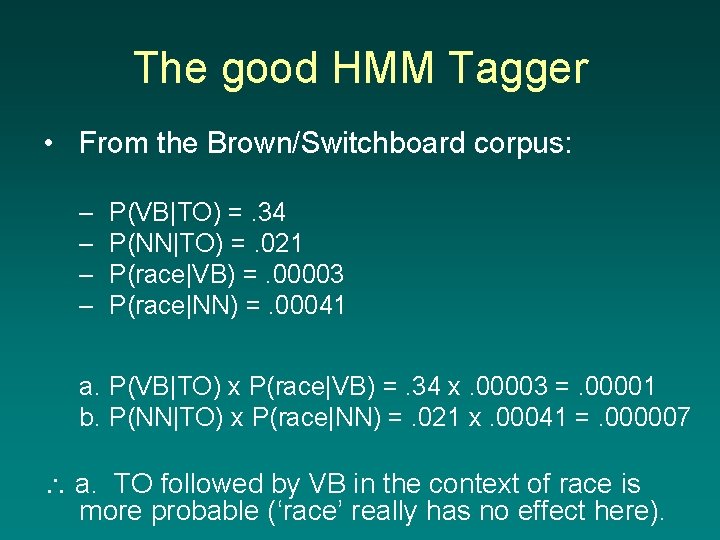

The good HMM Tagger
• From the Brown/Switchboard corpus:
  – P(VB|TO) = .34
  – P(NN|TO) = .021
  – P(race|VB) = .00003
  – P(race|NN) = .00041
• a. P(VB|TO) x P(race|VB) = .34 x .00003 = .0000102
• b. P(NN|TO) x P(race|NN) = .021 x .00041 ≈ .0000086
• So (a) wins: TO followed by VB is more probable in the context of race. The emission term actually favors NN (P(race|NN) is about 14 times P(race|VB)), but the transition term favors VB by a larger factor (P(VB|TO) is about 16 times P(NN|TO)).

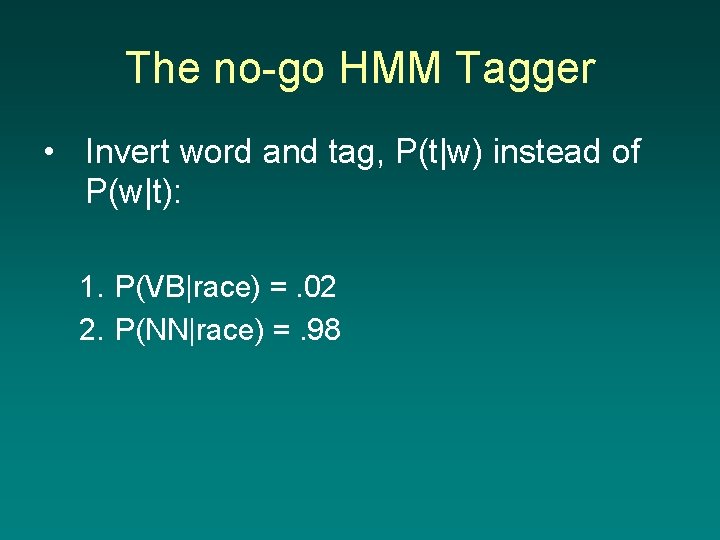

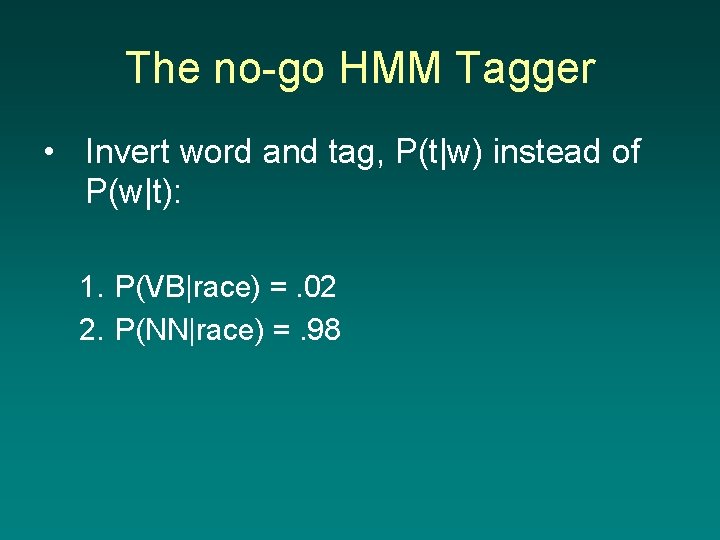
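
The arithmetic on this slide can be checked in a few lines of Python. This is a minimal sketch: the dictionaries `transition` and `emission` and the helper `score` are illustrative names, not from the course materials, and only the four probabilities quoted above are used.

```python
# Probabilities quoted on the slide (Brown/Switchboard estimates).
transition = {("TO", "VB"): 0.34, ("TO", "NN"): 0.021}          # P(tag | previous tag)
emission = {("VB", "race"): 0.00003, ("NN", "race"): 0.00041}   # P(word | tag)


def score(prev_tag, tag, word):
    """P(tag | prev_tag) * P(word | tag) for a single position."""
    return transition[(prev_tag, tag)] * emission[(tag, word)]


scores = {tag: score("TO", tag, "race") for tag in ("VB", "NN")}
best = max(scores, key=scores.get)
print(best)  # VB
```

Only the step after TO differs between the two taggings, so comparing these two products is enough to pick the tag for race.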

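The no-go HMM Tagger
• Invert word and tag, using P(t|w) instead of P(w|t):
  1. P(VB|race) = .02
  2. P(NN|race) = .98
• These probabilities favor NN for race by a wide margin, and they ignore the context entirely.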

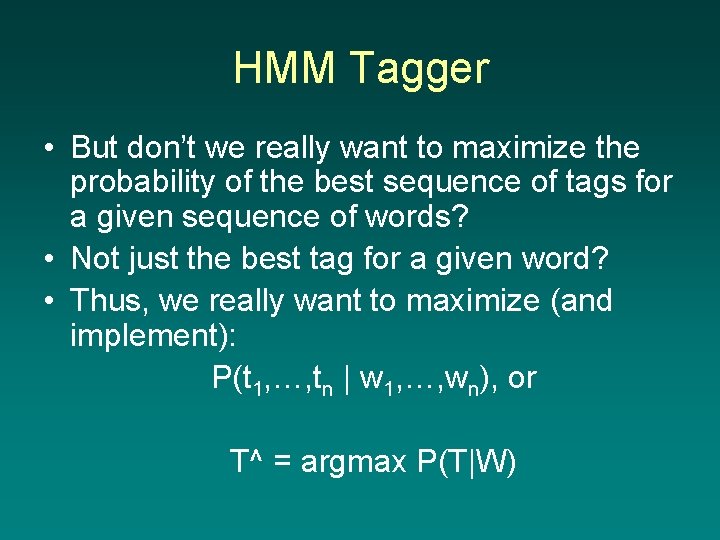

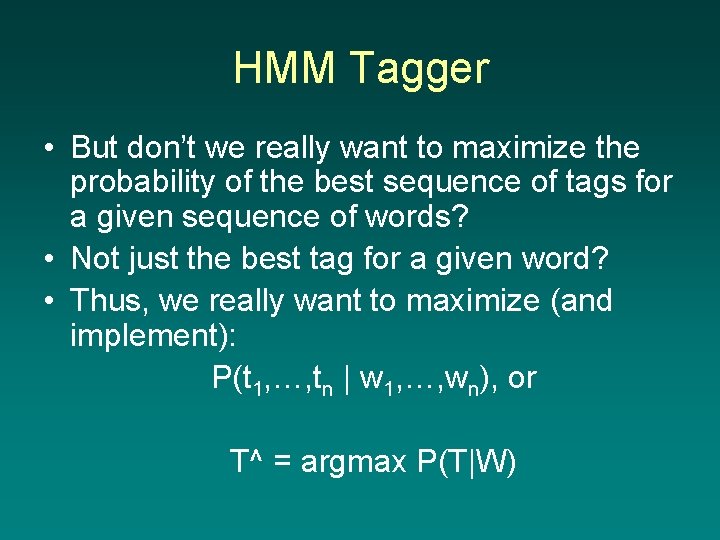
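
A sketch of why the inverted model goes wrong on example 1. It assumes (the slides do not say this) that the inverted tagger multiplies P(tag|word) by the same transition probabilities as the good tagger; the dictionary names are illustrative.

```python
# Transition probabilities from the "good" tagger slide: P(tag | previous tag).
transition = {("TO", "VB"): 0.34, ("TO", "NN"): 0.021}
# Inverted model from the "no-go" slide: P(tag | word) instead of P(word | tag).
tag_given_word = {("race", "VB"): 0.02, ("race", "NN"): 0.98}

# Assumed combination: P(tag | TO) * P(tag | race).
scores = {
    tag: transition[("TO", tag)] * tag_given_word[("race", tag)]
    for tag in ("VB", "NN")
}
best = max(scores, key=scores.get)
print(best)  # NN, the wrong tag for "to race tomorrow"
```

Under this assumption, .021 x .98 ≈ .0206 beats .34 x .02 = .0068: the strong overall preference of race for NN swamps the evidence from TO.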

HMM Tagger
• But don’t we really want to maximize the probability of the best sequence of tags for a given sequence of words?
• Not just the best tag for a given word?
• Thus, we really want to maximize (and implement): $P(t_1, \ldots, t_n \mid w_1, \ldots, w_n)$, or $\hat{T} = \arg\max_T P(T \mid W)$

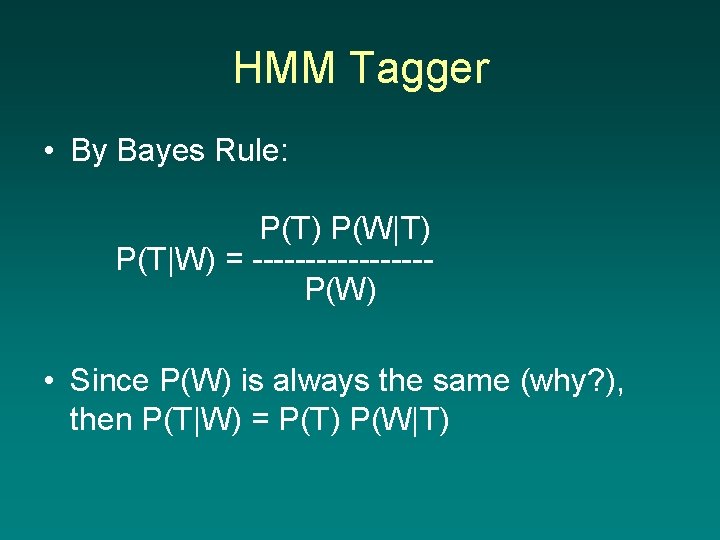

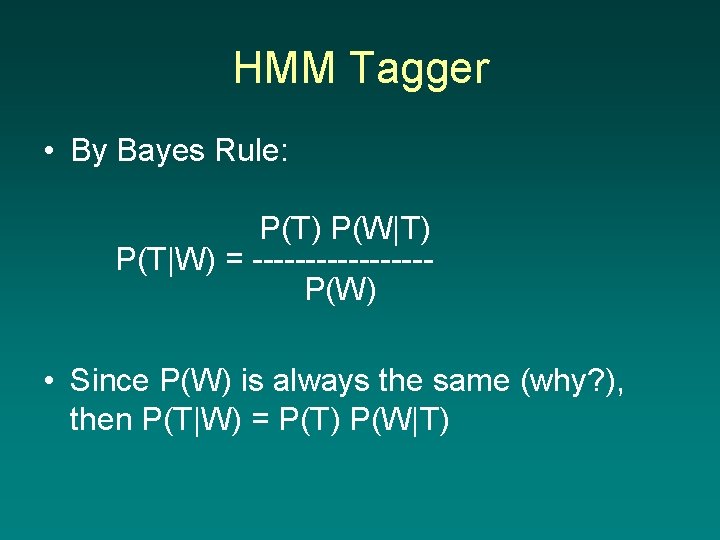

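HMM Tagger
• By Bayes' rule:
$$P(T \mid W) = \frac{P(T)\,P(W \mid T)}{P(W)}$$
• Since P(W) is the same for every candidate tag sequence T (why?), it does not change which T wins:
$$\hat{T} = \arg\max_T P(T \mid W) = \arg\max_T P(T)\,P(W \mid T)$$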

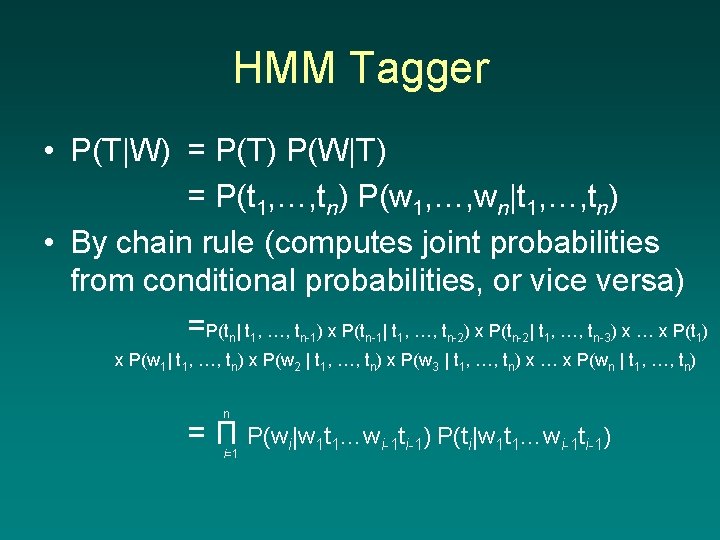

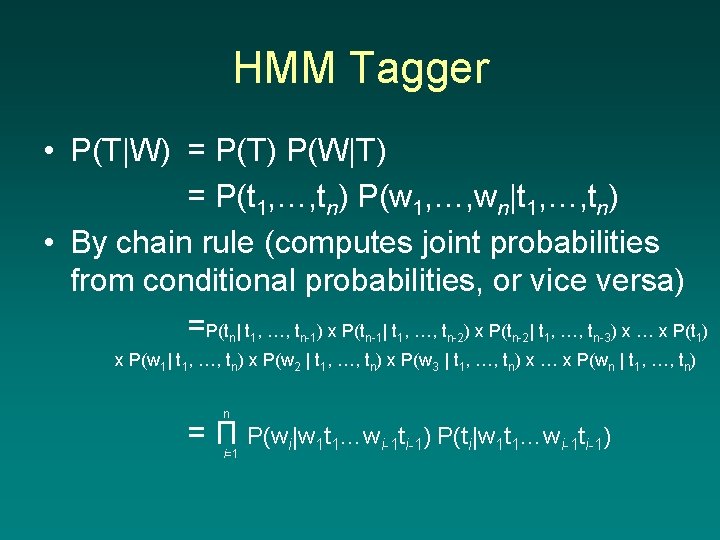
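
A small numeric check that dividing by P(W) leaves the argmax unchanged. Purely for illustration, it assumes that the two taggings of *to race* from the Secretariat example are the only candidates, so P(W) is the sum of their joint scores; factors shared by both taggings are left out because they cancel in the normalization.

```python
# Joint scores P(T) * P(W|T) for two candidate taggings of "to race",
# using the slide's numbers; factors shared by both taggings are omitted.
joint = {"TO VB": 0.34 * 0.00003, "TO NN": 0.021 * 0.00041}

# Illustrative assumption: these are the only two candidate taggings,
# so P(W) is the sum of their joint scores.
p_w = sum(joint.values())
posterior = {tags: score / p_w for tags, score in joint.items()}  # P(T | W)

best_by_joint = max(joint, key=joint.get)
best_by_posterior = max(posterior, key=posterior.get)
print(best_by_joint, best_by_posterior)  # TO VB TO VB
```

Dividing every score by the same positive constant rescales them without reordering them, which is why a tagger never needs to compute P(W).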

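HMM Tagger
• Maximize $P(T)\,P(W \mid T) = P(t_1, \ldots, t_n)\,P(w_1, \ldots, w_n \mid t_1, \ldots, t_n)$
• By the chain rule (which computes a joint probability from conditional probabilities, or vice versa):
$$P(t_1, \ldots, t_n) = P(t_n \mid t_1, \ldots, t_{n-1}) \times P(t_{n-1} \mid t_1, \ldots, t_{n-2}) \times P(t_{n-2} \mid t_1, \ldots, t_{n-3}) \times \cdots \times P(t_1)$$
$$P(w_1, \ldots, w_n \mid t_1, \ldots, t_n) = P(w_1 \mid t_1, \ldots, t_n) \times P(w_2 \mid w_1, t_1, \ldots, t_n) \times \cdots \times P(w_n \mid w_1, \ldots, w_{n-1}, t_1, \ldots, t_n)$$
• Applying the chain rule instead in the interleaved order $t_1, w_1, t_2, w_2, \ldots$ gives the same joint probability as a single product:
$$P(T)\,P(W \mid T) = \prod_{i=1}^{n} P(w_i \mid w_1 t_1 \ldots w_{i-1} t_{i-1} t_i)\, P(t_i \mid w_1 t_1 \ldots w_{i-1} t_{i-1})$$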

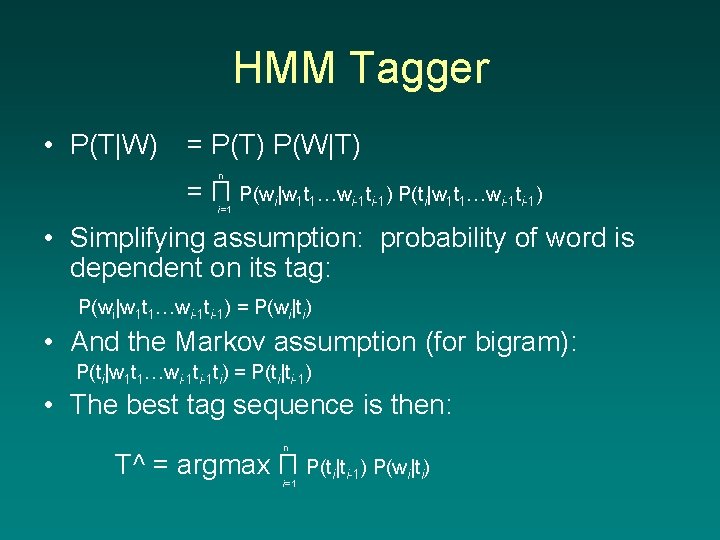

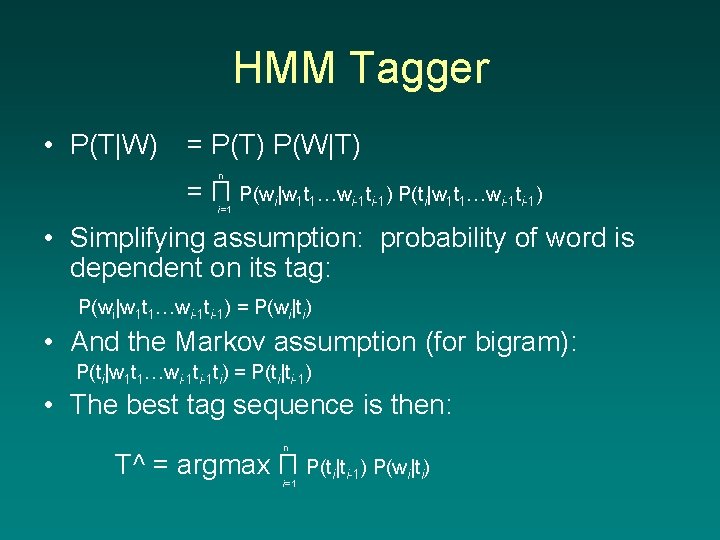

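HMM Tagger
• We maximize $P(T)\,P(W \mid T) = \prod_{i=1}^{n} P(w_i \mid w_1 t_1 \ldots w_{i-1} t_{i-1} t_i)\, P(t_i \mid w_1 t_1 \ldots w_{i-1} t_{i-1})$
• Simplifying assumption: the probability of a word depends only on its own tag:
  $P(w_i \mid w_1 t_1 \ldots w_{i-1} t_{i-1} t_i) = P(w_i \mid t_i)$
• And the Markov assumption (for a bigram tagger): a tag depends only on the previous tag:
  $P(t_i \mid w_1 t_1 \ldots w_{i-1} t_{i-1}) = P(t_i \mid t_{i-1})$
• The best tag sequence is then (where $P(t_1 \mid t_0)$ is the initial-state probability of $t_1$):
$$\hat{T} = \arg\max_T \prod_{i=1}^{n} P(t_i \mid t_{i-1})\, P(w_i \mid t_i)$$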

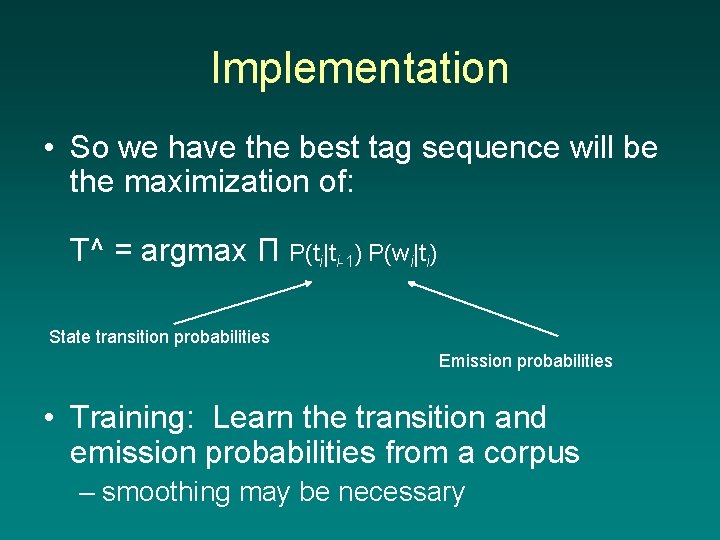

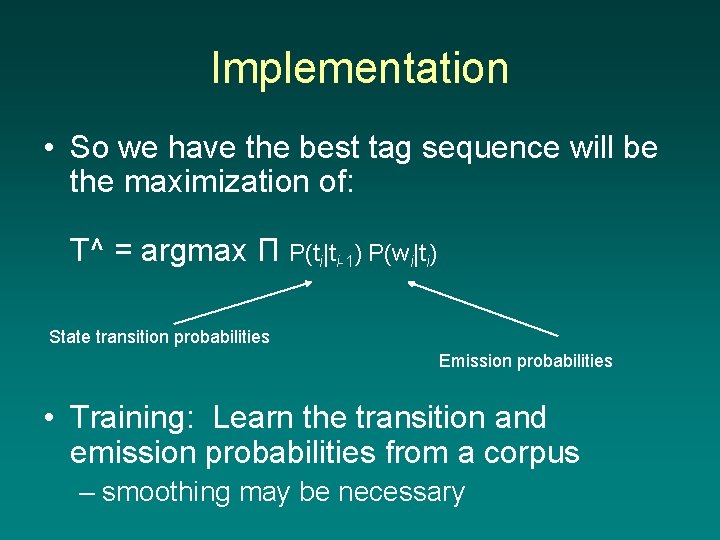
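
The final formula translates directly into a scoring function for one candidate tag sequence. A minimal sketch in Python, assuming a hypothetical layout of plain dicts `initial[t]`, `transition[(prev, t)]`, and `emission[(t, w)]`, with missing entries treated as probability 0. It sums log probabilities instead of multiplying raw ones, a standard way to avoid floating-point underflow on long sentences.

```python
import math


def sequence_log_score(words, tags, initial, transition, emission):
    """log of prod_i P(t_i | t_{i-1}) * P(w_i | t_i), using initial[t] for P(t_1).

    Hypothetical dict layout: initial[t], transition[(prev, t)], emission[(t, w)].
    Missing entries count as probability 0.
    """
    total = 0.0
    prev = None
    for word, tag in zip(words, tags):
        p_tag = initial.get(tag, 0.0) if prev is None else transition.get((prev, tag), 0.0)
        p_word = emission.get((tag, word), 0.0)
        if p_tag == 0.0 or p_word == 0.0:
            return float("-inf")  # this tag sequence is impossible under the model
        total += math.log(p_tag) + math.log(p_word)
        prev = tag
    return total
```

Picking the best tag sequence then means comparing these scores across candidate sequences; a higher log score is a higher probability.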

Implementation
• So the best tag sequence is the one that maximizes:
  $\hat{T} = \arg\max_T \prod_{i=1}^{n} P(t_i \mid t_{i-1})\, P(w_i \mid t_i)$
  – $P(t_i \mid t_{i-1})$: state transition probabilities
  – $P(w_i \mid t_i)$: emission probabilities
• Training: learn the transition and emission probabilities from a corpus; smoothing may be necessary

Training
• An HMM needs to be trained on the following:
  1. The initial state probabilities
  2. The state transition probabilities (the tag-tag matrix)
  3. The emission probabilities (the tag-word matrix)

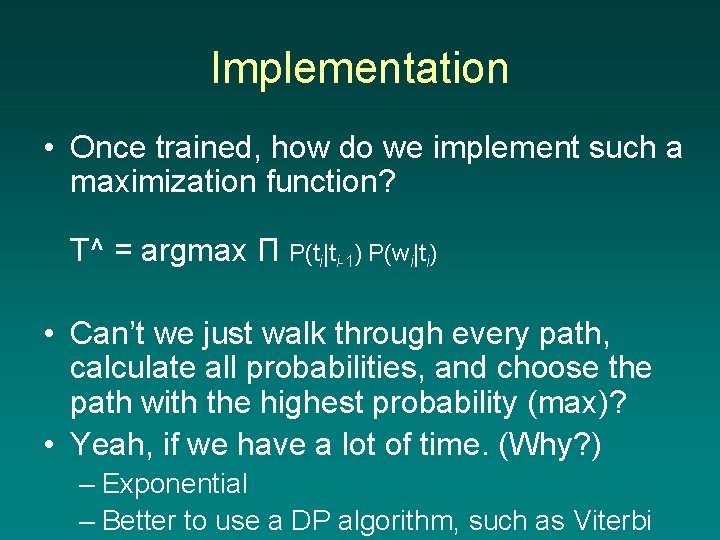

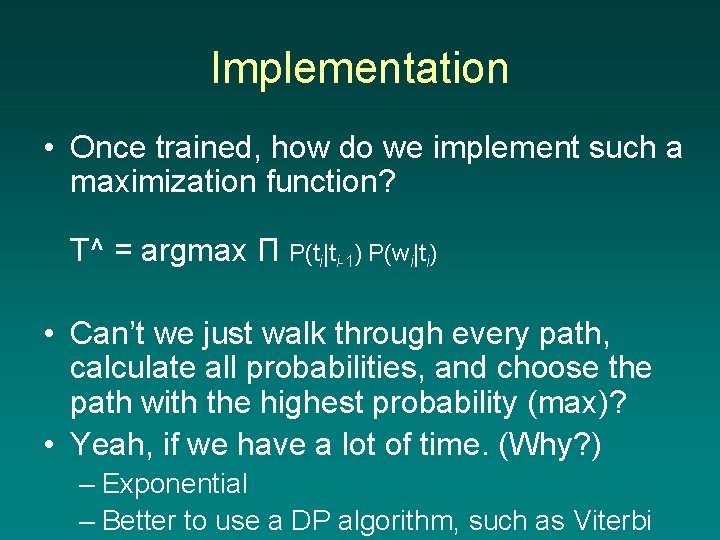
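
Training by maximum-likelihood estimation amounts to counting and normalizing. A sketch in Python; the function name `train_hmm`, the input format (a list of sentences, each a list of `(word, tag)` pairs), and the optional add-k smoothing of the transitions are assumptions, since the slides only say that smoothing may be necessary.

```python
from collections import Counter


def train_hmm(tagged_sentences, k=0.0):
    """Estimate (initial, transition, emission) dicts from (word, tag) sentences.

    k > 0 applies add-k smoothing to the transition probabilities only
    (an illustrative choice; emissions are left as raw relative frequencies).
    """
    init_counts = Counter()    # tag -> number of sentences starting with it
    trans_counts = Counter()   # (prev_tag, tag) -> count
    emit_counts = Counter()    # (tag, word) -> count
    prev_totals = Counter()    # prev_tag -> number of transitions out of it
    tag_totals = Counter()     # tag -> number of tokens with that tag

    for sentence in tagged_sentences:
        prev = None
        for word, tag in sentence:
            tag_totals[tag] += 1
            emit_counts[(tag, word)] += 1
            if prev is None:
                init_counts[tag] += 1
            else:
                trans_counts[(prev, tag)] += 1
                prev_totals[prev] += 1
            prev = tag

    tags = sorted(tag_totals)
    n_sentences = sum(init_counts.values())
    initial = {t: init_counts[t] / n_sentences for t in tags}

    transition = {}
    for prev in tags:
        denom = prev_totals[prev] + k * len(tags)
        if denom == 0:
            continue  # tag only ever appears sentence-finally and there is no smoothing
        for t in tags:
            transition[(prev, t)] = (trans_counts[(prev, t)] + k) / denom

    emission = {(t, w): c / tag_totals[t] for (t, w), c in emit_counts.items()}
    return initial, transition, emission
```

Without smoothing, any tag bigram never seen in training gets probability 0, which rules out every path through it; add-k trades a little probability mass from seen bigrams to cover those cases.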

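Implementation
• Once trained, how do we implement such a maximization function? $\hat{T} = \arg\max_T \prod_{i=1}^{n} P(t_i \mid t_{i-1})\, P(w_i \mid t_i)$
• Can't we just walk through every path, calculate all the probabilities, and choose the path with the highest probability (max)?
• Yes, if we have a lot of time. (Why?)
  – The number of paths is exponential: with |T| possible tags and n words there are |T|^n tag sequences
  – Better to use a dynamic programming (DP) algorithm, such as Viterbi, which needs only O(n·|T|²) steps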
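
A compact Viterbi sketch for a bigram HMM, in Python. It assumes a hypothetical layout of plain dicts: `initial[t]` for P(t_1), `transition[(prev, t)]` for P(t_i|t_{i-1}), and `emission[(t, w)]` for P(w_i|t_i), with missing entries treated as 0. Scores are kept as log probabilities to avoid underflow.

```python
import math

NEG_INF = float("-inf")


def log_prob(p):
    """log(p), with log(0) mapped to -inf so impossible paths never win."""
    return math.log(p) if p > 0 else NEG_INF


def viterbi(words, tags, initial, transition, emission):
    """Return (best_tag_sequence, its_log_probability) under a bigram HMM."""
    if not words:
        return [], 0.0
    # best[i][t]: highest log score of any tag path for words[0..i] ending in tag t
    best = [{t: log_prob(initial.get(t, 0.0)) + log_prob(emission.get((t, words[0]), 0.0))
             for t in tags}]
    back = [{}]  # back[i][t]: the previous tag on that best path
    for i in range(1, len(words)):
        best.append({})
        back.append({})
        for t in tags:
            # Choose the predecessor maximizing score-so-far + log P(t | prev).
            prev = max(tags, key=lambda p: best[i - 1][p] + log_prob(transition.get((p, t), 0.0)))
            best[i][t] = (best[i - 1][prev]
                          + log_prob(transition.get((prev, t), 0.0))
                          + log_prob(emission.get((t, words[i]), 0.0)))
            back[i][t] = prev
    # Trace back from the best final tag.
    last = max(tags, key=lambda t: best[-1][t])
    path = [last]
    for i in range(len(words) - 1, 0, -1):
        path.append(back[i][path[-1]])
    path.reverse()
    return path, best[-1][last]
```

Each column keeps only the best-scoring path into each tag, so the sketch never enumerates whole tag sequences. If every path has probability 0 (for example, an unknown word), it still returns some path, with score -inf.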

Unknown Words
• The tagger just described will do poorly on unknown words. Why?
• Because $P(w_i \mid t_i) = 0$ for a word it has not seen (or, more specifically, for a word-tag pair it has not seen), so any tag sequence that pairs the word with such a tag gets probability 0; for a completely unseen word, every sequence does.
• How do we resolve this problem?
  – A dictionary with the most common tag for each word (the stupid tagger)
    • Still doesn't solve the problem for completely novel words
  – Morphological/typographical analysis
  – Probability of a tag generating an unknown word
    • Secondary training required
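
One common way to estimate "the probability of a tag generating an unknown word" is to treat rare training words as instances of a single unknown-word token, so that P(UNK|tag) is learned from how often each tag occurs on rare words. This is a swapped-in, widely used technique; the slides do not say which method the course uses, and the `<UNK>` token, the `min_count` threshold, and all function names here are assumptions.

```python
from collections import Counter

UNK = "<UNK>"  # hypothetical token standing for "a word not seen (often) in training"


def replace_rare(tagged_sentences, min_count=2):
    """Rewrite words seen fewer than min_count times as UNK; also return the kept vocabulary."""
    counts = Counter(word for sentence in tagged_sentences for word, _ in sentence)
    vocab = {word for word, c in counts.items() if c >= min_count}
    rewritten = [[(word if word in vocab else UNK, tag) for word, tag in sentence]
                 for sentence in tagged_sentences]
    return rewritten, vocab


def estimate_emissions(tagged_sentences):
    """Maximum-likelihood estimate of P(word | tag) from (word, tag) pairs."""
    pair_counts = Counter((tag, word) for sentence in tagged_sentences for word, tag in sentence)
    tag_counts = Counter(tag for sentence in tagged_sentences for _, tag in sentence)
    return {(tag, word): c / tag_counts[tag] for (tag, word), c in pair_counts.items()}


def emission_prob(word, tag, emissions, vocab):
    """P(word | tag), routing any out-of-vocabulary word through UNK."""
    key = word if word in vocab else UNK
    return emissions.get((tag, key), 0.0)
```

The "secondary training" here is the extra pass that rewrites rare words before estimating emissions. At tagging time, an unseen word then gets P(UNK|tag) instead of 0, which tends to favor open-class tags such as NN that frequently occur on rare words.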