Porting NANOS on SDSM

- Slides: 44

Porting NANOS on SDSM
Christian Perez
GOAL: porting a shared memory environment to distributed memory. What is missing in current SDSM systems?

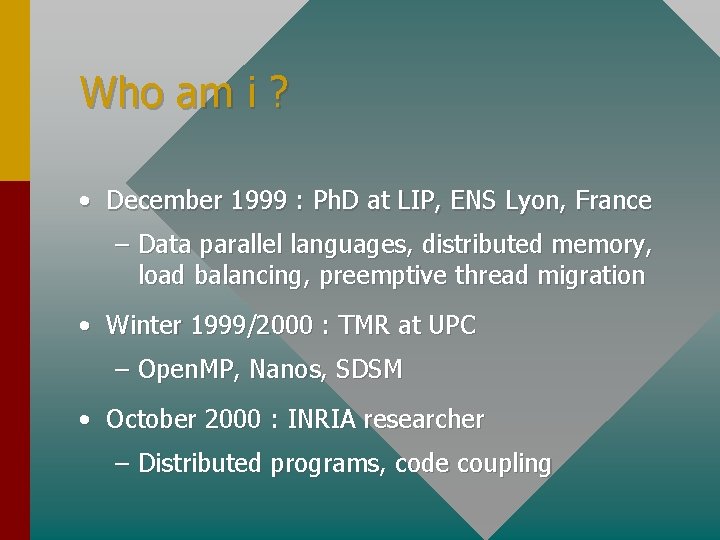

Who am I?
• December 1999: Ph.D. at LIP, ENS Lyon, France
  – Data parallel languages, distributed memory, load balancing, preemptive thread migration
• Winter 1999/2000: TMR at UPC
  – OpenMP, Nanos, SDSM
• October 2000: INRIA researcher
  – Distributed programs, code coupling

Contents
• Motivation
• Related work
• Nanos execution model (NthLib)
• Nanos on top of 2 SDSMs (JIAJIA & DSM-PM2)
• Missing SDSM functionalities
• Conclusion

Motivation
• OpenMP: emerging standard
  – simplicity (no data distribution)
• Cluster of machines (mono or multiprocessors)
  – excellent performance/price ratio
OpenMP on top of a cluster!

OpenMP / Cluster: HOW?
• OpenMP paradigm: shared memory
• Cluster paradigm: message passing
Use of a software DSM system!
Hardware DSM system: SCI (write: 2 µs)
  – specific hardware
  – not yet stable

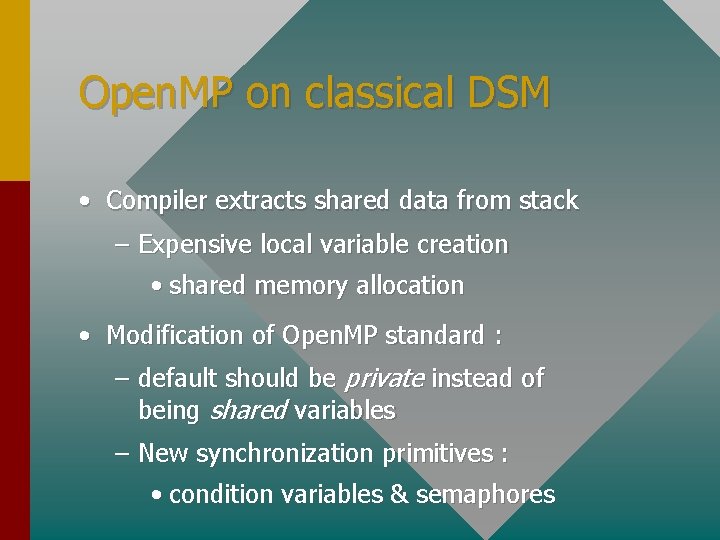

Related work
• Several OpenMP/DSM implementations
  – OpenMP NOW!, Omni
• But:
  – Modification of OpenMP semantics
  – One level of parallelism
  – Do not exploit high performance networks

OpenMP on classical DSM
• Compiler extracts shared data from stack
  – Expensive local variable creation
    • shared memory allocation
• Modification of OpenMP standard:
  – default should be private instead of being shared variables
  – New synchronization primitives:
    • condition variables & semaphores

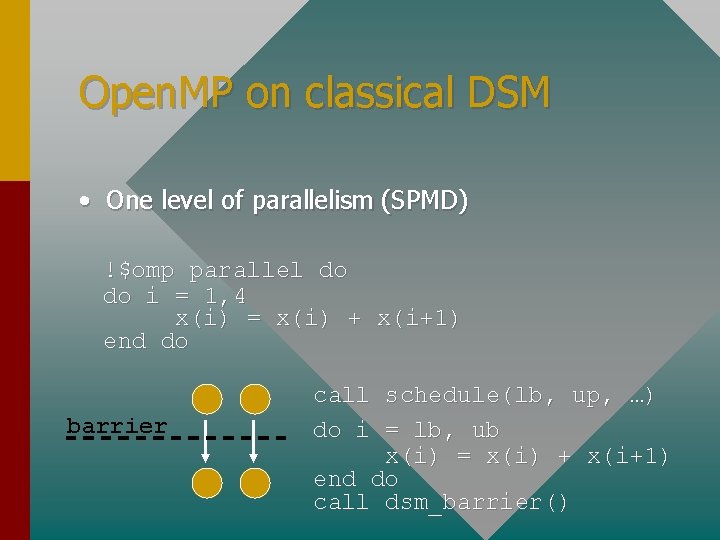

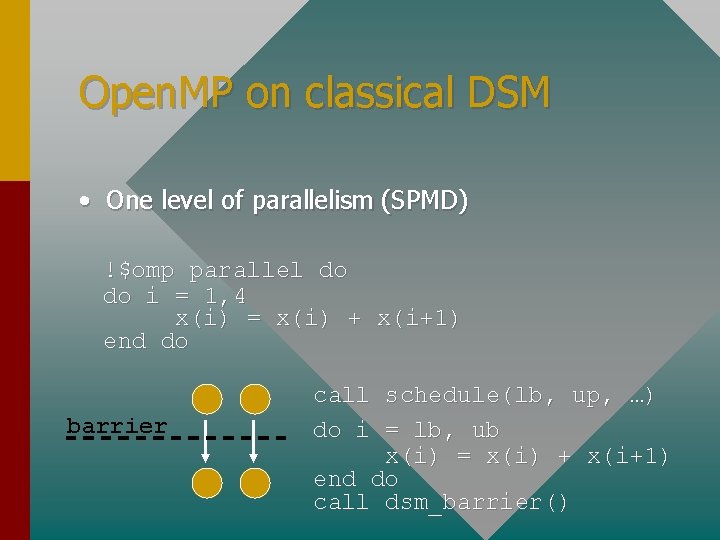

OpenMP on classical DSM
• One level of parallelism (SPMD)
OpenMP source:
    !$omp parallel do
    do i = 1, 4
      x(i) = x(i) + x(i+1)
    end do
Generated SPMD code:
    barrier
    call schedule(lb, ub, …)
    do i = lb, ub
      x(i) = x(i) + x(i+1)
    end do
    call dsm_barrier()
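The slide leaves the body of `schedule` out. One common way to compute per-process bounds is a static block distribution; the sketch below assumes that policy. The name `block_schedule` and its parameters are hypothetical and are not taken from any of the DSM systems discussed here.

```c
#include <assert.h>

/* Hypothetical helper (not from the slides): split the inclusive
 * iteration range [lo, hi] into nprocs contiguous blocks and return
 * the block owned by process `rank` in *lb and *ub.
 * An empty block is reported as *lb > *ub. */
void block_schedule(int lo, int hi, int rank, int nprocs, int *lb, int *ub)
{
    assert(nprocs > 0 && rank >= 0 && rank < nprocs);

    int n = hi - lo + 1;           /* total number of iterations */
    if (n < 0)
        n = 0;

    int base  = n / nprocs;        /* iterations every process gets */
    int extra = n % nprocs;        /* first `extra` processes get one more */

    int start = lo + rank * base + (rank < extra ? rank : extra);
    int count = base + (rank < extra ? 1 : 0);

    *lb = start;
    *ub = start + count - 1;
}
```

With the slide's loop (`i = 1, 4`) and two processes, this gives iterations 1 to 2 to process 0 and 3 to 4 to process 1.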

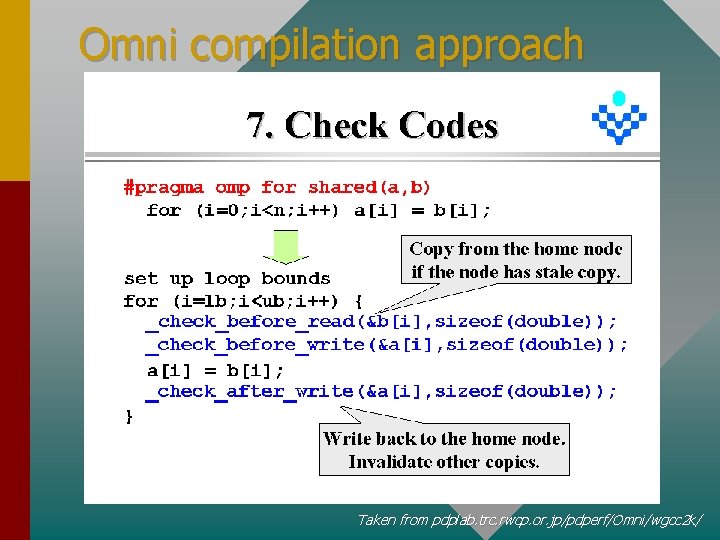

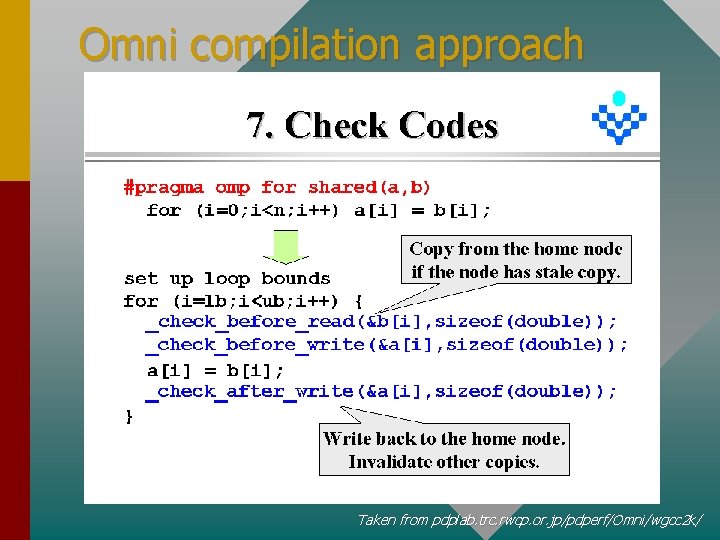

Omni compilation approach
Taken from pdplab.trc.rwcp.or.jp/pdperf/Omni/wgcc2k/

Our goals
• Support the OpenMP standard
• High performance
• Allow exploitation of
  – multithreading (SMP)
  – high performance networks

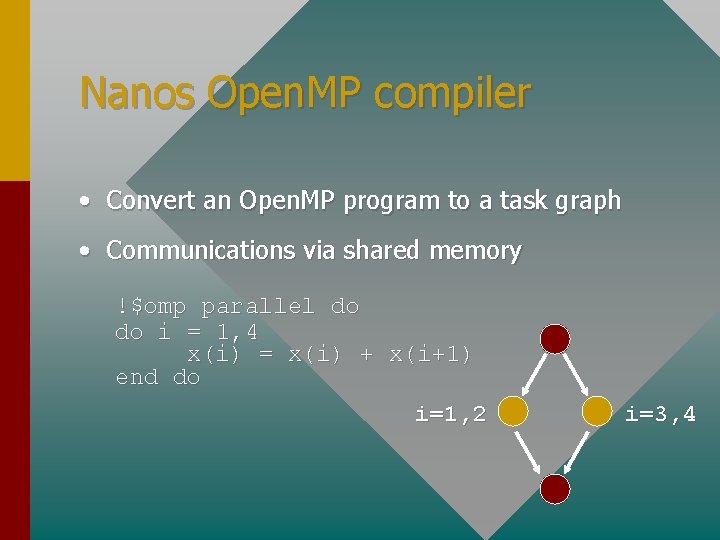

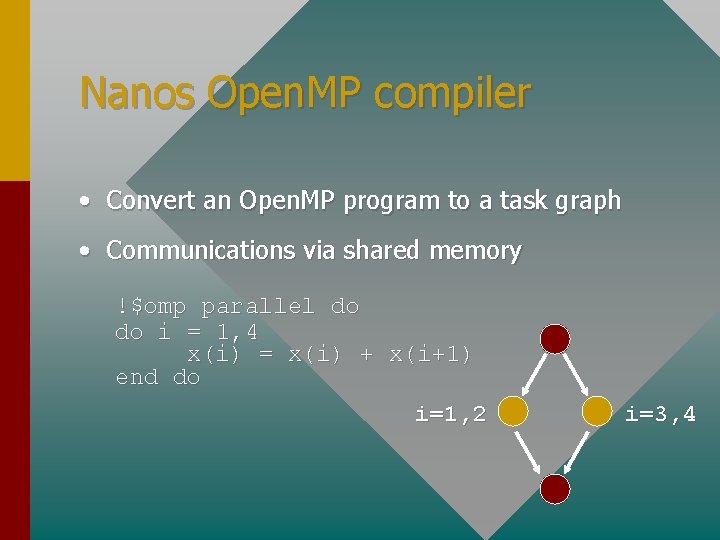

Nanos OpenMP compiler
• Converts an OpenMP program to a task graph
• Communications via shared memory
OpenMP source:
    !$omp parallel do
    do i = 1, 4
      x(i) = x(i) + x(i+1)
    end do
Task graph nodes: i = 1, 2 and i = 3, 4

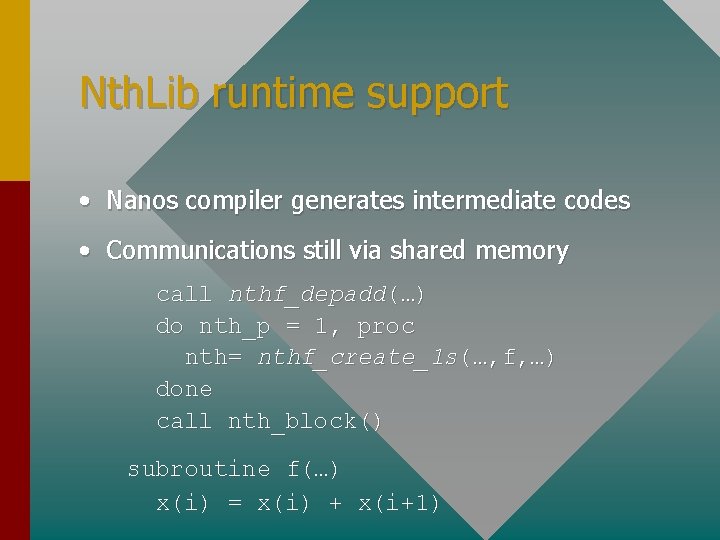

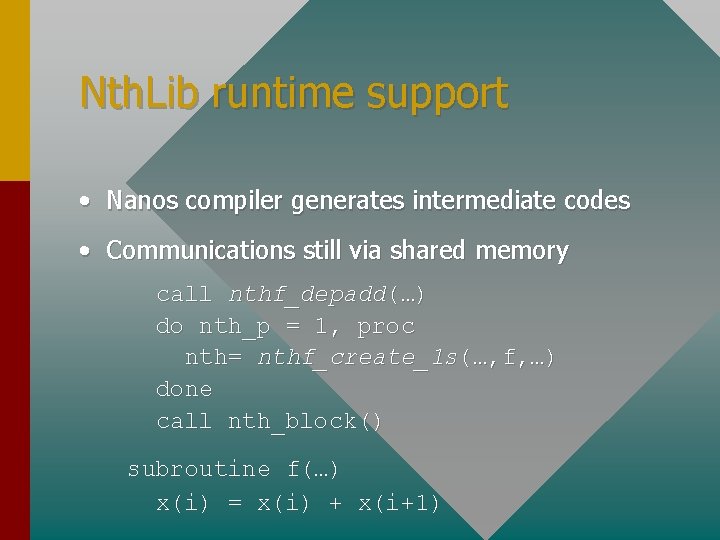

NthLib runtime support
• Nanos compiler generates intermediate code
• Communications still via shared memory
    call nthf_depadd(…)
    do nth_p = 1, proc
      nth = nthf_create_1s(…, f, …)
    end do
    call nth_block()

    subroutine f(…)
      x(i) = x(i) + x(i+1)

NthLib details
• Assumes it runs on top of kernel threads
• Provides user-level threads (QT)
• Stack management (allocate)
• Stack initialization (argument)
• Explicit context switch
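The create-then-block pattern above can be pictured as a small task graph in which each task carries a count of unsatisfied dependences. The following is a minimal single-threaded sketch of that idea only; it is not NthLib code, and every name in it (`task_t`, `task_depend`, `run_graph`, `chunk_body`, `count_body`) is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_SUCC 4

typedef struct task {
    void (*fn)(void *);           /* work executed by the task */
    void *arg;
    int ndeps;                    /* predecessors not yet finished */
    int nsucc;
    struct task *succ[MAX_SUCC];  /* tasks waiting for this one */
    int done;
} task_t;

void task_init(task_t *t, void (*fn)(void *), void *arg)
{
    t->fn = fn; t->arg = arg;
    t->ndeps = 0; t->nsucc = 0; t->done = 0;
}

/* Record that `after` may start only once `before` has finished. */
void task_depend(task_t *before, task_t *after)
{
    assert(before->nsucc < MAX_SUCC);
    before->succ[before->nsucc++] = after;
    after->ndeps++;
}

/* Run ready tasks until no task can make progress.
 * Returns the number of tasks executed. */
int run_graph(task_t **tasks, int n)
{
    int executed = 0, progress = 1;
    while (progress) {
        progress = 0;
        for (int i = 0; i < n; i++) {
            task_t *t = tasks[i];
            if (t->done || t->ndeps > 0)
                continue;
            t->fn(t->arg);
            t->done = 1;
            for (int s = 0; s < t->nsucc; s++)
                t->succ[s]->ndeps--;   /* release successors */
            executed++;
            progress = 1;
        }
    }
    return executed;
}

/* Example bodies: one loop chunk, and a join that counts its runs. */
typedef struct { double *x; int lb, ub; } chunk_t;

void chunk_body(void *arg)
{
    chunk_t *c = arg;
    for (int i = c->lb; i <= c->ub; i++)
        c->x[i] = c->x[i] + c->x[i + 1];
}

void count_body(void *arg)
{
    ++*(int *)arg;
}
```

A typical use creates one `chunk_body` task per iteration block, makes a join task depend on all of them, and calls `run_graph`; the join runs only after both chunks have finished.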

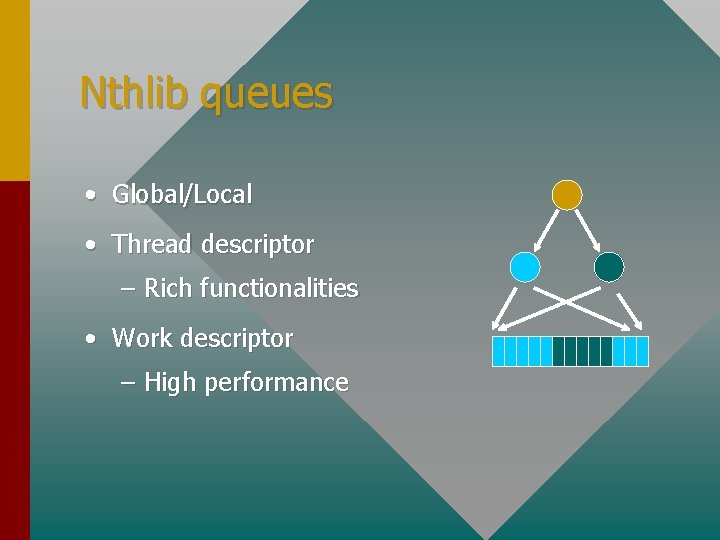

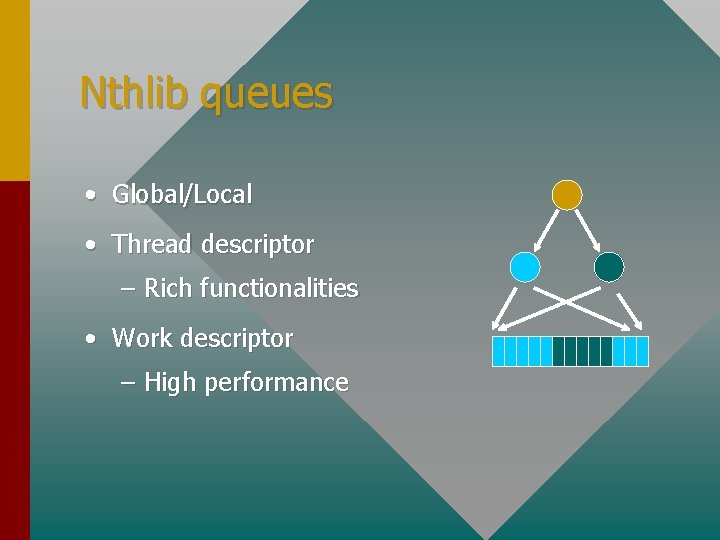

NthLib queues
• Global/Local
• Thread descriptor
  – Rich functionalities
• Work descriptor
  – High performance

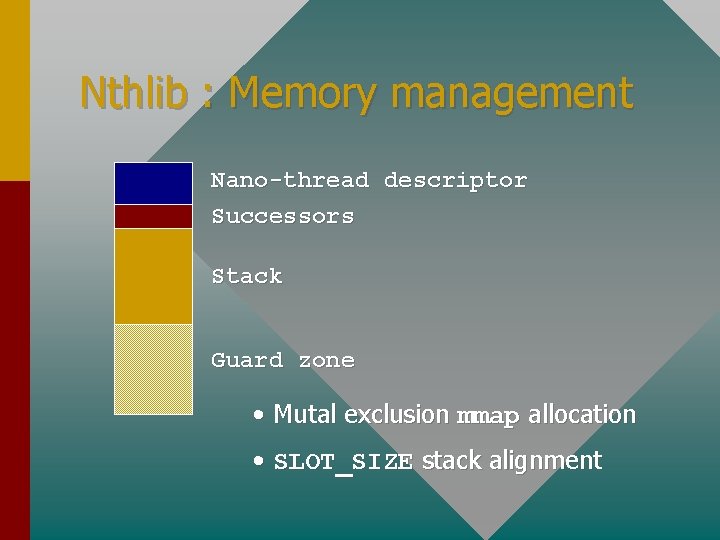

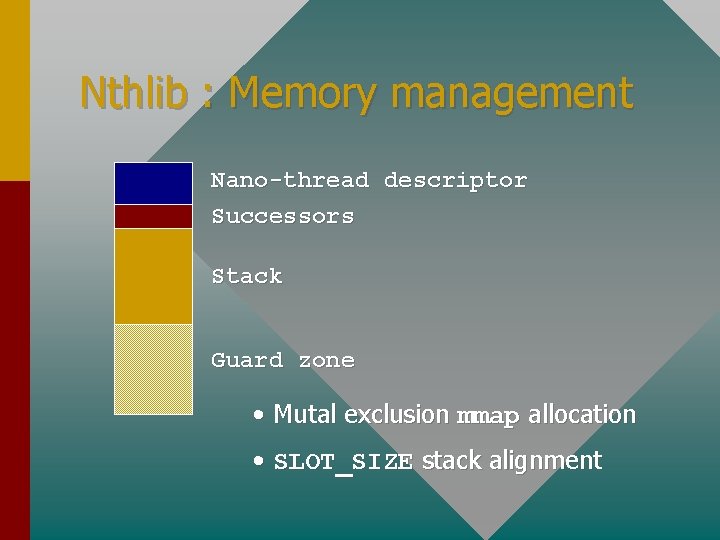

NthLib: Memory management
Slot layout: nano-thread descriptor, successors, stack, guard zone
• Mutual exclusion mmap allocation
• SLOT_SIZE stack alignment

Porting NthLib to SDSM
• Data consistency
• Shared memory management
• Nanos threads
• JIAJIA implementation
• DSM-PM2 implementation
• Summary of DSM requirements

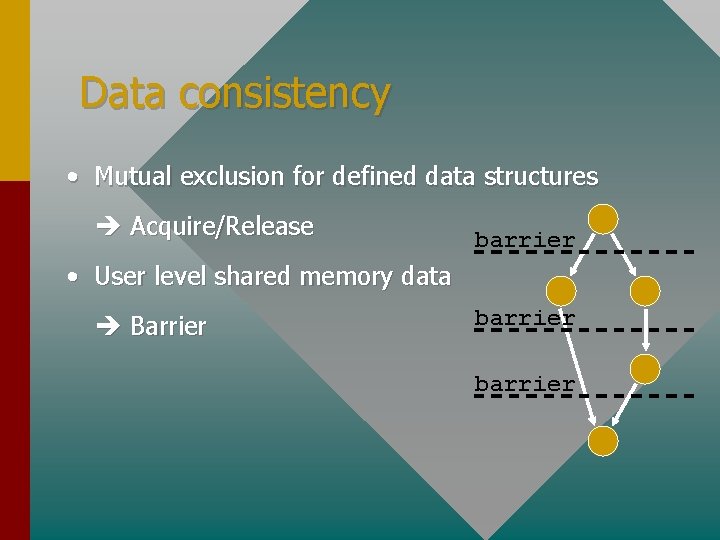

Data consistency
• Mutual exclusion for defined data structures: Acquire/Release
• User level shared memory data: Barrier

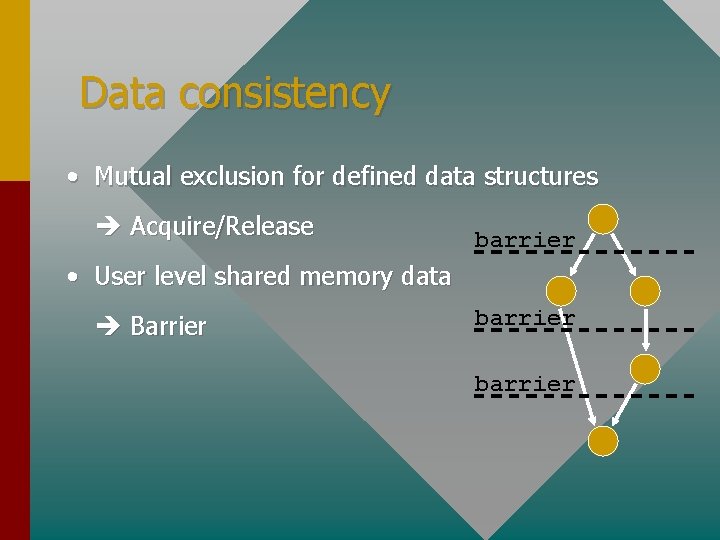


Shared memory management
• Asynchronous shared memory allocation
• Alignment parameter (> PAGE_SIZE)
• Global variables/common declaration: not yet supported

Nano-threads
• Run-to-block execution model
• Shared stacks (father/sons relationship)
• Implicit thread migration (scheduler)

JIAJIA
• Developed in China by W. Hu, W. Shi & Z. Tang
• Public domain DSM
• User level DSM
• DSM: lock/unlock, barrier, cond. variables
• MP: send/receive, broadcast, reduce
• Solaris, AIX, Irix, Linux, NT (not distributed)

JIAJIA: Memory Allocation
• No control of memory alignment (x 2)
• Synchronous memory allocation primitive
Development of an RPC version
  – Based on the send/receive primitives
  – Addition of a user level message handler
Problems
  – Global lock
  – Interference with JIAJIA blocking functions

JIAJIA: Discussion
• Global barrier for data synchronization: no multiple levels of parallelism
• Not thread aware: no efficient use of SMP nodes

DSM-PM2
• Developed at LIP by G. Antoniu (Ph.D. student)
• Public domain
• User level, module of PM2
• Generic and multi-protocol DSM
• DSM: lock/unlock
• MP: LRPC
• Linux, Solaris, Irix (32 bits)

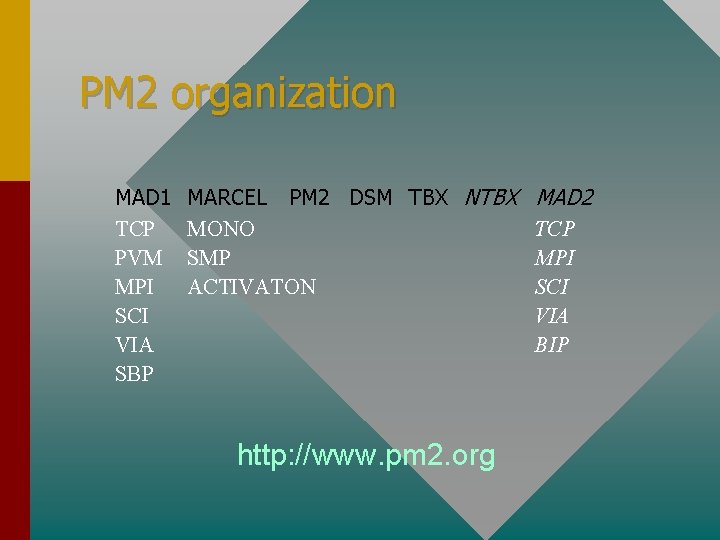

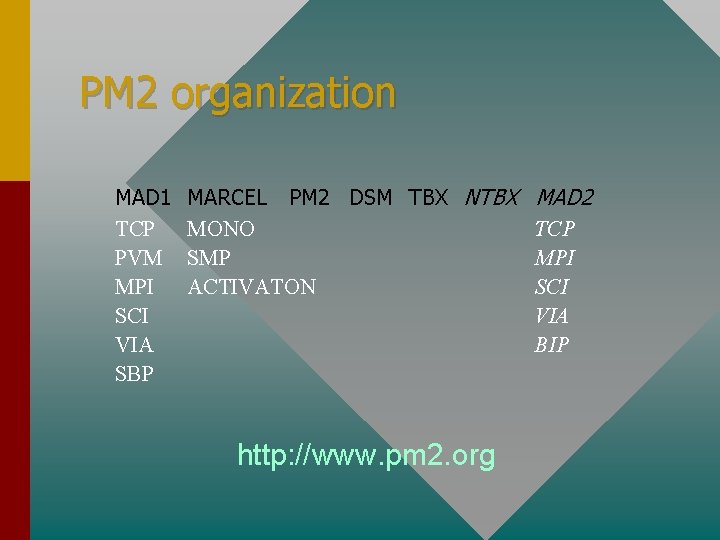

PM2 organization (diagram)
• Modules: PM2, DSM, MARCEL (MONO, SMP, ACTIVATION), TBX, NTBX
• MAD1: TCP, PVM, MPI, SCI, VIA, SBP
• MAD2: TCP, MPI, SCI, VIA, BIP
http://www.pm2.org

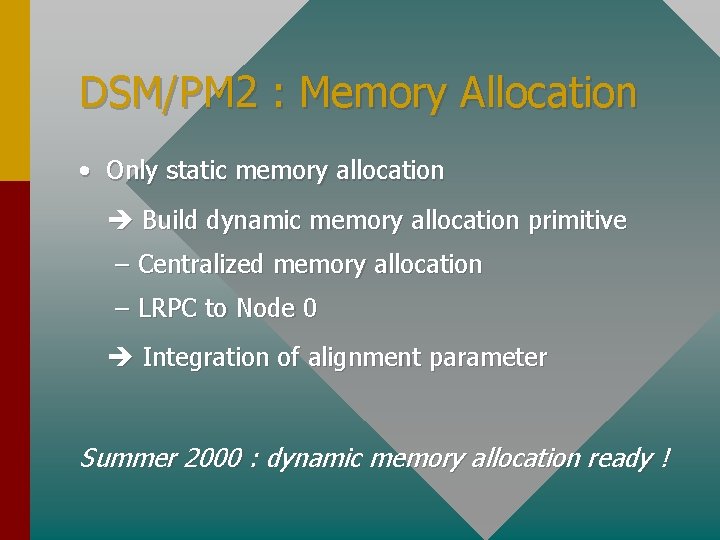

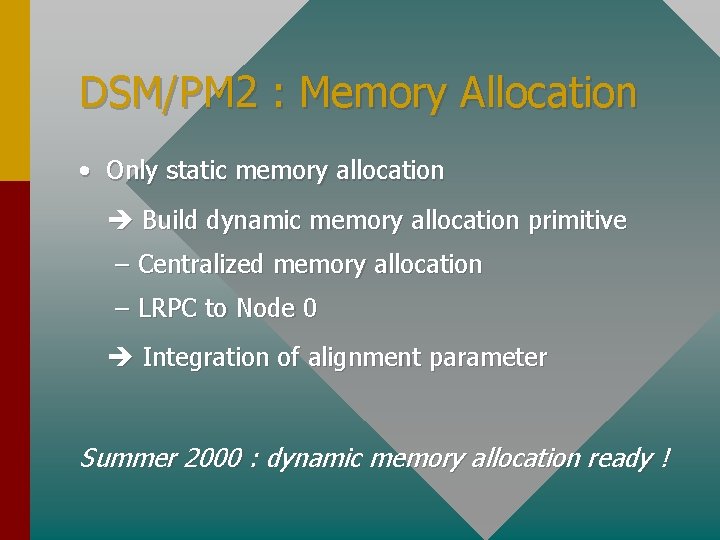

DSM-PM2: Memory Allocation
• Only static memory allocation
Build a dynamic memory allocation primitive
  – Centralized memory allocation
  – LRPC to Node 0
Integration of alignment parameter
Summer 2000: dynamic memory allocation ready!
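As an illustration of a centralized allocator with an alignment parameter, here is a minimal bump-allocator sketch. It models only the bookkeeping that node 0 would hold; the LRPC transport is omitted, and the names `shared_heap_t` and `heap_alloc` are hypothetical rather than DSM-PM2 API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical allocator state, assumed to live on node 0 only. */
typedef struct {
    uintptr_t base;   /* address of the shared region, same on all nodes */
    size_t    size;   /* region size in bytes */
    size_t    next;   /* offset of the first free byte */
} shared_heap_t;

/* Reserve `size` bytes whose address is a multiple of `align`
 * (a power of two). Returns the offset of the block inside the
 * region, or (size_t)-1 if the region is exhausted. */
size_t heap_alloc(shared_heap_t *h, size_t size, size_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);

    uintptr_t addr    = h->base + h->next;
    uintptr_t aligned = (addr + align - 1) & ~(uintptr_t)(align - 1);
    size_t    off     = (size_t)(aligned - h->base);

    if (off > h->size || size > h->size - off)
        return (size_t)-1;

    h->next = off + size;   /* bump past the new block */
    return off;
}
```

Returning an offset instead of a pointer keeps the reply meaningful on every node, under the assumption that the shared region is mapped at the same base address everywhere.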

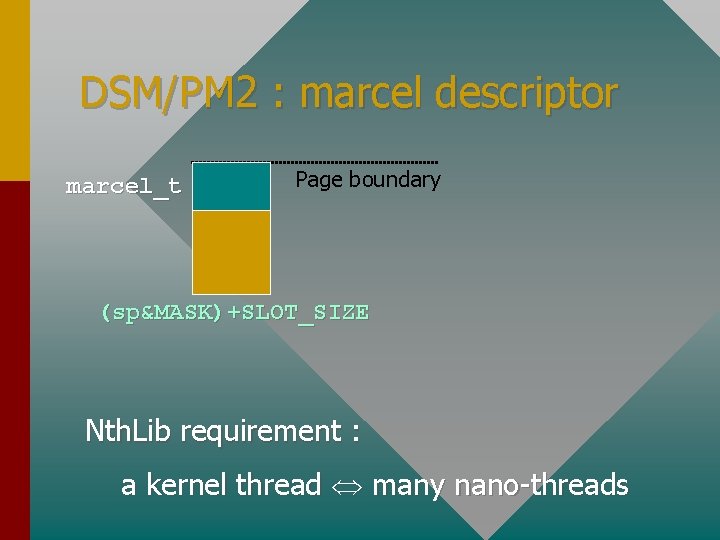

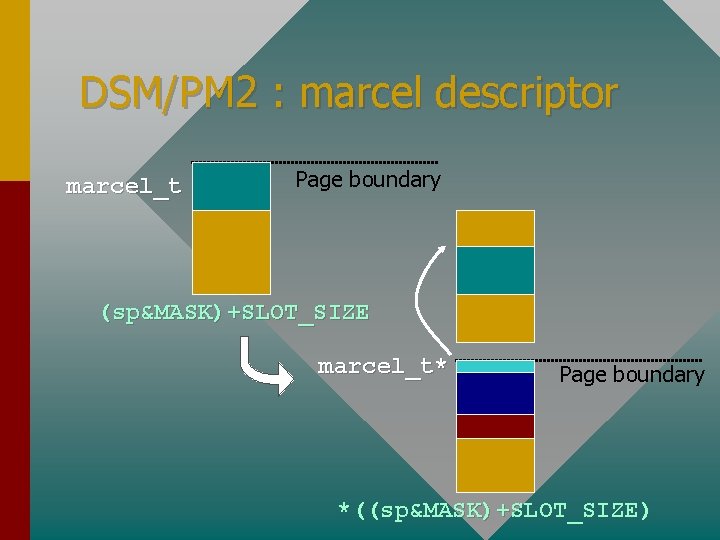

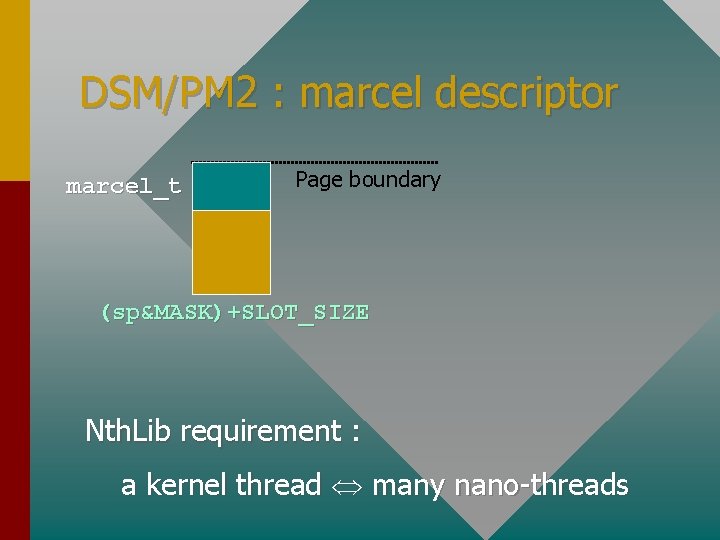

DSM-PM2: marcel descriptor
• marcel_t located at the page boundary (sp&MASK)+SLOT_SIZE
• NthLib requirement: one kernel thread, many nano-threads

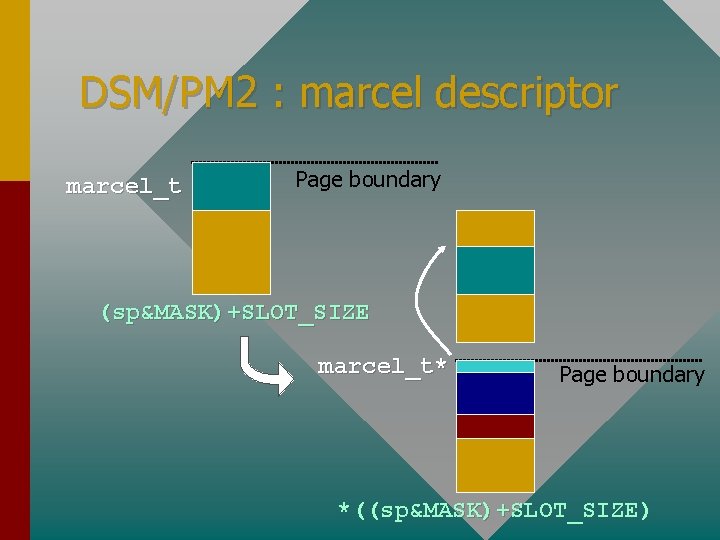

DSM-PM2: marcel descriptor
• Before: marcel_t at the page boundary (sp&MASK)+SLOT_SIZE
• After: marcel_t* at the page boundary, read as *((sp&MASK)+SLOT_SIZE)
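The pointer-at-slot-boundary idea can be sketched as follows: each stack lives in a slot aligned on SLOT_SIZE, and a pointer stored at the top of the slot leads to a descriptor that several stacks may share. This is only an illustration; `desc_t` stands in for `marcel_t`, the 64 KiB slot size is an assumption, and here the pointer is kept in the last word of the slot so that it stays inside the allocation.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define SLOT_SIZE ((uintptr_t)1 << 16)   /* assumed: 64 KiB, power of two */
#define SLOT_MASK (~(SLOT_SIZE - 1))

typedef struct { int id; } desc_t;       /* hypothetical stand-in for marcel_t */

/* Allocate one slot aligned on SLOT_SIZE and store a pointer to the
 * owning descriptor in the last pointer-sized word of the slot. */
void *slot_alloc(desc_t *owner)
{
    void *base = aligned_alloc(SLOT_SIZE, SLOT_SIZE);
    if (base == NULL)
        return NULL;
    desc_t **top = (desc_t **)((char *)base + SLOT_SIZE) - 1;
    *top = owner;
    return base;
}

/* Recover the descriptor from any address inside the slot, for
 * example the current stack pointer: mask down to the slot base,
 * add SLOT_SIZE, then follow the pointer stored just below. */
desc_t *slot_owner(const void *sp)
{
    uintptr_t top = ((uintptr_t)sp & SLOT_MASK) + SLOT_SIZE;
    return *((desc_t **)top - 1);
}
```

Because the slot holds a pointer rather than the descriptor itself, many nano-thread stacks can refer to the descriptor of the one kernel thread that runs them.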

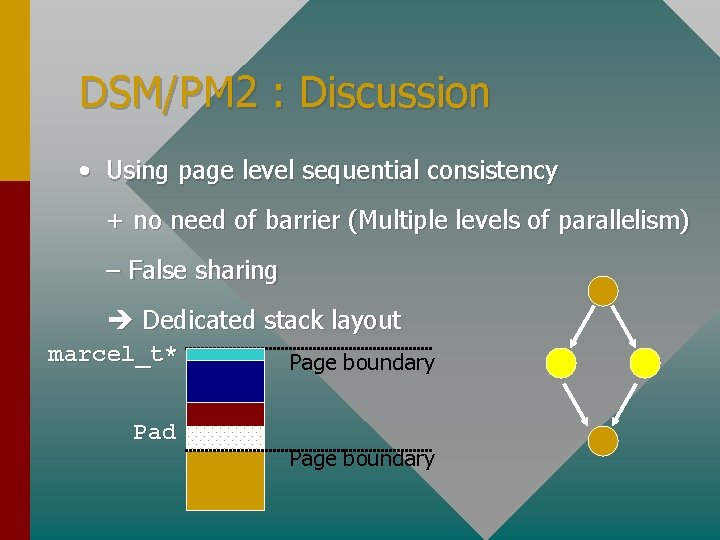

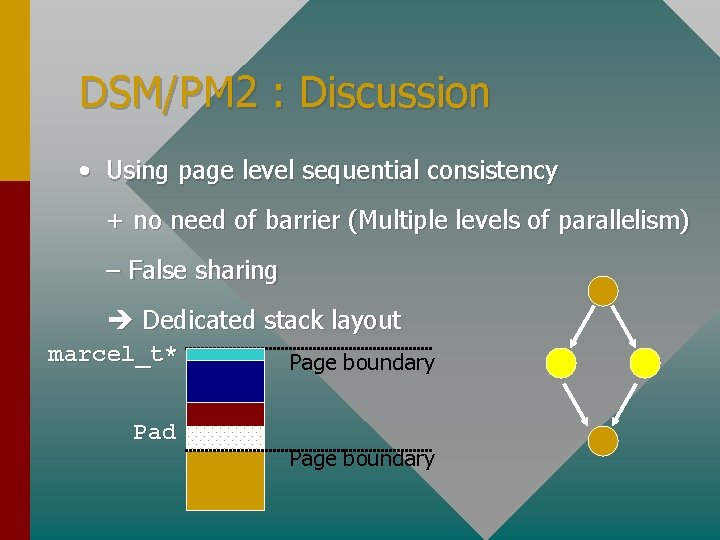

DSM-PM2: Discussion
• Using page level sequential consistency
  + no need of barrier (multiple levels of parallelism)
  – false sharing
Dedicated stack layout: marcel_t*, pad, page boundary

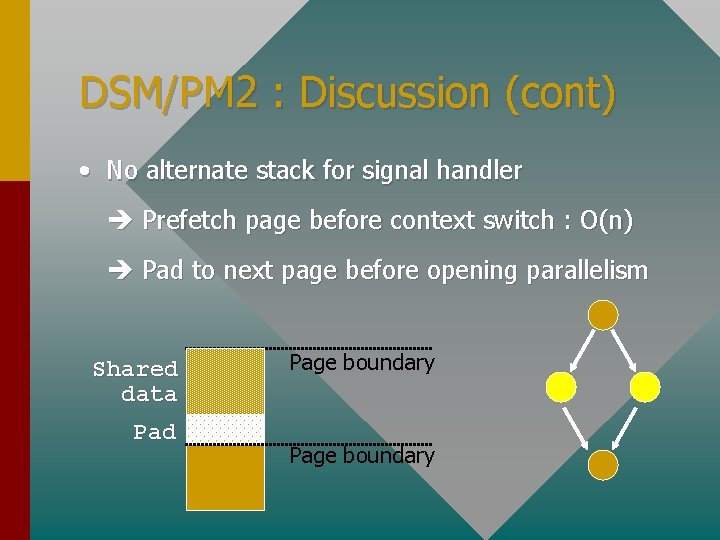

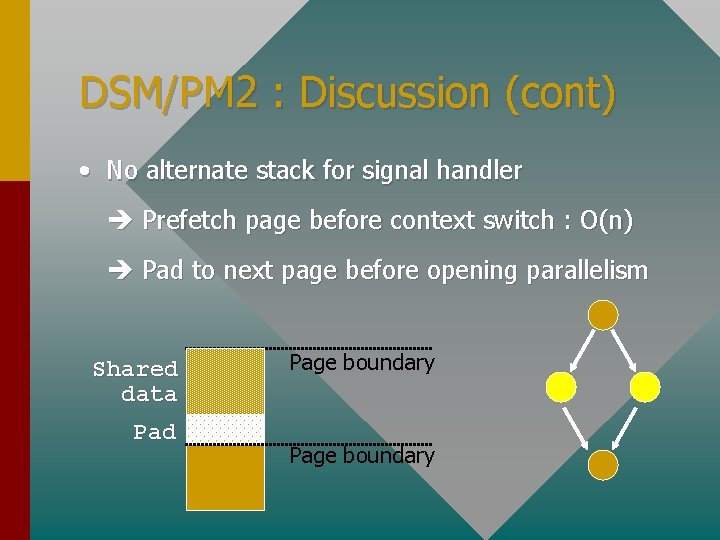

DSM/PM 2 : Discussion (cont) • No alternate stack for signal handler Prefetch page before context switch : O(n) Pad to next page before opening parallelism Shared data Pad Page boundary

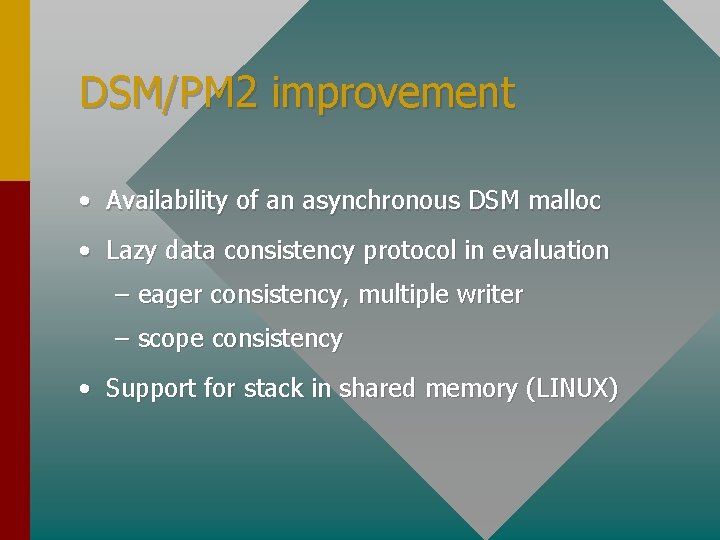

DSM/PM 2 improvement • Availability of an asynchronous DSM malloc • Lazy data consistency protocol in evaluation – eager consistency, multiple writer – scope consistency • Support for stack in shared memory (LINUX)

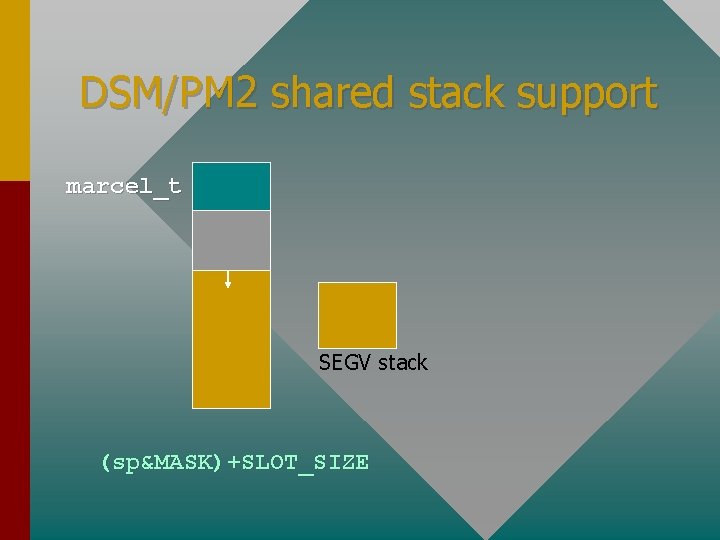

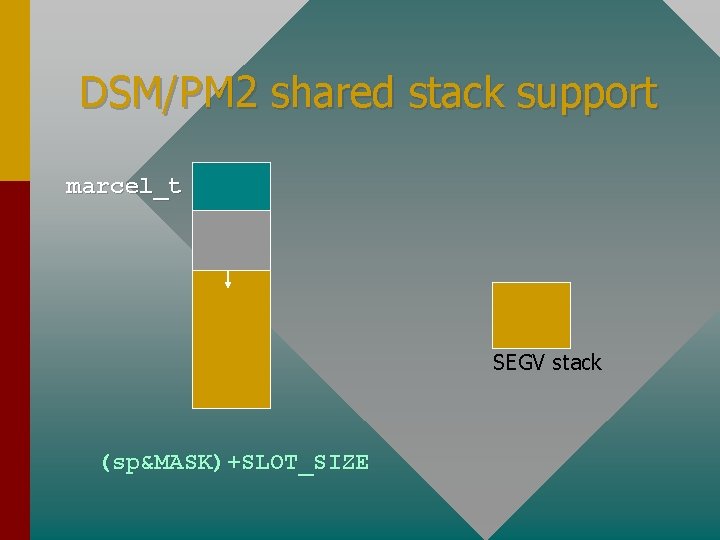

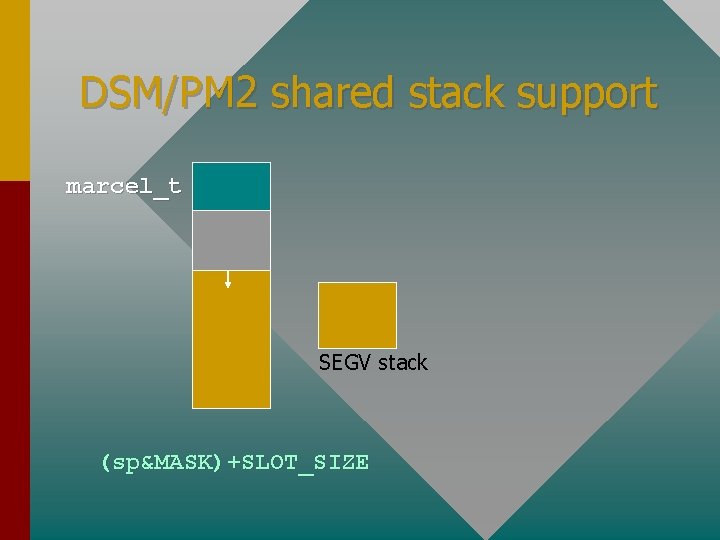

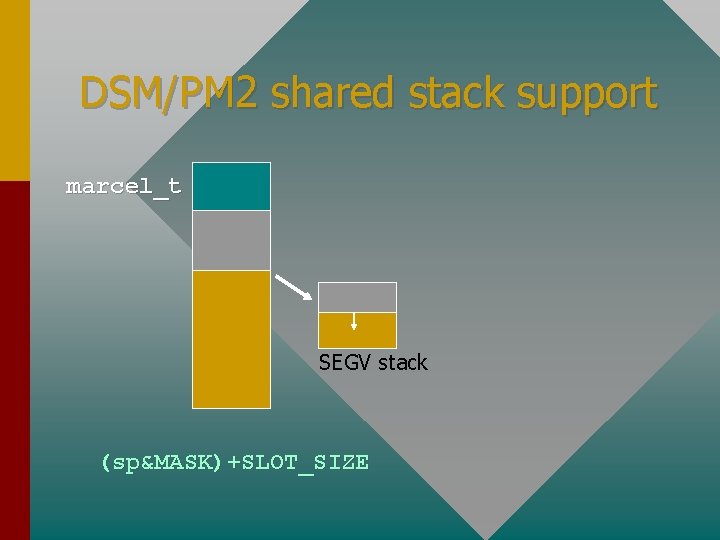

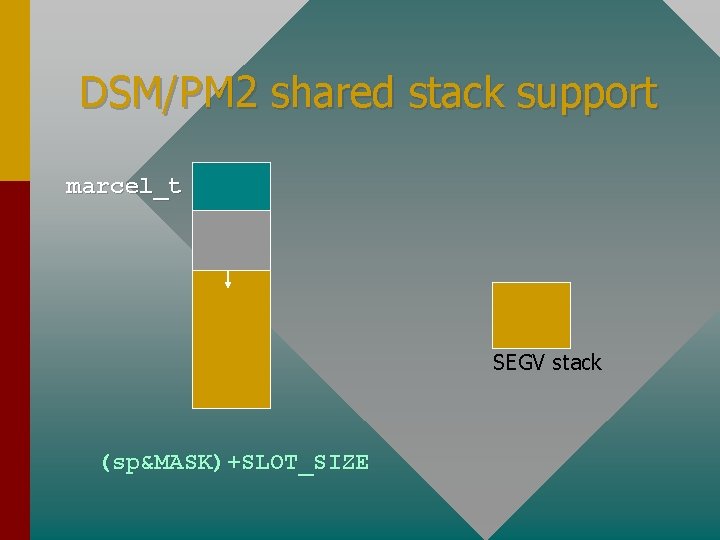

DSM-PM2 shared stack support
Diagram labels: marcel_t, SEGV stack, (sp&MASK)+SLOT_SIZE

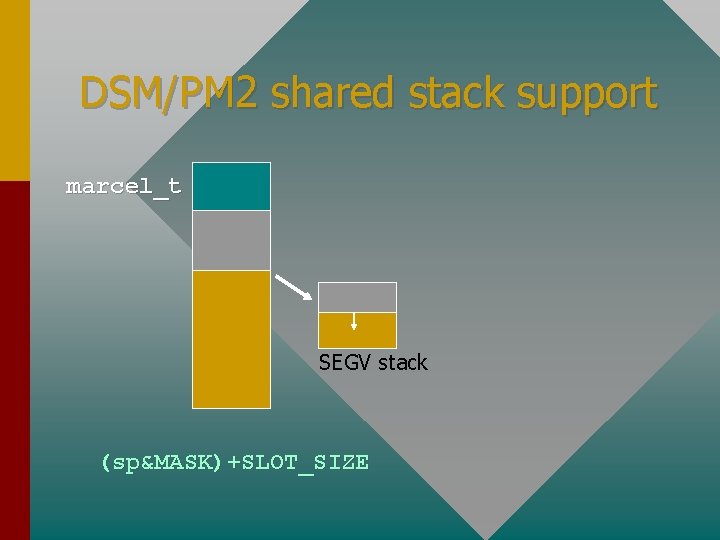

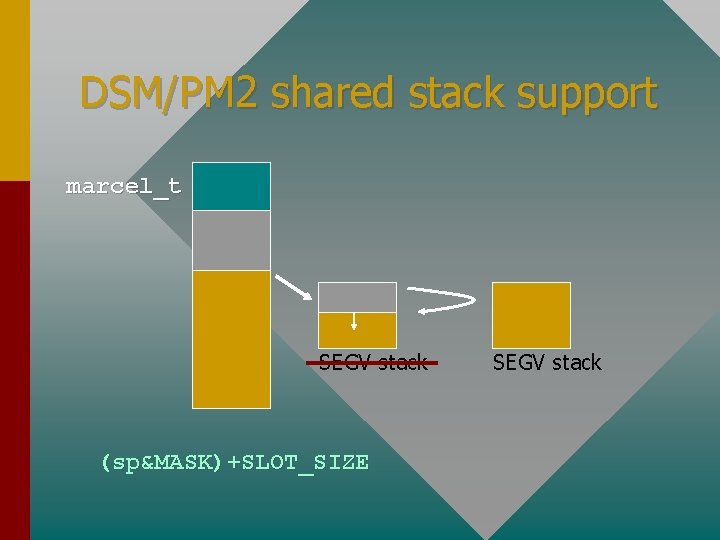


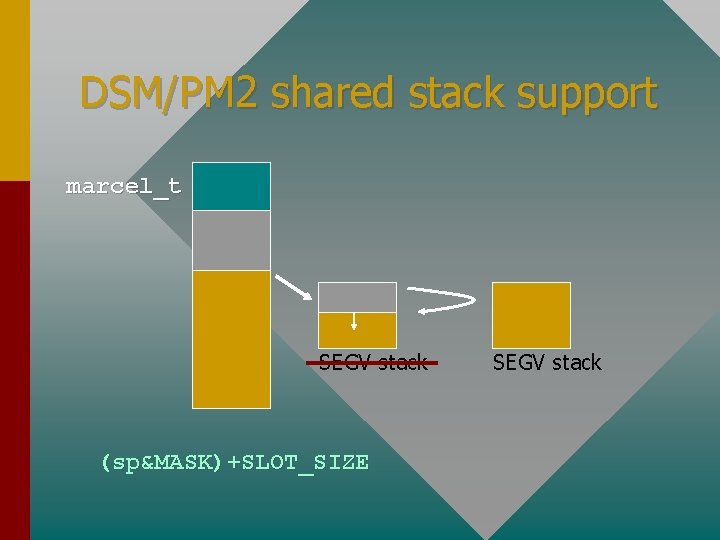

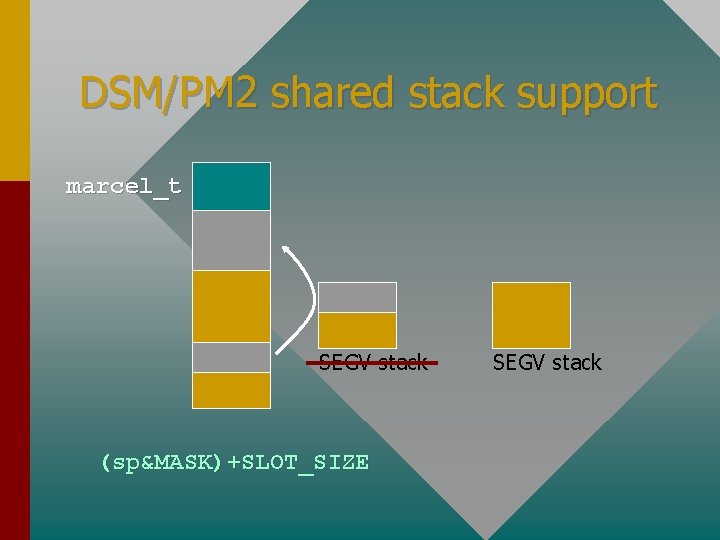

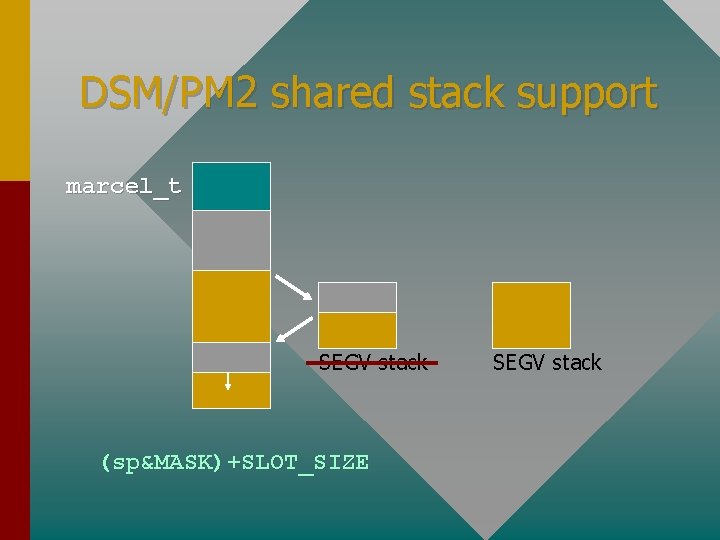

DSM-PM2 shared stack support
Diagram labels: marcel_t, SEGV stack, (sp&MASK)+SLOT_SIZE, second SEGV stack

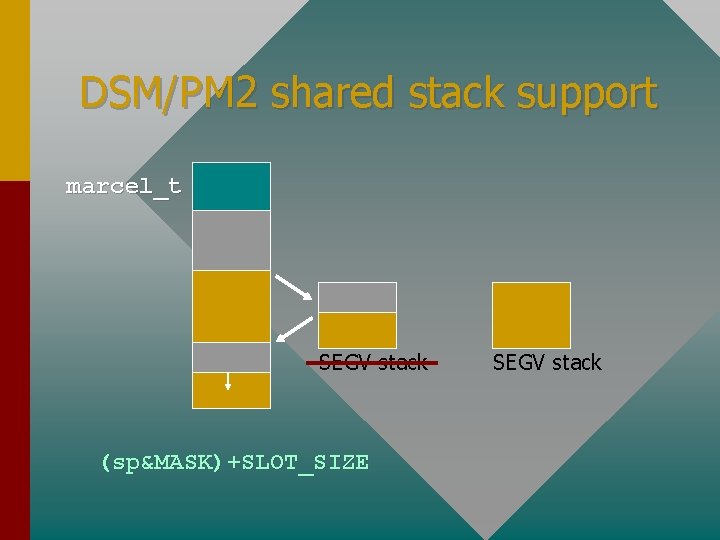

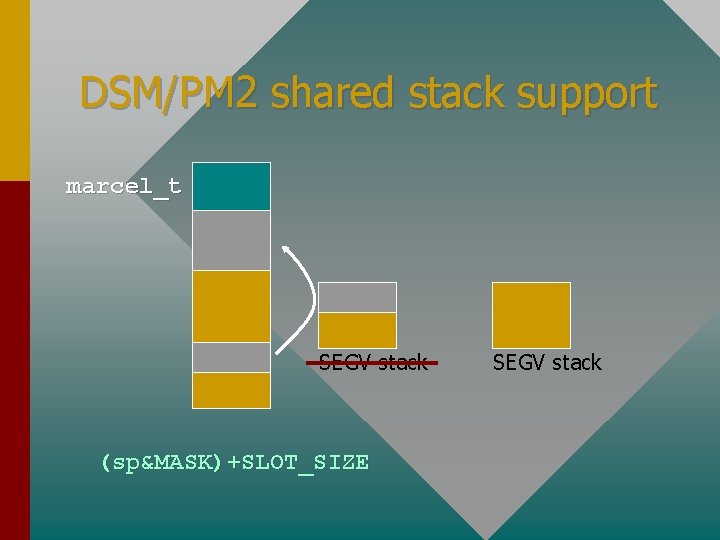


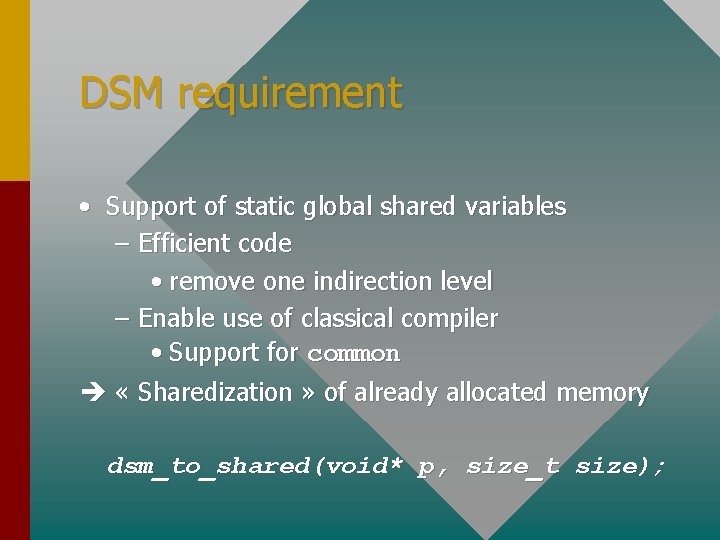

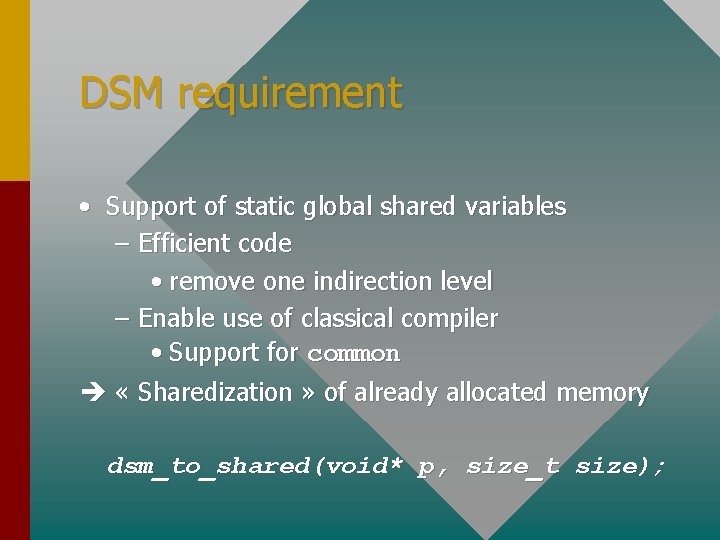

DSM requirement
• Support of static global shared variables
  – Efficient code
    • remove one indirection level
  – Enable use of a classical compiler
    • Support for common
« Sharedization » of already allocated memory
dsm_to_shared(void* p, size_t size);
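The slide gives only the signature of `dsm_to_shared`. With a page-based DSM, one thing such a call would presumably need is the set of pages covering `[p, p + size)`, since whole pages become shared. The sketch below computes that range; `pages_covering`, `page_range_t`, and the 4096-byte page size are assumptions for illustration, not part of any DSM mentioned here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SIZE ((uintptr_t)4096)   /* assumed page size */

typedef struct {
    uintptr_t first_page;   /* address of the first covered page */
    size_t    npages;       /* number of covered pages */
} page_range_t;

/* Hypothetical helper: return the whole pages that contain the
 * bytes [p, p + size). Neighbouring data on the first and last
 * page would become shared as a side effect. */
page_range_t pages_covering(const void *p, size_t size)
{
    page_range_t r = {0, 0};
    assert(p != NULL);
    if (size == 0)
        return r;

    uintptr_t start = (uintptr_t)p & ~(PAGE_SIZE - 1);
    uintptr_t end   = ((uintptr_t)p + size + PAGE_SIZE - 1) & ~(PAGE_SIZE - 1);

    r.first_page = start;
    r.npages     = (size_t)((end - start) / PAGE_SIZE);
    return r;
}
```

A two-byte object that straddles a page boundary already covers two pages, which is one reason padding and alignment matter for data handed to the DSM.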

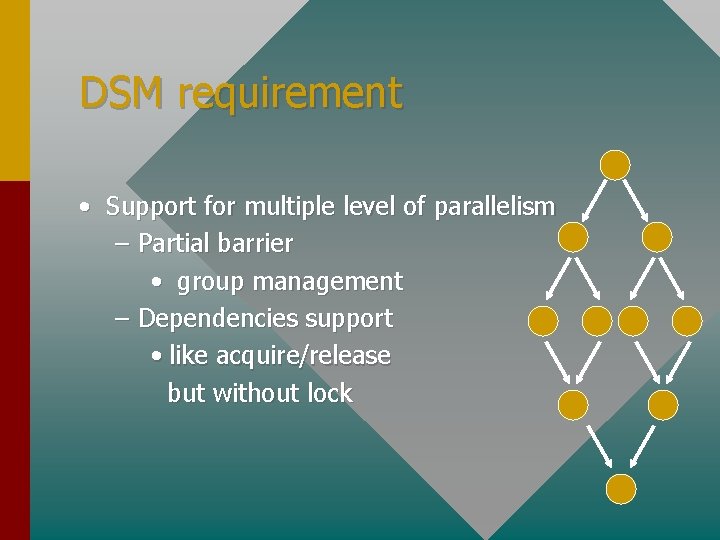

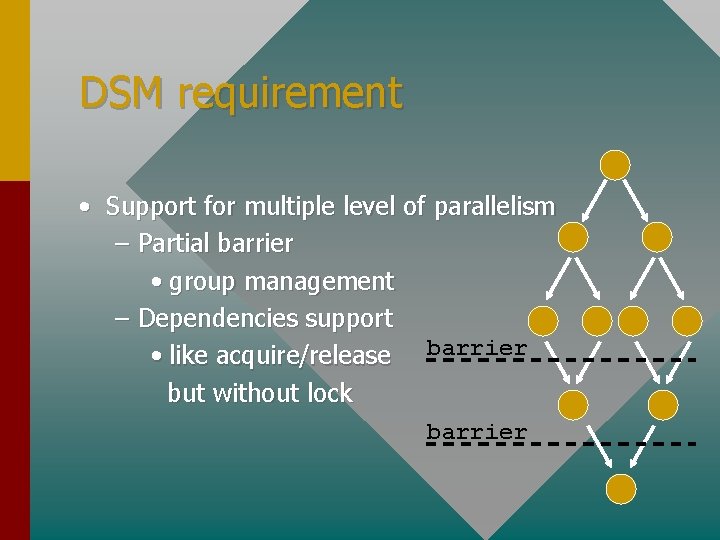

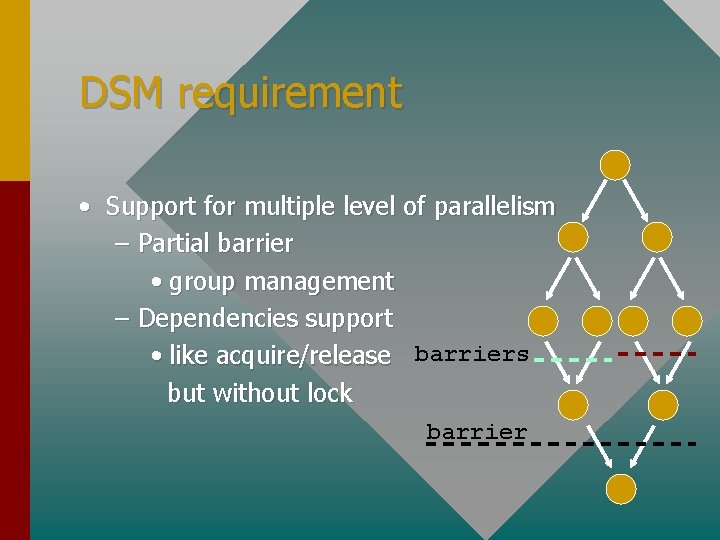

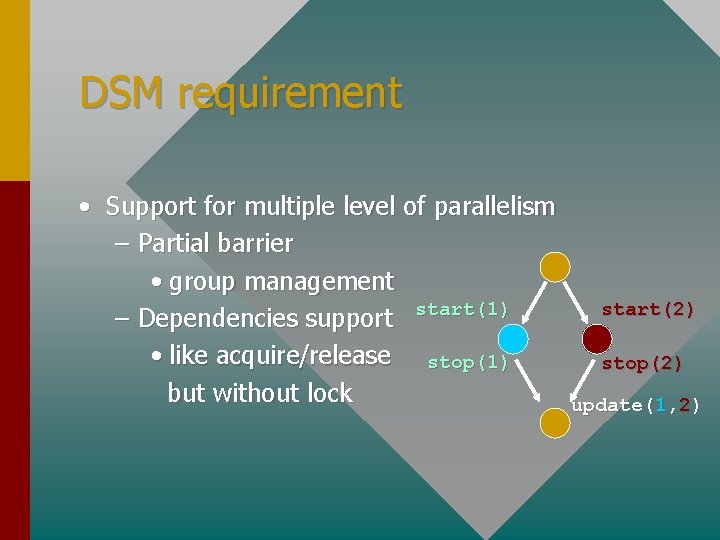

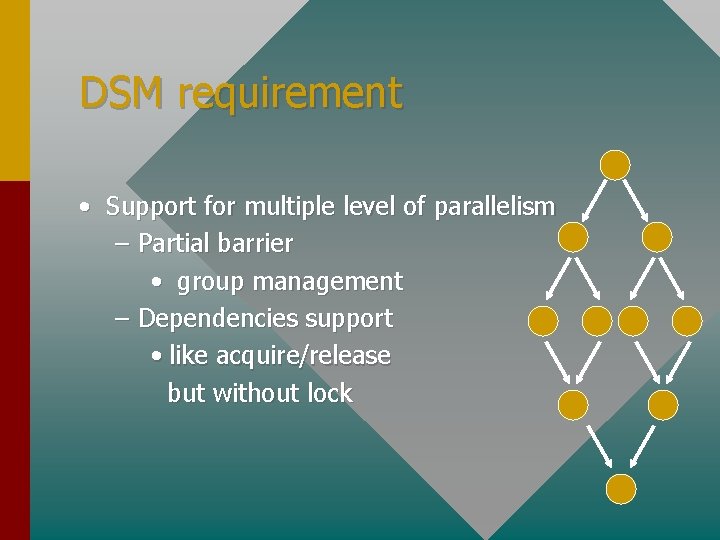

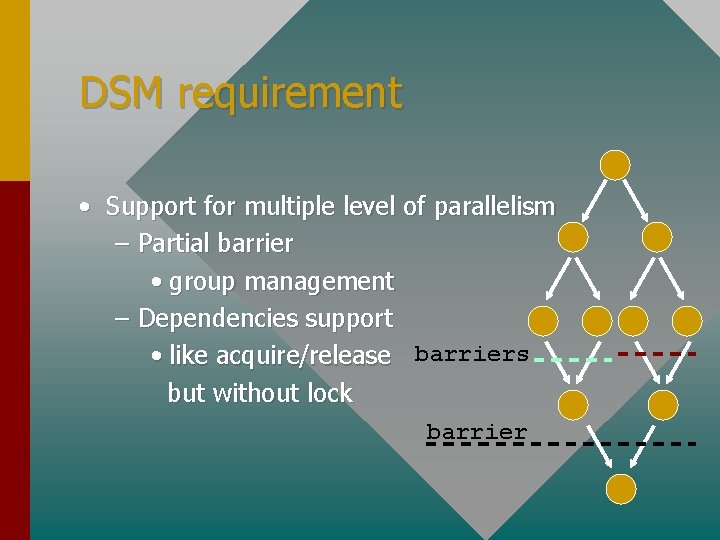

DSM requirement
• Support for multiple levels of parallelism
  – Partial barrier
    • group management
  – Dependencies support
    • like acquire/release but without lock

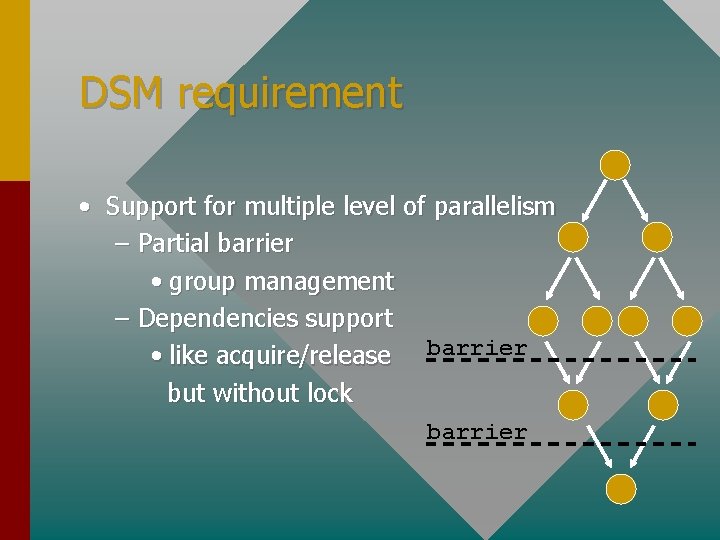


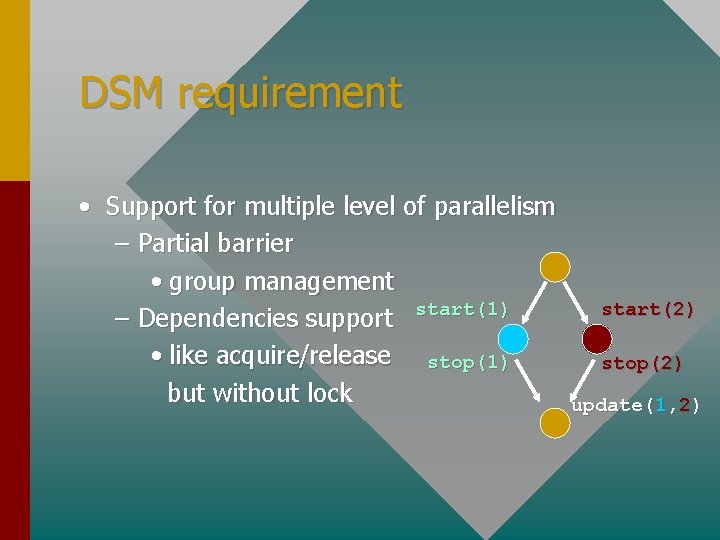

DSM requirement
• Support for multiple levels of parallelism
  – Partial barrier
    • group management
  – Dependencies support
    • like acquire/release but without lock
    • example sequence: start(1), stop(1), start(2), stop(2), update(1, 2)
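A partial barrier synchronizes only the members of one group instead of every node. The sketch below shows the bookkeeping for a single shared-memory node, using C11 atomics in place of DSM messages; `group_barrier_t` and its functions are hypothetical names, and a real DSM version would also have to propagate data updates among the group members.

```c
#include <assert.h>
#include <stdatomic.h>

/* Hypothetical partial barrier: only `members` threads take part. */
typedef struct {
    int        members;      /* size of the group */
    atomic_int arrived;      /* members that reached the barrier */
    atomic_int generation;   /* incremented each time the group is released */
} group_barrier_t;

void group_barrier_init(group_barrier_t *b, int members)
{
    assert(members > 0);
    b->members = members;
    atomic_init(&b->arrived, 0);
    atomic_init(&b->generation, 0);
}

/* Wait until every member of the group has called this function.
 * Threads outside the group are not involved at all. */
void group_barrier_wait(group_barrier_t *b)
{
    int gen = atomic_load(&b->generation);

    if (atomic_fetch_add(&b->arrived, 1) + 1 == b->members) {
        /* Last arrival: reset the counter, then release the others. */
        atomic_store(&b->arrived, 0);
        atomic_fetch_add(&b->generation, 1);
    } else {
        while (atomic_load(&b->generation) == gen) {
            /* spin until the last member arrives */
        }
    }
}
```

Two independent groups each own their own `group_barrier_t`, so an inner parallel region can synchronize without stalling threads that belong to another region.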

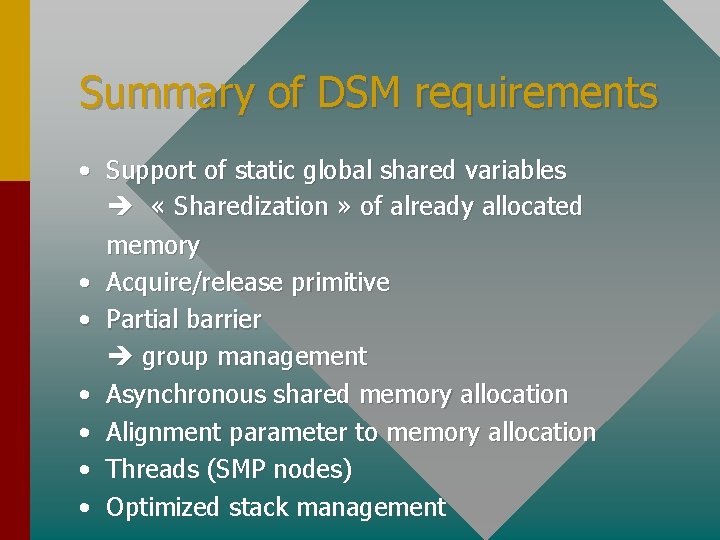

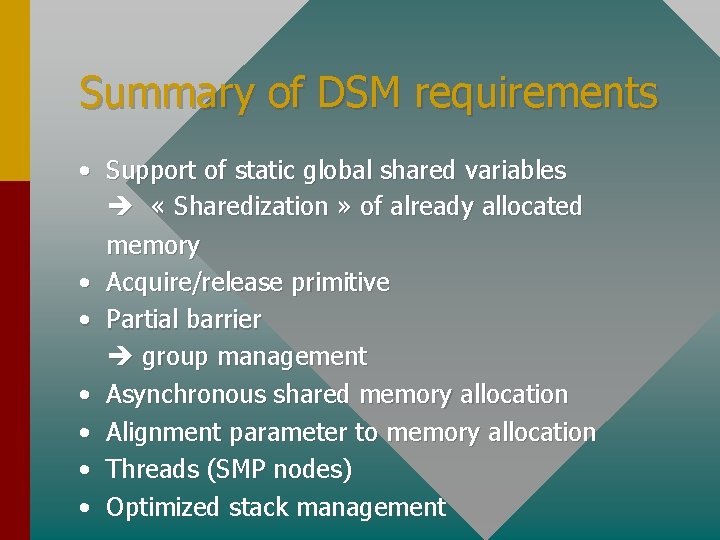

Summary of DSM requirements
• Support of static global shared variables
  – « Sharedization » of already allocated memory
• Acquire/release primitive
• Partial barrier
  – group management
• Asynchronous shared memory allocation
• Alignment parameter to memory allocation
• Threads (SMP nodes)
• Optimized stack management

Conclusion
• Nanos successfully ported to two DSMs: JIAJIA & DSM-PM2
• DSM requirements to obtain performance
  – Support MIMD model
  – Automatic thread migration
• Performance?
