Pop Quiz Define Fanin and Fanout Does it

- Slides: 64

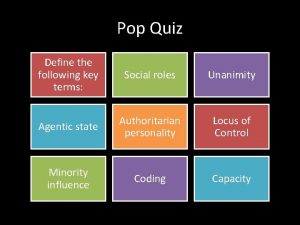

Pop Quiz • Define Fan-in and Fan-out • Does it matter if (what should be done about) code (which) has a high Fan-in and a high Fan-out content? • What is the goal of a Software Quality Management Model? • Why is the Rayleigh model good for quality management? 1

Software Quality Engineering CS 410 Class 11 Complexity Metrics and Models 2

Complexity Metrics and Models • Complexity Metrics and Models: • Provide clues to help focus quality improvement efforts by looking at the program-module level. • Focus on the internal dynamics of design and code • Studied by computer scientists and software engineers • In contrast, Reliability and Quality Management Models: • Use a less granular unit of analysis • Focus on the external behavior of the process (e.g., inspection defects) or the product (e.g., failure data) • Studied by researchers, reliability experts, and project managers 3

Lines of Code (LOC) • A representation of program size • Can be measured in different ways (chap. 4) – HLL Source Statements – Assembler Statements – Executable lines plus data definitions 4

Lines of Code (LOC) • General assumption: – The more lines of code, the more defects are expected, and a higher defect density (defects per KLOC) is expected • Research has shown that the general assumption may not be true in all cases. – An optimum code size may exist at which expected defect rates would be contained within an acceptable upper limit. – Such an optimum may depend on language, project, product, and environment 5

Lines of Code (LOC) • A curvilinear (pp. 76-77) relationship more accurately describes the relationship between code size and defect density: • Example: Fig. 10.1, p. 255 • Module size affects defect density: – Small modules require more external interfaces – Large modules become very complex – Key is to use empirical data, historical data, and comparative data to establish a guideline for module size 6

Halstead’s Software Science • Halstead (1977) stated that Software Science is different from Computer Science and that Software Science consists of programming tasks. • A programming task is the act of selecting and arranging a finite number of program ‘tokens’, which are basic syntactic units distinguishable by a compiler. 7

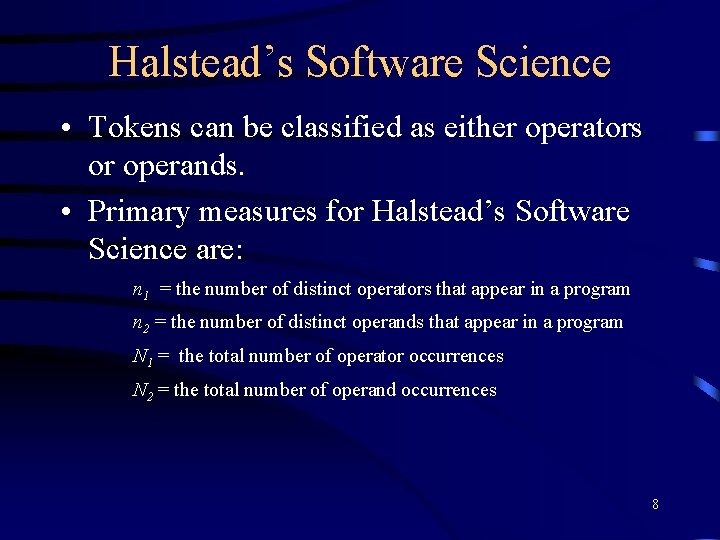

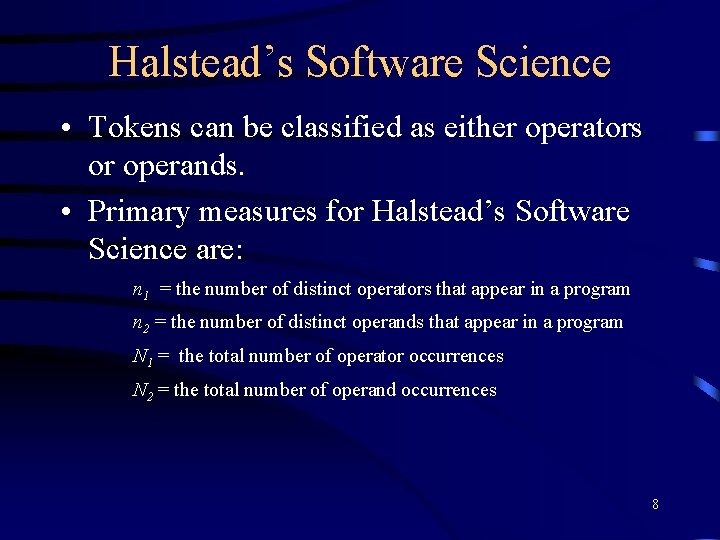

Halstead’s Software Science • Tokens can be classified as either operators or operands. • Primary measures for Halstead’s Software Science are: – $n_1$ = the number of distinct operators that appear in a program – $n_2$ = the number of distinct operands that appear in a program – $N_1$ = the total number of operator occurrences – $N_2$ = the total number of operand occurrences 8

Halstead’s Software Science • Based on the four primitives, a system of equations describing the program can be applied: – Total Vocabulary: $n = n_1 + n_2$ – Overall Program Length: $N = N_1 + N_2$ – Volume (in bits) of the implementation: $V = N \log_2(n_1 + n_2)$ 9

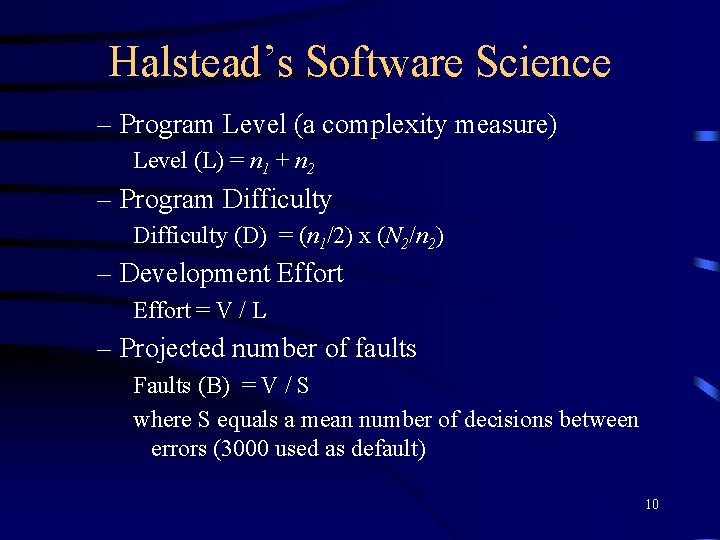

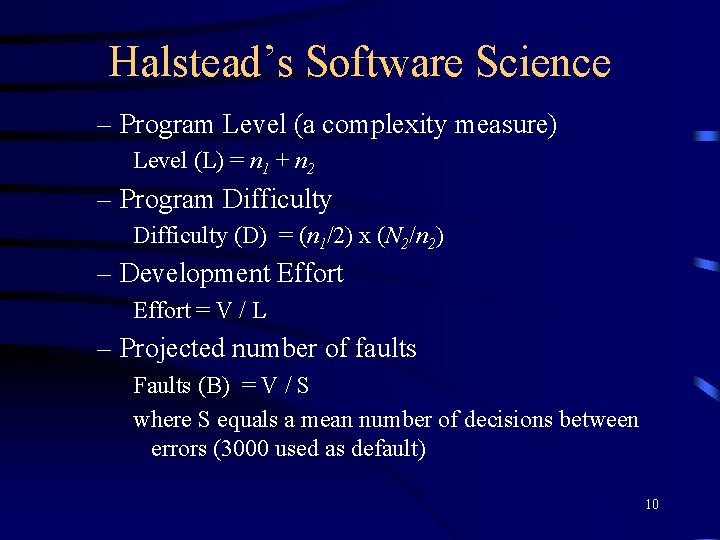

Halstead’s Software Science – Program Level (a complexity measure): $L = V^*/V$, where $V^*$ is the potential minimum volume of the algorithm – Program Difficulty (the inverse of level): $D = V/V^*$, estimated as $(n_1/2) \times (N_2/n_2)$ – Development Effort: $E = V/L$ – Projected number of faults: $B = V/S$, where $S$ is the mean number of decisions between errors (3000 used as default) 10
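As a rough illustration of how the four primitive counts feed the equations above, the following Python sketch computes the measures for a made-up module. The function name `halstead_measures` and the sample counts are hypothetical, and the level is estimated here as the inverse of the difficulty, which is an assumption rather than something the slides state.

```python
import math

def halstead_measures(n1, n2, N1, N2, s=3000):
    """Compute Halstead's measures from the four primitive counts.

    n1, n2: distinct operators / operands
    N1, N2: total operator / operand occurrences
    s:      mean number of decisions between errors (3000 by default)
    """
    vocabulary = n1 + n2
    length = N1 + N2
    volume = length * math.log2(vocabulary)
    difficulty = (n1 / 2) * (N2 / n2)
    level = 1 / difficulty          # assumption: level estimated as 1 / D
    effort = volume / level
    faults = volume / s
    return {
        "vocabulary": vocabulary,
        "length": length,
        "volume": volume,
        "difficulty": difficulty,
        "level": level,
        "effort": effort,
        "faults": faults,
    }

# Hypothetical counts for a small module.
measures = halstead_measures(n1=10, n2=6, N1=30, N2=18)
for name, value in measures.items():
    print(f"{name}: {value:.3f}")
```

Because every measure derives from the same four counts, the values are mainly useful for comparing modules with one another rather than as absolute predictions.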

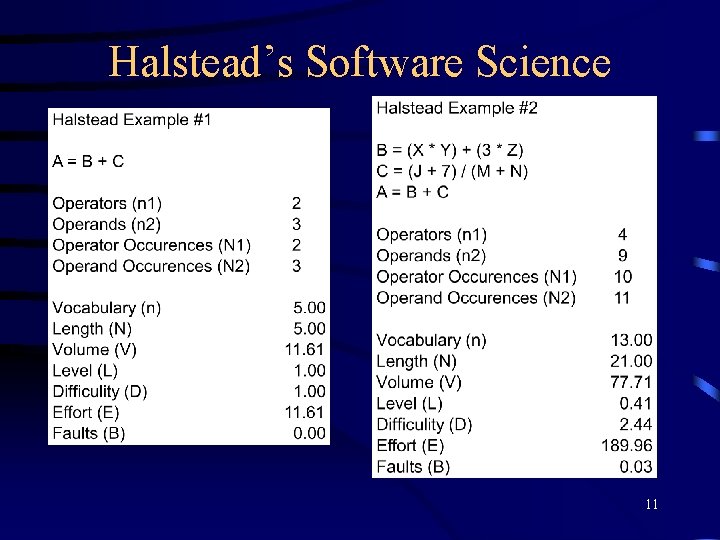

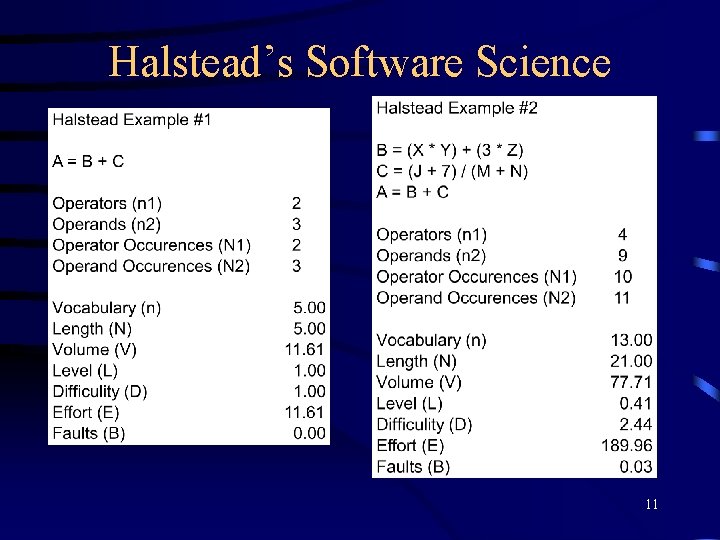

Halstead’s Software Science 11

Halstead’s Software Science • Problems with Halstead’s Software Science: • Faults equation is oversimplified. It simply states that the number of faults in a program is a function of its volume. • Equations do not provide relevant information, only good for comparison • Data for predictions must be available to perform equations, which means code must already be written. 12

Cyclomatic Complexity • McCabe (1976) designed a measure to indicate a program’s testability and understandability (maintainability). • A.k.a. McCabe’s Complexity Index or CPX • Based on the graph-theory concept of the cyclomatic number of regions in a (flow) graph • Represents the number of linearly independent paths through a program • Gives an upper bound on the number of test cases that would be required for path testing 13

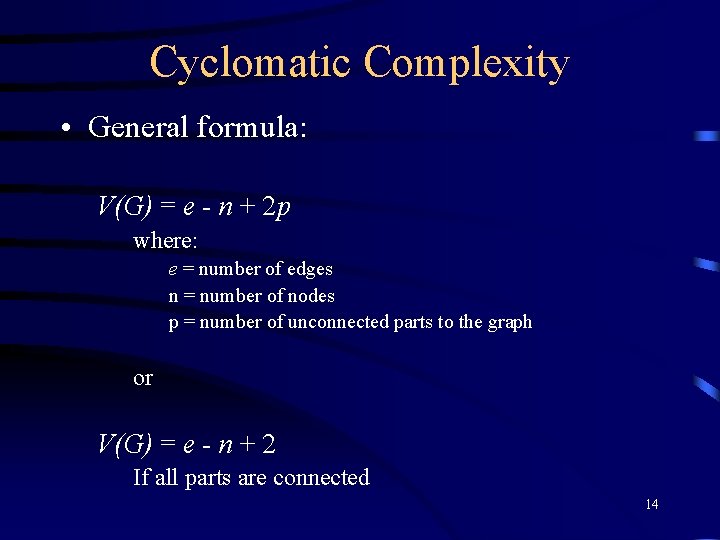

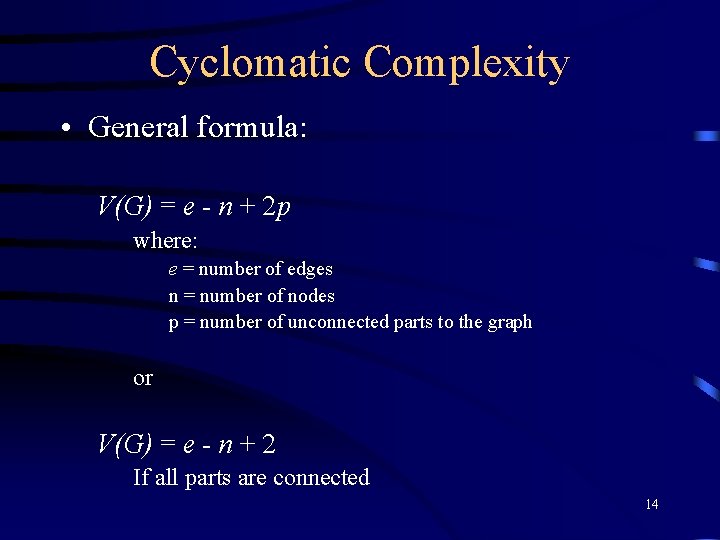

Cyclomatic Complexity • General formula: $V(G) = e - n + 2p$, where: – $e$ = number of edges – $n$ = number of nodes – $p$ = number of unconnected parts of the graph • or $V(G) = e - n + 2$ if all parts are connected 14

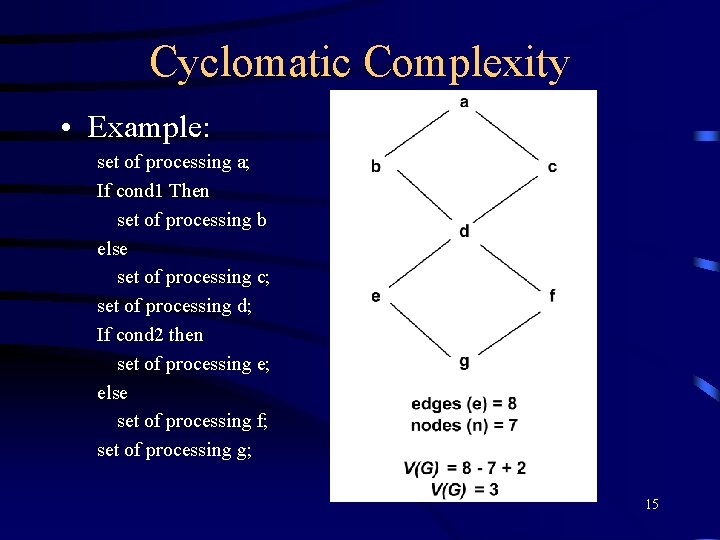

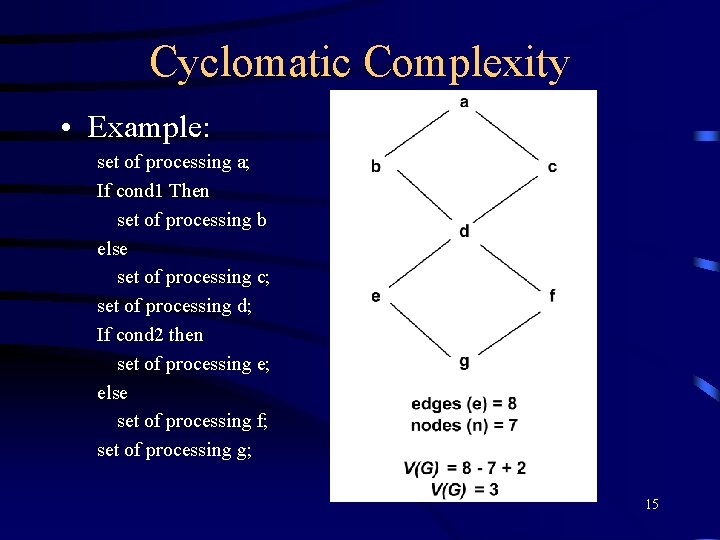

Cyclomatic Complexity • Example: set of processing a; if cond1 then set of processing b; else set of processing c; set of processing d; if cond2 then set of processing e; else set of processing f; set of processing g; 15
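To check the general formula against the example above, here is a minimal Python sketch. It assumes each set of processing (a through g) is one node of the flow graph, with the two conditions evaluated at the end of blocks a and d; the function name `cyclomatic_complexity` and the edge list are illustrative choices, not taken from the slides.

```python
def cyclomatic_complexity(edges, parts=1):
    """V(G) = e - n + 2p for a flow graph given as (source, target) edges."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * parts

# Flow graph of the example: a branches to b or c, both rejoin at d,
# d branches to e or f, both rejoin at g.
example_edges = [
    ("a", "b"), ("a", "c"),
    ("b", "d"), ("c", "d"),
    ("d", "e"), ("d", "f"),
    ("e", "g"), ("f", "g"),
]

v = cyclomatic_complexity(example_edges)
print(v)  # 8 edges - 7 nodes + 2 = 3
```

The result agrees with counting decisions: two binary decisions plus one gives 3.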

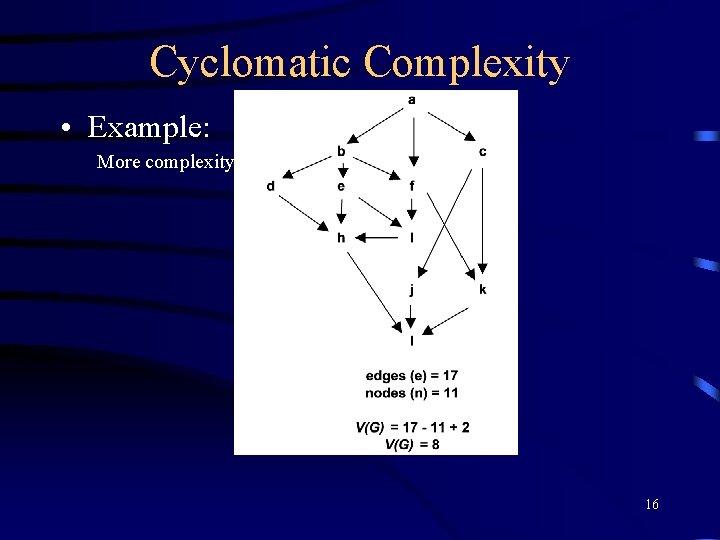

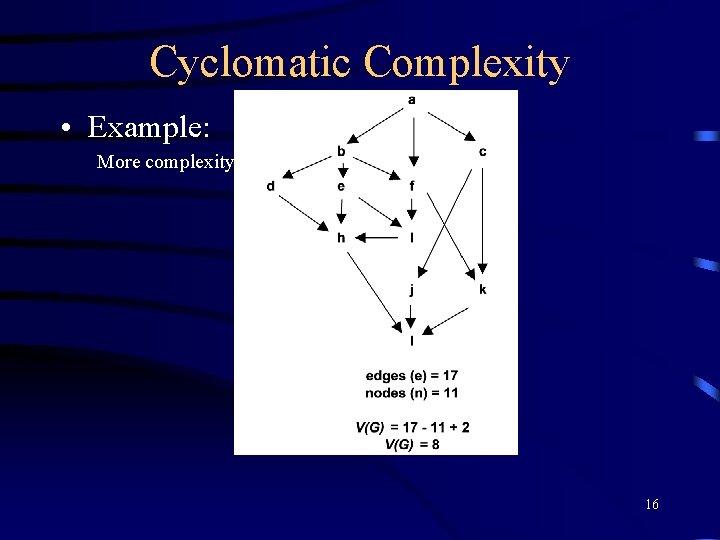

Cyclomatic Complexity • Example: More complexity 16

Cyclomatic Complexity • Cyclomatic Complexity can also be computed from the decisions in a program: – $n$ binary decisions: $V(G) = n + 1$ – three-way decision: counts as 2 binary decisions – $n$-way decision: counts as $n - 1$ binary decisions – loops: count as 1 binary decision • Note: does not distinguish between different types of control flow (e.g., loops vs. IF-THEN-ELSE) • Cyclomatic complexities are additive – The Cyclomatic Complexity of one large graph is the sum of the individual graphs’ complexities. 17

Cyclomatic Complexity • McCabe’s recommendation: – To achieve good testability and maintainability, no program module should have a Cyclomatic Complexity greater than 10. • Cyclomatic Complexity correlates strongly with program size (LOC). • There also tends to be a positive correlation between Cyclomatic Complexity and defects. • Cyclomatic Complexity appeals to many software developers because it is tied to decisions and branches (logic). 18

Syntactic Constructs • Studies that look at syntactic makeup of a program. – Shen (1985) • Found a correlation between the number of unique operands and the presence of defects – Lo (1992) • Found correlation between field defects in modules, and LOC, IF-THEN-ELSE’s, DO-WHILE’s, unique operands, and number of calls • DO-WHILE turned out to be the greatest factor, and a positive correlation between DO-WHILE use and defects was discovered (programmers needed training) 19

Structure Metrics • The previous metrics all assume that each module is an independent entity. • Structure metrics take into account that (significant) interactions between modules exist. • Yourdon/Constantine (1979) & Myers (1978) both proposed fan-in and fan-out metrics based on the idea of coupling. 20

Structure Metrics • Fan-out = number of modules called by a given module • Fan-in = number of modules that call a given module • Module Coupling = “connectedness” • Other Factors: – Inputs, Outputs, and Global Variables • Low Module Coupling = relatively low number of inputs, outputs, and calls • High Coupling = relatively high number of inputs, outputs, and calls 21
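The two counts can be derived mechanically from a call graph. The sketch below assumes a dictionary mapping each module to the modules it calls; the module names and the function `fan_metrics` are hypothetical.

```python
def fan_metrics(calls):
    """Return {module: (fan_in, fan_out)} from a {caller: [callees]} mapping."""
    fan_out = {module: len(set(callees)) for module, callees in calls.items()}
    fan_in = {}
    for caller, callees in calls.items():
        for callee in set(callees):
            fan_in[callee] = fan_in.get(callee, 0) + 1
    modules = set(calls) | set(fan_in)
    return {m: (fan_in.get(m, 0), fan_out.get(m, 0)) for m in modules}

# Hypothetical call graph: "log" is a small utility called from everywhere.
calls = {
    "main": ["parse", "report"],
    "parse": ["log"],
    "report": ["log", "format"],
    "format": ["log"],
}

metrics = fan_metrics(calls)
for module in sorted(metrics):
    fan_in, fan_out = metrics[module]
    print(f"{module}: fan-in={fan_in}, fan-out={fan_out}")
```

In this toy graph the low-level utility `log` ends up with the highest fan-in and zero fan-out.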

Structure Metrics • Small/Simple modules are expected to have high fan-in. These modules are usually located at the lowest levels of the system structure. • Large/Complex modules are expected to have low fan-in. • Modules should generally not have both high fan-in and high fan-out. If so - then module is good candidate for redesign (functional decomposition). 22

Structure Metrics • Modules with high fan-in are expected to have low defect levels. – Fan-in is expected to have negative or insignificant correlation with defects • Modules with high fan-out are expected to have higher defect levels. – Fan-out is expected to have positive correlation with defects 23

Structure Metrics • Card and Glass (1990) System Complexity Model: • System Complexity is equal to the sum of structural complexity plus data complexity – where: • structural complexity is equal to mean of squared values of fan-out (fan-in is insignificant) • data complexity is average I/O variables 24
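A small Python sketch can make the model concrete. It assumes the commonly cited form of the Card and Glass model, in which a module's data complexity is its number of I/O variables divided by its fan-out plus one; the slide only says "average I/O variables", so that divisor is an assumption here, and the function name and sample data are hypothetical.

```python
def system_complexity(modules):
    """modules: list of (fan_out, io_variables) pairs, one per module.

    Returns (structural, data, total) complexity.
    """
    n = len(modules)
    # Structural complexity: mean of squared fan-out values.
    structural = sum(fan_out ** 2 for fan_out, _ in modules) / n
    # Data complexity (assumed form): mean of io_variables / (fan_out + 1).
    data = sum(io_vars / (fan_out + 1) for fan_out, io_vars in modules) / n
    return structural, data, structural + data

# Hypothetical system of three modules.
structural, data, total = system_complexity([(3, 8), (1, 4), (0, 2)])
print(f"structural={structural:.2f}, data={data:.2f}, total={total:.2f}")
```

Squaring the fan-out means a single module with many outgoing calls dominates the structural term.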

Complexity Metrics and Models Criteria for Evaluation • Explanatory Power - the ability of the metrics/models to explain the interrelationships among complexity, quality, and other programming and design parameters. • Applicability - the degree to which the metrics/models can be applied by software engineers to improve the quality of design, code, and test. 25

Complexity Summary • Complexity metrics and models describe the complexity characteristics of software components (modules). • Software complexity traditionally has a positive correlation to defects. • A key to achieving good quality software is to reduce the complexity of software designs and implementations. 26

Pop Quiz • What are metrics and models for? • List some advantages of Halstead’s Software Science (you may want some disadvantages, too…) • What is the Back End? • So what’s the Front End? • How good is “good enough”? 27

Software Quality Engineering CS 410 Class 11 b Measuring and Analyzing Customer Satisfaction 28

Customer Satisfaction • Customer satisfaction is the ultimate validation of quality! • Product quality and customer satisfaction together form the total meaning of quality. • TQM links customer satisfaction to product quality, and focuses on long-term business success. • Customer Focus - achieve high Customer Satisfaction • Process - reduce process variation and achieve continuous process improvement • Human side of Quality - quality culture • Measurement and Analysis - goal-oriented measurement 29

Customer Satisfaction • Customer satisfaction is important because: – Enhancing customer satisfaction is the bottom line of business success – Retaining existing customers is becoming tougher with ever-increasing market competition – Studies show that it is five times more costly to recruit a new customer than it is to retain an old customer – Dissatisfied customers tell 17 to 20 people about their (negative) experiences – Satisfied customers tell 3 to 5 people about their (positive) experiences 30

Customer Satisfaction Surveys – Three types of survey: • Face-to-Face • Telephone Interviews • Mailed Questionnaires – Face-to-face interviews • interviewer asks questions from pre-structured questionnaire and records the answers • advantage - high degree of validity of the data because the interviewer can note specific reactions and eliminate misunderstandings about questions being asked • disadvantages - cost, interviewer bias, recording errors, training 31

Customer Satisfaction Surveys – Telephone Interviews • Similar to face-to-face interviews • interviewer asks questions from pre-structured questionnaire and records the answers over the phone • advantage - interviews can be monitored to ensure consistency and accuracy • advantage - less expensive than face-to-face • advantage - can be automated (computer-aided) • disadvantage - lack of direct observations, and lack of contacts (due to not scheduling a meeting) 32

Customer Satisfaction Surveys – Mailed Questionnaire • Does not require interviewers and is therefore less expensive • disadvantage - lower response rates, and samples may not be representative of the population (skewed results - only the people who really have something to say may respond) • Questionnaire must be carefully constructed, validated and pre-tested before being used. 33

Customer Satisfaction Surveys • Comparison of three survey methods: 34

Customer Satisfaction Surveys • Sampling methods: – When the customer base is very large it is not possible (or feasible) to survey every customer – Customer satisfaction must be estimated based on a subset of the population – Four types of probability sampling: • Simple random sampling • Systematic sampling • Stratified sampling • Cluster sampling 35

Customer Satisfaction Surveys – Simple random sampling • Every sample of size n has the same chance of being selected from the population • Method – Each individual is listed once (and only once) – Some mechanical process (e.g., a random number generator) is used to draw the sample – On each draw the already selected individuals are removed from the list – Probabilities of being selected are always equal for each of the remaining individuals 36
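The method above maps directly onto sampling without replacement. A minimal sketch using Python's standard `random` module follows; the customer list and the function name `simple_random_sample` are hypothetical.

```python
import random

def simple_random_sample(population, n, seed=None):
    """Draw n distinct individuals; every subset of size n is equally likely."""
    rng = random.Random(seed)
    # random.sample draws without replacement, so no individual repeats.
    return rng.sample(population, n)

# Hypothetical customer list with IDs 1..100, each listed exactly once.
customers = list(range(1, 101))
chosen = simple_random_sample(customers, 10, seed=42)
print(chosen)
```

Passing a seed is only for reproducibility in this sketch; a real survey draw would normally leave it unset.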

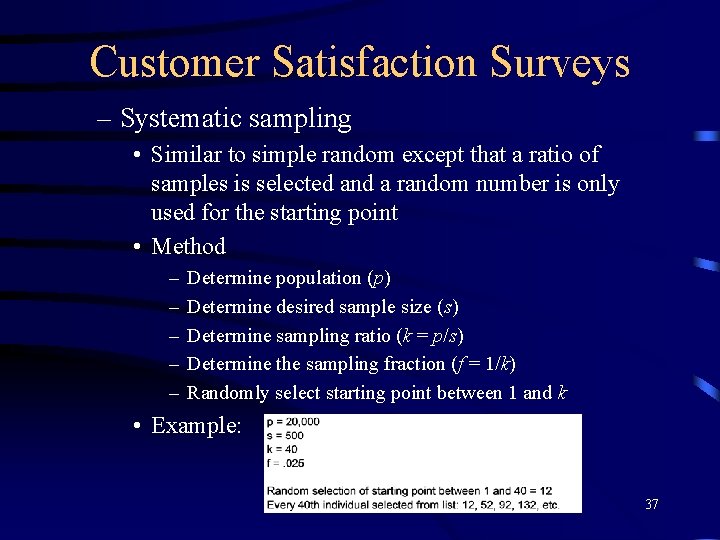

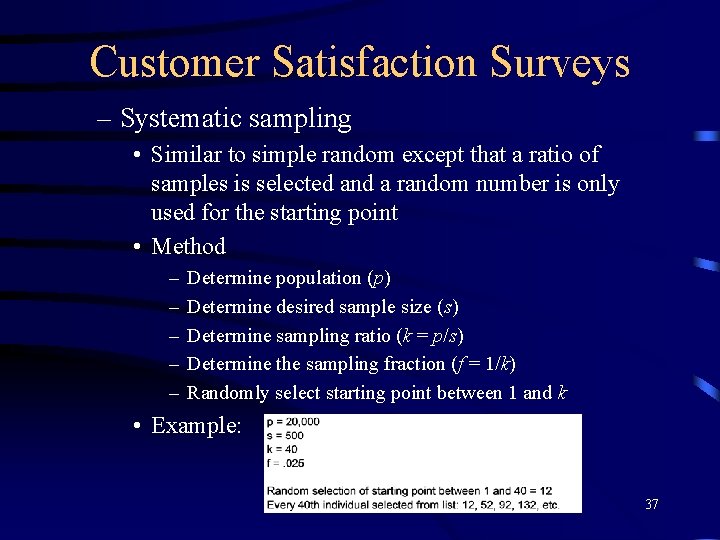

Customer Satisfaction Surveys – Systematic sampling • Similar to simple random sampling, except that individuals are selected at a fixed interval and a random number is used only for the starting point • Method – Determine population (p) – Determine desired sample size (s) – Determine sampling ratio (k = p/s) – Determine the sampling fraction (f = 1/k) – Randomly select starting point between 1 and k, then select every kth individual from that point • Example: 37
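One way to sketch the method in Python, assuming the population is an ordered list and that k is rounded down when p is not an exact multiple of s (the slide does not say how to handle that case). The function name and the customer list are hypothetical.

```python
import random

def systematic_sample(population, sample_size, seed=None):
    """Pick a random start in 1..k, then take every k-th individual."""
    k = len(population) // sample_size      # sampling ratio k = p / s
    rng = random.Random(seed)
    start = rng.randint(1, k)               # 1-based starting position
    positions = range(start, start + k * sample_size, k)
    return [population[pos - 1] for pos in positions]

# Hypothetical customer list with IDs 1..100; s = 10 gives k = 10.
customers = list(range(1, 101))
picked = systematic_sample(customers, 10, seed=7)
print(picked)
```

If the list is ordered in a cycle that lines up with k, every draw lands on the same kind of customer and the sample becomes biased.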

Customer Satisfaction Surveys – Stratified sampling • Individuals are classified into nonoverlapping groups called strata, and then simple random samples are selected from each stratum. • Strata selection is usually based on some aspects of the customer environment. – Cluster Sampling • Individuals are grouped into many clusters (groups) based on some characteristic, and then a cluster is selected and sampled. 38
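Stratified sampling can be sketched as a simple random sample drawn inside each stratum. The example below assumes proportional allocation (the same fraction from every stratum, with at least one individual each), which is one common choice and not something the slides prescribe; the strata names and sizes are hypothetical.

```python
import random

def stratified_sample(strata, fraction, seed=None):
    """Draw a simple random sample of the given fraction from each stratum."""
    rng = random.Random(seed)
    sample = {}
    for name, members in strata.items():
        size = max(1, round(len(members) * fraction))
        sample[name] = rng.sample(members, size)
    return sample

# Hypothetical strata based on customer company size.
strata = {
    "small": list(range(0, 60)),
    "medium": list(range(60, 90)),
    "large": list(range(90, 100)),
}
by_stratum = stratified_sample(strata, fraction=0.1, seed=1)
for name, members in by_stratum.items():
    print(name, members)
```

Every stratum is guaranteed representation, which is what a plain simple random sample cannot promise for small groups.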

Customer Satisfaction Surveys – Sampling considerations: • Simple random sampling is generally the least expensive sampling method. • Systematic sampling can be biased if: – The list is ordered – Cyclical characteristics conflict with k • Stratified sampling is usually more efficient, and yields greater accuracy, than simple random sampling (individuals in each stratum are represented). • Cluster sampling is generally less efficient, but also less expensive, than stratified sampling. 39

Customer Satisfaction Surveys • Sample size: – How large should the sample be? – Dependent on: • Confidence level desired • Margin of error that can be tolerated – Larger sample sizes give higher confidence levels and lower margins of error. – Power of a sample is dependent on its absolute size rather than on the percentage of the population it covers. 40
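The last point can be illustrated with the standard sample-size formula for estimating a proportion with a finite population correction. This formula is an assumption brought in for illustration, not something stated on the slide; the defaults below (95% confidence via z = 1.96, worst-case p = 0.5, 5% margin of error) are likewise illustrative.

```python
import math

def sample_size(population, margin=0.05, z=1.96, p=0.5):
    """Sample size for estimating a proportion in a finite population.

    n = N * z^2 * p(1-p) / (N * margin^2 + z^2 * p(1-p)), rounded up.
    """
    variance_term = z ** 2 * p * (1 - p)
    n = population * variance_term / (population * margin ** 2 + variance_term)
    return math.ceil(n)

for customers in (1_000, 10_000, 1_000_000):
    print(customers, sample_size(customers))
```

Going from ten thousand to a million customers barely changes the required sample, which is why absolute sample size matters more than the sampled percentage.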

Customer Satisfaction Surveys • Analyzing Satisfaction Data: – A five-point Likert scale is the most commonly used measure. – Run charts can be used to show a graphical representation of survey results. • Note - margin of error can also be included. • Example - Fig. 11.3, p. 280 – Data can be viewed as: • percent satisfied: - where are we doing well • percent unsatisfied (neutral, dissatisfied, and very dissatisfied) - where do we need to focus 41
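A short sketch of turning five-point responses into the two views above. The margin of error uses the usual normal approximation for a proportion at 95% confidence, which is an assumption added here; the response counts and the function name are hypothetical.

```python
import math

def satisfaction_summary(counts, z=1.96):
    """Return (percent satisfied, percent unsatisfied, margin of error) as fractions."""
    total = sum(counts.values())
    satisfied = counts["very satisfied"] + counts["satisfied"]
    p = satisfied / total
    margin = z * math.sqrt(p * (1 - p) / total)   # normal approximation
    return p, 1 - p, margin

# Hypothetical survey results on a five-point scale.
responses = {
    "very satisfied": 120,
    "satisfied": 200,
    "neutral": 50,
    "dissatisfied": 20,
    "very dissatisfied": 10,
}
pct_sat, pct_unsat, moe = satisfaction_summary(responses)
print(f"satisfied {pct_sat:.1%} +/- {moe:.1%}, unsatisfied {pct_unsat:.1%}")
```

Note that neutral responses count toward the unsatisfied share, matching the grouping on the slide.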

Customer Satisfaction Surveys • Specific Attributes: – Measuring specific attributes of the software helps to provide data which will indicate areas of improvement. – The profile of customer satisfaction with those attributes indicates the areas of strength and weakness of the software product. – Notes: • Must be careful with correlating weakness with priority; it may not always be the desirable case. • More important is the correlation of each specific attribute to the overall satisfaction level. 42

Customer Satisfaction Surveys Specific Attributes Examples: • IBM - CUPRIMDSO – Capability – Usability – Performance – Reliability – Installability – Maintainability – Documentation – Service – Overall • Hewlett-Packard - FURPS – Functionality – Usability – Reliability – Performance – Serviceability 43

Customer Satisfaction Surveys • Overall Satisfaction – Each attribute has some correlation to the overall satisfaction rating. – Determining the correlation is the key to prioritizing improvement plans. – Methods for determining correlation (pp. 281-287): • Least squares multiple regression • Logistic regression 44

Customer Satisfaction Surveys • Method for determining priorities: 1. Determine the order of significance of each quality attribute on overall satisfaction by statistical modeling. 2. Plot the coefficient of each attribute from the model (Y-axis) against its satisfaction level (X-axis). 3. Use the plot to determine priority by reading top to bottom, then left to right for attributes with the same coefficient. Example: Fig. 11.4, p. 287 45
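Step 3 amounts to a two-key sort: largest coefficient first (top of the plot), then lowest satisfaction first (left of the plot) among ties. The sketch below assumes the coefficients have already been obtained from a regression model; all attribute values are made up for illustration.

```python
def prioritize(attributes):
    """Order attributes top to bottom (coefficient), then left to right (satisfaction)."""
    return sorted(attributes, key=lambda a: (-a["coefficient"], a["satisfaction"]))

# Hypothetical model coefficients and percent-satisfied levels.
attributes = [
    {"name": "performance", "coefficient": 0.10, "satisfaction": 90},
    {"name": "usability", "coefficient": 0.25, "satisfaction": 78},
    {"name": "reliability", "coefficient": 0.40, "satisfaction": 85},
    {"name": "documentation", "coefficient": 0.25, "satisfaction": 70},
]

priority_order = [a["name"] for a in prioritize(attributes)]
print(priority_order)
```

Here documentation outranks usability because, with equal coefficients, the attribute with lower satisfaction sits further left on the plot.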

Customer Satisfaction Surveys • Satisfaction with the company: – Adds a broader view to Customer Satisfaction – Overall satisfaction and loyalty are attributed to a set of common attributes of the company: • Ease of doing business • Partnership • Responsiveness • Knowledge of the customer’s business • Customer driven 46

Customer Satisfaction Surveys • Key dimensions of company satisfaction: – Technical solutions: quality, reliability, availability, ease of use, pricing, installation, new technology, etc. – Support and Service: flexible, accessible, product knowledge, etc. – Marketing: solution, central point of contact, information, etc. – Administration: purchasing procedure, billing procedure, warranty expiration notification, etc. 47

Customer Satisfaction Surveys • Key dimensions of company satisfaction: – Delivery: on time, accurate, post-delivery process, etc. – Company image: technology leader, financial stability, executives’ image, etc. • Perceived performance is as important as actual performance. • Company-level data is an important aspect of overall customer satisfaction. 48

Customer Satisfaction Surveys • How good is good enough? • Is it worth it to spend $x to gain y amount of satisfaction? • The key to this question lies in the relationship between customer satisfaction and market share. • Basic assumption - Satisfied customers continue to purchase products from the same company and dissatisfied customers will purchase from other companies. 49

Customer Satisfaction Surveys • As long as market competition exists, customer satisfaction will be the key to customer loyalty. • Even in a monopoly, customer dissatisfaction will encourage the development and emergence of competition. • Answer to “How good is good enough?”: Better than the competition. 50

Customer Satisfaction Surveys • Customer Satisfaction Management Process should cover: 1. Measure and monitor overall CS of own company and competition over time. 2. Analyze specific satisfaction dimensions, attributes, strengths, weaknesses, and priorities. 3. Perform root cause analysis to identify inhibitors of each dimension and attribute. 4. Set satisfaction targets (overall and specific), taking competition into consideration. 5. Formulate and implement action plans based on results of 1-4. 51

Customer Satisfaction Summary – Customer Satisfaction at both the product level and company level must be analyzed and managed. – Company data is valuable for a comprehensive approach to Total Quality Management (TQM) – Product data is valuable for identifying specific areas for product improvements – Challenge - knowing why customers chose a competitor. CS surveys only target customers who have chosen your company. May need independent consulting data for this. 52

Pop Quiz • Name several kinds of Customer Satisfaction survey 53

Software Quality Engineering CS 410 Class 11 c AS/400 Software Quality Management 54

AS/400 • What is it? – Application System/400 (AS/400) – A midrange-based multi-user, multiprogramming operating system from IBM Rochester, MN • Who uses it? – Small, medium, and large Information Technology (IT) companies, as well as universities and research organizations. – Customer base is estimated at 250,000+ 55

AS/400 • How big is it? – Initial release (August 1988): 7.1 million lines of code (7,100 KLOC) – Approx. 10 new releases since GA (general availability) – Typical release has 2 million lines of new/changed code – Estimated size of current product: 21.1 million lines of code (21,100 KLOC), assuming a 70/30 split of new/changed code per release and one release per year since GA 56
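The estimate can be reproduced with a few lines of arithmetic. The sketch assumes the 70/30 split means that 70% of each release's 2 million new/changed lines are new (and therefore grow the product), while the remaining 30% modify existing code; the function name is hypothetical.

```python
def estimated_size_mloc(initial_mloc, releases, changed_per_release_mloc, new_fraction):
    """Initial size plus the new-code share of each release, in millions of LOC."""
    return initial_mloc + releases * changed_per_release_mloc * new_fraction

size = estimated_size_mloc(
    initial_mloc=7.1,
    releases=10,
    changed_per_release_mloc=2.0,
    new_fraction=0.70,
)
print(f"{size:.1f} million LOC")
```

That is 7.1 million initial lines plus 10 releases of 1.4 million new lines each.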

AS/400 • Track Record – Market Driven Quality (MDQ - a system based on the TQM management style and corporate culture) implemented in 1990 – Benchmark studies against Motorola, IBM Houston (NASA Shuttle software), and other recognized leaders - 1990 – Received Malcolm Baldrige National Quality Award - 1990 – ISO 9000 Certification - 1992 57

AS/400 • Key AS/400 quality strategy: – Defect Prevention Process (DPP) – Focus on Design Review/Code Inspection (DR/CI) – Component test improvements – Departmental 5-UP measurements (key metrics) • Customer Satisfaction • Post-release defect rate • Customer problem calls • Fix response time • Number of defective fixes – Quality Recognition – Management commitment – Quality culture 58

AS/400 • AS/400 Software Quality Management System (SQMS) – Goal - to reduce product defects and increase customer satisfaction – Five key elements: • People • In-process quality management • Continuous process improvement • Post-GA product quality management • Customer satisfaction management 59

AS/400 • People – Most important element of SQMS – Must be highly motivated to meet quality goals – Must be talented (and trained) to execute and improve the development process • In-process product quality management – Measure the results of various process steps – Determine if the product is on target for achieving the product quality goals – Help define action plans for quality improvements 60

AS/400 • Continuous process improvement – Provides a foundation for improving process – Triggered by customer reported defects, and DPP – Supported by tools and technology that promote process automation, increase defect discovery, or reduce defect injection • Post-GA product quality management – Addresses customer problems (both defect oriented and non defect oriented) 61

AS/400 • Post-GA product quality mgmt (cont.) – Goals • Fix problems quickly • Fix problems with high quality • Learn from errors that were made • Gather valuable metrics • Feed next release requirements phase • Suggest process improvements 62

AS/400 • Customer satisfaction management – Understand overall customer satisfaction – Analyze customer satisfaction in regard to different parameters of the product – Define product requirements – Help prioritize problems based on customer satisfaction – Monitor critical situations and help to minimize impacts to customer’s business 63

AS/400 • Summary – “There are no silver bullets” – Many quality improvement techniques and solutions exist, but the key is putting them into practice systematically and persistently. – Lack of functional defects is the most basic measure of quality; however, Customer Satisfaction represents the final evaluation of the product. 64

What is fanout in vlsi

What is fanout in vlsi Marina fanin

Marina fanin What does the pop in pop art stand for

What does the pop in pop art stand for Fanout

Fanout Fanout

Fanout Cuprimdso

Cuprimdso 2% of 7 billion

2% of 7 billion Holes chapter 1-5 quiz

Holes chapter 1-5 quiz Poetic pop quiz

Poetic pop quiz Poetic pop quiz

Poetic pop quiz Brain pop scientific method

Brain pop scientific method Pop quiz definition

Pop quiz definition Maths pop quiz

Maths pop quiz Pop quiz games

Pop quiz games Swot analysis of davao region

Swot analysis of davao region Reasons of the seasons

Reasons of the seasons Deductive reasoning math examples

Deductive reasoning math examples Deductive vs inductive geometry

Deductive vs inductive geometry John kotter definition of leadership

John kotter definition of leadership Rivet snap head

Rivet snap head Media and popular culture

Media and popular culture Push and pop in microprocessor

Push and pop in microprocessor Pop music conventions

Pop music conventions Pop culture and body image

Pop culture and body image He built a small house called a cocoon around himself

He built a small house called a cocoon around himself Al capone does my shirts comprehension questions

Al capone does my shirts comprehension questions Who said the words go! kiss the world?

Who said the words go! kiss the world? Poetry wordsworth definition

Poetry wordsworth definition Non semantic elements

Non semantic elements Character traits of a tragic hero

Character traits of a tragic hero How does emerson define nonconformity

How does emerson define nonconformity What does peter drost define genocide as?

What does peter drost define genocide as? World war 2 brain pop

World war 2 brain pop Workshop pop art

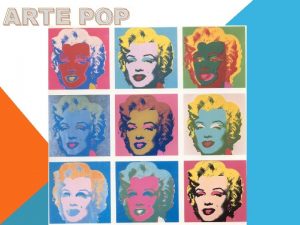

Workshop pop art Unterschied rock und pop

Unterschied rock und pop Forms of pop culture

Forms of pop culture Pumpion shakespeare definition

Pumpion shakespeare definition Pop music facts

Pop music facts Best italian musicians

Best italian musicians Alexander hamilton bottle buddy

Alexander hamilton bottle buddy Modern thai music

Modern thai music Montale guerra fredda

Montale guerra fredda What is alliteration in song

What is alliteration in song Soda pop head

Soda pop head How to write banter

How to write banter Illustrator pop art dots

Illustrator pop art dots Are skinny pop bags recyclable

Are skinny pop bags recyclable Pop-q sınıflaması

Pop-q sınıflaması Centro pop blumenau

Centro pop blumenau Calculation of birth rate

Calculation of birth rate 70 luvun naisartistit

70 luvun naisartistit Parallel slide mechanisms pop up

Parallel slide mechanisms pop up Pop muzika

Pop muzika Contemporary music genre

Contemporary music genre Pop project management

Pop project management 1930 pop culture

1930 pop culture Pop culture activities

Pop culture activities Pop culture definition

Pop culture definition Pop art landscape

Pop art landscape Pop art landscape

Pop art landscape Modern pop art sculpture

Modern pop art sculpture Pop art characteristics

Pop art characteristics Pop art 1950 60

Pop art 1950 60 Que rechaza el expresionismo

Que rechaza el expresionismo Pop art 1950-1960

Pop art 1950-1960