
Polar Opposites: Next Generation Languages & Architectures
Kathryn S. McKinley
The University of Texas at Austin

Collaborators
• Faculty
  – Steve Blackburn, Doug Burger, Perry Cheng, Steve Keckler, Eliot Moss
• Graduate Students
  – Xianglong Huang, Sundeep Kushwaha, Aaron Smith, Zhenlin Wang (MTU)
• Research Staff
  – Jim Burrill, Sam Guyer, Bill Yoder

Computing in the Twenty-First Century
• New and changing architectures
  – Hitting the microprocessor wall
  – TRIPS - an architecture for future technology
• Object-oriented languages
  – Java and C# becoming mainstream
• Key challenges and approaches
  – Memory gap, parallelism
  – Language & runtime implementation efficiency
Orchestrating a new software/hardware dance
Break down artificial system boundaries

Technology Scaling Hitting the Wall
[Figure: analytic and qualitative views of a chip with a 20 mm edge at 130 nm, 100 nm, 70 nm, and 35 nm]
Either way … partitioning for on-chip communication is key

End of the Road for Out-of-Order Superscalars
• Clock ride is over
  – Wire and pipeline limits
  – Quadratic out-of-order issue logic
  – Power, a first-order constraint
• Major vendors ending processor lines
• Problems for any architectural solution
  – ILP - instruction level parallelism
  – Memory latency

Where are Programming Languages?
• High Productivity Languages
  – Java, C#, Matlab, S, Python, Perl
• High Performance Languages
  – C/C++, Fortran
• Why not both in one?
  – Interpretation/JIT vs compilation
  – Language representation
    • Pointers, arrays, frequent method calls, etc.
  – Automatic memory management costs
⇒ Obscure ILP and memory behavior

Outline
• TRIPS
  – Next generation tiled EDGE architecture
  – ILP compilation model
• Memory system performance
  – Garbage collection influence
  – The GC advantage
    • Locality, locality
    • Online adaptive copying
  – Cooperative software/hardware caching

TRIPS
• Project Goals
  – Fast clock & high ILP in future technologies
  – Architecture sustains 1 TRIPS in 35 nm technology
  – Cost-performance scalability
  – Find the right hardware/software balance
    • New balance reduces hardware complexity & power
  – New compiler responsibilities & challenges
• Hardware/Software Prototype
  – Proof-of-concept of scalability and configurability
  – Technology transfer

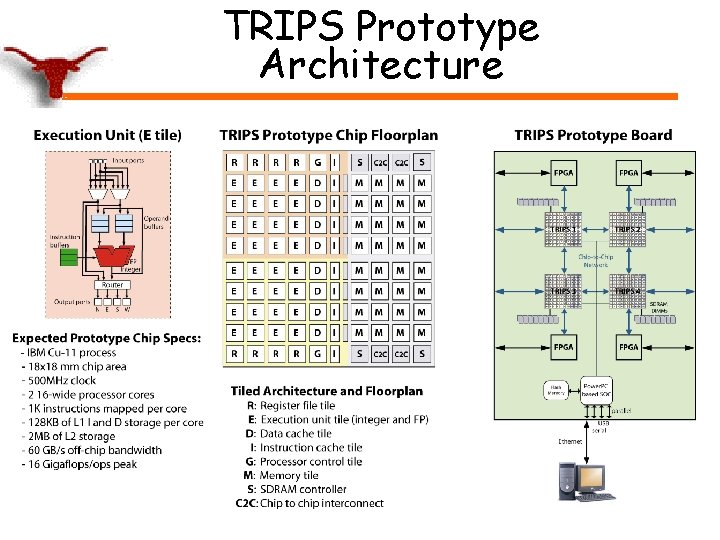

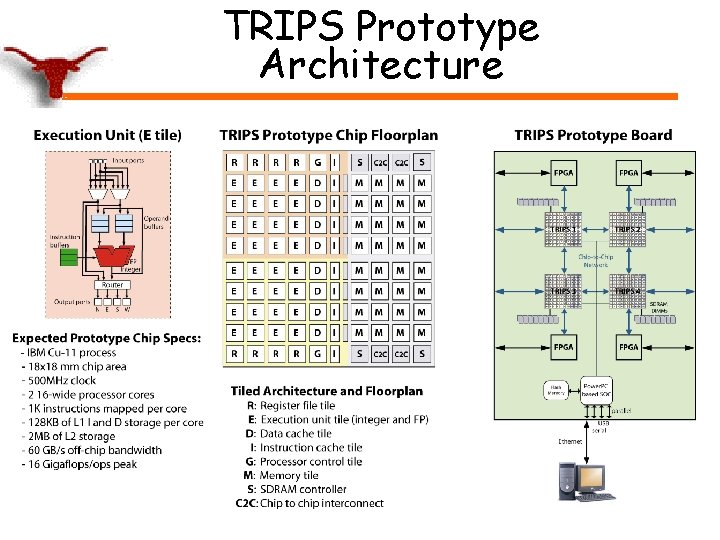

TRIPS Prototype Architecture

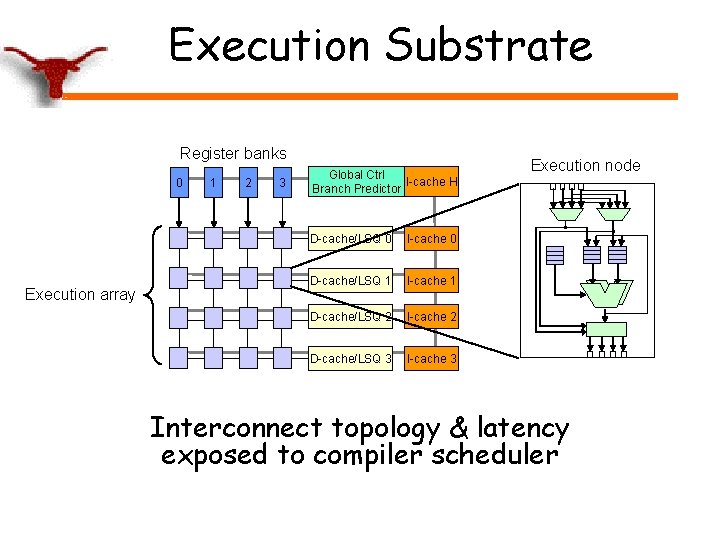

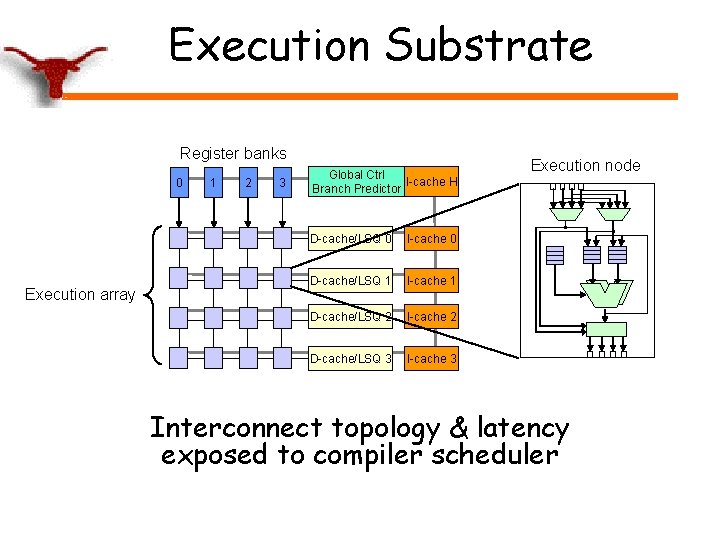

Execution Substrate
[Figure: execution array of execution nodes, with register banks 0-3 along the top, global control, branch predictor, and I-cache H, I-cache 0-3 and D-cache/LSQ 0-3 banks along the side]
Interconnect topology & latency exposed to compiler scheduler

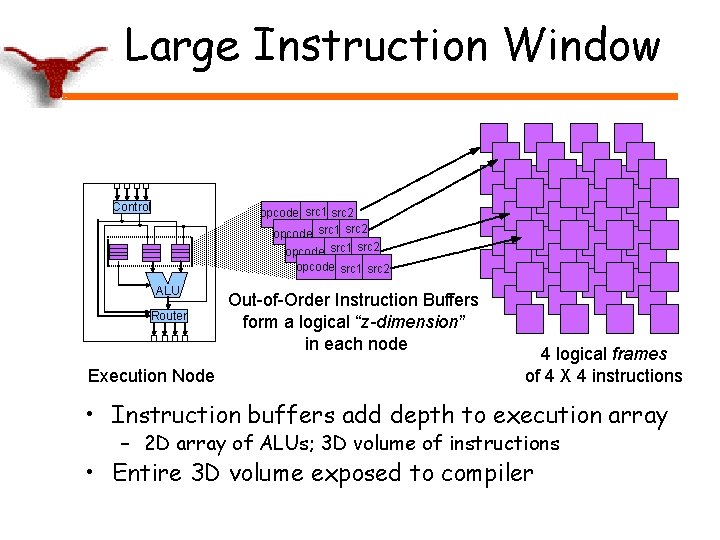

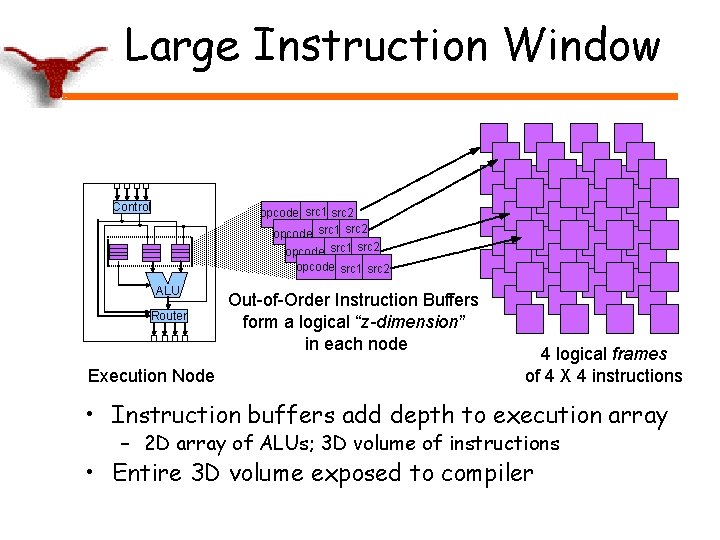

Large Instruction Window
[Figure: execution node containing a router, an ALU, control, and out-of-order instruction buffers with opcode, src 1, and src 2 fields]
Instruction buffers form a logical “z-dimension” in each node: 4 logical frames of 4 x 4 instructions
• Instruction buffers add depth to execution array
  – 2D array of ALUs; 3D volume of instructions
• Entire 3D volume exposed to compiler

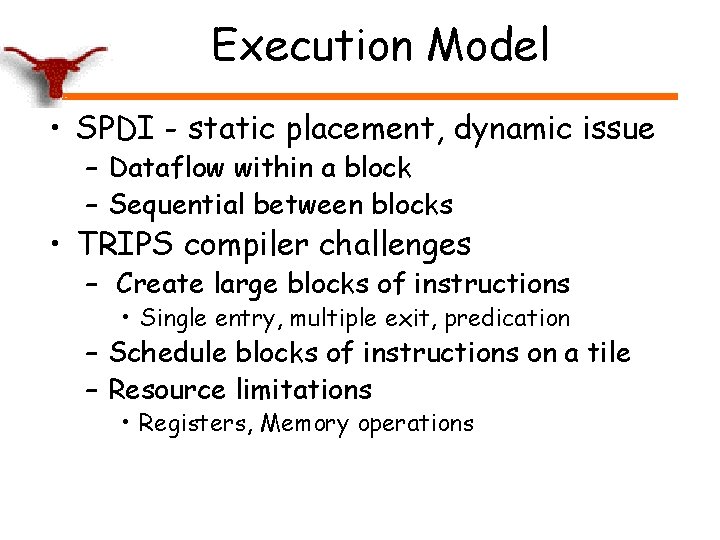

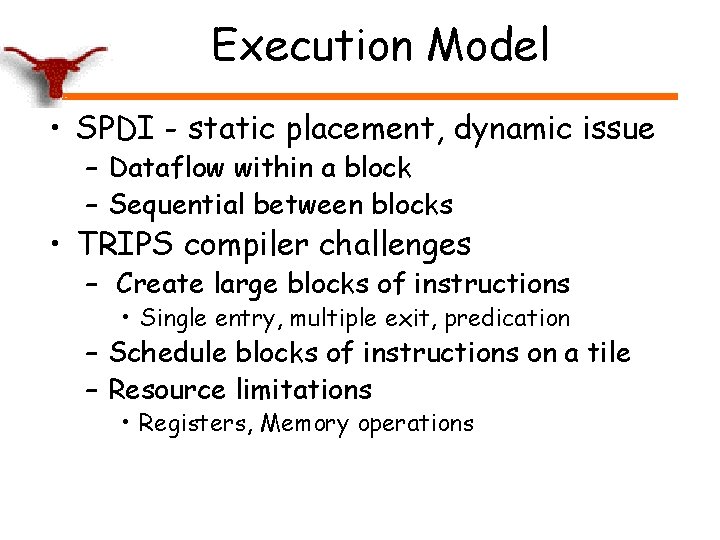

Execution Model
• SPDI - static placement, dynamic issue
  – Dataflow within a block
  – Sequential between blocks
• TRIPS compiler challenges
  – Create large blocks of instructions
    • Single entry, multiple exit, predication
  – Schedule blocks of instructions on a tile
  – Resource limitations
    • Registers, memory operations

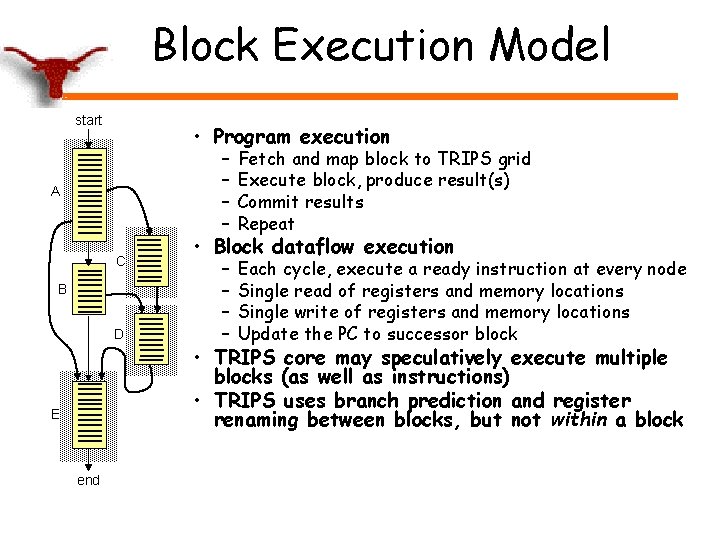

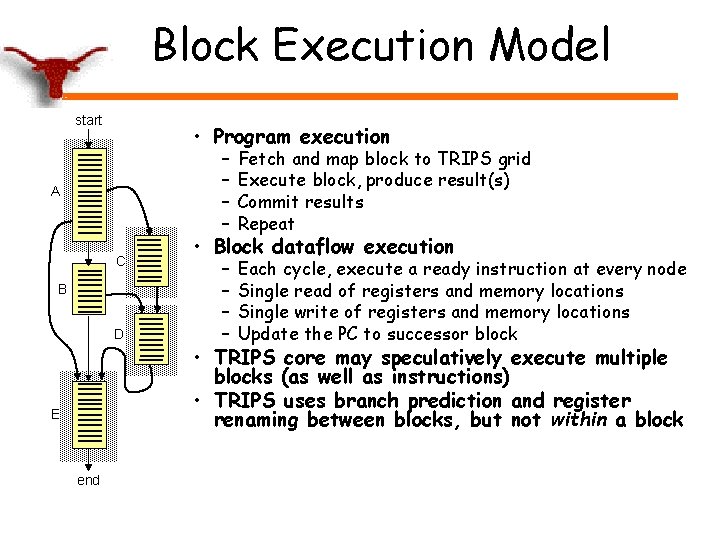

Block Execution Model
[Figure: control-flow graph from start through blocks A, B, C, D, E to end]
• Program execution
  – Fetch and map block to TRIPS grid
  – Execute block, produce result(s)
  – Commit results
  – Repeat
• Block dataflow execution
  – Each cycle, execute a ready instruction at every node
  – Single read of registers and memory locations
  – Single write of registers and memory locations
  – Update the PC to successor block
• TRIPS core may speculatively execute multiple blocks (as well as instructions)
• TRIPS uses branch prediction and register renaming between blocks, but not within a block
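To make the block dataflow idea concrete, here is a minimal sketch in Python. It is not TRIPS code: the `Instr` record, the `run_block` function, and the example block are all hypothetical, and the model ignores placement, routing latency, and speculation. It only shows that within a block an instruction fires as soon as its operands are available, regardless of its position in the block.

```python
import operator
from dataclasses import dataclass

@dataclass
class Instr:
    name: str        # name of the value this instruction produces
    op: object       # function applied to the operand values
    srcs: list       # names of block inputs or producer instructions

def run_block(instrs, inputs):
    """Fire every ready instruction each cycle until the block completes."""
    values = dict(inputs)              # block inputs are read once, up front
    pending = list(instrs)
    cycles = 0
    while pending:
        ready = [i for i in pending if all(s in values for s in i.srcs)]
        if not ready:
            raise ValueError("block has an unsatisfiable dependence")
        # All ready instructions issue in the same cycle (idealized: one per node).
        results = {i.name: i.op(*(values[s] for s in i.srcs)) for i in ready}
        values.update(results)
        pending = [i for i in pending if i.name not in results]
        cycles += 1
    return values, cycles

# (a + b) * (a - b); "mul" is listed first to show that list order is irrelevant.
block = [
    Instr("mul", operator.mul, ["add", "sub"]),
    Instr("add", operator.add, ["a", "b"]),
    Instr("sub", operator.sub, ["a", "b"]),
]
values, cycles = run_block(block, {"a": 5, "b": 3})
```

In this idealized model `add` and `sub` issue together in the first cycle and `mul` in the second, so the block finishes in two cycles.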

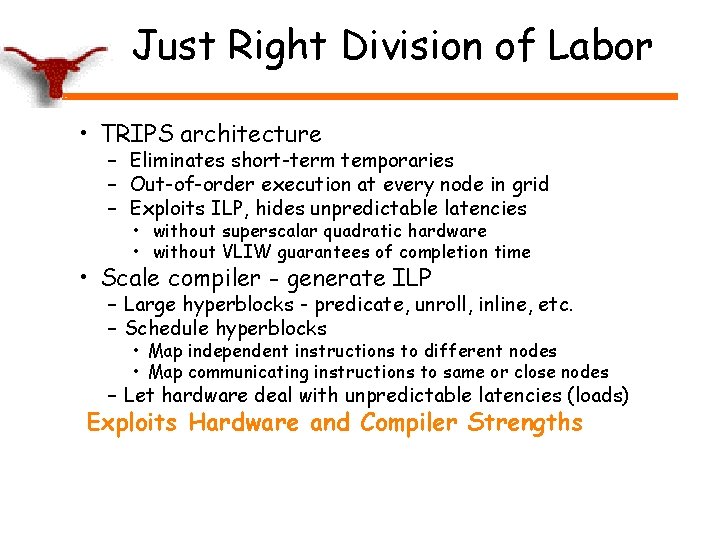

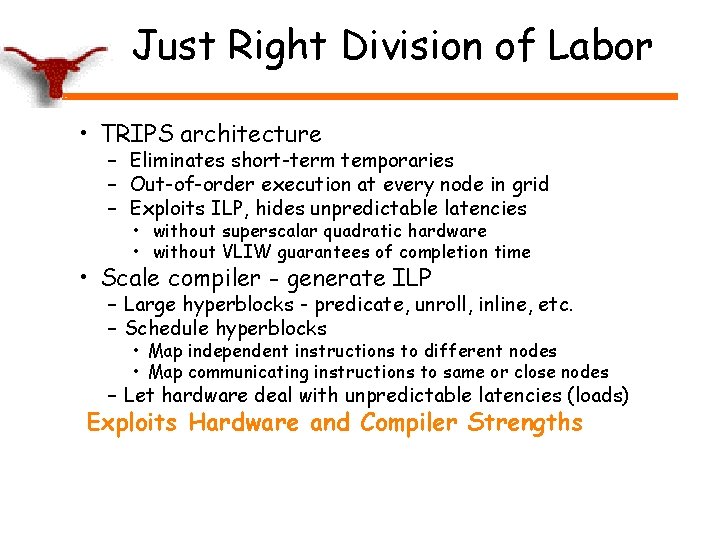

Just Right Division of Labor
• TRIPS architecture
  – Eliminates short-term temporaries
  – Out-of-order execution at every node in grid
  – Exploits ILP, hides unpredictable latencies
    • without superscalar quadratic hardware
    • without VLIW guarantees of completion time
• Scale compiler - generate ILP
  – Large hyperblocks - predicate, unroll, inline, etc.
  – Schedule hyperblocks
    • Map independent instructions to different nodes
    • Map communicating instructions to same or close nodes
  – Let hardware deal with unpredictable latencies (loads)
Exploits Hardware and Compiler Strengths
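The scheduling goal above (independent instructions on different nodes, communicating instructions close together) can be illustrated with a simple greedy placement. This is a sketch under stated assumptions, not the Scale compiler's scheduler: the `place` function, the Manhattan-distance cost, and the one-slot-per-node default are all hypothetical simplifications.

```python
from itertools import product

def place(instrs, deps, rows=4, cols=4, slots_per_node=1):
    """Greedy placement on a rows x cols grid.

    instrs: instruction names in topological order.
    deps:   name -> list of producer names.
    Each instruction goes to the free node closest (Manhattan distance)
    to its already-placed producers; ties go to the lowest (row, col).
    """
    free = {node: slots_per_node for node in product(range(rows), range(cols))}
    where = {}
    for name in instrs:
        producers = [where[p] for p in deps.get(name, [])]
        candidates = [node for node, slots in free.items() if slots > 0]
        if not candidates:
            raise ValueError("block is too large for the grid")

        def cost(node):
            return sum(abs(node[0] - r) + abs(node[1] - c) for r, c in producers)

        best = min(candidates, key=lambda node: (cost(node), node))
        where[name] = best
        free[best] -= 1
    return where

placement = place(["add", "sub", "mul"], {"mul": ["add", "sub"]}, rows=2, cols=2)
```

With one slot per node, the independent `add` and `sub` land on different nodes, and `mul` lands on a node adjacent to one of them. A real scheduler would also weigh load latencies, register bank positions, and contention on the interconnect.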

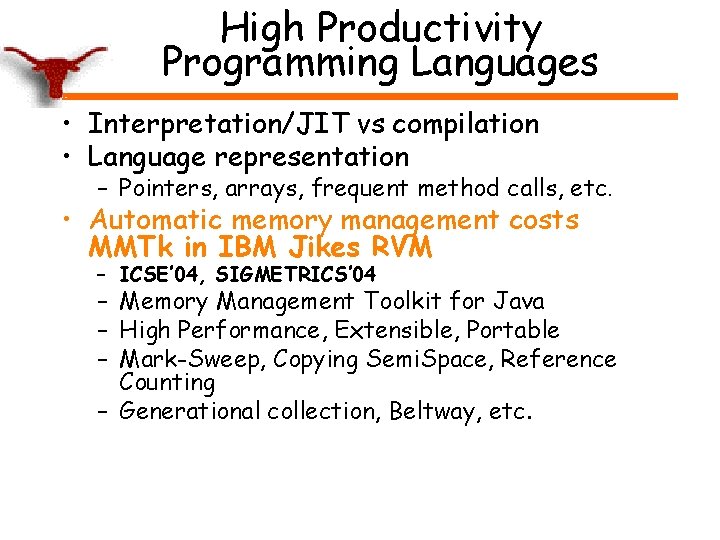

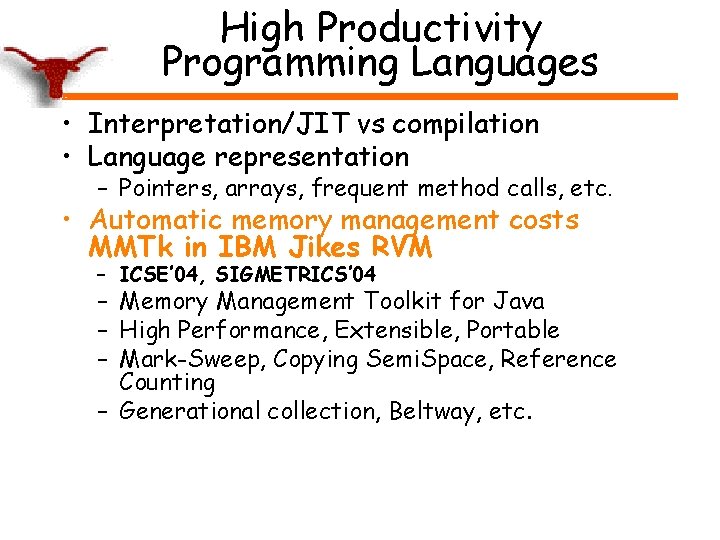

High Productivity Programming Languages
• Interpretation/JIT vs compilation
• Language representation
  – Pointers, arrays, frequent method calls, etc.
• Automatic memory management costs
MMTk in IBM Jikes RVM
  – ICSE'04, SIGMETRICS'04
  – Memory Management Toolkit for Java
  – High Performance, Extensible, Portable
  – Mark-Sweep, Copying SemiSpace, Reference Counting
  – Generational collection, Beltway, etc.

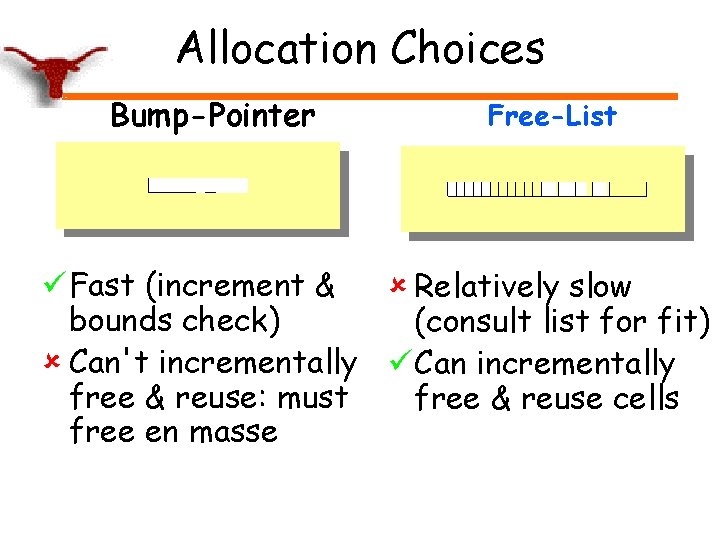

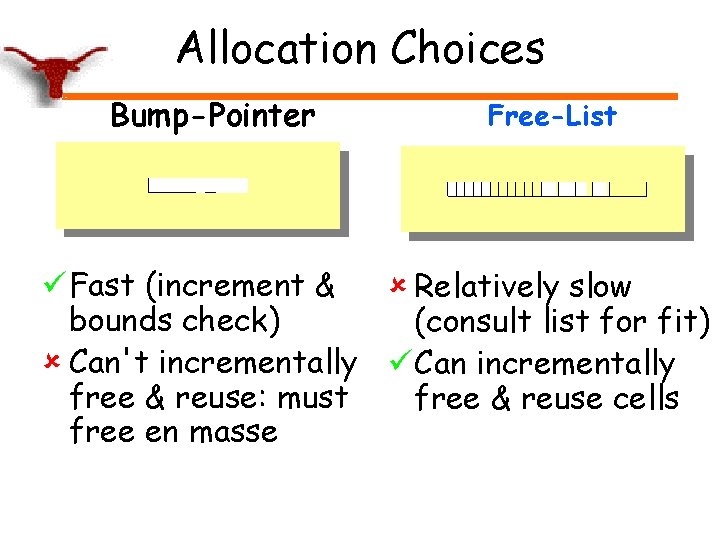

Allocation Choices
• Bump-Pointer
  – ✓ Fast (increment & bounds check)
  – ✗ Can't incrementally free & reuse: must free en masse
• Free-List
  – ✗ Relatively slow (consult list for fit)
  – ✓ Can incrementally free & reuse cells
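The trade-off can be seen in a toy model of the two allocators. This is a minimal sketch, not MMTk code: the class names, the first-fit policy, and the absence of coalescing are assumptions made for brevity, and addresses are plain integers.

```python
class BumpAllocator:
    """Allocate by incrementing a cursor; memory is reclaimed only en masse."""

    def __init__(self, size):
        self.cursor, self.limit = 0, size

    def alloc(self, n):
        if self.cursor + n > self.limit:    # bounds check
            return None
        addr = self.cursor
        self.cursor += n                    # increment
        return addr

    def free_all(self):
        self.cursor = 0                     # the only way to reuse space


class FreeListAllocator:
    """Allocate by searching a list of holes; cells can be freed one by one."""

    def __init__(self, size):
        self.holes = [(0, size)]            # (address, length) pairs

    def alloc(self, n):
        for i, (addr, length) in enumerate(self.holes):   # first fit
            if length >= n:
                if length == n:
                    del self.holes[i]
                else:
                    self.holes[i] = (addr + n, length - n)
                return addr
        return None

    def free_cell(self, addr, n):
        self.holes.append((addr, n))        # no coalescing in this sketch


bump = BumpAllocator(32)
first, second, overflow = bump.alloc(16), bump.alloc(16), bump.alloc(8)

fl = FreeListAllocator(32)
x, y = fl.alloc(16), fl.alloc(16)
full = fl.alloc(8)          # no hole is large enough yet
fl.free_cell(x, 16)         # free a single cell
reused = fl.alloc(8)        # the freed cell is reused immediately
```

The bump allocator's fast path is two operations, while the free list has to walk its holes; in exchange, the free list can reuse `x` as soon as it is freed.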

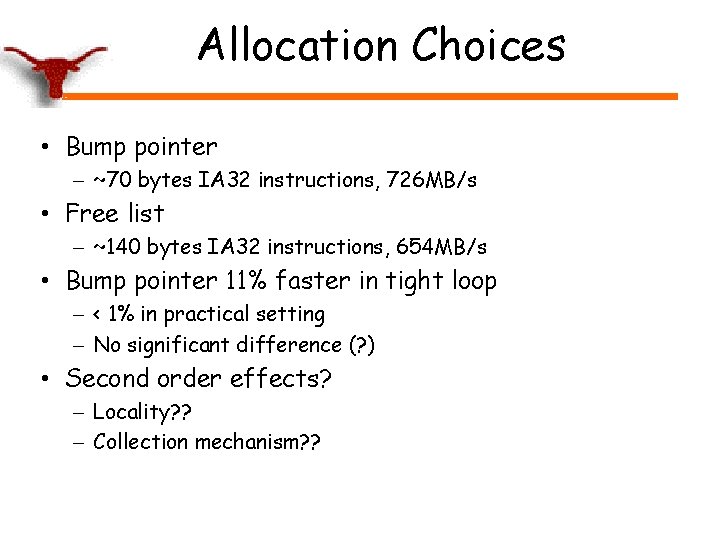

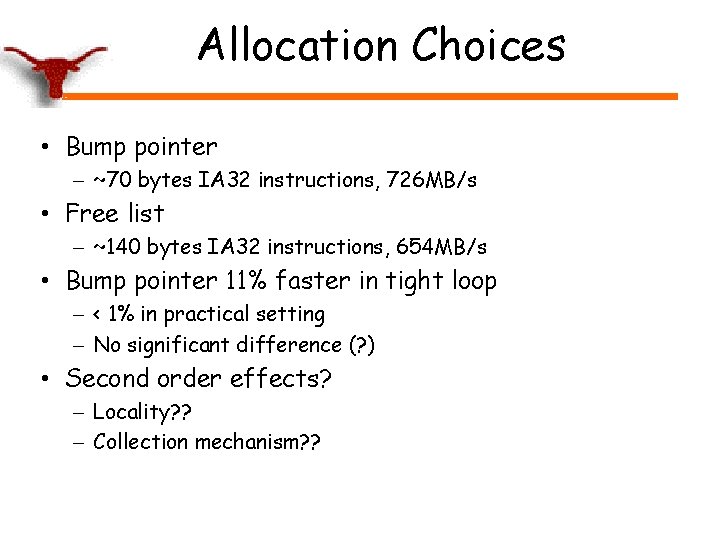

Allocation Choices
• Bump pointer
  – ~70 bytes of IA-32 instructions, 726 MB/s
• Free list
  – ~140 bytes of IA-32 instructions, 654 MB/s
• Bump pointer 11% faster in tight loop
  – < 1% in practical setting
  – No significant difference (?)
• Second order effects?
  – Locality??
  – Collection mechanism??
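The 11% figure follows directly from the two allocation rates quoted above:

```latex
\frac{726\ \text{MB/s}}{654\ \text{MB/s}} \approx 1.11
\quad\Longrightarrow\quad
\text{bump pointer} \approx 11\%\ \text{faster in the tight loop}
```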

Implications for Locality
• Compare SS & MS mutator
  – Mutator time
  – Mutator memory performance: L1, L2 & TLB

javac

pseudojbb

db

Locality & Architecture

MS/SS Crossover: 1.6 GHz PPC

MS/SS Crossover: 1.9 GHz AMD

MS/SS Crossover: 2.6 GHz P4

MS/SS Crossover: 3.2 GHz P4

MS/SS Crossover
[Figure: crossover plot with regions labeled "locality" and "space" and curves for the 2.6 GHz, 3.2 GHz, 1.9 GHz, and 1.6 GHz machines]

Locality in Memory Management
• Explicit memory management on its way out
  – Key GC vs explicit MM insights are 20 years old
  – Technology has changed and is still changing
• Generational and Beltway Collectors
  – Significant collection time benefits over full heap collectors
  – Collect young objects
  – Infrequently collect old space
  – Copying nursery attains similar locality effects as full heap

Where are the Misses? Generational Copying Collector

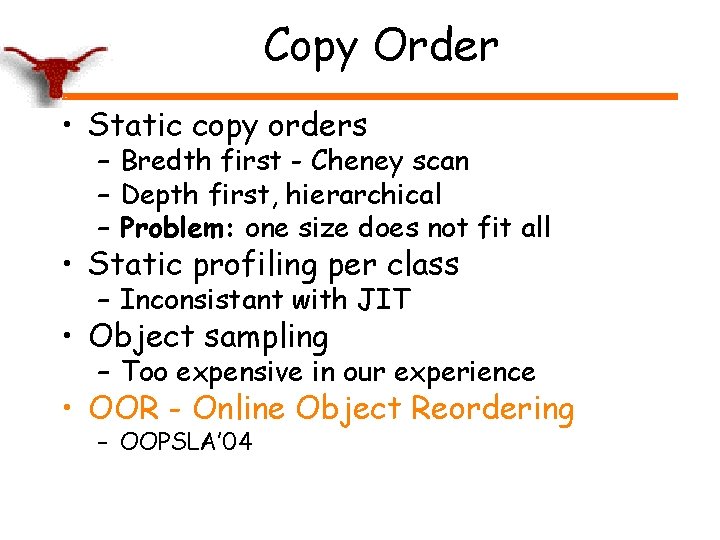

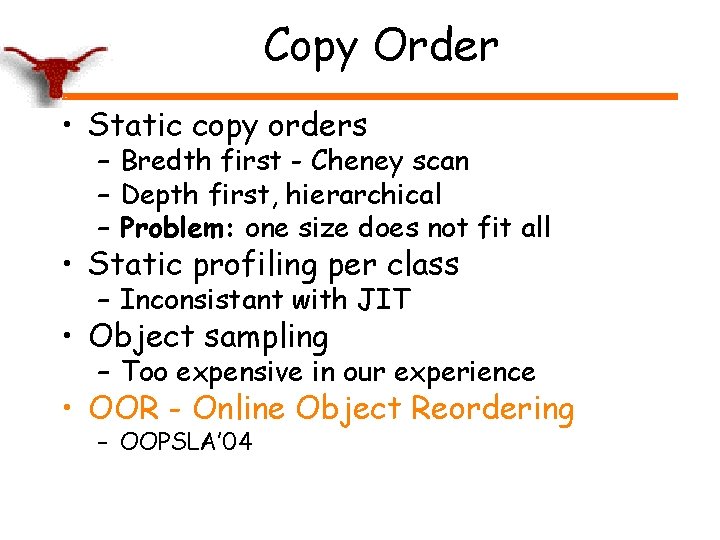

Copy Order
• Static copy orders
  – Breadth first - Cheney scan
  – Depth first, hierarchical
  – Problem: one size does not fit all
• Static profiling per class
  – Inconsistent with JIT
• Object sampling
  – Too expensive in our experience
• OOR - Online Object Reordering
  – OOPSLA'04
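The two static copy orders differ only in which reachable object is copied next. The sketch below computes both orders for a small object graph; the graph, the function names, and the dictionary representation of the heap are hypothetical and stand in for a real copying collector's to-space scan.

```python
from collections import deque

def breadth_first_order(roots, children):
    """Order in which a Cheney-style scan copies objects (queue discipline)."""
    order, seen, queue = [], set(roots), deque(roots)
    while queue:
        obj = queue.popleft()
        order.append(obj)
        for child in children.get(obj, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return order

def depth_first_order(roots, children):
    """Order in which a depth-first copy visits objects (stack discipline)."""
    order, seen = [], set()
    stack = list(reversed(roots))
    while stack:
        obj = stack.pop()
        if obj in seen:
            continue
        seen.add(obj)
        order.append(obj)
        # Push children in reverse so the first field is followed first.
        stack.extend(reversed(children.get(obj, [])))
    return order

# A small binary tree: a -> b, c; b -> d, e; c -> f, g.
heap = {"a": ["b", "c"], "b": ["d", "e"], "c": ["f", "g"]}
bfs = breadth_first_order(["a"], heap)
dfs = depth_first_order(["a"], heap)
```

Breadth first places siblings together (`a b c d e f g`), while depth first places a parent next to its first child's subtree (`a b d e c f g`). Which layout gives better locality depends on how the program traverses the structure, which is the "one size does not fit all" problem.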

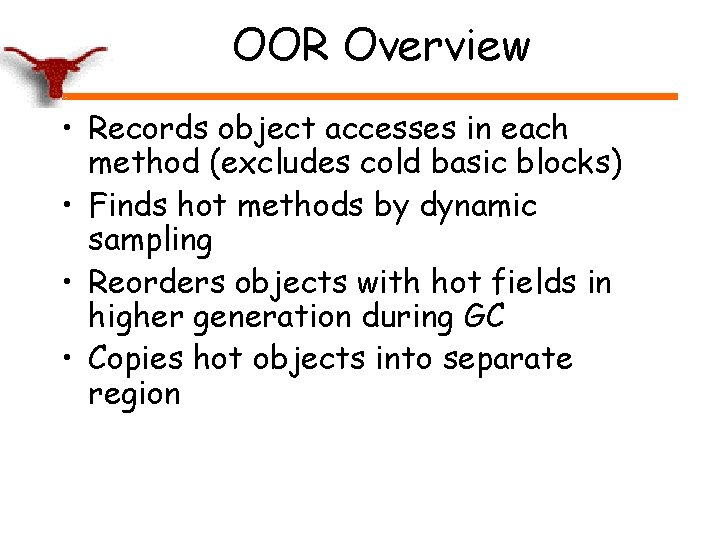

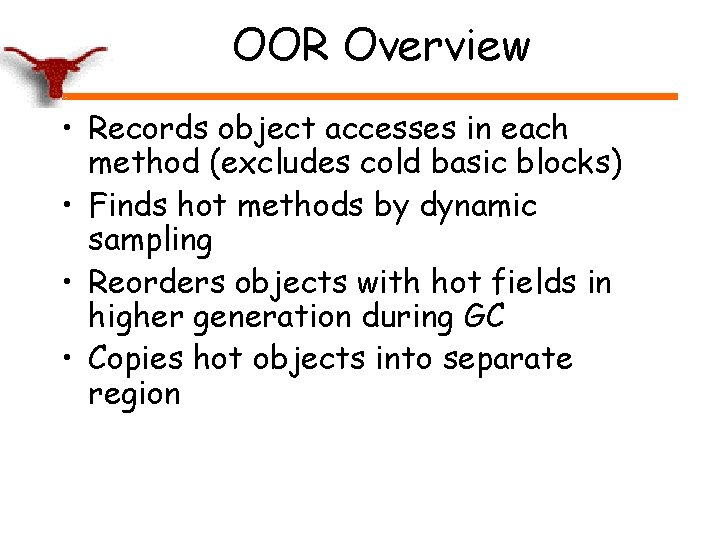

OOR Overview
• Records object accesses in each method (excludes cold basic blocks)
• Finds hot methods by dynamic sampling
• Reorders objects with hot fields in higher generation during GC
• Copies hot objects into separate region

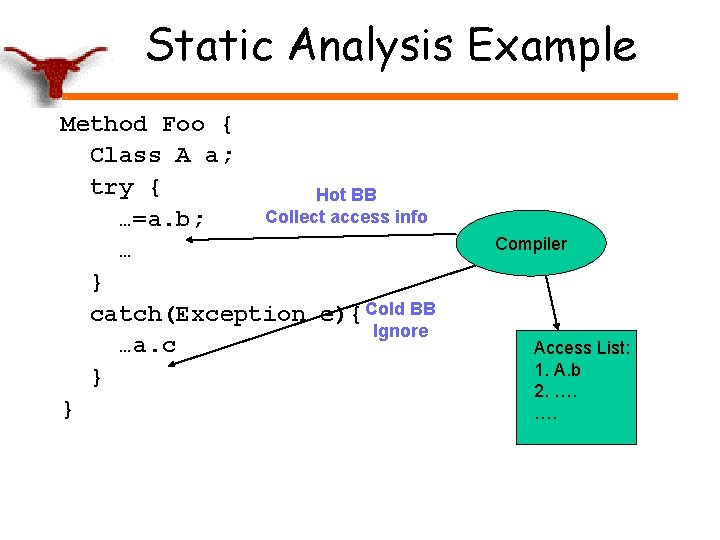

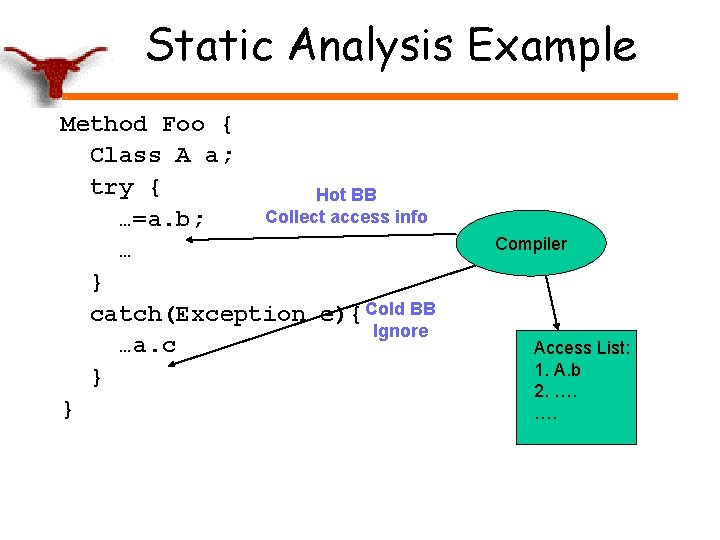

Static Analysis Example
  Method Foo {
    Class A a;
    try {                       // hot BB: collect access info
      … = a.b;
      …
    }
    catch (Exception e) {       // cold BB: ignore
      … a.c
    }
  }
Compiler Access List: 1. A.b  2. … …

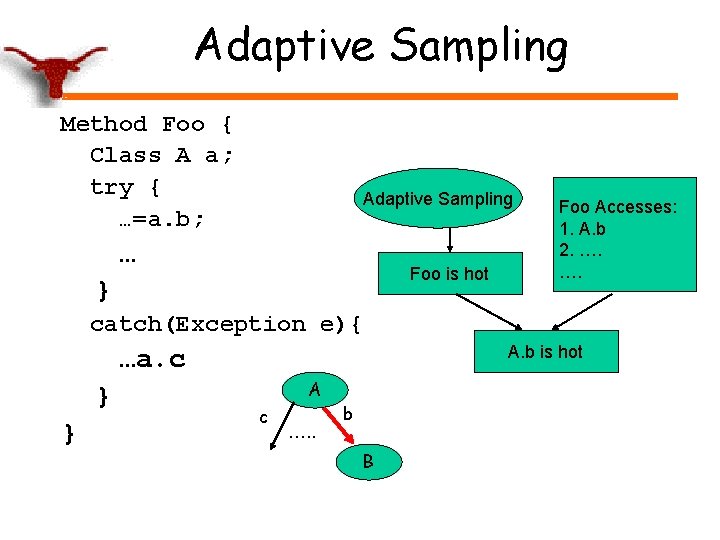

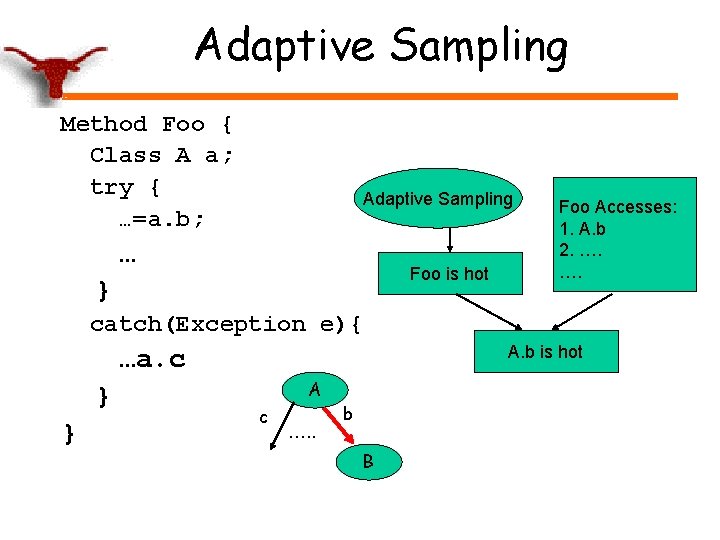

Adaptive Sampling
  Method Foo {
    Class A a;
    try {
      … = a.b;
      …
    }
    catch (Exception e) {
      … a.c
    }
  }
• Adaptive sampling: Foo is hot
• Foo Accesses: 1. A.b  2. … …
• A.b is hot
[Figure: object A with fields c, …, b, where field b refers to object B]
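The two steps (compile-time access lists, run-time sampling) combine roughly as in the following sketch. It is only an illustration of the idea on these slides, not the Jikes RVM implementation: the function names, the `(is_cold, fields)` block representation, the extra method `Bar`, and the fixed sample threshold are all assumptions.

```python
from collections import Counter

def collect_access_info(method_blocks):
    """Compile time: record field accesses per method, skipping cold blocks.

    method_blocks: method name -> list of (is_cold, [field accesses]).
    """
    return {
        method: [f for is_cold, fields in blocks if not is_cold for f in fields]
        for method, blocks in method_blocks.items()
    }

def hot_fields(access_info, samples, threshold):
    """Run time: a field is hot if a frequently sampled method accesses it."""
    counts = Counter(samples)
    hot_methods = [m for m, n in counts.items() if n >= threshold]
    return {f for m in hot_methods for f in access_info.get(m, [])}

# Foo reads A.b in its try block (hot) and A.c in its catch block (cold).
# Bar is a hypothetical second method added for contrast.
blocks = {
    "Foo": [(False, ["A.b"]), (True, ["A.c"])],
    "Bar": [(False, ["B.x"])],
}
info = collect_access_info(blocks)
hot = hot_fields(info, samples=["Foo", "Foo", "Bar", "Foo"], threshold=3)
```

Here `Foo` is sampled three times and becomes hot, so `A.b` is reported as a hot field; `A.c` never enters the access list because it only appears in the cold catch block.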

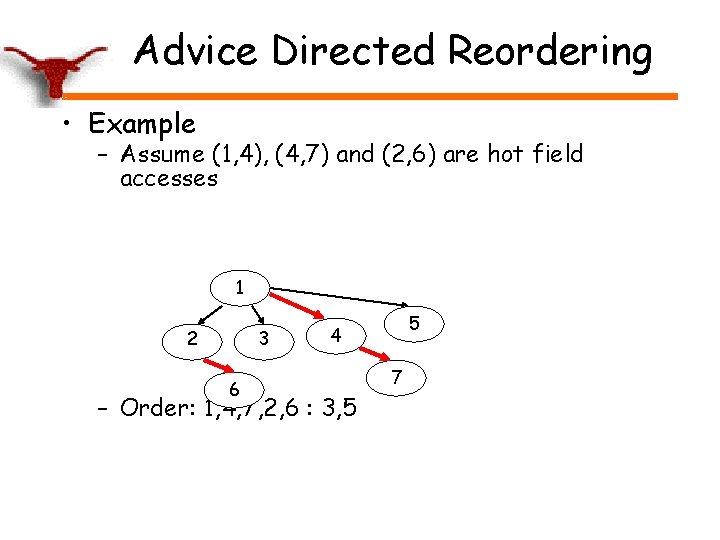

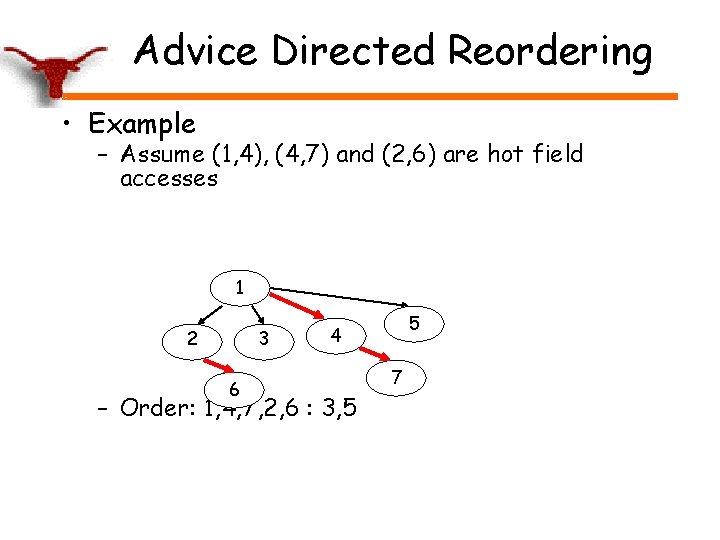

Advice Directed Reordering
• Example
  – Assume (1, 4), (4, 7) and (2, 6) are hot field accesses
  – Order: 1, 4, 7, 2, 6 : 3, 5
[Figure: object graph with nodes 1 through 7]
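One way to reproduce the order in this example is to give referents of hot fields priority over all other referents during the copy. The sketch below does that with two work lists. The graph shape (1 → 2, 3, 4; 2 → 5, 6; 4 → 7) is an assumption, since the figure's edges are not recoverable from the text, and the function is a hypothetical illustration rather than the collector's actual algorithm.

```python
from collections import deque

def advice_directed_order(root, children, hot_edges):
    """Copy order that follows hot field references before cold ones."""
    hot = deque()            # referents of hot fields: copy these next
    cold = deque([root])     # everything else waits here
    seen = {root}
    order = []
    while hot or cold:
        obj = hot.pop() if hot else cold.popleft()
        order.append(obj)
        for child in children.get(obj, []):
            if child in seen:
                continue     # simplification: first reference decides the queue
            seen.add(child)
            if (obj, child) in hot_edges:
                hot.append(child)
            else:
                cold.append(child)
    return order

# Assumed object graph for the example above.
graph = {1: [2, 3, 4], 2: [5, 6], 4: [7]}
hot_edges = {(1, 4), (4, 7), (2, 6)}
order = advice_directed_order(1, graph, hot_edges)
```

Under these assumptions the copy order is 1, 4, 7, 2, 6, 3, 5: objects connected by hot field accesses end up adjacent, and the objects not involved in any hot access (3 and 5) come last.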

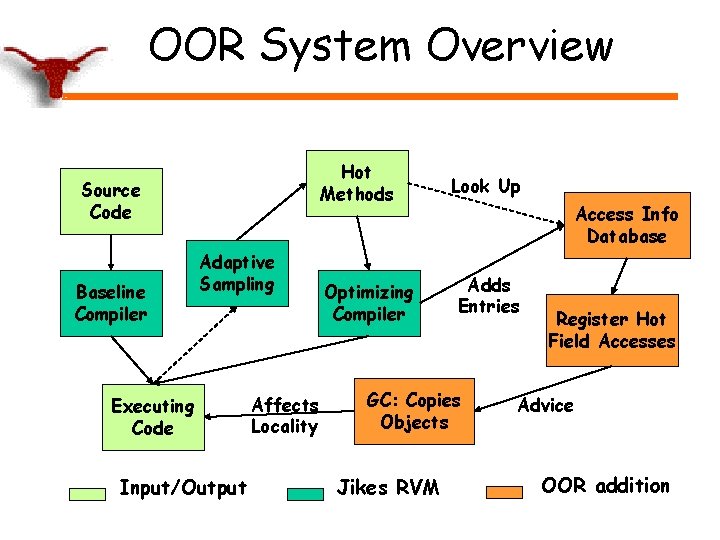

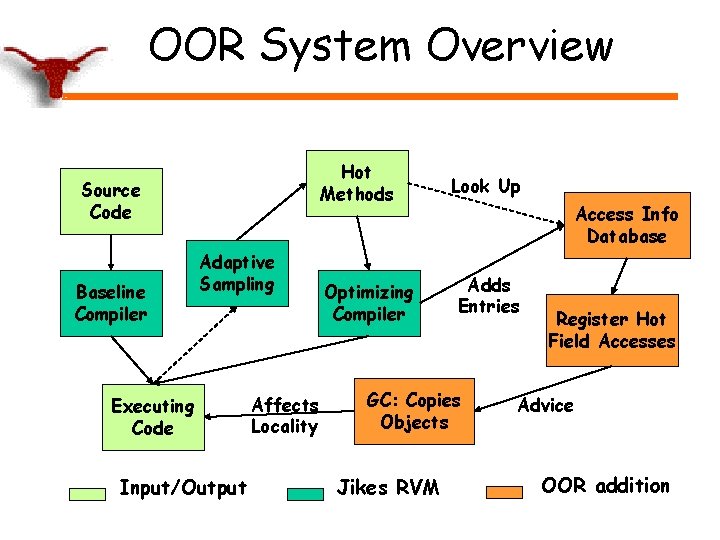

OOR System Overview
[Figure: Jikes RVM with the OOR additions. Labeled components: Source Code, Baseline Compiler, Optimizing Compiler, Adaptive Sampling, Executing Code, Input/Output, Access Info Database, GC (copying objects). Labeled edges: Hot Methods, Register Hot Field Accesses, Adds Entries, Look Up, Advice, Copies Objects, Affects Locality.]

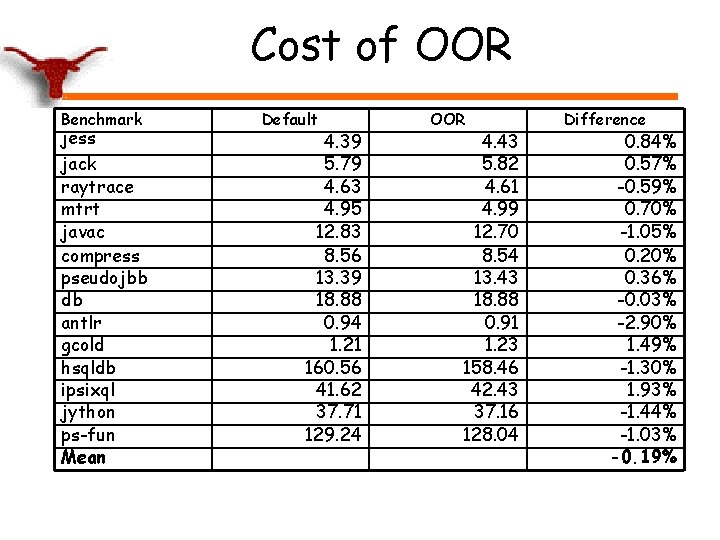

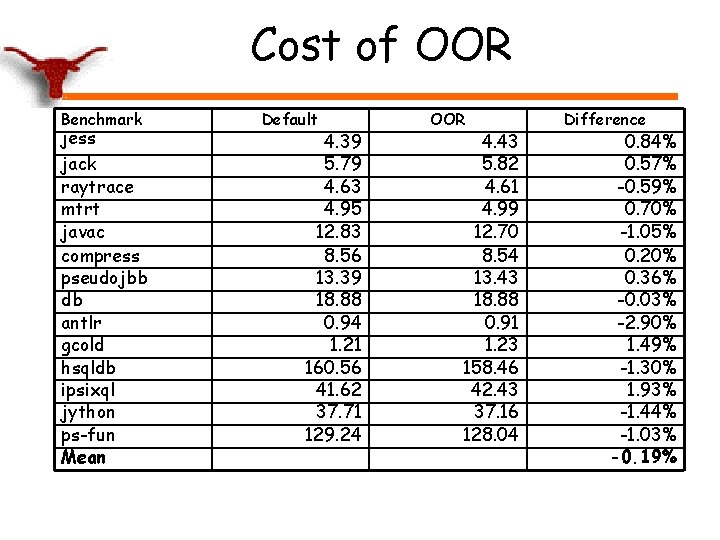

Cost of OOR

| Benchmark | Default | OOR | Difference |
|---|---|---|---|
| jess | 4.39 | 4.43 | 0.84% |
| jack | 5.79 | 5.82 | 0.57% |
| raytrace | 4.63 | 4.61 | -0.59% |
| mtrt | 4.95 | 4.99 | 0.70% |
| javac | 12.83 | 12.70 | -1.05% |
| compress | 8.56 | 8.54 | 0.20% |
| pseudojbb | 13.39 | 13.43 | 0.36% |
| db | 18.88 | 18.88 | -0.03% |
| antlr | 0.94 | 0.91 | -2.90% |
| gcold | 1.21 | 1.23 | 1.49% |
| hsqldb | 160.56 | 158.46 | -1.30% |
| ipsixql | 41.62 | 42.43 | 1.93% |
| jython | 37.71 | 37.16 | -1.44% |
| ps-fun | 129.24 | 128.04 | -1.03% |
| Mean | | | -0.19% |

Performance db

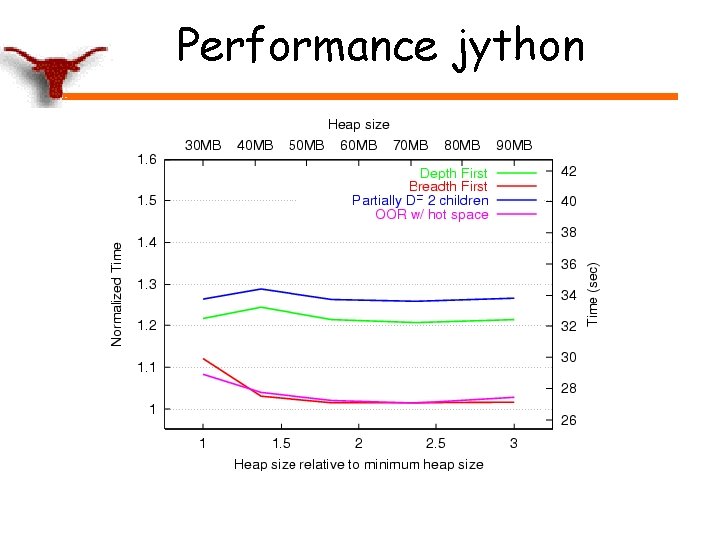

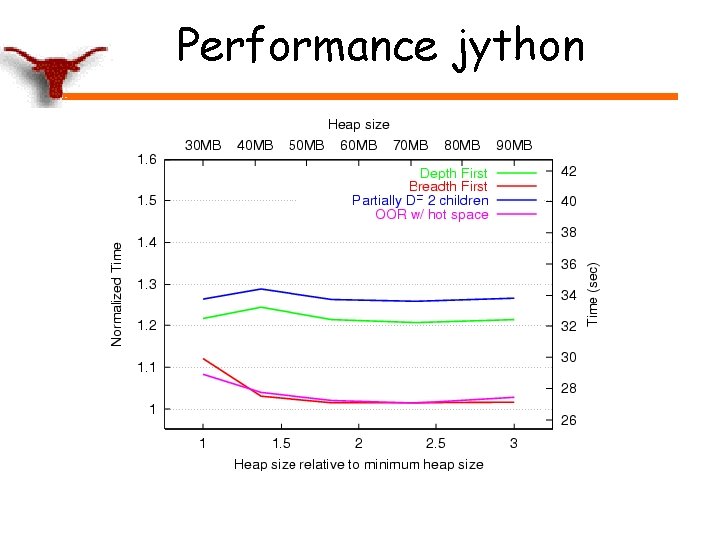

Performance jython

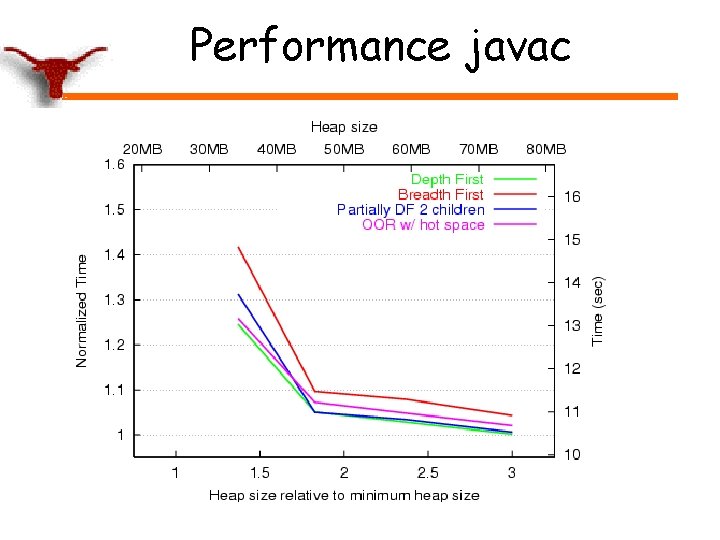

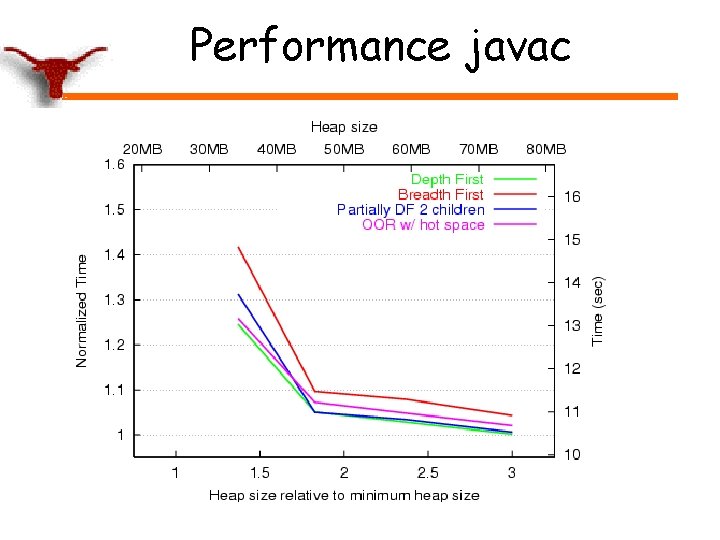

Performance javac

Software is not enough, Hardware is not enough
• Problem: inefficient use of cache
• Hardware limitations: set associativity, cannot predict the future
• Cooperative Software/Hardware Caching
  – Combines high level compiler analysis with dynamic miss behavior
    • Lightweight ISA support conveys compiler's global view to hardware
  – Compiler-guided cache replacement (evict-me)
  – Compiler-guided region prefetching
  – ISCA'03, PACT'02
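The intuition behind an evict-me hint can be shown with a toy cache model. This is a sketch under stated assumptions, not the mechanism from the cited papers: the cache is fully associative, the hint is a boolean attached to each access, and the replacement rule (prefer the least recently used tagged line, else plain LRU) is a hypothetical simplification.

```python
from collections import OrderedDict

def count_misses(trace, capacity, use_hints=True):
    """Count misses for a trace of (address, evict_me) accesses.

    Without hints the cache is plain LRU. With hints, a line whose last
    access was tagged evict-me is chosen as the victim before any other.
    """
    cache = OrderedDict()    # address -> evict_me tag, least recently used first
    misses = 0
    for addr, evict_me in trace:
        if addr in cache:
            cache.move_to_end(addr)          # hit: mark most recently used
        else:
            misses += 1
            if len(cache) >= capacity:
                tagged = [a for a, tag in cache.items() if tag] if use_hints else []
                victim = tagged[0] if tagged else next(iter(cache))
                del cache[victim]
        cache[addr] = evict_me               # remember the latest hint
    return misses

# "a" is reused; s1..s4 are streamed once, so the compiler tags them evict-me.
trace = [("a", False), ("s1", True), ("s2", True), ("a", False),
         ("s3", True), ("s4", True), ("a", False)]
lru_misses = count_misses(trace, capacity=2, use_hints=False)
hinted_misses = count_misses(trace, capacity=2, use_hints=True)
```

In this trace plain LRU misses on every access because the streaming data keeps pushing `a` out, while the hinted cache keeps `a` resident and misses only on first touches.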

Exciting Times
• Dramatic architectural changes
  – Execution tiles
  – Cache & Memory tiles
• Next generation system solutions
  – Moving hardware/software boundaries
  – Online optimizations
  – Key compiler challenges (same old…)
    • ILP and Cache Memory Hierarchy
